Abstract

Increasing production and use of chemicals and awareness of their impact on ecosystems and humans has led to large interest for broadening the knowledge on the chemical status of the environment and human health by suspect and non-target screening (NTS). To facilitate effective implementation of NTS in scientific, commercial and governmental laboratories, as well as acceptance by managers, regulators and risk assessors, more harmonisation in NTS is required. To address this, NORMAN Association members involved in NTS activities have prepared this guidance document, based on the current state of knowledge. The document is intended to provide guidance on performing high quality NTS studies and data interpretation while increasing awareness of the promise but also pitfalls and challenges associated with these techniques. Guidance is provided for all steps; from sampling and sample preparation to analysis by chromatography (liquid and gas—LC and GC) coupled via various ionisation techniques to high-resolution tandem mass spectrometry (HRMS/MS), through to data evaluation and reporting in the context of NTS. Although most experience within the NORMAN network still involves water analysis of polar compounds using LC–HRMS/MS, other matrices (sediment, soil, biota, dust, air) and instrumentation (GC, ion mobility) are covered, reflecting the rapid development and extension of the field. Due to the ongoing developments, the different questions addressed with NTS and manifold techniques in use, NORMAN members feel that no standard operation process can be provided at this stage. However, appropriate analytical methods, data processing techniques and databases commonly compiled in NTS workflows are introduced, their limitations are discussed and recommendations for different cases are provided. Proper quality assurance, quantification without reference standards and reporting results with clear confidence of identification assignment complete the guidance together with a glossary of definitions. The NORMAN community greatly supports the sharing of experiences and data via open science and hopes that this guideline supports this effort.

Similar content being viewed by others

Motivation for this guidance

A large and increasing number of chemicals are produced and used by modern society, leading to potentially harmful exposures of ecosystems and humans. A recent global inventory tallied > 350,000 chemicals and substances [1], while > 204 million chemicals are now in the largest registries [2]. Current monitoring approaches are capable of detecting only a small set of these chemicals (e.g., tens to hundreds) and are often defined by monitoring requirements related to regulatory frameworks or other chemical management approaches. However, improvements in the sensitivity, selectivity, and operation of analytical instruments, along with advancements in software development for data treatment and data evaluation in recent years have increased the interest to go beyond the target analysis of a few dozen pre-defined chemicals. Suspect and non-target screening (NTS) of a broad range of organic compounds, including transformation products (TPs) and certain organometallic compounds, have become a popular addition to target analysis not only in the scientific community, but also for authorities and regulators [3, 4]. Note that in this article the abbreviation NTS covers the collective term “suspect and non-target screening”, because many aspects and methods are the same for both.

Going beyond target screening broadens the knowledge about the chemical status of the environment and human exposure, plus it allows for retrospective screening and an early warning about emerging contaminants without upfront selection and purchase of standards. NTS also allows the screening of chemicals that are either too expensive, restricted, or not commercially available. Purchase or synthesis of standards for full confirmation can be decided subsequently based on the relevance of the identification, e.g., frequency of detection, potential ecological or toxic effect, or peak intensity. Table 1 includes several examples of applications in the field of environmental monitoring. The sampling, sample preparation, analysis and data evaluation should be tailored according to the study question, as discussed in later sections, which can limit the applicability of retrospective screening in some cases.

First studies on the detection of unknown compounds were already reported in the early 1970s, with the introduction of gas chromatography coupled to mass spectrometry with electron ionisation (GC–EI–MS) [5]. The early harmonisation and reproducible fragmentation by EI led to the inclusion of standard spectra in libraries, such as the National Institute of Standards and Technology (NIST) mass spectral library, which have been used for identification via spectral match since then. The NIST spectral library is still widely used and contains 350,704 spectra of 306,643 compounds for GC–EI–MS as of February 2023 [6]. However, the chemical coverage of GC–MS is limited to volatile compounds unless derivatisation of non-volatiles is performed. In addition, the determination of molecular structures is challenged by low intensity or absence of a molecular ion in approximately 40% of GC–EI–MS spectra [7]. Chemical ionisation (CI) and atmospheric pressure chemical ionisation (APCI), as softer ionisation techniques, can increase the abundance of the molecular ion when coupled to GC separation.

Electrospray ionisation (ESI), along with APCI—both compatible with liquid chromatography (LC)—have extended the chemical space in two ways; by including more polar, water-soluble and larger molecules and by providing more accurate and detailed data on the ionised molecule. High-resolution mass spectrometry (HRMS), now available as benchtop instruments, enables the simultaneous and sensitive detection of ions in full scan mode with high mass resolution (ratio of mass to mass difference ≥ 20,000) and high mass accuracy (≤ 5 ppm mass deviation), improving possibilities for compound identification. Increasing resolution allows the separation of interferences and can reduce the need for sample preparation in some cases, while increasing mass accuracy reduces the number of candidates possible for a mass of interest. Tandem mass spectrometry (MS/MS) provides additional fragment information. Today, MS2 libraries have grown, but are not yet comparable to EI spectra libraries due to lower reproducibility and variabilities in fragmentation with different instruments, techniques and energies applied.

Given the rapid development and increasing use of NTS approaches, data quality has become an important topic. This includes procedures for quality assurance/quality control (QA/QC) as well as assessments of what level of data quality is currently achievable [8]. Several collaborative trials have been organised by the NORMAN network [9, 10], the US EPA [11] and national communities documenting an ongoing need for more harmonisation. Conferences and workshops have been arranged to exchange and evaluate best practice for preparation, acquisition, and data evaluation of samples for HRMS analysis and subsequent suspect and non-target screening workflows. First drafts of national guidelines for NTS are available in Germany (German Chemical Society) and the Netherlands (Royal Netherlands Standardization Institute), with a specific focus on water monitoring. The NTS community in the US (BP4NTA) [12] proposed an NTS study reporting tool for quality assessment of publications in the field [13].

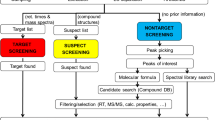

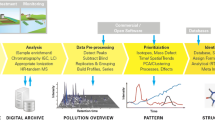

In response to the increasing interest in NTS from regulators, risk assessors and scientists, and continuous comments on the need for more harmonisation in this field, NORMAN members involved in NTS activities have prepared this guidance document, based on the current state of knowledge. This document is intended to support scientific, commercial and governmental laboratories in conducting high quality NTS studies, and help those using NTS data to evaluate the pitfalls and challenges with these techniques. The aim is to provide guidance for all steps (Fig. 1): from sampling and sample preparation to analysis, through to data acquisition, data evaluation and reporting in the context of NTS analyses. While most experience within the NORMAN Network still involves water analysis using LC–ESI–HRMS/MS, other matrices and instrumentations will also be covered, reflecting the rapid development and extension of the field.

Overview on analytical methods for NTS

While target methods are typically optimised for a small set of compounds with rather similar physico-chemical properties, screening methods are generally more generic. Typically, they involve limited sample processing (if any) and separation over a wide hydrophobicity range to minimise compound losses and ensure performance for as many compounds as possible. For liquid samples with sufficiently high concentrations, direct injection is often recommended where possible, while solid samples have to be extracted, usually with organic solvents, such as methanol or acetonitrile (for LC) and hexane or acetone (for GC) or solvent mixtures. For lower concentrated liquid samples, vacuum-assisted evaporative concentration [14], freeze-drying [15] or solid phase extraction (SPE) can be applied. SPE materials capable of different interactions (e.g., ion exchange, Van der Waals interactions, electrostatic interactions) can be combined to broaden the range of enrichable compounds [14, 16]. Chromatographic methods tend to use generic gradients ranging over a broad range of organic solvent content (e.g., 0–100% methanol) with reversed phase (RP, typically C18) columns in LC or temperature gradient (e.g., 40–300 °C) and phenylmethylpolysiloxane columns in GC. For LC, ionisation with ESI covers the widest range of polar compounds, while EI is most common for non-polar compounds separated by GC, although APCI allows better transfer of data evaluation methods from LC–ESI–HRMS/MS workflows.

In NTS, the range of compounds covered by the method is not known a priori, but has to be inferred from knowledge about the performance of the individual method steps and processing of reference compounds. Overall, for NTS methods it is essential to have a good idea about the coverage of the compound domain, particularly about what is not covered. This is one reason why it is recommended starting with a target screening on the data before moving to NTS. The final compound domain of a screening method is the intersection of the domain of each method step, as conceptualised in Fig. 2. There are some attempts to predict the chemical domain of screening methods using quantitative structure-activity relationships (QSARs) based on physico-chemical properties [17] and an on-going activity of NORMAN, while the BP4NTA group recently released the ChemSpace approach [18].

While generic suspect and non-target screening methods strive to cover the largest possible compound domain, they are usually not suitable for compound groups with highly specific properties. Examples are small hydrophilic ionic or neutral compounds such as the pesticide glyphosate, N-nitrosodimethylamine, and ultra-short chain per- and polyfluoroalkyl substances (PFAS) or very non-polar high-molecular weight compounds such as > 6-ring polycyclic aromatic hydrocarbons (PAHs) for which the commonly used enrichment and separation methods are not appropriate. Furthermore, sensitivity is generally lower for screening methods compared to specific methods, which often contain purification steps to eliminate interferences, specific enrichment and separation as well as optimised ionisation conditions. This is especially relevant for compounds with low environmental effect thresholds for which very low detection limits are essential, such as pyrethroids and steroid hormones. If such specific substance classes are of interest, it is usually preferred to use a more specific method or at least evaluate with standards whether the screening method is suitable for the substance class. To address specific chemical domains, the generic methods mentioned above can often be adapted. For example, very hydrophilic compounds can be separated with hydrophilic interaction liquid chromatography (HILIC), supercritical fluid chromatography (SFC) or mixed-mode LC (MMLC) instead of RP (C18)-based chromatography before ESI–HRMS. The next sections address these analytical considerations step by step in greater detail and refer to LC–MS unless stated otherwise.

Sampling and sample preparation for NTS

Sampling is an integral part of a holistic approach and the beginning of the analytical chain [19], since the analytical result is no better than the selected sampling method. In NTS, sampling is typically not tailored to specific chemicals or groups of substances, although specific compound domains might be of interest. The sampling procedure should ensure maximal representation of the environmental chemical patterns, consider spatial and temporal variations and minimise both contamination and loss of compounds. Sampling should be performed by trained personnel who are aware of the risk of contamination of samples and/or losses of compounds posed by incorrect handling. Samples for NTS should not be preserved using any chemical additives (e.g., sodium azide or acids) due to potential sample contamination or sample alteration (i.e., transformation of compounds). Instead they should at least be refrigerated at 4 °C (core temperature according to ISO 5667-3) or better frozen at − 20 °C as soon as possible after sampling. The samples should be transported to the receiving laboratory under these conditions and processed as soon as possible.

To minimise contamination from the sample equipment, high quality solvents should be used for cleaning. As far as possible, sampling devices that come into contact with the sample, tubing, and sampling containers should be made from inert materials that do not sorb or release compounds. While this is in most cases true for borosilicate glass or stainless steel, some compounds also sorb to these materials (e.g., PFAS, phosphonates or complexing agents). In some cases, if the use of plastic or elastic polymers cannot be avoided (e.g., for flexible tubing or sealings), plasticiser-free polymers (e.g., high density polypropylene) and high quality silicone (for seals) should be used. In general, the most appropriate material should be selected depending on the substance domain of interest (hydrophobic or hydrophilic) and potential interference with the sampling material. The appropriate cleaning of the sampling equipment and all other laboratory (glass)ware that is in contact with the sample is critical. After cleaning with laboratory detergents, the equipment must be rinsed with ultrapure water and high-quality solvents. All materials can be baked out at the highest possible temperature (follow manufacturer instructions); for example, borosilicate glassware beakers and bottles up to 410 °C in a furnace. In addition, working in positive air pressure laboratories with air filtration systems is highly recommended for NTS to reduce the contamination of samples via air as much as possible.

Field, laboratory and procedural blanks are necessary to capture potential contamination of the samples by environmental or laboratory background, leaching of materials in contact with the sample or inherent content in the water and solvents used. Detailed examples of how to obtain blank samples for each sample type are described in the following subsections. Disposable gloves should be worn while sampling to protect the sampling person from possible toxic contaminants and to avoid any cross contamination of the samples, containers and equipment. Whenever possible, the use of cosmetics, sunscreens, soaps, medical creams, drinks with caffeine, tobacco and insect repellents should be avoided when sampling or processing samples. Such products often contain high levels of compounds of potential interest. For instance, insect repellents contain up to 50% of diethyltoluamide (DEET), such that even minimal contact will easily contaminate an environmental sample with typically 106 to 109-fold lower levels. If usage is unavoidable for human health protection, it should be noted in the sampling protocol.

The following subsections contain some basics and guiding principles for sample collection and preparation relevant for NTS of various matrices. Details on sampling strategies and methods are given, for example, in the ISO 5667 standard series and in the European Water Framework Guideline Documents [20].

Water

The sampling location, method, season and sampling time should be chosen carefully depending on the study question. The two general approaches for water are (1) spot or grab and (2) composite sampling. Passive sampling, which can be viewed as a specific case of composite sampling, is covered in Sect. “Passive sampling”. Spot sampling involves taking a single or discrete sample at a given location, time, and/or depth of a water body or groundwater aquifer that is representative only of the composition of the matrix during the time of sampling, which is usually seconds to minutes [19]. Composite or integrative samples consist of pooled portions of discrete samples or are collected using continuous automated sampling devices and combined time- or flow-proportionally in one sample, which are representative for the average conditions during the sampling period [21]. The sampling type and the volumes of water to be collected depend on the goals of the study and / or other requirements, such as the storage of backup samples or combination with, e.g., effect-based analysis.

Water samples can either be collected in the field and transported to the laboratory for further processing, or directly extracted on-site. For the latter, different types of mobile SPE devices have been developed, often designed for obtaining large water volumes (20–1000 L) for combined chemical and biological analysis that are otherwise difficult to transport to the laboratory [22, 23]. The time-integrating, microflow, inline extraction (TIMFIE) sampler [24] provides a low-volume system for continuous SPE using a syringe pump and a small SPE cartridge. At some larger monitoring stations, the use of SPE combined with LC–(HR)MS/MS and NTS is daily practice [3]. The “MS2field” online–SPE–LC–HRMS/MS system in a trailer allows in situ automated analysis of samples with a high temporal frequency and minimal lag time in the field [25].

The high sensitivity of the current generations of LC–HRMS equipment allows for direct injection (DI) of water samples without any enrichment steps. The advantages of DI are the small water volumes required, low efforts with sample processing and less risk of background contamination during sample preparation. Minimising the sample processing results in negligible losses of compounds, as each manipulation step may discriminate against substances (e.g., by evaporation, precipitation or degradation). To obtain a sufficient sensitivity, typically large volume injections are used for DI, with volumes of 100 [26], 250 [27] or up to 650 µL [28], as no further enrichment of the sample takes place. In such cases, an adjustment of the sample composition before injection by adjusting pH and solvent addition is necessary to avoid phase dewetting or injection solvent mismatch (see Sect. “Choice of separation method”). A direct preparation of sub-samples for analysis is possible in the field by transferring individual aliquots of 1 mL into autosampler vials from a larger sampling vessel. Depending on the load of suspended particulate matter, settling of particles before aliquoting alone may be sufficient; alternatively, a filtration or centrifugation step might be necessary before analysis, with the accompanying risk of compound losses. A drawback of DI is the potential contamination of the ion source with inorganic salts that would be removed by SPE or liquid–liquid extraction (LLE). This is particularly critical for samples from estuarine or marine environments, for which even a diversion of the eluent flow away from the ion source at early retention times (RT) might not be sufficient.

Performing SPE in the laboratory is still the most commonly used sample preparation method for water samples. SPE often requires a filtration step before extraction to separate particles from the water phase. In general, glass fibre filters with a nominal pore size of 0.7 µm are used, or membrane filters with 0.45 µm pore size. The given pore sizes for the separation between the solid and the dissolved fraction are primarily operational. The freely dissolved fraction can only be sampled by kinetic samplers (i.e., passive samplers) and not separated by a membrane [19]. For screening methods with LC covering medium polar to non-polar compounds, C18 or mixed-mode materials are often used, such as Oasis HLB (Waters) or Chromabond HR-X (Macherey Nagel). Combinations of C18 material with ion exchange material, other polymers and potentially even activated carbon enlarge the compound range to ionised and very polar compounds [14, 16, 29]. To avoid contamination, sufficient cleaning by organic solvents and water within the so-called conditioning step is important. Method blanks with ultrapure water to check for contamination are indispensable. Elution of the enriched chemicals from the SPE material is usually achieved by methanol or acetonitrile. Adjusting the pH of the sample and the eluent is critical to achieve optimal retention and elution. SPE can also be carried out online before the LC to save time and material [30]. Dilution of the organic SPE eluate with water before RP chromatography is recommended to achieve refocusing on the column.

For enrichment of very polar compounds that may not bind to SPE material, both vacuum-assisted evaporation [14] and freeze-drying [15] approaches have been applied successfully. However, the simultaneous enrichment of salts can lead to high ion suppression in the downstream analysis and volatile compounds may be lost.

For screening methods with GC covering non-polar compounds of sufficient volatility, either SPE with C18 material or LLE can be used. Both approaches involve elution or extraction with less polar solvents, such as ethyl acetate, hexane or toluene. Although purification with silica gel before GC analysis is common for specific substance class methods, this may lead to loss of compounds and should be avoided if possible, unless it will not influence the chemical domain of interest in the given sampling campaign. Evaporation to dryness should be avoided to ensure retention of volatile compounds.

Sediment, suspended particulate matter and soil

Soil and sediment samples are well-suited to study chemical contamination throughout time and space as usually no historical water samples (apart from ice cores in some areas) are available, and many contaminants have not been recorded and studied in the past. However, the analysis of organic pollutants in soil, sediment, sludge, and suspended particulate matter can be challenging due to potential interference from natural organic matter (NOM), and the spatial variability observed from site to site. The latter makes representative sampling very important. In addition, some compounds can occur in low concentrations or be strongly bound to the matrix. Sampling for non-target screening does not differ widely from target analysis. The international standards ISO 18400-101:2017 [31], ISO 5667-12:2017 [32], ISO 5667-13:2011 [33] and ISO 5667-17:2008 [34] provide general guidance on the sampling of soil, sediment, sludge and suspended solids, respectively. For NTS, it is especially important to include reference sites from remote areas with (relatively) minor contamination, field blanks and procedural blanks to avoid detection of false positives. Together with replicates this can help to eliminate peaks resulting from the extraction procedure and instrumental analysis, similar to water samples.

In general, extraction procedures for NTS in soil, sediment, sludge and suspended solid samples are similar to procedures for target analysis and involve liquid (shaking), pressurised liquid extraction (PLE, also known as accelerated solvent extraction (ASE)), Soxhlet, ultrasonic, microwave, or supercritical fluid extractions. However, to eliminate the usual heavy matrix in soil, sediment and sludge, very specific extraction procedures (e.g., specific pH, narrow polarity of solvent) are often applied for target analysis of specific substance classes, such as polychlorinated biphenyls (PCBs), PAHs and PFAS. Further clean-up procedures or additional extraction steps are used to eliminate matrix components interfering with the analysis. As NTS aims to cover as many compounds as possible in principle, the extraction and clean-up procedures should be much less specific. For example, solvent mixtures allowing for different interactions are used to cover substances with various functional groups and physico-chemical properties. However, this can be at the expense of selectivity and sensitivity for specific substances, as matrix components will also be extracted with less specific extraction and clean-up steps. Using aprotic solvents (e.g., ethyl acetate, dichloromethane, acetone, hexane) extracts fewer natural compounds with acidic or phenolic groups. For polar compounds, PLE with in-cell clean-up employing either Florisil or neutral alumina as a sorbing phase [35, 36] or QuEChERS (quick, easy, cheap, effective, rugged, and safe)-like LLE [37] with salts to enable separation of water and acetonitrile phase has been successfully used for broad target, suspect or non-target screening in soil and sediment [38]. For non-polar compounds, Soxhlet extraction and ultrasonication with a subsequent fractionation and clean-up step using silica gel were used in soil and sediment, respectively [39] and PLE with in-cell clean-up and PLE with gel permeation chromatography (GPC) clean-up has been applied for NTS in sewage sludge [40]. Elemental sulphur (S8) is often co-extracted from soils and should be removed to protect GC columns. Copper is commonly used to eliminate sulphur and can be added directly to the extraction process, for example, in the PLE cells [41, 42].

Air and dust

Airborne chemical pollution is usually caused by a dynamic complex mixture proportioning in gases and particles. Air samples are typically collected using a variety of commercial and self-designed samplers with filters and sorbent materials. Quartz fibre filters are most commonly used in the samplers for collecting airborne fine particles. Volatile organic compounds (VOCs) in the gas phase can be collected by adsorption on polyurethane foam (PUF) in the samplers. These sampling methods were developed in the past to enable long-term monitoring of regulated legacy contaminants [43, 44]. Typically, the extracts are further subjected to an extensive clean-up, for example, with concentrated sulphuric acid that removes the interfering matrix, but also any compounds that are not stable in the acid, which reduces their applicability to NTS methods. Analysis of raw air sample extracts without clean-up would generate contamination of the analytical system, especially with PUF matrix-based compounds, leading to mass spectra with high interferences and detection limits for contaminants that are often only present at trace levels in air samples. To address the problem of interferences originating from PUF, a new extraction and cleanup method was developed [45] and applied for NTS of emerging contaminants in Arctic air [46]. Although the authors reported detection of over 700 compounds of interest in the particle phase and over 1200 compounds in the gaseous phase, the method has its limitations. It is very time-consuming and expensive, and while the authors reported that several compounds exhibited poor recoveries (e.g., chlorfenvinphos, chlorobenzilate, dichlorvos, endrine aldehyde and etridiazole), it is likely that this also applies to other compounds not tested in the study.

Generic non-selective extraction methods applied for targeted analysis of air are also suggested for NTS of air samples, including microwave assisted extraction (MAE), ultrasonic solvent extraction, and PLE. For LC separations, the most common solvents are ethyl acetate and methanol, while hexane and dichloromethane are used to extract GC-amenable compounds. However, due to environmental safety, dichloromethane is increasingly faced-out for laboratory usage and replaced by non-chlorinated solvents. Clean-up to eliminate interferences has to be balanced against loss of chemicals of interest in air samples. Direct analysis of VOCs in the gas phase by thermal desorption from the sorbent without any sample preparation procedure can be conducted on GC–HRMS or GCxGC–HRMS [47]. Passive sampling of air and air particles is increasingly used for NTS of airborne chemical pollution, as discussed further in Sect. “Passive sampling”.

Dust is a complex mixture of settled particulate matter of both natural and anthropogenic origin, with particle sizes from nanometers to millimetres. According to the literature, there are no consistent conclusions on particle size distribution of various environmental pollution, although concentrations of some pollutants were documented to increase with decreasing particle size [48,49,50]. It is thus important to limit the fractionation of the sampled dust to ensure coverage of a broad spectrum of contaminants. In the literature published so far, sieved samples from vacuum cleaners are most commonly used in NTS [9, 51], although the use of the high-volume small surface sampler (HVS3) [52] and a proprietary dust collector attached to a vacuum cleaner is also being reported [53].

A combination of non-polar and polar solvents is used to ensure extraction of a wide range of compounds from the dust. Acetone was recommended as one of the solvents of choice due to its ability to dissolve plastic particles and fibres, which enabled the detection of bisphenol A and plastic additives [54]. In other studies, indoor dust was extracted by sonication with different solvents: hexane:acetone (3:1) and acetone [52], hexane:acetone:toluene [55], methanol:dichloromethane (9:1), dichloromethane [9, 56], acetonitrile:methanol (1:1) [53]. To avoid losses of contaminants, limited cleanup (for example, fractionation on a SPE column) is recommended, which should be balanced against the need for matrix removal in the analysis. The complex dust matrix is likely to interfere with the chromatography, causing a risk of high detection limits and uninterpretable results.

Biota and biofluids

The sample collection and pre-treatment methods for NTS of biota and biofluids are similar to those used in the target analysis of biota, including dissection or particle size reduction. Biota samples should be kept at − 20 °C or below for short-term storage, but kept at − 80 °C or below for long-term storage. Biota samples are sometimes freeze-dried, but this might carry risks of losing volatile compounds and/or cross-contaminating samples. Samples need to be homogenised before extraction. For fresh samples, the water content is typically determined before homogenisation and extraction [57]. A larger sample amount is recommended for NTS of biota compared with target analysis, along with replicates and QA/QC samples, to obtain consistent and high-quality HRMS/MS data for the characterisation and identification of unknown organic contaminants. Details on sampling strategies and pre-treatment for biota are given, for example, in the European Commission (EC) Guidance Document No 32 on the implementation of biota monitoring under the Water Framework Directive [58] and the Helcom monitoring guidelines [59].

Biota and biofluid samples often contain complex matrix materials, such as proteins, lipids, endogenous metabolites, and/or salts, resulting in interference with the NTS of pollutants. Therefore, a balanced approach is required to extract a wide range of chemicals while minimising the matrix effects. The selection of extraction and clean-up procedures of biota and biofluids mainly depends on (1) the polarity of the analytes of interest, (2) applied chromatography and MS techniques, (3) types and contents of the matrix interferences. Extraction of non-polar compounds from biota samples is traditionally performed using a combination of non-polar and moderately polar solvents (e.g., hexane, dichloromethane, and acetone). The resulting extracts contain varying types and amounts of matrix, such as lipids. It is difficult to remove matrix components (e.g., lipids) completely, but it is often possible to reduce the matrix: xenobiotic contaminants ratio sufficiently for the detection of the xenobiotics. For NTS, non-destructive lipid removal techniques are recommended, for example, GPC [60], dialysis [61], or adsorption chromatography [62]. Sometimes, size separation using GPC or dialysis is combined with adsorption chromatography [62] to further reduce the sample complexity and increase the probability to detect and identify new and emerging contaminants. Subsequently, the final non-polar solvent extracts are commonly analysed with GC–EI/APCI–HRMS for screening non-polar unknown compounds. A recently developed alternative approach is equilibrium passive sampling performed by placing a passive sampler in biota tissue. [63, 64] However, this is limited to more hydrophobic compounds and has not yet been widely applied in NTS.

For polar compounds, LLE and solid–liquid extraction [65] or QuEChERS methods have become increasingly popular for extraction and purification before analysis [37]. Acetonitrile is used for the QuEChERS extraction in combination with salts (e.g., MgSO4, NaCl) and sometimes buffers (e.g., citrate) for phase separation. QuEChERS extraction reduces the amount of extracted lipids, proteins, and salts compared to traditional extraction methods. Further matrix removal is achieved using freezing out [66] and/or dispersive solid-phase extraction (dSPE), e.g., using primary secondary amine (PSA) or online-mixed-mode-SPE [65]. dSPE using PSA removes acidic components (e.g., fatty acids), certain pigments (e.g., anthocyanidins) and to some extent sugars, while freezing-out removes lipids, waxes and sugars and other components with low solubility in acetonitrile that may cause matrix effects and ion source contamination in GC and LC analysis [66]. A range of sorbents has been developed for selective lipid removal using conventional or dSPE, e.g., Z-Sep (Supelco, Bellefonte, PA, USA) and EMR-Lipids (Agilent, St. Clara, CA, USA) as well as hexane/heptane clean-up [57]. Even if these have been designed to remove lipids they do also (partially) remove anthropogenic compounds with similar chemical structure or properties. As a result, such sorbents should be used with caution in NTS studies. LC–ESI–HRMS/MS is commonly used to screen polar unknown contaminants in the resulting final extracts.

The sample and matrix type play an important role in the selection of extraction solvents and clean-up strategies. For example, biofluid samples (e.g., urine, blood, serum, bile) require a relatively simple extraction and purification approach due to lower lipid contents. Organic solvents (e.g., methanol or acetonitrile) are often used to precipitate proteins in biofluid samples, followed by centrifugation or filtration. Similarly, muscle tissue usually contains less lipids than other tissues, such that less lipid removal is necessary. For screening polar compounds, the supernatant can be analysed directly with LC–HRMS [67], while non-polar solvent exchange is required to screen non-polar compounds by GC–HRMS.

Passive sampling

Passive samplers employ a receiving phase (e.g., sorbents, materials with sorption properties) to collect chemicals of interest in situ from environmental compartments (e.g., surface water and wastewater, soil, air, biological matrices) [68, 69]. Passive sampling has been established in legislative frameworks, such as the Water Framework Directive [70], international monitoring/regulatory networks (i.e., Global atmospheric passive sampling (GAPS), [71] and Aqua-GAPS [72]) and international standards [73]. A clear advantage of passive sampling techniques is the generation of time-integrated data along with high enrichment factors, which are beneficial for identification of low-level pollutants [68]. Furthermore, they allow for more direct comparisons of different matrices in terms of the compound range and the chromatographic signature, for example, sediment vs. water, which is more challenging than comparing different matrix extracts (e.g., from SPE and PLE). Thus, these techniques are increasingly used to complement more traditional monitoring of contaminants that may be difficult to analyse by spot or bottle sampling, as well as providing important spatial and temporal trend information [74].

The use of NTS with passive sampling has so far been applied to water/air analysis of samples collected with polydimethylsiloxane (PDMS), polyethylene (PE), Polar Organic Chemical Integrative Sampler (POCIS) and Chemcatchers (typically polystyrene–divinylbenzene) [75,76,77,78,79,80]. In these studies, authors reported the tentative identification of a range of halogenated, organophosphate and musk compounds, synthetic steroids, pharmaceuticals, food additives, plasticisers and pesticides. Screening studies with PDMS wristband passive samplers have provided information on exposure to a wide range of atmospheric chemicals including pesticides, legacy pollutants, consumer products and industrial compounds [81,82,83,84,85,86,87]. The physico-chemical properties of compounds and environmental matrix dictate the type of passive sampler and the analytical methods employed. Typically, many polar chemicals are sampled from water matrices with POCIS or Chemcatcher samplers capable of extracting up to three litres of water over deployments periods of ≤ 30 days [68]. Sample preparation and extraction of passive samplers involves similar protocols as reported for SPE and/or LLE [88], followed by reverse phase liquid chromatography (RPLC)–ESI–HRMS [76, 89]. Non-polar and moderately polar compounds are enriched from water or air using PDMS/PE samplers. Hydrophobic contaminants accumulate in these phases via diffusion. Depending on the surface area of the PDMS/PE used, they have the potential to extract hundreds of litres of water or m3 of air. Sample preparation involves pre-extraction and cleaning of the polymers (i.e., via Soxhlet or LLE) before deployment and compound extraction using the same techniques post deployment. Analyses are typically conducted by GC–MS (or GCxGC) using EI or chemical ionisation (CI) as standard methods for the assessment of persistent and bioaccumulative compounds [75, 77, 81, 85, 90]. To cover polar and non-polar compounds together in a study, a number of different passive samplers can be deployed next to each other.

Extracts from passive samplers typically also contain monomers or oligomers from polymeric media or the sorbent itself. This leads to interferences, ion suppression and/or high background levels (reduced sensitivity), as well as analytical variation [74] and uncertainty in the identification of chemicals. A specific and thorough pre-cleaning of the passive sampler medium is required to minimise the presence of interferences. Similar to limitations observed with SPE and LLE techniques, the passive sampling media enrich many compounds, including matrix components, and therefore, drawbacks can include low recoveries and high ion suppression caused by chemical background. In such cases, a sample clean-up step is sometimes employed before analysis, but it needs to be chosen with care to minimise the number of chemicals of interest that are also removed with the matrix interferences [91]. The additional sample processing step also carries risks of sample contamination during processing. For this reason, blanks are a requirement for NTS. Appropriate extraction and field blanks to assess contamination from sample preparation, storage, processing and analysis in the laboratory and from the passive sampling medium itself are critical to minimise false positive identifications. While the background may be a burden, it can present an advantage, too, because it produces a similar level of ion suppression independently of the matrix, which can allow for better comparisons between different samples or matrices.

Specifically for passive sampling, the variation of sampling rates for different compounds and for different site conditions (flow rate, temperature) pose a problem in (semi-)quantitative analysis (i.e., comparison of peak areas) in the data. While in targeted analysis sampling rates can be determined experimentally or estimated based on chemicals with similar physico-chemical properties or performance reference compounds, this is not directly possible for compounds with unknown structure. The uncertainty can be especially significant for polar chemicals [92]. For this reason, NTS with passive sampling is best suited to determining spatial and temporal trends among sites of comparable conditions. The (semi-)quantification approaches discussed in Sect. “Quantification and semi-quantification of suspects and unknowns” are further hampered by these specific limitations for passive sampling.

LC–HRMS/MS analysis

Choice of separation method

The best LC method for NTS should ideally separate all (at the time of analysis still unknown) isobaric and isomeric compounds that cannot be distinguished by the HRMS detection while showing decent chromatographic peak shapes for all of them. As a proxy, the optimisation is usually done using a large set of reference standards covering a broad compound domain (i.e., logKow, RT, structural variety). However, optimising in a particular direction will usually negatively affect the performance of other compounds of this mixture. Thus, LC methods for NTS will aim at a reasonably good performance for many compounds, rather than maximising it for a small set. However, they will likely show a bad performance for a part of the compounds in a sample, and thus complementary LC methods would be necessary to cover the whole compound inventory.

In this section, we will highlight some guiding principles for choice and optimisation of LC and other liquid-phase separation methods for NTS, addressing mainly stationary phase chemistry, column dimensions, gradient conditions, as well as eluents and eluent modifiers. The choice of the latter two is intimately linked to the choice of the ionisation method, as particularly eluent modifiers will severely impact the ionisation behaviour of molecules. A range of textbooks and numerous journal articles have been published on the proper choice and optimisation of LC methods and the underlying theoretical concepts [93,94,95], some websites also provide invaluable practical information [96].

In general, a LC method for screening should provide a high peak capacity, which is defined as the maximum number of peaks (of uniform width) that can be separated in an elution time window with a fixed resolution [97]. For the complex compound mixtures that are encountered in environmental or biological samples, a high peak capacity can only be achieved by gradient rather than isocratic separations. The peak capacity increases with column length and with decreasing particle sizes of the stationary phase [98] and is larger for shallow than for steep gradients. Thus, long and shallow gradient runs covering a wide range of mobile phase fractions on long columns with small particles would be the best choice for NTS, but this is limited by some practical constraints. The limit for the run time is defined mainly by the desired sample throughput, and in most methods applied in screening, LC method run times do not exceed 30 min. The peak capacity also depends on the flow rate, but this relationship is more complex: for very short gradient runs, high flow rates on short columns are better than on long columns, while for longer gradients, longer columns and lower flow rates perform better [97]. A long column with small particles will result in a high back pressure during the separation. For such cases, columns with superficially porous particles (also termed core–shell particles) are an alternative, as they offer the same peak capacities at particle sizes > 2 µm as sub-2 µm fully porous particles, thus allowing comparable performance at lower back pressures [99].

Although modern (ultra) high-performance liquid chromatography ((U)HPLC) pumps and autosamplers of all vendors can deal with back pressures of 100 MPa or above, working at high pressures decreases the robustness of the methods. Even a small deposition of insoluble matrix constituents or particles in the flow path from environmental samples bearing a significant matrix load will result in stronger pressure increases than for systems run at lower pressures and increase the risk of an excess pressure failure. Therefore, elimination of particles by filtration or (ultra)centrifugation is indispensable. Furthermore, a desired small peak width to obtain a high peak capacity through the use of short columns, high flow rates and small particle sizes might not be compatible with the HRMS detection if the cycle time is too long to provide adequate coverage of the chromatographic peak shape, i.e., less than 8–10 scans across a peak (see Sect. “Choice of mass spectrometry settings”). Moreover, high flow rates above 400 µL/min are often not well-suited for ESI, which is the most widely used ionisation technique for semi-/non-volatile species. Apart from this theoretical concept of peak capacity (further detailed in literature [97, 100]), the actual chromatographic resolution in real environmental samples is lower, as peaks are not evenly distributed in the gradient time continuum, and thus an experimental optimisation using representative samples might be necessary. While in targeted LC–MS methods the column temperature can be adjusted to change the selectivity of the separation, the main purpose in screening methods is to maintain a constant column temperature over time and to lower the viscosity of the eluents and thus backpressure in UHPLC separations.

Reversed-phase separation

In general, reverse phase (RP) separations are most widely used in NTS, employing a rather hydrophobic stationary phase chemistry (mostly C18-, occasionally C8-modified silica gel), or less often more polar columns (biphenyl, pentafluorophenyl (PFP), or phenyl-hexyl modified silica gel) as evident from overviews of methods applied in collaborative trials by the participating laboratories [9,10,11]. On C18 columns, a large fraction of typical environmental contaminants shows a good retention factor and good peak shapes, and the retention stems mainly from hydrophobic interactions. Columns with aromatic ligands (biphenyl-, PFP-, phenyl-hexyl) allow for dipole–dipole and π–π interactions with the analytes, which results in a different selectivity and an increased retention of polar compounds. All silica-based columns have a certain activity of free, acidic silanol groups which are acidic with predominant pKa values in ranges from 3.5 to 4.6 and from 6.2 to 6.8 [101]. These also contribute to the retention of analytes through dipole–dipole (if neutral) or ion exchange (if ionised) interactions, the latter affecting particularly basic analytes, often resulting in poor peak shapes [102]. Vendors continuously expand their portfolio of columns with low free silanol group activity based on advanced synthesis and many such low-activity columns are available, but a complete elimination of free silanol groups is not possible. Polymer columns are an alternative avoiding the drawbacks of silica, but at the cost of lower peak capacities and are hardly used in screening methods. On the other hand, stationary phases associating C18 or C8 ligands with a modified polar particle surface can reduce free silanol group activity while simultaneously allowing hydrophobic interactions and an enhanced retention of polar and hydrophilic compounds [103].

Further considerations in terms of column chemistry are the pH stability and the possibility to use high aqueous eluent fractions. While most silica-based columns allow pH values between 2 (hydrolysis of the bonded phase) and 8 (dissolution of the silica particle), specifically stabilised silica columns allow eluent pH between 1 and 11 or 12, allowing the use of basic eluents. Many C18 (and to a lesser extent other hydrophobic stationary phases) show so-called phase de-wetting [104] when used at high aqueous eluent fractions (typically > 97%) to increase the retention of hydrophilic compounds. The reason is a partial exclusion of the mobile phase from the hydrophobic pores of the bonded phase, which results in irreproducible RTs. The incorporation of polar groups in the bonded phase prevents de-wetting and allows the use of 100% aqueous mobile phases.

The preferred organic eluents for RPLC are methanol, a protic, acidic solvent acting as an H-bond donor, and acetonitrile, an aprotic solvent exhibiting dipole character. Both solvents are well-suited for polar compounds, with a range of eluent additives and exhibit a low background due to solvent clusters in ESI. Methanol is often preferred instead of acetonitrile due to the lower price, while acetonitrile has the advantage of lower viscosity and thus a lower LC back pressure. Acetonitrile also has a higher elution strength compared with methanol and often less peak broadening (e.g., for alcohol compounds). Thus, for hydrophilic compounds better retention can be achieved with methanol, while for hydrophobic compounds, the retention factors are lower and a faster elution can be achieved with acetonitrile. The addition of eluent modifiers in RPLC has two main goals, (i) improving chromatographic retention of ionisable compounds by adjusting the pH of the eluent, and (ii) improving ionisation of compounds. Both must be carefully considered and are in some cases divergent, for example, the RPLC retention of acidic compounds is improved at low pH, yielding the neutral molecule, while the presence of excess protons is not always favourable for deprotonation of these acidic compounds in negative ion mode ESI (Sect. “Choice of ionisation technique”). The choice is typically limited to a few eluent modifiers which are volatile enough to prevent the precipitation of salts in the ion source. These are formic and acetic acid, their ammonium salts, ammonium hydrogen carbonate and ammonia or combinations thereof (Table 2), which might be used as additives or as buffers.

Buffering of eluents is a common practice to obtain reproducible RTs of ionisable compounds with pKa values close to that pH. Small pH changes may be caused by the acidic silanol activity of the column or injection of extracts with a different pH than that of the eluent. With modern LC columns based on high purity silica with a low silanol group activity, the use of unbuffered solutions has become a more common practice. Particularly, formic or acetic acid at 0.1% concentration provide a good pH stability due to the relatively high proton concentrations, which results in a protonation of acidic compounds and of acidic silanol groups to reduce electrostatic interactions, which would cause poor peak shapes of basic compounds. Many other volatile eluent additives/buffers such as small aliphatic amines (e.g., triethylamine) or trifluoroacetic acid used in conventional LC are problematic for MS analysis. They cause severe ion suppression due to competition for charge in positive (amines) or negative mode (trifluoroacetic acid), may form stable ion pairs with other compounds preventing their detection, and can only be removed from the ion source (and LC instrumentation including tubing) after extensive cleaning procedures. The non-volatile ammonium fluoride gained some popularity as an eluent additive [52, 105]. It considerably improves the ionisation efficiency of phenolic and other compounds in ESI-mode as compared to ammonia or ammonium formate/acetate due to the high proton affinity of the fluoride anion. However, the concentration should be 1 mM or below.

Typical LC gradients comprise the whole range of organic eluent/water mixtures, starting at 0 (in case of appropriate columns) or 5% of organic eluent, increasing linearly up to 95–100%, followed by an isocratic phase of varying duration (typically < 10 min) at this level to elute hydrophobic constituents before re-equilibration. Depending on the extent of hydrophobic matrix constituents, an additional rinsing step with a solvent of higher elution strength (e.g., isopropanol) may be included before re-equilibration (e.g., for sediment extracts [106]). While it is good practice in RPLC to use the same composition of the injection solvent as the initial eluent composition (i.e., typically a high aqueous eluent fraction) to ensure good chromatographic peak shape, this approach is often difficult for screening analyses. As discussed in Sect. “Sampling and sample preparation for NTS”, sample preparation is usually limited to keep as many compounds as possible, thus the final extract for analysis often contains compounds and matrices with a large hydrophobicity range. Diluting such extracts with water to match the initial eluent composition of the LC will often result in precipitation of poorly soluble matrix constituents. A subsequent filtration might result in a loss of more hydrophobic compounds along with the filtered precipitates. On the other hand, the LC gradient should start from a low organic eluent fraction (typically 5%) to allow for a retention also of the more hydrophilic compounds. As a result, sample extracts (particularly of biota or sediments obtained with less polar solvents) have to be injected with a significantly higher fraction of methanol or acetonitrile as the initial eluent composition. Such a mismatch in solvent strength and viscosity will result in a deterioration of peak shapes or split peaks of early eluting compounds [107], which becomes more severe with increasing difference in the solvent fraction and larger injection volumes. Thus, a compromise must be found during method development weighing up which solvent fraction and injection volume is feasible with still-acceptable deterioration of peak shapes for early eluting compounds. Figure 3 shows the effect of injection solvent composition on peak shapes, which ranges from a slight deterioration to a complete splitting of the compound peak, with a major portion of the compound eluting at the column dead time. Peak splitting also has an impact on the peak detection in the subsequent data processing steps (Sect. “Data (pre-)processing and prioritisation for NTS”), as badly shaped peaks might not be detected, whereas split/double peaks may suggest the presence of two isomeric compounds, like in the case of atenolol at 70% or 100% methanol in Fig. 3. For large volume injections, a mismatch between the pH of the mobile phase and the injected sample can also result in a deterioration of peak shapes for ionic compounds if their pKa falls between these two pH values [108].

Extracted ion chromatograms of three different hydrophilic compounds at 50 ng/mL depending on the injection solvent composition for a RPLC separation (10 µL injection volume into 300 µL/min water:methanol 95:5 both with 0.1% formic acid at gradient start, on a Phenomenex Kinetex C18 EVO, 50 × 2.1 mm, 2.6 µm particle size; column dead time is 0.5 min)

Separation of hydrophilic and ionic compounds

Highly hydrophilic compounds (in terms of environmental concern often referred to as persistent mobile organic chemicals, PMOC) [109] are often not retained on typical RP columns and elute at or close to the column dead time, where typically strong ion suppression and interferences are observed, impeding reliable peak detection, identification and quantification. Several approaches are available to achieve a separation of highly hydrophilic, neutral and ionic compounds [110, 111], including HILIC, SFC, capillary electrophoresis, ion chromatography (IC) and MMLC. Typically, the logKOW (or logDOW in case of ionisable compounds) is used as an approximation to assess mobility and also chromatographic behaviour, although the LC retention of compounds is more complex and logDOW alone is insufficient to predict whether a compound is actually retained on a RPLC column or not [112].

Capillary electrophoresis and ion chromatography have so far been mainly used for the analysis of inorganic ions, but allow also a separation of ionic organic compounds with a wide polarity range. However, both techniques require a specific interfacing when coupled to MS and have some methodological restrictions, which so far limited a more widespread application in environmental analysis [111, 112], although quite a few studies employed capillary electrophoresis in non-targeted metabolomics [113]. Various types of capillary electrophoresis separations exist, of which capillary zone electrophoresis (CZE), micellar electrokinetic capillary chromatography (MEKC) and capillary electrochromatography (CEC) are most widely used [114]. In all cases, separations are carried out in small fused silica capillaries (20–200 µm diameter), thus sample volumes and flow rates are rather small (typically < 20 nL, and 1–20 nL/min, respectively). Due to the small sample volume, capillary electrophoresis is not very sensitive. However, the use of capillary electrophoresis–MS with a nanoflow sheath liquid interface for target and suspect screening analysis of drinking water samples demonstrated sensitivity down to < 100 ng/L for some analytes [115].

Ion chromatography typically utilises salt solutions, acids or bases with relatively high ionic strengths as eluents. Thus, a coupling to MS requires a reduction of the high ion concentration by a so-called suppressor containing ion exchange membranes or resins before the ion source [116]. Such an approach was successfully used for the suspect screening of haloacetic acids in drinking water after pre-concentration by SPE [117] as well as pesticide TPs in groundwater [118]. A limiting factor for screening applications is the sorption of more hydrophobic compounds to suppressor parts, which can be reduced by higher fractions of solvents [119]. The use of volatile buffers at lower concentrations in non-suppressed IC allows for a direct coupling to a MS ion source. However, the sensitivity is often lower and not all ions can be sufficiently well-separated, making this approach less suitable for screening applications.

SFC utilises supercritical CO2 as the main eluent, which might be modified by the addition of polar solvents (e.g., methanol) or aqueous salt solutions (e.g., ammonium hydroxide) to increase its low polarity in the pure state. It can be used with both hydrophobic stationary phases similar to RPLC and polar stationary phases similar to normal phase LC (NPLC) or HILIC. Thus, offering a large flexibility for adjusting the selectivity and compound domain of the separation [120, 121]. Furthermore, due to the low viscosity originally seen as a “green” substitute for NPLC, SFC gained particular interest in environmental analysis as a complementary technique to RPLC to separate hydrophilic contaminants on polar stationary phases [122, 123]. For certain substances with logDow values close to 0, SFC can exhibit better sensitivity compared to RPLC due to high CO2 content and low water content in the mobile phase which can improve ionisation in ESI [124]

Although different strategies exist for coupling NPLC to mass spectrometry, this remains a challenging issue [125] and no application for the screening of environmental samples has been published so far. Classical NPLC employing aprotic solvents is incompatible with ESI, but could be coupled with APCI or atmospheric pressure photoionisation (APPI) sources. So-called aqueous NPLC uses specific silica hydride-based stationary phases, which bear no silanol groups and can be operated in RP and NP mode, but have not found a widespread application so far [126].

Although the retention behaviour in HILIC separations is not entirely understood, the general idea is that it is caused by a partitioning of an analyte between an acetonitrile-rich mobile phase and a water-enriched layer partially immobilised onto a polar stationary phase [127]. Consequently, gradient separations start with a high fraction of acetonitrile and hydrophilic compounds are retained. The polar analytes are then eluted upon increasing the composition of the aqueous eluent. However, the aqueous fraction of the eluent must not exceed a certain level (typically around 30% [128]), otherwise the water-enriched layer at the surface will disappear, and the column will change into another separation mechanism. Sometimes buffer components (e.g., ammonium acetate) are required to minimise ionic interactions, which can lead to a decrease in ESI response. In analogy to RPLC, where the hydrophilic compounds are affected, in HILIC a large part of the more hydrophobic compounds of an environmental sample elute at the column dead time, which impairs their detection and identification. Thus, HILIC methods provide a complementary approach to RPLC and have so far been used for the screening of environmental samples together with a RPLC method [129]. Drawbacks of HILIC methods are the relatively long equilibration times when operating in gradient mode (it is recommended a post gradient re-equilibration of approximately 20 column volumes) as compared to RP separations and the need to inject the samples in a high solvent fraction [130]. For aqueous samples, which are the most relevant types of samples for HILIC separations, a solvent exchange is, therefore, required, either by a SPE method capable of retaining very hydrophilic analytes or evaporative concentration (see Sect. “Water”). In addition, highly hydrophilic compounds might not dissolve well in the injection solvent [131]. Compared to RPLC, HILIC shows broader chromatographic peaks due to slower and less uniform kinetics and mass transfer. A considerable variety of stationary phases are available for HILIC, which range from bare silica, diol, and amide to multifunctional bonded phases, also including anionic, cationic and zwitterionic functionalities. These show a widely different selectivity and retention behaviour of compounds. In particular, columns with ionic functionalities also show a strong retention of more hydrophobic, ionic analytes of the opposite charge.

In the literature, approaches combining columns with functionalities allowing multimodal interactions have also been termed MMLC separations. This term summarises stationary phases that may combine hydrophobic, ionic and/or polar functionalities, which may be operated in RP and/or HILIC mode [132]. Such mixed-mode columns have been used extensively for the separation of peptides and proteins [133], but rarely in environmental screening methods so far [15, 134]. They hold some promise to allow for the retention and separation of compounds with a wide range of physico-chemical properties in one single separation, particularly extending the RP amendable compound range towards more hydrophilic compounds. In one study, the retention of hydrophilic (ionic and non-ionic) model compounds was compared among RP, HILIC and MMLC columns, which showed a widely different selectivity [135]. The authors particularly noted that some bonded phases showed a significant column bleed from the ionic functional groups, decreasing linear dynamic range and sensitivity in LC–HRMS screening methods. Furthermore, inorganic anions and cations present in samples might cause ion suppression over a considerable RT range, as they are retained by the ion exchange functionalities as well [136].

Bieber et al. employed a direct sequential coupling of a RP with a HILIC column [122]. The poorly retained fraction of the RP-separation (i.e., the hydrophilic compounds) are transferred directly to the HILIC column, along with the HILIC eluent acetonitrile, via a mixing tee. Afterwards, the compounds retained on the RP column are eluted with a gradient with increasing acetonitrile fraction. This technique allowed covering a broad hydrophobicity range while allowing for the direct injection of aqueous samples. The combination of RP and HILIC columns is also promising for comprehensive two-dimensional LC (LCxLC), as both use compatible eluents and provide highly orthogonal separations [137, 138]. LCxLC applications in environmental screening also combined two RP columns [53, 139]. A limitation especially for screening methods, is that there is almost no software which can handle the data of the second separation dimension automatically.

Practical considerations for separation method selection

From the vast number of possible separation techniques and methods, most NTS studies so far only make use of LC, with a clear predominance of reversed phases, and laboratories have established their own routine methods and applied them in different larger scale screening studies [129, 140, 141]. The application of other techniques (especially MMLC, IC, EC, SFC) is still limited to individual, often exploratory studies, in which different setups are tested and the general usefulness of application is demonstrated. Table 3 provides a brief summary of the separation techniques discussed above, their compound domains and potential advantages and disadvantages as a starting point for the choice of the appropriate technique.

Regarding method parameters, Table 4 provides a brief overview of the main considerations for selecting the appropriate conditions. It is essential to evaluate the performance of a chosen method for the given compound domain and matrix using both representative standard compound mixtures and spiked matrix samples. For the latter, observation of the total ion chromatograms can already give an indication how well the matrix is spread along the chromatographic run time. A key question is whether to use the same or two different separation methods for both ionisation polarities. While two different separation methods can be tailored for a good retention and ionisation of respective compound types in each mode individually, using the same method for both runs allows for a direct comparison of positive and negative mode data. This is beneficial for compound identification but may reduce the coverage of some compound types. In addition, it must be considered whether a primary wide-scope screening method should be complemented with one or more additional methods for expanding the compound domain, which means an increased time and financial commitment.

Additional points to consider include background contamination and changes to the system over time. These can be assessed with appropriate QA/QC procedures (see Sect. “Quality assurance and quality control in NTS methods”) and are only mentioned briefly here. Background contamination can arise from numerous factors, including solvents, pumps and degassers. The background present in solvents can vary among suppliers, in general, commercial LC–MS grade solvents are recommended over bi-distilled solvents or Millipore water. Carry-over and other factors should be assessed with blanks during the sample runs, while other factors such as column age, RT shifts over time and loss of separation power can be assessed with internal standards (IS) to ensure a timely replacement.

Choice of ionisation technique

The amenability of a compound to MS detection is foremost governed by the conversion to its ionised form, since this is imperative for this analysis technique. The choice of ionisation technique or interface used in MS systems both defines and restricts the analysable chemical domain. For LC–(HR)MS-based analysis of organic compounds, mainly electrospray ionisation (ESI), atmospheric pressure chemical ionisation (APCI), and atmospheric pressure photoionisation (APPI) are employed and will be discussed briefly in the following. The decision on which ionisation technique to use depends mainly on the mass as well as the polarity of the analytes of interest, as shown in Fig. 4.

For non-polar to moderately non-polar analytes, such as compounds without any polar functional groups or steroids, APPI and APCI are useful techniques, whereas ESI is best for molecules with polar functional groups as discussed further below. In general, it is difficult to predict ionisation efficiencies in NTS, since without analytical standards no exact quantification is possible. The need to provide some form of quantitative information in NTS has led to the development of several strategies to predict ionisation efficiencies in recent years (Sect. “Quantification and semi-quantification of suspects and unknowns”).

Electrospray ionisation

ESI, first introduced by Fenn et al. in 1984 [142], is most widely employed when targeting medium-polar-to-polar compounds ranging from small molecules up to “molecular elephants” of over 100,000 Da as mentioned in Fenn’s Nobel Prize speech. Typically, molecules with polar functional groups, such as alcohols, carboxylic groups, or amines ionise well. ESI is a soft ionisation technique, which allows the production of intact gas-phase ions from a liquid sample, allowing to easily hyphenate LC with (HR)MS instruments. Strictly speaking, ESI is not an ionisation source, but is rather based on ion transfer, i.e., ions must be previously present in the solution (molecules forming adducts, protonated or deprotonated). In short, an electrostatically charged aerosol consisting of µm-sized droplets is formed from the mobile phase (containing the analytes), supported by a nebuliser gas (N2) under an electric field. Due to rapid solvent evaporation, the size is reduced until ions are liberated into the gas phase. Advantageously, ESI is operable in positive or negative ionisation mode, i.e., generating positively or negatively charged ions that are accelerated into the mass spectrometer. One characteristic of ESI is the formation of adduct ions (see “Glossary”) depending on the sample matrix and the presence of ions in the mobile phase. Modifiers can be added to the eluent to improve ionisation efficiency of certain compounds (see Sect. “Reversed-phase (RP) separation”). If more than one ion species is formed from the native molecule, this can facilitate identification as multiple adduct species provide extra information to define the mass of the molecule of interest. However, this phenomenon renders additional steps in data analysis necessary, namely, to group/merge these different features into a single compound (e.g., [M + H]+, [M + Na]+, [M + NH4]+ and [M + K]+ in positive mode, or [M–H]−, [M + CH3COO]−, [M + Cl]− in negative mode), a process termed componentisation (see Sect. “Data pre-processing”). Furthermore, the intensity is spread over several m/z, leading to lower limit of quantifications (LOQs). ESI generally results in singly charged ions for small molecules, but multiply charged ion species are observed especially for larger molecules, such as proteins and other large biomolecules. For environmental cases, large molecules such as water-soluble polymeric substances in wastewater have more than one charge state [143]. Charge states can be identified by calculating the m/z differences between the adjacent isotopologues, e.g., [M + 2H]2+ differs ≈ 0.504 in its isotopologue pattern. ESI is an excellent choice when dealing with medium polar analytes in polar samples, such as water. However, when analysing less hydrophilic compounds in extracts of soil, sediments or biota samples, other ionisation techniques such as APCI and APPI might be more suitable.

Atmospheric pressure chemical ionisation and photoionisation

APCI and APPI are both soft ionisation techniques, producing mass spectra similar to those of ESI in terms of (low) in-source fragmentation. In contrast to ESI, however, both APCI and APPI are restricted to molecules below 2000–3000 Da, since above this limit either ion formation is not effective or in-source fragmentation increases significantly [144, 145]. APCI has been coupled with both LC and GC, whereas APPI is typically coupled with LC.

In APCI, a series of chemical reactions with mobile phase and nitrogen sheath gas molecules leads to the formation of reagent ions (e.g., NH3, CH4), which consecutively react with sample molecules and generate sample ions in the gas phase. The ion species formed are primarily (de)protonated molecules and molecular ions, which should be considered when generating molecular formulas [106, 143]. Since vaporisation of the LC stream in APCI is performed by high temperatures, analysis of thermally labile molecules can be difficult [146]. Typically, lower ion suppression and/or matrix effects are observed in APCI compared with ESI. Depending on the sample matrix and the ionisation efficiency of the compound in the respective ion source, this can result in better sensitivity, e.g., for certain flame retardants [147]. Within the US EPA’s Non-Targeted Analysis Collaborative Trial (ENTACT), the complementarity of ESI and APCI in expanding the chemical space coverage was highlighted, including a detailed inspection of how well diverse groups of chemicals ionise in APCI [148].

APPI can be used in LC–MS-based analysis to measure compounds of low polarity [149]. Here, ions are formed either directly (APPI) or indirectly via dopant assisted photoionisation (DA-APPI). In the case of direct photoionisation, the analyte molecule has a lower ionisation potential than the energy of the photon emitted by the light source (Ar lamp 11.2 eV; Kr lamp 10.03 eV: 10.64 eV = 4:1). In case of DA-APPI, the dopant/solvent employed is amenable to direct photoionisation, produces reagent ions and subsequently ionises the analyte. For the latter approach, care must be taken regarding the miscibility of the dopant (solvents with ionisation potential < 11.2 eV or 10.03 eV, depending on the light source) and the mobile phase, especially when using typical RPLC solvents [145]. Isopropanol (ionisation potential: 10.22 eV) and to a lesser extent methanol can serve as dopant compatible with RPLC separation [143]. As in APCI, prevalent ion species also include molecular ions. APPI is also known to be less affected by matrix effects or ion suppression [143, 150].

A limited number of publications are available comparing sensitivity using different ion sources. A study investigating 40 pesticides in garlic and tomato extracts demonstrated that ESI results in lower limit of detections (LODs) in most cases compared with APCI or APPI [150]. Another study performed a detailed comparison of ESI and APCI for polyaromatic compounds [151] showing that, since ESI yields poor (or no) detection for some compound classes, both APPI and APCI can open the analytical window to compounds of interest in the low, medium to non-polar chemical space for LC-based methods. Clearly, if a more comprehensive view of the sample is desired, combining different ionisation sources would expand the compound coverage in a sample, at the cost of increasing analysis time and effort and additional data analysis steps.

Choice of mass spectrometry settings

The choice of mass spectrometry settings is primarily guided by the HRMS instrument available, the purpose of measurement as well as the separation method(s) chosen. A minimum number of mass spectrometry detection points are necessary to describe a chromatographic peak (i.e., a chromatographically separated compound) to facilitate peak finding. Although quantitative analysis generally aims for 12 to 20 data points per peak, since NTS is not necessarily quantitative, a reasonable number of points, i.e., a minimum of 7, is highly recommended to improve peak detection and thus reduce the inclusion of noise in the final results (see Sect. “Data (pre-)processing and prioritisation for NTS”). Depending on the chromatographic peak width and the corresponding cycle time/acquisition speed (see Sect. “Glossary and definitions”) to provide a certain number of points, the mass spectrometry settings should be adjusted accordingly (e.g., resolution, number of MS2 experiments). In the following, the two most common HRMS instruments will be discussed in more detail, namely, time of flight MS (TOF–MS) and Orbitrap MS. These mass spectrometers are typically coupled with lower resolution mass spectrometers (resolution < 5000) such as quadrupoles or ion traps to provide MS2 or MSn capability. Fourier Transform Ion Cyclotron Resonance (FT–ICR) MS with highest resolution up to 10 million and mass accuracy < 0.2 ppm provides even greater identification capabilities but has so far mainly been used in studies on organic matter characterisation [152]. Due to higher price and longer cycle times required for ultrahigh resolution, it has only been used a few times for NTS of small molecules [153].

Full scan data, mass accuracy and resolution

TOF–MS instruments are characterised by their fast acquisition rates (easily 50 Hz, depending on the type of instrument), which does not affect its resolution. The highest achievable resolutions for state-of-the-art instrumentation are approximately 60,000 for m/z 300 (resolution increases for higher masses). In TOF–MS the continuous ion beam is chopped into ion packets before the flight tube, such that a certain number of ion packets (also called transients) will be combined into one mass spectrum. As a result, the higher the acquisition rate, the fewer ion packets will be combined, yielding a lower absolute signal intensity (and subsequently, lower sensitivity).