Abstract

There exist many sixth-order iterative methods for solving nonlinear scalar equations. The purpose of this work is to bring them together. We develop a scheme that unifies sixth-order iterative methods. Convergence analysis shows that the methods, and families of methods, formulated through the scheme are sixth-order convergent. Finally, some computational results are reported to verify the developed theory.

Introduction

Many problems in science and engineering require solving a nonlinear scalar equation $f(x) = 0$ [1–16]. As a result, solving nonlinear equations is an important part of scientific computing, and various iterative methods exist for this purpose. We are interested in sixth-order iterative methods, and their dynamics, for finding a simple zero $\gamma$ of $f$, that is, $f(\gamma) = 0$ and $f'(\gamma) \neq 0$. Many sixth-order iterative methods for nonlinear scalar equations are available (see, e.g., [5–7, 12, 13, 15]). This paper develops a scheme for constructing sixth-order iterative methods and families of methods. The scheme unifies existing sixth-order methods: we show that various existing sixth-order iterative methods can be generated by the scheme through a proper choice of its independent parameters.

Let us first review the existing sixth-order convergent iterative methods and families of methods. Sharma and Guha [13], through a modification of Ostrowski’s well-known method [11], developed the following sixth-order convergent method (SG):

where $a \in \mathbb{R}$ is a free parameter. From here onwards, the preceding method is referred to by the initials of its authors, i.e., SG. Neta [7] proposed a one-parameter family of three-step sixth-order convergent iterative methods (NETA):

From here onwards, the above method is referred to as NETA. We observe that for $\alpha = 0$ the second steps of the methods NETA and SG coincide, while for the choice $a = -1$ their third steps coincide. In [6], Grau and Díaz-Barrero developed yet another sixth-order variant of Ostrowski’s method:

The term $f(x_n)/(f(x_n) - 2f(y_n))$ is referred to as Ostrowski’s correction factor [6]. From here onwards, the above method is referred to as GD. Through a suitable modification of Ostrowski’s method, Chun and Ham [5] derived the following family of sixth-order iterative methods:

where $u_n = f(y_n)/f(x_n)$ and $H$ is a real-valued function satisfying $H(0) = 1$ and $H'(0) = 2$. If one chooses $H(u) = (1 + au)/(1 + (a-2)u)$, one obtains the method SG, while if one chooses $H(u) = 1/(1 - 2u)$, one obtains the method GD. From here onwards, the above method is referred to as CH. Note that the methods SG, CH, and GD are all obtained by modifying Ostrowski’s method; as a result, their first two steps are the same. Through an elegant modification of the well-known Kung and Traub method [17], Chun and Neta [16] recently proposed the following sixth-order iterative method:

From here onwards, the above method is referred to as CN.
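The five methods reviewed above can be sketched in a few lines of Python. This is a minimal sketch and not the authors' code. The formulas are our transcription of the cited papers [5–7, 13, 16] as we read them. They were chosen to agree with the relations stated in the text: NETA with $\alpha = 0$ shares the second step of SG, NETA and SG with $a = -1$ share the third step, and CH reduces to SG or GD for the stated choices of $H$. Several details are our own assumptions:

- the function names;
- the default values $a = 2$ and $\alpha = 5$, taken from the numerical section;
- the default CH weight $H(u) = 1 + 2u$, a hypothetical choice satisfying $H(0) = 1$ and $H'(0) = 2$.

Each call performs three evaluations of $f$ and one of $f'$.

```python
from typing import Callable

Fn = Callable[[float], float]


def _newton_part(f: Fn, df: Fn, x: float):
    """First step y = x - f(x)/f'(x), shared by all five methods."""
    fx, dfx = f(x), df(x)          # 1st f evaluation, the only f' evaluation
    y = x - fx / dfx
    return fx, dfx, y, f(y)        # 2nd f evaluation


def sg(f: Fn, df: Fn, x: float, a: float = 2.0) -> float:
    """Sharma-Guha: Ostrowski step, then weight (f(x)+a f(y))/(f(x)+(a-2) f(y))."""
    fx, dfx, y, fy = _newton_part(f, df, x)
    z = y - fx / (fx - 2 * fy) * fy / dfx
    fz = f(z)                      # 3rd f evaluation
    return z - (fx + a * fy) / (fx + (a - 2) * fy) * fz / dfx


def neta(f: Fn, df: Fn, x: float, alpha: float = 5.0) -> float:
    """Neta: King-type second step, third weight (f(x)-f(y))/(f(x)-3 f(y))."""
    fx, dfx, y, fy = _newton_part(f, df, x)
    z = y - (fx + alpha * fy) / (fx + (alpha - 2) * fy) * fy / dfx
    fz = f(z)
    return z - (fx - fy) / (fx - 3 * fy) * fz / dfx


def gd(f: Fn, df: Fn, x: float) -> float:
    """Grau-Diaz-Barrero: Ostrowski's correction factor in steps two and three."""
    fx, dfx, y, fy = _newton_part(f, df, x)
    w = fx / (fx - 2 * fy)         # Ostrowski's correction factor
    z = y - w * fy / dfx
    return z - w * f(z) / dfx


def ch(f: Fn, df: Fn, x: float,
       H: Callable[[float], float] = lambda u: 1 + 2 * u) -> float:
    """Chun-Ham: third weight H(u), u = f(y)/f(x), with H(0) = 1, H'(0) = 2.

    The default H(u) = 1 + 2u is a hypothetical choice for illustration.
    """
    fx, dfx, y, fy = _newton_part(f, df, x)
    z = y - fx / (fx - 2 * fy) * fy / dfx
    return z - H(fy / fx) * f(z) / dfx


def cn(f: Fn, df: Fn, x: float) -> float:
    """Chun-Neta: Kung-Traub second step, third weight 1/(1 - u - v)^2."""
    fx, dfx, y, fy = _newton_part(f, df, x)
    u = fy / fx
    z = y - fy / dfx / (1 - u) ** 2
    fz = f(z)
    v = fz / fx
    return z - fz / dfx / (1 - u - v) ** 2


if __name__ == "__main__":
    # Hypothetical test problem: f(x) = x^3 + 4x^2 - 10, simple root near 1.3652.
    f = lambda x: x**3 + 4 * x**2 - 10
    df = lambda x: 3 * x**2 + 8 * x
    for method in (sg, neta, gd, ch, cn):
        x = 1.4
        for _ in range(2):
            x = method(f, df, x)
        print(method.__name__, x)
```

All five methods share the Newton first step and reuse $f(x_n)$ and $f'(x_n)$ in their later steps. Their cost per iteration is therefore identical, which is what makes iteration counts a fair basis for comparison.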

In this work, we propose a scheme for constructing sixth-order convergent iterative methods. The methods formulated through the scheme consist of three steps. During each iteration, they require three evaluations of the function and one evaluation of its derivative. We also show that existing methods with this cost, such as SG, NETA, GD, and CH, can be formed through the proposed scheme. Consequently, the proposed scheme unifies these sixth-order iterative methods, which is the main purpose of this work. The rest of the paper is organized as follows. The ‘Scheme for constructing sixth-order iterative methods’ section presents the scheme. In the ‘Unification of sixth-order iterative methods’ section, we use the scheme to generate various well-known sixth-order iterative methods. The ‘Numerical work’ section presents numerical and dynamical comparisons of various methods. Finally, the ‘Conclusions’ section concludes the article.

Scheme for constructing sixth-order iterative methods

Our aim is to develop a sixth-order iterative scheme that unifies the sixth-order methods published in the literature. For this purpose, we consider the following three-step iterative scheme:

Here, $a_j$, $b_k$, $\mu_1$, $\mu_2$, $m$, and $l$ are independent parameters. The parameters $a_j$, $b_k$, $\mu_1$, and $\mu_2$ are real numbers, while the parameters $m$ and $l$ are positive integers. The parameters are determined through the following convergence theorem:

Theorem 1. Let $\gamma$ be a simple zero of a sufficiently differentiable function $f$ in an open interval $D$. If the initial guess $x_0$ is sufficiently close to $\gamma$, then the scheme (6) defines sixth-order iterative methods if and only if $a_1 = 2$ and $b_1 = 2/\mu_1$, and the error equation for the family of methods is given as

where $e_n = x_n - \gamma$ and $c_m = f^{(m)}(\gamma)/m!$ for $m \geq 1$.

Proof. The Taylor expansion of $f(x_n)$ around the solution $\gamma$ is given as

Furthermore, from the preceding equation, we have

From the first step of our scheme, we write

Substituting Equation 10 into the preceding equation yields

Expanding $f(y_n)$ around the solution $\gamma$ through the Taylor series and using $f(\gamma) = 0$, we have

Substituting Equation 12 into the above equation, we obtain

Dividing Equations 8 and 13 gives

From the second step of our scheme (6), we may write

Substituting $f(x_n)/f'(x_n)$ from Equation 10 and $f(y_n)/f(x_n)$ from Equation 14 into the above equation yields

The Taylor expansion of $f(z_n)$ around the solution $\gamma$ is given as

Substituting $z_n - \gamma$ from Equation 16 into the above equation returns

From the third step of our scheme (6), we have

Substituting Equations 9, 14, and 18 into the above equation furnishes the following error relation:

From the above error relation, we may deduce that the three-step scheme (6) defines sixth-order methods, or families of methods, if the following three equations are satisfied simultaneously:

A simple calculation shows that a solution is

Substituting $a_1 = 2$ and $b_1 = 2/\mu_1$ into Equation 19 produces the required error equation (7). This proves the theorem. □
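A leading-order sketch shows where the two conditions come from. It is an illustrative reading, not a transcription of Equation 6, and rests on the following assumptions:

- the second step has the form $z_n = y_n - W(u_n)\, f(y_n)/f'(x_n)$, with $W(u) = 1 + a_1 u + a_2 u^2 + \cdots$;
- the third step has the form $x_{n+1} = z_n - V_n\, f(z_n)/f'(x_n)$, with $V_n = 1 + b_1 \mu_1 u_n + O(e_n^2)$;
- $u_n = f(y_n)/f(x_n)$.

Writing $A = c_2/c_1$ and $B = c_3/c_1$, and using $f'(x_n) = c_1\,(1 + 2A e_n + O(e_n^2))$, we obtain

$$
\begin{aligned}
y_n - \gamma &= A e_n^2 + 2(B - A^2)\, e_n^3 + O(e_n^4), \\
u_n &= A e_n + (2B - 3A^2)\, e_n^2 + O(e_n^3), \\
z_n - \gamma &= (2 - a_1)\, A^2 e_n^3 + O(e_n^4), \\
x_{n+1} - \gamma &= (2 - b_1 \mu_1)\, A\, e_n\, (z_n - \gamma) + O\big(e_n^2\, (z_n - \gamma)\big).
\end{aligned}
$$

Hence $a_1 = 2$ raises the second step to fourth order, and $b_1 \mu_1 = 2$ cancels the $e_n^5$ term. This leaves $x_{n+1} - \gamma = O(e_n^6)$, in line with Theorem 1.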

Consequently, this work contributes the following three-step sixth-order convergent iterative scheme for solving nonlinear equations:

In the preceding scheme, the parameters $a_j$ and $b_k$ (with $j \geq 2$ and $k \geq 2$), $\mu_1$, and $\mu_2$ are free to choose. From here onwards, the above scheme is referred to as USS for short. USS thus offers opportunities to form various sixth-order methods. The next section explores a few interesting choices of these parameters that recover methods and families of methods from the published literature.

Unification of sixth-order iterative methods

Let us now derive methods from the published literature, starting with the family of methods developed by Sharma and Guha [13]. For this purpose, we consider

Substituting these values into the second and third steps of the proposed scheme USS, we get

Using $1 + r + r^2 + r^3 + \cdots = 1/(1-r)$ for $|r| < 1$ in the second and third steps of the preceding equation, we obtain the method SG developed by Sharma and Guha [13]. Now, to formulate the family of methods developed by Chun and Ham [5], we consider
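The geometric-series step can be checked coefficient by coefficient. Several of the weights involved have the form $(1 + \beta u)/(1 + (\beta - 2)u)$ with $u = f(y_n)/f(x_n)$:

- $\beta = a$ for the third step of SG;
- $\beta = \alpha$ for the King-type second step of NETA and $\beta = -1$ for its third step;
- $\beta = 0$ for Ostrowski’s correction factor $f(x_n)/(f(x_n) - 2f(y_n))$.

For $|(2 - \beta)u| < 1$,

$$\frac{1 + \beta u}{1 + (\beta - 2)u} = (1 + \beta u)\sum_{k \ge 0} (2 - \beta)^k u^k = 1 + \sum_{k \ge 1} 2(2 - \beta)^{k-1} u^k = 1 + 2u + 2(2 - \beta)u^2 + \cdots.$$

Every such weight therefore has coefficient 2 on $u$. This matches $a_1 = 2$ in the second step, and $b_1 = 2/\mu_1$ in the third step if that weight is taken with $\mu_1 = 1$ (our assumption about how these choices enter USS). For $\beta = 0$ the coefficients are $2^k$, recovering $1/(1 - 2u) = \sum_{k \ge 0} (2u)^k$.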

Substituting the preceding choices into the second and third steps of the scheme USS, we get

Using $1 + r + r^2 + r^3 + \cdots = 1/(1-r)$ for $|r| < 1$ in the second step of the preceding equation, we obtain the second step of the method CH developed by Chun and Ham [5]. The third step of the method CH is $x_{n+1} = z_n - H(u_n)\, f(z_n)/f'(x_n)$ (see Equation 4), where $u_n = f(y_n)/f(x_n)$ and $H$ is a real-valued function satisfying $H(0) = 1$ and $H'(0) = 2$. Through a simple calculation, the function $H$ may be expressed as

As a consequence, the method (21) formulated through the scheme USS is the method developed by Chun and Ham [5]. The method of Grau and Díaz-Barrero [6] can be derived by considering the following choices

in the second and third steps of the developed scheme USS. To derive the family of methods developed by Neta [7] (Equation 2), the choices are

To formulate the recently developed sixth-order method CN of Chun and Neta [16], the parameters in the scheme USS are chosen as follows:

Substituting the above values in the scheme USS, we get

Using $1 + 2r + 3r^2 + 4r^3 + \cdots = 1/(1-r)^2$ for $|r| < 1$ in the second and third steps of the preceding method yields the method of Chun and Neta [16].
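The same bookkeeping applies to CN, with the differentiated geometric series in place of the ordinary one. Write $u = f(y_n)/f(x_n)$ and $v = f(z_n)/f(x_n)$, and assume (as we read the choices above) that the third-step weight is taken with $\mu_1 = \mu_2 = 1$. Then

$$\frac{1}{(1-u)^2} = \sum_{j \ge 0} (j+1)\, u^j = 1 + 2u + 3u^2 + \cdots, \qquad \frac{1}{(1-u-v)^2} = \sum_{k \ge 0} (k+1)(u+v)^k = 1 + 2(u+v) + 3(u+v)^2 + \cdots.$$

Both weights have coefficient 2 on $u$, matching $a_1 = 2$ and $b_1 = 2/\mu_1$ with $\mu_1 = 1$. Since $v = O(e_n^3)$ multiplies $f(z_n)/f'(x_n) = O(e_n^4)$, the $v$ terms affect only the $O(e_n^7)$ part of the error.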

Numerical work

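Let $\{x_n\}$ be a sequence, generated by an iterative method, converging to $\gamma$, and let $e_n = x_n - \gamma$. If there exist a real number $\xi \in [1, \infty)$ and a nonzero constant $C$ such that

$$\lim_{n \to \infty} \frac{|e_{n+1}|}{|e_n|^{\xi}} = C,$$

then $\xi$ is called the convergence order of the sequence and the constant $C$ is called the asymptotic error constant. From the preceding relation, the computational order of convergence (COC) is approximated as follows:

$$\xi \approx \frac{\ln \left| (x_{n+1} - \gamma)/(x_n - \gamma) \right|}{\ln \left| (x_n - \gamma)/(x_{n-1} - \gamma) \right|}.$$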
All computations are performed in the programming language C++. For numerical precision, the C++ library ARPREC [1] is used. The method is considered to have converged when both the distance between two consecutive iterates, $|x_{n+1} - x_n|$, and the absolute value of the function, $|f(x_n)|$ (also referred to as the residual), are less than $10^{-300}$. The maximum number of iterations allowed is 200.
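The stopping rule and the COC estimate can be sketched in a few lines. The paper uses C++ with ARPREC. This sketch instead uses Python's standard `decimal` module at 2,000 significant digits (an assumption, chosen so that iterates far below $10^{-300}$ remain meaningful). It also uses the hypothetical test function $f(x) = x^3 + 4x^2 - 10$, which is not necessarily one of the paper's test functions. The sketch runs the GD method until both $|x_{n+1} - x_n|$ and $|f(x_{n+1})|$ fall below $10^{-300}$, or until 200 iterations have passed. It then estimates the COC as a ratio of logarithms of successive errors, using the last iterate in place of the unknown $\gamma$.

```python
from decimal import Decimal, getcontext

getcontext().prec = 2000           # assumed working precision (digits)


def f(x: Decimal) -> Decimal:      # hypothetical test function
    return x**3 + 4 * x**2 - 10


def df(x: Decimal) -> Decimal:
    return 3 * x**2 + 8 * x


def gd_step(x: Decimal) -> Decimal:
    """One step of the Grau-Diaz-Barrero method (3 f and 1 f' evaluations)."""
    fx, dfx = f(x), df(x)
    y = x - fx / dfx
    fy = f(y)
    w = fx / (fx - 2 * fy)         # Ostrowski's correction factor
    z = y - w * fy / dfx
    return z - w * f(z) / dfx


def solve(x0: Decimal, tol: Decimal = Decimal("1e-300"), max_iter: int = 200):
    """Iterate until |x_{n+1} - x_n| < tol and |f(x_{n+1})| < tol."""
    xs = [x0]
    for _ in range(max_iter):
        if f(xs[-1]) == 0:         # exact root: the next step would divide by zero
            break
        xs.append(gd_step(xs[-1]))
        if abs(xs[-1] - xs[-2]) < tol and abs(f(xs[-1])) < tol:
            break
    return xs


def coc(xs, gamma: Decimal) -> Decimal:
    """COC from the last three iterates whose errors |x_n - gamma| are nonzero."""
    e = [abs(x - gamma) for x in xs if x != gamma]
    e0, e1, e2 = e[-3], e[-2], e[-1]
    return (e2 / e1).ln() / (e1 / e0).ln()


if __name__ == "__main__":
    xs = solve(Decimal(1))
    print(len(xs) - 1, "iterations, COC ~", float(coc(xs, xs[-1])))
```

Using the final iterate as $\gamma$ is a common practical substitute when the exact zero is unknown. It only works when the working precision is well beyond the errors used in the ratio, which is why the precision is set so high here.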

Solving nonlinear equations

The methods are tested for the following functions:

The free parameters are chosen as follows: $a = 2$ in the method SG, $\alpha = 5$ in the method NETA, and $\beta = 3$ in the method CH. The outcome of the numerical experimentation is presented in Table 1, which reports the number of function evaluations and the COC during the second-to-last iterative step. The COC is rounded to the nearest significant digit. Table 1 shows that the methods GD and CN give better results.

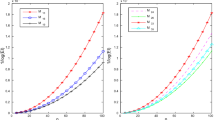

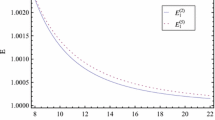

Dynamic behavior of various sixth-order methods

Let $f(x)$ be a complex polynomial and $\gamma$ be one of its zeros. Furthermore, let $x_0 \in \mathbb{C}$ be a starting point for an iterative method. The sequence $\{x_n\}$ generated by the iterative method may or may not converge to the zero $\gamma$. If the sequence converges to $\gamma$, then the starting point $x_0$ is said to be attracted to $\gamma$. The basin of attraction corresponding to a zero $\gamma$ of the complex polynomial $f(x)$ is the set of all starting points $x_0$ that are attracted to $\gamma$.

To make the figures, we first take the rectangle $D = [-1.5, 1.5] \times [-1.5, 1.5]$ and divide it into a $1{,}000 \times 1{,}000$ grid [18–20]. We then apply the iterative method starting at each grid point. The iterative method is considered to converge if the residual falls below $10^{-15}$ within a maximum of 10 iterations. If the iterative method does not produce a residual less than $10^{-10}$ within the maximum allowed number of iterations, we say that the initial point does not converge to any root. We also record the mean number of iterations and the percentage $\omega$ of diverging points [14].
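A reduced version of this experiment can be sketched in Python with plain complex floats. The sketch is illustrative only, and it makes several assumptions of its own:

- it uses the GD method on the hypothetical example $x^3 - 1$;
- it uses a coarse grid in place of the $1{,}000 \times 1{,}000$ one;
- it applies a single residual tolerance of $10^{-12}$, chosen for double precision;
- the names `gd_step`, `classify`, and `basins` are ours.

Each grid point is labeled with the index of the root it converges to, or $-1$ otherwise. The sketch also reports the mean number of iterations and the percentage $\omega$ of non-converging points.

```python
import cmath


def p(z: complex) -> complex:          # hypothetical example polynomial z^3 - 1
    return z**3 - 1


def dp(z: complex) -> complex:
    return 3 * z**2


ROOTS = [cmath.exp(2j * cmath.pi * k / 3) for k in range(3)]


def gd_step(z: complex) -> complex:
    """One Grau-Diaz-Barrero step (three p and one p' evaluations)."""
    fz, dfz = p(z), dp(z)
    y = z - fz / dfz
    fy = p(y)
    w = fz / (fz - 2 * fy)             # Ostrowski's correction factor
    s = y - w * fy / dfz
    return s - w * p(s) / dfz


def classify(z0: complex, max_iter: int = 10, tol: float = 1e-12):
    """Return (index of the root reached or -1, iterations used) for z0."""
    z = z0
    for it in range(1, max_iter + 1):
        try:
            z = gd_step(z)
            residual = abs(p(z))
        except (ZeroDivisionError, OverflowError):  # e.g. z = 0, where p'(z) = 0
            return -1, it
        if residual < tol:
            dists = [abs(z - r) for r in ROOTS]
            return dists.index(min(dists)), it
    return -1, max_iter


def basins(n: int = 101, half_width: float = 1.5):
    """Classify an n x n grid on [-h, h] x [-h, h]; return labels, mean its, omega."""
    labels, total_its, diverged = [], 0, 0
    for i in range(n):
        row = []
        for j in range(n):
            z0 = complex(-half_width + 2 * half_width * j / (n - 1),
                         -half_width + 2 * half_width * i / (n - 1))
            root, its = classify(z0)
            row.append(root)
            total_its += its
            diverged += root == -1
        labels.append(row)
    return labels, total_its / n**2, 100.0 * diverged / n**2
```

For example, `labels, mean_its, omega = basins(201)` classifies a $201 \times 201$ grid. The labels can be rendered as an image with any plotting library. Swapping `gd_step` for another method's step gives a rough analogue of the comparison shown in Figures 1 and 2.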

We consider the polynomial $f(x) = x^n - 1$, whose zeros are the $n$th roots of unity, given by [18–20]

$$\gamma_k = e^{2\pi i k/n}, \qquad k = 0, 1, \ldots, n-1.$$

The outcome of our numerical experimentation is reported in Figures 1 and 2. These figures show that the methods GD and CN, which require the fewest iterations and diverge at the fewest points, perform best.

Conclusions

This work has developed a scheme that unifies various sixth-order iterative methods. A comparison of the iterative methods, based on their basins of attraction as well as on numerical computations, is also presented. The ideas presented in this work can be further developed and extended to iterative methods of higher order, such as seventh or eighth order.

References

Bailey DH, Hida Y, Li XS, Thompson B: ARPREC (C++/Fortran-90 arbitrary precision package). 2012. http://crd.lbl.gov/~dhbailey/mpdist/. Accessed Jan 2012

Argyros IK: Computational Theory of Iterative Methods. In Studies in Computational Mathematics. Edited by: Chui CK, Wuytack L. New York: Elsevier; 2007.

Argyros IK, Hilout S: A unifying theorem for Newton’s method on spaces with a convergence structure. J Complexity 2011,27(1):39–54. 10.1016/j.jco.2010.08.005

Argyros IK, Chen D, Qian Q: The Jarratt method in Banach space setting. J. Comput. Appl. Math 1994, 51: 103–106. 10.1016/0377-0427(94)90093-0

Chun C, Ham Y: Some sixth-order variants of Ostrowski root-finding methods. Appl. Math. Comput 2007, 193: 389–394. 10.1016/j.amc.2007.03.074

Grau M, Díaz-Barrero JL: An improvement to Ostrowski root-finding method. Appl. Math. Comput 2006,173(1):450–456. 10.1016/j.amc.2005.04.043

Neta B: A sixth-order family of methods for nonlinear equations. Int. J. Comput. Math 1979, 7: 157–161. 10.1080/00207167908803166

Khattri SK, Argyros IK: Sixth order derivative free family of iterative methods. Appl. Math. Comput 2011,217(12):5500–5507. 10.1016/j.amc.2010.12.021

Khattri SK, Argyros IK: How to develop fourth and seventh order iterative methods? Novi. Sad. J. Math 2010, 40: 61–67.

Khattri SK, Log T: Constructing third-order derivative-free iterative methods. Int. J. Comput. Math 2011,88(7):1509–1518. 10.1080/00207160.2010.520705

Ostrowski AM: Solution of Equations and Systems of Equations. New York: Academic; 1960.

Ren H, Wu Q, Bi W: New variants of Jarratt’s method with sixth-order convergence. Numer. Algorithms 2009, 52: 585–603. 10.1007/s11075-009-9302-3

Sharma JR, Guha RK: A family of modified Ostrowski methods with accelerated sixth order convergence. Appl. Math. Comput 2007, 190: 111–115. 10.1016/j.amc.2007.01.009

Traub JF: Iterative Methods for the Solution of Equations. Englewood Cliffs: Prentice Hall; 1964.

Wang X, Kou J, Li Y: A variant of Jarratt method with sixth-order convergence. Appl. Math. Comput 2008, 204: 14–19. 10.1016/j.amc.2008.05.112

Chun C, Neta B: A new sixth-order scheme for nonlinear equations. Appl. Math. Lett 2012,25(2):185–189. 10.1016/j.aml.2011.08.012

Kung HT, Traub JF: Optimal order of one-point and multipoint iterations. J. Assoc. Comput. Mach 1974, 21: 643–651. 10.1145/321850.321860

Ardelean G: A comparison between iterative methods by using the basins of attraction. Appl. Math. Comput 2011,218(1):88–95. 10.1016/j.amc.2011.05.055

Amat S, Busquier S, Plaza S: Dynamics of the King and Jarratt iterations. Aequationes Math 2005, 69: 212–223. 10.1007/s00010-004-2733-y

Susanto H, Karjanto N: Newton’s method’s basins of attraction revisited. Appl. Math. Comput 2009, 215: 1084–1090. 10.1016/j.amc.2009.06.041

Acknowledgements

We gratefully acknowledge the help from Professor Hadi Susanto during the numerical experimentation. We appreciate the reviewers for their insightful comments which improved the quality of our paper.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

This work was done through the cooperation of both authors, who contributed equally to it. Both authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Khattri, S.K., Argyros, I.K. Unification of sixth-order iterative methods. Math Sci 7, 5 (2013). https://doi.org/10.1186/2251-7456-7-5

DOI: https://doi.org/10.1186/2251-7456-7-5