Abstract

An important step toward incorporating information into the second law of thermodynamics was taken by Landauer, who showed that the erasure of information implies an increase in heat. Most attempts to justify Landauer’s erasure principle are based on thermodynamic arguments. Here, using just the time-reversibility of classical microscopic laws, we identify three types of Landauer’s erasure principle depending on the relation between the two final environments: the one linked to a logical input 1 and the other to logical input 0. The strong type (which is Landauer’s original formulation) requires the final environments to be in thermal equilibrium. The intermediate type, giving an entropy change of \(k_{\textrm{B}} \ln 2\), occurs when the two final environments are identical macroscopic states. Finally, the weak Landauer’s principle, providing information erasure with no entropy change, applies when the two final environments are macroscopically different. Even though the above results are formally valid for classical erasure gates, a discussion on their natural extension to quantum scenarios is presented. This paper strongly suggests that the original Landauer’s principle (based on the assumption of thermalized environments) is fully reasonable for microelectronics, but it becomes less reasonable for future few-atom devices working at THz frequencies. Thus, the weak and intermediate Landauer’s principles, where the erasure of information is not necessarily linked to heat dissipation, are worth investigating.


1 Introduction

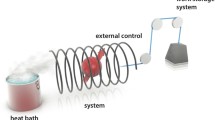

For more than a century, important efforts have been devoted to understanding the entropic and energetic costs of manipulating information. The first attempt at incorporating information into thermodynamics came as early as 1871, when James Clerk Maxwell presented the gedanken experiment now known as Maxwell’s demon [1] (a demon in the middle of a container with a trapdoor could transfer the fast and hot particles from a cold side to a hot one, in apparent violation of the second law of thermodynamics, if he had enough information about the particle velocities and positions). An analysis of Maxwell’s demon was conducted by Szilard [2] in 1929, when he studied an idealized heat engine with a one-particle gas and directly associated the information acquired by measurement with the physical entropy. Any practical implementation of Maxwell’s demon requires a finite memory to store information about the decisions on whether, for each particle, the trapdoor will be open or closed. Charles Bennett [3], and independently Oliver Penrose [4], clarified that the erasure of each bit of information in the memory requires a dissipation of heat in the environment, thus recovering the validity of the second law when the memory (demon) and its environment are properly included in the thermodynamic discussion. Bennett’s and Penrose’s conclusions were based on the previous work of Rolf Landauer [5] in 1961, showing that the erasure of information requires dissipation of a (minimum) amount of heat equal to \(k_{\textrm{B}} T\ln 2\), where \(k_{\textrm{B}}\) is Boltzmann’s constant and T is the temperature. The work of Landauer is considered a key element of what Bennett named thermodynamics of computation [3, 6,7,8] or what nowadays is known by the more general term of information thermodynamics [9,10,11,12], as seen in Fig. 1.

For the great majority of scientists, the seminal work of Landauer is a masterpiece of science [3, 13,14,15,16,17,18,19,20,21,22,23,24,25] connecting information and thermodynamics. Nevertheless, after more than 60 years, it is still accompanied by controversies. From the theoretical side, some scientists have persistently argued that the Landauer’s principle is not a pertinent way of discussing dissipation in computing devices [26,27,28,29,30,31,32,33,34]. From the experimental side, apart from recent successful experiments validating the Landauer’s erasure principle [14, 35,36,37,38,39,40], there are a few works suggesting some type of drawbacks [41, 42].

Solid symbols denote the number of times cited as a function of year for the keywords Landauer’s erasure principle (red), Information thermodynamics (green) and Thermodynamics of computation (orange). Open symbols denote the same information for some of the relevant papers mentioned in the references of this manuscript. The seminal work of Landauer reached a maximum of attention in the literature with its first experimental validation by Berut et al. [14]. The data are extracted from Ref. [43]

The original motivation of Landauer’s work, as part of his job as a researcher at the International Business Machines Corporation (IBM), was however not to establish a link between information and thermodynamics, but just to find the minimal (if any) amount of heat dissipated by an ideal computer. He brilliantly anticipated the minimum dissipation of \(k_{\textrm{B}} T \ln 2\) per bit. The heat dissipated in computers is nowadays much larger, and it is the real bottleneck that prevents further progress. The power dissipated in electron devices is directly proportional to the working frequency. Thus, the higher the frequency at which we make computations, the more heat is dissipated. The overall amount of power that can be dissipated from the chip imposes a limit on the operating frequency of around 5 GHz for real computers, as seen in Fig. 2. In other words, the technology to build a single transistor working at frequencies as high as 1 THz is well developed, but not the technology to extract the amount of heat generated in a chip with \(10^{10}\) of such transistors [44]. In Fig. 2, we indicate the power dissipation for the first computer built in 1945. It was named Electronic Numerical Integrator And Computer (ENIAC) [45], and it required 174 kilowatts of power to run 5000 simple additions or 300 multiplications per second, with a clock rate of 100 kHz. The typical measure of computer performance is given in floating point operations per second (FLOPS). Although the ENIAC did not work with bits, we can estimate its computer performance at around 500 FLOPS with a power efficiency of \(3\times 10^{-12}\) gigaflops/watt (see lower green dot in Fig. 2). We compare ENIAC with present-day supercomputers. In the November 2020 ranking of supercomputers in terms of energy efficiency [46], the NVIDIA DGX SuperPOD was the most energy-efficient supercomputer with 26.2 gigaflops/watt. This demonstrates an awesome improvement of 12 orders of magnitude in energy efficiency during the last 75 years. We can compare such numbers with Landauer’s prediction by noticing that a floating-point operation reads in two numbers and returns one. If this is done on a computer with finite memory capacity, eventually the number which is being returned must erase another number in memory. Thus, according to Landauer’s erasure principle stating that a dissipation of \(k_{\textrm{B}} T\ln 2 \approx 2.8\times 10^{-21}\) Joules (at room temperature) is required per bit erased, one Joule of energy for an ideal Landauer computer would allow \(3.6\times 10^{20}\) bits to be rewritten. Using 64 bits for a floating-point number, one Joule of energy would allow about \(6\times 10^{18}\) floating point operations, which means \(6\times 10^{9}\) gigaflops/watt. See the frequency-independent result in the orange line in Fig. 2. Certainly, the fact that even the most energy-efficient computers today are still 8 orders of magnitude below the Landauer limit implies that the electronic industry needs to solve many problems before the Landauer’s erasure principle becomes a relevant issue (Footnote 1).
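The arithmetic behind these estimates can be checked with a few lines of Python. This is only a sketch: the temperature of 293 K is our assumption (the text does not state it), chosen because it reproduces the quoted \(2.8\times 10^{-21}\) Joules, and all variable names are illustrative.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant in J/K (exact SI value)
T = 293.0            # ASSUMED room temperature in K, not stated in the text

# Landauer bound per erased bit, and the number of bits one Joule can erase
e_bit = K_B * T * math.log(2)
bits_per_joule = 1.0 / e_bit

# One 64-bit number is overwritten per floating-point operation
BITS_PER_FLOP = 64
landauer_gflops_per_watt = bits_per_joule / BITS_PER_FLOP / 1e9

# Figures quoted in the text: ENIAC (~500 FLOPS at 174 kW) and the 2020 record
eniac_gflops_per_watt = 500 / 174e3 / 1e9
superpod_gflops_per_watt = 26.2

# Orders of magnitude gained since ENIAC and still missing to the Landauer limit
gain_since_eniac = math.log10(superpod_gflops_per_watt / eniac_gflops_per_watt)
gap_to_landauer = math.log10(landauer_gflops_per_watt / superpod_gflops_per_watt)

print(f"k_B T ln 2            = {e_bit:.2e} J per bit")
print(f"bits per Joule        = {bits_per_joule:.2e}")
print(f"Landauer efficiency   = {landauer_gflops_per_watt:.2e} gigaflops/watt")
print(f"ENIAC efficiency      = {eniac_gflops_per_watt:.2e} gigaflops/watt")
print(f"orders gained / left  = {gain_since_eniac:.1f} / {gap_to_landauer:.1f}")
```

Changing `T` or `BITS_PER_FLOP` shifts the limit only by a small factor, so the gap of about 8 orders of magnitude is insensitive to these choices.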

The overall message of the Landauer’s erasure principle is that, even after developing the best technology in the future that will minimize the problems of heat dissipation in computers by 8 orders of magnitude, we will still be faced with the fact that some heat dissipated (\(k_{\textrm{B}} T \ln 2\) per bit) will not be an unnecessary nuisance, but a fundamental part of data erasure that cannot be avoided in any way, independently of the details of the computing device.

Solid green symbols denote the power efficiency of different CPUs as a function of the processor speed. The orange line denotes the power efficiency limit of the strong (original) Landauer’s erasure principle. The blue shaded region corresponds to processor speeds (operating frequencies) close to or above 1 THz, where the assumption of a thermal environment is less evident. The yellow dashed line shows the tendency in the last decades, indicating that computers will reach the non-thermal equilibrium regime before reaching the Landauer limit, so that predictions of computing efficiency based on non-thermal reservoirs will become more relevant than the strong (original) Landauer’s erasure limit

The central topic of our paper is how fundamental is the Landauer’s erasure principle and if some type of extension (or generalization) is possible. In general, the Landauer’s erasure principle is presented (and understood) in the literature as a fundamental result that cannot be avoided in any way. But, is it universally true that, independently of the details of the computing device, a heat dissipation of \(k_{\textrm{B}} T \ln 2\) per bit cannot be avoided when data are erased? We anticipate that the fact that the Landauer limit in Fig. 2 is independent of the frequency (processor speed) is suspicious because the process of thermalization (the change from a non-equilibrium to an equilibrium thermodynamic state) is a dynamical process that requires some time in either classical or quantum reservoirs.

Since the Landauer’s erasure principle is based on a thermodynamic explanation of computations, at first sight, it seems that the preliminary question that we have to answer is: how fundamental is thermodynamics? Thermodynamics is a scientific discipline that explains complex systems through macroscopic properties, avoiding the need to discuss microscopic details. Historically, the thermodynamic laws were developed only for systems in the so-called thermodynamic equilibrium. In recent years, however, thermodynamics as a scientific theory has evolved to cover systems outside of thermodynamic equilibrium [47]. The so-called classical irreversible thermodynamics, under the hypothesis of local equilibrium, carries most of the concepts and tools of equilibrium thermodynamics over to non-equilibrium systems. Nowadays, even systems outside of local equilibrium are being studied in different branches of thermodynamics [47]. Thus, whether a computing device represents a system that can be studied with some branch of thermodynamics is not in question. Owing to the flexibility of thermodynamics as a scientific discipline, it is always possible to construct a branch of thermodynamics with the ability to predict the macroscopic behavior of computing devices, even outside of thermodynamic equilibrium. Thermodynamics is becoming a science of everything [48], including a science of information thermodynamics.

The path followed in this paper to understand the universality (or the lack thereof) of the Landauer’s erasure principle is a study of the erasure of information from a microscopic (mechanical) point of view, just by assuming the time-reversibility of microscopic laws, and then checking whether our general results (independent of any thermodynamic concepts) coincide or not with the original Landauer’s erasure principle. We show that depending on the type of final environment involved in the erasure of a logical \({\textbf {1}}\) or a logical \({\textbf {0}}\), three results can be established. The original Landauer’s erasure principle, which we refer to as a strong type of Landauer’s erasure principle, is recovered when the final state of the environment is in thermodynamic equilibrium. Alternatively, an intermediate relation between manipulation of information and entropy change can be deduced when the only (macroscopic) condition imposed on the final environments is that they look indistinguishable (from a macroscopic point of view) when different logical inputs are involved. Such an intermediate relation gives the well-known limit \(k_{\textrm{B}} \ln 2\) of entropy change when applied to an erasure gate. Finally, for states of the environment that look distinguishable, we establish the weak type of Landauer’s erasure principle, which implies no entropy change for erasure computations.

Thus, we conclude that the original (strong) Landauer’s erasure result is not universal because thermal reservoirs are not universal. As we shall discuss in the last part of this paper, there are modern reservoirs/environments that never thermalize. Moreover, in case of thermalization, the dynamical transition from non-thermal to thermal reservoirs requires some time. In other words, as depicted by the shaded region of Fig. 2, the thermal reservoir assumption of the strong Landauer’s erasure principle cannot be accepted uncritically for computing devices that switch from one state to the other faster than the time required to thermalize the reservoir. In the modern language of open systems [49], these fast-changing gates involve non-Markovian environments. As previously indicated, it is important to clarify that the limitations of the Landauer’s erasure principle are not limitations of information thermodynamics itself, because it is always possible to include some type of macroscopic effects of such non-Markovianity in thermodynamic formulations of computation, beyond the original Landauer’s erasure principle.

The structure of the rest of the paper is as follows. In Sect. 2, we define microscopic and macroscopic states, the physical characteristics of a logical gate and the requirements imposed by the time-reversibility of microscopic laws (Liouville theorem). In Sect. 3, we define three types of the relation between manipulation of information and entropy change: strong, weak and an intermediate one, corresponding to three different types of final environments. Finally, we provide a discussion on how the above results can be extended to quantum systems in Sect. 4. We conclude in Sect. 5. We also add two appendixes with technical details.

2 Definitions

In this section, we provide detailed definitions of microscopic and macroscopic states in a general classical erasure gate. The proper understanding of when a set of microscopic states is (or is not) identical to a macroscopic state will be the key-element in the developments of Sect. 3.

2.1 Defining microscopic states

We consider a closed (or isolated in the thermodynamic language) system with N degrees of freedom. We distinguish the \(N_S\) degrees of freedom of the system (the active region of the computing gate) and the \(N_E=N-N_S\) environment degrees of freedom, which represent all the other degrees of freedom. The degrees of freedom of the system are represented by the vector x with \(6N_S\) components corresponding to three positions and three momenta of each particle in the physical space. Similarly, the degrees of freedom of the environment are represented by y as a vector in the \(6N_E\)-dimensional phase space of the environment (Footnote 2). The interaction between all degrees of freedom is determined by the (time-independent) Hamiltonian H(x, y), which fully describes the physical implementation of the logical gate.

Definition 1

(Microscopic state) We define a microscopic state of the gate and environment at time t by the point \(x^{(j)}(t),y^{(j)}(t)\) in the 6N-dimensional phase space \(\Gamma\), where the superscript j labels different solutions (corresponding to different experiments) from the same Hamiltonian H(x, y).

In general, we will consider \(j=1,\ldots ,M\) with M large enough (but not infinite) so that the set of \(x^{(j)}(t),y^{(j)}(t)\) is statistically meaningful.

2.2 Defining macroscopic properties and macroscopic states

After the definition of microscopic states, we define here macroscopic properties and macroscopic states.

Definition 2

(Macroscopic property) We define a macroscopic property as a function \({\textbf {A}}: \Gamma \rightarrow {\mathbb {R}}\) that assigns a real value to each point in the phase space \(\Gamma\). Two phase-space points \(x^{(j)}(t),y^{(j)}(t)\) and \(x^{(k)}(t),y^{(k)}(t)\) are macroscopically identical (according to this property \({\textbf {A}}\)) if and only if \({\textbf {A}}(x^{(j)}(t),y^{(j)}(t))={\textbf {A}}(x^{(k)}(t),y^{(k)}(t))\).

Notice that there are no anthropomorphic implications in the definition of a macroscopic property. No human observation is needed. In our case, \({\textbf {A}}\) can be the logical information of the system denoted by the logical symbols 0 and 1. One can define these macroscopic properties as a result of the large-scale resolution of the apparatus involved in the identification of such property \({\textbf {A}}\). There is a large set of microscopic states at the output of the gate that are correctly interpreted as, for example, belonging to the logical 0 at the input of another subsequent gate. For a simple and objective definition, for example, a maximum distance from a central phase-space point can be used to specify which microscopic states belong to a given macroscopic property.

Once we have a defined macroscopic property, we can define a macroscopic state.

Definition 3

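(Macrostate) We define the macroscopic state (or macrostate) A at time t as the set of all microscopic states \(x^{(j)}(t),y^{(j)}(t)\) that have the same macroscopic property \({\textbf {A}}\) at that time, namely

\[A=\{\text { All } x^{(j)}(t),y^{(j)}(t) \in \Gamma \text { so that } {\textbf {A}}(x^{(j)}(t),y^{(j)}(t))={\textbf {A}} \}.\]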

Notice that A is a subspace of \(\Gamma\), while (bold) \({\textbf {A}}\) is just a number in real space.

We are now interested in defining the phase-space volume \(V_A\) of the macrostate A.

Definition 4

(Volume of macrostate A) We define the volume of the macrostate A by counting the number of microscopic states that satisfy the condition \({\textbf {A}}(x^{(j)}(t),y^{(j)}(t))={\textbf {A}}\) in Definition 3 as

\[V_A=\sum _{j=1}^{M} \delta _{{\textbf {A}}(x^{(j)}(t),y^{(j)}(t)),{\textbf {A}}}\; \Delta \Gamma ,\]

where \(\delta _{a,b}\) is the Kronecker delta function (that becomes one when \({\textbf {A}}(x^{(j)}(t),y^{(j)}(t))={\textbf {A}}\)) and \(\Delta \Gamma\) is an irrelevant phase-space volume small enough to accommodate zero or one microstate (see “Appendix 1”). We recall that M is large enough (but not infinite) so that the results are statistically meaningful.

We will also be interested in identifying those degrees of freedom of the system alone that belong to the set A. We define the system subspace \(X_A\) as the set of microscopic points in the system phase space \(\Gamma _S\) that belong to A, as \(X_A=\{\text { All } x^{(j)}(t) \in \Gamma _S \text { so that } x^{(j)}(t),y^{(j)}(t) \in A \}\). Similarly, for the points in the environment phase space \(\Gamma _E\), we define the Environment subspace as \(Y_A=\{\text { All } y^{(j)}(t) \in \Gamma _E \text { so that } x^{(j)}(t),y^{(j)}(t) \in A \}\) where the whole phase space is just the product of the system and environment phase spaces, \(\Gamma =\Gamma _S \times \Gamma _E\).
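Definitions 2 to 4 can be illustrated with a minimal numerical sketch. Everything concrete here is an assumption made for illustration only: a single particle with one position and one momentum, an ensemble drawn uniformly at random, and a logical value read from the sign of the position (logical 1 on the left, logical 0 on the right).

```python
import random

random.seed(0)        # reproducible ensemble

M = 10_000            # number of sampled microstates (large but finite)
DELTA_GAMMA = 1.0     # elementary phase-space cell, arbitrary units

# Hypothetical microstates: pairs (x, p) drawn uniformly in [-1, 1] x [-1, 1]
ensemble = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(M)]


def logical_value(state):
    """Macroscopic property: 1 if the particle is on the left, else 0."""
    x, _p = state
    return 1 if x < 0 else 0


def macrostate(states, value):
    """All microstates sharing the same macroscopic value (Definition 3)."""
    return [s for s in states if logical_value(s) == value]


def volume(states, value):
    """Kronecker-delta count times the cell size (Definition 4)."""
    return DELTA_GAMMA * sum(1 for s in states if logical_value(s) == value)


V0 = volume(ensemble, 0)
V1 = volume(ensemble, 1)
print(f"V_0 = {V0}, V_1 = {V1}, total = {V0 + V1}")
```

No observer enters the construction: `logical_value` is just a function on phase space, and the two macrostates partition the ensemble.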

2.3 Physical gate as a transition between microscopic states

For each j-experiment, the Hamiltonian H(x, y) determines the trajectory in the 6N-dimensional phase space between the initial values \(x^{(j)}(t_i),y^{(j)}(t_i)\) and the final values \(x^{(j)}(t_f),y^{(j)}(t_f)\).

Definition 5

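(Operation) We define an operation or evolution of the states due to the Hamiltonian H(x, y) as a bijective (one-to-one and onto) map \(h(t_f,t_i)\) from the phase space \(\Gamma\) at time \(t_i\) (domain) to the same phase space \(\Gamma\) at time \(t_f\) (range)

\[h(t_f,t_i): \Gamma \rightarrow \Gamma , \qquad A \rightarrow B,\]

where B, as the image of A under the bijective mapping \(h(t_f,t_i)\), is defined as

\[B=\{\text { All } x^{(j)}(t_f),y^{(j)}(t_f) \in \Gamma \text { so that } x^{(j)}(t_i),y^{(j)}(t_i) \in A \}.\]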

We notice that no macroscopic property \({\textbf {B}}\) is used in the description of B as image of A in the Definition 5. In other words, the set of microscopic states at the initial time \(t_i\), that define a macroscopic state A, does not need to be a macroscopic state of the same macroscopic property \({\textbf {A}}\) at the latter time \(t_f\).

Proposition 1

The phase-space volume \(v_B(t_f)\) of B, defined as image of A, satisfies \(v_B(t_f) \equiv V_A(t_i)\).

The proof is simple. By construction, the states that belong to B at \(t_f\) are just the states that belonged to A at time \(t_i\).

This is, in fact, a simpler way of stating the Liouville theorem [50].

We insist that the (non-capital) volume \(v_B(t_f)\) does not need to be the volume of a macroscopic state A at the final time \(t_f\), defined as \(V_A(t_f)\). It is possible that \(V_A(t_f) \ne V_A(t_i)\) if the microscopic states that satisfy \({\textbf {A}}(x^{(j)}(t_i),y^{(j)}(t_i))={\textbf {A}}\) at the initial time \(t_i\) are not the same states that satisfy the condition \({\textbf {A}}(x^{(j)}(t_f),y^{(j)}(t_f))={\textbf {A}}\) at the final time \(t_f\).
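The distinction between the volume of the image and the volume of the macrostate can be made concrete on a small discrete phase space. The sketch below is an illustration under our own assumptions, not a model taken from the paper: the dynamics is the Arnold cat map on an 8 × 8 grid (a unit-determinant map, hence bijective), and the macroscopic property is "the particle is in the left half".

```python
N = 8  # grid points per axis of a hypothetical discrete (x, p) phase space


def cat_map(state):
    """Arnold cat map on the N x N grid: unit determinant, hence bijective."""
    x, p = state
    return ((2 * x + p) % N, (x + p) % N)


def has_property_A(state):
    """Macroscopic property: the particle sits in the left half (x < N/2)."""
    return state[0] < N // 2


grid = [(x, p) for x in range(N) for p in range(N)]

A = {s for s in grid if has_property_A(s)}   # macrostate A at the initial time
B = {cat_map(s) for s in A}                  # image of A at the final time

V_A_initial = len(A)                         # volume of the macrostate at t_i
v_B = len(B)                                 # volume of the image at t_f
# Members of this ensemble that still show property A at the final time
V_A_final = sum(1 for s in B if has_property_A(s))

print(f"V_A(t_i) = {V_A_initial}, v_B(t_f) = {v_B}, still in A = {V_A_final}")
```

The image keeps the number of states of A, as in Proposition 1, while the number of those states that still display the property A is smaller, because the map spreads them over both halves of the grid.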

Obviously, such evolution of microscopic states encodes an evolution of the logical information as well.

Definition 6

(Physical gate) We define a gate at the physical level (with one bit of information that can take two initial logical values) as the following two maps:

- A map \(h_{{\textbf {0}}}(t_f,t_i)\) when the involved initial microscopic states A are those belonging to the information \({\textbf {0}}\).

- A map \(h_{{\textbf {1}}}(t_f,t_i)\) when the involved initial microscopic states \(A'\) are those belonging to the information \({\textbf {1}}\).

By construction, such a composed map is also a bijective (one-to-one and onto) map from \(\Gamma \times \Gamma\) to \(\Gamma \times \Gamma\)

\[A, A' \rightarrow B, B'.\]

In fact, the bijective maps \(h_{{\textbf {0}}}(t_f,t_i)\) or \(h_{{\textbf {1}}}(t_f,t_i)\) mean, at the physical level, that microscopic classical laws are time-reversible [50]. If two phase-space trajectories coincide at one time, then such trajectories are identical at all times. This time-reversibility has important consequences on the type of physical transitions that are allowed.

We are now in conditions to present the following proposition that will be important along the paper:

Proposition 2

If two different operations, \(A \rightarrow B\) and \(A' \rightarrow B'\) (where B and \(B'\) are the images of A and \(A'\), respectively), have a null intersection at the initial time, then they have a null intersection at any time. In other words,

\[A \cap A' = \emptyset \;\Rightarrow \; B \cap B' = \emptyset .\]

The demonstration is simple. Let us imagine that \(B \cap B' \ne \emptyset\) because \(x^{(j)}(t_f),y^{(j)}(t_f)=x^{(k)}(t_f),y^{(k)}(t_f)\). Then, because of time-reversibility, such trajectories are identical at the initial time too, i.e., \(x^{(j)}(t_i),y^{(j)}(t_i)=x^{(k)}(t_i),y^{(k)}(t_i)\), so that \(A \cap A' \ne \emptyset\) too.

Notice that we have used in the Proposition 2 the fact that different trajectories, for example \(x^{(j)}(t_f),y^{(j)}(t_f)\) and \(x^{(k)}(t_f),y^{(k)}(t_f)\), do not cross in phase-space at any time. This will be the condition that we will check in any proposal of a gate. Notice that physical systems defined from the Hamiltonian H(x, y) do always satisfy this Proposition 2. But, the Proposition 2 is also true for any (non-Hamiltonian) dynamical system that preserves phase-space volumes. As a consequence, the three Landauer’s erasure principles presented in this paper can be relevant, not only for the physical gates linked to H(x, y) studied in this paper, but for applications in other areas outside physics described from divergenceless models.
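The non-crossing condition is easy to check numerically for any proposed gate. As a sketch under our own assumptions (a discrete 8 × 8 phase space, a free-flight shear as the volume-preserving map, and a hypothetical fold that is deliberately not injective), the following compares the two cases.

```python
N = 8  # grid points per axis of a hypothetical discrete (x, p) phase space


def shear(state):
    """Free flight on a periodic grid: x advances by p. This is a bijection."""
    x, p = state
    return ((x + p) % N, p)


def fold(state):
    """Non-injective map: the right half is folded onto the left half."""
    x, p = state
    return (x % (N // 2), p)


# Two disjoint initial macrostates: left half and right half of the grid
A = {(x, p) for x in range(N // 2) for p in range(N)}
A_prime = {(x, p) for x in range(N // 2, N) for p in range(N)}

# Images under the volume-preserving map stay disjoint (Proposition 2)
overlap_bijective = {shear(s) for s in A} & {shear(s) for s in A_prime}

# Images under the fold overlap: two trajectories end at the same point
overlap_fold = {fold(s) for s in A} & {fold(s) for s in A_prime}

print(f"initial overlap: {len(A & A_prime)}")
print(f"overlap after shear: {len(overlap_bijective)}")
print(f"overlap after fold:  {len(overlap_fold)}")
```

The fold is what a microscopic erasure of the position would look like, and it is exactly the kind of map that time-reversible dynamics forbids.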

2.4 Logical gate as transition between macroscopic states

From the logical information alone (forgetting about the microscopic state), we define the gate from a logical point of view as:

Definition 7

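(Logical gate) We define a logical gate as a map \(i(t_f,t_i)\) from the logical space \({\mathbb {L}}=\{{\textbf {0}},{\textbf {1}}\}\) at time \(t_i\) (domain) to the same logical space \({\mathbb {L}}\) at time \(t_f\) (range) as

\[i(t_f,t_i): {\mathbb {L}} \rightarrow {\mathbb {L}}, \qquad {\textbf {A}},{\textbf {A'}} \rightarrow {\textbf {C}},{\textbf {C'}}.\]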

The logical information (or macroscopic property) \({\textbf {A}}\) or \({\textbf {A'}}\) can be perfectly identified with the macroscopic state A or \(A'\) in the Definition 6 of a physical gate. However, the logical information (or macroscopic property) \({\textbf {C}}\) or \({\textbf {C'}}\) in this new definition of a logical gate cannot be identified with the image states B or \(B'\) as defined in Definition 6.

The difference between the physical and logical gate, which is the central point in our future discussion, can be translated to saying that, contrary to the bijective mapping \(h(t_f,t_i)\) for microscopic states, the new logical map for macroscopic states \(i(t_f,t_i)\) is not bijective. For example, we will be interested in two operations that define an erasure gate: the \({\textbf {0}}\rightarrow {\textbf {0}}\) operation and the \({\textbf {1}}\rightarrow {\textbf {0}}\) operation. Clearly, the map is not bijective. In the language of computation, it is said that the erasure gate is the simplest example of logical irreversibility, because the final (logical) information \({\textbf {0}}\) does not allow us to deduce what was the initial (logical) information (either \({\textbf {0}}\) or \({\textbf {1}}\)).

We want to clarify why we said in Definition 6 that \(A,A' \rightarrow B,B'\) is physically reversible (bijective), while we said in Definition 7 that \({\textbf {A}},{\textbf {A'}} \rightarrow {\textbf {C}},{\textbf {C'}}\) can be logically irreversible (not bijective). The first refers to the evolution of microscopic states and the second to the evolution of macroscopic states. By construction, it is possible to find two different phase-space points \(\{x^{(j)}(t_i),y^{(j)}(t_i)\}\ne \{x^{(k)}(t_i),y^{(k)}(t_i)\}\) that have the same (logical) information \({\textbf {A}}(x^{(j)}(t_i),y^{(j)}(t_i))={\textbf {A}}(x^{(k)}(t_i),y^{(k)}(t_i))\), but it is not possible to find two identical (or very similar) phase-space points \(\{x^{(j)}(t_i),y^{(j)}(t_i)\} \approx \{x^{(k)}(t_i),y^{(k)}(t_i)\}\) that have different (logical) information \({\textbf {A}}(x^{(j)}(t_i),y^{(j)}(t_i)) \ne {\textbf {A}}(x^{(k)}(t_i),y^{(k)}(t_i))\).
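The logical side of this distinction fits in a few lines. In the sketch below, the dictionaries `ERASE` and `NOT` and the helper names are our own illustrative choices; they only encode the truth tables of a one-bit erasure gate and, for contrast, of a logically reversible gate.

```python
# Truth tables of two one-bit logical gates on the logical space {0, 1}
ERASE = {0: 0, 1: 0}   # the 0 -> 0 and 1 -> 0 operations of an erasure gate
NOT = {0: 1, 1: 0}     # a logically reversible gate, shown for contrast


def is_bijective(gate):
    """A map from {0, 1} to itself is bijective if every output occurs once."""
    return sorted(gate.values()) == sorted(gate.keys())


def preimages(gate, output):
    """Logical inputs compatible with a given logical output."""
    return [i for i, o in gate.items() if o == output]


print("erase bijective:", is_bijective(ERASE))
print("not bijective:  ", is_bijective(NOT))
print("inputs compatible with output 0 after erasure:", preimages(ERASE, 0))
```

After erasure, the output 0 has two compatible inputs, which is the logical irreversibility discussed above; nothing in this table constrains the underlying microscopic map, which remains bijective.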

3 Three types of Landauer’s erasure principle

Next, we distinguish three types of the relation between the erasure of information and its energetic and entropic costs, corresponding to three types of relation between the two final environments: the final environment belonging to the logical operation \({\textbf {1}}\rightarrow {\textbf {0}}\) and the one belonging to \({\textbf {0}}\rightarrow {\textbf {0}}\). Only the third type is the one developed originally by Landauer (in terms of a thermal reservoir). We still keep the name (intermediate and weak) Landauer’s erasure principle for the other two because we believe that we follow the original motivation of Landauer: encoding information in macroscopic properties and analyzing how the distribution of microscopic states that build such a macroscopic state changes during the erasure procedure. But our approach differs from the original one in the sense that we assume nothing more than time-reversibility of the microscopic laws.

We assume that the gate is characterized by the logical information \({\textbf {0}}\) or \({\textbf {1}}\) (or the corresponding macroscopic states A and \(A'\) in Definition 6), while the environment is characterized by another macroscopic property \({\textbf {E}}_{\textbf {0}}\) or \({\textbf {E}}_{\textbf {1}}\) (or its corresponding macroscopic states E in Definition 3). Since a gate involves two operations, whenever needed we will specify which operation we are referring to by using, for example in an erasure gate, the label \({\textbf {1}}\rightarrow {\textbf {0}}\) or \({\textbf {0}}\rightarrow {\textbf {0}}\). We will also specify the time at which we are defining the macroscopic properties or states, by writing \(t_i\) for the initial time and \(t_f\) for the final one.

3.1 The weak Landauer’s erasure principle

We first consider erasure gates where the final environments are macroscopically different at the final time:

- Condition C1 ENVIRONMENTS WITH DIFFERENT FINAL MACROSCOPIC PROPERTIES. For two different operations involved in a gate with two initial environment states which have macroscopically identical properties at the initial time (e.g., \({\textbf {E}}_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)={\textbf {E}}_{{\textbf {0}} \rightarrow {\textbf {0}}}(t_i)\)), the two final environment states have different macroscopic properties (\({\textbf {E}}_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f) \ne {\textbf {E}}_{{\textbf {0}} \rightarrow {\textbf {0}}}(t_f)\)) at the final time (Footnotes 3 and 4).

Let us analyze C1 for an erasure gate in Fig. 3. The initial logical states \({\textbf {1}}\) in Fig. 3a and \({\textbf {0}}\) in Fig. 3c are different macroscopically (being on the left and on the right, respectively), while having the same environment macroscopic properties and states, \(E_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)= E_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i)\) and \(Y_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i)=Y_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)\). By contrast, the final logical states \({\textbf {0}}\) in Fig. 3b and \({\textbf {0}}\) in Fig. 3d are macroscopically identical (being both on the right), \(X_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)=X_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)\), while having different environment macroscopic properties and states, \(E_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)\ne E_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)\) and \(Y_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f) \ne Y_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)\). Proposition 2 is clearly satisfied at all times, so that such an erasure process is possible from our mechanical point of view. Notice that condition C1 implies that the initial macroscopic information will effectively disappear from the final state of the system, but it will appear in the final environment state. These results just show that, due to the time-reversibility of microscopic laws, information can never be erased at the microscopic level in a fully closed system. We note that we have arrived at the same conclusion as Hemmo and Shenker [30], but within a framework that will allow us to reach Landauer’s and Bennett’s results in a general and compact unified framework.

Proposition 3

The erasure of information with a gate satisfying condition C1 is compatible with no entropy cost \(\Delta S=0\).

For the proof, we use the Boltzmann entropy defined from the number of microstates that correspond to a macrostate (as discussed in “Appendix 1”). We define \(V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)\) as the phase-space volume of the initial macrostate \(X_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i), Y_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)\). Similarly, we define \(V_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i)\) as the phase-space volume of \(X_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i), Y_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i)\). In the logical operation \({\textbf {1}}\rightarrow {\textbf {0}}\), the condition C1 is compatible with defining the number of microstates of the final macrostate, \(V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)\), equal to the number of microstates of the image of the initial macrostate, \(v_{B,{\textbf {1}}\rightarrow {\textbf {0}}}(t_f) \equiv V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i) =V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)\). Identically, in the logical operation \({\textbf {0}}\rightarrow {\textbf {0}}\), we define \(v_{B,{\textbf {0}}\rightarrow {\textbf {0}}}(t_f) \equiv V_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i) =V_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)\). Then,

\[\Delta S = S(t_f)-S(t_i) = k_{\textrm{B}} \left[ p \ln \frac{V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)}{V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)} + (1-p) \ln \frac{V_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)}{V_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i)} \right] = 0.\]

We have assumed an arbitrary probability p for the \({\textbf {1}}\rightarrow {\textbf {0}}\) operation and \(1-p\) for the \({\textbf {0}}\rightarrow {\textbf {0}}\) operation. The reason why \(\Delta S=0\) is possible is that the condition C1 itself imposes that the environments are microscopically different, so that \(v_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f) \cap v_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)=\emptyset\) as required by Proposition 2. Thus, the condition \(\Delta S=0\) does not violate any fundamental microscopic law and a Hamiltonian \(H_{{\textbf {C}}{\textbf {1}}}(x,y)\) is possible (Footnote 5).

The proof is just a consequence of the fact that the initial and final macrostates (seen as light blue and light red regions for the initial and final times, respectively, in Fig. 3) always have the same number of microstates. This is the well-known result given by the Liouville theorem [50] when dealing with A and the image of A at a later time. We remind the reader that, in more general scenarios, the number of microscopic points that are part of the macroscopic property \({\textbf {A}}(t_i)\) at the initial time does not need to be equal to the number of points that are part of the macroscopic property \({\textbf {A}}(t_f)\) at the final time.
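The volume preservation invoked above can be checked numerically on a small example. The sketch below is only an illustration under stated assumptions: the pendulum Hamiltonian \(H=p^2/2-\cos x\), the kick-drift (leapfrog) map, the step size and the sample points are all hypothetical choices that do not come from the paper. It estimates the Jacobian determinant of the map by central finite differences; a value of 1 means that a small phase-space volume around the starting point is mapped onto an image of the same volume.

```python
import math

def leapfrog_map(x, p, dt=0.1, steps=50):
    """Kick-drift map for a pendulum, H = p**2/2 - cos(x) (illustrative choice)."""
    for _ in range(steps):
        p = p - dt * math.sin(x)  # kick: shear along p, Jacobian determinant 1
        x = x + dt * p            # drift: shear along x, Jacobian determinant 1
    return x, p

def jacobian_det(x, p, h=1e-5):
    """Central finite-difference estimate of det d(x_f, p_f)/d(x_i, p_i)."""
    xa, pa = leapfrog_map(x + h, p)
    xb, pb = leapfrog_map(x - h, p)
    xc, pc = leapfrog_map(x, p + h)
    xd, pd = leapfrog_map(x, p - h)
    dxf_dx = (xa - xb) / (2 * h)
    dpf_dx = (pa - pb) / (2 * h)
    dxf_dp = (xc - xd) / (2 * h)
    dpf_dp = (pc - pd) / (2 * h)
    return dxf_dx * dpf_dp - dxf_dp * dpf_dx

# Hypothetical initial microstates (x, p) sampled from an initial macrostate.
sample_points = [(1.0, 0.5), (0.3, -0.8), (2.0, 0.1)]
dets = [jacobian_det(x0, p0) for x0, p0 in sample_points]
for (x0, p0), d in zip(sample_points, dets):
    print(f"start=({x0}, {p0})  det J = {d:.8f}")
```

The determinant stays at 1 up to finite-difference error, although the map moves and deforms the region. This is the sense in which the image of the initial macrostate keeps the same number of microstates.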

In fact, a careful reading of the original works of Landauer and Bennett shows that the possibility of such erasure gates, giving \(\Delta S=0\), was already well known to them. Bennett mentioned what he thought was the problem with such erasure gates in his 1973 paper [13]. He erroneously concluded that it was not possible to use such erasure gates with condition C1 more than once, because the environment is different each time we use the erasure gate (see the macroscopic states of the environment in Fig. 3b, d). Contrary to Bennett’s conclusion, we argue here that such an erasure gate with \(\Delta S=0\) can be used as many times as typical erasure gates can. It is erroneously argued in [13] that such an erasure gate will require a reset of the environment to its initial state to make the erasure gate useful again. However, we notice that in a conventional gate the initial macroscopic state of the environment is not identical to the final macroscopic state of the environment either: the final one contains more heat than the initial one. And yet, no reset to the initial cooler environment is assumed each time the gate is used. Similarly, we can assume that the change of state of the environment in the gate of Fig. 3 is small enough for the gate to be used again without reset (see footnote 6). See also “Appendix 2” with a toy model of an erasure gate satisfying condition C1. This toy-model gate works properly without reset more than 30 times despite the fact that the environment is modified each time in such a way that one can guess the initial logical value just by looking at the environment variation.

The true reason why the erasure gate depicted in Fig. 3 and conventional gates can be used many times is the change in the environment degrees of freedom y. In other words, it is mandatory to change the microscopic degrees of freedom of the environment each time an erasure process takes place. Because of the time-reversibility of Hamiltonian dynamics, two initially different trajectories of the system alone without environment, \(x^{(j)}(t_i)=\textbf{1}\) and \(x^{(k)}(t_i)=\textbf{0}\), cannot become identical later, \(x^{(j)}(t_f)=x^{(k)}(t_f)=\textbf{0}\). A way to use such erasure gates (with \(\Delta S=0\) or with \(\Delta S \ne 0\)) is to require y(t) to be different each time we use the gate, but not too different. Finally, notice that the role played by the environment y(t) in the gates under condition C1 is quite similar to the role played by the control register in the gates of the reversible computation proposed by Bennett [15, 16, 23]. In both cases, the environment or the control register is the additional degree of freedom y(t) needed to erase the system information x(t) without violating the time-reversibility of the whole system. The difference is that the control register is an active element in reversible computation, while the environment is interpreted here as a passive element that requires no attention (reset).

Figure 3 shows an example of how to realize irreversible logic with reversible physics. We require the final environments to be slightly different at the macroscopic level. See also “Appendix 2” with a toy model of an erasure gate that works properly without reset. Certainly, our simplified erasure gate has a limit on the number of times it can be used. But, in principle, it is not different from conventional erasure gates in our computers, because they also have a limit on the number of times that they can be consecutively used. That limit is related to the extra heat that can be absorbed by the environment once we take into account that the number \(N_E\) of environment particles is not strictly infinite.
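The idea of irreversible logic on top of reversible dynamics can be illustrated with a deliberately minimal discrete sketch. It is not the toy model of “Appendix 2”: the function `erase_by_swap` and the single environment bit are hypothetical choices made only for illustration. The global map on (system, environment) is a bijection, the system always ends in logical 0, and the two final environments differ, which is the discrete analogue of condition C1.

```python
from itertools import product

def erase_by_swap(x, y):
    """Bijective map on (system bit x, environment bit y): exchange the two bits."""
    return y, x

# The environment starts in the same state y = 0 for both logical inputs.
outputs = {x: erase_by_swap(x, 0) for x in (1, 0)}

# The global map is one-to-one on the full two-bit state space (time-reversible).
images = {erase_by_swap(x, y) for x, y in product((0, 1), repeat=2)}
is_bijective = len(images) == 4

for x_in, (x_out, y_out) in outputs.items():
    print(f"input {x_in} -> system {x_out}, environment {y_out}")
print("global map bijective:", is_bijective)
```

With a single environment bit this gate can obviously be used only once. The sketch only shows that the logical information is moved into the environment rather than destroyed.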

Fig. 3 Schematic representation of the initial (left panels) and final (right panels) microscopic states (dark blue and red solid circles) and the volumes of macrostates (light blue and red regions) in the system x plus environment y phase space. The upper panels correspond to the operation \({\textbf {1}}\rightarrow {\textbf {0}}\) and the lower panels to \({\textbf {0}}\rightarrow {\textbf {0}}\). The macroscopic property of the system \({\textbf {1}}\) means being on the left of the x axis, while the macroscopic property of the system \({\textbf {0}}\) means being on the right of the x axis. The initial macroscopic environment properties (in the left panels) are identical \({\textbf {E}}_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i) = {\textbf {E}}_{{\textbf {0}} \rightarrow {\textbf {0}}}(t_i)\), while we can distinguish the macroscopic properties of the environment at the final time (in the right panels) \({\textbf {E}}_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f) \ne {\textbf {E}}_{{\textbf {0}} \rightarrow {\textbf {0}}}(t_f)\). Even though the gate is logically irreversible, it satisfies the time-reversibility of microscopic laws. The relevant point is that condition C1 shows that each operation of the erasure process can be done without any change in the global entropy: the phase-space volumes (entropies) of the initial macroscopic states (light blue regions in the left panels) are equal to the phase-space volumes (entropies) of the final macroscopic states (light red regions in the right panels).

Does the result obtained above, where the information is erased without entropy cost, violate the original Landauer’s prediction? Is the exorcism of Maxwell’s demon carried out by Bennett and Penrose, based on Landauer’s earlier cost for erasing data, wrong? We notice that our environments in C1, as plotted in Fig. 3, are not in thermodynamic equilibrium, so our results, as such, are not pertinent to discussions about systems that assume the hypothesis of thermodynamic equilibrium. Arguments on why we can expect non-thermal environments in some experiments will be discussed in more detail in Sect. 4. However, as discussed in the introduction, thermodynamics is a scientific discipline flexible enough to accommodate these new results into a new (irreversible or non-equilibrium) branch of thermodynamics.

Finally, the reader can argue that a fair discussion of the environment in present-day real computers has to involve a really large number of degrees of freedom (\(N_E\gg 10^{23}\)), making it almost impossible to distinguish final environments, contrary to what we have stated in the C1 condition and in “Appendix 2”. Certainly, there are many environments in our ordinary life that can be considered as thermal environments. But we will show in the last section of this paper that recent experiments on the equilibration of closed quantum systems show environments that never thermalize, or that the transition from a non-thermal to a thermal reservoir (for those which thermalize) needs some time. Thus, gates at very high frequency can imply (non-Markovian) environments that do not have enough time to thermalize (to become independent of their initial conditions \({\textbf {1}}\) or \({\textbf {0}}\)). There is a huge difference between saying that condition C1 is technologically difficult to reach, and saying that C1 is impossible to reach because it violates fundamental laws. In summary, there is no fundamental reason to expect that only thermal reservoirs can be applied to computations, so there is no reason to expect that the original Landauer limit cannot be overcome in future nano-devices.

3.2 The intermediate Landauer’s erasure principle

The second type of relation between the erasure of information and entropic and energetic changes can be obtained by assuming that the final macroscopic environments are identical at the macroscopic level:

- Condition C2 ENVIRONMENTS WITH IDENTICAL FINAL MACROSCOPIC PROPERTIES. For two different operations involved in a gate with two initial environment states which have macroscopically identical properties at the initial time (e.g., \({\textbf {E}}_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)={\textbf {E}}_{{\textbf {0}} \rightarrow {\textbf {0}}}(t_i)\)), the two final environment states also have identical macroscopic properties (e.g., \({\textbf {E}}_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)={\textbf {E}}_{{\textbf {0}} \rightarrow {\textbf {0}}}(t_f)\)) at the final time (See footnotes 4 and 5).

Notice that we are not imposing that the initial environment state is macroscopically identical to the final environment state in a given operation (we have shown in the previous subsection that this is impossible for an erasure gate), but only that the two final environment states of the different operations involved in a gate are macroscopically identical.

We analyze again an erasure gate (see footnote 7) in Fig. 4 with condition C2. The initial logical states \({\textbf {1}}\) in Fig. 4a and \({\textbf {0}}\) in Fig. 4c are different macroscopically (being on the left and on the right, respectively), while having the same environment macroscopic states, \(E_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)= E_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i)\). The final logical states \({\textbf {0}}\) in Fig. 4b and \({\textbf {0}}\) in Fig. 4d are macroscopically identical (being on the right). Interestingly, we cannot distinguish macroscopically the final environment macroscopic states, \(E_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f) = E_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)\), as seen in the light red regions in Fig. 4b, d. If we look microscopically at Fig. 4, we see that all the microscopic points (solid red points) in the phase space \(\Gamma\) satisfy the time-reversibility imposed by Proposition 2, i.e., the solid red points of Fig. 4b never overlap with the solid red points of Fig. 4d. As we have repeatedly stressed, a gate which is physically time-reversible (at the microscopic level) can be logically irreversible (at the macroscopic level). Notice that the distinguishability (or indistinguishability) between two final macroscopic states can have an objective definition, for example, by imposing a minimum (or maximum) phase-space distance between any two microscopic states belonging to different macroscopic states.

Proposition 4

The erasure of information with a gate satisfying condition C2 implies a minimum entropy cost \(\Delta S=k_B\ln 2\).

We define \(V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)\) as the phase-space volume of the initial macrostate \(X_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i), Y_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)\). Identically for \(X_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i), Y_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i)\), we define \(V_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i)\). From Proposition 1, we now have \(v_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)=V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)\) and \(v_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)=V_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i)\). How can we achieve condition C2 if \(v_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f) \cap v_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)=\emptyset\)? The answer is to accommodate both \(v_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)\) and \(v_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)\) as sets of microstates belonging to the same macrostate \(E_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)= E_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)\). To satisfy condition C2, we assume \(V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)=V_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)\) so that \(V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)=V_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)=v_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)+v_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)=V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)+V_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i)\). Assuming that both initial phase-space macroscopic volumes are identical (see footnote 8), \(V_0=V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)=V_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i)\), we get

\[\Delta S = \frac{1}{2}\,k_B \ln \frac{V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)}{V_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)} + \frac{1}{2}\,k_B \ln \frac{V_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)}{V_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_i)} = k_B \ln \frac{2V_0}{V_0} = k_B \ln 2. \tag{2}\]

We have assumed equal a priori probabilities for the \({\textbf {1}}\rightarrow {\textbf {0}}\) and \({\textbf {0}}\rightarrow {\textbf {0}}\) operations. The entropy increase is minimal because we can imagine \(v_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f) \cup v_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)\) smaller than the final macrostate, but \(v_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f) \cup v_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f)\) cannot be larger than the final macroscopic state. Again the fundamental microscopic Proposition 2 is satisfied and a Hamiltonian \(H_{{\textbf {C}}{} {\textbf {2}}}(x,y)\) is possible \(^{[55]}\).

The proof is just a consequence of the fact that the number of microstates of the final macrostate is not equal to the number of microstates of the image of the initial macrostate, as seen in Fig. 4. This result was already indicated by Landauer himself [5]. Notice, however, that we have made no reference to thermodynamic equilibrium at all in the present development (just counting the number of microscopic states that satisfy a macroscopic property). For this reason, we refer to the result Eq. (2) as the intermediate Landauer’s erasure principle, because it is more general than the original Landauer limit, which implicitly assumed that all the entropy increase was due to a production of heat. In this regard, Bennett wrote [16] explicitly: “Typically the entropy increase takes the form of energy imported into the computer, converted to heat, and dissipated into the environment, but it need not be, since entropy can be exported in other ways, for example by randomizing configuration degrees of freedom in the environment.”
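The counting behind the two results \(\Delta S=0\) (condition C1) and \(\Delta S=k_B\ln 2\) (condition C2) can be written as a few lines of arithmetic. The sketch below is only an illustration: the function names and the value of `V0` are hypothetical, and the entropy change of each operation is taken as \(k_B\ln (V(t_f)/V(t_i))\), averaged with the probabilities of the two operations.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def boltzmann_entropy_change(v_initial, v_final):
    """Entropy change k_B ln(V_f / V_i) between two macrostate volumes."""
    return K_B * math.log(v_final / v_initial)

def average_entropy_change(p, v1_i, v1_f, v0_i, v0_f):
    """Average over the 1->0 operation (probability p) and 0->0 (probability 1 - p)."""
    return (p * boltzmann_entropy_change(v1_i, v1_f)
            + (1 - p) * boltzmann_entropy_change(v0_i, v0_f))

V0 = 1.0e6  # hypothetical number of microstates of each initial macrostate

# Condition C1: each final macrostate is just the image of its initial macrostate.
dS_c1 = average_entropy_change(0.5, V0, V0, V0, V0)

# Condition C2: one shared final macrostate must hold both disjoint images (2 * V0).
dS_c2 = average_entropy_change(0.5, V0, 2 * V0, V0, 2 * V0)

print("C1:", dS_c1, "J/K")
print("C2:", dS_c2, "J/K, to compare with k_B ln 2 =", K_B * math.log(2))
```

The value of `V0` drops out of both results, in line with the argument above that only the ratio between the final macrostate volume and the image volume matters.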

Fig. 4 Schematic representation of the initial (left panels) and final (right panels) microscopic states (dark blue and red solid circles) and the volumes of macrostates (light blue and red regions) in the system x plus environment y phase space. The upper panels correspond to the operation \({\textbf {1}}\rightarrow {\textbf {0}}\) and the lower panels to \({\textbf {0}}\rightarrow {\textbf {0}}\). The macroscopic property of the system \({\textbf {1}}\) means being on the left of the x axis, while the macroscopic property of the system \({\textbf {0}}\) means being on the right of the x axis. The initial macroscopic environment properties (in the left panels) are identical \({\textbf {E}}_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i) = {\textbf {E}}_{{\textbf {0}} \rightarrow {\textbf {0}}}(t_i)\). The macroscopic properties of the environment at the final time (in the right panels) are also identical \({\textbf {E}}_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f) = {\textbf {E}}_{{\textbf {0}} \rightarrow {\textbf {0}}}(t_f)\), in the sense that their microscopic differences are not seen in their macroscopic properties. Even though the gate is logically irreversible, it satisfies the time-reversibility of microscopic laws. The relevant point is that condition C2 shows that each operation of the erasure process is done with an increase in entropy: the phase-space volumes (entropies) of the initial macroscopic states (light blue regions in the left panels) are half of the phase-space volumes (entropies) of the final macroscopic states (light red regions in the right panels).

The main conclusion of this subsection is that the increase in entropy can be translated into other types of entropies different from thermodynamic entropy. We note that the same conclusion was reached in the works of Vaccaro and Barnett [51, 52]. They explicitly generalized Landauer’s erasure principle to new scenarios, showing that the costs of erasure depend on the nature of the gate and of the environment with which it is coupled. Their papers were inspired by the enlightening previous work of Jaynes [53], which introduced the concept of the generalized second law instead of the usual second law of thermodynamics, to emphasize that the concept of entropy (as a way of counting how many microstates belong to a given macrostate, as we have done here) does not belong to (equilibrium) thermodynamics only, but can be applied to any system where macroscopic properties matter. We emphasize that, after accepting that the result \(\Delta S=k_B\ln 2\) has, in general, nothing to do with heat or temperature, new types of gates can be envisioned by looking for new types of entropy different from thermodynamic entropy converted into heat. Such new possibilities will violate the original Landauer’s erasure principle in terms of heat and temperature, without violating Eq. (2) when C2 is assumed.

3.3 The strong Landauer’s erasure principle

The strong relation between manipulation of information and entropy change leads to the original Landauer’s erasure principle. To arrive at it, we invoke the following condition on the final state of environment \(E_B\):

- Condition C3 MACROSCOPICALLY IDENTICAL FINAL THERMAL ENVIRONMENTS. The final states of environment of different processes of a gate (e.g., \(Y_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f)\) and \(Y_{{\textbf {0}} \rightarrow {\textbf {0}}}(t_f)\)) are described by the same thermal bath (See footnotes 4 and 5).

This condition should be understood as a supplement to C2, i.e., in condition C3 we assume that condition C2 is already satisfied. We are not only imposing that the final states of environment are macroscopically identical, but also that the final states of environment can be described by a state in thermodynamic equilibrium with a well-defined temperature T.

Proposition 5

For an erasure gate satisfying C3 (which implies satisfying C2 too), the erasure of information implies an increment of heat given by \(\Delta Q= k_B T\ln 2\) in the final environments.

For an environment in thermodynamic equilibrium, it is well known that the increment of heat \(\Delta Q\) is related to the increment of entropy \(\Delta S\) through the thermodynamic relation \(\Delta Q = T\Delta S\). Hence, since the increment of entropy is given by Eq. (2), we finally have

\[\Delta Q = T\,\Delta S = k_B T \ln 2. \tag{3}\]

Here, from the macroscopic property T, it is easy to understand how the conditions \(Y_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_f) \cap Y_{{\textbf {0}}\rightarrow {\textbf {0}}}(t_f) =\emptyset\) (imposed by Proposition 2) and \(E_{{\textbf {0}}\rightarrow {\textbf {0}},B}=E_{{\textbf {1}}\rightarrow {\textbf {0}},B}\) can be satisfied simultaneously. The first refers to microscopic variables (particle positions and momenta) in the phase space, while the second refers to the macroscopic temperature. In statistical mechanics, there are many different microscopic states corresponding to the same temperature. Again a Hamiltonian \(H_{{\textbf {C}}{} {\textbf {3}}}(x,y)\) is possible.

Expression (3) is exactly the original Landauer’s erasure principle [5], which we call the strong Landauer’s erasure principle to be distinguished from the previous weak and intermediate ones. The universality of the strong Landauer’s erasure principle in Eq. (3) is based on the assumption that all final states of environments are indeed thermal baths (condition C3). Following the arguments in previous sections and in the next section, the condition C3 is a good approximation for many real environments in Nature, but not necessarily valid for all of them (especially if we deal with very fast computations).
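To give a sense of scale for the strong Landauer bound \(k_B T\ln 2\), the short sketch below evaluates it numerically. The temperature of 300 K and the function name `landauer_heat` are illustrative assumptions that do not come from the paper; the constants are the exact SI values.

```python
import math

K_B = 1.380649e-23          # Boltzmann constant in J/K (exact SI value)
E_CHARGE = 1.602176634e-19  # elementary charge in C, used to convert J to eV

def landauer_heat(temperature_kelvin):
    """Heat k_B T ln 2 associated with erasing one bit in a thermal environment."""
    return K_B * temperature_kelvin * math.log(2)

q_300 = landauer_heat(300.0)   # assumed room temperature
q_300_ev = q_300 / E_CHARGE

print(f"k_B T ln 2 at 300 K = {q_300:.3e} J = {q_300_ev * 1e3:.2f} meV")
```

At the assumed 300 K this gives roughly \(2.9\times 10^{-21}\) J, or about 18 meV per erased bit.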

At this point, the reader may wonder why we insist on the failure of the strong (original) Landauer’s erasure principle when its limit has been validated by several relevant experiments [14, 35,36,37,38,39,40], as indicated in Fig. 1. All these experiments [14, 35,36,37,38,39,40] have carefully ensured that the environment is in thermal equilibrium. Then, for thermal environments, the strong Landauer’s principle is a universal result. Loosely speaking, the experiments are designed to explain the strong Landauer’s erasure principle, rather than the other way around. In fact, the mentioned experiments have been developed imposing adiabatic conditions on the performance of the erasure processes, which justify that the environment can be treated as a thermal bath. In this regard, the physical transitions seen as left and right distributions of particles in Fig. 4 cannot be done instantaneously. They require some time to thermalize, to change from two distinguishable macrostates to two indistinguishable macrostates. Therefore, it seems obvious that in the race for faster computing devices, at some point, the assumption that the environments of electron devices are always thermalized will not be accurate enough, because the reservoir will not have enough time to thermalize. This very point is in fact what we will discuss in the next section, taking advantage of the vast literature on thermalization (or equilibration) in closed quantum systems.

4 Can the previous results be extended to the quantum regime?

In this paper, we have shown that the original (strong) Landauer’s erasure principle cannot be considered a universal result because it is not true that only thermal reservoirs are available for computations. The key element in our discussion is the fact that it is possible to envision final environments for the \({\textbf {1}}\rightarrow {\textbf {0}}\) and \({\textbf {0}}\rightarrow {\textbf {0}}\) operations with different macroscopic environment properties. But can we generalize these results to the quantum regime? Below we provide arguments to justify that it is reasonable to expect that what we have explicitly demonstrated to be valid for classical erasure gates is also valid for quantum ones. We note that it is beyond the scope of this paper to provide such a rigorous quantum extension; here, we only give qualitative evidence of that.

In the quantum regime, the difference between microscopic and macroscopic levels of description is even more important than in classical physics. Microscopic quantum laws seem to be very different from the microscopic classical laws. There is still a strong disagreement in the scientific community on how to define a quantum microscopic state (if it exists at all). In other words, the definition of microscopic states is a rather subtle and controversial issue, because it highly depends on the interpretation of quantum mechanics, on which there is no consensus among physicists [54]. A straightforward demonstration that the developments of Sects. 2 and 3 can be extended into the quantum regime is given in “Appendix 1” (after selecting a proper interpretation of quantum mechanics). Fortunately, a simple understanding of why condition C3 is not universal in the quantum regime, and why there is plenty of room to design erasure gates with conditions C2 and even C1, can be formulated in a (more or less) interpretation-neutral manner (in terms of expectation values) by reusing the recent advances on the process of thermalization of closed quantum systems [55,56,57,58,59,60,61].

Let us suppose that the two operations of the erasure gate are defined by the wave functions \(\Psi _{{\textbf {1}}}(x,y,0)\) for the input logical state \({\textbf {1}}\) and \(\Psi _{{\textbf {0}}}(x,y,0)\) for the input logical state \({\textbf {0}}\). Notice that we are using the variables x and y in the quantum regime as the degrees of freedom of the positions of the system and the positions of the environment, respectively. Here, x, y represent a point in the configuration space, whereas in the classical regime x, y represented a point in the phase space. The use of the same notation will simplify the comparison of classical and quantum microstates done in “Appendix 1” (see footnote 9).

Since the total Hamiltonian H(x, y) is time-independent, the pure states \(\Psi _{{\textbf {1}}}(x,y,0)\) and \(\Psi _{{\textbf {0}}}(x,y,0)\) can be described at all times by a unitary evolution \(|\Psi _{\alpha }(t)\rangle =\sum _n c_{n,{\alpha }} e^{-i E_n t/\hbar } |n\rangle\), with \({\alpha }=\{{\textbf {1}},{\textbf {0}}\}\) indicating the initial logical state. The ket \(|n \rangle\) is an energy eigenstate of the global Hamiltonian H(x, y) mentioned in Sect. 2 with eigenvalue \(E_n\). Here, \(c_{n,\alpha }=\langle n|\Psi _{\alpha }(0)\rangle\), which depends on the initial wave function, keeps memory of the initial conditions. The density matrix in the energy representation of such global states can be written as

\[{\hat{\rho }}_{\alpha }(t) = |\Psi _{\alpha }(t)\rangle \langle \Psi _{\alpha }(t)| = \sum _n \rho _{\alpha ,n,n}\, |n\rangle \langle n| + \sum _{m \ne n} \rho _{\alpha ,m,n}(t)\, |m\rangle \langle n|. \tag{4}\]

The diagonal elements of the density matrix \(\rho _{\alpha ,n,n}=|c_{n,\alpha }|^2\) are called populations. They forget the phase of \(c_{n,\alpha }\), and they are time-independent. On the other hand, the off-diagonal elements \(\rho _{\alpha ,m,n}(t)=c_{m,\alpha } c_{n,\alpha }^*e^{i(E_n-E_m)t/\hbar }\) are called coherences. They are time-dependent and they quantify the coherence between the eigenstates \(|n\rangle\) and \(|m \rangle\) by keeping memory of the phase of \(c_{m,\alpha } c_{n,\alpha }^*\). See footnote 10 for a discussion of the initial energies.

By construction of an erasure gate, at the final time \(t_f\), the macroscopic properties linked to the system are identical, so that we can identify such macroscopic properties of both quantum states with the same final logical state \({\textbf {0}}\). Then, we assume that a macroscopic property of the environment can be defined from an expectation value [62] of an arbitrary observable \({\hat{A}}\) of the environment that can be written as (see footnote 11):

\[\langle A \rangle _{{\hat{\rho }}_{\alpha }(t)} = \text {tr}\{ {\hat{A}}\, {\hat{\rho }}_{\alpha }(t) \} = \sum _n |c_{n,\alpha }|^2 A_{n,n} + \sum _{m \ne n} c_{m,\alpha } c_{n,\alpha }^* e^{i(E_n-E_m)t/\hbar } A_{n,m}, \tag{5}\]

where \(A_{m,n}=\langle m |{\hat{A}} |n \rangle\). Thus, the discussion on whether \(\langle A \rangle _{{\hat{\rho }}_{{\textbf {1}}}(t)} \ne \langle A \rangle _{{\hat{\rho }}_{{\textbf {0}}}(t)}\) or \(\langle A \rangle _{{\hat{\rho }}_{{\textbf {1}}}(t)} \approx \langle A \rangle _{{\hat{\rho }}_{{\textbf {0}}}(t)}\) is a discussion on whether the off-diagonal elements of the density matrix \(c_{m,\alpha } c_{n,\alpha }^*e^{i(E_n-E_m)t/\hbar }\) (that keep the memory of the initial state) are relevant in the evaluation of (5).

But such issues have been clarified in recent years in theoretical and experimental works on thermalization of closed quantum systems. In principle, the second term of the right hand side of (5) is a quasi-periodic function different from zero. There are experiments, for example in ultracold quantum gases trapped in ultrahigh vacuum by means of (up to a good approximation) conservative potentials [63, 64], that can be considered to be of the type of systems described above, with off-diagonal elements always relevant. The near unitary dynamics of such systems has been observed in beautiful experiments on collapse and revival phenomena of bosonic [65, 66] and fermionic [67] fields, without the relaxation phenomena predicted with traditional ensembles of statistical mechanics [68, 69]. Thus, as we have argued throughout the paper, there are computing scenarios where the condition C1 that environments are macroscopically distinguishable is physically viable. In fact, all these works on quantum thermalization of closed systems have been motivated by the need to understand the recent constructions of several prototypes of the so-called quantum simulations, where the behavior of a quantum system, which cannot be solved numerically due to the many-body problem of the Schrödinger equation, is empirically realized in the laboratory by studying the evolution of another controlled quantum system that mimics the first one. Obviously, in such (analog) quantum simulations, and also in (digital) quantum computations dealing with qubits, controlled (non-thermal) environments are mandatory to minimize decoherence phenomena.

It is true that a system satisfying condition C1 requires an important technological effort on engineering the behavior of the environment. In fact, there are other closed quantum systems that do thermalize, and such processes have been reasonably well understood too. At the initial time \(t=0\), it is clear that \(\langle A \rangle _{{\hat{\rho }}_{{\textbf {1}}}(0)} \ne \langle A \rangle _{{\hat{\rho }}_{{\textbf {0}}}(0)}\) because we start from macroscopically different states. But, after some time, we can find \(\langle A \rangle _{{\hat{\rho }}_{{\textbf {1}}}(t)} \approx \langle A \rangle _{{\hat{\rho }}_{{\textbf {0}}}(t)}\) if the off-diagonal terms become irrelevant. A simple argument can clarify the need for a delay to reach equilibration in a closed quantum system. Even if none of the terms \(c_{m,\alpha }\), \(c_{n,\alpha }^*\) and \(A_{m,n}\) are exactly zero at any time, it is possible to envision a scenario in which the whole off-diagonal sum in the right hand side of (5) is close to zero because the off-diagonal terms cancel each other when they add up as effectively random complex numbers. However, such randomization requires some time, which is called equilibration time \(t_{\textrm{eq}}\) in the literature. Then, the time evolution of \(\langle A \rangle\) after the equilibration time \(t>t_{\textrm{eq}}\), when the off-diagonal elements of the density matrix are no longer relevant, can be described by a time-independent diagonal density matrix in the energy representation \({\hat{\rho }}_{\textrm{diag}}=\sum _n |c_{n,\alpha }|^2 |n\rangle \langle n |\). In the literature, it is said that a quantum system undergoes equilibration when the expectation value in (5) satisfies \(\text {tr}\{ {\hat{A}} \hat{\rho } \}\approx \text {tr}\{ {\hat{A}} {\hat{\rho }}_{\textrm{diag}}\}\) for the overwhelming majority of times (allowing for some sporadic revivals) larger than the equilibration time \(t_{\textrm{eq}}\).
Notice that this diagonal matrix does not yet need to be a (micro-canonical, canonical or grand-canonical) thermal density matrix. In any case, we get macroscopically identical properties of the environments, \(\langle A \rangle _{{\hat{\rho }}_{{\textbf {1}}}(t)} \approx \langle A \rangle _{{\hat{\rho }}_{{\textbf {0}}}(t)}\). This corresponds to condition C2, where the environments are indistinguishable but not thermal yet. Again, a lot of experimental work on such quantum equilibration scenarios is present in the literature [56,57,58,59]. When \({\hat{\rho }}_{\textrm{diag}}\) is roughly equal to the micro-canonical (canonical or grand-canonical in open systems) density matrix, then the quantum system is said to be thermalized. This corresponds to condition C3.
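The cancellation of the off-diagonal terms by dephasing can be illustrated with a small numerical sketch. Everything in it is a simplifying assumption made only for illustration, not the model of the paper: \(N\) energy levels drawn at random in a unit bandwidth, units with \(\hbar =1\), equal amplitudes \(c_{n}=1/\sqrt{N}\) with zero initial phases, and \(A_{n,m}=1\) for all \(m\ne n\). Under these assumptions the off-diagonal sum reduces to \((|\sum _n e^{iE_n t/\hbar }|^2-N)/N\).

```python
import cmath
import random

def coherence_sum(energies, t, hbar=1.0):
    """Off-diagonal sum over m != n of c_m c_n^* exp(i (E_n - E_m) t / hbar) A_{n,m},
    for equal amplitudes c_n = 1/sqrt(N), zero initial phases and A_{n,m} = 1."""
    n_levels = len(energies)
    s = sum(cmath.exp(1j * e * t / hbar) for e in energies)
    return (abs(s) ** 2 - n_levels) / n_levels

random.seed(0)                                   # reproducible hypothetical spectrum
energies = [random.random() for _ in range(400)]

initial = coherence_sum(energies, 0.0)           # all coherences add in phase
early = coherence_sum(energies, 0.1)             # too early: phases barely moved
late = [coherence_sum(energies, t) for t in (200.0, 400.0, 800.0)]

print("t = 0   :", initial)
print("t = 0.1 :", early)
print("late    :", late)
```

At \(t=0\) the coherences add in phase, at very short times they are almost unchanged, and at later times the sum is much smaller than its initial value. This is the sense in which the randomization of the phases requires some time.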

In the literature [56,57,58,59,60,61], one can find equilibration times \(t_{\textrm{eq}}\) ranging from a few femtoseconds to picoseconds, depending on the details and complexity of the systems at hand. If we define \(\theta _{n,\alpha }\) as the phase of \(c_{n,\alpha }\), a simple (but not rigorous) estimation of \(t_{\textrm{eq}}\) can be obtained by noting that at \(t=0\) all phases of the off-diagonal elements (coherences) satisfy \(e^{i(\theta _{n,\alpha }-\theta _{m,\alpha })}e^{i(E_n-E_m)0/\hbar }=e^{i(\theta _{n,\alpha }-\theta _{m,\alpha })}\), so that all phases of the off-diagonal elements together perfectly keep memory of the initial state \(\Psi _{\alpha }(x,y,0)\). To forget such memory, we require that the sum of all coherences in (5) vanishes after an equilibration time \(t_{\textrm{eq}}\). If such equilibration occurs, all relevant phases \((E_n-E_m)t_{\textrm{eq}}/\hbar\) have to reach a value equal to or larger than \(2\pi\) to ensure that the factors \(e^{i(\theta _{n,\alpha }-\theta _{m,\alpha })}e^{i(E_n-E_m)t_{\textrm{eq}}/\hbar }\) are randomly distributed. If we define \(\Delta E_{\textrm{eq}}={\textrm{min}}(E_n-E_m)\) of all relevant energies of the system, a simple estimate of the equilibration time is given by

\[t_{\textrm{eq}} \approx \frac{h}{\Delta E_{\textrm{eq}}}, \tag{6}\]

where h is the Planck constant. For a reservoir of length \(L=100\) nm, with a parabolic relation between energy and momentum, we can estimate a minimal energy gap between energy eigenstates equal to \(\Delta E\approx 10^{-3}\) or \(10^{-4}\) eV. If we use \(\Delta E\approx 10^{-4}\) eV in expression (6), we get an approximate value of the equilibration time \(t_{\textrm{eq}} \approx 1\) ps. Even though the formula (6) is not rigorous at all, it clarifies that the process of thermalization, in our case changing from different macroscopic properties \(\langle A \rangle _{{\hat{\rho }}_{{\textbf {1}}}(0)} \ne \langle A \rangle _{{\hat{\rho }}_{{\textbf {0}}}(0)}\) to identical macroscopic properties \(\langle A \rangle _{{\hat{\rho }}_{{\textbf {1}}}(t_{\textrm{eq}})} = \langle A \rangle _{{\hat{\rho }}_{{\textbf {0}}}(t_{\textrm{eq}})}\), cannot be instantaneous but requires a time to occur. This conclusion can alternatively be reached from the definitions of Markovian and non-Markovian open quantum systems [49]. An open quantum system interacting with an environment is, in principle, a non-Markovian system. The evolution of the system (together with the environment) can only be considered Markovian if we consider the evolution in (coarse-grained) time steps larger than the time interval needed for the environment to relax. Thus, the transition from non-Markovian to Markovian behavior also requires a time related to the relaxation of the environment. In conclusion, even in typical environments where the assumption of thermalization is reasonable, we cannot have an instantaneous thermalization process. This delay in the thermalization provides an unquestionable limit on the speed of computations that satisfy the basic assumption of the strong (original) Landauer limit. This limit is also shown in Fig. 2. Beyond THz frequencies, the assumption that environments are always macroscopically identical to a thermal bath is not admissible and the original Landauer dissipation seems not applicable.
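The orders of magnitude quoted above can be checked with a short numerical sketch. It rests on assumptions that are not stated in the text: expression (6) is taken to have the form \(t_{\textrm{eq}} \approx h/\Delta E_{\textrm{eq}}\) suggested by the \(2\pi\) phase condition, the reservoir is modelled as a one-dimensional infinite well of length L, and the free-electron mass is used in the parabolic dispersion. The function names are hypothetical and chosen only for illustration.

```python
import math

# CODATA SI values.
H_PLANCK = 6.62607015e-34         # Planck constant h, in J*s
ELECTRON_MASS = 9.1093837015e-31  # free-electron mass, in kg (assumption)
JOULE_PER_EV = 1.602176634e-19    # 1 eV expressed in joules


def box_level_gap_ev(length_m, mass_kg=ELECTRON_MASS, n=1):
    """Gap E_{n+1} - E_n (in eV) of a 1D infinite well.

    Assumed model: E_n = n^2 h^2 / (8 m L^2), i.e. a parabolic
    energy-momentum relation with hard-wall boundaries.
    """
    e1_joule = H_PLANCK**2 / (8.0 * mass_kg * length_m**2)
    return e1_joule * ((n + 1) ** 2 - n**2) / JOULE_PER_EV


def equilibration_time_s(gap_ev):
    """Assumed form of expression (6): t_eq ~ h / Delta E_eq, in seconds."""
    return H_PLANCK / (gap_ev * JOULE_PER_EV)


if __name__ == "__main__":
    gap = box_level_gap_ev(100e-9)
    print(f"lowest gap for L = 100 nm: {gap:.2e} eV")
    for delta_e in (1e-3, 1e-4, gap):
        t_ps = equilibration_time_s(delta_e) * 1e12
        print(f"Delta E = {delta_e:.2e} eV -> t_eq ~ {t_ps:.1f} ps")
```

Under these assumptions the lowest gap for \(L=100\) nm is of the order of \(10^{-4}\) eV, and the estimated times range from a few picoseconds (for \(10^{-3}\) eV) to a few tens of picoseconds (for \(10^{-4}\) eV). The sketch therefore reproduces the picosecond scale of the non-rigorous estimate rather than an exact prefactor, which depends on the choice of mass and of h versus \(\hbar\).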

5 Conclusions

After more than 60 years, Landauer’s erasure principle is still accompanied by controversies. In this regard, Landauer himself wrote [70]: “The path to understanding in science is often difficult. If it were otherwise, we would not be needed. This field [fundamental physical limits of information handling], however, seems to have suffered from an unusually convoluted path.” What we find especially unfortunate in the recent developments in this field is the linking of the dissipation in computing gates to equilibrium thermodynamics (Footnote 12). This link is unfortunate because it is not only unnecessary (as we have seen in our paper), but it also has the undesired effect of limiting the imagination of many researchers. An exception that overcomes this limitation has recently been published in Ref. [71], where erasure gates using squeezed thermal environments are proposed.

Thus, at first sight, it seems that any attempt to discuss possible extensions of Landauer’s erasure principle beyond thermodynamic equilibrium requires the flexible tools of non-equilibrium thermodynamics. Such non-equilibrium tools will certainly still require some notion of equilibrium to be able to define what heat, work, etc. are. As we mentioned, this is the typical path followed by most investigations on extensions of Landauer’s principle. But this is not the path we have followed in our paper. Can we use a description of the erasure process based exclusively on the mechanical (not thermodynamic) laws of physics? Yes, of course. An erasure gate is, at the end of the day, a physical system whose performance follows the fundamental microscopic laws of physics. As an example, in “Appendix 2”, we have shown a toy model of an erasure gate whose performance during several repetitions is evaluated by numerically solving the fundamental microscopic laws that simultaneously govern the degrees of freedom of the system and the environment.

The reader can (erroneously) argue that we have used some thermodynamic concepts, not only microscopic laws, throughout the paper because we have included entropy arguments. We have only used a definition of entropy based on the number of microstates that are present in a given macrostate. By construction, such a concept of entropy is perfectly adequate in a microscopic description of any system (independently of whether it is used in thermodynamic discussions too). It only requires the proper definition of a macroscopic state in terms of microscopic states, as we have done in Sect. 2. Then, of course, in Sect. 3.3 we have invoked the equilibrium thermodynamics concepts of heat and temperature, but only to reach the original Landauer formulation, which is nothing but a special case of our general formulation.

The main advantage (and drawback) of our paper is that it uses classical microscopic physics. As such, it provides a mathematically simple and physically rigorous understanding of the three types of Landauer’s erasure principle. But, strictly speaking, the results of this paper have not been demonstrated to be valid in quantum scenarios. A rigorous quantum extension of the classical microscopic explanation presented here is far beyond the scope of this paper. The main reason is that there is still strong disagreement in the scientific community on how to define a quantum microscopic state (if it exists at all). In fact, even the wave function (linked to any definition of a microstate) is currently under lively debate (does it represent only epistemic knowledge about the outcomes of future measurements, or is it something ontologically real?) [54]. It is not even clear whether the wave function is enough to define a microscopic state, since it is also argued that present quantum theory has to be understood as something emergent, as an average description of an underlying more complicated quantum dynamics (with additional microscopic variables) [54]. Despite this poor understanding of what quantum microscopic states are, in Sect. 4 we have provided some quite general evidence that it is reasonable to expect that the classical results presented here also apply in a quantum regime. Basically, even under the assumption that a quantum environment will effectively reach some type of equilibrium (whatever that means), some time will be needed to reach it. In addition, in “Appendix 1”, after selecting a particular interpretation of quantum mechanics, we have also provided a natural extension of the classical results of the main text to the quantum regime.

Finally, let us mention that the strong Landauer’s erasure principle has not yet been relevant for practical devices because other, larger sources of dissipation are present nowadays. It seems reasonable to expect that in the future, when the other sources of dissipation disappear, the strong Landauer’s erasure principle will still not be relevant, because future computing devices will work at frequencies for which the assumption of an environment in (classical or quantum) thermodynamic equilibrium will no longer be valid, as shown by the shaded region in Fig. 2.

We hope that the present work will help to develop new research avenues for engineering computing devices with environments that satisfy condition C2 involving entropy change without heat dissipation, or even approaching condition C1 where the entropy change can be reduced significantly.

Data Availability Statement

Data will be made available on reasonable request. This manuscript has associated data in a data repository. [Authors’ comment: See CORA RDR at https://dataverse.csuc.cat/].

Notes

Certainly, nowadays, the logical information in electronic devices is linked to different potential energies (a low voltage is assigned to a logical \({\textbf {0}}\) and a high voltage to a logical \({\textbf {1}}\)). Thus, the operation \({\textbf {1}}\rightarrow {\textbf {0}}\) implies an unavoidable dissipation of energy (to transform a high voltage into a low voltage). In Landauer’s erasure protocol, and in our example in “Appendix 2”, however, the logical information is linked to a macroscopic property other than the energy, so that \({\textbf {0}}\) and \({\textbf {1}}\) can be defined for macroscopic states with the same energy.

The division between system and environment degrees of freedom is arbitrary, but such arbitrariness will have no effect at all on the discussion. In this sense, the typical division found in the literature between information-bearing degrees of freedom and non-information-bearing degrees of freedom is artificial too.

The dissipation in a gate is a physical process that, obviously, happens independently of whether humans observe it or not. The fact that the macroscopic properties C1, C2 and C3 seem to be adapted to anthropomorphic perceptions does not mean that those conditions are subjective or dependent on human observations. Macroscopic conditions on physical systems are objective (physical) conditions which have to be satisfied by the microscopic evolutions. One can define these macroscopic properties as a result of a large-scale resolution of the apparatus which fixes the initial microscopic state or detects the final one. The macroscopic properties can be redefined as a particular distribution of phase space points \(X_C(t),Y_C(t)\). In turn, specifying such a distribution of phase-space points is exactly equivalent to specifying the Hamiltonian \(H_C(x,y)\). In other words, the Hamiltonian \(H_{C_1}(x,y)\) satisfying C1 is different from the Hamiltonian \(H_{C_2}(x,y)\) and both are different from \(H_{C_3}(x,y)\). The three Hamiltonians, by construction, can be designed to satisfy the same logical input/output table, but they are physically different in the way they manipulate the environment degrees of freedom during the system plus environment interaction. Thus, each of the Hamiltonians can have a different dissipation, even if they provide the same logical table. No human perception is involved in the discussion at all.

The conditions C1, C2 and C3 are basically conditions on what types of natural macroscopic properties can be expected for the final (not initial) environments. We consider that initial environments do not have any correlation/entanglement with the initial (\({\textbf {1}}\) or \({\textbf {0}}\)) logical property of the system and that they satisfy \({\textbf {E}}_{{\textbf {1}}\rightarrow {\textbf {0}}}(t_i)={\textbf {E}}_{{\textbf {0}} \rightarrow {\textbf {0}}}(t_i)\) for most relevant macroscopic properties. Of course, one can envision the possibility of more exotic initial environments so that exotic relations between initial and final entropies can be expected. Such engineering of the initial environment is far beyond the scope of this work and far from the spirit of Landauer’s erasure principle.

When dealing with a thermal environment, it is routinely assumed that the entropy variation of the environment is given by \(\Delta S_{env}=\Delta Q/T\), so that only the entropy variation of the system needs to be evaluated. This is the procedure in many classical and quantum developments [21]. By contrast, since we are considering general (not only thermal) environments, we are discussing the variation of the Boltzmann entropy for the whole system plus environment. Our procedure for the direct evaluation of the entropy of the whole system has the additional advantage of not having to assume that the whole entropy is equal to the sum of the entropy of its parts, which is not obvious when the parts have strong correlations between them.

Notice that Landauer and Bennett were right in their argument that an erasure gate designed to work only once is not a valid gate in the present discussion of dissipation. Our disagreement here is with the implicit assumption in Landauer’s and Bennett’s argument that condition C1 could only be satisfied by erasure gates that work only once without reset. This last assumption is wrong because we can imagine gates that satisfy condition C1 while working many times (without reset), as long as the initial and final environments are quite similar (but not identical). A simple toy model showing the physical soundness of our proposal can be found in “Appendix 2”.

The fact that there is no entropy limit for reversible logic with condition C2 is a well-known result and even tested experimentally [37].

Notice that we can engineer systems with asymmetric phase space volumes for the initial \({\textbf {1}}\) and \({\textbf {0}}\) (or the associated environment phase spaces) so that, in one of the operations, the entropy change can be lower than the value predicted by Landauer as explained in Ref. [12]. In any case, these exotic results (validated experimentally in Ref. [41]) do not contradict the spirit of the original Landauer’s principle. One could also envision exotic entropy relations by considering final \({\textbf {1}}\) and \({\textbf {0}}\) whose macroscopic system properties are different from the macroscopic system properties assigned to the initial \({\textbf {1}}\) and \({\textbf {0}}\).

Let us clarify whether the degrees of freedom y include all the positions of the rest of the Universe or not. In principle, it seems that we would have to consider y as the rest of the Universe, but this is not needed. The mentioned experiments [56,57,58,59,60,61] on closed quantum systems do not include the whole Universe because the time scale dictating the equilibration between the system and the nearby degrees of freedom of the environment can be much shorter than the time scales introduced by the coupling of the system to the rest of the Universe. Thus, we have the right to discuss our system plus nearby degrees of freedom as a closed quantum system, as long as we are not looking for its behavior at very long times [55]. The whole topic of thermalization of (well-approximated) closed quantum systems [56,57,58,59,60,61] is based on this reasonable separation between a nearby environment and the rest of the Universe.