Abstract

We present a systematic study demonstrating the impact of lattice QCD data on the extraction of generalised parton distributions (GPDs). For this purpose, we use a previously developed GPD model based on machine learning techniques, which fulfils the theoretical requirements of polynomiality, a form of positivity constraint and known reduction limits. Special care is given to estimating the uncertainty stemming from the ill-posed character of the connection between GPDs and the experimental processes usually considered to constrain them, like deeply virtual Compton scattering (DVCS). Mock lattice QCD data inputs are combined, in a Bayesian framework, with a prior model based on an Artificial Neural Network. This prior model is fitted to reproduce the most experimentally accessible information of a phenomenological extraction by Goloskokov and Kroll. We highlight the impact of the precision, correlation and kinematic coverage of lattice data on GPD extraction at moderate \(\xi \), which has only been touched upon in the literature so far, paving the way for a joint extraction of GPDs.

1 Introduction

Generalised parton distributions (GPDs) were introduced in the 1990s [1,2,3] and have since been a very active topic of theoretical and experimental studies (see e.g. the review papers [4,5,6] among others). This interest is fuelled both by the interpretation of GPDs as 3D number densities of quarks and gluons within the nucleon [7, 8] and by their connection to the energy momentum tensor (EMT) [2, 9].

A recent study [10] pointed out the large magnitude of uncertainty underlying the connection between GPDs and the exclusive processes usually considered to constrain these objects, such as deeply virtual Compton scattering (DVCS) [11, 12], timelike Compton scattering (TCS) [13] or deeply virtual meson production (DVMP) [14, 15]. More precisely, the coefficient functions of these processes are not practically invertible, even when taking into account QCD corrections on a large range of energy scale, and despite the constraints brought by Lorentz covariance. The results of [10] were confirmed in [16] for the JLab range of the skewness \(\xi \), defined as half of the momentum fraction transferred on the lightcone. Accordingly, in this paper, we will consider a range such that \(\xi \in [0.1,0.5]\). In this region, which constitutes the bulk of the most precise experimental data on DVCS, QCD evolution provides little help to alleviate the so-called deconvolution problem. Recently, efforts were conducted to produce GPD models giving a better account of the uncertainty associated to the ill-defined extraction of GPDs from exclusive processes. The study [17] shows that when all the theoretical properties of GPDs (including positivity) are taken into account, the deconvolution uncertainties are mostly present in the so-called Efremov-Radyushkin-Brodsky-Lepage (ERBL) kinematic region.

The ambiguities of the DVCS, TCS and DVMP processes have led to an on-going considerable theoretical and experimental effort to characterise other exclusive processes with richer kinematic structure. The best known case is double DVCS (DDVCS) [18, 19] where both the incoming and outgoing photons are deeply virtual. This additional kinematic degree of freedom allows, at leading order and in a specific kinematic range, the extraction of the “deconvoluted” GPDs. A new analysis performed in [20] suggests that DDVCS is measurable in near-future experiments. Recently, other processes have been put forward such as di-photon production [21, 22], photon meson pair production [23, 24], or the more general single diffractive hard exclusive processes [25, 26].

In addition to the experimental inputs, a new source of information regarding GPDs has emerged through lattice QCD simulations. Indeed, new formalisms developed in [27,28,29,30] have offered the possibility to connect non-local spacelike Euclidean matrix elements computed on the lattice to lightlike ones through perturbation theory. We focus here on two different formalisms. First, the large-momentum effective theory [28], known as the “quasi-distribution” formalism, has triggered a lot of interest, both in terms of simulation and perturbative computation regarding the so-called matching kernels. GPD matching kernels have been computed [31, 32] and the first lattice QCD studies of GPDs have been performed [33].

The second formalism, and the one we will be focusing on throughout this paper, is known as the “short-distance factorisation” or “pseudo-distribution” formalism [29]. Based on a Lorentz decomposition of the Euclidean matrix elements, it allows the extraction of parton distributions in Ioffe time \(\nu \), that is, the Fourier conjugate of the momentum fraction x. This method was first introduced in [29], and steady progress has been made since then, both on the perturbative matching side [34,35,36] and on the lattice simulation side (see for instance [37, 38]). The formalism has two advantages: working with ratios of matrix elements greatly simplifies the renormalisation procedure and allows an easier extrapolation to the continuum limit. It also presents continuous matching kernels. However, this method, like any lattice-QCD attempt to access lightcone distributions, comes with a difficulty in the actual reconstruction of the momentum dependence of the \({\overline{MS}}\) distribution. As only a restricted range of Ioffe times can be probed numerically with acceptable noise levels, the inversion of the Fourier transform to reconstruct an x-dependent distribution is an ill-posed inverse problem, namely an imputation problem. Several attempts have already been made to handle this specific ill-posed problem [39,40,41].

The combination of phenomenological and non-local spacelike lattice inputs on parton distributions has already been explored in some recent papers, for instance on the proton PDF [42] and pion PDF [43]. For GPD studies, here related to deeply virtual Compton scattering (DVCS) at leading order, the situation is made significantly more complicated due to the higher dimensionality compared to ordinary PDFs. The recent works in [44, 45] have associated lattice data with various phenomenological and experimental inputs, where the authors have mostly considered GPD lattice data at vanishing skewness. An additional difficulty in offering a framework for the joint study of experimental and lattice inputs on GPDs is the number of parameters involved. Indeed, for only two light quark flavours, one needs to model 20 different GPDs – two valence quark distributions, two sea quark, plus a gluon distribution, repeated for the four helicity combinations H, E, \({\tilde{H}}\) and \({\tilde{E}}\) [45]. This abundance of functions to extract forced the authors to employ only basic modelling of the skewness relevant in the small \(\xi \) regime.

In the present work, we develop a different strategy to combine phenomenology and lattice data, focusing on moderate skewness. In this domain, lattice computations offer the perspective of a significant reduction of the uncertainty associated with the deconvolution problem of the usually considered exclusive processes. We use a previously developed GPD model presented in [17], which offers significant flexibility precisely at moderate skewness. It is illusory in the current state of experimental and lattice data to perform a satisfactory flavour and helicity decomposition with this kind of flexible model. Therefore, instead of adjusting it on actual experimental and lattice results, we assume that the flavour and helicity decomposition has been obtained already. On the experimental side, we use the phenomenological information encoded in the Goloskokov and Kroll (GK) model [46,47,48], whereas on the lattice side, we generate mock lattice data. Some tension between lattice and experimental data is hinted at in [42] and [45], whereas [43], using short-distance factorization, states that when taking into account all sources of systematic uncertainty, lattice and experimental data are generally compatible. We therefore adopt this assumption of compatibility in the study, which offers the possibility to merge phenomenology and lattice inputs thanks to a Bayesian reweighting procedure. This procedure offers advantages with respect to a new fit. First, it is far cheaper in terms of computing time, allowing us to explore many more cases in terms of correlation in the data, level of noise or kinematic coverage than a full refit would allow. Second, it offers an intuitive way of assessing the reduction of uncertainties when including new data. Finally, in a realistic case, real lattice and experimental data might be in tension, and Bayesian reweighting can highlight such discrepancies in a more obvious way than a simple \(\chi ^2\) in a full refit would. Indeed, a fit tries to meet the conflicting datasets halfway, partially averaging out the discrepancies.

Summarizing, we probe in this paper whether experimental data which suffers from a deconvolution problem and lattice data which suffers from an imputation one can be combined advantageously. For this first study, we disregard the evolution properties of GPDs and thus the matching kernels between pseudo-Ioffe distributions and GPDs. The impact of the perturbative QCD corrections is left for future works.

In Sect. 2, we briefly review key aspects of GPDs and their connection with Ioffe-time distributions. We also revisit the main characteristics of the flexible GPD model introduced in [17]. In Sect. 3, we show how the model is adjusted to a phenomenological parametrization so as to reproduce the main experimental features of a GPD extraction. Section 4 is dedicated to the generation of the set of mock lattice QCD data. We introduce in Sect. 5 the reweighting procedure used to combine our mock lattice QCD data with the GPD model. Finally, we discuss the results of the reweighting in Sect. 6, and conclude.

2 Kinematics and GPD modelling

2.1 Definition and properties of GPDs

GPDs can be formally defined as the Fourier transform of non-local matrix elements evaluated on a lightlike distance \(z^-\) [4]:

where we have restricted our definition to the unpolarized quark GPD in a nucleon whose mass is labelled m. We also omitted the Wilson line in the definition of the operator, which needs to be added when working in gauges other than the lightcone gauge. The average momentum of the incoming and outgoing hadronic states \(|p_{1}\rangle \) and \(|p_{2}\rangle \) is defined as:

while x is the usual lightcone momentum fraction defined as:

with \(k^{+}_{1,2}\) the incoming and outgoing momentum of the struck quark. The total four-momentum transfer squared t is given by:

and the skewness \(\xi \) is defined as:

GPDs have to obey several properties which play a crucial role in their modelling. First, the forward limit of GPDs is given in terms of PDFs, such that:

where q(x) is the unpolarised quark PDF of flavour q and \(\Theta \) the Heaviside step function. GPDs are also connected to electromagnetic form factors (EFFs) through:

where \(F^q_1\) is the contribution of the quark flavour q to the Dirac EFF. GPDs have to obey the so-called polynomiality property, stating that their Mellin moments are polynomials in \(\xi \) of a given order [49, 50]:

where \([\dots ]\) is the floor function and \(\text {mod}(n,2)\) is 0 for n even, and 1 otherwise. The polynomiality property is understood as a consequence of the Lorentz covariance of GPDs, and can be systematically implemented thanks to the Radon transform [51,52,53] in the double distribution formalism [50]. Finally, let us highlight that GPDs are also constrained by the so-called positivity properties [54,55,56,57], the most classical one being given by:

Respecting all these constraints at once represents a challenge for the phenomenology of GPDs.

GPDs can also be expressed as a function of the Ioffe time parameter \(\nu = p\cdot z\) [58], which is the Fourier conjugate of the average momentum fraction x. The relation between \({\hat{H}}(\nu ,\xi ,t)\) and \(H(x,\xi ,t)\) is thus given by [34]:

In the following, we drop the “hat” on \({\hat{H}}(\nu )\) as the explicit dependence in \(\nu \) or x is enough to indicate whether we work in Ioffe time or momentum space.

As we have mentioned before, non-local matrix elements computed on the lattice are Euclidean, so the distance z between the operators defining the GPD is spacelike (\(z^2 < 0\)) contrary to the lightlike distance \(z^-\) used in the usual lightcone definition of parton distributions. As a result, Ioffe-time pseudo-distributions computed on the lattice, once the continuum limit has been appropriately taken, are functions \(\mathfrak {M}(\nu , \xi , t, z^2)\), which can be matched perturbatively to the \({\overline{MS}}\) scheme to yield the Ioffe-time distributions such as \(H(\nu , \xi , t, \mu ^2)\) (see [34, 36]). Matching from \(z^2\)-dependent pseudo-distributions to \(\mu ^2\)-dependent \({\overline{MS}}\) distributions is only a minor correction at small Ioffe time, which we will neglect in this paper (see footnote 1). This effect should however be properly accounted for in further studies.

In the following, for simplicity we limit the scope of our study to the singlet sea quark GPD \(H^{q(+)}(x, \xi , t) = H^{q}(x, \xi , t) - H^{q}(-x, \xi , t)\). Imposing this parity property means that we will only study the imaginary part of \(H(\nu )\). We also ignore the t-dependence of GPDs and the entanglement between t and \(\xi \) which splits the kinematic domain between physical and unphysical regions, to work with \(t=0\) all along. This choice is made to primarily focus on the deconvolution of the x and \(\xi \) dependence helped by lattice data. The implications of the t dependence are left for a future work.
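The parity argument above can be checked numerically. The sketch below is only illustrative: it assumes that the Ioffe-time distribution is obtained as \(\int _{-1}^{1}\textrm{d}x\, e^{ix\nu } H(x,\xi ,t)\) (our reading of the relation quoted from [34]), and it uses a purely hypothetical toy shape `toy_gpd` that is not the model of [17]. Under these assumptions, the antisymmetric combination has a vanishing real part, so that only \(\text {Im}\,H(\nu )\) carries information.

```python
import numpy as np

def toy_gpd(x):
    """Hypothetical toy quark distribution supported on 0 < x < 1 (illustration only)."""
    x = np.asarray(x, dtype=float)
    inside = (x > 0.0) & (x < 1.0)
    xs = np.where(inside, x, 0.5)  # placeholder value avoids invalid powers outside the support
    return np.where(inside, xs**0.5 * (1.0 - xs) ** 3, 0.0)

def singlet(x):
    """Singlet combination H(x) - H(-x), odd in x by construction."""
    return toy_gpd(x) - toy_gpd(-x)

def ioffe_time(nu, n_x=4001):
    """Trapezoidal estimate of int_{-1}^{1} dx exp(i x nu) [H(x) - H(-x)] (assumed convention)."""
    x = np.linspace(-1.0, 1.0, n_x)
    integrand = np.exp(1j * x * nu) * singlet(x)
    step = x[1] - x[0]
    return step * (integrand.sum() - 0.5 * (integrand[0] + integrand[-1]))

for nu in (0.5, 2.0, 6.0):
    value = ioffe_time(nu)
    print(f"nu = {nu}: Re = {value.real:.1e}, Im = {value.imag:.4f}")
```

With an odd integrand in x, the cosine part of the exponential integrates to zero and only the sine part survives, which is why the real part is compatible with zero up to rounding.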

2.2 GPD modelling with artificial neural networks

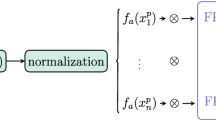

Our study is based on methods recently developed in [17], where artificial neural network (ANN) techniques were used for the first time in a direct modelling of GPDs. This new way of modelling has been designed to address the problems of model dependence and of the implementation of the theoretical constraints encountered in GPD phenomenology, but also to facilitate a future inclusion of lattice QCD information. To keep our article self-contained, we now recall important details of ANN GPD modelling.

Our GPD model based on ANNs significantly differs from a textbook implementation of machine learning techniques (see e.g. Ref. [59]). The reason for that is the desire to fulfil in the architecture of the neural network a set of theory-driven constraints for a valid GPD model. These constraints are among others linked to the parity properties of GPDs, the polynomiality property (8) and known limits like (6). The positivity constraints (9) are not guaranteed at the level of the architecture of the network, but rather enforced numerically during the training procedure.

The model proposed in Ref. [17] is built in the double distribution space involving variables \(\beta \) and \(\alpha \). The relation with the GPD H in momentum space is given by the Radon transform:

where \(\textrm{d}\Omega =\textrm{d}\beta \,\textrm{d}\alpha \,\delta (x-\beta -\alpha \xi )\) and \(|\alpha |+|\beta |\le 1\). In (11), \(F(\beta , \alpha ,t)\) is called a double distribution and is the object we will model. The benefit of using the double distribution space is an automatic fulfilment of the polynomiality property by the resulting GPD \(H(x, \xi ,t)\).
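To illustrate why the double distribution representation enforces polynomiality, the following sketch performs the Radon transform numerically for a toy double distribution and computes Mellin moments of the resulting GPD. Everything here is an assumption made for illustration: `toy_double_distribution` is a hypothetical function \((1-|\beta |-|\alpha |)^2\), not the ANN model of [17], and t is ignored. For this toy choice, the \(n=0\) moment should be independent of \(\xi \) and the \(n=2\) moment should equal \((1+\xi ^2)/45\), i.e. a polynomial of order 2 in \(\xi \).

```python
import numpy as np

def toy_double_distribution(beta, alpha):
    """Hypothetical DD, even in alpha, vanishing on and outside |alpha| + |beta| = 1."""
    return np.clip(1.0 - np.abs(beta) - np.abs(alpha), 0.0, None) ** 2

def uniform_trapezoid(values, step):
    """Trapezoidal rule along the last axis for a uniformly spaced grid."""
    return step * (values.sum(axis=-1) - 0.5 * (values[..., 0] + values[..., -1]))

def gpd_from_dd(x, xi, n_beta=2001):
    """Radon transform for xi > 0: the delta function fixes alpha = (x - beta) / xi."""
    beta = np.linspace(-1.0, 1.0, n_beta)
    alpha = (x[:, None] - beta[None, :]) / xi
    integrand = toy_double_distribution(beta[None, :], alpha) / xi
    return uniform_trapezoid(integrand, beta[1] - beta[0])

def mellin_moment(n, xi, n_x=1601):
    """Numerical estimate of int_{-1}^{1} dx x^n H(x, xi)."""
    x = np.linspace(-1.0, 1.0, n_x)
    return uniform_trapezoid(x**n * gpd_from_dd(x, xi), x[1] - x[0])

for xi in (0.1, 0.3, 0.5):
    # Columns: xi, n=0 moment, n=2 moment, analytic n=2 value for this toy DD
    print(xi, mellin_moment(0, xi), mellin_moment(2, xi), (1.0 + xi**2) / 45.0)
```

The polynomial structure follows from \(\int \textrm{d}x\, x^n H(x,\xi ) = \int \textrm{d}\beta \,\textrm{d}\alpha \,(\beta +\alpha \xi )^n F(\beta ,\alpha )\), which holds for any double distribution, so the check does not depend on the particular toy function chosen.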

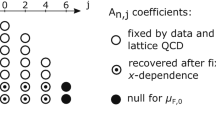

To achieve a satisfactory flexibility and reproduction of known limits, our double distribution model is composed of three parts:

Let us address successively the role of each term in (12). The first one,

ensures by design the proper reduction to the forward limit and has the necessary flexibility to model the \(x=\xi \) line, which is particularly relevant for the current GPD phenomenology. The prefactor \((1-x^2)\) of \(F_C(\beta , \alpha )\) in (12) combined with \(1/(1-\beta ^2)\) in (13) was introduced to facilitate the fulfilment of the positivity constraint (9). The forward limit (unpolarised PDF for the GPD H) is denoted by \(f(\beta ) \equiv H(\beta ,0,0)\), while \(h_{C}(\beta , \alpha )\) is a profile function generalising that proposed by Radyushkin [54]. In this study it is given by:

Thanks to a special design of the activation function and the use of the absolute value, the neural network \(\textrm{ANN}_{C}(|\beta |, \alpha )\) is even w.r.t. both \(\beta \) and \(\alpha \) variables, and it vanishes at the edge of the support region \(|\beta | + |\alpha | = 1\). The symmetry in \(\beta \) is introduced to keep the resulting GPD an odd function of x (relevant for phenomenology of DVCS and TCS), while the symmetry in \(\alpha \) is mandatory, as it allows fulfillment of the time reversal property, i.e. the invariance under \(\xi \leftrightarrow -\xi \) exchange. The normalisation by the integral over \(\alpha \), which can be done analytically, allows enforcement of the proper forward limit, while the rest of the model is typically trained to reproduce the diagonal \(x = \xi \) probed by amplitudes of processes like DVCS, TCS and DVMP.

As the term \(F_{C}(\beta , \alpha )\) was found to be tightly constrained by the necessity of reproducing both \(\xi =0\) and \(x=\xi \) lines, an additional term \(F_{S}(\beta , \alpha )\) was introduced. This term explicitly vanishes on the \(\xi =0\) and \(x=\xi \) lines, i.e. it does not contribute to the fit of \(F_{C}(\beta , \alpha )\) on the phenomenological inputs. Rather, \((x^2-\xi ^2)F_S(\beta , \alpha )\) represents a contribution that is entirely unconstrained by DVCS data at Leading order (LO) accuracy and the knowledge of PDFs, and aims at reproducing the deconvolution uncertainty of exclusive processes. Precisely, it corresponds to a LO shadow distribution as defined and studied in [10]. \(F_S\) is constructed in the following way:

where:

Since this contribution is not constrained in the fit, the major limit on its size, aside from the maximal flexibility of the neural network, is really imposed by the positivity constraint. During training, we seek to maximise the \(N_{S}\) normalisation factor in (16) within the limits allowed by positivity so as to leverage the maximal flexibility. Writing the function \(h_S(\beta , \alpha )\) as the difference of two different profile functions characterized by \(\textrm{ANN}_{S}(|\beta |, \alpha )\) and \(\textrm{ANN}_{S'}(|\beta |, \alpha )\) ensures that \(F_S(\beta , \alpha )\) brings no contribution to the forward limit. The \(f(\beta )\) factor helps to enforce the positivity.

Finally, \(F_{D}(\beta , \alpha )\) gives the additional flexibility necessary to model the D-term, a degree of freedom of GPDs associated to the final terms in \(\xi ^{n+1}\) in (8) and which plays a crucial role in the characterisation of partonic matter [60, 61]. One has:

and

where \(d_{i}\) are coefficients of the expansion of D-term into Gegenbauer polynomials, and where N is an arbitrary truncation parameter.

3 Representation of experimental data and estimation of uncertainties

Let us now discuss how the model encodes a representation of experimental data for processes like DVCS, TCS and DVMP which we will use as an input for the lattice QCD impact study. We stress again that experimental data for the aforementioned processes mostly probe GPDs at the \(x=\xi \) line (with some additional information on the D-term), and that at \(t = 0\), the forward limit, i.e. the PDF, is very well known from a wealth of inclusive and semi-inclusive processes.

We use a built-in PDF parameterisation proposed in [62] for \(f(\beta )\) involved in (13) and (15). Free parameters of the \(F_{C}(\beta , \alpha )\) term are constrained by 200 points of \(H^q(x,\xi =x)\) generated with the GK model [46,47,48] spanning over the range of \(-4< \log _{10}(x = \xi ) < \log _{10}(0.95)\) at fixed \(t = 0\) and \(\mu ^2 = 4~\textrm{GeV}^{2}\). The term \(F_{S}(\beta , \alpha )\) is only constrained by the positivity requirement (9), giving rise to large uncertainties when \(x < \xi \). The details of the constraining procedure are given in Ref. [17].

The uncertainty of the model is encoded in a collection of 100 replicas. This representation is convenient for the propagation of uncertainty to any related quantity and for the use of Bayesian reweighting techniques. A single replica represents the outcome of an independent fit to the 200 \(x=\xi \) points indicated in the previous paragraph, with a random choice of the initial parameters (weights and biases of ANNs, and normalisation parameter \(N_S\) in (16)). In this way, we probe how lattice data can help constrain the ill-posed inverse problem between DVCS data and GPDs. We thus do not fluctuate the output of the GK model according to some experimental uncertainties (see footnote 2).

To evaluate the mean and standard deviation of a quantity derived from the GPD at a specific kinematic point – which can be the GPD itself, a 3D number density, an observable, etc. – one may use any statistical estimator of the mean and uncertainty of the population X of 101 values returned by replicas. In this analysis we use respectively the median and the median absolute deviation (MAD):

where the factor 1.4826 is derived from the assumption of gaussianity.
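As a minimal sketch of these estimators, assuming the standard definition of the MAD rescaled by 1.4826 so that it matches the standard deviation for Gaussian data, one may write the following. The function name `robust_center_and_spread` and the synthetic sample with three artificial outliers are hypothetical and serve only to contrast the robust estimators with the ordinary mean and standard deviation.

```python
import numpy as np

MAD_TO_SIGMA = 1.4826  # rescaling valid under the assumption of Gaussianity

def robust_center_and_spread(values):
    """Return the median and the rescaled median absolute deviation of a sample."""
    values = np.asarray(values, dtype=float)
    center = np.median(values)
    spread = MAD_TO_SIGMA * np.median(np.abs(values - center))
    return center, spread

# Synthetic population standing in for the values returned by the replicas
rng = np.random.default_rng(0)
sample = rng.normal(1.0, 0.2, size=101)
sample[:3] = [50.0, -40.0, 75.0]  # three artificial pathological entries

print("median, rescaled MAD:", robust_center_and_spread(sample))
print("mean, standard deviation:", sample.mean(), sample.std())
```

In this toy example the median and MAD remain close to the parameters of the underlying Gaussian, whereas the mean and standard deviation are driven by the three outliers.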

We note that the population of 101 values returned by replicas may contain outliers, i.e. pathological values with respect to the other entries, distorting the evaluation of mean and uncertainty. This may happen due to instabilities in the numerical procedures, which cannot be entirely avoided due to the complexity of GPD modelling and the constraining procedure. Many methods for detecting and removing outliers have been proposed in the literature, like the \(3\sigma \)-method [63]. Alternatively, one can choose estimators that are robust with respect to outliers, which is our motivation for using the MAD.

Figure 1 shows 100 replicas of \(H(\nu , \xi , t =0)\) evaluated at three values of \(\xi \), together with its \(1 \bar{\sigma }\) band. For small values of \(\xi \), the replica bundle is extremely coherent, or auto-correlated, at small Ioffe time. This is due to the fact that the positivity constraint considerably limits the freedom of the model for \(x > \xi \), a region that is all the more extended as \(\xi \) is small.

Fig. 1: The set of GPD replicas \(\text {Im}\,H(\nu , \xi ,t=0)\) between Ioffe times \(\nu =0\) and \(\nu =20\) at \(\xi =0.1\) (top), \(\xi =0.5\) (middle), \(\xi =0.9\) (bottom) and their corresponding one standard deviation bands. Due to the shrinking support \(x > \xi \) as \(\xi \) increases, the replicas become less constrained by positivity, oscillate more heavily, and decohere.

4 Generating mock lattice QCD data

After describing in the previous section the fit of our flexible GPD model with phenomenological inputs, we turn to the question of the generation of plausible mock lattice QCD data. In accordance with our choice of working in the pseudo-distribution formalism, we will generate data at small Ioffe time \(\nu \). More precisely, for each value of \(\xi \) that we will consider, we generate the data in three independent batches, corresponding to the following regions in \(\nu \):

1. \(0.2\le \nu \le 2\), with data spaced regularly with interval \(\Delta \nu =0.2\),

2. \(2.2\le \nu \le 4\), with interval \(\Delta \nu =0.2\),

3. \(4.4\le \nu \le 6\), with interval \(\Delta \nu =0.4\).

We introduce these three independent batches of lattice QCD data to loosely mimic actual simulations where various sets of points with different correlations arise from varying the boosted hadron momenta and the separation between fields in the non-local operator.

We assume the correlation between different batches of data to be zero. In contrast, we allow for correlations inside the batches, characterized for convenience by a single coefficient \(0\le c<1\), which is the same between any two lattice data points in the same batch, and does not vary from batch to batch. This choice of a constant correlation coefficient is obviously an oversimplification, but it already allows us to get a rough estimate of the impact of correlations on the determination of GPDs.

We characterise how uncertainty grows from \(\nu = 0\) to \(\nu = 10\) by an exponential slope parameter called b. Figure 2 presents the relative uncertainty profiles which we will use in this study, given by

where \(b^\nu \) is the parameter b raised to the power of the Ioffe time \(\nu \), and \(b^{\nu _\text {max}}\) is the same quantity evaluated at the maximal value of \(\nu \) we suppose achievable on the lattice. As can be immediately checked, this formula guarantees that when \(\nu = \nu _{\text {max}}\), the relative uncertainty is 100%, and when \(\nu = 0\), it reduces to s, here chosen as 5%. We choose the uncertainty to reach 100% at \(\nu _{\text {max}}=10\) to replicate the common behaviour of lattice data, which tend to exhibit an uncertainty of the order of their central value around this point in Ioffe time (see, for example, figure 9a of [38]). The parameter b controls the steepness of the uncertainty increase. For \(b \rightarrow 1\), the uncertainty increases linearly with Ioffe time, while for larger values of b, it starts with a plateau of good precision and then degrades very quickly. The two cases \(b = 1.1\) and \(b= 2\) presented in Fig. 2 represent two archetypes of lattice uncertainties, corresponding respectively to data with a bad or good signal-to-noise ratio.
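The properties listed above can be illustrated with a short sketch. We stress that the functional form used in `relative_uncertainty`, namely \(s + (1-s)\,(b^{\nu }-1)/(b^{\nu _\text {max}}-1)\), is an assumption: it is one simple profile that reproduces the stated limits (s at \(\nu =0\), 100% at \(\nu =\nu _\text {max}\), linear growth for \(b\rightarrow 1\)), and may differ in detail from the expression actually used.

```python
import numpy as np

def relative_uncertainty(nu, b, s=0.05, nu_max=10.0):
    """Assumed relative-uncertainty profile: s at nu = 0, 100% at nu = nu_max (requires b != 1)."""
    nu = np.asarray(nu, dtype=float)
    return s + (1.0 - s) * (b**nu - 1.0) / (b**nu_max - 1.0)

nu_grid = np.array([0.0, 2.0, 4.0, 6.0, 10.0])
for b in (1.1, 2.0):
    # Larger b keeps the uncertainty near s for longer before it rises steeply
    print(f"b = {b}:", np.round(relative_uncertainty(nu_grid, b), 3))
```

With this profile, \(b=2\) stays close to the 5% floor over the whole range \(\nu \le 6\) where mock data are generated, whereas \(b=1.1\) already exceeds 40% at \(\nu =5\).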

We will therefore use as uncertainty of our mock lattice data

where \(\bar{\mu }(\nu )\) is the median of the output of our flexible GPD model at \(\nu \) and the relevant value of \(\xi \). Each of the three batches of data points contains a number of samples at Ioffe times \(\nu _{i}\). We characterize the correlation between these points inside each batch by a coefficient c and express the associated covariance matrix

We then draw the central values \(\mu _i^{\text {Latt}}\) of our mock lattice data points from a normal distribution centered on the median of the output of the flexible GPD model at each \(\nu _i\), with the covariance matrix \(\Omega _{i,i'}^{\text {Latt}}\). This means that we choose our mock lattice data to be globally compatible with the central value of our flexible GPD model fitted on phenomenological inputs.
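The generation procedure can be sketched as follows. Several ingredients are assumptions introduced for illustration only: the covariance is taken as \(\sigma _i \sigma _{i'}\left[ \delta _{ii'} + c\,(1-\delta _{ii'})\right] \), which is the simplest matrix with a constant correlation coefficient c inside a batch; the uncertainty profile is the assumed form \(s + (1-s)(b^{\nu }-1)/(b^{\nu _\text {max}}-1)\); and `toy_prior_median` is a hypothetical stand-in for the median of the prior GPD model.

```python
import numpy as np

def relative_uncertainty(nu, b, s=0.05, nu_max=10.0):
    """Assumed relative-uncertainty profile (s at nu = 0, 100% at nu_max)."""
    return s + (1.0 - s) * (b**nu - 1.0) / (b**nu_max - 1.0)

def toy_prior_median(nu):
    """Hypothetical stand-in for the median of the prior model in Ioffe time."""
    return nu * np.exp(-nu / 4.0)

def batch_covariance(sigma, c):
    """Constant-correlation covariance: sigma_i sigma_j [delta_ij + c (1 - delta_ij)]."""
    correlation = np.full((sigma.size, sigma.size), c)
    np.fill_diagonal(correlation, 1.0)
    return correlation * np.outer(sigma, sigma)

# The three independent batches in Ioffe time described in the text
BATCHES = [
    0.2 * np.arange(1, 11),        # 0.2 <= nu <= 2, step 0.2
    2.0 + 0.2 * np.arange(1, 11),  # 2.2 <= nu <= 4, step 0.2
    4.0 + 0.4 * np.arange(1, 6),   # 4.4 <= nu <= 6, step 0.4
]

def generate_mock_data(b, c, rng):
    """Draw correlated central values batch by batch; batches are mutually uncorrelated."""
    data = []
    for nu in BATCHES:
        median = toy_prior_median(nu)
        sigma = relative_uncertainty(nu, b) * np.abs(median)
        cov = batch_covariance(sigma, c)
        central = rng.multivariate_normal(median, cov)
        data.append((nu, central, cov))
    return data

mock = generate_mock_data(b=2.0, c=0.5, rng=np.random.default_rng(1))
for nu, central, cov in mock:
    print(nu.size, "points, first central value:", central[0])
```

For \(0\le c<1\) and non-vanishing uncertainties this covariance is positive definite, so the multivariate normal draw is well defined.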

Figure 3 gives an example of a mock lattice data set (orange points) superimposed on the replicas of our GPD model. The four panels demonstrate the effect of various combinations of the correlation coefficient c and the noise-level parameter b. Increasing c increases the degree to which one of the central values influences the choice of the others within a given batch in \(\nu \), while increasing the parameter b results in a data set much more concentrated around the maximum likelihood of the GPD model.

Fig. 3: The set of GPD replicas between Ioffe times \(\nu =0\) and \(\nu =6\) at \(\xi =0.1\) (green), their median (blue), the \(1\sigma \) band (red) corresponding to \(b=1.1\) (top) and \(b=2\) (bottom), and the corresponding generated mock lattice data set (orange) determined by \(c=0\) (left) and \(c=0.5\) (right).

5 Bayesian reweighting

Now, we would like to assess the impact of the different sets of mock lattice data generated in the previous section on the GPD model. For this purpose, for each replica \(R_k\), we introduce a goodness-of-fit estimator \(\chi ^{2}_{k}\):

where \(\mu _{i}^{\text {Latt}}\) is the central value of the lattice data generated at point \(\nu _{i}\). With this definition, \(\chi ^{2}_{k}\) estimates the relative compatibility of a given replica \(R_{k}\) with the mock data within the uncertainty of the latter. Bayesian reweighting consists in assigning to each replica \(R_k\) a weight \(\omega _k\) computed from the goodness-of-fit \(\chi ^2_k\) through [64]

where Z is a normalization factor such that \(\sum _{k}\omega _{k}=1\). One can further define the effective fraction of replicas R which are compatible with the new data set as

where the exponentiated value is the Shannon entropy of the weight set.
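A compact sketch of these three steps is given below, under explicit assumptions. The goodness-of-fit is taken as the usual quadratic form built from the inverse covariance matrix. The weights are taken as \(\omega _k \propto \exp (-\chi ^2_k/2)\); this is one common prescription for Bayesian reweighting and is an assumption here, since another widespread variant includes an additional factor \((\chi ^2_k)^{(n-1)/2}\) for n data points. The effective fraction is computed as \(\exp (-\sum _k \omega _k \ln \omega _k)\) divided by the number of replicas, consistently with the Shannon-entropy description above. All function names and the random inputs are hypothetical.

```python
import numpy as np

def chi2_per_replica(replica_values, central, cov):
    """Quadratic form (R_k - mu)^T cov^{-1} (R_k - mu) for each replica (rows of replica_values)."""
    residuals = replica_values - central
    solved = np.linalg.solve(cov, residuals.T)  # shape (n_points, n_replicas)
    return np.einsum("kj,jk->k", residuals, solved)

def bayesian_weights(chi2):
    """Assumed prescription: weights proportional to exp(-chi2 / 2), normalised to unit sum."""
    unnormalised = np.exp(-0.5 * (chi2 - chi2.min()))  # shift by the minimum for numerical stability
    return unnormalised / unnormalised.sum()

def effective_fraction(weights):
    """exp(Shannon entropy of the weights) divided by the number of replicas."""
    nonzero = weights[weights > 0.0]
    entropy = -np.sum(nonzero * np.log(nonzero))
    return np.exp(entropy) / weights.size

# Hypothetical inputs: 100 replicas evaluated at 10 Ioffe times
rng = np.random.default_rng(0)
replicas = rng.normal(0.0, 1.0, size=(100, 10))
central = np.zeros(10)
cov = 0.25 * np.eye(10)

chi2 = chi2_per_replica(replicas, central, cov)
weights = bayesian_weights(chi2)
print("effective fraction of replicas:", effective_fraction(weights))
```

When mock data at several values of \(\xi \) are uncorrelated with each other, the per-replica \(\chi ^2\) arrays obtained at each \(\xi \) can simply be added before calling `bayesian_weights`.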

In the following, we will generate several sets of mock lattice data at various values of \(\xi \) and reweight our GPD model simultaneously with these new data at several \(\xi \). We call this the “multikinematic” reweighting, as opposed to the “monokinematic” reweighting where only one value of \(\xi \) is considered. As we do not model any correlation between mock lattice data at different values of \(\xi \), the total \(\chi ^2\) is then the sum of the \(\chi ^2\) evaluated at each \(\xi \).

5.1 Reweighted statistics

Once weights \(\omega _k\) have been attributed to the replicas, we need to define the central value and standard deviation of a weighted distribution. At each kinematic point where we want to evaluate the weighted central value and deviation, we first order the replica values monotonically, and then define the weighted median \(\bar{\mu }_\omega \) as the element satisfying the condition

The weighted median is the element which most accurately splits the weights evenly. The weighted standard deviation estimator \(\bar{\sigma }_\omega \) is obtained by simply replacing the median by its weighted equivalent in (19).
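A possible implementation of these weighted estimators is sketched below. It assumes that the weighted median is the first element, in increasing order, at which the cumulative weight reaches one half, so that the total weight strictly below it and the total weight strictly above it are each at most one half. It further assumes that the weighted spread is 1.4826 times the weighted median of the absolute deviations from the weighted median, computed with the same weights. The function names are hypothetical.

```python
import numpy as np

MAD_TO_SIGMA = 1.4826  # same Gaussian rescaling as in the unweighted case

def weighted_median(values, weights):
    """Element at which the cumulative normalised weight first reaches 1/2."""
    values = np.asarray(values, dtype=float)
    weights = np.asarray(weights, dtype=float)
    order = np.argsort(values)
    cumulative = np.cumsum(weights[order]) / weights.sum()
    # Clip guards against the cumulative sum ending marginally below 1 through rounding
    index = min(np.searchsorted(cumulative, 0.5), values.size - 1)
    return values[order][index]

def weighted_spread(values, weights):
    """Rescaled weighted median absolute deviation around the weighted median."""
    values = np.asarray(values, dtype=float)
    center = weighted_median(values, weights)
    return MAD_TO_SIGMA * weighted_median(np.abs(values - center), weights)

values = np.array([3.0, 1.0, 2.0])
weights = np.array([0.2, 0.2, 0.6])
print(weighted_median(values, weights), weighted_spread(values, weights))
```

For uniform weights and an odd number of replicas these definitions reduce to the ordinary median and MAD, which provides a simple consistency check of the reweighted statistics against the prior ones.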

5.2 Metrics of the impact of Bayesian reweighting

The effective fraction \(\tau \) of replicas compatible with the data set, defined in (25), tells us how constraining the new data set is compared to the prior model. It is mostly a tool to evaluate the statistical significance of the reweighted distribution: if \(\tau \) is too small, the weighted mean and standard deviation should not be considered as statistically significant. However, \(\tau \) brings only indirect information on the reduction of uncertainty. To characterise the latter, which is our main physical objective, we introduce the ratio \(\Sigma (y)\), defined as

At a given value of y (which can represent any kinematic, in momentum space or Ioffe time), it represents the fraction of uncertainty remaining after reweighting. If \(\Sigma (y)=1\), the uncertainty of the replica bundle at y has not decreased via reweighting. If \(\Sigma (y)<1\), some reduction of uncertainty has occurred via reweighting at that point. We further define the average retainment of uncertainty in Ioffe time as

and a corresponding ratio in momentum space where we adopt a logarithmic scale,

We calculate the uncertainty retainment values \(r_{\nu }\) for the region \(0\le \nu \le 20\) and \(r_{\text {ln}x}\) for the region \(5\times 10^{-3}\le x\le 1\). These two metrics, intended for \(\nu \) and x spaces respectively, assign a global numerical value to the reduction of uncertainty following the introduction of the mock lattice data, which will be convenient to compare various scenarios.
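These metrics can be sketched numerically as follows, assuming that \(\Sigma (y)\) is the ratio of the reweighted to the prior uncertainty and that the average retainment is the integral of \(\Sigma \) over the chosen range divided by the length of that range, with the integration variable being \(\nu \) in Ioffe time and \(\ln x\) in momentum space. The function names and the example profile of \(\Sigma (\nu )\) are hypothetical.

```python
import numpy as np

def uncertainty_ratio(sigma_reweighted, sigma_prior):
    """Sigma(y): fraction of the prior uncertainty remaining after reweighting."""
    return np.asarray(sigma_reweighted, dtype=float) / np.asarray(sigma_prior, dtype=float)

def average_retainment(ratio, grid, log_scale=False):
    """Average of Sigma over the grid, in the variable y or ln(y) (trapezoidal rule)."""
    ratio = np.asarray(ratio, dtype=float)
    grid = np.asarray(grid, dtype=float)
    t = np.log(grid) if log_scale else grid
    integral = np.sum(0.5 * (ratio[1:] + ratio[:-1]) * np.diff(t))
    return integral / (t[-1] - t[0])

# Hypothetical example: strong reduction where data exist (nu <= 6), none beyond
nu = np.linspace(0.0, 20.0, 401)
sigma_prior = np.ones_like(nu)
sigma_reweighted = np.where(nu <= 6.0, 0.2, 1.0)
r_nu = average_retainment(uncertainty_ratio(sigma_reweighted, sigma_prior), nu)
print("r_nu =", r_nu)

# Momentum-space counterpart on a logarithmic grid from 5e-3 to 1
x = np.geomspace(5e-3, 1.0, 300)
r_lnx = average_retainment(np.full_like(x, 0.78), x, log_scale=True)
print("r_lnx =", r_lnx)
```

Using \(\ln x\) as the integration variable gives each decade in x the same weight in the average, in line with the logarithmic scale adopted for the momentum-space metric.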

Fig. 4: Upper plots: The set of GPD replicas at \(\xi =0.1\) in momentum space (green, left) and Ioffe-time space (green, right), their central value (blue-dashed), the one \(\sigma \) band (red-dashed), the reweighted central value (pink-solid) and the reweighted one \(\sigma \) band (purple-solid). The mock lattice data was generated using \(b=2\) (high precision) and \(c=0\) (no correlation) at \(\xi =0.1\) (orange-dotted, right). Lower plots: The reweighted to initial uncertainty ratio (purple-solid), the average uncertainty retainment in both x (\(r_{\text {ln}x}=0.78\)) and \(\nu \) (\(r_{\nu }=0.16\)) (green-dashed), and the range in which the lattice data was generated, \(\nu =0\) to \(\nu =6\) (orange-shade, right). The associated effective fraction of replicas retained after reweighting is given by \(\tau =0.3\).

Same as Fig. 4, except that the GPD is shown at \(\xi = 0.5\), with mock data added at \(\xi = 0.5\), again with \(b=2\) (high precision) and \(c=0\) (no correlation). The average uncertainty retainments are \(r_{\text {ln}x}=0.54\) and \(r_{\nu }=0.25\), with \(\tau =0.11\).

Fig. 7 Same caption as Fig. 4, except that the GPD is shown at \(\xi = 0.5\), with mock data added at \(\xi = 0.1\), \(b=1.1\) (low precision) and \(c=0.5\) (correlated data). The average uncertainty retainments are \(r_{\text {ln}x}=1.15\) and \(r_{\nu }=0.93\), with \(\tau =0.83\).

One should note that although we generate mock lattice data in the range \(0\le \nu \le 6\), our uncertainty retainment metric \(r_{\nu }\) extends up to \(\nu = 20\). We do this to assess the ability of lattice data to decrease the uncertainty of our GPD model even in Ioffe time regions where we do not expect to be able to extract statistically significant lattice data. Indeed, [43] concluded that the data at low values of Ioffe time, thanks to their smaller uncertainties, represented in effect the bulk of the constraint even at larger Ioffe times. We also do not include the entire region \(0\le x\le 1\) in our metric of uncertainty retainment \(r_{\text {ln}x}\), integrating only from the lower bound \(x=5\times 10^{-3}\). We impose this lower bound because \(\Sigma (x)\) becomes relatively constant at lower x: on a logarithmic scale, including this plateau in the integration region would completely mask the discrimination effects at large x.

6 Results of the reweighting

6.1 Monokinematic reweighting

Let us now apply the tools of Bayesian reweighting using our GPD model fitted on phenomenological inputs as a prior, and our mock data as the new information. As a first example, we look at monokinematic reweighting, i.e. we add mock data at a single value of \(\xi \) and measure its impact on the GPD extraction at this same value. We recall that, on average, the generated mock lattice data becomes closer to the most likely output of the prior model as b increases. As c increases, the mock lattice data remains more consistently above or below the central value of the prior model.
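The reweighting step can be sketched as follows. This is only an illustration under stated assumptions: the weights are taken to follow a Gaussian likelihood, \(w_k \propto \exp (-\chi ^2_k/2)\), and the effective fraction \(\tau \) is taken to be the exponential of the Shannon entropy of the weights divided by the number of replicas. The precise definitions used in this study are given in the earlier sections and may differ (see also [64]). All function names are hypothetical.

```python
import numpy as np

def reweight(pred, data, cov):
    """Bayesian weights for N replicas.

    pred: (N, n) replica predictions at the n data kinematics
    data: (n,) mock data central values
    cov:  (n, n) data covariance matrix
    Assumption: Gaussian likelihood, w_k proportional to exp(-chi2_k / 2).
    """
    resid = pred - data
    chi2 = np.einsum("ki,ij,kj->k", resid, np.linalg.inv(cov), resid)
    w = np.exp(-0.5 * (chi2 - chi2.min()))  # shift by the minimum for stability
    return w / w.sum()

def effective_fraction(w):
    """Assumed tau: exp(Shannon entropy of the weights) / N, which lies in (0, 1]."""
    nz = w[w > 0]
    return float(np.exp(-np.sum(nz * np.log(nz))) / len(w))

def sigma_ratio(values, w):
    """Sigma(y): reweighted standard deviation over the initial one.

    values: (N, n_y) replica values on a grid in y (x or Ioffe time).
    """
    mean_w = w @ values
    std_w = np.sqrt(w @ (values - mean_w) ** 2)
    return std_w / values.std(axis=0)

# Toy usage with random numbers standing in for a replica bundle.
rng = np.random.default_rng(0)
replicas = rng.normal(size=(500, 4))
weights = reweight(replicas[:, :2], np.zeros(2), 0.25 * np.eye(2))
tau = effective_fraction(weights)
ratio = sigma_ratio(replicas, weights)
```

With uniform weights the ratio is 1 at every point and \(\tau = 1\), so any departure from these values measures what the new data have changed.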

Fig. 8 Same caption as Fig. 4, except that the GPD is shown at \(\xi = 0.5\), with mock data added at \(\xi = \{0.1, 0.2, 0.3\}\), \(b=1.1\) (low precision) and \(c=0.5\) (correlated data). The average uncertainty retainments are \(r_{\text {ln}x}=1.0\) and \(r_{\nu }=0.82\), with \(\tau =0.77\).

Fig. 9 Same caption as Fig. 4, except that the GPD is shown at \(\xi = 0.5\), with mock data added at \(\xi = \{0.1, 0.2, 0.3, 0.4, 0.5\}\), \(b=1.1\) (low precision) and \(c=0.5\) (correlated data). The average uncertainty retainments are \(r_{\text {ln}x}=0.75\) and \(r_{\nu }=0.65\), with \(\tau =0.57\).

The result for \(b=2\) (high precision), \(c = 0\) (uncorrelated data) and \(\xi = 0.1\) is shown in Fig. 4, while Fig. 5 shows the result for \(\xi = 0.5\) with the other parameters unchanged. The right-hand plots show the effect of reweighting in Ioffe time: we observe a large reduction of uncertainty, which remains effective far outside the range of the data (the light orange zone), although the case \(\xi = 0.5\) shows more fluctuations linked to the lesser coherence of the replica bundle. The average retainment of uncertainty in Ioffe time is \(r_\nu = 0.16\) at \(\xi = 0.1\), and \(r_\nu = 0.25\) at \(\xi = 0.5\).

On the other hand, the left-hand plots show a far less spectacular reduction of uncertainty in momentum space. The situation is actually inverted, with a larger reduction of uncertainty at \(\xi = 0.5\) than at \(\xi = 0.1\). Indeed, the retainment of uncertainty remains very large, \(r_{\text {ln}x} = 0.78\), at \(\xi = 0.1\), whereas it is \(r_{\text {ln}x} = 0.54\) at \(\xi = 0.5\). The fact that the uncertainty is less reduced in momentum space than in Ioffe time is an illustration of the imputation problem evoked in the introduction. The reconstruction of the x-dependence from limited Ioffe time data is an inverse problem which triggers a significant rise in uncertainty because the mock data provide no control over the “high-frequency” behaviour of the momentum distribution. As a result, the retained uncertainty is about twice as large in momentum space as in Ioffe time at \(\xi = 0.5\), and about five times as large at \(\xi = 0.1\). Why does reweighting perform so poorly at \(\xi = 0.1\) in momentum space? The answer comes from the extreme coherence of the replicas of the GPD model in Ioffe time in the region where the reweighting is performed. This means that the GPD model ultimately contains very little information in this region, such that the reweighting cannot efficiently select features of the distribution that would make a significant difference in momentum space.
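As a rough check, and reading the comparison between the two spaces as the ratio of the retainment values quoted above (an interpretation on our part), the factors of about two and five follow from

\[ \left. \frac{r_{\text {ln}x}}{r_{\nu }}\right| _{\xi = 0.5} = \frac{0.54}{0.25} \approx 2.2, \qquad \left. \frac{r_{\text {ln}x}}{r_{\nu }}\right| _{\xi = 0.1} = \frac{0.78}{0.16} \approx 4.9. \]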

We reiterate that the origin of the large coherence is the fact that positivity tightly constrains the GPD over a large part of the x dependence at \(\xi = 0.1\) (namely for \(x > 0.1\)). We therefore add lattice data in a region where another theoretical constraint in momentum space has already considerably reduced the freedom in modelling. The smaller the initial modelling uncertainties, the more precise lattice data must be to have any impact. On the other hand, the model is much more flexible at \(\xi = 0.5\), giving lattice data a relatively better discriminating power in momentum space.

To compare the effect of reweighting at various values of \(\xi \), we show in Fig. 6 the effective fraction of surviving replicas \(\tau \) and the retainment of uncertainty in Ioffe time and momentum space as functions of \(\xi \). As the value of \(\xi \) increases, the replica bundle decoheres and the effective fraction of replicas \(\tau \) drops quickly. For \(\xi > 0.7\), a full refit seems necessary due to the low statistics. We observe that the reduction of uncertainty is systematically better in Ioffe time than in momentum space, as expected due to the imputation problem. Using more precise and uncorrelated data (\(b = 2, c= 0\)) generally produces a significantly larger reduction of uncertainty in Ioffe time than the other configurations, but this is not reflected in momentum space. Overall, we observe in the case \(b = 1.1\) (low precision) that the retainment of uncertainty in momentum space decreases with larger values of \(\xi \). The erratic behaviour of the uncertainty for \(b = 2\) stems from the small value of \(\tau \), which makes results unreliable at large \(\xi \), but underlines how constraining the new data are compared to the prior model.

This highlights that, with our modelling, it is in the large \(\xi \) region, where positivity does not provide significant constraints on the GPD, that we would observe the largest effect from the inclusion of lattice data. However, we have worked here at \(t = 0\), where we could use the very well-known unpolarised PDF as an input to our model, and used a simplified version of the positivity constraint, neglecting for instance the role of the GPD E. In general, the behaviour of GPDs at \(\xi = 0\) but \(t \ne 0\) is one of the most crucial aspects of GPD phenomenology and can be accessed readily on the lattice. Although our current study suggests preferentially including data at large \(\xi \), in a more complete setting the extraction of more precise t-dependent PDFs plays a major role. We note that by studying both \(\xi = 0\) and large \(\xi \), one handles two very interesting limits of GPDs: the former is directly involved in the hadron tomography program or the spin sum rule, whereas the latter probes very different partonic dynamics in the hadron, with a sensitivity to pairs of partons. Small but non-zero values of \(\xi \) can on the other hand be constrained rather efficiently by a combination of experimental data, positivity constraints and arguments from perturbation theory if working at a reasonably hard scale [65]. It remains however interesting to verify whether lattice data at moderately small values of \(\xi \) are in good agreement with positivity constraints, considering the words of caution against relying excessively on these inequalities at low renormalisation scale, as underlined for instance in [66].

6.2 Multikinematic reweighting

We would like to explore one last aspect, namely how reweighting propagates to other values of \(\xi \). So far, we have only studied the effect of reweighting at exactly the same value of \(\xi \) as the data we were simulating. We show in Fig. 7 the result of a reweighting where the data are added at \(\xi = 0.1\), but we observe the impact on the GPD at \(\xi = 0.5\), with \(b=1.1\) (low precision) and \(c = 0.5\) (correlated data). With these large uncertainties, the reweighting does not give any significant reduction of uncertainty at \(\xi = 0.5\) in Ioffe time (\(r_\nu = 0.93\)), and even produces an increase of uncertainty in momentum space (\(r_{\text {ln}x}=1.15\)) by smearing the distribution.

Let us now add data for \(\xi \in \{0.1, 0.2,0.3\}\) while keeping \(b=1.1\) and \(c=0.5\). The retainment of uncertainty at \(\xi = 0.5\) drops to \(r_\nu = 0.82\) and \(r_{\text {ln}x} = 1.0\) (Fig. 8). If we now add data for \(\xi \in \{0.1, 0.2,0.3,0.4,0.5\}\), the uncertainty retainment at \(\xi = 0.5\) tightens to \(r_\nu = 0.65\) and \(r_{\text {ln}x} = 0.75\) (Fig. 9). This is however no better than a simple reweighting directly at \(\xi = 0.5\), which results in \(r_\nu = 0.58\) and \(r_{\text {ln}x} = 0.64\) (see Table 1 for all results, including various combinations of uncertainty and correlation that we have not mentioned in the text or figures). This demonstrates that, within our modelling of GPDs, adding data at a given value of \(\xi \) has only a rather minimal effect at higher values of \(\xi \).
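One way to picture the multikinematic case is that each value of \(\xi \) contributes its own \(\chi ^2\) to a single set of replica weights. The sketch below assumes that the mock data sets at different \(\xi \) are statistically independent of each other (correlations are kept only within each set) and that the weights follow a Gaussian likelihood. Both are illustrative assumptions, and the function names are hypothetical.

```python
import numpy as np

def chi2_per_replica(pred, data, cov):
    """chi2 of each replica against one data set (pred: (N, n), cov: (n, n))."""
    resid = pred - data
    return np.einsum("ki,ij,kj->k", resid, np.linalg.inv(cov), resid)

def combined_weights(preds, datas, covs):
    """Weights from several data sets, e.g. one per value of xi.

    Assumption: the sets are mutually independent, so their chi2 values add,
    and w_k is proportional to exp(-chi2_total_k / 2).
    """
    total = sum(chi2_per_replica(p, d, c) for p, d, c in zip(preds, datas, covs))
    w = np.exp(-0.5 * (total - total.min()))
    return w / w.sum()

# Toy usage: two hypothetical data sets constraining the same 200 replicas.
rng = np.random.default_rng(1)
pred_a = rng.normal(size=(200, 3))  # stand-in for replica predictions at one xi
pred_b = rng.normal(size=(200, 2))  # stand-in for replica predictions at another xi
w_both = combined_weights(
    [pred_a, pred_b],
    [np.zeros(3), np.zeros(2)],
    [np.eye(3), np.eye(2)],
)
```

Under these assumptions, summing the \(\chi ^2\) values is equivalent to a single reweighting with a block-diagonal covariance matrix. Correlations between different values of \(\xi \) would require the full covariance instead.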

7 Conclusion

We have presented a study of the impact of mock lattice QCD data at moderate values of \(\xi \) on a GPD model. The latter is based on machine learning techniques and fitted to the forward limit and the diagonal \(x = \xi \) of the phenomenological GK model, which represent the typical experimental information available on GPDs. We further enforce a positivity constraint, which considerably limits the freedom of the model in the region \(x > \xi \). We observe as a result that our model uncertainties are largely autocorrelated in the small Ioffe-time region at small \(\xi \), meaning that lattice data bring only a minimal additional reduction of uncertainty in momentum space. We observe that the reduction of uncertainty in momentum space is systematically smaller than that in Ioffe time space, as a consequence of the inverse problem of relating the two representations of GPDs. We also observe that the addition of data at some low values of \(\xi \) impacts only minimally the GPD at higher values of \(\xi \). However, the latter point arises in a context where the t-dependence is neglected. When it is restored, one recovers the t-dependent PDF at zero skewness, a quantity which is not directly constrained by experimental data. We thus expect that the impact of lattice QCD data in the low-\(\xi \) region will increase with the value of |t|.

The Bayesian method employed here appears to be an adequate way to combine both experimental and lattice knowledge on GPDs, when the lattice data is globally in agreement with the prior model. However, our study highlighted the impact of correlations within the lattice data on a potential joint extraction. Real lattice data frequently present a very high degree of correlation, along with systematic effects that are only starting to be under control. This mandates a very careful treatment to avoid significant biases in the assessment of uncertainty reduction, which represents a challenge for the community.

Data availability

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: The set of replicas used for this study is available in the open source PARTONS framework. See http://partons.cea.fr for details.]

Notes

Observe for instance the minute effect of matching between figures 5 and 7 of [38]. It is noticed in this paper, and highlighted in its figure 6, that the matching kernel contains two parts of almost equal magnitude and opposite sign responsible for the rather small effects of the matching.

References

D. Mueller, D. Robaschik, B. Geyer, F.M. Dittes, J. Hořeǰsi, Wave functions, evolution equations and evolution kernels from light ray operators of QCD. Fortsch. Phys. 42, 101–141 (1994). https://doi.org/10.1002/prop.2190420202. arXiv:hep-ph/9812448

X.-D. Ji, Gauge-invariant decomposition of nucleon spin. Phys. Rev. Lett. 78, 610–613 (1997). https://doi.org/10.1103/PhysRevLett.78.610. arXiv:hep-ph/9603249

A.V. Radyushkin, Nonforward parton distributions. Phys. Rev. D 56, 5524–5557 (1997). https://doi.org/10.1103/PhysRevD.56.5524. arXiv:hep-ph/9704207

M. Diehl, Generalized parton distributions. Phys. Rep. 388, 41–277 (2003). https://doi.org/10.1016/j.physrep.2003.08.002. arXiv:hep-ph/0307382

A.V. Belitsky, A.V. Radyushkin, Unraveling hadron structure with generalized parton distributions. Phys. Rep. 418, 1–387 (2005). https://doi.org/10.1016/j.physrep.2005.06.002. arXiv:hep-ph/0504030 [hep-ph]

K. Kumericki, S. Liuti, H. Moutarde, GPD phenomenology and DVCS fitting. Eur. Phys. J. A 52(6), 157 (2016). https://doi.org/10.1140/epja/i2016-16157-3. arXiv:1602.02763 [hep-ph]

M. Burkardt, Impact parameter dependent parton distributions and off forward parton distributions for zeta –> 0. Phys. Rev. D 62, 071503 (2000). https://doi.org/10.1103/PhysRevD.62.071503. arXiv:hep-ph/0005108 [Erratum: Phys. Rev. D 66, 119903 (2002)]

M. Diehl, Generalized parton distributions in impact parameter space. Eur. Phys. J. C25, 223–232 (2002). https://doi.org/10.1007/s10052-002-1016-9. arXiv:hep-ph/0205208

M.V. Polyakov, Generalized parton distributions and strong forces inside nucleons and nuclei. Phys. Lett. B555, 57–62 (2003). https://doi.org/10.1016/S0370-2693(03)00036-4. arXiv:hep-ph/0210165

V. Bertone, H. Dutrieux, C. Mezrag, H. Moutarde, P. Sznajder, The deconvolution problem of deeply virtual Compton scattering. Phys. Rev. D 103(11), 114019 (2021). https://doi.org/10.1103/PhysRevD.103.114019. arXiv:2104.03836 [hep-ph]

A.V. Radyushkin, Scaling limit of deeply virtual Compton scattering. Phys. Lett. 380, 417–425 (1996). https://doi.org/10.1016/0370-2693(96)00528-X. arXiv:hep-ph/9604317

X.-D. Ji, Deeply virtual Compton scattering. Phys. Rev. D 55, 7114–7125 (1997). https://doi.org/10.1103/PhysRevD.55.7114. arXiv:hep-ph/9609381

E.R. Berger, M. Diehl, B. Pire, Time-like Compton scattering: exclusive photoproduction of lepton pairs. Eur. Phys. J. C23, 675–689 (2002). https://doi.org/10.1007/s100520200917. arXiv:hep-ph/0110062 [hep-ph]

A.V. Radyushkin, Asymmetric gluon distributions and hard diffractive electroproduction. Phys. Lett. B385, 333–342 (1996). https://doi.org/10.1016/0370-2693(96)00844-1. arXiv:hep-ph/9605431

J.C. Collins, L. Frankfurt, M. Strikman, Factorization for hard exclusive electroproduction of mesons in QCD. Phys. Rev. D56, 2982–3006 (1997). https://doi.org/10.1103/PhysRevD.56.2982. arXiv:hep-ph/9611433

E. Moffat, A. Freese, I. Cloët, T. Donohoe, L. Gamberg, W. Melnitchouk, A. Metz, A. Prokudin, N. Sato, Shedding light on shadow generalized parton distributions. Phys. Rev. D 108(3), 036027 (2023). arXiv:2303.12006 [hep-ph]

H. Dutrieux, O. Grocholski, H. Moutarde, P. Sznajder, Artificial neural network modelling of generalised parton distributions. Eur. Phys. J. C 82(3), 252 (2022). https://doi.org/10.1140/epjc/s10052-022-10211-5. arXiv:2112.10528 [hep-ph] [Erratum: Eur. Phys. J. C 82, 389 (2022)]

M. Guidal, M. Vanderhaeghen, Double deeply virtual Compton scattering off the nucleon. Phys. Rev. Lett. 90, 012001 (2003). https://doi.org/10.1103/PhysRevLett.90.012001. arXiv:hep-ph/0208275

A.V. Belitsky, D. Mueller, Exclusive electroproduction of lepton pairs as a probe of nucleon structure. Phys. Rev. Lett. 90, 022001 (2003). https://doi.org/10.1103/PhysRevLett.90.022001. arXiv:hep-ph/0210313

K. Deja, V. Martinez-Fernandez, B. Pire, P. Sznajder, J. Wagner, Phenomenology of double deeply virtual Compton scattering in the era of new experiments (2023). arXiv:2303.13668 [hep-ph]

A. Pedrak, B. Pire, L. Szymanowski, J. Wagner, Electroproduction of a large invariant mass photon pair. Phys. Rev. D 101(11), 114027 (2020). https://doi.org/10.1103/PhysRevD.101.114027. arXiv:2003.03263 [hep-ph]

O. Grocholski, B. Pire, P. Sznajder, L. Szymanowski, J. Wagner, Phenomenology of diphoton photoproduction at next-to-leading order. Phys. Rev. D 105(9), 094025 (2022). https://doi.org/10.1103/PhysRevD.105.094025. arXiv:2204.00396 [hep-ph]

R. Boussarie, B. Pire, L. Szymanowski, S. Wallon, Exclusive photoproduction of a \(\gamma \,\rho \) pair with a large invariant mass. JHEP 02, 054 (2017). https://doi.org/10.1007/JHEP02(2017)054. arXiv:1609.03830 [hep-ph] [Erratum: JHEP 10, 029 (2018)]

G. Duplančić, K. Passek-Kumerički, B. Pire, L. Szymanowski, S. Wallon, Probing axial quark generalized parton distributions through exclusive photoproduction of a \(\gamma \,\pi ^\pm \) pair with a large invariant mass. JHEP 11, 179 (2018). https://doi.org/10.1007/JHEP11(2018)179. arXiv:1809.08104 [hep-ph]

J.-W. Qiu, Z. Yu, Exclusive production of a pair of high transverse momentum photons in pion-nucleon collisions for extracting generalized parton distributions (2022). arXiv:2205.07846 [hep-ph]

J.-W. Qiu, Z. Yu, Single diffractive hard exclusive processes for the study of generalized parton distributions. Phys. Rev. D 107(1), 014007 (2023). https://doi.org/10.1103/PhysRevD.107.014007. arXiv:2210.07995 [hep-ph]

V. Braun, D. Müller, Exclusive processes in position space and the pion distribution amplitude. Eur. Phys. J. C 55, 349–361 (2008). https://doi.org/10.1140/epjc/s10052-008-0608-4. arXiv:0709.1348 [hep-ph]

X. Ji, Parton Physics on a Euclidean Lattice. Phys. Rev. Lett. 110(26), 262002 (2013). https://doi.org/10.1103/PhysRevLett.110.262002. arXiv:1305.1539 [hep-ph]

A.V. Radyushkin, Quasi-parton distribution functions, momentum distributions, and pseudo-parton distribution functions. Phys. Rev. D 96(3), 034025 (2017). https://doi.org/10.1103/PhysRevD.96.034025. arXiv:1705.01488 [hep-ph]

Y.-Q. Ma, J.-W. Qiu, Extracting parton distribution functions from lattice QCD calculations. Phys. Rev. D 98(7), 074021 (2018). https://doi.org/10.1103/PhysRevD.98.074021. arXiv:1404.6860 [hep-ph]

X. Ji, A. Schäfer, X. Xiong, J.-H. Zhang, One-loop matching for generalized parton distributions. Phys. Rev. D 92, 014039 (2015). https://doi.org/10.1103/PhysRevD.92.014039. arXiv:1506.00248 [hep-ph]

Y.-S. Liu, W. Wang, J. Xu, Q.-A. Zhang, J.-H. Zhang, S. Zhao, Y. Zhao, Matching generalized parton quasidistributions in the RI/MOM scheme. Phys. Rev. D 100(3), 034006 (2019). https://doi.org/10.1103/PhysRevD.100.034006. arXiv:1902.00307 [hep-ph]

C. Alexandrou, K. Cichy, M. Constantinou, K. Hadjiyiannakou, K. Jansen, A. Scapellato, F. Steffens, Unpolarized and helicity generalized parton distributions of the proton within lattice QCD. Phys. Rev. Lett. 125(26), 262001 (2020). https://doi.org/10.1103/PhysRevLett.125.262001. arXiv:2008.10573 [hep-lat]

A.V. Radyushkin, Generalized parton distributions and pseudodistributions. Phys. Rev. D 100(11), 116011 (2019). https://doi.org/10.1103/PhysRevD.100.116011. arXiv:1909.08474 [hep-ph]

Z.-Y. Li, Y.-Q. Ma, J.-W. Qiu, Extraction of next-to-next-to-leading-order parton distribution functions from lattice QCD calculations. Phys. Rev. Lett. 126(7), 072001 (2021). https://doi.org/10.1103/PhysRevLett.126.072001. arXiv:2006.12370 [hep-ph]

Y. Ji, F. Yao, J.-H. Zhang, Connecting Euclidean to light-cone correlations: from flavor nonsinglet in forward kinematics to flavor singlet in non-forward kinematics (2022). arXiv:2212.14415 [hep-ph]

K. Orginos, A. Radyushkin, J. Karpie, S. Zafeiropoulos, Lattice QCD exploration of parton pseudo-distribution functions. Phys. Rev. D 96(9), 094503 (2017). https://doi.org/10.1103/PhysRevD.96.094503. arXiv:1706.05373 [hep-ph]

C. Egerer, R.G. Edwards, C. Kallidonis, K. Orginos, A.V. Radyushkin, D.G. Richards, E. Romero, S. Zafeiropoulos, Towards high-precision parton distributions from lattice QCD via distillation. JHEP 11, 148 (2021). https://doi.org/10.1007/JHEP11(2021)148. arXiv:2107.05199 [hep-lat]

J. Karpie, K. Orginos, A. Rothkopf, S. Zafeiropoulos, Reconstructing parton distribution functions from Ioffe time data: from Bayesian methods to Neural Networks. JHEP 04, 057 (2019). https://doi.org/10.1007/JHEP04(2019)057. arXiv:1901.05408 [hep-lat]

L. Del Debbio, T. Giani, J. Karpie, K. Orginos, A. Radyushkin, S. Zafeiropoulos, Neural-network analysis of parton distribution functions from Ioffe-time pseudodistributions. JHEP 02, 138 (2021). https://doi.org/10.1007/JHEP02(2021)138. arXiv:2010.03996 [hep-ph]

J. Karpie, K. Orginos, A. Radyushkin, S. Zafeiropoulos, The continuum and leading twist limits of parton distribution functions in lattice QCD. JHEP 11, 024 (2021). https://doi.org/10.1007/JHEP11(2021)024. arXiv:2105.13313 [hep-lat]

J. Bringewatt, N. Sato, W. Melnitchouk, J.-W. Qiu, F. Steffens, M. Constantinou, Confronting lattice parton distributions with global QCD analysis. Phys. Rev. D 103(1), 016003 (2021). https://doi.org/10.1103/PhysRevD.103.016003. arXiv:2010.00548 [hep-ph]

P.C. Barry et al., Complementarity of experimental and lattice QCD data on pion parton distributions. Phys. Rev. D 105(11), 114051 (2022). https://doi.org/10.1103/PhysRevD.105.114051. arXiv:2204.00543 [hep-ph]

Y. Guo, X. Ji, K. Shiells, Generalized parton distributions through universal moment parameterization: zero skewness case. JHEP 09, 215 (2022). https://doi.org/10.1007/JHEP09(2022)215. arXiv:2207.05768 [hep-ph]

Y. Guo, X. Ji, M.G. Santiago, K. Shiells, J. Yang, Generalized parton distributions through universal moment parameterization: non-zero skewness case (2023). arXiv:2302.07279 [hep-ph]

S.V. Goloskokov, P. Kroll, Vector meson electroproduction at small Bjorken-x and generalized parton distributions. Eur. Phys. J. C 42, 281–301 (2005). https://doi.org/10.1140/epjc/s2005-02298-5. arXiv:hep-ph/0501242 [hep-ph]

S.V. Goloskokov, P. Kroll, The role of the quark and gluon GPDs in hard vector-meson electroproduction. Eur. Phys. J. C 53, 367–384 (2008). https://doi.org/10.1140/epjc/s10052-007-0466-5. arXiv:0708.3569 [hep-ph]

S.V. Goloskokov, P. Kroll, An attempt to understand exclusive pi+ electroproduction. Eur. Phys. J. C 65, 137–151 (2010). https://doi.org/10.1140/epjc/s10052-009-1178-9. arXiv:0906.0460 [hep-ph]

X.-D. Ji, Off forward parton distributions. J. Phys. G 24, 1181–1205 (1998). https://doi.org/10.1088/0954-3899/24/7/002. arXiv:hep-ph/9807358 [hep-ph]

A.V. Radyushkin, Symmetries and structure of skewed and double distributions. Phys. Lett. B 449, 81–88 (1999). https://doi.org/10.1016/S0370-2693(98)01584-6. arXiv:hep-ph/9810466 [hep-ph]

O.V. Teryaev, Crossing and radon tomography for generalized parton distributions. Phys. Lett. B 510, 125–132 (2001). https://doi.org/10.1016/S0370-2693(01)00564-0. arXiv:hep-ph/0102303 [hep-ph]

N. Chouika, C. Mezrag, H. Moutarde, J. Rodríguez-Quintero, Covariant extension of the GPD overlap representation at low Fock states. Eur. Phys. J. C 77, 906 (2017). https://doi.org/10.1140/epjc/s10052-017-5465-6. arXiv:1711.05108 [hep-ph]

C. Mezrag, An introductory lecture on generalised parton distributions. Few Body Syst. 63(3), 62 (2022). https://doi.org/10.1007/s00601-022-01765-x. arXiv:2207.13584 [hep-ph]

A.V. Radyushkin, Double distributions and evolution equations. Phys. Rev. D 59, 014030 (1999). https://doi.org/10.1103/PhysRevD.59.014030. arXiv:hep-ph/9805342 [hep-ph]

B. Pire, J. Soffer, O. Teryaev, Positivity constraints for off-forward parton distributions. Eur. Phys. J. C 8, 103–106 (1999). https://doi.org/10.1007/s100529901063. arXiv:hep-ph/9804284 [hep-ph]

M. Diehl, T. Feldmann, R. Jakob, P. Kroll, The overlap representation of skewed quark and gluon distributions. Nucl. Phys. B 596, 33–65 (2001). https://doi.org/10.1016/S0550-3213(00)00684-2. arXiv:hep-ph/0009255 [hep-ph]

P.V. Pobylitsa, Disentangling positivity constraints for generalized parton distributions. Phys. Rev. D 65, 114015 (2002). https://doi.org/10.1103/PhysRevD.65.114015. arXiv:hep-ph/0201030 [hep-ph]

V. Braun, P. Gornicki, L. Mankiewicz, Ioffe-time distributions instead of parton momentum distributions in description of deep inelastic scattering. Phys. Rev. D 51, 6036–6051 (1995). https://doi.org/10.1103/PhysRevD.51.6036. arXiv:hep-ph/9410318

G. Cybenko, Approximation by superpositions of a sigmoidal function. Math Control Signals Syst. 2(4), 303–314 (1989). https://doi.org/10.1007/BF02551274

K. Goeke, J. Grabis, J. Ossmann, M.V. Polyakov, P. Schweitzer et al., Nucleon form-factors of the energy momentum tensor in the chiral quark-soliton model. Phys. Rev. D 75, 094021 (2007). https://doi.org/10.1103/PhysRevD.75.094021. arXiv:hep-ph/0702030 [hep-ph]

M.V. Polyakov, P. Schweitzer, Forces inside hadrons: pressure, surface tension, mechanical radius, and all that. Int. J. Mod. Phys. A 33(26), 1830025 (2018). https://doi.org/10.1142/S0217751X18300259. arXiv:1805.06596 [hep-ph]

S.V. Goloskokov, P. Kroll, The longitudinal cross-section of vector meson electroproduction. Eur. Phys. J. C 50, 829–842 (2007). https://doi.org/10.1140/epjc/s10052-007-0228-4. arXiv:hep-ph/0611290 [hep-ph]

I.F. Ilyas, X. Chu, Data Cleaning (Association for Computing Machinery, New York, 2019)

R.D. Ball, V. Bertone, F. Cerutti, L. Del Debbio, S. Forte, A. Guffanti, N.P. Hartland, J.I. Latorre, J. Rojo, M. Ubiali, Reweighting and unweighting of parton distributions and the LHC W lepton asymmetry data. Nucl. Phys. B 855, 608–638 (2012). https://doi.org/10.1016/j.nuclphysb.2011.10.018. arXiv:1108.1758 [hep-ph]

H. Dutrieux, M. Winn, V. Bertone, Exclusive meets inclusive at small Bjorken-\(x_B\): how to relate exclusive measurements to PDFs based on evolution equations (2023). arXiv:2302.07861 [hep-ph]

J. Collins, T.C. Rogers, N. Sato, Positivity and renormalization of parton densities. Phys. Rev. D 105(7), 076010 (2022). https://doi.org/10.1103/PhysRevD.105.076010. arXiv:2111.01170 [hep-ph]

Acknowledgements

We thank Savvas Zafeiropoulos, Valerio Bertone, Hervé Moutarde, Benoît Blossier, Kostas Orginos and José Manuel Morgado Chavez for stimulating discussions. This work is supported in part in the framework of the GLUODYNAMICS project funded by the “P2IO LabEx (ANR-10-LABX-0038)” in the framework “Investissements d’Avenir” (ANR-11-IDEX-0003-01) managed by the Agence Nationale de la Recherche (ANR), France. H.D. is supported in part by the U.S. DOE Grant #DE-FG02-04ER41302 and the Gordon and Betty Moore Foundation. The work of P.S. was supported by the Grant No. 2019/35/D/ST2/00272 of the National Science Centre, Poland.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3.

About this article

Cite this article

Riberdy, M.J., Dutrieux, H., Mezrag, C. et al. Combining lattice QCD and phenomenological inputs on generalised parton distributions at moderate skewness. Eur. Phys. J. C 84, 201 (2024). https://doi.org/10.1140/epjc/s10052-024-12513-2

DOI: https://doi.org/10.1140/epjc/s10052-024-12513-2