Abstract

The MEG II experiment, located at the Paul Scherrer Institut (PSI) in Switzerland, is the successor to the MEG experiment, which completed data taking in 2013. MEG II began data taking with the fully operational detector in 2021, with the goal of improving the sensitivity to the \(\upmu ^+ \rightarrow {\textrm{e}}^+ \upgamma \) decay down to \(\sim 6 \times 10^{-14}\), almost an order of magnitude better than the current limit. In this paper, we describe the operation and performance of the experiment and give a new estimate of its sensitivity versus data acquisition time.

1 Introduction

The MEG II detector is the upgrade, with significant improvements and additions, of the MEG detector [1], which by 2013 had recorded data establishing the best limit to date on the SM-violating decay \(\upmu ^+ \rightarrow {\textrm{e}}^+ \upgamma \) [2]. The goal of the upgraded experiment is to improve the sensitivity to this decay by about one order of magnitude. The physical rationale for this upgrade and the design criteria for the MEG II subdetectors are outlined in [3].

The signal is a \({\upgamma }\)-ray and a positron emitted back-to-back, at the same time and from a common vertex, each with energy \(E_{\upgamma }\sim {E_{{\textrm{e}}^+}}\sim m_{\upmu }c^2/2=52.83\,\text {MeV}\). The main background is the accidental time coincidence of a high-momentum positron from Michel decay \(\upmu ^+ \rightarrow {\textrm{e}}^+ \upnu \bar{\upnu }\) with a high-energy \({\upgamma }\)-ray from radiative muon decay (RMD) \(\upmu ^+ \rightarrow {\textrm{e}}^+ \upnu \bar{\upnu }\upgamma \), positron Bremsstrahlung or positron annihilation in flight \({\textrm{e}}^+ {\textrm{e}}^- \rightarrow \upgamma \upgamma \). A smaller additional background comes from RMDs that produce both a high-momentum positron and a high-energy \({\upgamma }\)-ray.

A schematic view of the detector highlighting the main components can be found in Fig. 1. The detector is located at the \(\uppi \)E5 beamline of the high-intensity proton accelerator facility at the Paul Scherrer Institut (PSI) in Switzerland, where the world’s most intense continuous positive muon beam is stopped in a thin target and the decay products are measured. The positron spectrometer consists of the COnstant Bending RAdius (COBRA) superconducting magnet, which generates a gradient magnetic field ranging from \(1.27\,\text {T}\) at the centre to \(0.50\,\text {T}\) at either end of the magnet cryostat, and of the Cylindrical Drift CHamber (CDCH), which measures the positron momenta. The positron time is measured by the pixelated Timing Counter (pTC), and the Radiative Decay Counter (RDC) helps reject background. Finally, the Liquid Xenon (LXe) detector relies on the scintillation process to measure the energy, position and timing of the incident \({\upgamma }\)-ray.

We use a cylindrical coordinate system \((r,\phi , z)\) with the origin at the centre of COBRA. The z-axis is aligned along the COBRA axis in the direction of the incident muon beam. The azimuthal angle \(\phi = 0\) is opposite the centre of the LXe detector, and corresponds to the x-axis of the associated Cartesian coordinate system, while the y-axis points upward. Positrons follow trajectories with decreasing \(\phi \) coordinate. The polar angle \(\theta \) with respect to the z-axis is also used. The region with \(z < 0\) is called upstream, while the region with \(z > 0\) is called downstream.

The geometrical acceptance of the experiment is defined by the size of the LXe fiducial volume, which is approximately equal to \(\phi _{\upgamma } \in \left( \frac{2}{3} \pi , \frac{4}{3} \pi \right) \) and \(|\cos \theta _{\upgamma } | < 0.35\), giving an overall acceptance of \(\sim 11\%\). The efficiencies given below refer to this geometrical acceptance.
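The \(\sim 11\%\) figure follows directly from the two cuts: for isotropic emission, directions are uniform in \(\phi \) and in \(\cos \theta \), so the acceptance is \((1/3)\times 0.35 \approx 0.117\). The sketch below checks this analytically and with a Monte Carlo sample, using the coordinate conventions defined above. Treating the fiducial boundaries as the sharp cuts quoted in the text is an approximation, which is why the result is only close to the quoted value.

```python
import numpy as np

def angles(x, y, z):
    """Azimuth phi in [0, 2*pi) and polar angle theta from the z-axis,
    in the convention above (x-axis at phi = 0, y-axis upward)."""
    r = np.hypot(x, y)
    phi = np.mod(np.arctan2(y, x), 2.0 * np.pi)
    theta = np.arctan2(r, z)
    return phi, theta

def in_acceptance(phi, theta):
    """Approximate LXe fiducial acceptance, as sharp cuts on phi and cos(theta)."""
    return ((phi > 2.0 * np.pi / 3.0) & (phi < 4.0 * np.pi / 3.0)
            & (np.abs(np.cos(theta)) < 0.35))

# Analytic estimate: isotropic emission is uniform in phi and in cos(theta).
phi_fraction = (4.0 / 3.0 - 2.0 / 3.0) * np.pi / (2.0 * np.pi)  # 1/3
cos_fraction = 2 * 0.35 / 2.0                                   # 0.35
analytic = phi_fraction * cos_fraction

# Monte Carlo cross-check with isotropic unit vectors.
rng = np.random.default_rng(1)
v = rng.normal(size=(1_000_000, 3))
v /= np.linalg.norm(v, axis=1, keepdims=True)
phi, theta = angles(v[:, 0], v[:, 1], v[:, 2])
mc = in_acceptance(phi, theta).mean()
print(f"analytic {analytic:.4f}, MC {mc:.4f}")
```

A direction pointing along \(-x\) (towards the centre of the LXe detector, at \(\phi = \pi \)) lies in the middle of the accepted region.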

Fig. 2: \(\uppi \)E5 beamline section connected to the Z-channel. The elements up to Triplet II are shared between the MEG II and the Mu3e [6] experiments.

After the engineering runs of 2017 to 2020, which were required to commission the detector components, data acquisition with the fully operational detector began in 2021 with the \(\upmu ^+ \rightarrow {\textrm{e}}^+ \upgamma \) trigger activated.

In this paper, we only briefly summarise the subdetector designs, focusing on the differences between the design and its actual realisation; we then describe the operation and performance of the detector and compare the measured resolutions with the design values [3]. Finally, the expected sensitivity for the coming years is presented.

2 Beam

Pions and muons are produced by protons, accelerated to \(590\,\text {MeV}\) by the PSI ring cyclotron, impinging on the production target TgE at an angle of \(8^{\circ }\) with respect to the beam direction. This configuration, in operation since the second half of 2020, has proved to make the secondary beam intensities less sensitive to variations of the proton beam position. For a comprehensive and historical description of the PSI facility, see [4, 5].

The \(\uppi \)E5 area is served by a low-energy secondary beamline capable of delivering pions and muons in the \(10\text { to }120 \,\text {MeV}/ {{\textrm{c}}}\) momentum range, with a \(165^{\circ }\) angle of view with respect to the proton beam on TgE. Figure 2 shows the beamline layout from TgE up to the MEG II detector. The AHSW41 dipole, which is part of the proton beamline, captures the pions and muons in the backward direction and defines the momentum accepted by the \(\uppi \)E5 beamline. The collected charged particles are deflected by \(47.5^{\circ }\) and coupled into a straight section consisting of quadrupoles (QSF4*) and sextupoles (HSC4*). Along this section, three slit systems (FS41–42–43) are used to reduce the beam intensity delivered to the experimental area, and the slits FS41–43 are also used to cut the momentum distribution. Momentum selection with slits is possible because the dipoles introduce dispersion into the lattice: since the beam is not monochromatic, the horizontal transverse coordinate of a particle is correlated with its momentum.
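The momentum cut can be made concrete with the standard first-order relation: at a point with dispersion \(D\), a particle with fractional momentum deviation \(\delta = \Delta p/p\) is displaced horizontally by about \(D\,\delta \), so a slit of half-width \(w\) passes roughly \(|\delta | \le w/D\). The sketch below ignores the betatron (momentum-independent) beam size, and the dispersion value is hypothetical, chosen only for illustration; it is not a \(\uppi \)E5 parameter.

```python
def momentum_bite(slit_half_width_mm: float, dispersion_mm_per_percent: float) -> float:
    """Half-width of the accepted momentum band, in percent of the central momentum,
    for a slit at a location with the given dispersion (betatron beam size ignored)."""
    return slit_half_width_mm / dispersion_mm_per_percent

# Hypothetical dispersion, for illustration only (not a piE5 parameter).
D = 20.0  # mm of horizontal displacement per 1% momentum deviation
for w in (40.0, 20.0, 10.0):
    print(f"slit half-width {w:5.1f} mm -> |dp/p| <= {momentum_bite(w, D):.1f}%")
```

Closing a slit therefore lowers the intensity and narrows the momentum spread at the same time, which is the effect exploited with FS41.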

In the MEG II experiment, where surface \({\upmu ^+}\) \(({28}\,\text {MeV}/{\textrm{c}})\) are selected and stopped in the muon stopping target (see Sect. 3), FS41 is used to reduce the beam intensity; this also narrows the momentum distribution, resulting in less range straggling in the target and a higher stopping efficiency.

After QSF48, the dipole magnet AST41 produces a symmetric deflection of the beam, again at \(47.5^{\circ }\), to either the Z-channel or the U-channel. When the Z-channel mode is selected, the beam is transmitted through a second dipole ASC41, which generates a \(75^{\circ }\) deflection and then delivers the beam into the experimental area.

The beam is coupled into a Wien filter via Triplet I, a quadrupole triplet, to separate the muon beam from the main contaminants: pions and positrons. The separation is achieved vertically at the downstream collimator system. The task of Triplet I is to shape the beam so that it has a horizontal waist, while being vertically parallel to enhance the separation.
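The separation works because, at the same momentum, the three species have very different velocities. In the crossed electric and magnetic fields of a Wien filter, a particle of charge \(q\) and velocity \(v\) feels a net transverse force \(q(E - vB)\), so only particles with \(v = E/B\) pass undeflected. The sketch below computes \(\beta = p/\sqrt{p^2 + m^2}\) at \(28\,\text {MeV}/{\textrm{c}}\) and the relative force \(F/(qcB) = \beta _{\upmu } - \beta \), assuming (for illustration only) that the filter is set to transmit muons undeflected; the text states only that the separation is vertical.

```python
import math

M_E, M_MU, M_PI = 0.51099895, 105.6583755, 139.57039  # masses in MeV/c^2

def beta(p_mev: float, m_mev: float) -> float:
    """Velocity v/c of a particle with momentum p (MeV/c) and mass m (MeV/c^2)."""
    return p_mev / math.hypot(p_mev, m_mev)

p = 28.0  # MeV/c, surface-muon beam momentum quoted in the text
b_mu = beta(p, M_MU)
for name, m in (("e+", M_E), ("mu+", M_MU), ("pi+", M_PI)):
    b = beta(p, m)
    # Net transverse force in units of q*c*B when E is set to pass muons (E = beta_mu*c*B).
    print(f"{name:4s} beta = {b:.4f}, relative force = {b_mu - b:+.4f}")
```

Positrons, being ultra-relativistic, are deflected strongly to one side and pions more weakly to the other, which is why a collimator downstream can stop both.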

Triplet II then focuses the beam onto a collimator, where the contaminating beams are dumped. For beam tuning campaigns in \(\uppi \)E5, the first measurement point is immediately downstream of the collimator.

Triplet II couples the beam into the Beam Transport Solenoid (BTS). There, a \(300\,\upmu \text {m}\) thick Mylar\(^{\circledR }\) moderator is positioned at the focus to minimise the effects of multiple scattering. A \(190\,\upmu \text {m}\) thick Mylar window separates the vacuum in the beamline from the helium volume, at atmospheric pressure, that contains the muon-stopping target at the centre of the COBRA magnet.

The field shape of the COBRA magnet was optimised to reduce the dependence of the bending radius on the emission angle of charged particles coming from the centre of the experiment and to avoid multiple turn trajectories through the tracking system, which severely affected the MEGA experiment [7]. A detailed description of the final part of the beamline, the BTS, COBRA and the magnetic field map, its calculation and measurement, can be found in [1].

Beam tuning at different intensities was performed to meet different requirements, including specific calibration data sets at low beam intensity (down to \(10^6\) particles/s). Figure 3 shows a typical beam profile measured at the centre of the COBRA magnet, where the stopping target is located. The beam is centred at \(x_\textrm{b} = (0.0 \pm 0.5)\,\text {mm}\), \(y_\textrm{b} = (-0.8 \pm 0.5)\,\text {mm}\), with standard deviations \(\sigma _{x} = (11.35\pm 0.50)\,\text {mm}\) and \(\sigma _{y} = (11.36\pm 0.50)\,\text {mm}\). For this measurement, the slits were tuned to obtain a stopped muon rate \(R_{\upmu } = 5.3 \times 10^{7}\,\text {s}^{-1}\) at the primary proton beam current \(I_{{\textrm{p}}} = 2.2\,\text {mA}\). Beam settings with similar profiles were achieved in the range of stopped muon rates \(R_{\upmu }=(2-5) \times 10^{7}~\text {s}^{-1}\).

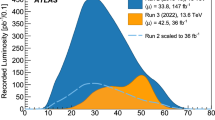

During the 2021–2022 physics runs, different values of \(R_{\upmu }\) were used, starting with a lower value, to study the detector stability and rate capability, to tune the data acquisition and trigger systems, and to optimise the reconstruction algorithms, as explained in the next sections. Table 1 summarises the \(R_{\upmu }\) values used, normalised to the typical \(I_{{\textrm{p}}}\) for each year. The listed numbers include the MEG II target stopping efficiency, estimated by simulation to be \(89\%\). A \(5\%\) systematic uncertainty is attached to each measurement of \(R_{\upmu }\), due to uncertainties in the beam measurement setup and variations of the proton beam position on TgE.

The beam profile reported here was measured with an avalanche photodiode detector (Hamamatsu SPL4419) with a \(1.5\,\text {mm}\) active-area radius and a \(130\,\upmu \text {m}\) depletion layer. The depletion layer is the sensitive part of the detector and allows a clear separation between the energy deposited by muons (stopping particles) and by positrons (minimum ionising particles), mainly from stopped muon decay. The plane perpendicular to the incident beam direction is scanned in steps of \(2\,\text {mm}\). Details about the beam monitoring detectors used and developed for the MEG II experiment can be found in [3].
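The centroid and standard deviations quoted for Fig. 3 can be extracted from such a scan as count-weighted moments of the grid positions. The sketch below assumes a Gaussian beam with the quoted parameters sampled on a \(2\,\text {mm}\) grid; the \(\pm 50\,\text {mm}\) grid extent and the use of plain moments (rather than, for instance, a Gaussian fit, which the text does not specify) are assumptions for illustration.

```python
import numpy as np

def profile_moments(x, y, counts):
    """Count-weighted centroid and standard deviation of a 2D beam scan."""
    w = counts / counts.sum()
    xm, ym = (w * x).sum(), (w * y).sum()
    sx = np.sqrt((w * (x - xm) ** 2).sum())
    sy = np.sqrt((w * (y - ym) ** 2).sum())
    return xm, ym, sx, sy

# Hypothetical scan: 2 mm steps over +/-50 mm, Gaussian beam with the quoted parameters.
step = 2.0
grid = np.arange(-50.0, 50.0 + step, step)
X, Y = np.meshgrid(grid, grid)
x0, y0, sig_x, sig_y = 0.0, -0.8, 11.35, 11.36
counts = np.exp(-0.5 * (((X - x0) / sig_x) ** 2 + ((Y - y0) / sig_y) ** 2))

xm, ym, sx, sy = profile_moments(X, Y, counts)
print(f"centre ({xm:.2f}, {ym:.2f}) mm, sigma ({sx:.2f}, {sy:.2f}) mm")
```

With a step much smaller than \(\sigma \) and a grid extending beyond \(4\sigma \), the sampling and truncation effects on the moments are negligible.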

3 Target

The role of the target is to stop the \({\upmu ^+}\) beam within a limited axial region at the centre of the COBRA magnet while satisfying a number of conflicting requirements. The target must intercept the largest possible fraction of the beam, yet with a minimal amount of material to reduce the interaction of particles from muon decay. The target parameters are the result of extensive calculations and simulations as well as of the experience gained in MEG. Most importantly, the position and planarity of the target must be precisely known to limit the systematic errors on the \({\upmu ^+}\) decay vertex position.

3.1 Concept and design

The design of the MEG II target has been optimised on the basis of measurements and simulations, considering different combinations of material, target thickness and degrader thickness [8]. A scintillator material (BC400) was selected for the target because, unlike non-scintillating materials, it allows non-destructive beam intensity and profile measurements with dedicated equipment. Following initial experimental investigations, the current effort is to explore the feasibility of this technique within the strict constraints dictated by the MEG II experiment.

In order to identify a \(\upmu ^+ \rightarrow {\textrm{e}}^+ \upgamma \) event, it is necessary to measure the angles of the \({{\textrm{e}}^+}\) trajectory \(({\phi _{\mathrm {e^+}}},{\theta _{\mathrm {e^+}}})\) at the point where the \({\upmu ^+}\) decays, by back-propagating the trajectory measured by the spectrometer to the target surface. The MEG II spectrometer provides an angular precision of \(\sim 7\,\text {mrad}\), so the target position must be precisely known: with a radius of curvature of \(\sim 13\,\text {cm}\) for the \({{\textrm{e}}^+}\) trajectory in \(\upmu ^+ \rightarrow {\textrm{e}}^+ \upgamma \) events, a displacement of the target by \({500}\,\upmu \text {m}\) along its normal direction implies a systematic deviation of \({\sim 4}\,\text {mrad}\) in \({\phi _{\mathrm {e^+}}}\) for \({\phi _{\mathrm {e^+}}}= 0\), and a larger effect for non-zero \({\phi _{\mathrm {e^+}}}\).
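The \(\sim 4\,\text {mrad}\) figure can be reproduced with a simple small-angle estimate. A unit-charge particle of transverse momentum \(p_T\) in a field \(B\) bends with radius \(R[\text {m}] = p_T[\text {GeV}/c]/(0.2998\,B[\text {T}])\). If the target plane is displaced by \(d\) along its normal, the back-propagated track reaches it after an extra transverse path of about \(d/\cos \phi _{\mathrm {e^+}}\), over which its direction rotates by \(\Delta \phi \approx d/(R\cos \phi _{\mathrm {e^+}})\). This sketch ignores the inclination of the target with respect to the beam axis and the longitudinal motion; it is an order-of-magnitude check, not the analysis used in the paper.

```python
import math

def bending_radius_m(pt_gev: float, b_tesla: float) -> float:
    """Radius of curvature of a unit-charge particle: R[m] = pT[GeV/c] / (0.2998 B[T])."""
    return pt_gev / (0.299792458 * b_tesla)

def phi_bias_rad(d_m: float, radius_m: float, phi_e_rad: float) -> float:
    """Small-angle estimate of the phi_e bias from a target shift d along its normal."""
    return d_m / (radius_m * math.cos(phi_e_rad))

# Cross-check of the radius for a signal positron at theta = 90 deg in the central field.
print(f"R at theta = 90 deg, B = 1.27 T: {bending_radius_m(0.05283, 1.27) * 100:.1f} cm")
for phi_deg in (0, 20, 40):
    bias = phi_bias_rad(500e-6, 0.13, math.radians(phi_deg))  # R = 13 cm as quoted
    print(f"phi_e = {phi_deg:2d} deg -> bias ~ {bias * 1e3:.1f} mrad")
```

The \(1/\cos \phi _{\mathrm {e^+}}\) factor reproduces the statement that the effect grows for non-zero \(\phi _{\mathrm {e^+}}\).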

Moreover, deformations of the target planarity, which were observed during the MEG data taking, produce a similar effect. The uncertainty on the target position and deformation was the dominant systematic error in the MEG result [2], causing a \({5}\%\) variation of the upper limit on the branching ratio while other contributions were below \({1}\%\).

3.2 The target mechanics

The MEG II target is an elliptical foil (length of \({270}\,\text {mm}\) and height of \({66}\,\text {mm})\) with \((174\pm 20)\,\upmu \text {m}\) average thickness (the uncertainty is the maximum deviation, not a Gaussian \(\sigma )\). The direction normal to the target foil lies in the horizontal plane (x, z) and forms an angle of \((75.0 \pm 0.1)^{\circ }\) with respect to the beam axis (z-axis). The nominal position and its uncertainty (again a maximum deviation, not a Gaussian \(\sigma \)) are evaluated from the mounting procedure and the fixation to the adaptation mechanism, but are not used in the analysis. Instead, the target inclination and position are estimated with the methods described in the following.

The target foil is supported by two hollow carbon fibre frames. A pattern of white dots on a black background is printed on both the frame and the foil. The dots are elliptical, with a height and width of \({0.51}\,\text {mm}\) and \({1.52}\,\text {mm}\) on the target and \({0.42}\,\text {mm}\) and \({1.27}\,\text {mm}\) on the frame, so that they appear circular when imaged at an oblique angle with respect to the target surface. Six holes, (barely) visible along the ellipse axes in Fig. 4, are bored into the target: four along the major axis and two along the minor axis, placed symmetrically with respect to the centre. Counting from the central dot, the two holes along the minor axis replace the third dots, while the four along the major axis replace the seventh dots or lie between the fourth and fifth dots.
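The aspect ratio of the printed dots fixes the viewing geometry for which they look round. Under a simple orthographic model (an assumption: the real cameras are at a finite distance, so the effect varies across the foil), a length lying in the target plane along the viewing direction is foreshortened by \(\sin \alpha \), where \(\alpha \) is the angle between the line of sight and the target plane. Requiring the foreshortened width to equal the height gives \(\alpha = \arcsin (h/w)\).

```python
import math

def round_dot_view_angle_deg(height_mm: float, width_mm: float) -> float:
    """Angle between line of sight and target plane at which an elliptical dot of the
    given height and width (width along the foreshortened direction) looks circular."""
    return math.degrees(math.asin(height_mm / width_mm))

print(f"target dots: {round_dot_view_angle_deg(0.51, 1.52):.1f} deg")
print(f"frame dots:  {round_dot_view_angle_deg(0.42, 1.27):.1f} deg")
```

Both sets of dimensions give about 19 to 20 degrees, the same order as the grazing view expected from cameras placed close to the beam axis looking at a foil inclined by 15 degrees with respect to it.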

The holes and the dots are instrumental in measuring the relative alignment between the target and the tracking detectors as discussed in Sect. 4.5.2.

3.3 The photo camera system for position measurement

The target position at the beginning of a MEG II data-taking run is precisely determined, with better accuracy than in MEG, by an optical laser survey using reflectors installed on the target frame.

Target position monitoring over long data-taking periods was also possible by reconstructing the position of several fiducial holes made in the target itself. A map of the reconstructed muon decay vertices on the target clearly showed the position of these holes. If the target position assumed in the trajectory reconstruction is not exact, the holes artificially appear at different positions for different \({{\textrm{e}}^+}\) angles. This method made it possible to reconstruct deviations of the target position from the nominal one, and it was also effective in identifying and correcting deformations of the target planarity. On the other hand, it required a large amount of data, so it could only be used to monitor the average target position over a few months of data taking. Meanwhile, the target was frequently moved away from its working position (at least every week) to calibrate the LXe detector, using a pneumatic system that did not ensure micrometric repeatability or reproducibility of the target positioning.
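The principle of the hole method can be illustrated with a straight-line approximation of the tracks near the target (an assumption; real tracks are helices). In a local frame where the target plane is \(u = 0\), a track from a vertex at a hole with direction \((a_u, a_v, a_w)\), back-propagated to a plane wrongly assumed at \(u = \delta \), crosses it at an in-plane position shifted by \(\delta \, a_w/a_u\) along \(w\). The apparent hole position therefore depends on the track angle, and a linear fit of the reconstructed position against \(a_w/a_u\) recovers \(\delta \). All numbers below are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(7)
true_offset = 0.3   # mm, hypothetical error on the assumed target position
hole_w = 25.0       # mm, hypothetical in-plane coordinate of one hole
resolution = 1.0    # mm, hypothetical vertex resolution along w

# Direction components of tracks crossing the target; only the ratio a_w / a_u matters.
n = 20_000
a_u = rng.uniform(0.3, 1.0, n)   # component along the target normal
a_w = rng.uniform(-1.0, 1.0, n)  # in-plane component

# Straight-line back-propagation to the plane u = true_offset shifts the
# crossing point by true_offset * a_w / a_u along w.
slope = a_w / a_u
w_reco = hole_w + true_offset * slope + rng.normal(0.0, resolution, n)

# Linear fit of the apparent hole position versus the track slope.
fitted_offset, fitted_w = np.polyfit(slope, w_reco, 1)
print(f"fitted offset {fitted_offset:.3f} mm, hole at {fitted_w:.2f} mm")
```

The statistical error on the fitted offset scales as \(1/\sqrt{N}\) in the number of vertices near each hole, which is why the method needs a large amount of data.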

The improved resolutions of the MEG II positron spectrometer required an additional method to monitor the target position more frequently during data taking, so that the systematic errors due to target position and deformation remain limited. The method is based on a photogrammetric survey of a pattern of dots printed on the target itself.

The dots are imaged with two digital CMOS photo cameras placed outside the beam halo, hosted in two independent supports. Two LEDs are placed on one of these supports to provide illumination during the acquisition of the target pictures. The supports are fixed to the target insertion system at a distance of \({\sim 1000}\,\text {mm}\) from the centre of the MEG II reference system, at the position of the CDCH (see Sect. 4) end-plate. The transverse distance from the z-axis is \({\sim 120}\,\text {mm}\), at \(\theta ={6.3}^{\circ }\).

One camera (IDS, mod. UI-3282SE) has a Sony IMX264 sensor with \(2456 \times 2054\) pixels of \({3.45}\,\upmu \text {m}\) size, for a total sensor size of \((8.473 \times 7.086)\,\text {mm}^{2}\), and a TUSS optical system (mod. LVK7518) with a focal length of \({75}\,\text {mm}\) and a maximum aperture of f/1.8. The USB3 protocol is used for communication with the front-end computers, since it has been proven to be immune to the magnetic field [9].
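A quick consistency check on the sensor figures, and an estimate of the scale at the target: the quoted sensor dimensions correspond to a pixel pitch of \(3.45\,\upmu \text {m}\), and the thin-lens magnification \(f/(d - f)\) gives the size of one pixel projected onto the foil. Taking the \(\sim 1000\,\text {mm}\) camera distance given above as the object distance \(d\) is an assumption for illustration.

```python
def pixel_pitch_um(sensor_mm: float, n_pixels: int) -> float:
    """Pixel pitch implied by a sensor dimension and its pixel count."""
    return sensor_mm * 1e3 / n_pixels

def object_pixel_um(pitch_um: float, focal_mm: float, distance_mm: float) -> float:
    """Size of one pixel projected onto an object at the given distance (thin lens)."""
    magnification = focal_mm / (distance_mm - focal_mm)
    return pitch_um / magnification

pitch = pixel_pitch_um(8.473, 2456)
print(f"pitch {pitch:.2f} um (vertical: {pixel_pitch_um(7.086, 2054):.2f} um)")
print(f"~{object_pixel_um(pitch, 75.0, 1000.0):.0f} um per pixel at the target")
```

A pixel therefore covers a few tens of micrometres on the foil, so dot centres must be located to a fraction of a pixel to reach the precision reported below.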

The second camera (RVT-1001700S) is a radiation-tolerant camera from Spectral Instruments equipped with \(4 \times 10^{6}\) pixels of \({5.5}\,\upmu \text {m}\) size and a \({50}\,\text {mm}\) lens. This camera connects directly to a server via optical fibre [10].

3.4 The photogrammetric method

The photogrammetric method is based on frequent and regular measurements (pictures) taken during the run. The photo cameras image the pattern of dots, and the positions of the dots in the picture can be determined with standard image processing algorithms. If the target moves between two successive camera shots, or deforms over time, the positions of these patterns in the pictures change. The displacement of the target can then be determined with respect to a reference position, measured at the time of the optical survey.

Two approaches are used to determine the target position, orientation and deformation, starting from the different sets of pictures taken from the two photo cameras. The target position and orientation are described by the coordinates of the target centre in the MEG II reference system and by the Euler angles, respectively. The deformation is taken into account differently by the two approaches.

In the first approach, a \(\chi ^2\) fit is performed, in which the \(\chi ^2\) is computed from the measured and the expected dot positions; the latter depend on the target position and deformation and on the parameters of the optical system. The deformation is parameterised with Zernike polynomials; target position and deformation are free parameters in the fit, while the parameters of the optical system are measured from pictures taken just after the optical survey, when the target parameters are known. There are seven optical parameters: the position of the optical centre (three parameters), the independent components of the unit vector of the optical axis (two parameters), the orientation of the sensor around the optical axis (one parameter), and the distance of the sensor from the centre of the optical system (one parameter).
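As an illustration of the deformation model, the sketch below expands the out-of-plane displacement of the foil in the first six Zernike polynomials (up to second radial order, unnormalised) and fits their coefficients by linear least squares. Mapping the elliptical foil onto the unit disk by scaling with its semi-axes (135 mm and 33 mm), and fitting displacements directly rather than image-plane dot positions, are simplifications; in the method described above the Zernike coefficients are floated together with the target position in the image-based \(\chi ^2\). The dot positions and deformation are hypothetical.

```python
import numpy as np

def zernike_basis(rho, phi):
    """First six (unnormalised) Zernike polynomials on the unit disk."""
    return np.stack([
        np.ones_like(rho),            # piston
        rho * np.cos(phi),            # tilt x
        rho * np.sin(phi),            # tilt y
        2.0 * rho**2 - 1.0,           # defocus (bowl-shaped deformation)
        rho**2 * np.cos(2.0 * phi),   # astigmatism 0/90 deg
        rho**2 * np.sin(2.0 * phi),   # astigmatism 45 deg
    ], axis=-1)

def fit_deformation(u_mm, v_mm, dn_mm, semi_u=135.0, semi_v=33.0):
    """Least-squares Zernike coefficients of the normal displacement dn(u, v)."""
    x, y = u_mm / semi_u, v_mm / semi_v          # map the ellipse onto the unit disk
    rho, phi = np.hypot(x, y), np.arctan2(y, x)
    coeffs, *_ = np.linalg.lstsq(zernike_basis(rho, phi), dn_mm, rcond=None)
    return coeffs

# Hypothetical dot positions uniformly spread over the foil and a synthetic deformation.
rng = np.random.default_rng(3)
r, t = np.sqrt(rng.uniform(0, 1, 500)), rng.uniform(0, 2 * np.pi, 500)
u, v = 135.0 * r * np.cos(t), 33.0 * r * np.sin(t)
true_coeffs = np.array([0.05, 0.0, 0.0, 0.20, -0.10, 0.0])   # mm
dn = zernike_basis(r, t) @ true_coeffs + rng.normal(0, 0.01, r.size)
coeffs = fit_deformation(u, v, dn)
print(np.round(coeffs, 3))
```

In a combined fit, piston and tilt overlap with the rigid-body position and orientation of the target, so only the higher-order terms would describe genuine deformation.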

The method has been validated in a bench-top test, by installing the photo camera, an LED and a target mock-up on an optical table in a configuration similar to that in the experiment. The target was mounted on a linear stage with \({2.5}\,\upmu \text {m}\) position accuracy. Figure 5 shows the shift in the x-coordinate of the target centre measured with the photogrammetric method as a function of the true shift, for a position scan along the x-direction (normal to the target plane). A linear fit to the distribution gives an uncertainty on the x-coordinate of the target centre \(T_x\) of \(\sigma _{T_x} = {12}\,\upmu \text {m}\). Since the direction normal to the target plane in the experiment almost coincides with the x-axis, the precision requirement \(({<500}\,\upmu \text {m})\) is fully satisfied. The slope p1 is consistent with 1, and the deviation of the intercept p0 from 0 is negligible compared with the required precision.
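The validation fit can be sketched as a straight-line fit of the photogrammetric shift against the stage shift, with the spread of the residuals giving the position resolution. The scan range and step and the \(12\,\upmu \text {m}\) Gaussian smearing below are hypothetical, chosen only to mimic the quoted result.

```python
import numpy as np

rng = np.random.default_rng(5)
true_shift = np.arange(-1000.0, 1001.0, 100.0)                   # um, hypothetical stage positions
measured = true_shift + rng.normal(0.0, 12.0, true_shift.size)  # um, hypothetical measurements

p1, p0 = np.polyfit(true_shift, measured, 1)   # measured = p0 + p1 * true
residuals = measured - (p0 + p1 * true_shift)
sigma = residuals.std(ddof=2)                  # two fitted parameters
print(f"p0 = {p0:.1f} um, p1 = {p1:.4f}, sigma = {sigma:.1f} um")
```

A slope compatible with 1 shows that the method has no scale error, while the intercept measures a constant bias.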

The second approach minimises a \(\chi ^{2}\) at the camera’s image plane between the measured, imaged dot coordinates \(\left( X'_{{\textrm{CCD}}},Y'_{{\textrm{CCD}}}\right) \) and estimated 3D dot coordinates in the camera coordinate system \(\left( \vec {X}_{\textrm{CAM}}\right) \) for each image. Here, \(X_{\textrm{CAM}},Y_{\textrm{CAM}}\) are parallel with the camera’s CCD and \(Z_{\textrm{CAM}}\) is parallel with the camera’s optical axis. Images are taken every \({\sim 10}\,\text {min}\) to track the position, orientation, and shape of the target in the camera coordinate system. The analysis relies on a single position and orientation of the target with respect to the CDCH to map the coordinates in the camera coordinate system to the standard MEG II coordinate system. This is taken either from an optical survey or the hole analysis. Here, the relative 3D dot coordinates in a local target coordinate system were taken from a CT-scan of the target. This CT-scan contains \(O(10~{\textrm{M}})\) data points including the 3D target deformation \(({<1}\,\text {mm})\). Given the measured distances between the dots on the foil and the camera’s optical focal length, the technique is self-calibrating.

We rely on the following optical equations to project the 3D coordinates in the camera coordinate system onto the image plane, where f is the camera’s focal length:

The \(\chi ^{2}\) contains a rigid body transformation \(R\left( \vec {X}_{\textrm{T}},\vec {\theta }_{\textrm{T}}\right) \) with a translation and three Euler angles:

The residuals dot-by-dot at the image plane scaled to the object are shown in Fig. 6. Without any additional shape parameters, the fit results in residuals \({<50}\,\upmu \text {m}\) at the object. These are due to additional deformations between the time of the CT-scan and that of the image, errors in the measured dot coordinates on the image plane, and errors in the projection equations. The residuals can be further suppressed by including elliptical Bessel function parameters (i.e. “drumhead modes”) into the fit, but the rigid body transformation was sufficient for the full 2021 data set given the 3D CT-scan.
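The structure of this second \(\chi ^2\) can be sketched as follows, assuming the standard pinhole projection \(X' = f X/Z\), \(Y' = f Y/Z\) in the camera frame and a Z-Y-X Euler-angle convention (both are assumptions, since the convention is not specified here). Local dot coordinates, standing in for the CT-scan, are rotated and translated into the camera frame, projected, and compared with the measured image coordinates. The dot grid, pose and image-plane uncertainty are hypothetical.

```python
import numpy as np

def rotation(alpha, beta, gamma):
    """Rotation matrix R = Rz(alpha) @ Ry(beta) @ Rx(gamma) (Z-Y-X Euler angles)."""
    ca, sa, cb, sb, cg, sg = (np.cos(alpha), np.sin(alpha), np.cos(beta),
                              np.sin(beta), np.cos(gamma), np.sin(gamma))
    rz = np.array([[ca, -sa, 0], [sa, ca, 0], [0, 0, 1]])
    ry = np.array([[cb, 0, sb], [0, 1, 0], [-sb, 0, cb]])
    rx = np.array([[1, 0, 0], [0, cg, -sg], [0, sg, cg]])
    return rz @ ry @ rx

def project(points_cam, f):
    """Pinhole projection of camera-frame points onto the image plane."""
    return f * points_cam[:, :2] / points_cam[:, 2:3]

def chi2(params, dots_local, image_xy, f, sigma):
    """Image-plane chi^2 for a rigid-body pose (3 translations + 3 Euler angles)."""
    t, angles = params[:3], params[3:]
    cam = dots_local @ rotation(*angles).T + t   # local target frame -> camera frame
    return np.sum(((project(cam, f) - image_xy) / sigma) ** 2)

# Hypothetical flat dot grid on the foil, seen from about 1 m with a 75 mm lens.
xs = np.arange(-100.0, 101.0, 20.0)
ys = np.arange(-30.0, 31.0, 15.0)
dots = np.array([[x, y, 0.0] for x in xs for y in ys])      # mm
pose = np.array([5.0, -3.0, 1000.0, 0.01, 1.30, -0.02])     # mm, rad
image = project(dots @ rotation(*pose[3:]).T + pose[:3], 75.0)
print(chi2(pose, dots, image, 75.0, 0.005))                 # 0.0 at the true pose
shifted = pose + np.array([0.0, 0.0, 0.5, 0.0, 0.0, 0.0])   # target 0.5 mm farther away
print(chi2(shifted, dots, image, 75.0, 0.005))
```

In practice the six pose parameters would be found by minimising this function with a numerical optimiser, optionally adding shape parameters to the local dot coordinates.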

The two methods have been compared on pictures taken during the 2021 run and found to yield results consistent within \({100}\,\upmu \text {m}\) in the direction normal to the target plane, which is the direction to which the angular measurements are most sensitive.

3.5 The target hole method

The hole analysis is performed after correcting the temporal variation of the target position traced by the photogrammetric method to determine the target position relative to the CDCH. The method and the results are described in Sect. 4.5.3.

4 Cylindrical drift chamber

The positron momentum and position vectors are measured with CDCH. In this section we review the main advantages of CDCH over the MEG drift chamber system, its construction, commissioning, reconstruction, alignment and performance.

4.1 Concept and design

The MEG drift chamber system consisted of sixteen individual radially aligned chambers. With this configuration, significant efficiency losses and resolution degradation were caused by the material of the chambers’ mechanical supports, electronic cards and cables, which were frequently crossed by the positron trajectories. The CDCH, extensively discussed in [3, 11], overcomes these limitations by replacing the segmented structure of the MEG chambers with a single sensitive volume.

The CDCH, shown in Fig. 7, is a \(1.93\,\text {m}\) long low-mass cylindrical volume, filled with a helium–isobutane gas mixture (the exact composition is specified in Sect. 4.3) and equipped with nine concentric layers of 192 gold-plated tungsten sense wires each, 1728 in total, arranged in a stereo configuration with two views, and \(\sim 10\,000\) silver-plated aluminium cathode and guard wires. The sense wires collect the signals from the drift electrons, while the cathode and guard wires form nearly square drift cells and define the electric field within and at the boundaries of the sensitive volume; the cell dimensions range from \(5.8\,\text {mm to }7.5\,\text {mm}\) at the centre and from \(6.7\,\text {mm to }8.7\,\text {mm}\) at the end-plates. The sense wires within the experimental acceptance, about 1200, are read out by the data acquisition system.

A close-up of the end-plate region showing the stereo wire geometry is given in Fig. 8.

4.2 Construction

Construction of the CDCH, which began in late 2016 and was completed in spring 2018, was performed modularly by soldering wires in groups of sixteen onto printed circuit boards (PCBs), which were then mounted between radial spokes at the ends of the chamber. The mechanical structure of the detector is completed by a carbon fibre component, securely fastened to both end-plates, which encloses the sensitive volume.

The chamber was delivered to PSI for the commissioning phase in summer 2018 and integrated into the MEG II detector in winter 2018. After installation, the CDCH was operated at PSI with engineering runs in 2019 and 2020 and with physics data taking runs in 2021 and 2022.

4.2.1 DAQ and services

High voltage (HV) is supplied with a commercial system by ISEG Spezialelektronik GmbH, made of mod. EHS F230 and EHS F430 boards in a WIENER crate with MPOD controller, for a total of 144 channels. HV channels are split between two cables by custom-built distribution boards, and eight wires are powered through each cable.

Ionisation signals are read out at both ends of the chamber wires by 216 front-end (FE) boards with a bandwidth of \({\sim 400}\,\text{ MHz }\), which are connected to the wire PCBs. The signals are digitised at \({1.2}\,{\text {GSPS}}\) by the WaveDREAM boards of the integrated trigger and DAQ system WaveDAQ, described in Sect. 8. The low voltage (LV, 5 V) is supplied to the FE boards via a dedicated distribution system through the WaveDREAM boards. Since the FE boards have a total power consumption of \(\approx 350\,\text {W}\), they are cooled with a water–glycol mixture by a cooling system built into the board holders, and they are flushed with dry air to avoid condensation of water vapour.

The gas is supplied by a dedicated gas system [12] that mixes helium with isobutane and oxygen in the required proportions. Isopropyl alcohol is added by passing a fraction of the helium flow through a thermostated, alcohol-filled bubbler.

4.3 Final geometry and operation

The final geometry of the CDCH differs in some details from the original design. The chamber was designed to have ten layers of sense wires, but during the wiring phase there were delays in establishing the environmental conditions and procedures needed to eliminate the risk of wire breakage. Therefore, to meet the CDCH operation schedule, the outermost layer was not installed. We used Monte Carlo (MC) simulations to verify that the degradation of tracking efficiency and performance due to the missing layer is \({<1}\%\).

First, using Garfield++ simulations [13], the HV operating point was set in the range \(1400\text { to }1480\,\text {V}\), with the innermost layer at the highest voltage, to achieve a gas gain of \(\sim 5 \times 10^{5}\). This configuration gives a reasonable sensitivity to individual ionisation clusters, as confirmed by the distance of closest approach (DOCA) measurements presented in Sect. 4.4. The HV can be set individually for each cell in \({10}\,\text {V}\) steps to account for the variation of cell size with radial position within the chamber. The mechanical tension of the wires, which is required to ensure electrostatic stability and to avoid short circuits between the anode and cathode wires, was also calculated using Garfield++ simulations, and several tests were carried out, both on a full-size prototype and on the CDCH itself. Based on these calculations, the required tension was obtained by stretching the wires by \({5.2}\,\text {mm}\) with respect to their nominal length \(({65}\%\) of the elastic limit).

The gas mixture was also optimised in 2019 and 2020 to avoid corona discharges and current spikes and to restore normal operation after a sustained short circuit between an anode and a cathode wire. The original He–isobutane gas mixture \(\left( 90:10\right) \) was modified by the addition of oxygen and isopropyl alcohol \(({1.5}\%)\). The addition of oxygen is a particularly delicate operation due to its electronegativity, as it can capture drift electrons. The oxygen level was initially set to \({2}\%\) to dampen anomalous currents of \(\sim 400\,\upmu \text {A}\) and then gradually reduced to a stable value of \({0.5}\%\) after normal current levels were restored.

In Fig. 9 we show typical currents on six HV channels during normal MEG II beam operation; each HV channel powers 16 sense wires.

4.3.1 Issues and problems

The main problem encountered during the construction and commissioning of the CDCH was the breakage of 107 cathode wires. The wire breaks were investigated in detail by microscopic inspections, chromatography, SEM/EDX analyses and immersion tests in water, and were shown to be due to galvanic corrosion of the aluminium core, caused by air humidity penetrating through small cracks in the silver coating. A phenomenological model [14] was developed to predict the number of breaks as a function of the time the wire is exposed to ambient moisture and of the elongation of the wire. The problem was solved by keeping the CDCH in a controlled dry atmosphere during maintenance and operation. Since these precautions were adopted, no further wire breaks have occurred.

The impact of \(\sim 100\) missing cathodes \(({< 1}\%\) of the total) was assessed with MC simulations and found to be negligible. Nevertheless, broken wire fragments inside the CDCH are dangerous, as they can cause short circuits affecting several sectors of the chamber. All fragments were eventually removed with a dedicated tool, but one of them had caused the severe short circuit mentioned in Sect. 4.3, which increased the chamber current to \(\sim 400\,\upmu \text {A}\). A dedicated optimisation of the gas mixture was necessary to restore normal current values.

During standard data taking, it is very important to keep the isopropyl alcohol level stable, by continuously bubbling it into the chamber gas, to avoid current spikes; an example of the current spikes that occur when the isopropyl alcohol supply runs short is shown in Fig. 10. An additional problem is the noise caused by occasional short circuits on the FE boards. Because the FE boards are densely mounted on the back of the chamber end-plates, small movements of a board can cause short circuits between the capacitors on the board and the aluminium support structure of the board itself; such short circuits cause oscillations in the amplifier circuits that can affect the behaviour of large parts of the chamber. These oscillations can only be stopped by switching off the LV of the boards, and since the LV is distributed in groups of eight channels, a single noisy channel disables eight channels. This problem was solved by inserting suitable insulating plastic blocks to ensure proper separation between the board elements and the metallic parts of the support structure.

4.4 Reconstruction algorithms

The purpose of the CDCH analysis is to identify and reconstruct the positron tracks using the information on the sense wires provided by the ionisation clusters. The CDCH operates in a high-rate environment: the hit rate per cell can exceed \({1}\,\text{ MHz }\) at the innermost wires for \(R_{\upmu } = 5 \times 10^{7}\,\text {s}^{-1}\), corresponding to a cell occupancy of \({25}\%\) within a time window equal to the maximum drift time. Moreover, the geometry, with only nine layers and small stereo angles, makes track finding complicated.

A more detailed description on the reconstruction algorithms is available elsewhere [15].

4.4.1 Waveform processing and hit reconstruction

The first step is to identify the signals induced by drift electrons in the waveforms of the cells traversed by positrons; such signals are called “hits”. Figure 11 shows typical waveforms of a hit, which consists of several temporally separated pulses from different ionisation clusters, spread out in time by the slow drift of the electrons. Good hit reconstruction requires efficient signal-to-noise discrimination and pile-up identification. Two waveform processing algorithms have been developed to detect hits.

Coherent low-frequency noise was observed, mainly across groups of 16 adjacent wires. Therefore, the first algorithm starts with coherent noise subtraction using the waveform averaged over adjacent channels, excluding the regions with signal pulses. This reduces the noise level (FWHM) from \({23}\,\text {mV}\) to \({13}\,\text {mV}\). Significant incoherent high-frequency noise is observed above \({200}\,\text{ MHz }\), while the signal power in this frequency range is negligible. Therefore, we apply a high-frequency cut-off at \({225}\,\text{ MHz }\) using a discrete Fourier transform technique. These noise reduction algorithms have been optimised by maximising the number of hits per track and the tracking efficiency, and by minimising the chi-square of the track fit.
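The two noise-reduction steps can be illustrated with a short sketch. It assumes that the waveforms of one group of adjacent channels are available as a NumPy array and that a boolean mask marks samples already identified as signal; the function names, the mask convention and the sampling rate used in the usage lines are hypothetical and are not taken from the MEG II software.

```python
import numpy as np


def subtract_coherent_noise(waveforms, signal_mask):
    """Subtract the common-mode noise of a group of adjacent channels.

    waveforms:   array (n_channels, n_samples) of voltages
    signal_mask: boolean array of the same shape, True where a signal pulse
                 is present; those samples are excluded from the average
    """
    keep = ~signal_mask
    counts = keep.sum(axis=0)
    sums = np.where(keep, waveforms, 0.0).sum(axis=0)
    # Sample-by-sample average over noise-only channels (0 where none is left).
    common = np.divide(sums, counts, out=np.zeros(sums.shape), where=counts > 0)
    return waveforms - common


def low_pass(waveform, sampling_rate_hz, cutoff_hz=225e6):
    """Zero all discrete Fourier components above cutoff_hz (sharp cut)."""
    spectrum = np.fft.rfft(waveform)
    freqs = np.fft.rfftfreq(waveform.size, d=1.0 / sampling_rate_hz)
    spectrum[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spectrum, n=waveform.size)


# Synthetic example with a hypothetical 1 GSPS sampling rate.
fs = 1e9
t = np.arange(1000) / fs
rng = np.random.default_rng(0)
coherent = 0.02 * np.sin(2 * np.pi * 5e6 * t)            # shared by all 16 wires
group = coherent + 0.002 * rng.standard_normal((16, t.size))
cleaned = subtract_coherent_noise(group, np.zeros(group.shape, dtype=bool))
filtered = low_pass(np.sin(2 * np.pi * 50e6 * t) + np.sin(2 * np.pi * 400e6 * t), fs)
```

The sharp spectral cut is the simplest DFT-based low-pass filter; a smoother window could be substituted without changing the structure.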

Hit detection requires two adjacent sampling points above a fixed voltage threshold and the integral over \({20}\,\text {ns}\), starting from these two points, to exceed a second fixed threshold. Once a hit is detected, the thresholds are lowered to search for a low-amplitude cluster pulse preceding the detected pulse.
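A minimal sketch of this two-step threshold search follows, assuming positive-polarity pulses and a uniform sampling interval. The threshold values, the lowering factor and the look-back window are hypothetical parameters chosen only for illustration.

```python
import numpy as np


def first_crossing(wf, start, stop, v_thr, q_thr, n_int, dt_ns):
    """Index of the first pair of adjacent samples above v_thr whose integral
    over n_int samples (in V*ns) exceeds q_thr; None if there is none."""
    for i in range(max(start, 0), min(stop, wf.size) - 1):
        if wf[i] > v_thr and wf[i + 1] > v_thr:
            if wf[i:i + n_int].sum() * dt_ns > q_thr:
                return i
    return None


def detect_hit(wf, dt_ns, v_thr, q_thr, window_ns=20.0,
               low_factor=0.5, lookback_ns=50.0):
    """Find a hit, then look back with lowered thresholds for an earlier,
    smaller cluster pulse. low_factor and lookback_ns are hypothetical."""
    n_int = max(1, int(round(window_ns / dt_ns)))
    i_hit = first_crossing(wf, 0, wf.size, v_thr, q_thr, n_int, dt_ns)
    if i_hit is None:
        return None
    n_back = int(round(lookback_ns / dt_ns))
    i_early = first_crossing(wf, i_hit - n_back, i_hit + 1,
                             low_factor * v_thr, low_factor * q_thr, n_int, dt_ns)
    return i_early if i_early is not None else i_hit


# Toy waveform (1 ns sampling): a small cluster pulse before a larger one.
wf = np.zeros(400)
wf[100:110] = 0.006
wf[130:135] = 0.03
hit_index = detect_hit(wf, dt_ns=1.0, v_thr=0.01, q_thr=0.1)
```

In the toy waveform, the first pass triggers on the large pulse at sample 130, and the lowered thresholds then recover the earlier small pulse at sample 100.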

The second method uses a deep-learning algorithm based on a convolutional neural network (CNN). The network takes as input the waveforms from eight neighbouring cells, so that it learns the pattern of the coherent noise as well as that of the signal, and outputs, for each sampling point, the probability that the first cluster of a hit arrives at that point. It was trained on simulated waveforms, with hits added randomly at the expected rate for \(R_{\upmu } = 3 \times 10^{7}\,\text {s}^{-1}\) and overlaid with real noise data taken without beam.

Combining the hits from the two methods yields a higher hit efficiency, but also a higher fake-hit rate, than the first method alone. To make the best use of both, the subsequent reconstruction is run twice: once with only the hits found by the first method, and once with the combined hits from both methods. The results are merged after the reconstruction is completed; if the reconstruction succeeds in both passes, the higher-quality tracks are selected. This approach improves the final tracking efficiency (see Sect. 4.6.2) by a factor of 1.26 compared with the first method alone, at the cost of a fourfold increase in the computational time of the track finding process described in Sect. 4.4.2, due to the larger number of hits.

The difference in the arrival times \((\alpha )\) and the ratio of the amplitudes \((\beta )\) between the signals measured at the two ends of a wire provide information about the z-coordinate of the hit along the wire. Therefore, once a hit is detected in at least one of the two waveforms from a wire, these values are computed by minimising the following chi-square function,

$$\chi ^2(\alpha ,\beta ) = \sum _{k} \frac{\left[ v_{i}(t_k) - \beta \, v_{j}(t_k - \alpha )\right] ^2}{\sigma _{i}^2 + \beta ^2 \sigma _{j}^2},$$

where i and j are indices for the wire ends (0: upstream end, 1: downstream end, and \(i\ne j)\), \(v_{i}(t_k)\) is the waveform voltage at the k-th sampling point on the end where the hit is detected, \(v_{j}(t_k - \alpha )\) is the voltage at time \((t_k - \alpha )\) on the other end of the wire, and \(\sigma _{i(j)}\) is the RMS noise of the waveform. The index k runs over the sampling points in the range \([{-20}\,\text {ns}, {10}\,\text {ns}]\) around the detected signal time. The resolution of the z-coordinate is several centimetres. Although moderate, it helps make the track finding process efficient and robust against pile-up. The z-coordinate resolution is then improved at the tracking stage by exploiting the stereo configuration of the wires.
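The minimisation can be sketched with a brute-force grid search. This sketch assumes that the chi-square has the form \(\chi ^2 = \sum _k [v_i(t_k) - \beta v_j(t_k-\alpha )]^2/(\sigma _i^2+\beta ^2\sigma _j^2)\), i.e. the noise of the two ends added in quadrature; this form is built from the definitions above and is our assumption. The grid search is a stand-in for whatever minimiser the actual analysis uses, and the Gaussian pulses are hypothetical test signals.

```python
import numpy as np


def chi2(alpha, beta, t, v_i, v_j, sigma_i, sigma_j, sel):
    """Assumed chi-square: end i compared with end j delayed by alpha and
    scaled by beta, noise of both ends added in quadrature."""
    v_j_shifted = np.interp(t[sel] - alpha, t, v_j)   # v_j(t_k - alpha)
    residual = v_i[sel] - beta * v_j_shifted
    return np.sum(residual**2) / (sigma_i**2 + beta**2 * sigma_j**2)


def fit_alpha_beta(t, v_i, v_j, sigma_i, sigma_j, t_hit, alphas, betas):
    """Grid-search minimum using samples in [t_hit - 20 ns, t_hit + 10 ns]."""
    sel = (t >= t_hit - 20.0) & (t <= t_hit + 10.0)
    best = min((chi2(a, b, t, v_i, v_j, sigma_i, sigma_j, sel), a, b)
               for a in alphas for b in betas)
    return best[1], best[2]


# Hypothetical pulses: end i sees 0.8 x the end-j pulse, delayed by 4 ns.
t = np.arange(0.0, 400.0, 0.5)                       # ns
def pulse(centre):
    return np.exp(-0.5 * ((t - centre) / 3.0) ** 2)
v_j = pulse(196.0)
v_i = 0.8 * pulse(200.0)
alpha, beta = fit_alpha_beta(t, v_i, v_j, 0.01, 0.01, 200.0,
                             np.arange(-10.0, 10.01, 0.5), np.arange(0.5, 1.5, 0.05))
```

The fitted \(\alpha \) and \(\beta \) would then be converted to a z-coordinate with a calibration that is not described here.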

Another piece of information provided by the digitised waveforms is the arrival time of the ionisation clusters on the sense wires. Since multiple clusters are frequently recorded in one waveform, the time of the first cluster must be identified to correctly reconstruct the drift circle. It is measured from the sum of the waveforms from the two ends, after aligning their relative timing. The arrival time of a cluster is the sum of the common track time \((T_{0})\) and the drift time of the cluster, where \(T_0\) is measured by the pTC (see Sect. 5). The drift time of the first cluster is converted to the DOCA using the time-distance relationship (TXY tables) described in Sect. 4.4.2.
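The conversion from first-cluster time to DOCA can be sketched as a table lookup. The TXY values below are purely hypothetical; the real tables depend on layer, z-coordinate and track crossing angle, and linear interpolation is our simplifying assumption.

```python
import numpy as np

# Hypothetical TXY table: drift time (ns) -> distance from the wire (mm).
TXY_TIME_NS = np.array([0.0, 20.0, 60.0, 120.0, 200.0, 300.0])
TXY_DIST_MM = np.array([0.0, 0.5, 1.5, 2.6, 3.6, 4.4])


def doca_from_first_cluster(t_first_cluster_ns, t0_ns,
                            txy_time=TXY_TIME_NS, txy_dist=TXY_DIST_MM):
    """Drift time = first-cluster arrival time - T0; the DOCA is obtained by
    linear interpolation in a monotonic time-distance table (values outside
    the table are clamped to its end points)."""
    t_drift = t_first_cluster_ns - t0_ns
    return float(np.interp(t_drift, txy_time, txy_dist))


# First cluster 40 ns after the track time T0 measured by the pTC.
doca = doca_from_first_cluster(140.0, 100.0)
```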

4.4.2 Track finding and fitting

After identification and reconstruction, the hits are fed into a pattern recognition algorithm (track finder), followed by a track-fitter algorithm, both based on Kalman filters. The former combines hits belonging to the same positron track into a track candidate with a preliminary estimate of the positron’s kinematics. The latter reconstructs the complete trajectory of the positron and provides the best estimate of the kinematics at the target: the positron energy \({E_{{\textrm{e}}^+}}\), the emission angles \(({\theta _{\mathrm {e^+}}},{\phi _{\mathrm {e^+}}})\) and the coordinates of the intersection of the track with the target, \((x_{{\textrm{e}}^+}, y_{{\textrm{e}}^+}, z_{{\textrm{e}}^+})\).

The track-finding algorithm is based on a track-following method that starts from hit pairs in the outer layers, where the occupancy is lower. All compatible combinations of two pairs in different layers form a set of track seeds. Each seed is propagated backward, layer by layer, down to the innermost layer, checking the consistency between the track and the hits and updating the track parameters with the Kalman filter algorithm; it is then propagated forward to find additional compatible hits and form full single-turn track candidates. A track candidate is required to have at least seven hits.
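The core operation of this track-following step, a Kalman prediction followed by a chi-square compatibility check and an update, can be sketched in a deliberately simplified setting. The sketch assumes a straight track in one projection (state = position and slope), one position measurement per layer, no process noise or material effects, and a hypothetical chi-square cut; the real finder works with helical trajectories in the gradient field.

```python
import numpy as np


def kalman_step(x, P, F, H, R, m, chi2_cut=9.0):
    """Predict the state with F, then update it with measurement m if the
    hit is compatible (chi-square below chi2_cut). No process noise."""
    x_pred = F @ x
    P_pred = F @ P @ F.T
    S = H @ P_pred @ H.T + R                  # covariance of the residual
    r = m - H @ x_pred                        # predicted residual
    chi2 = float(r @ np.linalg.solve(S, r))
    if chi2 > chi2_cut:                       # incompatible hit: keep prediction
        return x_pred, P_pred, chi2, False
    K = P_pred @ H.T @ np.linalg.inv(S)       # Kalman gain
    x_new = x_pred + K @ r
    P_new = (np.eye(len(x)) - K @ H) @ P_pred
    return x_new, P_new, chi2, True


# Toy track y = 1 + 0.5 x measured at hypothetical layer positions x = 1..9.
H = np.array([[1.0, 0.0]])                    # only the position is measured
R = np.array([[1e-4]])
x, P, x_pos = np.zeros(2), np.diag([100.0, 100.0]), 0.0
used_flags = []
for layer_x in range(1, 10):
    F = np.array([[1.0, layer_x - x_pos], [0.0, 1.0]])
    x, P, _, used = kalman_step(x, P, F, H, R, np.array([1.0 + 0.5 * layer_x]))
    used_flags.append(used)
    x_pos = layer_x

# A hit 1 unit away from the prediction is rejected by the chi-square check.
_, _, chi2_out, outlier_used = kalman_step(x, P, np.eye(2), H, R,
                                           np.array([x[0] + 1.0]))
```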

The track fitter uses an extension of the Kalman filter, the deterministic annealing filter (DAF) [16], with iterative weighting and annealing, implemented in the GENFIT package [17, 18] with a proper treatment of material effects. The left/right ambiguity is also resolved by the DAF. The fitter first fits the individual track candidates from the track finder and then merges the fitted segments to form full multi-turn tracks inside CDCH. The tracks are then propagated forward to the pTC and backward to the target. Once a track is matched to a pTC cluster, it is re-fitted with DOCAs updated using the best estimate of \(T_{0}\), corrected for the time of flight from each hit to the pTC, and the best DOCA estimation method, discussed below. During this re-fit, hits that the track finder failed to associate with the track are searched for. Hits in the final half turn are frequently missed by the track finder but can be added in this process, improving the momentum resolution.
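How the DAF resolves the left/right ambiguity can be illustrated with its assignment weights. This is a simplified form of the annealed weights, assuming both mirror solutions of a hit share the same resolution (so the Gaussian normalisation factors cancel); the cut value, resolution and annealing temperatures are hypothetical, and GENFIT's implementation differs in detail.

```python
import numpy as np


def daf_weights(residuals, sigma, temperature, chi2_cut=9.0):
    """Annealed weights of competing measurements of one hit (e.g. the left
    and right drift-circle solutions). chi2_cut sets the weight of the
    'outlier' hypothesis, so the weights sum to less than one."""
    chi2 = (np.asarray(residuals, dtype=float) / sigma) ** 2
    num = np.exp(-chi2 / (2.0 * temperature))
    return num / (num.sum() + np.exp(-chi2_cut / (2.0 * temperature)))


# Hypothetical hit: track passes 0.30 mm right of the wire, measured drift
# distance 0.32 mm, resolution 0.12 mm. Residual = measured - track position.
residuals = [-0.32 - 0.30, 0.32 - 0.30]      # [left solution, right solution]
weights = {T: daf_weights(residuals, 0.12, T) for T in (16.0, 4.0, 1.0)}
```

At high temperature both solutions keep sizeable weights; as the temperature is lowered, the weight of the wrong (left) solution vanishes and the right solution dominates.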

Fig. 12: The distributions of the DOCA residuals (hit DOCA − track DOCA) estimated with the conventional (NML) and the two (DNN and CNN) neural network based approaches. The curves are fitted double Gaussian functions with a core sigma of \({114}\,\upmu \text {m}\) \(({119}\,\upmu \text {m})\) and a tail sigma of \({236}\,\upmu \text {m}\) \(({259}\,\upmu \text {m})\) with a core fraction of 0.59 (0.70) for the neural network (conventional) approaches.

The deviation of the target from planarity is modelled by a triangle mesh in the fitter. The track is first propagated to a virtual plane a few mm in front of the real target, and then to the nearest triangle of the mesh, where the positron kinematics at emission are reconstructed. The target crossing point \((x_{{\textrm{e}}^+},y_{{\textrm{e}}^+},z_{{\textrm{e}}^+})\) is used as the candidate muon decay point, to correlate the track direction with the first interaction point of the \({\upgamma }\)-ray observed in the LXe detector. The length of the trajectory from the target to the matched pTC counter is converted to a time of flight and subtracted from the pTC hit time to determine the positron emission time at the target, \({t_{{\textrm{e}}^+}}\).
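The time-of-flight correction amounts to \(t_{{\textrm{e}}^+} = t_{\textrm{pTC}} - L/(\beta c)\) with \(\beta = p/E\). The sketch below assumes the path length L and the momentum p come from the fitted track; the numbers in the usage line are hypothetical. For a signal positron, \(1-\beta \approx 5\times 10^{-5}\), so the positron effectively travels at the speed of light.

```python
import math

C_MM_PER_NS = 299.792458      # speed of light in mm/ns
M_E_MEV = 0.51099895          # positron mass in MeV/c^2


def positron_emission_time(t_ptc_ns, path_length_mm, p_mev):
    """Emission time at the target: pTC hit time minus the time of flight
    along the reconstructed trajectory, with beta = p / E."""
    beta = p_mev / math.hypot(p_mev, M_E_MEV)
    return t_ptc_ns - path_length_mm / (beta * C_MM_PER_NS)


# Hypothetical signal-like positron with a 1 m path from target to pTC.
t_e = positron_emission_time(t_ptc_ns=10.0, path_length_mm=1000.0, p_mev=52.83)
```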

During the tracking process, the DOCA of each hit is iteratively refined. Initially, the DOCA is estimated using angle-averaged TXY tables extracted from Garfield++ simulations, which determine the drift velocity and drift lines taking into account the electric and magnetic fields, the ionisation pattern, the diffusion process, etc. The Garfield++ simulations are performed in two dimensions, \((r, \phi )\), on slices at fixed z-coordinates for each layer, and are then interpolated to take into account the longitudinal change in cell size and shape, as well as the effect of the magnetic field. Once the track is reconstructed, the DOCA is recalculated taking into account the angle at which the track crosses the cell. This DOCA estimate is biased by the low cluster density: with only a few clusters in the cell, the cluster closest to the wire is generally farther from it than the track itself, so the hit distance from the wire is overestimated.
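The ionisation statistics bias can be reproduced with a toy Monte Carlo. The sketch assumes a straight track segment, clusters distributed as a Poisson process along it, and that the first cluster to arrive is the one nearest to the wire (drift time monotonic in distance); the mean cluster spacing and segment length are hypothetical, and diffusion and electronics effects are ignored.

```python
import numpy as np


def first_cluster_distance(doca_mm, half_length_mm, mean_spacing_mm, rng):
    """Distance from the wire of the closest cluster on a straight segment of
    length 2*half_length_mm passing at doca_mm (Poisson-distributed clusters)."""
    n = rng.poisson(2.0 * half_length_mm / mean_spacing_mm)
    if n == 0:
        return np.nan
    s = rng.uniform(-half_length_mm, half_length_mm, size=n)  # along the track
    return float(np.sqrt(doca_mm**2 + s**2).min())


def mean_bias(doca_mm, n_tracks=2000, half_length_mm=5.0,
              mean_spacing_mm=0.8, seed=1):
    """Average overestimate of the DOCA (first-cluster distance - true DOCA)."""
    rng = np.random.default_rng(seed)
    d = [first_cluster_distance(doca_mm, half_length_mm, mean_spacing_mm, rng)
         for _ in range(n_tracks)]
    return float(np.nanmean(d)) - doca_mm


bias_near_wire = mean_bias(0.1)   # track passing close to the wire
bias_far = mean_bias(3.0)         # track passing far from the wire
```

Since \(\sqrt{d^2+s^2}\ge d\), the bias is always positive, and it is largest for tracks passing close to the wire, which is the positive tail that the data-driven TXY tables aim to remove.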

Another DOCA estimate, less prone to intrinsic biases, is obtained with neural network approaches [19]. The networks take as input various hit properties (wire and plane number, charge, timing, hit coordinates, track angle, \(T_0\), etc.) and, for the CNN model, the waveforms, and are trained on the fitted track DOCA (obtained with the Garfield++ TXY tables) as an estimator of the true DOCA, creating a “data-driven” TXY table. This TXY table optimally accounts for ionisation statistics biases, removes errors from the simulated TXY, and uses information from all ionisation clusters.

The distributions of DOCA residuals for the conventional approach and the two neural network based approaches, a dense neural network (DNN) and a CNN, are compared in Fig. 12. The best result is obtained with the CNN, which processes the waveforms from all ionisation clusters. The main improvement from the neural networks is the suppression of the positive right tail, presumably due to the removal of the ionisation statistics bias. This improves the resolutions of the positron kinematic variables by \({\sim 10}\%\), as shown in Sect. 4.6.1. Therefore, the DOCA is finally updated with the CNN method in the re-fit process.

4.5 Alignment

4.5.1 Wire alignment

The global position of CDCH was measured in both 2021 and 2022 with an optical survey. The relative wire-by-wire alignment was extracted from several measurements made during the construction of the chamber. However, after full track reconstruction, the distributions of the difference between the nominal wire positions and those calculated by the tracking algorithm from the measured DOCA show systematic deviations of \({\sim 100}\,\upmu \text {m}\), which degrade the tracking resolutions. These deviations were corrected by a track-based alignment.

Two types of tracks can be used to improve the relative alignment: Michel positrons and cosmic rays. The former have the advantage of being collected and reconstructed during normal data taking, but require a more complex reconstruction and are more affected by possible uncertainties in the knowledge of the magnetic field. The latter have the advantage of being straight, but require dedicated data-taking periods and cover the tracking volume differently. Alignment with cosmic rays is currently being investigated; in the following, we present only the alignment based on Michel positrons.

The alignment procedure is an iterative adjustment of the wire coordinates driven by the mean residual. The residual r is fitted, wire by wire, as a function of the longitudinal position along the wire (z-coordinate) with a parabola, with constant, linear and quadratic coefficients \(p_{0}\), \(p_{1}\) and \(p_{2}\).

The parameter \(p_{0}\) corresponds to a global wire displacement, the linear term arises from the inclination of the wire with respect to the chamber axis, and the quadratic term accounts for the wire sagitta due to the electrostatic and gravitational forces acting on the wire. \(p_{2}\) is the (absolute) maximum value of the sagitta, which can reach \({\sim 100}\,\upmu \text {m}\).
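A per-wire parabolic fit can be sketched with an ordinary least-squares polynomial fit. The exact normalisation of the parabola is not reproduced in this text; as an assumption, the sketch uses the unnormalised form \(r(z) = p_0 + p_1 z + p_2 z^2\), in which \(p_2\) is a curvature and the sagitta relative to the chord between the wire ends at \(z=\pm h\) is \(|p_2|h^2\). The wire half-length and residual values are hypothetical.

```python
import numpy as np


def fit_wire_residuals(z, r):
    """Least-squares parabola r(z) = p0 + p1*z + p2*z**2 for one wire."""
    p2, p1, p0 = np.polyfit(z, r, 2)   # np.polyfit returns highest power first
    return p0, p1, p2


def sagitta(p2, half_length):
    """Largest deviation of the parabola from the chord joining its values at
    z = -half_length and z = +half_length (reached at z = 0)."""
    return abs(p2) * half_length**2


# Hypothetical wire: residuals in micrometres versus z in cm (|z| <= 100 cm).
z = np.linspace(-100.0, 100.0, 201)
r = 20.0 + 0.1 * z - 0.005 * z**2
p0, p1, p2 = fit_wire_residuals(z, r)
sag = sagitta(p2, 100.0)
```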

The number of events required for high-quality alignment of a single wire depends strongly on the position of the wire in the chamber, because the trigger criteria used to select \(\upmu ^+ \rightarrow {\textrm{e}}^+ \upgamma \) candidate events make the track density non-uniform. Furthermore, the alignment algorithm is almost insensitive to alignment errors when the track direction is parallel to the misalignment vector, because the DOCA does not change when the wire is shifted along the track direction. Therefore, chamber sectors crossed by tracks at a wide variety of angles are aligned more efficiently than those crossed by tracks with a small spread of angles.
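The insensitivity to shifts along the track direction follows directly from the DOCA geometry. The sketch works in the plane transverse to the wire, treating the wire as a point and ignoring the stereo angle; the track and wire positions are hypothetical.

```python
import numpy as np


def doca_2d(wire, point, direction):
    """Distance of closest approach between a wire (seen end-on as a point)
    and a straight track through `point` along `direction`."""
    u = np.asarray(direction, dtype=float)
    u = u / np.linalg.norm(u)
    d = np.asarray(wire, dtype=float) - np.asarray(point, dtype=float)
    return abs(d[0] * u[1] - d[1] * u[0])    # |2D cross product|


track_point, track_dir = (0.0, 0.0), (1.0, 0.0)              # track along x
wire = np.array([0.0, 1.0])
nominal = doca_2d(wire, track_point, track_dir)
along = doca_2d(wire + [0.1, 0.0], track_point, track_dir)    # shift along track
across = doca_2d(wire + [0.0, 0.1], track_point, track_dir)   # shift across track
```

To first order, a wire shift \(\vec\delta \) changes the DOCA by the projection of \(\vec\delta \) on the track normal, so only tracks crossing at different angles constrain all shift directions.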

The alignment procedure used 17 million hits, with an average of 40 hits per track. At least 5000 hits were required for each wire to be aligned; 152 wires, located at the edges of each layer or affected by electronic problems, were excluded from this alignment and their survey positions are used. With larger statistics, the alignment of the edge regions can be improved. One way to increase the statistics in these regions is to use out-of-time tracks that do not cross the pTC but more frequently cross the CDCH regions outside or at the boundaries of the MEG II geometric and trigger acceptance. Such tracks are recorded accidentally when another event satisfying the trigger conditions opens the WaveDREAM time window.

Figure 13 shows the final mean residuals in the x- (top plot) and y-coordinates (bottom plot) compared to the same residuals obtained using the survey-based alignment. The spreads of the Gaussian cores of the two distributions are \(\sigma ^\mathrm{{sur}}_x={22}\,\upmu \text {m}\) and \(\sigma _y^\mathrm{{sur}}={35}\,\upmu \text {m}\) when the survey-based alignment is used and decrease to \(\sigma _{x,y}^\mathrm{{trk}}<{5}\,\upmu \text {m}\) for both coordinates after the alignment procedure.

The fit parameters \(p_{0}\) (global displacement) and \(p_{2}\) (sagitta) are strongly correlated, as expected. The remaining sagitta is \(\sigma _{p_2}\sim {13}\,\upmu \text {m}\) and the wires with the largest sagittas are concentrated in the peripheral chamber sectors, crossed by fewer tracks than central sectors. The distributions of the displacements of the wire centres with respect to those in the survey have \(\sigma _x \sim {100}\,\upmu \text {m}\), \(\sigma _y \sim {80}\,\upmu \text {m}\) and \(\sigma _z \sim {30}\,\upmu \text {m}\). The distributions of the differences of the wire angles \(\varTheta \) and \(\varPhi \) with respect to the survey have \(\sigma _\varTheta \sim {0.1}\,\text {mrad}\) and \(\sigma _\varPhi \sim {1.1}\,\text {mrad}\), respectively. For the \(\varPhi \) angle the mean value is \({1.05}\,\text {mrad}\), which means that the alignment requires a global azimuthal rotation, while the required polar rotation is minimal, i.e. \({<0.1}\,\text {mrad}\).

The remaining error in wire-alignment is estimated to be between \({2}\,\upmu \text {m}\) at the centre and \({15}\,\upmu \text {m}\) at the end plates. Some correlations between the wire centre translation or the wire rotation and the wire number layer-by-layer have been observed and are currently being investigated.

The effects of the alignment procedure on the resolutions of the kinematic variables are evaluated using the double-turn method; see Sect. 4.6.1 for the details. The distributions of \(z_{{\textrm{e}}^+}\) and \({\phi _{\mathrm {e^+}}}\) are shown in Fig. 14. The advantage of the alignment procedure is highlighted by the strong reduction of the systematic biases and by the narrowing of the distributions.

4.5.2 Relative alignment between magnetic field and CDCH

Due to the gradient magnetic field, a misalignment between the CDCH and the magnet results in a non-uniformity of the energy scale with respect to the positron emission angle. In the track reconstruction, we use a 3D map of the magnetic field calculated with a finite element method based on the measured coil dimensions and taking into account the thermal shrinkage of the coil. The angular dependence is minimised by shifting the calculated magnetic field by \(({100}\,\upmu \text {m}, {700}\,\upmu \text {m}, {300}\,\upmu \text {m})\) from the nominal position, with an estimated alignment accuracy of \(100{-}200\,\upmu \text {m}\). After the shift, no residual bias is visible in Fig. 15, and the remaining scatter of \({\sim 10}\,\text {keV}\) is negligible compared to the energy resolution.

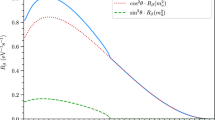

Fig. 15: The angular dependence of the positron energy scale on the angular kinematic variables before and after alignment. The offset on the y-axis is the difference between the measured and the expected value of the Michel edge. The three superimposed plots show the effects of shifting the magnetic field by \({1}\,\text {mm}\) in x, y and z.

A misalignment in the positive x direction results in a decreasing energy scale versus \({\phi _{\mathrm {e^+}}}\) and \(\cos {\theta _{\mathrm {e^+}}}\). A misalignment in the positive y direction also results in a decreasing energy scale versus \(\cos {\theta _{\mathrm {e^+}}}\). A misalignment in the positive z direction results in an increasing energy scale versus \(\cos {\theta _{\mathrm {e^+}}}\).

4.5.3 Relative alignment between target and CDCH

The precise alignment of the muon stopping target with respect to the spectrometer is crucial in determining the positron emission angle and position, as discussed in detail in Sect. 3. We use the holes in the target to align the target with respect to the CDCH. The six holes are visible (as deficits of events) in the reconstructed track distribution in Fig. 16, from which the y- and z-coordinates of the holes (and thus of the target) can be easily estimated. With reference to Fig. 4, the hole centres are located approximately at \({\pm 1}\,\text {cm}\) along the short axis and at \({\pm 5}\,\text {cm}\) and \({\pm 8.5}\,\text {cm}\) along the long axis.

The x-alignment exploits the dependence of the estimated y-position on \({\phi _{\mathrm {e^+}}}\), as shown in Fig. 17. Combined with the photogrammetric method using the photo-camera system, which corrects for temporal variation in the target positions, the hole analysis yields the hole-by-hole residual misalignment.

The precision of the hole-by-hole position estimate is limited both by the statistical uncertainty of the positron tracks and by the systematic uncertainties of the method. The statistical uncertainty is \(100{-}200\,\upmu \text {m}\) in each coordinate. All the systematic uncertainties originate from the non-uniform vertex distribution due to the beam profile, which biases the (y, z) distribution produced by the holes towards the beam centre. This effect is corrected in the hole position estimation, but the uncertainties in the correction factor dominate the hole-by-hole systematic uncertainties of \(({50}\,\upmu \text {m}, {100}\,\upmu \text {m}, {200}\,\upmu \text {m})\).

The global target geometry, including both rotational and translational parameters, is finally fitted to the hole residuals, yielding, with respect to the results of the photogrammetric method, a translation of \(({73}\,\upmu \text {m}, {799}\,\upmu \text {m}, {437}\,\upmu \text {m})\) and a rotation of \({10}\,{\text {mrad}}\), mainly about the minor axis of the target. The accuracy, including systematic uncertainties, is \({100}\,\upmu \text {m}\) for the translation along each axis and \({1.4}\,{\text {mrad}}\) \(({6}\,\text {mrad})\) for the rotation about the minor (major) axis.
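A global fit of this kind can be sketched as a linear least-squares problem for a translation \(\vec t\) and a small rotation vector \(\vec\omega \), with each hole residual modelled as \(\vec t + \vec\omega \times \vec p_i\). The small-angle linearisation, the hole layout (loosely inspired by the dimensions quoted above, placed in a plane x = 0) and the input values are assumptions for illustration; weighting by the hole-by-hole uncertainties is omitted.

```python
import numpy as np


def fit_rigid_small_angle(points, residuals):
    """Least-squares translation t and small rotation vector w (rad) such
    that residual_i ~= t + w x p_i for each hole position p_i."""
    rows, rhs = [], []
    for p, r in zip(np.asarray(points, float), np.asarray(residuals, float)):
        px, py, pz = p
        # Matrix M with M @ w == np.cross(w, p).
        cross = np.array([[0.0, pz, -py],
                          [-pz, 0.0, px],
                          [py, -px, 0.0]])
        rows.append(np.hstack([np.eye(3), cross]))
        rhs.append(r)
    A, b = np.vstack(rows), np.concatenate(rhs)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    return sol[:3], sol[3:]


# Hypothetical hole centres (mm) in the target plane x = 0.
holes = np.array([[0.0, 10.0, 50.0], [0.0, -10.0, 50.0], [0.0, 10.0, -50.0],
                  [0.0, -10.0, -50.0], [0.0, 10.0, 85.0], [0.0, -10.0, -85.0]])
t_true = np.array([0.073, 0.799, 0.437])     # mm
w_true = np.array([0.0, 0.010, 0.002])       # rad
hole_residuals = t_true + np.cross(w_true, holes)
t_fit, w_fit = fit_rigid_small_angle(holes, hole_residuals)
```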

4.5.4 Relative alignment between LXe detector and CDCH

The alignment procedure described in Sect. 4.5.1 is insensitive to a global misalignment between CDCH and other subdetectors. Among them, the LXe detector is especially important since it provides information about the \({\upgamma }\)-ray direction.

Events with hits in both the CDCH and the LXe detector are used to compare the information from the two detectors. The appropriate event category is cosmic rays, which can deposit energy in the LXe detector and then (or vice versa, depending on the trajectory) pass through the CDCH, producing hits on multiple wires. The cosmic ray events are collected with a specific trigger scheme and with the magnetic field turned off, to obtain straight tracks that are easier to reconstruct and fit. The idea is to select cosmic ray tracks that cross the inner face of the LXe detector almost perpendicularly and to determine the coordinates of this crossing point independently with the LXe detector and with the CDCH.

On the CDCH side, a method based on the Legendre transform [20, 21] is used for finding and fitting the cosmic ray tracks. The fitted tracks are then extrapolated to the inner face of the LXe detector.
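The idea behind the Legendre-transform track finding can be sketched for straight tracks: a line \(x\cos\theta + y\sin\theta = \rho \) is tangent to the drift circle of radius \(r_i\) around wire \((x_i, y_i)\) when \(\rho = x_i\cos\theta + y_i\sin\theta \pm r_i\), so each hit fills two curves in a \((\theta ,\rho )\) histogram and the most populated bin gives the common tangent. This is a basic histogramming version only; the binning, ranges and toy wire geometry are hypothetical, and refinements that may be used in [20, 21] (finer re-binning, a subsequent fit) are not reproduced.

```python
import numpy as np


def legendre_line(x, y, r, n_theta=3600, rho_range=(-20.0, 20.0), n_rho=200):
    """Return (theta, rho) of the line tangent to the most drift circles."""
    thetas = np.linspace(0.0, np.pi, n_theta, endpoint=False)
    cos_t, sin_t = np.cos(thetas), np.sin(thetas)
    edges = np.linspace(rho_range[0], rho_range[1], n_rho + 1)
    hist = np.zeros((n_theta, n_rho), dtype=int)
    rows = np.arange(n_theta)
    for xi, yi, ri in zip(x, y, r):
        base = xi * cos_t + yi * sin_t
        for sign in (1.0, -1.0):              # tangents on both sides
            idx = np.digitize(base + sign * ri, edges) - 1
            ok = (idx >= 0) & (idx < n_rho)
            hist[rows[ok], idx[ok]] += 1
    i_t, i_r = np.unravel_index(np.argmax(hist), hist.shape)
    return thetas[i_t], 0.5 * (edges[i_r] + edges[i_r + 1])


# Toy geometry (mm): two wire layers and a cosmic track y = 0.3 x + 2.
wx = np.tile(np.arange(-10.0, 10.1, 2.0), 2)
wy = np.repeat([0.0, 5.0], 11)
theta_true = np.arctan2(1.0, -0.3)
rho_true = 2.0 / np.hypot(1.0, 0.3)
radii = np.abs(wx * np.cos(theta_true) + wy * np.sin(theta_true) - rho_true)
theta_fit, rho_fit = legendre_line(wx, wy, radii)
```

Using both tangent signs for every hit is what resolves the left/right ambiguity: only the correct combination accumulates in a single bin.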

On the LXe detector side, the usual position reconstruction algorithm (see Sect. 6.4) is applied. Cosmic ray tracks almost perpendicular to the detector entrance face are selected because the continuous energy deposition along such tracks appears to the photosensors on the inner face as a point-like energy deposit, whose position is reconstructed more reliably.

Figure 18 shows the LXe–CDCH difference in the reconstructed z-coordinate, \({\varDelta } z = z_{{\textrm{LXe}}} - z_{\textrm{CDCH}}\). The distribution is Gaussian, centred at \({\varDelta } z = (-1.0 \pm 0.8)\,\text {mm}\). This result suggests that a small global shift could be applied to better align the two detectors, although the offset is compatible with zero.

We do not observe any significant deviation in the \(\phi \) difference within its uncertainty; this measurement is more subject to systematic errors because of the non-uniform distribution of the cosmic rays in \(\phi \).

The relative alignment between the LXe detector and the CDCH, combined with the relative alignment between the CDCH, the magnetic field and the target, is sufficient to determine the relative positron–photon angle, which is independent of the absolute position of the detectors.

For practical reasons, we need to define an absolute alignment. Reference marks on the COBRA cryostat are used to define the absolute MEG II reference frame. The position of the CDCH in this reference frame is determined by an optical survey with an estimated resolution of a few hundred micrometres, while the absolute position of the LXe detector is measured with uncertainties of \({\sim 600}\,\upmu \text {m}\) as discussed in Sect. 6.4. On this basis, we decided to use the CDCH position as a reference and apply global shifts to the LXe detector, the magnetic field and the target, according to the results of the data analysis for the relative alignments. The shifts of the magnetic field and target, reported in Sects. 4.5.2 and 4.5.3, are quite similar in size and direction, which could indicate a bias in the CDCH survey. The shift in the z direction is also confirmed by the relative alignment between the CDCH and the LXe detector, but with a large uncertainty. For these reasons, the shift to be applied to the LXe detector was determined by combining all available information on a possible bias in the CDCH survey. We obtained \(({90}\,\upmu \text {m},{800}\,\upmu \text {m}, {630}\,\upmu \text {m})\), with a systematic uncertainty of \({1}\,\text {mm}\) assigned in all directions.

Fig. 19: The distributions of the double-turn difference for \(z_{{\textrm{e}}^+}\) obtained using the conventional (NML) and neural network (DNN and CNN) approaches to DOCA reconstruction, fitted with a double Gaussian. \(A_{1,2}\) are the amplitudes of the two Gaussians, whose ratio is fixed to 0.05; \(\sigma _{1,2}\) are their standard deviations in \(\text {mm}\).

4.6 Performance

In this section, we discuss the performance of the CDCH in terms of resolution and tracking efficiency. A more extensive discussion is given in a dedicated paper [15]. We recall that the main motivation for building the CDCH was the unsatisfactory performance of the MEG drift chamber system in terms of angular and energy resolutions and tracking efficiency due to its segmented structure.

4.6.1 Resolutions

One technique for evaluating the resolutions of the kinematic variables is the “double-turn” method, which was already used in the MEG experiment [2]. In the MEG II experiment, \({\sim 15}\%\) of the positron tracks cross the chamber volume five times, passing through \(9 \times 5\) sense wire layers. In these tracks, two separate track segments (“turns”) can be identified, the first corresponding to two chamber crossings and the second to three. Both segments are fitted independently and propagated to a plane parallel to the target located between the two turns, and the distributions of the differences between the kinematic variables reconstructed from the two segments are examined.

Figure 19 shows the double-turn distributions for \(z_{{\textrm{e}}^+}\); the shapes of the \(y_{{\textrm{e}}^+}\), \({\phi _{\mathrm {e^+}}}\) and \({\theta _{\mathrm {e^+}}}\) distributions are similar. The three distributions in Fig. 19 are based on the conventional and the neural network based DOCA reconstructions described in Sect. 4.4.2. The neural network approaches reduce the width of the distribution by \(1{-}11\%\).

These distributions do not directly represent the effective resolutions of the CDCH, but the combination in quadrature of the resolutions of the first and second turns. The following corrections are required to convert the double-turn results into reliable estimates of the actual resolutions. The first corrects for the bias in the double-turn evaluation arising from differences in the magnetic field and in the track properties between the two turns of Michel positrons. The second corrects for the difference between Michel positrons and signal positrons due to their different numbers of hits. Both correction factors were evaluated via MC simulations. Good agreement was obtained between the double-turn results on data and those on the Michel MC simulation, showing that the response of the CDCH is well understood. In addition, we can correct for the correlations between the variables in the detection of \(\upmu ^+ \rightarrow {\textrm{e}}^+ \upgamma \) events, as discussed in Sect. 10. The correlation parameters are also evaluated with the double-turn analysis. The effective resolutions after the corrections are summarised in Table 2.
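The quadrature relation \(\sigma _{\textrm{dt}}^2 = \sigma _1^2 + \sigma _2^2\) gives the first step of this conversion, before the MC-based corrections. Because the two turns have different numbers of chamber crossings, their resolutions need not be equal; the sketch therefore takes the ratio \(\sigma _2/\sigma _1\) as an input, whose value is an assumption (1 gives the familiar \(\sigma _{\textrm{dt}}/\sqrt{2}\)).

```python
import math


def single_turn_sigmas(sigma_double_turn, ratio=1.0):
    """Split a double-turn width into the two single-turn resolutions,
    assuming sigma_dt**2 = sigma1**2 + sigma2**2 and sigma2 = ratio*sigma1."""
    sigma1 = sigma_double_turn / math.sqrt(1.0 + ratio**2)
    return sigma1, ratio * sigma1


equal_turns = single_turn_sigmas(math.sqrt(2.0))       # equal resolutions
unequal_turns = single_turn_sigmas(5.0, ratio=0.75)    # hypothetical ratio
```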

The energy resolution for experimental data is measured by fitting the measured Michel positron spectrum with the theoretical Michel spectrum, multiplied by an efficiency function that accounts for the selection of the high-energy part of the spectrum by the spectrometer acceptance, and then convolved with a resolution function formed by the sum of three Gaussian functions. The result is shown in Fig. 20 in both logarithmic (a) and linear (b) scales. The \(\sigma \) of the core Gaussian function, which accounts for \({\sim 67}\%\) of the integral of the resolution curve, is \(\sigma _{E_{{\textrm{e}}^+}}= {91}\,\text {keV}\), \({40}\,\text {keV}\) better than the value quoted in the MEG II proposal. The corresponding value for the MEG experiment was \(\sigma _{E_{{\textrm{e}}^+}}={320}\,\text {keV}\).
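The structure of the fit model (theoretical spectrum × acceptance, convolved with a resolution function) can be sketched numerically. The sketch makes several simplifying assumptions: the unpolarised tree-level Michel shape \(x^2(3-2x)\) with \(x=E/E_{\textrm{max}}\) (electron mass, radiative corrections and muon polarisation neglected), an error-function acceptance with hypothetical parameters, and a single Gaussian instead of the three used in the analysis. Only the model is computed; the fit itself (e.g. a least-squares minimisation over the parameters) is not shown.

```python
import math
import numpy as np

E_MAX_MEV = 52.83
erf = np.vectorize(math.erf)


def michel_shape(e):
    """Unpolarised tree-level Michel spectrum x**2 * (3 - 2x), x = E/E_max."""
    x = np.asarray(e, dtype=float) / E_MAX_MEV
    return np.where((x >= 0.0) & (x <= 1.0), x**2 * (3.0 - 2.0 * x), 0.0)


def acceptance(e, e_half, width):
    """Error-function acceptance rising around e_half (hypothetical values)."""
    return 0.5 * (1.0 + erf((np.asarray(e) - e_half) / (math.sqrt(2.0) * width)))


def expected_spectrum(e, e_half=46.0, width=1.5, sigma=0.091):
    """Michel shape x acceptance, convolved with a Gaussian of width sigma
    (MeV) on a uniform energy grid e."""
    de = e[1] - e[0]
    truth = michel_shape(e) * acceptance(e, e_half, width)
    half = int(5.0 * sigma / de) + 1               # kernel truncated at ~5 sigma
    k = np.arange(-half, half + 1) * de
    kernel = np.exp(-0.5 * (k / sigma) ** 2)
    kernel /= kernel.sum()
    return np.convolve(truth, kernel, mode="same")


energies = np.arange(40.0, 56.0, 0.01)
model = expected_spectrum(energies)
```

The resolution smears the sharp Michel edge at 52.83 MeV, which is what makes the edge region sensitive to \(\sigma _{E_{{\textrm{e}}^+}}\).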

Fig. 20: Fit of the Michel positron spectrum in logarithmic (a) and linear (b) scales. The black histogram is the experimental distribution, the blue curve is the sum of three Gaussian functions describing the resolution around the signal region, and the red curve is the fitted function, obtained from the theoretical spectrum multiplied by the acceptance curve shown in (c) and then convolved with the resolution function. (c) The acceptance curve of the spectrometer, modelled with an error function.

4.6.2 Efficiency

Figure 21 shows the CDCH tracking efficiency for signal positrons \(\varepsilon _{{{\textrm{e}}^+}, \textrm{CDCH}}\) versus \(R_{\upmu }\). The efficiency is defined as the ratio of the number of reconstructed positrons in the signal energy region to the number of positrons emitted in the direction opposite to the LXe acceptance region and detected by the pTC. Since the efficiency depends on the positron energy, it is measured at energies just below the signal energy and extrapolated to the signal energy. The sample used for the calculation is collected with a minimum bias trigger that requires only one hit in the pTC. The efficiency is expected to decrease with \(R_{\upmu }\), because the probability of pile-up increases with \(R_{\upmu }\), making it harder for the track finder to identify the hits belonging to individual tracks. Nevertheless, the reduction in efficiency from the lowest value, \(R_{\upmu } = 2 \times 10^{7}\,\text {s}^{-1}\), to the highest, \(R_{\upmu } = {5\times 10^{7}}\,\text {s}^{-1}\), is moderate (\({< 15}\%\)). The blue dotted line represents the design value, which is almost reached at the lowest \(R_{\upmu }\) and closely approached also at the highest. As a reference, we measure \(\varepsilon _{{{\textrm{e}}^+}, {\textrm{CDCH}}} \sim {74}\%\) at \(R_{\upmu } = {3\times 10^{7}}\,\text {s}^{-1}\).

The CDCH is highly transparent to positrons reaching the pTC, allowing a high signal detection efficiency for the pTC, \(\varepsilon _{{{\textrm{e}}^+},{\textrm{pTC}}} = {(91\pm 2)}\%\) (discussed in Sect. 5.5), twice as high as with the MEG drift chamber. The MEG experiment used \(R_{\upmu } = {3 \times 10^{7}}\,\text {s}^{-1}\), with a positron efficiency of \(\varepsilon _{{\textrm{e}}^+} \sim {30}\%\). At the same \(R_{\upmu }\), the MEG II positron efficiency is \(\varepsilon _{{{\textrm{e}}^+}} = \varepsilon _{{{\textrm{e}}^+},{\textrm{pTC}}} \times \varepsilon _{{{\textrm{e}}^+}, {\textrm{CDCH}}} \sim {67}\%\).
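The combined figure is a simple product; the sketch below checks the arithmetic, assuming the two efficiencies factorise and propagating only the quoted pTC uncertainty, since no uncertainty is quoted here for the CDCH value.

```python
def combined_positron_efficiency(eps_ptc, d_eps_ptc, eps_cdch):
    """Product of pTC and CDCH efficiencies; only the pTC uncertainty is
    propagated."""
    return eps_ptc * eps_cdch, d_eps_ptc * eps_cdch


eps, d_eps = combined_positron_efficiency(0.91, 0.02, 0.74)   # about 0.673 +- 0.015
```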

4.7 Back-up chamber

The detailed analysis of the wire breakage problems stimulated a long R&D effort to explore alternatives to the silver-coated aluminium wires, which were found to be fragile and easily damaged by ambient humidity, with the aim of designing and building a new chamber (CDCH2) free of these problems. Several types of wire were explored, with different coatings (gold, nickel) or without coating. They were studied from multiple aspects: sensitivity to corrosion, mechanical strength, soldering technique on PCBs, etc. We developed a system for measuring wire tension [22], based on the resonant frequency method, as well as wiring and assembly stations [23]. The final choice was a pure aluminium wire with a diameter of \({50}\,\upmu \text {m}\), which is almost insensitive to corrosion and can be efficiently and solidly fixed on PCBs by a combination of soldering and gluing with suitable chemical products. The CDCH2 is scheduled for completion by the end of 2023 and delivery to PSI in spring 2024. At that time, the collaboration will decide whether and when to replace the current chamber.
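The resonant frequency method relies on the ideal-string relation \(f = \sqrt{T/\mu }/(2L)\) for the fundamental mode, with \(\mu \) the linear mass density. The sketch neglects wire bending stiffness and assumes the density of pure aluminium; the wire length and frequency in the usage line are hypothetical, and how the system of [22] excites and detects the resonance is not described here.

```python
import math

RHO_AL = 2700.0  # density of aluminium in kg/m^3


def linear_density(diameter_m, rho=RHO_AL):
    """Mass per unit length (kg/m) of a solid round wire."""
    return rho * math.pi * diameter_m**2 / 4.0


def tension_from_frequency(f_hz, length_m, diameter_m):
    """Fundamental mode of an ideal string: T = 4 L^2 f^2 mu (newtons)."""
    return 4.0 * length_m**2 * f_hz**2 * linear_density(diameter_m)


def frequency_from_tension(t_newton, length_m, diameter_m):
    """Inverse relation: f = sqrt(T / mu) / (2 L)."""
    return math.sqrt(t_newton / linear_density(diameter_m)) / (2.0 * length_m)


# Hypothetical 2 m long, 50 um pure-aluminium wire resonating at 50 Hz.
tension = tension_from_frequency(50.0, 2.0, 50e-6)
```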

5 Pixelated timing counter

Measuring the time coincidence between an \({{\textrm{e}}^+}\) and a \({\upgamma }\)-ray with the best possible resolution is crucial for reconstructing \(\upmu ^+ \rightarrow {\textrm{e}}^+ \upgamma \)-like events in a high-\(R_{\upmu }\) environment. The pTC was developed to measure the \({{\textrm{e}}^+}\) impact time. The emission time at the target plane, \({t_{{\textrm{e}}^+}}\), is derived from it by correcting for the track length reconstructed by the CDCH. The pTC also plays an active role in the trigger: it provides information for event selection based on time coincidence and directional matching with the \({\upgamma }\)-ray measured by the LXe detector.

5.1 Concept and design

The detector consists of two semi-cylindrical sectors arranged mirror-symmetrically upstream and downstream of the target, within the volume between CDCH and the inner wall of the COBRA magnet. Figure 22 shows an illustration of the downstream sector. The support structure consists of a \({20}\,\text {mm}\) thick semi-cylindrical aluminium shell. Plastic scintillator counters are mounted on 16 back-planes running in the z-direction along the structure. To ensure temperature-stable operation, water flows from a cooling system through copper pipes embedded in the structure.

The pTC covers \({23}< |z| < {117}\,\text {cm}\) and \({-166}^{\circ }< \phi < {5}^{\circ }\) in the z–\(\phi \) plane. This matches the angular acceptance for \(\upmu ^+ \rightarrow {\textrm{e}}^+ \upgamma \) positron candidates whose back-to-back \({\upgamma }\)-ray reaches the fiducial volume of the LXe detector.

Each sector is segmented into 256 plastic scintillator tiles (Bicron BC422® [24]). Each tile is read out by two arrays of silicon photomultipliers (SiPMs) glued on opposite sides, as shown in Fig. 23. Each array consists of six SiPMs connected in series. The SiPMs are near-ultraviolet-sensitive AdvanSiD devices (ASD-NUV-SiPM3S-P) with an active area of \((3 \times 3)\,\text {mm}^{2}\) and a pixel pitch of \({50}\,\upmu \text {m}\). Their spectral response matches the \({370}\,\text {nm}\) peak emission of BC422 well. Each tile is first wrapped in a highly efficient \({35}\,\upmu \text {m}\) thick polymeric reflector (VIKUITI 3M Mirror Film®) to increase the light reflectance at the surface. It is then wrapped in a \({30}\,\upmu \text {m}\) thick black TEDLAR® film.

A bare counter with \(H={50}\,\text {mm}\). The coordinates \((v_{\textrm{hit}},w_{\textrm{hit}})\) are the counter local coordinates used in the reconstruction (Sect. 5.3).

The counter sizes (length L, height H and thickness T) and locations were optimised using MC simulations. The criteria were single-counter performance, the number of hits per signal positron (hit multiplicity), detector efficiency and number of channels. The best trade-off was found with tile sizes of \(L\times H\times T = (120\times 40\times 5)\,\text {mm}^{3}\) and \((120\times 50\times 5)\,\text {mm}^{3}\). The two values of H are used in different regions to maximise hit multiplicity while keeping the efficiency as high as possible. The counters are placed at a fixed radius (the top of each counter at \(r={29.7}\,\text {cm}\)) in a \(16 \times 16\) matrix in the z–\(\phi \) plane. The longitudinal and angular distances between adjacent counters are \({5.5}\,\text {cm}\) and \({10.5}^{\circ }\), respectively. Each row at fixed z is offset by half a counter from the previous one. In addition, the counters are tilted by \({45}^{\circ }\) so that they are nearly perpendicular to the signal positron trajectories. This configuration was chosen to maximise the average hit multiplicity for a signal-like positron. The detector design exploits both the good single-counter resolution (below 100 ps in laboratory tests [25]) and the combination of the information from multiple hits. The mean hit multiplicity per signal event reconstructed from MC is \(\langle N_{\text {hit}} \rangle \sim 9\). This leads to an expected total time resolution of \(\sigma _{{t_{{\textrm{e}}^+}},\textrm{pTC}} \sim {40}\,\text {ps}\).
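The benefit of combining several hits can be illustrated with the resolution of an inverse-variance weighted mean of independent measurements, \(\sigma = (\sum _i \sigma _i^{-2})^{-1/2}\). For equal resolutions this reduces to \(\sigma _{\textrm{single}}/\sqrt{N_{\textrm{hit}}}\). The Python sketch below is idealised: it assumes uncorrelated hits with identical resolution. It also ignores the spread in hit multiplicity and the uncertainties from inter-counter calibration and track length, so it is not expected to reproduce the \(\sim 40\,\text {ps}\) MC figure.

```python
import math

def combined_resolution(sigmas):
    """Resolution of the inverse-variance weighted mean of independent hits."""
    return 1.0 / math.sqrt(sum(1.0 / s ** 2 for s in sigmas))

sigma_single = 100.0  # ps, illustrative single-counter value
for n_hit in (1, 4, 9, 16):
    print(n_hit, round(combined_resolution([sigma_single] * n_hit), 1))
```

With \(\sigma _{\textrm{single}} = 100\,\text {ps}\) and \(N_{\textrm{hit}} = 9\), this idealised estimate gives about 33 ps.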

Most counters (except those at low |z|, due to mechanical constraints) are connected to a laser source via optical fibres. The signals generated by sending synchronous light pulses with the laser to the counters are used to check detector stability and to calibrate the inter-counter time offsets as described in Sect. 5.4.

5.2 Operation

The R&D for the pTC single counter began in 2013 [26]. The full detector was built and commissioned in 2017 [25], when it was tested in the MEG II spectrometer under experimental conditions. Since then, it has been operational in every MEG II engineering run; these runs were conducted once a year.

During standard operation of the pTC, the circulating water is kept at a fixed temperature of \(9\,^{\circ }\text {C}\). This results in SiPM temperatures of \(11.0\text { to }14.5\,^{\circ }\text {C}\), depending on their position. The detector volume is continuously purged with dry air to keep the humidity low, so that the dew point is never reached.

The breakdown voltage of each SiPM array was extrapolated from I–V curves recorded at a fixed temperature \(({30}\,^{\circ }\text {C})\). Measurements at different temperatures yielded a breakdown-voltage temperature coefficient of \(24\,\text {mV}\,^{\circ }\text {C}^{-1}/\text {SiPM}\). The optimal operating voltage for each SiPM array was first determined in laboratory tests by measuring the time resolution as a function of overvoltage. It was then optimised at the beginning of each run to maximise the signal-to-noise ratio under experimental conditions. A typical value for a six-SiPM array is \({\sim 164}\,\text {V}\), i.e., an overvoltage of \(3.2\,\text {V}/\text {SiPM}\).
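The operating bias of a series-connected array is the sum, over its SiPMs, of the breakdown voltage plus the chosen overvoltage. The breakdown voltage is first corrected to the operating temperature with the \(24\,\text {mV}\,^{\circ }\text {C}^{-1}\) coefficient. In the Python sketch below, the linear temperature model is an assumption. The breakdown voltage of 24.5 V at \(30\,^{\circ }\text {C}\) and the \(12.5\,^{\circ }\text {C}\) operating temperature are hypothetical values, chosen only so that the result lands near the typical \(\sim 164\,\text {V}\).

```python
def array_bias(v_bd_ref, t_ref, t_op, overvoltage, n_sipm=6, k_temp=0.024):
    """Bias (V) for n_sipm series-connected SiPMs operated at t_op (deg C).

    v_bd_ref:    breakdown voltage per SiPM (V) measured at t_ref (deg C)
    k_temp:      breakdown temperature coefficient per SiPM (V/deg C)
    overvoltage: desired overvoltage per SiPM (V)
    """
    v_bd_op = v_bd_ref + k_temp * (t_op - t_ref)  # linear temperature model
    return n_sipm * (v_bd_op + overvoltage)

# Hypothetical SiPM: 24.5 V breakdown at 30 deg C, operated at 12.5 deg C.
v = array_bias(v_bd_ref=24.5, t_ref=30.0, t_op=12.5, overvoltage=3.2)
print(f"array bias: {v:.2f} V")
```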

Since the engineering run in 2017, the detector has been running very stably. Only a few channels (one in 2021, four in 2022) were found dead (i.e., gave no signal). The malfunctioning and dead counters were replaced with spares during the maintenance periods. The distribution of the pTC hit rate shown in Fig. 24 can be used as a diagnostic and monitoring tool for beam and background. For example, the asymmetry between upstream and downstream is due to the polarisation of the muon beam and to muon decays outside the target. The hit-rate distribution agrees well with the MC prediction, and no unexpected background is observed. Thanks to the fine segmentation, the hit rate of each counter is \({<75}\,\text {kHz}\), despite a total pTC hit rate of \({\sim 3}\,\text {MHz}\) at \(R_{\upmu } = {5 \times 10^{7}}\,\text {s}^{-1}\).

5.2.1 Issues and problems

During the first years of operation, some SiPMs detached from the scintillator surface. This was probably caused by mechanical stress on the SiPM board and poor adhesion of the optical resin to the polished surfaces. A small subset (a few dozen) of the counters was therefore removed from the detector and re-glued. Before re-gluing, small scratches were applied manually to the scintillator surfaces in contact with the SiPMs to improve adhesion.

An increase in the SiPM dark currents was observed, likely due to radiation damage in the Si bulk. The \({{\textrm{e}}^+}\) fluence in the 2021–2022 runs was \({\sim 1.5\times 10^{10}}~\text {cm}^{-2}\) in the region with the highest hit rate. Figure 25 shows the dark current of some channels as a function of the total number of muons stopped on target over the last two years. The increase varies between channels because of position-dependent \({{\textrm{e}}^+}\) hit rates and different operating overvoltages. On average, the rate of increase is \({9}\,{\text {nA}/(10^{12}\upmu ^+)}\) in 2021 and \({13}\,{\text {nA}/(10^{12}\upmu ^+)}\) in 2022. This corresponds to \(\sim 40{-}50\,\text {nA}\,\text {d}^{-1}\).
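Converting the dark-current slope from nA per \(10^{12}\) stopped muons to nA per day requires an assumed muon stopping rate and beam live fraction. The Python sketch below makes both explicit. Its default, a continuous beam at \(R_{\upmu } = 5\times 10^{7}\,\text {s}^{-1}\), is an assumption, so the output indicates the scale of the effect rather than reproducing the quoted daily figure.

```python
SECONDS_PER_DAY = 86400

def dark_current_per_day(slope_na_per_1e12, r_mu=5e7, live_fraction=1.0):
    """Convert a dark-current slope (nA per 1e12 stopped muons) to nA/day.

    r_mu and live_fraction are assumptions about the running conditions.
    """
    muons_per_day = r_mu * SECONDS_PER_DAY * live_fraction
    return slope_na_per_1e12 * muons_per_day / 1e12

for year, slope in ((2021, 9.0), (2022, 13.0)):
    print(year, round(dark_current_per_day(slope), 1), "nA/day")
```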

This effect has been studied in detail [27], and a clear correlation between the increase in dark current and the degradation of the time resolution has been demonstrated. Based on these studies, we expect the overall time resolution to degrade by \({\sim 13}\%\) after three years of MEG II running. Although this would not compromise the detector performance, we decided to refurbish the detector by replacing \(\sim 100\) counters.

5.3 Reconstruction algorithms

The \({{\textrm{e}}^+}\) impact time \(t_{\textrm{hit}}\) and the position along the long side of the scintillator \(w_{\textrm{hit}}\) for each counter are reconstructed from the timing of the SiPM signals \((t_{\mathrm {Ch1(2)}}\) for channel 1 (2) of the counter):

where \(L={120}\,\text {mm}\) is the length of the scintillator, \(O_{{\mathrm {Ch1(2)}}}\) is the time offset of the channel, and \(v_{\textrm{eff}}\) is the effective speed of light in the scintillator. \(O_{\mathrm {Ch1(2)}}\) and \(v_{\textrm{eff}}\) are counter-dependent parameters discussed in Sect. 5.4. After a shaping amplifier, the signal pulse has a rise time of \({\approx 1.4}\,\text {ns}\) and a full width at half maximum of \({\approx 2.8}\,\text {ns}\). The time \(t_{\mathrm {Ch1(2)}}\) is extracted from the pulse with the digital-constant-fraction method, which determines the time at which the signal crosses a given fraction of its total amplitude. In the offline analysis, several fractions were tested, and the one giving the best time resolution was selected for each channel (typically 25%). The local hit coordinates are transformed into global coordinates using the counter geometry (position and rotation).
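The hit-level reconstruction can be sketched in Python as follows. The first function implements a digital constant fraction. It subtracts a baseline taken from the first samples, finds the peak, and linearly interpolates the crossing of a given fraction of the amplitude on the leading edge. The second function applies the usual two-ended readout relations. The following are assumptions, and the functions are not the collaboration's code:

- channel 1 sits at \(w=-L/2\) and channel 2 at \(w=+L/2\);
- the baseline is estimated from a fixed window at the start of the waveform;
- times are in ns and lengths in mm.

```python
def dcf_time(samples, dt, fraction=0.25, n_baseline=10):
    """Digital constant-fraction time of a positive pulse (same units as dt).

    Baseline = mean of the first n_baseline samples; the crossing of
    fraction * amplitude on the leading edge is linearly interpolated.
    """
    baseline = sum(samples[:n_baseline]) / n_baseline
    pulse = [s - baseline for s in samples]
    i_peak = max(range(len(pulse)), key=pulse.__getitem__)
    threshold = fraction * pulse[i_peak]
    # Walk back from the peak to the first sample at or above threshold.
    i = i_peak
    while i > 0 and pulse[i - 1] >= threshold:
        i -= 1
    if i == 0:
        raise ValueError("no threshold crossing on the leading edge")
    x0, x1 = pulse[i - 1], pulse[i]  # x0 < threshold <= x1
    return (i - 1 + (threshold - x0) / (x1 - x0)) * dt

def hit_time_and_position(t1, t2, o1, o2, v_eff, length=120.0):
    """Hit time (ns) and position along the tile (mm) from both channels.

    Assumed convention: channel 1 at w = -L/2, channel 2 at w = +L/2.
    """
    c1, c2 = t1 - o1, t2 - o2                       # offset-corrected times
    t_hit = 0.5 * (c1 + c2) - length / (2.0 * v_eff)
    w_hit = 0.5 * v_eff * (c1 - c2)
    return t_hit, w_hit
```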

A positron usually leaves hits in multiple counters. These hits are grouped by a clustering algorithm using the hit timing and position information. The same \({{\textrm{e}}^+}\) can hit counters again after leaving the pTC region and travelling another half turn. Such hits are grouped into a separate cluster.
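The clustering step is not specified in detail here. A minimal Python sketch treats it as a connected-components search, assuming that two hits belong to the same cluster when they are close both in time and in counter index. The 2 ns time window and the two-counter spatial gap are hypothetical thresholds.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Hit:
    time: float  # ns
    iz: int      # counter index along z
    iphi: int    # counter index along phi

def cluster_hits(hits, max_dt=2.0, max_gap=2):
    """Group hits into connected components: two hits are linked when
    |dt| < max_dt and their counter indices differ by <= max_gap in z and phi."""
    parent = list(range(len(hits)))

    def find(i):  # union-find root with path halving
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i, a in enumerate(hits):
        for j in range(i + 1, len(hits)):
            b = hits[j]
            if (abs(a.time - b.time) < max_dt
                    and abs(a.iz - b.iz) <= max_gap
                    and abs(a.iphi - b.iphi) <= max_gap):
                parent[find(i)] = find(j)

    groups = {}
    for i, h in enumerate(hits):
        groups.setdefault(find(i), []).append(h)
    # Hits ordered in time within a cluster; clusters ordered by first hit.
    clusters = [sorted(g, key=lambda h: h.time) for g in groups.values()]
    return sorted(clusters, key=lambda c: c[0].time)

hits = [Hit(0.0, 0, 0), Hit(0.3, 1, 0), Hit(0.6, 2, 1),   # first turn
        Hit(1.5, 10, 5), Hit(1.8, 11, 5),                 # next turn, far in z
        Hit(180.0, 3, 3)]                                 # unrelated particle
print([len(c) for c in cluster_hits(hits)])
```

In this toy event, resembling the one in Fig. 26, the two groups that coincide within 2 ns but are far apart in counter index end up in different clusters. So does the hit 180 ns later.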

The highly granular counter configuration allows the \({{\textrm{e}}^+}\) trajectory to be estimated from the hit pattern of each cluster. A look-up table relating the hit pattern to the radial hit coordinate \((v_{\textrm{hit}})\) was created from the MC simulation. It is used to infer \(v_{\textrm{hit}}\) for each hit in the cluster. The cluster timing and position information is passed to the track-finding algorithm to provide the track time \(T_0\) and to seed the tracks in the CDCH.
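A look-up table of this kind can be sketched in Python as a dictionary keyed by the hit pattern. Here the pattern is assumed to be the sequence of counter-index offsets relative to the first hit. Each entry stores the MC-averaged \(v_{\textrm{hit}}\) for every hit position in the cluster. Both the key definition and the fallback value for unseen patterns are hypothetical choices.

```python
from collections import defaultdict

def pattern_key(cells):
    """Hit pattern as counter offsets relative to the first hit: ((dz, dphi), ...)."""
    z0, p0 = cells[0]
    return tuple((z - z0, p - p0) for z, p in cells)

def build_lut(mc_clusters):
    """mc_clusters: iterable of (cells, true_v), cells = [(iz, iphi), ...] in
    time order, true_v = simulated v_hit per hit.
    Returns {pattern: [mean v_hit per hit position]}."""
    sums = {}
    counts = defaultdict(int)
    for cells, true_v in mc_clusters:
        key = pattern_key(cells)
        acc = sums.setdefault(key, [0.0] * len(cells))
        for k, v in enumerate(true_v):
            acc[k] += v
        counts[key] += 1
    return {key: [s / counts[key] for s in acc] for key, acc in sums.items()}

def lookup_v(lut, cells, default=0.0):
    """Per-hit v_hit estimate for a data cluster; default for unseen patterns."""
    return lut.get(pattern_key(cells), [default] * len(cells))
```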

The cluster information is then fed into a DAF to fit the trajectory within the cluster. Two track-fitting procedures are applied to each cluster. The first is pTC self-tracking, which uses only the pTC cluster information and serves for calibration and performance evaluation. The second combines the track reconstructed with the CDCH with the pTC hits. First, a matching test is made between the CDCH tracks and the pTC clusters. Then, for each matched combination, the track is extended to the last pTC hit in the cluster. If multiple clusters are matched with a single CDCH track, the first one along the trajectory is adopted. During the DAF annealing process, temporally or spatially inconsistent hits are removed. This filtering eliminates hits from other particles. It also eliminates hits from the same \({{\textrm{e}}^+}\) making small turns after the main passage, which are the main cause of the tail in the pTC timing response.
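The hit rejection performed during annealing can be illustrated with the standard DAF assignment weight, \(w = e^{-\chi ^2/2T}/(e^{-\chi ^2/2T} + e^{-\chi ^2_{\textrm{cut}}/2T})\), evaluated at a decreasing temperature \(T\). The Python sketch below is a deliberately simplified one-dimensional toy. It applies these weights to TOF-corrected hit times around a weighted mean rather than to a Kalman-filtered track. The annealing schedule, \(\chi ^2_{\textrm{cut}}\) and the hit resolution are hypothetical.

```python
import math

def daf_weight(chi2, temperature, chi2_cut):
    """DAF assignment weight of one hit at a given annealing temperature."""
    p_hit = math.exp(-chi2 / (2.0 * temperature))
    p_cut = math.exp(-chi2_cut / (2.0 * temperature))
    return p_hit / (p_hit + p_cut)

def annealed_mean(times, sigma, schedule=(81.0, 9.0, 4.0, 1.0), chi2_cut=9.0):
    """1D toy: weighted mean of TOF-corrected hit times (ns) whose weights
    are recomputed at each annealing temperature; returns (mean, weights)."""
    weights = [1.0] * len(times)
    for temperature in schedule:
        est = sum(w * t for w, t in zip(weights, times)) / sum(weights)
        weights = [daf_weight(((t - est) / sigma) ** 2, temperature, chi2_cut)
                   for t in times]
    est = sum(w * t for w, t in zip(weights, times)) / sum(weights)
    return est, weights

# Four consistent hits and one hit delayed by 1.5 ns (e.g. a later small turn).
est, w = annealed_mean([0.0, 0.05, -0.04, 0.02, 1.5], sigma=0.1)
print(round(est, 4), [round(x, 3) for x in w])
```

In this example, the weight of the delayed hit drops essentially to zero by the end of the schedule. The estimate converges to the mean of the four consistent hits.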

Figure 26 illustrates the clustering and tracking processes with an example event observed in 2021 at \(R_{\upmu } = {5 \times 10^{7}}\,\text {s}^{-1}\).

An example of pTC hits in an event. a The \(\phi \)–z view of the upstream sector. Counters with hits are shown in colour; different colours indicate different clusters. The yellow asterisks show the reconstructed hit positions and the red star shows the point matched with the track from the CDCH. The first (orange) and second (green) clusters coincide within \({2}\,\text {ns}\) and thus originate from a single \({{\textrm{e}}^+}\) in different turns; they are separated into different clusters by the spatial information. The third (magenta) cluster originates from another particle well separated in time \((+{180}\,\text {ns})\). b A 3D view of the first cluster. The cyan squares show the counter planes (arbitrary size), the yellow ellipses show the reconstructed hits, and the red curve shows the fitted track. The seventh hit is incompatible with the others, most likely because it comes from a secondary particle produced by the main \({{\textrm{e}}^+}\). Its weight becomes zero in the DAF annealing process.

The timing information in a cluster is combined into the impact time of the first counter:

where \(N_{\textrm{hit}}\) is the number of hits in the cluster, \(t_{\textrm{hit},i}\) is the reconstructed hit time of the i-th hit and \(f_{1,i}\) is the time of flight from the first hit to the i-th hit calculated from the length of the fitted track. Finally, the time of the \({{\textrm{e}}^+}\) emission at the target is calculated as,

where \(f_{0,1}\) is the time of flight from the target to the first hit calculated from the track length (see Sect. 4.4.2).
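The two combination steps above can be sketched in Python. The sketch assumes an unweighted average of the hit times, each shifted back to the first counter by its time of flight \(f_{1,i}\) (with \(f_{1,1}=0\)), followed by subtraction of \(f_{0,1}\). The function names are hypothetical.

```python
def cluster_time(hit_times, tof_from_first):
    """Unweighted mean of hit times, each moved back to the first counter (ns)."""
    if not hit_times or len(hit_times) != len(tof_from_first):
        raise ValueError("need one time of flight per hit")
    return sum(t - f for t, f in zip(hit_times, tof_from_first)) / len(hit_times)

def emission_time(hit_times, tof_from_first, tof_target_to_first):
    """Positron emission time at the target: cluster time minus f_{0,1} (ns)."""
    return cluster_time(hit_times, tof_from_first) - tof_target_to_first

# Three hits along the track; f_{1,i} from the fitted track length.
t_e = emission_time([5.00, 5.21, 5.39], [0.0, 0.2, 0.4], tof_target_to_first=4.0)
print(round(t_e, 3))
```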

5.4 Calibration and alignment

The measured signal time of each channel contains its own time offset \((O_{\mathrm {Ch1(2)}})\). The offsets arise from the different lengths of the readout chains, the variety of scintillator and SiPM responses, and the differently optimised parameters of the digital-constant-fraction method. They cause misaligned hit times (see Eq. (5)) and positions (see Eq. (6)) across the individual counters. As Eqs. (5) and (6) show, it is more effective to calibrate the linear combinations of \(O_{\mathrm {Ch1(2)}}\) that enter them than to calibrate the offsets separately.

To align the local \(w_{\textrm{hit}}\) coordinate, two parameters are calibrated using the \(w_{\textrm{hit}}\) distribution obtained with Michel positrons: \(v_{\textrm{eff}}\) and the intra-counter offset \(O_{\textrm{intra}}\), i.e. the combination of \(O_{\mathrm {Ch1}}\) and \(O_{\mathrm {Ch2}}\) that shifts \(w_{\textrm{hit}}\). Since the scintillator length L is precisely controlled \(({\mathcal {O}}({10}\,{\upmu \text {m}}))\), we use it as a physical boundary condition: the centre of the distribution determines \(O_{\textrm{intra}}\) and its width determines \(v_{\textrm{eff}}\). The precision of this method was evaluated to be \({1.1}\,\text {mm}\), much better than the \(w_{\textrm{hit}}\) resolution of \(\sim {10}\,\text {mm}\).
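Under uniform illumination of a tile by Michel positrons, the raw time difference \(t_{\mathrm {Ch1}}-t_{\mathrm {Ch2}}\) is uniformly distributed over an interval of width \(2L/v_{\textrm{eff}}\), centred on the offset difference. Its variance is therefore \(L^2/(3v_{\textrm{eff}}^2)\). The Python sketch below uses this moment-based relation. It is a simplified stand-in that may differ from the procedure actually used, which exploits the tile edges as a boundary condition. The following are assumptions:

- channel 1 sits at \(w=-L/2\);
- \(O_{\mathrm {Ch1}}-O_{\mathrm {Ch2}}\) stands in for the offset combination;
- the speed of 140 mm/ns and the offset of 0.35 ns in the synthetic example are hypothetical values.

```python
import math
import random
import statistics

def calibrate_counter(dt_samples, length=120.0, sigma_res=0.0):
    """Estimate (v_eff in mm/ns, offset difference in ns) from t_Ch1 - t_Ch2.

    Assumes uniform illumination, so t_Ch1 - t_Ch2 is uniform on
    [delta_o - L/v_eff, delta_o + L/v_eff]; sigma_res (ns) is an optional
    Gaussian timing-resolution term removed in quadrature.
    """
    centre = statistics.fmean(dt_samples)
    var = statistics.pvariance(dt_samples, mu=centre) - sigma_res ** 2
    if var <= 0:
        raise ValueError("resolution term exceeds the observed spread")
    v_eff = length / math.sqrt(3.0 * var)
    return v_eff, centre

# Synthetic Michel-like sample for one tile (hypothetical true parameters).
rng = random.Random(1)
true_v, true_do = 140.0, 0.35
dts = [2.0 * rng.uniform(-60.0, 60.0) / true_v + true_do for _ in range(200_000)]
v_fit, do_fit = calibrate_counter(dts)
print(round(v_fit, 1), round(do_fit, 3))
```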