Abstract

We present a new supervised deep-learning approach to the problem of the extraction of smeared spectral densities from Euclidean lattice correlators. A distinctive feature of our method is a model-independent training strategy that we implement by parametrizing the training sets over a functional space spanned by Chebyshev polynomials. The other distinctive feature is a reliable estimate of the systematic uncertainties that we achieve by introducing several ensembles of machines, the broad audience of the title. By training an ensemble of machines with the same number of neurons over training sets of fixed dimensions and complexity, we manage to provide a reliable estimate of the systematic errors by studying numerically the asymptotic limits of infinitely large networks and training sets. The method has been validated on a very large set of random mock data and also in the case of lattice QCD data. We extracted the strange-strange connected contribution to the smeared R-ratio from a lattice QCD correlator produced by the ETM Collaboration and compared the results of the new method with the ones previously obtained with the HLT method by finding a remarkably good agreement between the two totally unrelated approaches.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The problem of the extraction of hadronic spectral densities from Euclidean correlators, computed from numerical lattice QCD simulations, has attracted a lot of attention since many years (see Refs. [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30], the works on the subject of which we are aware of, and Refs. [31, 32] for recent reviews). At zero temperature, the theoretical and phenomenological importance of hadronic spectral densities, strongly emphasized in the context of lattice field theory in Refs. [1, 11, 13, 14, 17,18,19], is associated with the fact that from their knowledge it is possible to extract all the information needed to study the scattering of hadrons and, more generally, their interactions.

From the mathematical perspective, the problem of the extraction of spectral densities from lattice correlators is equivalent to that of an inverse Laplace-transform operation, to be performed numerically by starting from a discrete and finite set of noisy input data. This is a notoriously ill-posed numerical problem that, in the case of lattice field theory correlators, gets even more complicated because lattice simulations have necessarily to be performed on finite volumes where the spectral densities are badly-behaving distributions.

In Ref. [14], together with M. Hansen and A. Lupo, one of the authors of the present paper proposed a method to cope with the problem of the extraction of spectral densities from lattice correlators that allows to take into account the fact that distributions have to be smeared with sufficiently well-behaved test functions. Once smeared, finite volume spectral densities become numerically manageable and the problem of taking their infinite volume limit is mathematically well defined. The method of Ref. [14] (HLT method in short) has been further refined in Ref. [21] where it has been validated by performing very stringent tests within the two-dimensional O(3) non-linear \(\sigma \)-model.

In this paper we present a new method for the extraction of smeared spectral densities from lattice correlators that is based on a supervised deep-learning approach.

The idea of using machine-learning techniques to address the problem of the extraction of spectral densities from lattice correlators is certainly not original (see e.g. Refs. [15, 16, 22,23,24,25,26,27,28,29]). The great potential of properly-trained deep neural networks in addressing this problem is pretty evident from the previous works on the subject. These findings strongly motivated us to develop an approach that can be used to obtain trustworthy theoretical predictions. To this end we had to address the following two pivotal questions

-

1.

is it possible to devise a model independent training strategy?

-

2.

if such a strategy is found, is it then possible to quantify reliably, together with the statistical errors, also the unavoidable systematic uncertainties?

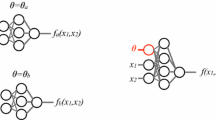

By introducing a discrete functional-basis, with elements \(B_n(E)\), that is dense in the space of square-integrable functions f(E) in the interval \([E_0,\infty )\) with \(E_0>0\), any such function can exactly be represented as \(f(E)=\sum _{n=0}^\infty c_n B_n(E)\). With an infinite number of basis functions (\(N_\textrm{b}=\infty \)) and by randomly selecting an infinite number (\(N_\rho =\infty \)) of coefficient vectors \({\textbf{c}}=(c_0,\ldots , c_{N_\textrm{b}})\), one can get any possible spectral density. This is the situation represented by the filled blue disk. If the number of basis functions \(N_\textrm{b}\) and the number of randomly extracted spectral densities \(N_\rho \) are both finite one has a training set that is finite and that also depends on \(N_\textrm{b}\). This is the situation represented in the first disk on the left. The other two disks schematically represent the situations in which either \(N_\textrm{b}\) or \(N_\rho \) is infinite

The importance of the first question can hardly be underestimated. Under the working assumption, supported by the so-called universal reconstruction theorems (see Refs. [33,34,35]), that a sufficiently large neural network can perform any task, limiting either the size of the network or the information to which it is exposed during the training process means, in fact, limiting its ability to solve the problem in full generality. Addressing the second question makes the difference between providing a possibly efficient but qualitative solution to the problem and providing a scientific numerical tool to be used in order to derive theoretical predictions for phenomenological analyses.

We generate several training sets \({\mathcal {T}}_\sigma (N_\textrm{b},N_\rho )\) built by considering randomly chosen spectral densities. These are obtained by choosing \(N_\rho \) random coefficients vectors with \(N_\textrm{b}\) entries, see Fig. 1. For each spectral density \(\rho (E)\) we build the associated correlator C(t) and smeared spectral density \({\hat{\rho }}_\sigma (E)\), where \(\sigma \) is the smearing parameter. We then distort the correlator C(t), by using the information provided by the statistical variance of the lattice correlator (the one we are going to analyse at the end of the trainings), and obtain the input–output pair \((C_\textrm{noisy}(t),{\hat{\rho }}_\sigma (E))\) that we add to \({\mathcal {T}}_\sigma (N_\textrm{b},N_\rho )\). We then implement different neural networks with \(N_\textrm{n}\) neurons and at fixed \(\textbf{N}=(N_\textrm{n},N_\textrm{b},N_{\rho })\) we introduce an ensemble of machines with \(N_\textrm{r}\) replicas. Each machine \(r=1,\ldots ,N_\textrm{r}\) belonging to the ensemble has the same architecture and, before the training, differs from the other replicas for the initialization parameters. All the replicas are then trained over \({\mathcal {T}}_\sigma (N_\textrm{b},N_\rho )\) and, at the end of the training process, each replica will give a different answer depending upon \(\textbf{N}\)

Flowchart illustrating the three-step procedure that, after the training, we use to extract the final result. Here C(t) represents the input lattice correlator that, coming from a Monte Carlo simulation, is affected by statistical noise. We call \(C_c(t)\) the c-th bootstrap sample (or jackknife bin) of the lattice correlator with \(c=1,\ldots , N_\textrm{c}\). In the first step, \(C_c(t)\) is fed to all the trained neural networks belonging to the ensemble at fixed \(\textbf{N}\) and the corresponding answers \({\hat{\rho }}_\sigma ^\textrm{pred}(E,\textbf{N},c,r)\) are collected. In the second step, by combining in quadrature the widths of the distributions of the answers as a function of the index c (\(\Delta _\sigma ^\textrm{latt}(E,\textbf{N})\)) and of the index r (\(\Delta _\sigma ^\textrm{net}(E,\textbf{N})\)) we estimate the error \(\Delta _\sigma ^\textrm{stat}(E,\textbf{N})\)) at fixed \(\textbf{N}\). At the end of this step we are left with a collection of results \({\hat{\rho }}_\sigma ^\textrm{pred}(E,\textbf{N})\pm \Delta _\sigma ^\textrm{stat}(E,\textbf{N})\). In the third and last step, the limits \(\textbf{N}\mapsto \infty \) are studied numerically and an unbiased estimate of \({\hat{\rho }}_\sigma ^\textrm{pred}(E)\) and of its error \(\Delta _\sigma ^\textrm{tot}(E)\), with the latter also taking into account the unavoidable systematics associated with these limits, is finally obtained

In order to address these two questions, the method that we propose in this paper has been built on two pillars

-

1.

the introduction of a functional-basis to parametrize the correlators and the smeared spectral densities of the training sets in a model independent way;

-

2.

the introduction of the ensemble of machines, the broad audience mentioned in the title, to estimate the systematic errors.Footnote 1

A bird-eye view of the proposed strategy is given in Figs. 1, 2 and in Fig. 3. The method is validated by using both mock and true lattice QCD data. In the case of mock data the exact result is known and the validation test is quite stringent. In the case of true lattice QCD data the results obtained with the new method are validated by comparison with the results obtained with the HLT method.

The plan of the paper is as follows. In Sect. 2 we introduce and discuss the main aspects of the problem. In Sect. 3 we illustrate the numerical setup used to obtain the results presented in the following sections. In Sect. 4 we describe the construction of the training sets and the proposed model-independent training strategy. In Sect. 5 we illustrate the procedure that we use to extract predictions from our ensembles of trained machines and present the results of a large number of validation tests performed with random mock data. Further validation tests, performed by using mock data generated by starting from physically inspired models, are presented in Sect. 6. In Sect. 7 we present our results in the case of lattice QCD data and the comparison with the HLT method. We draw our conclusions in Sect. 8 and discuss some technical details in the two appendixes.

2 Theoretical framework

The problem that we want to solve is the extraction of the infinite-volume spectral density \(\rho (E)\) from the Euclidean correlators

computed numerically in lattice simulations. These are performed by introducing a finite spatial volume \(L^3\), a finite Euclidean temporal direction T and by discretizing space-time,

where a is the so-called lattice spacing and \((\tau ,\textbf{n})\) are the integer space-time coordinates in units of a. In order to obtain the infinite-volume spectral density one has to perform different lattice simulations, by progressively increasing L and/or T, and then study numerically the \(L\mapsto \infty \) and \(T\mapsto \infty \) limits. In the \(T\mapsto \infty \) limit the basis functions \(b_T(t,E)\) become decaying exponentials and the correlator is given by

where \(\rho _{L}(E)=0\) for \(E<E_0\) and the problem is that of performing a numerical inverse Laplace-transform operation, by starting from a discrete and finite set of noisy input data. This is a classical numerical problem, arising in many research fields, and has been thoroughly studied (see e.g. Refs. [37, 38] for textbooks discussing the subject from a machine-learning perspective). The problem, as we are now going to discuss, is particularly challenging from the numerical point of view and becomes even more challenging and delicate in our Quantum Field Theory (QFT) context because, according to Wightman’s axioms, QFT spectral densities live in the space of tempered distributions and, therefore, cannot in general be considered smooth and well behaved functions.

Before discussing though the aspects of the problem that are peculiar to our QFT context, it is instructive to first review the general aspects of the numerical inverse Laplace-transform problem that, in fact, is ill-posed in the sense of Hadamard. To this end, we start by considering the correlator in the infinite L and T limits,

and we assume that our knowledge of C(t) is limited to the discrete and finite set of times \(t=a\tau \). Moreover we assume that the input data are affected by numerical and/or statistical errors that we call \(\Delta (a\tau )\).

The main point to be noticed is that, in general, the spectral density \(\rho (E)\) contains an infinite amount of information that cannot be extracted from the limited and noisy information contained into the input data \(C(a\tau )\). As a consequence, in any numerical approach to the extraction of \(\rho (E)\) a discretization of Eq. (4) has to be introduced. Once a strategy to discretize the problem has been implemented, the resulting spectral density has then to be interpreted as a “filtered” or (as is more natural to call it in our context) smeared version of the exact spectral density,

where \(K(E,\omega )\) is the so-called smearing kernel, explicitly or implicitly introduced within the chosen numerical approach.

There are two main strategies (and many variants of them) to discretize Eq. (4). The one that we will adopt in this paper has been introduced and pioneered by Backus and Gilbert [39] and is based on the introduction of a smearing kernel in the first place. We will call this the “smearing” discretization approach. The other approach, that is more frequently used in the literature and that we will therefore call the “standard” one, is built on the assumption that spectral densities are smooth and well-behaved functions. Before discussing the smearing approach we briefly review the standard one, by putting the emphasis on the fact that also in this case a smearing kernel is implicitly introduced.

In the standard discretization approach the infinite-volume correlator is approximated as a Riemann sum,

under the assumption that the infinite-volume spectral density is sufficiently regular to have

for \(N_E\) sufficiently large and \(\sigma \) sufficiently small. By introducing the “veilbein matrix”

and the associated “metric matrix” in energy space,

Eq. (6) is then solved,

By using the previous expressions we can now explain why the problem is particularly challenging and in which sense it is numerically ill-posed.

On the numerical side, the metric matrix \({\hat{G}}\) is very badly conditioned in the limit of large \(N_E\) and small \(\sigma \). Consequently, the coefficients \(g_\tau (E_n)\) become huge in magnitude and oscillating in sign in this limit and even a tiny distortion of the input data gets enormously amplified,

In this sense the numerical solution becomes unstable and the problem ill-posed.

Another important observation concerning Eqs. (10), usually left implicit, concerns the interpretation of \(\hat{\rho }(E_n)\) as a smeared spectral density. By introducing the smearing kernel

and by noticing that, as a matter of fact, the spectral density has to be obtained by using the correlator \(C(a\tau )\) (and not its approximation \({\hat{C}}(a\tau )\), to be considered just as a theoretical device introduced in order to formalize the problem), we have

Consistency would require that \(K(E_n,\omega )=\delta (E_n-\omega )\) but this cannot happen at finite T and/or \(N_E\). In fact \(K(E_n,\omega )\) can be considered a numerical approximation of \(\delta (E_n-\omega )\) that, as can easily be understood by noticing that

has an intrinsic energy-resolution proportional to the discretization interval \(\sigma \) of the Riemann’s sum. A numerical study of \(K(E_n,\omega )\) at fixed \(E_n\) reveals that for \(\omega \ge E_n\) the kernel behaves smoothly while it oscillates wildly for \(\omega < E_n\) and small values of \(\sigma \). A numerical example is provided in Fig. 4.

Top panel: Smearing kernel of Eq. (12) at \(E_n=5\) for three values of \(\sigma \). The reconstruction is performed by setting \(E_0=1\) and \(E_\textrm{max}=10\) as UV cutoff in Eq. (5) and by working in lattice units with \(a=1\). The kernel is smooth for \(\omega \ge E_n\) while it presents huge oscillations for \(\omega <E_n\). In the case \(\sigma =0.1\) these oscillations range from \(-10^{126}\) to \(+10^{121}\). Bottom panel: Corresponding coefficients \(g_\tau (E_n)\) (see Eq. 10) in absolute value for the first 20 discrete times. Extended-precision arithmetic is mandatory to invert the matrix \(\hat{G}_{nm}\). This test, in which \(\det (\hat{G})={\mathcal {O}}\big (10^{-15700}\big )\) for \(\sigma =0.1\), has been performed by using 600-digits arithmetic

Once the fact that a smearing operation is unavoidable in any numerical approach to the inverse Laplace-transform problem has been recognized, the smearing discretization approach that we are now going to discuss appears more natural. Indeed, the starting point of the smearing approach is precisely the introduction of a smearing kernel and this allows to cope with the problem also in the case, relevant for our QFT applications, in which spectral densities are distributions.

By reversing the logic of the standard approach, that led us to Eq. (12), in the smearing approach the problem is discretized by representing the possible smearing kernels as functionals of the coefficients \(g_\tau \) according to

Notice that we are now considering the correlator \(C_{LT}(a\tau )\) at finite T and L and this allows to analyse the results of a single lattice simulation. The main observation is that in the \(T\mapsto \infty \) limit any target kernel \(K_\textrm{target}(E,\omega )\) such that

can exactly be represented as a linear combination of the \(b_\infty (a\tau ,\omega )=\exp (-a\omega \tau )\) basis functions (that are indeed dense in the functional-space \(L_2[E_0,\infty ]\) of square-integrable functions). With a finite number of lattice times, the smearing kernel is defined as the best possible approximation of \(K_\textrm{target}(E,\omega )\), i.e. the coefficients \(g_\tau (E)\) are determined by the condition

Once the coefficients are given, the smeared spectral density is readily computed by relying on the linearity of the problem,

where now

In the smearing approach one has

Moreover, provided that \(\rho (E)\) exists, it can be obtained by choosing as the target kernel a smooth approximation to \(\delta (E-\omega )\) depending upon a resolution parameter \(\sigma \), e.g.

and by considering the limits

in the specified order. When \(\sigma \) is very small, the coefficients \(g_\tau (E)\) determined by using Eq. (17) become gigantic in magnitude and oscillating in sign, as in the case of the coefficients obtained by using Eq. (10). In fact the numerical problem is ill-posed independently of the approach used to discretize it.

We now come to the aspects of the problem that are peculiar to our QFT context. Hadronic spectral densities

are the Fourier transforms of Wightman’s functions in Minkowski space,

where \({\hat{P}}=({\hat{H}}, {\hat{\textbf{P}}})\) is the QCD four-momentum operator and the \({\hat{O}}_i\)’s are generic hadronic operators. According to Wightman’s axioms, vacuum expectation values of field operators are tempered distributions. This implies that also their Fourier transforms, the spectral densities, are tempered distributions in energy–momentum space. It is therefore impossible, in general, to compute numerically a spectral density. On the other hand, it is always possible to compute smeared spectral densities,

where the kernels \(K_i(p)\) are Schwartz functions.

In this paper, to simplify the discussion and the notation, we focus on the dependence upon a single space-time variable,

where the operators \({\hat{O}}_I\) and \({\hat{O}}_F\) might also depend upon other coordinates. The spectral density associated with W(x) is given by

and, to further simplify the notation, we don’t show explicitly the dependence of \(\rho (E)\) w.r.t. the fixed spatial momentum \(\textbf{p}\). The very same spectral density appears in the two-point Euclidean correlator

for positive Euclidean time t. In the last line of the previous equation we have used the fact that, because of the presence of the energy Dirac-delta function appearing in Eq. (27), the spectral density vanishes for \(E<E_0\) where \(E_0\) is the smallest energy that a state propagating between the two hadronic operators \({\hat{O}}_I\) and \({\hat{O}}_F\) can have.

On a finite volume the spectrum of the Hamiltonian is discrete and, consequently, the finite-volume spectral density \(\rho _L(E)\) becomes a particularly wild distribution,

the sum of isolated Dirac-delta peaks in correspondence of the eigenvalues \(E_n(L)\) of the finite volume Hamiltonian. By using the previous expression one has that

and this explains why we have discussed the standard approach to the discretization of the inverse Laplace-transform problem by first taking the infinite-volume limit. Indeed if the Riemann’s discretization of Eq. (6) is applied to \(C_L(a\tau )\) and all the \(E_m\)’s are different from all the \(E_n(L)\)’s one gets \({\hat{C}}_L(a\tau )=0\)!

On the one hand, also in infinite volume, spectral densities have to be handled with the care required to cope with tempered distributions and this excludes the option of using the standard approach to discretize the problem in the first place. On the other hand, in some specific cases it might be conceivable to assume that the infinite volume spectral density is sufficiently smooth to attempt using the standard discretization approach. This requires that the infinite-volume limit of the correlator has been numerically taken and that the associated systematic uncertainty has been properly quantified (a non-trivial numerical task at small Euclidean times where the errors on the lattice correlators are tiny).

The smearing discretization approach can be used either in finite- and infinite-volume and the associated systematic errors can reliably be quantified by studying numerically the limits of Eq. (22). For this reason, as in the case of the HLT method of Ref. [14], the target of the machine-learning method that we are now going to discuss in details is the extraction of smeared spectral densities.

3 Numerical setup

In order to implement the strategy sketched in Figs. 1, 2 and 3 and discussed in details in the following sections, the numerical setup needs to be specified. In this section we describe the data layout that we used to represent the input correlators and the output smeared spectral densities and then provide the details of the architectures of the neural networks that we used in our study.

3.1 Data layout

In this work we have considered both mock and real lattice QCD data and have chosen the algorithmic parameters in order to be able to extract the hadronic spectral density from the lattice QCD correlator described in Sect. 7. Since in the case of the lattice QCD correlator the basis function \(b_T(t,E)\) (see Eq. (1)) is given by

with \(T=64 a\), we considered the same setup also in the case of mock data. These are built by starting from a model unsmeared spectral density \(\rho (E)\) and by computing the associated correlator according to

and the associated smeared spectral density according to

Since in the case of the lattice QCD spectral density, in order to compare the results obtained with the proposed new method with the ones previously obtained in Ref. [40], we considered a Gaussian smearing kernel, also in the case of mock data we made the choice

We stress that there is no algorithmic reason behind the choice of the Gaussian as the smearing kernel and that any other kernel can easily be implemented within the proposed strategy.

Among the many computational paradigms available within the machine-learning framework, we opted for the most direct one and represented both the correlator and the smeared spectral density as finite dimensional vectors, that we used respectively as input and output of our neural networks. More precisely, the dimension of the input correlator vector has been fixed to \(N_T=64\), coinciding with the available number of Euclidean lattice times in the case of the lattice QCD correlator. The inputs of the neural networks are thus the 64-components vectors \(\textbf{C}=\{C(a),C(2a),\ldots ,C(64a)\}\). The output vectors are instead given by \({\hat{\mathbf {\rho }}}_{\mathbf {\sigma }}=\{{\hat{\rho }}_\sigma (E_\textrm{min}),\ldots ,{\hat{\rho }}_ \sigma (E_\textrm{max})\}\). As in Ref. [40], we have chosen to measure energies in units of the muon mass, \(m_\mu =0.10566\) GeV, and set \(E_\textrm{min}=m_\mu \) and \(E_\textrm{max}=24m_\mu \). The interval \([E_\textrm{min},E_\textrm{max}]\) has been discretized in steps of size \(m_\mu /2\). With these choices our output smeared spectral densities are the vectors \({\hat{\mathbf {\rho }}}_{\mathbf {\sigma }}\) with \(N_E=47\) elements corresponding to energies ranging from about 100 MeV to 2.5 GeV.

The noise-to-signal ratio in (generic) lattice QCD correlators increases exponentially at large Euclidean times. For this reason it might be numerically convenient to choose \(N_T<T/a\) and discard the correlator at large times where the noise-to-signal ratio is bigger than one. According to our experience with the HLT method, that inherits the original Backus–Gilbert regularization mechanism, it is numerically inconvenient to discard part of the information available on the correlator provided that the information contained in the noise is used to conveniently regularize the numerical problem. Also in this new method, as we are going to explain in Sect. 4.2, we use the information contained in the noise of the lattice correlator during the training process and this, as shown in Appendix B, allows us to conveniently use all the available information on the lattice correlator, i.e. to set \(N_T=T/a\), in order to extract the smeared spectral density.

We treat \(\sigma \), the width of the smearing Gaussian, as a fixed parameter by including in the corresponding training sets only spectral functions that are smeared with the chosen value of \(\sigma \). This is a rather demanding numerical strategy because in order to change \(\sigma \) the neural networks have to be trained anew, by replacing the smeared spectral densities in the training sets with those corresponding to the new value of \(\sigma \). Architectures that give the possibility to take into account a variable input parameter, and a corresponding variable output at fixed input vector, have been extensively studied in the machine learning literature and we leave a numerical investigation of this option to future work on the subject. In this work we considered two different values, \(\sigma =0.44\) GeV and \(\sigma =0.63\) GeV, that correspond respectively to the smallest and largest values used in Ref. [40].

3.2 Architectures

By reading the discussion on the data layout presented in the previous subsection from the machine-learning point of view, we are in fact implementing neural networks to solve a \({\mathbb {R}}^{N_T}\mapsto {\mathbb {R}}^{N_E}\) regression problem with \(N_T=64\) and \(N_E=47\) which, from now on, fix the dimension of the input and output layers of the neural networks.

There are no general rules to prefer a given network architecture among the different possibilities that have been considered within the machine-learning literature and it is common practice to make the choice by taking into account the details of the problem at hand. For our analysis, after having performed a comparative study at (almost) fixed number of parameters of the so-called Multilayer perceptron and convolutional neural networks, we used feed-forward convolutional neural networks based on the LeNet architecture introduced in Ref. [41].

We studied in details the dependence of the output of the neural networks upon their size \(N_\textrm{n}\) and, to this end, we implemented three architectures that we called arcS, arcM and arcL. These architectures, described in full details in Tables 1, 2 and 3, differ only for the number of maps in the convolutional layers. The number of maps are chosen so that the number of parameters of arcS:arcM:arcL are approximately in the proportion 1 : 2 : 3. For the implementation and the training we employed both Keras [42] and TensorFlow [43].

4 Model independent training

In the supervised deep-learning framework a neural network is trained over a training set which is representative of the problem that has to be solved. In our case the inputs to each neural network are the correlators \(\textbf{C}\) and the target outputs are the associated smeared spectral densities \({\hat{\mathbf {\rho }}}_{\mathbf {\sigma }}\). As discussed in the Introduction, our main goal is to devise a model-independent training strategy. To this end, the challenge is that of building a training set which contains enough variability so that, once trained, the network is able to provide the correct answer, within the quoted errors, for any possible input correlator.

As a matter of fact, the situation in which the neural network can exactly reconstruct any possible function is merely ideal. That would be possible only in absence of errors on the input data and with a neural network with an infinite number of neurons, trained on an infinitely large and complex training set. This is obviously impossible and in fact our goal is the realistic task of getting an output for the smeared spectral density as close as possible to the exact one by trading the unavoidable limited abilities of the neural network with a reliable estimate of the systematic error. In order to face this challenge we used the algorithmic strategy described in Figs. 1, 2 and in Fig. 3. In our strategy,

-

the fact that the network cannot be infinitely large is parametrized by the fact that \(N_\textrm{n}\) (the number of neurons) is finite;

-

the fact that during the training a network cannot be exposed to any possible spectral density is parametrized by the fact that \(N_\textrm{b}\) (the number of basis functions) and \(N_\rho \) (the number of spectral densities to which a network is exposed during the training) are finite (see Fig. 1);

-

the fact that at fixed

$$\begin{aligned} \textbf{N}=(N_\textrm{n},N_\textrm{b},N_{\rho }) \end{aligned}$$(35)the answer of a network cannot be exact, and therefore has to be associated with an error, is taken into account by introducing an ensemble of machines, with \(N_\textrm{r}\) replicas, and by estimating this error by studying the distribution of the different \(N_\textrm{r}\) answers in the \(N_\textrm{r}\mapsto \infty \) limit (see Fig. 2);

-

once the network (and statistical) errors at fixed \(\textbf{N}\) are given, we can study numerically the \(\textbf{N}\mapsto \infty \) limits and also quantify, reliably, the additional systematic errors associated with these unavoidable extrapolations (see Fig. 3).

We are now going to provide the details concerning the choice of the functional basis that we used to parametrize the spectral densities and to build our training sets.

4.1 The functional-basis

In our strategy we envisage studying numerically the limit \(N_\textrm{b}\mapsto \infty \) and, therefore, provided that the systematic errors associated with this extrapolation are properly taken into account, there is no reason to prefer a particular basis w.r.t. any other. For our numerical study we used the Chebyshev polynomials of the first kind as basis functions (see for example Ref. [44]).

The Chebyshev polynomials \(T_n(x)\) are defined for \(x \in [-1,1]\) and satisfy the orthogonality relations

In order to use them as a basis for the spectral densities that live in the energy domain \(E\in [E_0,\infty )\), we introduced the exponential map

and set

Notice that the subtraction of the constant term \(T_n(x(E_0))\) has been introduced in order to be able to cope with the fact that hadronic spectral densities vanish below a threshold energy \(E_0\ge 0\) that we consider an unknown of the problem. With this choice, the unsmeared spectral densities that we use to build our training sets are written as

and vanish identically for \(E\le E_0\). Once \(E_0\) and the coefficients \(c_n\) that define \(\rho (E;N_\textrm{b})\) are given, the correlator and the smeared spectral density associated with \(\rho (E;N_\textrm{b})\) can be calculated by using Eqs. (32) and (33).Footnote 2

Examples of unsmeared spectral densities generated according to Eq. (39) using the Chebyshev polynomial as basis functions for different \(N_\textrm{b}\). For all the examples we set \(E_0=0.8\) GeV. As it can be seen, by generating the \(c_n\) coefficients according to Eq. (40), the larger the number of terms of the Chebyshev series the richer is the behaviour of the spectral density in terms of local structures

The black dashed curve is a generic continuous unsmeared spectral density \(\rho (E)\) while the vertical dashed lines indicate the energy sampling defining the support of \(\rho _\delta (\omega )\) according to Eq. (42). The blue and red curves are the smeared version of respectively \(\rho (\omega )\) and \(\rho _\delta (\omega )\). The green curve represents \(\Sigma _{\sigma ,\Delta E}(E)={\hat{\rho }}_\sigma (E)-{\hat{\rho }}_{\delta ,\sigma }(E)\). The spacing is set to \(\Delta E=0.1\) GeV. The smearing kernel is a normalized Gaussian function of width \(\sigma \) and as resolution width we refer to the full width at half maximum \(2 \sqrt{2\ln 2}\, \sigma \). Top-panel: the resolution width is equal to \(\Delta E\) and it is such that the single peaks are resolved. Bottom-panel: in this case \(2\sqrt{2 \ln 2} \,\sigma \gg \Delta E\) and the two smeared functions are almost undistinguishable

Each \(\rho (E;N_\textrm{b})\) that we used in order to populate our training sets has been obtained by choosing \(E_0\) randomly in the interval [0.2, 1.3] GeV with a uniform distribution and by inserting in Eq. (39) the coefficients

where the \(r_n\)’s are \(N_\textrm{b}\) uniformly distributed random numbers in the interval \([-1,1]\) and \(\varepsilon \) is a non-negative parameter that we set to \(10^{-7}\). Notice that with this choice of the coefficients \(c_n\) the Chebyshev series of Eq. (39) is convergent in the \(N_\textrm{b}\mapsto \infty \) limit and that the resulting spectral densities can be negative and in general change sign several times in the interval \(E\in [0,\infty )\). Notice also that up to a normalization constant, that it will turn to be irrelevant in our training strategy in which the input data are standardized as explained in Sect. 4.3, the choice of the interval \([-1,1]\) for the \(r_n\) random numbers is general.

A few examples of unsmeared spectral densities generated according to Eq. (39) are shown in Fig. 5. As it can be seen, with the choice of the coefficients \(c_n\) of Eq. (40), the larger is the number of terms in the Chebyshev series of Eq. (39) the richer is the behaviour of the resulting unsmeared spectral densities in terms of local structures.

This is an important observation and a desired feature. Indeed, a natural concern about the choice of Chebyshev polynomials as basis functions is the fact that we are thus sampling the space of regular functions while, as we pointed out in Sect. 2, on a finite volume the lattice QCD spectral density is expected to be a discrete sum of Dirac-delta peaks (see Eq. (29)). Here we are relying on the fact that the inputs of our networks will be Euclidean correlators, that in fact are smeared spectral densities, and will also be asked to provide as output the smeared spectral densities \({{\hat{\rho }}}_\sigma (E)\). This allows us to assume that, provided that the energy smearing parameter \(\sigma \) and \(N_\textrm{b}\) are sufficiently large, the networks will be exposed to sufficiently general training sets to be able to extract the correct smeared spectral densities within the quoted errors. To illustrate this point we consider in Fig. 6 a continuous spectral density \(\rho (E)\) and approximate its smeared version through a Riemann’s sum according to

where

The previous few lines of algebra show that the smearing of a continuous function \(\rho (\omega )\) can be written as the smearing of the distribution defined in Eq. (42), a prototype of the finite-volume distributions of Eq. (29), plus the approximation error \(\Sigma _{\sigma ,\Delta E}(E)={\hat{\rho }}_\sigma (E)-{\hat{\rho }}_{\delta ,\sigma }(E)\). The quantity \(\Sigma _{\sigma ,\Delta E}(E)\), depending upon the spacing \(\Delta E\) of the Dirac-delta peaks and the smearing parameter \(\sigma \), is expected to be sizeable when \(\sigma \le \Delta E\) and to become irrelevant in the limit \(\sigma \gg \Delta E\). A quantitative example is provided in Fig. 6 where \(\Sigma _{\sigma ,\Delta E}(E)\) is shown at fixed \(\Delta E\) for two values of \(\sigma \). In light of this observation, corroborated by the extensive numerical analysis that we have performed at the end of the training sessions to validate our method (see Sect. 5.2 and, in particular, Fig. 16), we consider justified the choice of Chebyshev polynomials as basis functions provided that, as is the case in this work, the energy smearing parameter \(\sigma \) is chosen sufficiently large to not be able to resolve the discrete structure of the finite-volume spectrum.

4.2 Building the training sets

Having provided all the details concerning the functional basis that we use to parametrize the unsmeared spectral densities, we can now explain in details the procedure that we used to build our training sets.

A training set \({\mathcal {T}}_\sigma (N_\textrm{b},N_\rho )\) contains \(N_\rho \) input–output pairs. Each pair is obtained by starting from a random unsmeared spectral density, parametrized at fixed \(N_\textrm{b}\) according to Eq. (39) and generated as explained in the previous subsection. Given the unsmeared spectral density \(\rho (E;N_\textrm{b})\), we then compute the corresponding correlator vector \(\textbf{C}\) (by using Eq. (32)) and, for the two values of \(\sigma \) that we used in this study, the smeared spectral densities vectors \(\hat{\mathbf {\rho }}_{\mathbf {\sigma }}\) (by using Eq. (33)). From each pair \((\textbf{C},\hat{\mathbf {\rho }}_{\mathbf {\sigma }})\) we then generate an element \((\textbf{C}_\textrm{noisy},\hat{\mathbf {\rho }}_{\mathbf {\sigma }})\) of the training set at fixed \(N_\textrm{b}\) and \(\sigma \),

that we obtain, as we are now going to explain, by distorting the correlator \(\textbf{C}\) using the information provided by the noise of the lattice correlator \(\textbf{C}_\textrm{latt}\) (see Sect. 7).

In order to cope with the presence of noise in the input data that have to be processed at the end of the training, it is extremely useful (if not necessary) to teach to the networks during the training to distinguish the physical content of the input data from noisy fluctuations. This is particularly important when dealing with lattice QCD correlators for which, as discussed in Sect. 3.1, the noise-to-signal ratio grows exponentially for increasing Euclidean times (see Fig. 7). A strategy to cope with this problem, commonly employed in the neural network literature, is to add Gaussian noise to the input data used in the training. There are several examples in the literature where neural networks are shown to be able to learn rather efficiently by employing this strategy of data corruption (see the already cited textbooks Refs. [37, 38]). According to our experience, it is crucially important that the structure of the noise used to distort the training input data resembles as much as possible that of the true input data. In fact, it is rather difficult to model the noise structure generated in Monte Carlo simulations and, in particular, the off-diagonal elements of the covariance matrices of lattice correlators. In the light of these observations we decided to use the covariance matrix \({\hat{\Sigma }}_\textrm{latt}\) of the lattice correlator \(\textbf{C}_\textrm{latt}\) that we are going to analyse in Sect. 7 to obtain \(\textbf{C}_\textrm{noisy}\) from \(\textbf{C}\). More precisely, given a correlator \(\textbf{C}\), we generate \(\textbf{C}_\textrm{noisy}\) by extracting a random correlator vector from the multivariate Gaussian distribution

having \(\textbf{C}\) as mean vector and as covariance the matrix \({\hat{\Sigma }}_\textrm{latt}\) normalized by the factor \(\left( \frac{C(a)}{C_\textrm{latt}(a)}\right) ^2\).

Noise-to-signal ratio of the lattice correlator \(C_\textrm{latt}(t)\), discussed in Sect. 7, whose covariance matrix is used to inject the statistical noise in the training sets

In order to be able to perform a numerical study of the limits \(\textbf{N} \mapsto \infty \), we have generated with the procedure just described several training sets, corresponding to \(N_\textrm{b}=\{16,32,128,512\}\) and \(N_\rho =\{50,100,200,400,800\}\times 10^3\). At fixed \(N_\textrm{b}\), each training set includes the smaller ones, i.e. the training set \({\mathcal {T}}_\sigma (N_\textrm{b},100\times 10^3)\) includes the training set \({\mathcal {T}}_\sigma (N_\textrm{b}, 50\times 10^3)\) enlarged with \(50\times 10^3\) new samples.

4.3 Data pre-processing

A major impact in the machine-learning performance is played by the way the data are presented to the neural network. The learning turns to be more difficult when the input variables have different scales and this is exactly the case of Euclidean lattice correlators since the components of the input vectors decrease exponentially fast. The risk in this case is that the components small in magnitude are given less importance during the training or that some of the weights of the network become very large in magnitude, thus generating instabilities in the training process. We found that a considerable improvement in the validation loss minimization is obtained by implementing the standardization of the input correlators (see next subsection and the central panel of Fig. 8) and therefore used this procedure.

The standardization procedure of the input consists in rescaling the data in such a way that all the components of the input correlator vectors in the training set are distributed with average 0 and variance 1. For a given training set \({\mathcal {T}}_\sigma (N_\textrm{b},N_\rho )\) we calculate the \(N_T\)-component vectors \(\mathbf {\mu }\) and \(\mathbf {\gamma }\) whose components are

and

Each correlator in the training is then replaced by

where \(\textbf{C}_\textrm{noisy}\) is the distorted version of \(\textbf{C}\) discussed in the previous subsection. Notice that the vectors \(\mathbf {\mu }\) and \(\mathbf {\gamma }\) are determined from the training set before including the noise. Although the pre-processed correlators look quite different from the original ones, since the components are no longer exponential decaying, the statistical correlation in time is preserved by the standardization procedure.

At the end of the training, the correlators fed into the neural network for validation or prediction have also to be pre-processed by using the same vectors \(\mathbf {\mu }\) and \(\mathbf {\gamma }\) used in the training.

4.4 Training an ensemble of machines

Given a machine with \(N_\textrm{n}\) neurons we train it over the training set \({\mathcal {T}}_\sigma (N_\textrm{b},N_\rho )\) by using as loss function the Mean Absolute Error (MAE)

where \({\hat{\mathbf {\rho }}}_\sigma ^{\textrm{pred},i}(\textbf{w})\) is the output of the neural network in correspondence of the input correlator \(\textbf{C}_\textrm{noisy}^i\). In Eq. (48) we used the norm \(|\varvec{{\hat{\rho }}}|=\sum _{j=1}^{N_E=47}|\varvec{{\hat{\rho }}}_j|\) and we have explicitly shown the dependence of the predicted spectral density \({\hat{\mathbf {\rho }}}_{\sigma }^{\textrm{pred},i}(\textbf{w})\) upon the weights \(\textbf{w}\) of the network.

At the beginning of each training session each weight \(w_n\) (with \(n=1,\ldots ,N_\textrm{n}\)) is extracted from a Gaussian distribution with zero mean value and variance 0.05. To end the training procedure we rely on the early stopping criterion: the training set is split into two subsets containing respectively the 80% and 20% of the entries of the training set \({\mathcal {T}}_\sigma (N_\textrm{b},N_\rho )\). The larger subset is used to update the weights of the neural network with the gradient descent algorithm. The smaller subset is the so-called validation set and we use it to monitor the training process. At the end of each epoch we calculate the loss function of Eq. (48) for the validation set, the so-called validation loss, and stop the training when the drop in the validation loss is less than \(10^{-5}\) for 15 consecutive epochs. In the trainings performed in our analysis this occurs typically between epoch 150 and 200. The early stopping criterion provides an automatic way to prevent the neural network from overfitting the input data.

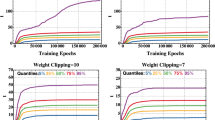

Numerical tests to optimize the training performances. The validation loss functions of the trainings of the \(N_\textrm{r}=20\) replica machines belonging to the same ensemble are plotted with the same color. All the trainings refer to arcL, \(N_\rho =50\times 10^3\), \(N_\textrm{b}=512\) and \(\sigma =0.44\) GeV. If not otherwise specified the data are pre-processed, BS=32 and the learning rate scheduler is implemented. Top panel: Comparison of the validation losses with and without the learning scheduler rate defined in Eq. (49). Central panel: Comparison of the validation losses by implementing or not the input data pre-processing procedure described in Sect. 4.3. Bottom panel: Comparison of the validations losses at different values of the BS parameter

We implement a Mini-Batch Gradient Descent algorithm, with Batch Size (BS) set to 32, by using the Adam optimizer [45] combined with a learning rate decaying according to

where \(e\in {\mathbb {N}}\) is the epoch and \(\eta (0)=2\times 10^{-4}\) is the starting value. The step-function is included so that the learning rate is unchanged during the first 25 epochs. Although a learning rate scheduler is not strictly mandatory, since the Adam optimizer already includes adaptive learning rates, we found that it provides an improvement in the convergence with less noisy fluctuations in the validation loss (see the top panel of Fig. 8). Concerning the BS, we tested the neural network performance by starting from BS = 512 and by halving it up to BS = 16 (see bottom panel of Fig. 9). Although the performance improves as BS decreases we set BS \(=32\) in order to cope with the unavoidable slowing down of the training for smaller values of BS.

As we already stressed several times, at fixed \(\textbf{N}\) the answer of a neural network cannot be exact. In order to be able to study numerically the \(\textbf{N}\mapsto \infty \) limits, the error associated with the limited abilities of the networks at finite \(\textbf{N}\) has to be quantified. To do this we introduce the ensemble of machines by considering at fixed \(\textbf{N}\)

machines with the same architecture and trained by using the same strategy. More precisely, each machine of the ensemble is trained by using a training set \({\mathcal {T}}_\sigma (N_\textrm{b},N_\rho )\) obtained by starting from the same unsmeared spectral densities, and therefore from the same pairs \((\textbf{C},\hat{\mathbf {\rho }}_{\mathbf {\sigma }})\) (see Eq. (43)), but with different noisy input correlator vectors \(\textbf{C}_\textrm{noisy}\).

Validation losses at the end of the trainings as functions of the training set dimension \(N_\rho \). The top-plot corresponds to \(\sigma =0.44\) GeV and the bottom-plot to \(\sigma =0.63\) GeV. The different colors correspond to the different architectures and different markers to the different number of basis functions \(N_\textrm{b}\) considered in this study. The error on each point correspond to the standard deviation of the distribution of the validation loss in an ensemble of \(N_\textrm{r}=20\) machines. As expected, the validation loss decreases by increasing \(N_\rho \) (more general training) and/or \(N_\textrm{n}\) (larger and therefore smarter networks). Moreover, at fixed \(N_\textrm{n}\) and \(N_\rho \) the neural network performs better at smaller values of \(N_\textrm{b}\) as consequence of the fact that the training set exhibits less complex features and learning them is easier

In Fig. 9 we show the validation loss as a function of \(N_\rho \) for the different values of \(N_\textrm{b}\), the three different architectures and the two values of \(\sigma \) considered in this study. Each point in the figure has been obtained by studying the distribution of the validation loss at the end of the training within the corresponding ensemble of machines and by using respectively the mean and the standard deviation of each distribution as central value and error. The figure provides numerical evidences concerning the facts that

-

networks with a finite number \(N_\textrm{n}\) of neurons, initialized with different weights and exposed to training sets containing a finite amount of information, provide different answers and this is a source of errors that have to be quantified;

-

the validation loss decreases for larger \(N_\textrm{n}\) because larger networks are able to assimilate a larger amount of information;

-

the validation loss decreases for larger \(N_\rho \) since, if \(N_\textrm{n}\) is sufficiently large, the networks learn more from larger and more general training sets;

-

at fixed \(N_\textrm{n}\) and \(N_\rho \) the networks perform better at smaller values of \(N_\textrm{b}\) because the training sets exhibit less complex features and learning them is easier.

In order to populate the plots in Fig. 9 we considered several values of \(N_\rho \) and \(N_\textrm{b}\) and this is rather demanding from the computational point of view. The training sets that we found to be strictly necessary to quote our final results are listed in Table 4.

5 Estimating the total error and validation tests

Having explained in the previous section the procedure that we used to train our ensembles of machines, we can now explain in details the procedure that we use to obtain our results. We start by discussing the procedure that we use to estimate the total error, taking into account both statistical and systematics uncertainties, and then illustrate the validation tests that we have performed in order to assess the reliability of this procedure.

5.1 Procedure to estimate the total error

The procedure that we use to quote our results for smeared spectral densities by using the trained ensembles of machines, illustrated in Fig. 3, is the following

-

given an ensemble of \(N_\textrm{r}\) machines trained at fixed \(\textbf{N}\), we feed in each machine r belonging to the ensemble all the different bootstrap samples (or jackknife bins) \(C_c(a\tau )\) of the input correlator (\(c=1,\ldots , N_\textrm{c}\)) and obtain a collection of results \({\hat{\rho }}_\sigma ^\textrm{pred}(E,\textbf{N},c,r)\) for the smeared spectral density that depend upon \(\textbf{N}\), c and r;

-

at fixed \(\textbf{N}\) and r we compute the bootstrap (or jackknife) central values \({\hat{\rho }}_\sigma ^\textrm{pred}(E,\textbf{N},r)\) and statistical errors \(\Delta _\sigma ^\textrm{latt}(E,\textbf{N},r)\) and average them over the ensemble of machines,

$$\begin{aligned}&{\hat{\rho }}_\sigma ^\textrm{pred}(E,\textbf{N})=\frac{1}{N_\textrm{r}}\sum _{r=1}^{N_\textrm{r}}{\hat{\rho }}_\sigma ^\textrm{pred}(E,\textbf{N},r)\;, \nonumber \\&\Delta _\sigma ^\textrm{latt}(E,\textbf{N})=\frac{1}{N_\textrm{r}}\sum _{r=1}^{N_\textrm{r}}\Delta _\sigma ^\textrm{latt}(E,\textbf{N},r)\;; \end{aligned}$$(51) -

by computing the standard deviation over the ensemble of machines of \({\hat{\rho }}_\sigma ^\textrm{pred}(E,\textbf{N},r)\), we obtain an estimate of the error, that we call \(\Delta _\sigma ^\textrm{net}(E,\textbf{N})\), associated with the fact that at fixed \(\textbf{N}\) the answer of a network cannot be exact;

-

both \(\Delta _\sigma ^\textrm{latt}(E,\textbf{N})\) and \(\Delta _\sigma ^\textrm{net}(E,\textbf{N})\) have a statistical origin. The former, \(\Delta _\sigma ^\textrm{latt}(E,\textbf{N})\), comes from the limited statistics of the lattice Monte Carlo simulation while the latter, \(\Delta _\sigma ^\textrm{net}(E,\textbf{N})\), comes from the statistical procedure that we used to populate our training sets \({\mathcal {T}}_\sigma (N_\textrm{b},N_\rho )\) and to train our ensembles of machines at fixed \(N_\textrm{b}\). We sum them in quadrature and obtain an estimate of the statistical error at fixed \(\textbf{N}\),

$$\begin{aligned} \Delta _\sigma ^\textrm{stat}(E,\textbf{N}) = \sqrt{\left[ \Delta _\sigma ^\textrm{latt}(E,\textbf{N})\right] ^2+\left[ \Delta _\sigma ^\textrm{net}(E,\textbf{N})\right] ^2}\;; \end{aligned}$$(52) -

we then study numerically the \(\textbf{N}\mapsto \infty \) limits by using a data-driven procedure. We quote as central value and statistical error of our final result

$$\begin{aligned}&{\hat{\rho }}_\sigma ^\textrm{pred}(E) \equiv \rho _\sigma ^\textrm{pred}(E,\textbf{N}^\textrm{max})\;, \nonumber \\&\Delta _\sigma ^\textrm{stat}(E) \equiv \Delta _\sigma (E,\textbf{N}^\textrm{max})\;, \end{aligned}$$(53)where \(\textbf{N}^\textrm{max}\), given in Table 4, is the vector with the largest components among the vectors \(\textbf{N}\) considered in this study;

-

in order to check the numerical convergence of the \(\textbf{N}\mapsto \infty \) limits and to estimate the associated systematic uncertainties \(\Delta _\sigma ^{X}(E)\), where

$$\begin{aligned} X=\{\rho ,\textrm{n},\textrm{b}\}\;, \end{aligned}$$(54)we define the reference vectors \(\textbf{N}_X^\textrm{ref}\) listed in Table 4. We then define the pull variables

$$\begin{aligned} P_\sigma ^{X}(E) = \frac{\left| {\hat{\rho }}_\sigma ^{\textrm{pred}}(E)-{\hat{\rho }}_\sigma ^{\textrm{pred}}(E,\textbf{N}^\textrm{ref}_X)\cdot \right| }{\sqrt{\big [\Delta _\sigma ^\textrm{stat}(E)\big ]^2+\big [\Delta _\sigma ^\textrm{stat}(E,\textbf{N}^\textrm{ref}_X)\big ]^2}}\;, \end{aligned}$$(55)and then we weight the absolute value of the difference \(\left| {\hat{\rho }}_\sigma ^{\textrm{pred}}(E)-{\hat{\rho }}_\sigma ^{\textrm{pred}}(E,\textbf{N}^\textrm{ref}_X)\right| \) with the Gaussian probability that this is not due to a statistical fluctuation,

$$\begin{aligned} \Delta ^{X}_\sigma (E) = \left| {\hat{\rho }}_\sigma ^{\textrm{pred}}(E)-{\hat{\rho }}_\sigma ^{\textrm{pred}}(E,\textbf{N}^\textrm{ref}_X)\right| \textrm{erf} \left( \frac{|P_\sigma ^{X}(E)|}{\sqrt{2}}\right) ; \end{aligned}$$(56) -

the total error that we associate to our final result \({\hat{\rho }}_\sigma ^\textrm{pred}(E)\) is finally obtained according to

$$\begin{aligned}&\Delta _\sigma ^\textrm{tot}(E) \nonumber \\&\quad = \sqrt{ \left[ \Delta _\sigma ^\textrm{stat}(E)\right] ^2+ \left[ \Delta _\sigma ^\rho (E)\right] ^2+ \left[ \Delta _\sigma ^\textrm{b}(E)\right] ^2+ \left[ \Delta _\sigma ^\textrm{n}(E)\right] ^2}\;. \end{aligned}$$(57)

Some remarks are in order here. We don’t have a theoretical understanding neither of the dependence of the statistical errors \(\Delta _\sigma ^\textrm{latt}(E,\textbf{N})\) and \(\Delta _\sigma ^\textrm{net}(E,\textbf{N})\) upon E and \(\textbf{N}\) (see Appendix C for a numerical investigation) nor of the rates of convergence of the \(\textbf{N}\mapsto \infty \) limits. Gaining this theoretical understanding is a task that goes far beyond the scope of this paper. The procedure that we have devised to quote our results, that at first sight might appear too complicated, has therefore to be viewed as just one of the possible ways to perform conservative plateaux-analyses of the \(\textbf{N}\mapsto \infty \) limits. This explains our choice of the points \(\textbf{N}^\textrm{ref}_X\) given in Table 4 that, in our numerical setup, provide the most conservative estimates of the systematic errors. The stringent validation tests that we have performed with mock data, and that we are going to discuss below, provide a quantitative evidence that the results obtained by implementing this procedure can in fact be trustworthy used in phenomenological applications.

5.2 Validation

Each row corresponds to a different energy, as written on the top of each plot, and in all cases we set \(\sigma =0.44\) GeV and \(\textbf{N}=\textbf{N}^\textrm{max}=(\textrm{arcL},512,800\times 10^3)\). Left panels: the x-axes corresponds to the replica index \(r=1,\ldots ,N_\textrm{r}=20\) running over the entries of the ensemble of machines. The plotted points correspond to the results \({\hat{\rho }}_\sigma ^\textrm{pred}(E,\textbf{N},r) \pm \Delta _\sigma ^\textrm{latt}(E,\textbf{N},r)\) obtained by computing the bootstrap averages and errors of the \(c=1,\ldots ,N_\textrm{c}=800\) results \({\hat{\rho }}_\sigma ^\textrm{pred}(E,\textbf{N},c,r)\). Right panels: distributions of the central values \({\hat{\rho }}_\sigma ^\textrm{pred}(E,\textbf{N},r)\). The means of these distributions correspond to the results \({\hat{\rho }}_\sigma ^\textrm{pred}(E,\textbf{N})\) that are shown in the left panels as solid blue lines. The widths correspond to the network errors \(\Delta _\sigma ^\textrm{net}(E,\textbf{N})\) that are represented in the left panels with two dashed blue lines. The blue bands in the left panels correspond to \(\Delta _\sigma ^\textrm{stat}(E,\textbf{N})\), defined in Eq. (52)

Numerical study of the \(\textbf{N}\mapsto \infty \) limits. Each row corresponds to a different energy, as written on the top of each plot, and all cases we set \(\sigma =0.44\) GeV. Left panels: Study of the limit \(N_\rho \mapsto \infty \) at fixed \(N_\textrm{b}=512\) and \(N_\textrm{n}=\)arcL. Central panels: Study of the limit \(N_\textrm{n} \mapsto \infty \) at fixed \(N_\textrm{b}=512\) and \(N_\rho =800\times 10^3\). Right panels: Study of the limit \(N_\textrm{b} \mapsto \infty \) at fixed \(N_\textrm{n}=\)arcL and \(N_\rho =800\times 10^3\). All panels: The horizontal blue line is the final central value, corresponding to \(\textbf{N}=\textbf{N}^\textrm{max}=(\textrm{arcL},512,800\times 10^3)\) (blue points) and it is common to all the panels within the same row. The blue band represents instead \(\Delta _\sigma ^\textrm{tot}(E)\) calculated by the combination in quadrature of all the errors (see Eq. 57). The red points correspond to \(\textbf{N}_X^\textrm{ref}\) and the grey ones to the other trainings, see Table 4

Final results for \(\sigma =0.44\) GeV. Top panel: The final results predicted by the neural networks (blue points) is compared with the true result (solid black). The dotted grey curve in the background represents the unsmeared spectral density. Central panel: Relative error budget as a function of the energy. Bottom panel: Deviation of the predicted result from the true one in units of the standard deviation, see Eq. (58)

In order to illustrate how the method described in the previous subsection can be applied in practice, we now consider an unsmeared spectral density \(\rho (E)\) that has not been used in any of the trainings and that has been obtained as described in Sect. 4.1, i.e. by starting from Eq. (39), but this time with \(N_\textrm{b}=1024\), i.e. a number of basis functions which is twice as large as the largest \(N_\textrm{b}\) employed in the training sessions.

From this \(\rho (E)\) we then calculate the associated correlator \(\textbf{C}\) and the smeared spectral density corresponding to \(\sigma =0.44\) GeV. We refer to the true smeared spectral density as \({\hat{\rho }}_\sigma ^\textrm{true}(E)\), while we call \({\hat{\rho }}_\sigma ^\textrm{pred}(E)\) the final predicted result. Both the unsmeared spectral density \(\rho (E)\) (grey dotted curve, partially visible) and the expected exact result \({\hat{\rho }}^\textrm{true}_\sigma (E)\) (solid black curve) are shown in the top panel of Fig. 12.

Starting from the exact correlator \(\textbf{C}\) corresponding to \(\rho (E)\) we have then generated \(N_\textrm{c}=800\) bootstrap samples from the distribution of Eq. (44), thus simulating the outcome of a lattice Monte Carlo simulation. The \(N_\textrm{c}\) samples have been fed into each trained neural network and we collected the answers \({\hat{\rho }}_\sigma ^\textrm{pred}(E,\textbf{N},c,r)\) that, as shown in Fig. 10 for a selection of the considered values of E, we have then analysed to obtain \(\Delta _\sigma ^\textrm{latt}(E,\textbf{N})\) and \(\Delta _\sigma ^\textrm{net}(E,\textbf{N})\).

The next step is now the numerical study of the limits \(\textbf{N}\mapsto \infty \) that we illustrate in Fig. 11 for the same values of E considered in Fig. 10. The limit \(N_\rho \mapsto \infty \) (left panels) is done at fixed \(N_\textrm{b}=512\) and \(N_\textrm{n}=\)arcL. The limit \(N_\textrm{n} \mapsto \infty \) (central panels) is done at fixed \(N_\textrm{b}=512\) and \(N_\rho =800\times 10^3\). The limit \(N_\textrm{b} \mapsto \infty \) (right panels) is done at fixed \(N_\textrm{n}=\)arcL and \(N_\rho =800\times 10^3\). As it can be seen, the blue points, corresponding to our results \({\hat{\rho }}_\sigma ^\textrm{pred}(E) \pm \Delta _\sigma ^\textrm{stat}(E)\), are always statistically compatible with the first grey point on the left of the blue one in each plot. The red points correspond to the results \({\hat{\rho }}_\sigma ^\textrm{pred}(E,\textbf{N}_X^\textrm{ref}) \pm \Delta _\sigma ^\textrm{stat}(E,\textbf{N}_X^\textrm{ref})\) that we use to estimate the systematic uncertainties \(\Delta _\sigma ^X(E)\). The blue band correspond to our estimate of the total error \(\Delta _\sigma ^\textrm{tot}(E)\).

In the top panel of Fig. 12 we show the comparison of our final results \({\hat{\rho }}_\sigma ^\textrm{pred}(E) \pm \Delta _\sigma ^\textrm{tot}(E)\) (blue points) with the true smeared spectral density \({\hat{\rho }}_\sigma ^\textrm{true}(E)\) (black curve) that in this case is exactly known. The central panel in Fig. 12 shows the relative error budget as a function of the energy. As it can be seen, the systematics errors represent a sizeable and important fraction of the total error, particularly at large energies. The bottom panel of Fig. 12 shows the pull variable

and, as it can be seen, by using the proposed procedure to estimate the final results and their errors, no significant deviations from the true result have been observed in this particular case.

Left panel: Normalized distribution of the significance defined in Eq. (58). The distribution is calculated over 2000 validation samples generated on the Chebyshev functional space. Right panel: comparison of the normalized cumulative distribution functions (CDF) of the observed distribution of \(p_\sigma \) (blue) and of the normal one obtained from the mean and variance of \(p_\sigma \) (red). The black arrow represents the Kolmogorov–Smirnov statistics (\(D_\textrm{KS}\)), i.e. the magnitude and the position of the maximum deviation between the two CDFs in absolute value

In order to validate our method we repeated the test just described 2000 times. We have generated random spectral densities, not used in the trainings, by starting again from Eq. (39) and by selecting random values for \(E_0\) and \(N_\textrm{b}\) respectively in the intervals [0.3, 1.3] GeV and [8, 1024].

The values of \(p_\sigma (E)\) for all the energies and for all the samples is collected in one set, \(p_\sigma \) for short, whose normalized distribution is shown in the left panels of Fig. 13 (top panel for \(\sigma =0.44\) GeV, bottom panel for \(\sigma =0.63\) GeV). The distributions are nicely bell-shaped around 0 and the right panels in Fig. 13 show the comparison between the normalized cumulative distribution functions of \(p_\sigma \) with the normal distribution obtained from the mean and variance of \(p_\sigma \). The p-value calculated from the Kolmogorov–Smirnov test is much less than 0.05 in both the cases and therefore the distribution of \(p_\sigma \) cannot be considered as a normal one. On the other hand, given the procedure that we use to quote the total error, we observe deviations \({\hat{\rho }}_\sigma ^\textrm{pred}(E)-{\hat{\rho }}_\sigma ^\textrm{true}(E)\) smaller than 2 standard deviations in 95% of the cases and smaller than 3 standard deviations in 99% of the cases. The fact that deviations smaller than 1 standard deviation occur in \(\sim \)80% of the cases for \(\sigma =0.44\) GeV and \(\sim \)85% of the cases for \(\sigma =0.63\) GeV (to be compared with the expected 68% in the case of a normal distribution) is an indication of the fact that our estimate of the total error is indeed conservative. Moreover, from Fig. 13 it is also evident that our ensembles of neural networks are able to generalize very efficiently outside the training set.

In order to perform another stringent validation test of the proposed method, we also considered unsmeared spectral densities that cannot be written on the Chebyshev basis. We repeated the analysis described in the previous paragraph for a set of spectral densities generated according to

where \(0<E_0\le E_1 \le E_2 \le \cdots \le E_\textrm{peaks}\). By plugging Eq. (59) into Eqs. (32) and (33) we have calculated the correlator and smeared spectral density associated to each unsmeared \(\rho (E)\). We have generated 2000 unsmeared spectral densities with \(N_\textrm{peaks}=5000\). The position \(E_n\) of each peak has been set by drawing independent random numbers uniformly distributed in the interval \([E_0,15\,\textrm{GeV}]\) while \(E_0\) has been randomly chosen in the interval [0.3, 1.3] GeV. The coefficients \(c_n\) have also been generated randomly in the interval \([-0.01,0.01]\). The 2000 trains of isolated Dirac-delta peaks are representative of unsmeared spectral densities that might arise in the study of finite volume lattice correlators. These are rather wild and irregular objects that our neural networks have not seen during the trainings. Figure 14 shows the plots equivalent to those of Fig. 13 for this new validation set. As it can be seen, the distributions of \(p_\sigma (E)\) are basically unchanged, an additional reassuring evidence of the ability of our ensembles of neural networks to generalize very efficiently outside the training set and, more importantly, on the robustness of the procedure that we use to estimate the errors.

6 Results for mock data inspired by physical models

In the light of the results of the previous section, providing a solid quantitative evidence of the robustness and reliability of the proposed method, we investigate in this section the performances of our trained ensembles of machines in the case of mock spectral densities coming from physical models. A few more validation tests, unrelated to physical models, are discussed in Appendix A.

For each test discussed in the following subsections we define a model spectral density \(\rho (E)\) and then use Eqs. (32) and (33) to calculate the associated exact correlator and true smeared spectral density. We then generate \(N_\textrm{c}=800\) bootstrap samples \(C_c(t)\), by sampling the distribution of Eq. (44), and quote our final results by using the procedure described in details in the previous section.

6.1 A resonance and a multi-particle plateaux

Results for three different unsmeared spectral densities \(\rho (E)\) (dashed grey lines) generated from the Gaussian mixture model of Eq. (60). The solid lines correspond to \({\hat{\rho }}_\sigma ^\textrm{true}(E)\). The points, with error bars, refer to the predicted spectral densities \({\hat{\rho }}_\sigma ^\textrm{pred}(E)\)

Reconstructed smeared spectral densities (points with errors) compared to the true ones (solid lines) for two unsmeared spectral densities simulating a finite volume distribution, see Eq. (62). The unsmeared \(\rho (E)\) is represented by vertical lines located in correspondence of \(E=E_n\) with heights proportional to \(c_n\)

The first class of physically motivated models that we investigate is that of unsmeared spectral densities exhibiting the structures corresponding to an isolated resonance and a multi-particle plateau. To build mock spectral densities belonging to this class we used the same Gaussian mixture model used in Ref. [24] and given by

where

The sigmoid function \({\hat{\theta }}(E,\delta _1,\zeta _1)\) with \(\delta _1=0.1\) GeV and \(\zeta _1=0.01\) GeV dumps \(\rho ^\textrm{GM}(E)\) at low energies while the other sigmoid \({\hat{\theta }}(E,\delta _2,\zeta _2)\), with \(\zeta _2=0.1\) GeV, connects the resonance to the continuum part whose threshold is \(\delta _2\). The parameter \(C_0=1\) regulates the height of the continuum plateaux which also coincides with the asymptotic behaviour of the spectral density, i.e. \(\rho ^\textrm{GM}(E)\mapsto C_0\) for \(E\mapsto \infty \). We have generated three different spectral densities with this model that, in the following, we call \(\rho _1^\textrm{GM}(E)\), \(\rho _2^\textrm{GM}(E)\) and \(\rho _3^\textrm{GM}(E)\) and whose parameters are given in Table 5.

The predicted smeared spectral densities for smearing widths \(\sigma =0.44\) GeV and \(\sigma =0.63\) GeV are compared to the true ones in Fig. 15. In all cases the predicted result agrees with the true one, within the quoted errors, for all the explored values of E. The quality of the reconstruction of the smeared spectral densities is excellent for \(E<1.5\) GeV while, at higher energies, the quoted error is sufficiently large to account for the deviation of \({\hat{\rho }}_\sigma ^\textrm{pred}(E)\) from \({\hat{\rho }}_\sigma ^\textrm{true}(E)\). This is particularly evident in the case of \(\rho _1^\textrm{GM}(E)\) shown in the top panel.

The same class of physical models can also be investigated by starting from unsmeared spectral densities that might arise in finite volume calculations by considering

The parameter \(E_\textrm{peak}\) parametrizes the position of an isolated peak while the multi-particle part is introduced with the other peaks located at \(E_\textrm{peak}<E_1\le \cdots \le E_{\textrm{N}_\textrm{peaks}}\). We have generated two unsmeared spectral densities, \(\rho _1^\textrm{peak}(E)\) and \(\rho _2^\textrm{peak}(E)\) by using this model. In both cases we set \(N_\textrm{peaks}=10{,}000\), selected random values for the \(E_n\)’s up to 50 GeV and random values for the \(c_n\)’s in the range [0, 0.01]. In the case of \(\rho _1^\textrm{peak}(E)\) we set \(E_\textrm{peak}=0.8\) GeV, \(C_\textrm{peak}=1\) and \(E_1=1.2\) GeV. In the case of \(\rho _2^\textrm{peak}(E)\) we set instead \(E_\textrm{peak}=0.7\) GeV, \(C_\textrm{peak}=1\) and \(E_1=1.5\) GeV.

The predicted smeared spectral densities are compared with the true ones in Fig. 16. In both cases \({\hat{\rho }}_\sigma ^\textrm{pred}(E)\) is in excellent agreement with \({\hat{\rho }}_\sigma ^\textrm{true}(E)\).

It is worth emphasizing once again that the model in Eq. (62) cannot be represented by using the Chebyshev basis of Eq. (39) and, therefore, it is totally unrelated to the smooth unsmeared spectral densities that we used to populate the training sets.

6.2 O(3) non-linear \(\sigma \)-model

Predicted smeared spectral densities for the two-particles contribution to the vector-vector correlator in the \(1+1\) dimensional O(3) non-linear \(\sigma \) model for different values of the two-particle threshold: 0.5 GeV (top panel), 1 GeV (central panel) and 1.5 GeV (third panel). The solid lines and the dashed curve correspond respectively to \({\hat{\rho }}_\sigma ^\textrm{pred}(E)\) and \(\rho ^{O(3)}(E)\)

In this subsection we consider a model for the unsmeared spectral density that has already been investigated by using the HLT method in Ref. [21]. More precisely, we consider the two-particles contribution to the vector-vector spectral density in the the \(1+1\) dimensional O(3) non-linear \(\sigma \)-model (see Ref. [21] for more details) given by

where \(E_\textrm{th}\) is the two-particle threshold. We considered three mock unsmeared spectral densities, that we call \(\rho ^{O(3)}_1(E)\), \(\rho ^{O(3)}_2(E)\) and \(\rho ^{O(3)}_3(E)\), differing for the position of the multi-particle threshold, which has been set respectively to \(E_\textrm{th}=0.5\) GeV, 1 GeV and 1.5 GeV. The reconstructed smeared spectral densities for \(\sigma =0.44\) GeV and \(\sigma =0.63\) GeV are compared with the exact ones in Fig. 17.

The predicted smeared spectral densities are in remarkably good agreement with the true ones in the full energy range and in all cases. This result can be read as an indication of the fact that the smoothness of the underlying unsmeared spectral density plays a crucial rôle in the precision that one can get on \({\hat{\rho }}_\sigma ^\textrm{pred}(E)\), also if the problem is approached by using a neural network approach. Indeed, this fact had already been observed and exploited in Ref. [21], where the authors managed to perform the \(\sigma \mapsto 0\) limit of \({\hat{\rho }}_\sigma ^{O(3)}(E)\) with controlled systematic errors by using the HLT method.

6.3 The R-ratio with mock data

Results for the R-ratio using the parametrization of Ref. [46]. The gray dashed line is R(E) while the solid lines correspond to \(\hat{R}_\sigma ^\textrm{true}(E)\)

The last case that we consider is that of a model spectral density coming from a parametrization of the experimental data of the R-ratio. The R-ratio, denoted by R(E), is defined as the ratio between the inclusive cross section of \(e^+e^-\rightarrow \textrm{hadrons}\) and \(e^+e^-\rightarrow \mu ^+\mu ^-\) and plays a crucial rôle in particle physics phenomenology (see e.g. Refs. [40, 47]).

In the next section we will present results for a contribution to the smeared R-ratio \(R_\sigma (E)\) that we obtained by feeding into our ensembles of trained neural networks a lattice QCD correlator that has been already used in Ref. [40] to calculate the same quantity with the HLT method.

Before doing that, however, we wanted also to perform a test with mock data generated by starting from the parametrization of R(E) given in Ref. [46]. This parametrization, that we use as model unsmeared spectral density by setting \(\rho (E)\equiv R(E)\), is meant to reproduce the experimental measurements of R(E) for energies \(E<1.1\) GeV, i.e. in the low-energy region where there are two dominant structures associated with the mixed \(\rho \) and \(\omega \) resonances, a rather broad peak at \(E\simeq 0.7\) GeV, and the narrow resonance \(\phi (1020)\). Given this rich structure, shown as the dashed grey curve in Fig. 18, this is a much more challenging validation test w.r.t. the one of the O(3) model discussed in the previous subsection.

In Fig. 18, \(\hat{R}_\sigma ^\textrm{pred}(E)\) is compared with \(\hat{R}_\sigma ^\textrm{true}(E)\) for both \(\sigma =0.44\) GeV (orange points and solid curve) and \(\sigma =0.63\) GeV (blue points and solid curve). In both cases the difference \(\hat{R}_\sigma ^\textrm{pred}(E)-\hat{R}_\sigma ^\textrm{true}(E)\) doesn’t exceed the quoted error for all the considered values of E.

7 The R-ratio with lattice QCD data

The results obtained with our ensembles of neural networks in the case of a real lattice QCD correlator are compared with those obtained with the HLT method (grey, Refs. [14, 40]). The top-panel shows the two determinations of the strange-strange connected contribution to the smeared R-ratio for \(\sigma =0.44\) GeV while the case \(\sigma =0.63\) GeV is shown in the bottom-panel

In this section we use our trained ensembles of neural networks to extract the smeared spectral density from a real lattice QCD correlator.

We have considered a lattice correlator, measured by the ETMC on the ensemble described in Table 6, that has already been used in Ref. [40] to extract the so-called strange-strange connected contribution to the smeared R-ratio by using the HLT method of Ref. [14]. The choice is motivated by the fact that in this case the exact answer for the smeared spectral density is not known and we are going to compare our results \(\hat{R}_\sigma ^\textrm{pred}(E)\) with the ones, that we call \(\hat{R}_\sigma ^\textrm{HLT}(E)\), obtained in Ref. [40].

In QCD the R-ratio can be extracted from the lattice correlator

where \(J_\mu (x)\) is the hadronic electromagnetic current. The previous formula (in which we have neglected the term proportional to \(e^{-(T-t)\omega }\) that vanishes in the \(T\mapsto \infty \) limit) explains the choice that we made in Eq. (32) to represent all the correlators that we used as inputs to our neural networks. In Ref. [40] all the connected and disconnected contributions to \(C_\textrm{latt}(t)\), coming from the fact that \(J_\mu =\sum _{f}q_f {{\bar{\psi }}}_f \gamma _\mu \psi _f\) with \(f=\{u,d,s,c,b,t\}\), \(q_{u,c,t}=2/3\) and \(q_{d,s,b}=-1/3\), have been taken into account. Here we consider only the connected strange-strange contribution, i.e. we set \(J_\mu = -\frac{1}{3} {\bar{s}} \gamma _\mu s\) and neglect the contribution associated with the fermionic-disconnected Wick contraction. From \(C_\textrm{latt}(t)\) we have calculated the covariance matrix \({\hat{\Sigma }}_\textrm{latt}\) that we used to inject noise in all the training sets that we have built and used in this work (see Sect. 4.2).

Although, as already stressed, in this case we don’t know the exact smeared spectral density, we do expect from phenomenology to see a sizeable contribution to the unsmeared spectral density coming from the \(\phi (1020)\) resonance. Therefore, the shape of the smeared spectral density is expected to be similar to those shown in Fig. 16 for which the results obtained from our ensembles of neural networks turned out to be reliable. The comparison of \(\hat{R}_\sigma ^\textrm{pred}(E)\) and \(\hat{R}_\sigma ^\textrm{HLT}(E)\) is shown in Fig. 19 and, as it can be seen, the agreement between the two determinations is remarkably good.