Abstract

Flavour-changing-neutral currents (FCNCs) involving the top quark are highly suppressed within the Standard Model (SM). Hence, any signal in current or planned future collider experiments would constitute a clear manifestation of physics beyond the SM. We propose a novel, interference-based strategy to search for top-quark FCNCs involving the Z boson that has the potential to complement traditional search strategies due to a more favourable luminosity scaling. The strategy leverages on-shell interference between the FCNC and SM decay of the top quark into hadronic final states. We estimate the feasibility of the most promising case of anomalous tZc couplings using Monte Carlo simulations and a simplified detector simulation. We consider the main background processes and discriminate the signal from the background with a deep neural network that is parametrised in the value of the anomalous tZc coupling. We present sensitivity projections for the HL-LHC and the FCC-hh. We find an expected 95% CL upper limit of \({\mathcal {B}}_{\textrm{excl}}(t\rightarrow Zc) = 6.4 \times 10^{-5}\) for the HL-LHC. In general, we conclude that the interference-based approach has the potential to provide both competitive and complementary constraints to traditional multi-lepton searches and other strategies that have been proposed to search for tZc FCNCs.

1 Introduction

A flavour-changing-neutral-current (FCNC) process is one in which a fermion changes its flavour without changing its gauge quantum numbers. In the Standard Model (SM), FCNCs are absent at tree level. At loop level, they are suppressed by Cabibbo–Kobayashi–Maskawa (CKM) elements and potentially also by fermion mass differences via the Glashow–Iliopoulos–Maiani (GIM) mechanism [1]. The SM predictions for FCNCs involving the top quark are extremely small because the GIM suppression is highly effective. The resulting branching ratios (\({\mathcal {B}}\)) for the two-body top-quark decays via FCNCs range from \({\mathcal {B}}(t \rightarrow uH)_{\text {SM}} \sim 10^{-17}\) to \({\mathcal {B}}(t \rightarrow cg)_{\text {SM}} \sim 10^{-12}\) [2,3,4,5,6,7]. However, the top quark plays an important role in many theories beyond the SM because of its large coupling to the Higgs boson, which is relevant for models addressing the Hierarchy Problem and for models of electroweak-scale baryogenesis. Several of these models predict enhanced top-quark FCNC couplings [4, 8,9,10,11,12], which we collectively denote here by g. Constraints on g from low-energy and electroweak-precision observables are typically mild [13,14,15,16,17,18], which motivates direct searches for FCNC top-quark decays (\(t\rightarrow qX\) with \(q = u\), c) and FCNC single-top-quark production (\(pp \rightarrow tqX\) or \(qX \rightarrow t\)). While we focus on FCNC interactions with SM bosons in this paper, FCNC interactions of the top quark with new scalar bosons have also been proposed [19] and searched for [20].

Using data taken at the LHC, the ATLAS and CMS collaborations have placed the most stringent upper limits on top-quark FCNC interactions via a photon [21, 22], Z boson [23, 24], Higgs boson [25, 26], and gluon [27, 28]. Even though many searches take advantage of both the FCNC decay and single production to search for a non-zero g, the limits are traditionally presented in terms of FCNC branching ratios, \({\mathcal {B}}(t\rightarrow qX)\). The most stringent limits at 95% confidence level (CL) range from \({\mathcal {B}}(t\rightarrow u\gamma ) < 8.5\times 10^{-6}\) [21] to \({\mathcal {B}}(t\rightarrow cH) < 7.3\times 10^{-4}\) [26]. For FCNCs via the Z boson, the most stringent limits are obtained in a search that uses the decay of the Z boson to \(e^+e^-\) or \(\mu ^+\mu ^-\) in association with a semileptonically decaying top quark [23]. The resulting 95% CL upper limits on g translate to \({\mathcal {B}}(t\rightarrow uZ) < 6.2\)–\(6.6\times 10^{-5}\) and \({\mathcal {B}}(t\rightarrow cZ) < 1.2\)–\(1.3\times 10^{-4}\), depending on the chirality of the coupling.

While the limits in Ref. [23] are obtained with \(\mathcal {L_{\textrm{int}}} = \int {\mathcal {L}}\,\textrm{d}t = 139\) fb\(^{-1}\) of data at \(\sqrt{s} = 13\) TeV, the HL-LHC is expected to provide approximately 3000 fb\(^{-1}\) at 14 TeV. Improved sensitivity to top-quark FCNC processes is hence expected at the HL-LHC, because statistical uncertainties play an important role in these searches. With systematic uncertainties being subdominant, one may naively expect the upper limits on \({\mathcal {B}}(t\rightarrow qZ)\) to scale with the shrinking statistical uncertainty.Footnote 1 Using this extrapolation, the sensitivity is expected to improve roughly by a factor \(\sqrt{\smash [b]{3000 \, \textrm{fb}^{-1} / 139 \, \textrm{fb}^{-1}}} \approx 5\) at the HL-LHC.Footnote 2 The reason for this luminosity scaling is that the partial width for the two-body top-quark FCNC decay and the cross section for FCNC single production are proportional to \(g^2\), because there is no interference with SM processes.Footnote 3 As a result, the sensitivity to \({\mathcal {B}}(t\rightarrow qX)\) naively scales as \(1/\sqrt{\mathcal {L_{\textrm{int}}}}\) and the sensitivity to g as \(1/\root 4 \of {\mathcal {L_{\textrm{int}}}}\). An observable that instead scales linearly with g, owing to interference with the SM, would have a more favourable luminosity scaling, which makes such an interference-based approach attractive for top-quark FCNC searches. In the present work, we propose such a novel approach and investigate the feasibility of employing it to search for tZq.
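The naive extrapolation above can be written out explicitly. This is a back-of-the-envelope estimate under the assumptions stated in the text (statistics-dominated limits, unchanged selection); it ignores, for example, the increase of the centre-of-mass energy from 13 to 14 TeV.

```latex
\begin{aligned}
r &\equiv \frac{3000\,\textrm{fb}^{-1}}{139\,\textrm{fb}^{-1}} \approx 21.6, \\
\mathcal{B}_{\textrm{excl}} \propto g_{\textrm{excl}}^2 \propto \mathcal{L}_{\textrm{int}}^{-1/2}
  &\;\Rightarrow\; \frac{\mathcal{B}_{\textrm{excl}}^{139\,\textrm{fb}^{-1}}}{\mathcal{B}_{\textrm{excl}}^{3000\,\textrm{fb}^{-1}}} \approx r^{1/2} \approx 4.6,
  \qquad \frac{g_{\textrm{excl}}^{139\,\textrm{fb}^{-1}}}{g_{\textrm{excl}}^{3000\,\textrm{fb}^{-1}}} \approx r^{1/4} \approx 2.2, \\
g_{\textrm{excl}} \propto \mathcal{L}_{\textrm{int}}^{-1/2} \ \text{(interference-dominated)}
  &\;\Rightarrow\; \frac{g_{\textrm{excl}}^{139\,\textrm{fb}^{-1}}}{g_{\textrm{excl}}^{3000\,\textrm{fb}^{-1}}} \approx r^{1/2} \approx 4.6 .
\end{aligned}
```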

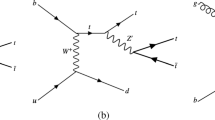

Fig. 1 The leading-order diagrams for the three-body decay \(t\rightarrow c b {\bar{b}}\). The left diagram shows the decay via the FCNC tZc coupling and the right one the SM decay via a W boson. In the small region of phase space in which the \(c\bar{b}\) pair reconstructs the W-boson mass and the \(b\bar{b}\) pair reconstructs the Z-boson mass, both the W and the Z bosons are on-shell and the two amplitudes interfere.

There are several phenomenologically relevant examples in which New-Physics (NP) interference with the SM is instrumental for precision NP searches. Examples include searching for \(H\rightarrow c{\bar{c}}\) via exclusive Higgs decays, which makes use of interference with the SM \(H\rightarrow \gamma \gamma \) amplitude [31], or searching for NP in high-energy diboson distributions by exploiting the interference between the SM and energy-enhanced NP contributions from dimension-six operators [32, 33]. Here, we introduce a new setup that can be applied to improve top-quark FCNC searches. In contrast to these examples, here both the NP and the SM amplitudes are mostly resonant, i.e., they contain on-shell, but different, intermediate particles. At tree level, a resonant signal amplitude does not generally interfere with a continuum amplitude, because on resonance the former is imaginary while the latter is real. However, if both the signal and the background amplitudes contain an on-shell particle, they can interfere, as long as the final state is identical.Footnote 4 In this case of on-shell interference, the interference is only large in a restricted phase-space region. This potential caveat differs from those in the aforementioned examples: exclusive decays of the Higgs boson are suppressed by the hadronisation probability into the relevant final state, e.g., \(J/\psi \), and the interference in diboson tails is suppressed by the decreasing SM amplitude. Our proposal is to search for the three-body decay \(t\rightarrow qb\bar{b}\) in the phase-space region in which the NP–SM interference is potentially large.
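The phase argument above can be checked with a few lines of Python. This is a minimal sketch, not part of the analysis: it uses a scalar Breit–Wigner factor \(1/(m^2-M^2+iM\Gamma)\) and approximate W/Z masses and widths as assumed inputs. On the peak, the resonant factor is purely imaginary, so its interference with a real continuum amplitude vanishes, whereas the product of two on-shell factors is real and survives.

```python
import cmath

# Approximate W/Z masses and widths in GeV (assumed inputs for illustration).
M_W, GAMMA_W = 80.4, 2.09
M_Z, GAMMA_Z = 91.19, 2.50

def breit_wigner(m2: float, mass: float, width: float) -> complex:
    """Scalar Breit-Wigner factor 1/(m^2 - M^2 + i M Gamma)."""
    return 1.0 / (m2 - mass**2 + 1j * mass * width)

# On the Z peak the resonant factor is purely imaginary (phase -pi/2).
peak_z = breit_wigner(M_Z**2, M_Z, GAMMA_Z)
# Interference 2 Re(A_cont^* A_res) with a real continuum amplitude A_cont = 1 vanishes there.
interference_with_continuum = 2.0 * (1.0 * peak_z).real
# With both bosons on-shell, P_W^* P_Z is real and non-zero, so the interference survives.
peak_w = breit_wigner(M_W**2, M_W, GAMMA_W)
interference_doubly_on_shell = 2.0 * (peak_w.conjugate() * peak_z).real

print(cmath.phase(peak_z), interference_with_continuum, interference_doubly_on_shell)
```

The doubly-on-shell value equals \(2/(M_W\Gamma_W M_Z\Gamma_Z)\), i.e. it is set by the product of the two peak heights.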

The decay \(t\rightarrow qb{\bar{b}}\) receives two interfering contributions: the NP contribution \(t\rightarrow qZ\rightarrow qb\bar{b}\) and the SM one \(t\rightarrow bW^+\rightarrow qb\bar{b}\), as illustrated in Fig. 1. Consequently, the partial width contains a term that is proportional to g. For sufficiently small g, the interference term dominates over the NP\(^2\) term (\(\propto g^2\)), in which case the sensitivity to g is expected to scale like \(1/\sqrt{\mathcal {L_{\textrm{int}}}}\), i.e., it improves faster with increasing luminosity than in the traditional approach without interference. The interference argument also holds for top-quark FCNCs with the Higgs boson (tHq), photons (\(tq\gamma \)), and gluons (tqg). For the Higgs boson, the interference is suppressed by the light-quark masses of the final-state quarks (\(m_b\) and \(m_q\)) due to the different chirality structure of the SM (vector) and NP (scalar) couplings. For the photon and gluon FCNCs, the SM amplitudes peak at small dijet invariant masses, where potentially large QCD backgrounds require a dedicated study. We therefore focus in this work on top-quark FCNCs with the Z boson. We stress that the interference signal is sensitive not only to the magnitude of the tZq coupling but also to its phase. The interference approach is hence inherently complementary to traditional FCNC searches and of particular interest if signs of an anomalous tZq coupling are observed. We further focus on the tZc coupling, because the interference is larger than for tZu owing to the larger CKM matrix element, \(|V_{cb}| > |V_{ub}|\).
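The transition between the two luminosity scalings can be illustrated with a toy counting model. The sketch below is hypothetical: it assumes a signal yield \(\mathcal{L}_{\textrm{int}}(a g + b g^2)\), a background yield \(\mathcal{L}_{\textrm{int}}\beta\), and a crude exclusion criterion \(N_s = 2\sqrt{N_b}\); the coefficients a, b, and \(\beta\), as well as the function names, are made up for illustration and are not values from this analysis.

```python
import math

def g_excl_toy(lumi: float, a: float, b: float, beta: float, z: float = 2.0) -> float:
    """Toy exclusion: smallest g > 0 with lumi*(a*g + b*g**2) = z*sqrt(lumi*beta).

    a: interference rate coefficient, b: NP^2 rate coefficient, beta: background rate
    (all hypothetical). Uses the numerically stable root of b*g^2 + a*g - k = 0.
    """
    k = z * math.sqrt(beta / lumi)
    return 2.0 * k / (a + math.sqrt(a * a + 4.0 * b * k))

def improvement(lumi: float, factor: float, a: float, b: float, beta: float) -> float:
    """Ratio g_excl(lumi) / g_excl(factor * lumi)."""
    return g_excl_toy(lumi, a, b, beta) / g_excl_toy(factor * lumi, a, b, beta)

# NP^2 only (a = 0): 16x more luminosity improves g_excl by 16**(1/4) = 2.
r_np2 = improvement(1.0, 16.0, a=0.0, b=100.0, beta=1.0)
# With interference, at large luminosity (small g) the gain approaches 16**(1/2) = 4.
r_int = improvement(1e10, 16.0, a=1.0, b=100.0, beta=1.0)
print(r_np2, r_int)
```

In this toy, the crossover happens once the excluded g falls below roughly a/b, where the linear term starts to dominate the signal yield.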

In Sect. 2, we establish the theory framework and discuss how to leverage interference based on parton-level expressions for the interference-based rate and its kinematic properties. In Sect. 3, we introduce the Monte Carlo (MC) samples that we use for the sensitivity estimate and discuss the event selection that is tailored towards the FCNC signal. In Sect. 4.1, we briefly introduce the setup of the statistical analysis and then describe in Sect. 4.2 the optimisation of the parametrised deep neural network (DNN) that we use for the analysis of the simulated data. The results are given in Sect. 4.3 for the HL-LHC and in Sect. 4.4 for the FCC-hh. We present our conclusions in Sect. 5.

2 \(t\rightarrow cZ\) from on-shell interference in \(t\rightarrow c b{\bar{b}}\)

The focus of this section is the three-body top-quark decay \(t\rightarrow cb{\bar{b}}\) in the presence of an anomalous NP tZc coupling, with emphasis on how to take advantage of NP–SM interference to probe the NP coupling. The decay rate is affected by interference between the NP and SM amplitudes, illustrated in the left and right diagrams of Fig. 1, respectively. The results of this section apply equally to the \(t\rightarrow ub{\bar{b}}\) decay when an anomalous tZu coupling is present. However, this channel is less promising for competitive constraints from an interference-based analysis, since the SM amplitude is strongly CKM suppressed. We thus concentrate on the \(t\rightarrow cb{\bar{b}}\) case.

Given the smallness of the bottom- and charm-quark masses with respect to the top-quark mass, the NP–SM interference is large when the chirality of the NP couplings is the same as that of the SM W-boson contribution, i.e., for left-handed vector couplings \(\bar{t}_L\gamma ^\mu c_L Z_\mu \). In contrast, the NP–SM interference is suppressed by the small b- and c-quark masses if the NP originates from right-handed vector or tensor operators. Therefore, we only consider here the most promising case of anomalous left-handed couplings. The Standard Model Effective Theory (SMEFT) parametrises these couplings in terms of two dimension-six operators,

\[ Q^{(1)}_{\varphi q} = \bigl(\varphi ^\dagger i\overleftrightarrow{D}_\mu \varphi \bigr)\bigl(\bar{q}_p\gamma ^\mu q_r\bigr), \qquad Q^{(3)}_{\varphi q} = \bigl(\varphi ^\dagger i\overleftrightarrow{D}^I_\mu \varphi \bigr)\bigl(\bar{q}_p\tau ^I\gamma ^\mu q_r\bigr). \]

Here, \(\varphi \) is the Higgs doublet, \(q_p\) are the left-handed quark doublets, and p, r are flavour indices, following the conventions of Ref. [35].

In the broken phase, after rotating to the quark-mass eigenstates, these SMEFT operators can induce anomalous tree-level tZc couplings to left-handed quarks, which are the subject of this work. We parametrise them with the phenomenological Lagrangian

with the NP parameter \(g>0\) and the NP phase \(0\le \phi _{\text {NP}}< 2\pi \).Footnote 5 In the up-quark mass basis, the coupling in Eq. (2) is related to the SMEFT Wilson coefficients via \(g e^{i \phi _{\text {NP}}} = \frac{e}{s_wc_w} \frac{v^2}{\Lambda ^2}\bigl (C^{(1)}_{\varphi q;32} - C^{(3)}_{\varphi q;32}\bigr )\), where e is the electromagnetic coupling, \(s_w\) (\(c_w\)) the sine (cosine) of the weak mixing angle, and \(v\simeq 246\) GeV the electroweak vacuum-expectation value.
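The matching relation between the SMEFT Wilson coefficients and \((g, \phi _{\text {NP}})\) can be evaluated numerically as follows. This is a sketch under stated assumptions: the inputs \(\alpha = 1/128\), \(s_w^2 = 0.231\), and \(v = 246\) GeV, as well as the benchmark \(\Lambda = 1\) TeV with \(C^{(1)}_{\varphi q;32} - C^{(3)}_{\varphi q;32} = -1\), are illustrative choices, the Wilson coefficients are assumed to enter the Lagrangian as \(C/\Lambda ^2\), and the function name is hypothetical.

```python
import cmath
import math

def tzc_coupling(c1_minus_c3: complex, lam_gev: float, alpha_em: float = 1.0 / 128.0,
                 sw2: float = 0.231, v_gev: float = 246.0) -> tuple[float, float]:
    """Return (g, phi_NP) from g e^{i phi_NP} = e/(s_w c_w) * v^2/Lambda^2 * (C1 - C3).

    c1_minus_c3 stands for C^(1)_{phi q;32} - C^(3)_{phi q;32}; the electroweak
    inputs are illustrative defaults, not values taken from the text.
    """
    e = math.sqrt(4.0 * math.pi * alpha_em)          # electromagnetic coupling
    sw, cw = math.sqrt(sw2), math.sqrt(1.0 - sw2)    # sine/cosine of the weak mixing angle
    z = e / (sw * cw) * (v_gev / lam_gev) ** 2 * c1_minus_c3
    g = abs(z)
    phi_np = cmath.phase(z) % (2.0 * math.pi)        # map the phase into [0, 2 pi)
    return g, phi_np

# Hypothetical benchmark: C1 - C3 = -1 at Lambda = 1 TeV.
g, phi_np = tzc_coupling(-1.0, 1000.0)
print(g, phi_np)
```

With these inputs, a unit Wilson-coefficient combination at \(\Lambda = 1\) TeV corresponds to \(g \approx 0.045\), a negative real combination gives \(\phi _{\text {NP}} = \pi \), and doubling \(\Lambda \) reduces g by a factor of four.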

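The squared amplitude for the \(t\rightarrow cb{\bar{b}}\) decay contains three terms, the SM\(^2\) term, the NP\(^2\) term, and their interference, i.e.,

\[ |{\mathcal {A}}|^2 = \underbrace{|{\mathcal {A}}_{\text {SM}}|^2}_{\propto g^0} + \underbrace{|{\mathcal {A}}_{\text {NP}}|^2}_{\propto g^2} + \underbrace{2\,\textrm{Re}\bigl({\mathcal {A}}^*_{\text {SM}}{\mathcal {A}}_{\text {NP}}\bigr)}_{\propto g}, \]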

where the underbraces indicate the dependence on the NP parameters. The interference term depends linearly on the NP coupling g and also on the relative, CP-violating phase between the NP and SM contributions:

As indicated by Eq. (3) and discussed further below, the fully differential rate of \(t\rightarrow cb{\bar{b}}\) is sensitive to the interference term and thus potentially sensitive both to a term that is CP-even in the kinematic variables and proportional to \(\cos \phi \), and to a term that is CP-odd and proportional to \(\sin \phi \). The cases \(\phi \in \{0, \pi \}\) lead to a CP-conserving differential rate of \(t\rightarrow cb{\bar{b}}\). In these cases, the SM and NP sources of CP violation are aligned, and the differential rate is insensitive to CP violation.

The coupling scaling of the amplitudes does not capture the dependence on the kinematic variables describing the three-body decay, yet this dependence is essential for designing a search that leverages interference optimally. The \(t\rightarrow cb{\bar{b}}\) kinematics are fully specified by the two invariant masses \(m_{c\bar{b}}^2\equiv (p_c + p_{{\bar{b}}})^2\) and \(m_{b{\bar{b}}}^2\equiv (p_b + p_{{\bar{b}}})^2\). The different topologies of the NP and SM amplitudes (compare the two diagrams in Fig. 1) lead to final states with distinct kinematic configurations: “SM events” originate mostly from on-shell W bosons, i.e., \(m_{c{\bar{b}}} \sim M_W\), whereas “NP events” originate from on-shell Z bosons, i.e., \(m_{b{\bar{b}}}\sim M_Z\). We illustrate this in Fig. 2a, which shows the standard Dalitz plot for the three-body decay in the top-quark rest frame in terms of \(m_{c{\bar{b}}}\) and \(m_{b{\bar{b}}}\). The gray area marks the kinematically allowed phase space. The SM\(^2\) and NP\(^2\) parts of the squared amplitude mainly populate the blue (vertical band) and green (horizontal band) regions, respectively.
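Whether the doubly-on-shell point lies inside the physical region can be checked directly. The sketch below treats c, b, and \(\bar b\) as massless (an assumption made for simplicity) and uses approximate values for \(m_t\), \(M_W\), and \(M_Z\); the helper names are hypothetical. In that limit, the three pair invariant masses squared sum to \(m_t^2\), so the Dalitz region is the triangle \(m_{c\bar b}^2 + m_{b\bar b}^2 \le m_t^2\).

```python
import math

M_TOP, M_W, M_Z = 172.5, 80.4, 91.19  # GeV, approximate values (assumed inputs)

def in_dalitz_region(m_cbbar: float, m_bbbar: float, m_top: float = M_TOP) -> bool:
    """Physical-region test for t -> c b bbar with massless c, b, and bbar.

    For massless daughters m_cbbar^2 + m_bbbar^2 + m_cb^2 = m_top^2 with every term
    non-negative, so the allowed region is the triangle m_cbbar^2 + m_bbbar^2 <= m_top^2.
    """
    return m_cbbar >= 0.0 and m_bbbar >= 0.0 and m_cbbar**2 + m_bbbar**2 <= m_top**2

def third_pair_mass(m_cbbar: float, m_bbbar: float, m_top: float = M_TOP) -> float:
    """Invariant mass of the remaining c-b pair from the massless sum rule."""
    return math.sqrt(m_top**2 - m_cbbar**2 - m_bbbar**2)

doubly_on_shell_ok = in_dalitz_region(M_W, M_Z)  # W and Z simultaneously on-shell
m_cb = third_pair_mass(M_W, M_Z)
print(doubly_on_shell_ok, m_cb)
```

Since \(M_W^2 + M_Z^2\) is only about half of \(m_t^2\), the point \((M_W, M_Z)\) lies well inside the Dalitz region, with the remaining c–b pair mass at roughly 122 GeV.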

Fig. 2 In a, the Dalitz plot for the three-body decay \(t\rightarrow cb{\bar{b}}\) in the rest frame of the top quark in terms of the two invariant masses \(m_{b{\bar{b}}}\) and \(m_{c{\bar{b}}}\). The gray area is the kinematically allowed region. The dotted vertical and horizontal lines indicate resonant W- and Z-boson production, respectively (also shown in b and c). “Pure SM” events predominantly populate the vertical blue region, whereas “pure NP” events populate the horizontal green region. The red region marks the doubly-on-shell region, in which NP–SM interference is largest. In b and c, we show the doubly differential branching ratio, \(\nicefrac {\textrm{d}^2\mathcal {B}^{\cos /\sin }_{\text {Int}}}{\textrm{d} m_{b{\bar{b}}}\textrm{d} m_{c{\bar{b}}}}\), originating from NP–SM interference proportional to \(g\cos \phi \) and \(g \sin \phi \), respectively. The plot ranges correspond to the doubly-on-shell region (red region in a), and the dotted rectangle centered at the doubly-on-shell point has width \(\Gamma _W\) and height \(\Gamma _Z\). Brown regions correspond to negative and green regions to positive contributions to the branching ratio.

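The W- and Z-boson widths (\(\Gamma _W\), \(\Gamma _Z\)) control the size of deviations from the on-shell case, i.e., the widths of the vertical and horizontal bands in Fig. 2a. This is best seen by employing the Breit–Wigner approximation for the denominators of the massive vector-boson propagators,

\[ \frac{1}{m_{c{\bar{b}}}^2 - M_W^2 + i M_W\Gamma _W}, \qquad \frac{1}{m_{b{\bar{b}}}^2 - M_Z^2 + i M_Z\Gamma _Z}, \]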

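which enhance the SM amplitude when \(m_{c{\bar{b}}} \sim M_W\) and the NP amplitude when \(m_{b{\bar{b}}}\sim M_Z\). By integrating over the full phase space and taking the narrow-width approximation \(\Gamma _W/M_W,\Gamma _Z/M_Z\ll 1\), we recover the usual relations for the fully inclusive branching ratios originating from the SM\(^2\) and NP\(^2\) terms in Eq. (3):

\[ \mathcal {B}_{\text {SM}} = \mathcal {B}(t\rightarrow bW)_{\text {SM}}\,\mathcal {B}(W\rightarrow c{\bar{b}})_{\text {SM}}, \qquad \mathcal {B}_{\text {NP}} = \mathcal {B}(t\rightarrow cZ)\,\mathcal {B}(Z\rightarrow b{\bar{b}})_{\text {SM}}, \]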

with \(\mathcal {B}(W \rightarrow c{\bar{b}})_{\text {SM}}\propto M_W/\Gamma _W\) and \(\mathcal {B}(Z \rightarrow b{\bar{b}})_{\text {SM}}\propto M_Z/\Gamma _Z\). We collect the expressions for the two-body branching fractions in Appendix A. However, as we shall demonstrate next, the interference is large in the small phase-space region in which both W and Z bosons are on-shell (red region in Fig. 2a):

Explicit computation shows that the NP\(^2\) and SM\(^2\) rates in this doubly-on-shell region are parametrically suppressed by the widths and masses of the Z/W bosons with respect to their inclusive values in Eq. (6)

The net effect is that \(\mathcal {B}_{\text {NP/SM}}^{\text {doubly on-shell}}\) are neither enhanced by \(M_{Z/W}/\Gamma _{Z/W}\) nor suppressed by \(\Gamma _{Z/W}/M_{Z/W}\) factors, because the width suppression in Eq. (8) compensates the \(1/\Gamma _{Z/W}\) scaling of the inclusive rates, \(\mathcal {B}_{\text {NP/SM}}\propto 1/\Gamma _{Z/W}\). This relative suppression is welcome, since both contributions constitute a background for the interference-based analysis we are proposing.
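The \(1/\Gamma \) scaling of the inclusive NP\(^2\) and SM\(^2\) rates stems from the integral of the squared Breit–Wigner factor, \(\int \textrm{d}s\,|s-M^2+iM\Gamma |^{-2} = \pi /(M\Gamma )\). A short numerical sketch, using a midpoint rule and approximate Z-boson parameters as assumed inputs (function names hypothetical), checks this and shows that only half of the integral lies within \(|s-M^2| < M\Gamma \).

```python
import math

M_Z, GAMMA_Z = 91.19, 2.50  # GeV, approximate values (assumed inputs)

def bw_sq_integral_exact(mass: float, width: float, k: float) -> float:
    """Integral of |1/(s - M^2 + i M Gamma)|^2 over the window |s - M^2| < k M Gamma."""
    c = mass * width
    return 2.0 * math.atan(k) / c

def bw_sq_integral_numeric(mass: float, width: float, k: float, n: int = 100_000) -> float:
    """Midpoint-rule evaluation of the same integral, as a cross-check."""
    c = mass * width
    h = 2.0 * k * c / n
    total = 0.0
    for i in range(n):
        x = -k * c + (i + 0.5) * h  # x = s - M^2
        total += 1.0 / (x * x + c * c)
    return total * h

full = math.pi / (M_Z * GAMMA_Z)                # k -> infinity limit, proportional to 1/Gamma
core = bw_sq_integral_exact(M_Z, GAMMA_Z, 1.0)  # contribution from |s - M^2| < M Gamma
halved = bw_sq_integral_exact(M_Z, GAMMA_Z / 2, 1e6) / bw_sq_integral_exact(M_Z, GAMMA_Z, 1e6)
print(core / full, halved)
```

Halving \(\Gamma \) doubles the integral, which is the origin of \(\mathcal {B}(Z\rightarrow b{\bar{b}})_{\text {SM}}\propto M_Z/\Gamma _Z\) quoted above.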

In contrast to “pure SM” and “pure NP” events, “interference-based” events predominantly populate the doubly-on-shell phase-space region, since \(2\textrm{Re}(\mathcal{A}^*_{\text {SM}} {{{\mathcal {A}}}}_{\text {NP}})\) is proportional to the product of W- and Z-boson Breit–Wigner propagators. Summing over final-state polarisations and averaging over the top-quark polarisation we find the double-differential branching ratio originating from the interference term in Eq. (3) to be

with \(N_{\text {Int}} = e^3(3-2s_w^2)|V_{cb}||V_{tb}|/(1536\pi ^3c_ws_w^3)\). The last line defines a shorthand notation for the terms proportional to \(g\cos \phi \) and \(g\sin \phi \). In Fig. 2b and c, we show \(\textrm{d}^2\mathcal {B}_{\text {Int}}^{\cos }\) and \(\textrm{d}^2\mathcal {B}_{\text {Int}}^{\sin }\), respectively, in terms of the two Dalitz variables. Brown regions have a negative rate and green regions a positive one. The intersection of the dotted vertical and horizontal lines corresponds to the doubly-on-shell point, and we have overlaid a rectangle with width \(\Gamma _W\) and height \(\Gamma _Z\). Equation (9) and its illustration in Fig. 2b and c contain the parametric dependences that underpin the idea of leveraging interference to probe anomalous tZc couplings:

(i) The denominator in the first line of Eq. (9) stems from the product of the W- and Z-boson Breit–Wigner propagators, see Eq. (5). This product enhances the rate from interference in the doubly-on-shell region, with the enhancement regulated by both \(\Gamma _W\) and \(\Gamma _Z\). The enhancement of the doubly-on-shell region relative to the rest of phase space is best seen in Fig. 2b and c for \(\textrm{d}^2\mathcal {B}_{\text {Int}}^{\cos }\) and \(\textrm{d}^2\mathcal {B}_{\text {Int}}^{\sin }\). The main part of the integrated rate comes from the phase-space region close to the doubly-on-shell region.

(ii) The rate from interference contains terms proportional to both \(\cos \phi \) and \(\sin \phi \). Interference is present regardless of whether there is CP violation in the decay (\(\sin \phi \ne 0\)) or not (\(\cos \phi =\pm 1\)). However, the CP-odd term proportional to \(\sin \phi \) is odd under the combined interchange \(W\leftrightarrow Z\) and \(m_{b{\bar{b}}}\leftrightarrow m_{c{\bar{b}}}\) in Eq. (9), see also Fig. 2c for \(\textrm{d}^2\mathcal {B}_{\text {Int}}^{\sin }\). Consequently, the integrated rate proportional to \(g\sin \phi \) vanishes in the symmetric case \(M_W=M_Z\). A measurement of the phase \(\phi \) thus requires separating events within the doubly-on-shell region, which is experimentally extremely challenging given the jet energy resolution. In contrast, the integrated rate proportional to \(g \cos \phi \) is even under the aforementioned interchange and does not vanish after integration, see Fig. 2b for \(\textrm{d}^2\mathcal {B}_{\text {Int}}^{\cos }\). A dedicated search in the doubly-on-shell region is thus potentially sensitive to \(g\cos \phi \).

In Sect. 3, we use MC techniques, including a simplified detector simulation, to simulate events in the doubly-on-shell region based on the full matrix elements that lead to Eq. (9) and to the corresponding expressions for the NP\(^2\) and SM\(^2\) terms. To obtain a first rough estimate of the rate from interference and to illustrate the parametric dependences, we present here an approximate phase-space integration of the rate in Eq. (9). Most of the rate originates from events in the doubly-on-shell region, see (i) above. We thus keep the \(m_{b{\bar{b}}}\) and \(m_{c\bar{b}}\) dependence in the Breit–Wigner denominators but set \(m_{b\bar{b}}=M_Z\) and \(m_{c{\bar{b}}}=M_W\) in the remaining squared amplitude. We then perform the approximate phase-space integration by integrating over the Breit–Wigner factors via

to obtain a rough estimate of the integrated, interference-based rate

We stress that this is only a rough approximation. In fact, the approximation overestimates the rate by a factor of two with respect to properly integrating Eq. (9) over the physical kinematic region and including the full \(m_{b{\bar{b}}}\) and \(m_{c\bar{b}}\) dependence.
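The key property behind this approximate integration, namely that the integral of a single Breit–Wigner factor does not depend on the width, can be verified numerically. In this sketch (assumed inputs: approximate W/Z parameters and symmetric windows \(|s-M^2|<kM\Gamma \) around each pole; function name hypothetical), each integral equals \(-2i\arctan k\), independent of \(\Gamma \), and the integrated product \(P_W^* P_Z\) is real and tends to \(\pi ^2\). Assuming, as in the approximation above, that the remaining amplitude factor is a real constant times \(e^{i\phi }\), only a term proportional to \(\cos \phi \) then survives, with an overall sign set by that factor, which the sketch does not model.

```python
import math

M_W, GAMMA_W = 80.4, 2.09   # GeV, approximate values (assumed inputs)
M_Z, GAMMA_Z = 91.19, 2.50

def bw_window_integral(mass: float, width: float, k: float, n: int = 100_000) -> complex:
    """Midpoint-rule integral of 1/(s - M^2 + i M Gamma) over |s - M^2| < k M Gamma."""
    c = mass * width
    h = 2.0 * k * c / n
    total = 0j
    for i in range(n):
        x = -k * c + (i + 0.5) * h  # x = s - M^2
        total += 1.0 / (x + 1j * c)
    return total * h

k = 100.0
i_w = bw_window_integral(M_W, GAMMA_W, k)
i_z = bw_window_integral(M_Z, GAMMA_Z, k)
# Integrated product P_W^* P_Z entering 2 Re(A_SM^* A_NP): real, approaching pi^2 for large k.
product = i_w.conjugate() * i_z
# Re(e^{i phi} * product) = cos(phi) * Re(product) - sin(phi) * Im(product); Im(product) ~ 0.
print(i_z, -2j * math.atan(k))
print(product, math.pi**2)
```

No factor of \(\Gamma _{W/Z}/M_{W/Z}\) appears, in line with the statement that \(\mathcal {B}_{\text {Int}}\) is not width-suppressed.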

As expected from the discussion in (ii) above, Eq. (10) does not contain \(g \sin \phi \) terms. The resulting rate is positive (constructive interference) when \(\cos \phi <0\) and negative (destructive interference) when \(\cos \phi >0\), see the colour map of \(\textrm{d}^2\mathcal {B}_{\text {Int}}^{\cos }\) in Fig. 2b. For this reason, in the following sections we concentrate on the case of constructive interference by choosing

\[ \phi = \pi . \]

While it may also be possible to search for destructive interference, i.e., a deficit of events in the doubly-resonant phase space, as for example employed in searches for heavy scalars [36, 37] that decay to \(t{\overline{t}}\), we will not pursue this direction here. Equation (10) also illustrates that \(\mathcal {B}_{\text {Int}}\) is not suppressed by factors of \({\Gamma _{W/Z}}/{M_{W/Z}}\). As discussed below Eq. (8), the same holds for the NP\(^2\) and SM\(^2\) rates in the doubly-on-shell region, \({\mathcal {B}_{\text {NP/SM}}^{\text {doubly on-shell}}}\). Therefore, the interference-based rate can compete with the NP\(^2\) rate for sufficiently small g if the analysis targets the doubly-on-shell region. In what follows we investigate the experimental viability of such a dedicated search.

3 Simulated samples and event selection

We generated MC samples with MadGraph5_aMC@NLO 3.2.0 (MG5) [38] using a custom UFO [39] model that includes the contact tZc coupling as parametrised in Eq. (2), with \(\phi =\pi \) (see the discussion around Eq. (11)), in addition to the full SM Lagrangian with a non-diagonal CKM matrix. All matrix elements are calculated at leading order in perturbative QCD. We validated the custom model by simulating the decay \(t \rightarrow c b {\bar{b}}\) and comparing the distribution of events in the two-dimensional plane spanned by the Dalitz variables \(m_{c{\bar{b}}}^2\) and \(m_{b{\bar{b}}}^2\) (cf. Sect. 2) with the expectation from the explicit calculation (Fig. 2).

In the following, we simulate proton–proton collisions at a centre-of-mass energy of \(14\,\)TeV. The structure of the proton is parametrised with the NNPDF2.3LO set of parton distribution functions [40]. Factorisation and renormalisation scales are set dynamically event-by-event to the transverse mass of the irreducible \(2\rightarrow 2\) system resulting from a \(k_{\textrm{T}}\) clustering of the final-state particles [41]. We separately simulate the FCNC contribution (\(\propto g^2\)), referred to as NP\(^2\) in Sect. 2, and the interference contribution (\(\propto g\)) to the signal process \(t\bar{t} \rightarrow c b {\bar{b}}\,\mu ^-\bar{\nu }_\mu \bar{b}\), whereas the SM contribution to this process is treated as irreducible background. For simplicity, we only simulate the muon channel. All reducible background processes involve top-quark pair production with subsequent decay in the lepton\(+\)jets channel, in which the W boson from the hadronically decaying top quark decays into first- or second-generation quarks q and \(q'\). Besides this six-particle final state (\(b{\bar{q}} q'\,\mu ^-\bar{\nu }_\mu \bar{b}\)), we also simulate resonant production of additional bottom quarks from \(t {\bar{t}} Z(\rightarrow b {\bar{b}})\) and non-resonant contributions from \(t {\bar{t}} b {\bar{b}}\) and \(t {\bar{t}} c {\bar{c}}\). We do not simulate several other small background processes, such as \(W^{-}+\textrm{jets}\) production, diboson production with additional jets, or \(t {\bar{t}} H\) production, because their contribution is expected to be negligible owing to either their low cross section or their very different kinematic properties.

We only generate muons and final-state partons with transverse momenta larger than 20 GeV and require final-state partons to have a minimum angular distanceFootnote 6 of \(\Delta R = 0.4\) to each other, motivated by the minimum angular distance obtained with jet clustering algorithms. We require the same angular distance between final-state partons and the muon in order to mimic a muon isolation criterion. For events in the six-particle final state, i.e., signal and background contributions to \(c b{\bar{b}}\,\mu ^-\bar{\nu }_\mu \bar{b}\) as well as the reducible background \(b{\bar{q}} q'\,\mu ^-\bar{\nu }_\mu \bar{b}\), we require muons and final-state partons to be in the central region of the detector (\(|\eta |< 2.5\)).
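The generator-level requirements can be written as a simple filter. This is an illustrative sketch: objects are hypothetical (\(p_{\text {T}}\), \(\eta \), \(\phi \)) tuples, \(\Delta R\) is assumed to be the standard \(\sqrt{\Delta \eta ^2+\Delta \phi ^2}\) with \(\Delta \phi \) wrapped into \([-\pi , \pi )\), the function names are hypothetical, and the central-region requirement is applied only when requested, as for the six-particle final states.

```python
import math

def delta_r(eta1: float, phi1: float, eta2: float, phi2: float) -> float:
    """Angular distance sqrt(d_eta^2 + d_phi^2), with d_phi wrapped into [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)

def passes_generator_cuts(partons, muon, require_central=False,
                          pt_min=20.0, dr_min=0.4, eta_max=2.5) -> bool:
    """partons: list of (pt, eta, phi) tuples; muon: a single (pt, eta, phi) tuple."""
    objects = list(partons) + [muon]
    if any(pt <= pt_min for pt, _, _ in objects):
        return False
    # Parton-parton separation (jet-clustering proxy) and parton-muon separation (isolation proxy).
    for i, (_, eta_i, phi_i) in enumerate(partons):
        for _, eta_j, phi_j in list(partons[i + 1:]) + [muon]:
            if delta_r(eta_i, phi_i, eta_j, phi_j) < dr_min:
                return False
    if require_central and any(abs(eta) >= eta_max for _, eta, _ in objects):
        return False
    return True

partons = [(50.0, 0.0, 0.0), (40.0, 1.0, 1.5), (30.0, -1.0, 3.0), (25.0, 0.5, -2.0)]
muon = (30.0, -0.5, -1.0)
print(passes_generator_cuts(partons, muon, require_central=True))
```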

For simplicity, we do not use a parton shower in our studies. Instead, we smear the parton-level objects by the detector resolution in order to approximate detector-level jets, muons, and missing transverse momentum. The jet resolution is parametrised as \(\sigma (p_\text {T})/p_\text {T} = -0.334 \cdot \exp (-0.067 \cdot p_\text {T})+5.788/p_\text {T}+0.039\), where the transverse momentum, \(p_{\text {T}}\), is in units of GeV. We obtain this parametrisation from a fit to values from the ATLAS experiment [42]. We recalculate the energy of each jet based on the smeared \(p_\text {T}\) with the jet direction unchanged. We smear the x- and y-components of the missing transverse-momentum vector independently by adding a random number drawn from a Gaussian distribution with mean zero and standard deviation of 24 GeV [43]. We then calculate the scalar missing transverse momentum and the corresponding azimuthal angle. We take the muon transverse momentum resolution to be 2% [44, 45] with no kinematic dependence.
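The smearing procedure described above can be sketched with NumPy. The sketch assumes Gaussian smearing for all quantities (the shape of the jet-response distribution is an assumption here), keeps the jet mass fixed when recomputing the energy, and represents four-momenta as hypothetical (E, px, py, pz) tuples in GeV; the function names are hypothetical.

```python
import numpy as np

def jet_resolution(pt):
    """Relative jet pT resolution sigma(pT)/pT from the fit quoted in the text (pT in GeV)."""
    return -0.334 * np.exp(-0.067 * pt) + 5.788 / pt + 0.039

def smear_jet(p4, rng):
    """Gaussian-smear the jet pT, keep its direction (eta, phi) and mass, recompute E."""
    e, px, py, pz = p4
    mass2 = max(e**2 - px**2 - py**2 - pz**2, 0.0)
    pt = np.hypot(px, py)
    pt_smeared = max(pt * (1.0 + jet_resolution(pt) * rng.standard_normal()), 0.0)
    scale = pt_smeared / pt                      # common factor keeps eta and phi unchanged
    px, py, pz = scale * px, scale * py, scale * pz
    return (np.sqrt(px**2 + py**2 + pz**2 + mass2), px, py, pz)

def smear_met(met_x, met_y, rng, sigma=24.0):
    """Add independent Gaussian noise (mean 0, width sigma in GeV) to both MET components."""
    mx = met_x + rng.normal(0.0, sigma)
    my = met_y + rng.normal(0.0, sigma)
    return np.hypot(mx, my), np.arctan2(my, mx)  # scalar MET and its azimuthal angle

def smear_muon_pt(pt, rng, rel_res=0.02):
    """Flat 2% relative muon pT resolution without kinematic dependence."""
    return pt * (1.0 + rel_res * rng.standard_normal())

rng = np.random.default_rng(seed=1)
jet = smear_jet((100.0, 60.0, 0.0, 80.0), rng)
met, met_phi = smear_met(30.0, 40.0, rng)
mu_pt = smear_muon_pt(50.0, rng)
print(jet, met, met_phi, mu_pt)
```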

We select events with criteria that are typical for top-quark analyses by the CMS and ATLAS collaborations. We require the muon to be in the central region of the detector (\(|\eta |< 2.5\)) and to have a transverse momentum larger than 25 GeV to mimic typical single-muon trigger thresholds [46, 47]. We do not take trigger, identification, or isolation efficiencies into account. We only accept events with exactly four central jets (\(|\eta |< 2.5\)) to reduce the contamination from the reducible background processes with higher jet multiplicity. Each jet has to have a transverse momentum larger than 25 GeV and we require the missing transverse momentum to be at least 30 GeV.
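The detector-level selection can likewise be expressed as a filter. In this hypothetical sketch, objects are (\(p_{\text {T}}\), \(\eta \)) pairs after smearing, and "exactly four central jets" is interpreted as exactly four jets with \(p_{\text {T}} > 25\) GeV and \(|\eta | < 2.5\); trigger, identification, and isolation efficiencies are ignored, as in the text.

```python
def passes_selection(muon, jets, met, mu_pt_min=25.0, jet_pt_min=25.0,
                     eta_max=2.5, met_min=30.0, n_jets=4) -> bool:
    """muon: (pt, eta); jets: list of (pt, eta); met: scalar missing pT; all in GeV."""
    mu_pt, mu_eta = muon
    if mu_pt <= mu_pt_min or abs(mu_eta) >= eta_max:
        return False                   # central muon above a single-muon-trigger-like threshold
    central_jets = [j for j in jets if j[0] > jet_pt_min and abs(j[1]) < eta_max]
    if len(central_jets) != n_jets:
        return False                   # exactly four central jets
    return met >= met_min              # missing transverse momentum of at least 30 GeV

jets = [(60.0, 0.1), (45.0, -1.2), (35.0, 2.0), (28.0, 0.7)]
print(passes_selection((30.0, 0.5), jets, 40.0))
```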

Given the signal final state, \(c b {\bar{b}}\,\mu ^-\bar{\nu }_\mu \bar{b}\), we require the four jets in the event to satisfy the following b-tagging criteria. Three jets have to pass a b-tagging criterion with a b-tagging efficiency of 70% and corresponding mis-identification efficiencies of 4% and 0.15% for c-jets and light jets, respectively [48]. The fourth jet, which in signal events is often the c-jet, has to pass a looser b-tagging criterion with a b-tagging efficiency of 91% and a correspondingly larger efficiency for c-jets [48]. The mis-identification efficiency for light jets of this looser criterion is 5%. We optimise the b-tagging selection by choosing among various combinations of b-tagging criteria with different b-tagging and corresponding mis-tagging efficiencies. Using a benchmark coupling value of \(g=0.01\) and an integrated luminosity of \(3000\,\textrm{fb}^{-1}\), we choose the combination with the highest value of \(S/\sqrt{S+B}\), where S and B are the total numbers of weighted signal and background events, respectively, calculated by sampling jet tags according to the b-tagging efficiencies for the different jet flavours (S contains both the FCNC and the interference contributions).

Instead of removing events that did not pass the b-tagging criteria, we weight events by the total b-tagging probability to avoid large uncertainties due to the limited size of the MC datasets. We weight events in samples for the six-particle final states, where we required all four partons to be central already at generator-level, by a factor of \(\varepsilon _{\textrm{4j}} = 0.5\), as roughly half of the events in top-quark pair production at the LHC have more than four jets due to additional radiation [49]. We use k-factors to scale the MG5 leading-order cross sections of the MC samples to higher orders in perturbation theory. For the six-particle final states associated with top-quark pair production, we use a value of \(986\,\textrm{pb}\) as calculated at next-to-next-to-leading order in QCD including next-to-next-to-leading logarithmic soft gluon resummation [50]. For \(t {\bar{t}} b {\bar{b}}\) and \(t {\bar{t}} c {\bar{c}}\), we use cross sections of \(3.39\,\textrm{pb}\) and \(8.9\,\textrm{pb}\), respectively, as calculated with MG5 at next-to-leading order [51]. For \(t {\bar{t}} Z\) production, we use a cross section of \(1.015\,\textrm{pb}\), which includes next-to-leading order QCD and electroweak corrections [52]. Table 1 summarizes the efficiencies of the event selection, the MG5 leading-order cross sections, the k-factors, the b-tagging efficiencies, and the expected number of events for an integrated luminosity of \(3000\,\textrm{fb}^{-1}\).
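The per-event weighting can be sketched as follows. This is an illustrative reading of the procedure, not the authors' code: it assumes nested working points (every jet passing the tight criterion also passes the loose one), interprets the requirement as "at least three tight tags and all four jets passing the loose criterion", and uses only efficiencies quoted in the text. The loose-working-point c-jet efficiency is not quoted, so it would have to be supplied by the user; the helper names are hypothetical.

```python
import math

# (tight 70% WP, loose 91% WP) efficiencies quoted in the text.
# The loose-WP c-jet efficiency is not quoted; it must be supplied explicitly if needed.
EFF_B = (0.70, 0.91)
EFF_LIGHT = (0.0015, 0.05)

def btag_event_probability(effs):
    """P(>= 3 of 4 jets pass tight and all 4 pass loose), assuming nested working points.

    effs: one (p_tight, p_loose) pair per jet, with p_tight <= p_loose.
    """
    p_all_tight = math.prod(p_t for p_t, _ in effs)
    # Exactly one jet passes only the loose criterion, all others pass the tight one.
    p_one_loose_only = sum(
        (p_l - p_t) * math.prod(q_t for i, (q_t, _) in enumerate(effs) if i != j)
        for j, (p_t, p_l) in enumerate(effs)
    )
    return p_all_tight + p_one_loose_only

def event_weight(sigma_lo_fb, k_factor, lumi_fb, n_generated, effs, eps_4j=1.0):
    """Expected-yield weight: LO cross section times k-factor and luminosity, per generated event."""
    return sigma_lo_fb * k_factor * lumi_fb / n_generated * eps_4j * btag_event_probability(effs)

p_four_b = btag_event_probability([EFF_B] * 4)
w = event_weight(1000.0, 1.0, 3000.0, 1_000_000, [EFF_B] * 4, eps_4j=0.5)
print(p_four_b, w)
```

For four b-jets, the probability is \(0.7^4 + 4 \times 0.21 \times 0.7^3 \approx 0.528\); an event with two light jets receives a weight suppressed by several orders of magnitude.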

Fig. 3 Expected number of events for 3000 fb\(^{-1}\) in the \(m_{W{,}\text {reco}}\) vs. \(m_{Z{,}\text {reco}}\) plane (in bins of 2 GeV \( \times \) 2 GeV) for the representative values \(g=0.01\) and \(\cos \phi =-1\): in a from the pure FCNC contribution, in b and c from the interference contribution with positive and negative event weights, respectively, and in d from the sum of the background processes.

To show the detector-level distribution of the expected number of events for \(3000\,\textrm{fb}^{-1}\), we define the variables \(m_{W\textrm{,reco}}\) and \(m_{Z\textrm{,reco}}\) in analogy to the parton-level Dalitz variables \(m_{c{\bar{b}}}\) and \(m_{b{\bar{b}}}\) (cf. Sect. 2). For each event, the three jets with invariant mass closest to the top-quark mass form the hadronically decaying top-quark candidate. Of these three jets, we take the jet with the lowest sampled b-tag score to be the c-jet; in case of a tie, we choose the jet with the higher \(p_{\textrm{T}}\). The invariant mass of the two remaining jets is \(m_{Z{,}\text {reco}}\). We then calculate the invariant mass of the c-jet candidate combined with each of the two remaining jets of the hadronic top-quark candidate and take the one closer to \(M_W\) as \(m_{W\textrm{,reco}}\). In Fig. 3, we show the expected number of events for 3000 fb\(^{-1}\) in the two-dimensional plane spanned by \(m_{W\textrm{,reco}}\) and \(m_{Z\textrm{,reco}}\) for the different contributions: events from the pure FCNC contribution (Fig. 3a), from constructive interference (Fig. 3b), from destructive interference (Fig. 3c), and from the sum of all background processes (Fig. 3d). The distributions in Fig. 3b and c are in qualitative agreement with the parton-level result proportional to \(g\cos \phi \) shown in Fig. 2b, although they are more spread out due to the finite detector resolution. The characteristic differences between the pure FCNC, interference, and background contributions nevertheless remain visible.
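The reconstruction of \(m_{W\textrm{,reco}}\) and \(m_{Z\textrm{,reco}}\) can be sketched in Python. The jets are hypothetical (E, px, py, pz) tuples in GeV, the b-tag scores are assumed to have been sampled beforehand, the reference values for \(m_t\) and \(M_W\) are assumptions, and the function names are hypothetical.

```python
import itertools
import math

M_TOP, M_W = 172.5, 80.4  # GeV, assumed reference values

def inv_mass(*p4s):
    """Invariant mass of a sum of (E, px, py, pz) four-vectors."""
    e, px, py, pz = (sum(p[i] for p in p4s) for i in range(4))
    return math.sqrt(max(e * e - px * px - py * py - pz * pz, 0.0))

def pt(p4):
    return math.hypot(p4[1], p4[2])

def reconstruct_masses(jets, btag_scores):
    """Return (m_W_reco, m_Z_reco) for one event with four jets."""
    # Hadronic top candidate: jet triplet with invariant mass closest to M_TOP.
    triplet = min(itertools.combinations(range(len(jets)), 3),
                  key=lambda idx: abs(inv_mass(*(jets[i] for i in idx)) - M_TOP))
    # c-jet candidate: lowest b-tag score in the triplet; ties go to the higher-pT jet.
    c = min(triplet, key=lambda i: (btag_scores[i], -pt(jets[i])))
    j1, j2 = (i for i in triplet if i != c)
    m_z = inv_mass(jets[j1], jets[j2])
    # m_W,reco: c-jet paired with either remaining jet, whichever is closer to M_W.
    m_w = min((inv_mass(jets[c], jets[j]) for j in (j1, j2)), key=lambda m: abs(m - M_W))
    return m_w, m_z

# Hypothetical massless jets (E, px, py, pz) and sampled b-tag scores.
jets = [(40.0, 40.0, 0.0, 0.0), (40.0, -40.0, 0.0, 0.0),
        (50.0, 0.0, 50.0, 0.0), (10.0, 0.0, 0.0, 10.0)]
m_w, m_z = reconstruct_masses(jets, [0.9, 0.2, 0.8, 0.95])
print(m_w, m_z)
```

For this event, the triplet (0, 1, 2) with mass 120 GeV is selected, jet 1 is the c-jet candidate, \(m_{Z\textrm{,reco}} = \sqrt{4000} \approx 63.2\) GeV, and \(m_{W\textrm{,reco}} = 80\) GeV.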

4 Sensitivity at hadron colliders

Next, we estimate the sensitivity of the interference-based approach to the tZc FCNC coupling in the form of expected upper limits on the coupling constant g and compare it with the traditional approach that focuses on the leptonic decay of the Z boson. The statistical methodology is briefly outlined in Sect. 4.1. To separate the FCNC signal, which comprises both the pure FCNC and the interference contributions, from the background, we use a classifier based on deep neural networks (DNNs). We parametrise the DNN as a function of the FCNC coupling g to obtain optimal separation over a large range of coupling values. The architecture and the optimisation of the DNN are explained in Sect. 4.2. The prospects at the HL-LHC are presented in Sect. 4.3, and Sect. 4.4 contains estimates of the sensitivity to g in various future scenarios. The section concludes with a comparison to other approaches to constrain tZc FCNC couplings in Sect. 4.5.

4.1 Outline of the statistical methods

Our metric for the sensitivity to the tZc FCNC coupling is the 95% CL expected upper limit on g, since this allows for a straightforward comparison with existing searches. The upper limit is derived as follows. We create pseudo-measurements by sampling from the background-only histogram, assuming a Poisson distribution for the counts per bin. Motivated by the Neyman–Pearson lemma [53], we construct a likelihood-ratio test statistic, t, for each pseudo-measurement by comparing its bin counts \(\textbf{x}\) with the expectation values from the MC simulation under the \(s\!+\!b\) hypothesis, \(\mathbf {\lambda }_{s+b}\), and under the b-only hypothesis, \(\mathbf {\lambda }_{b}\):

The nominal expected upper limit on the coupling strength, \(g_\textrm{excl}\), is obtained with the CL\(_\textrm{s}\) method [54] as the median over all pseudo-measurements generated under the background-only hypothesis.
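A minimal NumPy sketch of this toy-based procedure follows. It assumes the common Poisson form of the test statistic, \(t=-2\sum _i[x_i\ln (\lambda _{s+b,i}/\lambda _{b,i})-(\lambda _{s+b,i}-\lambda _{b,i})]\), whose normalisation may differ from the expression used in the analysis; it evaluates CL\(_\textrm{s}\) at the median of the background-only distribution of t and assumes that CL\(_\textrm{s}\) decreases monotonically with g. The function names and the callable `expected_hists(g)` returning \((\mathbf {\lambda }_{s+b}, \mathbf {\lambda }_{b})\) are hypothetical.

```python
import numpy as np


def llr(x, lam_sb, lam_b):
    """Assumed test statistic -2 ln[L(x|s+b) / L(x|b)] for independent Poisson bins."""
    # The x! terms cancel in the ratio; larger t means more background-like.
    return -2.0 * np.sum(x * np.log(lam_sb / lam_b) - (lam_sb - lam_b), axis=-1)


def expected_cls(lam_sb, lam_b, n_toys=20000, seed=1):
    """Median expected CLs under the background-only hypothesis, from Poisson toys."""
    rng = np.random.default_rng(seed)
    t_b = llr(rng.poisson(lam_b, size=(n_toys, lam_b.size)), lam_sb, lam_b)
    t_sb = llr(rng.poisson(lam_sb, size=(n_toys, lam_sb.size)), lam_sb, lam_b)
    t_med = np.median(t_b)           # test statistic of the median b-only experiment
    cl_sb = np.mean(t_sb >= t_med)   # p-value of the s+b hypothesis
    cl_b = np.mean(t_b >= t_med)     # about 0.5 by construction
    return cl_sb / cl_b


def expected_limit(expected_hists, g_grid, alpha=0.05):
    """Smallest g on the grid with expected CLs < alpha (assumes monotonicity in g)."""
    for g in sorted(g_grid):
        lam_sb, lam_b = expected_hists(g)
        if expected_cls(lam_sb, lam_b) < alpha:
            return g
    return None
```

In a parametrised analysis, `expected_hists(g)` would return the histograms of the discriminant obtained with the network evaluated at that g, so that \(\mathbf {\lambda }_{b}\) also changes with g.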

4.2 Optimisation of the parametrised deep neural networks

Resolution effects, in particular the jet-energy resolution, and wrong assignments of jets to the decay branches complicate the reconstruction of invariant masses at detector level and motivate the use of machine-learning techniques to optimise the separation of signal and background in a high-dimensional space. We use the following 31 variables for the training of the DNN: for the b-tagged jets, their transverse momenta, pseudorapidities, azimuthal angles, energies and the highest-efficiency b-tagging working point that the jet passes; for the single muon, its transverse momentum, pseudorapidity and azimuthal angle; for the missing transverse momentum, its magnitude and azimuthal angle. The values of all azimuthal angles \(\phi \) are replaced by the combination of \(\sin \phi \) and \(\cos \phi \) due to the periodicity of the azimuthal angle. The natural logarithm is applied to all transverse momentum and energy spectra and the missing transverse momentum spectrum, as these variables have large positive tails. The dataset is split with fractions of 60% : 20% : 20% into training, validation and test sets. As a last step, all variables are studentised using \(y_i' = (y_i - \mu )/\sigma \), where \(\mu \) refers to the arithmetic mean of the respective variable and \(\sigma \) is the estimated standard deviation.
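Counting the listed inputs with each azimuthal angle replaced by its sine and cosine gives \(4\times 6=24\) jet features (assuming the four b-tagged jets of the signal region), 4 muon features and 3 missing-momentum features, i.e. 31 in total. The preprocessing chain can be sketched in NumPy as below; the column bookkeeping and the choice to compute \(\mu \) and \(\sigma \) on the training set only are assumptions, and the function names are hypothetical.

```python
import numpy as np


def transform(X, phi_cols, log_cols):
    """Replace each azimuthal angle by (sin, cos) and take ln of positive-tailed columns."""
    out = []
    for j in range(X.shape[1]):
        col = X[:, j]
        if j in phi_cols:
            out += [np.sin(col), np.cos(col)]  # periodic angle -> two smooth features
        elif j in log_cols:
            out.append(np.log(col))            # compress large positive tails (pT, E, MET)
        else:
            out.append(col)
    return np.column_stack(out)


def split_and_studentise(X, seed=0):
    """60:20:20 train/validation/test split, then y' = (y - mu) / sigma."""
    idx = np.random.default_rng(seed).permutation(len(X))
    n_train, n_val = int(0.6 * len(X)), int(0.2 * len(X))
    train, val, test = np.split(X[idx], [n_train, n_train + n_val])
    # Assumption: mean and standard deviation are estimated on the training set.
    mu, sigma = train.mean(axis=0), train.std(axis=0, ddof=1)
    return [(part - mu) / sigma for part in (train, val, test)]
```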

Besides these 31 observables, we also use the coupling constant g as an input to the DNN, which leads to a parametrised DNN [55]. The idea is to present different values of g to the DNN during training so that it learns the relative importance of the different signal contributions as a function of g. For example, for \(g \gtrsim {\mathcal {O}}(0.1)\) the DNN should not focus on the interference contribution at all and should instead concentrate on separating the FCNC contribution from the backgrounds, because in that regime the weight of the FCNC contribution exceeds that of the interference contribution by orders of magnitude. Conversely, for \(g \lesssim {\mathcal {O}}(0.001)\) the DNN should increasingly focus on the interference contribution to leverage the slower decrease of its expected number of events with decreasing g compared with the FCNC contribution. To give the DNN the possibility to learn this dependence, we further split the training and validation sets into five stratified subsets. Each of these subsets corresponds to a specific value of \(g\in \{0.001,\,0.005,\,0.01,\,0.05,\,0.1\}\). These values are chosen to cover the range around the current best exclusion limit of about 0.0126 [23]. For the training, the weights of the signal events are adjusted so that, for a given value of g, the sum of weights in each subset equals the sum of weights of the background contribution.
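A minimal NumPy sketch of how a training subset for one value of g could be prepared. It assumes that the pure FCNC event weights scale as \(g^2\) and the interference weights as g relative to a reference coupling `g_ref`, and it reads "sum of weights" as the sum of absolute weights, since negative-interference events carry negative weights; these readings and all names are assumptions, not the authors' implementation.

```python
import numpy as np

G_VALUES = (0.001, 0.005, 0.01, 0.05, 0.1)  # training values of g quoted in the text


def add_coupling_column(X, g):
    """Append g as an extra input feature (31 observables -> 32 network inputs)."""
    return np.column_stack([X, np.full(len(X), g)])


def signal_weights_at(g, w_fcnc_ref, w_int_ref, g_ref, w_background):
    """Reweight signal events from g_ref to g and normalise them to the background."""
    w_fcnc = w_fcnc_ref * (g / g_ref) ** 2  # pure FCNC rate ~ g^2
    w_int = w_int_ref * (g / g_ref)         # interference ~ g, sign kept in the weight
    # Assumption: total |weight| of all signal events equals the background sum of weights.
    scale = np.sum(w_background) / (np.sum(np.abs(w_fcnc)) + np.sum(np.abs(w_int)))
    return w_fcnc * scale, w_int * scale
```

Because the relative size of the FCNC and interference weights changes with g while their total is fixed, the network sees the shift in emphasis described above directly in its loss.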

The constructed DNN has four output nodes: one for pure FCNC events, one for interference events with positive weight, one for interference events with negative weight, and one for background events. For the output layer, we use softmax and for the hidden layers ReLU as the activation function. We use the Adam optimiser [56] and categorical cross-entropy as the loss function. For the determination of the expected exclusion limit, a one-dimensional discriminant

is constructed based on the activation \(\alpha \) of the respective output nodes. We assign a negative prefactor to the output node corresponding to the negative interference contribution, to increase the difference between the background-only and the signal distribution of d. The corresponding histograms of d consist of 10 equidistant bins. To account for charge-conjugated processes, the bin contents are multiplied by a factor of two.
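As an illustration of the binning step, the NumPy sketch below assumes that d is a linear combination of the four softmax activations. Only the negative sign for the negative-interference node is stated in the text; the coefficient values used here, and the function names, are hypothetical placeholders.

```python
import numpy as np

# Hypothetical prefactors for the nodes (FCNC, interference+, interference-, background).
COEFFS = np.array([1.0, 1.0, -1.0, 0.0])


def discriminant(alpha, coeffs=COEFFS):
    """d = sum_k c_k * alpha_k for each event (rows of alpha are softmax outputs)."""
    return alpha @ coeffs


def discriminant_histogram(alpha, weights, coeffs=COEFFS, n_bins=10):
    """Weighted histogram of d in equidistant bins, doubled for charge conjugation."""
    d = discriminant(alpha, coeffs)
    # Softmax outputs lie on the probability simplex, so d lies in [min c_k, max c_k].
    counts, edges = np.histogram(d, bins=n_bins, range=(coeffs.min(), coeffs.max()),
                                 weights=weights)
    return 2.0 * counts, edges
```

Fixing the histogram range from the coefficients keeps the bin edges identical for every value of g, so the signal and background histograms can be compared bin by bin.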

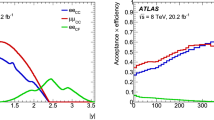

The structure of the DNN as well as the learning rate and the batch size during training are optimised manually based on the expected exclusion limit on the validation set. A learning rate of 0.001 and a batch size of 1000 are chosen. The final structure of the DNN is \([32,\,128,\,256,\,128,\,64,\,32,\,4]\), with the numbers referring to the number of nodes in the respective layer. The evolution of the expected exclusion limit during the training of the DNN is shown in Fig. 4a.
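The network described above can be sketched as a NumPy forward pass with ReLU hidden layers, a softmax output over the four classes and a weighted categorical cross-entropy. Reading the first entry (32) as the input layer, i.e. 31 observables plus g, is an interpretation; the He-style initialisation and the function names are assumptions. Training with Adam (learning rate 0.001, batch size 1000) would normally be done in a deep-learning framework and is not reproduced here.

```python
import numpy as np

LAYERS = [32, 128, 256, 128, 64, 32, 4]  # node counts quoted in the text


def init_params(layers=LAYERS, seed=0):
    """He-style initialised weights and zero biases (initialisation is an assumption)."""
    rng = np.random.default_rng(seed)
    return [(rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(layers[:-1], layers[1:])]


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row maximum for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def forward(params, x):
    """ReLU on the five hidden layers, softmax on the four output nodes."""
    for w, b in params[:-1]:
        x = np.maximum(x @ w + b, 0.0)
    w, b = params[-1]
    return softmax(x @ w + b)


def weighted_cross_entropy(probs, onehot, weights):
    """Categorical cross-entropy averaged with per-event weights."""
    per_event = -np.sum(onehot * np.log(probs + 1e-12), axis=1)
    return np.sum(weights * per_event) / np.sum(weights)
```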

4.3 Prospects for HL-LHC

The integrated luminosity expected at the HL-LHC is \({\mathcal {L}}=3000\,\textrm{fb}^{-1}\) [57]. Figure 4b contains the CL\(_{\textrm{s}}\) values resulting from the evaluation of the DNN on the test set as a function of the coupling constant g. We find an expected upper exclusion limit at 95% CL of

The corresponding nominal upper limit on the branching fraction is \({\mathcal {B}}_{\textrm{excl}}(t \rightarrow Zc) = 6.4 \times 10^{-5}\).

In the following, we highlight some features of the machine-learning-based analysis to illustrate the employed methods. The distributions of the discriminant for \(g=g_{\textrm{excl}}\) and for the rejected hypothesis \(g=0.02\) are shown in Fig. 5 for the signal and the background-only hypothesis. Since the DNN is parameterised in g, the background-only distribution depends on g as well. The number of background events expected in the rightmost bins is larger for \(g=0.02\) than for \(g=g_{\textrm{excl}}\). This indicates that the DNN adapts to the simpler signal kinematics that result from the decreasing importance of interference events at larger g.

The signal and background distribution of the discriminant for \(g=8.8\times 10^{-3}\) and \(g=0.02\). As the DNN is parameterised in g, the background distribution depends on g as well. The bottom panel shows the ratio of expected signal+background events divided by the number of expected background events, \((S+B)/B\)

In Fig. 6, we show both the bin contents expected for \(g=g_{\textrm{excl}}\) for each background process and the shapes of the signal contributions. Since the irreducible SM background \(t{\overline{t}}_{{\overline{b}}c}\) has the same final state as the signal, separating it from signal events turns out to be rather difficult compared with the reducible backgrounds. In fact, top-quark pair production with decays to only first- and second-generation quarks, denoted by \(t{\overline{t}}\), can be separated better than this irreducible component. Nevertheless, \(t{\overline{t}}\) remains the most important background contribution due to its high cross section.

The DNN separates the signal well from the three processes with an additional heavy-flavour quark pair; this can be attributed to the different kinematic structure caused by the additional particles in the event. It should also be noted that the FCNC distribution has a slightly higher mean than the positive-interference distribution. This is due to two factors. Firstly, in the vicinity of \(g=g_{\textrm{excl}}\) the sum of weights of the FCNC contribution is still somewhat larger than that of the positive-interference contribution. Thus, the DNN focusses on separating the FCNC events from the background events because of their larger relative impact on the loss function. Secondly, the distribution of the events in the considered phase space inherently offers more separation power from the background for the FCNC events than for the interference events, as visualised in the \(m_{W{,}\text {reco}}\) vs. \(m_{Z{,}\text {reco}}\) plane shown in Fig. 3. Additionally, the mean value of the distribution for negative-interference events is only slightly lower than that of the positive-interference contribution, even though the definition of the discriminant in Eq. (13) assigns them opposite relative signs. This is consistent with the observation from Fig. 3 that the negative-interference events are quite spread out in phase space and are thus difficult to separate from the horizontal band of the FCNC contribution in the \(m_{W{,}\text {reco}}\) vs. \(m_{Z{,}\text {reco}}\) plane, as well as from the similarly distributed positive-interference contribution.

Number of events for each background process in bins of the discriminant d. The expected number of events in each bin is determined from the nominal expected exclusion limit \(g = 8.8 \times 10^{-3}\) and an integrated luminosity of 3000 fb\(^{-1}\) at HL-LHC. In addition, the shapes of the signal distributions are illustrated

4.4 Prospects for future experiments

We explore the potential of the interference-based approach in various future scenarios. These include advances in analysis methods, detector technology, and future colliders.

Improved b-tagging The performance of b-tagging algorithms is crucial for the suppression of background contributions. This is evident when considering that the main background contribution after the event selection (see Sect. 3) is \(t{\overline{t}} \rightarrow b{\overline{s}}c\,\mu ^-\nu _\mu {\overline{b}}\), which only differs from the signal final state by an \({\overline{s}}\) instead of a \({\overline{b}}\) quark. Thus, we expect a gain in sensitivity with increasing light-jet rejection factors at the considered b-tagging working points. The b-tagging algorithms that provide this rejection are being constantly improved by the experimental collaborations. An approach based on Graph Neural Networks [58] has already shown increased performance in comparison to traditional approaches. To examine the effects of improved b-tagging algorithms, the analysis is repeated with light-jet rejection rates multiplied by a factor of two. The resulting exclusion limit is

This amounts to a relative improvement of the expected limit of around 9% compared to the baseline result presented in Sect. 4.3.

Improved jet-energy resolution As discussed in Sect. 2, the reconstruction of the Dalitz variables \(m_{c{\bar{b}}}^2\) and \(m_{b{\bar{b}}}^2\) enables the separation of the different contributions to the parton-level \(t \rightarrow b {\overline{b}} c\) decay. However, for the full process, \(t{\overline{t}} \rightarrow bq{\overline{q}}'\,\mu ^-\nu _\mu {\overline{b}}\), the separation power degrades due to wrong jet combinations in the reconstruction of the invariant masses and the limited jet-energy resolution. Significant improvements in the resolution are expected for experiments at the FCC-hh [59], based on simulation studies for calorimetry [60]. To investigate the impact of such an improvement, we scale the jet \(p_{\textrm{T}}\) resolution by a factor of ½ without changing any other parameter. This results in

which corresponds to an improvement of about 16\(\%\).
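The resolution scenario can be pictured with a simple Gaussian smearing model, sketched below in NumPy. The functional form \(\sigma (p_{\textrm{T}})/p_{\textrm{T}} = A/\sqrt{p_{\textrm{T}}} \oplus C\) and the values of A and C are hypothetical placeholders, not the detector parameters of this study; the sketch only illustrates that scaling the resolution by ½ halves every smearing offset while leaving everything else unchanged.

```python
import numpy as np

# Hypothetical resolution model: sigma(pT)/pT = A / sqrt(pT / GeV) (+) C (in quadrature).
A_STOCH = 1.0
C_CONST = 0.05


def smear_jet_pt(pt_true, resolution_scale=1.0, seed=0):
    """Gaussian pT smearing; resolution_scale=0.5 halves the relative resolution."""
    rng = np.random.default_rng(seed)
    pt_true = np.asarray(pt_true, dtype=float)
    rel_sigma = resolution_scale * np.hypot(A_STOCH / np.sqrt(pt_true), C_CONST)
    return pt_true * (1.0 + rel_sigma * rng.standard_normal(pt_true.shape))
```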

Improved statistical power The FCC-hh is projected to deliver an integrated luminosity of the order of \(20\,\textrm{ab}^{-1}\) at a centre-of-mass energy of 100 TeV [59]. This presents an excellent opportunity to search for tZc FCNC effects in the realm of small coupling constants with the interference-based approach. We do not generate new MC samples for \(\sqrt{s} = 100\,\)TeV. Instead, since the signal and the main background processes rely on \(t\bar{t}\) production, we scale the event weights by a common factor of \(\sigma _{t{\overline{t}}}(100\,\textrm{TeV})/\sigma _{t{\overline{t}}}(14\,\textrm{TeV}) \approx 35\), which is the increase of the \(t{\overline{t}}\) cross section due to the higher centre-of-mass energy [61]. We thereby neglect any difference in the \(\sqrt{s}\) scaling of the cross sections for the background processes with additional jets, so the projected exclusion limit for this scenario is only a rough estimate. Including these changes and repeating the analysis yields a limit of

which amounts to an improvement of around a factor of four.

Combination of improvements As a last scenario, we combine all three improvements discussed above. This scenario therefore corresponds to a rough projection of the sensitivity of a future general-purpose detector at the FCC-hh with significantly improved b-tagging algorithms and jet resolution. Retraining and evaluating the DNN on the adjusted dataset, we obtain an expected limit of

This corresponds to an improvement of about a factor of seven and results in an upper limit on the branching fraction of \({\mathcal {B}}_{\textrm{excl}}^{\text {comb}}(t \rightarrow Zc) = 1.2 \times 10^{-6}\).
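Because the two-body FCNC rate, and hence \({\mathcal {B}}(t\rightarrow Zc)\), is proportional to \(g^2\) (changes in the total top-quark width being negligible), an improvement by a factor k in the limit on g tightens the limit on the branching fraction by \(k^2\). As a quick consistency check with the numbers quoted above:

\[
\frac{{\mathcal {B}}_{\textrm{excl}}(t\rightarrow Zc)}{{\mathcal {B}}^{\text {comb}}_{\textrm{excl}}(t\rightarrow Zc)}
=\left( \frac{g_{\textrm{excl}}}{g^{\text {comb}}_{\textrm{excl}}}\right) ^{2}\approx 7^{2}\approx 50,
\qquad
\frac{6.4\times 10^{-5}}{1.2\times 10^{-6}}\approx 53 .
\]

The small difference stems from rounding the improvement in g to a factor of seven; the same relation underlies the improvement by up to a factor of \(\sim 50\) on the branching fraction at the FCC-hh.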

4.5 Comparison to other approaches

We compare the sensitivity of the interference-based approach to other approaches that target tZc FCNC effects. We briefly introduce three alternative approaches and then discuss the relative sensitivities of the different methods.

Leptonic analysis Traditionally, tZq FCNCs are searched for using the leptonic \(Z \rightarrow \ell ^+ \ell ^-\) decay mode instead of the hadronic decay \(Z \rightarrow b{\overline{b}}\). This leads to three-lepton final states for the signal, which are associated with low SM-background contributions. Reference [23] provides the tightest expected exclusion limit on \({\mathcal {B}} (t \rightarrow Zc)\) to date, \(11 \times 10^{-5}\). It considers both single-top-quark production via an FCNC tZc vertex (\(q g \rightarrow t Z \); Footnote 7) and top-quark pair production with an FCNC decay of one of the top quarks. Using the simple scaling introduced in Sect. 1, we obtain an expected exclusion limit for \(3000\,\textrm{fb}^{-1}\) of

Here, we have taken the limit for a left-handed coupling, just as in our studies, and have assumed that systematic uncertainties will reduce according to the same scaling as the statistical uncertainties with the increase in integrated luminosity. This simple projection shows some tension with the extrapolation in Ref. [30] of the search for tZc FCNC effects with \(36.1\,\textrm{fb}^{-1}\) at \(\sqrt{s}=13\,\)TeV [29] by the ATLAS collaboration, which gives an expected upper limit of 4 to \(5 \times 10^{-5}\) for the HL-LHC, depending on the assumptions on the reduction of systematic uncertainties. This limit is looser than the one obtained from the scaling above. This hints at the importance of the correct estimation of the long-term reduction of systematic uncertainties and highlights that the assumption that systematic uncertainties decrease according to the same scaling as statistical uncertainties may indeed be over-optimistic for the leptonic approach. The extrapolation to the FCC-hh scenario results in an expected limit of \(1.6 \times 10^{-6}\), where we again have used an integrated luminosity of \(20\,\textrm{ab}^{-1}\) and included a factor of 35 for the increase of the cross sections with \(\sqrt{s}\), based again on the scaling of the \(t\bar{t}\) cross section. This projection is probably optimistic and we regard it as a rough estimate. In particular, the factor of 35 is unlikely to capture the increase of the cross section of the FCNC production mode accurately. Additionally, this scaling implies a reduction of systematic uncertainties by a factor of more than 15, which does not seem realistic given the challenging experimental conditions at the FCC-hh.
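The factor of more than 15 follows from the same simple scaling. The FCC-hh scenario increases the expected event counts relative to the HL-LHC by the luminosity ratio times the cross-section factor, and a limit on \({\mathcal {B}}\) that scales as the inverse square root of the event count improves by the square root of that product:

\[
\frac{N_{\text {FCC-hh}}}{N_{\text {HL-LHC}}}\approx \frac{20\,\textrm{ab}^{-1}}{3\,\textrm{ab}^{-1}}\times 35\approx 233,
\qquad
\sqrt{233}\approx 15.3 .
\]

Keeping the systematic uncertainties on the same footing as the statistical ones therefore requires reducing them by the same factor of about 15.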

Ultraboosted approach In Ref. [62], it was proposed to search for top-FCNC effects in \(t\gamma \) and tZ production in the ultraboosted regime, in which the decay products of the top quark merge into a single jet. In contrast to our approach, this method is only sensitive to the production mode. The ultraboosted approach is projected to yield an exclusion limit of \({\mathcal {B}}(t \rightarrow Zc) < 1.6 \times 10^{-3}\) at the HL-LHC (Footnote 8), considering a single source of systematic uncertainty of 20% on the number of background events [62]. The projected limit for the FCC-hh is \(3.5 \times 10^{-5}\) [62] (Footnote 9).

Triple-top-quark production Another way to search for top-quark FCNC effects is in triple-top-quark production: \(q g \rightarrow t B^{*}\) with \(B^{*} \rightarrow t {\bar{t}}\) [63,64,65,66]. In this process, a single top quark is produced alongside an off-shell boson \(B^{*}\) mediating the FCNC, which splits into a \(t {\bar{t}}\) pair. The studies are performed for the same-sign lepton topology \(\nu _{\ell } \ell ^+ b \, q \bar{q}' {\bar{b}} \, \nu _{\ell '} \ell '^+ b\), which benefits from the fact that SM background contributions are small. However, as is also the case for ultraboosted tZ production, the expected limit on \({\mathcal {B}}(t \rightarrow Zc)\) of \(1.35 \times 10^{-2}\) at the HL-LHC [65] is relatively weak and has already been surpassed by analyses from the ATLAS [23] and CMS collaborations [24] using the leptonic analysis. The limit achievable at the FCC-hh is estimated to be \(4.6 \times 10^{-4}\) [66].

Discussion We summarise the expected limits of the individual approaches in Table 2. The leptonic analysis yields the most stringent limit at the HL-LHC, while both the ultraboosted and triple-top-quark approaches perform significantly worse than the interference-based method. This is to be expected, since these two approaches use the production mode, which is suppressed by the charm-quark parton distribution function. Our projected limit for the interference-based approach at the HL-LHC of \(6.4 \times 10^{-5}\) is likely to degrade when systematic uncertainties are included. However, we restricted ourselves to a single analysis region with exactly four central b-tagged jets. Including more signal regions would improve the sensitivity, while data-driven background estimates from dedicated control regions could mitigate the impact of systematic uncertainties. Additionally, including the electron channel would further improve the sensitivity.

For the FCC-hh, the sensitivity of the interference-based approach relative to the leptonic analysis improves compared with the HL-LHC scenario. This highlights the power of the interference-based approach when moving towards ever smaller couplings and larger datasets with increasing statistical power. Nevertheless, it should be recognised that the FCC-hh would operate in a regime of very high pileup: the average number of visible interactions per bunch crossing is projected to be \(\mu \sim {\mathcal {O}}(1000)\) [59]. This poses notable challenges for flavour tagging and for jet-based analyses in general. Because of this, more thorough studies with a dedicated detector simulation would be needed to assess and compare the sensitivity of the two approaches at the FCC-hh. The ultraboosted approach benefits significantly from the energy increase from 14 to \(100\,\text {TeV}\), with its limit estimated to improve by a factor of approximately 46, whereas the limit from triple-top-quark production is projected to improve by a factor of only around 29. A clear hierarchy emerges: the triple-top-quark approach only yields an expected limit of the order of \(10^{-4}\), while the ultraboosted approach is expected to perform better by around one order of magnitude. The interference-based approach and the leptonic analysis are both projected to push this even further to \({\mathcal {O}}(10^{-6})\).
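The improvement factors quoted above follow directly from the expected limits on \({\mathcal {B}}(t\rightarrow Zc)\) given in this section. The short Python check below takes the values as quoted (see Footnote 9 for the luminosity rescaling of the FCC-hh projections); the dictionary and function names are illustrative only.

```python
# Expected upper limits on B(t -> Zc) as quoted in this section: (HL-LHC, FCC-hh).
LIMITS = {
    "ultraboosted": (1.6e-3, 3.5e-5),
    "triple-top-quark": (1.35e-2, 4.6e-4),
}


def improvement(limit_hl_lhc, limit_fcc_hh):
    """Factor by which the expected limit on B tightens from HL-LHC to FCC-hh."""
    return limit_hl_lhc / limit_fcc_hh


for name, (hl, fcc) in LIMITS.items():
    print(f"{name}: improvement by a factor of {improvement(hl, fcc):.0f}")
# ultraboosted: improvement by a factor of 46
# triple-top-quark: improvement by a factor of 29
```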

It should also be noted that the \(Z\rightarrow \ell \ell \) and the interference approaches have different sensitivities to tZc and tZu FCNC couplings and are hence complementary. In the production mode, the \(Z\rightarrow \ell \ell \) analysis is less sensitive to the tZc than to the tZu coupling because of the difference in the parton distribution functions of the charm and up quarks. Nevertheless, the sensitivities to the two couplings in the production mode are expected to be more similar at the FCC-hh, owing to the evolution of the parton distribution functions towards higher energy scales and the lower typical Bjorken x compared with the LHC. In the decay mode, the \(Z\rightarrow \ell \ell \) approach has similar sensitivity to both couplings but relies on charm-quark identification to distinguish them. In contrast, the interference approach is almost exclusively sensitive to the tZc coupling. Thus, if an excess over the SM prediction is observed in the future, the combination of these approaches would allow one to disentangle possible effects from the two couplings.

5 Conclusions

Top-quark FCNCs are so highly suppressed within the SM that any observation at the LHC or at planned future hadron colliders would constitute a clear signal of physics beyond the SM. At hadron colliders, the traditionally most promising and most widely used channel to search for tZq FCNCs has a trilepton signature, relying on the leptonic \(Z\rightarrow \ell ^+\ell ^-\) decay. Since the \(t\rightarrow Z q\) decay rate is proportional to the square of the FCNC coupling, i.e., \(\propto g^2\), the smallest coupling that can be probed scales as \(1/\sqrt[4]{{\mathcal {L}}_{\textrm{int}}}\) with the integrated luminosity \({\mathcal {L}}_\textrm{int}\) (assuming systematic uncertainties are small compared to the statistical ones). Given the large datasets expected at the HL-LHC and planned future hadron colliders, we investigated how to improve upon this luminosity scaling with a novel strategy.

We propose to target the hadronic three-body decay \(t\rightarrow q b \bar{b}\). In the presence of tZq FCNCs, the decay receives two interfering contributions: one from the FCNC (\(t\rightarrow q Z(\rightarrow b\bar{b})\)) and one from the SM (\(t\rightarrow b W^+(\rightarrow q{\bar{b}})\)). Since the two contributions interfere, the three-body rate contains a term linear in the FCNC coupling, i.e., \(\propto g\). Therefore, for sufficiently small g, the smallest coupling that can be probed scales as \(1/\sqrt{{\mathcal {L}}_{\textrm{int}}}\) in this channel, which is more favourable than in the traditional multi-lepton searches. We studied the leading parametric dependencies controlling the kinematics of \(t\rightarrow q b {\bar{b}}\) and identified the requirements on the FCNC couplings that would allow leveraging the interference to compete with and complement traditional searches. The interference depends on the chirality and the phase of the FCNC coupling. It is largest for a left-handed tZq coupling, while for a right-handed one it is suppressed by the small masses of the bottom and q quarks. We have thus focussed on left-handed tZq couplings. The interference is active in a small kinematic region in which both the Z and the W boson are “on-shell”. In this small doubly-on-shell region, we showed that the parametric dependence on \(\Gamma /M\) is the same for the SM and the interference contribution. Therefore, targeting this doubly-on-shell region with a dedicated search has the potential to provide sensitivity with an improved luminosity scaling.
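The two luminosity scalings follow from a simple counting argument. Assuming a background-dominated analysis with negligible systematic uncertainties, the expected significance is \(Z\simeq N_s/\sqrt{N_b}\), where \(N_s\) and \(N_b\) denote the signal and background yields and \(N_b\propto {\mathcal {L}}_{\textrm{int}}\):

\[
Z \simeq \frac{N_s}{\sqrt{N_b}},\quad N_b\propto {\mathcal {L}}_{\textrm{int}}:\qquad
N_s\propto g^{2}{\mathcal {L}}_{\textrm{int}}\;\Rightarrow \; g_{\textrm{excl}}\propto {\mathcal {L}}_{\textrm{int}}^{-1/4},
\qquad
N_s\propto g\,{\mathcal {L}}_{\textrm{int}}\;\Rightarrow \; g_{\textrm{excl}}\propto {\mathcal {L}}_{\textrm{int}}^{-1/2}.
\]

For example, a fourfold increase in integrated luminosity tightens the expected limit on g by a factor of \(4^{1/4}\approx 1.4\) when the rate is quadratic in g, but by \(4^{1/2}=2\) when the linear interference term dominates.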

Based on these findings, we studied the prospects of the proposed search strategy for the case of left-handed FCNC tZc couplings with constructive interference. We consider the production of \({t\bar{t} \rightarrow c b {\bar{b}}\,\mu ^-\nu _\mu \bar{b}}\) from tZc FCNCs as the signal process. We simulated this signal and relevant background processes with MadGraph5_aMC@NLO and emulated the detector response by smearing the parton-level objects with resolutions similar to those at the ATLAS and CMS experiments. We then separated the FCNC signal processes from the backgrounds with a deep neural network that is parameterised in the value of the FCNC coupling g. This setup accounts for the varying FCNC-interference contribution to the total FCNC signal. If no signs of FCNC production were found, the resulting expected 95% confidence-level upper limit with the HL-LHC dataset is \({{\mathcal {B}}_{\textrm{excl}}(t \rightarrow Zc) = 6.4 \times 10^{-5}}\). At the FCC-hh, the expected limit is improved by up to a factor \(\sim 50\), depending on the assumed detector performance.

While this study considered only statistical uncertainties, the effect of systematic uncertainties should be studied in the future. The main backgrounds are \(t{\bar{t}}\) production with light-quark jets misidentified as b- or c-jets and \(t{\bar{t}}\) production with a \(W\rightarrow cb\) decay. As in most \(t{\bar{t}}\) measurements, uncertainties in the modelling of the \(t{\bar{t}}\) process may impact the sensitivity. The same is true for b-tagging and jet-related uncertainties. Heavy-flavour-associated \(t{\bar{t}}\) production is only a minor background, and the potentially large associated systematic uncertainties are unlikely to significantly affect the sensitivity. Given the promising signal-background separation of the parameterised deep neural network, the statistical uncertainties on the number of events in the signal-dominated phase space may still compete with the systematic uncertainties in the background contributions.

As the integrated luminosity increases, the advantage of the new strategy over the traditional approach generally becomes more pronounced. At the HL-LHC, the new strategy may not outperform the traditional search based on \(Z\rightarrow \ell \ell \) decays. However, at the FCC-hh, it has the potential to be competitive with the established approach. Nevertheless, given their complementarity, the combination of the two strategies will improve over the traditional search alone at both the HL-LHC and the FCC-hh. Additionally, the new interference-based approach demonstrates excellent prospects compared to several other alternative proposals for top-quark FCNC searches.

Our study focussed on the case in which SM- and NP-sources of CP violation are aligned. It would be intriguing to relax this assumption and design dedicated observables, e.g., asymmetry distributions, that optimally leverage the interference in \(t\rightarrow q b{\bar{b}}\) to probe possible CP-violating phases in top-quark FCNC processes. In general, the interference approach will be important to understand the nature of the anomalous coupling in case top-quark FCNCs are observed, as it also provides information on its Lorentz structure.

Given the results of our study on the proposed interference-based approach, it will be interesting to perform an analysis using current LHC data with a consistent treatment of systematic uncertainties and to estimate the sensitivity at the HL-LHC and future hadron-collider experiments under realistic experimental conditions.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.]

Notes

1. Actually, an extrapolation of the ATLAS FCNC tZq search with \(\int {\mathcal {L}}\,\textrm{d}t = 36.1\) fb\(^{-1}\) at \(\sqrt{s} = 13\) TeV [29] to the HL-LHC [30] showed a smaller improvement than the naive expectation. This highlights the role of realistic detector simulations and the consideration of systematic uncertainties in estimating the sensitivity at the HL-LHC experiments.

2. In this rough extrapolation, effects from the more challenging experimental conditions, improvements due to upgrades to the detectors, and small changes in the cross sections are neglected.

3. Changes in the total top-quark width have a negligible effect given the current experimental upper limits on g.

4. For an example of this at the optics table, see Ref. [34].

5. In unitarity gauge, only the couplings in Eq. (2) enter the computation of \(t\rightarrow cb{\bar{b}}\). In \(R_\xi \) gauges, the corresponding Goldstone couplings must also be included.

6. \(\Delta R = \sqrt{\left( \Delta \phi \right) ^2+\left( \Delta \eta \right) ^2}\) with \(\phi \) the azimuthal angle and \(\eta \) the pseudorapidity.

7. We implicitly include charge-conjugated processes in the following discussions.

8. We quote the significantly more sensitive semileptonic decay channel of the top quark and do not attempt to provide a combination with the hadronic decay channel.

9. We scale the limit from Ref. [62] by \(1/\sqrt{2}\) since we assume an integrated luminosity of \(20\,\textrm{ab}^{-1}\) for the FCC-hh instead of \(10\,\textrm{ab}^{-1}\). We perform the same rescaling for the FCC-hh projection of the triple-top-quark method.

References

S.L. Glashow, J. Iliopoulos, L. Maiani, Weak interactions with lepton-hadron symmetry. Phys. Rev. D 2, 1285 (1970). https://doi.org/10.1103/PhysRevD.2.1285

G. Eilam, J.L. Hewett, A. Soni, Rare decays of the top quark in the standard and two Higgs doublet models. Phys. Rev. D 44, 1473 (1991) (Erratum: Phys. Rev. D 59 (1999) 039901). https://doi.org/10.1103/PhysRevD.44.1473

B. Mele, S. Petrarca, A. Soddu, A new evaluation of the \(t\rightarrow cH\) decay width in the standard model. Phys. Lett. B 435, 401 (1998). https://doi.org/10.1016/S0370-2693(98)00822-3. arXiv:hep-ph/9805498

J.A. Aguilar-Saavedra, Top flavor-changing neutral interactions: theoretical expectations and experimental detection. Acta Phys. Pol. B 35, 2695 (2004). arXiv:hep-ph/0409342

J.J. Zhang, C.S. Li, J. Gao, H. Zhang, Z. Li, C.P. Yuan et al., Next-to-leading order QCD corrections to the top quark decay via model-independent FCNC couplings. Phys. Rev. Lett. 102, 072001 (2009). https://doi.org/10.1103/PhysRevLett.102.072001. arXiv:0810.3889

C. Zhang, F. Maltoni, Top-quark decay into Higgs boson and a light quark at next-to-leading order in QCD. Phys. Rev. D 88, 054005 (2013). https://doi.org/10.1103/PhysRevD.88.054005. arXiv:1305.7386

M. Forslund, N. Kidonakis, Soft-gluon Corrections for Single Top Quark Production in Association with Electroweak Bosons, Meeting of the Division of Particles and Fields of the American Physical Society. arXiv:1909.02619

Top Quark Working Group, Working Group Report: Top Quark, Community Summer Study 2013: Snowmass on the Mississippi. arXiv:1311.2028

A. Azatov, G. Panico, G. Perez, Y. Soreq, On the flavor structure of natural composite Higgs models and top flavor violation. JHEP 12, 082 (2014). https://doi.org/10.1007/JHEP12(2014)082. arXiv:1408.4525

P.Q. Hung, Y.-X. Lin, C.S. Nugroho, T.-C. Yuan, Top quark rare decays via loop-induced FCNC interactions in extended mirror Fermion model. Nucl. Phys. B 927, 166 (2018). https://doi.org/10.1016/j.nuclphysb.2017.12.014. arXiv:1709.01690

J.-L. Yang, T.-F. Feng, H.-B. Zhang, G.-Z. Ning, X.-Y. Yang, Top quark decays with flavor violation in the B-LSSM. Eur. Phys. J. C 78, 438 (2018). https://doi.org/10.1140/epjc/s10052-018-5919-5. arXiv:1806.01476

W. Altmannshofer, S. Gori, B.V. Lehmann, J. Zuo, UV physics from IR features: new prospects from top flavor violation. arXiv:2303.00781

F. Larios, R. Martinez, M.A. Perez, Constraints on top quark FCNC from electroweak precision measurements. Phys. Rev. D 72, 057504 (2005). https://doi.org/10.1103/PhysRevD.72.057504. arXiv:hep-ph/0412222

T. Han, R.D. Peccei, X. Zhang, Top quark decay via flavor changing neutral currents at hadron colliders. Nucl. Phys. B 454, 527 (1995). https://doi.org/10.1016/0550-3213(95)95688-C. arXiv:hep-ph/9506461

J.I. Aranda, A. Cordero-Cid, F. Ramirez-Zavaleta, J.J. Toscano, E.S. Tututi, Higgs mediated flavor violating top quark decays \(t \rightarrow u_i H\), \(u_i \gamma \), \(u_i \gamma \gamma \), and the process \(\gamma \gamma \rightarrow t c\) in effective theories. Phys. Rev. D 81, 077701 (2010). https://doi.org/10.1103/PhysRevD.81.077701. arXiv:0911.2304

H. Gong, Y.-D. Yang, X.-B. Yuan, Constraints on anomalous \(tcZ\) coupling from \({\bar{B}} \rightarrow {\bar{K}}^* \mu ^+ \mu ^-\) and \(B_s \rightarrow \mu ^+ \mu ^-\) decays. JHEP 05, 062 (2013). https://doi.org/10.1007/JHEP05(2013)062. arXiv:1301.7535

M. Gorbahn, U. Haisch, Searching for \(t \rightarrow c(u)h\) with dipole moments. JHEP 06, 033 (2014). https://doi.org/10.1007/JHEP06(2014)033. arXiv:1404.4873

H. Hesari, H. Khanpour, M.M. Najafabadi, Direct and indirect searches for top-Higgs FCNC couplings. Phys. Rev. D 92, 113012 (2015). https://doi.org/10.1103/PhysRevD.92.113012. arXiv:1508.07579

N. Castro, K. Skovpen, Flavour-changing neutral scalar interactions of the top quark. Universe 8, 609 (2022). https://doi.org/10.3390/universe8110609. arXiv:2210.09641

ATLAS collaboration, Search for a new scalar resonance in flavour-changing neutral-current top-quark decays \(t \rightarrow qX\) (\(q=u,c\)), with \(X \rightarrow b\bar{b}\), in proton–proton collisions at \(\sqrt{s}=13\) TeV with the ATLAS detector. arXiv:2301.03902

ATLAS collaboration, Search for flavour-changing neutral-current couplings between the top quark and the photon with the ATLAS detector at \(\sqrt{s} = 13\) TeV. Phys. Lett. B 137379 (2022). https://doi.org/10.1016/j.physletb.2022.137379. arXiv:2205.02537

CMS collaboration, Search for Anomalous Single Top Quark Production in Association with a Photon in \(pp\) Collisions at \( \sqrt{s}=8 \) TeV. JHEP 04, 035 (2016). https://doi.org/10.1007/JHEP04(2016)035. arXiv:1511.03951

ATLAS collaboration, Search for flavor-changing neutral-current couplings between the top quark and the \(Z\) boson with LHC Run 2 proton–proton collisions at \(\sqrt{s} = 13\) TeV with the ATLAS detector. arXiv:2301.11605

CMS collaboration, Search for associated production of a Z boson with a single top quark and for tZ flavour-changing interactions in pp collisions at \( \sqrt{s}=8 \) TeV. JHEP 07, 003 (2017). https://doi.org/10.1007/JHEP07(2017)003. arXiv:1702.01404

ATLAS collaboration, Search for flavour-changing neutral current interactions of the top quark and the Higgs boson in events with a pair of \(\tau \)-leptons in pp collisions at \(\sqrt{s}=13\) TeV with the ATLAS detector. arXiv:2208.11415

CMS collaboration, Search for flavor-changing neutral current interactions of the top quark and Higgs Boson in final states with two photons in proton–proton collisions at \(\sqrt{s}=13 \rm TeV \). Phys. Rev. Lett. 129, 032001 (2022). https://doi.org/10.1103/PhysRevLett.129.032001. arXiv:2111.02219

ATLAS collaboration, Search for flavour-changing neutral-current interactions of a top quark and a gluon in pp collisions at \(\sqrt{s}=13\) TeV with the ATLAS detector. Eur. Phys. J. C 82, 334 (2022). https://doi.org/10.1140/epjc/s10052-022-10182-7. arXiv:2112.01302

CMS collaboration, Search for anomalous Wtb couplings and flavour-changing neutral currents in t-channel single top quark production in pp collisions at \(\sqrt{s} =\) 7 and 8 TeV. JHEP 02, 028 (2017). https://doi.org/10.1007/JHEP02(2017)028. arXiv:1610.03545

ATLAS collaboration, Search for flavour-changing neutral current top-quark decays \(t\rightarrow qZ\) in proton–proton collisions at \(\sqrt{s}=13\) TeV with the ATLAS detector. JHEP 07, 176 (2018). https://doi.org/10.1007/JHEP07(2018)176. arXiv:1803.09923

ATLAS collaboration, Sensitivity of searches for the flavour-changing neutral current decay \(t\rightarrow qZ\) using the upgraded ATLAS experiment at the High Luminosity LHC, ATL-PHYS-PUB-2019-001

G.T. Bodwin, F. Petriello, S. Stoynev, M. Velasco, Higgs boson decays to quarkonia and the \(H\bar{c}c\) coupling. Phys. Rev. D 88, 053003 (2013). https://doi.org/10.1103/PhysRevD.88.053003. arXiv:1306.5770

M. Farina, G. Panico, D. Pappadopulo, J.T. Ruderman, R. Torre, A. Wulzer, Energy helps accuracy: electroweak precision tests at hadron colliders. Phys. Lett. B 772, 210 (2017). https://doi.org/10.1016/j.physletb.2017.06.043. arXiv:1609.08157

F. Bishara, S. De Curtis, L.D. Rose, P. Englert, C. Grojean, M. Montull et al., Precision from the diphoton Zh channel at FCC-hh. JHEP 04, 154 (2021). https://doi.org/10.1007/JHEP04(2021)154. arXiv:2011.13941

T. Young, I. The Bakerian Lecture. Experiments and calculations relative to physical optics. Philos. Trans. R. Soc. Lond. 94, 1 (1804). https://doi.org/10.1098/rstl.1804.0001

B. Grzadkowski, M. Iskrzynski, M. Misiak, J. Rosiek, Dimension-six terms in the Standard Model Lagrangian. JHEP 10, 085 (2010). https://doi.org/10.1007/JHEP10(2010)085. arXiv:1008.4884

ATLAS collaboration, Search for heavy Higgs Bosons \(A/H\) decaying to a top quark pair in \(pp\) collisions at \(\sqrt{s}=8 \rm TeV \) with the ATLAS detector. Phys. Rev. Lett. 119, 191803 (2017). https://doi.org/10.1103/PhysRevLett.119.191803. arXiv:1707.06025

CMS collaboration, Search for heavy Higgs bosons decaying to a top quark pair in proton–proton collisions at \(\sqrt{s} =\) 13 TeV. JHEP 04, 171 (2020). https://doi.org/10.1007/JHEP04(2020)171 (Erratum: JHEP 03 (2022) 187). arXiv:1908.01115

J. Alwall, R. Frederix, S. Frixione, V. Hirschi, F. Maltoni, O. Mattelaer et al., The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. JHEP 07, 079 (2014). https://doi.org/10.1007/JHEP07(2014)079. arXiv:1405.0301

C. Degrande, C. Duhr, B. Fuks, D. Grellscheid, O. Mattelaer, T. Reiter, UFO: the universal FeynRules output. Comput. Phys. Commun. 183, 1201 (2012). https://doi.org/10.1016/j.cpc.2012.01.022. arXiv:1108.2040

R.D. Ball et al., Parton distributions with LHC data. Nucl. Phys. B 867, 244 (2013). https://doi.org/10.1016/j.nuclphysb.2012.10.003. arXiv:1207.1303

V. Hirschi, O. Mattelaer, Automated event generation for loop-induced processes. JHEP 10, 146 (2015). https://doi.org/10.1007/JHEP10(2015)146. arXiv:1507.00020

ATLAS collaboration, Jet energy scale and resolution measured in proton–proton collisions at \(\sqrt{s}=13\) TeV with the ATLAS detector. Eur. Phys. J. C 81, 689 (2021). https://doi.org/10.1140/epjc/s10052-021-09402-3. arXiv:2007.02645

ATLAS collaboration, Performance of missing transverse momentum reconstruction with the ATLAS detector using proton–proton collisions at \(\sqrt{s}\) = 13 TeV. Eur. Phys. J. C 78, 903 (2018). https://doi.org/10.1140/epjc/s10052-018-6288-9. arXiv:1802.08168

ATLAS collaboration, Muon reconstruction performance of the ATLAS detector in proton–proton collision data at \(\sqrt{s}\) =13 TeV. Eur. Phys. J. C 76, 292 (2016). https://doi.org/10.1140/epjc/s10052-016-4120-y. arXiv:1603.05598

ATLAS collaboration, Momentum resolution improvements with the inclusion of the Alignment Errors On Track, PLOTS/MUON-2018-003

ATLAS collaboration, Performance of the ATLAS muon triggers in Run 2. JINST 15, P09015 (2020). https://doi.org/10.1088/1748-0221/15/09/p09015. arXiv:2004.13447

CMS collaboration, Performance of the CMS muon trigger system in proton-proton collisions at \(\sqrt{s} =\) 13 TeV. JINST 16, P07001 (2021). https://doi.org/10.1088/1748-0221/16/07/P07001. arXiv:2102.04790

E. Bols, J. Kieseler, M. Verzetti, M. Stoye, A. Stakia, Jet flavour classification using DeepJet. JINST 15, P12012 (2020). https://doi.org/10.1088/1748-0221/15/12/P12012. arXiv:2008.10519

ATLAS collaboration, Measurements of differential cross sections of top quark pair production in association with jets in \({pp}\) collisions at \(\sqrt{s}=13\) TeV using the ATLAS detector. JHEP 10, 159 (2018). https://doi.org/10.1007/JHEP10(2018)159. arXiv:1802.06572

M. Czakon, A. Mitov, Top++: a program for the calculation of the top-pair cross-section at hadron colliders. Comput. Phys. Commun. 185, 2930 (2014). https://doi.org/10.1016/j.cpc.2014.06.021. arXiv:1112.5675

CMS collaboration, First measurement of the cross section for top quark pair production with additional charm jets using dileptonic final states in \(pp\) collisions at \(\sqrt{s}=13\) TeV. Phys. Lett. B 820, 136565 (2021). https://doi.org/10.1016/j.physletb.2021.136565. arXiv:2012.09225

LHC Higgs Cross Section Working Group, Handbook of LHC Higgs Cross Sections: 4. Deciphering the Nature of the Higgs Sector, CERN Yellow Reports: Monographs. CERN-2017-002. https://doi.org/10.23731/CYRM-2017-002. arXiv:1610.07922

J. Neyman, E.S. Pearson, On the problem of the most efficient tests of statistical hypotheses. Philos. Trans. R. Soc. Lond. A 231, 289 (1933). https://doi.org/10.1098/rsta.1933.0009

A.L. Read, Presentation of search results: the \(CL_s\) technique. J. Phys. G 28, 2693 (2002). https://doi.org/10.1088/0954-3899/28/10/313

P. Baldi, K. Cranmer, T. Faucett, P. Sadowski, D. Whiteson, Parameterized neural networks for high-energy physics. Eur. Phys. J. C 76, 235 (2016). https://doi.org/10.1140/epjc/s10052-016-4099-4. arXiv:1601.07913

D.P. Kingma, J. Ba, Adam: a method for stochastic optimization. arXiv:1412.6980

G. Apollinari, I. Béjar Alonso, O. Brüning, M. Lamont, L. Rossi, High-luminosity large hadron collider (HL-LHC): preliminary design report. CERN Yellow Reports: Monographs, CERN-2015-005. https://doi.org/10.5170/CERN-2015-005

ATLAS collaboration, Graph Neural Network Jet Flavour Tagging with the ATLAS Detector, ATL-PHYS-PUB-2022-027

FCC collaboration, FCC-hh: the hadron collider: future circular collider conceptual design report, volume 3. Eur. Phys. J. ST 228, 755 (2019). https://doi.org/10.1140/epjst/e2019-900087-0

M. Aleksa et al., Calorimeters for the FCC-hh. arXiv:1912.09962

M.L. Mangano et al., Physics at a 100 TeV pp collider: standard model processes. arXiv:1607.01831

J.A. Aguilar-Saavedra, Ultraboosted \(Zt\) and \(\gamma t\) production at the HL-LHC and FCC-hh. Eur. Phys. J. C 77, 769 (2017). https://doi.org/10.1140/epjc/s10052-017-5375-7. arXiv:1709.03975

V. Barger, W.-Y. Keung, B. Yencho, Triple-top signal of new physics at the LHC. Phys. Lett. B 687, 70 (2010). https://doi.org/10.1016/j.physletb.2010.03.001. arXiv:1001.0221

C.-R. Chen, Searching for new physics with triple-top signal at the LHC. Phys. Lett. B 736, 321 (2014). https://doi.org/10.1016/j.physletb.2014.07.041

M. Malekhosseini, M. Ghominejad, H. Khanpour, M.M. Najafabadi, Constraining top quark flavor violation and dipole moments through three and four-top quark productions at the LHC. Phys. Rev. D 98, 095001 (2018). https://doi.org/10.1103/PhysRevD.98.095001. arXiv:1804.05598

H. Khanpour, Probing top quark FCNC couplings in the triple-top signal at the high energy LHC and future circular collider. Nucl. Phys. B 958, 115141 (2020). https://doi.org/10.1016/j.nuclphysb.2020.115141. arXiv:1909.03998

Acknowledgements

The authors thank Fady Bishara and Nuno Castro for useful comments on the manuscript. ES thanks Lian-Tao Wang for multiple inspiring discussions. The authors acknowledge support from the Deutschlandstipendium (LC), the German Research Foundation (DFG) Heisenberg Programme (JE), and the Studienstiftung des deutschen Volkes (JLS), as well as partial support from the Fermi Fellowship at the Enrico Fermi Institute and the U.S. Department of Energy, Office of Science, Office of Theoretical Research in High Energy Physics under Award No. DE-SC0009924 (ES). Fermilab is operated by the Fermi Research Alliance, LLC under Contract DE-AC02-07CH11359 with the U.S. Department of Energy.

Appendix A: Two-body branching fractions

Resonant W-boson production and, if top FCNCs are present, resonant Z-boson production dominate the inclusive rate for the three-body decay \(t\rightarrow cb{\bar{b}}\) via the diagrams in Fig. 1. As discussed in Sect. 2, these contributions are well described in the narrow-width approximation in terms of inclusive two-body decay rates. Here, we collect the two-body decay rates appearing in Eq. (6), which enter the decay \(t\rightarrow cb{\bar{b}}\) both in the SM and in the presence of an anomalous tZc coupling:

with \(s_w\) and \(c_w\) the sine and cosine of the weak mixing angle, and \(n_c=3\) the number of colours.
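The narrow-width factorisation described above can be illustrated numerically. The Python sketch below is not a reproduction of Eq. (6) or of the inputs used in this work: it uses textbook leading-order expressions for \(\Gamma(t\rightarrow bW)\), \({\mathcal {B}}(W\rightarrow c{\bar{b}})\) and \({\mathcal {B}}(Z\rightarrow b{\bar{b}})\) written in terms of \(G_F\) rather than \(s_w\) and \(c_w\), assumed PDG-like masses, an assumed \(|V_{cb}| = 0.041\), and an externally supplied total Z width. It also treats \({\mathcal {B}}(t\rightarrow Zc)\) as a free input instead of using the normalisation of the anomalous coupling, and it ignores the interference between the two resonant contributions.

```python
import math

# Assumed inputs (approximate PDG-style values in GeV); NOT the inputs of this work.
G_F = 1.1663787e-5         # Fermi constant [GeV^-2]
M_T, M_W, M_Z = 172.5, 80.379, 91.1876
GAMMA_Z = 2.4952           # total Z width, taken as an external input
S_W2 = 0.2312              # sin^2 of the weak mixing angle
N_C = 3                    # number of colours
V_TB, V_CB = 1.0, 0.041    # assumed CKM magnitudes

# Common prefactor G_F / (6 sqrt(2) pi) of the leading-order V -> f f' widths.
PREF = G_F / (6.0 * math.sqrt(2.0) * math.pi)


def gamma_t_bw(m_t=M_T, m_w=M_W, v_tb=V_TB):
    """LO width Gamma(t -> b W) in GeV, b-quark mass neglected."""
    x = (m_w / m_t) ** 2
    return (G_F * m_t**3 / (8.0 * math.sqrt(2.0) * math.pi)
            * v_tb**2 * (1.0 - x) ** 2 * (1.0 + 2.0 * x))


def br_w_cb(v_cb=V_CB):
    """LO branching ratio W -> c bbar (massless fermions, no QCD corrections).

    With CKM unitarity, Gamma_W = (3 + 2 * N_C) * PREF * m_W^3, so the
    m_W^3 prefactor cancels in the ratio.
    """
    return N_C * v_cb**2 / (3.0 + 2.0 * N_C)


def br_z_bb(m_z=M_Z, s_w2=S_W2, gamma_z=GAMMA_Z):
    """LO branching ratio Z -> b bbar with massless b quarks."""
    t3, q = -0.5, -1.0 / 3.0
    g_v, g_a = t3 - 2.0 * q * s_w2, t3
    return N_C * PREF * m_z**3 * (g_v**2 + g_a**2) / gamma_z


def resonant_rates(br_tzc):
    """Narrow-width estimates of the two resonant t -> c b bbar rates (GeV).

    Assumes t -> bW and t -> cZ are the only top-quark decay channels, so that
    Gamma(t -> cZ) = BR / (1 - BR) * Gamma(t -> bW). Interference is ignored.
    """
    g_bw = gamma_t_bw()
    g_cz = br_tzc / (1.0 - br_tzc) * g_bw
    sm = g_bw * br_w_cb()      # t -> b W(-> c bbar)
    fcnc = g_cz * br_z_bb()    # t -> c Z(-> b bbar)
    return sm, fcnc


sm, fcnc = resonant_rates(6.4e-5)
print(f"SM resonant rate  : {sm:.3e} GeV")
print(f"FCNC resonant rate: {fcnc:.3e} GeV")
print(f"ratio FCNC / SM   : {fcnc / sm:.3f}")
```

Evaluated at \({\mathcal {B}}(t\rightarrow Zc) = 6.4 \times 10^{-5}\), the expected HL-LHC limit quoted in the abstract, the FCNC resonant rate in this sketch is of order \(10^{-2}\) of the SM one. Because this rate grows with the square of the anomalous coupling, whereas an interference term grows linearly with it, the numbers illustrate qualitatively why the interference-based strategy can benefit from a more favourable scaling.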

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3.

About this article

Cite this article

Cremer, L., Erdmann, J., Harnik, R. et al. Leveraging on-shell interference to search for FCNCs of the top quark and the Z boson. Eur. Phys. J. C 83, 871 (2023). https://doi.org/10.1140/epjc/s10052-023-11982-1

DOI: https://doi.org/10.1140/epjc/s10052-023-11982-1