Abstract

Bayesian Machine Learning (BML) and strong lensing time delay (SLTD) techniques are used to tackle the \(H_{0}\) tension in f(T) gravity. The power of BML relies on employing a model-based generative process, which already plays an important role in different domains of cosmology and astrophysics; the present work provides further evidence of this. Three viable f(T) models are considered: a power-law, an exponential, and a square-root exponential model. The learned constraints and respective results indicate that the exponential model, \(f(T)=\alpha T_{0}\left( 1-e^{-p T / T_{0}}\right) \), has the capability to solve the \(H_{0}\) tension quite efficiently. The forecasting power and robustness of the method are shown by considering different redshift ranges and parameters for the lenses and sources involved. The lesson learned is that these values can strongly affect our understanding of the \(H_{0}\) tension, as happens for the model considered. The resulting constraints of the learning method are eventually validated using the observational Hubble data (OHD).

1 Introduction

The current standard model of cosmology, \(\Lambda \)CDM, efficiently explains the evolution and content of the Universe by adding to its visible content two dark sectors: dark matter and dark energy. The first plays a crucial role in stabilizing galaxies and clusters, while the latter is necessary to describe the late-time acceleration of the Universe. However, even with these additions and remarkable efforts, the model still suffers from serious issues, such as the coincidence and the cosmological constant problems [1, 2].

A rather new issue that reveals another problem with the physical background of \(\Lambda \)CDM is the \(H_{0}\) tension, to be discussed below. Essentially, there are two ways to tackle this new issue, both widely discussed in the recent literature. One may continue addressing the problems within the General Relativity (GR) framework by adding new exotic forms of matter to the Universe’s energy content, or build new gravitational theories beyond GR that could drive the accelerating expansion directly. In both approaches, the new models are bound to pass cosmological and astrophysical tests [3,4,5,6,7,8,9,10,11,12,13] (see also references therein for related problems and models).

In the context of the second approach, several modified theories have been proposed. For instance, the f(R) theory is the simplest extension of GR, in which the Ricci scalar R in the Einstein-Hilbert action is replaced by an arbitrary function f(R) [14,15,16,17,18,19,20,21]. Another interesting modification is the f(T) theory, wherein the gravitational interaction is described by the torsion T instead of the curvature tensor. As a result, the Levi-Civita connection is replaced by the Weitzenböck connection in the underlying Riemann–Cartan spacetime. An important benefit of f(T) is that its field equations are second-order differential equations, which significantly reduces the mathematical difficulties of the models compared to f(R) theories, where the field equations are of fourth order. Moreover, the cosmological implications of f(T) theories have already been demonstrated in several proposed models. These models are not only able to explain the current accelerated cosmic expansion but also provide alternatives to inflation. For these reasons, the f(T) theory and its cosmological applications have attracted a lot of interest in the recent literature [22,23,24,25,26,27,28,29,30,31,32,33,34,35,36].

We have already mentioned that it is essential to study and ensure that the crafted models pass cosmological and astrophysical tests, especially now that various observational missions are in operation, rendering lots of new data. Moreover, it is crucial to constrain the model parameters and learn proper consequences because the constraints on the background dynamics are essential for understanding the nature of (interacting) dark energy, structure formation, and future singularity problems. In this regard, developing and utilizing techniques that allow us to get reconstructions (including constraints) through a learning procedure in a model-independent way, e.g., directly from observational data, are of great importance.

One popular and widely used example of such techniques in cosmology is Gaussian Processes [37,38,39,40], which rely on a specific Machine Learning (ML) algorithm and illustrate how ML can be used in cosmology, astrophysics, or any other field of science where data analysis is crucial. Generically, ML algorithms are data-hungry approaches requiring huge amounts of data for training and validation. They also carry some drawbacks that may cause catastrophic results in some cases [41]. In other words, ML may become useless in specific situations, in particular if the collected data have some inherent problem. Bias is among the reasons that an ML approach may fail. Unfortunately, bias is a substantial and sometimes unavoidable part of the data collection process. It can originate from our particular understanding of reality or from the model we use to represent reality. For instance, an intrinsic bias in flat \(\Lambda \)CDM has been pointed out in [42]. Generally, a bias can arise in many situations, including those associated with reasoning under uncertainty, which is actively studied in robotics, dynamical vehicles, and various autonomous system modeling. As a result, over the years, researchers in computer science and other fields have developed multiple methods to reduce bias and increase the robustness of ML algorithms. Among them, an interesting case for us, which will be applied in this paper, is Bayesian Machine Learning (BML). It uses model-based generative processes to mitigate data problems, among others. A proper discussion of BML appears in Sect. 3, where we indicate how, in general, it can be used in cosmology.

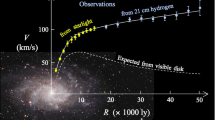

The rest of this section is devoted to the formulation of the specific problem that motivates the present study. It is a relatively new one, known in the literature as the \(H_{0}\) tension: a significant discrepancy between the early-time measurements (e.g., Cosmic Microwave Background (CMB) and Baryon Acoustic Oscillations (BAO)) and the late-time ones (e.g., Type Ia Supernovae (SNe Ia) and \(\textrm{H}(\textrm{z})\)) of the value of the Hubble constant \(H_{0}\). In particular, according to the Planck 2018 results [43], in a flat \(\Lambda \)CDM model the value of the Hubble constant is \(H_{0}=67.27 \pm 0.60 \mathrm {~km} / \textrm{s} / \textrm{Mpc}\) at the \(1\sigma \) confidence level, while the SH0ES Team estimated \(H_{0}=73.2\pm 1.3\mathrm {~km} / \textrm{s} / \textrm{Mpc}\) [44], which exhibits a \(4.14\sigma \) tension.
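As a quick check of the quoted significance, the following minimal sketch computes the tension between the two measurements. It assumes independent Gaussian errors added in quadrature, which is a common convention and not necessarily the exact procedure behind the quoted figure; the function name `tension_sigma` is purely illustrative.

```python
import math


def tension_sigma(value_a, err_a, value_b, err_b):
    """Difference between two measurements in units of their combined 1-sigma error.

    Assumes independent Gaussian uncertainties (added in quadrature).
    """
    return abs(value_a - value_b) / math.sqrt(err_a ** 2 + err_b ** 2)


planck_2018 = (67.27, 0.60)  # km/s/Mpc, flat LCDM value quoted in the text
sh0es = (73.2, 1.3)          # km/s/Mpc, value quoted in the text

tension = tension_sigma(*planck_2018, *sh0es)
print(f"{tension:.2f} sigma")  # prints "4.14 sigma"
```

Under this assumption the simple estimate reproduces the \(4.14\sigma \) value given above.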

This important tension motivated researchers to look for different solutions, ranging from indications of new physics to possible hidden sources of systematic errors and biases in observational data [45,46,47,48,49,50] (see references therein for other options to solve the \(H_{0}\) tension). Indeed, to understand the source of a discrepancy that can challenge the \(\Lambda \)CDM model, other independent observational sources have been used to determine the value of \(H_{0}\). For example, strong gravitational lensing systems are powerful and independent candidates for estimating the Hubble parameter and its current tension [51]. The discovery of the first binary neutron star merging event, GW170817, and the detection of an associated electromagnetic counterpart have made this possible, providing an estimate for the Hubble constant of about \(H_{0}=70_{-8}^{+12}\mathrm {~km} / \textrm{s} / \textrm{Mpc}\). Moreover, the analysis of six well-measured systems from the H0LiCOW lensing program [52] has provided a bound on the Hubble constant of \(73.3_{-1.8}^{+1.7}\mathrm {~km} / \textrm{s} / \textrm{Mpc}\), assuming a flat \(\Lambda \textrm{CDM}\) cosmology [53]. Even though these constraints are weaker than those from SNe Ia and CMB observations, they are expected to improve with the discovery of new merging events with an associated electromagnetic counterpart [54,55,56,57,58,59]. In particular, observations of lensed systems from future surveys such as the Large Synoptic Survey Telescope (LSST) [60] are expected to significantly increase the number of well-measured strongly lensed systems [61]. The increased number of observed lensed sources will also allow constraining non-standard cosmologies.

In strong gravitational lensing systems, the total time delay between two images (or two gravitational-wave events), i and j, is given by

$$\begin{aligned} \Delta t_{i, j}=\frac{\left( 1+z_{l}\right) }{c} \frac{D_{l} D_{s}}{D_{ls}} \Delta \phi _{i, j}, \qquad \Delta \phi _{i, j}=\frac{\left( \varvec{\theta }_{i}-\varvec{\beta }\right) ^{2}}{2}-\psi \left( \varvec{\theta }_{i}\right) -\frac{\left( \varvec{\theta }_{j}-\varvec{\beta }\right) ^{2}}{2}+\psi \left( \varvec{\theta }_{j}\right) , \end{aligned}$$(1)

where \(\Delta \phi _{i, j}\) is the difference between the Fermat potentials at the image angular positions \(\varvec{\theta }_{i}\) and \(\varvec{\theta }_{j}\), with \(\varvec{\beta }\) denoting the source position and \(\psi \) the lensing potential [51, 62, 63].

On the other hand, the measured time delay between strongly lensed images \(\Delta t_{i, j}\), combined with the redshifts of the lens \(z_{\textrm{l}}\) and the source \(z_{\textrm{s}}\) and with the Fermat potential difference \(\Delta \phi _{i, j}\) determined by the lens mass distribution and image positions, allows determining the time-delay distance \(D_{\Delta t}\). This quantity, which is a combination of three angular diameter distances, reads

$$\begin{aligned} D_{\Delta t} \equiv \left( 1+z_{l}\right) \frac{D_{l} D_{s}}{D_{ls}}=\frac{c\, \Delta t_{i, j}}{\Delta \phi _{i, j}}, \end{aligned}$$(2)

where \(D_{l}, D_{s}\) and \(D_{ls}\) stand for the angular diameter distances to the lens, the source, and between the lens and the source, respectively. In fact, Eq. (2) is a very powerful relationship, combined with BML, which allows us to constrain cosmological models without relying on the physics or observations of the lensing model and Fermat potential. As a result, having only cosmological models and taking into account that in a flat Friedmann–Robertson–Walker (FRW) Universe, the angular diameter distance reads

$$\begin{aligned} D_{A}(z)=\frac{c}{H_{0}(1+z)} \int _{0}^{z} \frac{d z^{\prime }}{E\left( z^{\prime },r\right) }, \end{aligned}$$(3)

where \(E\left( z^{\prime },r\right) \) is the dimensionless Hubble parameter, it is possible to learn the constraints on the model parameters embedded in it. Ideally, future observational data coming from lensed gravitational wave (GW) signals together with their corresponding electromagnetic (EM) counterparts will provide new and significant insights, which may lead to an alleviation of the \(H_{0}\) tension. Therefore, it is worth investigating their implications for dark energy and modified theories of gravity.
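To make the link between a cosmological model and the lensing observable concrete, here is a minimal sketch that computes the time-delay distance for a flat \(\Lambda \)CDM background. It assumes the standard definitions \(D_{\Delta t}=(1+z_{l}) D_{l} D_{s}/D_{ls}\) and \(D_{A}(z)=\frac{c}{H_{0}(1+z)}\int _{0}^{z} dz^{\prime }/E(z^{\prime })\), with the flat-universe relation for \(D_{ls}\); radiation is neglected, and all function names are illustrative rather than taken from the analysis pipeline.

```python
import math

C_KM_S = 299792.458  # speed of light in km/s


def e_lcdm(z, omega_dm):
    """Dimensionless Hubble parameter E(z) for flat LCDM (radiation neglected)."""
    return math.sqrt(omega_dm * (1.0 + z) ** 3 + (1.0 - omega_dm))


def comoving_distance(z, h0, omega_dm, steps=2000):
    """(c / H0) * integral_0^z dz' / E(z'), in Mpc, via the trapezoid rule."""
    dz = z / steps
    total = 0.5 * (1.0 / e_lcdm(0.0, omega_dm) + 1.0 / e_lcdm(z, omega_dm))
    for i in range(1, steps):
        total += 1.0 / e_lcdm(i * dz, omega_dm)
    return C_KM_S / h0 * total * dz


def time_delay_distance(z_l, z_s, h0, omega_dm):
    """D_dt = (1 + z_l) * D_l * D_s / D_ls for a spatially flat universe, in Mpc."""
    dc_l = comoving_distance(z_l, h0, omega_dm)
    dc_s = comoving_distance(z_s, h0, omega_dm)
    d_l = dc_l / (1.0 + z_l)            # angular diameter distance to the lens
    d_s = dc_s / (1.0 + z_s)            # angular diameter distance to the source
    d_ls = (dc_s - dc_l) / (1.0 + z_s)  # lens-to-source distance (flat case only)
    return (1.0 + z_l) * d_l * d_s / d_ls


d_dt = time_delay_distance(z_l=0.5, z_s=1.5, h0=70.0, omega_dm=0.3)
print(f"D_dt = {d_dt:.0f} Mpc")
```

Because every distance scales as \(1/H_{0}\), so does \(D_{\Delta t}\); this is why time-delay measurements are directly sensitive to the Hubble constant, while the dependence on the remaining parameters enters only through \(E(z)\).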

In our study, we pursue two goals. First, we use the advantages of BML to constrain various f(T) models using the physics of GW+EM systems. To our knowledge, this is the first time that BML and the time delay of GW+EM systems have both been involved in studying f(T) models. In this case, the generative process used in BML is based on Eqs. (2) and (3), thus establishing a direct link between cosmology and strong lensing time delay (SLTD). Our second goal is to learn how the \(H_{0}\) tension can be solved in f(T) gravity. However, given specific aspects of the method, we limit ourselves to three specific viable f(T) models. We emphasize that, given these specific aspects of our analysis, the learned constraints are validated using the observational Hubble data (OHD) obtained from cosmic chronometers and BAO data (see, for instance, [39] and references therein).

The validation of the BML results with OHD presented in our study demonstrates that it is reliable to learn possible biases between H(z) and future SLTD data. Moreover, since BML uses a model-based generation process, we are here able, for forecasting purposes, to consider different situations to understand forthcoming data that could affect our understanding of the \(H_{0}\) tension. In particular, we should indicate that, by considering different redshift ranges and numbers for the lenses and sources, we have learned that future SLTD data may strongly affect our understanding of the \(H_{0}\) tension. Hopefully, discussed predictions for the background dynamics can be validated by new missions and data in the near future, proving the forecasting and the robustness of the method used in our analysis.

This paper is organized as follows. In Sect. 2, we provide a brief description of the cosmological dynamics in the framework of the f(T) theory and introduce three specific f(T) models to be constrained using BML. Section 3 provides the methodology of the BML approach used in our analysis. In Sect. 4, our results are presented. Finally, the conclusions that follow from the analysis are given in Sect. 5.

2 Theoretical framework and models

In this section we briefly introduce the formalism of f(T) gravity and its application in cosmology. Then we introduce three viable models that we will constrain in our study.

2.1 f(T) gravity

In f(T) gravity the dynamical variables are the tetrad fields \(e^A{ }_\mu \), where Greek indices correspond to the spacetime coordinates and Latin indices correspond to the tangent space coordinates. The tetrad fields \(e^A{ }_\mu \) form an orthonormal basis in the tangent space at each point of the spacetime manifold. This implies that they satisfy the relation \(g_{\mu \nu }=\eta _{A B} e^A{ }_\mu e^B{ }_\nu \), with \(g_{\mu \nu }\) the spacetime metric and where \(\eta _{A B}={\text {diag}}(1,-1,-1,-1)\) is the tangent-space metric.

The Weitzenböck connection in torsional gravity is defined as

This connection does not include the Riemann curvature but only a non-zero torsion, namely

Additionally, the torsion scalar is

where

with

This theory is equivalent to general relativity at the level of the equations of motion, and the generalized Lagrangian can be written as

where \(e={\text {det}}\left( e_\mu ^A\right) =\sqrt{-g}, M_P\) is the Planck mass and f(T) is the arbitrary function of torsion scalar T (we use units where \(c=1\) ).

By varying the above action with respect to the tetrads, we obtain the field equations as

where \(f_T \equiv \partial f(T) / \partial T, f_{T T} \equiv \partial ^2 f(T) / \partial T^2\), and \(T(m)_\rho ^\mu \) is the matter energy-momentum tensor.

2.2 Background dynamics

Concerning the background dynamics of the universe, we should study the cosmology of f(T) gravity in the context of a homogeneous, isotropic, and spatially flat universe, characterized by \(e_{\mu }^{A}={\text {diag}}(1, a, a, a)\), with the FLRW geometry described by

The Friedmann equations in this context become

and

with \(H \equiv \frac{{\dot{a}}}{a}\) being the Hubble parameter, and \(\rho _{dm}, P_{dm}\) being the energy density and pressure for cold dark matter, respectively. Accordingly, we can define the energy density and pressure for dark energy as

Consequently, the dark energy equation of state can be written as

In the above discussed setup the cold dark matter will have its evolution dictated by the conservation of the energy-momentum tensor

while the dark energy density will also follow the conservation equation

with \(\rho _{de}\) and \(P_{de}\) defined by Eq. (14). Since \(T=-6 H^{2}\), the normalized Hubble parameter E(z) can be written as \(E^{2}(z) \equiv \frac{H^{2}(z)}{H_{0}^{2}}=\frac{T(z)}{T_{0}}\), with \(H_{0}\) being the present value of the Hubble parameter, and \(T_{0}=-6 H_{0}^{2}\). It is worth mentioning that, in our analysis, we rewrite the Friedmann equation for convenience as

with y(z, r) being

and \(\Omega ^{(0)}_{de}\) being the dark energy density parameter today,

produced by the modifying f(T) term. Note that the effect of the modified dynamics of teleparallel gravity is represented by the function y(z, r), in which r corresponds to the free parameters of the specific model considered. The main characteristics of this function are that GR must be reproduced in some limit of the parameters, while at the cosmological level the concordance model \(\Lambda \)CDM can also be recovered (\(y=1\)).

2.3 f(T) models

In this section we present three f(T) models to be investigated in this work. The three selected functions have already been studied in the literature and are among the preferred ones by available data when compared to the \(\Lambda \)CDM model (see, for instance, [31]). We will see how BML affects the predictions for each model while verifying the consistency with previous works. In what follows, we shall introduce the considered f(T) forms and comment on their cosmological implications.

1. Power-law model. The first f(T) model (hereafter \(f_{1}\)CDM) is the power-law model, which reads

$$\begin{aligned} f_{1}(T)= \alpha (-T)^{b}, \end{aligned}$$(21)

where \(\alpha \) and b are the two free parameters, which can be related through

$$\begin{aligned} \alpha =\left( 6 H_{0}^{2}\right) ^{1-b}\frac{1-\Omega ^{(0)}_{dm}}{2 b-1}. \end{aligned}$$(22)

Fixing the normalization at \(z=0\), where \(H(z=0)=H_{0}\), Eq. (19) gives the distortion factor simply as

$$\begin{aligned} y(z, b)=E^{2 b}(z, b), \end{aligned}$$(23)

and the Friedmann equation becomes

$$\begin{aligned} E^{2}(z, b)=\Omega ^{(0)}_{dm}(1+z)^{3}+\Omega ^{(0)}_{de} E^{2 b}(z, b). \end{aligned}$$(24)

We can easily see that \(b=0\) reproduces the \(\Lambda \)CDM cosmology. This model gives a de-Sitter limit for \(z=-1\), and deviations from the standard model are more evident for higher |b|. However, these deviations are generally small, as confirmed using numerical techniques.

2. Exponential model. The second f(T) model (hereafter \(f_{2}\)CDM) is known as the exponential model, and reads

$$\begin{aligned} f_2(T)=\alpha T_0\left( 1-e^{-p T / T_0}\right) , \end{aligned}$$(25)

where, again, \(\alpha \) and p are model parameters that can be related as

$$\begin{aligned} \alpha =\frac{\Omega ^{(0)}_{de}}{1-(1+2 p) e^{-p}}. \end{aligned}$$(26)

For this model, \(\Lambda \)CDM is recovered when \(p \rightarrow +\infty \) or, equivalently, when \(b \equiv 1/p \rightarrow 0^{+}\). Substituting \(p=\frac{1}{b}\), the distortion factor becomes

$$\begin{aligned} y(z, b)=\frac{1-\left( 1+\frac{2 E^{2}}{b}\right) e^{-\frac{E^{2}}{b}}}{1-\left( 1+\frac{2}{b}\right) e^{-\frac{1}{b}}}, \end{aligned}$$(27)

and, consequently, the Friedmann equation for this model becomes

$$\begin{aligned} E^{2}(z, b)=\Omega ^{(0)}_{dm}(1+z)^{3}+\Omega ^{(0)}_{de} \frac{1-\left( 1+\frac{2 E^{2}}{b}\right) e^{-\frac{E^{2}}{b}}}{1-\left( 1+\frac{2}{b}\right) e^{-\frac{1}{b}}}. \end{aligned}$$(28)

3. Square-root exponential model. Finally, the third f(T) model (hereafter \(f_{3}\)CDM) considered in this work is also of exponential form but has a different exponent, namely

$$\begin{aligned} f_3(T)=\alpha T_0\left( 1-e^{-p \sqrt{T / T_0}}\right) , \quad p=\frac{1}{b}, \end{aligned}$$(29)

where the \(\alpha \) and p parameters are related as

$$\begin{aligned} \alpha =\frac{\Omega ^{(0)}_{de}}{1-(1+p) e^{-p}}. \end{aligned}$$(30)

It is easy to see that \(\Lambda \)CDM is recovered when \(p \rightarrow +\infty \) or, equivalently, \(b\rightarrow 0^{+}\). Substituting \(p=\frac{1}{b}\), the distortion factor becomes

$$\begin{aligned} y(z, b)=\frac{1-\left( 1+\frac{E}{b}\right) e^{-\frac{E}{b}}}{1-\left( 1+\frac{1}{b}\right) e^{-\frac{1}{b}}}, \end{aligned}$$(31)

and the Friedmann equation becomes

$$\begin{aligned} E^{2}(z, b)=\Omega _{d m}^{(0)}(1+z)^{3}+\Omega _{d e}^{(0)} \frac{1-\left( 1+\frac{E}{b}\right) e^{-\frac{E}{b}}}{1-\left( 1+\frac{1}{b}\right) e^{-\frac{1}{b}}}. \end{aligned}$$(32)
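
Since E appears on both sides of Eqs. (24), (28) and (32), the Friedmann equation of each model is implicit and has to be solved numerically. The following is a minimal sketch of one possible approach, a fixed-point iteration started from the \(\Lambda \)CDM value. It assumes \(\Omega ^{(0)}_{de}=1-\Omega ^{(0)}_{dm}\) (radiation neglected) and small \(|b|\) (with \(b>0\) for the two exponential models), where the iteration is a contraction; the function names are illustrative and this is not necessarily the solver used in the analysis.

```python
import math


def y_power_law(e2, b):
    """Distortion factor of Eq. (23): y = E^(2b)."""
    return e2 ** b


def y_exponential(e2, b):
    """Distortion factor of Eq. (27), valid for b > 0."""
    num = 1.0 - (1.0 + 2.0 * e2 / b) * math.exp(-e2 / b)
    den = 1.0 - (1.0 + 2.0 / b) * math.exp(-1.0 / b)
    return num / den


def y_sqrt_exponential(e2, b):
    """Distortion factor of Eq. (31), valid for b > 0."""
    e = math.sqrt(e2)
    num = 1.0 - (1.0 + e / b) * math.exp(-e / b)
    den = 1.0 - (1.0 + 1.0 / b) * math.exp(-1.0 / b)
    return num / den


def solve_e2(z, omega_dm, b, y_func, tol=1e-12, max_iter=500):
    """Solve E^2 = Omega_dm (1+z)^3 + Omega_de * y(E^2, b) by fixed-point iteration."""
    matter = omega_dm * (1.0 + z) ** 3
    omega_de = 1.0 - omega_dm   # assumption: flat universe, no radiation
    e2 = matter + omega_de      # LCDM value as the starting guess
    for _ in range(max_iter):
        new_e2 = matter + omega_de * y_func(e2, b)
        if abs(new_e2 - e2) < tol:
            return new_e2
        e2 = new_e2
    raise RuntimeError("fixed-point iteration did not converge")


for name, y_func in [("f1CDM", y_power_law),
                     ("f2CDM", y_exponential),
                     ("f3CDM", y_sqrt_exponential)]:
    e2 = solve_e2(z=1.0, omega_dm=0.3, b=0.05, y_func=y_func)
    print(name, "E(z=1) =", math.sqrt(e2))
```

For small b the two exponential models are numerically indistinguishable from \(\Lambda \)CDM at the background level, whereas the power-law model departs from it at first order in b.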

3 Methodology

In this section we review the building blocks of BML and discuss how it can be used to explore the parameter space of the background dynamics of the three f(T) models introduced in the previous section.

3.1 Bayesian machine learning (BML)

Constraining a cosmological scenario with observational data is the main tool for estimating whether the model is applicable or not. It also narrows down the phenomenology step by step, revealing the acceptable scenario. Bayesian modeling and parameter inference with standard methods, such as Markov chain Monte Carlo (MCMC), play a central role in this chain. Here we will not discuss MCMC in great detail but provide a basic motivation to clarify why we need to look for alternative ways to constrain models. For this purpose, let us start with the Bayes theorem

$$\begin{aligned} P(\theta |{\mathcal {D}})=\frac{P({\mathcal {D}}|\theta ) P(\theta )}{P({\mathcal {D}})}, \end{aligned}$$(33)

where \(P(\theta )\) is the prior belief on the parameter \(\theta \) describing the model under consideration, and \(P({\mathcal {D}}|\theta )\) is the likelihood, which represents the probability of observing the data \({\mathcal {D}}\) given the parameter \(\theta \) or the model (in our case, the cosmological model). Finally, \(P({\mathcal {D}})\) is the marginal likelihood or model evidence. The Bayes theorem allows us to find the probability that a given model with parameters \(\theta \) explains the given data \({\mathcal {D}}\). This probability is denoted \(P(\theta |{\mathcal {D}})\) and is a conditional probability. It should be mentioned that the marginal likelihood \(P({\mathcal {D}})\) is a useful quantity for model selection because it quantifies how probable the data are under the model, irrespective of its parameter values. However, the exact computation of these probabilities is often intractable, and this has led to the development and usage of alternative methods to overcome the intractable computational aspects. One of the alternative methods for performing Bayesian inference is variational inference, discussed below. It is considerably faster than MCMC techniques and does not suffer from the same convergence issues, making it very attractive for cosmological and astrophysical applications.

Now, how can variational inference be helpful, and what is it? It is a helpful tool since it suggests solving an optimization problem by approximating the target probability density. The Kullback–Leibler (KL) divergence is used as a measure of such proximity [64]. In our case, the target probability density would be the Bayesian posterior which allows us to constrain the model parameters. For this purpose, the first step is finding or proposing a family of densities \({\mathcal {Q}}\) and then finding the member of that family \(q(\theta ) \in {\mathcal {Q}}\) which is the closest one to the target probability density. This member is known as the variational posterior that minimizes the KL divergence to the exact posterior, that is

where \(\theta \) denotes the latent variables (in our case, the model parameters), and the KL divergence is defined as

Using Bayes theorem, we can rewrite the above KL divergence as

It can be noticed from the above equation that in order to minimize the above KL divergence term, one needs to minimize the second and third terms in Eq. (36). Now, expanding the joint likelihood \(p({\mathcal {D}}, \theta )\) in Eq. (36) the variational lower bound can be rewritten as [65]

The first term in the above equation is a sort of data fit term maximizing the likelihood of the observational data. In contrast, the second term is KL-divergence between the variational distribution and the prior. It can be interpreted as a regularization term that ensures that the variational distribution does not become too complex, potentially leading to over-fitting. That being said, we have a fitting tool to control the process and efficiently avoid computation problems by increasing or decreasing the contribution of the first two terms in Eq. (35). There is interesting and valuable information on this topic which can be found in [66,67,68,69], to mention a few references.
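
For readers who want the chain of steps in one place, the following is a sketch of the standard derivation linking the KL divergence to the variational lower bound (often called the ELBO). The notation \(\mathbb {E}_{q}\) for the expectation over \(q(\theta )\) and the grouping of terms are assumptions made here for illustration and may differ from the numbered equations of this section.

$$\begin{aligned} \textrm{KL}\left( q(\theta ) \,\Vert \, p(\theta |{\mathcal {D}})\right)&=\mathbb {E}_{q}\left[ \log q(\theta )\right] -\mathbb {E}_{q}\left[ \log p(\theta |{\mathcal {D}})\right] \\&=\mathbb {E}_{q}\left[ \log q(\theta )\right] -\mathbb {E}_{q}\left[ \log p({\mathcal {D}}, \theta )\right] +\log p({\mathcal {D}}), \\ \textrm{ELBO}(q)&\equiv \mathbb {E}_{q}\left[ \log p({\mathcal {D}}, \theta )\right] -\mathbb {E}_{q}\left[ \log q(\theta )\right] =\mathbb {E}_{q}\left[ \log p({\mathcal {D}}|\theta )\right] -\textrm{KL}\left( q(\theta ) \,\Vert \, p(\theta )\right) . \end{aligned}$$

Since \(\log p({\mathcal {D}})\) does not depend on q, and \(\log p({\mathcal {D}})=\textrm{ELBO}(q)+\textrm{KL}\left( q(\theta ) \,\Vert \, p(\theta |{\mathcal {D}})\right) \), minimizing the KL divergence to the exact posterior is equivalent to maximizing the lower bound, which never requires evaluating the intractable evidence.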

However, the above discussion does not answer how we can find the approximation for the target probability density. An easy option could be to guess it for a simple model where the Bayes inference is also tractable. In practice, this is not an option. The other option is to learn it from the model directly (in our case, directly from the crafted cosmological model), using Neural Networks (NN). In this case, both terms in Eq. (37) can be interpreted from a new perspective, reducing to the initial personal belief and the generated model belief, respectively. It is a convenient approach that allows overcoming various data-related issues because, in this case, even low-quality data can be used at the end to validate the learned results. This idea and its success represent years of development, allowing to transfer the whole subject to another level. Eventually, we should mention that as a learning method, we use deep probabilistic learning, a type of deep learning accounting for uncertainties in the model, initial belief, belief update and deep neural networks. This approach provides the adequate groundwork to output reliable estimations for many ML tasks.

3.2 Implementation of BML

We make use of the probabilistic programming package PyMC3 [70], which is built on the Python deep learning library Theano and provides cutting-edge inference algorithms to define the physical model, perform variational inference, and build the posterior distribution. The PyMC3 public library is enriched with some excellent examples demonstrating how the learning process can be established and how the probability distributions can be learned; therefore, we omit any specific discussion of the mathematical framework behind ML algorithms and BML. We strongly suggest that readers interested in exploring BML and variational inference follow the examples and discussions provided in the PyMC3 manual.

Now, let us discuss how the above considerations allow us to integrate BML and variational inference to learn the constraints on the crafted cosmological model. In short, in our analysis using PyMC3, we have followed these steps:

1. We define our cosmological model and the observable to be generated, and hence we establish the elements of the so-called generative process. In this paper, this process is based on Eqs. (2) and (3).

2. We treat the output of the generative process as observational data. It is very important to understand the meaning of this step. In particular, having generated the so-called data, we now generate probability distributions showing how a given cosmological model can explain the data. In this way, we significantly reduce the complexity of the problem because the family of probabilities approximating the final posterior depends directly only on the priors. To see this dependency, one needs to consider the meaning of the right-hand side of the Bayes theorem, Eq. (33). This is a good starting point, as we will see below.

3. Finally, we run the learning algorithm to get a new distribution over the model parameters and update our prior beliefs imposed on the cosmological model parameters.

The learning process and its direction are always controlled by the KL divergence. Since we rely on probabilistic programming, after enough generated probability distributions we expect to learn the asymptotically correct form of the posterior distribution, allowing us to infer the constraints.
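
The three steps above can be illustrated with a deliberately simplified toy problem. This sketch does not use PyMC3 and is not the pipeline of this paper: it assumes a single parameter (\(H_{0}\)) observed directly with Gaussian noise, a Gaussian prior instead of the flat priors used in the analysis, and a brute-force grid search over Gaussian candidates in place of the stochastic-gradient optimization performed by automatic variational inference tools. All names and numbers are hypothetical. Its purpose is only to show the lower bound acting as "data fit minus KL to the prior" and recovering the exact posterior when the latter belongs to the variational family.

```python
import math
import random


def elbo(mu, s, data_sum, data_sq_sum, n, noise, prior_mean, prior_std):
    """Lower bound for q = N(mu, s^2), Gaussian likelihood and Gaussian prior."""
    # Data-fit term: E_q[log p(D | theta)], available in closed form here.
    sq_err = data_sq_sum - 2.0 * mu * data_sum + n * mu * mu + n * s * s
    fit = -0.5 * n * math.log(2.0 * math.pi * noise ** 2) - sq_err / (2.0 * noise ** 2)
    # Regularization term: KL(q || prior) between two Gaussians.
    kl = (math.log(prior_std / s)
          + (s * s + (mu - prior_mean) ** 2) / (2.0 * prior_std ** 2) - 0.5)
    return fit - kl


# Step 1: generative process (toy stand-in for the cosmological model).
rng = random.Random(0)
noise = 2.0
data = [rng.gauss(70.0, noise) for _ in range(50)]
n, data_sum = len(data), sum(data)
data_sq_sum = sum(d * d for d in data)

# Step 2: the generated sample is treated as data; the prior is the initial belief.
prior_mean, prior_std = 71.0, 7.0

# Step 3: learning, i.e. pick the member of the family that maximizes the bound.
mu_grid = [64.0 + 0.02 * i for i in range(701)]   # candidate means in [64, 78]
s_grid = [0.05 + 0.01 * j for j in range(196)]    # candidate widths in [0.05, 2.0]
_, mu_vi, s_vi = max(
    (elbo(mu, s, data_sum, data_sq_sum, n, noise, prior_mean, prior_std), mu, s)
    for mu in mu_grid for s in s_grid
)

# Exact conjugate posterior, used only to check the variational answer.
precision = 1.0 / prior_std ** 2 + n / noise ** 2
mu_exact = (prior_mean / prior_std ** 2 + data_sum / noise ** 2) / precision
s_exact = 1.0 / math.sqrt(precision)

print(f"variational: {mu_vi:.2f} +/- {s_vi:.2f}")
print(f"exact:       {mu_exact:.2f} +/- {s_exact:.2f}")
```

In a realistic setting the likelihood would be built from the time-delay distances of Eqs. (2) and (3), the expectation in the data-fit term would be estimated by sampling, and the optimization would be gradient based.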

In our analysis we considered several different scenarios where data have been generated, which should be understood in the context of the above discussion, to cover different redshift ranges for both lens and sources and different numbers of the lenses and sources. The distribution for the lens \(z_{l}\) and source \(z_{s}\) considered in this paper can be found in Table 2.

To end this section, we would like to mention that we cover lenses and sources distributed over both low and high redshifts. This is because the observational data for the cosmic history of the Universe are available at low redshift ranges and can be used to validate the learned results obtained from BML. On the other hand, we consider high redshift ranges for forecasting reasons; however, the complete validation of the results will have to wait for the near future, when observations of higher redshift data actually become available. Indeed the validation of the learned results is based on the expansion rate data presented in Table 1. Moreover, we need to stress that our initial belief used as an input is the \(\Lambda \)CDM model. Even if we start from different initial beliefs, our learning procedure asymptotically converges to the results discussed in this paper.

4 Learned constraints on the model parameters

In this section, we present the learned constraints on the f(T) cosmological parameters obtained following the procedure described in Sect. 3. For the sake of convenience, we provide our results in three subsections.

Fig. 1 \(1\sigma \) and \(2\sigma \) confidence-level contour plots for the cosmological parameters and the parameter b for the \(f_{1}\)CDM model using the SLTD simulated data obtained from the generative process based on Eqs. (2) and (3). Each contour color stands for lenses and sources distributed over a specific redshift range with lens number \(N_{\text {lens}}=50\) (navy contour) and \(N_{\text {lens}}=100\) (green, gray, red, and blue contours), respectively. The flat priors \(H_{0} \in [64,78]\), \(\Omega ^{(0)}_{dm} \in [0.2,0.4]\) and \(b \in [-0.1,0.1]\) have been imposed and used in the generative process. Our initial belief used as an input is the \(\Lambda \)CDM model.

Fig. 2 BML predictions for the redshift evolution of the Hubble parameter H, dark matter abundance \(\Omega _{m}\), deceleration parameter q, and equation of state parameter \(\omega _{de} = \frac{p_{de}}{\rho _{de}}\) for the best fit values of the model parameters of the \(f_{1}\)CDM model presented in Table 3. Each colored line stands for lenses and sources distributed over a specific redshift range with lens number \(N_{\text {lens}}=50\) (navy curve) and \(N_{\text {lens}}=100\) (green, gray, red, and blue curves), respectively.

4.1 \(f_{1}\)CDM

The first case corresponds to the \(f_{1}\)CDM model given by Eqs. (21) and (24), with the generative process for BML organized using Eqs. (2) and (3). For all cases discussed below, the flat priors \(H_{0} \in [64,78]\), \(\Omega ^{(0)}_{dm} \in [0.2,0.4]\) and \(b \in [-0.1,0.1]\) have been imposed. We also note that the N lenses (and respective sources) for a given redshift range are distributed uniformly according to the intervals given in Table 2. From our analysis, we have learned that:

- The best fit values and \(1\sigma \) errors of the model parameters when \(z_{l}\in [0.1,1.2]\) and \(z_{s}\in [0.3,1.7]\) for \(N _{lens}= 50\) are \(\Omega ^{(0)}_{dm} = 0.28 \pm 0.012\), \(H_{0} = 69.61 \pm 0.141\) km/s/Mpc and \(b = 0.00197 \pm 0.005\).

- On the other hand, when \(z_{l}\in [0.1,1.2]\) and \(z_{s}\in [0.3,1.7]\) with \(N _{lens}= 100\) the best fit values of the model parameters are found to be \(\Omega ^{(0)}_{dm} = 0.29 \pm 0.012\), \(H_{0} = 68.81 \pm 0.145\) km/s/Mpc and \(b = 0.003 \pm 0.005\).

- Moreover, for the other two cases when \(z_{l}\in [0.1,1.5]\) and \(z_{s}\in [0.3,1.7]\), and \(z_{l}\in [0.1,2.0]\) and \(z_{s}\in [0.3,2.5]\) (in both cases \(N _{lens}= 100\)), we have found that \(\Omega ^{(0)}_{dm} = 0.297 \pm 0.011\), \(H_{0} = 68.84 \pm 0.146\) km/s/Mpc and \(b = 0.0043 \pm 0.0051\), and \(\Omega ^{(0)}_{dm} = 0.328 \pm 0.012\), \(H_{0} = 69.06 \pm 0.175\) km/s/Mpc and \(b = 0.0071 \pm 0.0051\), respectively.

- Finally, we found \(\Omega ^{(0)}_{dm} = 0.302 \pm 0.0105\), \(H_{0} = 65.56 \pm 0.134\) km/s/Mpc and \(b = 0.00013 \pm 0.0005\), when \(z_{l}\in [0.1,2.4]\) and \(z_{s}\in [0.3,2.5]\) for \(N _{lens}= 100\).

A compact summary of the learned results can be found in Table 3, while Fig. 1 represents the \(1\sigma \) and \(2\sigma \) contour map of \(f_{1}\)CDM. It is easy to see that BML imposed very tight constraints on the parameters. We note that the parameter b, which determines the deviation from the \(\Lambda \)CDM model, is close to zero in all cases, indicating that according to SLTD measurements, the \(f_{1}\)CDM model most likely does not deviate from the \(\Lambda \)CDM model. This is not surprising because similar conclusions have already been achieved in the literature. However, we need to stress that this is the first indication that the developed pipeline is robust and allows us to learn previously known results from a completely different setup, which is impossible to reproduce with classical methods used in cosmology. As in any other ML algorithm, we also need to validate our BML learned results, and for this purpose, we use available OHD as discussed already. In our opinion, it is reasonable to follow this particular way of validating the learned results because we aimed to learn how to solve the \(H_{0}\) tension in this particular model.

The graphical results of the validation process can be found in panel (a) of Fig. 2 where we compare the redshift evolution of the Hubble parameter predicted by BML with OHD from cosmic chronometers and BAO. We also study the dark matter abundance \(\Omega ^{(0)}_{dm}\), deceleration parameter q, and equation of state parameter \(\omega _{de}\) for a better understanding of the background dynamics which can be found in (b), (c), and (d) panels, respectively. We keep the same convention for the navy, green, grey, red, and blue curves as the legends in Fig. 1, and the dots correspond to the 40 data points representing available OHD to be found in Table 1.

We notice that, according to the mean of the learned results, the redshift evolution of the Hubble function matches the OHD at low redshifts perfectly, but some tension arises at high redshifts. This is an interesting warning from the learning method, which should be kept in mind in future analyses of this model with strong lensing time delay data. Additionally, from panel (c) of Fig. 2 we observe a good phase transition between a decelerating and an accelerating phase in all studied cases. On the other hand, panel (d) of Fig. 2 shows that the model behaves like the cosmological constant, as \(\omega _{de} \approx -1\). However, a deviation from the cosmological constant can also be expected to be observed. Interestingly, this result, in turn, clearly indicates support for dynamical dark energy models.

In conclusion, we see that the \(f_{1}\)CDM model is not able to solve the \(H_{0}\) tension, and new observational data with significantly increased lens-source numbers will eventually disfavor the model. According to the learned results, the same claim can be made with high fidelity even when the systems are observed beyond the currently available redshift ranges. Finally, another significant result we learned, which should be tackled properly in the future, is that the tension between OHD and SLTD data at high redshifts should be considered seriously.
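The conclusion above can be made quantitative with a simple Gaussian tension estimate. The following is a minimal sketch, not part of the BML pipeline: it takes the four \(N_{lens}=100\) values of \(H_{0}\) quoted above and compares them with two reference values that are assumptions made here for illustration, namely the commonly quoted Planck 2018 value (\(67.4 \pm 0.5\) km/s/Mpc) and the local distance-ladder value of Riess et al. 2021 (\(73.2 \pm 1.3\) km/s/Mpc). The dictionary keys and function name are hypothetical.

```python
import math

# Learned f1CDM values (H0, 1-sigma error) for N_lens = 100, as quoted in the text.
F1_H0 = {
    "zl in [0.1,1.2], zs in [0.3,1.7]": (68.81, 0.145),
    "zl in [0.1,1.5], zs in [0.3,1.7]": (68.84, 0.146),
    "zl in [0.1,2.0], zs in [0.3,2.5]": (69.06, 0.175),
    "zl in [0.1,2.4], zs in [0.3,2.5]": (65.56, 0.134),
}

# Reference values in km/s/Mpc: assumptions for illustration, not taken from this section.
PLANCK_2018 = (67.4, 0.5)
LOCAL_R21 = (73.2, 1.3)


def gaussian_tension(a, b):
    """Number of sigma separating two independent Gaussian estimates (value, error)."""
    return abs(a[0] - b[0]) / math.hypot(a[1], b[1])


for label, estimate in F1_H0.items():
    t_planck = gaussian_tension(estimate, PLANCK_2018)
    t_local = gaussian_tension(estimate, LOCAL_R21)
    print(f"{label}: {t_planck:.1f} sigma vs Planck, {t_local:.1f} sigma vs local")
```

Under these assumed reference values, every learned \(f_{1}\)CDM value stays more than three standard deviations below the local measurement, which is consistent with the statement that the model does not solve the tension.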

4.2 \(f_{2}\)CDM

The second model to be considered is \(f_{2}\)CDM, given by Eqs. (25) and (28). In this case, the generative process for BML has also been organized following Eqs. (2) and (3). During the study of this model we have learned the best fit values of the model parameters with their \(1\sigma \) errors. In particular, we found:

-

When \(z_{l}\in [0.1,1.2]\) and \(z_{s}\in [0.3,1.7]\) for \(N _{lens}= 50\), the best fit values and \(1\sigma \) errors are found to be \(\Omega ^{(0)}_{dm} = 0.277 \pm 0.01\), \(H_{0} = 73.59^{+0.174}_{-0.165}\) km/s/Mpc and \(p = 5.88^{+0.29}_{-0.34}\), respectively.

-

On the other hand, when \(z_{l}\in [0.1,1.2]\) and \(z_{s}\in [0.3,1.7]\) with \(N _{lens}= 100\) the best fit values of the model parameters are found to be \(\Omega ^{(0)}_{dm} = 0.28 \pm 0.01\), \(H_{0} = 74.68 \pm 0.148\) km/s/Mpc and \(p = 6.01 \pm 0.256\).

-

Moreover, for the other two cases when \(z_{l}\in [0.1,1.5]\) and \(z_{s}\in [0.3,1.7]\), and \(z_{l}\in [0.1,2.0]\) and \(z_{s}\in [0.3,2.5]\) (in both cases \(N _{lens}= 100\)), we have found that \(\Omega ^{(0)}_{dm} = 0.279 \pm 0.0097\), \(H_{0} = 74.90 \pm 0.135\) km/s/Mpc and \(p = 5.26 \pm 0.179\), and \(\Omega ^{(0)}_{dm} = 0.276 \pm 0.01\), \(H_{0} = 74.64 \pm 0.145\) km/s/Mpc and \(p = 5.4 \pm 0.135\), respectively.

-

Finally, we find that \(\Omega ^{(0)}_{dm} = 0.275 \pm 0.01\), \(H_{0} = 74.41^{+0.183}_{-0.178}\) km/s/Mpc and \(p = 5.67^{+0.175}_{-0.17}\). This is obtained for the case when \(z_{l}\in [0.1,2.4]\) and \(z_{s}\in [0.3,2.5]\) for \(N _{lens}= 100\).

For all cases discussed above, flat priors as \(H_{0} \in [64,78]\), \(\Omega ^{(0)}_{dm} \in [0.2,0.4]\) and \(p \in [-10,10]\) have been imposed. Moreover, N lenses (and sources respectively) for all the considered cases are distributed uniformly as in the case of \(f_{1}\)CDM model discussed in the previous section.
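The setup described above (flat priors on the parameters, uniformly distributed lens and source redshifts) can be sketched as follows. This is only an illustration of the sampling step under stated assumptions, not the generative process of Eqs. (2) and (3): the function names are hypothetical, NumPy is an assumed tool, and the requirement \(z_{s}>z_{l}\) for each pair is an assumption enforced here by redrawing.

```python
import numpy as np

# Flat priors quoted in the text.
PRIORS = {"H0": (64.0, 78.0), "Omega_dm0": (0.2, 0.4), "p": (-10.0, 10.0)}


def sample_priors(n, rng):
    """Draw n independent parameter sets from the flat priors."""
    return {name: rng.uniform(lo, hi, size=n) for name, (lo, hi) in PRIORS.items()}


def sample_lens_source_redshifts(n_lens, zl_range, zs_range, rng):
    """Uniform lens and source redshifts; pairs with z_s <= z_l are redrawn (assumption)."""
    zl = rng.uniform(*zl_range, size=n_lens)
    zs = rng.uniform(*zs_range, size=n_lens)
    bad = zs <= zl
    while bad.any():
        zl[bad] = rng.uniform(*zl_range, size=bad.sum())
        zs[bad] = rng.uniform(*zs_range, size=bad.sum())
        bad = zs <= zl
    return zl, zs


rng = np.random.default_rng(0)
theta = sample_priors(1000, rng)
zl, zs = sample_lens_source_redshifts(100, (0.1, 1.2), (0.3, 1.7), rng)
print(theta["H0"].min(), theta["H0"].max(), zl.size, bool((zs > zl).all()))
```

Changing the two redshift ranges and `n_lens` reproduces the five configurations listed above; how these redshifts enter the simulated time delays is left to Eqs. (2) and (3).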

The \(1\sigma \) and \(2\sigma \) confidence-level contour plots for the \(f_{2}\)CDM model, using the simulated SLTD datasets obtained from the generative process based on Eqs. (2) and (3). Each contour color stands for lenses and sources distributed over a specific redshift range, with lens number \(N_{\text {lens}}=50\) (navy contour) and \(N_{\text {lens}}=100\) (green, gray, red, and blue contours), respectively. The flat priors \(H_{0} \in [64,78]\), \(\Omega ^{(0)}_{dm} \in [0.2,0.4]\) and \(p \in [-10,10]\) have been imposed and used in the generative process. Our initial belief, used as an input, is the \(\Lambda \)CDM model

The BML predictions for the redshift evolution of the Hubble parameter H, dark matter abundance \(\Omega _{m}\), deceleration parameter q, and equation of state parameter \(\omega _{de} = \frac{p_{de}}{\rho _{de}}\) for the best fit values of the model parameters of \(f_{2}\)CDM presented in Table 4. Each color line stands for lenses and sources distributed over a specific redshift range with lens number \(N_{\text {lens}}=50\) (navy curve) and \(N_{\text {lens}}=100\) (green, gray, red, and blue curves), respectively

The learned results are summarized in Table 4, and Fig. 3 shows the learned contour plots of the model parameters. The BML again imposes tight constraints on the cosmological parameters for all the considered cases. The learned results indicate that the model at hand deviates more from the standard cosmological model, due to the relatively significant value of the parameter \(b=1/p\) compared to the previous model. Moreover, we provide validation for our BML results in panel (a) of Fig. 4, where we compare the learned redshift evolution of the Hubble parameter with available OHD. Panels (b), (c) and (d) of the same Fig. 4 provide the graphical behavior of the dark matter abundance \(\Omega _{m}\), the deceleration parameter q, and the equation of state parameter \(\omega _{de}\), respectively, taking into account the learned mean values of the model parameters. The same convention for the navy, green, grey, red, and blue curves as the legends in Fig. 3 is used.
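To make the comparison with the previous model concrete, the learned values of p can be converted to \(b=1/p\). The sketch below is a minimal illustration: it uses the three \(N_{lens}=100\) cases with symmetric errors quoted above and propagates the error to first order, \(\sigma _{b} \approx \sigma _{p}/p^{2}\), which is an assumption of this example rather than a step taken in the analysis. The function name is hypothetical.

```python
# (p, sigma_p) for f2CDM, N_lens = 100, symmetric-error cases quoted in the text.
F2_P = [(6.01, 0.256), (5.26, 0.179), (5.4, 0.135)]


def b_from_p(p, sigma_p):
    """Return b = 1/p and its first-order propagated error sigma_p / p**2."""
    return 1.0 / p, sigma_p / p**2


for p, sigma_p in F2_P:
    b, sigma_b = b_from_p(p, sigma_p)
    print(f"p = {p:.2f} +/- {sigma_p:.3f}  ->  b = {b:.3f} +/- {sigma_b:.3f}")
```

The resulting values lie between roughly 0.17 and 0.19, whereas the values of b learned for \(f_{1}\)CDM are all below 0.01.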

As before, the dots in panel (a) of Fig. 4 represent the 40 data points from Table 1. We note that the BML prediction for the redshift evolution of the Hubble function matches the observational data at low redshifts, but we observe some tension at high redshifts. On the other hand, in panel (c) of Fig. 4, we see a phase transition between a decelerating and an accelerating phase of the Universe in all considered cases. Moreover, panel (d) shows that the model behaves like the cosmological constant, \(\omega _{de}=-1\), at \(z\ge 0.75\) and like quintessence dark energy, \(\omega _{de}>-1\), at \(z<0.75\). The mentioned behaviour holds for all cases considered, indicating an almost linearly increasing functional form for \(\omega _{de}\) for \(z \in [0,0.75]\).
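The transition between deceleration and acceleration discussed above can be read off from any Hubble function through the standard relation \(q(z) = -1 + (1+z)H'(z)/H(z)\). The sketch below illustrates this numerically for a flat \(\Lambda \)CDM baseline only, since the \(f_{2}\)CDM Hubble function of Eqs. (25) and (28) is not reproduced here. The values \(H_{0}=70\) km/s/Mpc and \(\Omega _{m}=0.3\), as well as the function names, are assumptions for illustration.

```python
import numpy as np


def hubble_lcdm(z, h0=70.0, omega_m=0.3):
    """Flat LambdaCDM Hubble rate in km/s/Mpc (baseline only, not the f(T) model)."""
    return h0 * np.sqrt(omega_m * (1.0 + z) ** 3 + 1.0 - omega_m)


def deceleration(z, hubble):
    """q(z) = -1 + (1 + z) H'(z) / H(z), with H' estimated by finite differences."""
    h = hubble(z)
    return -1.0 + (1.0 + z) * np.gradient(h, z) / h


z = np.linspace(0.0, 2.5, 2501)
q = deceleration(z, hubble_lcdm)

# Transition redshift: first grid point at which q becomes positive.
z_t = z[np.argmax(q > 0.0)]
print(f"q(0) = {q[0]:.3f}, transition near z = {z_t:.2f}")
```

Replacing `hubble_lcdm` with a function implementing the learned \(f(T)\) Hubble rate would give the corresponding curves of panel (c).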

Interestingly, we can say that the model can alleviate the \(H_{0}\) tension because we have a significant deviation from the standard \(\Lambda \)CDM model, leading to a higher value for \(H_{0}\). The model considered in the previous section also deviates from the standard \(\Lambda \)CDM model but, according to the learned constraints, it is not clear whether the \(H_{0}\) tension can be solved or not. We will come to this in the next section. It is worth mentioning that, according to these results, future observations with more lens-source systems and covering new redshift ranges will most likely not change the estimations of \(\Omega ^{(0)}_{dm}\). Moreover, we observe from Fig. 4 that the evolution of the deceleration parameter q and the evolution of \(\Omega _{m}\) will not be strongly affected by future SLTD data measurements. This point can only be validated in the future.

To finish this section, let us stress again that, according to BML by which SLTD data has been generated, the model considered can solve the \(H_{0}\) tension and describes a quintessence dark energy dominated Universe where initially the dark energy is the cosmological constant.

4.3 \(f_{3}\)CDM

The third model we studied is \(f_{3}\)CDM which is given by Eqs. (29) and (32). We similarly performed the generative process for BML using Eqs. (2) and (3). Imposing the same flat priors as in the case of the \(f_{2}\)CDM model, we are able to learn the constraints on the model parameters. In particular, we have:

-

When \(z_{l}\in [0.1,1.2]\) and \(z_{s}\in [0.3,1.7]\) for \(N _{lens}= 50\) the best fit values with their \(1\sigma \) errors are \(\Omega ^{(0)}_{dm} = 0.267 \pm 0.022\), \(H_{0} = 67.58 \pm 0.161\) km/s/Mpc, \(p = 5.16 \pm 0.1\), respectively.

-

On the other hand, when \(z_{l}\in [0.1,1.2]\) and \(z_{s}\in [0.3,1.7]\) with \(N _{lens}= 100\) the best fit values and \(1\sigma \) errors of the model parameters are found to be \(\Omega ^{(0)}_{dm} = 0.275 \pm 0.018\), \(H_{0} = 73.44 \pm 0.1\) km/s/Mpc and \(p = 5.15 \pm 0.1\).

-

Moreover, for the other two cases, when \(z_{l}\in [0.1,1.5]\) and \(z_{s}\in [0.3,1.7]\), and \(z_{l}\in [0.1,2.0]\) and \(z_{s}\in [0.3,2.5]\) (in both cases \(N _{lens}= 100\)), we find that \(\Omega ^{(0)}_{dm} = 0.237 \pm 0.0167\), \(H_{0} = 73.18 \pm 0.16\) km/s/Mpc and \(p = 4.67 \pm 0.142\), and \(\Omega ^{(0)}_{dm} = 0.259 \pm 0.01\), \(H_{0} = 69.86 \pm 0.125\) km/s/Mpc and \(p = 4.87 \pm 0.135\), respectively.

-

Finally, for the case when \(N _{lens}= 100\), we find \(\Omega ^{(0)}_{dm} = 0.256 \pm 0.006\), \(H_{0} = 73.054 \pm 0.102\) km/s/Mpc and \(p = 5.61 \pm 0.095\). In this case, \(z_{l}\in [0.1,2.4]\) and \(z_{s}\in [0.3,2.5]\).

The learned constraints on the model parameters have been summarized in Table 5 and the validation of the BML results for this model is presented in Fig. 5. We keep the same convention for the navy, green, grey, red, and blue curves as the legends in Fig. 6 which shows the learned contour plots. We also note that the \(b=1/p\) parameter having a larger value clearly indicates a deviation from the \(\Lambda \)CDM model.

BML predictions for the redshift evolution of the Hubble parameter H, dark matter abundance \(\Omega _{m}\), deceleration parameter q, and equation of state parameter \(\omega _{de} = \frac{p_{de}}{\rho _{de}}\), for the best fit values of the model parameters of \(f_{3}\)CDM presented in Table 5. Each color line stands for lenses and sources distributed over a specific redshift range with lens number \(N_{\text {lens}}=50\) (navy curve) and \(N_{\text {lens}}=100\) (green, gray, red, and blue curves), respectively

From panel (a) of Fig. 5 we note that the BML prediction for the redshift evolution of the Hubble function H fits the observational data at low redshifts, though some tension can be observed at high redshifts. Moreover, panel (d) shows that the model behaves as the cosmological constant, \(\omega _{de}=-1\), at \(z \ge 2.0\) and as quintessence dark energy, \(\omega _{de}>-1\), at \(z<2.0\). In other words, the SLTD data-based learning shows that the recent Universe should contain quintessence dark energy, which started its evolution as a cosmological constant. In panel (c), a good phase transition between a decelerating and an accelerating phase can be noticed in the learned behaviour of the deceleration parameter q for the five cases that we have considered in this paper. However, this model provided results different from the previous two models. In particular, the model can be used to solve the \(H_{0}\) tension; however, one should note that the SLTD data is not able to give a final answer as to whether the model can solve the tension or not. In other words, the SLTD data can strongly affect our understanding of how to solve the \(H_{0}\) tension in f(T) gravity. Moreover, it can strongly affect the constraints on \(\Omega ^{(0)}_{dm}\), indicating a tension there, too. This is another important consequence that BML allowed us to infer from the study of this model.
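The sensitivity of the learned \(H_{0}\) to the lens-source configuration can be illustrated by comparing the spread of the best fit values across the five cases for \(f_{2}\)CDM and \(f_{3}\)CDM. The sketch below is a minimal illustration using only the central values quoted in the text; the spread (maximum minus minimum) is a crude measure chosen here for simplicity, not a statistic used in the analysis.

```python
# H0 best fit values (km/s/Mpc) for the five cases, in the order listed in the text.
H0_F2 = [73.59, 74.68, 74.90, 74.64, 74.41]
H0_F3 = [67.58, 73.44, 73.18, 69.86, 73.054]


def spread(values):
    """Difference between the largest and the smallest value."""
    return max(values) - min(values)


print(f"f2CDM spread: {spread(H0_F2):.2f} km/s/Mpc")
print(f"f3CDM spread: {spread(H0_F3):.2f} km/s/Mpc")
```

The spread for \(f_{3}\)CDM is several times larger than for \(f_{2}\)CDM and far exceeds the quoted \(1\sigma \) errors, which is the sense in which the SLTD configuration affects the conclusion about the tension for this model.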

To end this section, we should mention that the different nature of BML as a tool combined with Eqs. (29) and (32) allows us to develop a pipeline to study and constrain f(T) gravity for cosmological purposes. It allows us to predict and learn how the \(H_{0}\) tension can be solved and how the SLTD data can challenge it in f(T) gravity.

The \(1\sigma \) and \(2\sigma \) confidence-level contour plots for the \(f_{3}\)CDM model, using the simulated SLTD data obtained from the generative process based on Eqs. (2) and (3). Each contour color stands for lenses and sources distributed over a specific redshift range with lens number \(N_{\text {lens}}=50\) (navy contour) and \(N_{\text {lens}}=100\) (green, gray, red, and blue contours), respectively. The flat priors \(H_{0} \in [64,78]\), \(\Omega ^{(0)}_{dm} \in [0.2,0.4]\) and \(p \in [-10,10]\) have been imposed. Our initial belief, used as an input, is the \(\Lambda \)CDM model

5 Conclusions

Using BML, we have addressed the persistent \(H_{0}\) tension. The real source of this issue is still unclear. A fair number of the attempts at solving the problem are based on the idea that the \(H_{0}\) tension is not a mere statistical mismatch or artefact but that it is actually related to physical considerations. However, it must be mentioned that, despite very serious attempts to identify how this challenges our understanding of the Universe, there is still no reliable hint on the actual origin of the problem, and much work remains to be done.

On the other hand, we may need to challenge the \(\Lambda \)CDM model to understand this issue. This can be done by challenging not only our understanding of dark energy but also the dark matter part. To this point, recently, it has been demonstrated using BML that there is a deviation from the cold dark matter paradigm on cosmological scales, which might efficiently solve the \(H_{0}\) tension [48].

In the present paper, we have used BML to constrain f(T) gravity-based cosmological models to see how the problem may be solved there. We have considered and learned the constraints on power-law, exponential, and square-root exponential f(T) models using the SLTD as the main element for the generative process and the key ingredient of the Probabilistic ML approach. In this analysis, we did not rely on the lensing model itself; all we needed were the redshifts of the lenses and sources. We would like to stress that very tight constraints on the parameters determining the f(T) models have been obtained.

Moreover, our results contain a hint showing that more precise time delay measurements and the number of lensed systems could significantly affect the constraints on the model parameters. Taking into account the \(H_{0}\) tension, we have validated the learned results with the available OHD and found a sign of tension that could exist between lensed GW+EM signals and OHD. Therefore, it is not excluded that utilizing both could lead to some misleading results in the model analysis. On the other hand, we have learned that exponential f(T) in the light of SLTD data could solve the \(H_{0}\) tension, while the power-law model slightly differs from the \(\Lambda \)CDM and definitely cannot solve the problem.

The case of the power-law model is interesting for two reasons: first, we have learned that it could be very close to the \(\Lambda \)CDM model. Second, future SLTD data may indicate slight deviations from the \(\Lambda \)CDM model. In our opinion, this is another hint that in order to solve the \(H_{0}\) tension, the \(\Lambda \)CDM model should be challenged. According to the learned best fit values of the parameters in the case of square-root exponential and exponential f(T) models, we will have a quintessence dark energy dominated recent Universe, which for relatively high redshifts contains a cosmological constant as dark energy. Although the alleviation of the \(H_0\) tension by late-time modifications is achieved through a phantom dark energy behavior [71], recent studies suggest that the tension can also be resolved by reducing the ratio \(G_{eff}/G_{N}\) between the effective Newton’s constant and Newton’s constant to less than one [72, 73]. The exponential and squared exponential models in our analysis should therefore satisfy the condition \(G_{eff}/G_{N}<1\), which leads to a faster expansion rate \(H_{0}\), as is indeed the case in our analysis. On the other hand, it has been shown that these models are statistically indistinguishable from the \(\Lambda \)CDM model [74]; however, in the present work we found that the exponential and squared exponential models deviate from the \(\Lambda \)CDM model significantly, as the value of their p parameter ranges between 5 and 6. Moreover, the learned results indicate that a transition to an accelerated expanding phase will be observed naturally and smoothly in all three cases considered.

Finally, we would like to mention some interesting aspects that follow from our approach. Since the time delay distances can be measured from the lensed gravitational wave signals and their corresponding electromagnetic wave counterparts, our approach could be very useful during the source identification process. Indeed, we found a clear hint that we can have very strong constraints on lensed GW+EM systems, and a reasonable combination of them with simulations based on LSST, the Einstein Telescope (ET), and the Dark Energy Survey (DES) can provide a powerful tool for the present cosmological analysis. A more detailed discussion of such possibilities will be the subject of a forthcoming paper. A final consideration is that the approach proposed in the present paper can be easily extended to constrain the lensing systems.

Data Availability

This manuscript has associated data in a data repository. [Authors’ comment: The data presented in this study are available on request from the corresponding author.]

Notes

We use BML as a tool to study cosmology and, for conciseness, have to omit various theoretical and technical details about it, including how it can be used in biased cases. We strongly suggest that readers interested in ML topics search on the web about recent developments and existing problems in this direction to gain more insight.

References

H.E.S. Velten, R.F. vom Marttens, W. Zimdahl, Aspects of the cosmological “coincidence problem”. Eur. Phys. J. C 74(11), 3160 (2014). https://doi.org/10.1140/epjc/s10052-014-3160-4. arXiv:1410.2509 [astro-ph.CO]

P.J. Steinhardt, in Critical Problems in Physics. ed. by V.L. Fitch, D.R. Marlow, M.A.E. Dementi (Princeton University Press, Princeton, 1997)

T. Clifton, P.G. Ferreira, A. Padilla, C. Skordis, Modified gravity and cosmology. Phys. Rep. 513, 1–189 (2012). https://doi.org/10.1016/j.physrep.2012.01.001. arXiv:1106.2476 [astro-ph.CO]

L. Baudis, Dark matter detection. J. Phys. G 43(4), 044001 (2016). https://doi.org/10.1088/0954-3899/43/4/044001

G. Bertone, D. Hooper, J. Silk, Particle dark matter: evidence, candidates and constraints. Phys. Rep. 405, 279–390 (2005). https://doi.org/10.1016/j.physrep.2004.08.031. arXiv:0404175 [hep-ph]

K. Bamba, S. Capozziello, S. Nojiri, S.D. Odintsov, Dark energy cosmology: the equivalent description via different theoretical models and cosmography tests. Astrophys. Space Sci. 342, 155–228 (2012). https://doi.org/10.1007/s10509-012-1181-8. arXiv:1205.3421 [gr-qc]

C. Li, X. Ren, M. Khurshudyan, Y.F. Cai, Implications of the possible 21-cm line excess at cosmic dawn on dynamics of interacting dark energy. Phys. Lett. B 801, 135141 (2020). https://doi.org/10.1016/j.physletb.2019.135141. arXiv:1904.02458 [astro-ph.CO]

M. Khurshudyan, R. Myrzakulov, Phase space analysis of some interacting Chaplygin gas models. Eur. Phys. J. C 77(2), 65 (2017). https://doi.org/10.1140/epjc/s10052-017-4634-y. arXiv:1509.02263 [gr-qc]

E. Elizalde, M. Khurshudyan, Cosmology with an interacting van der Waals fluid. Int. J. Mod. Phys. D 27(04), 1850037 (2017). https://doi.org/10.1142/S0218271818500372. arXiv:1711.01143 [gr-qc]

S. Nojiri, S.D. Odintsov, T. Paul, Barrow entropic dark energy: a member of generalized holographic dark energy family. Phys. Lett. B 825, 136844 (2022). https://doi.org/10.1016/j.physletb.2021.136844. arXiv:2112.10159 [gr-qc]

S.D. Odintsov, D. Saez-Chillon Gomez, G.S. Sharov, Testing the equation of state for viscous dark energy. Phys. Rev. D 101(4), 044010 (2020). https://doi.org/10.1103/PhysRevD.101.044010. arXiv:2001.07945 [gr-qc]

E.J. Copeland, M. Sami, S. Tsujikawa, Dynamics of dark energy. Int. J. Mod. Phys. D 15, 1753–1936 (2006). https://doi.org/10.1142/S021827180600942X. arXiv:0603057 [hep-th]

Y.F. Cai, E.N. Saridakis, M.R. Setare, J.Q. Xia, Quintom cosmology: theoretical implications and observations. Phys. Rep. 493, 1–60 (2010). https://doi.org/10.1016/j.physrep.2010.04.001. arXiv:0909.2776 [hep-th]

S. Nojiri, S.D. Odintsov, Introduction to modified gravity and gravitational alternative for dark energy. eConf C0602061, 06 (2006). https://doi.org/10.1142/S0219887807001928. arXiv:0601213 [hep-th]

W. Hu, I. Sawicki, Models of f(R) cosmic acceleration that evade solar-system tests. Phys. Rev. D 76, 064004 (2007). https://doi.org/10.1103/PhysRevD.76.064004. arXiv:0705.1158 [astro-ph]

S.A. Appleby, R.A. Battye, Do consistent \(F(R)\) models mimic General Relativity plus \(\Lambda \)? Phys. Lett. B 654, 7–12 (2007). https://doi.org/10.1016/j.physletb.2007.08.037. arXiv:0705.3199 [astro-ph]

A.A. Starobinsky, Disappearing cosmological constant in f(R) gravity. JETP Lett. 86, 157–163 (2007). https://doi.org/10.1134/S0021364007150027. arXiv:0706.2041 [astro-ph]

L. Amendola, R. Gannouji, D. Polarski, S. Tsujikawa, Conditions for the cosmological viability of f(R) dark energy models. Phys. Rev. D 75, 083504 (2007). arXiv:0612180 [gr-qc]

G. Cognola, E. Elizalde, S. Nojiri, S.D. Odintsov, L. Sebastiani, S. Zerbini, A class of viable modified f(R) gravities describing inflation and the onset of accelerated expansion. Phys. Rev. D 77, 046009 (2008). https://doi.org/10.1103/PhysRevD.77.046009. arXiv:0712.4017 [hep-th]

T.P. Sotiriou, V. Faraoni, f(R) theories of gravity. Rev. Mod. Phys. 82, 451–497 (2010). https://doi.org/10.1103/RevModPhys.82.451. arXiv:0805.1726 [gr-qc]

S. Nojiri, S.D. Odintsov, Unified cosmic history in modified gravity: from F(R) theory to Lorentz non-invariant models. Phys. Rep. 505, 59–144 (2011). https://doi.org/10.1016/j.physrep.2011.04.001. arXiv:1011.0544 [gr-qc]

Y.F. Cai, S. Capozziello, M. De Laurentis, E.N. Saridakis, f(T) teleparallel gravity and cosmology. Rep. Prog. Phys. 79(10), 106901 (2016). https://doi.org/10.1088/0034-4885/79/10/106901. arXiv:1511.07586 [gr-qc]

Y.F. Cai, S.H. Chen, J.B. Dent, S. Dutta, E.N. Saridakis, Matter bounce cosmology with the f(T) gravity. Class. Quantum Gravity 28, 215011 (2011). https://doi.org/10.1088/0264-9381/28/21/215011. arXiv:1104.4349 [astro-ph.CO]

J.B. Dent, S. Dutta, E.N. Saridakis, f(T) gravity mimicking dynamical dark energy. Background and perturbation analysis. JCAP 01, 009 (2011). https://doi.org/10.1088/1475-7516/2011/01/009. arXiv:1010.2215 [astro-ph.CO]

R. Myrzakulov, Accelerating universe from F(T) gravity. Eur. Phys. J. C 71, 1752 (2011). https://doi.org/10.1140/epjc/s10052-011-1752-9. arXiv:1006.1120 [gr-qc]

R. Zheng, Q.G. Huang, Growth factor in \(f(T)\) gravity. JCAP 03, 002 (2011). https://doi.org/10.1088/1475-7516/2011/03/002. arXiv:1010.3512 [gr-qc]

K. Bamba, G.G.L. Nashed, W. El Hanafy, S.K. Ibraheem, Bounce inflation in \(f(T)\) cosmology: a unified inflaton-quintessence field. Phys. Rev. D 94(8), 083513 (2016). https://doi.org/10.1103/PhysRevD.94.083513. arXiv:1604.07604 [gr-qc]

K. Bamba, S.D. Odintsov, E.N. Saridakis, Inflationary cosmology in unimodular \(F(T)\) gravity. Mod. Phys. Lett. A 32(21), 1750114 (2017). https://doi.org/10.1142/S0217732317501140. arXiv:1605.02461 [gr-qc]

S. Carloni, F.S.N. Lobo, G. Otalora, E.N. Saridakis, Dynamical system analysis for a nonminimal torsion-matter coupled gravity. Phys. Rev. D 93, 024034 (2016). https://doi.org/10.1103/PhysRevD.93.024034. arXiv:1512.06996 [gr-qc]

M. Hohmann, L. Jarv, U. Ualikhanova, Dynamical systems approach and generic properties of \(f(T)\) cosmology. Phys. Rev. D 96(4), 043508 (2017). https://doi.org/10.1103/PhysRevD.96.043508. arXiv:1706.02376 [gr-qc]

R.C. Nunes, S. Pan, E.N. Saridakis, New observational constraints on f(T) gravity from cosmic chronometers. JCAP 08, 011 (2016). https://doi.org/10.1088/1475-7516/2016/08/011. arXiv:1606.04359 [gr-qc]

S. Capozziello, G. Lambiase, E.N. Saridakis, Constraining f(T) teleparallel gravity by big bang nucleosynthesis. Eur. Phys. J. C 77(9), 576 (2017). https://doi.org/10.1140/epjc/s10052-017-5143-8. arXiv:1702.07952 [astro-ph.CO]

R.C. Nunes, Structure formation in \(f(T)\) gravity and a solution for \(H_0\) tension. JCAP 05, 052 (2018). https://doi.org/10.1088/1475-7516/2018/05/052. arXiv:1802.02281 [gr-qc]

M. Benetti, S. Capozziello, G. Lambiase, Updating constraints on f(T) teleparallel cosmology and the consistency with Big Bang Nucleosynthesis. Mon. Not. R. Astron. Soc. 500(2), 1795–1805 (2020). https://doi.org/10.1093/mnras/staa3368. arXiv:2006.15335 [astro-ph.CO]

G.R. Bengochea, R. Ferraro, Dark torsion as the cosmic speed-up. Phys. Rev. D 79, 124019 (2009). https://doi.org/10.1103/PhysRevD.79.124019. arXiv:0812.1205 [astro-ph]

K. Bamba, C.Q. Geng, C.C. Lee, L.W. Luo, Equation of state for dark energy in \(f(T)\) gravity. JCAP 01, 021 (2011). https://doi.org/10.1088/1475-7516/2011/01/021. arXiv:1011.0508 [astro-ph.CO]

X. Ren, S.F. Yan, Y. Zhao, Y.F. Cai, E.N. Saridakis, Gaussian processes and effective field theory of \(f(T)\) gravity under the \(H_0\) tension. arXiv:2203.01926 [astro-ph.CO]

X. Ren, T.H.T. Wong, Y.F. Cai, E.N. Saridakis, Data-driven reconstruction of the late-time cosmic acceleration with f(T) gravity. Phys. Dark Universe 32, 100812 (2021). https://doi.org/10.1016/j.dark.2021.100812. arXiv:2103.01260 [astro-ph.CO]

Y.F. Cai, M. Khurshudyan, E.N. Saridakis, Model-independent reconstruction of \(f(T)\) gravity from Gaussian Processes. Astrophys. J. 888, 62 (2020). https://doi.org/10.3847/1538-4357/ab5a7f. arXiv:1907.10813 [astro-ph.CO]

E. Elizalde, M. Khurshudyan, Swampland criteria for a dark energy dominated universe ensuing from Gaussian processes and H(z) data analysis. Phys. Rev. D 99(10), 103533 (2019). https://doi.org/10.1103/PhysRevD.99.103533. arXiv:1811.03861 [astro-ph.CO]

E.Ó. Colgáin, M.M. Sheikh-Jabbari, Elucidating cosmological model dependence with \(H_0\). Eur. Phys. J. C 81(10), 892 (2021). https://doi.org/10.1140/epjc/s10052-021-09708-2. arXiv:2101.08565 [astro-ph.CO]

E. Ó. Colgáin, M.M. Sheikh-Jabbari, R. Solomon, G. Bargiacchi, S. Capozziello, M.G. Dainotti, D. Stojkovic, Revealing intrinsic flat \(\Lambda \)CDM biases with standardizable candles. arXiv:2203.10558 [astro-ph.CO]

N. Aghanim et al. [Planck], Planck 2018 results. VI. Cosmological parameters. Astron. Astrophys. 641, A6 (2020) [Erratum: Astron. Astrophys. 652 (2021), C4]. https://doi.org/10.1051/0004-6361/201833910. arXiv:1807.06209 [astro-ph.CO]

A.G. Riess, S. Casertano, W. Yuan, J.B. Bowers, L. Macri, J.C. Zinn, D. Scolnic, Cosmic distances calibrated to 1% precision with Gaia EDR3 parallaxes and Hubble space telescope photometry of 75 Milky Way cepheids confirm tension with \(\Lambda \)CDM. Astrophys. J. Lett. 908(1), L6 (2021). https://doi.org/10.3847/2041-8213/abdba. arXiv:2012.08534 [astro-ph.CO]

E. Di Valentino, O. Mena, S. Pan, L. Visinelli, W. Yang, A. Melchiorri, D.F. Mota, A.G. Riess, J. Silk, In the realm of the Hubble tension—a review of solutions. Class. Quantum Gravity 38(15), 153001 (2021). https://doi.org/10.1088/1361-6382/ac086d. arXiv:2103.01183 [astro-ph.CO]

E. Elizalde, M. Khurshudyan, S.D. Odintsov, R. Myrzakulov, Analysis of the \(H_0\) tension problem in the Universe with viscous dark fluid. Phys. Rev. D 102(12), 123501 (2020). https://doi.org/10.1103/PhysRevD.102.123501. arXiv:2006.01879 [gr-qc]

E. Elizalde, M. Khurshudyan, Constraints on cosmic opacity from Bayesian machine learning: the hidden side of the \(H_{0}\) tension problem. arXiv:2006.12913 [astro-ph.CO]

E. Elizalde, J. Gluza, M. Khurshudyan, An approach to cold dark matter deviation and the \(H_{0}\) tension problem by using machine learning. arXiv:2104.01077 [astro-ph.CO]

D. Wang, D. Mota, Can \(f(T)\) gravity resolve the \(H_0\) tension? Phys. Rev. D 102(6), 063530 (2020). https://doi.org/10.1103/PhysRevD.102.063530. arXiv:2003.10095 [astro-ph.CO]

S.D. Odintsov, V.K. Oikonomou, Did the Universe experience a pressure non-crushing type cosmological singularity in the recent past? EPL 137(3), 39001 (2022). https://doi.org/10.1209/0295-5075/ac52dc. arXiv:2201.07647 [gr-qc]

T. Treu, Strong lensing by galaxies. Annu. Rev. Astron. Astrophys. 48, 87–125 (2010). https://doi.org/10.1146/annurev-astro-081309-130924. arXiv:1003.5567 [astro-ph.CO]

S.H. Suyu, V. Bonvin, F. Courbin, C.D. Fassnacht, C.E. Rusu, D. Sluse, T. Treu, K.C. Wong, M.W. Auger, X. Ding et al., H0LiCOW-I. H0 Lenses in COSMOGRAIL’s Wellspring: program overview. Mon. Not. R. Astron. Soc. 468(3), 2590–2604 (2017). https://doi.org/10.1093/mnras/stx483. arXiv:1607.00017 [astro-ph.CO]

K.C. Wong, S.H. Suyu, G.C.F. Chen, C.E. Rusu, M. Millon, D. Sluse, V. Bonvin, C.D. Fassnacht, S. Taubenberger, M.W. Auger et al., H0LiCOW-XIII. A 2.4 per cent measurement of H0 from lensed quasars: 5.3\(\sigma \) tension between early- and late-Universe probes. Mon. Not. R. Astron. Soc. 498(1), 1420–1439 (2020). https://doi.org/10.1093/mnras/stz3094. arXiv:1907.04869 [astro-ph.CO]

B.P. Abbott et al. [LIGO Scientific, Virgo, 1M2H, Dark Energy Camera GW-E, DES, DLT40, Las Cumbres Observatory, VINROUGE and MASTER], A gravitational-wave standard siren measurement of the Hubble constant. Nature 551(7678), 85–88 (2017). https://doi.org/10.1038/nature24471. arXiv:1710.05835 [astro-ph.CO]

B.P. Abbott et al. [LIGO Scientific and Virgo], GW170817: observation of gravitational waves from a binary neutron star inspiral. Phys. Rev. Lett. 119(16), 161101 (2017). https://doi.org/10.1103/PhysRevLett.119.161101. arXiv:1710.05832 [gr-qc]

B.P. Abbott et al. [LIGO Scientific, Virgo, Fermi-GBM and INTEGRAL], Gravitational waves and gamma-rays from a binary neutron star merger: GW170817 and GRB 170817A. Astrophys. J. Lett. 848(2), L13 (2017). https://doi.org/10.3847/2041-8213/aa920c. arXiv:1710.05834 [astro-ph.HE]

D.A. Coulter, R.J. Foley, C.D. Kilpatrick, M.R. Drout, A.L. Piro, B.J. Shappee, M.R. Siebert, J.D. Simon, N. Ulloa, D. Kasen et al., Swope Supernova Survey 2017a (SSS17a), the optical counterpart to a gravitational wave source. Science 358, 1556 (2017). https://doi.org/10.1126/science.aap9811. arXiv:1710.05452 [astro-ph.HE]

K. Hotokezaka, E. Nakar, O. Gottlieb, S. Nissanke, K. Masuda, G. Hallinan, K.P. Mooley, A.T. Deller, A Hubble constant measurement from superluminal motion of the jet in GW170817. Nat. Astron. 3(10), 940–944 (2019). https://doi.org/10.1038/s41550-019-0820-1. arXiv:1806.10596 [astro-ph.CO]

H.Y. Chen, M. Fishbach, D.E. Holz, A two per cent Hubble constant measurement from standard sirens within five years. Nature 562(7728), 545–547 (2018). https://doi.org/10.1038/s41586-018-0606-0. arXiv:1712.06531 [astro-ph.CO]

Ž. Ivezić et al. [LSST], LSST: from science drivers to reference design and anticipated data products. Astrophys. J. 873(2), 111 (2019). https://doi.org/10.3847/1538-4357/ab042c. arXiv:0805.2366 [astro-ph]

M. Oguri, P.J. Marshall, Gravitationally lensed quasars and supernovae in future wide-field optical imaging surveys. Mon. Not. R. Astron. Soc. 405, 2579–2593 (2010). https://doi.org/10.1111/j.1365-2966.2010.16639.x. arXiv:1001.2037 [astro-ph.CO]

P. Schneider, J. Ehlers, E.E. Falco, Gravitational Lenses (Springer, Berlin, 1992)

P. Schneider, C.S. Kochanek, J. Wambsganss, Gravitational Lensing: Strong, Weak and Micro (Springer, Berlin, 2006)

S. Kullback, R.A. Leibler, On information and sufficiency. Ann. Math. Stat. 22, 79–86 (1951). https://projecteuclid.org/journals/annals-of-mathematical-statistics/volume-22/issue-1/On-Information-and-Sufficiency/10.1214/aoms/1177729694.full

D.M. Blei, A. Kucukelbir, J.D. McAuliffe, Variational inference: a review for statisticians. J. Am. Stat. Assoc. 112(518), 859–877 (2017). https://doi.org/10.48550/arXiv.1601.00670

A. Graves, Practical variational inference for neural networks. In: Advances in Neural Information Processing Systems, pp. 2348–2356 (2011)

N. Metropolis et al., Equation of state calculations by fast computing machines. J. Chem. Phys. 21, 1087–1092 (1953). https://doi.org/10.1063/1.1699114

J. Regier, A.C. Miller, D. Schlegel, R.P. Adams, J.D. McAuliffe, Prabhat, Approximate inference for constructing astronomical catalogs from images. arXiv:1803.00113 [stat.AP]

G. Gunapati, A. Jain, P.K. Srijith, S. Desai, Variational inference as an alternative to MCMC for parameter estimation and model selection. Publ. Astron. Soc. Austral. 39, e001 (2022). https://doi.org/10.1017/pasa.2021.64. arXiv:1803.06473 [astro-ph.IM]

J. Salvatier, T. Wiecki, C. Fonnesbeck, Probabilistic programming in Python using PyMC3. PeerJ Comput. Sci. 2, e55 (2016). https://doi.org/10.48550/arXiv.1507.08050. arXiv:1507.08050 [stat.CO]

S. Banerjee, M. Petronikolou, E.N. Saridakis, Alleviating \(H_0\) tension with new gravitational scalar tensor theories. arXiv:2209.02426 [gr-qc]

L. Kazantzidis, L. Perivolaropoulos, \(\sigma _{8}\) tension. Is gravity getting weaker at low z? Observational evidence and theoretical implications. Modified Gravity and Cosmology (Springer, Cham). https://doi.org/10.1007/978-3-030-83715-0_33. arXiv:1907.03176 [astro-ph.CO]

F.K. Anagnostopoulos, S. Basilakos, E.N. Saridakis, Bayesian analysis of \(f(T)\) gravity using \(f\sigma _8\) data. Phys. Rev. D 100(8), 083517 (2019). https://doi.org/10.1103/PhysRevD.100.083517. arXiv:1907.07533 [astro-ph.CO]

S. Nesseris, S. Basilakos, E.N. Saridakis, L. Perivolaropoulos, Viable \(f(T)\) models are practically indistinguishable from \(\Lambda \)CDM. Phys. Rev. D 88, 103010 (2013). https://doi.org/10.1103/PhysRevD.88.103010. arXiv:1308.6142 [astro-ph.CO]

Acknowledgements

This work has been partially supported by MICINN (Spain), project PID2019-104397GB-I00, of the Spanish State Research Agency program AEI/10.13039/501100011033, by the Catalan Government, AGAUR project 2017-SGR-247, and by the program Unidad de Excelencia María de Maeztu CEX2020-001058-M. This paper was supported by the Ministry of Education and Science of the Republic of Kazakhstan, grant AP08052034. MK has been supported by the Juan de la Cierva-incorporación grant (IJC2020-042690-I).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3. SCOAP3 supports the goals of the International Year of Basic Sciences for Sustainable Development.

About this article

Cite this article

Aljaf, M., Elizalde, E., Khurshudyan, M. et al. Solving the \(H_{0}\) tension in f(T) gravity through Bayesian machine learning. Eur. Phys. J. C 82, 1130 (2022). https://doi.org/10.1140/epjc/s10052-022-11109-y

DOI: https://doi.org/10.1140/epjc/s10052-022-11109-y