Abstract

We present an extension of the simplified fast detector simulator of MadAnalysis 5 – the SFS framework – with methods making it suitable for the treatment of long-lived particles of any kind. This allows users to make use of intuitive Python commands and straightforward C++ methods to introduce detector effects relevant for long-lived particles, and to implement selection cuts and plots related to their properties. In particular, the impact of the magnetic field inside a typical high-energy physics detector on the trajectories of any charged object can now be easily simulated. As an illustration of the capabilities of this new development, we implement three existing LHC analyses dedicated to long-lived objects, namely a CMS run 2 search for displaced leptons in the \(e\mu \) channel (CMS-EXO-16-022), the full run 2 CMS search for disappearing track signatures (CMS-EXO-19-010), and the partial run 2 ATLAS search for displaced vertices featuring a pair of oppositely charged leptons (ATLAS-SUSY-2017-04). We document the careful validation of all MadAnalysis 5 SFS implementations of these analyses, which are publicly available as entries in the MadAnalysis 5 Public Analysis Database and its associated dataverse.



1 Introduction

In operation for more than a decade, the Large Hadron Collider (LHC) has already collected a rich amount of data. Whereas these data have clearly established the existence of a particle consistent with the Standard Model (SM) Higgs boson, experimental evidence regarding its potential, and even regarding whether it is a fundamental scalar, is still lacking. There is also no compelling sign of physics beyond the SM (BSM), and even if there are currently several suggestive hints, these are not obviously connected to electroweak symmetry breaking. Before the LHC started operating, it was hoped that not only would the Higgs boson be found, but that other particles would be discovered and play a role in protecting the electroweak scale from the hierarchy problem. The lack of evidence therefore suggests that new phenomena may be more complicated to grasp than was hoped for by phenomenologists and experimentalists alike, and may be hiding in unexpected or underexploited corners of the parameter space. In this context, the high-energy physics community has shown a growing theoretical and experimental interest in long-lived particles (LLPs) [1].

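Naive dimensional analysis suggests that a particle should have a decay length \(c\tau \) inversely proportional to its mass M:

\[ c\tau \sim \frac{\hbar c}{M} \approx 2\times 10^{-16}\,\mathrm{m}\times \left(\frac{1\,\mathrm{GeV}}{M}\right). \]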

There are naturally three mechanisms whereby this expectation can be violated: decays mainly occurring at loop order (for example, the neutral pion decaying into two photons); small couplings; and little phase space for the decay (e.g. if three-body decays dominate and/or the particle spectrum is compressed). Because the naive decay length is so small, the large majority of new physics searches at the LHC have focused on promptly decaying new particles. However, the SM contains many long-lived particles: most famously the muon, with a proper decay length of 660 m, but also, and of huge importance, the B mesons, which are among the heaviest LLPs in the SM, with masses of about 5 GeV. These decay mainly via three-body processes that are severely suppressed by weak couplings and by the Cabibbo–Kobayashi–Maskawa (CKM) matrix, and therefore have \(c\tau \sim 0.5\,{\text {mm}}\). This demonstrates just how naive the above expectation is, and indeed particles featuring a long lifetime due to loop, coupling or phase-space suppression are also found in a large variety of BSM setups. Heavy LLPs would moreover produce striking signals with low backgrounds, and thus offer a path to new discoveries in run 3 of the LHC.
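As a quick numerical illustration, the Python sketch below evaluates the naive estimate \(c\tau \approx \hbar c/M\) and the mean laboratory-frame decay length \(\beta\gamma c\tau = (p/M)\,c\tau\) of a particle of momentum p. Only \(\hbar c\) is a physical constant here; the function names and example numbers are illustrative.

```python
# Naive decay-length estimate c*tau ~ hbar*c / M and its lab-frame boost.
HBAR_C_GEV_M = 1.973269804e-16  # hbar*c in GeV*m (0.1973 GeV*fm)


def naive_ctau(mass_gev: float) -> float:
    """Naive proper decay length c*tau ~ hbar*c / M, in metres."""
    return HBAR_C_GEV_M / mass_gev


def lab_decay_length(ctau_m: float, momentum_gev: float, mass_gev: float) -> float:
    """Mean lab-frame decay length beta*gamma*c*tau, using beta*gamma = p / M."""
    return (momentum_gev / mass_gev) * ctau_m


if __name__ == "__main__":
    naive = naive_ctau(5.0)  # a state with roughly the mass of a B meson
    print(f"naive c*tau for M = 5 GeV: {naive:.1e} m")
    print(f"enhancement needed to reach c*tau ~ 0.5 mm: {0.5e-3 / naive:.0e}")
    # With c*tau = 0.5 mm, p = 50 GeV and M = 5 GeV, the mean flight distance is 5 mm.
    print(f"lab-frame decay length: {1e3 * lab_decay_length(0.5e-3, 50.0, 5.0):.1f} mm")
```

For a B meson, the naive estimate falls short of the measured \(c\tau\) by roughly thirteen orders of magnitude, which quantifies the suppression discussed above.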

A number of search strategies already exist, targeting different kinds of LLP candidates. If we focus on the case of the LHC run 2 and the usage of the general-purpose ATLAS and CMS experiments, LLPs are searched for through displaced vertices in the inner detector or in the muon spectrometer [2,3,4,5,6,7,8,9,10,11], displaced jets in the calorimeters [12,13,14,15,16], displaced lepton jets [17, 18], emerging jets [19], non-pointing and delayed photons [20], disappearing tracks [21,22,23,24], stopped particles [25, 26] and heavy stable charged particles [27,28,29,30]. Reusing these searches and applying them to different models, not just those considered by the experimental analyses, via a user-friendly computer program would be highly valuable. For that reason, enabling this in the MadAnalysis 5 package [31,32,33] has recently become a priority in the development of the program.

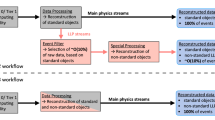

The first steps in this direction date from 2018, when MadAnalysis 5 version 1.6 was released. This was the first version of the code allowing for the handling of displaced leptons [34], and the first time that one of the public recasting codes was made suitable for the recasting of an LHC analysis involving displaced objects. In practice, the recasting module of the code was updated in two ways. First, it was linked to a modified version of Delphes 3 [35] for the simulation of the detector response. These modifications, which were merged shortly afterwards into the official version of the Delphes 3 detector simulator package, allowed the user to go beyond the default behaviour in which all particles are assumed to decay within the tracker volume of the detector. Second, information about the impact parameter and the position from which a lepton originated was incorporated into the internal data format of the code. As a proof of concept, the CMS-EXO-16-022 analysis [36] was implemented in MadAnalysis 5 [37]. This implementation was validated in a satisfactory manner: for most signal regions, MadAnalysis 5 reproduced the signal yields expected for the reference model within 20%. However, for long decay lengths (\(\ge 100\,\hbox {cm}\)), the difference between the MadAnalysis 5 result and the expected signal exceeded \(100\%\).

Subsequently, due to the intense experimental and theoretical interest in this area, additional public codes became available. Firstly, a collaborative collection of independent stand-alone codes, called the LLP Recasting Repository, was established on the GitHub platform [38]. It has recently been augmented by two sophisticated disappearing track searches [39, 40]. Secondly, an LLP version of CheckMATE 2 [41], which includes five (classes of) LLP searches, and an updated version of SModelS [42], which includes eight LLP searches, were released.

Last year, the simplified fast detector simulation (SFS) framework of MadAnalysis 5 was introduced. It consists of a new simplified fast detector simulator relying on efficiency and smearing functions [43]. Additional commands were added to the Python command-line interface of MadAnalysis 5, so that users could design and tune a detector simulator directly from a set of intuitive commands, and the C++ core of the program was modified accordingly to deal with such a detector simulator. Proof-of-concept examples were presented both for simple phenomenological analyses and in the context of the reinterpretation of the results of existing LHC analyses in Refs. [43,44,45], and additional works demonstrated its utility in more in-depth studies [40, 46,47,48,49,50].

In this work, we further extend the SFS framework and the core of MadAnalysis 5 by equipping them with additional methods suitable for the treatment of long-lived objects of any kind. Section 2 is dedicated to a brief review of the MadAnalysis 5 framework and its fast detector simulator module, the SFS, as well as to a detailed description of all new developments suitable for LLP studies. In Sect. 3, we make use of these developments to implement, in the MadAnalysis 5 SFS framework, the CMS-EXO-16-022 [36] search for displaced leptons in LHC run 2 data. This search was already implemented earlier, using Delphes 3 as a detector simulator, and thus constitutes an excellent benchmark to evaluate and compare the performance of the SFS simulator for recasting LHC searches for long-lived particles. In Sect. 4 we describe the recast of a new run 2 analysis [4] from the ATLAS Collaboration searching for displaced vertices consisting of two (visible) leptons. Section 5 describes the implementation in MadAnalysis 5 SFS of the full run 2 CMS disappearing track search [23, 24]. We summarise and conclude in Sect. 6. All our implementations are publicly available on the MadAnalysis 5 dataverse [51,52,53] and its Public Analysis Database [54] (Footnote 1).

2 MadAnalysis 5 as a tool for detector simulation and LHC recasting

2.1 Generalities

MadAnalysis 5 [31,32,33] is a general framework dedicated to different aspects of BSM phenomenology. First, it offers various means for users to design a new analysis targeting any given collider signal. To this aim, the code is equipped with a user-friendly Python-based command-line interface and a developer-friendly C++ core. Any user can then either implement their analysis through predefined functionalities available from the interpreter of the program, relying on the normal mode of running, or implement their needs directly in C++ in the expert mode of the code. Second, MadAnalysis 5 allows users to automatically reinterpret the results of various LHC searches for new physics, in order to estimate the sensitivity of the LHC to an arbitrary BSM signal. The list of available searches and details about the validation of their implementation can be obtained through the Public Analysis Database (PAD) of MadAnalysis 5. Moreover, recent developments allow for automated projections at higher luminosities and for the inclusion of theoretical uncertainties [55], and let users simulate the response of the detector either with the external Delphes 3 package [35] or with the built-in SFS framework [43].

The SFS module of MadAnalysis 5 has been designed to emulate the response of a detector through a joint usage of the FastJet software [56] and a set of user-defined smearing and efficiency functions. In this way, the code can effectively mimic typical detector effects such as resolution degradation and the efficiencies of object reconstruction and identification. In practice, MadAnalysis 5 post-processes the set of objects resulting from the application of a jet algorithm to the hadron clusters in a Monte Carlo event. Reconstruction efficiencies, tagging efficiencies and the corresponding mistagging rates are implemented as transfer functions provided through the MadAnalysis 5 command-line interface. The latter also allows users to provide the standard deviation associated with a potential Gaussian smearing of the four-momentum of a given object, such smearing modelling reconstruction degradations. All those transfer functions can be general piecewise functions and can depend on several variables, such as the components of the four-momentum of the object considered.
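To make the notion of a Gaussian smearing transfer function concrete, the Python sketch below smears a transverse momentum with a resolution that depends piecewise on \(p_T\) and \(\eta\). The resolution values and function names are hypothetical placeholders, not those of any SFS detector card or MadAnalysis 5 API.

```python
import random


def pt_resolution(pt: float, eta: float) -> float:
    """Hypothetical absolute pT resolution (GeV), defined piecewise in pT and |eta|."""
    if abs(eta) <= 1.5:  # "barrel" piece
        return 0.01 * pt if pt <= 100.0 else 0.02 * pt
    return 0.03 * pt  # "endcap" piece


def smear_pt(pt: float, eta: float, rng: random.Random) -> float:
    """Return a pT value drawn from a Gaussian centred on the true pT."""
    smeared = rng.gauss(pt, pt_resolution(pt, eta))
    return max(smeared, 0.0)  # clip unphysical negative values


if __name__ == "__main__":
    rng = random.Random(2022)
    print([round(smear_pt(50.0, 0.3, rng), 2) for _ in range(5)])
```

Only \(p_T\) is smeared here for brevity; the same pattern applies to any other component of the four-momentum.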

2.2 Features and new developments of the SFS

2.2.1 The SFS framework in a nutshell

The detector effects described in the previous subsection are implemented through dedicated MadAnalysis 5 commands that are extensively described in the SFS manual [43]. We therefore only provide below a brief description of the extant features, emphasising instead the new developments that are documented for the first time in this work. Instructions on how to run the code are available from Ref. [33].

In order to turn on the SFS module, users have to start MadAnalysis 5 in the reconstructed mode (./bin/ma5 -R) and switch on reconstruction by means of FastJet, which is achieved by typing in the corresponding command of the interpreter (Footnote 2).

By default, objects are reconstructed with the anti-\(k_T\) algorithm [57], with a radius parameter set to \(R=0.4\). The jet algorithm can be changed through a dedicated command taking as argument the keyword associated with the algorithm adopted, and any of its properties can be modified through a second command taking the name of the property and its new value. For instance, the latter allows one to set the jet radius parameter of the anti-\(k_T\) algorithm to \(R=1\).

Hadron-level events then have to be provided through files encoded either in the standard HepMC format [58] or in the deprecated StdHep format, which is still supported in MadAnalysis 5. Event files can be imported one by one or simultaneously (through wildcards in the path to the samples) from the command-line interface of MadAnalysis 5, the corresponding command being repeated as many times as necessary when multiple event files have to be imported. All event samples imported under a given label are collected into a single dataset, although multiple datasets (defined through different labels) can obviously be used as well. We refer to Refs. [33, 43] for detailed information about all available options.
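For readers unfamiliar with the default jet algorithm, the following self-contained Python sketch implements anti-\(k_T\) clustering with E-scheme recombination, using the standard distances \(d_{ij} = \min(p_{T,i}^{-2}, p_{T,j}^{-2})\,\varDelta R_{ij}^2/R^2\) and \(d_{iB} = p_{T,i}^{-2}\), with \(\varDelta R\) built from rapidity and azimuth. It is a naive cubic-time illustration with hypothetical function names; in practice the SFS delegates clustering to FastJet [56].

```python
import math
from typing import List, Tuple

FourVector = Tuple[float, float, float, float]  # (E, px, py, pz)


def from_pt_eta_phi(pt: float, eta: float, phi: float, mass: float = 0.0) -> FourVector:
    px, py, pz = pt * math.cos(phi), pt * math.sin(phi), pt * math.sinh(eta)
    return (math.sqrt(px * px + py * py + pz * pz + mass * mass), px, py, pz)


def pt_y_phi(p: FourVector) -> Tuple[float, float, float]:
    """Transverse momentum, rapidity and azimuth of a four-vector with pT > 0."""
    e, px, py, pz = p
    return math.hypot(px, py), 0.5 * math.log((e + pz) / (e - pz)), math.atan2(py, px)


def delta_r2(a: FourVector, b: FourVector) -> float:
    _, ya, phia = pt_y_phi(a)
    _, yb, phib = pt_y_phi(b)
    dphi = (phia - phib + math.pi) % (2.0 * math.pi) - math.pi  # wrap to [-pi, pi)
    return (ya - yb) ** 2 + dphi ** 2


def anti_kt(particles: List[FourVector], radius: float = 0.4) -> List[FourVector]:
    """Naive O(N^3) anti-kT clustering with E-scheme (four-vector sum) recombination."""
    pseudojets = list(particles)
    jets: List[FourVector] = []
    while pseudojets:
        best = None  # (distance, i, j); j is None for the beam distance d_iB
        for i, a in enumerate(pseudojets):
            inv_pt2_i = pt_y_phi(a)[0] ** -2
            if best is None or inv_pt2_i < best[0]:
                best = (inv_pt2_i, i, None)
            for j in range(i + 1, len(pseudojets)):
                inv_pt2_j = pt_y_phi(pseudojets[j])[0] ** -2
                d_ij = min(inv_pt2_i, inv_pt2_j) * delta_r2(a, pseudojets[j]) / radius ** 2
                if d_ij < best[0]:
                    best = (d_ij, i, j)
        _, i, j = best
        if j is None:
            jets.append(pseudojets.pop(i))  # closest to the beam: promote to a final jet
        else:
            merged = tuple(x + y for x, y in zip(pseudojets[i], pseudojets[j]))
            pseudojets.pop(j)  # j > i, so index i is unaffected
            pseudojets[i] = merged
    return sorted(jets, key=lambda p: -pt_y_phi(p)[0])
```

With \(R=0.4\), two particles separated by \(\varDelta R \approx 0.14\) end up in the same jet, whereas with \(R=0.1\) they form two separate jets.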

In addition, users must indicate to the code the set of objects that are invisible and leave no track or hit in the detector, as well as the set of objects that participate in the hadronic activity in the event. This is achieved through two dedicated commands that add a new state, defined through its Particle Data Group (PDG) identifier [59] (pdg-code), to the invisible and hadronic containers respectively. By default, these containers include the relevant Standard Model particles and hadrons and, in the case of the invisible container, the lightest neutralino of supersymmetric models and the gravitino.
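To see why the invisible container matters, the sketch below computes the missing transverse momentum as minus the vector sum of the visible final-state transverse momenta, skipping every PDG code declared invisible. The default set (the three neutrinos 12, 14 and 16, the lightest neutralino 1000022 and the gravitino 1000039) is a stand-in for the MadAnalysis 5 container rather than its exact contents, and 9000001 is a hypothetical new-physics state.

```python
import math
from typing import Iterable, Set, Tuple

# PDG codes: neutrinos (12, 14, 16), lightest neutralino (1000022), gravitino (1000039).
DEFAULT_INVISIBLE: Set[int] = {12, 14, 16, 1000022, 1000039}


def missing_pt(particles: Iterable[Tuple[int, float, float]],
               invisible: Set[int] = DEFAULT_INVISIBLE) -> Tuple[float, float]:
    """Return (MET, phi) from final-state (pdg, px, py) tuples, skipping invisible states."""
    mex = mey = 0.0
    for pdg, px, py in particles:
        if abs(pdg) in invisible:  # antiparticles carry negative PDG codes
            continue
        mex -= px
        mey -= py
    return math.hypot(mex, mey), math.atan2(mey, mex)


if __name__ == "__main__":
    event = [(11, 30.0, 0.0), (-11, 0.0, 40.0), (12, -30.0, -40.0), (9000001, 10.0, 0.0)]
    print(missing_pt(event))                                 # new state treated as visible
    print(missing_pt(event, DEFAULT_INVISIBLE | {9000001}))  # new state declared invisible
```

Forgetting to declare a new long-lived neutral state as invisible would thus bias the missing transverse momentum of every event containing it.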

In order to include various detector effects in this FastJet-based reconstruction process, the SFS comes with three main commands, dedicated respectively to reconstruction efficiencies, smearing and tagging.

The first of these commands indicates to the code that a given object can only be reconstructed with a given efficiency. The latter is provided as a function that can be either a constant or depend on the properties of the object (its transverse momentum \(p_T\), its pseudorapidity \(\eta \), etc.). If the optional argument defining a domain of validity is not provided, this function applies to the entire kinematic regime. Otherwise, the efficiency is a piecewise function defined by issuing the command several times, each occurrence coming with a different function and a different domain of definition (specified through inequalities).
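The piecewise construction described above can be mimicked in a few lines of Python. In the sketch below, each entry of a hypothetical table pairs a domain of definition, expressed as a predicate built from inequalities, with an efficiency function, and an object is kept with the probability returned by the first matching piece. The numbers, the helper names and the convention that objects outside every domain are lost are illustrative assumptions, not MadAnalysis 5 behaviour.

```python
import random
from typing import Callable, List, Tuple

Domain = Callable[[float, float], bool]     # (pt, eta) -> is the object in this piece?
Function = Callable[[float, float], float]  # (pt, eta) -> efficiency in [0, 1]

# Hypothetical electron reconstruction efficiency, one entry per command occurrence.
PIECES: List[Tuple[Domain, Function]] = [
    (lambda pt, eta: pt > 10.0 and abs(eta) <= 1.5, lambda pt, eta: min(0.95, 0.5 + 0.005 * pt)),
    (lambda pt, eta: pt > 10.0 and 1.5 < abs(eta) <= 2.5, lambda pt, eta: 0.85),
]


def efficiency(pt: float, eta: float) -> float:
    """Evaluate the first piece whose domain contains (pt, eta)."""
    for in_domain, eff in PIECES:
        if in_domain(pt, eta):
            return eff(pt, eta)
    return 0.0  # assumption: objects outside every domain are never reconstructed


def is_reconstructed(pt: float, eta: float, rng: random.Random) -> bool:
    """Keep the object with probability efficiency(pt, eta)."""
    return rng.random() < efficiency(pt, eta)
```

Keeping domains non-overlapping, as the inequalities above do, makes the result independent of the order in which the pieces are listed.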

The second command, define smearer, instructs MadAnalysis 5 that a given property of an object has to be smeared in a Gaussian way, the resolution being provided as a function of the properties of the object. Such a function can again be given as a piecewise function by issuing the define smearer command several times, for different functions and domains of definition. The last command enables users to provide the efficiency to tag (or mistag) an object as a (possibly different) object. The efficiencies are given through a function of the object properties, the command being issued several times with the optional domain-of-definition argument when piecewise functions are in order.
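Tagging and mistagging amount to a table of probabilities indexed by the true identity of an object. The Python sketch below uses hypothetical b-tagging numbers (70% for b-jets, 10% for c-jets and 1% for light jets) to show this bookkeeping, both as a random per-jet decision and as an expected yield. The names and values are assumptions; realistic taggers would in addition depend on \(p_T\) and \(\eta\) through piecewise functions.

```python
import random
from typing import Dict

# Hypothetical probabilities for a jet of a given true flavour to be tagged as a b-jet.
B_TAG: Dict[str, float] = {"b": 0.70, "c": 0.10, "light": 0.01}


def is_b_tagged(true_flavour: str, rng: random.Random) -> bool:
    """Tag (b) or mistag (c, light) a jet with the probability of its true flavour."""
    return rng.random() < B_TAG.get(true_flavour, 0.0)


def expected_b_tags(counts: Dict[str, int]) -> float:
    """Expected number of b-tagged jets given the number of jets per true flavour."""
    return sum(n * B_TAG.get(flavour, 0.0) for flavour, n in counts.items())


if __name__ == "__main__":
    # 100 b-jets, 50 c-jets and 1000 light jets: 70 + 5 + 10 = 85 expected tags.
    print(expected_b_tags({"b": 100, "c": 50, "light": 1000}))
```

The example shows why mistag rates matter: a 1% light-jet mistag rate still contributes 10 of the 85 expected tags when light jets dominate.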

2.2.2 New developments

Compared with its initial release 1.5 years ago, the current public version of the SFS simulator (shipped with MadAnalysis 5 version 1.9) has been extended in three respects.

First, new options are available for the treatment of photon, lepton and track isolation. Users can specify the size \(\varDelta R\) of the isolation cone associated with these objects through a dedicated command of the command-line interface, in which the object type should be track, electron, muon or photon, and the cone size can be either a floating-point number or a set of comma-separated floating-point numbers. In the former case, the number is the \(\varDelta R\) value of the isolation cone, whereas in the latter case isolation information is computed at runtime for several values of the isolation cone radius. The SFS module then takes care of computing the sum of the transverse momenta of the hadrons, photons and charged leptons located inside a cone of radius \(\varDelta R\) centred on the object considered (\(\sum p_T\)), as well as the amount of transverse energy \(\sum E_T\) inside the cone. At the level of the analysis implementation, all isolation cone objects are available, together with the corresponding \(\sum p_T\) and \(\sum E_T\) information. We refer to Appendix B for practical details. These new features are used in practice in the analysis of Sect. 5, where validation was performed by comparing with implementations using Monte Carlo truth information, which is also available to the user.
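The isolation sums described above amount to a loop over the other visible particles in the event. The Python sketch below computes \(\sum p_T\) and \(\sum E_T\) inside cones of several radii at once, mirroring the comma-separated option; the particle representation and function names are illustrative assumptions, and \(E_T = E\sin\theta = E/\cosh\eta\) is used for the transverse energy.

```python
import math
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass
class Particle:
    pt: float   # GeV
    eta: float
    phi: float
    e: float    # energy in GeV


def delta_r(a: Particle, b: Particle) -> float:
    dphi = (a.phi - b.phi + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(a.eta - b.eta, dphi)


def isolation_sums(obj: Particle, others: Iterable[Particle],
                   radii: List[float]) -> Dict[float, Tuple[float, float]]:
    """Return {radius: (sum pT, sum ET)} over the other visible particles inside each cone."""
    sums = {r: (0.0, 0.0) for r in radii}
    for p in others:
        if p is obj:
            continue  # never count the object itself
        dr = delta_r(obj, p)
        et = p.e / math.cosh(p.eta)  # E_T = E sin(theta) = E / cosh(eta)
        for r in radii:
            if dr < r:
                spt, set_ = sums[r]
                sums[r] = (spt + p.pt, set_ + et)
    return sums
```

A relative isolation variable, as used by many analyses, is then simply the returned \(\sum p_T\) divided by the \(p_T\) of the object.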

Second, the SFS module now includes an energy scaling option. Such a capability is useful in numerous analyses, in particular when jet properties are involved. Users can instruct the code to rescale the energy of any given object through a dedicated command of the MadAnalysis 5 interpreter. Similarly to the other SFS commands, its syntax specifies the object whose energy has to be rescaled, which can be an electron (e), a muon (mu), a photon (a), a hadronic tau (ta) or a jet (j). Once again, a keyword acts as a proxy for the functional form of the scaling function, and an optional attribute defines the domain of definition relevant for piecewise functions. In the specific case of jet energy scaling (JES), users can rely on an equivalent dedicated syntax. This feature is not used by the analyses presented in this paper, but is intended for future applications.
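As an illustration of what an energy-scaling function does, the sketch below applies a hypothetical piecewise jet energy scale factor. It assumes, for illustration only, that the whole four-momentum is multiplied by the factor, which preserves the direction of the object; whether the SFS rescales the four-momentum in exactly this way is not specified here.

```python
import math
from typing import Tuple

FourVector = Tuple[float, float, float, float]  # (E, px, py, pz) in GeV


def jes_factor(pt: float) -> float:
    """Hypothetical piecewise jet energy scale factor."""
    if pt < 50.0:
        return 1.05
    if pt < 200.0:
        return 1.02
    return 1.00


def rescale(p: FourVector) -> FourVector:
    """Multiply the whole four-momentum by the scale factor (direction is unchanged)."""
    e, px, py, pz = p
    k = jes_factor(math.hypot(px, py))
    return (k * e, k * px, k * py, k * pz)
```

Scaling the full four-vector also rescales the invariant mass by the same factor, whereas rescaling only the energy would change the mass in a momentum-dependent way.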

Monte Carlo event records, usually stored in the HepMC format [58], include the exact positions of the vertices related to the (parton-level and hadron-level) cascade decays originating from the hard-scattering process (Footnote 3), as well as the momenta of all intermediate- and final-state objects. This information is however computed without accounting for the magnetic field in the detector volume, ignoring the fact that charged-particle tracks are bent under its influence. This can in turn indirectly affect the trajectories of any electrically neutral particle emerging from a decay chain involving a charged state. While such effects are negligible in many cases, simulating them exactly comes at a modest computational cost compared with the remaining workload of the analysis tool chain.

Third, we have therefore equipped the SFS framework with a module handling particle propagation in the presence of a magnetic field. It can be turned on through a dedicated command of the MadAnalysis 5 command-line interpreter, the default setting being off. In the default case, all objects propagate along straight lines. When the propagation module is turned on, the direction of the momentum of any charged particle or object and of its decay products is modified. The SFS module assumes that all objects produced in the event are subjected to a homogeneous magnetic field parallel to the z-axis, whose magnitude can be fixed through a second command taking any positive floating-point number as its value.

At runtime, the SFS module evaluates the trajectory of any electrically charged object as derived from the Lorentz force originating from the field. The code next calculates the coordinates of the point of closest approach, i.e. the point of the trajectory at which the distance to the z-axis is smallest, and it finally extracts the values of the transverse and longitudinal impact parameters \(d_0\) and \(d_z\). The formulas used internally are derived in detail in Appendix A.
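The geometry behind this step can be sketched as follows, as an illustration rather than the SFS code of Appendix A. In a uniform field \(B\) along z, a particle of charge \(q\) (in units of e) and transverse momentum \(p_T\) follows a helix whose transverse projection is a circle of radius \(R = p_T/(0.2998\,|q|B)\), with \(p_T\) in GeV, \(B\) in T and \(R\) in m. The point of closest approach to the z-axis lies on the line joining the circle centre \(\mathbf{c}\) to the axis, so that \(|d_0| = \big|\,|\mathbf{c}| - R\,\big|\), and \(d_z\) follows from the longitudinal advance \(p_z/p_T\) per unit transverse arc length. Sign conventions for \(d_0\) differ between experiments, so the sketch returns \(|d_0|\); restricting the search to half a turn around the production point is an additional assumption relevant only for looping tracks.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
K = 0.299792458  # pT [GeV] = K * |q| * B [T] * R [m]


def wrap(angle: float) -> float:
    """Map an angle onto [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def helix_impact_parameters(pos: Vec3, mom: Vec3, charge: float, bz: float) -> Tuple[float, float]:
    """Return (|d0|, dz) in metres for a track produced at `pos` (m) with momentum `mom` (GeV).

    The field bz (tesla) is uniform and parallel to z; `charge` is in units of e.
    """
    x0, y0, z0 = pos
    px, py, pz = mom
    pt = math.hypot(px, py)
    if pt == 0.0:
        raise ValueError("impact parameters are undefined for pT = 0")
    if charge == 0.0 or bz == 0.0:  # straight-line limit
        return abs(x0 * py - y0 * px) / pt, z0 - (x0 * px + y0 * py) * pz / pt ** 2
    radius = pt / (K * abs(charge) * abs(bz))
    s = math.copysign(1.0, charge * bz)  # +1: clockwise motion seen from +z
    # The Lorentz force q v x B points from the track towards the centre of the circle.
    cx = x0 + s * radius * py / pt
    cy = y0 - s * radius * px / pt
    dist_centre = math.hypot(cx, cy)
    if dist_centre == 0.0:
        raise ValueError("circle centred on the z-axis: closest approach is not unique")
    d0 = abs(dist_centre - radius)
    # The point of closest approach lies on the line joining the centre to the z-axis.
    xp = cx - radius * cx / dist_centre
    yp = cy - radius * cy / dist_centre
    # Signed turning angle from the production point to the PCA (positive = forward),
    # restricted to half a turn in either direction.
    turn = -s * wrap(math.atan2(yp - cy, xp - cx) - math.atan2(y0 - cy, x0 - cx))
    dz = z0 + (pz / pt) * radius * turn
    return d0, dz
```

In the limit of a weak field, the results converge to the straight-line values, which provides a simple consistency check.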

Each coordinate of the point of closest approach is accessible, in the MadAnalysis 5 command-line interpreter, through the XD, YD and ZD symbols. The transverse and longitudinal impact parameters \(d_0\) and \(d_z\) are associated with the D0 and DZ symbols, and their approximate variants in the case of straight-line propagation, \({\tilde{d}}_0\) and \({\tilde{d}}_z\), are mapped to the D0APPROX and DZAPPROX symbols. All those symbols can in addition be manipulated like any other observable implemented in MadAnalysis 5. For instance, the scalar difference between the transverse impact parameters of two objects can be obtained by prefixing sd to the name of the observable (i.e. through the sdD0 symbol in the example considered). We refer to the manual for more information on observable combinations [31].
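For straight-line propagation, the approximate impact parameters follow from elementary geometry: for a track produced at \((x, y, z)\) with momentum \((p_x, p_y, p_z)\), one has \({\tilde d}_0 = |x p_y - y p_x|/p_T\) and \({\tilde d}_z = z - (x p_x + y p_y)\,p_z/p_T^2\). The sketch below implements these standard expressions together with a simple difference of transverse impact parameters in the spirit of the sdD0 combination; that MadAnalysis 5 uses exactly this sign convention is an assumption.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def straight_line_impact_parameters(pos: Vec3, mom: Vec3) -> Tuple[float, float]:
    """Approximate (d0, dz), in the units of pos, for straight-line propagation."""
    x, y, z = pos
    px, py, pz = mom
    pt2 = px * px + py * py
    if pt2 == 0.0:
        raise ValueError("impact parameters are undefined for pT = 0")
    d0 = abs(x * py - y * px) / math.sqrt(pt2)  # distance of the line to the z-axis
    dz = z - (x * px + y * py) * pz / pt2       # z of the point of closest approach
    return d0, dz


def scalar_difference_d0(track_a: Tuple[Vec3, Vec3], track_b: Tuple[Vec3, Vec3]) -> float:
    """d0(a) - d0(b) for two (position, momentum) pairs, mimicking an sdD0-like combination."""
    return (straight_line_impact_parameters(*track_a)[0]
            - straight_line_impact_parameters(*track_b)[0])
```

A track produced on the x-axis and moving radially outwards has \({\tilde d}_0 = 0\), and its \({\tilde d}_z\) is obtained by extrapolating it backwards to the z-axis.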

The set of commands available in the normal mode of MadAnalysis 5 now supports the above variables. It is hence possible to plot these observables (via the plot command of the MadAnalysis 5 interpreter). Users can also use them in the implementation of selection cuts (through the complementary select and reject commands of the interpreter), and of course in the SFS framework to define LLP reconstruction and tagging efficiencies. In the expert mode of the code, access to this information is provided as described in Appendix B.

2.3 Technicalities on particle propagation in the SFS

When turned on, the particle propagation module of MadAnalysis 5 assumes the existence of a non-zero magnetic field along the z-axis whose magnitude is fixed by the user. It evaluates the impact of this magnetic field on the trajectories of all intermediate- and final-state objects stored in a hadron-level event record. The implemented algorithm begins with the final-state particles originating from the hard process, and then follows the structure of all subsequent radiation and decays through the mother-to-daughter relations encoded in the event record. The primary interaction vertex is taken as located at the origin of the reference frame, and the hard process is considered to occur at \(t=0\).

For any specific object, the propagation algorithm makes use of information on the object’s creation position \(\mathbf{x}_{\mathrm{creation}}\) and time \(t_{\mathrm{creation}}\) (from the decay of the mother particle or the hard-scattering process), as well as on the time of its decay \(t_{\mathrm{decay}}\). The propagation time \(\varDelta t_{\mathrm{prop}}\) is thus given by

\[ \varDelta t_{\mathrm{prop}} = t_{\mathrm{decay}} - t_{\mathrm{creation}}\,. \tag{2.1} \]

The position \(\mathbf{x}_{\mathrm{decay}}\) at which the object’s decay occurs is then estimated from Eq. (A.3), the momenta of the daughter particles are rotated according to Eq. (A.2), and the impact parameters \(d_0\) and \(d_z\) are computed from Eq. (A.8). The code additionally calculates approximate values for the impact parameters, as would be derived when assuming that the object’s trajectory is a straight line, and information on the momentum rotation angle is consistently passed to all subsequent objects emerging from the object’s (cascade) decay. The propagation of the decay products originating from \(\mathbf{x}_{\mathrm{decay}}\) is then treated iteratively.
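A compact way to picture this step is sketched below in practical units (positions and \(c\varDelta t\) in metres, momenta and energies in GeV, \(B\) in tesla). Integrating \(\mathrm{d}\mathbf{p}/\mathrm{d}(ct) = 0.2998\,q\,(\mathbf{p}/E)\times\mathbf{B}\) over the propagation time rotates the transverse momentum by \(\varDelta\varphi = -0.2998\,qB\,c\varDelta t_{\mathrm{prop}}/E\), and the transverse displacement follows exactly from \(\varDelta\mathbf{p} = 0.2998\,q\,\varDelta\mathbf{x}\times\mathbf{B}\). This is a hypothetical illustration, not a transcription of Eqs. (A.2) and (A.3); the function names are assumptions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
K = 0.299792458  # pT [GeV] = K * |q| * B [T] * R [m]


def rotate_about_z(v: Vec3, angle: float) -> Vec3:
    """Rotate a 3-vector by `angle` (radians, counterclockwise seen from +z)."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1], v[2])


def propagate(pos: Vec3, mom: Vec3, energy: float, charge: float, bz: float,
              ct: float) -> Tuple[Vec3, Vec3, float]:
    """Propagate for a lab-frame flight time ct (m) in a field bz (T) along z.

    Returns the end position (m), the rotated momentum (GeV) and the rotation angle.
    """
    x0, y0, z0 = pos
    px, py, pz = mom
    z1 = z0 + pz / energy * ct  # longitudinal motion is unaffected by B along z
    if charge == 0.0 or bz == 0.0:
        return (x0 + px / energy * ct, y0 + py / energy * ct, z1), mom, 0.0
    qbk = charge * bz * K
    angle = -qbk / energy * ct  # clockwise (negative) for q * B > 0
    new_mom = rotate_about_z(mom, angle)
    # Integrating dp = K q dx x B gives dx = -dpy / (qBK) and dy = +dpx / (qBK).
    x1 = x0 - (new_mom[1] - py) / qbk
    y1 = y0 + (new_mom[0] - px) / qbk
    return (x1, y1, z1), new_mom, angle
```

The returned angle is the quantity that would be passed on to the decay products, e.g. by applying rotate_about_z to each daughter momentum before propagating it in turn.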

Fig. 1: Distribution in the angle \(\varDelta \varphi \) (top row) associated with the rotation to which the momentum of the R-hadrons originating from the process (2.2) is subjected when they propagate in a magnetic field of 4 T. The associated deviation \(\delta d_0\) of the transverse impact parameter of the final-state muons is additionally shown (bottom row). We consider a scenario where the stop mean decay length is set to 1 m, and where the stop mass is fixed to 20 GeV (left) or 100 GeV (right).

Some objects may have two mother particles (as happens, for instance, in hadronisation). The evaluation of the position \(\mathbf{x}_{\mathrm{creation}}\) and time \(t_{\mathrm{creation}}\) is then potentially ambiguous. However, such a case is always associated with zero lifetimes and is thus irrelevant for the problem considered in this work.

2.4 Validation of the SFS propagation module

The validation of the implementation of object propagation in the SFS framework has been carried out through a study of stop pair production and decay in R-parity-violating supersymmetry [60, 61]. We consider the process

\[ pp \to \tilde t\,\tilde t^{*} \to (b\,\ell^+)\,(\bar b\,\ell^-)\,, \qquad \ell = e, \mu\,, \tag{2.2} \]

where each of the pair-produced top squarks can decay into an electron or a muon with equal branching ratio. The stop is taken to be long-lived, so that it hadronises into an R-hadron of about the same mass which then flies some distance in the detector before decaying. This leads to the presence of displaced leptons in the final state of each event, as well as to the possible appearance of electrically charged and unstable intermediate particles (i.e. the produced R-hadrons), which are sensitive to an external magnetic field when they propagate. In particular, the momentum of the produced R-hadrons will rotate by an angle \(\varDelta \varphi \) during their propagation. From the formulas presented in Appendix A, we can analytically extract the \(\varDelta \varphi \) distribution for a given stop mass \(m_{{{\tilde{t}}}}\) (which is a good proxy for the R-hadron masses) and decay time \(t_{\mathrm{decay}}\),

Here, \(q_R\) stands for the R-hadron electric charge. The expected \(\varDelta \varphi \) distribution originating from the probability distribution of the decay time can then be compared with predictions obtained with MadAnalysis 5 and its SFS module.
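Independently of the exact form of the expression above, relativistic kinematics gives a simple picture: the laboratory-frame angular velocity \(qB/(\gamma m)\) multiplied by the laboratory-frame flight time \(\gamma\tau\) yields \(|\varDelta\varphi| = |q|B\tau/m\), with \(\tau\) the proper time elapsed before the decay, i.e. \(|\varDelta\varphi| \simeq 0.2998\,|q|\,B[\mathrm{T}]\,c\tau[\mathrm{m}]/m[\mathrm{GeV}]\). Since proper decay times are exponentially distributed, so is \(|\varDelta\varphi|\). The Python sketch below samples it for the 20 and 700 GeV scenarios described next, assuming a unit-charge R-hadron, a 4 T field and a 1 m mean proper decay length; neutral R-hadrons are not deflected at all. The function names are illustrative.

```python
import random
from typing import List

K = 0.299792458  # GeV / (T m) for a unit charge


def delta_phi(ctau_proper_m: float, mass_gev: float, b_tesla: float = 4.0,
              charge: float = 1.0) -> float:
    """|rotation angle| = |q| B tau / m, in practical units with c*tau in metres."""
    return K * abs(charge) * b_tesla * ctau_proper_m / mass_gev


def sample_delta_phi(mass_gev: float, mean_ctau_m: float = 1.0, b_tesla: float = 4.0,
                     charge: float = 1.0, n: int = 50_000, seed: int = 1) -> List[float]:
    """Draw exponential proper decay lengths and convert them into rotation angles."""
    rng = random.Random(seed)
    return [delta_phi(rng.expovariate(1.0 / mean_ctau_m), mass_gev, b_tesla, charge)
            for _ in range(n)]


if __name__ == "__main__":
    for mass in (20.0, 700.0):
        angles = sample_delta_phi(mass)
        print(f"m = {mass:5.0f} GeV: mean |dphi| = {sum(angles) / len(angles):.4f} rad")
```

The mean rotation scales as \(1/m\), i.e. it is 35 times larger for the 20 GeV scenario than for the 700 GeV one, consistent with the broader spectrum expected for light R-hadrons.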

For this purpose, we make use of Pythia 8 [62] to generate 50,000 signal events for two scenarios featuring a stop mass of 20 and 700 GeV respectively, in both of which the stop mean decay length has been fixed to 1 m (Footnote 4). In the lighter configuration, we expect stronger magnetic-field effects than in the heavier one, due to the larger associated cyclotron frequency.

The hadronisation is also performed with Pythia 8, which includes a treatment of the formation of R-hadrons. In the following, since we are interested in the impact of the magnetic field, we do not consider any special effects of the R-hadron interaction with the detector, and treat the R-hadrons as propagating freely in the magnetic field until they decay. In the SFS approach, such interactions would instead need to be handled by efficiency functions, possibly on an analysis-by-analysis basis (see e.g. Ref. [29]).

In the top row of Fig. 1, we show predictions for the \(\varDelta \varphi \) distribution obtained when the magnetic field is set to 4 T. We compare results obtained from our analytical calculations (solid red line) to those returned by MadAnalysis 5 when particle propagation is turned on (blue histograms). Excellent agreement is found, and we can observe that the spectrum is much broader in the case of the lightest scenario. This therefore confirms that the propagator module works as expected.

We assess the effect of the magnetic field on the final-state lepton by examining the shift that is induced on the impact parameter spectrum. For illustrative purposes, we compute the relative difference between the transverse impact parameter \(d_0\) as obtained when particle propagation is turned on, and its \({{\tilde{d}}}_0\) variant assuming straight-line propagation,

We present results for muons in the bottom row of Fig. 1, the electron results being similar. As already mentioned, the mass of the long-lived R-hadrons (or equivalently the mass of the top squark) plays an important role in the shape of the distribution. The impact is indeed stronger for lighter R-hadrons, since they undergo a larger momentum rotation, which is passed on to their decay products. Although our findings point to potentially important effects, these do not necessarily have significant consequences for a typical LLP analysis at the LHC once the targeted new physics mass range is defined. This should however be investigated on a case-by-case basis.

The new particle propagator module is used by the recast analyses that we present in the following sections. However, the impact of the magnetic field is not especially significant. There may be other cases in the future where it is important, for example searches for slowly moving particles. Of more importance in the following is that the code now includes methods for correctly treating displaced vertices, track objects, computing \(d_0\), etc.

3 Displaced leptons in the \(e\mu \) channel (CMS-EXO-16-022)

3.1 Generalities

The experimental analysis presented in Ref. [36] is a search for long-lived particles in events featuring two displaced leptons, precisely one electron and one muon. It was carried out by the CMS Collaboration on data collected in 2015, i.e. at the beginning of the run 2 data-taking period of the LHC at \(\sqrt{s}=13\,\text {TeV}\), corresponding to an integrated luminosity of \(2.6\,\hbox {fb}^{-1}\). This search superseded the 8 TeV analysis described in Ref. [63]. The electrons and muons that potentially originate from LLP decays are identified via a minimum requirement on the transverse distances of their tracks from the primary interaction vertex, i.e. their respective transverse impact parameters \(d_0\), which provide a more reliable proxy for displacement than reconstructed vertices.

The selected events are categorised into three signal regions, depending on the values of the electron and muon impact parameters. No significant excess over background was observed, so the results were interpreted as limits on the displaced supersymmetry model [61]. This model contains R-parity-violating stop decays \({{\tilde{t}}_1\rightarrow b\ell }\) with \({\ell =e,\mu ,\tau }\) which happen with equal branching ratio of \(\frac{1}{3}\) for all lepton flavours once lepton universality is assumed (i.e. if the vector \(\mu _L\) has three identical components). Since the stops are only produced in pairs, each signal event would have two displaced leptons.
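With a branching ratio of 1/3 per flavour, the fraction of stop-pair events in which the two stop decays give exactly one electron and one muon is \(2\times\frac{1}{3}\times\frac{1}{3} = \frac{2}{9}\), the factor 2 accounting for which stop yields the electron. The short Python sketch below enumerates the flavour combinations to make this explicit; it deliberately ignores leptonic \(\tau\) decays, which can also feed the \(e\mu\) final state, and its names are illustrative.

```python
from fractions import Fraction
from itertools import product
from typing import Dict, Tuple

# Lepton-universal branching ratios of the stop into b + lepton.
BR: Dict[str, Fraction] = {"e": Fraction(1, 3), "mu": Fraction(1, 3), "tau": Fraction(1, 3)}


def pair_fraction(channel: Tuple[str, str]) -> Fraction:
    """Probability that the two stop decays give the (unordered) lepton pair `channel`."""
    target = sorted(channel)
    return sum((BR[a] * BR[b] for a, b in product(BR, repeat=2) if sorted((a, b)) == target),
               Fraction(0))


if __name__ == "__main__":
    print(pair_fraction(("e", "mu")))  # 2/9
```

Same-flavour pairs come without the combinatorial factor, so that the six unordered channels together sum to one.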

An implementation of this analysis [34, 37] is already part of the Delphes 3-based Public Analysis Database [54] of MadAnalysis 5, and it was subsequently implemented in the LLP extension of the CheckMATE 2 program [41]. It is therefore a natural first LLP analysis to implement in the MadAnalysis 5 SFS framework, which we present here together with several improvements relative to the previous work. While the selection criteria are rather straightforward to apply, the main difficulty in this search is the lack of information about the lepton detection efficiencies for highly displaced tracks. Since the CMS Collaboration did not provide publicly available auxiliary material for this particular analysis, we have to rely on information related to the superseded analysis [63], available online (footnote 5).

Recently, the analysis of Ref. [36] was itself superseded by a full run 2 analysis [64]. The updated search contains many more signal regions but no new recasting material. We attempted a naive recast, but the results were not satisfactory; we are therefore in contact with the analysis conveners to improve them, and hope to return to this analysis in future work.

3.2 Event selection

The analysis targets events with exactly one electron and one muon in the final state, both clearly identified as such and fulfilling the following preselection cuts. The lower bounds imposed on the lepton \(p_T\) differ for electrons (\(42\,\text {GeV}\)) and muons (\(40\,\text {GeV}\)). In both cases, the absolute value of the pseudorapidity \(|\eta |\) must not exceed 2.4. Furthermore, electrons in the overlap region between the barrel and endcap detectors (\(|\eta | \in [1.44, 1.56]\)) are rejected, owing to the lower detector performance in this region. In addition, the two leptons must satisfy simple isolation conditions, which impose an upper limit on an isolation variable \(p_T^{\text {iso}}\) defined as the scalar sum of the transverse momenta of all reconstructed objects lying within a cone of size \(\varDelta R\) centred on the lepton’s momentum. This limit is fixed relative to the lepton transverse momentum, and for electrons it also depends on \(|\eta |\), since the detector performance differs between the barrel and the endcaps. A summary of the criteria imposed on the electron and muon candidates considered in the event preselection, including the relevant isolation cone sizes, is given in Table 1.
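The kinematic part of this preselection can be sketched as follows. The \(p_T\), \(|\eta |\) and overlap-region thresholds are those quoted above; the relative-isolation threshold `max_rel_iso` is a hypothetical placeholder standing in for the (\(\eta \)-dependent) values of Table 1, which are not reproduced here, and the treatment of the cut boundaries (strict versus inclusive) is our assumption.

```python
from dataclasses import dataclass


@dataclass
class Lepton:
    flavour: str   # "e" or "mu"
    pt: float      # transverse momentum in GeV
    eta: float     # pseudorapidity
    pt_iso: float  # scalar pT sum in the isolation cone, in GeV


def passes_preselection(lep: Lepton, max_rel_iso: float) -> bool:
    """Electron/muon preselection; max_rel_iso is a hypothetical placeholder."""
    min_pt = 42.0 if lep.flavour == "e" else 40.0
    if lep.pt <= min_pt or abs(lep.eta) > 2.4:
        return False
    # Electrons in the barrel-endcap overlap region are rejected
    if lep.flavour == "e" and 1.44 <= abs(lep.eta) <= 1.56:
        return False
    # Relative isolation: pT^iso must stay below a fraction of the lepton pT
    return lep.pt_iso / lep.pt < max_rel_iso


print(passes_preselection(Lepton("e", 50.0, 1.5, 1.0), max_rel_iso=0.1))   # False
print(passes_preselection(Lepton("mu", 50.0, 1.5, 1.0), max_rel_iso=0.1))  # True
```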

The analysis selection then requires the two leptons to carry opposite electric charges and their momenta to be separated by \({\varDelta R>0.5}\). Besides these criteria, which are given explicitly in the analysis summary, we require the position of the production vertex \(\mathbf {v}_{\text {prod}}=(v_x,v_y,v_z)\) of each lepton to satisfy

These conditions are adopted from the superseded analysis [63], for which this information was originally made available in the auxiliary material. There, however, the upper bound on \(v_0\) is 4 cm. Even though these requirements are not explicitly justified, and are not even mentioned in the publications associated with either analysis, they are likely related to the reconstruction performance of the CMS tracker. We therefore include them in our implementation, but with a higher upper limit on \(v_0\), motivated by the design of the newer analysis, which is meant to probe values of \(|d_0|\) up to 10 cm.

The analysis relies on three signal regions (SR), which classify events according to the smaller of the two absolute values of the electron and muon impact parameters, \(|d_{0,e}|\) and \(|d_{0,\mu }|\). The lower bound on this quantity is \(200\,\upmu \text {m}\) for SR I, \(500\,\upmu \text {m}\) for SR II and \(1000\,\upmu \text {m}\) for SR III, and the regions are mutually exclusive, i.e. there is no overlap between them. These criteria are summarised in Table 2.
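The exclusive classification can be expressed by testing the tightest region first, as in the Python sketch below. Lower bounds are treated as inclusive, which is an assumption since the boundary convention is not specified; impact parameters are given in micrometres, and events with \(\min (|d_{0,e}|,|d_{0,\mu }|) < 200\,\upmu \text {m}\) fall in no signal region.

```python
from typing import Optional

# Lower bounds on min(|d0,e|, |d0,mu|) in micrometres, tightest region first
SR_LOWER_BOUNDS_UM = (("SR III", 1000.0), ("SR II", 500.0), ("SR I", 200.0))


def signal_region(d0_e_um: float, d0_mu_um: float) -> Optional[str]:
    """Return the (exclusive) signal region of an event, or None."""
    d0_min = min(abs(d0_e_um), abs(d0_mu_um))
    for name, lower_bound in SR_LOWER_BOUNDS_UM:
        # Checking the tightest bound first makes the regions non-overlapping
        if d0_min >= lower_bound:
            return name
    return None


print(signal_region(300.0, 5000.0))  # SR I
print(signal_region(-600.0, 700.0))  # SR II
```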

3.3 Reconstruction efficiencies

As mentioned above, no dedicated reconstruction efficiencies are provided for this analysis, so we use those made available for the superseded 8 TeV analysis. They are parameterised in terms of the electron and muon \(|d_0|\), with separate selection efficiencies given as functions of their \(p_T\). The recasting material for the older analysis [63, 65] thus instructs us to combine (multiply) four efficiencies, two for each lepton, together with an additional factor of 0.95 accounting for the trigger efficiency. This is the approach followed in the CheckMATE 2 implementation [41] of the CMS-EXO-16-022 search. However, there are three issues.

-

It is easier to implement an efficiency function in MadAnalysis 5 than histogrammed data. Therefore we use a polynomial fit.

-

The efficiencies in \(|d_0|\) only extend to \(2\,\text {cm}\), whereas the signal regions extend to \(10\,\text {cm}\).

-

The instructions in the auxiliary material of the analysis [63] direct us to impose the cuts of the (older) analysis but to ignore lepton isolation. In addition, we are instructed to use generator-level information and require that the leptons originate from stop decays. Therefore, the efficiencies provided as a function of \(p_T\) (which vary from about \(80\%\) at the lowest \(p_T\) values accepted by the analysis up to about \(90\%\) at higher \(p_T\)) fold in the effects of isolation and of some of the cuts, and are in addition model-specific.

The implementation of Ref. [41] used Delphes 3, ignored lepton isolation and relied on the efficiencies provided by the CMS Collaboration. We tried this approach, but without requiring that the selected leptons originate from top squark decays, and found poor agreement with the CMS results, notably for small LLP lifetimes. On the other hand, once we imposed lepton isolation directly in our implementation, the combined effect was too aggressive. We therefore chose to omit the \(p_T\)-dependent efficiencies (recall that there are four functions: two depending on \(|d_0|\) for the electron and the muon, and two depending on the \(p_T\) of the electron and the muon, respectively) and to keep lepton isolation at the level of the analysis. This renders our code (hopefully) more model-independent: we believe that the information in the \(p_T\) functions essentially encodes the isolation requirements. For \(|d_0|<2\,\text {cm}\), we employ the polynomial fits of the efficiencies derived by the author of Ref. [37], which read

for electrons and muons, respectively, with \(x \equiv |d_0|/\,\text {cm}\). The tabulated efficiencies and the resulting fits are shown in Fig. 2.

Above a transverse impact parameter of 2 cm, a choice must be made owing to the absence of experimental data. The CheckMATE 2 implementation of Ref. [41] uses a single bin whose efficiency is obtained from a \(\chi ^2\) fit minimising the differences at the level of the cutflows. This leads to an electron efficiency of 0.06 and a muon efficiency of 0.01. Especially for muons, this is effectively consistent with zero, which does not seem reasonable given that the efficiencies are much higher at 2 cm. We can only speculate about the motivation for this choice, and for some other choices made in Ref. [41]. Moreover, in our SFS implementation we typically found that too many events were cut compared with the experimental exclusion. We therefore investigated two alternatives: a constant efficiency equal to its value at 2 cm, and a linear extrapolation. The latter produced better agreement, and is the one we adopt. We thus assume that the lepton efficiencies decrease linearly to zero at \(|d_0|=10\,\text {cm}\), which is the maximum value at which leptons can be selected in the analysis. The efficiencies for \(2\,\text {cm}<|d_0|\le 10\,\text {cm}\) read

still with \(x \equiv |d_0|/\,\text {cm}\), and they vanish for any \(|d_0|\) value larger than 10 cm.
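The piecewise efficiency described above can be sketched as a small factory function. Since the polynomial coefficients are not reproduced here, the sketch takes the low-\(|d_0|\) fit as an argument, and the example uses a hypothetical linear placeholder (`placeholder_fit`) instead of the actual fits. We also assume that the linear extrapolation starts from the value of the fit at 2 cm, so that the efficiency is continuous there.

```python
from typing import Callable


def make_d0_efficiency(low_d0_fit: Callable[[float], float],
                       x_fit_max: float = 2.0,
                       x_zero: float = 10.0) -> Callable[[float], float]:
    """Efficiency as a function of x = |d0| / cm.

    Uses low_d0_fit for x <= x_fit_max, then decreases linearly from the fit
    value at x_fit_max down to zero at x_zero, and vanishes beyond x_zero.
    """
    eps_edge = low_d0_fit(x_fit_max)

    def efficiency(x: float) -> float:
        x = abs(x)
        if x <= x_fit_max:
            return low_d0_fit(x)
        if x <= x_zero:
            return eps_edge * (x_zero - x) / (x_zero - x_fit_max)
        return 0.0

    return efficiency


def placeholder_fit(x: float) -> float:
    """Hypothetical stand-in for the polynomial fit (NOT the analysis values)."""
    return 0.8 - 0.2 * x


eps = make_d0_efficiency(placeholder_fit)
print(eps(6.0), eps(12.0))
```

Passing the fit as a function keeps the extrapolation logic independent of the lepton flavour: the same factory serves both the electron and the muon fits.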

3.4 Validation of our implementation

The signal process considered in the CMS-EXO-16-022 analysis consists of the production of a pair of long-lived top squarks that subsequently decay into a final state including an electron-muon pair,

with \(\ell , \ell ' =e,\mu \). The only validation material available for this process concerns four scenarios in which the top squark mass is fixed to \(m_{{\tilde{t}}}= 700\,\text {GeV}\), and which differ in the stop decay length, ranging from 0.1 to 100 cm. In each case, the CMS Collaboration provides the number of signal events populating the three signal regions of the analysis (see Table 4 of Ref. [36]).

To reproduce the CMS results, we generate four samples of 400,000 events with Pythia 8 [62]. In our simulation chain, we focus on the process (3.4) and set the branching ratios of the decays \({\tilde{t}}\rightarrow b \ell \) to 1/3 for each lepton flavour. It is important to stress that we do not force the stops to decay only into electrons and muons: stop decays into taus are also included. This last subprocess contributes differently to the signal, since the resulting electrons and muons exhibit different properties (such as their \(p_T\)), which cannot be modelled through a simple multiplicative factor applied to the signal yields obtained when restricting the stops to decay solely into electrons and muons. For a fair comparison with the CMS experimental results and simulations, we then rescale the obtained number of events to match a stop pair-production cross section evaluated at next-to-leading-order and next-to-leading-logarithmic (NLO + NLL) accuracy in quantum chromodynamics (QCD) [66], i.e. \({\sigma \approx 67.05\,\text {fb}}\).
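The rescaling amounts to assigning each generated event a weight \(\sigma \mathcal {L}/N\). With the cross section quoted above, the \(2.6\,\hbox {fb}^{-1}\) of the analysis and 400,000 generated events, each sample corresponds to \(67.05 \times 2.6 \approx 174.3\) signal events before any selection. The sketch below shows this normalisation; the number of selected Monte Carlo events used in the example (`n_selected`) is hypothetical.

```python
SIGMA_FB = 67.05       # NLO+NLL stop-pair cross section quoted in the text, in fb
LUMI_INV_FB = 2.6      # integrated luminosity of the analysis, in fb^-1
N_GENERATED = 400_000  # events per generated sample


def event_weight(sigma_fb: float, lumi_inv_fb: float, n_generated: int) -> float:
    """Per-event weight such that all generated events sum to sigma * L."""
    return sigma_fb * lumi_inv_fb / n_generated


w = event_weight(SIGMA_FB, LUMI_INV_FB, N_GENERATED)
n_selected = 1234  # hypothetical number of MC events passing a signal region
print(f"expected total: {w * N_GENERATED:.2f}, selected: {n_selected * w:.3f}")
```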

Our results are shown in Table 3 and demonstrate excellent agreement for all considered lifetimes and signal regions, with the possible exception of SR I for \(c\tau = 100\,\text {cm}\), where the deviation reaches \(150\%\). This discrepancy is, however, of little relevance, as the corresponding efficiency is tiny and the uncertainty on the CMS result exceeds \(50\%\) (owing in particular to the low statistics). Moreover, we have no information on the potentially large numerical uncertainties affecting the CMS predictions.

To complete the validation of our implementation, we derive an exclusion contour at 95% confidence level in the \((m_{{{\tilde{t}}}}, c\tau )\) plane, and compare our findings with the exclusion plot included in the CMS analysis publication. Such a comparison is complicated by two factors.

-

The analysis provides data-driven background estimates for each signal region without uncertainties, giving instead just a maximum value for the background yields.

-

The exclusion associated with scenarios featuring an intermediate stop lifetime depends significantly on a combination of the results emerging from the different signal regions, yet no correlation data are given.

The older MadAnalysis 5 analysis [37] took the approach of choosing the median value of the background with an uncertainty of \(100\%\). In the CheckMATE 2 implementation of Ref. [41], the expected backgrounds are instead taken to be equal to the maximum value possible for the background with an (arbitrary) uncertainty of \(10\%\).

In the public version of the analysis [51], we provide the same background values as in the old implementation; MadAnalysis 5 then computes the exclusion by choosing the “best” signal region according to the standard procedure, which is the most appropriate conservative choice that can be made. However, to reproduce the CMS exclusion curve, we also privately modified the statistics module of MadAnalysis 5 to draw the expected background counts from a flat distribution extending up to the maximum value, and combined the predictions for the different signal regions assuming no correlations. We show both results in Fig. 3, with the default statistical treatment labelled “Uncombined” and our modified one labelled “Combined”. The striking agreement of the combined curve with the observed CMS exclusion over the entire considered range (the CMS values were digitised from the published figure, since they are not provided publicly) further validates our analysis, but is also a reminder of how important it is for the experimental collaborations to provide correlation data. Since correlation matrices (or information on the validity of combining signal regions in an uncorrelated way) have not been provided by the CMS Collaboration, the “Combined” approach remains private, and only the “Uncombined” version is publicly available (footnote 6).

4 Displaced vertices with oppositely charged leptons (ATLAS-SUSY-2017-04)

4.1 Generalities

The ATLAS search [4] targets events featuring displaced vertices (DVs) associated with a pair of leptons (ee, \(e\mu \) or \(\mu \mu \)) with an invariant mass greater than 12 GeV. It uses run 2 data recorded in 2016, corresponding to an integrated luminosity of \(32.8\,\hbox {fb}^{-1}\) at \(\sqrt{s}=13\,\text {TeV}\). No events with a dileptonic displaced vertex satisfying all selection criteria were observed. The analysis mainly considers R-parity-violating supersymmetric scenarios in which coloured scalars (squarks \({\tilde{q}}\)) are produced and decay promptly into a long-lived heavy fermion (neutralino, \({\tilde{\chi }}^0_1\)),

The neutralino next decays into a pair of opposite-sign leptons and a neutrino,

where i, j, k denote fermion generations. The branching ratios into the different i, j, k flavours are determined by the couplings \(\lambda _{ijk}\), and the analysis focuses on two scenarios, namely models featuring either a single \(\lambda _{121}\) coupling or a single \(\lambda _{122}\) coupling. The “\(\lambda _{121}\) scenario” leads to \(\mathrm {BR}({\tilde{\chi }}^0_1 \rightarrow ee\nu ) = \mathrm {BR}({\tilde{\chi }}^0_1 \rightarrow e\mu \nu ) =0.5\), while the “\(\lambda _{122}\) scenario” leads to \(\mathrm {BR}({\tilde{\chi }}^0_1 \rightarrow \mu \mu \nu ) = \mathrm {BR}({\tilde{\chi }}^0_1 \rightarrow e\mu \nu ) =0.5\). The results are also interpreted in the framework of a heavy sequential \(Z'\) boson [67], which thus has the same couplings as the SM Z boson, but a lifetime artificially modified to make it long-lived. Such a long-lived \(Z'\) would be excluded by searches for displaced hadronic jets, but it provides an excellent and simple prototype for a two-body LLP decay. It can therefore be used to obtain signal selection efficiencies applicable to a large set of comparable models.

In contrast to the CMS analysis presented in the previous section, this analysis requires the pair of oppositely charged leptons to originate from the same DV. It also allows for several DVs per event: an event is not automatically rejected when a DV fails some of the requirements, as long as at least one other DV survives the cuts.

An extensive amount of auxiliary material for the reinterpretation of this analysis is provided on HEPData [68] and on the analysis twiki page (footnote 7). Most importantly, this material contains information about the individual efficiencies of the vetoes applied on DVs in different detector regions, and about the overall event selection efficiency after all cuts. While these have a significant impact on the final result, they are fairly model- and parameter-point-dependent, so they must be applied with caution.

4.2 Selection criteria

The event selection is composed of two types of cuts. First, a number of cuts keep or reject events as a whole. Next, the remaining cuts perform a selection of displaced vertex candidates, but do not reject events unless the number of vertex candidates is reduced to zero.

4.2.1 Event-level requirements

Here we list the event requirements as we apply them in our implementation.

Triggers: We require that at least one of three triggers, targeting muons and electrons, has fired. The muon trigger requires a muon with \({p_T>60\,\text {GeV}}\) and \(|\eta |<1.05\). Since electrons are hard to reconstruct, the analysis also relies on photon triggers, which can be fired by the electromagnetic showers of electrons. As we do not simulate the passage of electrons through the calorimeter, we are more generous and apply the same triggers to generator-level electrons. Hence, the single-photon trigger requires an electron or a photon with \(p_T>140\,\text {GeV}\), and the diphoton trigger requires two photons or electrons with \(p_T>50\,\text {GeV}\).
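This trigger emulation reduces to a logical OR of three conditions on generator-level objects. The sketch below encodes the thresholds quoted above; the `TruthObject` container is hypothetical, and no pseudorapidity requirement is applied in the photon triggers since none is stated here.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class TruthObject:
    kind: str   # "e", "mu" or "gamma"
    pt: float   # GeV
    eta: float


def event_triggered(objects: Iterable[TruthObject]) -> bool:
    """OR of the muon, single-photon and diphoton triggers.

    Electrons are treated like photons in the photon triggers, as in the text.
    """
    objects = list(objects)
    single_muon = any(o.kind == "mu" and o.pt > 60.0 and abs(o.eta) < 1.05
                      for o in objects)
    egamma_pts = [o.pt for o in objects if o.kind in ("e", "gamma")]
    single_photon = any(pt > 140.0 for pt in egamma_pts)
    diphoton = sum(pt > 50.0 for pt in egamma_pts) >= 2
    return single_muon or single_photon or diphoton
```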

Preselection: Preselection requirements are then applied to the one or two candidate particles that fired the triggers (which can be electrons, muons or photons). The constraints on their \(p_T\), \(\eta \), \(d_0\) and isolation are listed in Table 4. Electrons must have an impact parameter \(|d_0|>2\,{\text {mm}}\) unless they satisfy the loose electron isolation criteria, whereas photons, which are not associated with tracks, cannot be subject to any \(d_0\) requirement and are instead all required to be loosely isolated. Loose electrons must fulfil two different isolation criteria. Calorimeter isolation requires that the sum \(E_T^{\text {cone20}}\) of the energy deposits inside a cone of fixed size \(\varDelta R=0.2\) around each electron satisfies

Track isolation restricts the scalar sum \(p_T^{\text {varcone}}\) of the \(p_T\) of all other tracks in a cone with variable size \(\varDelta R\) around the electron track to fulfil

For photons, we enforce instead that

with a constant cone size of \(\varDelta R = 0.2\). For muons, we require a displacement of \(|d_0|>1.5\,{\text {mm}}\). Whereas in the ATLAS analysis this requirement is skipped if the muon track is poorly reconstructed, we simply apply it to all muons in our MadAnalysis 5 implementation.

Cosmic ray veto: We reject events compatible with the presence of cosmic-ray muons, i.e. events in which any of the possible lepton pairs (in case electrons are reconstructed as muons), with pseudorapidities \(\eta _{1,2}\) and azimuthal angles \(\phi _{1,2}\), satisfies

Primary vertex: After the trigger selections, the analysis requires the presence of a primary vertex. This requirement is redundant for an SFS-based analysis of signal events only, so we do not implement it.

Displaced vertex: Finally, events are required to contain tracks forming at least one displaced vertex. To allow for highly displaced vertices, the ATLAS standard tracking algorithm is supplemented with a large-radius tracking algorithm [69], which significantly relaxes the requirements imposed on the tracks. For example, it raises the upper limit on \(|d_0|\) from 10 to 300 mm and that on \(|d_z|\) from 250 to 1500 mm. The positions of the displaced vertices are obtained from the reconstructed tracks with a vertexing algorithm [70], which successively combines intersecting tracks into vertices (taking into account the uncertainties associated with their reconstruction) and merges vertices that are sufficiently close to each other. However, our simulated events already contain information about the vertex positions (which can be slightly modified if the magnetic field is accounted for through the SFS particle propagator module introduced in Sect. 2). Implementing the ATLAS algorithm would therefore be overkill, and also fruitless since we have insufficient information about the tracker efficiency. Instead, we simply create a displaced-vertex object for each external final-state particle compatible with the track requirements for DV reconstruction, i.e. tracks satisfying

A very simple merging procedure is then applied: two DV objects separated by less than 1 mm are replaced by a single DV object, to which all particles associated with the initial vertices are assigned. The position of the new DV object is arbitrarily set to that of one of the two original vertices. This should reproduce the ATLAS merging procedure well enough, assuming that the LLP decay products are either stable or sufficiently short-lived, and it avoids ending up with two displaced vertices when there should be only one.
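A minimal version of this merging step is sketched below. It makes a single greedy pass, comparing each vertex with those already kept and retaining the position of the first one, which is one possible reading of the arbitrary choice mentioned above. The data layout (position tuples in mm, lists of particle labels) is hypothetical and is not the SFS data structure.

```python
import math


def merge_vertices(vertices, max_distance=1.0):
    """Merge displaced-vertex candidates closer than max_distance (mm).

    vertices: iterable of (position, particles), position = (x, y, z) in mm.
    A vertex within max_distance of an already kept vertex is absorbed into it:
    its particles are added and the kept vertex's position is retained.
    """
    merged = []
    for position, particles in vertices:
        for dv in merged:
            if math.dist(dv["position"], position) < max_distance:
                dv["particles"].extend(particles)
                break
        else:  # no nearby vertex found: keep this one as a new DV
            merged.append({"position": position, "particles": list(particles)})
    return merged


dvs = merge_vertices([((10.0, 0.0, 0.0), ["e-"]),
                      ((10.5, 0.0, 0.0), ["e+"]),
                      ((50.0, 0.0, 0.0), ["mu-"])])
print(len(dvs), dvs[0]["particles"])  # 2 ['e-', 'e+']
```

Because the pass is greedy and order-dependent, chains of vertices each within 1 mm of the next are not fully collapsed; for the short-lived or stable decay products assumed above, this is not expected to matter.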

4.2.2 Vertex-level requirements

In a second step, the analysis strategy targets the ensemble of displaced vertices in a given event. Individual (displaced) vertices are rejected if they fail one of the following requirements.

Vertex fit: The ATLAS analysis requires a good vertex fit. As for the primary vertex requirement, we do not implement this cut directly, since we cannot reproduce the fitting algorithm employed in the analysis. However, the HEPData entry provides per-decay efficiencies, intended to be applied after all previous cuts. In our MadAnalysis 5 SFS implementation, we use them as vertex reconstruction efficiencies, as discussed further in Sect. 4.3.

Transverse displacement: Prompt decays and decays after a small displacement are removed by considering only DVs at positions \(\mathbf{x}_{\text {DV}}=(x_{\text {DV}},y_{\text {DV}},z_{\text {DV}})\) whose transverse distance from the beam axis exceeds 2 mm. Since protons are assumed to collide at the origin in the simulated Monte Carlo events, this gives
\[ r_{xy} \equiv \sqrt{x_{\text {DV}}^2 + y_{\text {DV}}^2} > 2\,{\text {mm}}. \]

Fiducial volume: Only vertices in a restricted detector volume, where track and vertex reconstruction are expected to be reliable, are taken into account. This fiducial volume is a cylinder around the beam axis with a radius of 300 mm and a length of 600 mm. We thus consider only DVs with a position satisfying
\[ \sqrt{x_{\text {DV}}^2 + y_{\text {DV}}^2} < 300\,{\text {mm}} \quad \text {and} \quad |z_{\text {DV}}| < 300\,{\text {mm}}. \]
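Together, the transverse-displacement and fiducial-volume requirements define a hollow cylinder in which DVs are kept. A direct transcription is given below, with positions in mm and the cylinder assumed to be centred on the nominal collision point, so that its 600 mm length translates into \(|z_{\text {DV}}|<300\) mm; the function name is illustrative.

```python
import math


def dv_position_accepted(x: float, y: float, z: float) -> bool:
    """Transverse displacement (r_xy > 2 mm) and fiducial volume cuts, in mm."""
    r_xy = math.hypot(x, y)
    return 2.0 < r_xy < 300.0 and abs(z) < 300.0
```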

Material veto: Interactions of primary particles with the detector material also give rise to displaced vertices [70,71,72]. The material veto therefore removes DVs located within the tracking layers or support structures, which account for about \(42\%\) of the fiducial volume. This requirement would be exceedingly difficult to simulate in the SFS framework. Fortunately, the ATLAS Collaboration has provided an efficiency map splitting the fiducial volume into a grid in \(r_{xy}\) and \(z_{\text {DV}}\), averaged over the azimuthal angle \(\phi \). This map gives the fraction of each grid element eliminated by the veto. Our MadAnalysis 5 implementation of ATLAS-SUSY-2017-04 hard-codes this map into a C++ function (instead of a fitting function given via the analysis card) and randomly discards vertices with the resulting probability.
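The random vertex removal can be sketched as a binned lookup followed by a random draw. The grid edges and veto fractions below are hypothetical placeholders rather than the ATLAS map, and treating vertices outside the map as vetoed is our assumption; the same pattern would apply to the disabled pixel modules veto.

```python
import bisect
import random

# Hypothetical 2x2 grid (NOT the ATLAS map): fraction of each cell removed
R_EDGES_MM = [0.0, 150.0, 300.0]   # bin edges in r_xy
Z_EDGES_MM = [0.0, 150.0, 300.0]   # bin edges in |z_DV|
VETO_FRACTION = [[0.5, 0.3],       # VETO_FRACTION[i_r][i_z]
                 [0.1, 0.0]]


def veto_fraction(r_xy: float, z: float) -> float:
    """Look up the vetoed fraction of the (r_xy, |z|) cell containing the DV."""
    i_r = bisect.bisect_right(R_EDGES_MM, r_xy) - 1
    i_z = bisect.bisect_right(Z_EDGES_MM, abs(z)) - 1
    if not (0 <= i_r < len(VETO_FRACTION) and 0 <= i_z < len(VETO_FRACTION[0])):
        return 1.0  # outside the map: treat as vetoed (assumption)
    return VETO_FRACTION[i_r][i_z]


def survives_veto(r_xy: float, z: float, rng: random.Random) -> bool:
    """Keep the vertex with probability 1 - (vetoed fraction)."""
    return rng.random() >= veto_fraction(r_xy, z)


rng = random.Random(42)
kept = sum(survives_veto(100.0, 50.0, rng) for _ in range(10_000))
print(kept / 10_000)  # fraction of kept vertices, expected to be near 0.5 here
```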

Disabled pixel modules veto: Similarly to the material veto, DVs located in front of disabled pixel modules are vetoed, which corresponds to \(2.3\%\) of the fiducial volume. The ATLAS Collaboration again provides efficiencies averaged over the azimuthal angle, in terms of \(r_{xy}\) and \(z_{\text {DV}}\), which we implement through a hard-coded function.

Two leptonic tracks: At least two leptonic tracks must be associated with each reconstructed vertex. In the provided cutflows (and hence in our implementation), this requirement is implemented as successive cuts on the number of leptons associated with each vertex, \(N(\ell ) \ge 1\) and \(N(\ell ) \ge 2\).

Invariant mass: The invariant mass of the sum of the momenta of the tracks associated with a displaced vertex gives a lower bound on the mass of the decaying long-lived particle. It is required to exceed 12 GeV.

Trigger and preselection matching: The trigger and preselection criteria are also required to hold for the subset of particles associated with each displaced vertex. This ensures that the energetic leptons and photons considered originate from the displaced vertices themselves. Naively, for the typical signal events targeted by the analysis, this requirement should have a negligible impact (and this is indeed what we generally observe). In contrast, for the decay of a relatively light LLP, we shall see from the cutflows that it can be very significant, presumably because the triggering photons (and possibly leptons) stem from initial-state or final-state radiation.

Oppositely charged lepton pair: Each displaced vertex is required to include at least one pair of leptons (ee, \(\mu \mu \) or \(e\mu \)) with opposite electric charges.
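The lepton-related vertex requirements above (at least two leptons, an invariant mass of the associated tracks above 12 GeV, and an oppositely charged lepton pair) can be sketched for a single vertex as follows. The `Track` container is hypothetical, and the invariant mass is computed from the summed four-momenta of all tracks attached to the vertex, using whatever energies are stored for them.

```python
import math
from dataclasses import dataclass
from itertools import combinations


@dataclass
class Track:
    e: float      # energy, GeV
    px: float     # momentum components, GeV
    py: float
    pz: float
    charge: int
    is_lepton: bool


def invariant_mass(tracks) -> float:
    """Invariant mass of the summed four-momenta of the tracks."""
    e = sum(t.e for t in tracks)
    px = sum(t.px for t in tracks)
    py = sum(t.py for t in tracks)
    pz = sum(t.pz for t in tracks)
    return math.sqrt(max(e * e - px * px - py * py - pz * pz, 0.0))


def vertex_passes_lepton_cuts(tracks, m_min: float = 12.0) -> bool:
    leptons = [t for t in tracks if t.is_lepton]
    if len(leptons) < 2:                    # N(l) >= 2
        return False
    if invariant_mass(tracks) <= m_min:     # m > 12 GeV
        return False
    # At least one pair of leptons with opposite electric charges
    return any(a.charge * b.charge < 0 for a, b in combinations(leptons, 2))


mu_minus = Track(10.0, 10.0, 0.0, 0.0, -1, True)
mu_plus = Track(10.0, -10.0, 0.0, 0.0, +1, True)
print(invariant_mass([mu_minus, mu_plus]))             # 20.0
print(vertex_passes_lepton_cuts([mu_minus, mu_plus]))  # True
```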

4.3 Efficiencies

As described above, three types of efficiencies are provided in the HEPData repository for this analysis. In the auxiliary file describing the recasting material, the user is moreover instructed to apply essentially the cuts described above and then compute a weight for each vertex based on the material veto and disabled pixel veto efficiencies. This yields a per-decay acceptance, and next the per-decay efficiency can be computed by using the provided maps.

The per-event efficiency can be computed by a simple formula. As already mentioned, in our MadAnalysis 5 implementation we do not apply a reweighting but instead randomly eliminate vertices with probability given by the veto weight. Furthermore, we apply the per-decay efficiency as a vertex reconstruction efficiency. This is somewhat simpler to implement (at the expense of requiring more statistics) and obviates the need for a formula to combine multiple vertices per event, but should otherwise be equivalent.
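The equivalence claimed above can be checked numerically in a simple setting. Assuming that vertices are kept independently with probabilities \(\varepsilon _i\) (our assumption; the formula of the recasting material is not reproduced here), the probability that an event retains at least one vertex is \(1-\prod _i(1-\varepsilon _i)\). Random elimination reproduces this on average, at the cost of statistical fluctuations, which is why it requires more simulated events.

```python
import math
import random


def per_event_efficiency(vertex_effs) -> float:
    """Probability that at least one independently kept vertex survives."""
    return 1.0 - math.prod(1.0 - eps for eps in vertex_effs)


def event_survives(vertex_effs, rng: random.Random) -> bool:
    """Random-elimination approach: keep vertex i with probability eps_i."""
    return any(rng.random() < eps for eps in vertex_effs)


effs = [0.3, 0.5]
rng = random.Random(7)
n_trials = 100_000
mc = sum(event_survives(effs, rng) for _ in range(n_trials)) / n_trials
print(per_event_efficiency(effs), mc)  # analytic value vs. Monte Carlo estimate
```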

While the material and disabled pixel veto weights are expected to be largely model-independent (except perhaps for models with a strong azimuthal-angle dependence and multiple DVs per event), the efficiency information convolves trigger/preselection and vertex reconstruction efficiencies, and is therefore highly model-dependent. The HEPData material contains sets of efficiencies binned in two or three variables, for two models and several masses.

The first model is a toy sequential \(Z'\) model in which the extra gauge boson has the same couplings as the SM Z boson, but a different mass. Such a model would be excluded by searches for dijet resonances, but the results/efficiencies can be applied to any model having events with a single LLP decaying to a lepton pair. Efficiencies are given for fixed masses \(m(Z')\) of 100, 250, 500, 750 and 1000 GeV, with a binning in the transverse distance \(r_{xy}\) and the lepton pair transverse momentum \(p_T(\ell \ell ')\).

The second model is an R-parity-violating supersymmetric (RPV SUSY) model in which a heavy fermion (the neutralino) decays into two leptons and a neutrino. This affects the kinematics of the visible lepton pair, whose four-momentum no longer equals that of the neutralino since the neutrino carries part of it away. For this reason, the mass of the neutralino cannot be determined directly from the momenta of the visible lepton pair. Unlike the \(Z'\) efficiencies, which depend on the mass of the long-lived \(Z'\) but are only given for fixed \(m(Z')\) values, the RPV SUSY efficiencies depend on the invariant mass of the lepton pair \(m(\ell \ell ')\), without any assumption on the neutralino mass. They are therefore binned in the three variables \(r_{xy}\), \(p_T(\ell \ell ')\) and \(m(\ell \ell ')\).

We have implemented all of the available efficiencies in two versions, one for two-body LLP decays and one for three-body decays, so users must choose the version appropriate for their model. As a test, we applied the RPV efficiencies to the \(Z'\) model and found deviations of about 15%. Some caution is nonetheless required. Firstly, there can be models with the same signature but different kinematics, for example when the invisible particle escaping a vertex is massive rather than a massless neutrino; no efficiencies are available for such cases. Secondly, we do not know how much of the information corresponds to trigger/preselection efficiencies as compared with vertex reconstruction. Consequently, if the production mechanism changes, an unknown (but most likely of the order of tens of percent) error would be induced.

4.4 Validation

A significant amount of material that can be used to validate the implementation is provided, both in the analysis note and as auxiliary online information. This includes cutflows, plots (with tabulated data on HEPData) of per-decay and per-event signal efficiencies, and excluded cross sections. One might therefore expect the final efficiencies to be reproducible almost exactly, at least for the example benchmarks.

For the \(Z'\) toy model, there are cutflows provided for six different event samples, in which the \(Z'\) boson has a mass of either 100 GeV or 1000 GeV, and decays exclusively into one of the three dilepton final states ee, \(e\mu \) or \(\mu \mu \).

For the RPV SUSY model, the cutflows are provided for event samples combining the \(\lambda _{121}\) and \(\lambda _{122}\) scenarios, where \(\mathrm {BR}({\tilde{\chi }}_1^0 \rightarrow ee\nu ) = \mathrm {BR}({\tilde{\chi }}_1^0 \rightarrow e\mu \nu ) = \mathrm {BR}({\tilde{\chi }}_1^0 \rightarrow \mu \mu \nu ) = \frac{1}{3}\). As for the \(Z'\) model, the material contains separate cutflows for each neutralino decay mode (ee, \(e\mu \) and \(\mu \mu \) final states), an event being counted in a given cutflow if it contains at least one vertex associated with the corresponding lepton pair. Two different neutralino lifetimes are considered for a single configuration of the squark and neutralino masses, which again leads to six cutflows. In addition, the analysis paper provides plots of the selection efficiencies as a function of the LLP lifetime for several squark and neutralino masses. To validate our implementation, we performed a scan of the RPV SUSY model for two configurations of the squark and neutralino masses over a wide range of neutralino lifetimes, considering the \(\lambda _{121}\) and \(\lambda _{122}\) couplings separately. The parton-showered and hadronised samples were then passed to our MadAnalysis 5 implementation of the analysis to determine the selection efficiencies, including detector effects through the SFS framework. The code is publicly available [52] and has been used with the aim of reproducing some of the ATLAS results presented in Figs. 3–5 of Ref. [4].

4.4.1 Event generation

In light of the available cutflows, we used Pythia 8 (v8.244) [62] to generate six separate samples of 20,000 events describing the production of a sequential \(Z'\) boson and its ee, \(e\mu \) or \(\mu \mu \) decays, for both \(m(Z')=100\,\text {GeV}\) and 1000 GeV. In all cases, the proper decay length was fixed to \(c\tau = 250\,{\text {mm}}\). These samples could be passed directly to MadAnalysis 5.

Various samples of 20,000 events associated with long-lived neutralino production in the RPV SUSY model were generated with MG5_aMC [73] (v2.8.3.2), used in conjunction with Pythia 8 (v8.244) for parton showering and hadronisation. The hard-scattering process (4.1) is simulated by merging (footnote 8) matrix elements containing up to two additional partons via the MLM scheme [74,75,76], as modified by the internal MG5_aMC interface to Pythia 8. These matrix elements rely on the Minimal Supersymmetric Standard Model (MSSM) UFO model shipped with MG5_aMC [77, 78], are convolved with the LO set of NNPDF 2.3 [79] parton distribution functions, and include matching cuts derived from a matching scale set to one fourth of the squark mass. The parameters defining the benchmark scenarios considered (including decay tables, so that Pythia 8 can handle squark and neutralino decays) are taken from the parameter card provided on HEPData, so that the analysis cutflows can be compared on a cut-by-cut basis. Our validation employs two samples combining all neutralino decay modes with equal branching ratios \(\mathrm {BR}({\tilde{\chi }}_1^0\rightarrow ee\nu )=\mathrm {BR}({\tilde{\chi }}_1^0\rightarrow e\mu \nu ) =\mathrm {BR}({\tilde{\chi }}_1^0\rightarrow \mu \mu \nu )=\frac{1}{3}\). The events are then classified at the level of the analysis code, depending on the types of leptons originating from the displaced vertices present.

For the validation of the efficiencies, the \(\lambda _{121}\) and \(\lambda _{122}\) couplings are considered separately. For each of them, we generated samples of 50,000 events for two configurations of the masses (\(m({\tilde{q}})=700\,\text {GeV}\), \(m({\tilde{\chi }}_1^0)=50\,\text {GeV}\) and \(m({\tilde{q}})=1600\,\text {GeV}\), \(m({\tilde{\chi }}_1^0)=1300\,\text {GeV}\)) and 21 different neutralino lifetimes ranging from \(c\tau =1\,{\text {mm}}\) to \(c\tau =10{,}000\,{\text {mm}}\) (i.e. 84 samples in total).
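The bookkeeping of this validation grid can be sketched as follows. The text does not state how the 21 lifetimes are spaced; the sketch assumes a log-uniform grid with five points per decade between 1 mm and 10,000 mm (which indeed yields 21 values), so the individual \(c\tau \) values are a hypothetical reconstruction and only the sample count is taken from the text.

```python
from itertools import product

# ASSUMPTION: the 21 lifetimes are log-uniform, five points per decade,
# from 1 mm to 10,000 mm. The text only gives the endpoints and the count.
N_LIFETIMES = 21
LOG10_MIN, LOG10_MAX = 0.0, 4.0

# Mass configurations (m_squark, m_neutralino) in GeV, as quoted in the text.
MASS_POINTS = [(700.0, 50.0), (1600.0, 1300.0)]
COUPLINGS = ["lambda121", "lambda122"]


def lifetime_grid(n=N_LIFETIMES, lo=LOG10_MIN, hi=LOG10_MAX):
    """Return n proper decay lengths c*tau (in mm), equally spaced in log10."""
    step = (hi - lo) / (n - 1)
    return [10 ** (lo + i * step) for i in range(n)]


def sample_list():
    """All (coupling, mass point, c*tau) combinations to be generated."""
    return list(product(COUPLINGS, MASS_POINTS, lifetime_grid()))


if __name__ == "__main__":
    print(len(sample_list()))  # 2 couplings x 2 mass points x 21 lifetimes = 84
```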

4.4.2 Cutflows

We used the cutflow tables provided for both models as guidelines during the implementation, with the intention of reproducing the efficiencies of the individual intermediate cuts of the analysis, and not just the final efficiencies. However, the per-decay efficiencies provided are not simple reconstruction efficiencies, as they also fold in the preselection. Moreover, pile-up interactions can produce vertices displaced along the beamline relative to the hard-scattering vertex, and could thus have a significant impact. It therefore quickly became apparent that reproducing the cutflows in detail would not be possible.

Overall selection efficiencies (top) and upper limits on the squark-antisquark production cross section (bottom) obtained with our MadAnalysis 5 implementation (MA5), in comparison with the limits (with uncertainties) found by the ATLAS Collaboration. We consider two configurations of squark and neutralino masses in the \(\lambda _{121}\) (left) and \(\lambda _{122}\) (right) coupling scenarios

To test the impact of pile-up (which is not yet supported in the SFS framework), we implemented the analysis in HackAnalysis [40] and compared the cutflows. This improved the agreement for the intermediate steps, and we observed that pile-up events were very efficiently removed by the time the two-lepton cut is applied. However, because the preselection is folded into the provided per-decay efficiencies, and because we have no efficiency information for the reconstruction of displaced vertices originating from hadronic decays, we could not reproduce every individual step of the cutflows. Nevertheless, our final per-event efficiencies agree very well with those provided by the ATLAS Collaboration (both with and without pile-up). The complete set of our cutflow comparisons is given in Appendix C.

4.4.3 Overall selection efficiencies and exclusion limits

To further validate our implementation, we compared the lifetime dependence of the selection efficiency, as well as the upper limits on the squark pair-production cross section, for different choices of squark and neutralino masses. We considered the \(\lambda _{121}\) and \(\lambda _{122}\) couplings separately, in accordance with the information available in the HEPData entry of the analysis. As already mentioned, we chose two configurations of squark and neutralino masses and scanned over the lifetime. Results for the overall selection efficiencies and cross section upper limits are shown in Fig. 4.
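The two rows of Fig. 4 are tied together by the luminosity: for a fixed upper limit \(N_{\mathrm {UL}}\) on the number of signal events, the cross-section limit scales as \(\sigma _{\mathrm {UL}} = N_{\mathrm {UL}}/({\mathcal {L}}\,\varepsilon )\), so any disagreement in the efficiency propagates inversely into the limit. The sketch below illustrates this in the simplest case of zero observed events and negligible background, where the 95% CL Poisson limit is \(N_{\mathrm {UL}}=-\ln 0.05\approx 3\). This is a simplification and not the statistical treatment behind Fig. 4; the function names, luminosity and efficiency values are illustrative placeholders.

```python
import math


def poisson_upper_limit_zero_obs(cl=0.95):
    """Upper limit on the signal yield s for zero observed events and no background.

    Solves P(n = 0 | s) = exp(-s) = 1 - cl for s.
    """
    return -math.log(1.0 - cl)


def cross_section_upper_limit(efficiency, lumi_fb, n_up):
    """Upper limit on sigma (in fb) from the signal efficiency and luminosity (fb^-1).

    sigma_UL = N_UL / (L * eps): the limit scales inversely with the efficiency.
    """
    if efficiency <= 0.0:
        return math.inf  # no sensitivity at all
    return n_up / (lumi_fb * efficiency)


if __name__ == "__main__":
    n_up = poisson_upper_limit_zero_obs()
    # Placeholder inputs: 30 fb^-1 and a 10% selection efficiency.
    print(cross_section_upper_limit(0.1, 30.0, n_up))
```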

We observe that the MadAnalysis 5 and ATLAS results are in relatively good agreement up to proper decay lengths of 1000 mm, beyond which the curves differ. For the \(m({\tilde{q}})=700\,\text {GeV}, \) \(m({\tilde{\chi }}_1^0)=50\,\text {GeV}\) case at very large lifetimes (above 5000 mm), the MadAnalysis 5 curves exhibit kinks because the very low signal efficiency leaves too few simulated events passing the selection. We did not simulate more events to improve them, however, for a much more serious reason: the ATLAS curves for \(m({\tilde{q}})=1600\,\text {GeV}\) and \(m({\tilde{\chi }}_1^0)=1300\,\text {GeV}\) (blue) feature a noticeable kink above \(c\tau =1000\,{\text {mm}}\), which is particularly striking in the \(\lambda _{121}\) scenario. No reasonable explanation could be found in the selection criteria of the analysis. It is worth mentioning that, according to the analysis note, the ATLAS Collaboration did not generate samples for proper decay lengths above 1000 mm, but combined different samples in the \(c\tau \) range between 10 mm and 1000 mm. To use these samples for other LLP lifetimes, events were reweighted to account for the different probability of the same event occurring in a sample associated with a different lifetime. For the validation of the MadAnalysis 5 implementation of the analysis, we instead generated a separate sample for each value of \(c\tau \). There should nevertheless be no problem with the reweighting procedure (provided sufficiently many events are generated), and we checked that it also works for us.
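A common way to perform such a lifetime reweighting is to weight each event by the ratio of the exponential decay densities of its LLPs under the target and generated lifetimes. Whether ATLAS used exactly this form is an assumption; the sketch below only illustrates the standard technique, with a hypothetical function name. The inputs are the proper decay lengths \(\ell = ct\) of all LLPs in the event (the lab-frame decay length divided by \(\beta \gamma \)).

```python
import math
from typing import Sequence


def lifetime_weight(proper_lengths_mm: Sequence[float],
                    ctau_gen_mm: float, ctau_target_mm: float) -> float:
    """Event weight mapping a sample generated with ctau_gen onto ctau_target.

    Each LLP contributes p(l; ctau_target) / p(l; ctau_gen), where
    p(l; c tau) = exp(-l / c tau) / c tau is the proper decay-length density.
    The event weight is the product over all LLPs (independent decays).
    """
    w = 1.0
    for l in proper_lengths_mm:
        w *= (ctau_gen_mm / ctau_target_mm) * math.exp(
            l / ctau_gen_mm - l / ctau_target_mm)
    return w


if __name__ == "__main__":
    # Two LLPs with proper decay lengths of 20 mm and 150 mm, generated at
    # c*tau = 100 mm and reweighted to c*tau = 300 mm.
    print(lifetime_weight([20.0, 150.0], 100.0, 300.0))
```

When the target lifetime exceeds the generated one, the weight grows exponentially with \(\ell \), so the reweighted prediction relies on a few rare, highly weighted long-lived decays. This is the reason the procedure requires sufficiently large generated samples.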

The fiducial volume of the analysis only extends up to a transverse distance of 300 mm from the beam line. For lifetimes above 1000 mm, the decays of a heavy particle within the fiducial volume should be roughly uniformly distributed in decay length from the interaction point (with efficiencies that decrease at larger radii), and most LLPs decay outside it, so that there should be very few events with two DVs. Increasing the LLP lifetime beyond 1000 mm should therefore, to a good approximation, simply rescale the signal efficiency by a factor inversely proportional to the lifetime. This is what is observed in the per-decay efficiencies, and what we observe in our results (although this is hard to read from the plots). Moreover, when we combine the per-decay efficiencies provided by the ATLAS Collaboration into a per-event efficiency according to their prescription, the results also agree with our code. In contrast, the results in the official plots appear dramatically reduced, differing by a factor of 5 at \(c\tau = 10{,}000\,{\text {mm}}\).
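The expected large-lifetime behaviour follows from the exponential decay law: the probability of decaying within a radius \(R\) is \(1-e^{-R/(\beta \gamma c\tau )}\approx R/(\beta \gamma c\tau )\) once \(\beta \gamma c\tau \gg R\), while the probability of two DVs is suppressed quadratically. The sketch below checks this numerically, assuming a fixed boost \(\beta \gamma = 1\) and a flight path along the transverse plane, both hypothetical simplifications. The per-event combination rule "at least one of two independent decays passes" is also an assumption and not necessarily the ATLAS prescription.

```python
import math


def decay_probability(r_min_mm, r_max_mm, ctau_mm, beta_gamma):
    """Probability that an LLP decays between r_min and r_max (mm) along its path.

    Exponential decay law with mean lab-frame decay length beta*gamma*c*tau.
    """
    lam = beta_gamma * ctau_mm
    return math.exp(-r_min_mm / lam) - math.exp(-r_max_mm / lam)


def per_event_efficiency(eps1, eps2):
    """Probability that at least one of two independent LLP decays is selected.

    ASSUMED combination rule, for illustration only.
    """
    return 1.0 - (1.0 - eps1) * (1.0 - eps2)


if __name__ == "__main__":
    # Hypothetical inputs: beta*gamma = 1, fiducial radius of 300 mm.
    p_1e4 = decay_probability(0.0, 300.0, 1.0e4, 1.0)
    p_1e5 = decay_probability(0.0, 300.0, 1.0e5, 1.0)
    print(p_1e5 / p_1e4)  # close to 0.1: efficiency scales like 1/(c*tau)
    print(p_1e4 ** 2, per_event_efficiency(p_1e4, p_1e4))  # two-DV vs >= one DV
```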

We were not able to find an explanation for this phenomenon. One possibility is that events with an additional displaced vertex outside the fiducial region (or even far beyond it) are vetoed by the analysis, without this being documented anywhere. We reported the issue to the ATLAS SUSY conveners, who have so far not been able to provide a solution. The analysis has been taken over by a different group, who will investigate this matter once the existing analysis code has been integrated into a new analysis framework, with a view to performing a new analysis with more data. Unfortunately, their conclusions were not yet available at the time of writing, and we therefore regard the results of our analysis for lifetimes above 1000 mm as unvalidated.

4.5 Long-lived vector-like leptons

4.5.1 Generalities

To demonstrate the exclusion potential of the MadAnalysis 5 implementation of the ATLAS LLP search discussed in this section, we apply it to an extension of the SM containing a vector-like pair of lepton doublets. The phenomenology of such models at the LHC is described in, e.g., Ref. [80]. To obtain the signature of interest, and unlike in that reference, we assume that the new doublet pair \(L', {\overline{L}}'\) (in two-component spinor notation, where \(L'\) has the same quantum numbers as the left-handed SM lepton doublet L) only mixes with the electron. The corresponding Lagrangian reads

where H is the Higgs doublet, \(e_R^j\) the set of right-handed lepton \(SU(2)_L\) singlets and \(y_e^{ij}\) the elements of the usual lepton Yukawa matrix, which we take to be diagonal. The above model is a relatively simple extension of the SM with only two additional parameters, namely the vector-like lepton (VLL) mass \(M_{\tau '}\) (which is equal for the charged and neutral states at leading order) and the mixing parameter \(\epsilon \). We implemented the model in the Sarah package [81, 82], which we used to generate a custom SPheno code [83] to compute the particle spectrum and the decay tables. Provided the mixing parameter \(\epsilon \) is sufficiently small, the new physical leptons \(\tau '\) and \(\nu '\) are both long-lived and can be produced at colliders via the processes

The neutral vector-like lepton (VLL) \(\nu '\) then decays into an electron and a W boson, the latter in turn being able to decay promptly into an electron or a muon and a neutrino. This decay chain generates a displaced vertex from which two leptons and a neutrino originate, exactly as in the displaced \({\tilde{\chi }}_1^0\) decays studied in the RPV SUSY model. This justifies the use of the corresponding efficiencies to assess the constraints on the VLL doublet model.

The behaviour of the new charged lepton \(\tau '\) is more complicated. At one loop, electroweak symmetry-breaking effects split the neutral and charged states. The charged \(\tau '\) can consequently decay into a \(\nu '\) and an off-shell W boson, exactly as for the wino model considered in Sect. 5. Whereas this decay yields a disappearing-track signature irrespective of the value of \(\epsilon \), the \(\tau '\rightarrow Ze\) channel can dominate depending on \(\epsilon \). The \(\tau '\) state may then have a lifetime similar to that of the \(\nu '\) state, and yield DVs from which three leptons emerge when the Z boson decays leptonically. We shall however ignore such a long-lived \(\tau '\) for the following reasons. If the off-shell W decay dominates, the accurate calculation of the decay to pions (and, to a lesser extent, the treatment of small mass splittings) is not yet automated in Sarah (otherwise we could consider a combination with the analysis of the next section). Moreover, if the Ze decay dominates, we have no efficiencies for a DV with three charged leptons and do not know how such a vertex would be treated in the analysis (even though such vertices should only make up a small fraction of the signal rate).

4.5.2 Bounds from ATLAS-SUSY-2017-04

We simulate samples of 50,000 signal events using MG5_aMC [73] (v2.8.3.2) together with Pythia 8 [62] (v8.244), relying on the UFO model files [78] generated by Sarah [84]. The processes considered are those given in Eq. (4.11), and the associated matrix elements are allowed to include up to two additional hard jets. They are merged according to the MLM prescription [74,75,76] with a matching scale set to \(M_{\tau '}/4\), and convolved with the NNPDF 2.3 LO [79] set of parton distribution functions. We rescaled the resulting signal cross sections with different K-factors (\(\nicefrac {2}{3}\), 1 and \(\nicefrac {3}{2}\)) to parameterise our ignorance of higher-order corrections.

In order to assess the impact of the ATLAS-SUSY-2017-04 analysis on the model, we first trade the \(\epsilon \) mixing parameter for the proper decay length \(c\tau \) of the neutral VLL \(\nu '\). Next, we perform a scan in the \((M_{\tau '}, c\tau )\) plane and, for each point, evaluate the resulting constraints with our implementation by means of the \(\hbox {CL}_s\) method [85]. The grid scan covers mass values below 1600 GeV in steps of 50 GeV, and proper decay lengths \(c\tau \) ranging from 1 to 10,000 mm with an equal spacing of 0.5 on a logarithmic scale (i.e. \(\log _{10}(c\tau /\text {mm})\) increased in steps of 0.5).
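The limits in Fig. 5 come from the \(\hbox {CL}_s\) machinery of MadAnalysis 5; the sketch below is only a simplified stand-alone illustration for a single counting bin without systematic uncertainties. It builds the logarithmic \(c\tau \) grid described above (nine values from 1 to 10,000 mm), computes the expected signal yield as cross section times K-factor times luminosity times efficiency, and tests exclusion via \(\hbox {CL}_s = \hbox {CL}_{s+b}/\hbox {CL}_b < 0.05\). All function names and numerical inputs are illustrative placeholders.

```python
import math


def poisson_cdf(n, mu):
    """P(N <= n) for a Poisson-distributed N with mean mu."""
    term = math.exp(-mu)  # P(N = 0)
    total = term
    for k in range(1, n + 1):
        term *= mu / k  # P(N = k) obtained from P(N = k - 1)
        total += term
    return total


def cls(n_obs, b, s):
    """CLs = CL_{s+b} / CL_b for one counting bin, without systematic uncertainties."""
    return poisson_cdf(n_obs, s + b) / poisson_cdf(n_obs, b)


def is_excluded(n_obs, b, s, cl=0.95):
    """A signal hypothesis is excluded at confidence level cl if CLs < 1 - cl."""
    return cls(n_obs, b, s) < 1.0 - cl


def signal_yield(sigma_fb, k_factor, lumi_fb, efficiency):
    """Expected signal events: cross section x K-factor x luminosity x efficiency."""
    return sigma_fb * k_factor * lumi_fb * efficiency


def ctau_grid(lo=0.0, hi=4.0, step=0.5):
    """c*tau values in mm, equally spaced in log10 (1 mm ... 10,000 mm)."""
    n = int(round((hi - lo) / step)) + 1
    return [10 ** (lo + i * step) for i in range(n)]


if __name__ == "__main__":
    # Placeholder numbers for a single grid point.
    s = signal_yield(sigma_fb=2.0, k_factor=1.0, lumi_fb=30.0, efficiency=0.05)
    print(len(ctau_grid()), is_excluded(n_obs=0, b=0.5, s=s))
```

For zero observed events, \(\hbox {CL}_s = e^{-s}\) independently of the background, so a signal of about three expected events is excluded; with observed events or systematic uncertainties the full treatment is required.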

The results are shown in Fig. 5, where the region with \(c\tau >1000\,{\text {mm}}\) is highlighted to signal that the associated predictions cannot be trusted owing to the unsatisfactory validation (see Sect. 4.4). No constraints are found for \(\nu '\) masses below 200 GeV. Moreover, we observe that a neutral VLL \(\nu '\) with a decay length \(c\tau \) below approximately 2 mm is only relatively weakly constrained, its mass being required to lie above roughly 300 GeV. For longer lifetimes, however, this lower bound rises above 700 GeV, comparable to the best constraints on long-lived weakly coupled particles.

Regions of the VLL model that are excluded at 95% confidence level. The results are shown in the \((M_{\tau '}, c\tau )\) plane for different K-factors applied to the signal rate. The red-shaded region covers decay lengths above \(c\tau =1000\,{\text {mm}}\), in which there are doubts about the validity of the implementation

5 Disappearing tracks (CMS-EXO-19-010)

5.1 Generalities

Heavy long-lived charged particles produced at the LHC leave hits in the tracking system of the detectors, producing a track that “disappears” if they decay within the tracker into heavy invisible states. This classic long-lived particle signature is well motivated by models including heavy electroweak multiplets whose component states are only split in mass after electroweak symmetry breaking, and whose lightest state is the neutral one. Canonical examples include supersymmetric winos and higgsinos [40, 86,87,88,89,90,91], and Minimal Dark Matter [92, 93].

The ATLAS Collaboration published a search [21] for new physics manifesting through this signature, based on an integrated luminosity of 36.1 \({{\mathrm{fb}}}^{-1}\), and provided substantial recasting material together with the analysis results. The latter includes a pseudocode, and efficiencies for “strong” and “electroweak” LLP production scenarios that convolve a great deal of information about tracklet reconstruction and selection cuts. This material formed the basis of a code published in [39] and available on the LLP Recasting Repository [38], and has also been incorporated into CheckMATE 2 [41]. Recently, a conference note [22] analysing the full run 2 dataset of 136 \({{\mathrm{fb}}}^{-1} \) appeared, followed by the full paper [22], which was released while this paper was under review. It would be very interesting in the future to recast that search and compare it with the one presented here.

On the other hand, the final CMS analysis of the entire LHC run 2 dataset was already available [23, 24] in early 2020, where the first analysis [23] comprised 38.4 \({{\mathrm{fb}}}^{-1}\) of data from 2015 and 2016, and the second one [24] added 101 \({{\mathrm{fb}}}^{-1}\) from 2017 and 2018. The recasting material consists of cutflows and acceptances for a wide range of LLP masses and lifetimes, but no efficiencies. Moreover, this search is particularly challenging to recast because the signal regions and many of the cuts are defined in terms of the number of tracker layers that are hit, so that the results depend on knowledge of the tracker geometry and on a method for reproducing the track reconstruction efficiency. This was nevertheless achieved in [40] and released as a public code.

In the rest of this section, we present the implementation of the code from [40] in the SFS framework of MadAnalysis 5.

5.2 Technical details about the SFS implementation