Abstract

This article reviews the progress in our understanding of the reconstruction of the bulk spacetime in the holographic correspondence from the dual field theory, including an account of how these developments have led to the reproduction of the Page curve of the Hawking radiation from black holes. We review quantum error correction and relevant recovery maps with toy examples based on tensor networks, and discuss how quantum error correction provides the desired framework for bulk reconstruction, in which apparent inconsistencies with properties of the operator algebra in the dual field theory are naturally resolved. We emphasize the importance of understanding the modular flow in the dual field theory. We discuss how the state-dependence of the reconstruction of black hole microstates can be formulated in the framework of quantum error correction with inputs from extremal surfaces, along with a quantification of the complexity of encoding bulk operators. Finally, we motivate and discuss a class of tractable microstate models of black holes which can illuminate how the black hole complementarity principle can emerge operationally without encountering information paradoxes, and which provide new insights into the generation of desirable features of the encoding into the Hawking radiation.



1 Introduction

The AdS/CFT correspondence [1,2,3] is the most well understood example of the holographic emergence of spacetime and gravity. The heuristic reasoning for the holographic principle of gravity is simply that if we stuff enough matter into a box, it will eventually collapse to form a black hole whose maximum possible size is that of the box [4]. The maximal entropy of a theory of gravity inside the box would then be the Bekenstein–Hawking entropy [5,6,7,8] of the black hole whose horizon is of the size of the box, which is explicitly A/4G, i.e. one quarter of the area A of the horizon measured in Planck units (we set \(\hbar = c =1\)). This heuristic argument relies on a semi-classical description of gravity and should be approximately correct if the box is very large, so that gravity is weak even at the horizon of a black hole as large as the box. More precise versions of this argument, incorporating covariance under diffeomorphisms and inputs from quantum information theory [9,10,11], have been instrumental in providing a concrete foundation for the holographic principle, which states that a quantum theory of gravity in a spacetime with appropriate asymptotic boundary conditions can be described in terms of a (non-gravitational) quantum many-body system living at the boundary. The AdS/CFT correspondence is a concrete instance of a holographic duality between quantum (super)gravity with asymptotically anti-de Sitter (AdS) boundary conditions (i.e. with constant negative curvature near the boundary) and a (super)conformal gauge theory.
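To get a feel for the size of the Bekenstein–Hawking entropy, the following sketch evaluates \(S/k_B = A c^3/(4G\hbar)\) for a Schwarzschild black hole in SI units, restoring \(\hbar\) and c. The constants are CODATA values and the solar mass is approximate; the helper name is our own. For one solar mass the result is of order \(10^{77}\).

```python
import math

# CODATA values in SI units
G = 6.67430e-11          # m^3 kg^-1 s^-2
HBAR = 1.054571817e-34   # J s
C = 2.99792458e8         # m / s
M_SUN = 1.98847e30       # kg (approximate solar mass)

def schwarzschild_entropy(mass_kg):
    """Bekenstein-Hawking entropy S / k_B = A c^3 / (4 G hbar) of a Schwarzschild
    black hole, with horizon area A = 4 pi r_s^2 and r_s = 2 G M / c^2."""
    r_s = 2.0 * G * mass_kg / C**2
    area = 4.0 * math.pi * r_s**2
    return area * C**3 / (4.0 * G * HBAR)

print(f"S / k_B for one solar mass: {schwarzschild_entropy(M_SUN):.2e}")
print(f"S / k_B for ten solar masses: {schwarzschild_entropy(10 * M_SUN):.2e}")
```

Since \(A \propto M^2\), the entropy grows quadratically with the mass, i.e. with the horizon area rather than with the enclosed volume.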

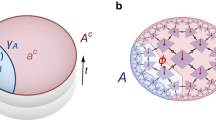

The AdS/CFT correspondence originates from the description of D-branes in open and closed string theory, and is a consequence of open–closed string duality [1] (see [12] for a very accessible account). In closed string theory, coincident Dp branes are solitonic solutions of ten (or eleven) dimensional supergravity with \(AdS_{p+2}\times X\) throats, where X is a compact space of \(8-p\) (or \(9-p\)) dimensions. In open string theory, these coincident branes are \(p+1\) spacetime dimensional defects (extended over p spatial dimensions), whose low energy descriptions are given by non-Abelian gauge theories living on the worldvolume. A decoupling limit (see Fig. 1) isolates the gauge theory from the remaining stringy degrees of freedom in the open string description, whereas in the closed string description, the excitations living in the \(AdS_{p+2}\times X\) near-horizon geometry decouple from those in the rest of the spacetime. It follows that the closed string theory (quantum gravity) in \(AdS_{p+2}\times X\) can be described by a specific \((p+1)\)-dimensional gauge theory (Footnote 1). More examples of such holographic (a.k.a. gauge/gravity) dualities in various dimensions can be obtained from similar string-theoretic setups, including those where the gauge theories are non-conformal (and the asymptotic boundary conditions of gravity are not AdS). We also obtain a precise mapping between the gauge coupling \(g_{YM}\) and the rank N of the gauge group on the one hand, and the parameters specifying the boundary conditions of the quantum gravity theory on the other, e.g. L, the asymptotic curvature radius of AdS, and the size of the internal manifold X. The AdS/CFT correspondence and its generalizations are often called gauge/gravity dualities [13].

Fig. 1. An illustration of how the AdS/CFT correspondence emerges from two dual descriptions of coincident D-branes in open and closed string theory. These figures are from [14]

The most remarkable aspects of these dualities follow from two features of the holographic dictionary. First, the ’t Hooft coupling \(\lambda = g_{YM}^2 N\) is related to a positive power of \(L/l_s\) (\(l_s\) is the string length), which controls the corrections to a two-derivative Einsteinian gravity theory arising from the finite length of the string. Second, N, the rank of the gauge group, is related to a positive power of \(L/l_p\) (\(l_p\) is the Planck length), which controls the quantum corrections to classical gravity. As a result, when both N and \(\lambda \) are large, both \(l_s\) and \(l_p\) are small in units where the asymptotic curvature radius L is set to unity, so that both stringy and quantum effects are suppressed. Thus the dual description is, to a very good approximation, just a classical gravity theory. The duality therefore implies that a strongly interacting many-body system which evades a quasi-particle description can be described by a classical Einsteinian theory of gravity in one higher dimension, with a negative cosmological constant and minimally coupled to a few fields. This has had an enormous impact on our understanding of the collective description of many strongly interacting systems, including strongly correlated quantum materials [15], non-perturbative dynamics of QCD [16, 17] and its various phases such as the quark-gluon plasma [18].
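For the best known example, \({\mathcal {N}}=4\) super Yang–Mills theory dual to \(AdS_5\times S^5\), these relations can be made explicit. The sketch below assumes the common convention \(g_{YM}^2 = 4\pi g_s\), so that \(L^4/\alpha'^2 = \lambda\) with \(l_s = \sqrt{\alpha'}\), and the standard relation \(G_5 = \pi L^3/(2N^2)\), taking \(l_p = G_5^{1/3}\) as the five-dimensional Planck length (with \(\hbar = 1\)). Numerical prefactors depend on conventions; only the scalings \(l_s/L = \lambda^{-1/4}\) and \(l_p/L \propto N^{-2/3}\) matter for the argument above. The function name is our own.

```python
import math

def ads5_scales(N, lam):
    """Return (l_s / L, l_p / L) for N = 4 SYM dual to AdS5 x S5.
    Assumes g_YM^2 = 4 pi g_s, so L^4 / alpha'^2 = lam with l_s = sqrt(alpha'),
    and G_5 = pi L^3 / (2 N^2) with the 5d Planck length l_p = G_5^(1/3)."""
    ls_over_L = lam ** -0.25
    lp_over_L = (math.pi / (2.0 * N ** 2)) ** (1.0 / 3.0)
    return ls_over_L, lp_over_L

# Increasing N and lambda suppresses both stringy (l_s/L) and quantum (l_p/L) effects.
for N, lam in [(10, 10.0), (100, 100.0), (1000, 1000.0)]:
    ls, lp = ads5_scales(N, lam)
    print(f"N = {N:5d}, lambda = {lam:7.1f}:  l_s/L = {ls:.3f},  l_p/L = {lp:.4f}")
```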

Over more than two decades, the correspondence has also been subjected to stringent tests, where non-trivial dual quantities such as the anomalous dimensions of single-trace gauge-invariant operators and the spectrum of strings in anti-de Sitter space have been matched using techniques like integrability [19] and localization [20]. Recently a derivation of the correspondence has been achieved when the dual gauge theory is free (zero ’t Hooft coupling) while the string worldsheet sees a quantum spacetime and gets localized at the boundary [21, 22].

The fundamental aspects of how the bulk spacetime and its gravitational dynamics emerge from the dual gauge theory are still shrouded in many mysteries. Nevertheless, there has been remarkable progress in this direction via the tools of quantum information theory. At the heart of these developments is the pathbreaking proposal by Ryu and Takayanagi [23], together with its further refinements [24, 25], that an appropriate codimension-two bulk extremal surface anchored to the boundary \(\partial R\) of a boundary spatial subregion R captures the entanglement entropy of that region R in the dual field theory. To be specific, consider the causal domain of dependence \(D_R\) of a region R, namely the set of points where the values of measurements can necessarily be influenced by or influence the data on R. Then the bulk operators in the entanglement wedge, which is the bulk domain of dependence of (any) Cauchy slice bounded by the bulk extremal surface anchored to \(\partial R\) and the boundary subregion R, can be decoded from the algebra of operators of the dual CFT in \(D_R\), as illustrated in Fig. 9 [26,27,28,29,30,31].

Subsequently, quantum information theory has played a fundamental role in understanding how this entanglement wedge of the emergent spacetime can be reconstructed in the dual conformal field theory (CFT) without encountering inconsistencies. It has been shown that the correct framework for consistent bulk reconstruction is obtained by reformulating the AdS/CFT correspondence as a quantum error correcting code in which the bulk spacetime is encoded redundantly in the Hilbert space of the CFT. Furthermore, the encoding is protected against erasure (deletion) errors, i.e. the entanglement wedge can be (approximately) reconstructed from its corresponding boundary subregion even after the complement of that subregion is traced out [32,33,34,35].
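A minimal illustration of protection against erasure uses the three-qutrit code, a standard toy model in this context, with logical basis states \(|{\tilde{i}}\rangle = \frac{1}{\sqrt{3}}\sum _{j=0}^{2} |j\rangle |j+i\rangle |j+2i\rangle \) (addition mod 3). The sketch below checks the Knill–Laflamme condition for erasing any single qutrit: for every operator \(O_k\) on qutrit k, \(\langle {\tilde{i}}| O_k |{\tilde{j}}\rangle = c(O_k)\,\delta _{ij}\). It suffices to check matrix units \(|a\rangle \langle b|\), by linearity. The helper names are our own; this is not the general holographic construction.

```python
from itertools import product

def codeword(i):
    """Logical state |i~> = sum_j |j, j+i, j+2i> / sqrt(3) (mod 3), as a dict
    mapping computational basis strings to (real) amplitudes."""
    amp = 3 ** -0.5
    return {(j, (j + i) % 3, (j + 2 * i) % 3): amp for j in range(3)}

def matrix_element(i, j, k, a, b):
    """<i~| (|a><b|)_k (x) identity |j~>, with the matrix unit acting on qutrit k."""
    bra, ket = codeword(i), codeword(j)
    total = 0.0
    for t, amp_t in ket.items():
        if t[k] != b:
            continue
        s = list(t)
        s[k] = a                                  # |a><b| maps digit b to a on qutrit k
        total += bra.get(tuple(s), 0.0) * amp_t   # amplitudes are real
    return total

def erasure_correctable(k):
    """Knill-Laflamme condition for erasing qutrit k: for each matrix unit,
    the matrix in the code space must be proportional to the identity."""
    for a, b in product(range(3), repeat=2):
        diag = matrix_element(0, 0, k, a, b)
        for i, j in product(range(3), repeat=2):
            expected = diag if i == j else 0.0
            if abs(matrix_element(i, j, k, a, b) - expected) > 1e-12:
                return False
    return True

# Erasing any one qutrit is correctable: the logical state can be recovered
# from the remaining two, i.e. from the complement of the erased "region".
print([erasure_correctable(k) for k in range(3)])
```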

The connection between the holographic principle of gravity and quantum information theory is currently a topic of fundamental interest to researchers in diverse fields. In fact, this interdisciplinary area of research has been instrumental in developing new perspectives in quantum error correction itself, and has produced novel connections between quantum fields (many-body systems) and quantum information theory as well. One example of such a connection is the postulate of the quantum null-energy condition which states that the expectation value of a null projection of the energy–momentum tensor is bounded from below by a specific null variation of the entanglement entropy [36]. This postulate has not only been proven in holographic field theories [37], but also in generic two-dimensional CFTs [38] and free field theories [39, 40], and is also expected to hold generally. Furthermore, such developments have led to new understanding of connections between entanglement and the renormalization group (RG) flow especially with respect to the existence of quantities which evolve monotonically under the flow [41,42,43].
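For concreteness, the quantum null-energy condition of [36] can be written schematically as follows, in a notation we introduce here: p is a point on the entangling surface, \(k^{\mu }\) a future-directed null normal there, \(\lambda \) an affine parameter for deformations of the entangling surface along \(k^{\mu }\) at p, h the determinant of the induced metric on the entangling surface, and \(S_{\text {out}}\) the entanglement entropy of the region on one side of it.

```latex
\langle T_{kk}(p) \rangle \;\geq\; \frac{\hbar}{2\pi\sqrt{h(p)}}\;
\frac{\delta^{2} S_{\text{out}}}{\delta \lambda(p)^{2}},
\qquad T_{kk} \equiv T_{\mu\nu}\, k^{\mu} k^{\nu}
```

The classical null energy condition \(T_{kk}\ge 0\) can fail for quantum fields, while the right-hand side supplies an entropic lower bound that remains valid.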

The most fundamental test of our understanding of the AdS/CFT correspondence is whether we can find explicit mechanisms for the resolution of black hole information paradoxes, which are further refinements of Hawking’s original result that a semi-classical black hole should lose its mass to thermal (Hawking) radiation in violation of unitarity [44, 45]. The understanding of the AdS/CFT correspondence in the information theory framework has driven remarkable progress on this frontier as well. In particular, it has been shown that the AdS/CFT correspondence itself leads us to the correct way to compute the fine-grained entanglement entropy of the Hawking radiation (Footnote 2). Surprisingly, these computations can be done in the semi-classical evaporating black hole geometry itself and produce results which are consistent with unitarity. In setups where the holographic system is connected to a bath which collects the Hawking quanta of an evaporating black hole, once the black hole is past the Page time (approximately the time when the black hole and the Hawking radiation have the same number of degrees of freedom), the entanglement wedge of the bath comes to include a disconnected region, called the island, which contains portions of the interior of the black hole. The inclusion of this spatially disconnected island leads to the reproduction of the Page curve [47, 48] for the time-dependence of the von Neumann entropy of the Hawking radiation, consistent with unitarity and without invalidating the effective semiclassical description of bulk physics [49,50,51,52,53]. Further understanding of how the information in the black hole interior is encoded without encountering fundamental inconsistencies is probably the most exciting topic at the intersection of quantum information theory and gravity.
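The qualitative shape of the Page curve can be illustrated with Page's random-state toy model, which is not the island computation described above. A system of N qubits is assumed to be in a Haar-random pure state, and k of the qubits are regarded as already "emitted" radiation. The sketch evaluates Page's formula for the average entanglement entropy of an m-dimensional subsystem of an mn-dimensional random pure state, \(S_{m,n} = \sum _{j=n+1}^{mn} 1/j - (m-1)/(2n)\) for \(m\le n\). The maximal entropy \(k\ln 2\) of the emitted qubits loosely plays the role of the monotonically growing entropy of Hawking's calculation. The function names and the qubit counting are our own assumptions.

```python
import math

def page_entropy(m, n):
    """Average entanglement entropy (in nats) of an m-dimensional subsystem of a
    Haar-random pure state on C^m (x) C^n, using Page's formula (valid for m <= n):
        S(m, n) = sum_{j=n+1}^{m n} 1/j - (m - 1) / (2 n)."""
    if m > n:  # the two sides of a pure state have equal entropy
        m, n = n, m
    return sum(1.0 / j for j in range(n + 1, m * n + 1)) - (m - 1) / (2.0 * n)

def page_curve(total_qubits):
    """Average 'radiation' entropy after k = 0, ..., total_qubits qubits are emitted."""
    return [page_entropy(2 ** k, 2 ** (total_qubits - k)) for k in range(total_qubits + 1)]

N_QUBITS = 12
curve = page_curve(N_QUBITS)
for k, s in enumerate(curve):
    print(f"k = {k:2d}   S_rad = {s:6.3f}   k ln 2 = {k * math.log(2):6.3f}")
```

The entropy first tracks \(k\ln 2\), turns over near the midpoint \(k = N/2\) (the analogue of the Page time), and returns to zero once all qubits are emitted.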

The present review aims to provide an accessible account for researchers in diverse fields to follow the developments connecting the holographic emergence of spacetime and gravity with quantum information theory. We place special emphasis on recent developments in connection with black holes. Our account is somewhat complementary to existing reviews in the literature, and is also self-contained. As instances of focused reviews on subtopics covered here, we would especially like to mention [54], which reviews the holographic entanglement entropy proposal, [55,56,57], which review the black hole information puzzles and their possible resolutions, [58,59,60], which review aspects of bulk reconstruction, and [61] (see [62] for a very accessible summary), which reviews recent progress in reproducing the Page curve (the time-dependence of the entanglement entropy of Hawking radiation) from the AdS/CFT correspondence. We also update the content of these reviews, and describe the central concepts and proposals along with the essence of their derivations or of the arguments supporting them in sufficient detail. Furthermore, we present perspectives on the open questions and some promising directions for research in the near future.

The plan of the review is as follows. In Sect. 2, we introduce the Ryu–Takayanagi surface and its generalization, the quantum extremal surface, which is key to computing the entanglement entropy of a subregion in the dual field theory. As mentioned before, this is at the heart of the connection between quantum information theory and the holographic correspondence. We also review the proofs of these proposals for bulk extremal surfaces at leading and subleading orders, and discuss consistency checks of the quantum extremal surface proposal. In particular, we outline the proof that the entanglement wedge contains the bulk causal wedge, which is key to understanding the non-triviality of bulk reconstruction. Furthermore, we discuss how the holographic prescriptions for computing entanglement entropy reproduce entanglement inequalities (especially strong subadditivity), and the alternative maximin construction.

In Sect. 3, we introduce the entanglement wedge reconstruction hypothesis and the key postulate of the equality of bulk and boundary relative entropies in the bulk semi-classical approximation. We then discuss various implications of this postulate, especially how it implies the emergence of the gravitational field equations at linearized order, and how the bulk canonical energy is connected to the Fisher information at the boundary. We also discuss progress in understanding the emergence of the bulk from modular flow at the boundary, and the basic reasons for reformulating bulk reconstruction as a quantum error correcting code. Afterwards, we introduce islands, which are disconnected portions of the entanglement wedge, especially in the context of double holography, and introduce and justify the island rule for computing the entanglement entropy of a subregion of a bath in contact with a holographic system described by a semiclassical evaporating black hole, while sketching how this reproduces the Page curve of Hawking radiation.

In Sect. 4, we review quantum error correction, especially in relation to operator algebras, and discuss how this framework, together with the postulate of the equality of bulk and boundary relative entropies, implies the reconstruction of local bulk operators in the entanglement wedge in terms of the operators of the dual field theory in the region of interest. We discuss how apparent inconsistencies of bulk reconstruction are resolved by formulating bulk reconstruction in the AdS/CFT correspondence as a quantum error correcting code that protects against the deletion of complementary boundary subregions. We then present toy models of the AdS/CFT correspondence based on tensor networks, which provide examples of perfect recovery maps for the entanglement wedge from the dual subregion of the field theory. We focus particularly on the Petz map, discuss how a specific variation of it could provide the desired approximate recovery even at subleading order, and relate it to the modular flow at the boundary. We furthermore discuss the connection between the bulk radial coordinate and renormalization group flow in this context, with a perspective on issues which may spur a more universal understanding of the holographic correspondence.

In Sect. 5, we review the exciting recent progress in reproducing the Page curve of Hawking radiation. In particular, we focus on how replica wormhole saddles imply the quantum extremal surfaces and islands responsible for restoring a Page curve consistent with unitarity, even though these wormholes provide an intrinsically averaged description of the black hole microstates. We then discuss the state-dependence of the encoding of the interior in the framework of universal (approximate) subsystem recovery, and how the Python’s lunch mechanism generates the exponential complexity of the state-dependent encoding.

Furthermore, we present a perspective on issues that are crucial for fully understanding how the black hole complementarity principle can emerge operationally without encountering information paradoxes such as the AMPS paradox, while emphasizing the need for a complex encoding of the interior. We motivate the need for tractable microstate models of black holes which can simultaneously demonstrate all desirable features of the encoding in the Hawking radiation, especially information mirroring (with decoding possible without full knowledge of the interior) and exponentially complex encoding of the black hole interior excitations. Such models should also explain the origin of self-averaging in real time, consistent with the implications of replica wormholes. We then discuss a class of tractable microstate models explicitly, their promising results in this direction, their implications and some open questions.

In Sect. 6, we conclude with a discussion on some of the topics of significance which are not covered in this review and some promising directions for further research.

2 Quantum extremal surfaces

2.1 Partial proof of the quantum extremal surface proposal

The entanglement entropy of a boundary subregion provides the most fundamental link between holography and quantum information.

Ryu and Takayanagi (RT) [23] were the first to describe how entanglement entropy could be computed holographically in a static semi-classical spacetime (dual to a large N quantum field theory). Hubeny, Rangamani and Takayanagi (HRT) [24] then extended this idea to time-dependent geometries. The RT/HRT formula states that the entanglement entropy of a subregion R of the boundary theory is proportional to the area of the classical bulk codimension-two (Footnote 3) extremal surface that is anchored to the boundary of R and is homologous to R (see Fig. 2). The RT proposal was shown to be correct at leading order in \(\hbar \) by Lewkowycz and Maldacena (LM) [63]. A generalization of this proof to the HRT proposal, using a bulk version of the Schwinger–Keldysh contour, was described in [64]. The RT/HRT prescription has led to a much simpler proof of the strong subadditivity of entanglement entropy [27, 65] and also to new inequalities that holographic entanglement entropy should satisfy [66,67,68,69]. The next-to-leading-order correction to the holographic entanglement entropy for semi-classical static situations was computed by Faulkner, Lewkowycz and Maldacena (FLM) [28]. They found that the entanglement entropy, correct to \({\mathcal {O}}(\hbar ^{0})\), is given by

\[ S(R) = \frac{{\mathcal {A}}(X_{R})}{4 G \hbar } + S_{\text {ent-bulk}} \tag{1} \]

where \({\mathcal {A}}(X_{R})\) is the area of the classical bulk extremal (HRT) surface \(X_{R}\), and the leading quantum correction is given by the bulk entanglement entropy \(S_{\text {ent-bulk}}\), defined as follows. As shown in Fig. 3, the classical extremal surface divides the bulk into two subregions \(R_{b}\) and \({\bar{R}}_{b}\); \(S_{\text {ent-bulk}}\) is the entanglement entropy of the reduced density matrix (in the bulk effective field theory) on the bulk subregion \(R_{b}\) that is connected to the boundary region R. The bulk quantum fields are assumed to be described by an effective field theory (EFT) living on a fixed background, and the entanglement entropy of the bulk subregion connected to the boundary region R is computed using standard quantum field theory techniques. The quantity defined in Eq. (1) is called the generalized entropy. The quantity \(S_{\text {ent-bulk}}\) suffers from divergences, which can be absorbed into the renormalization of Newton’s constant, leading to a well-defined generalized entropy [70,71,72,73,74]. It is not clear whether the LM and FLM proofs (reviewed below) can be extended to include higher-order corrections involving multiple loops of quantized gravitons. However, the Engelhardt–Wall proposal is expected to hold to all orders in \(\hbar \) in the regime where the full quantum theory of the bulk matter is considered on a fixed (but backreacted) semiclassical gravitational background, as discussed below.
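The leading area term in Eq. (1) can be made concrete in the simplest example, an interval of width l on the boundary of Poincaré \(AdS_3\), where the extremal "surface" is a geodesic and its area is a length. The sketch below computes the regularized geodesic length with a bulk cutoff at \(z=\epsilon \) and compares \(\text{length}/4G\) with the two-dimensional CFT result \((c/3)\ln (l/\epsilon )\), using the Brown–Henneaux central charge \(c = 3L/(2G)\). The two agree up to corrections of order \((\epsilon /l)^2\). Units with \(\hbar = 1\), the cutoff prescription and the function names are our own choices for illustration.

```python
import math

def rt_entropy_ads3(l, eps, L=1.0, G=1.0):
    """RT entropy (geodesic length / 4G) of an interval of width l on the boundary
    of Poincare AdS3, ds^2 = L^2 (dz^2 + dx^2 - dt^2) / z^2, cut off at z = eps.
    The t = 0 geodesic is the semicircle (x, z) = (l/2)(cos th, sin th), along
    which ds = L d(th) / sin(th)."""
    th_min = math.asin(2.0 * eps / l)                     # geodesic meets z = eps here
    length = -2.0 * L * math.log(math.tan(th_min / 2.0))  # 2 L * int_{th_min}^{pi/2} d(th)/sin(th)
    return length / (4.0 * G)

def cft2_interval_entropy(l, eps, L=1.0, G=1.0):
    """Two-dimensional CFT result (c/3) ln(l / eps), with Brown-Henneaux c = 3L / (2G)."""
    c = 3.0 * L / (2.0 * G)
    return (c / 3.0) * math.log(l / eps)

for eps in (1e-2, 1e-4, 1e-6):
    rt, cft = rt_entropy_ads3(1.0, eps), cft2_interval_entropy(1.0, eps)
    print(f"eps = {eps:.0e}:  RT = {rt:.8f}   CFT = {cft:.8f}")
```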

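Engelhardt and Wall (EW) [25] conjectured that the holographic entanglement entropy of a boundary subregion R is given by the generalized entropy of the quantum extremal surface (QES) \(\chi _{R}\) anchored to \(\partial R\), so that

\[ S(R) = S_{gen}(\chi _{R}) = \frac{{\mathcal {A}}(\chi _{R})}{4 G \hbar } + S_{\text {ent-bulk}}(\chi _{R}) \tag{2} \]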

where the quantum extremal surface is the surface that extremizes the generalized entropy and is homologous to R. If there are multiple such extremal surfaces, the one with the smallest generalized entropy satisfying the homology constraint is picked (Footnote 4). This QES proposal differs from the FLM formula, which is the generalized entropy of the classical RT/HRT surface.

The QES proposal has not been proven; however, it is believed to be correct since it passes several non-trivial consistency checks, which we review in Sect. 2.2. The proposal has also been checked order by order in \(\hbar \) for a few subleading corrections by comparing computations in the bulk with those in the dual boundary CFT [75,76,77]. In the rest of this review, we use units where \(\hbar =1\) unless explicitly mentioned.

Before describing the checks on the QES proposal, we first review the classical argument of [63] that proves the RT formula. The entanglement entropy of a boundary subregion R can be computed using the replica trick. One continues to Euclidean time and considers the angular direction in the boundary field theory that winds around the boundary \(\partial R\) of the region. This angle is labelled by \(\tau \), with \(\tau \sim \tau +2 \pi \). The boundary quantum field theory (QFT) is then considered on a sequence of spaces \({\widetilde{M}}_{n}\) with \(\tau \sim \tau + 2 \pi n\), for positive integers n. This sequence is holographically dual to a sequence of bulk geometries \({\widetilde{B}}_{n}\) with asymptotic boundaries \({\widetilde{M}}_{n}\). One then computes the Rényi entropy \(S_{n}\) as follows:

\[ S_{n} = \frac{1}{1-n} \ln \frac{Z[{\widetilde{M}}_{n}]}{\left( Z[{\widetilde{M}}_{1}]\right) ^{n}} \tag{3} \]

Analytic continuation to non-integer n is well defined (under suitable growth conditions) due to Carlson’s theorem [78], and the \(n \rightarrow 1\) limit gives the von Neumann entropy. Here \(Z[{\widetilde{M}}_{n}]\) and \(Z[{\widetilde{M}}_{1}]\) are the QFT partition functions on the replicated spacetime \({\widetilde{M}}_{n}\) and on the original spacetime \({\widetilde{M}}_{1} = M_{1}\), respectively. The holographic dictionary in the large N limit tells us that

\[ Z[{\widetilde{M}}_{n}] \simeq e^{-I[{\widetilde{B}}_{n}]} \tag{4} \]

where \(I[{\widetilde{B}}_{n}]\) is the on-shell classical bulk action for the bulk geometry \({\widetilde{B}}_{n}\). The bulk geometries \({\widetilde{B}}_{n}\) have a \({\mathbb {Z}}_{n}\) symmetry that corresponds to cyclic permutations of the n replicas. Taking the quotient by this symmetry, one can define \(B_{n} = {\widetilde{B}}_{n}/ {\mathbb {Z}}_{n}\). Because of the quotient, these bulk geometries have the same boundary conditions as the original geometry \({\widetilde{B}}_{1}\), namely \(\tau \sim \tau + 2\pi \). They typically have a conical defect with opening angle \(\frac{2 \pi }{n}\) at the fixed points of the \({\mathbb {Z}}_{n}\) symmetry. Since the classical bulk action is a \(\tau \) integral of a local Lagrangian density, it follows that \(I[{\widetilde{B}}_{n}] = n I[B_{n}]\). Thus, using \(B_{1} = {\widetilde{B}}_{1}\), the Rényi entropy can be written as

\[ S_{n} = \frac{n}{n-1}\left( I[B_{n}] - I[B_{1}]\right) \tag{5} \]

Note that when evaluating \(I[B_{n}]\) one excludes any contributions from the conical singularity. We can now analytically continue to non-integer n and take the \(n \rightarrow 1\) limit. The von Neumann entropy is then

\[ S = \lim _{n\rightarrow 1} S_{n} = \partial _{n} I[B_{n}] \Big |_{n=1} \tag{6} \]

Varying n corresponds to changing the opening angle of the conical defect, and the metric and other fields also change with n. However, since the geometry \(B_{n}\) is a solution of the equations of motion, first-order variations of the bulk action around it vanish, so the only change in the action comes from a boundary term at the conical singularity. The von Neumann entropy is therefore essentially a boundary term at the conical singularity, which is a codimension-two surface in the bulk. This boundary term was calculated in [63] and shown to reproduce the RT formula.

The above argument was extended by FLM [28] to include quantum corrections (to \({\mathcal {O}}(\hbar ^{0})\)) by considering quantum fluctuations in the bulk EFT. The partition function, now including the contribution of the bulk quantum fields, is then given by

\[ Z[{\widetilde{M}}_{n}] \simeq e^{-n I[B_{n}]}\, \mathrm {Tr}[\rho _{n}^{n}] \tag{7} \]

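where \(\rho _{n}\) is the (normalized) state of the bulk quantum fields on the bulk geometry \(B_{n}\), so that \(\mathrm {Tr}[\rho _{1}] = 1\). The Rényi entropy can now be written as

\[ S_{n} = \frac{n}{n-1}\left( I[B_{n}] - I[B_{1}]\right) - \frac{1}{n-1} \ln \mathrm {Tr}[\rho _{n}^{n}] \tag{8} \]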

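The von Neumann entropy is then

\[ S = \partial _{n}\left( I[B_{n}] - \ln \mathrm {Tr}[\rho _{n}^{n}]\right) \Big |_{n=1} \tag{9} \]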

We can add and subtract the term \(-\partial _{n}(\ln Tr[\rho _{1}^{n}])\) in Eq. (9) and, after some algebra, obtain

\[ S = S_{\text {area}} + S_{\text {ent-bulk}} \tag{10} \]

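with

\[ S_{\text {area}} = \partial _{n}\left( I[B_{n}] - \ln \mathrm {Tr}[\rho _{n}^{n}] + \ln \mathrm {Tr}[\rho _{1}^{n}]\right) \Big |_{n=1} \tag{11} \]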

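and

\[ S_{\text {ent-bulk}} = -\partial _{n} \ln \mathrm {Tr}[\rho _{1}^{n}] \Big |_{n=1} = -\mathrm {Tr}[\rho _{1} \ln \rho _{1}] \tag{12} \]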

The \(S_{\text {ent-bulk}}\) term involves \(\rho _{1}\), which is the density matrix of the bulk quantum fields in the original geometry; it therefore computes the bulk entanglement entropy. The \(S_{\text {area}}\) term can be expressed as a variation of a local Lagrangian, which for the usual two-derivative gravity action gives the area term, as in the classical case. This concludes our heuristic review of the FLM argument for the quantum correction to the RT formula.
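The replica identities used above can be checked in a finite-dimensional toy setting: for a density matrix with eigenvalues \(p_i\), the Rényi entropies \(S_n = \ln \mathrm {Tr}[\rho ^n]/(1-n)\) approach the von Neumann entropy as \(n\rightarrow 1\), and \(-\partial _n \ln \mathrm {Tr}[\rho ^n]|_{n=1}\), the form appearing in Eq. (12), equals \(-\mathrm {Tr}[\rho \ln \rho ]\). The eigenvalues below are arbitrary illustrative values and the derivative is a central finite difference; nothing here implements the gravitational replica computation itself.

```python
import math

def log_trace_power(probs, n):
    """ln Tr(rho^n) for a density matrix with eigenvalues `probs`."""
    return math.log(sum(p ** n for p in probs if p > 0))

def renyi(probs, n):
    """Renyi entropy S_n = ln Tr(rho^n) / (1 - n), for n != 1."""
    return log_trace_power(probs, n) / (1.0 - n)

def von_neumann(probs):
    """S = -Tr(rho ln rho)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def replica_derivative(probs, h=1e-5):
    """-d/dn ln Tr(rho^n) at n = 1, by a central finite difference."""
    return -(log_trace_power(probs, 1 + h) - log_trace_power(probs, 1 - h)) / (2 * h)

probs = [0.5, 0.3, 0.15, 0.05]  # illustrative eigenvalues of rho_1
for n in (2.0, 1.5, 1.1, 1.01, 1.001):
    print(f"n = {n:<6} S_n = {renyi(probs, n):.6f}")
print(f"von Neumann          {von_neumann(probs):.6f}")
print(f"-d/dn ln Tr rho^n    {replica_derivative(probs):.6f}")
```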

We now define the causal wedge and the entanglement wedge. These are important for bulk reconstruction, which is the major focus of this review. For a boundary subregion R, the boundary domain of dependence of R, labelled by \(D_{R}\), is defined to be the set of all boundary points such that any inextendible timelike curve that passes through any point in \(D_{R}\) necessarily intersects R (i.e. \(D_{R}\) is the set of points where the values of measurements can necessarily be influenced by or influence the data on R). The bulk causal wedge \(W_{R}\) is then defined to be the intersection of the bulk causal future and the bulk causal past of \(D_{R}\), \(W_{R} = {\mathcal {J}}^{+}(D_{R}) \cap {\mathcal {J}}^{-}(D_{R})\). The codimension-two bulk surface where the boundaries of \({\mathcal {J}}^{+}(D_{R})\) and \({\mathcal {J}}^{-}(D_{R})\) meet, i.e. the rim of \(W_{R}\), is called the causal surface and is labelled by \(C_{R}\). See Fig. 4 for a pictorial depiction of these definitions.

Fig. 4. The boundary domain of dependence is coloured purple, the causal wedge is the green bulk region and the causal surface is coloured blue. The quantum extremal surface is marked as \(\chi _{R}\) and is shown to lie deeper in the bulk than the causal surface. Figure from [25]
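These definitions can be made explicit in the simplest case: an interval R = [a, b] on the t = 0 slice of the boundary of Poincaré \(AdS_3\). The sketch below encodes membership in the boundary diamond \(D_R\) and in the bulk causal wedge \(W_R\). It relies on the bulk metric being conformally flat, so that bulk light cones from the tips of \(D_R\) are flat-space cones in (t, x, z). On the t = 0 slice the causal surface is the semicircle of radius l/2, which is also the RT geodesic of R, so in this vacuum example the causal and entanglement wedges coincide. The function names are our own.

```python
import math

def in_boundary_diamond(t, x, a, b):
    """True if the boundary point (t, x) lies in D_R for R = [a, b] on the
    t = 0 slice of 1+1 dimensional Minkowski space. D_R is the causal diamond
    |t| + |x - c| <= l/2, with centre c and width l = b - a."""
    c, half = (a + b) / 2.0, (b - a) / 2.0
    return abs(t) + abs(x - c) <= half

def in_causal_wedge(t, x, z, a, b):
    """True if the bulk point (t, x, z), z > 0, lies in W_R, the intersection of
    the bulk causal future and causal past of D_R in Poincare AdS3,
    ds^2 = L^2 (-dt^2 + dx^2 + dz^2) / z^2. Conformal flatness makes the light
    cones of the diamond tips (t = +-l/2, x = c, z = 0) flat-space cones,
    giving |t| + sqrt((x - c)^2 + z^2) <= l/2."""
    c, half = (a + b) / 2.0, (b - a) / 2.0
    return z > 0 and abs(t) + math.hypot(x - c, z) <= half

# Points above the centre of R = [0, 1] at t = 0: inside W_R up to depth z = 1/2.
for z in (0.2, 0.45, 0.55):
    print(f"(t, x, z) = (0, 0.5, {z}) in W_R:", in_causal_wedge(0.0, 0.5, z, 0.0, 1.0))
```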

Classically, it should be possible to reconstruct any bulk operator within \(W_{R}\) in terms of boundary operators on \(D_{R}\), since they are in causal contact with each other. Entanglement wedge reconstruction, however, states that any operator in the entanglement wedge of R can be reconstructed from operators in \(D_R\), where the entanglement wedge is defined to be the bulk domain of dependence of the Cauchy surface that interpolates between R and the extremal surface anchored to \(\partial R\). This has led to the notion of subregion duality [79,80,81], which states that subregions of the boundary are dual to subregions of the bulk. Bulk reconstruction will be described in more detail in Sect. 3. We now use the definitions above to review certain checks on the QES proposal.

Sanity checks. Any proposal for the holographic entanglement entropy that claims to be correct to all orders in \(\hbar \) must pass the following preliminary checks:

1. It must agree with the RT/HRT formula at leading order in \(\hbar \).
2. It must agree with the FLM formula at next-to-leading order in \(\hbar \).

Engelhardt and Wall [25] argued that their proposal indeed passes these two checks. We review these arguments below.

Since the FLM proposal is valid only up to \({\mathcal {O}}(\hbar ^{0})\), it is enough to show that

\[ S_{gen}(\chi _{R}) = S_{gen}(X_{R}) + {\mathcal {O}}(\hbar ) \tag{13} \]

The generalized entropy consists of two terms, \(S_{gen}(X) = \frac{{\mathcal {A}}(X)}{4 G \hbar } + S_{ent}\). Since the bulk entropy term is suppressed by a factor of \(\hbar \) relative to the area term, it shifts the extremum only slightly: in a semi-classical bulk the classical and quantum extremal surfaces are expected to be a distance \({\mathcal {O}}(\hbar )\) apart. Thus the entanglement entropies of the bulk fields (\(S_{ent}\)) for the two surfaces are expected to differ only at \({\mathcal {O}}(\hbar )\), i.e. \(S_{ent}(X_{R}) - S_{ent}(\chi _{R}) = {\mathcal {O}}(\hbar )\). Now consider the area term. Since the classical extremal surface extremizes the area, first-order deformations of it do not change the area. As \(X_{R}\) and \(\chi _{R}\) are a distance \({\mathcal {O}}(\hbar )\) apart, the leading difference between the two areas is at most \({\mathcal {O}}(\hbar ^{2})\), i.e. \({\mathcal {A}}(X_{R}) - {\mathcal {A}}(\chi _{R}) = {\mathcal {O}}(\hbar ^{2})\), which contributes only at \({\mathcal {O}}(\hbar )\) to the generalized entropy after division by \(4G\hbar \). Hence \(S_{gen}(\chi _{R})\) and \(S_{gen}(X_{R})\) differ only at \({\mathcal {O}}(\hbar )\), which proves that the QES proposal agrees with the RT/HRT and FLM formulas at the appropriate orders. The FLM formula and the QES proposal do not agree at higher orders in \(\hbar \), and one can perform computations at higher orders to test whether the QES proposal is correct [75,76,77]. The QES proposal passes some essential consistency checks and is therefore believed to be correct. These checks are the focus of the rest of this section.
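The order-counting argument above can be seen in a hypothetical one-parameter toy model of candidate surfaces labelled by x: the area \({\mathcal {A}}(x) = A_0 + \kappa (x-x_0)^2\) is extremal at the classical surface \(x = x_0\), and the bulk entropy \(S_{ent}(x) = s_1 x\) is \({\mathcal {O}}(\hbar ^0)\). Solving the quantum extremality condition \(dS_{gen}/dx = 0\) gives \(x_{QES} - x_0 = -2G\hbar s_1/\kappa \), an area difference \(\kappa (x_{QES}-x_0)^2 = {\mathcal {O}}(\hbar ^2)\), and \(S_{gen}(x_{QES}) - S_{gen}(x_0) = -G\hbar s_1^2/\kappa = {\mathcal {O}}(\hbar )\), consistent with Eq. (13). The sketch finds the extremum by bisection and prints these scalings; the model, its parameters and the function name are our own illustrative assumptions, not a bulk computation.

```python
def toy_qes_shift(hbar, G=1.0, A0=10.0, kappa=2.0, x0=0.0, s1=1.0):
    """Hypothetical toy model of candidate surfaces labelled by x:
        area(x)  = A0 + kappa * (x - x0)**2   (extremal at the classical surface x0)
        S_ent(x) = s1 * x                     (bulk entropy, O(hbar^0))
        S_gen(x) = area(x) / (4 G hbar) + S_ent(x)
    Locates the quantum extremal point (dS_gen/dx = 0) by bisection and returns
    (x_QES - x0, area(x_QES) - area(x0), S_gen(x_QES) - S_gen(x0))."""
    def area(x):
        return A0 + kappa * (x - x0) ** 2

    def s_gen(x):
        return area(x) / (4.0 * G * hbar) + s1 * x

    def ds_gen(x):  # dS_gen/dx, increasing in x since kappa > 0
        return 2.0 * kappa * (x - x0) / (4.0 * G * hbar) + s1

    lo, hi = x0 - 1.0, x0 + 1.0  # brackets the root whenever 2 G hbar |s1| / kappa < 1
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if ds_gen(mid) > 0:
            hi = mid
        else:
            lo = mid
    x_qes = 0.5 * (lo + hi)
    return x_qes - x0, area(x_qes) - area(x0), s_gen(x_qes) - s_gen(x0)

# Halving hbar halves the shift and the S_gen difference, and quarters the area difference.
for hbar in (1e-2, 5e-3, 2.5e-3):
    dx, d_area, d_sgen = toy_qes_shift(hbar)
    print(f"hbar = {hbar:.4f}  dx = {dx:+.2e}  dA = {d_area:.2e}  dS_gen = {d_sgen:+.2e}")
```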

2.2 The entanglement wedge contains the causal wedge

If subregion duality is consistent, then the QES must lie deeper in the bulk than the causal surface. This is an important consistency check that the QES proposal passes. In this section we explain why this consistency condition should hold and review a proof that the QES proposal satisfies it.

Suppose that the extremal surface could lie closer to the boundary than the causal surface, and consider a pure state of the dual boundary theory. The entanglement entropies of its reduced density matrices on R and on its complement (\(\rho _{R}\) and \(\rho _{{\bar{R}}}\)) must then be equal. This is reproduced by the QES proposal if \(X_{R} = X_{{\bar{R}}}\), since the bulk fields are also in a pure state. As shown in Fig. 5, if \(X_{R}\) lies within the causal wedge \(W_{R}\), then the region between \(C_{R}\) and \(X_{R}\) would be in the entanglement wedge of \({\bar{R}}\) and could therefore be reconstructed on \({\bar{R}}\). However, this region is in causal contact with \(D_R\) and can therefore be affected by a signal propagating from \(D_R\). This leads to a contradiction in the dual boundary theory, since R and \({\bar{R}}\) are causally disconnected. This can be avoided only if \(X_{R}\) lies deeper in the bulk than \(C_{R}\) and is spacelike separated from it.

Fig. 5. A spatial slice of the geometry. The boundary is the circumference of the circle. R (red) is the boundary subregion of interest and \({\bar{R}}\) is its complement. \(C_{R}\) (magenta) is the causal surface for R and \(X_{R} = X_{{\bar{R}}}\) (black) is the classical extremal surface, which is shown to lie within the causal wedge of R. The grey region is the entanglement wedge of \({\bar{R}}\). Anything in the grey region can be reconstructed from \({\bar{R}}\). A signal from R can propagate up to \(C_{R}\) and therefore into the entanglement wedge of \({\bar{R}}\). This violates the micro-causality of the CFT.

It was shown in [82] that if the classical null energy condition (NEC) holds, then the classical extremal surface lies deeper in the bulk than the causal surface. The NEC states that \(T_{kk} \equiv T_{\mu \nu }k^{\mu }k^{\nu }\ge 0\) for any future-directed null vector \(k^{\mu }\). The NEC can be violated by quantum matter in the bulk, in which case the classical extremal surface can lie closer to the boundary than \(C_{R}\) or be timelike separated from it. The way to avoid this inconsistency is to use the QES instead of the classical extremal surface. Below we review the argument from [25] which shows that the QES \(\chi _{R}\) lies deeper in the bulk than \(C_{R}\) and is spacelike separated from it.
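As a classical illustration of what the NEC constrains (not taken from [82] or [25]), consider a perfect fluid, \(T_{\mu \nu } = (\rho + p)u_\mu u_\nu + p\,\eta _{\mu \nu }\), in flat space. For a null \(k^\mu \) the pressure term drops out and \(T_{kk} = (\rho + p)(u\cdot k)^2\), so the NEC reduces to \(\rho + p \ge 0\) in every frame. The sketch below evaluates \(T_{kk}\) directly; the fluid parameters are our own examples. Classical matter of this kind can be tuned to satisfy the NEC, whereas quantum fields can have locally negative \(\langle T_{kk}\rangle \), which is why the QES rather than the classical extremal surface is needed.

```python
def null_energy(rho, p, u, k):
    """T_{mu nu} k^mu k^nu for a perfect fluid,
    T_{mu nu} = (rho + p) u_mu u_nu + p eta_{mu nu},
    in 3+1 dimensional Minkowski space with eta = diag(-1, 1, 1, 1).
    u (the fluid 4-velocity) and k are given as contravariant components."""
    eta = (-1.0, 1.0, 1.0, 1.0)
    u_dot_k = sum(e * ui * ki for e, ui, ki in zip(eta, u, k))
    k_dot_k = sum(e * ki * ki for e, ki in zip(eta, k))  # vanishes for null k
    return (rho + p) * u_dot_k ** 2 + p * k_dot_k

k = (1.0, 1.0, 0.0, 0.0)   # future-directed null vector
u = (1.0, 0.0, 0.0, 0.0)   # fluid at rest
for rho, p in [(1.0, 1.0 / 3.0), (1.0, -1.0), (1.0, -1.5)]:
    print(f"rho = {rho}, p = {p:+.3f}:  T_kk = {null_energy(rho, p, u, k):+.3f}")
```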

We first state the generalized second law (GSL). The usual second law of thermodynamics states that the thermodynamic entropy of any closed system is non-decreasing in time. The GSL is a statement about the monotonicity of the generalized entropy [83]. The generalized entropy is computed on a Cauchy slice (at some “time”), and the GSL states that its variation along any future-directed normal to the Cauchy slice is non-negative. The generalized entropy can be defined for any causal horizon \(H^{+}\), i.e. the boundary of the past of any future-directed timelike or null worldline. Define \(H = H^{+} \cap \varSigma \), where \(\varSigma \) is a Cauchy slice and \(H^{+}\) is a future causal horizon. The GSL then states the following [25, 83]

where \(\delta H^{\mu }\) is a normal to H and \(k^{\mu }\) is any future directed null vector. We will also require the following theorem from [83], see also [25].

Theorem 1

Let M and N be co-dimension one null surfaces that split the spacetime into two parts, an interior (Int) and exterior (Ext), where Ext is defined to be the region containing the boundary subregion \(D_{R}\) which is of interest. Let \(M \cap Ext(N)\) be empty. Also assume that M and N coincide at some point p and that M and N are smooth in the neighbourhood of p. Let \(\varSigma \) be a spatial slice that passes through p. Then there exists a normal (\(\delta \varSigma ^{\mu }\)) to \(\varSigma \cap M\) in the neighbourhood of p such that

where \(k^{\mu }\) is a future directed null normal to M and N.

To use this theorem to show that the QES lies deeper than the causal surface, we identify the null splitting surface M with the boundary of the entanglement wedge, which is generated by null rays shot from \(\chi _{R}\) towards R. We choose the Cauchy slice \(\varSigma \) such that it intersects the boundary of the entanglement wedge at the QES, and denote by \(H^{+}(D)\) (with \(D \equiv D_{R}\)) its intersection with the future causal horizon of \(D_{R}\). The statement is proved by contradiction. Assume that \(\chi _{R} \cap \mathrm{Int} H^{+}(D_{R})\) is non-empty, as shown in Fig. 6. We can continuously shrink the boundary domain of dependence \(D_{R}\) to a new region \(D'\) whose causal horizon intersects \(\varSigma \) at \(H^{+}(D')\), contained entirely in \(\mathrm{Ext}(\chi _{R})\) (see Fig. 6). Since the shrinking is continuous, we can choose \(D'\) such that \(H^{+}(D')\) intersects \(\chi _{R}\) at p, is tangent to it at p and lies in \(\mathrm{Ext}(\chi _{R})\) everywhere else. We can now identify \(H^{+}(D')\) with N in Eq. (15) to obtain the following:

where \(EW(\chi _R)\) (the boundary of the entanglement wedge) is the null surface generated by null rays shot from \(\chi _{R}\) towards R. Since the QES extremizes the generalized entropy, the left-hand side of Eq. (16) vanishes. Therefore we have

with equality only if \(\chi _{R}\) lies on \(H^{+}(D')\) in a neighbourhood of p. However the generalized second law states that

Equality holds only in non-generic spacetimes, so for generic spacetimes we have a contradiction. A similar argument using the time-reversed GSL yields a contradiction for the past causal horizon. The proof can be extended to the non-generic case; see [25] for details. Therefore the QES is spacelike or null separated from the causal surface and lies deeper in the bulk.

Fig. 6: \(\varSigma \) is a spatial slice that contains the QES \(\chi _{R}\). The projections of \(H^{+}(D)\) (blue) and \(H^{+}(D')\) (red) are shown. The dotted curve is the QES that intersects and is tangent to \(H^{+}(D')\) at point p. The region to the right of the QES is \(Ext(\chi _{R})\). Figure reproduced from [25].

This result has an important consequence. The von Neumann entropy is invariant under unitary transformations, so perturbing the boundary with a unitary operator localized in R should leave the boundary von Neumann entropy of R unchanged. This boundary perturbation acts as a source for the bulk fields, but only bulk fields within \(W_{R}\) can be affected by it. Since \(\chi _{R}\) lies deeper in the bulk than \(C_{R}\) and is spacelike or null separated from it, the QES is unchanged by the boundary unitary perturbation. The bulk entanglement entropy \(S_{\text {ent-bulk}}\) is also unaffected, since the perturbation acts on the bulk fields in the entanglement wedge as a unitary transformation localized within the wedge, and the von Neumann entropy is invariant under such transformations. Thus the generalized entropy is invariant under boundary unitary perturbations. By contrast, in spacetimes that violate the classical null energy condition the classical extremal surface \(X_{R}\) can lie inside the causal wedge, so the FLM holographic entropy could change under boundary unitaries. The QES proposal, on the other hand, is consistent with entanglement wedge reconstruction and with the invariance of the boundary von Neumann entropy under unitary transformations of the state.

2.3 Maximin vs extremal: strong sub-additivity and entanglement wedge nesting

In the previous subsection we described how the QES proposal is consistent with the invariance of the boundary von Neumann entropy under unitary transformations. Any proposal for holographic entanglement entropy should satisfy two further conditions: (1) strong sub-additivity of the von Neumann entropy and (2) entanglement wedge nesting. The second condition states that if \(R' \subset R\) is a boundary subregion, then the entanglement wedge of \(R'\) should lie within the entanglement wedge of R. The subalgebra of operators localized in \(R'\) is contained in the subalgebra of operators localized in R, and subregion duality implies that the same must hold for the dual operators localized in the corresponding entanglement wedges. Thus entanglement wedge nesting is a consequence of subregion duality. The strong sub-additivity condition states that [84, 85]

\[ S(A\cup B) + S(B \cup C) \ge S(A\cup B\cup C) + S(B), \]

where A, B and C are three boundary sub-regions. A simple geometric proof of the strong sub-additivity for the RT prescription was given in [65] (see Fig. 7).

Fig. 7: The leftmost figure computes \(S(A\cup B) + S(B \cup C)\) via the RT prescription. The middle figure is simply a re-colouring of the same RT surfaces and therefore has the same total area. The red and blue curves in the middle figure are not the minimal surfaces for the subregions B and \(A\cup B \cup C\) respectively, so their total area is at least that of the RT surfaces computing \(S(A \cup B \cup C) + S(B)\). Figure from [86].

Strong sub-additivity and entanglement wedge nesting were shown for the covariant HRT prescription in [27] using maximin surfaces, defined as follows. For the boundary subregion R we consider all possible Cauchy surfaces \(\varSigma \) containing R and, on each of them, find the minimal-area surface \(X_{R}(\varSigma )\) homologous to R. We then maximize the area over all such surfaces \(X_{R}(\varSigma )\) to obtain the maximin surface. This surface is convenient for proofs since, like the RT surface, it is a minimal surface on some Cauchy slice. The maximin surface was shown to coincide with the HRT surface if the null curvature condition (NCC) holds [27]. The NCC states that \(R_{\mu \nu } k^{\mu } k^{\nu } \ge 0\) for any null vector \(k^{\mu }\). Wall [27] proved that maximin surfaces exist in spacetimes without horizons and in spacetimes with Kasner-like singularities. Thus in such spacetimes satisfying the NCC the existence of HRT surfaces is guaranteed. This existence proof was later extended to generic black holes in AdS with singularities that are not Kasner-like [87]. The quantum generalization of the maximin surface was defined in [88] as follows. For a boundary subregion R we consider all possible Cauchy surfaces \(\varSigma \) containing R, find on each the surface homologous to R that minimizes \(S_{gen}\), and then maximize over all Cauchy slices. In [88] it was proved that quantum maximin surfaces exist, coincide with the QES, and obey strong sub-additivity as well as entanglement wedge nesting.

The RT/HRT and maximin prescriptions have led to stronger inequalities on the von Neumann entropy that do not hold for general, non-holographic systems [66,67,68,69]. These inequalities have not been shown to hold once quantum corrections are included via the QES prescription; however, exploration in this direction was initiated in [89], where it was shown that if the bulk entropies obey the monogamy of mutual information [66], then so do the dual boundary entropies.
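As a minimal illustration that such holographic inequalities are genuinely stronger than what general quantum states obey, the sketch below evaluates the monogamy of mutual information, \(I(A, B\cup C) \ge I(A,B) + I(A,C)\), on a four-qubit GHZ state. The GHZ state is a standard non-holographic example that violates this inequality. The sketch assumes NumPy, and the helper names (`entropy_bits`, `reduced_state`, `mutual_info`) are our own.

```python
import numpy as np


def entropy_bits(rho: np.ndarray) -> float:
    """Von Neumann entropy in bits; numerically zero eigenvalues are dropped (0 log 0 = 0)."""
    evals = np.linalg.eigvalsh(rho)
    evals = evals[evals > 1e-12]
    return float(-np.sum(evals * np.log2(evals)))


def reduced_state(psi: np.ndarray, keep: list, n: int) -> np.ndarray:
    """Reduced density matrix of the qubits in `keep` for an n-qubit pure state psi."""
    keep = sorted(keep)
    rest = [q for q in range(n) if q not in keep]
    # Reorder tensor legs as (kept, traced), flatten to a matrix M, then rho = M M^dagger.
    m = np.transpose(psi.reshape([2] * n), keep + rest).reshape(2 ** len(keep), -1)
    return m @ m.conj().T


def mutual_info(psi: np.ndarray, x: list, y: list, n: int = 4) -> float:
    """I(X:Y) = S(X) + S(Y) - S(XY) for disjoint sets of qubits x and y."""
    def s(qubits):
        return entropy_bits(reduced_state(psi, qubits, n))
    return s(x) + s(y) - s(x + y)


# Four-qubit GHZ state (|0000> + |1111>)/sqrt(2); qubits A=0, B=1, C=2 and purifier D=3.
ghz = np.zeros(16)
ghz[0] = ghz[15] = 1 / np.sqrt(2)
A, B, C = [0], [1], [2]

i_ab = mutual_info(ghz, A, B)
i_ac = mutual_info(ghz, A, C)
i_abc = mutual_info(ghz, A, B + C)
# MMI requires I(A:BC) - I(A:B) - I(A:C) >= 0; here each term equals one bit.
mmi_gap = i_abc - i_ab - i_ac
print(i_ab, i_ac, i_abc, mmi_gap)
```

Each mutual information is one bit, so the gap is \(-1\) bit and MMI fails. Holographic states dual to classical geometries cannot have this pattern of correlations.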

3 Bulk reconstruction

3.1 The entanglement wedge reconstruction hypothesis

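The AdS/CFT dictionary relates observables in the large-N, strongly coupled QFT at the boundary to observables in the semi-classical bulk spacetime. In the saddle-point approximation, the Euclidean partition function Z of the boundary theory is related to the on-shell bulk gravitational action I as follows [2, 3]:

\[ Z[\phi _{0}] \equiv \left\langle \exp \left( \int d^{d}x\, \phi _{0}(x)\, {\mathcal {O}}(x)\right) \right\rangle = e^{-I[\phi _{0}]}, \]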

where \(\phi _{0}\) is the boundary value of a bulk field \(\phi \) and is identified with the source of the dual boundary operator \({\mathcal {O}}\). This is called the Gubser–Klebanov–Polyakov–Witten (GKPW) prescription in the literature. It implies that \(-I = \log Z\) is the generating functional of the connected correlation functions of the boundary theory. These correlation functions can therefore be obtained by functionally differentiating the on-shell bulk gravitational action I with respect to the sources. Divergences in I arise from the infinite volume of AdS near the boundary and mimic the local ultraviolet divergences of the dual field theory. They can be systematically removed by first regularizing with a radial cut-off \(r =\epsilon \) (the boundary is at \(r =0\)) and then subtracting diffeomorphism-invariant local counterterms on the cut-off surface. This procedure is called holographic renormalization [90,91,92,93] (see [94] for implementation in more general cases). The radial cut-off thus mimics an energy-scale cut-off in the dual field theory; we discuss this further in Sect. 4.4. The Lorentzian generalization of (20) has been discussed in [95,96,97,98].

An equivalent and useful way to state the correspondence which generalizes readily to the Lorentzian signature is as follows. Corresponding to any state \(\rho _B\) in the boundary theory there exists an asymptotically \(AdS_{d+1}\) solution B in the dual gravity theory which satisfies appropriate smoothness conditions such as absence of naked singularities (unless explicitly mentioned we will assume that B has no horizon). The generic on-shell asymptotic boundary behavior of a scalar field \(\phi \) dual to an operator \({\mathcal {O}}\) with scaling dimension \(\varDelta \) in such a geometry is:

The source \(\phi _0\) that couples to \({\mathcal {O}}\) is identified with the coefficient of the leading (non-normalizable) term in the asymptotic expansion, as mentioned before. The coefficient of the subleading (normalizable) term is identified with the expectation value of \({\mathcal {O}}\) in the dual state \(\rho _B\), as indicated above. The mass m of the field is related to the scaling dimension \(\varDelta \) via

\[ m^{2} l^{2} = \varDelta (\varDelta - d), \]

with l the AdS radius. An extrapolate dictionary stated in [99, 100] relates correlation functions of the boundary theory in the state \(\rho _B\) to scattering S-matrices of the semiclassical bulk fields in the geometry B as follows:Footnote 5

For instance, a four-point function \( \left\langle O(x_1)O(x_2)O(x_3)O(x_4) \right\rangle \) can be obtained from the \(2\rightarrow 2\) bulk scattering experiment shown in Fig. 8. This extrapolate dictionary was shown to be equivalent to the GKPW prescription (20) in [102, 103].

Fig. 8: A \(2\rightarrow 2\) scattering experiment in the bulk that is equivalent to a four-point function of the boundary theory. Figure from [58].
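The mass–dimension relation \(m^2 l^2 = \varDelta (\varDelta - d)\) for a bulk scalar in \(AdS_{d+1}\) can be inverted to read off the dimension of the dual operator. The sketch below returns the larger root \(\varDelta _+ = d/2 + \sqrt{d^2/4 + m^2 l^2}\), which is the standard quantization; the alternative root, allowed only in a narrow mass window, is not treated. The function name is hypothetical.

```python
import math


def scaling_dimension(m2l2: float, d: int) -> float:
    """Return Delta_+ = d/2 + sqrt(d^2/4 + m^2 l^2), the larger root of m^2 l^2 = Delta (Delta - d)."""
    disc = d * d / 4.0 + m2l2
    if disc < 0:
        # Below the Breitenlohner-Freedman bound m^2 l^2 >= -d^2/4 the dimension is not real.
        raise ValueError("m^2 l^2 violates the Breitenlohner-Freedman bound")
    return d / 2.0 + math.sqrt(disc)


# AdS_5 / CFT_4 examples (d = 4):
print(scaling_dimension(0.0, 4))   # massless scalar: Delta = 4, a marginal operator
print(scaling_dimension(-3.0, 4))  # Delta = 3
print(scaling_dimension(-4.0, 4))  # BF bound saturated: Delta = d/2 = 2
```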

The extrapolate dictionary reproduces boundary observables as boundary limits of bulk observables. Bulk reconstruction aims at the opposite, namely describing (reconstructing) bulk observables in terms of boundary observables. A naive way to do this is to solve the bulk equations of motion with boundary conditions determined by the data of the boundary CFT (expectation values of the dual operators, etc.) and then use the extrapolate dictionary. For a free bulk scalar field this gives (see [100] for details of the computation):

where the integration is over all boundary points x that are spacelike separated from the bulk point X, and K is referred to as the smearing function (it plays the role of an inverse of the bulk-to-boundary propagator). This expression is correct at leading order in 1/N. The 1/N corrections can be obtained by solving the bulk equations of motion perturbatively, including the bulk interaction vertices.

This naive procedure suffers from a major issue. Equation (23) says that a local bulk operator \(\phi (X)\) depends on all CFT operators localized in the region spacelike separated from X. This non-locality persists even when the bulk point is pushed to the boundary, so Eq. (23) does not smoothly reduce to the extrapolate dictionary. To recover the extrapolate dictionary, the smearing function K(X, x) must become increasingly local as X is pushed to the boundary.

Hamilton, Kabat, Lifschytz and Lowe (HKLL) addressed this issue in the context of the AdS-Rindler wedge, which is the bulk causal wedge \(W_R\) of a ball-shaped region R at the boundary. Recall that the boundary limit of \(W_R\), denoted \(\partial W_R\), is \(D_R\), the boundary domain of dependence of R. HKLL showed that the reconstruction of a bulk operator in a Rindler wedge can be made manifestly consistent with the extrapolate dictionary if we work in Rindler coordinates (which cover \(W_R\)) instead of the global coordinates of AdS [104, 105]. This is referred to as AdS-Rindler reconstruction. Here the CFT state is the vacuum, so the dual geometry is pure AdS. The HKLL reconstruction procedure is explicitly

where \(\tau \) is the Rindler time. Note that the integration is restricted to \(D_R\), the boundary domain of dependence of R. Here \({\mathcal {O}}(x,\tau )\) is the Heisenberg-picture operator evolved with the Rindler Hamiltonian, which generates translations in \(\tau \), i.e. boosts. The smearing function \(K^{\text {Rindler}}\) is known explicitly in terms of the mode functions obtained from the semi-classical quantization of the bulk field in the Rindler wedge (see [58]). This leads to the causal wedge reconstruction conjecture: a bulk field within the causal wedge \(W_R\) of a boundary subregion R can be reconstructed on the boundary domain of dependence \(D_{R}\). That is, \(\phi (X)\) can be represented using boundary operators within \(D_{R}\) provided \(X \in W_{R}\). As X moves closer to the boundary, a smaller \(D_{R}\) suffices to reconstruct \(\phi \). This is manifestly consistent with the extrapolate dictionary and therefore resolves the issue encountered in the global reconstruction. It is important to note that the smearing function is known explicitly only for ball-shaped boundary subregions in the vacuum state.

The entanglement wedge reconstruction conjecture [26,27,28,29,30] states that bulk operators within the entanglement wedge of a boundary region R can be reconstructed from operators on \(D_{R}\). This conjecture has now been proven using methods of operator algebra quantum error correction, as will be discussed in Sect. 4.2. It automatically implies causal wedge reconstruction, since the causal wedge is contained within the entanglement wedge, as shown earlier. Note that for the AdS-Rindler wedge the causal and entanglement wedges coincide.

However, a prescription of the form (24) cannot be correct for entanglement wedge reconstruction. Generically the entanglement wedge contains a region that is spacelike separated from the boundary domain of dependence \(D_{R}\). This region is referred to as the causal shadow [29] (see Fig. 9).Footnote 6 Since the causal shadow is spacelike to \(D_{R}\), all bulk operators in the causal shadow commute with the local operators in \(D_{R}\). Suppose an equation analogous to (24) held for entanglement wedge reconstruction. Then each bulk operator in the causal shadow would be a superposition of local operators in \(D_{R}\) and would therefore commute with every other bulk operator in the causal shadow. This is inconsistent, since operators in the causal shadow need not be mutually spacelike separated. See Fig. 9 for an illustration.

Fig. 9: The solid black lines are the edges of the boundary domain of dependence \(D_{R}\). The solid blue lines are the edges of the causal wedge and the solid red lines are the edges of the entanglement wedge. The black dots are two bulk operators in the causal shadow region (beyond the causal wedge and within the entanglement wedge). These two operators need not be spacelike separated and need not commute. However, a bulk reconstruction formula analogous to Eq. (24) would imply that they commute with each other.

Jafferis, Lewkowycz, Maldacena and Suh (JLMS) [30] proposed that this inconsistency can be resolved if the bulk reconstruction equation takes the form:

where \(\rho _{R}\) is the reduced density matrix on the boundary subregion R, \(H_\rho = - \log \rho \) is the modular Hamiltonian and K is an appropriate smearing function. The conjugation of the operator \({\mathcal {O}}\) by imaginary powers of the density matrix is called modular flow [106], and s is the modular flow parameter. Since the modular Hamiltonian \(H_\rho \) is typically non-local, the modular-flowed operators \({\mathcal {O}}_{s}\) are also non-local, and therefore they need not commute with bulk operators in the causal shadow. This resolves the inconsistency described above. The JLMS proposal reduces to the HKLL prescription (24) for ball-shaped regions in the vacuum. In that case the modular Hamiltonian is exactly the Rindler Hamiltonian generating boosts [107,108,109], which implies that \({\mathcal {O}}_{s}(x) = {\mathcal {O}}(\tau ,x)\) with \(\tau \) proportional to s. In general, the modular Hamiltonian is local only for sufficiently symmetric boundary regions in the vacuum state.
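Modular flow can be made concrete in finite dimensions. The sketch below takes \(H = -\log \rho \) and the sign convention \({\mathcal {O}}_s = \rho ^{-is}{\mathcal {O}}\rho ^{is} = e^{isH}{\mathcal {O}}e^{-isH}\); this convention is an assumption, since Eq. (25) may use the opposite sign. It checks two statements. First, modular flow leaves expectation values in \(\rho \) itself unchanged. Second, for a Gibbs state \(\rho = e^{-\beta h}/Z\) the modular Hamiltonian is \(\beta h + \log Z\), so modular flow is ordinary time evolution by \(t = \beta s\). This is a finite-dimensional analogue of the statement that, for a ball in the vacuum, modular flow is a geometric boost. Function names and the random test states are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(seed=1)


def random_density_matrix(dim: int) -> np.ndarray:
    """Full-rank random density matrix rho = G G^dagger / Tr(G G^dagger)."""
    g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = g @ g.conj().T
    return rho / np.trace(rho).real


def modular_hamiltonian(rho: np.ndarray) -> np.ndarray:
    """H_rho = -log(rho), computed in the eigenbasis of a full-rank rho."""
    lam, v = np.linalg.eigh(rho)
    return v @ np.diag(-np.log(lam)) @ v.conj().T


def modular_flow(op: np.ndarray, rho: np.ndarray, s: float) -> np.ndarray:
    """O_s = rho^{-is} O rho^{is} = e^{isH} O e^{-isH} with H = -log(rho) (assumed sign convention)."""
    lam, v = np.linalg.eigh(rho)
    u = v @ np.diag(np.exp(-1j * s * np.log(lam))) @ v.conj().T  # rho^{-is}
    return u @ op @ u.conj().T


dim, s = 4, 0.37
rho = random_density_matrix(dim)
op = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
op = op + op.conj().T  # a Hermitian "observable"

# 1) Modular flow leaves expectation values in rho itself unchanged.
before = np.trace(rho @ op).real
after = np.trace(rho @ modular_flow(op, rho, s)).real

# 2) Gibbs state rho = e^{-beta h}/Z: modular flow equals time evolution by t = beta * s.
h = rng.normal(size=(dim, dim))
h = (h + h.T) / 2
beta = 0.7
e, w = np.linalg.eigh(h)
z = np.sum(np.exp(-beta * e))
rho_gibbs = w @ np.diag(np.exp(-beta * e) / z) @ w.T
u_time = w @ np.diag(np.exp(1j * beta * s * e)) @ w.T  # e^{i beta s h}
time_evolved = u_time @ op @ u_time.conj().T
flowed_gibbs = modular_flow(op, rho_gibbs, s)
print(abs(before - after), np.allclose(flowed_gibbs, time_evolved))
```

For a generic \(\rho \), \(H_\rho \) is not built from a local Hamiltonian, and \({\mathcal {O}}_s\) has no such geometric interpretation. That is the situation relevant to the causal shadow.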

The entanglement wedge reconstruction hypothesis states that an appropriate smearing function K should always exist for (25). Progress towards an explicit construction of this smearing function will be discussed later in this section.

3.2 The equivalence of bulk and boundary relative entropies and its consequences

We first derive the key result of [30], which establishes the equivalence of the boundary and bulk relative entropies as a consequence of the Engelhardt–Wall prescription [25] for holographic entanglement entropy in the semi-classical approximation. This will be the fundamental input in the proof of entanglement wedge reconstruction, discussed in Sect. 4.2.2 in the framework of operator algebra error correction. We will also study some striking consequences of this relation.

The relative entropy between two states \(\rho \) and \(\sigma \) is a measure of their distinguishability (divergence) and is defined as

\[ S(\rho | \sigma ) = \mathrm{Tr}(\rho \log \rho ) - \mathrm{Tr}(\rho \log \sigma ). \]

Its classical analogue is the Kullback–Leibler divergence between two probability distributions. It is helpful to see how the relative entropy arises as a measure of distinguishability. Consider a positive-operator-valued measure (POVM), i.e. a set of positive semi-definite operators \(A_i\) such that \(\sum _i A_i = {\hat{1}}\). We define the classical probability distributions p and q via \(p_i = \mathrm{Tr}(A_i\rho )\) and \(q_i = \mathrm{Tr}(A_i\sigma )\), and use the classical Kullback–Leibler divergence of p from q to define

\[ S_1(\rho | \sigma ) = \sup _{\{A_i\}} \sum _i p_i \log \frac{p_i}{q_i}, \]

with the supremum taken over all possible POVMs. \(S_1\) thus measures the distinguishability of the two states with a single measurement. We can similarly consider n copies of both \(\rho \) and \(\sigma \), together with all POVMs acting on the n copies, and define \(S_n :=S_n(\rho | \sigma )\) analogously. The result of Hiai and Petz is that [110]:

\[ \lim _{n\rightarrow \infty } \frac{S_n(\rho | \sigma )}{n} = S(\rho | \sigma ). \]

We can paraphrase this as follows: the probability of mistaking \(\sigma \) for \(\rho \) after a large number n of measurements decreases as \(\exp (-n S(\rho | \sigma ))\) as \(n\rightarrow \infty \). In quantum field theory, the relative entropy measures how well we can distinguish two states using the algebra of observables in a subregion R; see [111] for a detailed and illuminating discussion. The relative entropy is invariant under simultaneous unitary transformations of the two states. Therefore, like the von Neumann entropy, it depends only on \(D_R\) and not on the specific choice of R.
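The following sketch computes \(S(\rho |\sigma )\) for random full-rank qutrit states. It compares the result with the classical Kullback–Leibler divergence of the outcome distributions of a random POVM, which can never exceed it, and checks invariance under a simultaneous unitary rotation. Natural logarithms are used. The POVM construction \(A_i = M^{-1/2}G_i^\dagger G_i M^{-1/2}\) with \(M = \sum _i G_i^\dagger G_i\) is one convenient choice, assumed here for illustration, and the helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(seed=2)


def random_density_matrix(dim):
    g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = g @ g.conj().T
    return rho / np.trace(rho).real


def log_herm(m):
    """Matrix logarithm of a positive-definite Hermitian matrix."""
    lam, v = np.linalg.eigh(m)
    return v @ np.diag(np.log(lam)) @ v.conj().T


def relative_entropy(rho, sigma):
    """S(rho|sigma) = Tr(rho log rho) - Tr(rho log sigma) for full-rank inputs (nats)."""
    return float(np.trace(rho @ (log_herm(rho) - log_herm(sigma))).real)


def random_povm(dim, outcomes):
    """POVM elements A_i = M^{-1/2} G_i^dag G_i M^{-1/2}, so that sum_i A_i = identity."""
    gs = [rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim)) for _ in range(outcomes)]
    m = sum(g.conj().T @ g for g in gs)
    lam, v = np.linalg.eigh(m)
    m_inv_sqrt = v @ np.diag(lam ** -0.5) @ v.conj().T
    return [m_inv_sqrt @ g.conj().T @ g @ m_inv_sqrt for g in gs]


def measured_kl(rho, sigma, povm):
    """Classical KL divergence of p_i = Tr(A_i rho) from q_i = Tr(A_i sigma)."""
    p = np.array([np.trace(a @ rho).real for a in povm])
    q = np.array([np.trace(a @ sigma).real for a in povm])
    return float(np.sum(p * np.log(p / q)))


dim = 3
rho, sigma = random_density_matrix(dim), random_density_matrix(dim)
povm = random_povm(dim, outcomes=5)
d_quantum = relative_entropy(rho, sigma)
d_measured = measured_kl(rho, sigma, povm)  # a lower bound on S(rho|sigma)

# Invariance under a simultaneous unitary rotation of both states.
q, _ = np.linalg.qr(rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim)))
d_rotated = relative_entropy(q @ rho @ q.conj().T, q @ sigma @ q.conj().T)
print(d_quantum, d_measured, d_rotated)
```

The bound `d_measured <= d_quantum` is an instance of the monotonicity of relative entropy. The measurement \(\rho \mapsto \sum _i \mathrm{Tr}(A_i\rho )\,|i\rangle \langle i|\) is itself a CPTP map.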

The first crucial property of the relative entropy is that it is non-negative and vanishes if and only if the two states are identical [112]. This also follows from the corresponding property of the Kullback–Leibler divergence, as should be clear from the above discussion. Furthermore, the relative entropy is related to the mutual information. Consider the union of two subregions A and B, a joint state \(\rho _{A\cup B}\) and the uncorrelated state \(\rho _A \otimes \rho _B\), with each density matrix obtained by tracing out the complement of the corresponding subregion. The mutual information between A and B in the joint state is defined as

\[ I(A,B) = S(A) + S(B) - S(A\cup B), \]

with S(A), S(B) and \(S(A\cup B)\) the von Neumann entropies of \(\rho _A\), \(\rho _B\) and \(\rho _{A\cup B}\) respectively. One can readily see that

\[ I(A,B) = S(\rho _{A\cup B} | \rho _A \otimes \rho _B). \]

The non-negativity of the relative entropy then implies that I(A, B) is non-negative and vanishes only when \(\rho _{A\cup B} = \rho _A \otimes \rho _B\), i.e. when the two subregions are completely uncorrelated.
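The identity \(I(A,B) = S(A) + S(B) - S(A\cup B) = S(\rho _{A\cup B}|\rho _A\otimes \rho _B)\) can be checked numerically. The sketch below takes \(\rho _{AB}\) from a random three-qubit pure state (so it is mixed and correlated) and evaluates both sides with NumPy. The helpers `entropy`, `relative_entropy` and `partial_trace` are our own; the relative entropy is written as \(-S(\rho ) - \mathrm{Tr}(\rho \log \sigma )\) so that a rank-deficient \(\rho \) is handled, while \(\sigma \) must be full rank.

```python
import numpy as np

rng = np.random.default_rng(seed=3)


def entropy(rho):
    """Von Neumann entropy (natural log); zero eigenvalues contribute 0 log 0 = 0."""
    lam = np.linalg.eigvalsh(rho)
    lam = lam[lam > 1e-12]
    return float(-np.sum(lam * np.log(lam)))


def relative_entropy(rho, sigma):
    """S(rho|sigma) = -S(rho) - Tr(rho log sigma); sigma must be full rank."""
    lam, v = np.linalg.eigh(sigma)
    log_sigma = v @ np.diag(np.log(lam)) @ v.conj().T
    return -entropy(rho) - float(np.trace(rho @ log_sigma).real)


def partial_trace(rho, dims, keep):
    """Trace out every subsystem whose index is not in `keep`."""
    n = len(dims)
    t = rho.reshape(list(dims) * 2)  # ket legs 0..n-1, then bra legs n..2n-1
    for k in sorted(set(range(len(dims))) - set(keep), reverse=True):
        t = np.trace(t, axis1=k, axis2=k + n)  # contract ket leg k with bra leg k
        n -= 1
    d = int(np.prod([dims[k] for k in sorted(keep)]))
    return t.reshape(d, d)


# Random pure state on qubits A, B, C; rho_AB is then mixed and correlated.
psi = rng.normal(size=8) + 1j * rng.normal(size=8)
psi /= np.linalg.norm(psi)
rho_abc = np.outer(psi, psi.conj())
rho_ab = partial_trace(rho_abc, [2, 2, 2], [0, 1])
rho_a = partial_trace(rho_ab, [2, 2], [0])
rho_b = partial_trace(rho_ab, [2, 2], [1])

mi_from_entropies = entropy(rho_a) + entropy(rho_b) - entropy(rho_ab)
mi_from_rel_entropy = relative_entropy(rho_ab, np.kron(rho_a, rho_b))
# For the uncorrelated state rho_A (x) rho_B the mutual information vanishes.
product = np.kron(rho_a, rho_b)
mi_product = relative_entropy(product, product)
print(mi_from_entropies, mi_from_rel_entropy, mi_product)
```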

The second crucial property of the relative entropy is its monotonicity under completely positiveFootnote 7 trace-preserving (CPTP) maps. A CPTP map sends density matrices to density matrices, and in the context of quantum error correction it characterizes an arbitrary noise channel. It has been shown that [113]

\[ S({\mathcal {N}}(\rho ) | {\mathcal {N}}(\sigma )) \le S(\rho | \sigma ) \]

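for an arbitrary CPTP map \({\mathcal {N}}\). Taking \({\mathcal {N}}\) to be the partial trace over a subregion C, we readily see that the monotonicity of the relative entropy implies the strong sub-additivity (19) of the entanglement entropy. Under this partial trace, \(\rho _{A\cup B\cup C} \rightarrow \rho _{A\cup B}\) and \(\rho _A\otimes \rho _{B\cup C}\rightarrow \rho _A\otimes \rho _B\), so that

\[ S(\rho _{A\cup B} | \rho _A \otimes \rho _B) \le S(\rho _{A\cup B\cup C} | \rho _A \otimes \rho _{B\cup C}) \]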

by the monotonicity property. Using (28) and (27), the above inequality reads \(I(A,B) \le I(A, B\cup C)\), which is exactly (19). Strong sub-additivity is saturated for quantum Markov chain states, in which A and C are conditionally independent given B, as will be discussed in Sect. 4.2.2. This has implications for toy models of holography.
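As a numerical sanity check of strong sub-additivity, the sketch below samples random three-qubit mixed states \(\rho _{ABC}\) by tracing a fourth qubit out of random pure states. It verifies that \(S(AB) + S(BC) - S(ABC) - S(B) \ge 0\) in every sample. It also shows saturation for the simplest quantum Markov chain, a product \(\rho _A \otimes \rho _{BC}\). The sampling scheme and helper names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(seed=4)


def entropy(rho):
    """Von Neumann entropy (natural log); zero eigenvalues contribute nothing."""
    lam = np.linalg.eigvalsh(rho)
    lam = lam[lam > 1e-12]
    return float(-np.sum(lam * np.log(lam)))


def partial_trace(rho, dims, keep):
    """Trace out every subsystem whose index is not in `keep`."""
    n = len(dims)
    t = rho.reshape(list(dims) * 2)  # ket legs 0..n-1, then bra legs n..2n-1
    for k in sorted(set(range(len(dims))) - set(keep), reverse=True):
        t = np.trace(t, axis1=k, axis2=k + n)
        n -= 1
    d = int(np.prod([dims[k] for k in sorted(keep)]))
    return t.reshape(d, d)


def random_density_matrix(dim):
    g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = g @ g.conj().T
    return rho / np.trace(rho).real


def ssa_gap(rho_abc):
    """S(AB) + S(BC) - S(ABC) - S(B) for a three-qubit density matrix (should be >= 0)."""
    def s(keep):
        return entropy(partial_trace(rho_abc, [2, 2, 2], keep))
    return s([0, 1]) + s([1, 2]) - s([0, 1, 2]) - s([1])


gaps = []
for _ in range(200):
    # Random pure state on A, B, C, D; tracing out D leaves a mixed rho_ABC.
    psi = rng.normal(size=16) + 1j * rng.normal(size=16)
    psi /= np.linalg.norm(psi)
    rho_abcd = np.outer(psi, psi.conj())
    gaps.append(ssa_gap(partial_trace(rho_abcd, [2, 2, 2, 2], [0, 1, 2])))

# A trivial quantum Markov chain: rho_A (x) rho_BC saturates the inequality.
markov_gap = ssa_gap(np.kron(random_density_matrix(2), random_density_matrix(4)))
print(min(gaps), markov_gap)
```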

To proceed further, we rewrite the relative entropy in the following form

\[ S(\rho | \sigma ) = \varDelta \left\langle H_{\sigma }\right\rangle - \varDelta S, \]

where \(\varDelta S = S(\rho ) - S(\sigma )\) is the difference between the von Neumann entropies of the states \(\rho \) and \(\sigma \). Similarly, \(\varDelta \left\langle H_{\sigma }\right\rangle = \mathrm{Tr}(\rho H_\sigma ) - \mathrm{Tr}(\sigma H_\sigma )\) is the difference between the expectation values of the modular Hamiltonian \(H_\sigma = - \log \sigma \) in these two states. Note that these differences are exact and not infinitesimal. The relative entropy is non-negative and attains its minimum value, zero, when the two states are identical. Its first-order change must therefore vanish for an infinitesimal difference between the two states, i.e. when \(\rho = \sigma +\delta \sigma \). This implies the first law of entanglement entropy [114]

\[ \delta S = \delta \left\langle H_{\sigma }\right\rangle \]

for any infinitesimal variation of the state \(\sigma \).
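The first law can be checked numerically. For \(\rho = \sigma + \epsilon \, \delta \) with a traceless Hermitian \(\delta \), the difference \(\varDelta \langle H_\sigma \rangle - \varDelta S\) is exactly \(S(\rho |\sigma )\). It should therefore be non-negative and vanish at second order, so halving \(\epsilon \) should divide it by roughly four. In the sketch below, the choice of \(\sigma \) (mixed with the identity to keep its spectrum away from zero) and the normalization of \(\delta \) are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(seed=5)
dim = 4


def entropy(rho):
    """Von Neumann entropy with natural logarithms."""
    lam = np.linalg.eigvalsh(rho)
    lam = lam[lam > 1e-12]
    return float(-np.sum(lam * np.log(lam)))


def log_herm(m):
    """Matrix logarithm of a positive-definite Hermitian matrix via its eigendecomposition."""
    lam, v = np.linalg.eigh(m)
    return v @ np.diag(np.log(lam)) @ v.conj().T


# Reference state sigma, mixed with the identity so all eigenvalues are >= 0.5/dim.
g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
sigma = g @ g.conj().T
sigma = 0.5 * sigma / np.trace(sigma).real + 0.5 * np.eye(dim) / dim
h_sigma = -log_herm(sigma)  # modular Hamiltonian H_sigma = -log(sigma)

# Traceless Hermitian direction with unit Frobenius norm, so rho stays a state for small eps.
x = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
delta = x + x.conj().T
delta = delta - np.trace(delta) / dim * np.eye(dim)
delta = delta / np.linalg.norm(delta)


def first_law_violation(eps):
    """Delta<H_sigma> - Delta S for rho = sigma + eps * delta; this equals S(rho|sigma)."""
    rho = sigma + eps * delta
    d_h = np.trace((rho - sigma) @ h_sigma).real
    d_s = entropy(rho) - entropy(sigma)
    return d_h - d_s


v1, v2 = first_law_violation(1e-2), first_law_violation(5e-3)
print(v1, v2, v1 / v2)  # ratio close to 4: the first-order terms cancel
```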

The Engelhardt–Wall prescription states that for any boundary subregion R

\[ S(\rho _R) = \frac{A(\chi _R)}{4G} + S_{\text {ent-bulk}}, \]

where \(\chi _R\) is the quantum extremal surface, which extremizes the generalized entropy, and \(S_{\text {ent-bulk}}\) is the entanglement entropy of the bulk matter within the corresponding entanglement wedge. The area term can be viewed as the expectation value of an operator in the bulk effective field theory, \(\mathrm{Tr}[\rho \frac{{\hat{A}}_{\chi _{R}}}{4 G }]\). Note that the von Neumann entropy is simply the expectation value of the modular Hamiltonian: \(S(\sigma ) = \mathrm{Tr}[ \sigma H_{\sigma } ] = \left\langle H_{\sigma }\right\rangle _{\sigma }\). Therefore Eq. (32) can be written as an equivalence between the bulk and boundary modular Hamiltonians [115]:

\[ H_{\text {bdy}} = \frac{{\hat{A}}_{\chi _{R}}}{4G} + H_{\text {bulk}}. \]

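Note that the area operator \({\hat{A}}_{\chi _{R}}\) commutes with both \(H_{\text {bulk}}\) and \(H_{\text {bdy}}\), since \(\chi _R\) is spacelike separated from all points in the interiors of the corresponding bulk and boundary regions. Furthermore, the boundary relative entropy in the form (31) is

\[ S_{\text {bdy}}(\rho _R | \sigma _R) = \varDelta \left\langle H_{\text {bdy}}\right\rangle - \varDelta S_{\text {bdy}}. \]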

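From (32) we readily obtain that

\[ \varDelta S_{\text {bdy}} = \frac{\varDelta \langle {\hat{A}}_{\chi _{R}}\rangle }{4G} + \varDelta S_{\text {ent-bulk}}, \]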

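and similarly from (33) we obtain

\[ \varDelta \langle H_{\text {bdy}}\rangle = \frac{\varDelta \langle {\hat{A}}_{\chi _{R}}\rangle }{4G} + \varDelta \langle H_{\text {bulk}}\rangle . \]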

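Subtracting Eq. (35) from Eq. (36) and noting that the area term cancels, we immediately obtain the desired result [30]

\[ S_{\text {bdy}}(\rho _R | \sigma _R) = S_{\text {bulk}}(\rho _{\text {bulk}} | \sigma _{\text {bulk}}), \]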

stating the equivalence between the boundary and bulk relative entropies. It is interesting to note that a similar equivalence between boundary and bulk mutual information (between two subregions) can also be readily proved [28].

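Let us consider a bulk field \(\phi \) within the entanglement wedge of some boundary region R. Equation (33) implies:

\[ e^{i H_{\text {bdy}} s}\, \phi \, e^{-i H_{\text {bdy}} s} = e^{i H_{\text {bulk}} s}\, \phi \, e^{-i H_{\text {bulk}} s}. \]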

The area term in \(H_{\text {bdy}}\), which is localized on the extremal surface and is spacelike to the interior of the entanglement wedge, drops out. Therefore bulk causality implies that the bulk and boundary modular flows are identical.

The definition of the modular Hamiltonian contains some ambiguities. For example, in lattice gauge theories observables live on the links of the lattice. When a surface splitting space into two parts cuts a link, it is not clear which side should include the observable on that link. These ambiguities are localized on the boundary of R. Nevertheless, the relative modular Hamiltonian \(H_{\text {rel-bdy}}= H_{R-\text {bdy}}-H_{{\bar{R}}-\text {bdy}}\) is free of such ambiguities. Similarly, the bulk modular Hamiltonian has ambiguities localized on the extremal surface, but the bulk relative modular Hamiltonian is free of them. The relative bulk and boundary modular Hamiltonians should be identical, i.e. \(H_{\text {rel-bdy}} = H_{\text {rel-bulk}}\), if the area terms cancel. This is the case if R and its complement \({\overline{R}}\) share the same extremal surface, so that the two entanglement wedges are complements of each other. This happens for a pure state (and horizonless bulk geometries). This ambiguity-free modular Hamiltonian can be used to define the modular flow.

To explore consequences of the equivalence of bulk and boundary relative entropies, we need the first law of entanglement entropy. It follows simply from the vanishing of the first-order variation of the relative entropy (31), as shown above. We will also need the second-order variation of the relative entropy. Let

We readily see that

where terms containing \(\delta _2\sigma \) vanish for the same reason as in the case of the first order variation mentioned before. This implies

The quantity \(\left\langle \delta _1\sigma , \delta _1\sigma \right\rangle _\sigma \) is called the quantum Fisher information; it defines a Riemannian metric on the space of states. Quantum Fisher information is important in quantum metrology and state estimation, where it bounds the amount of information about a state that can be obtained by generalized measurements [116]. The positivity of relative entropy implies that the quantum Fisher information is non-negative.
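For finite-dimensional states, the inner product that appears as the Hessian of the relative entropy is the Bogoliubov–Kubo–Mori (BKM) metric. We assume this is the quantity meant by \(\langle \delta _1\sigma ,\delta _1\sigma \rangle _\sigma \) up to normalization conventions. In the eigenbasis of \(\sigma \) (eigenvalues \(\lambda _i\)) it reads \(\sum _{ij}|\delta _{ij}|^2 (\log \lambda _i - \log \lambda _j)/(\lambda _i-\lambda _j)\), with weight \(1/\lambda _i\) when \(i=j\). The sketch below checks that \(S(\sigma + \epsilon \delta |\sigma ) \approx \tfrac{\epsilon ^2}{2}\langle \delta ,\delta \rangle _\sigma \) and that this metric is positive. Names and test states are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(seed=6)
dim = 4


def log_herm(m):
    """Matrix logarithm of a positive-definite Hermitian matrix."""
    lam, v = np.linalg.eigh(m)
    return v @ np.diag(np.log(lam)) @ v.conj().T


def relative_entropy(rho, sigma):
    """S(rho|sigma) = Tr(rho log rho) - Tr(rho log sigma) for full-rank inputs."""
    return float(np.trace(rho @ (log_herm(rho) - log_herm(sigma))).real)


def bkm_fisher(sigma, delta):
    """BKM metric <delta, delta>_sigma computed in the eigenbasis of sigma."""
    lam, v = np.linalg.eigh(sigma)
    d = v.conj().T @ delta @ v  # matrix elements of delta in sigma's eigenbasis
    li, lj = np.meshgrid(lam, lam, indexing="ij")
    close = np.isclose(li, lj)
    safe_diff = np.where(close, 1.0, li - lj)  # avoid 0/0 on (near-)degenerate pairs
    weight = np.where(close, 1.0 / li, (np.log(li) - np.log(lj)) / safe_diff)
    return float(np.sum(np.abs(d) ** 2 * weight))


# Full-rank reference state and a traceless Hermitian perturbation direction.
g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
sigma = g @ g.conj().T
sigma = 0.5 * sigma / np.trace(sigma).real + 0.5 * np.eye(dim) / dim
x = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
delta = x + x.conj().T
delta = delta - np.trace(delta) / dim * np.eye(dim)
delta = delta / np.linalg.norm(delta)

fisher = bkm_fisher(sigma, delta)
eps = 1e-4
second_order = relative_entropy(sigma + eps * delta, sigma) / eps ** 2
print(fisher, 2 * second_order)  # agree up to O(eps) corrections
```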

Let \(\sigma \) be the reduced density operator of the vacuum state of a holographic CFT on a ball-shaped subregion B, and let \(\rho \) be the corresponding reduced density operator of a perturbed state close to the vacuum. The vacuum is dual to pure AdS and the perturbed state to a perturbation of pure AdS. It was shown in [117, 118], using results from [109, 119], that at leading and subleading orders the variation of the bulk relative entropy can be written in the form (with the bulk metric \(g = g_0 + \epsilon \delta g + {\mathcal {O}}(\epsilon ^2)\)):Footnote 8

where \(\varSigma \) is the Cauchy slice bounded by the boundary subregion and the bulk extremal surface, \(\delta g\) is the bulk metric perturbation and

is the Lie derivative of the bulk metric g in the direction of \(\xi \) which is the timelike Killing vector associated to the bulk Rindler wedge (note that \(\xi \) vanishes on the extremal surface). \(E_{\mu \nu }\) is proportional to the equations of motion. Furthermore, \({\mathcal {E}}\) is a symplectic form on \(\varSigma \) given by:

where h is the induced metric on \(\varSigma \) and \(p^{\mu \nu } = \sqrt{h}(K^{\mu \nu } -h^{\mu \nu } K ) \) with \(K_{\mu \nu }\) denoting the extrinsic curvature of the Cauchy surface (and \(K = h^{\mu \nu }K_{\mu \nu }\)).

Since \({\mathcal {L}}_{\xi } g_0 = 0\), as \(\xi \) is a Killing vector of the unperturbed metric \(g_0\), the canonical energy vanishes at leading order in \(\epsilon \), i.e. \({\mathcal {E}} =0\). The first-order variation of the relative entropy must vanish due to its positivity. Since this should hold for any Cauchy surface \(\varSigma \), we obtain:

This implies that the perturbed metric must satisfy the linearized Einstein equations about the background for an arbitrary perturbation. Therefore the vanishing of the first-order variation of the relative entropy, required by its positivity, is equivalent to the linearized Einstein equations.

The second order variation of the relative entropy is then

The vanishing of the linearized equations of motion finally implies that [121]:

The right-hand side is the canonical energy of the linearized perturbation [119]. Using the equivalence of the bulk and boundary relative entropies and (40), we find that the left-hand side is exactly the quantum Fisher information in the boundary. Therefore the quantum Fisher information of a perturbation of the CFT density matrix is dual to the bulk canonical energy of the corresponding linearized gravitational perturbation [121]. The positivity of the Fisher information (which follows from that of the relative entropy) then implies the positivity of the canonical energy, as is indeed the case for perturbations about any stable vacuum.

3.3 Modular flow and bulk reconstruction

3.3.1 The JLMS smearing function

In this subsection we describe the explicit construction [31] of the smearing function in Eq. (25). For this it is useful to first consider the Fourier transform of the modular-flowed operators:

where \(H_{\sigma }\) is the modular Hamiltonian for the state \(\sigma \). Below we describe an explicit expression for the smearing function from [31], obtained by considering the mode of zero modular frequency. Consider a bulk operator \(\phi \) in the entanglement wedge and its zero mode \(\phi _{0}(X)\). Since the bulk modular flow is the same as the boundary modular flow, as seen in Eq. (38), it follows that \([\phi _{\omega } , H_{\sigma } ] = \omega \phi _{\omega }\). Therefore the zero mode \(\phi _{0}\) commutes with the modular Hamiltonian, and this field must be localized on the extremal surface. It was then shown in [31] that the zero mode of the dual boundary operator is:

where \(\chi _{R}\) is the extremal surface corresponding to a boundary subregion R. This generalizes the results from [122,123,124]. Inverting this expression gives

where \(K_{0}\) can be obtained by inverting the usual bulk-to-boundary correlator, defined as follows:

This shows how bulk operators on the QES can be reconstructed. If we foliate the entanglement wedge by extremal surfaces corresponding to smaller and smaller boundary subregions contained within R, we can reconstruct operators throughout the entanglement wedge. This foliation is well defined due to entanglement wedge nesting, reviewed in Sect. 2.3. The bulk-to-boundary propagator needs to be inverted only over the extremal surface, which makes this a much simpler computation than inverting the full bulk-to-boundary propagator. (A similar technical computation in a different context was done in [125].)
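The decomposition into modular frequencies can be sketched for a finite-dimensional density matrix. With \(H = -\log \rho \) and the assumed convention \({\mathcal {O}}_s = e^{isH}{\mathcal {O}}e^{-isH} = \sum _\omega e^{is\omega }{\mathcal {O}}_\omega \), the component \({\mathcal {O}}_\omega \) collects the matrix elements \(\langle i|{\mathcal {O}}|j\rangle \) with \(E_i - E_j = \omega \), and it satisfies \([H,{\mathcal {O}}_\omega ] = \omega {\mathcal {O}}_\omega \). With the opposite sign in the exponent one gets \([{\mathcal {O}}_\omega , H] = \omega {\mathcal {O}}_\omega \), as in the text. The zero mode is the part of \({\mathcal {O}}\) that commutes with H. The function name and the rounding used to group equal frequencies are our own choices.

```python
import numpy as np

rng = np.random.default_rng(seed=7)
dim = 4


def random_density_matrix(dim):
    g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = g @ g.conj().T
    return rho / np.trace(rho).real


def modular_frequency_modes(op, rho, decimals=9):
    """Split op into components O_w obeying [H, O_w] = w O_w, where H = -log(rho).

    Assumed convention: O_s = e^{isH} O e^{-isH} = sum_w e^{isw} O_w.
    """
    lam, v = np.linalg.eigh(rho)
    energies = -np.log(lam)  # eigenvalues E_i of the modular Hamiltonian
    op_eig = v.conj().T @ op @ v  # matrix elements <i|O|j>
    freqs = np.round(energies[:, None] - energies[None, :], decimals)  # w_ij = E_i - E_j
    modes = {}
    for w in np.unique(freqs):
        modes[float(w)] = v @ np.where(freqs == w, op_eig, 0.0) @ v.conj().T
    h_mod = v @ np.diag(energies) @ v.conj().T
    return modes, h_mod


rho = random_density_matrix(dim)
op = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
modes, h_mod = modular_frequency_modes(op, rho)
zero_mode = modes[0.0]

# The zero mode commutes with H, i.e. it is invariant under modular flow.
zero_comm = np.abs(h_mod @ zero_mode - zero_mode @ h_mod).max()

# Re-summing the modes with phases e^{isw} reproduces the modular-flowed operator.
s = 0.8
e, w_vecs = np.linalg.eigh(h_mod)
u = w_vecs @ np.diag(np.exp(1j * s * e)) @ w_vecs.conj().T  # e^{isH}
flowed = u @ op @ u.conj().T
resummed = sum(np.exp(1j * s * w) * o_w for w, o_w in modes.items())
print(zero_comm, np.abs(flowed - resummed).max())
```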

These modular zero modes have been used to define the modular Berry connection for the boundary CFT [126], which has been related to the Riemann curvature in the bulk [127].

3.3.2 A note on modular Hamiltonians for excited states and bulk reconstruction

We have described the JLMS proposal and an explicit construction of the smearing function. The only missing ingredient for entanglement wedge reconstruction is the modular Hamiltonian, which can be obtained explicitly for sufficiently symmetric boundary subregions in the vacuum state [107,108,109, 128, 129]. However, in generic situations the modular Hamiltonian is a non-local object that is very hard to compute (for examples, see [130,131,132]). Using the Casini, Huerta and Myers map [109], Sarosi and Ugajin [133] have given an explicit CFT construction of the modular Hamiltonian for ball-shaped subregions in slightly excited states close to the vacuum. Let the excited state be \(\rho = \sigma + \delta \sigma \); then the modular Hamiltonian is [133]:

This construction of the modular Hamiltonian does not assume that the CFT is holographic. Using the results of [118] (valid for the Rindler wedge), Sarosi and Ugajin [133] show that their construction of the modular Hamiltonian relates the quantum Fisher information to the canonical energy of an emergent bulk for any CFT, without assuming either the RT/HRT formula in the bulk or the large N limit of the CFT. The expressions for the kernels \({\mathcal {K}}_{n}\) in Eq. (50) can be found in [133]; here we reproduce the kernels for \(n=1,2\):

Note that the kernel \({\mathcal {K}}_{1}\) is the same as the kernel appearing in the twirled Petz map [35, 134] described in Sect. 4.2. See [135, 136] for an explicit evaluation of Eq. (50).
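The explicit kernels are given in [133]. Independently of their form, the first-order term of any expansion of the modular Hamiltonian of \(\rho = \sigma + \delta \sigma \) must agree with the derivative of the matrix logarithm, which in finite dimensions has the closed form used below (the Daleckii–Krein formula, swapped in here for illustration; it is not the CFT construction of [133]). The sketch checks numerically that the remainder after the first-order term is second order in the perturbation. Finite matrices stand in for the CFT states, and all names are hypothetical.

```python
import numpy as np


def modular_hamiltonian(rho):
    """H = -log(rho) for a full-rank density matrix, via its eigendecomposition."""
    w, v = np.linalg.eigh(rho)
    return (v * -np.log(w)) @ v.conj().T


def first_order_change(sigma, dsigma):
    """First-order variation of -log(sigma) along dsigma (Daleckii-Krein formula).

    In the eigenbasis of sigma: -(dsigma)_ij (log p_i - log p_j) / (p_i - p_j),
    with diagonal entries -(dsigma)_ii / p_i. Equivalently, minus the integral of
    (sigma + s)^{-1} dsigma (sigma + s)^{-1} over s from 0 to infinity.
    """
    p, v = np.linalg.eigh(sigma)
    d = v.conj().T @ dsigma @ v
    pi, pj = np.meshgrid(p, p, indexing="ij")
    same = np.isclose(pi, pj)
    safe_gap = np.where(same, 1.0, pi - pj)
    kernel = np.where(same, 1.0 / pi, (np.log(pi) - np.log(pj)) / safe_gap)
    return -(v @ (d * kernel) @ v.conj().T)


def random_state(dim, rng):
    """Random full-rank density matrix, mixed with the identity to keep it well conditioned."""
    g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    w = g @ g.conj().T
    return 0.5 * w / np.trace(w).real + 0.5 * np.eye(dim) / dim


rng = np.random.default_rng(3)
sigma = random_state(4, rng)
dsigma = random_state(4, rng) - sigma              # traceless Hermitian direction
H0, H1 = modular_hamiltonian(sigma), first_order_change(sigma, dsigma)


def truncation_error(eps):
    """Norm of H(sigma + eps*dsigma) - [H(sigma) + eps*H1]; should scale as eps**2."""
    return np.linalg.norm(modular_hamiltonian(sigma + eps * dsigma) - (H0 + eps * H1))


for eps in (1e-2, 1e-3, 1e-4):
    print(f"eps={eps:g}  error={truncation_error(eps):.3e}")
```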

3.4 Why bulk reconstruction is quantum error correction

We have already seen hints of a connection between entanglement wedge reconstruction and quantum error correction. For instance, Eqs. (33) and (38) have been argued to be equivalent to the conditions for quantum error correction [34]. Equation (50) has a structure reminiscent of the twirled Petz map [35, 134], which will be reviewed in Sect. 4.2. Moreover, entanglement wedge reconstruction leads to an interesting puzzle [32]. Consider three boundary subregions A, B and C, as shown in Fig. 10. The gray regions are the entanglement wedges of the corresponding boundary subregions, and the dot indicates a bulk field \(\phi (X)\). This bulk field lies outside the entanglement wedge of each of the three boundary regions. However, it lies in the entanglement wedges of \(A\cup B\), \(B\cup C\) and \(A \cup C\). Thus the bulk operator \(\phi \) can be reconstructed on \(A\cup B\) and must therefore commute with all operators on C, by causality in the boundary theory. Similarly, by reconstructing \(\phi \) on \(B\cup C\) and on \(A\cup C\), we can argue that it must commute with all operators on A and on B, respectively. The reconstructed bulk operator would then commute with all CFT operators and hence be proportional to the identity. This inconsistency is avoided if the three boundary representations of the bulk operator, on \(A\cup B\), \(B\cup C\) and \(A\cup C\), are different operators. Thus the same bulk operator is encoded as different operators on different boundary subregions. Such redundant encoding is essential to quantum error correction. This connection between bulk reconstruction and quantum error correction will be described in detail in Sect. 4.2.

Three boundary subregions with their corresponding entanglement wedges are shown. The dot indicates a bulk operator. Figure from [32]
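How redundancy resolves this puzzle can be seen in the three-qutrit code that is commonly used as a toy model for it: a logical qutrit is encoded in three physical qutrits, playing the roles of A, B and C, such that any two of them suffice to recover it while any single one carries no information about it. The sketch below checks the Knill–Laflamme condition for the erasure of each single qutrit (a standard correctability criterion, assumed here rather than derived in the text); the recovery operations themselves are not constructed, and the names are illustrative.

```python
import itertools

import numpy as np

d = 3  # qutrit dimension


def ket(a, b, c):
    """Computational basis state |a b c> of three qutrits as a length-27 real vector."""
    vec = np.zeros(d**3)
    vec[np.ravel_multi_index((a, b, c), (d, d, d))] = 1.0
    return vec


# Code states |i~> = (1/sqrt 3) sum_p |p, p+i, p+2i> (mod 3), i.e. up to normalization
# |0~> = |000>+|111>+|222>, |1~> = |012>+|120>+|201>, |2~> = |021>+|102>+|210>.
code = [sum(ket(p, (p + i) % d, (p + 2 * i) % d) for p in range(d)) / np.sqrt(d)
        for i in range(d)]


def on_site(op, site):
    """Embed a single-qutrit operator acting on `site` (0, 1 or 2) into the 27-dim space."""
    factors = [np.eye(d)] * 3
    factors[site] = op
    return np.kron(np.kron(factors[0], factors[1]), factors[2])


def erasure_correctable(site):
    """Knill-Laflamme test for erasing one qutrit.

    The erasure is correctable iff <i~| O |j~> = c(O) delta_ij for every operator O on
    that qutrit; it suffices to check the matrix units |a><b|.
    """
    for a, b in itertools.product(range(d), repeat=2):
        unit = np.zeros((d, d))
        unit[a, b] = 1.0
        M = np.array([[ci @ on_site(unit, site) @ cj for cj in code] for ci in code])
        if not np.allclose(M, M[0, 0] * np.eye(d)):
            return False
    return True


for site in range(3):
    print(f"erasure of qutrit {site} correctable:", erasure_correctable(site))
```

For the diagonal matrix units the constant \(c(O)\) equals \(1/3\), so the reduced state of any single qutrit is maximally mixed for every code state: a logical operator can be represented on any pair of qutrits, but the three representations are necessarily different operators.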

3.5 A first look at islands

Following the QES proposal by Engelhardt and Wall [25], the Page curve [47, 48] for an evaporating black hole in \(AdS_{2}\) was computed in [49, 50]. Similar models of black hole evaporation were studied in [137, 138]. The results from these models show that the semi-classical geometry can capture features of unitarity (the Page curve) in black hole evaporation. The information paradox is of course not resolved, since it is still not clear how the information about the black hole interior is encoded in the radiation and how it can be decoded. Nevertheless, this was an important step towards the resolution of the paradox.
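The Page curve itself can be illustrated without any gravity using Page's estimate for Haar-random pure states: the entanglement entropy of a subsystem first grows roughly linearly with its number of qubits and then decreases once the subsystem exceeds half of the system. The sketch below treats the first k qubits of a random 12-qubit pure state as a stand-in for the radiation collected so far. It is a kinematic toy model, not a model of the evaporation dynamics studied in [49, 50], and the names are hypothetical.

```python
import numpy as np


def random_pure_state(n_qubits, rng):
    """Haar-random pure state of n qubits (normalized complex Gaussian vector)."""
    psi = rng.normal(size=2**n_qubits) + 1j * rng.normal(size=2**n_qubits)
    return psi / np.linalg.norm(psi)


def entanglement_entropy(psi, k, n_qubits):
    """Von Neumann entropy (nats) of the first k qubits, from the Schmidt coefficients."""
    s = np.linalg.svd(psi.reshape(2**k, 2 ** (n_qubits - k)), compute_uv=False)
    p = s**2
    p = p[p > 1e-15]
    return float(-np.sum(p * np.log(p)))


def page_estimate(k, n_qubits):
    """Page's approximation ln(m) - m/(2n) with m <= n the two subsystem dimensions."""
    m = 2 ** min(k, n_qubits - k)
    n = 2 ** max(k, n_qubits - k)
    return np.log(m) - m / (2 * n)


rng = np.random.default_rng(0)
N = 12
psi = random_pure_state(N, rng)
for k in range(N + 1):
    print(k, round(entanglement_entropy(psi, k, N), 3), round(page_estimate(k, N), 3))
```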

The setup for these computations is Jackiw–Teitelboim (JT) gravity [139,140,141] with bulk matter described by a \(1+1\) dimensional CFT. This system is dual to a quantum dot. At some time \(t=0\), the bulk boundary conditions are changed by coupling the dual quantum dot to a wire described by the same \(1+1\) dimensional CFT as in the bulk, but on a flat background without dynamical gravity (see Fig. 11). Quanta can then flow across the boundary, and the black hole in \(AdS_{2}\) starts evaporating. The QES can be computed explicitly in this setup, since the bulk entanglement entropy is that of a two dimensional CFT, which can be obtained using the methods of Cardy and Calabrese [142]. It was shown in [49, 50] that the QES undergoes a phase transition which leads to the turning around of the Page curve of the black hole. At early times, the QES remains close to the bifurcation point of the original black hole horizon (before coupling to the bath) and starts moving outward towards the boundary, while the von Neumann entropy of the dual quantum dot increases due to the emitted Hawking quanta. After the Page time, when the black hole and the radiation have the same number of degrees of freedom (a more precise definition of the Page time is given in Sect. 5.1), a different extremal surface has minimal generalized entropy. This QES is located just inside the event horizon of the black hole and its generalized entropy decreases with time, implying that the entanglement entropy of the quantum dot decreases. This is the desired feature of the Page curve if the full system evolves unitarily (to be discussed later).
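For reference, the two basic \(1+1\) dimensional CFT results behind such computations are the Calabrese–Cardy formulas for the entanglement entropy of a single interval of length L, \(S = \frac{c}{3}\log \frac{L}{\epsilon }\) in the vacuum and \(S = \frac{c}{3}\log \left( \frac{\beta }{\pi \epsilon }\sinh \frac{\pi L}{\beta }\right) \) in a thermal state at inverse temperature \(\beta \), with UV cutoff \(\epsilon \). The evaporation computations typically rely on generalizations of these formulas (boundaries, time-dependent states, several intervals), so the short sketch below only evaluates the two formulas and checks their limits; the parameter values are arbitrary.

```python
import numpy as np


def interval_entropy_vacuum(L, c, eps):
    """Vacuum entanglement entropy of an interval of length L in a 1+1d CFT on a line."""
    return (c / 3.0) * np.log(L / eps)


def interval_entropy_thermal(L, c, eps, beta):
    """Same interval in a thermal state at inverse temperature beta (infinite line)."""
    return (c / 3.0) * np.log(beta / (np.pi * eps) * np.sinh(np.pi * L / beta))


c, eps, beta = 12.0, 1e-3, 2.0   # illustrative values: central charge, UV cutoff, inverse temperature

# Short intervals (L << beta) reproduce the vacuum result.
print(interval_entropy_thermal(1e-2, c, eps, beta), interval_entropy_vacuum(1e-2, c, eps))

# Long intervals (L >> beta) grow linearly with slope pi * c / (3 * beta),
# the thermal entropy density of the CFT.
slope = (interval_entropy_thermal(60.0, c, eps, beta)
         - interval_entropy_thermal(50.0, c, eps, beta)) / 10.0
print(slope, np.pi * c / (3 * beta))
```

The linear growth at large L is the extensive thermal entropy, the kind of ever-increasing contribution that appears in the coarse-grained entropy of the radiation.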

Although a Page curve was seen for the entropy of the quantum dot (dual to the evaporating black hole), the entropy of the Hawking radiation computed semi-classically in [49] was shown to grow monotonically, as in Hawking’s original computation. In Sect. 5.1, we will analyze these models from the point of view of the full gravitational path integral, which computes the Rényi entropies of the Hawking radiation, and explain how the naive semi-classical computation should be refined by including appropriate saddles which automatically reproduce the location of the QES and give results consistent with unitarity. Such computations, however, simplify remarkably in a so-called doubly holographic setup, in which the bulk matter comprising the Hawking quanta is itself holographic. We briefly discuss this setup below.

In the setup of [51], the full two-dimensional quantum dot plus wire (bath) system has a three dimensional holographic dual. This can be thought of as a locally \(AdS_{3}\) geometry with a dynamical boundary where the JT gravity theory is located (see Fig. 11). This is essentially the same as the setup in [143, 144], where the dynamical boundary was called the Planck brane.

The doubly holographic setup from [51]. A quantum dot (shown in black) described holographically by JT gravity with holographic bulk matter is brought in contact with the same bath holographic CFT without gravity. The boundary condition is such that the black hole in JT gravity can evaporate. The holographic dual of the full setup is locally \(AdS_{3}\) spacetime with a codimension one Planck brane where the JT theory lives

The computation of the generalized entropy in this setup is simple since the bulk entanglement entropy can be computed via the original RT/HRT prescription in the dual three dimensional gravity theory to obtain:

where x is the location of the QES on the Planck brane and \(X_{x}\) is the classical extremal surface in three dimensions that is anchored to x at one end and the boundary of the semi-infinite interval in the bath system at the other (see Fig. 12). The first area term in this two dimensional case is simply the value of the dilaton (\(\phi \)) from the JT theory at the location of the QES. The entanglement wedge for the black hole in this setup is shown in Fig. 12.

The red segment in the figure on the left indicates where the bulk entanglement entropy must be computed from the point of view of the 2D gravity theory. The red region on the right is the late time entanglement wedge of the black hole in the 3D theory. The entanglement wedge of the bath is the grey region in the right figure, which includes an island behind the horizon

If we try to compute the entanglement entropy of the bath CFT by the usual methods, that is, by tracing out everything to the left of the black dot in Fig. 12 (left), we end up with an entropy that increases forever in time. This was seen in [49]. (This naive computation gives the coarse-grained entropy, as will be defined later.) However, in the doubly holographic setup the full CFT (bulk + bath) is dual to a three dimensional geometry. We should therefore use the RT/HRT prescription to compute the entanglement entropy of the bath. This results in an entanglement wedge for the bath that is exactly the complement of the entanglement wedge of the quantum dot (black hole); hence the two have the same entanglement entropy and the same Page curve, as should be the case if the full system is in a pure state and evolves unitarily. (The QES undergoes exactly the same phase transition at the Page time in the doubly holographic setup as in the computation of [49], which applies to a general CFT.) If we look from the two dimensional perspective after the Page time (Fig. 12 (left)), we see two disconnected pieces in the entanglement wedge of the bath (black curves in Fig. 12), one of which is an island behind the horizon. However, from the three dimensional perspective, these two pieces are connected (Fig. 12 (right)). This is a realization of the \(ER = EPR \) paradigm [145], in which the bath should be connected to the interior via a bridge in an extra dimension (a wormhole) as a result of the large amount of entanglement between them, generated by the accumulation of semiclassical Hawking EPR (maximally entangled) pairs.

Based on the above computation, the authors of [51] proposed a new rule for computing entanglement entropies in setups that involve reference systems coupled to gravitational systems. This new rule for S(R), the entanglement entropy of a subregion R in the bath (without gravity) coupled to a gravitating system, is as follows:

\[ S(R) = \min _{I}\, \operatorname {ext}_{I} \left[ S_{\text {ent-bulk}}(R \cup I) + \frac{\text {Area}(\partial I)}{4G_{N}} \right] \qquad (54) \]

According to this rule, we should first extremize over the islands I in the gravitating system and then minimize over the extrema. \(S_{\text {ent-bulk}}\) is the entanglement entropy obtained from the semiclassical description of the system (in the doubly holographic case, it is given by the area of the RT surface in the three dimensional dual). When R is the entire bath (to the right of the black dot in Fig. 12), the first term in Eq. (54) equals the bulk entropy of the black hole region (B), since the full state on \(I \cup B \cup R\) is pure. The second term is simply the area of the shared QES. Therefore, this new island rule gives the same entropy for the bath as for the black hole, leading to the same Page curve for both subsystems.
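The structure of the rule (extremize, then minimize) can be illustrated with a deliberately crude toy model in which the two candidate extrema are replaced by hand-chosen linear functions of time: a no-island branch whose entropy grows like the semiclassical radiation entropy, and an island branch whose entropy is dominated by the shrinking area term of the late-time QES. The linear forms, the rates, and the class name are assumptions made for illustration only; in the actual models both branches come from extremizing the generalized entropy.

```python
from dataclasses import dataclass


@dataclass
class ToyEvaporation:
    """Hypothetical linear model of the two competing extrema; every parameter is illustrative."""

    s0: float        # initial Bekenstein-Hawking entropy of the black hole
    rate_rad: float  # growth rate of the no-island (semiclassical) radiation entropy
    rate_bh: float   # rate at which the area term of the late-time QES shrinks

    def no_island(self, t: float) -> float:
        # Trivial extremum (I empty): entropy of the semiclassical Hawking radiation.
        return self.rate_rad * t

    def island(self, t: float) -> float:
        # Non-trivial extremum: area term of the QES near the horizon; bulk term neglected.
        return max(self.s0 - self.rate_bh * t, 0.0)

    def radiation_entropy(self, t: float) -> float:
        # Island rule: keep the smaller of the candidate extrema.
        return min(self.no_island(t), self.island(t))

    def page_time(self) -> float:
        # Time at which the two extrema exchange dominance.
        return self.s0 / (self.rate_rad + self.rate_bh)


model = ToyEvaporation(s0=100.0, rate_rad=2.0, rate_bh=1.5)
t_page = model.page_time()
for t in (0.0, 0.5 * t_page, t_page, 1.5 * t_page, 80.0):
    print(f"t={t:6.2f}  S(R)={model.radiation_entropy(t):7.2f}")
```

The turnover at the Page time arises purely from the exchange of dominance between the two extrema; neither branch on its own has a Page curve.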

As shown in [49], after the Page time the position of the QES in all such setups geometrically realizes the Hayden–Preskill time for information mirroring [146] (see Sect. 5.3.1 for more discussion). More precisely, if some information is thrown into the black hole after the Page time, then after the Hayden–Preskill time the information crosses the QES. The information thereby leaves the entanglement wedge of the quantum dot and enters the island, i.e. the entanglement wedge of the wire (Hawking radiation). It follows that the information thrown into the black hole can be recovered from the Hawking radiation after the Hayden–Preskill time.
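A qubit toy version of the Hayden–Preskill protocol [146] shows the mirroring behavior directly. In the sketch below, a Haar-random unitary stands in for the black hole dynamics (an assumption, not the gravitational evolution), the black hole is taken to be maximally entangled with its early radiation (the post-Page-time situation), and the information about a one-qubit diary contained in the early plus newly emitted radiation is measured by the mutual information \(I(R : EH)\) with a reference qubit R. The qubit counts and function names are illustrative.

```python
import numpy as np


def haar_unitary(dim, rng):
    """Haar-random unitary from the QR decomposition of a complex Gaussian matrix."""
    z = (rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    return q * (np.diag(r) / np.abs(np.diag(r)))   # fix column phases for the Haar measure


def entropy(psi, keep):
    """Von Neumann entropy (nats) of the tensor axes in `keep` for a pure state psi."""
    rest = [a for a in range(psi.ndim) if a not in keep]
    rows = int(np.prod([psi.shape[a] for a in keep]))
    m = np.transpose(psi, list(keep) + rest).reshape(rows, -1)
    p = np.linalg.svd(m, compute_uv=False) ** 2
    p = p[p > 1e-14]
    return float(-np.sum(p * np.log(p)))


def hayden_preskill(n_bh, k, rng):
    """Mutual information I(R : E H) after a 1-qubit diary D falls into an old black hole.

    R is a reference qubit maximally entangled with D; the black hole B (n_bh qubits)
    is maximally entangled with the early radiation E. A Haar unitary on D and B models
    scrambling; the first k qubits of D and B are then emitted as new radiation H, and
    the remaining qubits B' stay in the black hole.
    """
    dim_db = 2 ** (n_bh + 1)
    psi = np.zeros((2, dim_db, 2**n_bh), dtype=complex)   # axes: R, (D B), E
    for r in range(2):
        for b in range(2**n_bh):
            psi[r, r * 2**n_bh + b, b] = 1.0              # Bell pairs R-D and B-E
    psi /= np.linalg.norm(psi)
    psi = np.einsum("ij,rje->rie", haar_unitary(dim_db, rng), psi)
    psi = psi.reshape(2, 2**k, dim_db // 2**k, 2**n_bh)  # axes: R, H, B', E
    return entropy(psi, [0]) + entropy(psi, [1, 3]) - entropy(psi, [0, 1, 3])


# Same seed for every k, so each value uses the same scrambling unitary.
info = [hayden_preskill(4, k, np.random.default_rng(3)) for k in range(6)]
print([round(x / np.log(2), 3) for x in info])   # in bits; 2 bits means full recovery
```

With these conventions \(I(R : EH)\) starts at zero and reaches its maximal value of 2 bits once all qubits have been emitted; decoupling arguments predict that for a typical unitary it comes close to that maximum after only a few qubits beyond the size of the diary have been emitted, the finite-size counterpart of recovery after the Hayden–Preskill time.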

These island computations in two dimensional gravity have been extended to higher dimensions in [147,148,149]. It has also been shown that islands can extend outside of event horizons [150]. The doubly holographic setups have been further analyzed in [151], where the Page transition has been studied after excising intervals in the bath CFT. Further studies have been done in [152,153,154,155,156,157,158,159,160]. Islands in the context of de Sitter and cosmological spacetimes have been studied in [161,162,163,164,165,166,167], and also in [168] in the context of AdS/BCFT duality. The island rule (54) can be derived generally without invoking the doubly holographic setup, as will be reviewed in Sect. 5.1.

4 Holography and quantum error correction

4.1 Preliminaries: quantum error correction

Quantum error correction (QEC) is a mathematical framework that allows for partial or complete recovery of quantum information that is corrupted or lost due to noise arising from unwanted interactions of the quantum system with the environment [169]. Formally, such noise is modelled as a completely positive trace-preserving (CPTP) map \({\mathcal {N}}: {\mathcal {B}}({\mathcal {H}}) \rightarrow {\mathcal {B}}({\mathcal {K}})\) from the set of bounded linear operators on a Hilbert space \({\mathcal {H}}\) to the set of bounded linear operators on another Hilbert space \({\mathcal {K}}\) [112]. Such a CPTP map on the system density operators can be described in terms of a set of Kraus operators \(\{E_{i}\}\) as \({\mathcal {N}}(\rho ) = \sum _{i} E_{i}\rho E_{i}^{\dagger }\), where trace preservation requires \(\sum _{i} E_{i}^{\dagger }E_{i} = I\). The operators \(E_{i}\) are often called the error operators associated with the noise map \({\mathcal {N}}\).
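A minimal sketch of the Kraus representation, using the single-qubit amplitude-damping channel as an example noise map \({\mathcal {N}}\) (our choice; the text does not single out a particular channel). It applies \({\mathcal {N}}(\rho ) = \sum _{i} E_{i}\rho E_{i}^{\dagger }\) and checks the completeness relation \(\sum _{i} E_{i}^{\dagger }E_{i} = I\), which is what makes the map trace preserving. Function names are illustrative.

```python
import numpy as np


def amplitude_damping_kraus(gamma):
    """Kraus operators of single-qubit amplitude damping with decay probability gamma."""
    e0 = np.array([[1.0, 0.0], [0.0, np.sqrt(1.0 - gamma)]])
    e1 = np.array([[0.0, np.sqrt(gamma)], [0.0, 0.0]])
    return [e0, e1]


def apply_channel(kraus, rho):
    """N(rho) = sum_i E_i rho E_i^dagger."""
    return sum(e @ rho @ e.conj().T for e in kraus)


def is_trace_preserving(kraus):
    """Trace preservation is equivalent to sum_i E_i^dagger E_i = identity."""
    dim = kraus[0].shape[1]
    return np.allclose(sum(e.conj().T @ e for e in kraus), np.eye(dim))


kraus = amplitude_damping_kraus(0.3)
plus = np.full((2, 2), 0.5)            # density matrix of |+> = (|0> + |1>)/sqrt(2)
out = apply_channel(kraus, plus)
print(is_trace_preserving(kraus))      # True
print(np.round(out, 4))
```

The error operator \(E_{1}\) describes the decay \(|1\rangle \rightarrow |0\rangle \), while \(E_{0}\) damps the coherences without a jump; both are needed for the output to remain a normalized state.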

Since the no-cloning theorem prevents perfect copying of an arbitrary quantum state [170], QEC aims to protect against the effects of noise by encoding the information into entangled states of a larger Hilbert space. Specifically, for a d-dimensional quantum system with associated Hilbert space \({\mathcal {H}}\), an [[n, k]] quantum code protects k qudits by encoding them into a \(d^{k}\)-dimensional subspace \({\mathcal {C}}\) of the n-qudit space \({\mathcal {H}}^{\otimes n}\). Throughout this discussion, we assume that the noise acts identically and independently on each of the n qudits that constitute the encoded space.
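As a minimal example with \(d = 2\), the three-qubit repetition code encodes \(k = 1\) qubit into the two-dimensional subspace spanned by \(|000\rangle \) and \(|111\rangle \) of \(n = 3\) qubits. The sketch builds the encoding isometry and tests the Knill–Laflamme conditions \(P E_{a}^{\dagger } E_{b} P = c_{ab} P\), a standard criterion for correctability that is equivalent to the existence of a recovery map (assumed here, not derived in the text). This code corrects single bit flips but not phase flips, so it does not protect against arbitrary single-qubit noise; the names are illustrative.

```python
from functools import reduce

import numpy as np

I2 = np.eye(2)
X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])


def on_qubit(op, j, n=3):
    """Tensor product acting with `op` on qubit j and trivially on the others."""
    return reduce(np.kron, [op if q == j else I2 for q in range(n)])


# Encoding isometry V: C^2 -> (C^2)^{x3} with |0> -> |000>, |1> -> |111>.
V = np.zeros((8, 2))
V[0b000, 0] = 1.0
V[0b111, 1] = 1.0
P = V @ V.T                                       # projector onto the code space


def knill_laflamme(errors, P):
    """True iff P E_a^dagger E_b P = c_ab P for every pair of errors in the set."""
    for ea in errors:
        for eb in errors:
            m = P @ ea.conj().T @ eb @ P
            c = np.trace(m) / np.trace(P)
            if not np.allclose(m, c * P):
                return False
    return True


bit_flips = [np.eye(8)] + [on_qubit(X, j) for j in range(3)]
print(knill_laflamme(bit_flips, P))                       # True
print(knill_laflamme(bit_flips + [on_qubit(Z, 0)], P))    # False
```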

A QEC code \({\mathcal {C}}\) is said to correct perfectly for the noise \({\mathcal {N}}\), iff there exists a CPTP map \({\mathcal {R}}: {\mathcal {B}}({\mathcal {H}}^{\otimes n}) \rightarrow {\mathcal {B}}({\mathcal {C}})\) – often called the recovery map – such that,