Abstract

We describe a new code and approach using particle-level information to recast the recent CMS disappearing track searches including all Run 2 data. Notably, the simulation relies on knowledge of the detector geometry, and we also include the simulation of pileup events directly rather than as an efficiency function. We validate it against provided acceptances and cutflows, and use it in combination with heavy stable charged particle searches to place limits on winos with any proper decay length above a centimetre. We also provide limits for a simple model of a charged scalar that is only produced in pairs, that decays to electrons plus an invisible fermion.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Classic searches for new physics at the Large Hadron Collider (LHC) involve the assumption of new heavy particles that are either stable or decay rapidly, either to a stable (hidden) particle or Standard Model (SM) states. With the LHC entering a precision phase for existing searches, there is now significant interest in looking for alternatives to this paradigm, where new discoveries could be hiding in plain sight. One such alternative involves long-lived particles (LLPs) that may decay inside or even some distance beyond the detectors.Footnote 1 This has attracted significant interest, including a series of workshops https://longlivedparticles.web.cern.ch/ and a community white paper [1]. Several searches for such particles have already been undertaken using Run 2 data, and are often highly complementary to prompt searches for the same models.

A heavy (electromagnetically) charged particle can have a long lifetime if it has very weak couplings and/or little phase space in its decays. In the latter case, if it decays to a slightly lighter neutral particle, most of its energy will be carried away and it will seem to disappear inside a particle detector. Such scenarios are very common in models of physics Beyond the Standard Model, especially among heavy SU(2) multiplets which have a neutral component; these would only be split in mass by electroweak effects/loops which are typically on the order \({\mathcal {O}} (100)\) MeV, meaning that the decay of the charged state is typically into the neutral one and a single pion. Such a particle would make an excellent dark matter particle, and indeed minimal dark matter [2, 3] falls into this category; in the case of an SU(2) multiplet it resembles a wino of (split) supersymmetry [4,5,6,7,8,9]. Such signatures are also ubiquitous in non-minimal scenarios [10,11,12,13,14,15,16,17,18,19,20,21,22] and in a recent example were found to appear naturally within the context of dark matter models arising from Dirac gauginos [23], where limits from disappearing tracks were also discussed. They have also been considered as signatures at future colliders, e.g. [24,25,26,27,28].

ATLAS [29] published a search for this signature based on 36.1 \({\mathrm{fb}}{}^{-1}\), with substantial recasting material including efficiencies (i.e. information about the proportion of events that are selected by the experiments due to the response of the detector rather than just the cuts applied); this material was used and then applied to other models [30] in a code available on the LLPrecasting github repository https://github.com/llprecasting/recastingCodes. Very recently a conference note [31] with the full Run 2 dataset of 136 \({\mathrm{fb}}{}^{-1} \) appeared.

On the other hand, CMS published two disappearing track searches: [32] with 38.4 \({\mathrm{fb}}{}^{-1}\) of data from 2015 and 2016 and [33] with 101 \({\mathrm{fb}}{}^{-1}\) from 2017 and 2018. These together provide the most powerful exclusion for disappearing tracks (which should be equivalent to the newly released [31]). However, while there was substantial validation material, including cutflows and acceptances for events with one and two charged tracks separately, unlike for [29] no efficiencies were provided. While the provided acceptances can be used in a simplified models approach for fermionic disappearing tracks, as in [23] based on SModelS [34,35,36,37,38] (which includes LLPs), it is a unique challenge to apply the results of this search to other models: the development of a strategy and code which can recast the search is the subject of this paper.

By recasting we mean the emulation/reinterpretation of the experimental analysis in a framework independent of the experimental one, that takes new simulated signal events and gives an estimation of what would have been seen. This is with the aim that the recast can be applied to models (or subsets of the parameter space) other than those originally considered by the experimental collaboration. This procedure is now well-developed for LHC searches. It is increasingly common for analyses to provide supplemental information for this purpose, often in the form of efficiencies or even pseudo-code; see [39] for a recent review and references therein. There now exist several popular frameworks for this purpose, which differ in their principal objectives: GAMBIT [40,41,42] is a scanning tool, designed to explore the likelihood space of a model, which can construct likelihoods for model points from collider data through its module ColliderBit [43]; CheckMATE [44,45,46] aims to check models for exclusion against a wide variety of analyses, including now several LLP ones [47]; MadAnalysis [48,49,50,51,52,53] aims at providing a general analysis framework for examining data in detail, which can also be used to check models against many important analyses provided by users in its Public Analysis Database [54, 55]. Notable also is rivet [56, 57] which is mainly used to store Standard Model analyses for reuse, but can be applied to limit new physics scenarios (via their influence on SM processes) through contur [58, 59].

There are also different strategies for detector simulation. In principle, the more complete the simulation the more accurate the recasting should be, but the full simulation of ATLAS and CMS detectors remains closed-source and there is far too little information in the public domain to allow an accurate independent attempt. There would be little hope for recasting were it not for experimentally-determined efficiencies for reconstruction of physics objects (electrons, muons, jets etc) that have been published for the LHC since before the beginning of operation (e.g. [60]); but these must be updated as the detectors and algorithms are improved and they are often not produced with recasting needs in mind. Hence the two main approaches to a detector simulation are either to use a fast simulation of an idealised detector (including response of calorimeters, magnetic fields, etc) through Delphes [61] and apply the experimental efficiencies to what it reconstructs; or to directly apply the efficiencies to the particle-level data, known as a “smearing” or “simplified fast simulation” approach (see e.g. [53, 56] for recent discussions). Naively the Delphes approach would be more accurate, and this was almost certainly true for the early operation of the LHC, but more recent analyses involve such sophisticated object reconstruction algorithms that an idealised detector simulation gives an underestimate of the performance, and it is more accurate to use a pure smearing approach. Moreover, for the analysis of interest here we require a modelling of the tracker system (in particular because the signal regions are defined in terms of the number of layers hit), which is not available elsewhere; and also a concept of the physical extent of the calorimeters/muon system, which is also absent at present in both approaches. Hence to implement our recasting we created a lightweight and fast code that uses particle-level information, that can easily be adapted to other analyses, that we call HackAnalysis. Ultimately we expect that the analysis described here will become available in the existing frameworks and that HackAnalysis should be useful for prototyping new features, hence the facetious name.

Note that whether the charged Standard Model particle is a pion or a lepton is not especially relevant for the disappearing track signature. For example, a scalar partner of the leptons in supersymmetric models, if it is nearly degenerate with the neutral fermionic partner of the gauge/higgs bosons (the neutralino) – such as in a co-annihilation scenario for dark matter – would also give a disappearing track. This case has not yet been considered in the experimental searches or [30], and so as an application of our results we shall investigate such a model here. It has the interesting peculiarity (from a theoretical point of view) that only events with two charged tracks occur, since they must be produced in pairs, in contrast to the wino/SU(2) multiplet case.

This paper is organised as follows. In Sect. 2 we provide the details of how this search has been recast: the description of the cuts in Sect. 2.1; how we modelled the detector in Sect. 2.2; how we modelled pileup in Sect. 2.3; and details about event simulation and how they affect the missing energy calculation in Sect. 2.4. In Sect. 3 we discuss the validation of our approach, with additional material in Appendix A. In Sect. 4 we reproduce the CMS exclusion for winos, and combine it with a recasting of heavy stable charged particle searches to place limits on winos up to infinite lifetime; 200 GeV winos are excluded for all proper decay lengths above 2 cm, and 700 GeV winos for all proper decay lengths above 20 cm. In Sect. 5 we apply our code to a model with charged scalars that are only produced in pairs and decay to an electron and a neutral fermion (equivalent to a right-handed slepton in supersymmetric models that is almost degenerate with a neutralino, such as in a co-annihilation region). Finally in Sect. 6 we give some details about the recasting code HackAnalysis and how it can be used.

2 Recasting the CMS disappearing track search

The CMS search for disappearing tracks interpreted its results in terms of supersymmetric models, and (in HEPDataFootnote 2) provided parameter cards to generate events alongside substantial recasting material for such models. Hence to recast and validate the analysis we must consider the same model(s) and reproduce their data. The relevant particles in this model are a “chargino,” that is a charged Dirac fermion \({\tilde{\chi }}^\pm ;\) and a “neutralino,” i.e. a stable neutral fermion \({\tilde{\chi }}^0.\) They are always produced in pairs, either of two charged particles \({\tilde{\chi }}^\pm {\tilde{\chi }}^\mp \) or one charged and one neutral, \({\tilde{\chi }}^\pm {\tilde{\chi }}^0\) (or two neutral fermions that leave no tracks). The chargino then produces a track in the detector when it lives long enough, and then “disappears” by decaying to a neutralino and a pion, or a lepton/neutrino pair:

hence events are characterised by the number of charginos present as one or two track events. The production is considered to be purely electroweak in origin (i.e. any additional particles that could decay to them should be so heavy as to give negligible contribution, unlike e.g. the “strong production” scenario of [29]) which means that initial state radiation (of the incoming partons) is relevant but final state radiation much less so.

The two scenarios considered were a wino and a higgsino; in general in supersymmetry the electroweakino sector consists of winos, higgsinos and binos which mix. A pure wino is an SU(2) triplet, while a higgsino is a doublet and a bino a singlet. In the case that they are pure states with no mixing the mass splitting of the neutral and charged states can be calculated accurately at two loops [62] and the decay width of the chargino into a single pion becomes only a function of its mass. From the experimental point of view, however, the actual mass splitting is not measurable (at least as concerns the LHC; for future colliders this may not be true), and only the lifetime is relevant. In the two scenarios considered by CMS, the decay channel in Eq. (2.1) was either 100% into pions (in the wino case), or 95% pions and 5% leptons (in the higgsino case). These differences are negligible from the recasting point of view. The production cross-sections for the wino case and higgsino case will be different functions of the mass, and the ratio of single track to double track events are different, roughly 2 : 1 for winos and 7 : 2 for higgsinos. However, since the recasting acceptances are given separately for double and single tracks, the wino and higgsino data are effectively identical (simply because they both involve fermions with very similar production mechanisms; and the efficiencies depend mostly on the kinematics and track properties). Hence throughout we shall focus only on the wino case.

While a pure wino or pure higgsino would have a lifetime given only by its mass, because the lifetime depends very strongly on the mass splitting it is very sensitive to a small admixture of other multiplets. Since a pure electroweak multiplet in supersymmetric models is only ever an approximation, and typically the mixing among the charginos or neutralinos is not negligible, in general the lifetime of the chargino can vary over many orders of magnitude, often without noticeably affecting the production cross-section or any of the other data relevant for the recasting. Hence to derive more general limits CMS were justified in varying the proper decay length of the chargino in each parameter point between 0.2 cm and 10,000 cm by hand, and we shall therefore use the same parameter cards/approach here. Of course, more mixing between the states would break this assumption and so to test a fully general model it would be necessary to use a code such as the one described here.

In this section we shall first describe the details of the experimental cuts, before presenting our approach to modelling the detector, pileup and finally validation of our code.

2.1 Triggers and cuts

The analyses [32, 33] require a hardware trigger on missing transverse energy (MET). However, one of the peculiarities of this type of search is that long-lived charged particles that reach the muon system will be reconstructed as muons, and therefore they lead to no missing energy. To mitigate this,  is used in the triggers and event selection, which is the missing momentum without including muons in the calculation (Table 1). The exact triggers used in the analysis are not given, and it is known that the trigger thresholds actually changed throughout the data taking periods, which leads to differences in the provided cutflows between the 2017 and first part of 2018 data (that should naively be identical).

is used in the triggers and event selection, which is the missing momentum without including muons in the calculation (Table 1). The exact triggers used in the analysis are not given, and it is known that the trigger thresholds actually changed throughout the data taking periods, which leads to differences in the provided cutflows between the 2017 and first part of 2018 data (that should naively be identical).

The MET cuts (after the triggers) are then \(p_T^{\mathrm{miss}} > 100\ \mathrm{GeV} \) for the 2015/16 data and  for 2017/18. To match the first few cuts in the provided cutflows it is necessary in reconstruction to take into account the probability of the triggers turning on, for example from [63]. In the simulation we use an approximation based on the information there.

for 2017/18. To match the first few cuts in the provided cutflows it is necessary in reconstruction to take into account the probability of the triggers turning on, for example from [63]. In the simulation we use an approximation based on the information there.

Subsequently, there are cuts based on the jets and missing energy:

-

We require at least one jet with \(p_T > 110\) GeV and \(|\eta | < 2.4\). Jets are reconstructed using the anti-\(k_T\) algorithm [64, 65] with radius parameter \(R=0.4\).

-

The difference \(\Delta \phi \) between the highest-\(p_T\) jet and \({\mathbf{p}}_T^{\mathrm{miss}}\) must be greater than 0.5 radians.

-

If \(n_{jets} \ge 2\), the maximum \(\Delta \phi _\mathrm{max} < 2.5\) radians.

-

Due to a failure in 2018, we reject \(-1.6< \phi ({\mathbf{p}}_T^{\mathrm{miss}}) < -0.6\) for the second part of the data taking period in 2018B (39 \({\mathrm{fb}}{}^{-1}\)) known as the “HEM veto.”

After the cuts, the selection of charged tracks are weeded out:

-

We keep only isolated tracks with \(p_T > 55\ \mathrm{GeV} \) and \(|\eta |<2.1\); isolation is such that the scalar sum of the \(p_T\) of all other tracks within \(\Delta R < 0.3\) is less than 5% of candidate track’s \(p_T\).

-

We remove tracks within regions of incomplete detector coverage in the muon system for \(0.15< | \eta | < 0.35\) and \(1.55< | \eta | < 1.85\), and \(1.42< |\eta | < 1.65 \) corresponding to the transition region between the barrel and endcap sections of the ECAL.

-

Tracks whose projected entrance into the calorimeter is within \(\Delta R < 0.05\) of a nonfunctional or noisy channel are rejected. The location of these are not given, nor is the total angular coverage given in the papers; it does not seem to be significant, however, and we shall ignore it.

-

Tracks must be separated from jets having \(p_T > 30\ \mathrm{GeV} \) by \(\Delta R ( \mathrm{track,\ jet} ) > 0.5\).

-

With respect to the primary vertex, candidate tracks must have a transverse impact parameter (\(|d_0|\)) less than 0.02 cm and a longitudinal impact parameter (\(|d_z|\)) less than 0.50 cm.

-

Tracks are rejected if they are within \(\Delta R( \mathrm{track,\ lepton} ) < 0.15\) of any reconstructed lepton candidate, whether electron, muon, or \(\tau _h\). This requirement is referred to as the “reconstructed lepton veto”. In particular, the overlap removal of LLPs near muons will apply to an LLP that is reconstructed as a muon.

-

Candidate tracks are rejected from the search region if they are within an \(\eta -\phi \) region in which the local efficiency is less than the overall mean efficiency by at least two standard deviations; this removes 4% of remaining tracks, but there is no information about where they are – we therefore may simply apply a flat \(96\%\) probability of meeting this criterion for each track.

Next, the data are split into regions (2015, 2016A and 2016B, 2017, 2018A and 2018B) according to the cuts above (notably the different triggers/MET cut between the two analyses) and the HEM veto for 2018B, but also

-

For the 2017 data, tracks are rejected within the angular region \( 2.7< \varphi < \pi \), \(0< \eta < 1.42.\)

-

For the 2018 data period, tracks are rejected within the angular region \( 0.4< \varphi < 0.8\), \(0< \eta < 1.42.\)

We therefore see that the 2017 and 2018A periods have almost identical cuts (since the cut \(\Delta \varphi \sim 0.4\) in both cases and the HEM veto applies to 2018B). There are differences in the experimentally provided cutflows which must instead be due to differences in triggers and trigger efficiencies; but the final efficiencies passing all cuts are very similar. There is also no information about the difference between periods 2016A and 2016B other than that the trigger configurations changed between the two in an unspecified way (which is unlikely to significantly affect the final efficiencies), so we treat them as identical in the analysis.

For each period, there are hit-based quality requirements on the tracks, which are detected as they pass through pixel and then tracker layers; and we must determine whether they have disappeared:

-

Tracks must hit all of the pixel layers (three for 2015/16, four for 2017/18).

-

Tracks must have at least three missing outer hits in the tracker layers along its trajectory, i.e. it must stop, and not just disappear just before leaving the tracker.

-

Track must have no missing middle hits, i.e. there must be a continuous line of hits until it disappears.

-

\(E_\mathrm{calo}^{\Delta R< 0.5} < 10\ \mathrm{GeV} \) for each track, i.e. the calorimeter energy measured within \(\Delta R < 0.5\) must be less than \(10\ \mathrm{GeV} \), ensuring that it does indeed stop (and is not just missed by the tracker). This cut is of negligible importance to signal events (but very important for background, which includes charged hadrons); it is also somewhat tricky to implement when considering pileup, since the pileup contribution to this measure must be subtracted, as will be discussed later.

Finally, we must decide which signal region the tracks fall into; these are based on the number of layers hit by the track. For the 2017/2018 data, the three regions are:

-

SR1: \(n_\mathrm{lay} = 4\)

-

SR2: \(n_\mathrm{lay} = 5\)

-

SR3: \(n_\mathrm{lay} \ge 6\).

For the original analysis/data in 2015 and 2016, there is only one signal region, corresponding to seven hits or more overall in the tracker. Since the earlier analysis took place before the pixel detector upgrade, the pixel detector contained only three layers whereas for the second analysis we have four. For the sake of simplicity we only model the latter detector, and therefore we impose the criterion of more than seven hits for these regions.

2.2 Modelling the detector

Recent experimental analyses often include techniques for compensating for the imperfections of the detectors, to the point that simulating the detector response as performed e.g. in Delphes may be less accurate than using particle-level information and data-driven efficiencies. For this analysis, the most important physics objects are the charged tracks, muons, the MET and jets. Of these, the momenta/energies of the jets are relatively unimportant, in that we require only the directions; and the efficiency of the MET reconstruction has been studied [63]. On the other hand, there is no public code to simulate the tracker response to charged particles in terms of hits and layers, and for LLP analyses, and this one in particular, we must take into account the physical dimensions of the detector: whether a charged particle decays within before leaving the muon system, for example, so that we can properly compute the MET.

There is no efficiency information given by the experiment about the reconstruction of tracks as a function of length or direction. Moreover, the signal regions are defined in terms of the number of pixel/tracker layers traversed. This is a unique feature of the analysis, and is in contrast to e.g. the ATLAS disappearing track search, where (presumably model-dependent) tracklet efficiency information was given. This means that to accurately recast the CMS analysis the reinterpretation must have some model of the position of the layers. Therefore, by using a probability of a layer hit being registered we model the possibility of missing inner/middle hits, as well as “fake” outer hits, and this appears to be important for accurately reproducing the signal efficiencies, which are otherwise too high; we use a fixed per-hit efficiency of \(94.5\%\) [66] and clearly this has a significant impact on reducing the number of tracks observed, because we just need to miss one hit along the length of the track for it to be discounted.

Otherwise, to model the track hits, we assign a track object to each charged particle passing through the tracker and then compute the layers that it may pass through. We model the location of the layers in the pixel, inner barrel and outer barrel as cylinders, and inner discs and end-cap discs as fixed radius discs at given transverse distances from the interaction point, assumed to be perpendicular to the beam axis (which is not exactly correct, but good enough). The geometry and positions, along with a host of other useful information for this search, are found in [66,67,68] and especially the thesis of Andrew Hart, http://rave.ohiolink.edu/etdc/view?acc_num=osu1517587469347379 and references therein. The values we used are given in Table 2.

We therefore arrive at a unique approach to computing the efficiency of observing a track and determining its signal region. Since it depends directly on the geometry of the detector, we hope that it is model-independent and can therefore be used to accurately recast results for models with different kinematics, such as long-lived scalars/vectors.

2.3 Effect of pileup and track isolation

In principle, pileup affects the jets, MET calculation, track isolation and calculation of \(E_\mathrm{calo}^{\Delta R < 0.5}\), as well as providing fake tracks. To compensate, CMS employ rather effective mitigation techniques. Moreover, simulating pileup in a simplified simulation is rather unusual, since its effects are usually absorbed into the efficiencies. However, we have no such efficiencies that apply for this analysis, except for the MET trigger. Therefore we implemented pileup events in the code HackAnalysis. This is accomplished by simulating minimum bias events in pythia, and storing the final state particles that register in the detector, and also metastable particles that leave tracks. These mainly consist of hadrons. These events are stored in a compressed text file, which can then be read into HackAnalysis (in this way we save significant processing time). As each signal event is read in/simulated, a number, drawn from a Poisson distribution with mean equal to the observed pileup average, of these stored events is randomly selected from the minimum bias database, and added to the event, with their vertices randomly distributed along the beam axis and in time according to the same distribution as used in Delphes pileup events.

In general in a simplified simulation approach there are then a number of different ways for pileup events to be combined with the signal event. In a naive detector simulation we would combine them before any jet clustering and the MET calculation was performed. However, sophisticated techniques are employed by the experimental collaborations such as Jet Vertex Tagging to remove the effects of pileup, and we would then be forced to attempt to implement some version of these without knowledge of the details. Instead, we can choose to only include these events in the event record after the MET and jet clustering calculations have been performed. In this way they can be used to provide fake tracks and be included in the isolation computation. However, the idea of HackAnalysis is that the user can modify these aspects to suit their use case.

In addition, when particle-level data (as opposed to detector simulation) is used in codes such as GAMBIT, typically the effect of isolation requirements upon particle reconstruction are incorporated via efficiencies derived from experimental data. However, for our disappearing charged tracks we have no such efficiencies and so we store the momenta of the hadrons (both charged and neutral, that are long-lived enough to leave a track/reach the colorimeters) in the event record so that they can be used to compute the isolation. This is different to the typical approach of forgetting about hadrons once they have been clustered into jets. However, by examining the cutflows in Appendix A it is clear that the isolation requirement imposes a very severe reduction in the number of events (of around \(60\%\)), and so this inclusion is absolutely vital; it can also be seen that our modelling works rather well.

For the CMS disappearing track analysis considered here, we chose to include the effects of pileup only for the the isolation calculation and for providing fake tracks (i.e. hadrons that are identified as disappearing tracks, potentially because of missing hits). Ideally we would also include its effects in the calculation of \(E_\mathrm{calo}^{\Delta R < 0.5},\) however even after subtracting the median energy (which is the standard mitigation technique) its effects were still too large and we simply disabled this cut. As can be seen from the cutflows in Table 6 where we compare the simulation with and without pileup events it is actually the track isolation that plays the biggest role in identifying the track as having disappeared, and the \(E_\mathrm{calo}^{\Delta R < 0.5}\) cut has negligible effect. It is also clear from comparing the cutflows that the effects of pileup are tiny, so that in principle an almost as accurate result could be found by neglecting it in this case.

2.4 Event simulation and MET calculation

To simulate events, the CMS analysis used leading-order pythia [69] simulations. These cannot properly account for hard initial state radiation (ISR); therefore CMS adjusted the MET and the \(p_T \) of the chargino pair using a data-driven approach, by comparing the process to \(Z\rightarrow \mu \mu \) events. This is somewhat difficult to implement in a recasting tool, and in any case for different models it may not be applicable, since the topology of the process may be very different. Hence we take the (more standard) approach of simulating the hard process including up to two hard jets using MadGraph [70]. However, there is then the choice of how to match to the parton showers, since there are several prescriptions. To investigate the effects of this, we implemented three different approaches: MLM matching [71] within pythia; CKKW-L merging [72,73,74] within pythia; and the version of MLM matching using reweighting from MadGraph 2.9 (which also uses pythia). This latter approach meant using a hepmc [75] interface, and was substantially slower to run per point, hence we used it for the benchmark points but not for a complete scan.

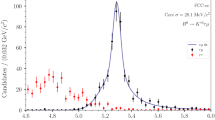

Comparison of missing transverse energy distribution (proportion of events per GeV in each bin) for double-chargino and single-chargino events, for both CKKW-L merging and MLM matching. Left: 100 GeV winos with 10 cm proper decay length; right: 1100 GeV winos with 10 cm proper decay length. Not shown is the overflow bin

The effect of the different matching/merging approaches should be seen in the MET calculation and also the distribution of momenta of the tracks. While they should be equivalent, CKKW-L merging is generally regarded as superior (if more complicated) because it leads to a smoother distribution; but provided that the matching scale in MLM is well chosen (and related to the hard process) this should not be a problem. We took the merging/matching scale to be one quarter of the chargino mass. In Fig. 1 we compare the merging/matching approaches for 100 GeV and 1100 GeV. Clearly the distributions are both smooth, and there is not an obvious merit for one or the other from the plots; the differences are also small. However, since the MET cut and even more so the trigger appear on a rapidly falling part of the distribution, the small differences are amplified and the proportion of events passing the cut differ by between 10 to \(30\%\), which leads to substantial uncertainty on the predictions. This is a strong argument in favour of the experimental collaborations providing not only the matching/merging scheme, but also the parameters used (matching scale, etc) in their simulations.

Not related to the impact of merging/matching, but to illustrate the effect of charginos escaping from the detector on the MET calculation, in Fig. 2 the distribution of missing energy can be seen for 700 GeV winos with proper decay lengths of 10 cm and 10,000 cm; in the right-hand plot most charginos continue to the muon system, so the single-chargino events have large missing energy, and double-chargino events even more so (i.e. most of the double-chargino events are in the overflow bin). This underlines the sensible choice of CMS to use both \(p_T^{\mathrm{miss}} \) and  in the cuts, and that our code is able to reproduce this effect.

in the cuts, and that our code is able to reproduce this effect.

The effect of merging/matching on the distribution of \(p_T\) of the charginos is shown in Fig. 3, for 100 GeV winos and 1100 GeV winos. As in the MET case, both distributions are smooth and there is no obvious superior choice just from examining the plots, but substantial differences between the two can be seen in particular for lighter winos, which again contribute to the uncertainty. As a result, in the validation of the code we provide more than one set of results so that the effect of matching/merging can be seen, which yields differences up to about \(30\%\) overall.

Comparison of missing transverse energy distribution (proportion of events per GeV in each bin) for double-chargino and single-chargino events, for both CKKW-L merging and MLM matching. Left: 700 GeV winos with 10 cm proper decay length; right: 700 GeV winos with 10,000 cm proper decay length. On the right-hand figure, nearly \(80\%\) of \({\tilde{\chi }}^\pm {\tilde{\chi }}^\mp \) events lie in the first bin, since the charginos are both escaping and being classed as muons, giving almost no missing energy. For \({\tilde{\chi }}^\pm {\tilde{\chi }}^0\) events the chargino nearly always escapes, leading to \(40\%\) of the events having MET larger than 400 GeV; the overflow bin is not shown

Comparison of the distribution of highest \(p_T\) chargino per event (labelled as \(p_T\) first track vs proportion of events per GeV in each bin) for double-chargino and single-chargino events, for both CKKW-L merging and MLM matching. Left: 100 GeV winos; right: 1100 GeV winos. The distribution uses particle-level information before any detector considerations, so is independent of decay length. Not shown is the overflow bin, which contains negligible events

3 Validation

The recasting material provided in HEPData includes cutflows for six benchmark points (300 GeV and 700 GeV, lifetimes of 10 cm, 100 cm and 1000 cm for each) for both the wino and higgsino cases; acceptances for each signal region for masses between 100 and 1100 \(\mathrm{GeV}\) and a large range of lifetimes; and of course the exclusion plots. There is not a significant difference between the wino and higgsino cases in terms of cutflows; the main difference is the ratio of the two-charged-track events to single charged tracks, which for the wino case is roughly 1 : 2 and for the higgsino case 2 : 7. In general, the efficiencies for two-charged-track events are roughly twice those for the single-charge-track events, with a reduction at longer lifetimes because the presence of a very long track interferes with the MET calculation.

There is therefore thankfully a large amount of data for validation. The classic standard is to compare cutflows, and we present a selection of these in Appendix A, with many more available online at the address given in Sect. 6. We provide a comparison of the three different matching/merging approaches in Table 7, where it can be seen that the MadGraph MLM and CKKW-L approaches give the best agreement for the chosen data point. In contrast to the recast of the ATLAS disappearing track search [30], which typically found differences of at most a few percent in the final efficiencies between the different approaches, we often find differences of order 10%; the differences are presumably mainly related to the trigger efficiency. For each point we simulated 500 k events which, when split across 15 cores on a 40-core 3500 GHz cluster computer, took about an hour per point to simulate both MLM and CKKW-L events combined. In Table 6 we demonstrate the (marginal) effect of the pileup on the cutflow, as discussed previously. In Tables 8, 9 and 10 we compare all of the the benchmark points for one signal region, namely 2018A, for the MadGraph MLM matching (which uses reweighting rather than vetos) which we denote “HEPMC,” since that is the input mode for the code HackAnalysis. Throughout good agreement can be seen.

While we have discussed the uncertainties coming from the different matching/merging approaches on the theoretical side, the cutflows – and especially the acceptances provided by CMS on HEPData– actually come with substantial uncertainties too. The reason for this is the large statistics required: the efficiencies for the signal regions in this analysis range from \(10^{-2}\) down to \(10^{-5}\) (or lower) with most values being from \(10^{-4}\) to \(10^{-3}\); in order to have a \(10\%\) uncertainty on an efficiency of \(10^{-4}\) one would have to simulate \(10^6\) events, whereas from the quoted statistical errors it appears that CMS simulated of the order of \(5\times 10^4\) for each cutflow table and \(3 \times 10^3\) for each point in the acceptances table. Moreover, while the acceptances are quoted for a very large number of lifetimes, events were only simulated for a small number and the results for intermediate lifetimes are computed by reweighting the events according to the decay length. These are from 0.2 cm to 10,000 cm in logarithmic steps. For example, let us look at the quoted acceptances for single chargino events (which are the most important) and take the best case at short lifetimes: a 100 GeV wino. The acceptances for signal region 2018A are:

The data up to decay lengths of about 10 cm is therefore up to 100% uncertainty and there is little point attempting to match to better than a factor of 2 (or even at all below 1 cm). Similarly at longer decay lengths a similar story plays out for this signal region (i.e. the data is not meaningful). Instead it is only useful to compare to the “best” number of layers for any point, as that is where the statistical power is greatest. Therefore to give a reasonable measure of the uncertainty from our code we compute

where \(\epsilon _i^{CMS}\) is the acceptance according to HEPData for the best signal region (i.e. the number of layers that produces the best expected limit according to the \(\mathrm {CL}_s\) procedure, where by expected we mean taking the number of observed events equal to the background) for the data-taking period i; \(\epsilon _i\) is the same thing for our code; and \({\mathcal {L}}_i\) is the integrated luminosity of the period i. In effect we are comparing the predicted total number of events over the whole \(139\ {\mathrm{fb}}{}^{-1} \), effectively including the 2015 and 2016 data in the \(n_\mathrm{lay} \ge 6\) region. The values for single chargino events are given in Table 4 and for double chargino events in Table 5. We do not show the values where the uncertainty on the acceptances is too high (i.e. for some 100 GeV values). As can be seen, good agreement is found over the whole range for both MLM and CKKW-L matching/merging, with perhaps better agreement for the latter. Especially given the above observations about the uncertainties in the HEPData, and the fact that the acceptance for this analysis is a very rapidly changing function of both mass and decay length, the accuracy is entirely adequate, as will be seen in the next section.

4 Long dead winos

Having validated our code by comparing with cutflows and acceptances, here we reproduce the exclusion limits for winos, which is a classic SU(2) triplet fermion having zero hypercharge and can be regarded as a Minimal Dark Matter candidate. However, since we have a recasting code, we can do more: we can also apply the constraints from other analyses. In particular, very long-lived winos can also be looked for in searches for heavy stable charged particles; there is the analysis [76] by ATLAS which provided extensive recasting material including efficiencies and pseudocode. Notably this formed the basis for a code on the LLPrecasting repository https://github.com/llprecasting/recastingCodes/blob/master/HSCPs/ATLAS-SUSY-2016-32/. By treating any chargino that escapes the muon system as a stable particle, and approximating the ATLAS muon chambers as a cylinder of radius 12 m and of length 46 m (so \(|z| < 23\) m) we can use this analysis to constrain longer-lived charginos. Although the ATLAS analysis has only \(36.1 {\mathrm{fb}}{}^{-1} \) it is especially powerful, since a heavy particle in the muon system is a rather striking signal, and so together these two anaylyses can provide overlapping regions of exclusion.

For the CMS disappearing track analysis, we generate two charged track and one charged track events separately, and use the same data as for Tables 5 and 4 supplemented by additional refinement points at intermediate lifetimes. To calculate exclusion limits we use a python code (available online) to combine the results from different data-taking periods in the same signal region (and treat the 2015/2016 data as belonging to signal region 3) to produce a \(\mathrm {CL}_s\) value, and exclude points at \(95\%\) confidence level. For the production cross-sections we use the publicly-available NLO-NLL results [77, 78] from https://twiki.cern.ch/twiki/bin/view/LHCPhysics/SUSYCrossSections.

For the ATLAS heavy stable charged particle analysis, we used the leading-order pythia code from the LLPrecasting repository, with the same cross-sections and a bespoke code to calculate the exclusion limits. We also implemented the same search into our framework so that both analyses could be run on the same events.

The results are shown in Fig. 4, where we show our exclusion limits, and those of the CMS analysis for comparison. To show the much greater reach of the more recent analysis, we include for comparison as a purple dashed curve the exclusion limit for the older ATLAS disappearing-track analysis ATLAS-SUSY-2016-06 [29]. As can be seen the excluded regions overlap so that 200 GeV winos are excluded for any decay lengths (\(c\tau \)) longer than about 2 cm, and 700 GeV winos for lengths longer than about 20cm. One peculiarity of the ATLAS search is that it is only sensitive to masses above around 175 GeV (since the mass measurement relies on time of flight information they placed a cut on the lowest masses). Hence in principle the disappearing track search is most sensitive for very light winos even at rather long lifetimes, and a (meta-) stable wino below about 175 GeV cannot be ruled out by these searches.

Most importantly, however, we see that our approach is well able to reproduce the exclusion plot for both MLM matching and CKKW-L merging approaches, and the differences between them give a measure of the uncertainty in the result.

As a final caveat on these results, we note that for very long-lived charginos – or, indeed, any SU(2) multiplet with a (meta-)stable neutral component – the production of a neutral and charged fermion together leads to very large missing energy (as can be seen from the differences in Fig. 2, which should be a trigger for prompt searches and may lead to additional exclusions (which are already excluded by our results in this case). It would be interesting to explore this in the context of other models, but would require a recasting of the relevant analyses to take this effect into account and we leave it for future work.

5 Limits on light charged scalars

Having demonstrated the versatility of the code and reproduced the CMS data, here we apply it to a model with charged scalars. As we stressed in Sect. 2.2, our model-independent approach to modelling the detector should be accurate when used for this purpose, which is a key advantage. If we add a single charged scalar \(S^-\) with hypercharge 1 (and charged under lepton number) and a neutral fermion \({\tilde{\chi }}^0\) then the most general Lagrangian is

Clearly this is a prototype of a bino and right-handed slepton in supersymmetry, except that the couplings \(\lambda _2, \lambda _3\) and \(y_{RS}\) are undetermined. This is an excellent (if rather fine-tuned) dark matter model.

The relevant classic constraints from LEP are searches for right-handed sleptons [79,80,81,82,83].Footnote 3 The prompt limits areFootnote 4 up to 100 GeV, although we shall consider a highly compressed spectrum for which such bounds vanish. On the other hand, the bounds on stable charged particlesFootnote 5 are robust and also extend to 99.4 GeV for right-handed sleptons (99.6 for left-handed) so we conservatively only consider scalar masses above 100 GeV.

The classic prompt LHC constraints are dominated by pair production of \(S^\pm \) via a Z boson; the cross-sections are small but there should, in principle, be limits from conventional monojet/monophoton (mono-X) searches. As discussed in [30], such searches are very generic, requiring only that new hidden particles are produced so that they give missing transverse energy to recoil against. However, in the absence of new heavy mediators that can be produced on-shell these only give very weak limits; for this model the current limits are not significant because the cross-sections are so small (only \(85\, \mathrm {fb}\) at 100 GeV, compared to \({\mathcal {O}} (10{,}000)\) fb for winos), and furthermore such searches typically require rather large missing energy cuts. We verified this by checking the model (with prompt decays) using the \(36\, \mathrm {fb}^{-1}\) monojet search [84] implemented in MadAnalysis in [85] (which is the only analysis of that type currently available in that framework), and found very poor sensitivity (i.e. no exclusion for any mass) even when we extrapolated the luminosity to \(3000\, \mathrm {fb}^{-1}\) using [52]. Of course, it would be interesting to implement and check against other more recent (or future) searches when they become available. It might also be interesting to explore them for the HL-LHC. Moreover, in contrast to the wino/SU(2) multiplet case, since the charged \(S^\pm \) bosons are only produced in pairs (for winos charged pair production concerns roughly one third of the events), long-lived scalars do not generally lead to large missing energy since they will either both be classed as muons or neither (if the decay length is short enough), and it would be interesting to implement prompt searches in our framework to take this into account.

The width of the charged scalar decay (neglecting the electron mass) is given by

so we can have a long lifetime for the charged scalar via a small mass difference and/or coupling. We fix \(m_{S^\pm } - m_{{\tilde{\chi }}^0} = 90\ \mathrm {MeV} < m_\pi \) (so that even loop-induced decays to pions are impossible, since we want to consider a different channel to the wino model) so that the proper decay length is

We implemented this model in SARAH [86,87,88,89,90,91] to calculate the spectra and decays precisely, and produced a UFO [92] for MadGraph to generate events for the LHC searches. We computed the limits from disappearing tracks and heavy stable charged particle searches using HackAnalysis, and, since this can be a dark matter model, we also computed the dark matter relic density using MicrOMEGAs5.2 [93, 94]. The results are shown in Fig. 5. In contrast to the wino case, the HSCP and DT search exclusion regions do not overlap, because the DT limits are much weaker thanks to the small cross-section. On the other hand, the HSCP region is excluded by the dark matter density, while there is some complementarity between the DT and DM searches. The parameter space is then viable for smaller masses and both smaller and longer lifetimes.

Since the model only produces two charged tracks at a time, it is perhaps natural to wonder whether this could be used as a signature in itself. From Table 3, we see that single-track events have a background of \(\sigma _B \sim {\mathcal {O}} (0.1)\) fb in any given region. Let us assume that double-track events have no background. Denote the efficiency of a single track being detected as \(\varepsilon \); then the probability of detecting both tracks is \(~ \varepsilon ^2.\) For a given integrated luminosity L and signal cross-section \(\sigma \), we have \(S_1\) single-track events and \(S_2\) double-track events, where

For there to be an advantage in performing a dedicated double-track search, we need \(S_2 >1, S_1 \lesssim \sqrt{N_B} \) where \(N_B = L \sigma _B\) is the number of background single-track events. Then

The first criterion is rather hard to meet even at the HL-LHC; for cross-sections of \({\mathcal {O}}(100)\) fb and \(3\, \mathrm {ab}^{-1}\) we can reach \(\varepsilon > rsim 2\times 10^{-3}\). But then \(S_1/\sqrt{N_B} \sim {\mathcal {O}}(100)\) so the single-track search would still be (much) more powerful. Perhaps a dedicated analysis with more generous cuts would give larger signal efficiencies (at the expense of larger backgrounds, generally for the single-track case) but that is beyond the scope of this work.

6 A hackable recasting code

Here we describe the code that incorporates the recast of the CMS disappearing track search, and also another version of the ATLAS heavy stable particle search, so that both can be run on the same simulated events. This code is called HackAnalysis and is available at https://github.com/llprecasting/recastingCodes/tree/master/DisappearingTracks/CMS-EXO-19-010; it is maintained at https://goodsell.pages.in2p3.fr/hackanalysis where versions with any other analyses in future will appear.

As mentioned in the introduction, HackAnalysis is not meant to be a new framework, but merely to be used for prototyping so that analyses/features can be exported e.g. to MadAnalysis. It is therefore designed to be flexible and editable to give the user complete control at every stage without obfuscation. It has three modes of operation: (1) event generation using pythia; (2) reading LesHouchesEvent (.lhe) files (presumably from MadGraph) and showering through pythia; (3) reading hepmc2 files. In the latter two cases, either compressed (via gzip) or uncompressed files are accepted. In modes (1) and (2) multicore operation is possible (via pragma omp); in mode (2) this means the .lhe or .lhe.gz files should be split into one file per core (which can be performed automatically with a python script provided).

As a basis for the event handling, events from pythia or hepmc are converted to a common event format which is based on a modified version of the heputils (https://gitlab.com/hepcedar/heputils) package where, in compliance with the licence, the namespace is renamed (as HEP). In addition some code is taken from mcutils and in principle smearing can be applied identically to GAMBIT although this is not actually used for these analyses.

The code HackAnalysis is very lightweight, making use as far as possible of existing libraries; this means that editing and (re)building is very fast. It therefore requires pythia [69] (from version 8.303) for showering/event generation; fastjet [65] for jet clustering (in principle this could be slimmed down to fjcore, but it may be desirable to use the more advanced features for e.g. pileup subtraction); YODA (https://yoda.hepforge.org/) to read YAML files and handle histogramming (and the reading of HEPData efficiency tables if required, although so far no analyses rely on this feature) and hepmc2 if reading/writing that format is desired. Compilation is straightforward on a unix-based system; the user must only provide the paths for the necessary packages in the Makefile:

The only subtlety here is the YAMLpath; the code uses the YAML reader included in YODA, but a peculiarity of that package is that the header files are not installed in the installation directory, so this path must point to the directory where the YODA code is stored. Note that it would be straightforward to include a separate YAML reader at the expense of installing more packages.

Once the code is built, a library and three executables are created, named analysePYTHIA.exe, analysePYTHIA_LHE.exe and analyseHEPMC.exe, corresponding to modes (1), (2) and (3) respectively. All three accept a YAML file to specify settings such as which analyses to run, the names of pythia configuration files, names of output files, whether to include pileup, etc.

An example YAML file to run the program would be:

We show here the two analyses included with the initial release: the CMS disappearing track (DT_CMS) and ATLAS Heavy Stable Particle Search (HSCP_ATLAS) [76].

The output is a set of text files: an efficiency file (which contains the efficiency and uncertainty of each signal region) which resembles an SLHA format; a cutflow file (which prints the cutflows in a verbose text form); and a file of YODA histograms. Example input files, configuration files for pythia for modes (1) and (2) (for MLM/CKKW-L matching/merging) and python files for reading the output and calculating exclusion limits for the included analyses are provided. Moreover, code for generating and storing a pileup event file are provided.

7 Conclusions

We have presented an approach and a code to recast the CMS disappearing track searches using the full Run 2 data, and shown that in combination with heavy stable charged particle searches a large part of the parameter space can be excluded for a wide variety of models. We have presented extensive validation material and discussion of the technical challenges, as well as some exclusions for a new model.

It would now be interesting to apply the code to produce tables of efficiencies for each signal region for different classes of models (scalars with one or two tracks, vectors with one or two tracks) so that searches can be recast in a simplified models approach. Clearly, with three signal regions and six data-taking periods it is impractical to publish such tables in a paper, but they should ultimately be available online. It would also be useful to apply the results to complete models such as those in [21] or [23] and explore new scenarios. We hope to return to these in future work. Moreover, the analysis and approaches described here should also be made available in other frameworks; the implementation in MadAnalysis based on this work was recently completed [95]; see also [96] which appeared after this work.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: The data/codes are provided publicly and linked to in the paper.]

Notes

Of course metastable particles with lifetimes above one second are disfavoured cosmologically, but above one microsecond is effectively eternity for the LHC.

Combined by the LEP SUSY working group at http://lepsusy.web.cern.ch/lepsusy/Welcome.html.

References

J. Alimena et al., Searching for long-lived particles beyond the Standard Model at the Large Hadron Collider. J. Phys. G 47, 090501 (2020). https://doi.org/10.1088/1361-6471/ab4574arXiv:1903.04497

M. Cirelli, N. Fornengo, A. Strumia, Minimal dark matter. Nucl. Phys. B 753, 178 (2006). https://doi.org/10.1016/j.nuclphysb.2006.07.012arXiv:hep-ph/0512090

M. Cirelli, F. Sala, M. Taoso, Wino-like Minimal Dark Matter and future colliders. JHEP 10, 033 (2014). https://doi.org/10.1007/JHEP01(2015)041. arXiv:1407.7058. [Erratum: JHEP 01, 041 (2015)]

C.H. Chen, M. Drees, J.F. Gunion, A nonstandard string/SUSY scenario and its phenomenological implications. Phys. Rev. D 55, 330 (1997). https://doi.org/10.1103/PhysRevD.55.330. arXiv:hep-ph/9607421. [Erratum: https://doi.org/10.1103/PhysRevD.60.039901]

N. Arkani-Hamed, S. Dimopoulos, Supersymmetric unification without low energy supersymmetry and signatures for fine-tuning at the LHC. JHEP 06, 073 (2005). https://doi.org/10.1088/1126-6708/2005/06/073arXiv:hep-th/0405159

M. Ibe, S. Matsumoto, T.T. Yanagida, Pure gravity mediation with \(m_{3/2} = 10\)-\(100{{ m TeV}}\). Phys. Rev. D 85, 095011 (2012). https://doi.org/10.1103/PhysRevD.85.095011arXiv:1202.2253

L.J. Hall, Y. Nomura, S. Shirai, Spread supersymmetry with wino LSP: gluino and dark matter signals. JHEP 01, 036 (2013). https://doi.org/10.1007/JHEP01(2013)036arXiv:1210.2395

A. Arvanitaki, N. Craig, S. Dimopoulos, G. Villadoro, Mini-split. JHEP 02, 126 (2013). https://doi.org/10.1007/JHEP02(2013)126arXiv:1210.0555

M. Citron, J. Ellis, F. Luo, J. Marrouche, K.A. Olive, K.J. de Vries, End of the CMSSM coannihilation strip is nigh. Phys. Rev. D 87, 036012 (2013). https://doi.org/10.1103/PhysRevD.87.036012arXiv:1212.2886

M. Low, L.-T. Wang, Neutralino dark matter at 14 TeV and 100 TeV. JHEP 08, 161 (2014). https://doi.org/10.1007/JHEP08(2014)161arXiv:1404.0682

R. Mahbubani, P. Schwaller, J. Zurita, Closing the window for compressed Dark Sectors with disappearing charged tracks. JHEP 06, 119 (2017). https://doi.org/10.1007/JHEP06(2017)119. arXiv:1703.05327. [Erratum: JHEP 10, 061 (2017)]

H. Fukuda, N. Nagata, H. Otono, S. Shirai, Higgsino dark matter or not: role of disappearing track searches at the LHC and future colliders. Phys. Lett. B 781, 306 (2018). https://doi.org/10.1016/j.physletb.2018.03.088arXiv:1703.09675

M. Garny, J. Heisig, B. Lülf, S. Vogl, Coannihilation without chemical equilibrium. Phys. Rev. D 96, 103521 (2017). https://doi.org/10.1103/PhysRevD.96.103521arXiv:1705.09292

J.-W. Wang, X.-J. Bi, Q.-F. Xiang, P.-F. Yin, Z.-H. Yu, Exploring triplet-quadruplet fermionic dark matter at the LHC and future colliders. Phys. Rev. D 97, 035021 (2018). https://doi.org/10.1103/PhysRevD.97.035021arXiv:1711.05622

A. Bharucha, F. Brümmer, N. Desai, Next-to-minimal dark matter at the LHC. JHEP 11, 195 (2018). https://doi.org/10.1007/JHEP11(2018)195arXiv:1804.02357

A. Biswas, D. Borah, D. Nanda, When freeze-out precedes freeze-in: sub-TeV fermion triplet dark matter with radiative neutrino mass. JCAP 1809, 014 (2018). https://doi.org/10.1088/1475-7516/2018/09/014arXiv:1806.01876

A. Belyaev, G. Cacciapaglia, J. Mckay, D. Marin, A.R. Zerwekh, Minimal spin-one isotriplet dark matter. Phys. Rev. D 99, 115003 (2019). https://doi.org/10.1103/PhysRevD.99.115003arXiv:1808.10464

D. Borah, D. Nanda, N. Narendra, N. Sahu, Right-handed neutrino dark matter with radiative neutrino mass in gauged B–L model. Nucl. Phys. B 950, 114841 (2020). https://doi.org/10.1016/j.nuclphysb.2019.114841arXiv:1810.12920

G. Bélanger et al., LHC-friendly minimal freeze-in models. JHEP 02, 186 (2019). https://doi.org/10.1007/JHEP02(2019)186arXiv:1811.05478

A. Filimonova, S. Westhoff, Long live the Higgs portal! JHEP 02, 140 (2019). https://doi.org/10.1007/JHEP02(2019)140arXiv:1812.04628

A. Das, S. Mandal, Bounds on the triplet fermions in type-III seesaw and implications for collider searches. Nucl. Phys. B 966, 115374 (2021). https://doi.org/10.1016/j.nuclphysb.2021.115374arXiv:2006.04123

L. Calibbi, F. D’Eramo, S. Junius, L. Lopez-Honorez, A. Mariotti, Displaced new physics at colliders and the early universe before its first second. JHEP 05, 234 (2021). https://doi.org/10.1007/JHEP05(2021)234. arXiv:2102.06221

M.D. Goodsell, S. Kraml, H. Reyes-González, S.L. Williamson, Constraining electroweakinos in the minimal Dirac gaugino model. SciPost Phys. 9, 047 (2020). https://doi.org/10.21468/SciPostPhys.9.4.047arXiv:2007.08498

D. Curtin, K. Deshpande, O. Fischer, J. Zurita, New physics opportunities for long-lived particles at electron-proton colliders. JHEP 07, 024 (2018). https://doi.org/10.1007/JHEP07(2018)024arXiv:1712.07135

J. de Blas, et al., The CLIC potential for new physics. 3/2018 (2018). https://doi.org/10.23731/CYRM-2018-003. arXiv:1812.02093

M. Saito, R. Sawada, K. Terashi, S. Asai, Discovery reach for wino and higgsino dark matter with a disappearing track signature at a 100 TeV \(pp\) collider. Eur. Phys. J. C 79, 469 (2019). https://doi.org/10.1140/epjc/s10052-019-6974-2arXiv:1901.02987

R. Capdevilla, F. Meloni, R. Simoniello, J. Zurita, Hunting wino and higgsino dark matter at the muon collider with disappearing tracks. JHEP 06, 133 (2021). https://doi.org/10.1007/JHEP06(2021)133arXiv:2102.11292

S. Bottaro, D. Buttazzo, M. Costa, R. Franceschini, P. Panci, D. Redigolo, L. Vittorio, Closing the window on WIMP dark matter. Eur. Phys. J. C 82, 31 (2022). https://doi.org/10.1140/epjc/s10052-021-09917-9arXiv:2107.09688

ATLAS, M. Aaboud, et al., Search for long-lived charginos based on a disappearing-track signature in pp collisions at \( sqrt{s}=13 \) TeV with the ATLAS detector. JHEP 06, 022 (2018). https://doi.org/10.1007/JHEP06(2018)022. arXiv:1712.02118

A. Belyaev, S. Prestel, F. Rojas-Abbate, J. Zurita, Probing dark matter with disappearing tracks at the LHC. Phys. Rev. D 103, 095006 (2021). https://doi.org/10.1103/PhysRevD.103.095006arXiv:2008.08581

ATLAS, Search for long-lived charginos based on a disappearing-track signature using 136 fb\(^{-1}\) of \(pp\) collisions at \(\sqrt{s}\) = 13 TeV with the ATLAS detector (2021)

CMS, A.M. Sirunyan, et al., Search for disappearing tracks as a signature of new long-lived particles in proton-proton collisions at \(sqrt{s} =\) 13 TeV. JHEP 08, 016 (2018). https://doi.org/10.1007/JHEP08(2018)016. arXiv:1804.07321

CMS, A.M. Sirunyan, et al., Search for disappearing tracks in proton-proton collisions at \(sqrt{s} =\) 13 TeV. Phys. Lett. B 806(2020). https://doi.org/10.1016/j.physletb.2020.135502. arXiv:2004.05153

S. Kraml, S. Kulkarni, U. Laa, A. Lessa, W. Magerl, D. Proschofsky-Spindler, W. Waltenberger, SModelS: a tool for interpreting simplified-model results from the LHC and its application to supersymmetry. Eur. Phys. J. C 74, 2868 (2014). https://doi.org/10.1140/epjc/s10052-014-2868-5arXiv:1312.4175

G. Alguero, S. Kraml, W. Waltenberger, A SModelS interface for pyhf likelihoods. Comput. Phys. Commun. 264, 107909 (2021). https://doi.org/10.1016/j.cpc.2021.107909arXiv:2009.01809

F. Ambrogi, S. Kraml, S. Kulkarni, U. Laa, A. Lessa, V. Magerl, J. Sonneveld, M. Traub, W. Waltenberger, SModelS v1.1 user manual: improving simplified model constraints with efficiency maps. Comput. Phys. Commun. 227, 72 (2018). https://doi.org/10.1016/j.cpc.2018.02.007arXiv:1701.06586

F. Ambrogi et al., SModelS v1.2: long-lived particles, combination of signal regions, and other novelties. Comput. Phys. Commun. 251, 106848 (2020). https://doi.org/10.1016/j.cpc.2019.07.013arXiv:1811.10624

C.K. Khosa, S. Kraml, A. Lessa, P. Neuhuber, W. Waltenberger, SModelS database update v1.2.3 (2020). arXiv:2005.00555. https://doi.org/10.31526/lhep.2020.158

LHC Reinterpretation Forum, W. Abdallah, et al., Reinterpretation of LHC results for new physics: status and recommendations after run 2. SciPost Phys. 9, 022 (2020). arXiv:2003.07868. https://doi.org/10.21468/SciPostPhys.9.2.022

GAMBIT, P. Athron, et al., GAMBIT: the global and modular beyond-the-standard-model inference tool. Eur. Phys. J. C 77, 784 (2017). https://doi.org/10.1140/epjc/s10052-017-5321-8. arXiv:1705.07908. [Addendum: Eur. Phys. J. C 78, 98 (2018)]

GAMBIT, P. Athron, et al., Combined collider constraints on neutralinos and charginos. Eur. Phys. J. C 79, 395 (2019). arXiv:1809.02097. https://doi.org/10.1140/epjc/s10052-019-6837-x

A. Kvellestad, P. Scott, M. White, GAMBIT and its application in the search for physics beyond the standard model (2019). arXiv:1912.04079. https://doi.org/10.1016/j.ppnp.2020.103769

GAMBIT, C. Balázs, et al., ColliderBit: a GAMBIT module for the calculation of high-energy collider observables and likelihoods. Eur. Phys. J. C 77, 795 (2017). arXiv:1705.07919. https://doi.org/10.1140/epjc/s10052-017-5285-8

M. Drees, H. Dreiner, D. Schmeier, J. Tattersall, J.S. Kim, CheckMATE: confronting your favourite new physics model with LHC data. Comput. Phys. Commun. 187, 227 (2015). https://doi.org/10.1016/j.cpc.2014.10.018arXiv:1312.2591

J.S. Kim, D. Schmeier, J. Tattersall, K. Rolbiecki, A framework to create customised LHC analyses within CheckMATE. Comput. Phys. Commun. 196, 535 (2015). https://doi.org/10.1016/j.cpc.2015.06.002arXiv:1503.01123

D. Dercks, N. Desai, J.S. Kim, K. Rolbiecki, J. Tattersall, T. Weber, CheckMATE 2: from the model to the limit. Comput. Phys. Commun. 221, 383 (2017). https://doi.org/10.1016/j.cpc.2017.08.021arXiv:1611.09856

N. Desai, F. Domingo, J.S. Kim, R.R.d.A. Bazan, K. Rolbiecki, M. Sonawane, Z.S. Wang, Constraining electroweak and strongly charged long-lived particles with CheckMATE (2021). arXiv:2104.04542

E. Conte, B. Fuks, G. Serret, MadAnalysis 5, a user-friendly framework for collider phenomenology. Comput. Phys. Commun. 184, 222 (2013). https://doi.org/10.1016/j.cpc.2012.09.009arXiv:1206.1599

E. Conte, B. Fuks, MadAnalysis 5: status and new developments. J. Phys. Conf. Ser. 523, 012032 (2014). https://doi.org/10.1088/1742-6596/523/1/012032arXiv:1309.7831

E. Conte, B. Dumont, B. Fuks, T. Schmitt, New features of MadAnalysis 5 for analysis design and reinterpretation. J. Phys. Conf. Ser. 608, 012054 (2015). https://doi.org/10.1088/1742-6596/608/1/012054arXiv:1410.2785

E. Conte, B. Fuks, Confronting new physics theories to LHC data with MADANALYSIS 5. Int. J. Mod. Phys. A 33, 1830027 (2018). https://doi.org/10.1142/S0217751X18300272arXiv:1808.00480

J.Y. Araz, M. Frank, B. Fuks, Reinterpreting the results of the LHC with MadAnalysis 5: uncertainties and higher-luminosity estimates. Eur. Phys. J. C 80, 531 (2020). https://doi.org/10.1140/epjc/s10052-020-8076-6arXiv:1910.11418

J.Y. Araz, B. Fuks, G. Polykratis, Simplified fast detector simulation in MADANALYSIS 5. Eur. Phys. J. C 81, 329 (2021). https://doi.org/10.1140/epjc/s10052-021-09052-5arXiv:2006.09387

B. Dumont, B. Fuks, S. Kraml, S. Bein, G. Chalons, E. Conte, S. Kulkarni, D. Sengupta, C. Wymant, Toward a public analysis database for LHC new physics searches using MADANALYSIS 5. Eur. Phys. J. C 75, 56 (2015). https://doi.org/10.1140/epjc/s10052-014-3242-3arXiv:1407.3278

J.Y. Araz et al., Proceedings of the second MadAnalysis 5 workshop on LHC recasting in Korea. Mod. Phys. Lett. A 36, 2102001 (2021). https://doi.org/10.1142/S0217732321020016arXiv:2101.02245

A. Buckley, D. Kar, K. Nordström, Fast simulation of detector effects in Rivet. SciPost Phys. 8, 025 (2020). https://doi.org/10.21468/SciPostPhys.8.2.025arXiv:1910.01637

C. Bierlich et al., Robust independent validation of experiment and theory: Rivet version 3. SciPost Phys. 8, 026 (2020). https://doi.org/10.21468/SciPostPhys.8.2.026arXiv:1912.05451

J.M. Butterworth, D. Grellscheid, M. Krämer, B. Sarrazin, D. Yallup, Constraining new physics with collider measurements of Standard Model signatures. JHEP 03, 078 (2017). https://doi.org/10.1007/JHEP03(2017)078arXiv:1606.05296

A. Buckley, et al., Testing new-physics models with global comparisons to collider measurements: the Contur toolkit (2021). arXiv:2102.04377

ATLAS, G. Aad, et al., Expected performance of the atlas experiment—detector, trigger and physics (2009). arXiv:0901.0512

DELPHES 3, J. de Favereau, C. Delaere, P. Demin, A. Giammanco, V. Lemaître, A. Mertens, M. Selvaggi, DELPHES 3, a modular framework for fast simulation of a generic collider experiment. JHEP 02, 057 (2014). arXiv:1307.6346. https://doi.org/10.1007/JHEP02(2014)057

M. Ibe, S. Matsumoto, R. Sato, Mass splitting between charged and neutral winos at two-loop level. Phys. Lett. B 721, 252 (2013). https://doi.org/10.1016/j.physletb.2013.03.015arXiv:1212.5989

CMS, A.M. Sirunyan, et al., Performance of missing transverse momentum reconstruction in proton-proton collisions at \(\sqrt{s} =\) 13 TeV using the CMS detector. JINST 14, P07004 (2019). arXiv:1903.06078. https://doi.org/10.1088/1748-0221/14/07/P07004

M. Cacciari, G.P. Salam, G. Soyez, The anti-\(k_t\) jet clustering algorithm. JHEP 04, 063 (2008). https://doi.org/10.1088/1126-6708/2008/04/063arXiv:0802.1189

M. Cacciari, G.P. Salam, G. Soyez, FastJet user manual. Eur. Phys. J. C 72, 1896 (2012). https://doi.org/10.1140/epjc/s10052-012-1896-2arXiv:1111.6097

CMS, V. Veszpremi, Performance verification of the CMS Phase-1 Upgrade Pixel detector. JINST 12, C12010 (2017). arXiv:1710.03842. https://doi.org/10.1088/1748-0221/12/12/C12010

CMS, S. Chatrchyan, et al., The CMS Experiment at the CERN LHC. JINST 3, S08004 (2008). https://doi.org/10.1088/1748-0221/3/08/S08004

CMS, CMS Technical Design Report for the Pixel Detector Upgrade (2012). https://doi.org/10.2172/1151650

T. Sjöstrand, S. Ask, J.R. Christiansen, R. Corke, N. Desai, P. Ilten, S. Mrenna, S. Prestel, C.O. Rasmussen, P.Z. Skands, An introduction to PYTHIA 8.2. Comput. Phys. Commun. 191, 159 (2015). https://doi.org/10.1016/j.cpc.2015.01.024arXiv:1410.3012

J. Alwall, R. Frederix, S. Frixione, V. Hirschi, F. Maltoni, O. Mattelaer, H.S. Shao, T. Stelzer, P. Torrielli, M. Zaro, The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. JHEP 07, 079 (2014). https://doi.org/10.1007/JHEP07(2014)079arXiv:1405.0301

M.L. Mangano, M. Moretti, R. Pittau, Multijet matrix elements and shower evolution in hadronic collisions: \(W b {\bar{b}}\) + \(n\) jets as a case study. Nucl. Phys. B 632, 343 (2002). https://doi.org/10.1016/S0550-3213(02)00249-3arXiv:hep-ph/0108069

L. Lonnblad, Correcting the color dipole cascade model with fixed order matrix elements. JHEP 05, 046 (2002). https://doi.org/10.1088/1126-6708/2002/05/046

L. Lonnblad, S. Prestel, Matching tree-level matrix elements with interleaved showers. JHEP 03, 019 (2012). https://doi.org/10.1007/JHEP03(2012)019arXiv:1109.4829

L. Lönnblad, S. Prestel, Merging multi-leg NLO matrix elements with parton showers. JHEP 03, 166 (2013). https://doi.org/10.1007/JHEP03(2013)166arXiv:1211.7278

M. Dobbs, J.B. Hansen, The HepMC C++ Monte Carlo event record for high energy physics. Comput. Phys. Commun. 134, 41 (2001). https://doi.org/10.1016/S0010-4655(00)00189-2

ATLAS, M. Aaboud, et al., Search for heavy charged long-lived particles in the ATLAS detector in 36.1 fb\(^{-1}\) of proton-proton collision data at \(\sqrt{s} = 13\) TeV. Phys. Rev. D 99, 092007 (2019). arXiv:1902.01636. https://doi.org/10.1103/PhysRevD.99.092007

B. Fuks, M. Klasen, D.R. Lamprea, M. Rothering, Gaugino production in proton-proton collisions at a center-of-mass energy of 8 TeV. JHEP 10, 081 (2012). https://doi.org/10.1007/JHEP10(2012)081arXiv:1207.2159

B. Fuks, M. Klasen, D.R. Lamprea, M. Rothering, Precision predictions for electroweak superpartner production at hadron colliders with Resummino. Eur. Phys. J. C 73, 2480 (2013). https://doi.org/10.1140/epjc/s10052-013-2480-0arXiv:1304.0790

ALEPH, A. Heister, et al., Search for scalar leptons in e+ e- collisions at center-of-mass energies up to 209-GeV. Phys. Lett. B 526, 206 (2002). arXiv:hep-ex/0112011. https://doi.org/10.1016/S0370-2693(01)01494-0

ALEPH, A. Heister, et al., Absolute mass lower limit for the lightest neutralino of the MSSM from e+ e- data at s**(1/2) up to 209-GeV. Phys. Lett. B 583, 247 (2004). https://doi.org/10.1016/j.physletb.2003.12.066

DELPHI, J. Abdallah, et al., Searches for supersymmetric particles in e+ e- collisions up to 208-GeV and interpretation of the results within the MSSM. Eur. Phys. J. C 31, 421 (2003). arXiv:hep-ex/0311019. https://doi.org/10.1140/epjc/s2003-01355-5

L3, P. Achard, et al., Search for scalar leptons and scalar quarks at LEP. Phys. Lett. B 580, 37 (2004). arXiv:hep-ex/0310007. https://doi.org/10.1016/j.physletb.2003.10.010

OPAL, G. Abbiendi, et al., Search for anomalous production of dilepton events with missing transverse momentum in e+ e- collisions at s**(1/2) = 183-Gev to 209-GeV. Eur. Phys. J. C 32, 453 (2004). arXiv:hep-ex/0309014. https://doi.org/10.1140/epjc/s2003-01466-y

ATLAS, M. Aaboud, et al., Search for dark matter and other new phenomena in events with an energetic jet and large missing transverse momentum using the ATLAS detector. JHEP 01, 126 (2018). arXiv:1711.03301. https://doi.org/10.1007/JHEP01(2018)126

D. Sengupta, Implementation of a search for dark matter in the mono-jet channel (36.1 fb-1; 13 TeV; ATLAS-EXOT-2016-27) (2021)

F. Staub, SARAH (2008). arXiv:0806.0538

F. Staub, From superpotential to model files for FeynArts and CalcHep/CompHep. Comput. Phys. Commun. 181, 1077 (2010). https://doi.org/10.1016/j.cpc.2010.01.011arXiv:0909.2863

F. Staub, Automatic calculation of supersymmetric renormalization group equations and self energies. Comput. Phys. Commun. 182, 808 (2011). https://doi.org/10.1016/j.cpc.2010.11.030arXiv:1002.0840

F. Staub, SARAH 3.2: Dirac Gauginos, UFO output, and more. Comput. Phys. Commun. 184, 1792 (2013). https://doi.org/10.1016/j.cpc.2013.02.019arXiv:1207.0906

F. Staub, SARAH 4: a tool for (not only SUSY) model builders. Comput. Phys. Commun. 185, 1773 (2014). https://doi.org/10.1016/j.cpc.2014.02.018arXiv:1309.7223

M.D. Goodsell, S. Liebler, F. Staub, Generic calculation of two-body partial decay widths at the full one-loop level. Eur. Phys. J. C 77, 758 (2017). https://doi.org/10.1140/epjc/s10052-017-5259-xarXiv:1703.09237

C. Degrande, C. Duhr, B. Fuks, D. Grellscheid, O. Mattelaer, T. Reiter, UFO—the universal FeynRules output. Comput. Phys. Commun. 183, 1201 (2012). https://doi.org/10.1016/j.cpc.2012.01.022arXiv:1108.2040

G. Bélanger, F. Boudjema, A. Goudelis, A. Pukhov, B. Zaldivar, micrOMEGAs5.0: freeze-in. Comput. Phys. Commun. 231, 173 (2018). https://doi.org/10.1016/j.cpc.2018.04.027arXiv:1801.03509

G. Belanger, A. Mjallal, A. Pukhov, Recasting direct detection limits within micrOMEGAs and implication for non-standard Dark Matter scenarios. Eur. Phys. J. C 81, 239 (2021). https://doi.org/10.1140/epjc/s10052-021-09012-zarXiv:2003.08621

M. Goodsell, Implementation of a search for disappearing tracks (139/fb; 13 TeV; CMS-EXO-19-010) (2021)

J.Y. Araz, B. Fuks, M.D. Goodsell, M. Utsch, Recasting LHC searches for long-lived particles with MadAnalysis 5 (2021). arXiv:2112.05163

Acknowledgements

We thank Brian Francis for very helpful discussions about the CMS analysis. We thank Sabine Kraml, Humberto Reyes Gonzalez and Sophie Williamson for collaboration on related topics; Andre Lessa for helpful discussions; and Jack Araz, Benjamin Fuks, Manuel Utsch for collaboration on LLP recasting in MadAnalysis, and Benjamin Fuks for comments on the draft. We thank the organisers of the LLP workshops https://longlivedparticles.web.cern.ch/, especially MDG acknowledges support from the grant “HiggsAutomator” of the Agence Nationale de la Recherche (ANR) (ANR-15-CE31-0002).

Author information

Authors and Affiliations

Corresponding author

Appendix A: Cutflow comparisons

Appendix A: Cutflow comparisons

See Tables 6, 7, 8, 9, and 10.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3

About this article

Cite this article

Goodsell, M.D., Priya, L. Long dead winos. Eur. Phys. J. C 82, 235 (2022). https://doi.org/10.1140/epjc/s10052-022-10188-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-022-10188-1