Abstract

The capture of scintillation light emitted by liquid Argon and Xenon under molecular excitations by charged particles is still a challenging task. Here we present a first attempt to design a device able to have a sufficiently high photon detection efficiency, in order to reconstruct the path of ionizing particles. The study is based on the use of masks to encode the light signal combined with single-photon detectors, showing the capability to detect tracks over focal distances of about tens of centimeters. From numerical simulations it emerges that it is possible to successfully decode and recognize signals, even of rather complex topology, with a relatively limited number of acquisition channels. Thus, the main aim is to elucidate a proof of principle of a technology developed in very different contexts, but which has potential applications in liquid argon detectors that require a fast reading. The findings support us to think that such innovative technique could be very fruitful in a new generation of detectors devoted to neutrino physics.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

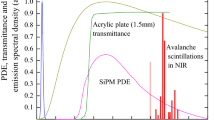

This work is aimed at introducing a new and more efficient collection method of prompt photons emitted by charged particle in noble liquid filling Time Projection Chambers (TPCs), in order to obtain track images, instead of simple triggering signals. As it is known, noble elements in the liquid phase (LAr, LXe) are used as target and detector in high energy physics. In these liquid gases, relativistic charged particles produce large amount of scintillation light in the Vacuum UltraViolet (VUV) range. However, in TPCs the event reconstruction is just based on the collection of drift electrons and the fast light signal is exploited only to set the trigger time \(t_0\) for the data acquisition. The benefits of this novel technique are several, as rate capability, especially relevant for accelerator based experiments, and possibility to work in magnetic field. On the other side, such an imaging detector presents also critical issues. For example, performance of conventional optics in VUV range is very poor and readout electronics must be operated in cryogenic conditions with single-photon detection capability.

In order to face these new challenges we are conceiving a system where the light signal is filtered by Coded Masks and read by Silicon Photomultipliers (SiPMs). The latters guarantee the required performances and offer the advantage of robustness, large number of densely packed small pixels and strong reduction of dark noise at low temperature. The coded masks should have a sufficiently high photon detection efficiency without the use of special materials and complex designs. They provide a sufficiently wide and deep field of view and a large aperture, in such a way to minimize the number of SiPMs.

As proof of principle of the above quoted imaging method, we assume the Near Detector [1] of the DUNE experiment [2] as an inspiring situation, without entering into a full realistic modeling of it. Taking into account that the detector will be hit by the most intense high-energy neutrino beam, the high-rate capability is mandatory. In particular we plan to have a LAr volume in the SAND apparatus (System for on-Axis Neutrino Detection) where a \(0.6\ T\) magnetic field is present, equipped with coded masks and SiPM arrays. The typical energies of the particles (mainly muons) produced in neutrino interactions are sufficiently high to generate \(\approx 10^4\ \mathrm{photons/sr/cm}\) at the \(\lambda _{VUV} \simeq 128\, nm\) wavelength. The imaging reconstruction of neutrino events in the LAr target will be exploited not only to continuously monitor the neutrino-beam spectrum but also to measure neutrino fluxes and cross-section in LAr in order to constrain nuclear effects. However, for the specific application described above, the designers will face the problem of balancing the beam spill length and the long time scintillation constant, leading to possible multiple interactions. This aspect is outside the scope of the present paper and it will concern an evaluation of: the effective probability of events occurring in the fiducial volume “seen” by the masks, the electronic timing, the filtering of the diffused light background and the optical effects of mixture of noble liquids on the light transmission.

In this work, the basic principles of the imaging technique with coded masks are presented. The exploitation of other optical schemes for VUV photons is also under consideration and they will be the topic of future papers.

2 Imaging by coded masks

It is well understood that a small pinhole is required to achieve high spatial resolution. But a single pinhole also dims the light in the image, so much that it may be below the sensitivity of light-sensors. A matrix of multiple pinholes increases light collection, but the source reconstruction from multiple superimposed images becomes more convoluted, and this approach requires to exploit fast numerical methods [3, 4]. Each bright point of the light source deposits an image of the pinhole array on the viewing screen. Knowledge of the geometry of the pinholes arrangement (the coded mask) allows for an efficient numerical reconstruction of the source [5]. Initially, random arrays of pinholes, used in X-ray astronomy [6, 7], were replaced by binary Uniformly Redundant Arrays (URAs) [8, 9], which were shown to be optimal for imaging [10,11,12,13,14,15,16,17,18,19,20,21]. The peculiar autocorrelated distribution of pinholes allows to contain a quasi-uniform amount of all possible spatial frequencies. Thereby, allowing high spatial resolution without limiting the image brightness. Furthermore, more information about the source object is encoded in the scaling of the shadow image of the object points, so leading to a stereographic effect. In particular, hard X-ray astronomy commonly uses URA-based coded masks [22,23,24] and their generalizations, like MURA matrices (Modified URAs), which will be described in the next sections, as well as spectroscopy [25], medical imaging [26,27,28], plasma physics [29] and homeland security [30]. In the type of applications we are interested in, the light sources are posited at length scales of the optical apparata (from meters down to centimeters), then we are mainly concerned with the so-called Near Field settings. They imply important geometrical effects, leading to distortions in the collected data and the presence of artifacts in reconstruction of the image. Thus, this situation has to be carefully considered, in order to improve the reconstruction technique.

2.1 General geometrical settings

By scanning the literature, we notice that several simplifying approximations adopted elsewhere, say in astronomy, do not apply in the experimental conditions we assume here. More precisely, specific features/requirements of our setup are listed in the following:

-

Near Field sources, that is their typical spatial extension and distance from the detector are of the same order of magnitude as the optical apparatus (typically tens of centimeters),

-

non-planar sources,

-

filiform sources,

-

weak sources (\( \approx 10 ^ 4\ \mathrm{photons/sr/cm}\))

-

non-static sources,

-

limited detector information capacity ( \(10^2 - 10^3\) electronic digital channels),

-

need for a 3-D reconstruction.

These settings are mathematically described by a function \({{\mathcal {O}}}\left( {\mathbf {r}},z, {{\hat{\varOmega }}}, t\right) \) that denotes the light density of the source to be detected. The variable t is the time and the other ones are drawn in Fig. 1 and discussed in the following. Each point of the source is labeled by the coordinates \((\mathbf {r}, z) = (x, y, z)\). The mask and detector planes are parallel to each other and are placed along the z-axis. A source point S, emitting in the direction \({{\hat{\varOmega }}}\), leaves a projection on the mask plane, whose coordinates are labeled as \(\mathbf {r}^{\prime \prime } = (x^{\prime },y^{\prime })\), and a projection on the detector plane, whose coordinates are labeled as \(\mathbf {r}^{\prime \prime } = (x^{\prime \prime }, y^{\prime \prime })\).

Moreover, we assume that the diffraction effects can be neglected, as the aperture size \(p_m\) of the single mask pixel is sufficiently large (\(p_m \gg \lambda _{VUV}\)) to make the geometrical optics approximation still good. Also, interference effects of light coming from the different apertures are neglected. These strong assumptions will be verified in future works, distinguishing them from genuine noise effects. Further, we assume light to be monochromatic, disregarding at the first stage the effects of finite band width in the spectrum of the emitted light.

In an approximate modeling of the imaging phenomenon in a perfectly transparent medium (for instance see [31]), we further assume a planar, isotropic and time-independent averaged density of the emitted photons at \(\left( {\mathbf {r}}, z \right) \), thus

This source provides an image on a plane detector placed at the distance \(a+b\) from the reference frame origin, where a is the focal plane-mask distance and b is the detector-mask distance. At the point \({\mathbf {r}}^{\prime \prime }\) on the detector plane \(z = a +b\), the image is described by the collected density of photons \(P\left( {\mathbf {r}}^{\prime \prime } \right) \) and it is provided by the integral linear mapping

where the scaled variable source density \(O\left( \mathbf {\xi } \right) = O_0\left( \frac{a}{b} \mathbf {\xi } \right) \) is filtered by a kernel, which is the product of a geometrical projective factor and the transmission aperture mask function \(A \left( {\mathbf {r}}^{\prime } \right) , \) where \({\mathbf {r}}^{\prime } = \frac{a}{a+b}\left( {\mathbf {r}}^{\prime \prime } + \mathbf { \xi }\right) \) denotes the points belonging to the mask plane at \(z = a\). Typically, \(A \left( {\mathbf {r}}^{\prime } \right) \) is a function taking values on \(\left\{ 0,1 \right\} \) on the mask plane (supposed to be parallel to the detector plane), whose domain is the union of non intersecting squares of equal side length \(p_m\), defining the apertures of the mask. The values 1 correspond to apertures and the values 0 to blind regions.

Ultimately, the function \(A \left( {\mathbf {r}}^{\prime } \right) \) is completely defined by a binary matrix, denoted by a(i, j), of suitable dimensions \(q_x \times q_y\) (not necessarily equal to each other) corresponding to the optically useful region. Thus, a point-like source located in \({\mathbf {r}}\) on the \(z= 0\) plane contributes to the image, if the vector \({\mathbf {r}}^{\prime }\) is such that \(A \left( {\mathbf {r}}^{\prime } \right) = 1\). Moreover, if a whole mask aperture is illuminated by a point source, the projected image on the sensor screen will have the size \(p_m (a + b)/a\).

The expression (2.2) further simplifies in the Far Field approximation, i. e. \(|{\mathbf {r}}^{\ ''} - {\mathbf {r}}| \ll a+b\), and accordingly the geometrical projective factor reduces to 1. Thus, one obtains the image function in the Far Field form as

When \(\frac{a}{b}\frac{| \mathbf { \xi }|}{|{\mathbf {r}}^{\prime \prime } |} = \frac{| {\mathbf {r}}|}{|{\mathbf {r}}^{\prime \prime } |} \lesssim 0.1\) the paraxial approximation still holds and, in (2.2), one can resort to a truncated Taylor series expansion of the geometrical projective factor around \( \frac{| {\mathbf {r}}|}{|{\mathbf {r}}^{\prime \prime } |} = 0\). In most of the applications, the expansion up to second-order [32] is considered, but we will limit ourselves to the zero-order, thus providing a \(\mathbf { \xi }\)-independent distortion of the image. Thus, due to the finite source/detector distance, the geometrically distorted density can be approximated by a corrected correlation (2.3) according to

which can be used in the reconstruction with the same procedure. In the setting we are going to consider, the correction introduced by the prefactor in (2.4) is a function of \({\mathbf {r}}^{\prime \prime }\), radially increasing its value up to 5–6% at the border of the mask with respect to the value at its centre. This geometric distortion of the collected intensity of the image may be of some relevance in the case of long tracks, crossing the field of view. Otherwise, for paraxial sources of angular apertures \(< 10^{\circ }\), the correction may be completely discarded.

2.2 Focal plane

The quality of the imaging process is critically determined by the technological characteristics of the photodetectors, intended for the capture and recording of photons arriving at the detector. Without going into further details, let us assume that the sensitive region is covered by a square grid of pixels, each with a \(p_d\) side.

A crucial aspect of the coded mask imaging is the existence of a special plane, called focal plane, parallel to both the mask and the detector planes. It emerges by observing that, in general, the projection of a mask aperture does not cover exactly an integer number of pixels. In fact, the size of the aperture shadow depends on the factor \((a+b)/a\) and it is projected on a number

of detector pixels. Because of the discrete character of the coding procedure, in order to avoid generic fractional covering of the photosensors, which will lead to defocusing and artificial effects in the reconstruction, it is clear that \(\alpha \) has to take only integer values. Thus, a focal plane corresponds to take \(\alpha = 1, 2,\ldots \) and, correspondingly, it determines the distance source-mask a, if all the other parameters are fixed by technological requirements. On the other hand, a is constrained by the physics we are interested in. Typically, we will privilege the plane corresponding to \(\alpha = 1\).

The aspect to outline in this context is that the process of reconstruction provides a representation of the light source on the focal plane. Thus, for our research, the particle filamentary tracks are directly reproduced only when they lay on the focal plane, or cross it at a small angle. Then, a question to be answered is how to estimate the focal depth of the coded system and which are the corrections to be implemented, to obtain a suitable reconstruction.

A further consequence of this geometrical setting is the concept of Field of View (FoV), defined as the portion of the focal plane that projects the entire pattern of the mask on a finite size detector. Equivalently, one may consider the counterimage on the focal plane seen by a single aperture. That will be a square of side length \(l_m = p_m (a+b) / b\). Hence the FoV is simply a rectangle of area \( (q_x \times l_m) \times (q_y \times l_m)\), where \(q_x\) and \(q_y\) are the number of rows and columns in the coded mask matrix, respectively. In this perspective, the focal plane is a covering of a set of squares (cells) of minimal side length \(l_{res} = p_d\ a /b\), each of them projected one-to-one on a detector pixel of area \(p_d \times p_d\). Thus, \(l_{res}\) is the resolution length of the system, whose evaluation for \(b/a \ll 1\) is \( l_{res} \simeq l_m \). The parameter \(l_{res}\) is particularly relevant, since it predicts the ability to distinguish different sources in the FoV. Furthermore, \(\theta _{FoV}\) is the angle under which \(l_m\) is seen by the detector plane.

3 Decoding: general aspects

Our aim is to decode the experimental image \(P\left( {\mathbf {r}}^{\, ''}\right) \), also in the corrected form (2.4), in order to reconstruct the source function \(O\left( {\mathbf {r}}\right) \). To this purpose, if formula (2.3) still holds, we need to find a suitable kernel function for the decoding operator G, such that \( ( A \otimes G ) \left( {\mathbf {r}}\right) = \delta \left( {\mathbf {r}}\right) \). Thus, the reconstruction problem of the source function in terms of the given image is ruled by

where \(\star \) is convolution product.

As seen above, the mask function \(A\left( {\mathbf {r}}\ ' \right) \) naturally introduces a discretization, described by the matrix \(a\left( i, j \right) \) and the scale parameter \(p_m\). Therefore one has to look for a discrete version \(g\left( i, j \right) \) of the decoding kernel G, accompanied with suitable correlation conditions of the form

To this aim, it was shown in [10, 12] that an optimal compromise between the reduction of coding noise (or artifacts) and the amplification of coherent effects, known also as discretization noise, is obtained if the periodic autocorrelation function (PACF) of the aperture array has constant sidelobes, i.e.

where the peak K and the sidelobe parameter \(\lambda \) are numbers to be determined. Therefore, by combining (3.3) and (3.2) it is very easy to compute the decoding kernel of G, which will be of the form \(g\left( i, j \right) = \frac{a\left( i, j \right) }{K-1} - \frac{\lambda }{K \left( K-1\right) }\). Arrays with this property are commonly referred to as Uniformly Redundant Arrays (URAs), as originally introduced by Fenimore and Cannon [10] for the special case \(q_y = q_x +2\) both prime integers. The construction of such a family of matrices is based upon quadratic residues in Galois fields \(GF(p_1, \dots , p_n)\) (\(p_i\) are integer powers of prime integers) [9]. We consider here a slight variation of URAs, called Modified Uniformly Redundant Arrays (MURAs) [9, 20], which is a family of arrays obtained by the method of the quadratic residues for \(q_y = q_x = q\) prime integer, but possessing a PACF with two-valued sidelobes, i. e. \(\lambda _1\) and \(\lambda _2\), instead of a single one.Footnote 1 For large q it can be proved that the ratio (called the open fraction) of the apertures with respect to the total number of the matrix elements rapidly tends to \(50\%\).

MURAs offer the advantage to be square matrices with open fraction \(\sim 50\%\), furthermore the algorithmic construction and the decoding kernel of G are simple modifications of the URA’s case. Since the construction method of the MURA masks is well known from the literature, here we report only the basic formulas for a \(q \times q\) matrix,

where \(a_1\) is a Legendre sequence of order q, given by

for a generating element \(\mu \) of GF(q) (see Fig. 2). To enlarge the FoV, we will consider combinations (mosaic) of masks, assembled side by side in juxtaposition and possibly with rows and columns cyclically permuted, which do not change the PACF function.

Once obtained the image of a source on the detector screen \(P_{det}\), it will be decodified by using a suitable discretization of the formula in (3.1) and the deconvolution matrix in (3.4), we

Using such a general procedure, one may manage simulations, up to now only geometrical but meaningful (Sect. 6), of several light signals for testing the general properties of the coded mask technique.

3.1 Integer affine transformations

In order to extract the basic properties of the imaging process via coding masks, we introduce here an algebraic approach, based on the observation that the image of a point-like source S on the detector can be represented as an inhomogeneous affine mapping, from the mask points to the detector points, parametrically dependent on the S coordinates. After a suitable change of the reference frame, the source is coordinated by \(S = \left( \mu _S\, p_m, \nu _S\, p_m, \delta _S\, a\right) \), in terms of the aperture pitch \(p_m\) on the mask plane and the focal distance a. Specifically, along the axis orthogonal to the mask, the third coordinate \( z = \delta _S\, a\) is expressed by the relative distance \(\delta _S\) of the source from the focal plane. Thus, the source is located between this plane and the mask for \( 0<\delta _S <1 \), otherwise it lies beyond it for \( \delta _S> 1 \). In its turn, \(S_\bot = p_m \left( \mu _S, \nu _S \right) \) expresses the orthogonal projection of the source position on the mask plane. Likewise, the mask apertures will be denoted by the set of coordinates \(H = p_m {\mathcal {H}} \), where \({\mathcal {H}}\) is a list of ordered pairs of integers \(\left( \mu , \nu \right) \) only, identifying a lattice of point-like apertures on the mask. Thus, assuming the point-like source S on the focal plane, we can represent the discretized image density as the affine transformation over a \(q^2\)-dimensional vector space (from the mask lattice) by

which singles out a lattice of points on the detector plane. Since the blind pixels in the mask are excluded from the mapping, actually only a number, equal to the peak value of the mask \(K = \frac{q^2-1}{2}\), of correspondences is needed to be computed.

Since the above mapping is equivalent to projecting only one light ray from the source to the detector through a single point (for instance its center) of the aperture, it is more realistic to consider more of such points, in particular, closer to the aperture sides. Then, one may consider \(\rho + 1\) crossing points for each aperture, generating the set of coordinates

Moreover, as mentioned above, in order to widen the Field of View (FoV), a mosaic of four masks can be implemented, further enlarging the set \({\mathcal {H}}_{\sigma }\). Hence, the previous mapping (3.7) can be modified for sources in generic positions and rescaled by the pitch \(p_d\) of the detector pixel as follows

Finally, since the detector pixels are also quantized and identified by a lattice of pairs of integers (in \(p_d\) units), each projected point \(\widehat{T_{S} } {H} \) is properly assigned to a specific pixel by rounding up

which is a nonlinear and not invertible operation. Thus, part of the complete information will be lost and an intrinsic discretization noise is introduced. So, a statistical analysis may be required to deal with the detected data. Thus, the representation (3.9)–(3.10) of the coded mask action allows us to algebraically study some of the main properties of the acquisition and reconstruction algorithms. In particular, one can obtain a dual spectral description of the masks. To this aim, let us observe that: (1) the individually resolved sources belong to the lattice of points on the FoV \(\left\{ S_{\bot \, i, j } \right\} = l_{res} \times \left\{ \left( i, j \right) \right\} \), with i, j running over \(1 - q\), and (2) the relation (3.9) is linear in \(S_\bot \). Thus the \(q^2 \times q^2\) matrix of columns \(\varPhi = \left\{ \widehat{T_{{S_1} }} {H}, \dots , \widehat{T_{{S_{q^2}} } }{H} \right\} \) represents a linear application from the \(q^2\)-dimensional space of the discretized light distribution, where \(\left\{ S_{\bot \,k } \right\} _{k = 1, \dots , q^2}\) forms a basis in that space. The target discretized image space Y represents the pixel measurements on the discretized device plane. Thus, one handles with a fully discretized version of the coded mask transfer matrix for the linear mapping

possibly encoding the intrinsic and extrinsic noise into the vector E. Multiple point-like sources superimpose their images which, because of the limited resolution power or by the discretization, are defocused on two or more surrounding device pixels. Moreover, here it is important to notice from (3.9) that the dependency of \(\varPhi \) on the relative distance \(\delta _S\) is non linear and needs a separate discussion. Actually, in the on-focal-plane case the relations (3.9)–(3.10) provide the transfer matrix \(\varPhi \), which could be algebraically derived from its very definition in terms of the MURA mask. It turns out that \(\varPhi \) is a binary symmetric non degenerate matrix, its inverse represents the action of the corresponding decoding operator G, and its spectrum is real and by induction can be proved to be

where the eigenvalue degeneracy is given. As a consequence, any combination of sources in the focal plane is decomposed in the sum of \(q^2\) eigenvectors

of \(\varPhi \). Their images are simply scaled, or reflected-scaled, only by the two distinct, but very close, factors \( \pm \frac{q\pm 1}{2}\) (a quasi-flat spectrum is a remarkable property allowing for good reconstructions), except for the non-degenerate eigenvalue K. The corresponding eigenvector, say \(\hat{e}_0\), has all equal components, without specific information about the details of a generic source. However, since the other eigenvectors contain negative components, implying “unphysical” sources, \(\hat{e}_0\) is needed to correctly reconstruct the source.

On the contrary, out of the focal plane (\(\delta _S \ne 1\)), numerics is needed to deal with the prescribed relations (3.9)–(3.10). By using the same lattice of single sources as above, the matrix \(\varPhi \) loses the previous simple structure: it is not symmetric anymore and its elements take values over a finite set of real positive numbers. However, at least for the explored values \(\delta _S \approx 1\), these matrices are still diagonalizable, but their eigenvalues take complex values and are not degenerate. Still, there exists a maximal isolated real eigenvalue, the others appear in conjugated pairs, whose absolute values fill a band, extending from the degenerated values indicated in (3.12) to 0. So, the spectrum is not longer quasi-flat and the phases make the eigenvalues migrate in the disk around the origin of the complex plane of radius \(\approx q/2\). This corresponds to a superposition of many scalings and rotations of the image around the axes of the optical system. Even if at the moment we do not have any analytic tool to describe such a situation, remarkably a \(\delta _S\)-dependent rescaling of the source lattice allows to find a pure real spectrum for \(\varPhi \), which becomes symmetric, but still the eigenvalues range over a band of the order q near 0. The rescaling is of the order of \(\delta _S\), even if its exact expression for restoring the ideal simple spectrum (3.12) is not achieved yet. Several different techniques to calibrate such a factor are actively under investigation with the aim to realize a numerical focusing method.

4 Design specifications

Since the photosensors will be arranged in a square matrix, we will consider MURA coded masks. Even if conceptually this is not necessary, the below defined geometries are a compromise among the technological limitations (available matrices of SiPM photosensors, electronic and mechanical constraints, allowed heat dissipation rate in the scintillation liquid) and the image reconstruction requirements. In other terms, we would explore here imaging systems involving few sensor channels, which in a more generic context may be an arbitrarily scalable factor. As mentioned above, to enlarge the FoV, we consider a mosaic of masks. After inspecting several types of assembling, we arrived at the conclusion that the best solution consists of four cyclically arranged masks. By exploiting the cyclic shift property of the MURAs, we periodically permute columns and rows also on the mosaic to optimize the resolution of the paraxial light sources. Simply, the so built mosaic allows us to expand the region of light collection with the same basic pattern. Thus, sources at large angular position with respect to the normal at the mask can project on the detector screen their images coming from different apertures. We have to stress that the detector array keeps the same number of rows and columns as a single mask. Furthermore, after a restriction of the image on the effective detector matrix, the deconvolution procedure will proceed as usual.

4.1 The 6 detectors setup

Most of the previous considerations on the use of the coded masks of small rank and in the near field conditions suggest that their stereographic properties are partially shadowed in the reconstruction of a source. Thus, the obvious solution is to expand the detector dimensions or, alternatively, try to dispose more of them in different configurations, allowing to detect the true spatial extension of the tracks we are looking for. Thus, we arrive at the concept of a spatially distributed system of coded masks. In particular, in the present paper we propose to consider a Stage of Observation, bounded by pairs of coaxial parallel coded mask devices, as schematically represented in Fig. 3. The main features of such a setup are:

-

1.

6 mask mosaics define a cubic Stage for the physics of interest;

-

2.

the masks are identical in a \(2\times 2\) mosaic;

-

3.

each pair of parallel mosaics shares the same symmetry axis;

-

4.

the 6 SiPM detectors are coplanar to the mosaics, at the same distance b from the coupled mask;

-

5.

the center of the Stage is the origin of an orthogonal reference system;

-

6.

the masks are as far apart from the origin exactly as the focal distance a, thus the coordinate planes passing through the origin are themselves focal planes.

Furthermore, one can outline several details, namely:

-

1.

the detectors will provide redundant information, which has to be simplified/exploited;

-

2.

a lower number of devices can be used, exploiting more efficiently the performed measurements;

-

3.

a primary interest will be to study the possible measurements by means of couples of parallel coded masks;

-

4.

a second step is to exploit the performances of couples of orthogonal masks;

-

5.

since presently the geometry of the specific experimental imaging-system cannot be fully determined, the cubic setup can be deformed in a more general parallelepiped structure, with non-coincident focal planes and shifted masks.

Following the previous prescriptions, many different experimental setups have been studied, taking into account actual technical requirements, related to the intensity of light emission, number of electronic channels per detector array and geometry of the Stage of Observation. However the analyses presented in this paper are based on the simulation performed according to just one design. The parameters of a single device are reported in Table 1.

5 The single pinhole camera approximation

The problem of the spatial localization of the source is hardly solved by using only one coded small-order mask, then we need to use more than one. The simplest considered mask arrangement is made up by two parallel coded systems, sharing the same focal plane. For sake of simplicity, we suppose that the apertures of the two masks result to be co-axial, that is, any orthogonal line to mask planes intersects the corresponding apertures on both of them. However the results we are going to present can be extended also to non-aligned masks.

In order to obtain simple formulas to reconstruct the image, we approximate each coded mask with a single pinhole camera. Such an effective (point-like) aperture is set at the center of each mask, in the origin of the reference frame of the mask (see Fig. 4, left). Let us call \(O_A\) and \(O_B\) the center points of the masks on the left (A) and on the right (B), respectively, with the coinciding axes. In this scheme the imaging process is reduced to a projective application of the source points on the focal plane through the poles \(O_A\) and \(O_B\). For the stipulated approximation to be valid, the source must be sufficiently far from the mask and the angle subtended by two different apertures of the mask has to be small. Then, taking into account the existence of a preferred focal plane, the approximation validity interval can be expressed as

where q as above is the dimension of the mask and L is a typical transverse distance of the source from the center of the FoV.

In practice, let us fix a point-like source S located in the space between the two masks, with coordinates \(\left( x_S, y_S, z_S\right) \) with respect to a right-handed frame of reference \(\left( O, x, y, z\right) \). We denote by \(y_A = |y_S- a|\) and \(y_B = |y_S+a|\) the length of the projections on the y-axis of the segments \(\overline{O_A S}\) and \(\overline{O_B S}\) with the restriction

The projection of S on the focal plane is done by the intersection of two straight lines of the bundle through S and \(O_{A, B}\), respectively. These intersections are denoted by \(P_A = \left( x_A, a, z_A\right) , \quad P_B = \left( x_B, a, z_B\right) \), which belong to a line passing through the intersection of the axis system O with the focal plane. This can be proved by elementary geometry. Of course, the reconstruction of sources closer to a mask is seen more far apart on the focal plane, but closer to the center O when seen from the opposite side. The Cartesian equations of these two lines are

and their intersections are readly found to be

The first two previous equations represent the harmonic mean of the A and B coordinates. Such elementary formulas are of great help in localizing sources. In fact, it is enough to compute for the same point-like source the x, z coordinates on the focal plane seen by the two masks and compute their harmonic mean, providing the correct value.

Left – A source S seen by a pair of single-pinhole cameras sharing the focal plane (\(f_A = f_B\)). \(P_A\) and \(P_B\) are the apparent positions observed by \(O_A\) and \(O_B\), respectively. Right – A source S seen by a pair of single-pinhole cameras with distinct focal planes (\(f_A \ne f_B\)), separated by a supplementary distance s. Again, \(P_A\) and \(P_B\) are the apparent positions observed by \(O_A\) and \(O_B\), respectively

In the more general case of non co-focal masks, in the same approximation (see Fig. 4, right) analogous formulas hold, namely

where s is the separation distance of the focal planes. This is a significant design parameter, because the photon collection and the spatial resolution depend on it. Also, the configuration with \(s<0\) (focal plane closer to the opposite mask) can be implemented.

Several configurations of non planar sources have been simulated and successfully analyzed by calculating the harmonic mean. These checks are partially reported in the following (Sect. 6.1).

Finally, the method is not particularly useful in the numerical evaluation of the third coordinate (\(y_S\) in this case) since its estimate is affected by large uncertainty. This behaviour can be understood (disregarding the effect of the quadratic intensity falling off with the distance) by noticing that the localization procedure of a point-like source performed here is equivalent to establishing a one-to-one correspondence between the set of sequences of \(2^{q\times q}\) bits and the set of adjacent convex 3-polytopes, generated by the planes emerging from the sensor devices and tangentially intersecting the mask apertures. The polytopes tile the space in front of the mask, but their shape is not regular, nor their sizes. In particular, in correspondence with the focal plane, there is a stratum of polytopes, which are elongated in the orthogonal direction about ten times the transversal section size \(\sim l_{res}\). Thus, they allow us to determine pretty well the coordinates of the sources in that plane, but very roughly in the direction of the mask axes.

Assuming that the association of pixels on different parallel detectors is correct, the error on \(x_S\) is given by the following formulaFootnote 2

where \({\tilde{\sigma }} = l_{res}/\sqrt{12}\). Same formula can be used for the second coordinate (\(z_S\) in this example).

However, it is necessary to stress that a larger error can be introduced by wrong association of pixels on opposite detectors. This association can be driven by topological criteria case-by-case. Here we want to stress that the associated pixels must be on the same Cartesian quadrant and on the same line with respect to the system origin (look at the position of \(P_A\) and \(P_B\) in Fig. 4, left).

5.1 3-D reconstruction in the single-pinhole camera approximation

By using the single-pinhole approximation, it is quite simple to prove how to reconstruct single linear tracks in the space by means of two parallel co-focal masks and a third one, this latter placed orthogonally with respect to the other ones (Fig. 5). The basic idea rests upon the elementary projective geometry.

Stereographic projections of a given segment (in red) from three different poles \(O_1, \; O_2, \; O_6\). \(O_1\) and \(O_2\) are on the same x-axis, symmetrically placed at the distance \(\pm a\) from the origin. The third pole \(O_6\) is at \(-a\) on the z-axis. The red segment \(\overline{P_0 P_1}\) indicates a light track, whose projections are indicated in green and orange on the plane \(z{-}y\) and in pink on the plane \(x{-}y\). The other visual poles have been indicated for future reference

A physical track, represented by a segment \(\overline{P_0 P_1}\), will be stereographically projected by the three line bundles emerging from the pinholes \(O_1\), \(O_2\) onto the common focal plane \(y{-}z\) and from \(O_6\) onto \(y-x\), respectively. The three projected segments belong to straight lines with equations

where \(\rho , \;\bar{ \rho }, \; \rho _\bot \) are the angular coefficients and \(\sigma ,\bar{ \sigma }, \sigma _\bot \) the intersections with the y axis obtained from the 2-dimensional reconstructed images (see Fig. 5). These six quantities are related to the parameters of the physical track in the space, i. e. its direction and one of its points. More precisely, parametrizing the track line by the line unit vector \(M = \left( n_x, n_y, n_z \right) \) and one of its point \(P_0 = \left( x_0, y_0, z_0\right) \), one can analytically derive the equations of the projected lines on the chosen planes. Thus, one obtains the parameters in (5.8) in terms of linear fractional combinations of M and \(P_0\) components. Thus, it is possible to solve such an algebraic overdetermined system, together with certain consistency conditions. For instance, looking at the first two equations in (5.8) and from the observed projected segments on the \(y{-}z\) plane (detected by \(O_1\) and \(O_2\)), it is easy to calculate the intercept with the y-axis and one of the slope

Thus, the angular coefficient of the track projected on the \(y{-}z\) plane is the mean value of \(\rho , {\bar{\rho }}\), weighted with the inverted intersections (\(\rho \) weighted with \({\bar{\sigma }}\), \({\bar{\rho }}\) with \(\sigma \)). Remarkably, this result can be also simply deduced taking into account that the projected line intercepts the y axis in the point \((z^*, y^*)\) and the z axis in the point with coordinates

On the other hand, the information connected with the projected segments on the \(y{-}z\) plane allows to estimate the other component of the line unit vector along the perpendicular axis, accordingly with the analytic formula, namely

But, as remarked in the previous section for the last equation in (5.4), we expect that the collected data will provide quite inaccurate evaluations of such a parameter. In any case, three pairs of parallel masks allow to use equation (5.9) cyclically permuting the oriented planes and obtaining:

Of course, one may apply similar considerations from the images projected onto pairs of orthogonal planes. For the pair \(\left\{ y{-}z \right\} _{O_1}, \left\{ y-x \right\} _{O_6}\) in (5.8), one obtains

Left column The light track crossing is shown in real dimensions in three different views. Central and right columns Reconstruction of the light track on three couples of parallel detectors. The experimental setup is that in Fig. 3

Also in this case, we expect that (5.11) will be very useful in determining the slope of the line, while (5.12) will be affected by large uncertainty. But, in the perpendicular-mask setup we can invert the role of the detectors in Eq. (5.11) in order to get the slope in the \(y - x\) view. So it results

Of course, consistency relations arise when comparing various formulas among themselves. Specifically, the following identities hold

These relations can be exploited not only to check the consistency of the data, but also to remove the dependence of the formulas on \(\rho \) and \(\sigma \). So, two perpendicular detectors are good enough to get the intercepts on y axis

Therefore, a couple of perpendicular detectors are the minimal setup for the 3-D reconstruction of a linear track and we expect that similar algorithms can be implemented also for second-degree curves. It is evident that the procedure here presented does not take into account the actual experimental obstacles. Then, many detectors in parallel and perpendicular configuration are mandatory to make redundant measurements in large volumes.

6 Simulation and signal reconstruction

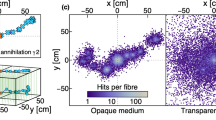

In order to quantitatively study strength and weakness of the coded mask system so far described and to get a more realistic understanding of the coded masks performance, a toy Monte Carlo has been implemented. The light rays are emitted uniformly along the simulated track and propagate linearly according to the direction extracted isotropically on the full solid angle.

Simulation of the four light-points. The left frame represents the actual sources in the \(x{-}z\) view. The other two frames represent the reconstructed signal by means of two different detectors. Detector A (central frame) is closer to the sources, detector B (right frame) is farther. For detail on the image reconstruction see Sect. 6.1

At this stage of investigation, we are concerned mainly with the effect of coded masks on the light signal by looking for simple formulas to decode it. In order to reach this goal we did not simulate the actual physical effects (light yield from LAr [33, 34], light absorption, light scattering, SiPM efficiency and so on). In the future a full Monte Carlo simulation will be implemented.

The simulation has been performed with different parameters (mask rank, pixel sizes, values of a and b lengths) in order to verify the correctness of the formulas presented in this paper. Here we present the results obtained with the experimental setup made by 6 coded masks and 6 SiPM matrices (see Table 1 and Fig. 3) with coincident focal planes (\(s=0\)). The full optimisation of the detector setup will be defined by also taking into account mechanical and cryogenic requirement (see Sect. 1).

A reference example of the projection/reconstruction procedure applied in the present work is reported in Fig. 6, where a simulated linear light-track crosses the Stage of Observation. In the left column the original track is plotted in the different views. In central and right columns the track is reconstructed using the photon signal collected by the SiPM detectors. At this step we did not apply any kind of filter on the reconstructed image, we simply take the absolute value of the content of each bin (as an effect of the decoding also negative values are possible, but significantly lower in absolute value than the signal). We would like to stress that the track does not lay on focal planes. Then, as expected, the shape of the reconstructed track in the same view is different because of the point-by-point distance of the track from each detector. Indeed the measurement with only one mask reproduces a distorted image of the track. A true image can be reconstructed combining the information from different masks, as explained in previous sections and verified in the following ones.

6.1 Application of the harmonic-mean method and signal filter

Simple light signals were studied to evaluate the effectiveness of the harmonic-mean method. Four point-sources have been simulated at the vertices of a square (12 cm size) on the x, z plane (Fig. 7). The signal is collected by a couple of parallel detectors. The first one (A) is at 17 cm from the light-sources, the second one at 33 cm. The left frame in Fig. 7 shows the “true” positions of the light-sources. The signal reconstruction on the detector A is shown in the central frame. The right frame represents the signal on the opposite detector (B). From these figures, it is apparent that the reconstructed positions are subjected to a homogeneous scaling effect, due to the different perspective projection. Indeed the reconstructed image is larger for the closer detector (A) and smaller for the farther detector (B). By calculating the harmonic mean (Eq. (5.4)) and estimating the error (Eq. (5.7)) one gets the reconstructed coordinates \(\pm (5.5 \pm 1.2)\ \mathrm{cm}\) of the light sources. They are compatible within \(0.42 \ \sigma \) with the actual coordinates \(\pm 6.0\ \mathrm{cm}\). Moreover, we verified that also the third coordinate \(y_S\) can be correctly estimated by the third equation in (5.4). However, we stress that this is just a particular case as this formula for \(y_S\) is not in general reliable.

Left frame – simulation of a neutrino interaction. The three tracks do not lay on the same plane. Central and Right frames show the reconstructed image in the \(x{-}z\) view. The superimposed green dots characterize the pixels selected as signal. The position of the points \(\alpha \), \(\beta \) and \(\gamma \) is estimated by means of the harmonic mean. To compare “truth” and reconstruction look at Table 2

For the analysis of signals more complex than 4 light-points, a preliminary procedure was implemented in order to follow the track and extract the spatial extent (i.e., the distance between the end-points) of the signal from the noise. Based on a careful investigation of the image histograms, we adopted the following step procedure:

-

when a negative content is associated to a bin of the reconstructed signal, this content is substituted with its absolute value;

-

a Gaussian low-pass filter is applied to the two-dimensional distribution in order to get a better separation between noise and signal;

-

the distribution of the values of all bins is studied and fitted with a Gaussian;

-

a threshold is fixed on such a distribution: typically we accept as signal the bins with a separation from the Gaussian center larger than \(4\sigma \).

We know that this selection method has not been tested on a full Monte Carlo. The application of this method and the threshold choice for fully simulated neutrino interactions are necessary. However, we will use this preliminary signal selection as a provisional instrument to check the formulas found in this work.

In Fig. 8, left frame, the tracks generated by a simulated neutrino interaction are shown in the \(x{-}z\) view. Obviously the tracks do not lay on a single plane. The light signal emitted by the tracks is collected and reconstructed by two parallel masks sharing the same focal plane (Fig. 8, center and right). The position of the end-points (\(\alpha \), \(\beta \) and \(\gamma \)) has been reconstructed by means of the harmonic mean applied to the edge signal pixels. The measured coordinates are compared with the “true” ones in Table 2 assuming the error of formula (5.7). The agreement is fairly good. The largest discrepancy (\(2\sigma \)) is due to the vanishing of the reconstructed track when the original one is too far from a detector (on the y-axis, perpendicular to the analysed view).

There are at least two comments concerning the presented reconstruction. First of all it does not represent a realistic simulation of a generic event that may occur in DUNE experiment, but it is a proof of principle of how the coded mask technology may be applied in the context of the high energy Physics. On the other hand, it is clear that the chosen set up (dimensions of the mask matrix, magnification ratio in particular) leads to a resolution power, which limits the possibility to discriminate among high complex topologies. But, in principle one may scale the number of pixels in order to significantly improve the resolution, without distorting the basic concepts involved in our considerations.

6.2 Image 3-D analysis

The signal due to a linear light-track has been simulated to check the estimates of Sect. 5.1. The track is described in the space by the following cosine directors and by an arbitrary point

In the Cartesian notation

The signal has been analyzed by means of the detectors \(O_1\), \(O_2\) and \(O_6\). The detected images are shown in Fig. 9 where a linear fit is superimposed on the selected pixels (black dots). The parameters of the fit are also reported in Fig. 9. The detectors in \(O_1\) and \(O_2\) are parallel and the final track in the \(y{-}z\) view can be reconstructed according to formulas 5.9. The result is comparable with the first Eq. (6.2)

Detectors \(O_1\) and \(O_6\) are perpendicular and allow to reconstruct the light track in the space. The fit parameters have been used in the formulas (5.11), (5.13) and (5.15) to get

Neglecting the errors due to the pixel size, the errors on the fit parameters are enough to claim that the calculated equations are in good agreement with the original ones. Then the results of Sect. 5.1 are confirmed in the frame of the single-pinhole approximation.

Reconstruction of a linear track on three different detectors (\(O_1\), \(O_2\) and \(O_6\)). The linear fit is applied to the pixels (black dots) selected according to the preliminary algorithm of signal recognition. The fit parameters shown in the frames have been used to reconstruct the original track in 3-D. See Sect. 6.2 for details

7 Conclusions

In the present work, we have reported a study concerning the application of the method of coded masks as detectors of tracks of charged particles in scintillating media. It has been shown that the system actually allows for the detection of tracks over focal distances of the order of tens of centimeters. From theoretical arguments and numerical simulations, it emerges that it is possible to implement decoding and recognition procedures for signals, even complex ones such as neutrino interactions, with a relatively limited number of channels (few hundreds for each SiPM array). By using a preliminary procedure of noise reduction and signal clustering, it has been shown the possibility to make measurements in agreement with the theoretical evaluations. If opposite and/or orthogonally arranged masks are used, the measurements can be correlated, by using simple geometrical formulas. A 3-dimensional reconstruction is possible, even for sources out of the focal planes. An alternative image reconstruction method is being pursued, based on the calculation of deconvolution matrices depending on the depth of field. This approach, which is intrinsically 3-D, might solve the problem of the limited depth of field due to the near field conditions. Other critical issues must be yet carefully studied, as intensity of the photon signal, detection efficiency, rejection of noise and artifacts. Therefore we are developing a full Monte Carlo in order to complete the design of a real detector. The implementation of such Monte Carlo is in progress in parallel with the design and construction of prototypes of the detector for the imaging of neutrino interactions in LAr. Also the feasibility to complement the reconstruction of such events with the timing information is under analysis.

Finally, complete validation of high complex signal reconstruction will require other deep considerations. In this regard, recently in [35] points of interest in particle trajectories, such as the initial point of electromagnetic particles, from straight line-like tracks to branching tree-like electromagnetic showers, have been studied by means of Neural Network, significantly improving the efficiency of finding candidate interaction vertices, and hence candidate neutrino interactions, which may be used in high-level physics inference, for instance in the context of the DUNE and SBND experiments. Those developments merit our attention in further dedicated efforts.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: No experimental data are available as only MC simulations have been performed.]

Notes

\(\lambda _1 - \lambda _2 =1\).

According to this result, we observe that this uncertainty is lower in the configuration with \(s < 0\) but in this case the single-pinhole approximation can be not suitable.

References

A. Abed Abud et al. (DUNE Collaboration), (2021). arXiv:2103.13910

B. Abi et al. (DUNE Collaboration), Eur. Phys. J. C 80, 978 (2020)

L. Mertz, N.O. Young, Proceedings of the International Conference on Optical Instruments and Techniques (Chapman and Hall, London, 1961)

L. Mertz, Trasformation in Optics (Wiley, New York, 1965)

D. Calabro, J.K. Wolf, Inf. Control 11, 537 (1968)

R.H. Dicke, Astrophys. J. 153, L101 (1968)

F.A. Horrigan, H.H. Barrett, Appl. Opt. 12, 2686 (1973)

L. Baumert, Cyclic Different Sets, Lecture Notes in Mathematics (Springer, New York, 2021)

A. Busboom, H. Elders-Boll, H.D. Schotten, Exp. Astron. 8, 97 (1998)

E.E. Fenimore, T.M. Cannon, Appl. Opt. 17, 337 (1978)

E.E. Fenimore, Appl. Opt. 17, 3562 (1978)

E.E. Fenimore, T.M. Cannon, Appl. Opt. 20, 1858 (1981)

L. Fuchs, Fundamentals. In Abelian Groups. Springer Monographs in Mathematics (Springer, Cham, 2015)

R. Lidl, H. Niederreiter, Finite Fields. In Encyclopedia of Mathematics and its Applications (Cambridge University Press, Cambridge, 1996). https://doi.org/10.1017/CBO9780511525926

T. Beth, D. Jungnickel, H. Lenz, Design Theory (Bibliographisches Institut, Mannheim, 1985)

L. Bömer, M. Antweiler, Proc. IEEE Int. Conf. Acoust., Speech, Signal Processing (Institute of Electrical and Electronics Engineers, Piscataway, 1989)

L. Bömer, M. Antweiler, H. Schotten, Frequenz 47, 190 (1993)

L. Bömer, M. Antweiler, Signal Process. 30, 1 (1993)

S.R. Gottesman, E.J. Schneid, IEEE Trans. Nucl. Sci. 33, 745 (1986)

S.R. Gottesman, E.E. Fenimore, Appl. Opt. 28, 4344 (1989)

Y.H. Liu et al., IEEE Trans. Nucl. Sci. 45, 2095 (1998)

F. Gunson, B. Polychronopulos, Mon. Not. R. Astron. Soc. 177, 485 (1976)

K.A. Nugent, H.N. Chapman, Y. Kato, J. Mod. Opt. 38, 1957 (1991)

J. in ’t Zand, (2021). https://universe.gsfc.nasa.gov/archive/cai/coded_intr.html

M. Harwit, N.J.A. Sloane, Hadamard Transform Optics (Academic Press, New York, 1979)

H.H. Barrett, J. Nucl. Med. 13, 382 (1972)

W. Swindell, H.H. Barrett, Radiological Imaging: The Theory of Image Formation, Detection and Processing, ed. by H.H. Barrett (Academic Press, New York, 1996)

R.C. Lanza, R. Accorsi, F. Gasparini, Coded Aperture Imaging, U.S. Patent No. US6,737,652B2, Date of Patent: May 18 (2004)

E.E. Fenimore, T.M. Cannon, D.B. Van Hulsteyn, P. Lee, Appl. Opt. 18, 945 (1979)

M. Cunningham et al., IEEE Nucl. Sci. Symp. Conf. Rec. 1, 312 (2005)

R. Accorsi, F. Gasparini, R.C. Lanza, Nucl. Instrum. Methods Phys. Res. A 474, 273 (2001)

R. Accorsi, R.C. Lanza, Appl. Opt. 40, 4697 (2001)

T. Doke, K. Masuda, E. Shibamura, Nucl. Instrum. Methods Phys. Res. A 291, 617 (1990)

E. Segreto, Phys. Rev. D 103, 043001 (2021)

L. Dominè, P.C. de Soux, F. Drielsma, D.H. Koh, R. Itay, Q. Lin, K. Terao, K. Vang Tsang, T.L. Usher, Phys. Rev. D 104, 032004 (2021)

Acknowledgements

The present research is supported by the Italian Ministero dell’Università e della Ricerca (PRIN 2017KC8WMB) and by the Istituto Nazionale Fisica Nucleare (experiments NU@FNAL and MMNLP).

Author information

Authors and Affiliations

Consortia

Corresponding author

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3

About this article

Cite this article

Andreotti, M., Bernardini, P., Bersani, A. et al. Coded masks for imaging of neutrino events. Eur. Phys. J. C 81, 1011 (2021). https://doi.org/10.1140/epjc/s10052-021-09798-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-021-09798-y