Abstract

Data-driven methods of background estimation are often used to obtain more reliable descriptions of backgrounds. In hadron collider experiments, data-driven techniques are used to estimate backgrounds from multi-jet events, which are difficult to model accurately. In this article, we propose an improvement to one of the most widely used data-driven methods in the hadron collision environment, the “ABCD” method of extrapolation. We describe the mathematical background of data-driven methods and extend the idea to propose improved general methods.

1 Introduction

The Standard Model (SM) of particle physics is consistent with almost all measurements from particle-physics experiments. In contrast to these successes on Earth, astrophysical measurements seem to imply the existence of an energy component that cannot be explained by the SM, which poses a serious challenge.

Despite theoretical and experimental efforts, there is no direct evidence that any of the proposed solutions is correct. Moreover, it is not clear which direction should be taken to resolve the problem. Particles predicted by viable extensions of the SM are already excluded up to masses of several TeV at the LHC [1]. These new states may turn out to be too massive to be produced directly at the LHC. However, this does not exclude the possibility that interesting physics is waiting to be found in rarer and more complicated final states. For example, we may have to entertain the possibility of exotic final states [2, 3], where new states appear above the backgrounds as a continuum rather than as a resonance. In either case, more accurate background estimation is necessary.

For many processes of interest, automated calculations at next-to-leading order (NLO) in the strong coupling are available in modern Monte Carlo event generators [4]. However, even at NLO, theoretical uncertainties are larger than statistical uncertainties for many processes at the LHC. Moreover, as the number of final-state hadronic jets increases, the accuracy of even NLO calculations decreases [5]. Parton showering, hadronization, and the underlying event contribute less to the theoretical uncertainty, but their effects are nevertheless not negligible.

To reduce the uncertainties related to background estimation, various data-driven estimation methods can be employed. Data-driven methods use the data in a “background”-dominated control region (CR) to estimate the background contribution in the “signal” region (SR), where interesting events may be found. Interpolation using side-bands is the canonical method. In analyses of hadron collision data, we often employ an extrapolation method called “ABCD.” It should be noted that data-driven methods do not entirely exclude the use of simulated data. In this article, we review the main idea behind data-driven methods and then extend it to improve the extrapolation method.

2 Data-driven methods of background estimation

The concept of estimating backgrounds from the data itself is not new. Important discoveries in the history of particle physics would not have been possible without such estimates, since the underlying theory of particle interactions was either not well known or had large uncertainties [6,7,8,9,10].

While data-driven methods can be classified in many ways, in this article we group them into two categories. The first category consists of methods that interpolate from measurements performed in side-bands. These methods are used when we look for a new particle state in a restricted range of kinematic phase space (usually mass). The second category consists of methods used when straightforward interpolation is difficult to employ; methods that extrapolate from information in signal-depleted regions fall into this group. An extrapolation method called the “ABCD” method is often used in hadron collider experiments, where predictions of multijet production have large uncertainties [11,12,13]. More complicated analyses may combine the two categories.

2.1 Interpolation methods

We briefly review the interpolation methods, which will give us ideas on how to extend and improve extrapolation methods. In an interpolation method, measurements are performed in the side-bands or CRs that surround the SR and the information is combined to estimate the backgrounds in the signal region. In the absence of other information, the minimal assumption is that the background would have a smooth distribution.

Let us take a one-dimensional example. Without loss of generality, we may assume that the signal region is \(x_0 \sim x_0+\varDelta \). For a background distribution described by f(x), the number of background events in this region may be expressed as \(F(x_0) \equiv \int _{x_0}^{x_0+\varDelta } f(x) dx\). Let us take a simple side-band of equal width on either side of the signal region. The backgrounds in the left (right) side-band are \(F(x_0-\varDelta )\) (\(F(x_0+\varDelta )\)). If the series expansion is valid, we can express the entries in the side-bands as

\[ F(x_0\pm \varDelta ) = F(x_0) \pm \varDelta \, F'(x_0) + \frac{\varDelta ^2}{2}F''(x_0) \pm \frac{\varDelta ^3}{6}F'''(x_0) + O(\varDelta ^4). \]

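From the two side-bands, the best estimate of \(F(x_0)\) is obtained by taking their average:

\[ F(x_0) = \frac{F(x_0-\varDelta )+F(x_0+\varDelta )}{2} - \frac{\varDelta ^2}{2}F''(x_0) + O(\varDelta ^4), \]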

which is a well-known result.

For a background distribution of the form \(f(x)=ax+b\), \(F(x_0)\) is linear in \(x_0\), so \(F''=0\) and the answer is exact. However, for a shape with higher-order terms, this approximation may not be sufficient. If we allow two side-bands on each side, the terms proportional to \(\varDelta ^2\) can also be eliminated.

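The best estimate from two equal-width side-bands on each side is

\[ F(x_0) = \frac{2}{3}\left[F(x_0-\varDelta )+F(x_0+\varDelta )\right] - \frac{1}{6}\left[F(x_0-2\varDelta )+F(x_0+2\varDelta )\right] + O(\varDelta ^4), \]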

which is exact for a background distribution f(x) that is locally cubic. This is easy to understand: with one side-band on each side, we have two measurements, through which we can fit a straight line for interpolation, so a linear function is reproduced exactly. With two side-bands on each side, we have four measurements, through which we can fit a cubic function.
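As a quick sanity check of these statements, the sketch below evaluates both side-band estimates with exact rational arithmetic for polynomial backgrounds. The function names and test polynomials are illustrative assumptions, not part of the original analysis; the weights used (1/2 for one side-band per side; 2/3 and -1/6 for two side-bands per side) are the ones that cancel the \(\varDelta ^2\) term in the Taylor expansion of F.

```python
from fractions import Fraction


def window_count(coeffs, lo, hi):
    """Exact integral over [lo, hi] of f(x) = sum(coeffs[k] * x**k)."""
    lo, hi = Fraction(lo), Fraction(hi)
    return sum(Fraction(c) * (hi ** (k + 1) - lo ** (k + 1)) / (k + 1)
               for k, c in enumerate(coeffs))


def F(coeffs, x0, delta):
    """Background entries in the window [x0, x0 + delta]."""
    return window_count(coeffs, x0, x0 + delta)


def one_sideband_each_side(coeffs, x0, delta):
    # Average of the two adjacent side-bands: exact for linear f.
    return (F(coeffs, x0 - delta, delta) + F(coeffs, x0 + delta, delta)) / 2


def two_sidebands_each_side(coeffs, x0, delta):
    # Weights 2/3 (inner pair) and -1/6 (outer pair) cancel the Delta^2 term: exact for cubic f.
    inner = F(coeffs, x0 - delta, delta) + F(coeffs, x0 + delta, delta)
    outer = F(coeffs, x0 - 2 * delta, delta) + F(coeffs, x0 + 2 * delta, delta)
    return Fraction(2, 3) * inner - Fraction(1, 6) * outer


if __name__ == "__main__":
    cubic = [1, -2, 3, 5]  # illustrative f(x) = 1 - 2x + 3x^2 + 5x^3
    print("truth:", F(cubic, 2, 1))
    print("one side-band each side:", one_sideband_each_side(cubic, 2, 1))
    print("two side-bands each side:", two_sidebands_each_side(cubic, 2, 1))
```

For a quadratic f the single-side-band average misses by exactly \(\varDelta ^2F''/2\), and for a quartic f the two-side-band estimate misses by \(\varDelta ^4F''''/6\), in line with the expansion.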

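A similar idea can be applied in more than one dimension. Consider a rectangular signal region in the x, y plane between \(x_0 \sim x_0+\varDelta _x\) and \(y_0 \sim y_0+\varDelta _y\). Altogether, we can use eight side-bands: four along the sides of the rectangle and four at the corners. Without prior knowledge of the background distribution, and using arguments similar to those above, the best estimate for interpolation is

\[ F(x_0,y_0) \approx \frac{1}{2}\sum _{\pm }\left[F(x_0\pm \varDelta _x,y_0)+F(x_0,y_0\pm \varDelta _y)\right] - \frac{1}{4}\sum _{\pm ,\pm }F(x_0\pm \varDelta _x,y_0\pm \varDelta _y), \]

where \(F(x_0,y_0)\) denotes the number of entries in \([x_0,x_0+\varDelta _x]\times [y_0,y_0+\varDelta _y]\).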
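The two-dimensional case can be checked the same way. This sketch assumes symmetric weights, +1/2 on each of the four edge-sharing side-bands and -1/4 on each corner, which is the choice that cancels the \(\varDelta _x^2\) and \(\varDelta _y^2\) terms. With that choice the estimate reproduces F exactly whenever f is a polynomial of total degree at most three, and otherwise misses by a term proportional to \(\varDelta _x^2\varDelta _y^2\). Function names and the test polynomials are hypothetical.

```python
from fractions import Fraction


def box_count(poly, x_lo, x_hi, y_lo, y_hi):
    """Exact integral over a box of f(x, y) = sum(c * x**a * y**b for (a, b), c in poly.items())."""
    x_lo, x_hi, y_lo, y_hi = map(Fraction, (x_lo, x_hi, y_lo, y_hi))
    total = Fraction(0)
    for (a, b), c in poly.items():
        ix = (x_hi ** (a + 1) - x_lo ** (a + 1)) / (a + 1)
        iy = (y_hi ** (b + 1) - y_lo ** (b + 1)) / (b + 1)
        total += Fraction(c) * ix * iy
    return total


def F2(poly, x0, y0, dx, dy):
    """Entries in the rectangle [x0, x0 + dx] x [y0, y0 + dy]."""
    return box_count(poly, x0, x0 + dx, y0, y0 + dy)


def interpolate_8_sidebands(poly, x0, y0, dx, dy):
    # +1/2 on the four side-bands sharing an edge with the SR, -1/4 on the four corners.
    sides = sum(F2(poly, x0 + sx * dx, y0 + sy * dy, dx, dy)
                for sx, sy in ((-1, 0), (1, 0), (0, -1), (0, 1)))
    corners = sum(F2(poly, x0 + sx * dx, y0 + sy * dy, dx, dy)
                  for sx in (-1, 1) for sy in (-1, 1))
    return Fraction(1, 2) * sides - Fraction(1, 4) * corners


if __name__ == "__main__":
    f = {(0, 0): 2, (1, 0): 1, (0, 1): -1, (1, 1): 3, (2, 1): 1, (0, 3): 2}  # total degree 3
    print(F2(f, 1, 1, 1, 1), interpolate_8_sidebands(f, 1, 1, 1, 1))
```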

2.2 “ABCD” extrapolation methods

In background estimation using interpolation methods, the SR is completely surrounded by CRs that provide strong constraints. Such methods are useful when the signal is localized. However, in searches for new physics signatures at high energies, the signal of interest is expected to populate regions of higher energy, mass, or jet multiplicity. In these cases, measurements in signal-depleted CRs must be extrapolated to the SR.

We now introduce the notation for the extrapolation methods. Extrapolation methods of background estimation can be used if the distribution in x and y is approximately factorizable, i.e., if x and y are nearly independent:

\[ P(x,y) = P_x(x)\,P_y(y)\,[1+\epsilon (x,y)], \]

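where \(\epsilon \) contains the non-factorizable component, which we assume to be small, \(|\epsilon |\ll 1\). The integral over a rectangular region is then approximately factorizable as well:

\[ F(x_0,x_1,y_0,y_1) \equiv \int _{x_0}^{x_1}\!\!\int _{y_0}^{y_1}P(x,y)\,dy\,dx = S_x(x_0,x_1)\,S_y(y_0,y_1)\,[1+\varSigma (x_0,x_1,y_0,y_1)], \]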

where \(\varSigma \) is the average value of \(\epsilon \) over this range, weighted by \(P_xP_y\), and reflects the degree of dependence between x and y. \(S_x\) (\(S_y\)) is the integral of \(P_x\) (\(P_y\)) over the range \(x_0\sim x_1\) (\(y_0\sim y_1\)). For a fixed-width window, \(x_1=x_0+\varDelta _x\) and \(y_1=y_0+\varDelta _y\), F is a function of \(x_0\) and \(y_0\) only, so we can omit the arguments \(x_1\) and \(y_1\):

\[ F(x_0,y_0) = S_x(x_0)\,S_y(y_0)\,[1+\varSigma (x_0,y_0)]. \]

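An estimate of F(x, y) is obtained by taking suitable products and ratios of the Fs in the neighboring regions; to first order in \(\varSigma \),

\[ F(x,y) = \frac{F(x-\varDelta _x,y)\,F(x,y-\varDelta _y)}{F(x-\varDelta _x,y-\varDelta _y)}\left[1+\varDelta _x\varDelta _y\,\frac{\partial ^2\varSigma }{\partial x\,\partial y}+O(\varDelta ^3)\right], \tag{11} \]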

where the \(\varDelta \)’s stand for either \(\varDelta _x\) or \(\varDelta _y\). The \(\varDelta _x\varDelta _y\) term would vanish if \(\epsilon (x,y)\rightarrow 0\). Therefore, the error of the estimation depends on the degree of non-independence of x and y. In this derivation, we do not assume that \(S_x\) (\(S_y\)) vary slowly as a function of x (y), respectively, but that \(\varSigma \) varies slowly enough that the series expansion is valid.
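A minimal numerical check of the ABCD product \(F(x-\varDelta _x,y)F(x,y-\varDelta _y)/F(x-\varDelta _x,y-\varDelta _y)\) is sketched below. It assumes a hypothetical closed-form region yield F whose correlation factor is \(1+\varSigma =e^{\kappa xy}\); for this particular F the ratio estimate/truth is exactly \(e^{-\kappa \varDelta _x\varDelta _y}\approx 1-\kappa \varDelta _x\varDelta _y\), which makes the \(\varDelta _x\varDelta _y\) term visible and shows it vanishing as \(\kappa \rightarrow 0\). The names `region_yield`, `abcd`, and `kappa` are illustrative.

```python
import math


def region_yield(x, y, kappa):
    """Hypothetical yield of the fixed-width region whose lower-left corner is (x, y).

    exp(-x) * exp(-2y) is the separable part S_x * S_y; exp(kappa * x * y) plays the
    role of the correlation factor 1 + Sigma (kappa = 0 means fully factorizable).
    """
    return math.exp(-x) * math.exp(-2.0 * y) * math.exp(kappa * x * y)


def abcd(x, y, dx, dy, kappa):
    """ABCD estimate of F(x, y) from the three neighbouring regions."""
    return (region_yield(x - dx, y, kappa) * region_yield(x, y - dy, kappa)
            / region_yield(x - dx, y - dy, kappa))


if __name__ == "__main__":
    dx, dy = 1.0, 0.5
    for kappa in (0.0, 0.05, 0.2):
        ratio = abcd(3.0, 2.0, dx, dy, kappa) / region_yield(3.0, 2.0, kappa)
        print(f"kappa={kappa}: estimate/truth={ratio:.4f}, "
              f"exp(-kappa*dx*dy)={math.exp(-kappa * dx * dy):.4f}")
```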

The method is often referred to as the “ABCD” method (Eq. 11) or the matrix method. In an ABCD method, the two-dimensional phase space is divided into four regions: one is the SR, and the three neighboring regions are the CRs. The choice of the two control variables depends on the physics case of interest, but they should be as independent as possible. In hadron collision experiments, such extrapolation methods are used to estimate backgrounds in a variety of settings. Usually, the signature of interest is expected at high energies or large particle multiplicities, so interpolation methods cannot be used. It is in this regime that these methods are needed, because predictions from simulations or calculations have large theoretical or experimental uncertainties. The data-driven approach can bypass many of these difficulties.

The information from the three CRs A, B, and C is used to estimate the backgrounds in the signal region D (Fig. 1). In general, we can express the estimate \(\hat{F}_D\) of \(F_D\) as

\[ \hat{F}_D = \frac{F_B\,F_C}{F_A} = F_D\left[1+O(\varDelta ^2)\right], \tag{12} \]

where the \(\varDelta \)’s are either \(x_1-x_0\), \(x_2-x_1\), \(y_1-y_0\), or \(y_2-y_1\).

When \(x_2\) and/or \(y_2\) is taken to infinity, the expansion is in general not valid unless \(\varSigma =0\), since \(\varDelta \rightarrow \infty \). However, even if \(\varSigma \ne 0\), the expansion could still be valid under certain conditions. For the case \(x_2\rightarrow \infty \), if the distribution \(P_x(x)\) falls sharply as x increases, Eq. (12) could still be valid. Recalling that \(\varSigma \) is the average value of \(\epsilon \) in the given region, we have \(\varSigma (x_1, x_2, y_0, y_1)\approx \varSigma (x_1, x_1+\delta _x, y_0, y_1)\): because the data are concentrated at low values of x, the value of \(x_2\) is largely irrelevant. Under these conditions,

where \(\varSigma _i\) (\(\varSigma _{ij}\)) denotes the partial derivative with respect to the ith argument (the ith and jth arguments), and the \(\varDelta \)s are \(\varDelta _{x1}\), \(\varDelta _{y1}\), or \(\varDelta _{y2}\). In summary, with the ABCD method, measurements in the three regions neighboring the SR give an estimate that is accurate up to corrections of \(O(\varDelta ^2)\), provided that the correlation between x and y is weak and the distribution falls sharply in x and y.

3 Improving the data-driven extrapolation method

As was the case with interpolation, it is possible to improve the accuracy of extrapolation methods by including more CRs. We derive several new analytic results and provide some case studies to demonstrate their efficacy.

3.1 Extended ABCD methods

Fig. 2 Control regions used in the extended ABCD methods. The upper-right region is the signal region, and the rest are control regions. The hatched regions are the nominal regions used in an ABCD method, while the open regions are additionally incorporated in the extended ABCD methods

We assume that the SR is \(x>x_0\) and \(y>y_0\) (Fig. 2) and that the joint distribution in x and y is mostly factorizable. Then we can express the number of entries in the SR as \(F(x_0, y_0) = S_x(x_0)S_y(y_0)[1+\varSigma (x_0, y_0)]\). By using more information in the CRs \([x_0-2\varDelta _x, x_0-\varDelta _x]\) as well as \([x_0-\varDelta _x, x_0]\) and similarly in y, the accuracy can be improved as

where \(\varDelta \) stands for either \(\varDelta _x\) or \(\varDelta _y\). With fixed-width CRs, terms up to \(\varDelta ^2\) can be exactly canceled. Therefore, the effects of correlations among variables on the prediction are mitigated as well. In the appendix, we give an explicit expression for Eq. (14).

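We can extend the idea further by using the information in eight CRs (Fig. 2), which reduces the error to \(O(\varDelta ^4)\). Writing \(F_{ij}\) for the number of entries in the region i bins to the left of the SR in x and j bins below it in y (so that \(F_{00}\) is the SR),

\[ F_{00} = \frac{F_{10}^2\,F_{01}^2\,F_{12}^2\,F_{21}^2}{F_{20}\,F_{02}\,F_{11}^4\,F_{22}}\left[1+O(\varDelta ^4)\right]. \tag{15} \]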

However, having more CRs does not always reduce the error. Since the method involves multiplications and divisions, the statistical uncertainty due to the finite number of entries in each CR directly affects the uncertainty of the prediction. For practical reasons, it may be desirable to have fewer CRs, so we also derived an optimal expression for the case of five CRs, allowing for two CR bins in either x or y, but not in both. In the case of two CR bins in x and one in y, and writing \(F_{ij}\) for the number of entries in the region i bins to the left of the SR in x and j bins below it in y (so that \(F_{00}\) is the SR), the optimal combination of the CR measurements is

\[ F_{00} = \frac{F_{10}^2\,F_{01}\,F_{21}}{F_{20}\,F_{11}^2}\left[1+O(\varDelta ^3)\right]. \tag{16} \]
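The nominal, five-region, and eight-region product estimators can be compared on a toy grid of yields. In this sketch, `F[i][j]` is a hypothetical yield i bins to the left of the SR in x and j bins below it in y (`F[0][0]` is the SR). The exponents are the ones fixed by requiring exactness for factorizable yields and cancellation of the low-order mixed terms in \(\ln F\); this is our reading of the construction, to be checked against the equations above. The toy yields have \(\ln F\) equal to a separable part plus a correlation term `g(i, j)` evaluated at grid points rather than integrated over regions, so the check probes only the cancellation structure.

```python
import math


def abcd(F):
    """Nominal ABCD estimate of F[0][0]."""
    return F[1][0] * F[0][1] / F[1][1]


def extended_five(F):
    """Two CR bins in x (i = 1, 2), one in y (j = 1)."""
    return F[1][0] ** 2 * F[0][1] * F[2][1] / (F[2][0] * F[1][1] ** 2)


def extended_eight(F):
    """All eight CRs of the 3 x 3 grid around the SR."""
    num = (F[1][0] * F[0][1] * F[1][2] * F[2][1]) ** 2
    den = F[2][0] * F[0][2] * F[1][1] ** 4 * F[2][2]
    return num / den


def toy_grid(g):
    """Hypothetical yields with ln F = 0.3*i - 0.5*j (separable) + g(i, j) (correlation)."""
    return [[math.exp(0.3 * i - 0.5 * j + g(i, j)) for j in range(3)] for i in range(3)]


if __name__ == "__main__":
    kappa = 0.1
    for label, g in (("kappa*i*j", lambda i, j: kappa * i * j),
                     ("kappa*i^2*j", lambda i, j: kappa * i * i * j)):
        F = toy_grid(g)
        ratios = [est(F) / F[0][0] for est in (abcd, extended_five, extended_eight)]
        print(label, [round(r, 4) for r in ratios])
```

A bilinear correlation term biases only the nominal ABCD estimate; a term quadratic in x also biases the five-region estimate, while the eight-region combination absorbs both, at the price of more (and higher-power) CR counts.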

As before, the error depends on the assumption of a weak correlation between the control variables x and y, as described by \(\epsilon (x,y)\). We also assume that \(\epsilon (x,y)\) varies slowly enough for the series expansion to be valid.
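The exponents in such product formulas can be obtained mechanically. The sketch below is our own construction, not the authors' derivation. It assumes the estimate has the form \(F_{00}\approx \prod F_{ij}^{c_{ij}}\) over CR cells (i, j) counted in bins away from the SR, requires it to be exact whenever \(\ln F\) splits into a function of i plus a function of j, and imposes the cancellation of chosen mixed Taylor terms \(i^m j^n\) of the correlation part. The resulting linear system is solved exactly with rational arithmetic; `solve_exact` and `product_exponents` are hypothetical helper names.

```python
from fractions import Fraction


def solve_exact(rows, rhs):
    """Gauss-Jordan elimination over Fractions for a consistent system with a unique solution."""
    n = len(rows[0])
    aug = [[Fraction(v) for v in row] + [Fraction(b)] for row, b in zip(rows, rhs)]
    rank = 0
    for col in range(n):
        pivot = next((r for r in range(rank, len(aug)) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("exponents are not uniquely determined")
        aug[rank], aug[pivot] = aug[pivot], aug[rank]
        lead = aug[rank][col]
        aug[rank] = [v / lead for v in aug[rank]]
        for r in range(len(aug)):
            if r != rank and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[rank])]
        rank += 1
    # Any leftover row now reads 0 = rhs; a nonzero rhs means the conditions conflict.
    if any(row[n] != 0 for row in aug[rank:]):
        raise ValueError("requested cancellations cannot all be satisfied")
    return [aug[k][n] for k in range(n)]


def product_exponents(cells, mixed_terms):
    """Exponents c_ij for the CR cells (i, j) != (0, 0).

    Exactness for separable ln F: for every i, sum_j c_ij = 1 if i == 0 else 0,
    and likewise for every j. Each (m, n) in mixed_terms adds sum c_ij i**m j**n = 0.
    """
    rows, rhs = [], []
    for i0 in sorted({i for i, _ in cells} | {0}):
        rows.append([1 if i == i0 else 0 for i, _ in cells])
        rhs.append(1 if i0 == 0 else 0)
    for j0 in sorted({j for _, j in cells} | {0}):
        rows.append([1 if j == j0 else 0 for _, j in cells])
        rhs.append(1 if j0 == 0 else 0)
    for m, n in mixed_terms:
        rows.append([i ** m * j ** n for i, j in cells])
        rhs.append(0)
    return dict(zip(cells, solve_exact(rows, rhs)))


if __name__ == "__main__":
    print(product_exponents([(1, 0), (0, 1), (1, 1)], []))
    print(product_exponents([(1, 0), (2, 0), (0, 1), (1, 1), (2, 1)], [(1, 1)]))
```

With the three nominal cells and no extra condition it returns the ABCD exponents (1, 1, -1); asking the same three cells to also cancel the (1, 1) term raises an error, which is why additional CRs are needed.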

While the results are derived for fixed-width bins, they can also be applied to variable-width bins. Variable-width bins can be mapped onto fixed-width bins by locally stretching or squeezing the phase space of the control variables. As long as this operation does not invalidate the assumption of weak correlations, these methods remain applicable.

3.2 Case studies of extended ABCD methods

3.2.1 Toy example

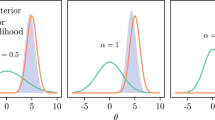

Fig. 3 Ratio of the prediction to the truth for the different extrapolation methods as a function of \(\alpha \), together with error bands, for the example distribution in Eq. (17)

As a simple test, we apply the ABCD method and the extended ABCD method of Eq. (16) to a distribution

which is a smoothly decreasing distribution in x and y, but otherwise arbitrary. In the absence of the \(x+y\) term, which introduces a correlation between x and y, the distribution would be separable in x and y. For simplicity, the boundaries for the ABCD method are set to \(x_0=1\), \(x_1=2\), \(x_2=3\), \(y_0=1\), \(y_1=1\), \(y_2=2\). The true yield in region D is \(F_D=0.1210\) for \(\alpha =0.5\), whereas the ABCD method (Eq. 12) yields 0.1247. The extended ABCD method with the left boundary at \(x_{-1}=0\) yields 0.1195. The extended ABCD method thus reduces the error of the prediction by a factor of about 2.5 in this case (a deviation of 0.0015 instead of 0.0037).

Figure 3 shows how the predictions of the ABCD and extended ABCD methods change with \(\alpha \). The bands represent the error terms of the respective methods given in the appendix; since the distribution is known explicitly, these error terms can be calculated. As \(\alpha \rightarrow 0\), the ratios for both methods converge to 1, as expected, since x and y become independent.

3.2.2 \(t\bar{t}\)+multi-jets in hadronic channels

For the second case study, we apply various ABCD methods of background estimation to a simulated \(t\bar{t}+jj\) sample. The \(t\bar{t}\)+multi-jet processes are backgrounds to many searches for physics beyond the standard model at the LHC [14, 15]. Although \(t\bar{t}+jj\) has been calculated at next-to-leading order (NLO), its theoretical uncertainties remain larger than the experiments would like [5]. Furthermore, the uncertainties quoted in the literature refer to the inclusive cross sections; in some regions of phase space, the uncertainties on the differential cross sections could be even larger. Improved calculations for these processes are difficult to envision in the foreseeable future. Therefore, a more reliable data-driven technique is important for these processes.

We generated one million \(pp\rightarrow t\bar{t}jj\) events at \(\sqrt{s}=14\) TeV with MG5aMC@NLO v2.61 at LO [4]. The extra partons are required to have \(p_T>20\) GeV and \(|\eta |<5.0\). The partons are hadronized with Pythia 8 [16], and the Delphes 3 fast detector simulation and reconstruction are subsequently applied [17]. Reconstructed jets are required to have \(p_T>30\) GeV and \(|\eta |<2.4\). Events are required to contain no isolated lepton with \(p_T>20\) GeV and \(|\eta |<2.4\).

The distribution of the number of hadronic jets (\(N_j\)) and the number of b-tagged jets (\(N_{bj}\)) is shown in Fig. 4, and the number of entries in each bin is listed in Table 1. The correlation coefficient of the two variables is 0.139, so they are only weakly correlated. We apply the methods in Eqs. (14–16), taking \(N_j\) and \(N_{bj}\) as control variables. The SR is defined by \(N_j\ge 9\) and \(N_{bj}\ge 4\), a selection that would be relevant in a scenario where the signature of interest consists of many jets, several of them b-tagged.
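A correlation coefficient such as the one quoted for \(N_j\) and \(N_{bj}\) can be computed directly from a two-dimensional histogram of counts. This is a minimal sketch assuming every bin is represented by a single value of each variable; open-ended bins (such as \(N_j\ge 9\)) would need a representative value, which is an assumption, and the text does not state whether 0.139 was obtained event by event or from the binned table. Table 1 itself is not reproduced here, so the inputs below are toy tables.

```python
import math


def binned_correlation(counts, x_values, y_values):
    """Pearson correlation of (x, y) estimated from a 2D histogram.

    counts[i][j] is the number of events with x = x_values[i] and y = y_values[j].
    """
    cells = [(counts[i][j], x, y)
             for i, x in enumerate(x_values)
             for j, y in enumerate(y_values)]
    n = sum(c for c, _, _ in cells)
    mean_x = sum(c * x for c, x, _ in cells) / n
    mean_y = sum(c * y for c, _, y in cells) / n
    cov = sum(c * (x - mean_x) * (y - mean_y) for c, x, y in cells)
    var_x = sum(c * (x - mean_x) ** 2 for c, x, _ in cells)
    var_y = sum(c * (y - mean_y) ** 2 for c, _, y in cells)
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # A factorized (outer-product) table has zero correlation by construction.
    print(binned_correlation([[1, 2], [3, 6]], [0, 1], [0, 1]))
```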

The results of applying the various extrapolation methods are shown in Table 2. The quoted uncertainties on the predictions are statistical, arising from the finite number of entries in the control regions. They are evaluated with an ensemble test in which the number of entries in each control region fluctuates according to a Poisson distribution. The extended ABCD methods give predictions that deviate less from the truth, at the cost of larger statistical uncertainties.
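The ensemble test described above can be sketched with NumPy: each CR count is redrawn from a Poisson distribution with mean equal to the observed count, the estimator is evaluated for every pseudo-experiment, and the spread of the results is taken as the statistical uncertainty. The region names, the assumed layout in the comments, and the counts in the usage example are hypothetical; the five-region formula follows the product form with two CR bins in x and one in y.

```python
import numpy as np


def ensemble_uncertainty(estimator, cr_counts, n_trials=20000, seed=2021):
    """Mean and standard deviation of an estimate under Poisson fluctuations of the CR counts.

    cr_counts maps region names to observed counts; in every pseudo-experiment each
    count is redrawn from a Poisson distribution whose mean is the observed value.
    """
    rng = np.random.default_rng(seed)
    pseudo = {name: rng.poisson(count, size=n_trials).astype(float)
              for name, count in cr_counts.items()}
    with np.errstate(divide="ignore", invalid="ignore"):
        values = estimator(pseudo)
    values = values[np.isfinite(values)]  # drop rare trials with an empty denominator region
    return float(values.mean()), float(values.std(ddof=1))


def abcd(r):
    # Assumed layout: C left of the SR D, B below D, A diagonal to D.
    return r["B"] * r["C"] / r["A"]


def extended_five(r):
    # Same layout, plus C' and A' one bin further to the left of C and A.
    return r["C"] ** 2 * r["B"] * r["A'"] / (r["C'"] * r["A"] ** 2)


if __name__ == "__main__":
    counts = {"A": 10000, "B": 10000, "C": 10000, "A'": 10000, "C'": 10000}  # hypothetical
    for est in (abcd, extended_five):
        mean, sigma = ensemble_uncertainty(est, counts)
        print(f"{est.__name__}: {mean:.0f} +- {sigma:.0f}")
```

With equal counts N in every region, linear error propagation gives a relative uncertainty of \(\sqrt{3/N}\) for ABCD and \(\sqrt{11/N}\) for the five-region combination, because the squared counts enter with weight 4; this is the trade-off between bias and statistical precision noted above.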

Next, we consider cases where the control variables are continuous. We take the scalar sum of the jet transverse momenta (\(H_T\)) and the transverse momentum of the sixth-leading jet (\(p_{T6}\)) as the control variables. The two variables are clearly correlated (correlation coefficient 0.660), as shown in Fig. 5. We deliberately chose these variables to better illustrate the advantages of the extended ABCD methods.

Since the distribution falls rapidly with increasing \(H_T\) or \(p_{T6}\), we consider two different use cases. In the first case, the widths of the CRs and SR (\(\varDelta _x\)) are larger than the width of the distribution; in the second case, the widths are similar to or smaller than the width of the distribution of each control variable (Fig. 5). Table 3 shows how the different regions are defined and the number of entries in each region for the two cases. In the first case, the region of interest (D) has a lower limit on \(H_T\). This is a typical use case at hadron colliders, where we are interested in phenomena at high energies. In the second case, D is much narrower; although this is not the most general use case, it is nonetheless useful for illustration. The bins are chosen such that the number of entries does not vary greatly among the different regions (Table 4).

In the first case, the ABCD method yields \(4802\pm 122\), while the extended ABCD method of Eq. (16) yields \(9976\pm 488\). The ABCD method is inadequate because of the correlation between \(p_{T6}\) and \(H_T\). In the second case, the ABCD method yields \(3886\pm 128\), while the extended ABCD method yields \(4493\pm 291\). In both cases, the \(A'\) and \(C'\) control regions provide an additional lever arm that allows the dependence on \(H_T\) to be taken into account better.

One of the main reasons for using data-driven methods is to reduce systematic uncertainties. Through several case studies, we have demonstrated that the extended ABCD methods provide estimates that are closer to the truth. Where independent control variables are not easy to find, the extended ABCD methods can still account for some of the correlations. In many analyses, the background normalization is treated as a nuisance parameter that is further constrained by fitting to data. The extended ABCD methods can provide a smaller uncertainty on the prior of this normalization and thus help reduce systematic uncertainties.

4 Conclusions

We propose extensions to the ABCD method of extrapolated background estimation by exploiting information from additional control regions. The extended ABCD methods could be useful when the control variables are not exactly independent, since they can mitigate the effects of correlations among the variables. Through several case studies, we demonstrate that they provide more accurate predictions at the cost of increased statistical uncertainties.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: The simulated data are used to demonstrate the various methods. The original data are quite large and not straightforward to use. The application of the method can be verified using the numbers in the tables 1 through 4.]

References

[1] D. del Re, Exotics at the LHC. PoS (ICHEP2018) 710

[2] H. Cai et al., SUSY hidden in the continuum. Phys. Rev. D 85, 015019 (2012)

[3] C. Gao et al., Collider phenomenology of a gluino continuum. arXiv:1909.04061 [hep-ph]

[4] J. Alwall et al., The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. J. High Energy Phys. 2014, 79 (2014)

[5] G. Bevilacqua, M. Worek, On the ratio of ttbb and ttjj cross sections at the CERN Large Hadron Collider. J. High Energy Phys. 2014, 135 (2014)

[6] J.J. Aubert et al., Experimental observation of a heavy particle J. Phys. Rev. Lett. 33, 1404 (1974)

[7] J. Augustin et al., Discovery of a narrow resonance in \(e^+e^-\) annihilation. Phys. Rev. Lett. 33, 1406 (1974)

[8] S.W. Herb et al., Observation of a dimuon resonance at 9.5 GeV in 400-GeV proton–nucleus collisions. Phys. Rev. Lett. 39, 252 (1977)

[9] F. Abe et al. (CDF Collaboration), Observation of top quark production in \(p\bar{p}\) collisions with the collider detector at Fermilab. Phys. Rev. Lett. 74, 2626 (1995)

[10] S. Abachi et al. (DØ Collaboration), Observation of the top quark. Phys. Rev. Lett. 74, 2632 (1995)

[11] S. Abachi et al. (DØ Collaboration), Search for the top quark in \(p\bar{p}\) collisions at \(\sqrt{s}=1.8\) TeV. Phys. Rev. Lett. 72, 2138 (1994)

[12] S. Abachi et al. (DØ Collaboration), Search for high mass top quark production in \(p\bar{p}\) collisions at \(\sqrt{s}=1.8\) TeV. Phys. Rev. Lett. 74, 2422 (1995)

[13] O. Behnke et al., Data Analysis in High Energy Physics (Wiley-VCH Verlag GmbH & Co. KGaA, New York, 2013), p. 348

[14] G. Aad et al. (ATLAS Collaboration), Search for phenomena beyond the Standard Model in events with large b-jet multiplicity using the ATLAS detector at the LHC. arXiv:2010.01015 [hep-ex]

[15] A.M. Sirunyan et al. (CMS Collaboration), Search for the production of four top quarks in the single-lepton and opposite-sign dilepton final states in proton–proton collisions at \(\sqrt{s}=13\) TeV

[16] T. Sjöstrand et al., An introduction to PYTHIA 8.2. Comput. Phys. Commun. 191, 159 (2015)

[17] J. de Favereau et al. (DELPHES 3 Collaboration), DELPHES 3: a modular framework for fast simulation of a generic collider experiment. J. High Energy Phys. 02, 057 (2014)

Acknowledgements

This work was supported in part by the Korean National Research Foundation (NRF) Grants NRF-2018R1A2B6005043 and NRF-2020R1A2B5B02001726.

Appendix A: Expressions for the extended ABCD methods

We give an explicit expression for Eq. (14) up to \(\varDelta ^3\):

To reduce clutter, we omit the arguments (x, y) to \(\varSigma \) function. The superscripts (m, n) stand for partial derivatives, as \(\varSigma ^{(m,n)} = (\frac{\partial }{\partial x})^m (\frac{\partial }{\partial y})^n\varSigma (x,y)\).

And the expression for Eq. (16) up to \(\varDelta ^3\) order is

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3

About this article

Cite this article

Choi, S., Oh, H. Improved extrapolation methods of data-driven background estimations in high energy physics. Eur. Phys. J. C 81, 643 (2021). https://doi.org/10.1140/epjc/s10052-021-09404-1
