Abstract

The evaluation of higher-order cross-sections is an important component in the search for new physics, both at hadron colliders and elsewhere. For most new physics processes of interest, total cross-sections are known at next-to-leading order (NLO) in the strong coupling \(\alpha _s\), and often beyond, via either higher-order terms at fixed powers of \(\alpha _s\), or multi-emission resummation. However, computing such higher-order cross-sections is prohibitively expensive, which precludes efficient evaluation in parameter-space scans beyond two dimensions. Here we describe the software tool xsec, which allows for fast evaluation of cross-sections based on the use of machine-learning regression, using distributed Gaussian processes trained on a pre-generated sample of parameter points. This first version of the code provides all NLO Minimal Supersymmetric Standard Model strong-production cross-sections at the LHC, for individual flavour final states, evaluated in a fraction of a second. Moreover, it calculates regression errors, as well as estimates of errors from higher-order contributions, from uncertainties in the parton distribution functions, and from the value of \(\alpha _s\). While we focus on a specific phenomenological model of supersymmetry, the method readily generalises to any process where it is possible to generate a sufficient training sample.


1 Introduction

The determination of cross-sections beyond leading order (LO) is typically very computationally expensive because of the evaluation of tensorial loop integrals. This is especially so for hadronic interactions, where the loop integrals must themselves be numerically integrated over the relevant parton distribution functions (PDFs).

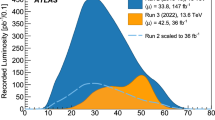

The computational cost of evaluation per parameter point restricts the usage of next-to-leading order (NLO) cross-sections to (simplified) new physics models with only one or two relevant parameters. However, higher-order contributions can be very important in many other models. This is especially true for strong interactions, where NLO contributions can be of comparable size to the LO contribution. The physics impact of this restriction is quite dramatic. In Fig. 1, reproduced from Ref. [1], we show as an example the significant differences between the limits resulting from a parameter scan of the Constrained Minimal Supersymmetric Standard Model (CMSSM) using LO rather than NLO cross-sections. This also highlights the importance of propagating theory uncertainties, e.g. from the PDFs and the scale dependence, through to the physical observables. As the uncertainties on LO hard-scattering cross-sections are typically very large, owing in part to the missing higher-order terms, such theory error propagation to the cross-sections can only really be considered representative from NLO accuracy onwards. Even at NLO, we can see in Fig. 1 that the impact is considerable, and needs to be taken into account when fitting models.

Fig. 1 Colour map showing the profile likelihood ratio \({\mathcal {L}}/{\mathcal {L}}_{\mathrm {max}}\) for a ColliderBit scan over the CMSSM model defined by \(m_0\) and \(m_{1/2}\), with \(\tan \beta =30\), \(A_0=-2m_0\) and \(\mu >0\), using the ATLAS zero-lepton supersymmetry search likelihood from \(\sqrt{s}=8\) TeV data [2]. The solid white line indicates the 95% CL exclusion contour found with GAMBIT 1.0 [3] using LO cross-sections. The solid blue line shows the corresponding ATLAS 95% CL observed exclusion limit with NLO+NLL cross-sections, with dashed blue lines showing the \(\pm 1 \sigma \) theoretical cross-section uncertainty. Reproduced from Fig. 3 in Ref. [1].

In this work we present xsec, an attempt at a general solution to the speed problem irrespective of the type of cross-section being evaluated, the size of the parameter space of the model, and the underlying precision of the calculation. We achieve this by performing machine-learning regression on a pre-generated training dataset consisting of cross-sections sampled from a model. In this first version of xsec, we will be focusing on strong-production cross-sections in the MSSM, but the selection of cross-sections and models will be extended in future versions.

The increasing sparsity of training data in models with larger numbers of free parameters means that a lot of care must go into performing the regression, and that reliable regression errors can only be determined on a point-by-point basis. We choose to use Gaussian process (GP) regression [4], a highly flexible Bayesian method for modelling functions. It is not restricted to pre-determined functional shapes with a set number of parameters, and is instead directly informed by the data together with some prior understanding of the underlying correlation structure of the function that it is used to model. Moreover, a point-specific regression uncertainty follows naturally from the posterior predictive distribution, which is straightforward to compute in closed form.

Training GP models involves the inversion of a matrix of the size of the number of training points. This means that while GPs are a very powerful regression tool, single GPs scale very badly with increasing training data, and training becomes infeasible beyond \({{\mathcal {O}}}(10^4)\) data points. To overcome this difficulty we use a model with factorised and distributed training, where the data are split into manageable subsets each assigned to a single GP. The regression prediction is subsequently determined by a combination of the predictions of the individual GPs [5, 6].

Current codes that can evaluate a broad collection of MSSM cross-sections at NLO include Prospino 2.1 [7,8,9,10,11,12] and MadGraph 5 [13]. However, the evaluation time per parameter point on a modern CPU is in the range of minutes for a single final state, far too slow for large parameter scans. This does not include the calculation of scale, \(\alpha _s\), or PDF errors, which in Prospino increases the evaluation time by orders of magnitude. In the MadGraph implementation, this can be done more efficiently through reweighting techniques. However, the NLO functionality for the MSSM was made publicly available in MadGraph only recently, after the computationally expensive sample generation campaign for the current version of xsec had been completed.

In a test with Prospino heavily optimised using the ifort compiler, calculating all strong-production cross-sections for a single parameter point, including the evaluation of scale, \(\alpha _s\), and PDF errors, takes around 2.5 hours on a single Intel Xeon-Gold 6138 (Skylake) core running at 2.0 GHz.

Fast evaluation of strong-production cross-sections in the MSSM at NLO, with next-to-leading (NLL) and next-to-next-to-leading (NNLL) logarithmic resummation, already exists in the form of the NLL-fast and NNLL-fast codes [14,15,16,17,18,19,20,21,22,23,24], which add NLL and NNLL corrections to existing NLO results from Prospino. However, these results are restricted to interpolation in a two-dimensional subspace spanned by the gluino mass and a common squark mass, and results are given as a sum over outgoing squark flavours.

In addition to the processes found in Prospino, cross-sections for gaugino and slepton pair production, as well as gaugino-gluino production, can be evaluated at NLO+NLL precision by the Resummino code [25,26,27,28,29,30,31,32,33]. Here, the total running time for all chargino and neutralino production processes at NLO (again on a single 2.0 GHz Intel Xeon-Gold 6138 Skylake core) is around three hours, with no evaluation of scale, \(\alpha _s\), or PDF errors. Combined NLO+NLL precision takes four days.

Recently, the DeepXS package [34] appeared, which performs a fast evaluation of neutralino and chargino pair production using neural networks. This is currently limited to a small set of the most phenomenologically relevant processes in the MSSM-19, and does not include uncertainties arising from PDFs, or the value of \(\alpha _s\).

The xsec 1.0 code is currently designed to reproduce all the NLO results of Prospino 2.1 for strong production in the MSSM in a small fraction of the time, including scale errors, and with the addition of PDF and \(\alpha _s\) errors based on a modern PDF set. It does not include NNLL or even NLL resummation as can be found in NNLL-fast and NLL-fast; however, unlike those codes, it provides reliable results for non-degenerate squark masses, within the inherent limitations of the training set generated with Prospino. We intend to extend the code to also include NLL resummation in future releases.

Unlike NNLL-fast, xsec performs a separate evaluation of the cross-section for all distinct flavour combinations of the first two generations of squarks. The xsec code also treats sbottom and stop pair-production processes separately, but this has limited impact as the two cross-sections are the same at LO (for the same masses; see footnote 1), as Prospino – and therefore our training set – is limited to light quark initial states. A complete list of available processes can be found in Table 1.

The code is written in Python with heavy use of the NumPy [35] package for numerical calculations, and is compatible with both Python 2 and 3. It can be installed using the pip package manager and run as a set of functions from a self-contained module. We also provide interfaces through SLHA files [36] and a command-line tool.

The rest of this paper is structured as follows. We begin in Sect. 2 with an introduction to the GP regression framework that we employ. In Sect. 3 we describe our GP training regime, including how we compute the required training sets of NLO cross-sections. In Sect. 4, we perform a thorough validation of the results from xsec, with a comparison to results from existing codes. Section 5 covers the structure of the code and its interfaces, and we conclude in Sect. 6.

2 Regression framework

2.1 Gaussian process regression

The basic objective in regression is to estimate an unknown function value \(f({\mathbf {x}})\) at some new \({\mathbf {x}}\) point, given that we know the function values at some other \({\mathbf {x}}\) points. A Bayesian approach to this task is provided by Gaussian process regression, in which we express our degree of belief about any set of function values as a joint Gaussian pdf. We underline here that this pdf should be understood in a purely Bayesian sense – it does not imply any randomness in the true function we are approximating.

We begin by defining some notation and terminology, indicating in italics the terminology commonly used in the GP and machine learning literature. Each input point \({\mathbf {x}}\) has m components (features), which in our case will correspond to masses and mixing angles from the MSSM squark and gluino sector. We denote by \({\mathbf {x}}_*\) the new input point (test point) for which we will estimate the unknown, true function value \(f_* \equiv f({\mathbf {x}}_*)\), here an NLO production cross-section. We let \({\mathbf {x}}_i\) with \(i=1,\ldots ,n\) denote the n input points (training points) at which we know the function values \(f_i \equiv f({\mathbf {x}}_i)\) (targets). The combined set \({\mathcal {D}}= \{{\mathbf {x}}_i, f_i\}_{i=1}^{n}\) is referred to as the training set. The complete set of input components in our training set can be expressed as an \(n \times m\) matrix \(X = [{\mathbf {x}}_1, \ldots , {\mathbf {x}}_n]^\mathrm {T}\). Similarly, the complete set of known function values can be collected in a vector \({\mathbf {f}} = [f_1, \ldots , f_n]^\mathrm {T}\). Thus, our training set can also be expressed as \({\mathcal {D}}= \{X, {\mathbf {f}}\}\).

The starting point for GP regression is the formulation of a joint Gaussian prior pdf (see footnote 2)

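which formally describes our degree of belief for possible function values at both the training points X and the test point \({\mathbf {x}}_*\), before we look at the training data. This prior is chosen indirectly by choosing a mean function \(m(\cdot )\) and a covariance function or kernel \(k(\cdot ,\cdot )\), defined to specify the following expectation values for arbitrary input points:

\[ m({\mathbf {x}}) = {\mathbb {E}}[f({\mathbf {x}})], \tag{2} \]

\[ k({\mathbf {x}}, {\mathbf {x}}') = {\mathbb {E}}\big[ (f({\mathbf {x}}) - m({\mathbf {x}}))\, (f({\mathbf {x}}') - m({\mathbf {x}}')) \big]. \tag{3} \]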

We note that while the mean function and kernel are defined as functions of inputs in \({\mathbf {x}}\) space, the function values represent mean and covariance values in f space. Our joint Gaussian prior can then be expressed as

where

The choice and optimisation of the kernel and mean function constitute the main challenge in GP regression, and we will discuss these aspects in detail in the next sections.

Our goal is to obtain a predictive posterior pdf for the unknown function value \(f_*\) at \({\mathbf {x}}_*\). From the fully specified GP prior we can now find this simply by “looking at” the training data \({\mathbf {f}}\), i.e. by deriving from the GP prior \(p({\mathcal {D}}, f_* | {\mathbf {x}}_*)\) the conditional pdf

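The mean and variance of this univariate Gaussian can be expressed in closed form as

\[ \mu _* = m({\mathbf {x}}_*) + k({\mathbf {x}}_*, X)\, \varSigma ^{-1} \left( {\mathbf {f}} - m(X) \right) , \tag{10} \]

\[ \sigma _*^2 = k({\mathbf {x}}_*, {\mathbf {x}}_*) - k({\mathbf {x}}_*, X)\, \varSigma ^{-1}\, k(X, {\mathbf {x}}_*) , \tag{11} \]

where \(\varSigma \equiv k(X, X)\) is the prior covariance matrix of the training points.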

The prediction \(\mu _*\) for \(f_*\) is thus simply the prior mean \(m({\mathbf {x}}_*)\) plus a shift given by a weighted sum of the shifts of the known function values from their corresponding prior means, \({\mathbf {f}} - m(X)\). The weights are proportional to the covariances between the prediction at \({\mathbf {x}}_*\) and the known function values at the training points X, as set by the kernel \(k({\mathbf {x}}_*, X)\). The prediction variance \(\sigma _*^2\) is given as the prior variance \(k({\mathbf {x}}_*, {\mathbf {x}}_*)\) reduced by a term representing the additional information provided by the training data about the function value at \({\mathbf {x}}_*\). This naturally depends only on the kernel. We will refer to the width \(\sigma _*\) simply as the regression error or GP prediction error, keeping in mind that it should be interpreted in a Bayesian manner.
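As a concrete illustration of the closed-form prediction described above, the following minimal NumPy sketch computes the posterior mean and variance at one test point. The function names, the simple squared-exponential kernel used for the demonstration, and the small diagonal `jitter` are illustrative assumptions and are not taken from the xsec code.

```python
import numpy as np

def sq_exp_kernel(A, B, sigma_f=1.0, ell=1.0):
    """Illustrative kernel: sigma_f^2 * exp(-|a - b|^2 / (2 ell^2))."""
    d2 = np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)
    return sigma_f ** 2 * np.exp(-0.5 * d2 / ell ** 2)

def gp_predict(X, f, x_star, kernel, prior_mean=0.0, jitter=1e-10):
    """Posterior mean and variance at a single test point x_star.

    X has shape (n, m), f has shape (n,), x_star has shape (m,).
    The jitter is an assumption added only to keep the factorisation stable.
    """
    Sigma = kernel(X, X) + jitter * np.eye(len(X))   # n x n prior covariance
    k_star = kernel(X, x_star[None, :])[:, 0]        # covariances k(X, x_*)
    L = np.linalg.cholesky(Sigma)                    # Sigma = L L^T
    # alpha = Sigma^{-1} (f - m(X)), obtained from two solves with L
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, f - prior_mean))
    v = np.linalg.solve(L, k_star)                   # v^T v = k_*^T Sigma^{-1} k_*
    mu = prior_mean + np.dot(k_star, alpha)
    var = kernel(x_star[None, :], x_star[None, :])[0, 0] - np.dot(v, v)
    return mu, var
```

Evaluated at a training point, this sketch returns (up to the jitter) the known function value with vanishing variance; far from all training points it falls back to the prior mean and prior variance.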

2.2 Kernel choice and optimisation

Choosing the kernel, Eq. (3), is the main modelling step in GP regression. It effectively determines what types of functional structure the GP will be able to capture. In particular, it encodes the smoothness and the periodicity (if applicable) of the function that is being modelled, as it controls the expected correlation between function values at two different points. The choice of prior mean function, Eq. (2), is typically much less important, as we discuss at the end of this section.

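The question of the optimal kernel choice is covered in more detail in Refs. [4, 37]. The squared-exponential kernel

\[ k({\mathbf {x}}, {\mathbf {x}}') = \sigma _f^2 \exp \left( -\frac{|{\mathbf {x}} - {\mathbf {x}}'|^2}{2\ell ^2} \right) \tag{12} \]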

is the standard choice. It results in an exponentially decreasing correlation as the Euclidean distance between two input points increases with respect to a length-scale hyperparameter \(\ell \). The signal variance \(\sigma _f^2\) is a hyperparameter containing information about the amplitude of the modelled function. This is a universal kernel [38], which means that it is in principle capable of approximating any continuous function given enough data. The infinite differentiability and exponential behaviour of this kernel typically result in a very smooth posterior mean.

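However, for our purposes, the squared-exponential has some problems. Its sensitivity to changes in the function means that the length scale \(\ell \) is usually determined by the smallest ‘wiggle’ in the function [37]. We hence consider also the Matérn kernel family: like the squared-exponential, these are universal and stationary, i.e., only functions of the relative positions of the two input points, but additionally incorporate a smoothness hyperparameter \(\nu \) following the basic form

\[ k({\mathbf {x}}, {\mathbf {x}}') = \frac{2^{1-\nu }}{\varGamma (\nu )} \left( \frac{\sqrt{2\nu }\, |{\mathbf {x}} - {\mathbf {x}}'|}{\ell } \right) ^{\nu } K_{\nu } \left( \frac{\sqrt{2\nu }\, |{\mathbf {x}} - {\mathbf {x}}'|}{\ell } \right) , \tag{13} \]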

where \(\varGamma (\nu )\) is the gamma function and \(K_{\nu }\) is a modified Bessel function of the second kind. For the modelling of cross-section functions, we adopt the Matérn kernel class on the basis of its superior performance. This has followed significant testing and cross-validation across a number of different problems [39,40,41]. During testing we found \(\nu =\frac{3}{2}\) to be optimal for our purposes, in which case Eq. (13) simplifies to

\[ k({\mathbf {x}}, {\mathbf {x}}') = \left( 1 + \frac{\sqrt{3}\, |{\mathbf {x}} - {\mathbf {x}}'|}{\ell } \right) \exp \left( -\frac{\sqrt{3}\, |{\mathbf {x}} - {\mathbf {x}}'|}{\ell } \right) . \tag{14} \]

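To account for the fact that some directions in the input space of masses and mixing angles may have more impact on the cross-section values than others, we use an anisotropic, multiplicative Matérn kernel,

\[ k({\mathbf {x}}, {\mathbf {x}}') = \sigma _f^2 \prod _{d=1}^{m} \left( 1 + \frac{\sqrt{3}\, |x^{(d)} - x'^{(d)}|}{\ell _d} \right) \exp \left( -\frac{\sqrt{3}\, |x^{(d)} - x'^{(d)}|}{\ell _d} \right) , \tag{15} \]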

where we have also included a signal variance hyperparameter \(\sigma _f^2\), similar to the one in Eq. (12). Here \(x^{(d)}\) denotes the \(d\hbox {th}\) component of the input vector \({\mathbf {x}}\), and \(\varvec{\ell }\), with components \(\ell _d\), is a vector containing one length scale per \({\mathbf {x}}\) component. The product over the dimensions of the parameter space results in points only being strongly correlated if in each dimension, their distance is small with respect to the relevant length scale.
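The anisotropic, multiplicative Matérn kernel with \(\nu =\frac{3}{2}\) described above can be sketched in a few lines of NumPy. The function name and argument layout are illustrative assumptions, not the interface of the xsec code.

```python
import numpy as np

def matern32_product_kernel(A, B, sigma_f, ell):
    """Anisotropic product of one-dimensional Matern-3/2 factors.

    Returns sigma_f^2 * prod_d (1 + s_d) * exp(-s_d), where
    s_d = sqrt(3) * |a^(d) - b^(d)| / ell_d, for all pairs of rows
    of A (shape (nA, m)) and B (shape (nB, m)).
    """
    A = np.atleast_2d(np.asarray(A, dtype=float))
    B = np.atleast_2d(np.asarray(B, dtype=float))
    ell = np.asarray(ell, dtype=float)               # one length scale per feature
    s = np.sqrt(3.0) * np.abs(A[:, None, :] - B[None, :, :]) / ell
    return sigma_f ** 2 * np.prod((1.0 + s) * np.exp(-s), axis=-1)
```

Because the factors are multiplied, a large separation in any single dimension (relative to its length scale) is enough to suppress the correlation between two points, while a long length scale makes the kernel nearly insensitive to that feature.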

So far we have focused on the “noise-free” case, in which the training targets \({\mathbf {f}}\) are the exact values of the true function at the training points. In this case the predictive posterior \(p(f_* | {\mathcal {D}}, {\mathbf {x}}_*)\) collapses to a delta function when \({\mathbf {x}}_*\) equals a training point. In theory this is a reasonable approach, since what we seek is a surrogate model for an expensive, but precise and deterministic numerical computation. In practice, however, allowing for some uncertainty also at the training points typically results in a more well-behaved and stable regression model. The main reason for this is that the additional wiggle-room in the modelling can ease the challenging matrix numerics of GP regression, as we will discuss in some detail in Sect. 2.3.

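We therefore add a “white-noise” term,

\[ k_{\epsilon }({\mathbf {x}}_i, {\mathbf {x}}_j) = \sigma _{\epsilon }^2\, \delta _{ij} , \tag{16} \]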

to our kernel, where \(\sigma _{\epsilon }^2\) is the hyperparameter that sets the amount of “noise”. The effect of this term is simply to add \(\sigma _{\epsilon }^2\) along the diagonal of the covariance matrix \(\varSigma \), as well as to the prior variance at the test point, \(k({\mathbf {x}}_*, {\mathbf {x}}_*)\). It is known as homoscedastic noise, as it is the same for all data points.

In GP terminology, to include this additional variance term corresponds to going from the noise-free case to a scenario with noisy training data. The targets are then considered measurements \(y_i \equiv y({\mathbf {x}}_i) = f({\mathbf {x}}_i) + \epsilon _i\), with the noise \(\epsilon _i\), introduced in the process of performing the \(i\hbox {th}\) measurement, modelled by a Gaussian distribution \({\mathcal {N}}(0, \sigma _\epsilon ^2)\). However, we remind the reader that for our case this Gaussian pdf represents an adopted effective Bayesian degree of belief in the accuracy of the training data, rather than an expression of actual random noise.

Conceptually we should then make the substitution \(f \rightarrow y\) in our definitions from Sect. 2.1. Our training set becomes \({\mathcal {D}}= \{X, {\mathbf {y}}\}\), with \({\mathbf {y}} = [y_1, \ldots , y_n]^\mathrm {T}\), and the GP prior becomes a joint pdf for \({\mathbf {y}}\) and \(y_*\):

The prior mean function and kernel now specify expectation values in y space,

where we note that \({\mathbb {E}}[y({\mathbf {x}})] = {\mathbb {E}}[f({\mathbf {x}})]\) since the Gaussian noise term has zero mean. Likewise, the predictive posterior pdf becomes

with mean and variance

Our complete kernel is then given by

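Fixing \(\nu = \frac{3}{2}\), as discussed above, we are left with the set \(\mathbf {\theta }=\{\sigma _f^2,\varvec{\ell },\sigma _\epsilon ^2\}\) of undetermined hyperparameters. To be fully Bayesian, one would introduce a prior pdf \(p(\mathbf {\theta })\) for the hyperparameters and obtain the GP posterior \(p(y_* | {\mathcal {D}}, {\mathbf {x}}_*)\) by marginalising over \(\mathbf {\theta }\),

\[ p(y_* | {\mathcal {D}}, {\mathbf {x}}_*) = \int p(y_* | {\mathcal {D}}, {\mathbf {x}}_*, \mathbf {\theta })\, p(\mathbf {\theta } | {\mathcal {D}})\, \mathrm {d}\mathbf {\theta } . \tag{24} \]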

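In our high-dimensional case with large datasets, such integration would come at a steep computational expense, even with MCMC methods. We therefore follow the common approach of using a point estimate for the hyperparameters, found by maximising the log-likelihood function [4]

\[ \log p({\mathbf {y}} | X, \mathbf {\theta }) = -\frac{1}{2} \left( {\mathbf {y}} - m(X) \right) ^{\mathrm {T}} \varSigma ^{-1} \left( {\mathbf {y}} - m(X) \right) - \frac{1}{2} \log |\varSigma | - \frac{n}{2} \log 2\pi . \tag{25} \]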

Finding an adequate set of hyperparameters constitutes the model training step in the GP approach. It is complicated by the fact that each optimisation step requires the computation of the inverse and determinant of the \(n\times n\) covariance matrix \(\varSigma \), which scales poorly with the number of training points n. To increase speed and numerical stability, \(\varSigma \) is generally not directly inverted in practice, and its Cholesky decomposition is used instead. In an attempt to avoid local optima, we employ the SciPy implementation of the differential evolution method [42, 43], rather than performing a gradient-based search.
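To illustrate how the Cholesky decomposition replaces the explicit inverse and determinant, the sketch below evaluates the standard GP log marginal likelihood for a given signal covariance matrix and noise level. The function name and arguments are assumptions made for illustration; the default constant prior mean is taken to be the sample mean of the targets.

```python
import numpy as np

def log_marginal_likelihood(K_signal, y, sigma_eps2, prior_mean=None):
    """Gaussian log marginal likelihood evaluated via a Cholesky factor.

    K_signal is the n x n kernel matrix without noise, sigma_eps2 the
    homoscedastic noise variance added along the diagonal.
    """
    n = len(y)
    if prior_mean is None:
        prior_mean = np.mean(y)                      # constant prior mean (assumption)
    Sigma = K_signal + sigma_eps2 * np.eye(n)
    L = np.linalg.cholesky(Sigma)                    # Sigma = L L^T
    z = np.linalg.solve(L, y - prior_mean)           # z^T z = r^T Sigma^{-1} r
    log_det = 2.0 * np.sum(np.log(np.diag(L)))       # log|Sigma| from diag(L)
    return -0.5 * np.dot(z, z) - 0.5 * log_det - 0.5 * n * np.log(2.0 * np.pi)
```

In a training loop, the negative of this quantity, recomputed for each trial set of hyperparameters, would be handed to a global optimiser such as `scipy.optimize.differential_evolution`.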

Recent work has demonstrated that the theoretical prediction error \(\sigma _*^2\) in Eq. (22) systematically underestimates the mean-squared prediction error when the hyperparameters are learned from the data [44]. As proposed there, we account for the uncertainty on the point estimate of the hyperparameter by adding a correction term to \(\sigma _*^2\), derived from the Hybrid Cramér–Rao Bound. In our case, with a constant prior mean function, this extra term amounts to

where \({\mathbf {1}} \equiv [1,\ldots ,1]\). In particular, this increases the prediction error at test points far from the training data.

Compared to the choice of kernel, the choice of the prior mean function, Eq. (18), is typically less important. Following conditioning on a sufficiently large training set, the prior gets overpowered and the posterior mean is primarily influenced by the training data through the second term in Eq. (21). For this reason the prior mean function is commonly taken to be zero everywhere. Nevertheless, it is sensible to incorporate our knowledge of the mean, and we therefore use the sample mean of the target values \({\mathbf {y}}\) as a prior mean function that is constant in \({\mathbf {x}}\).

2.3 Regularisation of the covariance matrix

A practical challenge when training GPs is to ensure numerical stability when inverting the covariance matrix \(\varSigma \). The precision of the result is controlled by the condition number \(\kappa \) of \(\varSigma \), which can be considered a measure of the sensitivity of the inversion to roundoff error. It is computed as the ratio \(\lambda _{\mathrm {max}}/\lambda _{\mathrm {min}}\) between the highest and lowest eigenvalues of \(\varSigma \), and becomes infinite for a singular matrix. The loss of numerical precision at high \(\kappa \) becomes most obvious when the predictive variance, computed according to Eq. (22), evaluates to a negative number. In order to prevent this problem, it is essential to understand how to control \(\kappa \).

When the target values of training points are strongly correlated, their corresponding rows and columns in \(\varSigma \) are nearly identical. This leads to eigenvalues close to zero and a very large condition number. It has been shown that in the worst case, \(\kappa \) can grow linearly with the number of training points and quadratically with the signal-to-noise ratio \({\mathrm {SNR}} = \sigma _f/\sigma _\epsilon \) [45].

Increasing the noise level improves numerical stability, as a larger diagonal contribution \(\sigma _\epsilon ^2\) to \(\varSigma \) enhances the difference between otherwise similar rows and columns. Therefore, we add a term to the log likelihood in Eq. (25) that penalises hyperparameter choices with extremely high signal-to-noise ratios, as suggested in Ref. [45]. Our objective function for training GPs then becomes

The large exponent guarantees that situations where \({\mathrm {SNR}} > {\mathrm {SNR}}_{\mathrm {max}}\) are the only ones where the penalty term has a significant effect. We use \({\mathrm {SNR}}_{\mathrm {max}}=10^4\).

In some cases the likelihood penalty in Eq. (27) does not decrease the condition number sufficiently to stabilise the inversion. However, choosing a lower overall value for \({\mathrm {SNR}}_{\mathrm {max}}\) dilutes the information in the training data to an extent that it can sometimes be fitted by noise, even when unnecessary. We therefore check the condition number after the optimisation with the penalty term, and proceed to increase the homoscedastic noise \(\sigma _\epsilon ^2\) just for the inversion step until the condition number drops below a reasonable value \(\kappa _{\mathrm {max}}\) [46]:

We set \(\kappa _{\mathrm {max}} = 10^9\), roughly corresponding to a maximal loss of nine digits of accuracy from the total of 16 in a 64-bit double-precision floating-point number.
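One way to see how much extra diagonal noise is needed is to note that adding \(\delta \) to the diagonal of \(\varSigma \) shifts every eigenvalue by \(\delta \), so the condition number becomes \((\lambda _{\mathrm {max}}+\delta )/(\lambda _{\mathrm {min}}+\delta )\). Requiring this to be at most \(\kappa _{\mathrm {max}}\) gives \(\delta \ge (\lambda _{\mathrm {max}} - \kappa _{\mathrm {max}}\lambda _{\mathrm {min}})/(\kappa _{\mathrm {max}}-1)\). The sketch below implements this bound; the function name is an assumption, and the expression may differ in detail from the one used in the xsec training code.

```python
import numpy as np

def nugget_for_condition(Sigma, kappa_max=1e9):
    """Smallest diagonal shift delta such that cond(Sigma + delta * I) <= kappa_max.

    Sigma is assumed symmetric; adding delta * I shifts every eigenvalue by delta.
    """
    eigvals = np.linalg.eigvalsh(Sigma)              # eigenvalues in ascending order
    lam_min, lam_max = eigvals[0], eigvals[-1]
    if lam_min > 0 and lam_max / lam_min <= kappa_max:
        return 0.0                                   # already well-conditioned
    return (lam_max - kappa_max * lam_min) / (kappa_max - 1.0)
```

The shift is applied only when the check fails, so well-conditioned covariance matrices are left untouched.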

These measures may seem to degrade the performance of our regression model, but they are necessary to ensure numerical stability. The underlying reason is that we have essentially noiseless data, and are hitting the limits of floating-point precision in the process of calculating the GP predictions. In comparison to the scale and PDF uncertainties on the cross-sections, the resulting regression errors nevertheless remain small, as we demonstrate in Sect. 4.

2.4 Distributed Gaussian processes and prediction aggregation

With n training points, the complexity of the matrix inversion operations in Eqs. (21) and (22) scales as \(n^3\), making standard GP regression unsuitable for problems that require large training sets. To overcome this challenge we construct a regression model based on distributed Gaussian processes (DGPs) [5]: we partition the total training set \({\mathcal {D}}\) into d manageable subsets \({\mathcal {D}}_i\), and for each \({\mathcal {D}}_i\) we train a new GP \({\mathcal {M}}_i\). These GPs are referred to as experts. The prediction from our regression model is obtained by aggregating the predictions from the individual experts. For this prediction aggregation we follow the approach known as the Generalised Robust Bayesian Committee Machine (GRBCM) [6], for which we summarise the main steps below.

First we construct a data subset \({\mathcal {D}}_1 \equiv {\mathcal {D}}_c\), randomly chosen from \({\mathcal {D}}\) without replacement, which will be used to train a single communication expert \({\mathcal {M}}_c\). Next, we partition the remaining data into subsets \(\{{\mathcal {D}}_i\}_{i=2}^d\), each of which will serve to train one expert \({\mathcal {M}}_i\). Following Refs. [5, 6], all experts are then trained simultaneously, such that they share a common set of hyperparameters.

The GRBCM approach places no restrictions on how to partition the data to form the subsets \(\{{\mathcal {D}}_i\}_{i=2}^d\). However, empirical studies have shown that some clustering of the data can help the experts to become sensitive to local, short-scale variability of the target function [6, 47]. Compared to using a simple random partition, we have noticed minor improvements with a disjoint partition, where the data is split into local subsets based on the mass parameter with the smallest length-scale hyperparameter. Tests with k-means clustering did not indicate further improvements in our case, nor did tests with sorting on less dominant features.
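The partitioning scheme described above can be sketched as follows: a communication subset is drawn at random without replacement, and the remaining points are sorted on a single chosen feature and cut into contiguous, disjoint slices. The function name, its arguments, and the choice of equal-sized slices are illustrative assumptions.

```python
import numpy as np

def grbcm_partition(X, n_experts, comm_size, sort_feature, seed=0):
    """Split row indices of X into one communication subset and local subsets.

    n_experts counts the communication expert, so n_experts - 1 local
    subsets are returned. sort_feature is the column used for the disjoint
    partition (e.g. the feature with the smallest length scale).
    """
    rng = np.random.RandomState(seed)
    perm = rng.permutation(X.shape[0])
    comm_idx = np.sort(perm[:comm_size])             # random, without replacement
    rest = perm[comm_size:]
    rest = rest[np.argsort(X[rest, sort_feature])]   # order remaining points by feature
    local_idx = np.array_split(rest, n_experts - 1)  # contiguous, disjoint slices
    return comm_idx, local_idx
```

Each local index set would then define the training data of one expert, with the communication set appended at prediction time.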

The special role of the communication expert \({\mathcal {M}}_c\) becomes evident at the prediction stage. For each of the experts \(\{{\mathcal {M}}_i\}_{i=2}^d\), we construct an improved expert \({\mathcal {M}}_{+i}\) by replacing the corresponding dataset \({\mathcal {D}}_i\) with the extended set \({\mathcal {D}}_{+i} = \{{\mathcal {D}}_i, {\mathcal {D}}_c\}\). That is, for prediction the communication dataset \({\mathcal {D}}_{c}\) is shared by all the experts \({\mathcal {M}}_{+i}\). The communication expert \({\mathcal {M}}_c\) serves as a common baseline to which the experts \({\mathcal {M}}_{+i}\) can be compared. In the final combination, the prediction from expert \({\mathcal {M}}_{+i}\) will be weighted according to the differential entropy difference between its predictive distribution and that of \({\mathcal {M}}_{c}\).

The central approximation that allows for computational gains in DGPs and related approaches is an assumption that the individual experts can be treated as independent, which corresponds to approximating the kernel matrix of the combined problem, i.e. without partition into experts, as block-diagonal. In the GRBCM approach, this approximation is expressed as the conditional independence assumption \({\mathcal {D}}_i \perp {\mathcal {D}}_j | {\mathcal {D}}_c, y_*, {\mathbf {x}}_*\) for \(2 \le i \ne j \le d\), which enables the approximation \(p({\mathcal {D}}_i|{\mathcal {D}}_j,{\mathcal {D}}_c,y_*, {\mathbf {x}}_*) \approx p({\mathcal {D}}_i|{\mathcal {D}}_c, y_*, {\mathbf {x}}_*)\). That is, when the information contained in the communication set \({\mathcal {D}}_c\) is known, we assume that the predictive distribution for points in subset \({\mathcal {D}}_i\) should not be strongly influenced by the additional information contained in subset \({\mathcal {D}}_j\).

Using Bayes’ theorem and the above independence assumption, the exact predictive distribution \(p(y_* | {\mathcal {D}}, {\mathbf {x}}_*)\) can now be approximated as

where we have introduced the weights \(\beta _i\) for the predictions from different experts, and defined \(\beta _1 \equiv -1 + \sum _{i=2}^d \beta _i\). By applying Bayes’ theorem again, we can express our approximation for \(p(y_* | {\mathcal {D}}, {\mathbf {x}}_*)\) in terms of the corresponding predictive distributions from the individual experts, \(p_{+i} (y_* | {\mathcal {D}}_{+i}, {\mathbf {x}}_*)\) and \(p_c (y_* | {\mathcal {D}}_c, {\mathbf {x}}_*)\). Leaving out normalisation factors, the distribution for the aggregated prediction becomes

with mean \(\mu _{\mathrm {DGP}}\) and variance \(\sigma _{\mathrm {DGP}}^2\) at \({\mathbf {x}}_*\) given by

Following Ref. [6], we set the weights \(\beta _i\) to

The reason for assigning weight \(\beta _2 = 1\) for expert \({\mathcal {M}}_{+2}\) is that the transition

in Eq. (29) is exact for \(i=2\), \(\beta _2=1\). For each remaining expert \({\mathcal {M}}_{+i\ge 3}\), the weight is taken to be the difference in differential entropy between the baseline predictive distribution of the communication expert, \(p_c(y_* | {\mathcal {D}}_c, {\mathbf {x}}_*)\), and that of the given expert, \(p_{+i}(y_* | {\mathcal {D}}_{+i}, {\mathbf {x}}_*)\). Thus, if an expert \({\mathcal {M}}_{+i}\) provides little additional predictive power over \({\mathcal {M}}_{c}\), its relative influence on the aggregated prediction is low.
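The aggregation step can be illustrated with the following sketch, which uses the GRBCM expressions as we understand them from Ref. [6]: the aggregated precision is \(\sum _{i\ge 2} \beta _i \sigma _{+i}^{-2} - (\sum _{i\ge 2} \beta _i - 1)\, \sigma _c^{-2}\), with the mean weighted accordingly, \(\beta _2 = 1\), and \(\beta _{i\ge 3} = \frac{1}{2}(\log \sigma _c^2 - \log \sigma _{+i}^2)\). The function and argument names are illustrative assumptions.

```python
import numpy as np

def grbcm_aggregate(mu_c, var_c, mu_plus, var_plus):
    """Combine expert predictions at one test point.

    mu_c, var_c: prediction of the communication expert.
    mu_plus, var_plus: predictions of the improved experts M_{+i}, i = 2..d,
    in order, so index 0 corresponds to M_{+2}.
    """
    mu_plus = np.asarray(mu_plus, dtype=float)
    var_plus = np.asarray(var_plus, dtype=float)
    # Differential-entropy difference between two Gaussians
    beta = 0.5 * (np.log(var_c) - np.log(var_plus))
    beta[0] = 1.0                                    # weight of M_{+2} fixed to one
    excess = np.sum(beta) - 1.0                      # power of the communication term
    precision = np.sum(beta / var_plus) - excess / var_c
    var = 1.0 / precision
    mu = var * (np.sum(beta * mu_plus / var_plus) - excess * mu_c / var_c)
    return mu, var
```

An expert whose predictive variance equals that of the communication expert receives zero weight, so it leaves the aggregated prediction unchanged.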

Requiring the experts to share a common set of hyperparameters effectively disfavours overfitting of individual experts. Moreover, the risk of overfitting is alleviated by the fact that after training, each expert is extended with the communication dataset \({\mathcal {D}}_c\) that it did not see during training, and its weight in the prediction aggregation is regularised through the comparison to the communication expert.

The GRBCM split of the dataset into d experts reduces the complexity of training from \({\mathcal {O}}(n^3)\) to \({\mathcal {O}}(d\,(n/d)^3) = {\mathcal {O}}(n^3d^{-2})\). The memory, storage space, and evaluation all depend directly on the size of the matrix, and scale as \({\mathcal {O}}(n^2)\) for a regular GP, but as \({\mathcal {O}}(n^2/d)\) in the GRBCM approach.

3 Training

3.1 Sample generation

We generate the inputs for our training data calculations by sampling the physical gluino and squark masses and (for third-generation squarks) the angles describing mass mixing between gauge eigenstates. The only other parameters involved in the production of gluinos and squarks to NLO QCD are the strong coupling \(\alpha _s\) and the SM quark masses. The cross-sections depend on \(\alpha _s\) both through the matrix element and the PDF. To capture the cross-section variation due to the uncertainty on \(\alpha _s\), we generate separate input points with \(\alpha _s\) set to 0.1180 (central value), 0.1165 (\(1\sigma \) lower value) and 0.1195 (\(1\sigma \) upper value), using the corresponding PDF sets, and train separate GPs on the ratio of cross-sections obtained with the central and \(\pm 1\sigma \) values. For the SM masses we use a fixed value for the bottom and top quark masses, and assume the other quark masses to be zero.

In the sample generation we do not simply sample over a regular grid of parameter values, for three reasons:

1. Grid sampling is inefficient when one parameter is more important than the others: too many evaluations are spent on varying the less influential parameters whilst keeping the important one at a fixed value.

2. The curse of dimensionality renders this sampling technique infeasible for processes that depend on more than three or four parameters.

3. The complexity of sampling and cross-section calculation for the large number of processes that we consider (in terms of final-state squark flavours) means that it is more efficient to evaluate multiple cross-sections for every parameter combination than to generate separate samples for each final state.

We sample individual baseline masses for the gluino, \(m_{{\tilde{g}}}\), for the first and second-generation (gauge eigenstate) squarks, \(m_{{\tilde{u}}_L}\), \(m_{{\tilde{d}}_L}\), \(m_{{\tilde{c}}_L}\), \(m_{{\tilde{s}}_L}\), \(m_{{\tilde{u}}_R}\), \(m_{{\tilde{d}}_R}\), \(m_{{\tilde{c}}_R}\), and \(m_{{\tilde{s}}_R}\), and for the third-generation (mass eigenstate) squarks \(m_{{\tilde{b}}_1}\), \(m_{{\tilde{t}}_1}\), \(m_{{\tilde{b}}_2}\), and \(m_{{\tilde{t}}_2}\). We do this in two different ways: either drawing from a uniform distribution on the interval [50, 3500] GeV, or from a hybrid distribution uniform on the interval [50, 150] GeV and logarithmic on the interval [150, 3500] GeV. We order the third-generation squarks in mass after sampling, so that \({{\tilde{t}}_1}\) and \({{\tilde{b}}_1}\) are by definition the lightest. We intentionally choose these sampling ranges to be slightly beyond our final claimed region of validity for the regression – typically 200–3000 GeV – in order to try to avoid large regression errors at the edges. The regions where our regression has been validated more than cover the ranges of masses of interest for the LHC. We sample the cosines of the sbottom and stop mixing angles, \(\cos \theta _{{\tilde{b}}}\) and \(\cos \theta _{{\tilde{t}}}\), uniformly on the interval \([-1, 1]\).
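The two baseline mass priors can be sketched as follows. This is a minimal illustration, not the sampling code used for xsec: the function names are hypothetical, and the probability `p_low` of drawing from the uniform low-mass part of the hybrid prior is an assumption, since the relative weight of the two parts is not specified here.

```python
import math
import random

M_MIN, M_SPLIT, M_MAX = 50.0, 150.0, 3500.0  # GeV, interval edges quoted in the text


def sample_uniform(rng: random.Random) -> float:
    """Uniform prior on [50, 3500] GeV."""
    return rng.uniform(M_MIN, M_MAX)


def sample_hybrid(rng: random.Random, p_low: float = 0.1) -> float:
    """Hybrid prior: uniform on [50, 150] GeV, logarithmic on [150, 3500] GeV.

    p_low is an ASSUMED mixing probability, chosen only for illustration.
    """
    if rng.random() < p_low:
        return rng.uniform(M_MIN, M_SPLIT)
    return math.exp(rng.uniform(math.log(M_SPLIT), math.log(M_MAX)))


def sample_third_generation(rng: random.Random, draw) -> tuple:
    """Draw two masses and order them, so that the first is the lighter state."""
    m_a, m_b = draw(rng), draw(rng)
    return min(m_a, m_b), max(m_a, m_b)


rng = random.Random(1)
m_gluino = sample_hybrid(rng)
m_stop1, m_stop2 = sample_third_generation(rng, sample_uniform)
cos_theta_stop = rng.uniform(-1.0, 1.0)  # mixing-angle cosine, uniform on [-1, 1]
```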

On top of our baseline sampling, we employ further sub-sampling of the particle masses in order to properly include cross-section resonances within our training set. This ensures that our training dataset is more densely sampled where the cross-sections of interest vary the most. To do this, we generate and then combine six different training sets:

(i) All masses sampled from the uniform prior.

(ii) All masses sampled from the hybrid prior.

(iii) Employing the uniform prior for both the mass of the gluino and for a common squark mass scale, and taking a Gaussian prior with width 50 GeV for the difference between the common squark mass scale and the masses of the individual squarks.

(iv) Employing the uniform prior for the mass of the gluino, the hybrid prior for a common squark mass scale, and the same Gaussian prior as in (iii) to draw values for the squark masses around the common scale.

(v) Employing the uniform prior for a common mass scale, and using the same Gaussian prior as in (iii) to draw a gluino mass and the individual squark masses around the common scale.

(vi) Varying the gluino mass and a common squark mass independently on a two-dimensional grid spanning from 60 to 3000 GeV, with steps of 60 GeV (see footnote 3).

By joining these samples, we are able to achieve both good sampling of low masses (where cross-sections are large) via the logarithmic mass prior, and acceptable sampling of large masses via the uniform prior.
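A near-degenerate sample of the kind described in (iii) could be drawn along the following lines. This is a sketch under stated assumptions: the function name is hypothetical, the quoted 50 GeV width is interpreted as the standard deviation of the Gaussian, and no treatment of draws that fall outside the sampling range is attempted, since none is specified here.

```python
import random


def sample_near_degenerate(rng, n_squarks=8, width=50.0, m_min=50.0, m_max=3500.0):
    """Uniform gluino mass and common squark scale, Gaussian scatter around the scale.

    width is ASSUMED to be the Gaussian standard deviation in GeV.
    """
    m_gluino = rng.uniform(m_min, m_max)
    m_common = rng.uniform(m_min, m_max)
    m_squarks = [rng.gauss(m_common, width) for _ in range(n_squarks)]
    return m_gluino, m_common, m_squarks


rng = random.Random(7)
m_gluino, m_common, m_squarks = sample_near_degenerate(rng)
```

Sets (iv) and (v) would follow the same pattern, replacing the prior for the common scale or drawing the gluino mass from the Gaussian as well.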

Because R-parity is assumed in the MSSM, there are no new s-channel resonances in the mass range where we train our GPs, and SM resonances are too light to have any impact. Therefore, we do not have to worry directly about sampling densely in the region of resonances. However, at LO there are potential effects for gluino pair production near threshold from destructive interference between diagrams with s-channel gluons and those with t-channel squarks. For squark production, the chirality of the final-state squarks affects the contribution from t-channel gluino exchange, so that for example in squark–squark production for equal chiralities the matrix element has a zero at \(m_{{\tilde{g}}}\rightarrow 0\), and a maximum around \(m_{{\tilde{g}}}\simeq {\bar{m}}_{{\tilde{q}}}\), where \({\bar{m}}_{{\tilde{q}}}\) is the average mass of the first and second-generation squarks. Both these effects can lead to non-monotonic behaviour of the cross-section as a function of masses (bumps and dips). In Sect. 4 we shall see this particularly for gluino pair production. This necessitates the separate samples with (near) degenerate masses, where such effects are larger.

Even though all non-degenerate squark masses enter into the LO cross-sections, to the precision of our training sample (see Sect. 3.2), only two mass parameters enter into the NLO corrections to gluino and first/second-generation squark production: the gluino mass \(m_{{\tilde{g}}}\) and the averaged first and second-generation squark mass \({\bar{m}}_{{\tilde{q}}}\). Therefore, any additional interference structures present in the NLO corrections to these processes must be visible in the \((m_{{\tilde{g}}},{\bar{m}}_{{\tilde{q}}}) \) slice of the parameter space. For stop/sbottom production, such structures should be similarly visible in the \((m_{{\tilde{g}}}, m_{{\tilde{t}}_1/{\tilde{b}}_1})\) slice. As a result, we shall spend some time below in validation (Sect. 4) looking at gluino and first/second-generation squark production cross-sections in terms of \(m_{{\tilde{g}}}\) and \({\bar{m}}_{{\tilde{q}}}\), and third-generation squark production in terms of \(m_{{\tilde{g}}}\) and \(m_{{\tilde{t}}_1}\).

Table 1 gives the list of cross-sections available from xsec, and their parameter dependencies. The reader may wonder at this point why we train our GPs on the total cross-section in terms of all the non-degenerate masses, rather than simply on the NLO corrections (in terms of just \(m_{{\tilde{g}}}\) and \({\bar{m}}_{{\tilde{q}}}\) for gluino/first/second-generation squark production, supplemented with the three relevant third-generation parameters each for stop and sbottom production). One reason is that we have designed xsec to be more general than Prospino; in future releases we intend to make use of training data from other tools able to move beyond the degenerate-squark-mass approximation. The other reason is that we feel it is more convenient for a user to simply obtain full LO+NLO (and future +NLL+NNLL+...) cross-sections from xsec, rather than needing to install the correct LO cross-section calculator and PDF set, call them, and then combine the results. Similarly, training on and returning the full cross-section will make simultaneously including cross-sections from different calculators (chosen e.g. as most appropriate for different theories or processes) far more straightforward.

3.2 Calculation of NLO training cross-sections

We use Prospino 2.1 to generate cross-sections for our training samples. This calculates, amongst other things, NLO cross-sections for strong production processes in the MSSM for proton-proton collisions at a given centre-of-mass (CoM) energy, and for a choice of renormalisation/factorisation scales. We have modified the code to set \(\alpha _s\) accordingly, and to accept generic PDF sets from LHAPDF 6.2 [48]. For the current version of xsec we have used the PDF4LHC15_nlo_30_pdfas symmetric Hessian NLO PDF set with 30 eigenvector members and two members with varied strong coupling \(\alpha _s(m_Z)=0.1180\pm 0.0015\) [49].

Prospino performs the PDF integral of the partonic process using the VEGAS [50] importance sampling algorithm for Monte Carlo integration. The convergence criterion leaves some numerical noise in the result, typically of the order of \(10^{-3}\) relative to the central cross-section value.

In order to obtain K-factors for gluino and first/second-generation squark production, Prospino first calculates the LO and NLO cross-sections using a single squark mass, obtained as the average over the masses of all first and second-generation squarks, i.e. eight in total; this mass is employed even for any internal third-generation squark propagators. The ratio of the NLO to the LO result gives the K-factor for the process in question. Prospino then recomputes the cross-section at LO, without the assumption of an average mass, and the corresponding NLO value is found by multiplying this LO result by the K-factor calculated for the average squark mass. The calculation proceeds similarly for stop and sbottom production, except that the third-generation squark masses are kept non-degenerate for all steps of the calculation, meaning that first and second-generation masses are still averaged in the K-factor calculation. The final cross-sections should thus be viewed as an approximation to a fully non-degenerate squark mass NLO calculation. The effect of this assumption was investigated in Ref. [51] and found to be relatively small in most parts of parameter space.
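Written out, the procedure described above for gluino and first/second-generation squark production amounts to the following. The notation \(\sigma ^{\text {LO}}\), \(\sigma ^{\text {NLO}}\) and \(\sigma ^{\text {NLO}}_{\text {approx}}\) is introduced here for illustration only, with \(\{m_{{\tilde{q}}_i}\}\) denoting the eight individual first and second-generation squark masses:

\[
{\bar{m}}_{{\tilde{q}}} = \frac{1}{8}\sum _{i=1}^{8} m_{{\tilde{q}}_i}, \qquad
K = \frac{\sigma ^{\text {NLO}}(m_{{\tilde{g}}}, {\bar{m}}_{{\tilde{q}}})}{\sigma ^{\text {LO}}(m_{{\tilde{g}}}, {\bar{m}}_{{\tilde{q}}})}, \qquad
\sigma ^{\text {NLO}}_{\text {approx}}(m_{{\tilde{g}}}, \{m_{{\tilde{q}}_i}\}) = K \, \sigma ^{\text {LO}}(m_{{\tilde{g}}}, \{m_{{\tilde{q}}_i}\}).
\]

The dependence on the individual squark masses therefore enters only through the LO factor, while the NLO correction depends on \(m_{{\tilde{g}}}\) and \({\bar{m}}_{{\tilde{q}}}\) alone.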

In total, Prospino allows 141 final states containing gluinos and different flavour squarks. However, due to charge conjugation relations and NLO QCD identities in the cross-section (relating certain combinations of left and right-handed final-state squarks under the exchange of masses), only 49 distinct final states are needed to represent all processes calculated by Prospino in our training sample. These relationships are handled internally in xsec, and therefore need not directly concern the user. A list of the available cross-sections from the user’s side can be found in Table 1.

For every parameter point and every process in the training sample, we calculate 35 different cross-section values. These include: (i) a central value, (ii) 30 values using the PDF eigenvectors, (iii) one value where \(\alpha _s\) is taken to its lower value, (iv) one where \(\alpha _s\) is taken to its upper value, (v) a value where the renormalisation/factorisation scale is doubled, and (vi) a value where the renormalisation/factorisation scale is halved.

From the values in (i) and (ii) we follow the PDF4LHC guidelines to calculate the symmetric PDF uncertainty on the central value, understood to be a 68% confidence level bound (see footnote 4). Similarly, we follow the PDF4LHC prescription to calculate the 68% confidence level bound from varying \(\alpha _s\) alone, using the results from (iii) and (iv). We do not add the PDF and \(\alpha _s\) errors, but train different DGPs for the two errors. We note that these errors should be added in quadrature after evaluation if the user wishes to obtain a single 68% confidence bound incorporating both effects.
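For orientation, the sketch below shows how these quantities can be computed for a symmetric Hessian set, following our understanding of the PDF4LHC15 recommendation: the PDF uncertainty is the quadrature sum of the member deviations from the central value, and the \(\alpha _s\) uncertainty is half the difference between the upper and lower variations. The function names and the toy numbers are illustrative assumptions, not part of Prospino or xsec.

```python
import math


def pdf_uncertainty(central, members):
    """Symmetric Hessian PDF uncertainty: quadrature sum of member deviations."""
    return math.sqrt(sum((m - central) ** 2 for m in members))


def alphas_uncertainty(sigma_up, sigma_down):
    """alpha_s uncertainty: half the spread between upper and lower variations."""
    return abs(sigma_up - sigma_down) / 2.0


def combined_pdf_alphas(delta_pdf, delta_alphas):
    """Quadrature combination of the PDF and alpha_s uncertainties."""
    return math.hypot(delta_pdf, delta_alphas)


# Toy cross-sections in arbitrary units (NOT real Prospino output).
central = 1.0
members = [1.0 + 0.01 * (-1) ** k for k in range(30)]  # 30 eigenvector members
d_pdf = pdf_uncertainty(central, members)
d_as = alphas_uncertainty(1.02, 0.98)
total = combined_pdf_alphas(d_pdf, d_as)
```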

Further, we follow the standard lore of estimating an uncertainty associated with missing higher-order corrections by calculating the spread in cross-section values under scale variation coming from (v) and (vi). In xsec this is referred to as the scale uncertainty. Choosing exactly how to interpret this asymmetric uncertainty as a probability distribution (flat, Gaussian or otherwise), and whether/how to combine it with the (Gaussian) \(\alpha _s\) and PDF uncertainties, is left to the user.

In total this leaves us with seven cross-sections: the central value and the two-sided PDF, \(\alpha _s\) and scale uncertainties.

3.3 Training implementation details

For each final state, we train one large DGP with multiple experts on the central cross-section value, and five smaller regular GPs: one each on the upper and lower values arising from renormalisation and factorisation scale variation, one each on the cross-sections for the variation of \(\alpha _s\) (and corresponding PDFs) to its upper and lower limit in its 68% confidence interval (these values are later symmetrised), and a single GP on the symmetric 68% confidence level PDF variation.

To improve the training, we try to simplify the target model. The span of some ten orders of magnitude in cross-sections across the sampled parameter space means that it is numerically challenging to train the DGPs on the raw cross-section numbers. Before training, we scale out part of the dependence on the final-state masses by first multiplying the central input cross-section by the square of the (average) final-state mass, and then taking the logarithm of the result. For the remaining five ‘error’ values, we train on the logarithm of the ratio to the central cross-section, such that after the reverse transformation, the final predictions for the ratios are strictly positive. As noted in Sect. 2.2, we choose a constant prior mean equal to the sample average of the transformed target values, which results in GPs effectively modelling the deviation from this mean value. We also rescale all input parameters to the interval [0, 1] before training, to improve numerical stability and precision.
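The transformations described above can be sketched as follows. The function names are hypothetical, the subtraction of the constant prior mean is omitted, and the min-max rescaling based on the sample extremes is an assumption, since the exact rescaling procedure is not spelled out here.

```python
import math


def transform_target(xsec, m_final_avg):
    """Log of the cross-section multiplied by the squared average final-state mass."""
    return math.log(xsec * m_final_avg ** 2)


def inverse_transform_target(t, m_final_avg):
    """Reverse the log and mass scaling to recover the cross-section."""
    return math.exp(t) / m_final_avg ** 2


def transform_error_ratio(xsec_varied, xsec_central):
    """Log of the ratio to the central value; the inverse is always positive."""
    return math.log(xsec_varied / xsec_central)


def rescale_inputs(rows):
    """Min-max rescale each input column to [0, 1] (ASSUMED procedure).

    Requires every column to contain at least two distinct values.
    """
    cols = list(zip(*rows))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    return [[(x - a) / (b - a) for x, a, b in zip(row, lo, hi)] for row in rows]


# Toy inputs: (gluino mass, squark mass) in GeV.
scaled = rescale_inputs([[100.0, 1000.0], [300.0, 3000.0], [200.0, 2000.0]])
```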

The transformations during training are automatically recorded and reversed at the time of evaluation. As the GPs are trained on the logarithm of the (mass-rescaled) cross-section, the resulting predictive distribution for the absolute cross-section is a log-normal distribution. Due to the positive skew of this distribution, we base the central cross-section estimate on the median. Specifically, let \({\mathcal {N}}(\mu _\text {DGP}({\mathbf {x}}_*), \sigma ^2_\text {DGP}({\mathbf {x}}_*))\) denote the aggregated DGP predictive distribution for the log-transformed cross-section at point \({\mathbf {x}}_*\), after accounting for the constant prior shift and the mass scaling applied to the training data. The central cross-section value \(y_\textsf {xsec} \) returned by xsec is then

\[ y_{\textsf {xsec}}({\mathbf {x}}_*) = \exp \left( \mu _\text {DGP}({\mathbf {x}}_*)\right) , \tag{36} \]

with associated asymmetric regression uncertainties \(\varDelta _\textsf {xsec} ^-\) and \(\varDelta _\textsf {xsec} ^+\). These are constructed from the bounds of the \(1\sigma \) credible interval \(\exp (\mu _\text {DGP}\pm \sigma _\text {DGP})\) (see footnote 5):

\[ \varDelta ^-_{\textsf {xsec}} = y_{\textsf {xsec}} - \exp \left( \mu _\text {DGP} - \sigma _\text {DGP}\right) , \qquad \varDelta ^+_{\textsf {xsec}} = \exp \left( \mu _\text {DGP} + \sigma _\text {DGP}\right) - y_{\textsf {xsec}}. \tag{37} \]

More generally, the range \(y_\textsf {xsec} \pm m \varDelta ^\pm _\textsf {xsec} \) corresponds to an \(n \sigma \) credible interval, where n is given by

\[ n = \frac{\ln \left( 1 \pm m \varDelta ^\pm _{\textsf {xsec}}/ y_{\textsf {xsec}}\right) }{\ln \left( 1 \pm \varDelta ^\pm _{\textsf {xsec}}/ y_{\textsf {xsec}}\right) }, \tag{38} \]

so that \(n = m\) to first order in \((m \varDelta ^\pm _\textsf {xsec}/ y_\textsf {xsec})\) and \((\varDelta ^\pm _\textsf {xsec}/ y_\textsf {xsec})\).
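The construction of the central value and the asymmetric errors from a log-normal predictive distribution can be illustrated with a few lines of code. This is a sketch based only on the description above (median as central value, errors from the bounds \(\exp (\mu \pm \sigma )\)); the function names are hypothetical and do not correspond to the xsec API.

```python
import math


def central_and_errors(mu, sigma):
    """Median of the log-normal and distances to the exp(mu -/+ sigma) bounds."""
    y = math.exp(mu)
    delta_minus = y - math.exp(mu - sigma)
    delta_plus = math.exp(mu + sigma) - y
    return y, delta_minus, delta_plus


def n_sigma(m, delta, y, upper=True):
    """Number of sigma in log space covered by y + m*delta (or y - m*delta).

    For the lower side this requires m * delta < y.
    """
    sign = 1.0 if upper else -1.0
    return math.log(1.0 + sign * m * delta / y) / math.log(1.0 + sign * delta / y)


y, d_minus, d_plus = central_and_errors(mu=0.0, sigma=0.01)
n_up = n_sigma(2.0, d_plus, y, upper=True)      # slightly below 2
n_down = n_sigma(2.0, d_minus, y, upper=False)  # slightly above 2
```

For small relative errors the deviation of \(n\) from \(m\) is second order, which is why scaling the quoted errors linearly is usually a good approximation.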

We train the GPs with the union of the training sets discussed in Sect. 3.1. In order to choose the optimal training parameters (relative sizes of the six different training samples, total number of training points, and experts per process), we have carried out a long programme of testing and cross-validation, in which we optimised training parameters separately for each process type, e.g. separately for gluino pair production and gluino–squark production. Our goal was to achieve good performance in terms of the regression error estimated by the DGP, while keeping disk size, memory footprint, training and evaluation time down to acceptable levels.

The processor time spent on training the GPs varies for each process and depends critically on the number of training points per expert because of the GP scaling relations, as well as on optimiser settings like the population size and convergence thresholds for the differential evolution. In practice, the total time we spent on training a single process ranges from 4.5 CPU hours for a simple case with only three parameters, such as \({\tilde{c}}_L^* {\tilde{c}}_L\) production, to 141 CPU hours for gluino pair production, which has ten parameters at NLO. In these most extreme examples, the use of parallel processing for the matrix operations, in particular for the different experts, reduced the elapsed wall-clock time by a factor of 8–12 compared to the CPU time.

4 Validation

To validate the xsec cross-section results we generate three main test sets: \({\mathcal {D}}_\textsf {test}\), \({\mathcal {D}}_{\textsf {test-tb}}\) and \({\mathcal {D}}_\textsf {MSSM-24}\). The set \({\mathcal {D}}_\textsf {test}\) is used for testing all cross-sections except for the stop/sbottom pair-production processes, which are tested with the set \({\mathcal {D}}_{\textsf {test-tb}}\). The sets \({\mathcal {D}}_\textsf {test}\) and \({\mathcal {D}}_{\textsf {test-tb}}\) contain 10,000 and 5000 points, respectively, and in both cases the input points are sampled in the same way as for the corresponding training sets. The third set, \({\mathcal {D}}_\textsf {MSSM-24}\), contains 19,000 points. We use this in the validation of all cross-sections. Here we draw the input points from the MSSM-24, as defined at \(Q=1\) TeV, using uniform priors for the MSSM parameters. Our definition of the MSSM-24 follows that in Ref. [3], except that we parameterise the Higgs sector using the higgsino mass parameter \(\mu \) and the tree-level mass of the \(A^0\) boson \(m_{A^0}\), instead of the soft-breaking mass parameters \(m_{H_u}^2\) and \(m_{H_d}^2\). For the samples in \({\mathcal {D}}_\textsf {MSSM-24}\) we use SoftSUSY 4.0 to calculate the physical mass spectrum from the MSSM input parameters [52, 53]. In addition to the three main test sets, we also generate a number of process-specific two-dimensional parameter grids for further validation.

As part of the validation we will discuss two simple error measures and their distributions for our sets of test points. The first measure is just the relative error,

\[ \epsilon ({\mathbf {x}}_*) = \frac{y_{\textsf {prosp}}({\mathbf {x}}_*) - y_{\textsf {xsec}}({\mathbf {x}}_*)}{y_{\textsf {prosp}}({\mathbf {x}}_*)}, \tag{39} \]

which measures the relative deviation of the xsec result \(y_\textsf {xsec} \) from the true Prospino value \(y_\textsf {prosp} \). By assuming some sampling prior \(\pi ({\mathbf {x}}_*)\) for the test points \({\mathbf {x}}_*\) and looking at the resulting distribution of \(\epsilon ({\mathbf {x}}_*)\) values, we can get a global picture of how well xsec performs for the different supersymmetric production processes included in xsec.

One of the strengths of GP regression is that the method directly provides point-wise uncertainty estimates, here in terms of \(\varDelta ^-_\textsf {xsec} ({\mathbf {x}}_*)\) and \(\varDelta ^+_\textsf {xsec} ({\mathbf {x}}_*)\), which are based on the width \(\sigma _\text {DGP}({\mathbf {x}}_*)\) of the DGP predictive distribution (see Eq. 37). As discussed in Sect. 2, the point-wise regression uncertainty is connected to a Bayesian degree of belief regarding the unknown function value at \({\mathbf {x}}_*\), given the particular training set \({\mathcal {D}}\) and the modelling choices made in constructing and optimising the kernel.

An interesting question is then how this point-wise uncertainty compares to the actual deviation between \(y_\textsf {xsec} \) and the true Prospino value \(y_\textsf {prosp} \) across the input feature space. That is, we should also investigate the distribution of the standardised residual

\[ z({\mathbf {x}}_*) = \frac{y_{\textsf {prosp}}({\mathbf {x}}_*) - y_{\textsf {xsec}}({\mathbf {x}}_*)}{\varDelta ^\pm _{\textsf {xsec}}({\mathbf {x}}_*)} \tag{40} \]

in our sets of test points. Here the notation \(\varDelta ^\pm _\textsf {xsec} \) is shorthand for using \(\varDelta ^+_\textsf {xsec} \) when \(y_\textsf {prosp}- y_\textsf {xsec} > 0\) and \(\varDelta ^-_\textsf {xsec} \) when \(y_\textsf {prosp}- y_\textsf {xsec} < 0\). By studying the distribution of \(z({\mathbf {x}}_*)\) values for our test sets, and comparing to the unit normal distribution, we will obtain a global picture of the extent to which the point-wise regression errors are conservative or not, compared to the true deviations.
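The two error measures can be sketched as follows. The function names and toy numbers are illustrative, and the sign convention of the relative error (reference value minus prediction) is an assumption chosen to match the sign convention used for selecting \(\varDelta ^\pm _\textsf {xsec} \) above.

```python
def relative_error(y_prosp, y_xsec):
    """Relative deviation of the prediction from the reference value."""
    return (y_prosp - y_xsec) / y_prosp


def standardised_residual(y_prosp, y_xsec, delta_minus, delta_plus):
    """Deviation in units of the regression error on the matching side."""
    diff = y_prosp - y_xsec
    delta = delta_plus if diff > 0 else delta_minus
    return diff / delta


def fraction_within(residuals, n_sigma=1.0):
    """Fraction of test points whose residual lies within n_sigma."""
    return sum(abs(z) <= n_sigma for z in residuals) / len(residuals)


# Toy test points: (y_prosp, y_xsec, delta_minus, delta_plus), arbitrary units.
points = [(1.02, 1.00, 0.04, 0.05), (0.97, 1.00, 0.04, 0.05), (1.00, 1.00, 0.04, 0.05)]
residuals = [standardised_residual(*p) for p in points]
coverage = fraction_within(residuals)
```

For residuals following a unit normal distribution, about 68% of points would fall within \(1\sigma \); a larger fraction indicates conservative regression errors.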

In cases where the main source of GP regression uncertainty is actual random noise in the training data, and we learn this noise level from the data by including a white-noise term, Eq. (16), in the GP kernel, we expect the residual in Eq. (40) to be distributed as \({\mathcal {N}}(0,1)\) (see footnote 6). We can understand this from a simple example with single-component input points x. Assume that the target data are noisy measurements of the form \(y(x) = f(x) + \delta \), where the noise \(\delta \) is distributed as \(p(\delta ) = {\mathcal {N}}(0,\sigma ^2_{\text {noise}})\). Let the GP posterior predictive distribution for \(y(x_*)\) be given by \({\mathcal {N}}(\mu _\text {GP}(x_*),\sigma ^2_\text {GP}(x_*))\). If the noise is the dominant uncertainty we have \(\sigma _\text {GP}(x_*) \approx \sigma _{\text {noise}}\), and we can express the residual as

\[ z(x_*) = \frac{y(x_*) - \mu _\text {GP}(x_*)}{\sigma _\text {GP}(x_*)} \approx \frac{y(x_*) - f(x_*)}{\sigma _{\text {noise}}} + \frac{f(x_*) - \mu _\text {GP}(x_*)}{\sigma _\text {GP}(x_*)}. \tag{41} \]

Given the generative model for the data, the first term will follow an \({\mathcal {N}}(0,1)\) distribution. The second term is the number of standard deviations (as measured by the GP’s own uncertainty) by which the GP prediction \(\mu _\text {GP}\) differs from the true value of the underlying function f. While this term can in general not be expected to be normally distributed, the assumption of noise-dominated uncertainty implies that its contribution to the residual is \(\ll 1\), and hence, that the z distribution is close to \({\mathcal {N}}(0,1)\).

On the other hand, if the true noise level is tiny and some other source of uncertainty dominates \(\sigma _\text {GP}\), i.e., if \(y \approx f\) and \(\sigma _\text {GP} \gg \sigma _\text {noise}\), we get

\[ z(x_*) \approx \frac{f(x_*) - \mu _\text {GP}(x_*)}{\sigma _\text {GP}(x_*)}. \tag{42} \]

Thus, in this limit it is the generally small, and potentially non-Gaussian, second term from Eq. (41) that dominates the residual.

The latter scenario is most similar to the case we have for the xsec residual in Eq. (40). The actual noise level in the Prospino training data is very small, as typically is the additional error in Eq. (26) accounting for uncertainty in the hyperparameter choice. Assuming some reasonably uninformative sampling prior \(\pi ({\mathbf {x}}_*)\), this means that for most points the widths \(\varDelta ^\pm _\textsf {xsec} ({\mathbf {x}}_*)\) are dominated by the homoscedastic error contribution that we include to stabilise the numerics (see Sect. 2.3). As this is a global error contribution, we can expect the resulting regression error for most test points to be larger than the actual error, \(y_\textsf {prosp} ({\mathbf {x}}_*) - y_\textsf {xsec} ({\mathbf {x}}_*)\). We therefore in general expect the \(z({\mathbf {x}}_*)\) distributions to be narrow compared to \({\mathcal {N}}(0,1)\), and not necessarily Gaussian. For comparison, we will include a graph of \({\mathcal {N}}(0,1)\) in all our plots of residual distributions.

In the coming subsections, much of our focus will be on the regression errors and the related relative error \(\epsilon \) and residual z. However, we remind the reader that the regression errors that we find are typically far subdominant to the cross-section uncertainties coming from the scale and PDF errors.

4.1 Gluino pair production

The gluino pair-production cross-section to NLO in QCD depends on the gluino mass and all the squark masses. Naturally, the gluino mass is the dominant feature of the DGP after training. The mass-averaging approximation that Prospino uses (see Sect. 3.2) means that the average first/second-generation squark mass \({\bar{m}}_{{\tilde{q}}}\) is a strong predictor of the NLO contribution. We therefore provide it to the GPs as a separate feature, i.e. as if it were part of the vector of parameters \({\mathbf {x}}\). The importance of the individual first and second-generation squark masses roughly follows the PDF contributions from their corresponding quarks, due to LO t-channel squark exchange diagrams.

Given that the mass range of parameters (features) in the training samples is [50, 3500] GeV, we validate the cross-section on the sub-interval [200, 3000] GeV for both gluino and squark masses, where the cross-section regression has solid support from training data and the regression error is small.

Gluino pair-production cross-section as a function of gluino mass, with all squark masses fixed at 1 TeV. The central value is shown in light green, the scale error in pink, and the PDF error in violet. The \(\alpha _s\) error is too small to be visible on the scale of the plot. Superimposed on the prediction are the Prospino values (dots). Inset is a close-up of the region at low gluino mass, and below we show the residual between the xsec prediction and the Prospino values

Gluino pair-production cross-section as a function of average first and second-generation squark mass. The gluino mass is fixed at 1 TeV. Shown are the central xsec prediction (solid line), the \(1\sigma \) regression error band (light green), the scale error (pink), the PDF error (violet), the Prospino values (dots) and the corresponding NNLL-fast NLO result (crosses)

In Fig. 2 we compare the gluino pair-production cross-sections predicted by xsec, presented as a function of the gluino mass, with values taken directly from Prospino (but not in the training set). For this comparison we fix the squark masses to a common value of 1 TeV. We also show the associated xsec-predicted uncertainty from the renormalisation scale and the PDFs as bands. The uncertainties from the regression provided by xsec and from \(\alpha _s\) are too small to be visible on the logarithmic scale. However, below the plot we show the residual between the xsec prediction and the Prospino value (Eq. 40), as a multiple of the xsec regression uncertainty. We observe good agreement overall with the Prospino result. As expected, the scale error dominates at low gluino masses, and the PDF error at high masses.

Gluino pair-production cross-section as a function of average first and second-generation squark mass for a set of different gluino masses. Shown is the central xsec prediction (solid line), the \(1\sigma \) and \(2\sigma \) regression error bands (shaded regions), and the Prospino values (dots). We also show the residuals of the comparison to Prospino

A particular phenomenon occurs in gluino pair production due to destructive interference between LO diagrams when \(m_{{\tilde{g}}}\approx m_{{\tilde{q}}}\), resulting in a vanishing partonic cross-section at threshold [7]. This can be found as a significant dip in the total pair-production cross-section when one or more of the squark masses become degenerate with the gluino. We show that xsec reproduces this behaviour to very good precision in Fig. 3. Here the gluino mass is fixed to 1 TeV while the squark masses are run together as a common squark mass \({\bar{m}}_{{\tilde{q}}}\). This figure also clearly demonstrates how subdominant the regression error is compared to the scale and PDF errors. Finally, Fig. 3 compares the results of xsec to the corresponding NLO result from NNLL-fast based on the same PDF set. We observe a slight systematic difference at the \(\sim \)1% level. This is at the level of the interpolation error quoted by NNLL-fast.

The same gluino pair-production cross-section as a function of a common squark mass for a selection of different gluino masses can be found in Fig. 4, showing that xsec reproduces the feature across the whole assumed range of validity for the regression. As expected, the regions in which the cross-section changes most rapidly are the most difficult to predict, but we see that with one exception the prediction is always within \(2\sigma \) of the Prospino value, over a large number of test points.

Gluino pair-production cross-section as a function of the \({{\tilde{u}}}_L\) mass. All other squark masses are fixed at 1 TeV and the gluino mass is set to 1.5 TeV. Shown is the central xsec prediction (solid line), the \(1\sigma \) and \(2\sigma \) regression error bands (shaded regions), the Prospino values (dots) and the corresponding NNLL-fast NLO result (crosses)

Gluino pair-production cross-section as a function of the \({{\tilde{d}}}_R\) mass. All other squark masses are fixed at 1 TeV and the gluino mass is set to 2 TeV. Shown is the central xsec prediction (solid line), the \(1\sigma \) and \(2\sigma \) regression error bands (shaded regions), the Prospino values (dots) and the corresponding NNLL-fast NLO result (crosses)

In Figs. 5 and 6, we show the potential importance of being able to deal with non-degenerate squark masses in xsec. Here, we vary the \({{\tilde{u}}}_L\) and \({{\tilde{d}}}_R\) mass alone, respectively, with all other squarks fixed at 1 TeV and the gluino at 1.5 TeV for \({{\tilde{u}}}_L\) and 2 TeV for the plot with \({{\tilde{d}}}_R\). While less pronounced, qualitatively the same dip feature due to the t-channel interference as discussed above can be seen here as well. In this example, the NNLL-fast NLO result fails to reproduce the feature, as expected from its inherent assumption of degenerate squark masses.

Figure 7 shows the distributions of the relative error (left) and the residual (right) for the central cross-section value using the test sets \({\mathcal {D}}_\textsf {test}\) and \({\mathcal {D}}_\textsf {MSSM-24}\). All distributions are normalised to unity, and the y-axis range is set to show all bins with non-zero values. In both sets the relative error is well below 5% for most points, and in fact there is only a single point, in the \({\mathcal {D}}_\textsf {MSSM-24}\) set, with an error greater than 5%. From the comparison to the unit normal distribution we see that, as expected, the xsec regression error is somewhat larger than the true error, but with no apparent bias. The xsec prediction is also robust under a change of the test sample to the MSSM-24.

The relative error (left) and residual (right) distributions for the gluino pair-production cross-section in the test sets \({\mathcal {D}}_\textsf {test}\) (solid) and \({\mathcal {D}}_\textsf {MSSM-24}\) (dashed). The input points in \({\mathcal {D}}_\textsf {test}\) are sampled from the same distribution as the training set, while the points in \({\mathcal {D}}_\textsf {MSSM-24}\) are sampled from the MSSM-24 using flat priors for the MSSM parameters. All distributions are normalised to unity. The unit normal distribution is shown for comparison as a dotted black line

It is also instructive to perform two-dimensional grid scans of mass planes to show the relative error and residual of the central cross-section value as a function of two of the features at a time. Two examples of this are found in Fig. 8, where we show the result in the planes of \((m_{{\tilde{g}}}, {\bar{m}}_{{\tilde{q}}})\) and \((m_{{\tilde{g}}}, m_{{\tilde{d}}_R})\). We see that the relative errors and residuals are correlated in the mass planes, as should be expected from Gaussian processes when the dominant uncertainty is not due to random noise in the training data, but rather due to the lack of information in regions where the function is changing quickly. We also note that the regression uncertainty is largest when \(m_{{\tilde{g}}}\approx m_{{\tilde{q}}}\). This shows that the destructive interference dip seen in Figs. 3, 4 and 5 is the part of this cross-section function that is the most challenging to capture in the regression. Further improvements could be made by adding extra training points, but only at significant cost to the evaluation speed, which seems unwarranted given the small regression errors compared to the other errors. Naive counting of the number of bins in the residual plots (right panels) above the 1, 2, and \(3\sigma \) levels indicates that the quoted xsec regression error is in general conservative compared to the actual error (i.e. fewer bins show large residuals than expected from Gaussian statistics).

In addition to the regression errors, xsec also predicts scale, PDF and \(\alpha _s\) errors from separate GPs performing regression on the relative size of the respective error bands, see Sect. 3.3. The performance of these GPs in the case of gluino pair production is shown in Fig. 9 in terms of the resulting relative error on the errors, compared to values calculated using Prospino for the \({\mathcal {D}}_\textsf {test}\) and \({\mathcal {D}}_\textsf {MSSM-24}\) test sets. To be precise, we compute the analogue of Eq. (39) for each individual type of error:

\[ \epsilon _\delta ({\mathbf {x}}_*) = \frac{\delta _{\textsf {prosp}}({\mathbf {x}}_*) - \delta _{\textsf {xsec}}({\mathbf {x}}_*)}{\delta _{\textsf {prosp}}({\mathbf {x}}_*)}, \tag{43} \]

where \(\delta _\textsf {prosp} ({\mathbf {x}}_*)\) represents the true width of the scale, PDF, or \(\alpha _s\) error band at the test point \({\mathbf {x}}_*\), as computed with Prospino, and \(\delta _\textsf {xsec} ({\mathbf {x}}_*)\) is the corresponding xsec estimate.

While PDF and \(\alpha _s\) errors computed by xsec are symmetric by default (see Sect. 5.3), the scale errors are not. We therefore symmetrise these before adding them in quadrature to the PDF and \(\alpha _s\) errors, to obtain a combined error. We find that the relative error on this combined error is below 10% for over 90% of the test points.
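A minimal sketch of this combination follows. The text does not specify the symmetrisation scheme, so averaging the magnitudes of the lower and upper scale variations is an assumption made here; the function names and numerical values are likewise hypothetical.

```python
import math


def symmetrise(err_down, err_up):
    """Symmetrise an asymmetric band by averaging the two magnitudes.

    Assumed scheme: taking the larger of the two would be another option.
    """
    return 0.5 * (abs(err_down) + abs(err_up))


def combined_error(scale_down, scale_up, pdf, alpha_s):
    """Add the symmetrised scale error in quadrature to the PDF and alpha_s errors."""
    scale = symmetrise(scale_down, scale_up)
    return math.sqrt(scale**2 + pdf**2 + alpha_s**2)


def relative_error_on_error(delta_xsec, delta_true):
    """Relative deviation of a predicted error band from its reference value."""
    return (delta_xsec - delta_true) / delta_true


# Toy relative errors (hypothetical): scale -10%/+14%, PDF 5%, negligible alpha_s.
total = combined_error(-0.10, 0.14, 0.05, 0.0)
print(f"combined relative error: {total:.3f}")
```

With these toy inputs the symmetrised scale error is 0.12, so the combined error is the quadrature sum of 0.12 and 0.05.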

Given that the absolute magnitude of the cross-section is much larger than the absolute magnitude of the individual errors, these errors on errors are largely insignificant. Very similar conclusions can be reached for the scale, PDF and \(\alpha _s\) errors for the other processes included in xsec, and for the sake of brevity we do not show the relative errors on the errors for other processes.

Gluino–squark pair-production cross-section as a function of gluino mass (left) and squark masses (right), for production of first and second-generation squarks. Shown are individual (left-handed) squark final states (colours) and the sum of all first and second-generation final states (black). In the left-hand plot all squark masses are fixed at 1 TeV. In the right-hand plot all masses except for the final-state squark mass are fixed at 1 TeV. The central-value xsec prediction is shown with error bands from regression (solid line), scale error (dashed) and PDF error (dotted). The \(\alpha _s\) error is too small to be visible. Also shown are the Prospino values (dots)

4.2 Pair production of gluinos with first or second-generation squarks

The data that we employ for training xsec 1.0 are limited by the fact that Prospino assumes flavour conservation and neglects heavy quarks in the proton PDFs. It therefore offers gluino–squark pair-production cross-sections only for processes with first and/or second-generation squarks in the final state. However, cross-sections for gluino–squark production with sbottoms or stops in the final state are expected to be very small. We also note that Prospino returns the cross-section for the sum over charge-conjugate final states, i.e. \({\tilde{g}}{\tilde{q}}_i+{\tilde{g}}{\tilde{q}}_i^*\), making it pointless to train xsec separately on the two final states. In addition to these limitations, at NLO QCD the numerical value of the cross-section is identical for left and right-handed squark final states, as long as their masses are identical. Although we use this fact internally in xsec to reduce the total file size of the DGPs, the user can freely request any first or second-generation final-state squark.

The relative error (left) and residual (right) distributions for the first and second-generation gluino-squark cross-sections, for the test sets \({\mathcal {D}}_\textsf {test}\) (solid) and \({\mathcal {D}}_\textsf {MSSM-24}\) (dashed). All distributions are normalised to unity. The unit normal distribution is shown as a dotted black line for comparison to the residual distributions

The sizes of the gluino–squark production cross-sections are naturally dominated by the gluino and final-state squark masses. Because of flavour conservation, to the level of approximation used in Prospino’s K-factor calculation, the only additional property of the model parameter space (i.e. feature) used by xsec is the average squark mass \({\bar{m}}_{{\tilde{q}}}\). The range of sparticle masses over which we assume this cross-section evaluation to be valid is the same as for gluino pair production, i.e. [200, 3000] GeV.

In Fig. 10 we show the predicted gluino–squark production cross-sections as a function of the gluino mass (left) and the individual \({\tilde{q}}_L\) masses (right). For the individual squark masses we keep all other masses at 1 TeV and change only the mass of the final-state squark. Also shown are the predicted regression, PDF and scale errors, and the residual between the xsec predictions and the Prospino values calculated for the same parameters. We see that xsec reliably predicts the contribution from individual squark final-state flavours. This is even the case in the region of very low final-state squark masses, which tests xsec on the arguably strange scenario in which the particular final-state squark is much lighter than the average mass of the first and second-generation squarks.

For the \({\tilde{g}} {\tilde{d}}_L \) and \({\tilde{g}} {\tilde{u}}_L\) processes, the scale error is the dominant uncertainty across the full mass range in both the gluino mass and the final-state squark mass. For the \({\tilde{g}} {\tilde{s}}_L \) and \({\tilde{g}} {\tilde{c}}_L\) processes it is generally the PDF error that dominates the uncertainty, except at low gluino masses, where the scale error is more important. The residuals between the xsec and Prospino values appear correlated across the four processes shown. This is because the same training sample is used for all processes, so the distances to the nearest, most influential training points are the same in each case.

In Fig. 11 we show the distributions of the relative error between the xsec prediction and the corresponding Prospino results, as well as the residual, for each individual flavour final state. We use the same two test sets, \({\mathcal {D}}_\textsf {test}\) and \({\mathcal {D}}_\textsf {MSSM-24}\), as for the gluino pair-production cross-section in Fig. 7. All the distributions are normalised to unity.

The relative errors and residuals are similar across all squark flavours, and there are no obvious differences in performance between the two test sets. The relative error distributions show that for almost all points in the test sets, the true regression error is below 10%, and xsec tends to overestimate the Prospino cross-section by a few percent. Comparing the residual and \({\mathcal {N}}(0,1)\) distributions, we see that the predicted xsec regression uncertainty is conservative; indeed, notably more so than for the gluino pair-production cross-section (Fig. 7).

We can also compare the relative error across mass planes, which is shown in Fig. 12 separately for all \({\tilde{g}}{\tilde{q}}_L\) processes, in terms of the gluino mass and the average first and second-generation squark mass. Here the final-state squark mass for each plotted cross-section is set equal to the average squark mass. We see that the regression error is below 8% across this plane for all four of the \({\tilde{g}} {\tilde{d}}_L \), \({\tilde{g}} {\tilde{u}}_L \), \({\tilde{g}} {\tilde{s}}_L \), and \({\tilde{g}} {\tilde{c}}_L \) production cross-sections.

The relative error of the production cross-section for gluinos and first or second-generation squarks, as a function of the average mass of first and second-generation squarks and the mass of the gluino. Shown are results for the production of \({\tilde{g}} {\tilde{d}}_L \) (top left), \({\tilde{g}} {\tilde{u}}_L \) (top right), \({\tilde{g}} {\tilde{s}}_L \) (bottom left), and \({\tilde{g}} {\tilde{c}}_L \) (bottom right). The final-state squark mass for each process is set equal to the average squark mass

Squark–anti-squark pair-production cross-sections for first and second-generation squarks. Panels show cross-sections as a function of the average of all first and second-generation squark masses (left) and gluino mass (right), for a selection of final states (colours) and the total cross-section for production of first and second-generation squark–anti-squark pairs (black)

4.3 First and second-generation squark–anti-squark pair production

We now look at the production cross-sections for squark–anti-squark pairs \({{\tilde{q}}}_{L/R} {{\tilde{q}}}^{(\prime )*}_{L/R}\). The flavours of the pair may be identical or different, and all four combinations of squark handedness are treated as separate processes. If the flavours of the two squarks are different, the process is assumed to include the charge-conjugate state. In this section we discuss only final states with first and second-generation (anti-)squarks, where the final-state flavour may come from first and second-generation (anti-)quarks sampled from the proton.

Within the limitations set by the training data from Prospino, the LO first and second-generation squark–anti-squark cross-sections depend on the masses of the gluino and the final-state squark(s), and the NLO corrections further on the mean mass of all first and second-generation squarks. Again, we validate the cross-section on the sub-interval [200, 3000] GeV of the training data (in both gluino and squark mass). For squark–anti-squark production, one should keep in mind that masses below the lower end of this range may be affected by resonant production through Z and W, and while xsec’s reported regression error increases below 200 GeV, it cannot take these resonances into account, as they are not included in its training data. The resulting cross-sections reported by xsec must thus be seen as wholly unreliable for squark masses below 50 GeV.
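The mass ranges quoted above can be encoded as a simple guard applied before a prediction is trusted. The sketch below is illustrative only: the function and constant names are hypothetical and are not part of the xsec interface, and the labels merely restate the three regimes described in the text.

```python
VALID_MIN_GEV = 200.0        # lower edge of the validated interval
VALID_MAX_GEV = 3000.0       # upper edge of the validated interval
UNRELIABLE_BELOW_GEV = 50.0  # below this, missing Z/W resonances make results unreliable


def classify_squark_mass(mass_gev):
    """Label a squark mass according to the validation regimes quoted in the text."""
    if mass_gev < UNRELIABLE_BELOW_GEV:
        return "unreliable"
    if VALID_MIN_GEV <= mass_gev <= VALID_MAX_GEV:
        return "validated"
    return "outside validated range"


for mass in (30.0, 120.0, 1000.0, 3500.0):
    print(f"{mass:7.1f} GeV: {classify_squark_mass(mass)}")
```

Points labelled "outside validated range" may still come with a regression error estimate, but that estimate does not account for physics absent from the training data.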

In NLO QCD, cross-sections for the two sets of process pairs \(({{\tilde{q}}}_L {{\tilde{q}}}_L^*, {{\tilde{q}}}_R{{\tilde{q}}}_R^*)\) and \(({{\tilde{q}}}_R {{\tilde{q}}}_L^{\prime *},{{\tilde{q}}}'_R {{\tilde{q}}}_L^*)\) differ within each set only by an exchange of the appropriate squark masses. Removing also charge-conjugate states, an initial number of 64 independent processes \({\tilde{q}}_{L/R}{\tilde{q}}_{L/R}^{\prime *}\) therefore reduces to 20 unique cross-sections. To save training time and user disk space, we reuse the DGPs for the identical processes in xsec simply by employing symbolic links and mapping the masses accordingly. This is, however, invisible to the user.
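The reduction from 64 processes to 20 unique cross-sections can be checked by direct enumeration. The sketch below treats each process as a pair of (flavour, chirality) labels and identifies pairs related by charge conjugation (swapping the two labels) or by a simultaneous L/R flip with the masses exchanged. The data representation is an assumption made for illustration and does not reflect how xsec stores its processes.

```python
from itertools import product

FLAVOURS = ("d", "u", "s", "c")
CHIRALITIES = ("L", "R")
SQUARKS = [(f, c) for f in FLAVOURS for c in CHIRALITIES]  # 8 squark states


def flip(squark):
    """Exchange the chirality label L <-> R, keeping the flavour."""
    flavour, chirality = squark
    return (flavour, "R" if chirality == "L" else "L")


def canonical(a, b):
    """Representative of the class of (a, b) under swap and global L/R flip."""
    pair = tuple(sorted((a, b)))
    flipped = tuple(sorted((flip(a), flip(b))))
    return min(pair, flipped)


ordered = list(product(SQUARKS, repeat=2))                   # squark, anti-squark
after_conjugation = {tuple(sorted(p)) for p in ordered}      # merge conjugate states
unique = {canonical(a, b) for a, b in ordered}               # also merge L/R partners

print(len(ordered), len(after_conjugation), len(unique))
```

The intermediate count after merging charge-conjugate states is 36: 10 LL, 10 RR and 16 mixed-chirality combinations. The L/R identification then merges LL with RR and halves the 12 mixed-chirality, different-flavour combinations, leaving 10 + 4 + 6 = 20.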

In Fig. 13 we show the predicted first and second-generation squark–anti-squark production cross-sections as a function of the mean first and second-generation squark mass \({\bar{m}}_{{\tilde{q}}}\) (left) and the gluino mass (right). We show results for a selection of sub-processes, and for the total cross-section (black, rescaled for readability). All other masses are kept at 1 TeV. We see that xsec reliably predicts the contribution from individual squark final states, although at high squark masses the PDF error (dotted line) for some processes is consistent with zero cross-section. We also see that xsec correctly captures the contribution of the gluino t-channel diagram, which controls the cross-section when the final-state squarks have different chirality, leading to the peak in Fig. 13 (right) for L-R combinations when \(m_{{\tilde{g}}}\simeq m_{{\tilde{q}}}\).

In Fig. 14 we show the distributions of the relative regression error and residual (Eqs. (39) and (40)) for the points in the test sets \({\mathcal {D}}_\textsf {test}\) and \({\mathcal {D}}_\textsf {MSSM-24}\). We have normalised all distributions to unity. The comparison to the unit normal distribution included in Fig. 14 (right column) shows that for both test sets, and all processes, the xsec regression error is conservative with respect to the true error.

First and second-generation squark–squark pair-production cross-section as a function of the average first and second-generation squark mass (left) and gluino mass (right), for a selection of final states (colours) and the (rescaled) total cross-section for first and second-generation squark–squark production (black)

The relative regression error in Fig. 14 (left column) is below 10% for all processes for the vast majority of test points. There is again a slight tendency for xsec to overestimate the Prospino cross-section values, in particular for the \({\mathcal {D}}_\textsf {MSSM-24}\) test set. As this set has individual flat priors for all the squark (soft) masses, which only a subset of \({\mathcal {D}}_\textsf {test}\) has, we expect it to be more challenging to reproduce as its points are more likely to lie on the outskirts of the validation region.

The relative error distributions are narrowest for the processes producing a squark–anti-squark pair of the same type, i.e., for the four \({\tilde{q}}_L^* {\tilde{q}}_L\) processes and the corresponding \({\tilde{q}}_R^* {\tilde{q}}_R\) processes (not shown). These cross-sections are easier to model, as the final state involves only a single mass parameter. The \({\tilde{s}}_L^* {\tilde{s}}_L\) and \({\tilde{c}}_L^* {\tilde{c}}_L\) processes have particularly small relative errors. This is likely due to the smallness of the proton PDFs for the s and c quarks, which effectively makes the gluino t-channel diagram irrelevant and thus further simplifies the parameter dependence.

4.4 First and second-generation squark pair production

This section looks at the validation of DGPs trained to predict cross-sections for squark–squark pair production, \({{\tilde{q}}}_{L/R} {{\tilde{q}}}^{(\prime )}_{L/R}\). As should be clear from the notation, the flavours of the pair may be identical or different, and all four combinations of squark handedness are treated as separate processes. The processes are always assumed to include the charge-conjugate state. Again, we discuss only final states with first and second-generation squarks, where at LO the final-state flavour comes from first and second-generation quarks in the proton.

As for squark–anti-squark production, within the limitations set by the design of Prospino, the LO first and second-generation squark–squark pair-production cross-sections depend on the mass(es) of the final-state squarks and the gluino mass, and the NLO corrections further depend on the mean mass of the first and second-generation squarks. We again validate our cross-sections on the sub-interval [200, 3000] GeV of the training data, for both gluino and squark masses.

If the squark masses are interchanged appropriately, the cross-sections for the process pairs \(({{\tilde{q}}}_L {{\tilde{q}}}_L, {{\tilde{q}}}_R{{\tilde{q}}}_R)\) are identical in Prospino, as are those for the process pairs \(({{\tilde{q}}}_R {{\tilde{q}}}_L^{\prime },{{\tilde{q}}}'_R {{\tilde{q}}}_L)\). The 64 independent processes \({\tilde{q}}_{L/R}{\tilde{q}}_{L/R}^{\prime }\) can therefore be reduced to 20 unique cross-sections. Again we use symbolic links to reuse DGPs for processes with identical cross-sections.