Abstract

Two of the elements of the Cabibbo–Kobayashi–Maskawa quark mixing matrix, \(|V_{ub}|\) and \(|V_{cb}|\), are extracted from semileptonic B decays. The results of the B factories, analysed in the light of the most recent theoretical calculations, remain puzzling, because for both \(|V_{ub}|\) and \(|V_{cb}|\) the exclusive and inclusive determinations are in clear tension. Further, measurements in the \(\tau \) channels at Belle, BaBar, and LHCb show discrepancies with the Standard Model predictions, pointing to a possible violation of lepton flavor universality. LHCb and Belle II have the potential to resolve these issues in the next few years. This article summarizes the discussions and results obtained at the MITP workshop held on April 9–13, 2018, in Mainz, Germany, with the goal of developing a medium-term strategy of analyses and calculations aimed at solving the puzzles. Lattice and continuum theorists working together with experimentalists have discussed how to reshape the semileptonic analyses in view of the much higher luminosity expected at Belle II, searching for ways to systematically validate the theoretical predictions in both exclusive and inclusive B decays, and to exploit the rich possibilities at LHCb.

1 Executive summary

The magnitudes of two of the elements of the Cabibbo–Kobayashi–Maskawa (CKM) quark mixing matrix [1, 2], \(|V_{ub}|\) and \(|V_{cb}|\), are extracted from semileptonic B-meson decays. The results of the B factories, analysed in the light of the most recent theoretical calculations, remain puzzling, because – for both \(|V_{ub}|\) and \(|V_{cb}|\) – the determinations from exclusive and inclusive decays are in tension by about 3\(\sigma \). Recent experimental and theoretical results reduce the tension, but the situation remains unclear. Meanwhile, measurements in the semitauonic channels at Belle, BaBar, and LHCb show discrepancies with the Standard Model (SM) predictions, pointing to a possible violation of lepton-flavor universality. LHCb and the upcoming experiment Belle II have the potential to resolve these issues in the next few years.

Thirty-five participants met at the Mainz Institute for Theoretical Physics to develop a medium-term strategy of analyses and calculations aimed at the resolution of these issues. Lattice and continuum theorists discussed with experimentalists how to reshape the semileptonic analyses in view of the much larger luminosity expected at Belle II and how to best exploit the new possibilities at LHCb, searching for ways to systematically validate the theoretical predictions, to confirm new physics indications in semitauonic decays, and to identify the kind of new physics responsible for the deviations.

1.1 Format of the workshop

The program ran over five days, allowing ample discussion time among the participants. Each of the five workshop days was devoted to specific topics: the inclusive and exclusive determinations of \(|V_{cb}|\) and \(|V_{ub}|\), semitauonic B decays and how they can be affected by new physics, as well as related subjects such as purely leptonic B decays and heavy quark masses. In the mornings, we had overview talks from the experimental and theoretical sides, reviewing the main aspects and summarizing the state of the art. In the late afternoon, we organized discussion sessions led by experts on the various topics, addressing questions that had been raised before or during the morning talks.

1.2 Exclusive heavy-to-heavy decays

The \(B\rightarrow D^{(*)}\ell \nu \) decays have received significant attention in the last few years. New Belle results for the \(q^2\) and angular distributions have allowed studies of the role played by the parametrization of the form factors in the extraction of \(|V_{cb}|\). It turns out that the extrapolation to zero recoil is very sensitive to the parametrization employed, a problem that can be solved only by precise calculations of the form factors at non-zero recoil. Until these are completed, the situation remains unclear. This also has repercussions on the calculation of \(R(D^*)\), where views diverge on the theoretical uncertainty of present estimates based on heavy quark effective theory (HQET) expressions.

Besides a critical reexamination of these recent developments, we discussed several incremental and qualitative improvements in lattice QCD, also in baryonic decays. Though unlikely to carry much weight in determining \(|V_{cb}|\), the latter offer great opportunities to test lepton-flavor universality violation (LFUV) and lattice QCD. The discussions also addressed the fact that QCD errors are now almost as small as effects from QED. Further theoretical improvement therefore requires a proper study of QED radiation, especially the treatment of soft photons and of photons that are neither soft nor hard, and their sensitivity to the meson wave functions.

Concerning studies of LFUV, we discussed the role played by higher excited charmed states in establishing new physics and the challenges that the present \(R(D^{(*)})\) measurements represent for model building.

1.3 Exclusive heavy-to-light decays

This determination of \(|V_{ub}|\) relies on nonperturbative calculations of the form factor of \(B\rightarrow \pi \ell \nu \), which is the most precise channel. We discussed the status of the light-cone sum rule (LCSR) calculations and several recent improvements in lattice QCD, in particular the most recent results from the Fermilab Lattice & MILC Collaborations and from the RBC & UKQCD Collaborations, as well as future prospects. The Fermilab/MILC calculation alone leads to a remarkably small total error on \(|V_{ub}|\), about \(4\%\). While at present the most precise extraction of \(|V_{ub}|\) comes from \(B\rightarrow \pi \ell \nu \), it is worth considering the channel \(B_s \rightarrow K\ell \nu \) as well, because here the lattice-QCD calculations are affected by somewhat smaller uncertainties. \(B_s \rightarrow K\ell \nu \) can be accessible at Belle II in a run at the \(\varUpsilon (5S)\) and a precision of about 5–10% could be achieved with \(1~\text {fb}^{-1}\). On the other hand, LHCb has an ongoing analysis of the ratio \(B(B_s \rightarrow K\ell \nu )/B(B_s\rightarrow D_s\ell \nu )\), which will provide a new determination of \(|V_{ub}/V_{cb}|\). This approach follows the success that LHCb demonstrated for semileptonic baryon decays via the precise measurement of the ratio \(B(\varLambda _b\rightarrow p\mu \nu )/B(\varLambda _b\rightarrow \varLambda _c \mu \nu )\) in the high-\(q^2\) region. This measurement, combined with precise lattice-QCD calculations of the form factors, allowed the extraction of the ratio \(|V_{ub}/V_{cb}|\) with an uncertainty of 7%. We also discussed other channels, in particular how to study \(B\rightarrow \pi \pi \ell \nu \) including the resonant structures. Careful studies of other heavy-to-light channels will also be crucial to improve the signal model for the inclusive \(|V_{ub}|\) measurements.

1.4 Inclusive heavy-to-heavy decays

The theoretical predictions in this case are based on an operator product expansion. Theoretical uncertainties already dominate current determinations, and better control of all higher-order corrections is needed to reduce them. In this respect, it would be important to have the perturbative-QCD corrections to the complete coefficient of the Darwin operator and to check the treatment of QED radiation in the experimental analyses. A full \(O(\alpha _s^3)\) calculation of the total width may be within reach with recently developed techniques. From the experimental point of view, new and more accurate measurements will be most welcome, in particular to better understand the correlations between different moments and moments with different cuts. A better determination of the higher hadronic mass moments and a first measurement of the forward–backward asymmetry would benefit the global fit, as would a better understanding of higher power corrections. The importance of having global fits to the moments in different schemes and by different groups has also been stressed. This calls for an update of the 1S scheme fit and could lead to a cross-check of the present theoretical uncertainties. Lattice QCD already provides inputs to the fit with the calculation of the heavy quark masses, which have been reviewed. New developments discussed at the workshop may soon be able to provide additional information that can be fed into the fits, such as constraints on the heavy-quark quantities \(\mu _\pi ^2\) and \(\mu _G^2\). The two main approaches are (i) computing inclusive rates directly with lattice QCD and (ii) using the heavy quark expansion for meson masses, precisely computed at different quark mass values. The state of theoretical calculations for inclusive semitauonic decays has also been discussed, as they represent an important cross-check of the LFUV signals.

1.5 Inclusive heavy-to-light decays

This determination is based on various well-founded theoretical methods, most of which agree well. The 2017 endpoint analysis by BaBar seems to challenge this consolidated picture, suggesting discrepancies between some of the methods and a lower value of \(|V_{ub}|\). For the future, the complete NNLO corrections in the full phase space should be implemented and the various methods should be upgraded in order to make the best use of the Belle II differential data based on much higher statistics. These data will make it possible to test the various methods and to calibrate them, as they will contain information on the shape functions. The SIMBA and NNVub methods seem to have the potential to fully exploit the \(B\rightarrow X_u \ell \nu \) (and possibly radiative) measurements through combined fits to the shape function(s) and \(|V_{ub}|\). The separation of \(B^\pm \) and \(B^0\) in the experimental analyses will certainly help to constrain weak annihilation, but the real added value of Belle II could be precise measurements of kinematic distributions in \(M_X\), \(q^2\), \(E_\ell \), etc. A detailed measurement of the high-\(q^2\) tail might be very useful, also in view of attempts to check quark-hadron duality. Experimentally, better hybrid (inclusive + exclusive) Monte Carlo simulations are badly needed; \(s{\bar{s}}\) popping should be investigated to develop a better understanding of kaon vetoes. The \(b\rightarrow c\) background will be measured better, which will benefit these analyses.

1.6 Leptonic decays

The measurement of \(B\rightarrow \tau \nu \) is not yet competitive with semileptonic decays for measuring \(|V_{ub}|\), because of a 20% error on the rate. Belle II will improve on this. The corresponding lattice-QCD calculation is however very precise, with an error below 1%, according to the 2019 report from FLAG [3] and based mainly on a result from Fermilab/MILC that was presented at the workshop. That said, the mode is useful today to model builders trying to understand new physics explanations of the tension between inclusive and exclusive determinations of \(|V_{ub}|\). Belle II will also access \(B\rightarrow \mu \nu (\gamma )\) with the possibility to reach an uncertainty on the branching fraction of about 5% with \(50~\text {ab}^{-1}\), allowing for a new determination of \(|V_{ub}|\) in the long term. We also discussed the LHCb contribution to leptonic decays with the process \(B\rightarrow \mu \mu \mu \nu _\mu \), where two of the muons come from virtual \(\gamma \) or light vector meson decays. A study of this channel has been published in [4] and a very stringent upper limit obtained, inconsistent with the existing branching fraction predictions, indicating the need for reliable theoretical calculations.

2 Heavy-to-heavy exclusive decays

The aim of this section is to present an overview of \(b\rightarrow c\) exclusive decays. After an introduction to the parametrization of the relevant form factors between hadronic states, we describe the status of current lattice QCD calculations with particular focus on \(B\rightarrow D^*\) and \(\varLambda _b\rightarrow \varLambda _c\). Next, we discuss experimental measurements of \(B\rightarrow D^{(*)}\) semileptonic decays with special focus on the ratios \(R(D^{(*)})\), and several phenomenological aspects of these decays: the extraction of \(|V_{cb}|\), theoretical predictions for \(R(D^{(*)})\), the role of \(B\rightarrow D^{**}\) transitions and constraints on new physics. We also briefly discuss the information that is required to reproduce results presented in experimental analyses and to incorporate older measurements into approaches based on modern form factor parametrizations. We conclude with the description of HAMMER, a tool designed to more easily calculate the change in signal acceptances, efficiencies and signal yields in the presence of new physics.

2.1 Parametrization of the form factors

In this section, we introduce the form factors for the hadronic matrix elements that arise in semileptonic decays. Several different notations appear in the literature, often using different conventions depending on whether the final-state meson is heavy (e.g., D) or light (e.g., \(\pi \)). A general decomposition relies, however, only on Lorentz covariance and other symmetry properties of the matrix elements. As discussed below, it is advantageous to choose the Lorentz structure so that the form factors have definite parity and spin.

In this spirit, let us consider the matrix elements for a meson decay \(B_{(l)}\rightarrow X^{(*)}\ell \nu \), where the quark content of the \({\bar{B}}\) is bl with l a light quark (u, d, or s), and the quark content of the X is \({\bar{q}}l\) where q can be either a light quark or the c quark. The desired decomposition can be written as

where \(q^\mu = (p - p')^\mu \) is the momentum transfer, \(S={\bar{b}}q\) is the scalar current, \(P={\bar{b}}\gamma ^5q\) is the pseudoscalar current, \(V^\mu = {\bar{b}}\gamma ^\mu q\) is the vector current, \(A^\mu = {\bar{b}}\gamma ^\mu \gamma ^5 q\) is the axial current, \(T^{\mu \nu } = {\bar{b}}\sigma ^{\mu \nu } q\) is the tensor current, \(m_q\) is the mass of the quark q, M is the mass of the parent meson (B in this case), m (without subscript) is the mass of the daughter meson, and \(r=m/M\). Contracting Eqs. (2.2) and (2.6) with \(q_\mu \) and using the appropriate Ward identities shows that the scalar form factor, \(f_0\), and pseudoscalar form factor, \(A_0\), appear in the vector and axial vector transitions. The \(J^P\) quantum numbers of the form factors are given in Table 1. The tensor form factors in Eqs. (2.3) and (2.7) appear in extensions of the Standard Model.

One can impose bounds on the shape of these form factors by using QCD dispersion relations for a generic decay \(H_b\rightarrow H_q\ell {\bar{\nu }}\). Since the amplitude for production of \(H_b H_q\) from a virtual W boson is determined by the analytic continuation of the form factors from the semileptonic region of momentum transfer \(m_\ell ^2< q^2 < (M - m)^2\) to the pair-production region \(q^2\ge (M + m)^2\), one can find constraints in the pair-production region, amenable to perturbative QCD calculations, and then propagate the constraint to the semileptonic region by using analyticity. The result of this process applied to the form factors is the model-independent Boyd–Grinstein–Lebed (BGL) parametrization [5, 6], which expands a form factor F(z) in the dimensionless variable z as

\[ F(z) = \frac{1}{B_F(z)\,\phi _F(z)} \sum _{n=0}^{\infty } a^F_n z^n, \tag{2.8} \]

with

\[ z(q^2, t_0) = \frac{\sqrt{t_+ - q^2} - \sqrt{t_+ - t_0}}{\sqrt{t_+ - q^2} + \sqrt{t_+ - t_0}}, \]

where \(t_\pm = (M\pm m)^2\), \(B_F(z)\) are known as the Blaschke factors, which incorporate the below- or near-threshold [7] poles in the s-channel process \(\ell \nu \rightarrow {\bar{B}}X\), and \(\phi _F(z)\) is called the outer function. The poles, and hence the Blaschke factor, depend on the spin and the parity of the intermediate state, which is why it is useful to use fixed \(J^P\) for the form factors. See Sect. 3.5.5 for more details. Of course, in practical applications the series (2.8) is truncated at some power \(z^{n_F}\).

By taking certain linear combinations of form factors with the same spin and parity one obtains the BGL notation for the helicity amplitudes,

leaving aside the (BSM) tensor form factors. Here the velocity transfer

\[ w = v_M\cdot v_m = \frac{M^2 + m^2 - q^2}{2Mm}, \]

with \(v_M=p/M\) and \(v_m=p'/m\), is often used in heavy-to-heavy decays. For heavy-to-light decays it can be helpful to work with the energy of the daughter meson in the rest frame of the parent, i.e.,

\[ E = \frac{M^2 + m^2 - q^2}{2M}. \]

These form factors are subject to three kinematic constraints, namely

where \(q^2_\text {max}=(M-m)^2\), corresponding to \(w=1\) and \(E=m\).

The variable z can also be expressed via w,

\[ z(w) = \frac{\sqrt{w+1} - \sqrt{2N}}{\sqrt{w+1} + \sqrt{2N}}, \]

where \(N=(t_+ - t_0)/(t_+ - t_-)\). The variable z is real for \(q^2 \le (M + m)^2\) and becomes a pure phase beyond that limit. The constant \(t_0\) defines the point at which \(z=0\). Often \(t_0 = t_-\), one end of the kinematic range, so z ranges from 0 at maximum \(q^2\) to \(z_\text {max}=(1-\sqrt{r})^2/(1+\sqrt{r})^2\) when \(m_\ell \approx 0\). Alternatively, the choice \(t_0=(M+m)(\sqrt{M}-\sqrt{m})^2\) sets \(z=0\) exactly in the middle of the kinematic range. Even for \(B\rightarrow \pi \ell \nu \), z is always a small quantity, which ensures a fast convergence of the power series defined in (2.8).
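To make the size of z concrete, the following minimal sketch evaluates the conformal variable at the two ends of the semileptonic range for both choices of \(t_0\) mentioned above. The meson masses are approximate values inserted here for illustration (they are not quoted in the text), the lepton mass is neglected, and the function names are hypothetical.

```python
import math

def z_of_q2(q2, M, m, t0):
    """Conformal variable z(q^2, t0) for parent mass M and daughter mass m (GeV)."""
    t_plus = (M + m) ** 2
    a = math.sqrt(t_plus - q2)
    b = math.sqrt(t_plus - t0)
    return (a - b) / (a + b)

def z_of_w(w, M, m, t0):
    """The same variable written in terms of w, with N = (t+ - t0)/(t+ - t-)."""
    t_plus, t_minus = (M + m) ** 2, (M - m) ** 2
    N = (t_plus - t0) / (t_plus - t_minus)
    return (math.sqrt(w + 1) - math.sqrt(2 * N)) / (math.sqrt(w + 1) + math.sqrt(2 * N))

def z_range(M, m, t0):
    """Return (z at q^2_max, z at q^2 = 0), neglecting the lepton mass."""
    return z_of_q2((M - m) ** 2, M, m, t0), z_of_q2(0.0, M, m, t0)

def t0_middle(M, m):
    """Choice of t0 that places z = 0 in the middle of the kinematic range."""
    return (M + m) * (math.sqrt(M) - math.sqrt(m)) ** 2

# Approximate meson masses in GeV: illustrative inputs, not taken from the text.
M_B, M_DSTAR, M_PI = 5.280, 2.010, 0.1396

for name, mass in [("B -> D*", M_DSTAR), ("B -> pi", M_PI)]:
    t_minus = (M_B - mass) ** 2
    print(name, "t0 = t-:", z_range(M_B, mass, t_minus),
          "| t0 middle:", z_range(M_B, mass, t0_middle(M_B, mass)))
```

With these inputs the maximum of z for \(t_0 = t_-\) is of order 0.06 for \(B\rightarrow D^*\) and of order 0.5 for \(B\rightarrow \pi \); the symmetric choice of \(t_0\) makes the range symmetric about zero and roughly halves the maximal \(|z|\).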

Unitarity constraints from the QCD dispersion relations are translated into constraints for the coefficients of the BGL expansion. In general,

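\[ \sum _{n=0}^{\infty } |a^F_n|^2 \le 1 \]

for the expansion coefficients \(a^F_n\) of each form factor F, but in the particular case of \({\bar{B}}\rightarrow D^*\ell {\bar{\nu }}\) the bound becomes

\[ \sum _{n=0}^{\infty } \left( |a^f_n|^2 + |a^{{\mathcal {F}}_1}_n|^2 \right) \le 1 \]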

for the f and \({\mathcal {F}}_1\) form factors, because they have the same quantum numbers. These bounds are known as the weak unitarity constraints.
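In a fit with a truncated series, the weak unitarity constraint reduces to a simple check on the sum of squared coefficients. The sketch below shows one way such a check could be written; the coefficient values are invented for illustration, and the helper names are hypothetical rather than taken from any existing fitting code.

```python
def weak_unitarity_sum(*coefficient_sets):
    """Sum of |a_n|^2 over one or more truncated lists of z-expansion coefficients."""
    return sum(abs(a) ** 2 for coeffs in coefficient_sets for a in coeffs)

def satisfies_weak_unitarity(*coefficient_sets):
    """True if the combined sum of squared coefficients does not exceed 1."""
    return weak_unitarity_sum(*coefficient_sets) <= 1.0

# Hypothetical truncated coefficients, chosen only to illustrate the check.
a_f = [0.012, 0.02, -0.3]
a_F1 = [0.002, -0.05, 0.4]

# f and F_1 share quantum numbers, so their coefficients enter a single bound.
print(weak_unitarity_sum(a_f, a_F1), satisfies_weak_unitarity(a_f, a_F1))
```

Passing several coefficient lists at once mirrors the combined bound for form factors with the same quantum numbers, while a single list gives the bound for an individual form factor.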

A modification of the BGL parametrization by Bourrely, Caprini and Lellouch (BCL) [8] is often chosen in analyses of heavy-to-light decays. The BCL parametrization improves BGL by fixing two artifacts of the truncated BGL series. In particular, it removes an unphysical singularity at the pair production threshold and corrects the large \(q^2\) behavior (see [9, 10]) in the functional form. These two modifications improve the convergence of the expansion. However, the kinematic range is much more constrained in the heavy-to-heavy case, and lies farther from both the production threshold and the large \(q^2\) region. Therefore, neither far singularities nor an incorrect asymptotic behavior is expected to spoil the z-expansion in that case.

In the heavy-to-heavy case, one can sharpen the weak unitarity constraints on the BGL coefficients using heavy quark symmetry (HQS) which relates the different \(B^{(*)}\rightarrow D^{(*)}\ell {{\bar{\nu }}}\) channels and their form factors: each form factor is either proportional to the Isgur–Wise function \(\xi (w)\) or zero. Using heavy quark effective theory (HQET) one can improve the precision by introducing radiative and power (i.e. in inverse powers of the heavy masses) corrections. Then we can define any form factor in such a way that it admits the expansion in both \(\alpha _s\) and the heavy quark masses

These expansions can be used to link the z expansion coefficients of different form factors, leading to the so-called strong unitarity constraints [11, 12]. The power corrections depend on subleading Isgur–Wise functions that have been estimated with QCD sum rules [13,14,15].

Previous analyses of \(B\rightarrow D^*\ell \nu \) have used the Caprini–Lellouch–Neubert (CLN) parametrization [11]. CLN employ a notation for the form factors that satisfies (2.24),

where the letter naming the form factor (S, P, V and A) encodes its quantum numbers (scalar, pseudoscalar, vector and axial vector), and \(R^\text {CLN}_{1,2}\) are two convenient ratios of form factors. Sometimes the ratio \(R^\text {CLN}_0 = P^\text {CLN}_1/A^\text {CLN}_1\) is considered.

In the CLN parametrization the strong unitarity constraints obtained with HQET at NLO are used to remove some of the coefficients of the z expansion. Further, specific numerical coefficients are introduced in a polynomial in w for \(R_{1,2}^\text {CLN}\). The numerical values were determined using information available in 1997, which has been partly superseded but not updated. The numerical values also omit error estimates (which were discussed in the original CLN paper [11], although in an optimistic manner) because at the time the experimental statistical errors dominated, which is no longer the case. A consensus of the workshop recommends that CLN no longer be used, certainly not unless the numerical coefficients have been updated and the ensuing theoretical uncertainties are accounted for. It is better to use a general form of the z expansion.

HQET naturally presents another basis for the form factors of the \({\bar{B}}\rightarrow D^{(*)}\ell {\bar{\nu }}\) processes. Using velocities instead of momenta and otherwise mimicking the Lorentz structure of Eqs. (2.2), (2.5), and (2.6), the notation is \(h_+\) and \(h_-\) for \({\bar{B}}\rightarrow D\ell {\bar{\nu }}\), and \(h_V\) and \(h_{A_{1,2,3}}\) for \({\bar{B}}\rightarrow D^{*}\ell {\bar{\nu }}\). In the heavy quark limit, these form factors tend to

for \(X=+\), \(A_1\), \(A_3\), V, and

with \(Y=-\), \(A_2\). Here \(\eta (\alpha _s) = 1 + O(\alpha _s)\), while \(\beta (\alpha _s) = O(\alpha _s)\). In this representation, the identities expressed in Eqs. (2.18)–(2.20) become evident.

Finally, for the case of a baryonic decay \(\varLambda _b\rightarrow Y_{(q)}\ell \nu \), with \(Y=p,\varLambda _c\), we define

where M is the mass of the \(\varLambda _b\), m is the mass of the daughter baryon and \(s_\pm = (M\pm m)^2 - q^2\). The z expansions for the baryonic form factors employed in Ref. [16] use trivial outer functions and do not impose unitarity bounds on the coefficients of the expansion. As a result, the coefficients are unconstrained and reach values as high as \(\sim 10\). See also Sect. 3.5.5.

2.2 Heavy-to-heavy form factors from lattice QCD

The lattice QCD calculation of the form factors for the semileptonic decay of a hadron uses two- and three-point correlation functions, which are constructed from valence quark propagators obtained by solving the Dirac equation on a set of gluon field configurations. Averaging the correlation functions over the gluon field configurations then yields the appropriate Feynman path integral. The two-point correlation functions give the amplitude for a hadron to be created at the time origin and then destroyed at a time T. The three-point correlation functions include the insertion of a current J at time t on the active quark line, changing the active quark from one flavor to another. Usually calculations are performed with the initial hadron at rest. Momentum is inserted at the current so that a range of momentum transfer, q, from initial to final hadron can be mapped out.

The three-point correlation functions (for multiple q values) and the two-point correlation functions (with multiple momenta in the case of the final-state hadron) are fit as functions of t and T to determine the matrix elements of the currents between initial and final hadrons that yield the required form factors. An important point here is that the initial and final hadrons that we focus on are the ground-state particles in their respective channels. However, terms corresponding to excited states must be included in the fits in order to make sure that systematic effects from excited-state contamination are taken into account in the fit parameters that yield the ground-state to ground-state matrix element of J and hence the form factors.
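The role of excited states in such fits can be illustrated with a toy two-point correlator consisting of a ground state plus one excited state. This is only a sketch: the amplitudes and energies (in lattice units) are invented, and real analyses fit correlated Monte Carlo data rather than an exact two-exponential form.

```python
import math

def two_point(T, A0=1.0, E0=0.5, A1=0.6, E1=0.9):
    """Toy two-point correlator: ground state (A0, E0) plus one excited state (A1, E1)."""
    return A0 * math.exp(-E0 * T) + A1 * math.exp(-E1 * T)

def effective_mass(T, **params):
    """Effective mass log[C(T)/C(T+1)], which tends to E0 at large T."""
    return math.log(two_point(T, **params) / two_point(T + 1, **params))

# The excited state shifts the effective mass above E0 at small T;
# the shift dies off as exp(-(E1 - E0) T).
for T in (1, 2, 4, 8, 16, 30):
    print(T, effective_mass(T))
```

In this toy model the effective mass approaches the ground-state energy from above, which is why fits restricted to small T without excited-state terms would bias the extracted ground-state parameters.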

Statistical uncertainties in the form factors obtained obviously depend on the numbers of samples of gluon-field configurations on which correlation functions are calculated. To improve statistical accuracy further, calculations usually include multiple positions of the time origin for the correlation functions on each configuration. The numerical cost of the calculation of quark propagators falls as the quark mass increases and so heavy (b and c) quark propagators are typically numerically inexpensive. The accompanying light quark propagators for heavy-light hadrons are much more expensive, especially if u/d quarks with physically light masses are required. It is this cost that limits the statistical accuracy that can be obtained, especially since the statistical uncertainty for a heavy-light hadron correlation function (on a given number of gluon field configurations) also grows as the separation in mass between the heavy and light quarks increases.

A key issue for heavy-to-heavy (b to c) form factor calculations is how to handle heavy quarks on the lattice. Discretization of the Dirac equation on a space-time lattice gives systematic discretization effects that depend on powers of the quark mass in lattice units. The size of these effects depends on the value of the lattice spacing and the power with which the effects appear (i.e., the level of improvement used in the lattice Lagrangian).

Since the b quark is so heavy, its mass in lattice units will be larger than 1 on all but the finest lattices (\(a < \) 0.05 fm) currently in use. Highly-improved discretizations of the Dirac equation are needed to control the discretization effects. A good example of such a lattice quark formalism is the highly improved staggered quark (HISQ) action developed by HPQCD [17] for both light and heavy quarks with discretization errors appearing at \(O(\alpha _s(am)^2)\) and \(O((am)^4)\). An alternative approach is to make use of the fact that b quarks are nonrelativistic inside their bound states. This means that a discretization of a nonrelativistic action (NRQCD) can be used, expanding the action to some specified order in the b quark velocity. Discretization effects then depend on the scales associated with the internal dynamics and these scales are all much smaller than the b quark mass. Relativistic effects can be included and discretization effects corrected at the cost of complicating the action with additional operators. A third possibility is to start from the Wilson quark action and improved versions of it but to tune the parameters (such as the quark mass) using a nonrelativistic dispersion relation for the meson, which is known as the Fermilab method [18]. This removes the leading source of mass-dependent discretization effects, while retaining a discretization that connects smoothly to the continuum limit. Again, improved versions of this approach (such as the Oktay–Kronfeld action [19]) include additional operators.

The c quark has a mass larger than \(\varLambda _{\mathrm {QCD}}\) but within lattice QCD it can be treated successfully as a light quark because its mass in lattice units is less than 1 on lattices in current use (with \(a < 0.15\) fm). This means that, although discretization effects are visible in lattice QCD calculations with c quarks, they are not large and can easily be extrapolated away accurately for a continuum result. For example, discretization effects are less than 10% at \(a=0.15\) fm in calculations of the decay constant of the \(D_s\) using the HISQ action [20]. Purely nonrelativistic approaches to the c quark are therefore not useful on the lattice. There can be some advantage for b-to-c form factor calculations in using the same action for b and c, however, as we discuss below.
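The statements about quark masses in lattice units can be checked with a rough conversion, \(am = m\,a/(\hbar c)\). The sketch below uses approximate \(\overline{\text {MS}}\) quark masses as a stand-in for the bare lattice masses, which is an assumption made purely for illustration; the lattice spacings are representative values, and the function name is hypothetical.

```python
HBAR_C_GEV_FM = 0.1973269804  # conversion constant hbar*c in GeV fm

def mass_in_lattice_units(mass_gev, a_fm):
    """Dimensionless product a*m for a mass in GeV and a lattice spacing in fm."""
    return mass_gev * a_fm / HBAR_C_GEV_FM

# Approximate MS-bar masses in GeV, used only as a rough proxy for lattice masses.
M_B_QUARK, M_C_QUARK = 4.18, 1.27

for a_fm in (0.15, 0.09, 0.06, 0.045):
    print(a_fm,
          "a*m_b =", round(mass_in_lattice_units(M_B_QUARK, a_fm), 3),
          "a*m_c =", round(mass_in_lattice_units(M_C_QUARK, a_fm), 3))
```

Under these assumptions \(am_c\) stays below 1 even at \(a=0.15\) fm, whereas \(am_b\) drops below 1 only for lattice spacings somewhat finer than 0.05 fm, in line with the discussion above.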

Because lattice and continuum QCD regularize the theory in a different way, the lattice current J needs a finite renormalization factor to match its continuum counterpart so that matrix elements of J, and form factors derived from them, can be used in continuum phenomenology. For NRQCD and Wilson/Fermilab quarks the current J must be normalized using lattice QCD perturbation theory. Since this is technically rather challenging it has only been done through \(O(\alpha _s)\) and this leaves a sizeable (possibly several percent) systematic error from missing higher-order terms in the perturbation theory. If Wilson/Fermilab quarks are used for both b and c quarks, then arguments can be made about the approach to the heavy-quark limit that can reduce, but not eliminate, this uncertainty [21].

Relativistic treatments of the b and c quarks have a big advantage here, because J can generally be normalized in a fully nonperturbative way within the lattice QCD calculation and without additional systematic errors. The advantages of this approach were first demonstrated by the HPQCD collaboration using the HISQ action to determine the decay constant of the \(B_s\) [22]. The HISQ PCAC relation normalizes the axial-vector current in this case. Calculations for multiple quark masses on lattices with multiple values of the lattice spacing allow both the physical dependence of the decay constant on quark mass and the dependence of the discretization effects to be mapped out so that the physical result at the b quark mass can be determined. This calculation has now been updated and extended to the B meson by the Fermilab Lattice and MILC collaborations [23], achieving better than 1% uncertainty. HPQCD is now carrying out a similar approach to b-to-c form factor calculations [24], and the JLQCD collaboration is also working in that direction [25] with Möbius domain-wall quarks.

An equivalent approach, using ratios of hadronic quantities at different quark masses where normalization factors cancel, has been developed by the European Twisted Mass collaboration using the twisted-mass action [26, 27] for Wilson fermions.

2.2.1 \(B \rightarrow D^{(*)}\) form factors from lattice QCD

Early lattice QCD calculations of \(B\rightarrow D\) form factors were limited to the determination of \({\mathcal {G}}^{B\rightarrow D} (w) = \sqrt{4r}\, f_+ (q^2)/(1+r)\) (with notation defined near (2.16)) at the zero-recoil point \(w=1\). Results include the \(N_f=2+1\) calculation of Fermilab/MILC [28, 29] and the \(N_f=2\) calculation of Atoui et al. [30]. More recently Fermilab/MILC [31] and HPQCD [32, 33] have presented \(N_f=2+1\) calculations of the \(B\rightarrow D\) form factor at non-zero recoil based on partially overlapping subsets of the same MILC asqtad (\(a^2\) tadpole improved) ensembles.

The Fermilab/MILC calculation [31] uses configurations with four different lattice spacings and with pion masses in the range [260, 670] MeV. The bottom and charm quarks are implemented in the Fermilab approach. The form factors \(f_{+,0}^{B\rightarrow D}(w)\) are extracted from double ratios of three-point functions up to a matching factor, which is calculated at one loop in lattice perturbation theory. The results are presented in terms of three synthetic data points, which can subsequently be fitted using any form factor parametrization. The systematic uncertainty due to the joint continuum-chiral extrapolation is about 1.2% and dominates the error budget.

The HPQCD calculations [32, 33] rely on ensembles with two different lattice spacings and two/three light-quark mass values, respectively. The treatment of heavy quarks is different from that used in the Fermilab/MILC papers: the bottom quark is described in NRQCD and the charm quark using HISQ. The form factors are extracted from appropriate three-point functions and the results are presented in terms of the parameters of a modified BCL z expansion that incorporates dependence on lattice spacing and light-quark masses into the expansion coefficients.

In order to combine the Fermilab/MILC and HPQCD results [3], it is necessary to generate a set of synthetic data which is (almost exactly) equivalent to the HPQCD calculation. The two sets of synthetic data can then be combined while taking into account the correlation due to the fact that Fermilab/MILC and HPQCD share MILC asqtad configurations. As mentioned above, the dominant uncertainties are of a systematic nature, implying that this correlation (whose estimate is rather uncertain) is a subdominant effect. A simultaneous fit of Fermilab/MILC and HPQCD synthetic data together with the available Belle and BaBar data yields a determination of \(|V_{cb}|\) with an overall 2.5% uncertainty (dominated by the experimental error, which contributes about 2% to the total error).

Finally, both collaborations present values for both the \(f_+\) and \(f_0\) form factors, which allow for a lattice only calculation of the SM prediction for R(D). The uncertainty on the Fermilab/MILC and HPQCD combined determination of R(D), without experimental input, is about 2.5% and is negligible compared to current experimental errors.

The advantage of an approach in which currents can be nonperturbatively normalized has been demonstrated by HPQCD for \(B_s \rightarrow D_s\) form factors in [34]. They use the HISQ action for all quarks, extending the method developed for decay constants. The range of heavy quark masses can be increased on successively finer lattices (keeping the value in lattice units below 1) until the full range from c to b is reached. The full \(q^2\) range of the decay can also be covered by this method since the spatial momentum of the final state meson (which should also be less than 1 in lattice units) grows in step with the heavy meson/quark mass. Results from [34] improve on the uncertainties obtained in [33] with NRQCD b quarks and this promising all-HISQ approach is now being extended to other processes. It is interesting to observe that the \(B_s\rightarrow D_s\) form factors are very close to the \(B\rightarrow D\) form factors over the entire kinematic range, see also [35, 36].

Calculations of \(B\rightarrow D^*\) form factors at non-zero recoil are considerably more involved due to difficulties in describing the resonant \(D^*\rightarrow D \pi \) decay. Up to now, lattice QCD simulations have focused on the single \(B \rightarrow D^*\) form factor that contributes to the rate at zero recoil, \(A_1(q^2_\mathrm{max})\). The quantity generally quoted is \(h_{A_1}(1)\) where

\[h_{A_1}(1) = \frac{M_B + M_{D^*}}{2\sqrt{M_B M_{D^*}}}\, A_1(q^2_\mathrm{max}).\]

The combination of the lattice QCD result and the experimental rate, extrapolated to zero recoil, yields a value for \(V_{cb}\).

The Fermilab Lattice/MILC Collaborations have achieved the highest precision for this result so far [37]. They use improved Wilson quarks within the Fermilab approach for both b and c quarks and work on gluon field configurations that include u/d (with equal mass) and s quarks in the sea (\(n_f=2+1\)) using the asqtad action. By taking a ratio of three-point correlation functions they are able simultaneously to improve their statistical accuracy and reduce part of the systematic uncertainty from the normalization of their current operator. Their result is \(h_{A_1}(1)=0.906(4)(12)\) where the uncertainties are statistical and systematic respectively. Their systematic error is dominated by discretization effects. They take the systematic uncertainty from missing higher-order terms in the perturbative current matching [38] to be \(0.1\alpha _s^2\).

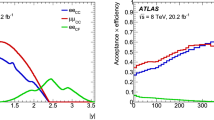

The HPQCD collaboration have calculated \(h_{A_1}(1)\) on gluon field configurations that include \(n_f=2+1+1\) HISQ sea quarks using NRQCD b quarks and HISQ c quarks [39]. Their result, \(h_{A_1}(1) = 0.895(10)(24)\) has a larger uncertainty, dominated by the systematic uncertainty of \(0.5\alpha _s^2\) allowed for in the current matching. They were also able to calculate the equivalent result for \(B_s \rightarrow D_s^*\), obtaining \(h^s_{A_1}(1) = 0.879(12)(26)\) and demonstrating that the dependence on light quark mass is small. The \(B_s \rightarrow D_s^*\) provides a better lattice QCD comparison point than \(B \rightarrow D^*\) because it has less sensitivity to light quark masses (in particular the \(D^*D\pi \) “cusp”) and to the volume. More recently the HPQCD collaboration have used the HISQ action for all quarks, with a fully nonperturbative current normalization, to determine \(h^s_{A_1}(1)\) [24]. Their result, \(h^s_{A_1}(1) = 0.9020(96)(90)\) agrees well with the earlier results and has smaller systematic uncertainties. Figure 1 compares the three results.

Plot taken from Ref. [24] showing the comparison of lattice QCD results for \(h_{A_1}(1)\) (left side) and \(h^s_{A_1}(1)\) (right side). Raw results for \(h_{A_1}(1)\) are from [39] and [37] and are plotted as a function of valence (= sea) light quark mass, given by the square of \(M_{\pi }\). On the right are points for \(h^s_{A_1}(1)\) from [39] plotted at the appropriate valence mass for the s quark, but obtained at physical sea light quark masses. The final result for \(h_{A_1}(1)\) from [37], with its full error bar, is given by the inverted blue triangle. The inverted red triangles give the final results for \(h_{A_1}(1)\) and \(h^s_{A_1}(1)\) from [39]. The HPQCD results of [24] are given by the black stars
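As a rough numerical companion to this comparison, the sketch below adds the quoted statistical and systematic errors in quadrature and expresses the differences between results in units of the combined error. It ignores any correlation between the calculations, which is an assumption made only for illustration.

```python
import math

# (central value, statistical error, systematic error) as quoted in the text.
RESULTS = {
    "h_A1(1) Fermilab/MILC [37]": (0.906, 0.004, 0.012),
    "h_A1(1) HPQCD NRQCD [39]": (0.895, 0.010, 0.024),
    "hs_A1(1) HPQCD NRQCD [39]": (0.879, 0.012, 0.026),
    "hs_A1(1) HPQCD HISQ [24]": (0.9020, 0.0096, 0.0090),
}


def total_error(result):
    """Statistical and systematic errors combined in quadrature."""
    _, stat, syst = result
    return math.hypot(stat, syst)


def pull(a, b):
    """Difference a - b in units of the combined error (correlations ignored)."""
    return (a[0] - b[0]) / math.hypot(total_error(a), total_error(b))


if __name__ == "__main__":
    for name, res in RESULTS.items():
        print(f"{name}: {res[0]:.4f} +/- {total_error(res):.4f}")
    print("h_A1 pull:", round(pull(RESULTS["h_A1(1) Fermilab/MILC [37]"],
                                   RESULTS["h_A1(1) HPQCD NRQCD [39]"]), 2))
    print("hs_A1 pull:", round(pull(RESULTS["hs_A1(1) HPQCD HISQ [24]"],
                                    RESULTS["hs_A1(1) HPQCD NRQCD [39]"]), 2))
```

Both differences come out well below one combined standard deviation, consistent with the statement that the results agree.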

The importance of being able to compare lattice QCD and experiment away from the zero recoil point is now clear and several lattice QCD calculations are underway, attempting to cover the full \(q^2\) range of the decay and all 4 form factors. This includes calculations for \(B \rightarrow D^*\) from JLQCD [25] with Möbius domain-wall quarks, Fermilab/MILC [40] (see also talk at Lattice 2019) with improved Wilson/Fermilab quarks and LANL/SWME with an improved version of this formalism known as the Oktay–Kronfeld action [41]. Calculations for other \(b\rightarrow c\) pseudoscalar-to-vector form factors, \(B_s \rightarrow D^*_s\) [42] and \(B_c \rightarrow (J/\psi ,\eta _c)\) are also underway from HPQCD [43, 44] using the all-HISQ approach. At the same time further \(B \rightarrow D\) and \(B_s \rightarrow D_s\) form factor calculations are in progress, including those using a variant of the Fermilab approach known as relativistic heavy quarks on RBC/UKQCD configurations [45]. In future we should be able to compare results from multiple actions with experiment for improved accuracy in determining \(|V_{cb}|\).

2.2.2 \(\varLambda _b \rightarrow \varLambda _c^{(*)}\) form factors from lattice QCD

The \(\varLambda _b \rightarrow \varLambda _c\) form factors have been calculated with \(2+1\) dynamical quark flavors; the vector and axial vector form factors can be found in Ref. [16], while the tensor form factors (which contribute to the decay rates in many new-physics scenarios) were added in Ref. [46]. This calculation used two different lattice spacings of approximately 0.11 fm and 0.08 fm, sea quark masses corresponding to pion masses in the range from 360 down to 300 MeV, and valence quark masses corresponding to pion masses in the range from 360 down to 230 MeV. The lattice data for the form factors, which cover the kinematic range from near \(q^2_{\mathrm{max}}\approx 11\,\mathrm{GeV}^2\) down to \(q^2\approx 7\,\mathrm{GeV}^2\), were fitted with a modified version of the BCL z expansion [8] discussed in Sect. 2.1, where simultaneously to the expansion in z, an expansion in powers of the lattice spacing and quark masses is performed. No dispersive bounds were used in the z expansion here (this is something that can perhaps be improved in the future, see also Sect. 3.5.5). The form factors extrapolated to the continuum limit and physical pion mass yield the following Standard Model predictions:

for the fully integrated decay rate, which has a total uncertainty of 6.3% (corresponding to a 3.2% theory uncertainty in a possible \(|V_{cb}|\) determination from this decay rate),

for the partially integrated decay rate, which has a total uncertainty of 4.5% (corresponding to 2.3% for \(|V_{cb}|\)), and

for the lepton-flavor-universality ratio, which has a total uncertainty of 3.1%. The systematic uncertainties of the vector and axial vector form factors are dominated by finite-volume effects and the chiral extrapolation. Both of these can be reduced substantially in the future by adding a new lattice gauge field ensemble with physical light-quark masses and a large volume, and dropping the “partially quenched” data sets that have \(m_\pi ^{(\mathrm {val})}<m_\pi ^{(\mathrm {sea})}\). Adding another ensemble at a third, finer lattice spacing will also be beneficial to better control the continuum extrapolation.
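The relation between the rate uncertainties and the corresponding \(|V_{cb}|\) uncertainties quoted above follows from \(\varGamma \propto |V_{cb}|^2\): to first order, the relative uncertainty on \(|V_{cb}|\) is half that of the rate. A minimal sketch of this arithmetic, using only the percentages given in the text:

```python
def vcb_rel_uncertainty(rate_rel_uncertainty):
    """First-order relative uncertainty on |V_cb| when Gamma is proportional to |V_cb|^2."""
    return 0.5 * rate_rel_uncertainty


if __name__ == "__main__":
    for label, rate_unc in (("fully integrated", 6.3), ("partially integrated", 4.5)):
        print(f"{label}: rate {rate_unc:.1f} %  ->  |V_cb| {vcb_rel_uncertainty(rate_unc):.2f} %")
```

The results, 3.15% and 2.25%, match the quoted 3.2% and 2.3% up to rounding of the inputs.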

At this workshop, there was some discussion about the validity of the modified z expansion; it has been argued that it would be safer to first perform chiral/continuum extrapolations and then perform a secondary z expansion fit. This is expected to make a difference mainly if nonanalytic quark-mass dependence from chiral perturbation theory is included. However, the fits used in Ref. [16] for the \(\varLambda _b\) form factors were analytic in the lattice spacing and light-quark mass. Note that the shape of the \(\varLambda _b \rightarrow \varLambda _c\, \mu ^- {\bar{\nu }}_\mu \) differential decay rate was later measured by LHCb, and found to be in good agreement with the lattice QCD prediction all the way down to \(q^2=0\) [47].

Motivated by the prospect of an LHCb measurement of \(R(\varLambda _c^*)\), work is now also underway to compute the \(\varLambda _b \rightarrow \varLambda _c^{*}\) form factors in lattice QCD, for the \(\varLambda _c^*(2595)\) and \(\varLambda _c^*(2625)\), which have \(J^P=\frac{1}{2}^-\) and \(J^P=\frac{3}{2}^-\), respectively. Preliminary results were shown at the workshop. For these form factors, the challenge is that, to project the \(\varLambda _c^*\) interpolating field exactly to negative parity and avoid contamination from the lower-mass positive parity states, one needs to perform the lattice calculation in the \(\varLambda _c^*\) rest frame. With the b-quark action currently in use, discretization errors growing with the \(\varLambda _b\) momentum then limit the accessible kinematic range to a small region near \(q^2_{\mathrm{max}}\). To predict \(R(\varLambda _c^*)\), it will be necessary to combine the lattice QCD results for the form factors in the high-\(q^2\) region with heavy-quark effective theory and LHCb data for the shapes of the \(\varLambda _b \rightarrow \varLambda _c^*\, \mu ^-{\bar{\nu }}_\mu \) differential decay rates [48].

2.3 Measurements of \(B \rightarrow D^{(*)} \ell \nu \) and related processes

2.3.1 Measurements with light leptons

The decays \(B\rightarrow D^*\ell \nu \) and \(B\rightarrow D\ell \nu \) have been measured at Belle and BaBar as well as at older experiments (CLEO, LEP). Unfortunately, most of these measurements assume the Caprini–Lellouch–Neubert parametrization of the form factors (see Sect. 2.1) and report results in terms of \(|V_{cb}|\) times the only form factors relevant at the zero-recoil point \(w=1\), namely \({\mathcal {F}}(1)\equiv h_{A_1}(1)\) for \(B\rightarrow D^*\ell \nu \) and \({\mathcal {G}}(1)\equiv [2\sqrt{M_D M_B}/(M_D + M_B)]\,f_+(1)\) for \(B\rightarrow D\ell \nu \), and of the other CLN parameters, instead of a general form of the z expansion or the raw spectra. The heavy flavor averaging group (HFLAV) has performed an average of these CLN measurements [49] and reports

Notice that Eq. (2.40) together with \(h_{A_1}(1)=0.904(12)\) [3] leads to the low value \(|V_{cb}|=38.76(69)\times 10^{-3}\). Eq. (2.41) together with \({\mathcal {G}}(1)=1.0541(83)\) [31] leads to a consistent result \(|V_{cb}|=39.58(99)\times 10^{-3}\). In the case of \(B\rightarrow D\ell \nu \) one can also use the existing lattice calculations at non-zero recoil [31, 32] to guide the extrapolation to zero recoil, together with the w spectrum measured by Belle [50]. In the BGL parametrization, this leads to a higher value, \(|V_{cb}|=40.83(1.13)\times 10^{-3}\), a more reliable determination than (2.41). In the following we will have a closer look at the most recent measurements by the various experiments.

Belle has recently updated the untagged measurement of the \(B^0\rightarrow D^{*-}\ell ^+\nu \) mode [51]. While the new analysis is based on the same 711 \(\hbox {fb}^{-1}\) Belle data set, the re-analysis takes advantage of a major improvement of the track reconstruction software, which was implemented in 2011, leading to a substantially higher slow pion tracking efficiency and hence to much larger signal yields than in the previous publication [52]. Again \(D^{*+}\) mesons are reconstructed in the cleanest mode, \(D^{*+}\rightarrow D^0\pi ^+\) followed by \(D^0\rightarrow K^-\pi ^+\), combined with a charged, light lepton (electron or muon), and yields are extracted in 10 bins for each of the 4 kinematic variables describing the \(B^0\rightarrow D^{*-}\ell ^+\nu \) decay. These yields are published along with their full error matrix. The updated publication also contains an analysis of these yields using both the CLN and the BGL form factors (where BGL has only 5 free parameters). The CLN analysis results in \(\eta _\mathrm {EW}{\mathcal {F}}(1)|V_{cb}|=(35.06\pm 0.15(\mathrm{stat})\pm 0.56(\mathrm{syst}))\times 10^{-3}\), while the BGL fit gives \(\eta _\mathrm {EW}{\mathcal {F}}(1)|V_{cb}|=(34.93\pm 0.23(\mathrm{stat})\pm 0.59(\mathrm{syst}))\times 10^{-3}\). The two results are thus fully consistent. This contrasts with a tagged measurement of \(B^0\rightarrow D^{*-}\ell ^+\nu \) first shown by Belle in November 2016 [53]. Analyzing the raw data of this measurement in terms of the CLN and BGL form factors gives a difference of almost two standard deviations in \(|V_{cb}|\) [54, 55]. However, this result has remained preliminary and will not be published. A new tagged analysis, using an improved version of the hadronic tag, is now underway and should clarify the experimental situation.
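To make the step from \(\eta _\mathrm {EW}{\mathcal {F}}(1)|V_{cb}|\) to \(|V_{cb}|\) concrete, the sketch below divides the two Belle fit results by \(\eta _\mathrm {EW}\,h_{A_1}(1)\), using \(h_{A_1}(1)=0.904(12)\) as quoted earlier from [3]. The value \(\eta _\mathrm {EW}=1.0066\) is an assumption (a commonly used number, not stated in this section), and the simple quadrature error propagation is illustrative, so the output need not coincide with the values published in [51].

```python
import math

ETA_EW = 1.0066        # assumed electroweak correction factor (not given in this section)
H_A1 = (0.904, 0.012)  # lattice value h_A1(1) quoted in the text from [3]

# Belle untagged fits [51]: eta_EW * F(1) * |V_cb| in units of 1e-3 (value, stat, syst).
FITS = {"CLN": (35.06, 0.15, 0.56), "BGL": (34.93, 0.23, 0.59)}


def vcb_from_product(product, stat, syst, h=H_A1, eta=ETA_EW):
    """Return (|V_cb|, total error) in units of 1e-3, adding relative errors in quadrature."""
    value = product / (eta * h[0])
    rel = math.sqrt((stat / product) ** 2 + (syst / product) ** 2 + (h[1] / h[0]) ** 2)
    return value, value * rel


if __name__ == "__main__":
    for name, (prod, stat, syst) in FITS.items():
        vcb, err = vcb_from_product(prod, stat, syst)
        print(f"{name}: |V_cb| = ({vcb:.2f} +/- {err:.2f}) x 10^-3")
```

Under these assumptions the lattice input and the experimental systematic error contribute at a similar level to the total uncertainty.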

Babar has presented a full four-dimensional angular analysis of \(B^0\rightarrow D^{*0}\ell ^-\nu _\ell \) decays, using both CLN and BGL parametrizations [56]. This analysis is based on the full data set of 450 \(\hbox {fb}^{-1}\), and exploits the hadronic B-tagging approach. The full decay chain \(e^+e^-\rightarrow \varUpsilon (4S)\rightarrow B_\mathrm{tag} B_\mathrm{sig}(\rightarrow D^*\ell \nu _\ell )\) is considered in a kinematic fit that includes constraints on the beam properties, the secondary vertices, the masses of \(B_\mathrm{tag}\), \(B_\mathrm{sig}\), \(D^*\) and the missing neutrino. After applying requirements on the probability of the \(\chi ^2\) of this constrained fit, which is the main discriminating variable, the remaining background is only about \(2\%\) of the sample. The resolution on the kinematic variables is about a factor five better than the one possible with untagged measurements. The shape of the form factors is extracted using an unbinned maximum likelihood fit where the signal events are described by the four dimensional differential decay rate. The extraction of \(|V_{cb}|\) is performed indirectly by adding to the likelihood the constraint that the integrated rate \(\varGamma ={\mathcal {B}}/\tau _B\), where \({\mathcal {B}}\) is the \(B\rightarrow D^*\ell \nu \) branching fraction and \(\tau _B\) is the B-meson lifetime. The values of these external inputs are taken from HFLAV [49]. The final result, using \(h_{A_1}(1)\) from [37], is \(|V_{cb}|=(38.36\pm 0.90)\times 10^{-3}\) with a 5-parameter BGL version and \(|V_{cb}|=(38.40\pm 0.84)\times 10^{-3}\) in the CLN case, both compatible with the above HFLAV average. Nevertheless, the individual form factors show significant deviations from the world average CLN determination by HFLAV.

LHCb has extracted \(V_{cb}\) from semileptonic \(B_s^0\) decays for the first time [57]. The measurement uses both \(B_s^0\rightarrow D_s^{-}\mu ^+\nu _{\mu }\) and \(B_s^0\rightarrow D_s^{*-}\mu ^+\nu _{\mu }\) decays using 3 \(\hbox {fb}^{-1}\) collected in 2011 and 2012. The value of \(|V_{cb}|\) is determined from the observed yields of \(B_s^0\) decays normalized to those of \(B^0\) decays after correcting for the relative reconstruction and selection efficiencies. The normalization channels are \(B^0\rightarrow D^-\mu ^+\nu _{\mu }\) and \(B^0\rightarrow D^{*-}\mu ^+\nu _{\mu }\) with the \(D^-\) reconstructed with the same decay mode as the \(D_s\), (\(D_{(s)}^-\rightarrow [K^+K^-]_{\phi }\pi ^-\)), to minimize the systematic uncertainties. The shapes of the form factors are extracted as well, exploiting the kinematic variable \(p_{\perp }(D_s)\) which is the component of the \(D_s^-\) momentum perpendicular to the \(B_s^0\) flight direction. This variable is correlated with \(q^2\). In this analysis both the CLN parametrization and a 5-parameter version of BGL have been used. The results for \(V_{cb}\) are

where the first uncertainties are statistical, the second systematic and the third due to the limited knowledge of the external input, in particular the \(B_s^0\) to \(B^0\) production ratio \(f_s/f_d\), which is known with an uncertainty of about 5%. The results are compatible with both the inclusive and exclusive determinations. Although not competitive with the results obtained at the B factories, the novel approach used can be extended to the semileptonic \(B^0\) decays.

2.3.2 Past measurements of R(D) and \(R(D^*)\)

\(R_{D}\) and \(R_{D^*}\) are defined as the ratios of the semileptonic decay width of \(B_d\) and \(B_u\) mesons to a \(\tau \) lepton and its associated neutrino \(\nu _\tau \) over the B decay width to a light lepton. A summary of the currently available measurements of \(R_{D}\) and \(R_{D^*}\) is presented in Table 2, showing the yield of B signal and B normalization decays and the stated uncertainties. The data were collected by the BaBar and Belle experiments at \(e^+e^-\) colliders operating at the \(\varUpsilon (4S)\) resonance, which decays exclusively to pairs of \(B^+B^-\) or \(B^0{{\bar{B}}}^0\) mesons. The LHCb experiment operates at the high energy pp collider at CERN at total energies of 7 and 8 TeV, where pairs of b-hadrons (mesons or baryons) along with a large number of other charged and neutral particles are produced. While the maximum production rate of the \(\varUpsilon (4S)\rightarrow B{{\bar{B}}}\) events has been 20 Hz, the rates observed at LHCb exceed 100 kHz.

Currently we have only two measurements [58,59,60] of the ratios \(R_{D}\) and \(R_{D^*}\) based on two distinct samples of hadronic tagged \(B{{\bar{B}}}\) events with signal \(B\rightarrow D\tau \nu _\tau \) and \(B\rightarrow D^*\tau \nu _\tau \) decays and purely leptonic tau decays, \(\tau ^- \rightarrow e^-{{\bar{\nu }}}_e\nu _\tau \) or \(\tau ^- \rightarrow \mu ^-{\bar{\nu }}_\mu \nu _\tau \). In addition, there is a measurement from Belle [62, 63] of \(R_{D^*}\) with hadronic tags and a semileptonic one-prong \(\tau \) decay (\(\tau ^-\rightarrow \pi ^-\nu _\tau \) or \(\tau ^-\rightarrow \rho ^-\nu _\tau \)). A Belle measurement [61] of \(R_{D}\) and \(R_{D^*}\) with semileptonic tags and purely leptonic \(\tau \) decays appeared recently, superseding a previous measurement [67] of \(R_{D^*}\) obtained with the same technique.

At LHCb only decays of neutral B mesons producing a charged \(D^*\) meson and a muon of opposite charge are selected, with a single decay chain \(D^{*+}\rightarrow D^0 (\rightarrow K^-\pi ^+) \pi ^+\). LHCb published two measurements [64,65,66] of \(R_{D^*}\), the first relying on purely leptonic \(\tau \) decays and normalized to the \(B^0 \rightarrow D^{*+}\mu ^-{{\bar{\nu }}}_\mu \) decay rate, and the more recent one using 3-prong semileptonic \(\tau \) decays, \(\tau ^-\rightarrow \pi ^+\pi ^-\pi ^- \nu _\tau \) and normalization to the decay \(B^0 \rightarrow D^{*+}\pi ^+\pi ^-\pi ^-\). This LHCb measurement extracts directly the ratio of branching fractions \({{{\mathcal {K}}}}(D^*)=\mathcal{B}(B^0 \rightarrow D^{*+}\tau ^-{{\bar{\nu }}}_\tau )/{{{\mathcal {B}}}}(B^0 \rightarrow D^{*+}\pi ^+\pi ^-\pi ^-)\). The ratio \({{{\mathcal {K}}}}(D^*)\) is then converted to \(R(D^*)\) by using the known branching fractions of \(B^0 \rightarrow D^{*+}\pi ^+\pi ^-\pi ^-\) and \(B^0 \rightarrow D^{*+}\mu ^-{{\bar{\nu }}}_\mu \).
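Written out, the conversion described in this paragraph is a product of the measured ratio and two external branching fractions. The expression below only restates the text in the symbols defined there:

```latex
R(D^*) \;=\; \mathcal{K}(D^*)\,
\frac{\mathcal{B}(B^0 \to D^{*+}\pi^+\pi^-\pi^-)}
     {\mathcal{B}(B^0 \to D^{*+}\mu^-\bar{\nu}_\mu)}
```

The uncertainties of both external branching fractions therefore propagate directly into \(R(D^*)\), in addition to those of \({{{\mathcal {K}}}}(D^*)\) itself.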

BaBar and Belle analyses rely on the large detector acceptance to detect and reconstruct all final state particles from the decays of the two B mesons, except for the neutrinos. They exploit the kinematics of the two-body \(\varUpsilon (4S)\) decay and known quantum numbers to suppress non-\(B{{\bar{B}}}\) and combinatorial backgrounds. They differentiate the signal decays involving two or three missing neutrinos from decays involving a low mass charged lepton, an electron or muon, plus an associated neutrino.

LHCb isolates the signal decays from very large backgrounds by exploiting the relatively long B decay lengths, which allow for a separation of the charged particles from the B and charm decay vertex from many others originating from the pp collision point. There are insufficient kinematic constraints and therefore the total B meson momentum is estimated from its transverse momentum, degrading the resolution of kinematic quantities like the missing mass and the momentum transfer squared \(q^2\). Also, the production of \(D^{*+} D_s^-\) pairs with the decay \(D_s^-\rightarrow \tau ^-\bar{\nu }_\tau \) leads to sizable background in the signal sample.

The summary in Table 2 indicates that the results are not inconsistent. For BaBar and Belle the systematic uncertainties are comparable for \(R_{D^*}\), while Belle systematic uncertainties are smaller for \(R_{D}\). However the differences in the signal yield and the background suppression lead to smaller statistical errors for BaBar. The Belle measurements based on semileptonic tagged samples result in a 50% smaller signal yield than for the hadronic tag samples. For the two LHCb measurements, the event yields exceed the BaBar yields by close to a factor of 20, but the relative statistical errors on \(R_{D^*}\) are comparable to BaBar, and the systematic uncertainties are larger by a factor of 2.

2.3.3 Lessons learned

All currently available measurements are limited by the difficulty of separating the signal from large backgrounds from many sources, leading to sizable statistical and systematic uncertainties. The measurement of ratios of two B decay rates with very similar – if not identical – final state particles significantly reduces the systematic uncertainties due to detector effects, tagging efficiencies, and also from uncertainties in the kinematics due to form factors and branching fractions. For all three experiments the largest systematic uncertainties are attributed to the limited size of the MC samples, the fraction and shapes of various backgrounds, especially from decays involving higher mass charm states, and uncertainties in the relative efficiency of signal and normalization, the efficiency of other backgrounds, as well as lepton mis-identification. Though the total number of \(B{{\bar{B}}}\) events of the full Belle data set exceeds the one for BaBar by 65%, the BaBar signal yield for \(B \rightarrow D^{(*)} \tau \nu _\tau \) exceeds Belle by 67% due to differences in event selection and fit procedures.
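The two percentages in the last sentence can be combined into a single figure of merit, the signal yield per produced \(B{{\bar{B}}}\) pair. A minimal sketch of that arithmetic, using only the numbers quoted above and assuming that both percentages refer to the same set of analyses:

```python
# Ratios read off the text: Belle has 65% more BBbar events,
# while the BaBar signal yield is 67% larger.
BELLE_OVER_BABAR_EVENTS = 1.65
BABAR_OVER_BELLE_YIELD = 1.67


def relative_yield_per_event(events_ratio, yield_ratio):
    """BaBar/Belle ratio of signal yield per BBbar pair."""
    return events_ratio * yield_ratio


if __name__ == "__main__":
    ratio = relative_yield_per_event(BELLE_OVER_BABAR_EVENTS, BABAR_OVER_BELLE_YIELD)
    print(f"BaBar/Belle signal yield per BBbar pair: {ratio:.2f}")
```

The effective signal yield per \(B{{\bar{B}}}\) pair thus differs by a factor of roughly 2.8 between the two sets of analyses.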

While the use by Belle of semileptonic B decays as tags for \(B\bar{B}\) events benefits from the fewer decay modes with higher BFs, the presence of a neutrino in the tag decays results in the loss of stringent kinematic constraints. The resulting signal yields are lower by 50% compared to hadronic tags, and the backgrounds are much larger. The use of the ECL, namely the sum of the energies of the excess photons in a tagged event, in the fit to extract the signal yield is somewhat problematic, since it includes not only the photons left over from incorrectly reconstructed \(B {{\bar{B}}}\) events, but also photons emitted from the high intensity beams. As a result the signal contributions are difficult to separate from the very sizable backgrounds.

2.3.4 Outlook for R(D) and \(R(D^*)\)

Belle II and the upgraded LHCb are expected to collect large data samples with considerably improved detector performances. This should lead to much reduced detector related uncertainties, higher signal fractions, and opportunities to measure many related processes. The goal is to push the sensitivity of many measurements of critical variables and distributions beyond theory uncertainties and thereby increase the sensitivity to non-Standard Model processes.

Currently there are only two measurements of the ratio \(R_{D}\), one each by BaBar and Belle, based on two distinct samples of hadronic tagged \(B {{\bar{B}}}\) events for the signal \(B\rightarrow D\tau \nu _\tau \) and \(B\rightarrow D^* \tau \nu _\tau \) decays. The decay \(B\rightarrow D\tau \nu _\tau \) is dominated by a P-wave, whereas in \(B\rightarrow D^* \tau \nu _\tau \) decays S, P, and D waves contribute and the impact of contributions from new physics processes is expected to be smaller. A contribution of a hypothetical charged Higgs would result in an S-wave for \(B\rightarrow D\tau \nu _\tau \), and a P-wave for \(B\rightarrow D^*\tau \nu _\tau \), thus measurements of the angular distributions and the polarization of the \(\tau \) lepton or D and \(D^*\) mesons will be important. Such measurements would of course also serve as tests of other hypotheses, for instance contributions from leptoquarks. Studies of many decay modes and of the detailed kinematics of the signal events (the four-momentum transfer \(q^2\), the lepton momentum, the angles and momenta of D and \(D^*\), and the \(\tau \) spin) should be extended to test for potential new physics contributions.

Belle II will benefit from major upgrades to all detector components, except for the barrel sections of the calorimeter and the muon detector. In addition, new data acquisition and analysis software is being developed to benefit from the very high data rates and improved detector performance. Upgrades to the precision tracking and lepton identification, especially at lower momenta, are expected to significantly improve the mass resolution and purity of the signal samples. This should also improve the detector modeling of efficiencies for signal and backgrounds and fake rates that are the major contributions to the current systematic uncertainties. The much larger data rates should allow the choice of cleaner and more efficient \(B {{\bar{B}}}\) tagging algorithms.

Major improvements to the MC simulation of signal and backgrounds will be needed. They require much better understanding of all semileptonic B decays, contributing to signal and backgrounds, i.e., updated measurements of branching fractions and form factors and theoretical predictions, especially for backgrounds involving higher mass charm mesons, either resonances or states resulting from charm quark fragmentation. The fit to extract the signal yields could be improved by reducing the backgrounds and making use of fully 2D or 3D distributions of kinematic variables, and by avoiding simplistic parametrizations. The suppression of fake photons and \(\pi ^0\)s needs to be scrutinized to avoid unnecessary signal loss and very large backgrounds for \(D^{*0}\) decays. Shapes of distributions entering multi-variable methods to reduce the backgrounds should be scrutinized by comparisons with data or MC control samples, and any significant differences should be addressed. The use of ECL, the sum of the energies of all unassigned photons in an event, may be questionable, given the expected high rate of beam generated background.

The first study by Belle of the \(\tau \) spin in \(B\rightarrow D^*\tau \nu _\tau \) decays with \(\tau ^-\rightarrow \rho ^-\nu _\tau \) or \(\tau ^-\rightarrow \pi ^-\nu _\tau \) is very promising, but it indicates that much larger and cleaner data samples will be needed. The systematic uncertainty on the \(R_{D^*}\) measurement of 11% is dominated by the hadronic B decay composition of 7% and the size of the MC sample [63]. The measured longitudinal \(\tau \) polarization of \(P_\tau = -0.38\pm 0.51^{+0.21}_{-0.16}\) is totally statistics dominated, and implies \(P_\tau < 0.5\) at 90% C.L.

Among the many other measurements Belle II is planning, ratios R for both exclusive and inclusive semileptonic B decays are of interest, for instance in addition to \(R_D\), \(R_{D^{*}}\), and \(R_{D^{**}}\) also \(R_{X_c}\), as well as \(R_\pi \) and \(R_{X_u}\), which rely on unique capabilities of Belle II.

The LHCb detector is currently undergoing a major upgrade with the goal to switch to an all-software trigger and to be able to select and record data up to rates of 100 kHz. Replacements of all tracking devices are planned, ranging from a radiation-hard pixel detector near the interaction region to scintillating fibers downstream. Improvements to electron and muon detection and reduction in pion misidentification will be critical for the suppression of backgrounds, and should also allow rate comparisons for decays involving electrons or muons. LHCb relies on large data samples rather than MC simulation to assess signal efficiencies and most importantly the many sources of backgrounds and their suppression.

Several analyses are underway based on Run 1 and Run 2 data samples, and are benefiting from improved trigger capabilities. The first analysis based on 3-prong \(\tau \) decays showed a clear separation of the \(\tau \) decay vertex from both the D and the proton interaction point, improving the signal purity to about 11%, compared to 4.4% for the purely leptonic 1-prong \(\tau \) decay. This may therefore be the favored \(\tau \) decay mode, and should also be tried for \(B^+\rightarrow D^0\tau ^+\nu _\tau \). Improved measurements of the branching fractions for normalization and the \(\tau \) decays will be essential.

As a follow-up on the first LHCb measurement of \(R_{D^*}\), a simultaneous fit to two disjoint \(D^0 \mu ^-\) and \(D^{*+}\mu ^-\) samples is in preparation, taking into account the large feed-down from \(D^*\) decay present in the \(D^0 \mu ^-\) sample. As pointed out above, the decay \(B^+\rightarrow D^0\tau ^+\nu _\tau \) is more sensitive to new physics processes than \(B^0\rightarrow D^{*-}\tau ^+\nu _\tau \) and thus this analysis is expected to be very important to establish the excess in these decay modes and its interpretation. This analysis will benefit from the addition of dedicated triggers sensitive to \(D^0 \mu ^-\), \(D^{*+}\mu ^-\), \(\varLambda _c^+ \mu \) and \(D_s^{+}\mu \) final states.

LHCb is considering a series of other ratio measurements, among several \(b \rightarrow c\) transitions (\({{\bar{B}}}_s^0 \rightarrow D_s^- \tau ^+\nu _\tau \), \(B\rightarrow D^{**} \tau ^+\nu _\tau \) and \(\varLambda _b^+\rightarrow \varLambda _c^{(*)} \tau ^+\nu _\tau \)) and certain \(b\rightarrow u\) transitions (\(B^+\rightarrow \rho ^0\tau ^+\nu _\tau \), \(B^+\rightarrow p{{\bar{p}}} \tau ^+\nu _\tau \) and \(\varLambda _b^0\rightarrow p\tau ^-\nu _\tau \)), most of which will be challenging to observe and not trivial to normalize. The decay \(\varLambda _b^+\rightarrow \varLambda _c^* \tau ^+\nu _\tau \) probes a different spin structure, and a precise measurement of \(R_{\varLambda _c}\) would be of great interest for the interpretation of the excess of events in \(R_{D}\). The observation of the decay \(B_c^-\rightarrow J/\psi (\rightarrow \mu ^+\mu ^-) \tau ^- (\rightarrow \mu ^-{{\bar{\nu }}}_\mu \nu _\tau ) {{\bar{\nu }}}_\tau \) has recently been reported. It is a very rare process which is only observable at LHCb. The final state of 3 muons is a unique signature, though impacted by sizable backgrounds from hadron misidentification. The measured ratio \(R_{J/\psi } = 0.71\pm 0.17 \pm 0.18\) has large uncertainties, with the systematic uncertainty dominated by the signal simulation, since the form factors are unknown.

2.4 Extraction of \(V_{cb}\) and predictions for \(R_{D^{(*)}}\)

The values of \(V_{cb}\) extracted from inclusive and exclusive decays have been in tension for a long time [68]. In order to extract \(V_{cb}\) from \(B\rightarrow D^{(*)}l\nu \) data we need information on the form factors, which is mostly provided by lattice QCD. For the \(B\rightarrow D\) form factors \(f_{+,0}\) there are lattice results at \(w\ge 1\) [3, 31, 32]. A fit to all the available experimental and lattice data of \(B\rightarrow Dl\nu \) leads to [69]

with \(\chi ^2/\mathrm {dof} = 19.0/22\). Similar results have been obtained in [3]. For \(B\rightarrow D^*\) at the moment there is only information on one of the four form factors at zero-recoil, \(A_1(w=1)\) [37, 39], however further developments look promising [70,71,72]. At the other end of the w or \(q^2\) spectrum there are results available from LCSR [73, 74]. In view of the advanced experimental precision, a key question for the precise extraction of \(V_{cb}\) and a robust prediction of \(R(D^{(*)})\equiv {\mathcal {B}}(B\rightarrow D^{(*)} \tau \nu )/{\mathcal {B}}(B\rightarrow D^{(*)}l\nu )\) is how large the theoretical uncertainties are. For example, whenever relations such as (2.24) are used, how large are HQET corrections beyond NLO, i.e. of \(O\left( \alpha _s^2,\varLambda ^2_{\mathrm {QCD}}/m_{c,b}^2, \alpha _s \varLambda _{\mathrm {QCD}}/m_{c,b}\right) \) and how accurate are the QCDSR results that are used at NLO? A guideline for an answer to these questions can be provided by studying the size of NLO corrections in the HQET expansion and by a comparison with corresponding available lattice results [12]. A definite answer, especially for the pseudoscalar form factor \(P_1\), which is needed for the prediction of \(R(D^*)\), will be given only by future lattice results [70,71,72].

In all experimental analyses prior to 2017, HQET relations have been employed in terms of a form of the CLN parametrization [11] where theoretical uncertainties noted in Ref. [11] were set to zero by fixing coefficients to definite numbers. Moreover, the slope and curvature of \(R_{1,2}(w)\) depend on the same underlying theoretical quantities as \(R_{1,2}(1)\), which makes the variation of the latter and fixing of the former inconsistent. In future experimental analyses this has to be taken into account.

Recent preliminary Belle data [53] allowed for a reappraisal of fits to \(B\rightarrow D^*l\nu \) by several groups [12, 39, 54, 55, 75,76,77]. For the first time, Ref. [53] reported deconvoluted w and angular distributions which are independent of the parametrization. This made it possible to test the influence of different parametrizations on the extracted value of \(V_{cb}\). Indeed, based on that data set the central values for \(\vert V_{cb}\vert \) varied by up to 6% between CLN and BGL fits [54, 55, 77]. By floating some additional parameters of the less flexible CLN parametrization, the agreement between BGL and CLN could be restored [54, 76]. Furthermore, across the literature smaller central values for \(V_{cb}\) are correlated with stronger HQET+QCDSR input [12, 39, 53,54,55, 75,76,77].
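The BGL fits mentioned above expand the form factors in a conformal variable z that vanishes at zero recoil. The following minimal sketch evaluates z over the \(B\rightarrow D^*l\nu \) range for massless leptons, to show how small the expansion parameter is. The meson masses are approximate values inserted for illustration and are not taken from the text, and the names `z_of_w`, `w_max` and `z_max` are hypothetical.

```python
import math

# Approximate meson masses in GeV (illustrative inputs, not from the text).
M_B = 5.279
M_DSTAR = 2.010


def z_of_w(w: float) -> float:
    """Conformal variable z = (sqrt(w+1) - sqrt(2)) / (sqrt(w+1) + sqrt(2)).

    It maps the zero-recoil point w = 1 to z = 0.
    """
    root = math.sqrt(w + 1.0)
    return (root - math.sqrt(2.0)) / (root + math.sqrt(2.0))


# Maximum recoil for massless leptons, reached at q^2 = 0.
w_max = (M_B**2 + M_DSTAR**2) / (2.0 * M_B * M_DSTAR)
z_max = z_of_w(w_max)

print(f"w_max = {w_max:.3f}, z_max = {z_max:.4f}")
for n in range(1, 4):
    # Size of the n-th power of z at the edge of phase space.
    print(f"z_max^{n} = {z_max**n:.2e}")
```

With these inputs z stays below about 0.06 over the whole range, so each additional power of z is strongly suppressed. This does not by itself determine how many coefficients a fit needs; that depends on the size of the coefficients and on the precision of the data.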

Recently, in addition to the tagged analysis of Ref. [53], a new untagged Belle analysis of \(B\rightarrow D^*l\nu \) appeared [78]. The new, more precise data brought the \(|V_{cb}|\) central values of the CLN and BGL fits closer together. However, in order to obtain a reliable error, it is necessary to employ the BGL parametrization with a sufficient number of coefficients rather than the CLN parametrization. Including the new data, Ref. [79] obtains

with a \(\chi ^2/\mathrm {dof} = 80.1/72\). The inclusion of LCSRs or strong unitarity constraints, where input from HQET is used in a conservative way, basically does not change the fit result [79]. The \(V_{cb}\) value in Eq. (2.43) differs by \(1.9\sigma \) from the inclusive result.

The shortcomings of the CLN parametrization have been addressed in several recent articles [36, 75, 77, 80, 86]: varying the coefficients of the HQE consistently allows for a simultaneous description of the available experimental and lattice data in \(B\rightarrow D\), while the parametrization dependence in the extraction of \(V_{cb}\) from Ref. [53] remains [75]. Including additionally contributions at \(O(1/m_c^2)\) and higher orders in the z expansion, the extracted values for \(V_{cb}\) using the BGL parametrization and the HQE become compatible [80].

For the above reasons, older HFLAV averages, which are based on the CLN parametrization, should not be employed in future analyses, with the exception of the total branching ratios, whose parametrization dependence is expected to be negligible. The two most recent experimental analyses of \({\bar{B}}_{(s)} \rightarrow D_{(s)}^*l^-{\bar{\nu }}_l\) [56, 57] present results obtained in both CLN and a simplified version of the BGL parametrization. They did not observe sizeable parametrization dependence, but found very different values of \(V_{cb}\). However, they did not provide data in a format that allows for independent reanalyses.

For the lepton flavor non-universality observables \(R(D^{(*)})\) we list a few recent theoretical predictions in Table 3. Predictions for further lepton flavor non-universality observables of underlying \(b\rightarrow cl\nu \) transitions can be found in Refs. [87, 88]. Compared to predictions from before 2016, the predictions in Table 3 make use of new lattice results and new experimental data. The results are based on different methodologies and a different treatment of the uncertainties of HQET + QCDSR. There is a very good consensus on the R(D) predictions, because in this case they are dominated by the recent comprehensive lattice results from Refs. [31, 32, 89]. QED corrections to R(D) remain a topic which deserves further study [90, 91]. In the case of \(R(D^*)\), as we do not yet have lattice information on the form factor \(P_1\), we can use the exact endpoint relation \(P_1(w_{\mathrm {max}}) = A_5(w_{\mathrm {max}})\) and results from HQET and QCDSR. Depending on the estimate of the corresponding theory uncertainty one obtains different theoretical errors for the prediction of \(R(D^*)\). As soon as we have lattice results for \(P_1\) [70], the different fits will stabilize and we expect a similar consensus as for R(D). Despite the most recent experimental results being closer to the SM predictions, the \(R(D^{(*)})\) anomaly persists and remains a tough challenge for model builders.

2.5 Semileptonic \(B\rightarrow D^{**}\ell {{\bar{\nu }}}\) decays

Semileptonic B decays to the four lightest excited charm mesons, \(D^{**} = \{D_0^*,\, D_1^*,\, D_1,\, D_2^*\}\), are important both because they are complementary signals of possible new physics contributions to \(b\rightarrow c\tau {{\bar{\nu }}}\), and because they are substantial backgrounds to the \(R(D^{(*)})\) measurements (as well as to some \(|V_{cb}|\) and \(|V_{ub}|\) measurements). Thus, the correct interpretation of future \(B\rightarrow D^{(*)}\ell {{\bar{\nu }}}\) measurements requires consistent treatment of the \(D^{**}\) modes.

The spectroscopy of the \(D^{**}\) states is important, because in addition to the impact on the kinematics, it also affects the expansion of the form factors [92, 93] in HQET [94, 95]. The isospin averaged masses and widths for the six lightest charm mesons are shown in Table 4. In the HQS [96, 97] limit, the spin-parity of the light degrees of freedom, \(s_l^{\pi _l}\), is a conserved quantum number, yielding doublets of heavy quark symmetry, as the spin \(s_l\) is combined with the heavy quark spin [98]. The ground state charm mesons containing light degrees of freedom with spin-parity \(s_l^{\pi _l} = \frac{1}{2}^-\) are the \(\big \{D,\, D^*\big \}\). The four lightest excited \(D^{**}\) states correspond in the quark model to combining the heavy quark and light quark spins with \(L=1\) orbital angular momentum. The \(s_l^{\pi _l} = \frac{1}{2}^+\) states are \(\big \{D_0^*,\, D_1^*\big \}\) while the \(s_l^{\pi _l} = \frac{3}{2}^+\) states are \(\big \{D_1,\, D_2^*\big \}\). The \(s_l^{\pi _l} = \frac{3}{2}^+\) states are narrow because their \(D^{(*)}\pi \) decays only occur in a d-wave or violate heavy quark symmetry. In the case of \(B_s\) decays, all four \(D_s^{**}\) states are narrow.

A simplifying assumption used in Refs. [92, 93] to reduce the number of subleading Isgur–Wise functions was to neglect certain \(O(\varLambda _{\mathrm{QCD}}/m_{c,b})\) contributions involving the chromomagnetic operator in the subleading HQET Lagrangian, motivated by the fact that the mass splittings in both the \(s_l^{\pi _l} = \frac{1}{2}^+\) and \(s_l^{\pi _l} = \frac{3}{2}^+\) doublets were measured to be much smaller than \(m_{D^*} - m_D\). This is not supported by the more recent data (see Table 4), so Ref. [101] extended the predictions of Refs. [92, 93] accordingly, and also derived the HQET expansions of the form factors which do not contribute in the \(m_\ell = 0\) limit. The impact of arbitrary new physics operators was analyzed in Ref. [100], including the \(O(\varLambda _{\mathrm{QCD}}/m_{c,b})\) and \(O(\alpha _s)\) corrections in HQET. The corresponding results in the heavy quark limit were obtained in Ref. [102].

The large impact of the \(O(\varLambda _{\mathrm{QCD}}/m_{c,b})\) contributions to the form factors can be understood qualitatively by considering how heavy quark symmetry constrains the structure of the expansions near zero recoil. It is useful to think of a simultaneous expansion in powers of \((w-1)\) and \((\varLambda _{\mathrm{QCD}}/m_{c,b})\). (The kinematic ranges are \(0 < w-1 \lesssim 0.2\) for \(\tau \) final states, and \(0 < w-1 \lesssim 0.3\) for e and \(\mu \).) The decay rates to the spin-1 \(D^{**}\) states, which are not helicity suppressed near \(w=1\), are of the form

Here \(\varepsilon \) is a power-counting parameter of order \(\varLambda _{\mathrm{QCD}}/m_{c,b}\), and the zeros are consequences of heavy quark symmetry. The \(\varepsilon ^2\) term in the first parenthesis is fully determined by the leading order Isgur–Wise function and hadron mass splittings [92, 93, 100, 101]. The same also holds for those new physics contributions to \(B\rightarrow D_0^* \ell {{\bar{\nu }}}\) which are not helicity suppressed. This explains why the \(O(\varLambda _{\mathrm{QCD}}/m_{c,b})\) corrections to the form factors are very important, and can make O(1) differences in physical predictions, without being a sign of a breakdown of the heavy quark expansion. The sensitivity of the \(D^{**}\) modes to new physics is complementary to, and sometimes greater than, that of the D and \(D^*\) modes [100, 102]. Thus, using HQET, the predictions for \(B\rightarrow D^{**}\tau {{\bar{\nu }}}\) are systematically improvable by better data on the e and \(\mu \) modes, just as they are for \(B\rightarrow D^{(*)} \tau {{\bar{\nu }}}\) [75], and are being implemented in HAMMER [103,104,105].
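The kinematic ranges quoted above for \(w-1\) follow from \(w = (m_B^2 + m_{D^{**}}^2 - q^2)/(2 m_B m_{D^{**}})\) evaluated at the minimal momentum transfer \(q^2 = m_\ell ^2\). The sketch below checks this numerically. The masses are rounded, approximate values inserted for illustration (they are assumptions, not the entries of Table 4), and the helper name `w_max` is hypothetical.

```python
# Approximate masses in GeV (illustrative inputs, not taken from Table 4).
M_B = 5.279
M_TAU = 1.777
M_DSS = {"D0*": 2.35, "D1*": 2.43, "D1": 2.42, "D2*": 2.46}


def w_max(m_parent: float, m_daughter: float, m_lepton: float = 0.0) -> float:
    """Largest recoil w, reached at the smallest q^2 = m_lepton^2."""
    numerator = m_parent**2 + m_daughter**2 - m_lepton**2
    return numerator / (2.0 * m_parent * m_daughter)


for name, mass in M_DSS.items():
    light = w_max(M_B, mass) - 1.0        # e and mu treated as massless
    tau = w_max(M_B, mass, M_TAU) - 1.0   # tau final state
    print(f"{name}: w_max - 1 = {light:.2f} (e, mu), {tau:.2f} (tau)")
```

With these inputs the upper ends come out near 0.3 for light leptons and near 0.2 for the \(\tau \), consistent with the ranges given in the text. The limited range in \(w-1\) is what makes a simultaneous expansion in \((w-1)\) and \(\varLambda _{\mathrm{QCD}}/m_{c,b}\) natural.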

2.6 New physics in \(B\rightarrow D^{(*)} \tau \nu \)

Independently of the recent discussion on form factor parametrizations and their influence on the extraction of \(V_{cb}\) (covered in Sect. 2.1) it is clear from Table 3 that the SM cannot accommodate the present experimental data on \(R(D^{(*)})\). Even after the inclusion of the most recent Belle measurement [106], the significance of the anomaly remains \(3.1\sigma \). This leaves, apart from an underestimation of systematic uncertainties on the experimental side, NP as an exciting potential explanation. The required size of such a contribution comes as a surprise, however: defining \({{\hat{R}}}(X)\equiv R(X)/R(X)_{\mathrm{SM}}\), the new average corresponds to \({{\hat{R}}}(D)=1.14\pm 0.10\) and \({{\hat{R}}}(D^*)=1.14\pm 0.06\); for NP to accommodate these data, a contribution of 5–10% relative to a SM tree-level amplitude is required for NP interfering with the SM, and \(O(40\%)\) for NP without interference. An effect of this size can be clearly identified with upcoming measurements by LHCb and Belle II [107, 108]. It would also immediately imply large effects in other observables.
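The quoted sizes of the NP contribution can be reproduced with a back-of-the-envelope estimate. As a simplifying assumption made only for this sketch, take the NP amplitude to be a real fraction \(\epsilon \) of the SM amplitude with the same kinematic dependence, so that \({{\hat{R}}} = (1+\epsilon )^2\) with full interference and \({{\hat{R}}} = 1+\epsilon ^2\) without interference. The function names are hypothetical.

```python
import math


def np_fraction_interfering(r_hat: float) -> float:
    """Solve r_hat = (1 + eps)^2 for eps (NP fully interfering with the SM)."""
    return math.sqrt(r_hat) - 1.0


def np_fraction_non_interfering(r_hat: float) -> float:
    """Solve r_hat = 1 + eps^2 for eps (no interference with the SM)."""
    return math.sqrt(r_hat - 1.0)


r_hat = 1.14  # central value of the averages quoted in the text
print(f"interfering:     eps = {np_fraction_interfering(r_hat):.3f}")
print(f"non-interfering: eps = {np_fraction_non_interfering(r_hat):.3f}")
```

For \({{\hat{R}}}=1.14\) this gives roughly 7% in the interfering case and roughly 37% in the non-interfering case, in line with the 5–10% and \(O(40\%)\) stated above. Realistic operators have different form factor and phase space weights, so these numbers are only indicative.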

The potential of \(R(D^{(*)})\) as discovery modes does not diminish the importance of additional measurements with b-hadrons. Specifically, even with a potential discovery, model discrimination will require measurements beyond these ratios. These additional measurements fall in four categories:

- Additional R(X) measurements, such as \(R(D^{**}), R(\varLambda _c), R(X_c), R(J/\psi )\) and \(R(B_s^{(*)})\), are important crosschecks to establish \(R(D^{(*)})\) as NP with independent systematics, and provide independent NP sensitivity (especially \(R(X_c)\) and \(R(\varLambda _c)\)), as discussed in Sects. 2.3 and 2.5. Note, however, the existence of an approximate sum rule relating the NP contributions to \(R(\varLambda _c)\), R(D), and \(R(D^*)\) [109].

- Integrated angular and polarization asymmetries and polarization fractions are excellent model discriminators. In many models they are completely determined once the measurements of \(R(D^{(*)})\) are taken into account. For instance, the recent measurement of the longitudinal polarization fraction of the \(D^*\) in \(B\rightarrow D^*\tau \nu \), \(F_L(D^*)\), was able to rule out solutions that remained compatible with the whole set of the remaining \(b\rightarrow c\tau \nu \) data [109,110,111,112,113,114]. The model-discriminating potential of both \(R(D^{(*)})\) and selected angular quantities is visualized in Fig. 2, which shows the results of fits to the state-of-the-art data for pairs of \(B\rightarrow D^{(*)}\tau \nu \) observables within all phenomenologically viable single-mediator scenarios with left-handed neutrinos.

- Differential distributions in \(q^2\) and the different angles are extremely powerful in distinguishing between NP models, as can be seen for instance from a recent analysis of data with light leptons in the final state [86]. They require, however, large amounts of data, and the insufficient information on the decay kinematics can pose difficulties for the interpretation of the data, as discussed in Sect. 2.7. Even so, the rather rough available information on the differential rates \(d\varGamma /dq^2(B\rightarrow D^{(*)}\tau \nu )\) [58, 60] already excludes relevant parts of the parameter space [114,115,116,117,118].

- An analysis of the flavor structure of the observed effect, e.g. in \(b\rightarrow c (e,\mu )\nu \), \(b\rightarrow u\tau \nu \) and \(t\rightarrow b\tau \nu \) transitions.

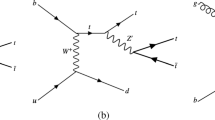

Fig. 2 State-of-the-art fit results in single-mediator models for selected pairs of observables in \(B\rightarrow D^{(*)}\tau \nu \) decays (following Ref. [114] for form factor and input treatment). All outer ellipses correspond to \(95\%\) confidence level, inner ones (where present) to \(68\%\). We show the SM prediction in grey, the experimental measurement/average in yellow (where applicable) and scenarios I, II, III, IV and V in dark green, green, dark blue, dark red and red, respectively; see text. Contours outside the experimental ellipse imply that the measured central values cannot be accommodated within that scenario. The limit \(BR(B_c\rightarrow \tau \nu )\le 30\%\) has been applied throughout, but affects only the fits with scalar coefficients. Dark green contours are missing in the two graphs on the right, because the predictions of scenario I are identical to the SM ones.