Abstract

In this paper, we analyze the \(\eta _c\) decays into light hadrons at next-to-leading order (NLO) in QCD by applying the principle of maximum conformality (PMC). The relativistic correction at \(\mathcal{O}(\alpha _s v^2)\) is included, and it contributes about \(10\%\) to the ratio R of the \(\eta _c\) decay rate into light hadrons over its decay rate into photons. The PMC, which satisfies renormalization group invariance, is designed to give a scale-fixed and scheme-independent prediction at any fixed order. To avoid ambiguity in treating the \(n_{f}\)-terms, we transform the usual \(\overline{\mathrm{MS}}\) pQCD series into the one under the minimal momentum space subtraction (mMOM) scheme. Under conventional scale setting we obtain \(R_\mathrm{Conv,mMOM-r}= \left( 4.12^{+0.30}_{-0.28}\right) \times 10^3\), whereas after applying the PMC we obtain \(R_\mathrm{PMC,mMOM-r}=\left( 6.09^{+0.62}_{-0.55}\right) \times 10^3\), where the errors are the quadrature sums of those caused by \(m_c\) and \(\Lambda _\mathrm{mMOM}\). The PMC prediction agrees within errors with the recent PDG value, \(R^\mathrm{exp}=\left( 6.3\pm 0.5\right) \times 10^3\). We therefore attribute the mismatch between the data and the prediction under conventional scale-setting to an improper choice of scale, a problem that is resolved by using the PMC.

The heavy quark mass provides a natural hard scale for heavy quarkonium decays into light hadrons or photons, and calculations of their decay rates are among the earliest applications of pQCD. Charmonium has been an active field since the discovery of the \(J/\psi \) resonance at SLAC and Brookhaven in 1974. There have been many successful experimental studies of charmonium, including precise measurements of its spectrum, lifetimes and branching ratios; cf. the comprehensive review given by the PDG [1]. At the same time, many theoretical efforts have been made toward an appropriate description of charmonium. As an important breakthrough, a systematic pQCD analysis of heavy quarkonium inclusive annihilation and production was given within the nonrelativistic QCD (NRQCD) framework in 1995 [2].

According to the NRQCD framework, the quarkonium decay rate can be factored into a sum of products of short-distance coefficients and long-distance matrix elements (LDMEs). The short-distance coefficients are perturbatively calculable as a power series in \(\alpha _s\). The LDMEs can be ordered by the velocity power counting rule, i.e. they can be classified in terms of the relative velocity between the constituent quarks of the heavy quarkonium. In particular, the color-singlet ones can be directly related to the wavefunction (or the derivative of the wavefunction) at the origin, which can then be calculated via proper potential models.

The decay rates of the pseudoscalar quarkonium into light hadrons and photons have been calculated at the next-to-leading order (NLO) level [3, 4]. The relativistic corrections at the \(\mathcal{{O}}(\alpha _sv^2)\)-order have been given in Refs. [5, 6]. Within the NRQCD factorization framework, the decay rate of the \(\eta _c\) into light hadrons or photons can be expressed as

and

where \(F_1\), \(G_1\), \(F_{\gamma \gamma }\) and \(G_{\gamma \gamma }\) are short-distance coefficients. The symbol \(\cdots \) stands for the contributions from higher-dimensional LDMEs, which are at least of \(\mathcal{O}(v^4 \Gamma )\). \(m_c\) is the c-quark pole mass (see footnote 1 under Notes), and \(v^2\) is the squared velocity of the heavy quark or antiquark in the meson rest frame. For the case of \(\eta _c\), it can be calculated by

To suppress the uncertainty from the LDMEs, one usually calculates the ratio

where \(a(\mu )={\alpha _s(\mu )}/{(4\pi )}\), \(R_0(\mu )=\frac{81 \pi ^2 C_F}{2 \alpha ^2 N_C} a^2(\mu )\), \(\mu \) is an arbitrary renormalization scale, and \(\beta _0=11-\frac{2}{3}n_{f}\) (\(n_{f}\) being the number of active flavors) is the leading coefficient of the renormalization group \(\beta \)-function. Note that there is no factorization scale dependence at this level, which remains the case even at the NNLO level [8]; we are thus free of the factorization scale-setting problem.
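As a quick numerical illustration of the leading-order normalization \(R_0(\mu )\) defined above, the following minimal Python sketch evaluates it for a given coupling. The value \(\alpha _s=0.30\) and the fine-structure constant \(\alpha =1/137.036\) are illustrative assumptions rather than inputs quoted in the text, and the function name `r0` is hypothetical. With \(C_F=4/3\) and \(N_C=3\) the prefactor reduces to \(R_0=\frac{9}{8}(\alpha _s/\alpha )^2\), which the sketch uses as a cross-check.

```python
import math

# Assumed inputs (not quoted in the text): fine-structure constant and colour factors.
ALPHA_EM = 1.0 / 137.036
N_C = 3
C_F = (N_C**2 - 1) / (2 * N_C)  # = 4/3 for SU(3)


def r0(alpha_s: float) -> float:
    """Leading-order ratio R_0 = 81 pi^2 C_F / (2 alpha^2 N_C) * a^2, with a = alpha_s / (4 pi)."""
    a = alpha_s / (4.0 * math.pi)
    return 81.0 * math.pi**2 * C_F / (2.0 * ALPHA_EM**2 * N_C) * a**2


# Illustrative coupling value only; the text fixes alpha_s through two-loop running.
alpha_s_example = 0.30
print(f"R_0 = {r0(alpha_s_example):.1f}")
print(f"closed form (9/8)(alpha_s/alpha)^2 = {1.125 * (alpha_s_example / ALPHA_EM) ** 2:.1f}")
```

Because \(R_0\) scales as \(\alpha _s^2\), a modest change in the scale at which \(\alpha _s\) is evaluated changes the leading-order ratio substantially, which is why the choice of \(\mu \) matters so much for R.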

It is conventional to take the renormalization scale as the typical momentum flow of the process, or as the value that eliminates the large logarithms of the pQCD series; we call this the conventional scale-setting approach. As will be shown later, such a simple treatment introduces a large scale uncertainty and makes the lower-order prediction unreliable. At present, the \(\eta _c\) decays into light hadrons or photons have been calculated up to the NNLO level, which however still show poor pQCD convergence [8,9,10]. Thus simply pursuing higher- and higher-order terms may not be the solution for such high-energy processes. In fact, even if a small scale uncertainty is obtained for global quantities such as the total cross-section or the decay rate at a certain fixed order, it is due to cancelations among different orders; the scale uncertainty of each individual order remains and could be very large. Two such examples, for Higgs boson decay and for the hadronic production of the Higgs boson, can be found in Refs. [11, 12]. When one applies conventional scale-setting, a dependence on the renormalization scheme and on the initial renormalization scale is introduced at any fixed order. Thus, a proper scale-setting approach is important for fixed-order predictions.

Such a large scale uncertainty has long been observed. To improve the accuracy of R, Ref. [13] suggested resumming the final-state chains of vacuum-polarization bubbles and obtained \(R^\mathrm{{NNA}}=(3.01\pm 0.30\pm 0.34)\times 10^3\) for the naive non-Abelianization resummation [14] and \(R^\mathrm{{BFG}}=(3.26\pm 0.31\pm 0.47)\times 10^3\) for the background-field-gauge resummation [15]. Both predictions are consistent with the world average given by the Particle Data Group (PDG) in 2000 [16], \(R^\mathrm{exp}=\left( 3.3\pm 1.3\right) \times 10^3\). As an attempt, the authors of Ref. [13] also presented a prediction using the Brodsky–Lepage–Mackenzie (BLM) scale-setting approach [17] and obtained a much larger value, \(R^\mathrm{{BLM}}=9.9\times 10^3\).

We should point out that those predictions differ from the value derived from the new experimental measurements, \(R^\mathrm{exp}=\left( 6.3\pm 0.5\right) \times 10^3\) [1]. As will be shown later, the BLM prediction given in Ref. [13] is questionable. It is therefore interesting to examine whether an improved pQCD analysis can explain the new \(R^\mathrm{exp}\), which is the purpose of this paper. In particular, it is important to determine whether the mismatch between the data and the pQCD prediction is caused by an improper choice of scale or by some other reason.

A novel scale-setting approach, the Principle of Maximum Conformality (PMC) [18,19,20,21], has been developed in recent years. The PMC satisfies renormalization group invariance [22], and in the \(N_C\rightarrow 0\) Abelian limit [23] it reduces to the standard Gell–Mann–Low method [24]. A more convergent pQCD series without factorial renormalon divergence can be obtained. The PMC scales are physical in the sense that they reflect the virtuality of the gluon propagators at a given order, as well as setting the effective number (\(n_{f}\)) of active flavors. The resulting resummed pQCD expression thus determines the relevant “physical” scales for any physical observable, thereby providing a solution to the renormalization scale-setting problem. Because all the scheme-dependent \(\{\beta _i\}\)-terms in the pQCD series are resummed into the running couplings with the help of the renormalization group equation, the PMC predictions are renormalization scheme independent at every order. Such scheme independence can be demonstrated by using commensurate scale relations [25] among different observables. A number of PMC applications have been summarized in the reviews [26,27,28]. The PMC provides the underlying principle for the BLM approach, and in the following we adopt the PMC to set the renormalization scale.

Up to NLO level, the expression of R can be rewritten as

where the \(\mathrm {\overline{MS}}\)-coefficients \(r_{i,j}\) can be read from Eq. (4), in which \(r_{i,0}\) are conformal ones. Following the standard PMC procedures, we get

where \(\ln (Q^2_1/\mu ^2) = -r_{2,1}/r_{1,0}\). Here we have set the unknown PMC scale \(Q_2=Q_1\), which ensures the scheme independence of R under any renormalization scheme via proper commensurate scale relations [25]; the exact value of \(Q_2\) can be determined by the NNLO term, which is not available at present.

If we directly use the \(\overline{\mathrm{MS}}\)-scheme expression (4), we obtain a small PMC scale, \(Q_1=0.86\) or 0.78 GeV for the prediction with or without the relativistic correction. This scale is already close to the low-energy region, which explains why a large \(R^\mathrm{BLM}\) was obtained in Ref. [13]. [At the NLO level, the BLM prediction is the same as the PMC prediction if all \(n_{f}\)-terms pertain to the \(\alpha _s\)-running.] In this case, a reliable prediction can only be obtained by using a certain low-energy \(\alpha _s\)-model, which however introduces extra model dependence into the prediction.

Following the idea of the PMC, only those \(\{\beta _i\}\)-terms that pertain to the renormalization of the running coupling should be absorbed into the running coupling. For processes involving the three-gluon or four-gluon vertex, the scale-setting problem is more involved [29]. The MOM scheme is a physical scheme based on the renormalization of the triple-gluon vertex at a symmetric off-shell momentum, and it carries information about the vertex at that specific momentum configuration. This external momentum configuration is non-exceptional and there are no infrared issues, thus avoiding the confusion in distinguishing the \(\{\beta _i\}\)-terms. Thus, to avoid ambiguity in applying the PMC to R, similar to the case of the QCD BFKL Pomeron [30,31,32], we first transform the results from the \(\overline{\mathrm{MS}}\)-scheme to the momentum space subtraction scheme (MOM-scheme) [33, 34] and then apply the PMC. Further reasons for choosing the MOM scheme are that it gives a better pQCD convergence than the \(\overline{\mathrm{MS}}\)-scheme, and that a more reasonable PMC scale in the perturbative region can be achieved.

For this purpose, we adopt the perturbative relation between the \(\overline{\mathrm {MS}}\)-scheme running coupling and the mMOM-scheme one [35],

where for the Landau gauge, \( D_1 =d_{1,0}+d_{1,1} n_{f}\), \(d_{1,0} =\frac{169}{144}N_C\), and \(d_{1,1} = - \frac{5}{18}\). We then obtain

where \(R_0^\mathrm{{mMOM}}(\mu )=\frac{81 \pi ^2 C_F}{2 \alpha ^2 N_C} \left( a^\mathrm{{mMOM}}(\mu )\right) ^2\). After applying the PMC, we obtain a new PMC scale \(Q'_{1}=\exp (-3d_{1,1}) Q_{1}\), which equals 1.99 or 1.80 GeV for the prediction with or without the relativistic correction. Such a larger PMC scale indicates that a reliable pQCD prediction can be achieved by using the mMOM scheme.
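The scale shift between the two schemes can be checked with a few lines of Python. This is a minimal sketch that simply evaluates \(Q'_{1}=\exp (-3d_{1,1}) Q_{1}\) with \(d_{1,1}=-5/18\) and the rounded \(\overline{\mathrm{MS}}\) scales \(Q_1=0.86\) and 0.78 GeV quoted in the text; the function name `mmom_pmc_scale` is hypothetical. Since the inputs are rounded to two digits, the output agrees with the quoted 1.99 and 1.80 GeV only to about 0.01 GeV.

```python
import math

# n_f coefficient of the MS-bar -> mMOM coupling relation (Landau gauge), from the text.
D_11 = -5.0 / 18.0


def mmom_pmc_scale(q1_msbar: float) -> float:
    """PMC scale in the mMOM scheme, Q1' = exp(-3 d_{1,1}) * Q1."""
    return math.exp(-3.0 * D_11) * q1_msbar


# Rounded MS-bar PMC scales in GeV, with and without the relativistic correction.
for label, q1 in (("with relativistic correction", 0.86),
                  ("without relativistic correction", 0.78)):
    print(f"{label}: Q1 = {q1:.2f} GeV -> Q1' = {mmom_pmc_scale(q1):.2f} GeV")
```

The conversion factor is \(\exp (5/6)\approx 2.30\), so the mMOM scale is more than twice the \(\overline{\mathrm{MS}}\) one and lies well above 1 GeV.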

To do the numerical calculation, we adopt the c-quark and b-quark running masses as the \(\mathrm {\overline{MS}}\)-scheme ones [1]: \(\overline{m}_c(\overline{m}_c)=(1.27\pm 0.03)\) GeV and \(\overline{m}_b(\overline{m}_b)=(4.18_{-0.03}^{+0.04})\) GeV. By using the relation between the pole mass \(m_Q\) and the \(\mathrm {\overline{MS}}\)-scheme running mass \(\bar{m}_Q\) [36,37,38,39]:

we obtain \(m_c=1.49\pm 0.03\) GeV. To be consistent, we adopt the two-loop \(\alpha _s\)-running, whose behavior is fixed by using the reference point \(\alpha _s(m_Z)=0.1181\pm 0.0011\) [1]. We also adopt \(\left\langle {{v^2}} \right\rangle _{\eta _c}=0.430\,\mathrm{GeV}^2/m_c^2\) [40, 41].
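To give a feeling for the size of the relativistic expansion parameter, the short Python sketch below evaluates \(\left\langle v^2 \right\rangle _{\eta _c}=0.430\,\mathrm{GeV}^2/m_c^2\) for the central pole mass and for the edges of the quoted \(\pm 0.03\) GeV range. It only restates the inputs given above; the function name `v2_eta_c` is hypothetical.

```python
# Numerator of <v^2> for the eta_c in GeV^2, as quoted in the text.
V2_NUMERATOR_GEV2 = 0.430


def v2_eta_c(m_c_gev: float) -> float:
    """Dimensionless <v^2> = 0.430 GeV^2 / m_c^2 for a given c-quark pole mass in GeV."""
    return V2_NUMERATOR_GEV2 / m_c_gev**2


# Central value and the +-0.03 GeV variation of the pole mass.
for m_c in (1.46, 1.49, 1.52):
    print(f"m_c = {m_c:.2f} GeV -> <v^2> = {v2_eta_c(m_c):.3f}")
```

For \(m_c=1.49\) GeV this gives \(\left\langle v^2 \right\rangle \approx 0.19\), so the velocity expansion parameter is not particularly small for charmonium.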

Numerical results of the QCD asymptotic scales \(\Lambda _\mathrm{{\overline{MS}}}\) and \(\Lambda _\mathrm{mMOM}\) under Landau gauge are listed in Table 1, where the errors are dominantly caused by the uncertainty \(\Delta \alpha _s(m_Z)=\pm 0.0011\). The asymptotic scales for different schemes satisfy the relation [11, 35], \({\Lambda _\mathrm{{mMOM}}}/ {\Lambda _\mathrm{{\overline{MS}}}} = \exp ({2D_1}/{\beta _0})\).
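The relation between the two asymptotic scales can be evaluated directly from the coefficients quoted above. The following minimal Python sketch computes \(\exp (2D_1/\beta _0)\) with \(D_1=\frac{169}{144}N_C-\frac{5}{18}n_f\) and \(\beta _0=11-\frac{2}{3}n_f\) for \(n_f=3,4,5\); the function name `lambda_ratio` is hypothetical. For \(N_C=3\) the exponent simplifies algebraically to \((507-40n_f)/(792-48n_f)\), which serves as a cross-check. The sketch gives only the ratio, not the absolute values listed in Table 1.

```python
import math

N_C = 3  # number of colours


def lambda_ratio(n_f: int) -> float:
    """Lambda_mMOM / Lambda_MSbar = exp(2 D_1 / beta_0), Landau gauge, as given in the text."""
    d1 = 169.0 / 144.0 * N_C - 5.0 / 18.0 * n_f
    beta0 = 11.0 - 2.0 / 3.0 * n_f
    return math.exp(2.0 * d1 / beta0)


for n_f in (3, 4, 5):
    # Equivalent closed form of the exponent for N_C = 3, used as a consistency check.
    closed_form = math.exp((507.0 - 40.0 * n_f) / (792.0 - 48.0 * n_f))
    print(f"n_f = {n_f}: ratio = {lambda_ratio(n_f):.4f} (closed form {closed_form:.4f})")
```

The ratio is about 1.8 for three active flavors and decreases slowly as \(n_f\) increases.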

As a cross-check, by using the same input parameters, we obtain the same \(\overline{\mathrm{MS}}\)-scheme prediction for R under conventional scale-setting as that of Ref. [13]. For the reasons listed above, we adopt the mMOM-scheme in the following discussion.

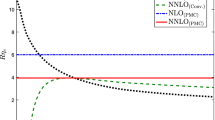

Fig. 1. The ratio R at the NLO level versus the initial choice of \(\mu \) under the mMOM scheme, with \(m_c=1.49\) GeV. The symbol “\(-r\)” stands for relativistic corrections. For conventional scale setting, the sensitivity to \(\mu \) is very large. After applying the PMC, R is independent of the choice of \(\mu \).

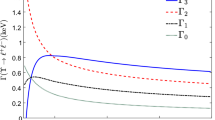

We present R at the NLO level versus the initial choice of \(\mu \) in Fig. 1, under the mMOM scheme, both before and after applying the PMC. Under conventional scale setting, R shows a strong scale dependence and decreases as \(\mu \) increases. More explicitly, by varying \(\mu \) from \(m_c\) to \(4m_c\), the ratio R changes from \(\sim 9\times 10^3\) to \(\sim 3\times 10^3\). After applying the PMC, the PMC scale \(Q'_1\) is the same for any choice of \(\mu \), leading to a scale-independent prediction. The relativistic correction brings an extra \(\sim 2\%\) contribution to the conventional prediction and a \(\sim 14\%\) contribution to the PMC prediction. Thus the relativistic correction is important, especially for the PMC prediction. Figure 1 shows that if one chooses \(\mu =Q'_1\), the value of R under conventional scale setting equals the PMC one.

After applying the PMC, owing to the elimination of divergent renormalon terms such as \(n! \beta _0^n\alpha _s^n\), the pQCD series becomes more convergent. We present the LO and NLO terms of R before and after applying the PMC in Table 2. We define a parameter \(\kappa =R_\mathrm{{NLO}} / R_\mathrm{{LO}}\) to show the relative importance of the NLO term with respect to the LO term. Table 2 confirms that a better pQCD convergence can be achieved by applying the PMC. A larger \(\kappa \) and a larger scale uncertainty for each term indicate that one cannot get the exact value of each term by using a guessed scale suggested by conventional scale-setting.

Analyzing the pQCD series in detail, we observe that the scale errors for conventional scale-setting are rather large for each term, and a possible net small scale error for a pQCD approximant is due to correlations/cancelations among different orders. On the other hand, due to the fact that the running of \(\alpha _s\) at each order has its own \(\{\beta _i\}\)-series governed by the renormalization group equation, the \(\beta \)-pattern for the pQCD series at each order is a superposition of all the \(\{\beta _i\}\)-terms which govern the evolution of the lower-order \(\alpha _s\) contributions at this particular order. Thus, inversely, the PMC scale at each order is determined by the known \(\beta \)-pattern, and the individual terms of R at each order shall be well determined.

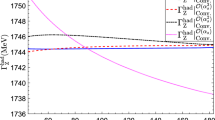

Fig. 2. Uncertainties of R under the mMOM-scheme from the c-quark pole mass \(m_c\) and the asymptotic scale \(\Lambda _\mathrm{mMOM}\), where the error bars are the quadrature sums of the errors from those two sources. The symbol “\(-r\)” stands for the corresponding relativistic corrections. The experimental value of Ref. [1] is presented for comparison.

We present the theoretical uncertainties for the conventional and the PMC scale settings in Fig. 2, in which the errors are the quadrature sums of those from the choices of the c-quark pole mass \(m_c\) and the asymptotic scale \(\Lambda _\mathrm{mMOM}\). For comparison, the experimental value of Ref. [1] is also presented. Under conventional scale-setting, Fig. 2 shows that the errors caused by \(m_c\) and \(\Lambda _\mathrm{mMOM}\) are smaller than those under PMC scale-setting; this advantage is however overwhelmed by the quite large scale uncertainty. For example, the value of R with [or without] relativistic corrections varies within the large region of \(\left( 4.12^{+4.69}_{-1.50}\right) \times 10^3\) [or \(\left( 4.21^{+5.06}_{-1.56}\right) \times 10^3\)] for \(\mu \in [m_c,4m_c]\). Under PMC scale-setting, the scale uncertainty is greatly suppressed, and the uncertainty of R is dominated by the choices of the two parameters \(m_c\) and \(\Lambda _\mathrm{mMOM}\), which give about a \(10\%\) contribution to R. The value of R decreases as \(m_c\) increases, and increases as \(\Lambda _\mathrm{mMOM}\) increases. More explicitly, we have

where the first error is for \(m_c\in [1.46, 1.52]\) GeV and the second one is caused by taking \(\Lambda _\mathrm{mMOM}\) to be the values listed in Table 1.

Figure 2 shows that the conventional prediction of R, with or without the relativistic correction, deviates from the data by about \(3.6\sigma \). This discrepancy becomes even larger when the NNLO term is included [8], which led the authors there to doubt the validity of NRQCD theory for this particular observable (see footnote 2 under Notes). However, Fig. 2 shows that after applying the PMC, the pQCD prediction and the data are consistent with each other within reasonable errors even at the NLO level. The situation for the branching ratio 1 / R is similar. This indicates that the large discrepancy between the data and the pQCD prediction is caused by an improper choice of renormalization scale, and that a simple guessed scale may lead to a false prediction or a false conclusion. Thus a proper setting of the renormalization scale is important for lower-order predictions.
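The size of the deviation can be estimated roughly in Python from the numbers quoted in the abstract. The text does not state how the \(3.6\sigma \) figure was obtained, so the sketch below rests on an assumption: the difference between the experimental and theoretical central values is divided by the quadrature sum of the experimental error and the theoretical error on the side facing the data. The function name `pull` is hypothetical.

```python
import math


def pull(r_exp: float, err_exp: float, r_th: float, err_th: float) -> float:
    """Deviation in units of sigma, combining both errors in quadrature (assumed recipe)."""
    return abs(r_exp - r_th) / math.hypot(err_exp, err_th)


# All values in units of 10^3, taken from the text; the upper theory errors are used
# because both predictions lie below the experimental central value.
R_EXP, ERR_EXP = 6.3, 0.5
conv = pull(R_EXP, ERR_EXP, 4.12, 0.30)  # conventional scale setting, with relativistic correction
pmc = pull(R_EXP, ERR_EXP, 6.09, 0.62)   # PMC scale setting, with relativistic correction

print(f"conventional: {conv:.1f} sigma")
print(f"PMC:          {pmc:.1f} sigma")
```

Under this simple recipe the conventional prediction lies between 3.5 and 4 standard deviations from the data, of the same size as the quoted \(3.6\sigma \), while the PMC prediction is well within one standard deviation.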

As a final remark, one may also calculate R by using the predictions for the decay widths (1) and (2) separately. Taking all input parameters at the central values of our present choices, we obtain \(R_\mathrm{Conv.,mMOM-r}\sim 2.57\times 10^{-3}\) and \(R_\mathrm{PMC,mMOM-r}\sim 2.64\times 10^{-3}\), both of which are quite different from our above predictions (11,13). Thus there are large differences between those two treatments of R, which start at the \(\alpha _s^4\)-order level. Such large differences can be explained by the weak pQCD convergence seen in Table 2, and they should be suppressed by including more loop terms. We prefer the usually adopted way of using Eq. (4) to calculate R, in which the uncertainty from the LDME is suppressed and there is no factorization scale dependence up to the NNLO level.

In summary, we have studied the ratio of the \(\eta _c(1S)\) decay rate into hadrons over its decay rate into photons by applying the PMC. The PMC provides a systematic way to set the optimal renormalization scale for high-energy processes, and its prediction is free of initial renormalization scale dependence at any fixed order. A more convergent pQCD series can be achieved, and the residual scale dependence due to unknown higher-order terms is highly suppressed. Figure 2 shows that the large discrepancy between the data and the pQCD prediction obtained with a guessed scale suggested by conventional scale-setting can be cured by applying the PMC. The PMC, with its solid physical and theoretical background, greatly improves the precision of standard model tests, and it can be applied to a wide variety of perturbatively calculable processes.

Notes

The choice of pole mass avoids the ambiguity of using \(\overline{\mathrm{MS}}\)-mass for separating the renormalization group involved \(\beta \)-terms [7].

The NNLO results given in Ref. [8] cannot be conveniently adopted for setting the PMC scales. We would need to confirm which \(n_{f}\)-terms are conformal and which are not, so the scale-setting procedures are much more involved. We also think that such a complex NNLO calculation needs to be confirmed by other groups.

References

[1] C. Patrignani et al., Particle Data Group, Chin. Phys. C 40, 100001 (2016)

[2] G.T. Bodwin, E. Braaten, G.P. Lepage, Phys. Rev. D 51, 1125 (1995)

[3] R. Barbieri, E. d’Emilio, G. Curci, E. Remiddi, Nucl. Phys. B 154, 535 (1979)

[4] K. Hagiwara, C.B. Kim, T. Yoshino, Nucl. Phys. B 177, 461 (1981)

[5] H.K. Guo, Y.Q. Ma, K.T. Chao, Phys. Rev. D 83, 114038 (2011)

[6] Y. Jia, X.T. Yang, W.L. Sang, J. Xu, JHEP 1106, 097 (2011)

[7] S.Q. Wang, X.G. Wu, X.C. Zheng, G. Chen, J.M. Shen, J. Phys. G 41, 075010 (2014)

[8] F. Feng, Y. Jia, W.L. Sang, arXiv:1707.05758

[9] A. Czarnecki, K. Melnikov, Phys. Lett. B 519, 212 (2001)

[10] F. Feng, Y. Jia, W.L. Sang, Phys. Rev. Lett. 115, 222001 (2015)

[11] D.M. Zeng, S.Q. Wang, X.G. Wu, J.M. Shen, J. Phys. G 43, 075001 (2016)

[12] S.Q. Wang, X.G. Wu, S.J. Brodsky, M. Mojaza, Phys. Rev. D 94, 053003 (2016)

[13] G.T. Bodwin, Y.Q. Chen, Phys. Rev. D 64, 114008 (2001)

[14] M. Beneke, V.M. Braun, Phys. Lett. B 348, 513 (1995)

[15] B.S. DeWitt, Phys. Rev. 162, 1195 (1967)

[16] D.E. Groom et al., Particle Data Group, Eur. Phys. J. C 15, 1 (2000)

[17] S.J. Brodsky, G.P. Lepage, P.B. Mackenzie, Phys. Rev. D 28, 228 (1983)

[18] S.J. Brodsky, X.G. Wu, Phys. Rev. D 85, 034038 (2012)

[19] S.J. Brodsky, L. Di Giustino, Phys. Rev. D 86, 085026 (2012)

[20] M. Mojaza, S.J. Brodsky, X.G. Wu, Phys. Rev. Lett. 110, 192001 (2013)

[21] S.J. Brodsky, M. Mojaza, X.G. Wu, Phys. Rev. D 89, 014027 (2014)

[22] S.J. Brodsky, X.G. Wu, Phys. Rev. D 86, 054018 (2012)

[23] S.J. Brodsky, P. Huet, Phys. Lett. B 417, 145 (1998)

[24] M. Gell-Mann, F.E. Low, Phys. Rev. 95, 1300 (1954)

[25] S.J. Brodsky, H.J. Lu, Phys. Rev. D 51, 3652 (1995)

[26] X.G. Wu, S.J. Brodsky, M. Mojaza, Prog. Part. Nucl. Phys. 72, 44 (2013)

[27] X.G. Wu, Y. Ma, S.Q. Wang, H.B. Fu, H.H. Ma, S.J. Brodsky, M. Mojaza, Rept. Prog. Phys. 78, 126201 (2015)

[28] X.G. Wu, S.Q. Wang, S.J. Brodsky, Front. Phys. (Beijing) 11, 111201 (2016)

[29] M. Binger, S.J. Brodsky, Phys. Rev. D 74, 054016 (2006)

[30] X.C. Zheng, X.G. Wu, S.Q. Wang, J.M. Shen, Q.L. Zhang, JHEP 1310, 117 (2013)

[31] S.J. Brodsky, V.S. Fadin, V.T. Kim, L.N. Lipatov, G.B. Pivovarov, JETP Lett. 70, 155 (1999)

[32] M. Hentschinski, A. Sabio Vera, C. Salas, Phys. Rev. Lett. 110, 041601 (2013)

[33] W. Celmaster, R.J. Gonsalves, Phys. Rev. Lett. 42, 1435 (1979)

[34] W. Celmaster, R.J. Gonsalves, Phys. Rev. Lett. 44, 560 (1980)

[35] L. von Smekal, K. Maltman, A. Sternbeck, Phys. Lett. B 681, 336 (2009)

[36] N. Gray, D.J. Broadhurst, W. Grafe, K. Schilcher, Z. Phys. C 48, 673 (1990)

[37] D.J. Broadhurst, N. Gray, K. Schilcher, Z. Phys. C 52, 111 (1991)

[38] K.G. Chetyrkin, M. Steinhauser, Phys. Rev. Lett. 83, 4001 (1999)

[39] K. Melnikov, T. van Ritbergen, Phys. Lett. B 482, 99 (2000)

[40] G.T. Bodwin, H.S. Chung, D. Kang, J. Lee, C. Yu, Phys. Rev. D 77, 094017 (2008)

[41] H.S. Chung, J. Lee, C. Yu, Phys. Lett. B 697, 48 (2011)

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant No. 11625520.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

Du, BL., Wu, XG., Zeng, J. et al. The \(\eta _c\) decays into light hadrons using the principle of maximum conformality. Eur. Phys. J. C 78, 61 (2018). https://doi.org/10.1140/epjc/s10052-018-5560-3

DOI: https://doi.org/10.1140/epjc/s10052-018-5560-3