Abstract

The Muskingum model is a popular hydrologic flood routing technique; however, the accurate estimation of model parameters challenges the effective, precise, and rapid-response operation of flood routing. Evolutionary and metaheuristic optimization algorithms (EMOAs) are well suited for parameter estimation task associated with a wide range of complex models including the nonlinear Muskingum model. However, more proficient frameworks requiring less computational effort are substantially advantageous. Among the EMOAs teaching–learning-based optimization (TLBO) is a relatively new, parameter-free, and efficient metaheuristic optimization algorithm, inspired by the teacher-student interactions in a classroom to upgrade the overall knowledge of a topic through a teaching–learning procedure. The novelty of this study originates from (1) coupling TLBO and the nonlinear Muskingum routing model to estimate the Muskingum parameters by outflow predictability enhancement, and (2) evaluating a parameter-free algorithm’s functionality and accuracy involving complex Muskingum model’s parameter determination. TLBO, unlike previous EMOAs linked to the Muskingum model, is free of algorithmic parameters which makes it ideal for prediction without optimizing EMOAs parameters. The hypothesis herein entertained is that TLBO is effective in estimating the nonlinear Muskingum parameters efficiently and accurately. This hypothesis is evaluated with two popular benchmark examples, the Wilson and Wye River case studies. The results show the excellent performance of the “TLBO-Muskingum” for estimating accurately the Muskingum parameters based on the Nash–Sutcliffe Efficiency (NSE) to evaluate the TLBO’s predictive skill using benchmark problems. The NSE index is calculated 0.99 and 0.94 for the Wilson and Wye River benchmarks, respectively.

Similar content being viewed by others

Introduction

The complex nature of flood events may challenge the straightforward, rapid, and accurate hydrological analysis. On the other hand, accurate and rapid-response modeling and simulation are necessary for flood routing in terms of minimization of damages and economic costs1. Hydrograph routing is a technique employed to simulate the changes in the shape of a flood hydrograph as inflow charges through a river channel or a reservoir2,3. These methods can be generally classified into two categories: hydraulic and hydrologic models4. The former models, such as Hydrologic Engineering Center River Analysis System (HEC-RAS) and MIKE11, are relatively complex compared to hydrologic routing models due to their dependency on solving the continuity, the energy, and/or the momentum equations5,6. What’s more, hydraulic routing models require detailed data and information river geometry and Manning's roughness coefficient, whose determination is time consuming and expensive and challengeable for calibration purposes4. On the other hand, hydrologic routing models, such as the Muskingum method, are more popular because of their simplicity, even though hydraulic routing methods are more accurate and have a more physically-based foundation. Yet, the robust calibration of hydrologic models has improved their accuracy to acceptable levels7. The three-parameter nonlinear Muskingum model features storage-time parameter (\(K\)), dimensionless river reach weighting factor (\(\chi\)), and dimensionless nonlinear flood wave (m) parameters, which must be estimated by traditional mathematical techniques or evolutionary and metaheuristic optimization algorithms (EMOAs)8.

Among the mathematical techniques one can cite the segmented least-squares method (S-LSM) by Gill9, univariate least squares (ULS) by Heggen10, least squares (LS) by Aldama11, the nonlinear least-squares method (N-LSM) by Yoon and Padmanabhan12, feasible sequential quadratic programming13, the Lagrange multiplier (LM) method by Das14, the Broyden–Fletcher–Goldfarb–Shannon (BFGS) technique by Geem15, and the Newton-type trust region algorithm (NTRA) by Sheng et al.16, among others. These mentioned techniques are relatively straightforward; yet, they are computationally burdensome and are commonly trapped into local optima. Moreover, the performance of the mathematical techniques is highly dependent on the quality of an initial search point, which means that the search for a solution is likely to converge to a local optimum, instead of a global optimum if the initial search point is not selected near the unknown global solution17.

Among the EMOAs are the genetic algorithm (GA) by Mohan18, harmony search (HS) by Kim et al.17 and by Geem et al.19, the ant colony algorithm (ACA) by Zhan and Xu20, the gray-encoded accelerating genetic algorithm (GEAGA) by Chen and Yang21, particle swarm optimization (PSO) by Chu and Chang22, the immune clonal selection algorithm (ICSA) by Luo and Xie23, the parameter setting free harmony search (PSF-HS) algorithm by Geem8, the imperialist competitive algorithm (ICA) by Tahershamsi and Sheikholeslami24, multi-objective particle swarm optimization (MOPSO) by Azadnia and Zahraie25, differential evolution (DE) by Xu et al.26, a combination of the simulated annealing (SA) algorithm and hybrid harmony search algorithm (HHSA) by Karahan et al.27, modified honey-bee mating optimization (MHBMO) algorithm by Niazkar and Afzali28, the backtracking search algorithm (BSA) by Yuan et al.29, Weed Optimization Algorithm (WOA) for extended nonlinear Muskingum model by Hamedi et al.30, PSO for a new form of Muskingum (four-parameter Muskingum model proposed by Easa31) by Moghaddam et al.32, modified PSO by Norouzi and Bazargan33, hybrid modified honey-bee mating (HMHBM) algorithm by Niazkar and Afzali34, bat algorithm (BA) by Farzin et al.35, wolf pack algorithm (WPA) by Bai et al.36, shark algorithm (SA) by Farahani et al.37. These and other algorithms have been previously applied to estimate the three parameters of the nonlinear form of the Muskingum parameters (\(K\), \(\chi\), and m). These EMOAs achieve an acceptable accuracy in the estimation of Muskingum parameters that are near global optima; yet, they must be pre-calibrated to assure their computational efficiency and accuracy. Sarzaeim et al.38 reported that all EMOAs involve controlling parameters including (1) general parameters, and (2) specific parameters. The group (1) parameters are required for all optimization algorithms (e.g., population size and number of iterations), whereas the group (2) parameters are specified by the optimization algorithms. In contrast, the relatively new teaching–learning-based optimization (TLBO) proposed by Rao et al.39 is a parameter-less method, i.e., TLBO does not involve controlling parameters. For purpose of illustration in comparison with other well-known EMOAs the GA features at least two specific parameters (the mutation and cross-over rates), and PSO involves at least three algorithmic parameters (learning factors, the variation of weight, and maximum velocity parameters)39. The recently-developed Cat Swarm Optimization (CSO) algorithm features four algorithmic parameters (seeking memory pool, seeking the range of selected dimension, counts of dimension to change, and self-position consideration)40. The Flower Pollination Algorithm (FPA) features four specific control parameters (the size of initial population, the scale factor for controlling step size, the levy distribution parameter, and the switch probability)41. Therefore, the calibration of the three-parameter nonlinear Muskingum model with each of the above EMOAs turns into a calibration of two sets of parameters, three for the routing model and those specific to the optimizing algorithm. The important advantage that distinguishes a parameter-free algorithm from other optimization algorithms stems from the fact that the output solutions of EMOAs are highly sensitive to the values of specific algorithmic parameters. Garousi-Nejad42 discussed how the quality performance of EMOAs are highly dependent on the tuning of algorithmic parameters. The main issue is that the optimization search may be stopped at local optima rather than achieving convergence to the global, best, solution. Optimization performance is highly sensitive to the specific algorithmic parameters pre-calibration, and sensitivity analysis is necessary to assign suitable values of the algorithmic parameters5. Moreover, Okkan and Kirdemir43 demonstrated less computational effort and faster convergence for optimization algorithms like PSO by developing modified algorithms with fewer control parameters. The cited studies seek to apply EMOAs with minimum controlling parameters.

This study proposes the TLBO algorithm coupled with nonlinear Muskingum routing to estimate the parameters of the flood routing model to overcome the limitations of the search techniques and the calibration of evolutionary algorithmic parameters. TLBO is a metaheuristic search algorithm with a significant merit that distinguishes it from other cited optimization algorithms because it does not involve algorithmic parameters39. In other words, its application does not require a pre-calibration process which leads to faster and more efficient estimation of Muskingum’s parameters. Proper calibration of the algorithmic parameters implies time-consuming computations and effort44. The novelty of this study is the application of a parameter-free TLBO, which constitutes a significant advantage in estimating the three parameters of the nonlinear Muskingum model. This work evaluates the “TLBO-Muskingum” framework’s performance The successful TLBO application in this work may encourage its use in other hydrologic problems.

This study couples the parameter-free TLBO and the nonlinear Muskingum model to estimate the model’s parameters by optimizing outflow predictions (Fig. 1). The hypothesis herein entertained is that “TLBO-Muskingum” can accurately predict outflow with less computational effort because it does not involve algorithm calibration. The TLBO’s performance is assessed by applying it to two well-known flood routing benchmark problems, relying on the Nash–Sutcliffe Efficiency (NSE) index as a hydrological performance criterion to evaluate the TLBO’s accuracy in outflow prediction.

Methods

This study is structured as follow: the nonlinear Muskingum model is briefly presented in “The nonlinear Muskingum flood-routing model”. The TLBO algorithm, its functionality, and flowchart are described in “The teaching-learning-based optimization (TLBO) algorithm”. in detail. Next, the “TLBO-Muskingum” framework is introduced in “Linking TLBO to the Muskingum model”. The results from two flood routing benchmarks are discussed in “Results and discussion”, and the concluding remarks are presented in “Concluding remarks”.

The nonlinear Muskingum flood-routing model

The relation between stream flow and reach storage is nonlinear; therefore, the original linear form of the Muskingum flood-routing model has been superseded by the following continuity and nonlinear Muskingum model, respectively8,9,45:

where \(S_{t}\), \(I\), and \(O_{t}\) denote the channel storage (with dimension of L3) of a river reach, rate of inflow with dimension of L3/T to a river reach, and rate of outflow (with dimension of L3/T) to a river reach, respectively, at time t; \(K\), \(\chi\), and m = storage-time constant parameter, dimensionless weighting factor, and dimensionless parameter related to the nonlinearity of the flood wave, respectively. The following Eq. (3) is derived from Eq. (2):

Substituting Eq. (3) in Eq. (1) and taking the derivate of \(S_{t}\) with respect to time produces:

Equation (4) is an ordinary first-order, nonlinear (when \(m\ne 1)\), differential equation that does not have an analytical solution. Instead, Eq. (4) is routinely solved numerically. The following conditions are applied in the numerical solution of equation: (1) the inflow hydrograph \(I_{t}\) is known, and (2) the initial inflow equals the initial outflow \(O_{1} ( = I_{1} )\) Assumption (2) and Eq. (2) imply that \(S_{1} ( = KO_{1}^{m} )\). Given values of the Muskingum parameters (\(K\),\(\chi\), and m) and applying the numerical discretization of the time derivative in Eq. (4) generates a recursive equation for reach storage \(S_{t + 1}\) written in Eq. (5):

in which Δt denotes the time step of hydrograph simulation. Therefore, the outflow \(O_{t}\) is calculated with Eq. (6) as follows:

The values of the parameters \(K\),\(\chi\), and m must be calibrated to achieve accurate outflow predictions with Eq. (6). Parameter calibration and hydraulic prediction can be achieved efficiently and accurately with EMOAs. The next section describes TLBO for the estimation of the Muskingum parameters with this parameter-free metaheuristic optimization algorithm in detail.

The teaching–learning-based optimization (TLBO) algorithm

Teaching–learning-based optimization (TLBO) is a population-based, meta-heuristic, optimization algorithm inspired by the swarm intelligence of a population seeking to change from a current situation to an optimal situation by overall knowledge improvement (i.e. grades) of students in a classroom39. The peculiar feature of TLBO is that it does not require algorithmic parameters for its implementation other than general parameters ubiquitous to all evolutionary optimization algorithms such as population size and the number of iterations. Recall the GA features crossover and mutation rates, whose values affect the optimization performance and the accuracy of results38.

TLBO starts searching for the optimal solution of a well-posed problem with an initial population whose members’ scores are the values of the decision variables, such as grades earned by students. TLBO strives to improve the population’s quality by means of a “Teacher Phase” and a “Learner Phase” to achieve a solution that is very near to the globally optimal solution. In the following the “Teacher Phase” and “Learner Phase” are discussed.

In a classroom the teacher has the highest-level knowledge of a particular topic and endeavors to teach the students to advance their overall knowledge, and, consequently, raise their individual and average grades in exams. In the “Teacher Phase” the person with the best grade is considered as a teacher making efforts to transfer knowledge to the other learners (i.e. students) in a class. I Suppose there are n students in a class and that they have an average grade \({M}_{i}\) in exam i. The most successful student with the best grade \({X}_{T,i}\) in exam i among the n students plays the teacher’s role. The difference between the teacher’s level and the average level of knowledge (\({Diff}_{i}\)) in exam i is expressed as follows:

in which, \({r}_{i}=\) random number in \(\left[\mathrm{0,1}\right]\), and \({T}_{F}=\) a random number that accounts for the teacher factor that depends on teaching quality, and equals either 1 or 2.

By teaching and transferring knowledge to students their new, improved, grades in the next exam are defined by Eq. (9):

where, \(X^{\prime}_{j,i}\) and \(X_{j,i} =\) new and old grades of student j in exam i, respectively.\(X_{j,i}\) is transferred to the “Learner Phase” if the old grade is better than the new one, otherwise \(X^{\prime}_{j,i}\) is transferred.

In the “Learner Phase” the top students help their peers to improve their knowledge through, for example, team work in assignments, leading to new grade improvement (\(X^{\prime\prime}\)). This helping interaction between two students A and B in each exam i is defined as follow:

This teacher-students interaction is the fundamental inspiration for TLBO, in which the number of students in the class is the algorithm population size and the number of exams is the number of iterations. The TLBO steps are defined as follow:

-

(1)

Define the population size, the number of iterations, and the objective function.

-

(2)

Randomly initialize the grades (\({X}_{i,j}\)) of n students (j = 1, 2, …, n) in exam i = 1.

-

(3)

Evaluate the objective function for n students in exam i.

-

(4)

Select the student with the best grade as teacher and calculate \({Diff}_{i}\) for exam i.

-

(5)

To calculate \(X^{\prime}_{j,i}\) for n students in exam i.

-

(6)

Compare \(X_{j,i}\) and \(X^{\prime}_{j,i}\) and select the better one for transferring to the next step.

-

(7)

Select randomly each pair of students and calculate \(X_{j,i}^{^{\prime\prime}}\).

-

(8)

Compare \(X^{\prime}_{j,i}\) and \(X_{j,i}^{^{\prime\prime}}\) and select the better one for transferring to the next step.

-

(9)

Evaluate the objective function for all students, check whether the stop criterion is satisfied (the optimal solution is achieved), otherwise the algorithm will iterate from step (4).

A more in-depth description of TLBO can be found in Refs.38,39,46.

Linking TLBO to the Muskingum model

Figure 2 depicts the flowchart of the algorithmic “TLBO-Muskingum” method to estimate the Muskingum model parameters (i.e. \(K\),\(\chi\), and m). The algorithm starts by defining the population size (number of students), number of iterations (number of exams), and the objective function. Next, the initial population of Muskingum’s parameters is generated randomly. The flood hydrograph is then simulated with Eqs. (5) and (6). The values of the objective function for each sequence of scores earned by the students are calculated following the Muskingum simulation. The next step improves the current population of decision variables (i.e., the estimates of \(K\),\(\chi\), and m) by calculating the mean value of the objective function and selecting the best solution as the teacher of the population. Afterward, the population of parameters is updated in the teaching phase (i.e. moving the population toward the teachers’ sequence of simulated outflows) and the learning phase (i.e. updating the population based on the interaction between the students). In other words, the new population of parameters is generated with the modifier operator such that each student (or parameter estimate) starts moving towards the best solution in the population by means of the linear and random base equation (this is the Teacher Phase). Furthermore, the improvement of the population is guided by the interactions between students using a linear equation based on the difference between their positions (this is the so-called Learner Phase). The Muskingum simulation is repeated with the improved or updated population and the objective function is re-evaluated. The optimal solution is reported whenever the user-specified termination criterion is satisfied. Otherwise, the iterations involving improvement of the current population, Muskingum simulation, evaluation of objective functions, and assessment of the termination criterion proceed until convergence is achieved.

The population size is set equal to 100, the number of iterations is 500, and the objective function is expressed as below:

where, \(SSD\) is the sum of the squared deviation between the observed and simulated outflows at time interval t, \({O}_{i}\) is the observed outflow at time interval i, and \({\widehat{O}}_{i}\) is the simulated outflow at time interval i.

Results and discussion

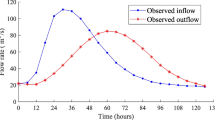

The performance of the “TLBO-Muskingum” in estimating the three-parameters of nonlinear Muskingum model is evaluated with two well-known benchmark problems: (1) the Wilson cased study47, and (2) the River Wye in the United Kingdom48, both with one single peak flood event. The former one is a popular benchmark problem based on the data provided by Wilson (1974) and has been employed commonly in several studies9,14,22,50,51,52,53 to be linked to EMOAs for estimation of nonlinear Muskingum model’s parameters. The minor lateral nature of the flow makes this case study ideal for flood routing studies48.

The second case study is based on flood event of the River Wye in Dec 1960 in the United Kingdom49. The 69–75 km riverbed characteristics (without tributaries and small lateral inflow) make it ideal for flood-routing calibration purposes.

Along with objective function evaluation (SSD), the Nash–Sutcliffe Efficiency (NSE) is herein calculated for more precise evaluation of flood routing and optimization performance in hydrological point of view. The NSE is a normalized index of error variance53 that measures the predictive skill of hydrologic models. It takes value in the range of (− ∞, 1]. The closer NSE is to 1, the more accurately the hydrological model performs54. The NSE is defined as follows:

where NSE is the Nash–Sutcliffe Efficiency index, \({O}_{i}\) is the observed outflow at time interval i, \({\widehat{O}}_{i}\) is the simulated outflow at time interval i, and \(\overline{O }\) is the average of observed outflow.

The “TLBO-Muskingum” has been implemented for 10 runs for each of the benchmark problems. The objective function values in all 500 iterations have been plotted in Fig. 3. It is shown in Fig. 3 that in both case studies the converges was achieved by iteration 500, the best objective function is 169.3 and 102,511.9 for the Wilson and Wye River benchmarks, respectively. For more precise insight, the zoom-in objective function values have been extracted up to iteration 50 in Fig. 3, which depicts the fast convergence of TLBO in reaching the optimal solution.

Figure 3 also illustrates how all 10 runs reached the same objective function, which results in negligible difference between the 10 runs for each benchmark problem. The calculated average, minimum (min), maximum (max), and standard deviation (SD) statistics of all the runs are listed in Tables 1 and 2 for the Wilson and Wye River benchmarks, respectively. These statistics are not significantly different at each time step and the SD values are small (the average standard deviation between the simulated outflows in all time steps for all 10 runs is \(6\times {10}^{-6}\) and \(7\times {10}^{-5}\) for the Wilson and Wye River examples, respectively). The small SD values stem from the high convergence capability of the TLBO in outflow simulations, which confirms the high reliability and robustness of “random-based” TLBO method in flow prediction. The average values of the simulated outflow were used for further analysis. It is important to notice that the results from the 10 runs were calculated without calibration of any algorithmic parameters. The observed and average simulated outflows timeseries calculated with the optimal values of the Muskingum parameters are listed in Tables 3 and 4 for the Wilson and Wye River case studies, respectively. The SSD as objective function from calculated outflows along with the NSE index as a standard hydrological performance metric are listed in Tables 3 and 4, as well. The calculated SSD is shown as function of the iteration number in Fig. 3. The NSE values clearly show how accurately the “TLBO-Muskingum” simulates the outflows, and consequently how the three parameters of Muskingum are estimated precisely. The NSE value for the Wilson case study is 0.99, demonstrating the excellent performance of the optimization process. The NSE equals 0.94 regarding the Wye River, also showing high algorithmic accuracy. Recall that the closer the NSE to 1, the more accurate the model prediction is.

Figure 4 depicts the timeseries of the observed and simulated hydrographs for case studies 1 and 2, along with the inflows. The simulated outflow hydrographs correspond to the average estimates of the Muskingum parameters. It is seen in Fig. 4 the overall good fit between observations and predictions. The accuracy of the “TLBO-Muskingum” algorithm’s predictions is relatively higher for the first benchmark problem (NSE = 0.99 and R2 = 0.99, see Table 5). The accuracy is nevertheless high in the second case study (NSE = 0.94 and R2 = 0.97, see Table 5), except for the peak outflow: which is underestimated. This is evident in Fig. 5 illustrating the scatterplots of the observed and simulated outflows. Clearly in the case of the Wilson example the accuracy is high for the entire range of the outflows (R2 = 0.99), while as discussed earlier, the predictive skill of the TLBO-Muskingum method for estimating lower outflows is superior to that associated with the peak outflows. The prediction of high flows may be improved by using a longer time series in the training phase of the Muskingum model. Furthermore, applying TLBO to the four-parameter Muskingum model may lead to better performance of the flood routing model with respect to peak flows, which may be addressed in future work.

Table 5 lists the optimal values of the Muskingum’s parameters calculated by TLBO (\(K\),\(\chi\), and m), the NSE, and the average run time obtained with 10 runs. The average run time for the Wilson and Wye examples equal 2.531 and 3.488 s, respectively. This shows the rapid convergence of the TLBO-Muskingum method.

For comparison purposes, the results from coupling GA18 and PSO22 to three-parameter Muskingum flood routing for Wilson example with the same objective function have been extracted and presented in Fig. 6. The sensitivity analysis has been implemented in the application of both GA and PSO to increase the accuracy of optimization-simulation Muskingum flood routing18,22 which is computationally expensive and time-consuming, additionally the optimization perform sensitive to the algorithmic parameters to reach the global optima. The SSD value for fine-tuned GA and PSO are 23.0 and 36.9, respectively. In addition, deviation of peak flood (DPO) and deviation of peak time (DPOT) have been considered for comparison. The results show that all three optimization algorithms are equally efficient in terms of DPOT (DPOTTLBO = DPOTGA = DPOTPSO = 0), while TLBO outperforms the GA and PSO in terms of DPO; DPOTLBO = 0.42, DPOGA = 0.70, and DPOPSO = 0.60. This demonstrates the TLBO’s capability to estimate the peak flood value which is a critical value in flood routing accuracy. It should be noted that the performances of the GA and PSO were obtained after parameter calibration, while TLBO reached the solutions without algorithmic parameter tuning.

Concluding remarks

There are many evolutionary optimization algorithms such as the Genetic Algorithm (GA) and Particle Swarm Optimization (PSO) among well-known ones, and Cat Swarm Optimization (CSO) and Flower Pollination Algorithm (FPA) among recently developed ones. These algorithms calculate near global optimal solutions for complex problems. Yet, their performance relies on the pre-calibration of algorithmic parameters, for there is no deterministic method for their assignment. The specification of evolutionary algorithmic parameters is commonly guided by experienced gained with similar optimization problems, if available. This paper implemented TLBO to estimate the three parameters of the nonlinear Muskingum models. TLBO was coupled with a nonlinear Muskingum flood routing model to make optimal predictions of outflow hydrographs by means of \(K\),\(\chi\), and m calibration, which is the major challenge in Muskingum application for flood routing purposes. The coupling of TLBO with Muskingum routing bypassed the need for specific algorithmic optimization parameters, which are not required in TLBO. The results of NSE index (0.99 and 0.94 for the Wilson and Wye River examples, respectively) demonstrate the excellent performance of TLBO for estimating the parameter values rapidly without requiring fine-tuning of optimizing algorithmic parameters. The excellent performance of TLBO with the 3-parameter Muskingum model makes the TLBO a suitable candidate to tackle the 4-parameter Muskingum routing problem in future works, which may lead to even better accuracy of Muskingum flood routing.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Giordano, R. et al. Modelling the complexity of the network of interactions in flood emergency management: The Lorca flash flood case. J. Environ. Model. Softw. 95, 180–195 (2017).

McCarthy, G. T. The unit hydrograph and flood routing. In Proc., Conf. of the North Atlantic Division, U.S. Army Corps of Engineers, New London, Conn. (1938).

Tewolde, M. H. & Smithers, J. C. Flood routing in ungauged catchments using Muskingum methods. Water SA 32(3), 379–388 (2006).

Song, X., Kong, F. & Zhu, Z. Application of Muskingum routing method with variable parameters in ungauged basin. Water Sci. Eng. 4(1), 1–12 (2011).

Aboutalebi, M., Bozorg Haddad, O. & Loaiciga, H. A. Application of the SVR-NSGAII to hydrograph routing in open channels. J. Irrig. Drain. Eng. https://doi.org/10.1061/(ASCE)IR.1943-4774.0000969 (2016).

Niazkar, M. & Afzali, S. Parameter estimation of an improved nonlinear Muskingum model using a new hybrid method. Hydrol. Res. 48(5), 1253–1267 (2017).

Chow, V. T., Maidment, D. & Mays, L. Applied Hydrology (McGraw-Hill, 1988).

Geem, Z. W. Parameter estimation of the nonlinear Muskingum model using parameter-setting-free harmony search algorithm. J. Hydrol. Eng. 16(8), 684–688 (2011).

Gill, M. A. Flood routing by Muskingum method. J. Hydrol. 36(3–4), 353–363 (1978).

Heggen, R. J. Univariate least squares Muskingum flood routing. Water Resour. Bull. 20(1), 103–107 (1984).

Aldama, A. Least-squaresparameter estimation for Muskingum flood routing. J. Hydraul. Eng. 4(580), 580–586 (1990).

Yoon, J. & Padmanabhan, G. Parameter estimation of linear and nonlinear Muskingum models. J. Water Resour. Plan. Manag. 119(5), 600–610 (1993).

Kshirsagar, M. M., Rajagopalan, B. & Lal, U. Optimal parameter estimation for Muskingum routing with ungauged lateral inflow. J. Hydrol. 169(1–4), 25–35. https://doi.org/10.1016/0022-1694(94)02670-7 (1995).

Das, A. Parameter estimation for Muskingum models. J. Irrig. Drain. Eng. 130(2), 140–147 (2004).

Geem, Z. Parameter estimation for the nonlinear Muskingum model using the BFGS technique. J. Irrig. Drain. Eng. 5(474), 474–478 (2006).

Sheng, Z., Ouyang, A., Liu, L. & Yuan, G. A novel parameter estimation method for Muskingum model using new Newton-type trust region algorithm. Math. Probl. Eng. https://doi.org/10.1155/2014/634852 (2014).

Kim, J. H., Geem, Z. W. & Kim, E. S. Parameter estimation of the nonlinear Muskingum model using harmony search. J. Am. Water Resour. Assoc. 37(5), 1131–1138 (2001).

Mohan, S. Parameter estimation of nonlinear Muskingum models using genetic algorithm. J. Hydraul. Eng. 2(137), 137–142 (1997).

Geem, Z. W., Kim, J. H. & Yoon, Y. N. Parameter calibration of the nonlinear Muskingum model using harmony search. J. Korea Water Resour. Assoc. 33(S1), 3–10 (2000).

Zhan, S. C. & Xu, J. Application of ant colony algorithm to parameter estimation of Muskingum Routing Model. J. Nat. Disasters 14(5), 20–24 (2005).

Chen, J. & Yang, X. Optimal parameter estimation for Muskingum model based on Gray-Encoded Accelerating Genetic algorithm. Commun. Nonlinear Sci. Numer. Simul. 12(5), 849–858 (2007).

Chu, H. & Chang, L. Applying particle swarm optimization to parameter estimation of the nonlinear Muskingum model. J. Hydraul. Eng. 14(9), 1024–1027 (2009).

Luo, J. & Xie, J. Parameter estimation for nonlinear Muskingum model based on immune clonal selection algorithm. J. Hydrol. Eng. 15(10), 844–851 (2010).

Tahershamsi, A. & Sheikholeslami, R. Optimization to identify Muskingum model parameters using imperialist competitive algorithm. Int. J. Optim. Civ. Eng. 1(3), 475–484 (2011).

Azadnia, A. & Zahraie, B. Optimization of nonlinear Muskingum method with variable parameters using multi-objective particle swarm optimization. In Proceeding of World Environmental and Water Resources Congress, Rhode Island, USA, 16–20 May (2010).

Xu, D., Qiu, L. & Chen, S. Estimation of nonlinear Muskingum model parameter using differential evolution. J. Hydrol. Eng. 17(2), 348–353 (2012).

Karahan, H., Gurarslan, G. & Geem, Z. Parameter estimation of the nonlinear Muskingum flood-routing model using a hybrid harmony search algorithm. J. Hydrol. Eng. 18(3), 352–360 (2013).

Niazkar, M. & Afzali, S. Assessment of modified honey bee mating optimization for parameter estimation of nonlinear Muskingum models. J. Hydrol. Eng. https://doi.org/10.1061/(ASCE)HE.1943-5584.0001028 (2014).

Yuan, X., Wu, X., Tian, H., Yuan, Y. & Adnan, R. Parameter identification of nonlinear Muskingum model with backtracking search algorithm. Water Resour. Manag. 30(8), 2767–2783 (2016).

Hamedi, F. et al. Parameter estimation of extended nonlinear Muskingum models with the weed optimization algorithm. J. Irrig. Drain. Eng. 142(12), 04016059 (2016).

Easa, S. M. New and improved four-parameter non-linear Muskingum model. Proc. Inst. Civ. Eng. Water Manag. 167(5), 288–298 (2014).

Moghaddam, A., Behmanesh, J. & Farsijani, A. Parameters estimation for the new four-parameter nonlinear Muskingum model using the Particle Swarm Optimization. Water Resour. Manag. 30(7), 2143–2160 (2016).

Norouzi, H. & Bazargan, J. Flood routing by linear Muskingum method using two basic floods data using Particle Swarm Optimization (PSO) algorithm. Water Sci. Technol. Water Supply 20(5), 1897–1908 (2020).

Niazkar, M. & Hosein Afzali, S. New nonlinear variable-parameter Muskingum models. KSCE J. Civ. Eng. 21(7), 2958–2967 (2017).

Farzin, S. et al. Flood routing in river reaches using a three-parameter Muskingum model coupled with an improved bat algorithm. Water 10(9), 1130 (2018).

Bai, T., Wei, J., Yang, W. & Huang, Q. Multi-objective parameter estimation of improved Muskingum model by wolf pack algorithm and its application in Upper Hanjiang River, China. Water 10(10), 1415 (2018).

Farahani, N.,et al. A new method for flood routing utilizing four-parameter nonlinear Muskingum and shark algorithm. Water Resour. Manag. 33, 1–15 (2019).

Sarzaeim, P., Bozorg-Haddad, O. & Chu, X. Teaching-learning-based optimization (TLBO) algorithm. In Advanced Optimization by Nature-Inspired Algorithms. Studies in Computational Intelligence Vol. 720 (ed. Bozorg-Haddad, O.) (Springer, 2018). https://doi.org/10.1007/978-981-10-5221-7_6.

Rao, R. V., Savsani, V. J. & Vakharia, D. P. Teaching-learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput. Aided Des. 43(3), 303–315 (2011).

Bahrami, M., Bozorg-Haddad, O., & Chu, X. Cat Swarm Optimization (CSO) algorithm. In Advanced Optimization by Nature-Inspired Algorithms, Studies in Computational Intelligence, (ed. Omid Bozorg-Haddad) vol. 720, 9–18, (Springer, 2018).

Azad, M., Bozorg-Haddad, O., & Chu, X. Flower pollination algorithm (FPA). In Advanced Optimization by Nature-Inspired Algorithms, Studies in Computational Intelligence, vol. 720, 59–67 (Springer, 2018).

Garousi-Nejad, I., Bozorg-Haddad, O. & Loáiciga, H. Modified firefly algorithm for solving multireservoir operation in continuous and discrete domains. J. Water Resour. Plan. Manag. https://doi.org/10.1061/(ASCE)WR.1943-5452.0000644 (2016).

Okkan, U. & Kirdemir, U. Locally tuned hybridized particle swarm optimization for the calibration of the nonlinear Muskingum flood routing model. J. Water Clim. Change 11(1S), 343–358 (2020).

Rao, R. V. Introduction to optimization. In Teaching Learning Based Optimization Algorithm. (ed. Rao, R. Venkata.) 1–8 (Springer International Publishing, 2016).

Tung, Y. K. River flood routing by nonlinear Muskingum method. J. Hydraul. Eng. 111(12), 1447–1460 (1985).

Rao, R. V. & Kalyankar, V. D. Parameters optimization of modern machining processes using teaching-learning-based optimization algorithm. Eng. Appl. Artif. Intell. 26(1), 524–531 (2013).

Wilson, E. M. Engineering Hydrology (MacMillan Education Ltd., 1974).

Karahan, H., Gurarslan, G. & Geem, Z. W. A new nonlinear Muskingum flood routing model incorporating lateral flow. Eng. Optim. 47(6), 737–749 (2015).

Natural Environment Research Council (NERC). Flood Studies Report, Vol. 3: Flood Routing Studies. (NERC, 1975).

Barati, R. Application of Excel Solver for parameter estimation of the nonlinear Muskingum models. KSCE J. Civ. Eng. 17(5), 1139–1148 (2013).

Vatankhah, A. R. Discussion of parameter estimation of the nonlinear Muskingum flood-routing model using a hybrid Harmony Search algorithm by HalilKarahan, GurhanGurarslan, and Zong Woo Geem. J. Hydrol. Eng. 19(4), 839–842 (2014).

Easa, S. M. Evaluation of nonlinear Muskingum model with continuous and discontinuous exponent parameters. KSCE J. Civ. Eng. 19(7), 2281–2290 (2015).

Bozorg-Haddad, O., Hamedi, F., Fallah-Mehdipour, E., Orouji, H. & Marino, M. A. Application of a hybrid optimization method in Muskingum parameter estimation. J. Irrig. Drain. Eng. https://doi.org/10.1061/(ASCE)IR.1943-4774.0000929,04015026 (2015).

Nash, J. E. & Sutcliffe, J. V. River flow forecasting through conceptual models part I-A discussion of principles. J. Hydrol. 10(3), 282–290 (1970).

Acknowledgements

The corresponding author thanks Iran’s National Science Foundation (INSF) for its support of this research.

Author information

Authors and Affiliations

Contributions

O.B.-H.; First author, corresponding author, conceptualization; funding acquisition; methodology; project administration; supervision; validation; visualization; roles/writing—original draft. P.S.; Second author, data curation; investigation; formal analysis; resources; roles/writing—original draft. H.A.L.; Third author, validation; visualization; writing—review & editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bozorg-Haddad, O., Sarzaeim, P. & Loáiciga, H.A. Developing a novel parameter-free optimization framework for flood routing. Sci Rep 11, 16183 (2021). https://doi.org/10.1038/s41598-021-95721-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-021-95721-0

- Springer Nature Limited

This article is cited by

-

Investigation of river water pollution using Muskingum method and particle swarm optimization (PSO) algorithm

Applied Water Science (2024)

-

Study and verification on an improved comprehensive prediction model of landslide displacement

Bulletin of Engineering Geology and the Environment (2024)

-

Estimation of Muskingum's equation parameters using various numerical approaches: flood routing by Muskingum's equation

International Journal of Environmental Science and Technology (2024)

-

Muskingum Models’ Development and their Parameter Estimation: A State-of-the-art Review

Water Resources Management (2023)

-

Structural-optimized sequential deep learning methods for surface soil moisture forecasting, case study Quebec, Canada

Neural Computing and Applications (2022)