Abstract

The inevitable accumulation of errors in near-future quantum devices represents a key obstacle in delivering practical quantum advantages, motivating the development of various quantum error-mitigation methods. Here, we derive fundamental bounds concerning how error-mitigation algorithms can reduce the computation error as a function of their sampling overhead. Our bounds place universal performance limits on a general error-mitigation protocol class. We use them to show (1) that the sampling overhead that ensures a certain computational accuracy for mitigating local depolarizing noise in layered circuits scales exponentially with the circuit depth for general error-mitigation protocols and (2) the optimality of probabilistic error cancellation among a wide class of strategies in mitigating the local dephasing noise on an arbitrary number of qubits. Our results provide a means to identify when a given quantum error-mitigation strategy is optimal and when there is potential room for improvement.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Introduction

Recent advances in quantum technologies have resulted in the availability of noisy intermediate-scale quantum (NISQ) devices, promising advantages of quantum information processing by controlling tens to hundreds of qubits1,2. However, inevitable noise remains a critical roadblock for their practical use; every gate has a chance of error, and their continuing accumulation will eventually destroy any potential quantum advantage. While quantum error correction enables in-principle means to suppress such error indefinitely, they involve measuring error syndromes and making adaptive corrections. In contrast, NISQ devices often cannot adaptively execute quantum operations.

This technological hurdle has motivated the study of quantum error mitigation, resulting in a diverse collection of alternative techniques (e.g., zero-error noise extrapolation3,4,5,6,7,8, probabilistic error cancellation3,9,10,11,12,13, and virtual distillation14,15,16,17,18,19). All share in common that they avoid adaptive operations. Instead, error-mitigation algorithms suppress errors by sampling available noisy devices many times and classically post-processing these measurement outcomes. Such techniques generally have drastically reduced technological requirements, providing potential near-term solutions for suppressing errors in other NISQ algorithms (e.g., variational algorithms for estimating the ground state energy in quantum chemistry20,21,22,23).

The performance of these algorithms is typically analyzed on a case-by-case basis. While this is crucial for understanding the value of a particular methodology in a specific practical context, it leaves open a fundamental question: What is the ultimate potential of quantum error mitigation? The motivation to answer this question parallels the development of heat engines. There, Carnot’s theorem allows us to understand the ultimate efficiency of all possible heat engines24, allowing us to know what is physically forbidden and enabling a universal means to understand what specific engines have the greatest room for potential improvement.

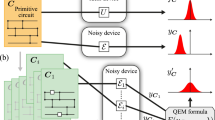

Here, we initiate a research program toward characterizing the ultimate limits of quantum error mitigation. We propose a framework to formally define error mitigation as any strategy that requires no adaptive quantum operations (see Fig. 1). We introduce maximum estimator spread as a universal benchmark for error-mitigation performance—a quantity that tells us how many extra runs of a NISQ device guarantee that outputs are within some desired accuracy threshold. We then derive fundamental lower bounds for this spread—that no current or yet-undiscovered error-mitigation strategy can violate. Our bounds are represented in terms of the reduction in the distinguishability of quantum states due to the noise effect, providing an operational understanding of the cost for error mitigation.

A A major goal of many near-term algorithms is to estimate the expectation value of some observable A, when acting on the output ψ of some idealized computation U applied to some input ψin. B However, noise prevents the exact synthesis of ψ. Quantum error-mitigation protocols assist to estimate the true expectation value \(\langle A\rangle ={{{\rm{Tr}}}}(A\psi )\) without using the adaptive quantum operations necessary in general error correction. This is done by (1) using available NISQ devices to synthesize N distorted quantum states \({\{{{{{\mathcal{E}}}}}_{n}(\psi )\}}_{n = 1}^{N}\) and (2) acting some physical process \({{{\mathcal{P}}}}\) on these distorted states to produce a random variable EA that approximates A. This procedure can then be repeated over M rounds to draw M samples of EA, whose mean is used to estimate 〈A〉. C We can characterize the efficacy of such protocol by (1) its spread ΔeA, the difference between maximum and minimum possible values of EA and (2) the bias \({b}_{A}(\psi )=\left\langle {E}_{A}\right\rangle -\langle A\rangle\). Here we derive ultimate lower bounds on ΔeA for each given bias that no such error-mitigation protocol can exceed, as well as tighter bounds when \({{{\mathcal{P}}}}\) is restricted only to coherent interactions over Q noisy devices at a time. This then tells us how many times \({{{\mathcal{P}}}}\) must be executed to estimate 〈A〉 within some desired accuracy and failure probability.

We then illustrate two immediate consequences of our general bounds. The first is in the context of mitigating local depolarizing noise in variational quantum circuits20,25. We show that the maximum estimator spread grows exponentially with circuit depth for the general error-mitigation protocol, confirming a suspicion that the well-known exponential growing estimation error observed in several existing error-mitigation techniques3,26 is a consequence of the fundamental obstacle shared by the general error-mitigation strategies. Our second study shows that probabilistic error cancellation—a prominent method of error mitigation—minimizes the maximum estimator spread when mitigating local dephasing noise acting on an arbitrary number of qubits. These results showcase how our bounds can help rule out what error-mitigation performance targets are unphysical, and identify what methods are already near-optimal.

Results

Framework

Our framework begins by introducing a formal definition of error mitigation. Consider an ideal computation described by (1) application of some circuit U to some input ψin (2) measurement of the output state ψ in some arbitrary observable A (see Fig. 1A). In realistic situations, however, there is noise, such that we have only access to NISQ devices capable of preparing some certain distorted states \({{{\mathcal{E}}}}(\psi )\). The aim is then to retrieve desired output data specified by \(\langle A\rangle ={{{\rm{Tr}}}}(A\psi )\). Here, we assume \(-{\mathbb{I}}/2\le A\le {\mathbb{I}}/2\) without loss of generality. This is because any observable O can be shifted and rescaled to some A satisfying this condition, from which full information of O can be recovered. For instance, if we are interested in a non-identity Pauli operator P, which has eigenvalues ±1, we instead consider an observable A = P/2. Note also that while ψ is pure in many practically relevant instances, our analysis applies equally when ψ is mixed.

We consider NISQ devices with no capacity to execute adaptive quantum operations. That is, they cannot enact different quantum operations conditioned on a measurement outcome. We then refer to an algorithm aimed to estimate 〈A〉 under such constrained devices as an error-mitigation strategy. Each error-mitigation strategy involves sampling NISQ devices configured in N settings for some integer N. Denote the states generated by these configurations by \({{{{\mathcal{E}}}}}_{1}(\psi ),\ldots ,{{{{\mathcal{E}}}}}_{N}(\psi )\), with effective noise channels \({\{{{{{\mathcal{E}}}}}_{i}\}}_{i = 1}^{N}\), where these effective noise channels can be different from each other in general. The effective noise channel is a non-adaptive operation that connects an ideal state to a distorted state and may be different from the actual noise channel that happens in the NISQ device. Nevertheless, one can always find such an effective noise channel given the descriptions of the actual noise channels and the idealized circuit U. The strategy then further describes some physical process \({{{\mathcal{P}}}}\)—which is independent of either the input ψin or the ideal output ψ—that takes these distorted states as input and outputs some classical estimate random variable EA of \({{{\rm{Tr}}}}(A\psi )\) (see Fig. 1B). The aim is to generate EA such that its expected value 〈EA〉 is close to \({{{\rm{Tr}}}}(A\psi )\). Each round of the protocol involves generating a sample of EA. M rounds of this procedure then enable us to generate M samples of EA, whose mean is used to estimate \({{{\rm{Tr}}}}(A\psi )\).

Each error-mitigation strategy can then be entirely described by its choice of \({{{\mathcal{P}}}}\) and \({\{{{{{\mathcal{E}}}}}_{i}\}}_{i = 1}^{N}\). Our most fundamental bound pertain to all possible choices. However, we can often make these bounds tighter in situations where further practical limitations constrain how many distorted states \({{{\mathcal{P}}}}\) can coherently interact. Error mitigation protocols under such constraints typically select N = KQ to a multiple of Q, such that the N distorted states are divided into K clusters, each containing Q distorted states. We label these as \({\{{{{{\mathcal{E}}}}}_{q}^{(k)}(\psi )\}}_{q = 1,k = 1}^{Q,K}\) for convenience. \({{{\mathcal{P}}}}\) is then constrained to represent (1) local measurement procedures M(k) that can coherently interact distorted states within the kth cluster (i.e., \({\{{{{{\mathcal{E}}}}}_{q}^{(k)}(\psi )\}}_{q = 1}^{Q}\)) to produce some classical interim outputs i(k) and (2) classical post-processing function eA that transform the interim outputs \({\{{i}^{(k)}\}}_{k = 1}^{K}\) into a sample of EA.

We name such a protocol as (Q, K)-error mitigation, and refer to the generation of each i(k) as an experiment. Each round of a (Q, K)-error mitigation protocol thus contains K experiments on systems of up to Q distorted states. We also summarize the above procedure in Fig. 2 and give a formal mathematical definition in Methods. Figure 3 and accompanying captions discuss how several prominent error-mitigation methods fit into this framework.

A (Q, K)-error mitigation protocol is motivated when practical considerations limit the maximum number of distorted states that our mitigation process \({{{\mathcal{P}}}}\) can coherently interact to Q. A general approach then divides these into K = ⌈N/Q⌉ groups of size Q. To estimate 〈A〉 of some ideal output state ψ, each round of (Q, K)-mitigation involves first using available NISQ devices to generate Q copies of each distorted states \({{{{\mathcal{E}}}}}_{q}^{(k)}(\psi )\), for each of k = 1, … K. These distorted states are then grouped together as inputs into K experiments, where each group consists of a single copy of each \({{{{\mathcal{E}}}}}_{q}^{(k)}(\psi )\). The kth experiment then involves applying some general (possibly entangling) POVM \(\{{M}_{{i}^{(k)}}^{(k)}\}\) on the kth grouping, resulting in measurement outcome i(k). Classical computing is then deployed to produce an estimate eA(i(1), … , i(K)) whose average after M rounds of the above process is used to estimate \({{{\rm{Tr}}}}(A\psi )\). Note that there can be additional quantum operations before the POVM measurements \(\{{M}_{{i}^{(k)}}^{(k)}\}\), but these can be absorbed into the description of the POVMs without loss of generality.

Our framework encompasses all commonly used error-mitigation protocols, a sample of which we outline here. A Probabilistic error cancellation3 assumes we can only act a single coherent state each round, where it seeks to undo a given noise map \({{{\mathcal{E}}}}\) by applying a suitable stochastic operation \({{{\mathcal{B}}}}\). Thus it corresponds to the case of Q = K = 1. B Rth order noise extrapolation assumes3,4 the capacity to synthesize R + 1 NISQ devices whose outputs represent distortions of ψ at various noise strengths. It then uses individual measurements of an observable A on these distorted states to estimate the observable expectation value on the zero-noise limit. Thus it is an example where Q = 1 and K = R + 1. C Meanwhile, R-copy virtual distillation14,15 involves running an available NISQ device R times to synthesize R copies of a distorted state \({{{\mathcal{E}}}}(\psi )\). Coherent interaction \({{{\mathcal{D}}}}\) over these copies followed by a suitable measurement MA then enables improved estimation of 〈A〉. Thus it is an example where K = 1 and Q = R. In the main text and Methods, we provide a detailed account of each protocol and how it fits within our framework.

Several comments on our error-mitigation framework are in order. We first note that, for a given set of noisy circuits that result in effective noise channels \({\{{{{{\mathcal{E}}}}}_{i}\}}_{i = 1}^{N}\), our framework assumes to apply an additional process \({{{\mathcal{P}}}}\) after the noisy circuits and does not include processes within the initial noisy circuits. Our framework thus excludes error correction, which employs adaptive processes integrated into noisy circuits. This allows our framework to differentiate error mitigation from error correction and makes it useful to investigate the limitations imposed particularly on the former.

One might think that this would overly restrict the scope of error mitigation, which could also use some processes in noisy circuits. This can be avoided by considering that such processes are already integrated into the description of effective noise channels \({\{{{{{\mathcal{E}}}}}_{i}\}}_{i = 1}^{N}\). In other words, the effective noise channel can be considered as a map that connects an ideal state to a distorted state affected by not only a noise channel but non-adaptive processes accessible to a given near-term device; the error mitigation process \({{{\mathcal{P}}}}\) is then an additional process that follows them. This is manifested in the Rth order noise extrapolation in Fig. 3B, in which R different noise levels realized on a near-term device are represented by the set \({\{{{{{\mathcal{E}}}}}_{i}\}}_{i = 1}^{R}\) of effective noise channels.

More broadly, taking appropriate effective noise channels allows our framework to include error-mitigation protocols that employ modified circuits. Namely, if \({\{{{{{\mathcal{N}}}}}_{i}\}}_{i = 1}^{N}\) are the noisy circuits that an error-mitigation protocol employs and \({{{\mathcal{U}}}}\) is the ideal circuit, then such an error-mitigation strategy is encompassed in our framework with \({{{{\mathcal{E}}}}}_{i}={{{{\mathcal{N}}}}}_{i}\circ {{{{\mathcal{U}}}}}^{{\dagger} }\). This, for instance, includes the conventional strategy of probabilistic error cancellation applied to a noisy circuit, in which a probabilistic operation is applied after every noisy gate.

We also remark that our framework leaves the freedom of how to choose the round number M and the sample number N = KQ per round for a given shot budget; if the total shot budget is T, one is free to choose any N and M such that T = NM. As we describe shortly, our results in Theorem 1 and Corollary 2 are concerned with the number of rounds M, and they apply to any choice of shot allocation. However, our results become most informative by choosing as large M (equivalently, as small N) as possible. The strategies in Fig. 3 admit small N’s that do not scale with the total shot budget, representing examples for which our results give fruitful insights into their round number M. On the other hand, some strategies that employ highly nonlinear computation on the measurement outcomes (e.g., exponential noise extrapolation11, subspace expansion27) require a large N, in which case our results on the round number M can have a large gap from the actual sampling cost.

Our framework also allows one to assume some pre-knowledge prior to the error-mitigation process. For instance, this includes the information about the underlying noise or some pre-computation that error-mitigation process can use in its strategy. The results in Theorem 1 and Corollary 2 then give information about the round number M given such pre-knowledge. Since the process of obtaining the pre-knowledge itself may be considered as a part of error-mitigation process, there are many possible divisions between the pre-computation and the error-mitigation process. Our results apply to any choice of pre-knowledge, and this can be flexibly chosen depending on one’s interest. For instance, R-copy virtual distillation can be considered as a (R, 1)-error mitigation (that is, N = R) as in Fig. 3C under the pre-knowledge of an eigenvalue of the noisy state, which is one of the settings discussed in ref. 14 (see also Methods). This pre-knowledge allows for a small choice of N, making the estimation of the round number M by our method insightful. Another example includes the Clifford Data Regression28, which can employ a linear regression based on a pattern learned from a training set. By considering the first learning step as the pre-computation, our results provide a meaningful bound for the sampling cost in the latter stage in which the output from the circuit of interest is compared to the model estimated from the training set.

Up to the flexibility described above, our framework encompasses a broad class of error-mitigation strategies proposed so far3,4,11,14,15,27,28,29,30,31,32.

Quantifying performance

The performance of an error-mitigation protocol is determined by how well the random variable EA governing each estimate aligns with \({{{\rm{Tr}}}}(A\psi )\). We can characterize this by (1) its bias, representing how close 〈EA〉 is to the ideal expectation value \({{{\rm{Tr}}}}(A\psi )\) and (2) its spread, representing the amount of intrinsic randomness within EA.

A protocol’s bias quantifies the absolute minimum error with which it can estimate \({{{\rm{Tr}}}}(A\psi )\), given no restrictions on how many rounds it can run (i.e., samples of EA it can draw). Mathematically, this is represented by the difference \({b}_{A}(\psi )=\langle {E}_{A}\rangle -{{{\rm{Tr}}}}(A\psi )\). Since the error-mitigation strategy should work for an arbitrary state ψ and observable A, we can introduce the maximum bias:

to bound the bias of an error-mitigation protocol in estimating expectation values over all output states and observables of interest. Hereafter, we will also assume \({b}_{\max }\le 1/2\), as this condition must be satisfied for any meaningful error-mitigation protocol. This is because a maximum bias of 1/2 can always be achieved by the trivial “error-mitigation” protocol that outputs eA = 0 regardless of ψ or A.

Of course, having \({b}_{\max }=0\) still does not guarantee an effective error-mitigation protocol. Each sample of EA will also deviate from \({{{\rm{Tr}}}}(A\psi )\) due to intrinsic random error. The greater this randomness, the more samples we need from EA to ensure that the mean of our samples is a reliable estimate of its true expectation value 〈EA〉. The relation is formalized by Hoeffding’s inequality33. Namely, suppose \({\{{x}_{i}\}}_{i = 1}^{M}\) are M samples of a random variable X with xi ∈ [a, b], the number M of samples that ensures an estimation error \(| \left\langle X\right\rangle -{\sum }_{i}{x}_{i}/M| \,<\, \delta\) with probability 1 − ε is given by \(\frac{| a-b{| }^{2}}{2{\delta }^{2}}\log (2/\varepsilon )\propto | a-b{| }^{2}\). In our context, the latter quantity corresponds to the maximum spread in the outcomes of estimator function eA defined by:

where ΔeA is the difference between the maximum and minimum possible values that EA can take, i.e., \({{\Delta }}{e}_{A}:={e}_{A,\max }-{e}_{A,\min }\) where \({e}_{A,\max }:=\mathop{\max }\nolimits_{{i}^{(1)}\ldots {i}^{(K)}}{e}_{A}({i}^{(1)}\ldots {i}^{(K)})\) and \({e}_{A,\min }:=\mathop{\min }\nolimits_{{i}^{(1)}\ldots {i}^{(K)}}{e}_{A}({i}^{(1)}\ldots {i}^{(K)})\).

\({{\Delta }}{e}_{\max }\) thus directly relates to the sampling cost of an error-mitigation protocol. Given an error-mitigation protocol whose estimates have maximum spread \({{\Delta }}{e}_{\max }\), it uses sample EA of order \({{{\mathcal{O}}}}({{\Delta }}{e}_{\max }^{2}\log (1/\varepsilon )/{\delta }^{2})\) times to ensure that its estimate of 〈EA〉 has accuracy δ and failure rate ε. Therefore, we may think of \({{\Delta }}{e}_{\max }\) as a measure of computational cost or feasibility. Its exponential scaling with respect to the circuit depth, for example, would imply eventual intractability in mitigating associated errors in a class of non-shallow circuits.

We note that if the variance of EA happens to be small, the actual sampling cost required to achieve the accuracy δ and failure rate ε can be smaller than the estimate based on the maximum spread. In this sense, \({{\Delta }}{e}_{\max }\) quantifies the round number M that one would practically use in the worst-case scenario. However, knowing the variance of EA beforehand is a formidable task in general, and the worst-case estimate gives a useful benchmark to assess the feasibility of a given error-mitigation strategy in such situations.

Fundamental limits

Our main contribution is to establish a universal lower bound on \({{\Delta }}{e}_{\max }\). Our bound then determines the number of times an error-mitigation method samples EA (and thus the number of times we invoke a NISQ device) to estimate A within some tolerable error.

To state the bound formally, we utilize measures of state distinguishability. Consider the scenario where Alice prepares a quantum state in either ρ and σ and challenges Bob to guess which is prepared. The trace distance \({D}_{{{{\rm{tr}}}}}(\rho ,\sigma )=\frac{1}{2}\parallel \rho -\sigma {\parallel }_{1}\) (where ∥ ⋅ ∥1 is the trace norm) then represents the quantity such that Bob’s optimal probability of guessing correctly is \(\frac{1}{2}(1+{D}_{{{{\rm{tr}}}}}(\rho ,\sigma ))\). When ρ and σ describe states on K-partite systems S1 ⊗ ⋯ ⊗ SK, we can also consider the setting in which Bob is constrained to local measurements, resulting in the optimal guessing probability \(\frac{1}{2}(1+{D}_{{{{\rm{LM}}}}}(\rho ,\sigma ))\) where DLM is the local distinguishability measure34 (see also Methods). In our setting, we identify each local subsystem Sk with a system corresponding to the kth experiment in Fig. 2. We are then in a position to state our main result:

Theorem 1 Consider an arbitrary (Q, K)-mitigation protocol with maximum bias \({b}_{\max }\). Then, its maximum spread \({{\Delta }}{e}_{\max }\) is lower bounded by:

where \({\tilde{\psi }}_{Q}^{(K)}:={\otimes }_{k = 1}^{K}{\otimes }_{q = 1}^{Q}\left[{{{{\mathcal{E}}}}}_{q}^{(k)}(\psi )\right]\) and \({\tilde{\phi }}_{Q}^{(K)}:={\otimes }_{k = 1}^{K}{\otimes }_{q = 1}^{Q}\left[{{{{\mathcal{E}}}}}_{q}^{(k)}(\phi )\right]\) are distorted states corresponding to the QK copies of some ideal outputs ψ and ϕ, and \({{{{\mathcal{E}}}}}_{q}^{(k)}\) is the effective noise channel for the qth input in the kth experiment.

Combining this with Hoeffding’s inequality leads to the following bound on the sampling cost.

Corollary 2 Consider an arbitrary (Q, K)-mitigation protocol with maximum bias \({b}_{\max }\). Then, an estimation error of \({b}_{\max }+\delta\) is realized with probability 1 − ε when the number of samples M satisfies:

where \({\tilde{\psi }}_{Q}^{(K)}:={\otimes }_{k = 1}^{K}{\otimes }_{q = 1}^{Q}\left[{{{{\mathcal{E}}}}}_{q}^{(k)}(\psi )\right]\) and \({\tilde{\phi }}_{Q}^{(K)}:={\otimes }_{k = 1}^{K}{\otimes }_{q = 1}^{Q}\left[{{{{\mathcal{E}}}}}_{q}^{(k)}(\phi )\right]\).

Theorem 1 and Corollary 2 offer two qualitative insights. The first is the potential trade-off between sampling cost and systematic error—we may reduce the sampling cost by increasing tolerance for bias. The second is a direct relation between sampling cost and distinguishability—the more a noise source degrades distinguishability between states, the more costly the error is to mitigate.

The intuition behind this relation rests on the observation that the error-mitigation process is a quantum channel. Thus, any error-mitigation procedure must obey data-processing inequalities for distinguishability. On the other hand, error mitigation aims to improve our ability to estimate expectation values of various observables, which would enhance our ability to distinguish between noisy states. The combination of these observations then implies that distinguishability places a fundamental constraint on required sampling costs to mitigate error. For details of the associated proof, see Methods.

Observe that our bound involves the local distinguishability DLM(ρ, σ) rather than the standard trace distance \({D}_{{{{\rm{tr}}}}}(\rho ,\sigma )\). This is due to the constraints we placed of \({{{\mathcal{P}}}}\) that limits it to coherently interacting the outputs of a finite number of NISQ devices—reflecting the hybrid nature of quantum error mitigation utilizing quantum and classical resources in tandem. Notably, these quantities coincide for the most powerful NISQ devices (the ones allowing coherent interactions between all N noisy initial states). This case then corresponds to the most fundamental bound:

which represents the ultimate performance limits of all (Q, K) error-mitigation protocols that coherently operate on N = QK distorted states each round.

We also remark that our framework can give tighter bounds when available error-mitigation methods involve specific states and observables (see Eq. (36)).

Alternative bounds

While the bounds derived above in terms of distinguishability have a clear operational meaning, its evaluation in realistic settings can face two significant hurdles. (1) It involves evaluating the distinguishability between two quantum states whose dimensions scale exponentially with KQ, making its evaluation costly for protocols that require many NISQ samples per round. (2) It requires that we have tomographic knowledge of the effective noise channels \({{{{\mathcal{E}}}}}_{q}^{(k)}\).

One potential means around this is to identify bounds on the distinguishability measures that alleviate such hurdles. For example, since \({D}_{{{{\rm{tr}}}}}(\rho ,\sigma )\le \sqrt{1-F(\rho ,\sigma )}\) for any pair of states ρ and σ where \(F(\rho ,\sigma ):={\left({{{\rm{Tr}}}}\sqrt{{\sigma }^{1/2}\rho {\sigma }^{1/2}}\right)}^{2}\) is the (squared) fidelity35, this, together with Eq. (5), implies:

This form only involves the computation of the trace distance and fidelity of single-copy states, both of which can be computed by semidefinite programming36.

Meanwhile, the need for tomographic knowledge of \({{{{\mathcal{E}}}}}_{q}^{(k)}\) can be mitigated by using subfidelity37:

The subfidelity bounds F(ρ, σ) from below, and thus also lower bounds the maximum spread:

subfidelity between two unknown states can be measured by a quantum computer using a circuit of constant depth38,39 (see also Methods). This obviates the need for tomographical data, while its low depth means that the noise in this process is typically much smaller than the noise in our circuits of interest. We remark that, instead of using the subfidelity, one could use an alternative quantity that lower bounds the fidelity that can be estimated by NISQ devices, e.g., truncated fidelity40. Such techniques could enable benchmarking protocols that allow us to rule out a candidate NISQ device should our bounds suggest their error profiles are too adverse to support any viable means of error mitigation.

In addition, the maximum in the right-hand sides of (6) and (8) do not need to be evaluated exactly; any choice of states ψ and ϕ provides a valid lower bound for the maximum spread. While these alternative bounds may not be as tight, they still serve as universal lower bounds that can put non-trivial constraints on the error-mitigation performance (see Remark 2 in Supplementary Note 1 and Supplementary Note 3).

Error-mitigating layered circuits

Quantitatively, the above bounds enable us to determine the ultimate performance limits of error mitigation given a particular set of imperfect quantum devices specified by error channels \(\{{{{{\mathcal{E}}}}}_{q}^{(k)}\}\). We now illustrate how this enables the identification of sampling overheads when performing error mitigation on a common class of NISQ algorithms—layered circuits used extensively in variational quantum eigensolvers41. Variational algorithms typically assume a quantum circuit consisting of multiple layers of unitary gates \({\{{U}_{l}\}}_{l = 1}^{L}\) acting on an n-qubit system. Indeed, as designed with NISQ applications in mind, they are key candidates for benchmarking of error-mitigation protocols7,42,43.

In particular, consider a local depolarizing noise25,44, in which the depolarizing channel \({{{{\mathcal{D}}}}}_{\epsilon }(\rho ):=(1-\epsilon )\rho +\epsilon {\mathbb{I}}/2\) acts on each qubit. A general approach to mitigate this error is to employ a (Q, K)-mitigation protocol for some Q and K, in which the kth experiment involves depolarizing noise with noise strength ϵk (Fig. 4).

Layered circuits are used extensively in variational algorithms for NISQ devices. They involve repeated layers of gates, each consisting of some unitary Ul. A standard noise model for such circuits involves the action of local depolarizing noise \({{{{\mathcal{D}}}}}_{\epsilon }\) on each qubit during each layer of the circuit. The kth experiment in a general (Q, K)-protocol involves running this circuit Q times to produce a distorted state \({\otimes }_{q = 1}^{Q}{{{{\mathcal{E}}}}}_{q}^{(k)}(\psi )\) with some noise strength ϵk—which possibly varies over different experiments. The protocol then measures each \({\otimes }_{q = 1}^{Q}{{{{\mathcal{E}}}}}_{q}^{(k)}(\psi )\) for k = 1, … , K and outputs an estimate EA through classical post-processing of the measurements results.

Taking U = UL ⋯ U2 U1 in Fig. 2 and applying Theorem 1 to this setting, we obtain the following bound (see Supplementary Note 1 for the proof).

Theorem 3 For an arbitrary (Q, K)-error mitigation with maximum bias \({b}_{\max }\) applied to n-qubit circuits with L-layer unitaries under local depolarizing noise, the maximum spread is lower bounded as:

where \({\epsilon }_{\min }:=\mathop{\min }\nolimits_{k}{\epsilon }_{k}\) is the minimum noise strength among K experiments.

Theorem 3 suggests that error-mitigation strategies encompassed in our framework will use exponentially many samples with respect to the circuit depth L. This validates our intuition that information should quickly get degraded due to the sequential noise effects, incurring exponential overhead to remove the accumulated noise effect.

We also remark that, although we here focus on the exponential growth of the maximum spread with respect to the circuit depth L for the sake of generality, one can expect that the maximum spread grows exponentially with the total gate number nQKL rather than just the layer number L in many practical cases.

Protocol benchmarking

Theorems 1 and 3 place strategy-independent bounds on the maximum spread for each Q and K and available noise channels \({{{{\mathcal{E}}}}}_{q}^{(k)}\), enabling us to identify the ultimate potential of error mitigation under various noise settings and operational constraints. Comparing this limit with that achieved by specific known methods of error mitigation then provides a valuable benchmark, helping us assess their optimality and quantify the potential room for improvement. We illustrate this here by considering probabilistic error cancellation3, while we discuss how our framework can be applied to other prominent error-mitigation protocols in Methods.

Probabilistic error cancellation is an error-mitigation protocol that produces an estimate of \({{{\rm{Tr}}}}(A\psi )\) using a distorted state \({{{\mathcal{E}}}}(\psi )\) each round (see Fig. 3A). It then fulfills the criteria of being a (1, 1)-protocol, i.e., Q = K = 1. Here, we assume that the description of the noise channels is given as pre-knowledge, in which case the estimator becomes unbiased, i.e., \({b}_{\max }=0\). Probabilistic error cancellation operates by identifying a complete basis of processes \({\{{{{{\mathcal{B}}}}}_{j}\}}_{j}\) such that \({{{{\mathcal{E}}}}}^{-1}={\sum }_{j}{c}_{j}{{{{\mathcal{B}}}}}_{j}\) for some set of real (but possibly negative) numbers \({\{{c}_{j}\}}_{j}\). Setting γ := ∑j∣cj∣, the protocol then (1) applies \({{{{\mathcal{B}}}}}_{j}\) to the noisy state \({{{\mathcal{E}}}}(\psi )\) with probability pj = ∣cj∣/γ, (2) measures A to get outcome aj, and (3) multiplies each outcome by \(\gamma \,{{{\rm{sgn}}}}({c}_{j})\) and takes the average.

In the context of our framework, we can introduce a quantum operation \({{{\mathcal{B}}}}\) that represents first initializing a classical register to a state j with probability pj and applying \({{{{\mathcal{B}}}}}_{j}\) to \({{{\mathcal{E}}}}(\psi )\) conditioned on j. Meanwhile, MA represents an A-measurement of the resulting quantum system combined with a measurement of the register, resulting in the outcome pair (aj, j). Taking \({e}_{A}^{{{{\rm{PEC}}}}}\left(({a}_{j},j)\right)=\gamma\, {{{\rm{sgn}}}}({c}_{j}){a}_{j}\), we see that the maximum spread of this estimator is given by:

a well-studied quantity that is already associated with the sampling overhead of probabilistic error cancellation3.

The optimal sampling cost γopt is then achieved by minimizing such γ over all feasible \({\{{{{{\mathcal{B}}}}}_{j}\}}_{j}\)45. Once computed for a specific noise channel \({{{\mathcal{E}}}}\), we can compare it to the lower bounds in Theorem 1 to determine if there is possible room for improvement.

Let us now consider local dephasing noise on an n-qubit system, where the dephasing noise \({{{{\mathcal{Z}}}}}_{\epsilon }(\rho ):=(1-\epsilon )\rho +\epsilon Z\rho Z\) acts on each qubit. We find that the optimal cost is obtained as:

This can be compared to the bound for \({{\Delta }}{e}_{\max }\) from Theorem 1 that applies to every mitigation protocol with Q = K = 1. Note that, since K = 1, \({D}_{{{{\rm{LM}}}}}={D}_{{{{\rm{tr}}}}}\). We then get:

Detailed computation to obtain (11) and (12) can be found in Supplementary Note 2. Remarkably, the two quantities—the maximum spread for the probabilistic error cancellation and the lower bound for arbitrary unbiased mitigation strategies with Q = K = 1—exactly coincide. This shows that probabilistic error cancellation achieves the ultimate performance limit of unbiased (1, 1)-protocols for correcting local dephasing noise for an arbitrary qubit number n.

We can also consider the d-dimensional depolarizing noise \({{{{\mathcal{D}}}}}_{\epsilon }^{d}(\rho )=(1-\epsilon )\rho +\epsilon {\mathbb{I}}/d\). The bound from Theorem 1 for this noise is obtained as:

which is slightly lower than \({{\Delta }}{e}_{\max }^{{{{\rm{PEC}}}}}=\frac{1+(1-2/{d}^{2})\epsilon }{1-\epsilon }\)45,46,47, with difference being O(ϵ). This suggests that probabilistic error cancellation is nearly optimal for this noise model, while still leaving the possibility for a better protocol to exist.

We can also apply similar techniques to study the performance of other prominent error-mitigation protocols. Here, we plot the estimator spread for probabilistic error cancellation, virtual distillation, and noise extrapolation, and their corresponding lower bounds for local dephasing noise (Fig. 5) and global depolarizing noise (Fig. 6). We note that, for virtual distillation and extrapolation, we evaluated (36) that allows us to bound ΔeA in (2) with a specific observable A of interest. We provide details for the evaluation of these values in Supplementary Note 2. We can observe that both protocols perform near-optimal limits at the low-error regime. At the high-error regime, their performance can diverge significantly from our lower bounds depending on underlying noise models and mitigation strategies. We emphasize that such divergences are expected because of the high generality of our lower bounds. Narrowing the gaps between the fundamental lower bounds and achievable maximum spread, e.g., finding more examples such as probabilistic error cancellation for local dephasing noise, will be a natural direction for future work.

Solid green curve: \({{\Delta }}{e}_{\max }\) for probabilistic error cancellation and the lower bound for unbiased (1, 1)-mitigation protocols, which coincide as explained in the main text. Brown curve: ΔeA with \(A=\frac{1}{2}{\otimes }_{i = 1}^{n}{X}_{i}\) for 2-copy virtual distillation with GHZ state inputs and the lower bound for (2, 1)-mitigation protocols with the same bias, which coincide as explained in Supplementary Note 2. Triangles and rectangles: ΔeA with \(A=\frac{1}{2}{\otimes }_{i = 1}^{n}{X}_{i}\) for 11th order noise extrapolation with GHZ state inputs (triangles) and a lower bound for (1, 12)-mitigation protocols with the same bias (rectangles).

Green curves: \({{\Delta }}{e}_{\max }\) for probabilistic error cancellation (dashed) and the lower bound for unbiased (1, 1)-mitigation protocols (solid). Brown curves: ΔeA with \(A=\frac{1}{2}{\otimes }_{i = 1}^{n}{X}_{i}\) for 2-copy virtual distillation with GHZ state inputs (dashed) and the lower bound for (2, 1)-mitigation protocols with the same bias (solid). Triangles and rectangles: ΔeA with \(A=\frac{1}{2}{\otimes }_{i = 1}^{n}{X}_{i}\) for 1st order noise extrapolation (triangles) and a lower bound for (1, 2)-mitigation protocols with the same bias (rectangles).

Discussion

Our work aimed to identify the ultimate performance limits of quantum error mitigation—a large class of techniques designed to estimate the outputs of ideal quantum circuits by post-processing measurement data from imperfect counterparts. This involved identifying a universal performance measure—applicable to any such error-mitigation protocols—that captures how many extra executions of available NISQ devices the protocol uses to ensure that its estimates are sufficiently close with some required probability of success. We then derived ultimate performance limits that pertain to all such error mitigation methods. The significance of our bounds parallels that of various fundamental converse bounds in quantum information (e.g., quantum communication48,49,50 and thermodynamics51,52,53), representing the ultimate performance limits that quantum error-mitigation protocols can never surpass. Our bounds particularly demonstrate that probabilistic error cancellation is optimal in the maximum spread to mitigate local dephasing noise among all unbiased error-mitigation protocols that involve no coherent interactions between multiple copies of distorted states, and imply that the exponential growth in the maximum spread on mitigating noise in layered circuits is an unavoidable feature shared by the general error-mitigation protocols.

We note that our performance bounds have focused on the scaling of M, representing how many rounds an error-mitigation protocol should be run to get a reliable estimate of some observable 〈A〉. Although this analysis is sufficient for many present methods of error mitigation, it is possible to also improve estimates of 〈A〉 by scaling the number of distorted outputs we process in a single round (e.g., extrapolation11 and subspace expansion27). While our framework in Fig. 1 encompasses such methodologies—and as such all bounds on estimation error apply—full understanding of the performance of such protocols would involve further investigation on how estimation error scales with respect to N or K. This then presents a natural direction for future research.

Our results also offer potential insights into several related fields. Non-Markovian dynamics have shown promise in decreasing sampling costs in error mitigation54. Since non-Markovianity is known to be deeply related to the trace distance55, our newly established relations between trace distance and quantum error mitigation hint at promising relations between the two fields. The second direction is to relate our general framework of quantum error mitigation to the established theory of quantum error correction. Quantum error correction concerns algorithms that prevent degrading the trace distance between suitably encoded logical states, while our results indicate that less reduction in trace distance can enable smaller error mitigation costs. Thus, our work provides a toolkit for identifying fundamental bounds in the transition from error mitigation to error correction as we proceed from NISQ devices toward scalable quantum computing. This then complements presently active research in error suppression that combines the two techniques56,57,58,59. Beyond error suppression, quantum protocols in many diverse settings also share the structure of classical post-processing of quantum measurements—from quantum metrology and illumination to hypothesis testing and stochastic analysis60,61,62,63,64. Our framework—suitably extended—could thus identify new performance bounds in each of these settings.

Methods

Formal definition of (Q, K)-error mitigation

Here, we give a formal definition of (Q, K)-error mitigation as a quantum operation. Since POVM measurements in different experiments are independent of each other, the whole measurement process can be represented as a tensor product of each POVM. Then, the classical post-processing following the measurement is a classical-classical channel such that the expected value of the output will serve as an estimate of the desired expectation value. We can then formalize an error-mitigation process as a concatenation of these two maps.

Definition 4 ((Q, K)-error mitigation). For an arbitrary observable A satisfying \(-{\mathbb{I}}/2\le A\le {\mathbb{I}}/2\), a (Q, K)-mitigation protocol—involving Q inputs and K experiments—is a concatenation of quantum-classical channel ΛA and classical-classical channel \({\hat{e}}_{A}\) as \({\hat{e}}_{A}\circ {{{\Lambda }}}_{A}\). Here, ΛA has a form:

where \(\{{M}_{{i}^{(k)}}^{(k)}\}\) is the POVM for the kth experiment acting on Q copies of n-qubit noisy states, and i := i(1)… i(K) denotes a collection of measurement outcomes with \(\left|{{{\bf{i}}}}\right\rangle =\left|{i}^{(1)}\ldots {i}^{(K)}\right\rangle\) being a classical state acting on K subsystems. The channel \({\hat{e}}_{A}\) implements a K-input classical function eA such that:

for some function bA(ψ) called bias, and:

is the probability of getting outcomes i = i(1)…i(K) for the input noisy states \({\{{{{{\mathcal{E}}}}}_{q}^{(k)}(\psi )\}}_{q = 1,k = 1}^{Q,K}\).

Proof of Theorem 1—The intuition behind Theorem 1 lies in the intimate relation between the effect of error mitigation and distinguishability of quantum states. Recall that the goal of quantum error mitigation is to estimate the expectation value of an arbitrary observable A for an arbitrary ideal state ψ only using the noisy state \({{{\mathcal{E}}}}(\psi )\). Although \({{{\rm{Tr}}}}(A{{{\mathcal{E}}}}(\psi ))\) can deviate from \({{{\rm{Tr}}}}(A\psi )\), error mitigation correctly allows us to estimate \({{{\rm{Tr}}}}(A\psi )\), which appears to have eliminated noise effects. Since each error-mitigation strategy should also work for another state ϕ, it should be able to remove the noise and estimate \({{{\rm{Tr}}}}(A\phi )\) out of \({{{\rm{Tr}}}}(A{{{\mathcal{E}}}}(\phi ))\). Does this “removal” of noise imply that error mitigation can help distinguish \({{{\mathcal{E}}}}(\psi )\) and \({{{\mathcal{E}}}}(\phi )\)?

The subtlety of this question can be seen by looking at how quantum error mitigation works. The estimation of \({{{\rm{Tr}}}}(A{{{\mathcal{E}}}}(\psi ))\) without error mitigation is carried out by making a measurement with respect to the eigenbasis of \(A={\sum }_{a}a\left|a\right\rangle \,\left\langle a\right|\), which produces a probability distribution \(p(a| {{{\mathcal{E}}}}(\psi ),A)\) over possible outcomes {a}. Because of the noise, the expectation value of this distribution is shifted from \({{{\rm{Tr}}}}(A\psi )\). Similarly, the same measurement for a state \({{{\mathcal{E}}}}(\phi )\) produces a probability distribution \(p(a| {{{\mathcal{E}}}}(\phi ),A)\), whose expectation value may also be shifted from \({{{\rm{Tr}}}}(A\phi )\). An error-mitigation protocol applies additional operations, measurements and classical post-processing to produce other probability distributions \({p}_{{{{\rm{EM}}}}}(a| {{{\mathcal{E}}}}(\psi ),A)\) and \({p}_{{{{\rm{EM}}}}}(a| {{{\mathcal{E}}}}(\phi ),A)\) whose expectation values get closer to the original ones. As a result, although the expectation values of the two error-mitigated distributions get separated from each other, they also get broader, which may increase the overlap between the two distributions, possibly making it even harder to distinguish two distributions (see Fig. 7).

The top schematic illustrates the probability distribution of an observable A for two noisy states \({{{\mathcal{E}}}}(\psi )\) and \({{{\mathcal{E}}}}(\phi )\). The expectation values are shifted from the true values due to the noise effects. As in the bottom schematic, error mitigation converts them to other distributions whose expectation values are closer to the true values than the initial noisy distributions are. However, the converted distributions get broader, and the overlap between two distributions increases in general.

One can see that this intuition that error mitigation does not increase the distinguishability is indeed right by looking at the whole error-mitigation process as a quantum channel. Then, the data-processing inequality implies that the distinguishability between any two states should not be increased by the application of quantum channels. This motivates us to rather use this observation as a basis to put a lower bound for the necessary overhead.

Let us recall that the trace distance admits the following form:

and similarly the local distinguishablity measure can be written as34:

where LM is the set of POVMs that take the form \({M}_{{i}^{(1)}}^{(1)}\otimes \cdots \otimes {M}_{{i}^{(K)}}^{(K)}\), where \({M}_{{i}^{(k)}}^{(k)}\) represents some POVM local to system Sk, and LM2 is the set of two-outcome measurements realized by local measurements together with classical post-processing. The second forms for the above measures particularly tell that they quantify how well two states can be distinguished by accessible quantum measurements. By definition, it is clear that:

for all states ρ and σ, and the inequality often becomes strict65,66.

The local distinguishability measure satisfies the data-processing inequality under all local measurement channels. Namely, for all states ρ and σ defined on a composite system \({\otimes }_{k = 1}^{K}{S}_{k}\), and for an arbitrary quantum-classical channel \({{\Lambda }}(\cdot )={\sum }_{i}{{{\rm{Tr}}}}\left(\,\cdot \,{M}_{{i}^{(1)}}^{(1)}\otimes \cdots \otimes {M}_{{i}^{(K)}}^{(K)}\right)\left|{\bf i}\right\rangle \left\langle {\bf i}\right|\),

where in the inequality we used that the set of local measurement channels is closed under concatenation.

Let us define:

Since the channel ΛA in Definition 4 is a local measurement channel, we employ (20) to get:

where:

and pi and qi are classical distributions defined in (16) for ψ and ϕ respectively, which satisfy:

When \(\hat{p}\) and \(\hat{q}\) are tensor products of classical states, i.e., \(\hat{p}={\hat{p}}^{(1)}\otimes \cdots \otimes {\hat{p}}^{(K)}\) and \(\hat{q}={\hat{q}}^{(1)}\otimes \cdots \otimes {\hat{q}}^{(K)}\), it holds that:

This can be seen as follows. Let M⋆ be the optimal POVM element achieving the trace distance in (17). Then, we get:

where:

is a classical dephasing channel. The effective POVM element Δ(M⋆) has the form:

Since each \(\left|{{{\bf{i}}}}\right\rangle \,\left\langle {{{\bf{i}}}}\right|\) is a local POVM element and 0 ≤ 〈i∣M⋆∣i〉 ≤ 1 because \(0\le {M}^{\star }\le {\mathbb{I}}\), the two-outcome measurement \(\{{{\Delta }}({M}^{\star }),{\mathbb{I}}-{{\Delta }}({M}^{\star })\}\) can be realized by a local measurement and classical post-processing, and thus belongs to LM2. This, together with (18), implies \({D}_{{{{\rm{tr}}}}}(\hat{p},\hat{q})\le {D}_{{{{\rm{LM}}}}}(\hat{p},\hat{q})\), and further combining (19) gives (25).

Combining (22) and (25) gives:

We now connect (29) to the expression (24) of the expectation value and bias. Let us first suppose \({{{\rm{Tr}}}}(A\psi )+{b}_{A}(\psi )\ge {{{\rm{Tr}}}}(A\phi )+{b}_{A}(\phi )\). Let \({{{{\mathcal{I}}}}}^{\star }:=\left\{\left.{{{\bf{i}}}}\ \right|\ {p}_{{{{\bf{i}}}}}-{q}_{{{{\bf{i}}}}}\ge 0\right\}\) and let \({\overline{{{{\mathcal{I}}}}}}^{\star }\) be the complement set. Let us also define \(A^{\prime} =A+{\mathbb{I}}/2\), which satisfies \(0\le A^{\prime} \le {\mathbb{I}}\) due to \(-{\mathbb{I}}/2\le A\le {\mathbb{I}}/2\). Then, we get:

where in the third line we used (24), in the fourth line we used the maximum and minimum estimator values:

and in the last line we used that:

and that the trace distance reduces to the total variation distance:

for all classical states \(\hat{p}={\sum }_{i}{p}_{i}\left|i\right\rangle \,\left\langle i\right|\) and \(\hat{q}={\sum }_{i}{q}_{i}\left|i\right\rangle \,\left\langle i\right|\). Combining (29) and (30), we get:

On the other hand, if \({{{\rm{Tr}}}}(A\psi )+{b}_{A}(\psi )\le {{{\rm{Tr}}}}(A\phi )+{b}_{A}(\phi )\), we flip the role of ψ and ϕ to get:

Defining \({{\Delta }}{e}_{A}:={e}_{A,\max }-{e}_{A,\min }\), these two can be summarized as:

Optimizing over A, ϕ, and ψ on both sides, we reach:

where in the second line we used that we can always take the numerator positive by appropriately flipping ψ and ϕ, in the third line we fixed \({A^{\prime} }^{\star }={A}^{\star }+{\mathbb{I}}/2\) to the one that achieves the trace distance \({{{\rm{Tr}}}}[{A^{\prime} }^{\star }(\psi -\phi )]={D}_{{{{\rm{tr}}}}}(\psi ,\phi )\) as in (17), and in the fourth line we used the definition of \({b}_{\max }\). □

Measuring subfidelity

To estimate the subfidelity (7) for n-qubit states ρ and σ, it suffices to measure the two quantities, \({{{\rm{Tr}}}}(\rho \sigma )\) and \({{{\rm{Tr}}}}(\rho \sigma \rho \sigma )\), which can be measured by a quantum computer38,39. For readers’ convenience, here we summarize several methods that can measure the subfidelity and see that the measurement can be done by a constant-depth quantum circuit.

Let us begin by \({{{\rm{Tr}}}}(\rho \sigma )\). Note that \({{{\rm{Tr}}}}(\rho \sigma )={{{\rm{Tr}}}}(S\,\rho \otimes \sigma )\) where S is the n-qubit SWAP operator defined by \(S\left|\psi \right\rangle \otimes \left|\phi \right\rangle =\left|\phi \right\rangle \otimes \left|\psi \right\rangle\) with \(\left|\psi \right\rangle\) and \(\left|\phi \right\rangle\) being arbitrary n-qubit pure states. This can be famously measured by the SWAP test38 that uses one ancillary qubit and n-qubit SWAP gate controlled on the ancillary qubit. Since the n-qubit SWAP gate can be realized by swapping individual qubits, the SWAP test runs with n uses of qubit SWAP gates controlled on the ancillary qubit, taking the circuit depth n.

One can significantly reduce the circuit depth by employing the destructive SWAP test67. Note that \({{{\rm{Tr}}}}(\rho \sigma )={{{\rm{Tr}}}}({S}_{2}^{\otimes n}\rho \otimes \sigma )\) where \({S}_{2}:=\mathop{\sum }\nolimits_{i,j = 0}^{1}\left|ij\right\rangle \,\left\langle ji\right|\) is the qubit SWAP operator. This is obtained by measuring ρ ⊗ σ with respect to the eigenbasis of \({S}_{2}^{\otimes n}\), which is just a tensor product of the eigenbasis of S2. Therefore, such a measurement can be accomplished by individually measuring a pair of qubits from ρ and σ with respect to the eigenbasis of S2, for which one can use, e.g., Bell measurement. These measurements can run in parallel and thus only needs a constant depth circuit with respect to n (in fact, depth 2) that involves n two-qubit gates.

We remark that, at this point, we have already obtained a valid lower bound of \({{\Delta }}{e}_{\max }\) because the second term in (7) is positive, only improving the lower bound. Nevertheless, evaluating the second term, which involves \({{{\rm{Tr}}}}(\rho \sigma \rho \sigma )\), can significantly improve the bound particularly when ρ and σ are highly noisy and their purity is small.

\({{{\rm{Tr}}}}(\rho \sigma \rho \sigma )\) can be measured by a similar strategy to the one for \({{{\rm{Tr}}}}(\rho \sigma )\) with two copies of ρ and σ. Instead of the SWAP operator S, consider the CYCLE operator C defined as \(C\left({\otimes }_{i = 1}^{4}\left|{\psi }_{i}\right\rangle \right)={\otimes }_{i = 1}^{4}\left|{\psi }_{i+1}\right\rangle\) where \(\left|{\psi }_{i}\right\rangle ,i=1,2,3,4\) is an arbitrary n-qubit pure state with \(\left|{\psi }_{5}\right\rangle :=\left|{\psi }_{1}\right\rangle\). Then, it is straightforward to check that \({{{\rm{Tr}}}}(\rho \sigma \rho \sigma )={{{\rm{Tr}}}}(C\,\rho \otimes \sigma \otimes \rho \otimes \sigma )\). This can be measured by a generalization of the SWAP test where CYCLE gate C is controlled on the single ancillary qubit. Similarly to the case of SWAP, the CYCLE gate C can be decomposed into \(C={C}_{2}^{\otimes n}\) where kth C2 gate (for any k = 1, … , n) acts on the four-qubit state that consists of the kth qubit of ρ, σ, ρ, and σ. Since C2 can be realized by three SWAP gates, one can measure \({{{\rm{Tr}}}}(\rho \sigma \rho \sigma )\) with 3n uses of qubit-SWAP gates controlled on the ancillary qubit, taking the circuit depth 3n.

Similarly to the case of \({{{\rm{Tr}}}}(\rho \sigma )\), we can realize a significant reduction in the circuit depth by making the measurement destructive. All we have to do is to measure individual four-qubit states that each C2 gate acts on with respect to the eigenbasis of C2. Since the measurement of each C2 can be run in parallel and each measurement circuit has a depth independent of n, this results in a constant-depth circuit that measures \(C={C}_{2}^{\otimes n}\).

We note the apparent similarity between the construction above and the circuit used in virtual distillation14,15. In particular, the strategy of destructive measurement was extensively discussed in ref. 15. It is interesting to see that a construction that is highly relevant to a specific error-mitigation protocol provides a bound applicable to a general class of error-mitigation protocols.

Applications to other error-mitigation protocols

Here, we discuss how our framework can be applied to other two prominent error-mitigation protocols, noise extrapolation and virtual distillation.

Extrapolation methods3,4 are used in scenarios where there is no clear analytical noise model. These strategies consider a family of noise channels \({\{{{{{\mathcal{N}}}}}_{\xi }\}}_{\xi }\), where ξ corresponds to the noise strength. The assumption here is that the description of \({{{{\mathcal{N}}}}}_{\xi }\) is unknown, but we have the ability to “boost” ξ such that \(\xi \ge \tilde{\xi }\) where \(\tilde{\xi }\) is the noise strength present in some given noisy circuit. The idea is that by studying how the expectation value of an observable depends on ξ, we can extrapolate what its value would be if ξ = 0. In particular, the Rth order Richardson extrapolation method work as follows. Let us take constants \({\{{\gamma }_{r}\}}_{r = 0}^{R}\) and \({\{{c}_{r}\}}_{r = 0}^{R}\) with \(1={c}_{0} \,<\, {c}_{1} < \cdots <\, {c}_{R}\le 1/\tilde{\xi }\) such that:

Using these constants, one can show that:

where \({b}_{A}(\psi )={{{\mathcal{O}}}}({\tilde{\xi }}^{R+1})\). This allows us to estimate the true expectation value using noisy states under multiple noise levels, as long as \(\tilde{\xi }\) is sufficiently small.

Richardson extrapolation is an instance of (1, R + 1)-error mitigation. In particular, we have:

in Definition 4. For an observable A = ∑a aΠa where Πa is the projector corresponding to measuring outcome a, the POVMs \({\{{M}_{{a}^{(k)}}^{(k)}\}}_{k = 1}^{R+1}\) and classical estimator function eA take the forms:

where \({\{{\gamma }_{k}\}}_{k = 0}^{R}\) are the constants determined by (38). One can easily check that plugging the above expressions in the form of Definition 4 leads to (39).

Because of the constraint \(-{\mathbb{I}}/2\le A\le {\mathbb{I}}/2\), every eigenvalue a satisfies −1/2 ≤ a ≤ 1/2. This implies that:

and:

leading to \({{\Delta }}{e}_{\max }\le \mathop{\sum }\nolimits_{r = 0}^{R}| {\gamma }_{r}|\). On the other hand, any observable A having ±1/2 eigenvalues saturates this inequality. Therefore, we get the exact expression of the maximum spread for the extrapolation method as:

Next, we discuss virtual distillation14,15, which is an example of (Q, 1)-error mitigation. Let ψ be an ideal pure output state from a quantum circuit. We consider a scenario where the noise in the circuit acts as an effective noise channel \({{{\mathcal{E}}}}\) that brings the ideal state to a noisy state of the form:

for a certain \({\{{\lambda }_{k}\}}_{k = 1}^{d}\), where d is the dimension of the system and \({\{{\psi }_{k}\}}_{k = 1}^{d}\) constructs an orthonormal basis with ψ1 := ψ. We also assume that λ is given as pre-knowledge. This form reflects the intuition that, as long as the noise is sufficiently small, the dominant eigenvector should be close to the ideal state ψ. For a more detailed analysis of the form of this spectrum, we refer readers to ref. 68.

The Q-copy virtual distillation algorithm aims to estimate \({{{\rm{Tr}}}}(W\psi )\) for a unitary observable W satisfying \({W}^{2}={\mathbb{I}}\) (e.g., Pauli operators) by using Q copies of \({{{\mathcal{E}}}}(\psi )\). The mitigation circuit consists of a controlled permutation and unitary W, followed by a measurement on the control qubit with the Hadamard basis. The probability of getting outcome 0 (projecting onto \(\left|+\right\rangle \,\left\langle +\right|\)) is:

This implies that:

providing a way of estimating \({{{\rm{Tr}}}}(W\psi )\) with the bias \(| \mathop{\sum }\nolimits_{k = 2}^{d}{({\lambda }_{k}/\lambda )}^{Q}{{{\rm{Tr}}}}(W{\psi }_{k})| \le \mathop{\sum }\nolimits_{k = 2}^{d}{({\lambda }_{k}/\lambda )}^{Q}\).

We can see that this protocol fits into our framework with K = 1 and \({{{{\mathcal{E}}}}}_{q}={{{\mathcal{E}}}}\) for q = 1, … , Q as follows. For an arbitrary observable A, we can always find a decomposition with respect to the Pauli operators {Pi} as:

for some set of real numbers {ci}. We now apply the virtual distillation circuit for Pi at probability ∣ci∣/∑j∣cj∣ and—similarly to the case of probabilistic error cancellation—employ an estimator function defined as:

with γ := ∑i∣ci∣, where we treat i as a part of the measurement outcome. Then, we get:

where pi0 is the probability (47) with W = Pi multiplied by ∣ci∣/∑j∣cj∣, pi1 = 1 − pi0, and \({b}_{A}(\psi ):=\mathop{\sum }\nolimits_{k = 2}^{d}{({\lambda }_{k}/\lambda )}^{Q}{{{\rm{Tr}}}}(A{\psi }_{k})\). Optimizing over observables \(-{\mathbb{I}}/2\le A\le {\mathbb{I}}/2\), we have:

and:

Note added to proof

During the completion of our manuscript, we became aware of an independent work by Wang et al.69, which showed a result related to our Theorem 3 on the exponential scaling of the maximum estimator spread.

Code availability

Source codes used to generate the plots are available from the corresponding author upon request.

References

Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2, 79 (2018).

Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature 574, 505 (2019).

Temme, K., Bravyi, S. & Gambetta, J. M. Error mitigation for short-depth quantum circuits. Phys. Rev. Lett. 119, 180509 (2017).

Li, Y. & Benjamin, S. C. Efficient variational quantum simulator incorporating active error minimization. Phys. Rev. X 7, 021050 (2017).

Giurgica-Tiron, T., Hindy, Y., LaRose, R., Mari, A. & Zeng, W. J. Digital zero noise extrapolation for quantum error mitigation. 2020 IEEE International Conference on Quantum Computing and Engineering (QCE) 306 (2020).

He, A., Nachman, B., de Jong, W. A. & Bauer, C. W. Zero-noise extrapolation for quantum-gate error mitigation with identity insertions. Phys. Rev. A 102, 012426 (2020).

Kandala, A. et al. Error mitigation extends the computational reach of a noisy quantum processor. Nature 567, 491 (2019).

Dumitrescu, E. F. et al. Cloud quantum computing of an atomic nucleus. Phys. Rev. Lett. 120, 210501 (2018).

Buscemi, F., Dall’Arno, M., Ozawa, M. & Vedral, V. Direct observation of any two-point quantum correlation function. Preprint at https://arxiv.org/abs/1312.4240 (2013).

Buscemi, F., Dall’Arno, M., Ozawa, M. & Vedral, V. Universal optimal quantum correlator. Int. J. Quantum Inf. 12, 1560002 (2014).

Endo, S., Benjamin, S. C. & Li, Y. Practical quantum error mitigation for near-future applications. Phys. Rev. X 8, 031027 (2018).

Song, C. et al. Quantum computation with universal error mitigation on a superconducting quantum processor. Sci. Adv. 5, eaaw5686 (2019).

Zhang, S. et al. Error-mitigated quantum gates exceeding physical fidelities in a trapped-ion system. Nat. Commun. 11, 587 (2020).

Koczor, B. Exponential error suppression for near-term quantum devices. Phys. Rev. X 11, 031057 (2021).

Huggins, W. J. et al. Virtual distillation for quantum error mitigation. Phys. Rev. X 11, 041036 (2021).

Czarnik, P., Arrasmith, A., Cincio, L. & Coles, P. J. Qubit-efficient exponential suppression of errors. https://doi.org/10.48550/arXiv.2102.06056 (2021).

Cai, Z. Resource-efficient purification-based quantum error mitigation. Preprint at https://arxiv.org/abs/2107.07279 (2021).

Huo, M. & Li, Y. Dual-state purification for practical quantum error mitigation. Phys. Rev. A 105, 022427 (2022).

Xiong, Y., Ng, S. X. & Hanzo, L. Quantum error mitigation relying on permutation filtering. IEEE Trans. Commun. 70, 1927 (2022).

Kandala, A. et al. Hardware-efficient variational quantum eigensolver for small molecules and quantum magnets. Nature 549, 242 (2017).

McArdle, S., Endo, S., Aspuru-Guzik, A., Benjamin, S. C. & Yuan, X. Quantum computational chemistry. Rev. Mod. Phys. 92, 015003 (2020).

Cao, Y. et al. Quantum chemistry in the age of quantum computing. Chem. Rev. 119, 10856 (2019).

McArdle, S., Yuan, X. & Benjamin, S. Error-mitigated digital quantum simulation. Phys. Rev. Lett. 122, 180501 (2019).

Carnot, S. Reflections on the motive power of fire, and on machines fitted to develop that power. Paris: Bachelier 108, 1824 (1824).

Wang, S. et al. Noise-induced barren plateaus in variational quantum algorithms. Nat. Commun. 12, 6961 (2021).

Yuan, X., Zhang, Z., Lütkenhaus, N. & Ma, X. Simulating single photons with realistic photon sources. Phys. Rev. A 94, 062305 (2016).

McClean, J. R., Kimchi-Schwartz, M. E., Carter, J. & de Jong, W. A. Hybrid quantum-classical hierarchy for mitigation of decoherence and determination of excited states. Phys. Rev. A 95, 042308 (2017).

Czarnik, P., Arrasmith, A., Coles, P. J. & Cincio, L. Error mitigation with Clifford quantum-circuit data. Quantum 5, 592 (2021).

Bonet-Monroig, X., Sagastizabal, R., Singh, M. & O’Brien, T. E. Low-cost error mitigation by symmetry verification. Phys. Rev. A 98, 062339 (2018).

Bravyi, S., Sheldon, S., Kandala, A., Mckay, D. C. & Gambetta, J. M. Mitigating measurement errors in multiqubit experiments. Phys. Rev. A 103, 042605 (2021).

Yoshioka, N. et al. Generalized quantum subspace expansion. Phys. Rev. Lett. 129, 020502 (2022).

McClean, J. R., Jiang, Z., Rubin, N. C., Babbush, R. & Neven, H. Decoding quantum errors with subspace expansions. Nat. Commun. 11, 636 (2020).

Hoeffding, W. Probability inequalities for sums of bounded random variables. J. Am. Stat. Assoc. 58, 13 (1963).

Matthews, W., Wehner, S. & Winter, A. Distinguishability of quantum states under restricted families of measurements with an application to quantum data hiding. Commun. Math. Phys. 291, 813 (2009).

Fuchs, C. & van de Graaf, J. Cryptographic distinguishability measures for quantum-mechanical states. IEEE Trans. Inf. Theory 45, 1216–1227 (1999).

Watrous, J. The Theory of Quantum Information (Cambridge University Press, 2018).

Miszczak, J. A., Puchała, Z., Horodecki, P., Uhlmann, A. & Życzkowski, K. Sub– and super–fidelity as bounds for quantum fidelity. Quantum Inf. Comput. 9, 0103 (2009).

Ekert, A. K. et al. Direct estimations of linear and nonlinear functionals of a quantum state. Phys. Rev. Lett. 88, 217901 (2002).

Bacon, D., Chuang, I. L. & Harrow, A. W. Efficient quantum circuits for schur and clebsch-gordan transforms. Phys. Rev. Lett. 97, 170502 (2006).

Cerezo, M., Poremba, A., Cincio, L. & Coles, P. J. Variational quantum fidelity estimation. Quantum 4, 248 (2020).

Peruzzo, A. et al. A variational eigenvalue solver on a photonic quantum processor. Nat. Commun. 5, 4213 (2014).

Kim, Y. et al. Scalable error mitigation for noisy quantum circuits produces competitive expectation values. Preprint at https://arxiv.org/abs/2108.09197 (2021).

Sagastizabal, R. et al. Experimental error mitigation via symmetry verification in a variational quantum eigensolver. Phys. Rev. A 100, 010302 (2019).

Müller-Hermes, A., Stilck França, D. & Wolf, M. M. Relative entropy convergence for depolarizing channels. J. Math. Phys. 57, 022202 (2016).

Takagi, R. Optimal resource cost for error mitigation. Phys. Rev. Res. 3, 033178 (2021).

Jiang, J., Wang, K. & Wang, X. Physical implementability of linear maps and its application in error mitigation. Quantum 5, 600 (2021).

Regula, B., Takagi, R. & Gu, M. Operational applications of the diamond norm and related measures in quantifying the non-physicality of quantum maps. Quantum 5, 522 (2021).

Bennett, C. H., Shor, P. W., Smolin, J. A. & Thapliyal, A. V. Entanglement-assisted classical capacity of noisy quantum channels. Phys. Rev. Lett. 83, 3081 (1999).

Pirandola, S., Laurenza, R., Ottaviani, C. & Banchi, L. Fundamental limits of repeaterless quantum communications. Nat. Commun. 8, 15043 (2017).

Berta, M., Brandão, F. G. S. L., Christandl, M. & Wehner, S. Entanglement cost of quantum channels. IEEE Trans. Inf. Theory 59, 6779 (2013).

Landauer, R. Irreversibility and heat generation in the computing process. IBM J. Res. Dev. 5, 183 (1961).

Brandão, F., Horodecki, M., Ng, N., Oppenheim, J. & Wehner, S. The second laws of quantum thermodynamics. Proc. Natl. Acad. Sci. USA 112, 3275 (2015).

Gour, G., Jennings, D., Buscemi, F., Duan, R. & Marvian, I. Quantum majorization and a complete set of entropic conditions for quantum thermodynamics. Nat. Commun. 9, 5352 (2018).

Hakoshima, H., Matsuzaki, Y. & Endo, S. Relationship between costs for quantum error mitigation and non-markovian measures. Phys. Rev. A 103, 012611 (2021).

Breuer, H.-P., Laine, E.-M., Piilo, J. & Vacchini, B. Colloquium: non-Markovian dynamics in open quantum systems. Rev. Mod. Phys. 88, 021002 (2016).

Suzuki, Y., Endo, S., Fujii, K. & Tokunaga, Y. Quantum error mitigation as a universal error reduction technique: applications from the nisq to the fault-tolerant quantum computing eras. PRX Quantum 3, 010345 (2022).

Lostaglio, M. & Ciani, A. Error mitigation and quantum-assisted simulation in the error corrected regime. Phys. Rev. Lett. 127, 200506 (2021).

Piveteau, C., Sutter, D., Bravyi, S., Gambetta, J. M. & Temme, K. Error mitigation for universal gates on encoded qubits. Phys. Rev. Lett. 127, 200505 (2021).

Xiong, Y., Chandra, D., Ng, S. X. & Hanzo, L. Sampling overhead analysis of quantum error mitigation: uncoded vs. coded systems. IEEE Access 8, 228967 (2020).

Lloyd, S. Enhanced sensitivity of photodetection via quantum illumination. Science 321, 1463 (2008).

Giovannetti, V., Lloyd, S. & Maccone, L. Quantum metrology. Phys. Rev. Lett. 96, 010401 (2006).

Audenaert, K. M., Nussbaum, M., Szkoła, A. & Verstraete, F. Asymptotic error rates in quantum hypothesis testing. Commun. Math. Phys. 279, 251 (2008).

Binder, F. C., Thompson, J. & Gu, M. Practical unitary simulator for non-markovian complex processes. Phys. Rev. Lett. 120, 240502 (2018).

Blank, C., Park, D. K. & Petruccione, F. Quantum-enhanced analysis of discrete stochastic processes. npj Quantum Inf. 7, 1–9 (2021).

Lami, L., Palazuelos, C. & Winter, A. Ultimate data hiding in quantum mechanics and beyond. Commun. Math. Phys. 361, 661 (2018).

Corrêa, W. H. G., Lami, L. & Palazuelos, C. Maximal gap between local and global distinguishability of bipartite quantum states. Preprint at https://arxiv.org/abs/2110.04387 (2021).

Garcia-Escartin, J. C. & Chamorro-Posada, P. Swap test and hong-ou-mandel effect are equivalent. Phys. Rev. A 87, 052330 (2013).

Koczor, B. The dominant eigenvector of a noisy quantum state. New J. Phys. 23, 123047 (2021).

Wang, S. et al. Can error mitigation improve trainability of noisy variational quantum algorithms? Preprint at https://arxiv.org/abs/2109.01051 (2021).

Acknowledgements

We thank Yuichiro Matsuzaki, Yuuki Tokunaga, Hideaki Hakoshima, Kaoru Yamamoto, Jayne Thompson, and Francesco Buscemi for fruitful discussions, and Kento Tsubouchi for pointing out an error in a preliminary version of the manuscript. This work is supported by the Singapore Ministry of Education Tier 1 Grant RG162/19 and RG146/20, the National Research Foundation under its Quantum Engineering Program NRF2021-QEP2-02-P06, the Singapore Ministry of Education Tier 2 Project MOE-T2EP50221-0005 and the FQXi-RFP-IPW-1903 project, “Are quantum agents more energetically efficient at making predictions?” from the Foundational Questions Institute, Fetzer Franklin Fund, a donor advised fund of Silicon Valley Community Foundation, and the Lee Kuan Yew Postdoctoral Fellowship at Nanyang Technological University Singapore. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not reflect the views of National Research Foundation or the Ministry of Education, Singapore. S.E. is supported by Moonshot R&D, JST, Grant No., JPMJMS2061; MEXT Q-LEAP Grant No., JPMXS0120319794, and PRESTO, JST, Grant No., JPMJPR2114. S.M. would like to take this opportunity to thank the “Nagoya University Interdisciplinary Frontier Fellowship” supported by JST and Nagoya University.

Author information

Authors and Affiliations

Contributions

R.T., S.E., and S.M. came up with a preliminary idea on connecting the state distinguishability to error mitigation. R.T. conceived the project, obtained the main results, and wrote the manuscript draft. S.E. proposed a way of directly estimating a lower bound of the fidelity bound on a quantum computer, which eventually resulted in the subfidelity bound. S.M. contributed to the analysis to compare the subfidelity bound to the trace-distance bound. R.T. and M.G. wrote the manuscript. All authors contributed to the interpretation and discussion of the results.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Takagi, R., Endo, S., Minagawa, S. et al. Fundamental limits of quantum error mitigation. npj Quantum Inf 8, 114 (2022). https://doi.org/10.1038/s41534-022-00618-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41534-022-00618-z

- Springer Nature Limited

This article is cited by

-

Group-theoretic error mitigation enabled by classical shadows and symmetries

npj Quantum Information (2024)

-

Exponential concentration in quantum kernel methods

Nature Communications (2024)

-

Drug design on quantum computers

Nature Physics (2024)

-

Exponentially tighter bounds on limitations of quantum error mitigation

Nature Physics (2024)

-

Quantifying the effect of gate errors on variational quantum eigensolvers for quantum chemistry

npj Quantum Information (2024)