Abstract

Concealed scene understanding (CSU) is a hot computer vision topic aiming to perceive objects exhibiting camouflage. The current boom in terms of techniques and applications warrants an up-to-date survey. This can help researchers better understand the global CSU field, including both current achievements and remaining challenges. This paper makes four contributions: (1) For the first time, we present a comprehensive survey of deep learning techniques aimed at CSU, including a taxonomy, task-specific challenges, and ongoing developments. (2) To allow for an authoritative quantification of the state-of-the-art, we offer the largest and latest benchmark for concealed object segmentation (COS). (3) To evaluate the generalizability of deep CSU in practical scenarios, we collected the largest concealed defect segmentation dataset termed CDS2K with the hard cases from diversified industrial scenarios, on which we constructed a comprehensive benchmark. (4) We discuss open problems and potential research directions for CSU.

1 Introduction

Concealed scene understanding (CSU) aims to recognize objects that exhibit different forms of camouflage, as in Fig. 1. By its very nature, CSU is clearly a challenging problem compared with conventional object detection [1, 2]. It has numerous real-world applications, including search-and-rescue work, rare species discovery, healthcare (e.g., automatic diagnosis of colorectal polyps [3, 4] and lung lesions [5]), agriculture (e.g., pest identification [6] and fruit ripeness assessment [7]), and content creation (e.g., recreational art [8]). In the past decade, both academia and industry have widely studied CSU, and various types of images with camouflaged objects have been handled with traditional computer vision and pattern recognition techniques, including hand-engineered patterns (e.g., motion cues [9, 10] and optical flow [11, 12]), heuristic priors (e.g., color [13], texture [14] and intensity [15, 16]) and a combination of techniques [17–19].

In recent years, thanks to benchmarks becoming available (e.g., COD10K [20, 23] and NC4K [24]) and the rapid development of deep learning, this field has made important strides forward. In 2020, Fan et al. [20] released the first large-scale public dataset - COD10K - geared towards the advancement of perception tasks having to deal with concealment. This has also inspired other related disciplines. For instance, Mei et al. [25, 26] proposed a distraction-aware framework for the segmentation of camouflaged objects, which can be extended to the identification of transparent materials in natural scenes [27]. In 2023, Ji et al. [28] developed an efficient model that learns textures from object-level gradients, and its generalizability has been verified through diverse downstream applications, e.g., medical polyp segmentation and road crack detection.

Although multiple research teams have addressed tasks concerned with concealed objects, we believe that stronger interactions between the ongoing efforts would be beneficial. Thus, we mainly review the state and recent deep learning-based advances in CSU. Meanwhile, we contribute a large-scale concealed defect segmentation dataset termed CDS2K. This dataset consists of hard cases from diverse industrial scenarios, thus providing an effective benchmark for CSU.

Previous surveys and scope

To the best of our knowledge, only a few survey papers [29, 30] have been published in the CSU community, and they mainly reviewed non-deep learning techniques. There are some benchmarks [31, 32] with narrow scopes, such as image-level segmentation, where only a few deep learning methods were evaluated. In this paper, we present a comprehensive survey of deep learning CSU techniques, thus widening the scope. We also offer more extensive benchmarks with a more comprehensive comparison and with an application-oriented evaluation.

Contributions

Our contributions are summarized as follows: (1) We present the first effort to thoroughly examine deep learning techniques tailored towards CSU. This includes an overview of its classification and specific obstacles, as well as an assessment of its advancement during the era of deep learning, achieved through an examination of existing datasets and techniques. (2) To provide a quantitative evaluation of the current state-of-the-art, we have created a new benchmark for concealed object segmentation (COS), which is a crucial and highly successful area within CSU. It is the most up-to-date and comprehensive benchmark available. (3) To assess the applicability of CSU with deep learning in real-world scenarios, we have restructured the CDS2K dataset – the largest dataset for concealed defect segmentation – to include challenging cases from various industrial settings. We have utilized this updated dataset to create a comprehensive benchmark for evaluation. (4) Our discussion delves into the present obstacles, available prospects, and future research areas for the CSU community.

2 Background

2.1 Task taxonomy and formulation

2.1.1 Image-level CSU

In this section, we introduce five commonly used image-level CSU tasks, which can be formulated as a mapping function \(\mathcal{F}: \mathbf{X} \mapsto \mathbf{Y}\) that converts the input space X into the target space Y.

• Concealed object segmentation (COS) [23, 28] is a class-agnostic dense prediction task that segments concealed regions or objects with unknown categories. As presented in Fig. 2(a), the model \(\mathcal{F}_{\text{COS}} : \mathbf{X} \mapsto \mathbf{Y}\) is supervised by a binary mask Y to predict a probability \(\mathbf{p} \in [0,1]\) for each pixel x of image X, which is the confidence level that the model determines whether x belongs to the concealed region.

Figure 2. Illustration of the representative CSU tasks. Five of these are image-level tasks: (a) concealed object segmentation (COS), (b) concealed object localization (COL), (c) concealed instance ranking (CIR), (d) concealed instance segmentation (CIS), and (e) concealed object counting (COC). The remaining two are video-level tasks: (f) video concealed object detection (VCOD) and (g) video concealed object segmentation (VCOS). Each task has its own corresponding annotation visualization, which is explained in detail in Section 2.1.

• Concealed object localization (COL) [24, 33] aims to identify the most noticeable region of concealed objects, which is in line with human perception psychology [33]. This task is to learn a dense mapping function \(\mathcal{F}_{\text{COL}}: \mathbf{X} \mapsto \mathbf{Y}\). The output Y is a non-binary fixation map captured by an eye tracker device, as illustrated in Fig. 2(b). Essentially, the probability prediction \(\mathbf{p} \in [0,1]\) for a pixel x indicates how conspicuous its camouflage is.

• Concealed instance ranking (CIR) [24, 33] ranks different instances in a concealed scene based on their detectability. The level of camouflage is used as the basis for this ranking. The objective of the CIR task is to learn a dense mapping \(\mathcal{F}_{\text{CIR}}: \mathbf{X} \mapsto \mathbf{Y}\) between the input space X and the camouflage ranking space Y, where Y represents per-pixel annotations for each instance with corresponding rank levels. For example, in Fig. 2(c), there are three toads with different camouflage levels, and their ranking labels are from [24]. To perform this task, one can replace the category ID for each instance with rank labels in instance segmentation models such as Mask R-CNN [34].

• Concealed instance segmentation (CIS) [35, 36] is a technique that aims to identify instances in concealed scenarios based on their semantic characteristics. Unlike general instance segmentation [37, 38], where each instance is assigned a category label, CIS recognizes the attributes of concealed objects to distinguish between different entities more effectively. To achieve this objective, CIS employs a mapping function \(\mathcal{F}_{\text{CIS}}: \mathbf{X} \mapsto \mathbf{Y}\), where Y is a scalar set comprising various entities used to parse each pixel. This concept is illustrated in Fig. 2(d).

• Concealed object counting (COC) [39] is a newly emerging topic in CSU that aims to estimate the number of instances concealed within their surroundings. As illustrated in Fig. 2(e), the COC estimates the center coordinates for each instance and generates their counts. Its formulation can be defined as \(\mathcal{F}_{\text{COC}}: \mathbf{X} \mapsto \mathbf{Y}\), where X is the input image and Y represents the output density map that indicates the concealed instances in scenes.
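The density-map formulation of COC can be illustrated with a minimal sketch. It assumes, as is common in density-based counting but not stated in this survey, that each annotated center is spread into a Gaussian blob normalized to sum to one, so that summing the map recovers the count. The function names, the `sigma` value, and the example coordinates are hypothetical.

```python
import math


def density_map(centers, height, width, sigma=1.5):
    """Build a density map from annotated instance centers given as (x, y).

    Assumption: each center contributes one Gaussian blob that is
    normalized to sum to 1, so the whole map sums to the instance count.
    """
    dmap = [[0.0] * width for _ in range(height)]
    for cx, cy in centers:
        # Unnormalized Gaussian weights over the full image grid.
        weights = [
            [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
             for x in range(width)]
            for y in range(height)
        ]
        total = sum(map(sum, weights))
        for y in range(height):
            for x in range(width):
                dmap[y][x] += weights[y][x] / total
    return dmap


def count_from_density(dmap):
    """The estimated count is the sum over all pixels of the density map."""
    return sum(map(sum, dmap))


# Hypothetical center annotations for three concealed instances.
centers = [(3, 4), (10, 2), (12, 12)]
dmap = density_map(centers, height=16, width=16)
estimated_count = count_from_density(dmap)
```

In this sketch the count is read off the ground-truth map; a COC model would instead regress the map from the image and sum its prediction.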

Overall, the image-level CSU tasks can be categorized into two groups based on their semantics: object-level (COS and COL) and instance-level (CIR, COC, and CIS). Object-level tasks focus on perceiving objects while instance-level tasks aim to recognize semantics to distinguish different entities. Additionally, COC is regarded as a sparse prediction task based on its output form, whereas the others belong to dense prediction tasks. Among the literature reviewed in Table 1, COS has been extensively studied while research on the other three tasks is gradually increasing.
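The CIR description above notes that an instance segmentation model can be reused for ranking by replacing each instance's category ID with a rank label. A minimal sketch of that relabeling step follows. The `Instance` fields, the `detectability` score, and the convention that rank 1 is the hardest instance to detect are all assumptions made for illustration, not details taken from [24] or [33].

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Instance:
    mask_id: int          # identifier of the instance mask
    category_id: int      # semantic class used by a generic instance segmenter
    detectability: float  # hypothetical score: higher means easier to spot


def to_rank_targets(instances: List[Instance]) -> List[dict]:
    """Replace each category ID with a rank label (assumed: 1 = hardest to detect)."""
    ordered = sorted(instances, key=lambda inst: inst.detectability)
    return [
        {"mask_id": inst.mask_id, "label": rank}
        for rank, inst in enumerate(ordered, start=1)
    ]


# Three instances of the same category with different camouflage levels.
toads = [Instance(0, 7, 0.9), Instance(1, 7, 0.2), Instance(2, 7, 0.5)]
targets = to_rank_targets(toads)
```

The resulting targets keep the mask identifiers and drop the shared category, so the classification head of the segmenter would be trained on rank labels instead.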

2.1.2 Video-level CSU

Given a video clip \(\{\mathbf{X}_{t}\}_{t=1}^{T}\) containing T concealed frames, the video-level CSU can be formulated as a mapping function \(\mathcal{F}: \{\mathbf{X}_{t}\}_{t=1}^{T} \mapsto \{\mathbf{Y}_{t}\}_{t=1}^{T}\) for parsing dense spatial-temporal correspondences, where \(\mathbf{Y}_{t}\) is the label of frame \(\mathbf{X}_{t}\).

• Video concealed object detection (VCOD) [40] is similar to video object detection [41]. This task aims to identify and locate concealed objects within a video by learning a spatial-temporal mapping function \(\mathcal{F}_{\text{VCOD}}: \{\mathbf{X}_{t}\}_{t=1}^{T} \mapsto \{ \mathbf{Y}_{t}\}_{t=1}^{T}\) that predicts the location \(\mathbf{Y}_{t}\) of an object for each frame \(\mathbf{X}_{t}\). The location label \(\mathbf{Y}_{t}\) is provided as a bounding box (see Fig. 2(f)) consisting of four numbers \((x,y,w,h)\) indicating the target’s location. Here, \((x,y)\) represents its top-left coordinate, while w and h denote its width and height, respectively.

• Video concealed object segmentation (VCOS) [42] originated from the task of camouflaged object discovery [40]. Its goal is to segment concealed objects within a video. This task usually utilizes spatial-temporal cues to drive the models to learn the mapping \(\mathcal{F}_{\text{VCOS}}: \{\mathbf{X}_{t}\}_{t=1}^{T} \mapsto \{ \mathbf{Y}_{t}\}_{t=1}^{T}\) between input frames \(\mathbf{X}_{t}\) and corresponding segmentation mask labels \(\mathbf{Y}_{t}\). Figure 2(g) shows an example of its segmentation mask.

In general, compared to image-level CSU, video-level CSU is developing relatively slowly, because collecting and annotating video data is labor-intensive and time-consuming. However, with the establishment of the first large-scale VCOS benchmark on MoCA-Mask [42], this field has made fundamental progress while still having ample room for exploration.

2.1.3 Task relationship

Among image-level CSU tasks, the CIR task requires the highest level of understanding, as it involves not only segmenting pixel-level regions (i.e., COS), counting (i.e., COC), and distinguishing different instances (i.e., CIS), but also ranking these instances according to their fixation probabilities (i.e., COL) under different difficulty levels. Additionally, regarding the two video-level tasks, VCOS is a downstream task of VCOD since the segmentation task requires the model to provide pixel-level classification probabilities.

2.2 Related topics

Next, we will briefly introduce salient object detection (SOD), which, like COS, requires extracting properties of target objects, but one focuses on saliency while the other on the concealed attribute.

• Image-level SOD aims to identify the most attractive objects in an image and extract their pixel-accurate silhouettes [43]. Various network architectures have been explored in deep SOD models, e.g., multi-layer perceptron [44–47], fully convolutional [48–52], capsule-based [53–55], transformer-based [56], and hybrid [57, 58] networks. Meanwhile, different learning strategies are also studied in SOD models, including data-efficient methods (e.g., weakly-supervised with categorical tags [59–63] and unsupervised with pseudo masks [64–66]), multi-task paradigms (e.g., object subitizing [67, 68], fixation prediction [69, 70], semantic segmentation [71, 72], edge detection [73–77], image captioning [78]), and instance-level paradigms [79–82]. To learn more about this field comprehensively, readers can refer to popular surveys or representative studies on visual attention [83], saliency prediction [84], co-saliency detection [85–87], RGB SOD [1, 88–90], RGB-D (depth) SOD [91, 92], RGB-T (thermal) SOD [93, 94], and light-field SOD [95].

• Video-level SOD. The early development of video salient object detection (VSOD) originated from introducing attention mechanisms in video object segmentation (VOS) tasks. At that stage, the task scenes were relatively simple, containing only one object moving in the video. As moving objects tend to attract visual attention, VOS and VSOD were equivalent tasks. For instance, Wang et al. [96] used a fully convolutional neural network to address the VSOD task. With the development of VOS techniques, researchers introduced more complex scenes (e.g., with complex backgrounds, object movements, and two objects), but the two tasks remained equivalent. Thus, later works have exploited semantic-level spatial-temporal features [97–100], recurrent neural networks [101, 102], or offline motion cues such as optical flow [101, 103–105]. However, with the introduction of more challenging video scenes (containing three or more objects, or simultaneous camera and object movements), VOS and VSOD were no longer equivalent. Nevertheless, researchers continued to approach the two tasks as equivalent, ignoring the issue of visual attention allocation in multi-object movement in video scenes, which seriously hindered the development of the field. To address this issue, in 2019, Fan et al. [106] introduced eye trackers to mark the changes in visual attention in multi-object movement scenarios, for the first time posing the scientific problem of attention shift in VSOD tasks, and constructed the first large-scale VSOD benchmark, DAVSOD, as well as the baseline model SSAV, which propelled VSOD into a new stage of development.

• Remarks. COS and SOD are distinct tasks, but they can mutually benefit via the CamDiff approach [107]. This has been demonstrated through adversarial learning [108], leading to joint research efforts such as the recently proposed dichotomous image segmentation [109]. In Section 6, we will explore potential directions for future research in these areas.

3 CSU models with deep learning

This section systematically reviews deep learning approaches to CSU, organized by task definition and data type. We have also created a GitHub repository as a comprehensive collection to provide the latest information in this field.

3.1 Image-level CSU models

We review the existing four image-level CSU tasks: concealed object segmentation (COS), concealed object localization (COL), concealed instance ranking (CIR), and concealed instance segmentation (CIS). Table 1 summarizes the key features of these reviewed approaches.

3.1.1 Concealed object segmentation

This section discusses previous solutions for concealed object segmentation (COS) from two perspectives: network architecture and learning paradigm.

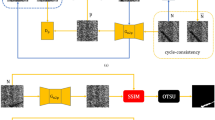

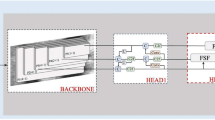

• Network architecture. Generally, fully convolutional networks (FCNs [150]) are the standard solution for image segmentation because they can receive the input of a flexible size and undergo a single feed-forward propagation. As expected, FCN-shaped frameworks dominate the primary solutions for COS, which fall into three categories:

(1) Multi-stream framework, shown in Fig. 3(a), contains multiple input streams to learn multi-source representations explicitly. MirrorNet [110] was the first attempt to add an extra data stream as a bio-inspired attack, which can break the camouflaged state. Several recent works have adopted a multi-stream approach to improve their results, such as supplying pseudo-depth generation [149], pseudo-edge uncertainty [114], adversarial learning paradigm [108], frequency enhancement stream [135], multi-scale [134] or multi-view [141] inputs, and multiple backbones [147]. Unlike other supervised settings, CRNet [142] is the only weakly-supervised framework that uses scribble labels as supervision. This approach helps alleviate overfitting problems on limited annotated data.

Figure 3. Network architectures for COS at a glance. We present four types of frameworks from left to right: (a) multi-stream framework, (b) bottom-up/top-down framework and its variant with deep supervision (optional), and (c) branched framework. See Section 3.1.1 for more details.

(2) Bottom-up and top-down framework, as shown in Fig. 3(b), uses deeper features to enhance shallower features gradually in a single feed-forward pass. For example, C2FNet [113] adopts this design to improve concealed features from coarse-to-fine levels. In addition, SegMaR [136] employs an iterative refinement network with a sub-network based on this strategy. Furthermore, other studies [20, 23, 25, 26, 112, 118–121, 124, 125, 129, 138–140, 143–145, 148] utilized a deeply-supervised strategy [151, 152] on various intermediate feature hierarchies using this framework. This practice, also utilized by the feature pyramid network [153], combines more comprehensive multi-context features through dense top-down and bottom-up propagation and introduces additional supervision signals before final prediction to provide more dependable guidance for deeper layers.

(3) Branched framework, shown in Fig. 3(c), is a single-input-multiple-output architecture, consisting of both segmentation and auxiliary task branches. It should be noted that the segmentation part of this branched framework may overlap with previous frameworks, such as single-stream [21] and bottom-up and top-down [24, 28, 33, 108, 111, 115–117, 122, 123, 125–128, 130–133, 137] frameworks. For instance, ERRNet [123] and FAPNet [127] are typical examples of jointly learning concealed objects and their boundaries. Since these branched frameworks are closely related to the multi-task learning paradigm, we provide further details under the multi-task learning paradigm below.

• Learning paradigm. We discuss two common types of learning paradigms for COS tasks: single-task and multi-task.

(1) Single-task learning is the most commonly used paradigm in COS, which involves only a segmentation task for concealed targets. Based on this paradigm, most current works [20, 23, 121] focus on developing attention modules to identify target regions.

(2) Multi-task learning introduces an auxiliary task to coordinate or complement the segmentation task, leading to robust COS learning. These multi-task frameworks can be implemented by conducting confidence estimation [108, 117, 130, 132], localization/ranking [24, 33], category prediction [21] tasks and learning depth [111, 149], boundary [116, 122, 123, 126, 127, 131], and texture [28, 115] cues of camouflaged objects.
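The deeply-supervised strategy mentioned for the bottom-up and top-down framework can be sketched as a loss that is summed over every intermediate prediction. This is an illustrative sketch only: the binary cross-entropy loss, the nearest-neighbour upsampling, the equal weights, and all function names are assumptions, and individual COS models use their own loss designs.

```python
import math


def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between two equally sized 2D maps."""
    total, count = 0.0, 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
            total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
            count += 1
    return total / count


def upsample_nearest(pred, factor):
    """Nearest-neighbour upsampling of a 2D map by an integer factor."""
    out = []
    for row in pred:
        wide = [value for value in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def deeply_supervised_loss(side_outputs, target, weights=None):
    """Sum the segmentation loss over all intermediate (side) predictions.

    Assumes each side output is smaller than the target by an integer factor.
    """
    weights = weights or [1.0] * len(side_outputs)
    loss = 0.0
    for weight, pred in zip(weights, side_outputs):
        factor = len(target) // len(pred)
        loss += weight * bce(upsample_nearest(pred, factor), target)
    return loss


# A 4x4 ground-truth mask, one coarse (2x2) and one fine (4x4) prediction.
target = [[0, 0, 1, 1] for _ in range(4)]
coarse = [[0.1, 0.9], [0.1, 0.9]]
fine = [[0.1, 0.1, 0.9, 0.9] for _ in range(4)]
loss = deeply_supervised_loss([coarse, fine], target)
```

A branched multi-task framework could be written the same way, with one loss term per auxiliary branch (e.g., boundary or texture) added to the segmentation term.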

3.1.2 Concealed instance ranking

There has been limited research conducted on this topic. Lv et al. [24] observed for the first time that existing COS approaches could not quantify the difficulty level of camouflage. Regarding this issue, they used an eye tracker to create a new dataset, called CAM-LDR [33], that contains instance segmentation masks, fixation labels, and ranking labels. They also proposed two unified frameworks, LSR [24] and its extension LSR+ [33], to simultaneously learn triple tasks, i.e., localizing, segmenting, and ranking camouflaged objects. The insight behind it is that discriminative localization regions could guide the segmentation of the full scope of camouflaged objects, and then, the detectability of different camouflaged objects could be inferred by the ranking task.

3.1.3 Concealed instance segmentation

This task advances the COS task from the regional to the instance level and is a relatively new field compared with COS. Le et al. [36] built a new CIS benchmark, CAMO++, by extending the previous CAMO [21] dataset. They also proposed a camouflage fusion learning strategy to fine-tune existing instance segmentation models (e.g., Mask R-CNN [34]) by learning image contexts. Based on instance benchmarks such as COD10K [20] and NC4K [24], the first one-stage transformer framework, OSFormer [35], was proposed for this field by introducing two core designs: a location-sensing transformer and coarse-to-fine fusion. Recently, Luo et al. [146] proposed segmenting camouflaged instances with two designs: a pixel-level camouflage decoupling module and an instance-level camouflage suppression module.

3.1.4 Concealed object counting

Sun et al. [39] recently introduced a new challenge for the community called indiscernible object counting (IOC), which involves counting objects that are difficult to distinguish from their surroundings. They created IOCfish5K, a large-scale dataset containing high-resolution images of underwater scenes with many indiscernible objects (focusing on fish) and dense annotations to address the lack of appropriate datasets for this challenge. They also proposed a baseline model called IOCFormer by integrating density-based and regression-based methods in a unified framework.

Based on the above summaries, the COS task is experiencing a rapid development period, resulting in numerous contemporary publications each year. However, very few solutions have been proposed for the COL, CIR, and CIS tasks. This suggests that these fields remain under-explored and offer significant room for further research. Notably, many previous studies are available as references (such as saliency prediction [84], salient object subitizing [68], and salient instance segmentation [82]), providing a solid foundation for understanding these tasks from a camouflaged perspective.

3.2 Video-level CSU models

Video-level CSU comprises two lines of work: detecting and segmenting camouflaged objects in videos. Refer to Table 2 for details.

3.2.1 Video concealed object detection

Most works [156, 158] formulated this topic as a degraded form of the segmentation task due to the scarcity of pixel-wise annotations. As is common practice, they were trained on segmentation datasets (e.g., DAVIS [161] and FBMS [162]) and then evaluated for generalizability on a video camouflaged object detection dataset, MoCA [40]. These methods consistently extract offline optical flow as motion guidance for the segmentation task, but they differ in their learning strategies, such as fully-supervised learning with real [40, 157, 160] or synthetic [155, 158] data and self-supervised learning [156, 159].

3.2.2 Video concealed object segmentation

Xie et al. [154] proposed the first work on camouflaged object discovery in videos. They used a pixel-trajectory recurrent neural network to cluster foreground motion for segmentation. However, this work is limited to a small-scale dataset, CAD [163]. Recently, based upon the localization-level dataset MoCA [40] with bounding box labels, Cheng et al. [42] extended this field by creating a large-scale VCOS benchmark MoCA-Mask with pixel-level masks. They also introduced a two-stage baseline SLTNet to implicitly utilize motion information.

From what we have reviewed above, the current approaches for VCOS tasks are still in a nascent state of development. Several concurrent works in well-established video segmentation fields (e.g., self-supervised correspondence learning [164–168], unified framework for different motion-based tasks [169–171]) point the way to further exploration. In addition, high-level semantic understanding in camouflaged scenes, such as semantic segmentation and instance segmentation, remains a research gap that merits attention.

4 CSU datasets

In recent years, various datasets have been collected for both image- and video-level CSU tasks. In Table 3, we summarize the features of the representative datasets.

4.1 Image-level datasets

• CAMO-COCO [21] is tailor-made for COS tasks with 2500 image samples across eight categories, divided into two sub-datasets, i.e., CAMO with camouflaged objects and MS-COCO with non-camouflaged objects. Both CAMO and MS-COCO contain 1250 images with a split of 1000 for training and 250 for testing.

• NC4K [24] is currently the largest testing set for evaluating COS models. It consists of 4121 camouflaged images sourced from the Internet and can be divided into two primary categories: natural scenes and artificial scenes. In addition to the images, this dataset also provides localization labels that include both object-level segmentation and instance-level masks, making it a valuable resource for researchers working in this field. In a recent study by Lv et al. [24], an eye tracker was utilized to collect fixation information for each image. As a result, a CAM-FR dataset of 2280 images was created, with 2000 images used for training and 280 for testing. The dataset was annotated with three types of labels: localization, ranking, and instance labels.

• CAMO++ [36] is a newly released dataset that contains 5500 samples, all of which have undergone hierarchical pixel-wise annotation. The dataset is divided into two parts: camouflaged samples (1700 images for training and 1000 for testing) and non-camouflaged samples (1800 images for training and 1000 for testing).

• COD10K [20, 23] is currently the largest-scale dataset, featuring a wide range of camouflaged scenes. It contains 10,000 images from multiple open-access photography websites, covering 10 super-classes and 78 sub-classes. Of these images, 5066 are camouflaged, 1934 are non-camouflaged pictures, and 3000 are background images. The camouflaged subset of COD10K is annotated using different labels such as category labels, bounding boxes, object-level masks, and instance-level masks, providing a diverse set of annotations.

• CAM-LDR [33] comprises 4040 training and 2026 testing samples. These samples were selected from commonly used hybrid training datasets (i.e., CAMO with 1000 training samples and COD10K with 3040 training samples), along with the testing dataset (i.e., COD10K with 2026 testing samples). CAM-LDR is an extension of NC4K [24] that includes four types of annotations: localization labels, ranking labels, object-level segmentation masks, and instance-level segmentation masks. The ranking labels are categorized into six difficulty levels: background, easy, medium1, medium2, medium3, and hard.

• S-COD [142] is the first dataset designed specifically for the COS task under the weakly-supervised setting. The dataset includes 4040 training samples, with 3040 samples selected from COD10K and 1000 from CAMO. These samples were re-labeled using scribble annotations that provide a rough outline of the primary structure based on first impressions, without pixel-wise ground-truth information.

• IOCfish5K [39] is a distinct dataset that focuses on counting instances of fish in camouflaged scenes. This COC dataset comprises 5637 high-resolution images collected from YouTube, with 659,024 center points annotated. The dataset is divided into three subsets, with 3137 images allocated for training, 500 for validation, and 2000 for testing.

Remarks

In summary, three datasets (CAMO, COD10K, and NC4K) are commonly used as benchmarks to evaluate concealed object segmentation (COS) approaches, with the experimental protocols described in Section 5.2. For the concealed instance segmentation (CIS) task, two datasets (COD10K and NC4K) containing instance-level segmentation masks can be utilized. The CAM-LDR dataset, which provides fixation information and three types of annotations collected from a physical eye tracker device, is suitable for various brain-inspired explorations in computer vision. Additionally, there are two new datasets in CSU: S-COD, designed for weakly-supervised COS, and IOCfish5K, focused on counting objects within camouflaged scenes.

4.2 Video-level datasets

• CAD [163] is a small dataset comprising nine short video clips and 836 frames. The annotation strategy used in this dataset is sparse, with camouflaged objects being annotated every five frames. As a result, there are 191 segmentation masks available in the dataset.

• MoCA [40] is a comprehensive video database from YouTube that aims to detect moving camouflaged animals. It consists of 141 video clips featuring 67 categories and comprises 37,250 high-resolution frames with corresponding bounding box labels for 7617 instances.

• MoCA-Mask [42], an extension of the MoCA dataset [40], provides human-annotated segmentation masks every five frames based on the MoCA dataset [40]. MoCA-Mask is divided into two parts: a training set consisting of 71 short clips (19,313 frames with 3946 segmentation masks) and an evaluation set containing 16 short clips (3626 frames with 745 segmentation masks). To label those unlabeled frames, pseudo-segmentation labels were synthesized using a bidirectional optical flow-based strategy [172].

Remarks

The MoCA dataset is currently the largest collection of videos with concealed objects, while it only offers detection labels. As a result, researchers in the community [156, 158] typically assess the performance of well-trained segmentation models by converting segmentation masks into detection bounding boxes. Recently, there has been a shift towards video segmentation in concealed scenes with the introduction of MoCA-Mask. Despite these advancements, the quantity and quality of data annotations remain insufficient for constructing a reliable video model that can effectively handle complex concealed scenarios.
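The mask-to-box conversion used when evaluating segmentation models on MoCA can be sketched as follows. The output follows the \((x,y,w,h)\) convention of Section 2.1.2, with \((x,y)\) as the top-left corner. Taking the tight box around all foreground pixels is an assumption; the cited works may post-process masks differently (e.g., keeping only the largest connected region).

```python
def mask_to_bbox(mask):
    """Convert a binary mask (rows of 0/1) to a tight (x, y, w, h) box.

    (x, y) is the top-left corner. Returns None for an empty mask.
    """
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, value in enumerate(row) if value]
    if not rows:
        return None
    x0, x1 = min(cols), max(cols)
    y0, y1 = rows[0], rows[-1]
    return (x0, y0, x1 - x0 + 1, y1 - y0 + 1)


# A small hypothetical segmentation mask.
mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
bbox = mask_to_bbox(mask)
```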

5 CSU benchmarks

In this investigation, our benchmarking is built on COS tasks since this topic is relatively well-established and offers a variety of competing approaches. The following sections provide details on the evaluation metrics (Section 5.1), benchmarking protocols (Section 5.2), quantitative analyses (Section 5.3, Section 5.4, Section 5.5), and qualitative comparisons (Section 5.6).

5.1 Evaluation metrics

As suggested in [23], there are five commonly used metrics available for COS evaluation. We compare a prediction mask P with its corresponding ground-truth mask G at the same image resolution.

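• MAE (mean absolute error, \(\mathcal{M}\)) is a conventional pixel-wise measure, which is defined as:

\[\mathcal{M} = \frac{1}{W \times H} \sum_{x=1}^{W} \sum_{y=1}^{H} \bigl|\mathbf{P}(x,y) - \mathbf{G}(x,y)\bigr|,\]

where W and H are the width and height of G respectively, and \((x,y)\) are pixel coordinates in G.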

• F-measure can be defined as:

\[F_{\beta} = \frac{(1+\beta^{2})\,\text{Precision} \times \text{Recall}}{\beta^{2}\,\text{Precision} + \text{Recall}},\]

where \(\beta ^{2}=0.3\) is used to emphasize the precision value over the recall value, as recommended in [90]. Two other metrics are derived from:

\[\text{Precision} = \frac{|\mathbf{P}(T) \cap \mathbf{G}|}{|\mathbf{P}(T)|}, \qquad \text{Recall} = \frac{|\mathbf{P}(T) \cap \mathbf{G}|}{|\mathbf{G}|},\]

where \(\mathbf{P}(T)\) is a binary mask obtained by thresholding the non-binary predicted map P with a threshold value \(T \in [0,255]\). The symbol \(|\cdot |\) calculates the total area of the mask inside the map. Therefore, it is possible to convert a non-binary prediction mask into a series of binary masks with threshold values ranging from 0 to 255. By iterating over all thresholds, three metrics are obtained with maximum (\(F_{\beta}^{\mathrm{mx}}\)), mean (\(F_{\beta}^{\mathrm{mn}}\)), and adaptive (\(F_{\beta}^{\mathrm{ad}}\)) values of the F-measure.

• Enhanced-alignment measure (\(E_{\phi}\)) [180, 181] is a recently proposed binary foreground evaluation metric, which considers both local and global similarity between two binary maps. Its formulation is defined as follows:

\[E_{\phi} = \frac{1}{W \times H} \sum_{x=1}^{W} \sum_{y=1}^{H} \phi \bigl[\mathbf{P}(x,y), \mathbf{G}(x,y)\bigr],\]

where ϕ is the enhanced-alignment matrix. Similar to \(F_{\beta}\), this metric also includes three values computed over all the thresholds, i.e., maximum (\(E_{\phi}^{\mathrm{mx}}\)), mean (\(E_{\phi}^{\mathrm{mn}}\)), and adaptive (\(E_{\phi}^{\mathrm{ad}}\)) values.

• Structure measure (\(\mathcal{S}_{\alpha}\)) [182, 183] is used to measure the structural similarity between a non-binary prediction map and a ground-truth mask:

\[\mathcal{S}_{\alpha} = \alpha \,\mathcal{S}_{o}(P,G) + (1-\alpha )\,\mathcal{S}_{r}(P,G),\]

where α balances the object-aware similarity \(\mathcal{S}_{o}\) and region-aware similarity \(\mathcal{S}_{r}\). As in the original paper, we use the default setting \(\alpha =0.5\).
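To make the pixel-wise metrics above concrete, the following NumPy sketch computes \(\mathcal{M}\), the three \(F_{\beta}\) summaries, and a simplified \(E_{\phi}\) for a single image. It is an illustration under stated assumptions, not the official evaluation code: the function names are ours, the adaptive threshold is taken as twice the mean prediction value (a common convention that the text does not specify), and the \(E_{\phi}\) helper uses the alignment form from [180] without the special handling of empty or full ground-truth masks. The structure measure is omitted because its object-aware and region-aware terms are not defined in this section.

```python
import numpy as np

BETA2 = 0.3  # beta^2 as stated in the text

def mae(pred, gt):
    """Mean absolute error between a map in [0, 1] and a binary mask."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    return float(np.mean(np.abs(pred - gt)))

def f_beta(binary_pred, gt):
    """F-measure of one binary prediction against a binary ground truth."""
    tp = np.logical_and(binary_pred, gt).sum()
    precision = tp / max(binary_pred.sum(), 1)
    recall = tp / max(gt.sum(), 1)
    denom = BETA2 * precision + recall
    return float((1 + BETA2) * precision * recall / denom) if denom > 0 else 0.0

def f_beta_summary(pred, gt):
    """Return (maximum, mean, adaptive) F-measure for a map in [0, 1]."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt).astype(bool)
    pred255 = np.round(pred * 255)
    scores = [f_beta(pred255 >= t, gt) for t in range(256)]
    # Assumption: adaptive threshold = twice the mean prediction, capped at 1.
    adaptive_t = min(2.0 * float(pred.mean()), 1.0)
    return max(scores), float(np.mean(scores)), f_beta(pred >= adaptive_t, gt)

def e_measure(binary_pred, gt, eps=1e-12):
    """Simplified enhanced-alignment score of two binary maps."""
    fm = np.asarray(binary_pred, dtype=np.float64)
    g = np.asarray(gt, dtype=np.float64)
    bias_fm, bias_g = fm - fm.mean(), g - g.mean()
    align = 2 * bias_fm * bias_g / (bias_fm ** 2 + bias_g ** 2 + eps)
    return float(np.mean((1 + align) ** 2 / 4))

# Toy 2 x 2 example (hypothetical values, not benchmark data).
gt = np.array([[1, 0], [1, 0]])
pred = np.array([[0.8, 0.2], [0.4, 0.0]])
f_mx, f_mn, f_ad = f_beta_summary(pred, gt)
print(mae(pred, gt), f_mx, f_mn, f_ad, e_measure(pred >= 0.5, gt))
```

In a benchmark, these per-image values are averaged over the whole testing set; for the maximum and mean variants, evaluation toolboxes differ on whether the per-threshold scores are averaged across images before or after taking the maximum, so the per-image summaries here should be read as a simplification.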

5.2 Experimental protocols

As suggested by Fan et al. [23], all competing approaches in the benchmarking analysis were trained on a hybrid dataset comprising the training portions of the COD10K [20] and CAMO [21] datasets, totaling 4040 samples. The models were then evaluated on three widely used benchmarks: COD10K’s testing portion with 2026 samples [20], CAMO with 250 samples [21], and NC4K with 4121 samples [24].

5.3 Quantitative analysis of CAMO

As reported in Table 4, we evaluated 36 deep learning-based approaches on the CAMO testing dataset [21] using various metrics. These models were classified into two groups based on the backbones they used: 32 convolutional-based and four transformer-based models. For those models using convolutional-based backbones, several interesting findings are observed and summarized as follows.

Table 4 notation: ↑/↓ indicates that higher/lower scores are better. If the results are unavailable since the code has not been made public, we use a hyphen (−) to denote it. We will follow these notations in subsequent tables unless otherwise specified.

• CamoFormer-C [148] achieved the best performance on CAMO with the ConvNeXt [176] based backbone, even surpassing some metrics produced by transformer-based methods, such as \(\mathcal{S}_{\alpha}\) value: 0.859 (CamoFormer-C) vs. 0.856 (DTINet [133]) vs. 0.849 (HitNet [143]). However, CamoFormer-R [148] with the ResNet-50 backbone was unable to outperform competitors with the same backbone, such as using multi-scale zooming (ZoomNet [134]) and iterative refinement (SegMaR [136]) strategies.

• For those Res2Net-based models, FDNet [135] achieved the top performance on CAMO with high-resolution input of \(416^{2}\). In addition, SINetV2 [23] and FAPNet [127] also achieved satisfactory results using the same backbone but with a small input size of \(352^{2}\).

• DGNet [28] is an efficient model that stands out with its top-3 performance despite having only 19.22M parameters and 1.20G computation costs, compared to heavier models such as JSCOD [108] (121.63M) and PopNet [149] (181.05M). Its performance-efficiency balance makes it a promising architecture for further exploration.

• Interestingly, CRNet [142] – a weakly-supervised model – competes favorably with the early fully-supervised model SINet [20]. This suggests that there is room for developing models to bridge the gap towards better data-efficient learning, e.g., self-/semi-supervised learning.

Furthermore, transformer-based methods can significantly improve performance due to their superior long-range modeling capabilities. Here, we test four transformer-based models on the CAMO testing dataset, yielding three noteworthy findings:

• CamoFormer-S [148] utilizes a Swin transformer design to enhance the hierarchical modeling ability on concealed content, resulting in superior performance across the entire CAMO benchmark. We also observed that the PVT-based variant CamoFormer-P [148] achieved comparable results but with fewer parameters, i.e., 71.40M (CamoFormer-P) vs. 97.27M (CamoFormer-R).

• DTINet [133] is a dual-branch network that utilizes the MiT-B5 semantic segmentation model from SegFormer [177] as backbone. Despite having 266.33M parameters, it has not delivered impressive performance due to the challenges of balancing these two heavy branches. Nevertheless, this attempt defies our preconceptions and inspires us to investigate the generalizability of semantic segmentation models in concealed scenarios.

• We also investigated the impact of input resolution on the performance of different models. HitNet [143] uses a high-resolution image of \(704^{2}\), which can improve the detection of small targets, but at the expense of increased computation costs. Similarly, convolutional-based approaches such as ZoomNet [134] achieved impressive results by taking multiple inputs with different resolutions (the largest being \(576^{2}\)) to enhance segmentation performance. However, not all models benefit from this approach. For instance, PopNet [149] with a resolution of \(480^{2}\) failed to outperform SINetV2 [23] with \(352^{2}\) in all metrics. This observation raises two critical questions: should high-resolution be used in concealed scenarios, and how can we develop an effective strategy for detecting concealed objects of varying sizes? We will propose potential solutions to these questions and present an interesting analysis of the COD10K in Section 5.5.
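To give a feel for the resolution trade-off discussed in this section, the sketch below compares the number of input pixels at each square resolution quoted in the text against the 352 × 352 setting. Pixel count is only a first-order proxy for computation and is an assumption of this example: convolutional cost grows roughly linearly with it, whereas global self-attention can grow faster, so these ratios are not measured FLOPs. The helper name `relative_pixels` is ours.

```python
BASELINE = 352  # side length used by SINetV2 and FAPNet in the text

def relative_pixels(side, baseline=BASELINE):
    """Ratio of pixel counts between two square inputs."""
    return (side * side) / (baseline * baseline)

# Square input sides quoted in Section 5.3.
input_sides = {
    "FDNet": 416,
    "PopNet": 480,
    "ZoomNet (largest scale)": 576,
    "HitNet": 704,
}
ratios = {name: relative_pixels(side) for name, side in input_sides.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x the pixels of a 352 x 352 input")
```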

5.4 Quantitative analysis of NC4K

Compared to the CAMO dataset, the NC4K [24] dataset has a larger data scale and sample diversity, so the relative performance of the models may change subtly. Table 5 presents quantitative results on the current largest COS testing dataset with 4121 samples. The benchmark includes 28 convolutional-based and four transformer-based approaches. Our observations are summarized as follows.

• CamoFormer-C [148] still outperformed all methods on NC4K. In contrast to the awkward situation observed on CAMO as described in Section 5.3, the ResNet-50 based CamoFormer-R [148] now performed better than two other competitors (i.e., SegMaR [136] and ZoomNet [134]) on NC4K. These results confirm the effectiveness of CamoFormer’s decoder design in mapping latent features back to the prediction space, particularly for more complicated scenarios.

• DGNet [28] shows less promising results on the challenging NC4K dataset, possibly due to its restricted modeling capability with small model parameters. Nevertheless, this drawback provides an opportunity for modification since the model has a lightweight and simple architecture.

• While PopNet [149] may not perform well on the small-scale CAMO dataset, it has demonstrated potential on the challenging NC4K dataset. This indicates that using an extra network to synthesize depth priors would be helpful for challenging samples. When compared to SINetV2 based on Res2Net-50 [23], PopNet has a heavier design (188.05M vs. 26.98M) and larger input resolution (\(512^{2}\) vs. \(352^{2}\)), but only improves the \(E_{\phi}^{\mathrm{mn}}\) value by 0.6%.

• Regarding the CamoFormer [148] model, there is now a noticeable difference in performance between its two variants. Specifically, the CamoFormer-S variant based on Swin-B lags behind while the CamoFormer-P variant based on PVTv2-B4 performs better.

5.5 Quantitative analysis of COD10K

In Table 6, we present a performance comparison of 36 competitors, including 32 convolutional-based models and four transformer-based models, on the COD10K dataset with diverse concealed samples. Based on our evaluation, we have made the following observations.

• CamoFormer-C [148], which has a robust backbone, remains the best-performing method among all convolutional-based methods. Similar to its performance on NC4K, CamoFormer-R [148] has once again outperformed strong competitors with identical backbones such as SegMaR [136] and ZoomNet [134].

• Similar to its performance on the NC4K dataset, PopNet [149] achieved consistently competitive results on the COD10K dataset, ranking second only to CamoFormer-C [148]. We believe that prior knowledge of the depth of the scene plays a crucial role in enhancing the understanding of concealed environments. This insight will motivate us to investigate more intelligent ways to learn structural priors, such as incorporating multi-task learning or heuristic methods into our models.

• Notably, HitNet [143] achieved the highest performance on the COD10K benchmark, outperforming models with stronger backbones such as Swin-B and PVTv2-B4. To understand why this is the case, we calculated the average resolution of all samples in the CAMO (W = 693.89 and H = 564.22), NC4K (W = 709.19 and H = 529.61), and COD10K (W = 963.34 and H = 740.54) datasets. We found that the testing set for COD10K has the highest overall resolution, which suggests that models utilizing higher resolutions or multi-scale modeling would benefit from this characteristic. Therefore, HitNet is an excellent choice for detecting concealed objects in scenarios where high-resolution images are available.
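The resolution statistic used in the last observation is a plain average of image widths and heights. A minimal sketch is given below; the helper `average_resolution` and the toy sizes are hypothetical, and in practice the (width, height) pairs would be read from the image files of each testing set. The second part simply ranks the three averages reported in the text by their implied pixel count.

```python
from statistics import mean

def average_resolution(sizes):
    """Mean width and height over an iterable of (width, height) pairs."""
    sizes = list(sizes)
    if not sizes:
        raise ValueError("no image sizes given")
    return mean(w for w, _ in sizes), mean(h for _, h in sizes)

# Hypothetical sizes, for illustration only; not taken from any dataset.
toy_sizes = [(640, 480), (1024, 768), (800, 600)]
avg_w, avg_h = average_resolution(toy_sizes)

# Averages reported in the text (W, H).
reported = {
    "CAMO": (693.89, 564.22),
    "NC4K": (709.19, 529.61),
    "COD10K": (963.34, 740.54),
}
largest = max(reported, key=lambda name: reported[name][0] * reported[name][1])
print(avg_w, avg_h, largest)
```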

5.6 Qualitative comparison

This section visually assesses the performance of current top models on challenging and complex samples that are prone to failure. We compare qualitative results predicted by ten groups of top-performing models, including six convolutional-based models (i.e., CamoFormer-C [148], DGNet [28], PopNet [149], ZoomNet [134], FDNet [135] and SINetV2 [23]), two transformer-based models (i.e., CamoFormer-S [148] and HitNet [143]), as well as two other competitors (i.e., the earliest baseline SINet [20] and a weakly-supervised model CRNet [142]). All samples are selected from the COD10K testing dataset according to seven fine-grained attributes. The qualitative comparison is presented in Fig. 4, revealing several interesting findings.

Figure 4 Qualitative results of ten COS approaches. Refer to Section 5.6 for descriptions of the visual attributes in each column

• The attribute of multiple objects (MO) poses a challenge due to the high false-negative rate in current top-performing models. As depicted in the first column of Fig. 4, only two out of ten models could locate the white flying bird approximately, as indicated by the red circle in the GT mask. These two models are CamoFormer-S [148], which employs a robust transformer-based encoder, and FDNet [135], which utilizes a frequency-domain learning strategy.

• The models we tested can accurately detect big objects (BO) by precisely locating the target’s main part. However, these models struggle to identify smaller details, such as the toad’s claws highlighted by the red circles in the second column of Fig. 4.

• The small object (SO) attribute presents a challenge as it only occupies a small area in the image, typically less than 10% of the total pixels as reported by COD10K [20]. As shown in the third column of Fig. 4, only two models (CamoFormer-S and CamoFormer-C [148]) can detect a cute cat lying on the ground at a distance. Such a difficulty arises for two main reasons. First, models struggle to differentiate small objects from complex backgrounds or other irrelevant objects in an image. Second, detectors may miss small regions due to down-sampling operations caused by low-resolution inputs.

• The out-of-view (OV) attribute refers to objects partially outside the image boundaries, leading to incomplete representation. To address this issue, a model should have a better holistic understanding of the concealed scene. As shown in the fourth column of Fig. 4, both CamoFormer-C [148] and FDNet [135] can handle the OV attribute and maintain the object’s integrity. However, two transformer-based models failed to do so. This observation has inspired us to explore more efficient methods, such as local modeling within convolutional frameworks and cross-domain learning strategies.

• The shape complexity (SC) attribute indicates that an object contains thin parts, such as an animal’s foot. In the fifth column of Fig. 4, the stick insect’s feet are a good example of this complexity, being elongated and slender and thus difficult to predict accurately. Only HitNet [143] with high-resolution inputs can predict the bottom-right foot (indicated by a red circle).

• The attribute of occlusion (OC) refers to the partial occlusion of objects, which is a common challenge in general scenes [184]. In Fig. 4, for example, the sixth column shows two owls partially occluded by a wire fence, causing their visual regions to be separated. Unfortunately, most of the models presented were unable to handle such cases.

• The indefinable boundary (IB) attribute is difficult to address due to the uncertainty between the foreground and background. The last column of Fig. 4 shows a matting-level sample.

• In the last two rows of Fig. 4, we display the predictions generated by SINet [20], which was our earliest baseline model. Current models have significantly improved location accuracy, boundary details, and other aspects. Additionally, CRNet [142], a weakly-supervised method with only weak label supervision, can effectively locate target objects to meet satisfactory standards.
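The small object (SO) attribute discussed above is defined through the share of image pixels covered by the object. A minimal sketch of that ratio is given below; the function names are ours, and treating the "typically less than 10%" remark as a hard cut-off is an assumption of this example rather than the exact rule used by COD10K [20].

```python
import numpy as np

SMALL_OBJECT_LIMIT = 0.10  # assumption: the 10% figure used as a hard cut-off

def foreground_ratio(mask):
    """Fraction of pixels marked as foreground in a binary mask."""
    mask = np.asarray(mask).astype(bool)
    return float(mask.sum()) / mask.size

def is_small_object(mask, limit=SMALL_OBJECT_LIMIT):
    """True if a non-empty foreground covers less than `limit` of the image."""
    ratio = foreground_ratio(mask)
    return 0.0 < ratio < limit

# Toy mask: a 20 x 20 object in a 100 x 100 image (400 of 10,000 pixels).
mask = np.zeros((100, 100), dtype=np.uint8)
mask[10:30, 10:30] = 1
ratio = foreground_ratio(mask)
print(ratio, is_small_object(mask))
```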

6 Discussion and outlook

Based on our literature review and experimental analysis, we discuss five challenges and potential CSU-related directions in this section.

• Annotation-efficient learning. Deep learning techniques have significantly advanced the field of CSU. However, conventional supervised deep learning is data-hungry and resource-consuming. In practical scenarios, we hope the models can work on limited resources and have good generalizability. Thus developing effective learning strategies for CSU tasks is a promising direction, e.g., the weakly-supervised strategy in CRNet [142].

• Domain adaptation. Camouflaged samples are generally collected from natural scenes. Thus, deploying the models to detect concealed objects in other domains, such as autonomous driving scenarios, is challenging. Recent practice demonstrates that various techniques can be used to alleviate this problem, e.g., domain adaptation [185, 186], transfer learning [187], few-shot learning [188], and meta-learning [189].

• High-fidelity synthetic dataset. To alleviate algorithmic biases, increasing the diversity and scale of data is crucial. The rapid development of artificial intelligence generated content (AIGC) [190] and deep generative models, such as generative adversarial networks [191–193] and diffusion models [194, 195], is making it easier to create synthetic data for general domains. Recently, to address the scarcity of multi-pattern training images, Luo et al. [107] proposed a diffusion-based image generation framework that generates salient objects on a camouflaged sample while preserving its original label. Therefore, a model should be capable of distinguishing between camouflaged and salient objects to achieve a robust feature representation.

• Neural architecture search. Automatic network architecture search (NAS) is a promising research direction that can discover optimal network architectures for superior performance on a given task. In the context of concealment, NAS can identify more effective network architectures to handle complex background scenes, highly variable object appearances, and limited labeled data. This can lead to the development of more efficient and effective network architectures, resulting in improved accuracy and efficiency. Combining NAS with other research directions, such as domain adaptation and data-efficient learning, can further enhance the understanding of concealed scenes. These avenues of exploration hold significant potential for advancing the state-of-the-art and warrant further investigation in future research.

• Large model and prompt engineering. This topic has gained popularity and has even become a direction for the natural language processing community. Recently, the segment anything model (SAM) [196] has revolutionized computer vision algorithms, although it has limitations [197] in unprompted settings on several concealed scenarios. One can leverage the prompt engineering paradigm to simplify workflows using a well-trained robust encoder and task-specific adaptations, such as task-specific prompts and multi-task prediction heads. This approach is expected to become a future trend within the computer vision community. Large language models (LLMs) have brought both new opportunities and challenges to AI, moving further towards artificial general intelligence. However, it is challenging for academia to train such resource-consuming large models. A promising paradigm could be to use state-of-the-art deep learning CSU models as domain experts, with large models working as an external component that assists the expert models by providing auxiliary decisions, representations, etc.

7 Defect segmentation dataset

Industrial defects usually originate from undesirable conditions in the production process, e.g., mechanical impact, workpiece friction, chemical corrosion, and other unavoidable physical conditions. They typically appear as unexpected patterns or outliers, e.g., surface scratches, spots, and holes on industrial devices; color differences and indentations on fabric surfaces; and impurities, breakage, and stains on material surfaces. Previous works have achieved promising advances in identifying visual defects with vision-based techniques, such as classification [198–200], detection [201–203], and segmentation [204–206]. However, these techniques assume that defects are easily detected and ignore challenging defects that are “seamlessly” embedded in their surroundings. We therefore collected a new multi-scene benchmark, named CDS2K, for the concealed defect segmentation task, whose samples were selected from existing industrial defect databases.

7.1 Dataset organization

To create a dataset of superior quality, we established three principles for selecting data: (1) The chosen sample should include at least one defective region, which will serve as a positive example. (2) The defective regions should have a pattern similar to the background, making them difficult to identify. (3) We also select normal cases as negative examples to provide a contrasting perspective with the positive examples. These samples were selected from the following well-known defect segmentation databases.

• MVTecAD [207, 208] contains several positive and negative samples for unsupervised anomaly detection. We manually selected 748 positive and 746 negative samples with concealed patterns from two main categories: (1) object category as in the 1st row of Fig. 5: pill, screw, tile, transistor, wood, and zipper. (2) texture category as in the 2nd row of Fig. 5: bottle, capsule, carpet, grid, leather, and metal nut. The number of positive/negative samples is shown with yellow circles in Fig. 5.

Figure 5 Sample gallery of our CDS2K. It is collected from five sub-databases: (a)–(l) MVTecAD, (m)–(o) NEU, (p) CrackForest, (q) KolektorSDD, and (r) MagneticTile. The defective regions are highlighted with red rectangles. (Top-Right) Word cloud visualization of CDS2K. (Bottom) The number of positive/negative samples in each category of our CDS2K

• NEU provides three different databases: oil pollution defect images [209] (OPDI), spot defect images [210] (SDI), and steel pit defect images [211] (SPDI). As displayed in the third row (green circles) of Fig. 5, we selected 10, 20, and 15 positive samples from these databases, respectively.

• CrackForest [212, 213] is a densely-annotated road crack image database for the health monitoring of urban road surfaces. We selected 118 samples with concealed patterns from it, and the samples are shown in the third row (red circle) of Fig. 5.

• KolektorSDD [205] was collected and annotated by Kolektor Group. It contains several defective and non-defective surfaces from a controlled industrial environment in a real-world case. We manually selected 31 positive and 30 negative samples with concealed patterns, and the samples are shown in the third row (blue circle) of Fig. 5.

• The MagneticTile defect dataset [214] contains six common magnetic tile defects and corresponding dense annotations. We picked 388 positive and 386 negative examples, displayed as white circles in Fig. 5.

7.2 Dataset description

The CDS2K comprises 2492 samples, consisting of 1330 positive and 1162 negative instances. Three different human-annotated labels are provided for each sample – category, bounding box, and pixel-wise segmentation mask. Figure 6 illustrates examples of these annotations. The average ratio of defective regions for each category is presented in Table 7, which indicates that most of the defective regions are relatively small.
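As a quick consistency check, the per-source selections listed in Section 7.1 can be summed and compared with the totals stated above. The sketch assumes that no negative samples were drawn from NEU and CrackForest, since the text only reports positive selections for those two sources; the dictionary name `selected` is ours.

```python
# (positive, negative) samples selected from each source database.
selected = {
    "MVTecAD": (748, 746),
    "NEU": (10 + 20 + 15, 0),   # OPDI + SDI + SPDI; zero negatives is an assumption
    "CrackForest": (118, 0),    # zero negatives is an assumption
    "KolektorSDD": (31, 30),
    "MagneticTile": (388, 386),
}

positives = sum(pos for pos, _ in selected.values())
negatives = sum(neg for _, neg in selected.values())
total = positives + negatives
print(positives, negatives, total)
```

Under that assumption, the sums reproduce the 1330 positive, 1162 negative, and 2492 total samples reported for CDS2K.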

7.3 Evaluation on CDS2K

Here, we evaluate the generalizability of current cutting-edge COS models on the positive samples of CDS2K. Considering code availability, we chose four top-performing COS approaches: SINetV2 [23], DGNet [28], CamoFormer-P [148], and HitNet [143]. As reported in Table 8, our observations indicate that these models are not effective in handling cross-domain samples, highlighting the need for further exploration of the domain gap between natural scenes and downstream applications.

8 Conclusion

This paper aims to provide an overview of deep learning techniques tailored for concealed scene understanding (CSU). To help readers view the global landscape of this field, we have made four contributions. First, we provide a detailed survey of CSU, which includes its background, taxonomy, task-specific challenges, and advances in the deep learning era. To the best of our knowledge, this survey is the most comprehensive one to date. Second, we have created the largest and most up-to-date benchmark for concealed object segmentation (COS), which is a foundational and prosperous direction in CSU. This benchmark allows for a quantitative comparison of state-of-the-art techniques. Third, we collected the largest concealed defect segmentation dataset, CDS2K, by including hard cases from diverse industrial scenarios. We have also constructed a comprehensive benchmark to evaluate the generalizability of deep CSU in practical scenarios. Finally, we discuss open problems and potential directions for this community. We aim to encourage further research and development in this area.

We conclude from the following perspectives. (1) Model. The most common practice builds on a shared UNet-style architecture, which is enhanced by various attention modules. In addition, injecting extra priors and/or introducing auxiliary tasks improves performance, although many potential problems remain to be explored. (2) Training. Fully-supervised learning is the mainstream strategy in COS, but few researchers have addressed the challenge caused by insufficient data or labels. CRNet [142] is a good attempt to alleviate this issue. (3) Dataset. The existing datasets are still not sufficiently large and diverse. This community needs more concealed samples involving more domains (e.g., autonomous driving and clinical diagnosis). (4) Performance. Transformer- and ConvNeXt-based models outperform other competitors by a clear margin. The cost-performance tradeoff is still under-studied, for which DGNet [28] is a good attempt. (5) Metric. There are no well-defined metrics that account for the different camouflage degrees of different data to provide a comprehensive evaluation. This causes unfair comparisons.

Additionally, existing CSU methods focus on the appearance attributes of concealed scenes (e.g., color, texture, and boundary) to distinguish concealed objects without sufficient perception and output from the semantic perspective (e.g., relationships between objects). However, semantics is a good tool for bridging the human and machine intelligence gap. Therefore, beyond the visual space, semantic level awareness is key to the next-generation concealed visual perception. In the future, CSU models should incorporate various semantic associations, including integrating high-level semantics, learning vision-language knowledge [215], and modeling interactions across objects.

We hope that this survey provides a detailed overview for new researchers, presents a convenient reference for relevant experts, and encourages future research.

Availability of data and materials

Our sources including code and datasets can be accessed via GitHub: https://github.com/DengPingFan/CSU.

Code availability

Our code and datasets are available at https://github.com/DengPingFan/CSU, which will be updated continuously to watch and summarize the advancements in this rapidly evolving field.

Abbreviations

- CSU: concealed scene understanding
- COS: concealed object segmentation
- COL: concealed object localization
- CIR: concealed instance ranking
- CIS: concealed instance segmentation
- COC: concealed object counting
- VCOD: video concealed object detection
- VCOS: video concealed object segmentation
- SOD: salient object detection
- VSOD: video salient object detection
- IOC: indiscernible object counting
- AIGC: artificial intelligence generated content
- NAS: network architecture search
- SAM: segment anything model
- LLMs: large language models

References

Fan, D.-P., Zhang, J., Xu, G., Cheng, M.-M., & Shao, L. (2023). Salient objects in clutter. IEEE Transactions on Pattern Analysis and Machine Intelligence, 45(2), 2344–2366.

Zhao, H., Shi, J., Qi, X., Wang, X., & Jia, J. (2017). Pyramid scene parsing network. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 6230–6239). Los Alamitos: IEEE.

Ji, G.-P., Xiao, G., Chou, Y.-C., Fan, D.-P., Zhao, K., Chen, G., et al. (2022). Video polyp segmentation: a deep learning perspective. Machine Intelligence Research, 19(6), 531–549.

Ji, G.-P., Zhang, J., Campbell, D., Xiong, H., & Barnes, N. (2023). Rethinking polyp segmentation from an out-of-distribution perspective. arXiv preprint arXiv:2306.07792.

Fan, D.-P., Zhou, T., Ji, G.-P., Zhou, Y., Chen, G., Fu, H., et al. (2020). Inf-Net: automatic COVID-19 lung infection segmentation from CT images. IEEE Transactions on Medical Imaging, 39(8), 2626–2637.

Liu, L., Wang, R., Xie, C., Yang, P., Wang, F., Sudirman, S., et al. (2019). PestNet: an end-to-end deep learning approach for large-scale multi-class pest detection and classification. IEEE Access, 7, 45301–45312.

Rizzo, M., Marcuzzo, M., Zangari, A., Gasparetto, A., & Albarelli, A. (2023). Fruit ripeness classification: a survey. Artificial Intelligence in Agriculture, 7, 44–57.

Chu, H.-K., Hsu, W.-H., Mitra, N. J., Cohen-Or, D., Wong, T.-T., & Lee, T.-Y. (2010). Camouflage images. ACM Transactions on Graphics, 29(4), 51.

Boult, T. E., Micheals, R. J., Gao, X., & Eckmann, M. (2001). Into the woods: visual surveillance of noncooperative and camouflaged targets in complex outdoor settings. Proceedings of the IEEE, 89(10), 1382–1402.

Conte, D., Foggia, P., Percannella, G., Tufano, F., & Vento, M. (2009). An algorithm for detection of partially camouflaged people. In S. Tubaro & J.-L. Dugelay (Eds.), Proceedings of the sixth IEEE international conference on advanced video and signal based surveillance (pp. 340–345). Los Alamitos: IEEE.

Yin, J., Han, Y., Hou, W., & Li, J. (2011). Detection of the mobile object with camouflage color under dynamic background based on optical flow. Procedia Engineering, 15, 2201–2205.

Kim, S. (2015). Unsupervised spectral-spatial feature selection-based camouflaged object detection using VNIR hyperspectral camera. The Scientific World Journal, 2015, 1–8.

Zhang, X., Zhu, C., Wang, S., Liu, Y., & Ye, M. (2016). A Bayesian approach to camouflaged moving object detection. IEEE Transactions on Circuits and Systems for Video Technology, 27(9), 2001–2013.

Galun, M., Sharon, E., Basri, R., & Brandt, A. (2003). Texture segmentation by multiscale aggregation of filter responses and shape elements. In 2003 IEEE international conference on computer vision (pp. 716–723). Los Alamitos: IEEE.

Tankus, A., & Yeshurun, Y. (1998). Detection of regions of interest and camouflage breaking by direct convexity estimation. In 1998 IEEE workshop on visual surveillance (pp. 1–7). Los Alamitos: IEEE.

Tankus, A., & Yeshurun, Y. (2001). Convexity-based visual camouflage breaking. Computer Vision and Image Understanding, 82(3), 208–237.

Mittal, A., & Paragios, N. (2004). Motion-based background subtraction using adaptive kernel density estimation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 302–309). Los Alamitos: IEEE.

Liu, Z., Huang, K., & Tan, T. (2012). Foreground object detection using top-down information based on EM framework. IEEE Transactions on Image Processing, 21(9), 4204–4217.

Li, S., Florencio, D., Zhao, Y., Cook, C., & Li, W. (2017). Foreground detection in camouflaged scenes. In 2017 IEEE international conference on image processing (pp. 4247–4251). Los Alamitos: IEEE.

Fan, D.-P., Ji, G.-P., Sun, G., Cheng, M.-M., Shen, J., & Shao, L. (2020). Camouflaged object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 2774–2784). Los Alamitos: IEEE.

Le, T.-N., Nguyen, T. V., Nie, Z., Tran, M.-T., & Sugimoto, A. (2019). Anabranch network for camouflaged object segmentation. Computer Vision and Image Understanding, 184, 45–56.

Zhang, Q., Yin, G., Nie, Y., & Zheng, W.-S. (2020). Deep camouflage images. In Proceedings of the 34th AAAI conference on artificial intelligence (pp. 12845–12852). Menlo Park: AAAI Press.

Fan, D.-P., Ji, G.-P., Cheng, M.-M., & Shao, L. (2022). Concealed object detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(10), 6024–6042.

Lv, Y., Zhang, J., Dai, Y., Li, A., Liu, B., Barnes, N., et al. (2021). Simultaneously localize, segment and rank the camouflaged objects. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 11591–11601). Los Alamitos: IEEE.

Mei, H., Ji, G.-P., Wei, Z., Yang, X., Wei, X., & Fan, D.-P. (2021). Camouflaged object segmentation with distraction mining. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 8772–8781). Los Alamitos: IEEE.

Mei, H., Yang, X., Zhou, Y., Ji, G.-P., Wei, X., & Fan, D.-P. (2023). Distraction-aware camouflaged object segmentation. SCIENTIA SINICA Informationis. Advance online publication. https://doi.org/10.1360/SSI-2022-0138

Yu, L., Mei, H., Dong, W., Wei, Z., Zhu, L., Wang, Y., et al. (2022). Progressive glass segmentation. IEEE Transactions on Image Processing, 31, 2920–2933.

Ji, G.-P., Fan, D.-P., Chou, Y.-C., Dai, D., Liniger, A., & Van Gool, L. (2023). Deep gradient learning for efficient camouflaged object detection. Machine Intelligence Research, 20(1), 92–108.

Kulchandani, J. S., & Dangarwala, K. J. (2015). Moving object detection: review of recent research trends. In 2015 international conference on pervasive computing (pp. 1–5). Los Alamitos: IEEE.

Mondal, A. (2020). Camouflaged object detection and tracking: a survey. International Journal of Image and Graphics, 20(4), 2050028.

Bi, H., Zhang, C., Wang, K., Tong, J., & Zheng, F. (2022). Rethinking camouflaged object detection: models and datasets. IEEE Transactions on Circuits and Systems for Video Technology, 32(9), 5708–5724.

Caijuan, S., Bijuan, R., Ziwen, W., Jinwei, Y., & Ze, S. (2022). Survey of camouflaged object detection based on deep learning. Journal of Frontiers of Computer Science and Technology, 16(12), 2734.

Lv, Y., Zhang, J., Dai, Y., Li, A., Barnes, N., & Fan, D.-P. (2023). Towards deeper understanding of camouflaged object detection. IEEE Transactions on Circuits and Systems for Video Technology, 33(7), 3462–3476.

He, K., Gkioxari, G., Dollár, P., & Girshick, R. (2017). Mask R-CNN. In 2017 IEEE international conference on computer vision (pp. 2980–2988). Los Alamitos: IEEE.

Pei, J., Cheng, T., Fan, D.-P., Tang, H., Chen, C., & Van Gool, L. (2022). OSFormer: one-stage camouflaged instance segmentation with transformers. In S. Avidan, G. J. Brostow, M. Cissé, et al. (Eds.), Proceedings of the 17th European conference on computer vision (pp. 19–37). Berlin: Springer.

Le, T.-N., Cao, Y., Nguyen, T.-C., Le, M.-Q., Nguyen, K.-D., Do, T.-T., et al. (2022). Camouflaged instance segmentation in-the-wild: dataset, method, and benchmark suite. IEEE Transactions on Image Processing, 31, 287–300.

Xie, E., Wang, W., Ding, M., Zhang, R., & Luo, P. (2021). PolarMask++: enhanced polar representation for single-shot instance segmentation and beyond. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(9), 5385–5400.

Chen, H., Sun, K., Tian, Z., Shen, C., Huang, Y., & Yan, Y. (2020). BlendMask: top-down meets bottom-up for instance segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 8570–8578). Los Alamitos: IEEE.

Sun, G., An, Z., Liu, Y., Liu, C., Sakaridis, C., Fan, D.-P., et al. (2023). Indiscernible object counting in underwater scenes. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 13791–13801). Los Alamitos: IEEE.

Lamdouar, H., Yang, C., Xie, W., & Zisserman, A. (2020). Betrayed by motion: camouflaged object discovery via motion segmentation. In H. Ishikawa, C.-L. Liu, T. Pajdla, et al. (Eds.), Proceedings of the 15th Asian conference on computer vision (pp. 488–503). Berlin: Springer.

Jiao, L., Zhang, R., Liu, F., Yang, S., Hou, B., Li, L., et al. (2022). New generation deep learning for video object detection: a survey. IEEE Transactions on Neural Networks and Learning Systems, 33(8), 3195–3215.

Cheng, X., Xiong, H., Fan, D.-P., Zhong, Y., Harandi, M., Drummond, T., et al. (2022). Implicit motion handling for video camouflaged object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 13854–13863). Los Alamitos: IEEE.

Fan, D.-P., Cheng, M.-M., Liu, J.-J., Gao, S.-H., Hou, Q., & Borji, A. (2018). Salient objects in clutter: bringing salient object detection to the foreground. In V. Ferrari, M. Hebert, C. Sminchisescu, et al. (Eds.), Proceedings of the 15th European conference on computer vision (pp. 196–212). Berlin: Springer.

He, S., Lau, R. W. H., Liu, W., Huang, Z., & Yang, Q. (2015). SuperCNN: a superpixelwise convolutional neural network for salient object detection. International Journal of Computer Vision, 115(3), 330–344.

Li, G., & Yu, Y. (2015). Visual saliency based on multiscale deep features. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 5455–5463). Los Alamitos: IEEE.

Wang, L., Lu, H., Ruan, X., & Yang, M.-H. (2015). Deep networks for saliency detection via local estimation and global search. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3183–3192). Los Alamitos: IEEE.

Kim, J., & Pavlovic, V. (2016). A shape-based approach for salient object detection using deep learning. In B. Leibe, J. Matas, N. Sebe, et al. (Eds.), Proceedings of the 14th European conference on computer vision (pp. 455–470). Berlin: Springer.

Zeng, Y., Zhang, P., Zhang, J., Lin, Z., & Lu, H. (2019). Towards high-resolution salient object detection. In 2019 IEEE/CVF international conference on computer vision (pp. 7233–7242). Los Alamitos: IEEE.

Liu, N., & Han, J. (2016). DHSNet: deep hierarchical saliency network for salient object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 678–686). Los Alamitos: IEEE.

Wu, Z., Su, L., & Huang, Q. (2019). Cascaded partial decoder for fast and accurate salient object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3907–3916). Los Alamitos: IEEE.

Zhang, P., Wang, D., Lu, H., Wang, H., & Yin, B. (2017). Learning uncertain convolutional features for accurate saliency detection. In 2017 IEEE international conference on computer vision (pp. 212–221). Los Alamitos: IEEE.

Hou, Q., Cheng, M.-M., Hu, X., Borji, A., Tu, Z., & Torr, P. H. S. (2019). Deeply supervised salient object detection with short connections. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(4), 815–828.

Zhuge, M., Fan, D.-P., Liu, N., Zhang, D., Xu, D., & Shao, L. (2023). Salient object detection via integrity learning. IEEE Transactions on Pattern Analysis and Machine Intelligence, 45(3), 3738–3752.

Liu, Y., Zhang, Q., Zhang, D., & Han, J. (2019). Employing deep part-object relationships for salient object detection. In 2019 IEEE/CVF international conference on computer vision (pp. 1232–1241). Los Alamitos: IEEE.

Qi, Q., Zhao, S., Shen, J., & Lam, K.-M. (2019). Multi-scale capsule attention-based salient object detection with multi-crossed layer connections. In IEEE international conference on multimedia and expo (pp. 1762–1767). Los Alamitos: IEEE.

Liu, N., Zhang, N., Wan, K., Shao, L., & Han, J. (2021). Visual saliency transformer. In 2021 IEEE/CVF international conference on computer vision (pp. 4702–4712). Los Alamitos: IEEE.

Li, G., & Yu, Y. (2016). Deep contrast learning for salient object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 478–487). Los Alamitos: IEEE.

Tang, Y., & Wu, X. (2016). Saliency detection via combining region-level and pixel-level predictions with CNNs. In B. Leibe, J. Matas, N. Sebe, et al. (Eds.), Proceedings of the 14th European conference on computer vision (pp. 809–825). Berlin: Springer.

Wang, L., Lu, H., Wang, Y., Feng, M., Wang, D., Yin, B., et al. (2017). Learning to detect salient objects with image-level supervision. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3796–3805). Los Alamitos: IEEE.

Li, G., Xie, Y., & Lin, L. (2018). Weakly supervised salient object detection using image labels. In S. A. McIlraith, & K. Q. Weinberger (Eds.), Proceedings of the 32nd AAAI conference on artificial intelligence (pp. 7024–7031). Menlo Park: AAAI Press.

Cao, C., Huang, Y., Wang, Z., Wang, L., Xu, N., & Tan, T. (2018). Lateral inhibition-inspired convolutional neural network for visual attention and saliency detection. In S. A. McIlraith, & K. Q. Weinberger (Eds.), Proceedings of the 32nd AAAI conference on artificial intelligence (pp. 6690–6697). Menlo Park: AAAI Press.

Li, B., Sun, Z., & Guo, Y. (2019). SuperVAE: superpixelwise variational autoencoder for salient object detection. In Proceedings of the 33rd AAAI conference on artificial intelligence (pp. 8569–8576). Menlo Park: AAAI Press.

Zeng, Y., Zhuge, Y., Lu, H., Zhang, L., Qian, M., & Yu, Y. (2019). Multi-source weak supervision for saliency detection. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 6074–6083). Los Alamitos: IEEE.

Zhang, D., Han, J., & Zhang, Y. (2017). Supervision by fusion: towards unsupervised learning of deep salient object detector. In 2017 IEEE international conference on computer vision (pp. 4068–4076). Los Alamitos: IEEE.

Zhang, J., Zhang, T., Dai, Y., Harandi, M., & Hartley, R. (2018). Deep unsupervised saliency detection: a multiple noisy labeling perspective. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 9029–9038). Los Alamitos: IEEE.

Shin, G., Albanie, S., & Xie, W. (2022). Unsupervised salient object detection with spectral cluster voting. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3970–3979). Los Alamitos: IEEE.

He, S., Jiao, J., Zhang, X., Han, G., & Lau, R. W. (2017). Delving into salient object subitizing and detection. In 2017 IEEE international conference on computer vision (pp. 1059–1067). Los Alamitos: IEEE.

Islam, M. A., Kalash, M., & Bruce, N. D. B. (2018). Revisiting salient object detection: simultaneous detection, ranking, and subitizing of multiple salient objects. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 7142–7150). Los Alamitos: IEEE.

Wang, W., Shen, J., Dong, X., & Borji, A. (2018). Salient object detection driven by fixation prediction. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1711–1720). Los Alamitos: IEEE.

Kruthiventi, S. S. S., Gudisa, V., Dholakiya, J. H., & Babu, R. V. (2016). Saliency unified: a deep architecture for simultaneous eye fixation prediction and salient object segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 5781–5790). Los Alamitos: IEEE.

Zeng, Y., Zhuge, Y., Lu, H., & Zhang, L. (2019). Joint learning of saliency detection and weakly supervised semantic segmentation. In 2019 IEEE/CVF international conference on computer vision (pp. 7222–7232). Los Alamitos: IEEE.

Wang, L., Wang, L., Lu, H., Zhang, P., & Ruan, X. (2016). Saliency detection with recurrent fully convolutional networks. In B. Leibe, J. Matas, N. Sebe, et al. (Eds.), Proceedings of the 14th European conference on computer vision (pp. 825–841). Berlin: Springer.

Li, X., Yang, F., Cheng, H., Liu, W., & Shen, D. (2018). Contour knowledge transfer for salient object detection. In V. Ferrari, M. Hebert, C. Sminchisescu, et al. (Eds.), Proceedings of the 15th European conference on computer vision (pp. 370–385). Berlin: Springer.

Wang, W., Zhao, S., Shen, J., Hoi, S. C., & Borji, A. (2019). Salient object detection with pyramid attention and salient edges. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1448–1457). Los Alamitos: IEEE.

Liu, J.-J., Hou, Q., Cheng, M.-M., Feng, J., & Jiang, J. (2019). A simple pooling-based design for real-time salient object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3917–3926). Los Alamitos: IEEE.

Zhao, J.-X., Liu, J.-J., Fan, D.-P., Cao, Y., Yang, J., & Cheng, M.-M. (2019). EGNet: edge guidance network for salient object detection. In 2019 IEEE/CVF international conference on computer vision (pp. 8778–8787). Los Alamitos: IEEE.

Su, J., Li, J., Zhang, Y., Xia, C., & Tian, Y. (2019). Selectivity or invariance: boundary-aware salient object detection. In 2019 IEEE/CVF international conference on computer vision (pp. 3798–3807). Los Alamitos: IEEE.

Zhang, L., Zhang, J., Lin, Z., Lu, H., & He, Y. (2019). CapSal: leveraging captioning to boost semantics for salient object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 6024–6033). Los Alamitos: IEEE.

Li, G., Xie, Y., Lin, L., & Yu, Y. (2017). Instance-level salient object segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 247–256). Los Alamitos: IEEE.

Tian, X., Xu, K., Yang, X., Yin, B., & Lau, R. W. (2022). Learning to detect instance-level salient objects using complementary image labels. International Journal of Computer Vision, 130(3), 729–746.

Fan, R., Cheng, M.-M., Hou, Q., Mu, T.-J., Wang, J., & Hu, S.-M. (2019). S4Net: single stage salient-instance segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 6103–6112). Los Alamitos: IEEE.

Wu, Y.-H., Liu, Y., Zhang, L., Gao, W., & Cheng, M.-M. (2021). Regularized densely-connected pyramid network for salient instance segmentation. IEEE Transactions on Image Processing, 30, 3897–3907.

Borji, A., & Itti, L. (2012). State-of-the-art in visual attention modeling. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(1), 185–207.

Borji, A. (2019). Saliency prediction in the deep learning era: successes and limitations. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(2), 679–700.

Fan, D.-P., Li, T., Lin, Z., Ji, G.-P., Zhang, D., Cheng, M.-M., et al. (2022). Re-thinking co-salient object detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(8), 4339–4354.

Fan, D.-P., Lin, Z., Ji, G.-P., Zhang, D., Fu, H., & Cheng, M.-M. (2020). Taking a deeper look at co-salient object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 2916–2926). Los Alamitos: IEEE.

Zhang, D., Fu, H., Han, J., Borji, A., & Li, X. (2018). A review of co-saliency detection algorithms: fundamentals, applications, and challenges. ACM Transactions on Intelligent Systems and Technology, 9(4), 1–31.

Borji, A., Cheng, M.-M., Hou, Q., Jiang, H., & Li, J. (2019). Salient object detection: a survey. Computational Visual Media, 5(2), 117–150.

Wang, W., Lai, Q., Fu, H., Shen, J., Ling, H., & Yang, R. (2021). Salient object detection in the deep learning era: an in-depth survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(6), 3239–3259.

Borji, A., Cheng, M.-M., Jiang, H., & Li, J. (2015). Salient object detection: a benchmark. IEEE Transactions on Image Processing, 24(12), 5706–5722.

Zhou, T., Fan, D.-P., Cheng, M.-M., Shen, J., & Shao, L. (2021). RGB-D salient object detection: a survey. Computational Visual Media, 7(1), 37–69.

Fan, D.-P., Lin, Z., Zhang, Z., Zhu, M., & Cheng, M.-M. (2020). Rethinking RGB-D salient object detection: models, data sets, and large-scale benchmarks. IEEE Transactions on Neural Networks and Learning Systems, 32(5), 2075–2089.

Cong, R., Zhang, K., Zhang, C., Zheng, F., Zhao, Y., Huang, Q., et al. (2022). Does thermal really always matter for RGB-T salient object detection? IEEE Transactions on Multimedia. Advance online publication. https://doi.org/10.1109/TMM.2022.3216476

Tu, Z., Li, Z., Li, C., Lang, Y., & Tang, J. (2021). Multi-interactive dual-decoder for RGB-thermal salient object detection. IEEE Transactions on Image Processing, 30, 5678–5691.

Fu, K., Jiang, Y., Ji, G.-P., Zhou, T., Zhao, Q., & Fan, D.-P. (2022). Light field salient object detection: a review and benchmark. Computational Visual Media, 8(4), 509–534.

Wang, W., Shen, J., & Shao, L. (2017). Video salient object detection via fully convolutional networks. IEEE Transactions on Image Processing, 27(1), 38–49.

Le, T.-N., & Sugimoto, A. (2017). Deeply supervised 3D recurrent FCN for salient object detection in videos. In T. K. Kim, S. Zafeiriou, G. Brostow, et al. (Eds.), Proceedings of the British machine vision conference (pp. 1–13). Durham: BMVA Press.