Abstract

Background

Progress in remote educational strategies was fueled by the advent of the COVID-19 pandemic. This pilot RCT explored the efficacy of a decentralized model of simulation based on principles of observational and peer-to-peer learning for the acquisition of surgical skills.

Methods

Sixty medical students from the University of Montreal learned the running subcuticular suture in one of four conditions: (1) control, (2) self-learning, (3) peer-learning, and (4) peer-learning with expert feedback. The control group learned with error-free videos, while the other groups learned with videos illustrating strategic sub-optimal performances to be identified and discussed by students. Performance on a simulator at the end of the learning period was assessed by an expert using a global rating scale (GRS) and checklist (CL).

Results

Students engaging in peer-to-peer learning strategies outperformed students who learned alone. The presence of an expert and the use of passive vs active observational learning strategies did not impact performance.

Conclusion

This study supports the efficacy of a remote learning strategy and demonstrates how collaborative discourse optimizes students’ acquisition of surgical skills. These remote simulation strategies create the potential for implementation in future medical curriculum design.

Trial Registration: NCT04425499 2020-05-06.

1 Introduction

Surgical skills simulation laboratories support the development of technical and non-technical surgical skills for professional practice [1]. They are experiential classrooms embedded within medical schools and/or hospitals where learners (e.g., medical students and surgical residents) acquire a range of skills in an environment that offers the practicality of a surgical setting without the risks to patient safety. In this study, we focus on a learning environment for technical skills referred to as the centralized model of simulation (C-SIM). C-SIM is characterized by an environment in which learners practice technical surgical skills under the supervision of experienced educators [1]. A typical training session in the C-SIM model consists of three main phases: instructions and preparation, hands-on practice, and practice/post-practice feedback.

Although the idea of a decentralized model of simulation (DeC-SIM) has been investigated for a few decades [2], the recent COVID-19 pandemic has catalyzed these efforts [3, 4]. During the pandemic, access to surgical skills simulation laboratories became limited due to physical distancing, and other options had to be considered in order to continue skills development [4]. As opposed to C-SIM, DeC-SIM is characterized by an environment in which learners can prepare, practice, and receive feedback remotely, outside of the simulation laboratories, from the comfort of their homes or other locations.

The overarching theory used to guide the development of technical surgical skills is Ericsson’s deliberate practice [5]. It refers to a particular type of practice that is purposeful and systematic through the use of instructions, motivation, and accurate feedback [1, 4, 5]. Several instructional design elements need to be addressed through research before educators and program directors can consider DeC-SIM as a possible augmentation to more traditional training approaches in the post-pandemic era. These instructional design elements need to apply to all three phases of simulation (i.e., instructions and preparation, hands-on practice, and practice/post-practice feedback). In this study, we address how to structure instructions and pre-practice preparation in a DeC-SIM model to (a) optimally develop procedural knowledge prior to physical practice, and (b) improve learners’ performance in the initial hands-on practice. Evidence suggests that trainees can acquire suturing skills independently [6,7,8,9]; however, the efficacy of this type of practice is influenced by how well these trainees were instructed [10, 11]. That is, the efficacy of hands-on practice depends on the efficacy of instructions and preparation [11]. Furthermore, it has been shown that surgical trainees are effective at using video-based instructions for preparation [12], and that creating opportunities for peer-to-peer collaboration [13, 14], with and without an expert [2, 15], can further facilitate preparation and effective instructions. However, these isolated instructional elements have not been put together into a more complex educational intervention that would support the DeC-SIM model in the future [16,17,18]. Therefore, the purpose of this study was to investigate the efficacy of a complex educational intervention as a means to prepare junior medical students for subsequent hands-on, simulation-based practice in a DeC-SIM model of simulation.

2 Materials and methods

This study was approved by the institutional review board of the University of Montreal (CERSES 20-068-D), registered (ClinicalTrials.gov NCT04425499), and completed in 2020 as a pilot randomized controlled trial with a four-arm experimental design. It was conducted in accordance with the International Conference on Harmonization Guidelines for Good Clinical Practice (ICH-GCP) and the Declaration of Helsinki on human research.

2.1 Consent statement

Informed consent (consent to participate and consent for the results to be published) was obtained from all participants. All participants were over 18 years old.

2.2 Participants

Sixty (n = 60) first-year (n = 43) and second-year (n = 17) medical students were recruited to voluntarily participate in this study. The only inclusion criterion was active enrollment within the first 2 years of medical school. The participants completed a short questionnaire about previous experience to confirm that they were novices. Exclusion criteria were: self-reported injury during the trial; completion of surgical rotations before the trial; returning from a break such as a sabbatical; and holding a medical degree from another country. The participants could withdraw from the study at any point and have their data excluded. After informed consent, all participants were randomly assigned to one of four experimental groups using stratified randomization (by year of study). Assignment was performed with a pseudo-random number generator that used a default seed as the reference for randomness. When a group reached the target number of participants (n = 15), assignment to that group was stopped.
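The allocation procedure described above can be illustrated with a minimal sketch. It is not the authors' actual script: the function name, the seed value, the participant identifiers, and the choice to process one year-of-study stratum at a time are all assumptions made for illustration. Note that drawing uniformly among the groups that still have room caps each group at 15 but does not by itself guarantee that each stratum is spread evenly across groups.

```python
import random
from collections import Counter

GROUPS = (
    "Control",
    "Self-learning",
    "Peer-learning",
    "Peer-learning with expert feedback",
)

def assign_groups(participants, group_size=15, seed=2020):
    """Assign (participant_id, year) pairs to groups, one stratum at a time.

    The seed value and the per-stratum processing order are hypothetical.
    """
    rng = random.Random(seed)          # seeded pseudo-random number generator
    counts = Counter()                 # current size of each group
    strata = {}
    for pid, year in participants:     # group participants by year of study
        strata.setdefault(year, []).append(pid)

    assignment = {}
    for year in sorted(strata):
        for pid in strata[year]:
            # Only groups below the target size remain eligible.
            open_groups = [g for g in GROUPS if counts[g] < group_size]
            if not open_groups:
                raise ValueError("all groups are full")
            group = rng.choice(open_groups)
            counts[group] += 1
            assignment[pid] = group
    return assignment

# Hypothetical cohort mirroring the reported split: 43 first-year, 17 second-year.
cohort = [(f"P{i:02d}", 1 if i < 43 else 2) for i in range(60)]
allocation = assign_groups(cohort)
group_sizes = Counter(allocation.values())
```

Because the generator is seeded, rerunning `assign_groups(cohort)` reproduces the same allocation, which is the practical benefit of fixing a seed.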

2.3 Power calculation

Using global rating scale (GRS) scores [19] from previous work [6, 20], and based on a power of 0.8 to detect a statistically significant difference (α = 0.05, two-sided), a minimum of 12 students per group was required.
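For readers unfamiliar with this kind of calculation, the sketch below shows the common normal-approximation formula for comparing two group means, n = 2((z₁₋α/₂ + z_power) / d)², where d is a standardized effect size. This is an assumption about the general approach, not a reconstruction of the authors' calculation: the effect size derived from the previous GRS data is not reported in this section, so the values of d used here are purely hypothetical.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided, two-group comparison.

    effect_size is a standardized mean difference (Cohen's d); the values
    passed in below are illustrative, not taken from the study.
    """
    z = NormalDist()                       # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)     # critical value for two-sided alpha
    z_power = z.inv_cdf(power)             # quantile matching the target power
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)

# Larger assumed effects require fewer participants per group.
for d in (0.8, 1.0, 1.2):
    print(f"d = {d}: n per group = {n_per_group(d)}")
```

Exact methods based on the t distribution, as implemented in dedicated power-analysis software, give slightly larger numbers for small samples.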

2.4 Materials and instruments

The Gamified Educational Network (GEN) Learning Management System (LMS) is a multi-feature, online learning management system developed at the maxSIMhealth laboratory (maxSIMhealth.com), Ontario Tech University, that combines online learning and home-based simulation [4]. GEN permits easy content creation and integration of features such as instructions, collaboration, video uploads, and feedback through video assessment. Features used in this study (Fig. 1) include an upload feature, where the participants upload a video of themselves performing the suturing skill; a collaborative discussion board, which permits collaboration and feedback; and multiple-choice surveys in the form of the global rating scale (GRS) and checklists adjacent to the videos. GEN displays segmented progression bars and permits selective section completion, where progressive completion blocks sections and guides the participants through the activity. For this study, GEN was made available in French and English and was designed to be platform agnostic (i.e., accessible by desktop computers, tablets, and smartphones).

2.4.1 Simulators and instruments

The simulators were suturing pads (FAUX Skin Pad, https://www.fauxmedical.com/) affixed to a table's surface using a custom designed holder (maxSIMhealth.com). The sutures (3–0) and instruments (needle driver, forceps, scissors) were supplied by the Unité de formation chirurgicale of the Hôpital Maisonneuve-Rosemont, Montreal (Fig. 2).

2.5 Procedure

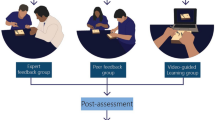

The protocol of this study has been previously published [21] (Fig. 3). The study was divided into two distinct phases. The first phase was the acquisition of procedural knowledge. The main aims of this phase were to assess the learners’ procedural knowledge (pre-test), provide them with opportunities for observational practice, collaboration and feedback, and re-test their procedural knowledge (post-test). The observational practice, collaboration and feedback sub-phase was constructed based on the Cheung et al. study [2]. The second phase was a test of initial hands-on performance. This phase was based on Miller’s model and assessed ‘procedural knowledge’ (knows how) and ‘competence’ (shows how) [22]. These two levels were included because the overarching goal was to test how well, in the absence of physical practice, the elements of observational practice, collaboration, and feedback prepare learners for subsequent practice in simulation.

2.5.1 Phase 1: The acquisition of procedural knowledge

This first part of the study included the pre-test and the post-test, which focused on knowledge acquisition. On the first day of the study, participants were emailed a unique link to access GEN. They were directed to the introduction page, which briefly explained the activities, their due dates, and the steps required to complete the project (refer to Fig. 1a). Next, they participated in a pre-test, where they viewed eight videos: six had errors embedded in them (Table 1), while two were error-free. The participants were unaware of the type of videos presented. The participants were asked to assess the performances depicted in these videos using two assessment tools derived from the Objective Structured Assessment of Technical Skills (OSATS): the Global Rating Scale (GRS) and the Subcuticular Suture Checklist [10, 23, 24].

2.5.2 Observational practice, collaboration and feedback

During this 3-day sub-phase, the participants were allowed to view a separate set of videos as often as desired under one of the four experimental conditions. Control group: the participants viewed eight videos showing an expert performing a running subcuticular suture without errors. Self-learning: the participants viewed eight videos; six of these videos contained errors while two did not. Participants in these two groups did not interact with any other participants. Peer-learning: the participants in this group also viewed eight videos, six containing errors and two error-free. However, they interacted with the other fourteen participants in this group for 3 days, and their task was to comment on the errors observed in the videos. The interactive format of GEN encouraged anonymous exchanges between participants through the use of avatars (Fig. 1b). All participants were required to leave at least one comment for each video in order to proceed to the next phase of the study, and they could view and respond to each other’s comments. Peer-learning with expert feedback: this group had the same conditions as the peer-learning group; however, an expert provided comments and feedback to the group. The expert was a Canadian-trained general surgeon and faculty member represented by a unique and easily identifiable avatar.

2.5.3 Post-test

After a 3-day instructional period, all participants performed a post-test which consisted of the same eight videos and assessment tools presented in a different order.

2.5.4 Phase 2: Practical skills performance test

Two weeks prior to the study, the simulators and instruments (Fig. 2) were sent to each participant. These simulators were used to conduct a test of performance, designed to measure how well the participants performed the skill on their first attempt after the instructional phase.

To accomplish this, after the post-test (i.e., test of procedural knowledge), the participants were instructed to record themselves opening a suturing kit and executing their first attempt at a running subcuticular suture. They uploaded their video on GEN and within a week, an expert surgeon assessed the participant’s performance in a blinded manner.

2.6 Measurement tools

OSATS is a validated tool developed at the University of Toronto to assess surgical skills [19, 23, 24]. OSATS is composed of two parts. The first is the GRS, by which global competencies are graded on a scale from 1 to 5 for a maximum possible total of 40 points (Table 2). The second is a checklist of steps and their order of execution, each scored dichotomously, for a maximum score of 25 (Table 2).

2.7 Data collection

2.7.1 Phase 1: The acquisition of procedural knowledge

The correct answers for the GRS and checklist were integrated into the GEN. Using the GRS and checklist, the participants were asked to identify correct and incorrect actions demonstrated within each video. If a mistake was present in the video but the participants checked the step as though it had been done properly, a point was deducted. Similarly, a point was deducted if a correctly executed step was not checked by the participant.
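The deduction rule above can be sketched as follows. This is an illustration rather than GEN's actual scoring code: the function name is hypothetical, each checklist item is represented as a boolean (True means the step is marked as correctly executed), and the score is assumed to start at the number of items, since the starting value is not stated here.

```python
def score_assessment(answer_key, response):
    """Score a participant's checklist against the answer key for one video.

    One point is deducted for every item where the participant's mark
    disagrees with the key: an error marked as done properly, or a
    correctly executed step left unchecked.
    """
    if len(answer_key) != len(response):
        raise ValueError("answer key and response must have the same length")
    score = len(answer_key)            # assumed starting value: one point per item
    for expected, marked in zip(answer_key, response):
        if expected != marked:
            score -= 1
    return score

# Toy five-item example (a real checklist would have more items).
key = [True, False, True, True, False]        # steps 2 and 5 contain errors
participant = [True, True, True, False, False]
example_score = score_assessment(key, participant)
```

In this toy case the participant misses the error in step 2 and fails to credit step 4, so two points are deducted from five.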

2.7.2 Phase 2: The initial hands-on performance

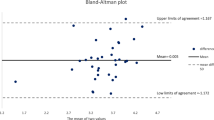

The expert surgeon used the same GRS and checklist to evaluate the suturing performance recorded by each participant uploaded to GEN.

2.8 Statistical analyses

We followed the intention-to-treat (ITT) principle, in which each randomized participant is analyzed according to the randomized treatment assignment. This approach disregards noncompliance, protocol deviations, withdrawal, and anything else that happens after randomization [25]. Missing data for any of the tests resulted in the complete omission of that student’s data from the statistical analysis. The Statistical Package for the Social Sciences (SPSS) [26] was used for statistical analyses.

2.8.1 Phase 1: The acquisition of procedural knowledge

Please refer to Fig. 4 for a graphic representation of the statistical analyses employed for phase 1. Initially, separate mixed-design analysis of variance (ANOVA) models with 4 groups (between-subject factor) and 2 tests (within-subject factor) were used to test the efficacy of the training method on procedural knowledge for the GRS and the checklist. Significant main effects at p < 0.05 were further analyzed using appropriate post hoc tests. However, if a significant interaction between group and test was found, a set of simple main effects was used. We aimed to answer two questions: Were the groups similar or different in the pre-test? Were the groups similar or different in the post-test? To achieve this, we used separate one-way ANOVAs for the pre-test and post-test, with group as a single factor with four levels. All results that were significant at p < 0.05 were further analyzed with Tukey’s honestly significant difference (HSD) test as a post hoc analysis to compare the groups’ means and highlight differences.

Fig. 4 Algorithm used for the statistical analysis in phase 1. Initially, a mixed-design ANOVA was used to test for main effects (ME) and the interaction between group and test. If the interaction was significant, we employed a set of tests of simple main effects in the form of separate one-way ANOVAs for the pre- and post-tests. For significant one-way ANOVAs, we employed the Tukey HSD test to test for group differences.
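The branching logic of the phase 1 analysis can be written out as a small decision function. This is only a sketch of the order of tests; it does not run any statistics, and the function name and step labels are hypothetical. The example call uses the GRS p-values reported in the Results, with 0.001 standing in for the post-test value reported as p < 0.001.

```python
def phase1_analysis_plan(p_interaction, p_pre, p_post, alpha=0.05):
    """Return the ordered list of analyses implied by the phase 1 algorithm."""
    steps = ["mixed-design ANOVA (4 groups x 2 tests)"]
    if p_interaction < alpha:
        # Significant group x test interaction: test simple main effects
        # with a separate one-way ANOVA at each level of the test factor.
        steps.append("one-way ANOVA on pre-test")
        steps.append("one-way ANOVA on post-test")
        if p_pre < alpha:
            steps.append("Tukey HSD on pre-test")
        if p_post < alpha:
            steps.append("Tukey HSD on post-test")
    else:
        # No interaction: follow up significant main effects directly.
        steps.append("post hoc tests on significant main effects")
    return steps

grs_plan = phase1_analysis_plan(p_interaction=0.044, p_pre=0.255, p_post=0.001)
for step in grs_plan:
    print(step)
```

With these inputs the plan ends with a Tukey HSD test on the post-test only, because the groups did not differ at pre-test.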

2.8.2 Phase 2: The initial hands-on performance

A one-way, between-groups ANOVA was used to test the efficacy of the training method on performance. Results that were significant at p < 0.05 were further analyzed with Tukey’s honestly significant difference (HSD) test as a post hoc analysis to highlight differences.
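To make the one-way ANOVA concrete, the sketch below computes the F statistic and its degrees of freedom from first principles. The analyses in this study were run in SPSS; this standard-library version is an illustration only, the function name is hypothetical, and the scores are toy numbers, not study data. Obtaining the p-value and the Tukey HSD comparisons additionally requires the F and studentized range distributions, which are left to a statistics package.

```python
from statistics import fmean

def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean(x for g in groups for x in g)

    # Between-group variability: spread of group means around the grand mean.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-group variability: spread of scores around their own group mean.
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Toy data: four groups of three made-up scores each.
toy_scores = [[1, 2, 3], [2, 3, 4], [5, 6, 7], [6, 7, 8]]
f_stat, df_between, df_within = one_way_anova(toy_scores)
print(f"F({df_between},{df_within}) = {f_stat:.2f}")
```

A large F indicates that the group means differ by more than would be expected from the variability within groups, which is what triggers the post hoc comparisons.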

3 Results

3.1 Phase 1: The acquisition of procedural knowledge

The data for one participant were excluded due to the lack of completion of the post-test (Self-learning group). The results for the GRS and checklist were analyzed separately and are presented in Table 3.

3.1.1 Global rating scales

In summary, the results showed that the Control group identified more errors within the videos on the post-test than the other three groups. Specifically, the Tukey HSD tests revealed that the Control group showed higher scores on the post-test than the Self-learning (p = 0.008), Peer-learning (p = 0.007), and Peer-learning with expert feedback (p = 0.001) groups.

First, the mixed-design ANOVA revealed a significant interaction between group and test (F = 2.87, p = 0.044). Subsequently, simple main effects were used to determine the difference between the four groups at each level of the test variable (i.e., pre-test and post-test). These analyses showed that there were no significant differences between the four groups at pre-test (F(3,55) = 1.39, p = 0.255). In contrast, there was a significant difference between the four groups at post-test (F(3,55) = 6.649, p < 0.001). Tukey HSD tests revealed that the Control group showed higher scores on the post-test than the Self-learning (p = 0.008), Peer-learning (p = 0.007), and Peer-learning with expert feedback (p = 0.001) groups. The other three groups did not differ significantly from each other on the post-test at p = 0.05.

3.1.2 Checklist

The results showed that the Control group identified more errors within the videos on the post-test than the Self-learning group, but not more than the two peer-learning groups. Specifically, the Tukey HSD tests revealed that the Control group showed higher scores on the post-test than the Self-learning group (p = 0.033), but did not differ from the Peer-learning (p = 0.323) or Peer-learning with expert feedback (p = 0.458) groups.

First, the mixed-design ANOVA revealed a significant interaction between group and test (F(3,55) = 7.189, p < 0.001). Simple main effects showed that there were no significant differences between the four groups at pre-test (F(3,55) = 1.677, p = 0.188). In contrast, there was a significant difference between the four groups at post-test (F(3,55) = 2.696, p = 0.049). Tukey HSD tests revealed that the Control group showed higher scores on the post-test than the Self-learning group (p = 0.033), but did not differ from the Peer-learning (p = 0.323) or Peer-learning with expert feedback (p = 0.458) groups. The other three groups did not differ significantly from each other on this measure on the post-test at p = 0.05.

3.2 Phase 2: Practical skills performance test

The data for two participants in the Self-learning group, two participants in the Peer-learning group, and one participant in the Peer-learning with expert feedback group were excluded because they did not complete this test. The results for the GRS and the skill-specific checklist were analyzed separately and are presented in Table 4.

3.2.1 Global rating scales

The one-way between-groups ANOVA revealed a significant main effect (F(3,54) = 6.70, p = 0.0007). Pairwise comparisons showed that the Control and Self-learning groups did not differ significantly from each other (t = −0.27, p = 0.993), and the Peer-learning and Peer-learning with expert feedback groups did not differ from each other (t = −0.30, p = 0.990). In contrast, both the Peer-learning group (t = 3.21, p = 0.012) and the Peer-learning with expert feedback group (t = 2.96, p = 0.023) showed higher scores than the Control group. Similarly, both the Peer-learning group (t = 3.37, p = 0.008) and the Peer-learning with expert feedback group (t = 3.12, p = 0.015) showed higher scores than the Self-learning group.

3.2.2 Checklist

The one-way between-groups ANOVA revealed a significant main effect (F(3,54) = 8.13, p = 0.0002). Pairwise comparisons showed that the Control and Self-learning groups did not differ significantly from each other (t = 0.39, p = 0.980), and the Peer-learning and Peer-learning with expert feedback groups did not differ from each other (t = 1.20, p = 0.630). In contrast, both the Peer-learning group (t = 2.95, p = 0.024) and the Peer-learning with expert feedback group (t = 4.25, p = 0.001) showed higher scores than the Control group. Similarly, the Peer-learning with expert feedback group showed higher scores than the Self-learning group (t = 3.72, p = 0.003). The Peer-learning group showed only a trend towards higher scores than the Self-learning group (t = 2.47, p = 0.077).

4 Discussion

The concept of a decentralized model of simulation (DeC-SIM) is not new [2]; however, the recent COVID-19 pandemic catalyzed vast research and development efforts in this area [3, 4]. Based on Ericsson’s theory of deliberate practice [5], to ensure the effectiveness and subsequent consideration of DeC-SIM as a possible adjunct to more traditional training approaches (i.e., C-SIM), the initial work should focus on creating a set of best practices for designing basic simulation elements such as instructions, scheduling and monitoring remote practice, maintaining learners’ motivation, and providing accurate feedback [1, 4, 5]. In this study, we focused on how to structure instructions in a DeC-SIM model to (a) optimally develop procedural knowledge prior to physical practice, and (b) improve learners’ performance during the initial hands-on practice.

The results of phase 1 showed that all learners improved their procedural knowledge of the suturing technique, becoming more familiar with the suturing task and the assessment tools. On the pre-test, all learners scored similarly, while on the post-test, the learners in the control group scored higher, although this should not necessarily be interpreted as a superior performance. The learners in the control group observed and assessed a set of eight error-free videos, while those in the other three groups observed and assessed videos with built-in errors, making the videos of the control group easier to assess. Overall, the shift in the ability to discern error-free and erroneous videos from pre-test to post-test implies that the observational practice was effective.

Phase 2 of this study aimed to address whether the conditions of observational practice led to different psychomotor performances on the very first attempt at hands-on practice. This was based on Miller’s model [22] which proposes that the degree of procedural knowledge and the degree of competence, or what we refer to as the first attempt at psychomotor performance, may not always match. Although typically research shows a gap in transfer of procedural knowledge to competent performance [27], we wanted to test the opposite hypothesis—that although the various conditions of observational practice and collaboration lead to similar procedural knowledge, they may have a differential impact on initial motor performance.

The results of phase 2 suggest that collaborative, peer-learning conditions lead to procedural knowledge that translates to an improved initial motor performance compared to similar practice in isolation. Furthermore, observing error-free videos vs those with errors during the observational practice did not impact psychomotor performance. Most importantly, however, the presence of an expert in the collaborative, peer-learning group did not affect the initial motor performance.

Collectively, our results support the idea of ‘preparation for future learning’ [28]. More specifically, these results indicate that DeC-SIM is a feasible addition to the current laboratory-based simulation learning model. For this approach to be optimal, virtual learning management systems, such as GEN, must support collaborative, peer-learning approaches [2, 13, 14, 29]. One key finding of the current study is that the addition of an expert to a collaborative, peer-learning group does not impact the development of procedural knowledge or subsequent motor performance. The fact that the presence of an expert did not lead to better learning outcomes may have a practical implication for future adoption of DeC-SIM by relevant stakeholders and policymakers.

Although promising, the study has several limitations that should be acknowledged. First, the experimental design used was not orthogonal. In our context, orthogonality refers to the property of the experimental design that ensures that all conditions of practice may be studied independently. Instead, in this exploratory, pilot randomized controlled study, a planned comparison design approach was used. Because of the exploratory nature of this work, and the aim of testing a complex intervention, our focus was on a few comparisons of interest rather than every possible comparison. Future work should use more orthogonal designs. Second, the participants’ satisfaction with the learning environment was not assessed. According to Kirkpatrick’s model [30], the participants’ experience should be evaluated and may provide approximate levels of acceptability of the new training approach by the end users. In addition, based on the principles of Utilization-Focused Evaluation (U-FE) [31], such an assessment of satisfaction may also provide early evidence of areas where the intervention could be improved. Third, for the performance test, future studies should consider additional raters in order to obtain a reliable and stable assessment [32]. Finally, we only investigated the effectiveness of DeC-SIM when applied to the acquisition of fundamental surgical skills by naive or novice learners. In accordance with contemporary progressive learning frameworks [33], future work should extend our current findings to more complex skills and more advanced learners.

In summary, the current results fit well with prior evidence on this topic and suggest that junior surgical learners are effective at using video-based instructions for preparation [12], and that creating opportunities for peer-to-peer collaboration [13, 14], with and without an expert [2, 15], can further facilitate preparation and instructions for subsequent hands-on practice. However, to the best of our knowledge, this is the first study to combine a set of instructional elements into a complex simulation intervention that would support the DeC-SIM model in the future.

References

Reznick RK, MacRae H. Teaching surgical skills–changes in the wind. New Engl J Med. 2006;355(25):2664–9. https://doi.org/10.1056/NEJMra054785.

Cheung JJ, Koh J, Brett C, Bägli DJ, Kapralos B, Dubrowski A. Preparation With Web-Based Observational Practice Improves Efficiency of Simulation-Based Mastery Learning. Simul Healthc. 2016;11(5):316–322. https://doi.org/10.1097/SIH.0000000000000171. PMID: 27388862.

Brydges R, Campbell DM, Beavers L, Khodadoust N, Iantomasi P, Sampson K, Goffi A, Caparica Santos FN, Petrosoniak A. Lessons learned in preparing for and responding to the early stages of the COVID-19 pandemic: one simulation’s program experience adapting to the new normal. Adv Simul (London). 2020;5:8. https://doi.org/10.1186/s41077-020-00128-y.

Dubrowski A, Kapralos B, Peisachovich E, Da Silva C, Torres A. A Model for an online learning management system for simulation-based acquisition of psychomotor skills in health professions education. Cureus. 2021;13(3):e14055. https://doi.org/10.7759/cureus.14055.

Ericsson KA. Deliberate practice and the acquisition and maintenance of expert performance in medicine and related domains. J Assoc Am Med Coll. 2004;79(10):S70–81.

Brydges R, Dubrowski A, Regehr G. A new concept of unsupervised learning: directed self-guided learning in the health professions. J Assoc Am Med Coll. 2010;85(10 Suppl):S49–55. https://doi.org/10.1097/ACM.0b013e3181ed4c96.

Brydges R, Carnahan H, Rose D, Dubrowski A. Comparing self-guided learning and educator-guided learning formats for simulation-based clinical training. J Adv Nurs. 2010;66(8):1832–44. https://doi.org/10.1111/j.1365-2648.2010.05338.x.

Safir O, Williams CK, Dubrowski A, Backstein D, Carnahan H. Self-directed practice schedule enhances learning of suturing skills. Can J Surg. 2013;56(6):E142–7. https://doi.org/10.1503/cjs.019512.

Brydges R, Peets A, Issenberg SB, Regehr G. Divergence in student and educator conceptual structures during auscultation training. Med Educ. 2013;47(2):198–209. https://doi.org/10.1111/medu.12088.

Brydges R, Mallette C, Pollex H, Carnahan H, Dubrowski A. Evaluating the influence of goal setting on intravenous catheterization skill acquisition and transfer in a hybrid simulation training context. Simul Healthc. 2012;7(4):236–42. https://doi.org/10.1097/SIH.0b013e31825993f2.

Manzone J, Regehr G, Garbedian S, Brydges R. Assigning medical students learning goals: do they do it, and what happens when they don’t? Teach Learn Med. 2019;31(5):528–35. https://doi.org/10.1080/10401334.2019.1600520.

Dubrowski A, Xeroulis G. Computer-based video instructions for acquisition of technical skills. J Vis Commun Med. 2005;28(4):150–5. https://doi.org/10.1080/01405110500518622.

Grierson LE, Barry M, Kapralos B, Carnahan H, Dubrowski A. The role of collaborative interactivity in the observational practice of clinical skills. Med Educ. 2012;46(4):409–16. https://doi.org/10.1111/j.1365-2923.2011.04196.x.

Welsher A, Rojas D, Khan Z, VanderBeek L, Kapralos B, Grierson LEM. The application of observational practice and educational networking in simulation-based and distributed medical education contexts. Simul Healthc. 2018;13(1):3–10. https://doi.org/10.1097/SIH.0000000000000268.

Rojas D, Cheung JJ, Weber B, Kapralos B, Carnahan H, Bägli DJ, Dubrowski A. An online practice and educational networking system for technical skills: learning experience in expert facilitated vs. independent learning communities. Stud Health Technol Inform. 2012;173:393–7.

Craig P, Dieppe PA, Macintyre S, Michie S, Nazareth I, Petticrew M. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ. 2008;337:A1655.

Haji FA, Da Silva C, Daigle DT, Dubrowski A. From bricks to buildings: adapting the Medical Research Council framework to develop programs of research in simulation education and training for the health professions. Simul Healthc. 2014;9(4):249–59. https://doi.org/10.1097/SIH.0000000000000039.

Hawe P, Shiell A, Riley T. Complex interventions: how “out of control” can a randomised controlled trial be? British Med J. 2004;328:1561–3.

Faulkner H, Regehr G, Martin J, Reznick R. Validation of an objective structured assessment of technical skill for surgical residents. Acad Med. 1996;71(12):1363–5. https://doi.org/10.1097/00001888-199612000-00023.

Brydges R, Carnahan H, Rose D, Rose L, Dubrowski A. Coordinating progressive levels of simulation fidelity to maximize educational benefit. Acad Med. 2010;85(5):806–12. https://doi.org/10.1097/ACM.0b013e3181d7aabd.

Guérard-Poirier N, Beniey M, Meloche-Dumas L, Lebel-Guay F, Misheva B, Abbas M, Dhane M, Elraheb M, Dubrowski A, Patocskai E. An educational network for surgical education supported by gamification elements: protocol for a randomized controlled trial. JMIR Res Protoc. 2020;9(12):e21273. https://doi.org/10.2196/21273.

Miller GE. The assessment of clinical skills/competence/performance. Acad Med. 1990;65(9 Suppl):S63–7. https://doi.org/10.1097/00001888-199009000-00045.

Reznick R, Regehr G, MacRae H, Martin J, McCulloch W. Testing technical skill via an innovative "bench station" examination. Am J Surg. 1997;173(3):226–30. https://doi.org/10.1016/s0002-9610(97)89597-9.

Martin JA, Regehr G, Reznick R, MacRae H, Murnaghan J, Hutchison C, Brown M. Objective structured assessment of technical skill (OSATS) for surgical residents. Br J Surg. 1997;84(2):273–8. https://doi.org/10.1046/j.1365-2168.1997.02502.x.

Gupta SK. Intention-to-treat concept: a review. Perspect Clin Res. 2011;2(3):109–12. https://doi.org/10.4103/2229-3485.83221.

IBM Corp. IBM SPSS Statistics for Windows, Version 25.0. Armonk, NY: IBM Corp; 2017.

Witheridge A, Ferns G, Scott-Smith W. Revisiting Miller’s pyramid in medical education: the gap between traditional assessment and diagnostic reasoning. Int J Med Educ. 2019;10:191–2. https://doi.org/10.5116/ijme.5d9b.0c37.

Manzone JC, Mylopoulos M, Ringsted C, Brydges R. How supervision and educational supports impact medical students’ preparation for future learning of endotracheal intubation skills: a non-inferiority experimental trial. BMC Med Educ. 2021;21(1):102. https://doi.org/10.1186/s12909-021-02514-0.

Noerholk LM, Tolsgaard MG. Structural individualism or collaborative mindsets: next steps for peer learning. Med Educ. 2021. https://doi.org/10.1111/medu.14721.

Dubrowski A, Morin MP. Evaluating pain education programs: an integrated approach. Pain Res Manage. 2011;16(6):407–10. https://doi.org/10.1155/2011/320617.

Patton MQ. Qualitative research and evaluation methods. 3rd ed. Thousand Oaks, CA: Sage; 2002.

Margolis MJ, Clauser BE, Cuddy MM, Ciccone A, Mee J, Harik P, Hawkins RE. Use of the mini-clinical evaluation exercise to rate examinee performance on a multiple-station clinical skills examination: a validity study. Acad Med. 2006;81(10):S56–60.

Guadagnoli M, Morin MP, Dubrowski A. The application of the challenge point framework in medical education. Med Educ. 2012;46(5):447–53. https://doi.org/10.1111/j.1365-2923.2011.04210.x.

Acknowledgements

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Funding

This work was supported by funding from the Natural Sciences and Engineering Research Council of Canada (NSERC), Canada Research Chairs in Healthcare Simulation, and the Canada Foundation for Innovation. We also thank the Académie CHUM and the PREMIER scholarship program at the Université de Montréal for their support.

Author information

Contributions

All members of the research team contributed to the conception of the RCT. N.G.P. and E.P. created the course on GEN. A.T. and A.D. programmed the course on GEN. N.G.P., F.M., R.Y. and E.P. created the videos on GEN. GEN is an original creation by B.K. and A.D. N.G.P., A.T. and E.P. performed the RCT. N.G.P. and A.T. collected the data. N.G.P., L.M.D., A.D. and E.P. took part in the analysis and interpretation of the data collected. N.G.P., A.D. and E.P. contributed to the drafting of the final manuscript. All members of the team have read and approved the final version of the manuscript and reviewed all aspects regarding the accuracy and integrity of the paper.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Guérard-Poirier, N., Meloche-Dumas, L., Beniey, M. et al. The exploration of remote simulation strategies for the acquisition of psychomotor skills in medicine: a pilot randomized controlled trial. Discov Educ 2, 19 (2023). https://doi.org/10.1007/s44217-023-00041-2

DOI: https://doi.org/10.1007/s44217-023-00041-2