Abstract

For parameter estimation of continuous and discrete distributions, we propose a generalization of the method of moments (MM), where Stein identities are utilized for improved estimation performance. The construction of these Stein-type MM-estimators makes use of a weight function as implied by an appropriate form of the Stein identity. Our general approach as well as potential benefits thereof are first illustrated by the simple example of the exponential distribution. Afterward, we investigate the more sophisticated two-parameter inverse Gaussian distribution and the two-parameter negative-binomial distribution in great detail, together with illustrative real-world data examples. Given an appropriate choice of the respective weight functions, their Stein-MM estimators, which are defined by simple closed-form formulas and allow for closed-form asymptotic computations, exhibit a better performance regarding bias and mean squared error than competing estimators.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

For many parametric distributions, so-called Stein identities are available, which rely on moments of functional expressions of a corresponding random variable. These identities are named after Charles Stein, who developed the idea of uniquely characterizing a certain distribution family by such a moment identity [see 21, 22]. Many examples of both continuous and discrete distributions together with their Stein characterizations can be found in Stein et al. [23], Sudheesh [24], Sudheesh and Tibiletti [25], Landsman and Valdez [15], Weiß and Aleksandrov [30], Anastasiou et al. [1] and the references therein. Stein identities are not a mere tool of probability theory. During the last years, there was also a lot of research activity on statistical applications of Stein identities, for example to goodness-of-fit (GoF) tests [4, 31], sequential change-point monitoring by control charts [29], and shrinkage estimation [14]. For references on these and further statistical applications related to Stein’s method, see Betsch et al. [4], Anastasiou et al. [1], Kubokawa [14]. In the present article, a further statistical application of Stein identities is investigated and exemplified, namely to the development of closed-form parameter estimators for continuous or discrete distributions. The idea to construct generalized types of method-of-moments (MM) estimators based on an appropriate type of Stein identity plus weighting function, referred to as Stein-MM estimators, was first explored in some applications by Arnold et al. [2] and Wang and Weiß [28]. Recently, Ebner et al. [6] discussed the Stein-MM approach in a much broader way, and also the present article provides a comprehensive treatment of Stein-MM estimators for various distributions. The main motivation for considering Stein-MM estimation is that the weighting function might be chosen in such a way that the resulting estimator shows better properties (e. g., a reduced bias or mean squared error (MSE)) than the default MM estimator or other existing estimators. Despite the additional flexibility offered by the weighting function, the Stein-MM estimators are computed from simple closed-form expressions, and consistency and asymptotic normality are easily established, also see Ebner et al. [6].

In what follows, we apply the proposed Stein-MM estimation to three different distribution families. We start with the illustrative example of the exponential (Exp) distribution in Sect. 2. This simple one-parameter distribution mainly serves to demonstrate the general approach for deriving the Stein-MM estimator and its asymptotics, and it also indicates the potential benefits of using the Stein-MM approach for parameter estimation. Afterward in Sect. 3, we examine a more sophisticated type of continuous distribution, namely the two-parameter inverse Gaussian (IG) distribution. In Sect. 4, we then turn to a discrete distribution family, namely the two-parameter negative-binomial (NB) distribution. Illustrative real-world data examples are also presented in Sects. 3–4. Note that neither the exponential distribution nor any discrete distribution have been considered by Ebner et al. [6], and their approach to the Stein-MM estimation of the IG-distribution differs from the one proposed here, see the details below. Also Arnold et al. [2], Wang and Weiß [28] did not discuss any of the aforementioned distributions. Finally, we conclude in Sect. 5 and outline topics for future research.

2 Stein Estimation of Exponential Distribution

The exponential distribution is the most well-known lifetime distribution, which is characterized by the property of being memory-less. It has positive support and depends on the parameter \(\lambda >0\), where its probability density function (pdf) is given by \(\phi (x)=\lambda e^{-\lambda x}\) for \(x > 0\) and zero otherwise. A detailed survey about the properties of and estimators for the \(Exp (\lambda )\)-distribution can be found in Johnson et al. [10, Chapter 19]. Given the independent and identically distributed (i. i. d.) sample \(X_1,\ldots ,X_n\) with \(X_i\sim Exp (\lambda )\) for \(i=1,\ldots ,n\), the default estimator of \(\lambda >0\), which is an MM estimator and the maximum likelihood (ML) estimator at the same time, is given by \(\hat{\lambda } = 1/\overline{X}\), where \(\overline{X}=\tfrac{1}{n}\sum _{i=1}^{n} X_i\) denotes the sample mean. This estimator is known to neither be unbiased, nor to be optimal in terms of the MSE, see Elfessi & Reineke [7]. To derive a generalized MM estimator with perhaps improved bias or MSE properties, we consider the exponential Stein identity according to Stein et al. [23, Example 1.6], which states that

for any piecewise differentiable function f with \(f(0)=0\) such that \(\mathbb {E}\big [\vert f'(X)\vert \big ]\), \(\mathbb {E}\big [\vert f(X)\vert \big ]\) exist. Solving (2.1) in \(\lambda\) and using the sample moments \(\overline{f'(X)}=\tfrac{1}{n}\sum _{i=1}^{n} f'(X_i)\) and \(\overline{f(X)}=\tfrac{1}{n}\sum _{i=1}^{n} f(X_i)\) instead of the population moments, the class of Stein-MM estimators for \(\lambda\) is obtained as

Note that the choice \(f(x)=x\) leads to the default estimator \(\hat{\lambda } = 1/\overline{X}\). Generally, \(f(x) \not = x\) might be interpreted as a kind of weighting function, which assigns different weights to large or low values of X than the identity function does. For deriving the asymptotic distribution of the general Stein-MM estimator \(\hat{\lambda }_{f,Exp }\), we first define the vectors \({\varvec{Z}}_i\) with \(i=1,\ldots ,n\) as

Their mean equals

where we define \(\mu _f(k,l,m):=\mathbb {E}[X^k\cdot f(X)^l\cdot f'(X)^m]\) for any \(k,l,m\in \mathbb {N}_0=\{0,1,\ldots \}\). Then, the following central limit theorem (CLT) holds.

Theorem 2.1

If \(X_1,\ldots ,X_n\) are i. i. d. according to \(Exp (\lambda )\), then the sample mean \(\overline{{\varvec{Z}}}\) of \({\varvec{Z}}_1,\ldots ,{\varvec{Z}}_n\) according to (2.3) is asymptotically normally distributed as

where \(N (\textbf{0}, {\varvec{\Sigma }})\) denotes the multivariate normal distribution, and where the covariances are given as

The proof of Theorem 2.1 is provided by Appendix A.1. In the second step of deriving the asymptotics of \(\hat{\lambda }_{f,Exp }\), we define the function \(g(u,v):=\frac{u}{v}\). Then, \(\hat{\lambda }_{f,Exp }=g(\overline{{\varvec{Z}}})\) and \(\lambda =g({\varvec{\mu }}_Z)\). Applying the Delta method [18] to Theorem 2.1, the following result follows.

Theorem 2.2

If \(X_1,\ldots ,X_n\) are i. i. d. according to \(Exp (\lambda )\), then \(\hat{\lambda }_{f,Exp }\) is asymptotically normally distributed, where the asymptotic variance and bias are given by

The proof of Theorem 2.2 is provided by Appendix A.2. Note that the moments \(\mu _f(k,l,m)\) involved in Theorems 2.1 and 2.2 can sometimes be derived explicitly, see the subsequent examples, while they can be computed by using numerical integration otherwise.

After having derived the asymptotic variance and bias without explicitly specifying the function f, let us now consider some special cases of this weighting function. Here, our general strategy is as follows. We first specify a parametric class of functions, where the actual parameter value(s) are determined only after a second step. In this second step, we compute asymptotic properties of the Stein-MM estimator such as bias, variance, and MSE, and then we select the parameter value(s) such that some of the aforementioned properties are minimized within the considered class of functions. Detailed examples are presented below. The chosen parametric class of functions should be sufficiently flexible in the sense that by modifying its parameter value(s), it should be possible to move the weight to quite different regions of the real numbers. Its choice may also be guided by the aim of covering existing parameter estimators as special cases. For the exponential distribution discussed here in Sect. 2, we already pointed out that the default ML and MM estimator \(\hat{\lambda } = 1/\overline{X}\) corresponds to \(f(x)=x\), so the choice of a parametric class of functions including \(f(x)=x\) appears reasonable. This leads to our first illustrative example, namely the choice \(f_a: [0;\infty )\rightarrow [0;\infty )\), \(f_a(x)=x^a\), where \(a=1\) leads to the default estimator \(\hat{\lambda } = 1/\overline{X}\). Here, we have to restrict to \(a>0\) to ensure that the condition \(f(0)=0\) in (2.1) holds. Using that

the following corollary to Theorem 2.2 is derived.

Corollary 2.3

Let \(X_1,\ldots ,X_n\) be i. i. d. according to \(Exp (\lambda )\), and let \(f_a(x)= x^a\) with \(a>\tfrac{1}{2}\). Then, \(\hat{\lambda }_{f_a,Exp }\) is asymptotically normally distributed, where the asymptotic variance and bias are given by

Furthermore, the MSE equals

The proof of (2.5) and Corollary 2.3 is provided by Appendix A.3. Note that in Corollary 2.3, \(\left( {\begin{array}{c}r\\ s\end{array}}\right)\) denotes the generalized binomial coefficient given by \(\Gamma (r+1)/\Gamma (s+1)/\Gamma (r-s+1)\).

In Fig. 1a, the asymptotic variance and bias of \(\hat{\lambda }_{f_a,Exp }\) according to Corollary 2.3 are presented. While the variance is minimal for \(a=1\) (i. e., for the ordinary MM and ML estimator), the bias decreases with decreasing a (i. e., bias reductions are achieved for sublinear choices of \(f_a(x)=x^a\)). Hence, an MSE-optimal choice of \(f_a(x)\) is obtained for some \(a\in (0.5;1)\). This is illustrated by Fig. 1b, where the MSE of Corollary 2.3 is presented for different sample sizes \(n\in \{10,25,50,100\}\). The corresponding optimal values of a are determined by numerical optimization as 0.952, 0.978, 0.988, and 0.994, respectively. As a result, especially for small n, we have a reduction of the MSE (and of the bias as well) if using a “true” Stein-MM estimator (i. e., with \(a\not =1\)). Certainly, if the focus is mainly on bias reduction, then an even smaller choice of a would be beneficial.

As a second illustrative example, let us consider the functions \(f_u(x)=1-u^x\) with \(u\in (0;1)\), which are again sublinear, but this time also bounded from above by one. Again, we can derive a corollary to Theorem 2.2, this time by using the moment formula

Corollary 2.4

Let \(X_1,\ldots ,X_n\) be i. i. d. according to \(Exp (\lambda )\), and let \(f_u(x)=1- u^x\) with \(u\in (0;1)\). Then, \(\hat{\lambda }_{f_u,Exp }\) is asymptotically normally distributed, where the asymptotic variance and bias are given by

Furthermore, the MSE equals

The proof of (2.6) and Corollary 2.4 is provided by Appendix A.4.

This time, the variance decreases for increasing u, whereas the bias decreases for decreasing u. As a consequence, an MSE-optimal choice is expected for some u inside the interval (0; 1). This is illustrated by Fig. 2a, where the minima for \(n\in \{10,25,50,100\}\), given that \(\lambda =1\), are attained for \(u\approx 0.918\), 0.963, 0.981, and 0.990, respectively. The major difference between the two types of weighting functions in Corollaries 2.3 and 2.4 is given by the role of \(\lambda\) within the expression for the MSE. For \(f_a(x)\) in Corollary 2.3, \(\lambda\) occurs as a simple factor such that the optimal choice for a is the same across different \(\lambda\). Hence, the optimal a is simply a function of the sample size n, which is very attractive for applications in practice. For \(f_u(x)\) in Corollary 2.4, by contrast, the MSE depends in a more sophisticated way on \(\lambda\), and the optimal u differs for different \(\lambda\) as illustrated by Fig. 2b. Thus, if one wants to use the weighting function \(f_u(x)\) in practice, a two-step procedure appears reasonable, where an initial estimate is computed via \(\hat{\lambda } = 1/\overline{X}\), which is then refined by \(\hat{\lambda }_{f_u,Exp }\) with u being determined by plugging-in \(\hat{\lambda }\) instead of \(\lambda\) (also see Section 2.2 in Ebner et al. [6] for an analogous idea).

We conclude this section by pointing out two further application scenarios for the use of Stein-MM estimators \(\hat{\lambda }_{f,Exp }\). First, in analogy to recent Stein-based GoF-tests such as in Weiß et al. [31], \(\hat{\lambda }_{f,Exp }\) might be used for GoF-applications. More precisely, the idea could be to select a set \(\{f_1,\ldots , f_K\}\) of weighting functions, and to compute \(\hat{\lambda }_{f_k}\) for all \(k=1,\ldots ,K\). As any \(\hat{\lambda }_{f_k}\) is a consistent estimator of \(\lambda\) according to Theorem 2.2, the obtained values \(\{\hat{\lambda }_{f_1},\ldots ,\hat{\lambda }_{f_K}\}\) should vary closely around \(\lambda\). For other continuous distributions with positive support, such as the IG-distribution considered in the next Sect. 3, we cannot expect that \(\overline{f'(X)} / \overline{f(X)}\) has an (asymptotically) unique mean for different f, see Remark 3.1, so a larger variation among the values in \(\{\hat{\lambda }_{f_1},\ldots ,\hat{\lambda }_{f_K}\}\) is expected. Such a discrepancy in variation might give rise for a formal exponential GoF-test. But as the focus of this article is on parameter estimation, we postpone a detailed investigation of this GoF-application to future research.

A second type of application is illustrated by Table 1, which refers to a simulation experiment with \(10^5\) replications per scenario. For simulated i. i. d. \(Exp (1)\)-samples of sizes \(n\in \{10,25,50,100\}\), about 10 % of the observations were randomly selected and contaminated by an additive outlier, namely by adding 5 to the selected observations. Note that the topic of outliers in exponential data received considerable interest in the literature [10, pp. 528–530]. Then, different estimators \(\hat{\lambda }_{f,Exp }\) are computed from the contaminated data, where the first three choices of the weighting function f are characterized by a sublinear increase, whereas the fourth function, \(f(x)=x\), corresponds to the default estimator \(\hat{\lambda } = 1/\overline{X}\). Table 1 shows that all MM estimators are affected by the outliers, e. g., in terms of the strong negative bias. But comparing the four columns of bias and MSE values, respectively, it gets clear that the novel Stein-MM estimators are more robust against the outliers, having both lower bias and MSE than \(\hat{\lambda }\). Especially the choice \(f(x)=\ln (1+x)\), a logarithmic weighting scheme, leads to a rather robust estimator. The relatively good performance of the Stein-MM estimators can be explained by the fact that the weighting functions increase sublinearly (which is also beneficial for bias reduction in non-contaminated data, recall the above discussion), so the effect of large observations is damped. To sum up, by choosing an appropriate weighting function f within the Stein-MM estimator \(\hat{\lambda }_{f,Exp }\), one cannot only achieve a reduced bias and MSE, but also a reduced sensitivity towards outlying observations.

3 Stein Estimation of Inverse Gaussian Distribution

Like the exponential distribution considered in the previous Sect. 2, the IG-distribution with parameters \(\mu ,\lambda >0\), abbreviated as \(IG (\mu ,\lambda )\), has positive support, where the pdf is given by

The IG-distribution is commonly used as a lifetime model (as it can be related to the first-passage time in random walks), but it may also simply serve as a distribution with positive skewness and, thus, as an alternative to, e. g., the lognormal distribution [see ]. Detailed surveys about the properties and applications of \(IG (\mu ,\lambda )\), and on many further references, can be found in Folks & Chhikara [8], Seshadri [19] as well as in Johnson et al. [10, Chapter 15]. In what follows, the moment properties of \(X\sim IG (\mu ,\lambda )\) are particularly relevant. We have \(\mathbb {E}[X]=\mu\), \(\mathbb {E}[1/X]=1/\mu +1/\lambda\), and \(\mathbb {V}[X] =\mu ^3/\lambda\). In particular, positive and negative moments are related to each other by

see Tweedie [26, p. 372] as well as the aforementioned surveys.

Remark 3.1

At this point, let us briefly recall the discussion in Sect. 2 (p. 7), where we highlighted the property that for i. i. d. exponential samples, the quotient \(\overline{f'(X)} / \overline{f(X)}\) has an (asymptotically) unique mean for different f. From counterexamples, it is easily seen that this property is not true for \(IG (\mu ,\lambda )\)-data. The Delta method implies that the mean of \(\overline{f'(X)} / \overline{f(X)}\) is asymptotically equal to \(\mathbb {E}[f'(X)]\big /\mathbb {E}[f(X)]\), which equals

-

\(1/\mathbb {E}[X] = 1/\mu\) for \(f(x)=x\), but

-

\(2\mathbb {E}[X]/\mathbb {E}[X^2] = 2\mu \big /\big (\mu ^2(1+\tfrac{\mu }{\lambda })\big ) = 2\lambda \big /\big (\mu (\lambda +\mu )\big )\) for \(f(x)=x^2\).

From now on, let \(X_1,\ldots ,X_n\) be an i. i. d. sample from \(IG (\mu ,\lambda )\), which shall be used for parameter estimation. Here, one obviously estimates \(\mu\) by the sample mean \(\overline{X}\), but the estimation of \(\lambda\) is more demanding. In the literature, the MM and ML estimation of \(\lambda\) have been discussed (see the details below), while our aim is to derive a generalized MM estimator with improved bias and MSE properties based on a Stein identity. In fact, as we shall see, our proposed approach can be understood as a unifying framework that covers the ordinary MM and ML estimator as special cases. A Stein identity for \(IG (\mu ,\lambda )\) has been derived by Koudou & Ley [13, p. 172], which states that

holds for all differentiable functions \(f: (0;\infty )\rightarrow \mathbb {R}\) with \(\lim _{x \rightarrow 0} f(x)\,\phi (x) =\lim _{x \rightarrow \infty } f(x)\,\phi (x)=0\). Solving (3.2) in \(\lambda\) and using the sample moments \(\overline{h(X)}=\tfrac{1}{n}\sum _{i=1}^{n} h(X_i)\) instead of \(\mathbb {E}\big [h(X)\big ]\) (where h might be any of the functions involved in (3.2)), the class of Stein-MM estimators for \(\lambda\) is obtained as

Here, the ordinary MM estimator of \(\lambda >0\), i. e., \(\hat{\lambda }_{MM }=\overline{X}^3/S^2\) with \(S^2\) denoting the empirical variance [27], is included as the special case \(f\equiv 1\), whereas the ML estimator \(\hat{\lambda }_{ML }=\overline{X}\big /\big (\overline{X}\cdot \overline{1/X}-1 \big )\) [26] follows for \(f(x)=1/x\). Hence, (3.3) provides a unifying estimation approach that covers the established estimators as special cases.

Remark 3.2

At this point, a reference to Example 2.9 in Ebner et al. [6] is necessary. As already mentioned in Sect. 1, also Ebner et al. [6] proposed a Stein-MM estimator for the IG-distribution, which, however, differs from the one developed here. The crucial difference is given by the fact that Ebner et al. [6] tried a joint estimation of \((\mu ,\lambda )\) based on (3.2), namely by jointly solving two equations that are implied by (3.2) if using two different weight functions \(f_1\not = f_2\). The resulting class of estimators, however, does not cover the existing MM and ML estimators, so Ebner et al. [6] did not pursue the Stein-MM estimation of the IG-distribution further. By contrast, as we did not see notable potential for improving the estimation of \(\mu\) by \(\overline{X}\) (recall the diverse optimality properties of the sample mean as an estimator of the population mean [e. g., 20]), we used (3.2) to only derive an estimator for \(\lambda\). In this way, we were able to recover both the MM and ML estimator of \(\lambda\) within (3.3).

For deriving the asymptotic distribution of our general Stein-MM estimator \(\hat{\lambda }_{f,IG }\) from (3.3), we first define the vectors \({\varvec{Z}}_i\) with \(i=1,\ldots ,n\) as

Their mean equals

Then, the following CLT holds.

Theorem 3.3

If \(X_1,\ldots ,X_n\) are i. i. d. according to \(IG (\mu ,\lambda )\), then the sample mean \(\overline{{\varvec{Z}}}\) of \({\varvec{Z}}_1,\ldots ,{\varvec{Z}}_n\) according to (3.4) is asymptotically normally distributed as

where \(N (\textbf{0}, {\varvec{\Sigma }})\) denotes the multivariate normal distribution, and where the covariances are given as

The proof of Theorem 3.3 is provided by Appendix A.5.

In the second step of deriving the asymptotics of \(\hat{\lambda }_{f,IG }\), we define the function \(g(x_1,x_2,x_3,x_4,x_5):=x_1^2(2x_5+x_3)/(x_4-x_1^2x_2)\). Then, \(\hat{\lambda }_{f,IG }=g(\overline{{\varvec{Z}}})\) and \(\lambda =g({\varvec{\mu }}_Z)\). Applying the Delta method [18] to Theorem 3.3, the following result follows.

Theorem 3.4

Let \(X_1,\ldots ,X_n\) be i. i. d. according to \(IG (\mu ,\lambda )\), and define \(\vartheta _{f,IG }:=\mu _f(2,1,0)-\mu ^2\mu _f(0,1,0)\). Then, \(\hat{\lambda }_{f,IG }\) is asymptotically normally distributed, where the asymptotic variance and bias, respectively, are given by

and

The proof of Theorem 3.4 is provided by Appendix A.6.

Before we discuss the effect of f on bias and MSE of \(\hat{\lambda }_{f,IG }\), let us first consider the special cases of the ordinary MM and ML estimator. Their asymptotics are immediate consequences of Theorem 3.4. For the MM estimator \(\hat{\lambda }_{MM }\), we have to choose \(f\equiv 1\) such that \(f'\equiv 0\). As a consequence,

This leads to a considerable simplification of Theorem 3.4, see Appendix A.7, which is summarized in the following corollary.

Corollary 3.5

Let \(X_1,\ldots ,X_n\) be i. i. d. according to \(IG (\mu ,\lambda )\), then \(\hat{\lambda }_{MM }=\overline{X}^3/S^2\) is asymptotically normally distributed with asymptotic variance \(\sigma _{MM }^2 = \frac{2}{n}\,\lambda (\lambda +3\mu )\) and bias \(\mathbb {B}_{MM } = \frac{3}{n}\,(\lambda +3\mu )\).

While we are not aware of a reference providing these asymptotics, they can be verified by using Tweedie [27, p. 704]. There, normal asymptotics for the reciprocal \(1/\hat{\lambda }_{MM }\) are provided: \(\sqrt{n}\big (\hat{\lambda }_{MM }^{-1}-\lambda ^{-1}\big )\ \sim N \big (0,\ 2(1+3\mu /\lambda )/\lambda ^2\big )\). Applying the Delta method with \(g(x)=1/x\) and \(g'(x)=-1/x^2\) to it, we conclude that \(\sqrt{n}(\hat{\lambda }_{MM }-\lambda )\) has the asymptotic variance \(\lambda ^4\cdot 2(1+3\mu /\lambda )/\lambda ^2 = 2\lambda \, (\lambda +3\mu )\) like in Corollary 3.5.

Next, we consider the special case of the ML estimator \(\hat{\lambda }_{ML }\), which follows by choosing \(f(x)= 1/x\) such that \(f'(x)=-1/x^2\). Again, the joint moments \(\mu _f(k,l,m)\) simplify a lot:

Together with Theorem 3.4, see Appendix A.8, we get the following corollary.

Corollary 3.6

Let \(X_1,\ldots ,X_n\) be i. i. d. according to \(IG (\mu ,\lambda )\), then \(\hat{\lambda }_{ML }=\overline{X}\big /\big (\overline{X}\cdot \overline{1/X}-1 \big )\) is asymptotically normally distributed with asymptotic variance \(\sigma _{ML }^2 = \frac{2}{n}\,\lambda ^2\) and bias \(\mathbb {B}_{ML } = \frac{3}{n}\,\lambda\).

Comparing Corollaries 3.5 and 3.6, it is interesting to note that the MM estimator has larger asymptotic bias and variance than the ML estimator: \(\sigma _{MM }^2 = \sigma _{ML }^2+\tfrac{6\lambda \mu }{n}\) and \(\mathbb {B}_{MM } =\mathbb {B}_{ML }+\tfrac{9\mu }{n}\). To verify the asymptotics of Corollary 3.6, note that the ML estimator \(\hat{\lambda }_{ML }\) has been shown to follow an inverted-\(\chi ^2\) distribution: \(\hat{\lambda }_{ML }\ \sim \ n\,\lambda \cdot \text {Inv-}\chi ^2_{n-1}\) [see 26, p. 368]. Using the formulae for mean and variance of \( \text {Inv-}\chi ^2_{n-1}\) [see 3, p. 431], we get

for large n, which agrees with Corollary 3.6.

Remark 3.7

To analyze the performance of the asymptotics provided by Theorem 3.4 (and that of the special cases discussed in Corollaries 3.5 and 3.6), if used as approximations to the true distribution of \(\hat{\lambda }_{f,IG }\) for finite sample size n, we did a simulation experiment with \(10^5\) replications. The obtained results for various choices of \((\mu ,\lambda )\) and f(x) are summarized in Table 2. It can be recognized that the asymptotic approximations for mean and standard deviation generally agree quite well with their simulated counterparts. Only for the case \(f(x)\equiv 1\) (the default MM estimator) and sample size \(n=100\), we sometimes observe stronger deviations. But in the large majority of estimation scenarios, we have a close agreement such that the conclusions derived from the asymptotic expressions are meaningful for finite sample sizes as well.

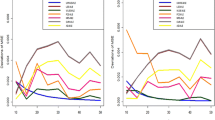

In analogy to our discussion in Sect. 2, let us now analyze the performance of the Stein-MM estimator \(\hat{\lambda }_{f,IG }\) for the weight functions \(f_a: (0;\infty )\rightarrow (0;\infty )\), \(f_a(x)=x^a\) with \(a\in \mathbb {R}{\setminus }\{-\frac{1}{2}\}\). Recall that this class of weight functions cover the default MM estimator \(\hat{\lambda }_{MM }\) for \(a=0\) and the ML estimator \(\hat{\lambda }_{ML }\) for \(a=-1\). The choice \(a=-\frac{1}{2}\) (right in the middle between these two special cases) has to be excluded as it leads to a degenerate estimator \(\hat{\lambda }_{f_a,IG }\) according to (3.3). For this reason, the subsequent analyses in Figs. 3 and 4 are done separately for \(a<-\frac{1}{2}\) (plots on left-hand side, covering the ML estimator) and \(a>-\frac{1}{2}\) (plots on right-hand side, covering the MM estimator).

Plots of \(n\,\sigma ^2 _{f_a,IG }\) and \(n\,\mathbb {B}_{f_a,IG }\), where points indicate minimal variance and bias values. Scenarios \((\mu ,\lambda )=(1,3)\) with a \(a\in (-2.5;-0.5)\) and b \(a\in (-0.5;0.8)\), and \((\mu ,\lambda )=(3,1)\) with c \(a\in (-2.5;-0.5)\) and (d) \(a\in (-0.5;0.8)\). Dotted lines at \(a=-1\) and \(a=0\) correspond to default ML and MM estimator, respectively

Let us start with the analysis of asymptotic bias and variance in Fig. 3. The upper and lower panel consider two different example situations, namely \((\mu ,\lambda )=(1,3)\) and \((\mu ,\lambda )=(3,1)\), respectively, while left-hand and right-hand side are separated by the pole at \(a=-\frac{1}{2}\). The right-hand side shows that the default MM estimator is neither (locally) optimal in terms of asymptotic bias nor in terms of variance. In fact, the optimal a for \(a>-\frac{1}{2}\) is around \(-0.1\) for \((\mu ,\lambda )=(1,3)\), and around \(-0.2\) for \((\mu ,\lambda )=(3,1)\). However, comparing the actual values at the Y-axis to those of the plots on the left-hand side, we recognize that the asymptotic bias and variance get considerably smaller for some region with \(a<-\frac{1}{2}\). In particular, the ML estimator is clearly superior to the MM estimator, and as shown by Figs. 3a and c, the ML estimator is even optimal in terms of the asymptotic variance. It has to be noted, however, that the curve corresponding to the asymptotic variance is rather flat around \(a=-1\), so moderate deviations from \(a=-1\) do not have a notable effect on the variance. Thus, it is important to also consider the optimum bias, which is reached for some a around \(-0.65\) in both (a) and (c). So it appears to be advisable to choose an \(a>-1\) for optimal overall estimation performance.

Plots of MSE\(_{f_a,IG }\), where points indicate minimal MSE values. Scenarios \((\mu ,\lambda )=(1,3)\) with a \(a\in (-2.5;-0.5)\) and b \(a\in (-0.5;0.8)\), and \((\mu ,\lambda )=(3,1)\) with c \(a\in (-2.5;-0.5)\) and d \(a\in (-0.5;0.8)\). Dotted lines at \(a=-1\) and \(a=0\) correspond to default ML and MM estimator, respectively

This is confirmed by Fig. 4, where the asymptotic MSE is shown for various sample sizes n and the same scenarios as in Fig. 3. While the ML estimator approaches the MSE-optimum for increasing n, we get an improved performance for smaller sample sizes if choosing \(a\in (-1;-0.5)\) appropriately (e. g., \(a\approx -0.8\) if \(n\le 50\)). Generally, an analogous recommendation holds for \(a>-\frac{1}{2}\) in parts (b) and (d), with MSE-optima at a around \(-0.1\) and \(-0.2\), respectively, but much smaller MSE values can be reached for \(a<-\frac{1}{2}\). To sum up, the default MM estimator (and more generally, Stein-MM estimators \(\hat{\lambda }_{f_a,IG }\) with \(a>-\frac{1}{2}\)) are not recommended for practice due to their rather large bias, variance, and thus MSE, while the ML estimator constitutes at least a good initial choice for estimating \(\lambda\), being optimal in terms of asymptotic variance. However, unless the sample size n is very large, an improved MSE performance can be achieved by reducing a to an appropriate value in \((-1;-0.5)\) in the second step and by computing the corresponding Stein-MM estimate \(\hat{\lambda }_{f_a,IG }\).

Example 3.8

As an illustrative data example, let us consider the \(n=25\) runoff amounts at Jug Bridge in Maryland [see \(\mu\) is estimated by the sample mean as \(\approx 0.803\), and using the ML estimator \(\hat{\lambda }_{f_{-1},IG }\) as an initial estimator for \(\lambda\), we get the value \(\approx 1.440\). As outlined before, this initial model fit might now be used for searching estimators with improved performance. Some examples (together with further estimates for comparative purposes) are summarized in Table 3. The ML estimator (\(a=-1\)) is also optimal in asymptotic variance, whereas the bias-optimal choice is obtained for a somewhat larger value of a, namely \(a\approx -0.668\). The corresponding estimate is slightly lower than the ML estimate, similar to the value for \(a=-1.5\), and can thus be seen as a fine-tuning of the initial estimate. By contrast, a notable change in the estimate happens if we turn to \(a>-0.5\). The “constrained-optimal” choices (optimal given that \(a>-0.5\)) as well as the MM estimate lead to nearly the same values (around 1.51) and are thus visibly larger than the actually preferable estimates for \(a<-0.5\). Also their variance and bias are about 2.5 times larger than those of the estimates for \(a<-0.5\).

4 Stein Estimation of Negative-binomial Distribution

While the previous sections (and also the research by Ebner et al. [6]) solely focussed on continuous distributions, let us now turn to the case of discrete-valued random variables. Here, the most relevant type are count random variables X, having a quantitative range contained in \(\mathbb {N}_0\). The probably most well-known distributions for counts are Poisson and binomial distributions, both depending on the (normalized) mean as their only model parameter. But as already discussed in Sect. 3, there is hardly any potential for finding a better estimator of the mean than the sample mean, so we do not further discuss these distributions. Instead, we focus on another popular count distribution, namely the NB-distribution with parameters \(\nu >0\) and \(\pi \in (0;1)\), abbreviated as \(NB (\nu ,\pi )\). Such \(X\sim NB (\nu ,\pi )\) has the range \(\mathbb {N}_0\), probability mass function (pmf) \(\mathbb {P}(X=x) = \left( {\begin{array}{c}\nu +x-1\\ x\end{array}}\right) \, (1-\pi )^x\, \pi ^\nu\), and mean \(\mu = \mathbb {E}[X]=\frac{\nu (1-\pi )}{\pi }\). By contrast to the equidispersed Poisson distribution, its variance \(\sigma ^2:= \mathbb {V}[X]=\frac{\nu (1-\pi )}{\pi ^2}\) is always larger than the mean (overdispersion), which is an important property for applications in practice. A detailed survey about the properties of and estimators for the \(NB (\nu ,\pi )\)-distribution can be found in Johnson et al. [11, Chapter 5]. Instead of the original parametrization by \((\nu ,\pi )\), it is often advantageous to consider either \((\mu ,\nu )\) or \((\mu ,\pi )\), where \(\nu\) or \(\pi\), respectively, serve as an additional dispersion parameter once the mean \(\mu\) has been fixed. In case of the \((\mu ,\nu )\)-parametrization, it holds that \(\pi =\tfrac{\nu }{\nu +\mu }\) and \(\mathbb {V}[X]=\tfrac{\nu +\mu }{\nu }\,\mu\), whereas we get \(\nu =\tfrac{\pi \,\mu }{1-\pi }\) and \(\mathbb {V}[X]=\tfrac{1}{\pi }\,\mu\) for the \((\mu ,\pi )\)-parametrization. Besides the ease of interpretation, these parametrizations are advantageous in terms of parameter estimation. While MM estimation is rather obvious, namely \(\mu\) by \(\overline{X}\) and \(\nu _{MM }=\overline{X}^2/(S^2-\overline{X})\), \(\pi _{MM }=\overline{X}/S^2\), ML estimation is generally demanding as there does not exist a closed-form solution, see the discussion by Kemp & Kemp [12], i. e., numerical optimization is necessary. However, there is an important exception: the NB’s ML estimator of the mean \(\mu\) is given by \(\overline{X}\) [12, p. 867], i. e., \(\overline{X}\) is both the MM and ML estimator with its known appealing performance. So it suffices to find an adequate estimator for \(\nu\) or \(\pi\), respectively, the ML estimators of which do not have a closed-form expression.

These difficulties in estimating \(\nu\) or \(\pi\), respectively, serve as our motivation for deriving a generalized MM estimator. For this purpose, we consider the NB’s Stein identity according to Brown & Phillips [5, Lemma 1], which can be expressed as either

for any function f such that \(\mathbb {E}\big [\vert f(X)\vert \big ]\), \(\mathbb {E}\big [\vert f(X+1)\vert \big ]\) exist. Note that the discrete difference \(\Delta f(x):= f(x+1)-f(x)\) in (4.1) and (4.2) plays a similar role as the continuous derivative \(f'(x)\) in the previous Stein identities (2.1) and (3.2).

Stein-MM estimators are now derived by solving (4.1) in \(\nu\) or (4.2) in \(\pi\), respectively, and by using again sample moments \(\overline{h(X)}=\tfrac{1}{n}\sum _{i=1}^{n} h(X_i)\) instead of the involved population moments \(\mathbb {E}\big [h(X)\big ]\) (with \(\mu\) being estimated by \(\overline{X}\)). As a result, the (closed-form) classes of Stein-MM estimators for \(\nu\) and \(\pi\) are obtained as

Note that the choice \(f(x)=x\) (hence \(\Delta f(x)=1\)) leads to the default MM estimators given above. The ML estimators are not covered by (4.3) this time, because they do not have a closed-form expression at all. Note, however, that the so-called “weighted-mean estimator” for \(\nu\) in (2.6) of Kemp & Kemp [12], which was motivated as a kind of approximate ML estimator, is covered by (4.3), namely by choosing \(f_\alpha (x)=\alpha ^x\) with \(\alpha \in (0;1)\). It is also worth pointing to Savani & Zhigljavsky [17], who define an estimator of \(\nu\) based on the moment \(\overline{f(X)}\) for some specified f; their approach, however, usually does not lead to a closed-form estimator.

For deriving the asymptotic distribution of the general Stein-MM estimator \(\hat{\nu }_{f,NB }\) or \(\hat{\pi }_{f,NB }\), respectively, we first define the vectors \({\varvec{Z}}_i\) with \(i=1,\ldots ,n\) as

Their mean equals

where we define \(\tilde{\mu }_f(k,l,m):=\mathbb {E}[X^k\cdot f(X)^l\cdot f(X+1)^m]\) for any \(k,l,m\in \mathbb {N}_0\). Then, the following CLT holds.

Theorem 4.1

If \(X_1,\ldots ,X_n\) are i. i. d. according to a negative binomial distribution, then the sample mean \(\overline{{\varvec{Z}}}\) of \({\varvec{Z}}_1,\ldots ,{\varvec{Z}}_n\) according to (4.4) is asymptotically normally distributed as

where \(N (\textbf{0}, {\varvec{\Sigma }})\) denotes the multivariate normal distribution, and where the covariances are given as

The proof of Theorem 4.1 is provided by Appendix A.9.

In the second step of deriving the Stein-MM estimators’ asymptotics, we define the function

-

\(g_\nu (x_1,x_2,x_3,x_4):=x_1(x_4-x_3)/(x_3-x_1x_2)\) for \(\hat{\nu }_{f,NB }\),

-

\(g_\pi (x_1,x_2,x_3,x_4):=(x_4-x_3)/(x_4-x_1x_2)\) for \(\hat{\pi }_{f,NB }\).

Then, \(\hat{\nu }_{f,NB }=g_\nu (\overline{{\varvec{Z}}})\), \(\nu =g_\nu ({\varvec{\mu }}_Z)\), and \(\hat{\pi }_{f,NB }=g_\pi (\overline{{\varvec{Z}}})\), \(\pi =g_\pi ({\varvec{\mu }}_Z)\), respectively, holds. Applying the Delta method [18] to Theorem 4.1, the following theorems follow.

Theorem 4.2

Let \(X_1,\ldots ,X_n\) be i. i. d. according to \(NB (\nu ,\, \tfrac{\nu }{\nu +\mu })\), and define \(\eta _1:=\tilde{\mu }_f(1,1,0)-\mu \cdot \tilde{\mu }_f(0,0,1)\). Then, \(\hat{\nu }_{f,NB }\) is asymptotically normally distributed, where the asymptotic variance and bias, respectively, are given by

and

The proof of Theorem 4.2 is provided by Appendix A.10.

Theorem 4.3

Let \(X_1,\ldots ,X_n\) be i. i. d. according to \(NB (\tfrac{\pi \,\mu }{1-\pi },\, \pi )\), and define \(\eta _2:=\tilde{\mu }_f(1,0,1)-\mu \cdot \tilde{\mu }_f(0,0,1)\). Then, \(\hat{\pi }_{f,NB }\) is asymptotically normally distributed, where the asymptotic variance and bias, respectively, are given by

and

The proof of Theorem 4.3 is provided by Appendix A.11.

Our first special case shall be the function \(f_\alpha (x)=\alpha ^x\) with \(\alpha \in (0;1)\), which is inspired by Kemp & Kemp [12]. For evaluating the asymptotics in Theorems 4.1–4.3, we need to compute the moments

As shown in the following, this can be done by explicit closed-form expressions. The idea is to utilize the probability generating function (pgf) of the NB-distribution,

together with the following property:

where \(x_{(r)}:=x\cdots (x-r+1)\) for \(r\in \mathbb {N}_0\) denote the falling factorials. The main result is summarized by the following lemma.

Lemma 4.4

Let \(X\sim NB (\nu ,\,\pi )\). For the mixed factorial moments, we have

The proof of Lemma 4.4 is provided by Appendix A.12. The factorial moments are easily transformed into raw moments by using the relation \(x^k=\sum _{j=0}^{k} S_{k,j}\, x_{(j)}\), where \(S_{k,j}\) are the Sterling numbers of the second kind (see [11], p. 12). Then, \(\mathbb {E}[X^k\cdot \alpha ^{(l+m) X}]\) follows by plugging-in \(z=\alpha ^{l+m}\) into Lemma 4.4.

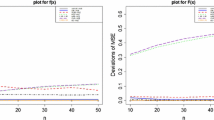

Stein-MM estimator \(\hat{\nu }_{f,NB }\) for \(\mu =2.5\). Plots of \(n\,\sigma ^2 _{f,NB }\) and \(n\,\mathbb {B}_{f,NB }\) for parametrization (4.1), where points indicate minimal variance and bias values. Weighting function (a)–(b) \(f(x)=\alpha ^x\) with \(\alpha \in (0,1)\), and (c)–(d) \(f(x)=(x+1)^a\) with \(a\in (-1,1.5)\). The gray graphs in (c)–(d) correspond to the comparative choice \(f(x)=x^a\), which leads to the default MM estimator for \(a=1\) (dotted lines)

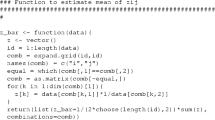

While general closed-form formulae are possible in this way for \(\tilde{\mu }_{f_\alpha }(k,l,m)\) as well as for Theorems 4.1–4.3, the obtained results are very complex such that we decided to omit the final expressions. Instead, we compute \(\tilde{\mu }_{f_\alpha }(k,l,m)\) and, thus, the expressions of Theorems 4.1–4.3 numerically. This is easily done in practice, in fact for any reasonable choice of the function f, by computing

where the upper summation limit M is chosen sufficiently large, e. g., such that \(M^k\cdot f(M)^l\cdot f(M+1)^m\cdot P(X=M)\) falls below a specified tolerance limit. In this way, we generated the illustrative graphs in Figs. 5 (estimator \(\hat{\nu }_{f,NB }\)) and 6 (estimator \(\hat{\pi }_{f,NB }\)). There, parts (a)–(b) always refer to the above choice \(f_\alpha (x)=\alpha ^x\), and clear minima for variance and bias for \(f_\alpha (x)=\alpha ^x\) can be recognized. To be able to compare with the respective default MM estimator, we did analogous computations for \(f_a(x)=x^a\) (where \(a=1\) for the default MM estimator), which, however, is only defined for \(a>0\) as X becomes zero with positive probability. As can be seen from the gray curves in parts (c)–(d), variance and basis usually do not attain a local minimum for \(a>0\). Therefore, parts (c)–(d) mainly focus on a slight modification of the weight function, namely \(f_{a,1}(x)=(x+1)^a\), which is also well-defined for \(a<0\).

Stein-MM estimator \(\hat{\pi }_{f,NB }\) for \(\mu =2.5\). Plots of \(n\,\sigma ^2 _{f,NB }\) and \(n\,\mathbb {B}_{f,NB }\) for parametrization (4.2), where points indicate minimal variance and bias values. Weighting function (a)–(b) \(f_\alpha (x)=\alpha ^x\) with \(\alpha \in (0,1)\), and (c)–(d) \(f_{a,1}(x)=(x+1)^a\) with \(a\in (-1,1.5)\). The gray graphs in (c)–(d) correspond to the comparative choice \(f_a(x)=x^a\), which leads to the default MM estimator for \(a=1\) (dotted lines)

The optimal choices for \(\alpha\) and a, respectively, lead to very similar variance and bias values, see Table 4. While \(\alpha ^x\) leads to a slightly larger variance than \((x+1)^a\), its optimal bias is visibly lower. For both choices of f, however, the optimal Stein-MM estimators perform clearly better than the default MM estimator, see the dotted line at \(a=1\) in parts (c)–(d) in Figs. 5 and 6. Altogether, also in view of the fact that explicit closed-form expressions are possible for \(\alpha ^x\) (although being rather complex), we prefer to use \(f_\alpha (x)=\alpha ^x\) as the weighting function, in accordance to Kemp & Kemp [12]. For this choice, we also did a simulation experiment with \(10^5\) replications (in analogy to Remark 3.7), in order to check the finite-sample performance of the asymptotic expressions for variance and bias. We generally observed a very good agreement between asymptotic and simulated values. Especially for the estimator \(\hat{\pi }_{f_\alpha ,NB }\), the asymptotic approximations show an excellent performance, whereas the estimator \(\hat{\nu }_{f_\alpha ,NB }\) sometimes leads to extreme estimates if \(\nu =2.5\) and \(n=100\). But except these few outlying estimates, also \(\hat{\nu }_{f_\alpha ,NB }\) is well described by the asymptotic formulae. Detailed simulation results are available from the authors upon request.

Example 4.5

As an illustrative data example, let us consider \(n=150\) counts of red mites on apple leaves (see [16], p. 271), who confirmed “a good fit of the negative binomial” for these data. The parameter \(\mu\) is estimated by the sample mean as \(\approx 1.147\). In case of the \((\mu , \nu )\)-parametrization, we use the ordinary MM estimator as an initial estimator for \(\nu\), leading to the value \(\approx 1.167\). Based on this initial model fit, we search for Stein-MM estimators with \(f_\alpha (x)=\alpha ^x\) having an improved performance. The resulting estimates (together with further estimates for comparative purposes) are summarized in the upper part of Table 5. It can be seen that the initial estimate (last column) is corrected downwards to a value close to 1 (i. e., we essentially end up with the special case of a geometric distribution). Here, it is interesting to note that the numerically computed ML estimate as reported in Rueda & O’Reilly [16], also leads to such a result, namely to the value 1.025. In this context, we also recall Kemp & Kemp [12] who proposed the choice \(f_\alpha (x)=\alpha ^x\) to get a closed-form approximate ML estimator for \(\nu\).

We repeated the aforementioned estimation procedure also for the \((\mu , \pi )\)-parameterization, starting with the initial MM-estimate \(\approx 0.504\) for \(\pi\), see the lower part of Table 5. Again, the initial estimate is corrected downwards to a value around 0.46.

5 Conclusions

In this article, we demonstrated how Stein characterizations of (continuous or discrete) distributions can be utilized to derive improved moment estimators of model parameters. The main idea is to first choose an appropriate parametric class of weighting functions. Then, the final parameter values are determined such that the resulting Stein-MM estimator has asymptotically optimal mean or variance properties within the considered class, and certainly also lower variance and bias than existing parameter estimators. Here, the optimal choice from the given class of weighting functions is implemented based closed-form expressions for asymptotic distributions, possibly together with numerical optimization routines. The whole procedure was exemplified for three types of distribution: the continuous exponential and inverse Gaussian distribution, as well as the discrete negative-binomial distribution. For all these distribution families, we observed an appealing performance in various aspects, and we also demonstrated the application of our findings to real-world data examples.

Taking together the present article and the contributions by Arnold et al. [2], Wang & Weiß [28], Ebner et al. [6], Stein-MM estimators are now available for a wide class of continuous distributions. For discrete distributions, however, only the negative-binomial distribution (see Sect. 4 before) and the discrete Lindley distributions [see 28] have been considered so far. Thus, future research should be directed towards Stein-MM estimators for further common types of discrete distribution. Our research also gives rise to several further directions for future research. While our main focus was on selecting the weight function with respect to minimal bias or variance, we also briefly pointed out in Sect. 2 that such a choice could also be motivated by robustness to outliers. In fact, there are some analogies to “M-estimation” as introduced by Huber [9]. It appears to be promising to analyze if robustified MM estimators can be achieved by suitable classes of weighting function. As another direction for future research (briefly sketched in Sect. 2), the performance of GoF-tests based on Stein-MM estimators should be investigated. Finally, one should analyze Stein-MM estimators in a regression or time-series context.

Change history

02 July 2024

A Correction to this paper has been published: https://doi.org/10.1007/s44199-024-00083-x

References

Anastasiou, A., Barp, A., Briol, F.-X., Ebner, B., Gaunt, R.E., Ghaderinezhad, F., Gorham, J., Gretton, A., Ley, C., Liu, Q., Mackey, L., Oates, C.J., Reinert, G., Swan, Y.: Stein’s method meets computational statistics: a review of some recent developments. Stat. Sci. 38(1), 120–139 (2023)

Arnold, B.C., Castillo, E., Sarabia, J.M.: A multivariate version of Stein’s identity with applications to moment calculations and estimation of conditionally specified distributions. Commun. Stat. Theory Methods 30(12), 2517–2542 (2001)

Bernardo, J.M., Smith, A.F.M.: Bayesian Theory. John Wiley & Sons Inc, New York (1994)

Betsch, S., Ebner, B., Nestmann, F.: Characterizations of non-normalized discrete probability distributions and their application in statistics. Electronic Journal of Statistics 16(1), 1303–1329 (2022)

Brown, T.C., Phillips, M.J.: Negative binomial approximation with Stein’s method. Methodol. Comput. Appl. Probab. 1(4), 407–421 (1999)

Ebner, B., Fischer, A., Gaunt, R.E., Picker, B., Swan, Y.: Point estimation through Stein’s method. arXiv:2305.19031 (2023)

Elfessi, A., Reineke, D.M.: A Bayesian look at classical estimation: the exponential distribution. J. Stat. Educ. 9(1), 5 (2001)

Folks, J.L., Chhikara, R.S.: The inverse Gaussian distribution and its statistical application – a review. J. Roy. Stat. Soc. B 40(3), 263–289 (1978)

Huber, P.J.: Robust estimation of a location parameter. Ann. Math. Stat. 35(1), 73–101 (1964)

Johnson, N.L., Kotz, S., Balakrishnan, N.: Continuous Univariate Distributions, Volume 1. 2nd edition, John Wiley & Sons, Inc., New York (1995)

Johnson, N.L., Kemp, A.W., Kotz, S.: Univariate Discrete Distributions. 3rd edition, John Wiley & Sons, Inc., New York (2005)

Kemp, A.W., Kemp, C.D.: A rapid and efficient estimation procedure for the negative binomial distribution. Biomed. J. 29(7), 865–873 (1987)

Koudou, A.E., Ley, C.: Characterizations of GIG laws: a survey. Probab. Surv. 11, 161–176 (2014)

Kubokawa, T.: Stein’s identities and the related topics: an instructive explanation on shrinkage, characterization, normal approximation and goodness-of-fit. Japanese Journal of Statistics and Data Science, in press (2024)

Landsman, Z., Valdez, E.A.: The tail Stein’s identity with applications to risk measures. N. Am. Actuar. J. 20(4), 313–326 (2016)

Rueda, R., O’Reilly, F.: Tests of fit for discrete distributions based on the probability generating function. Commun. Stat.-Simul. Comput. 28(1), 259–274 (1999)

Savani, V., Zhigljavsky, A.A.: Efficient estimation of parameters of the negative binomial distribution. Commun. Stat.-Theory Methods 35(5), 767–783 (2006)

Serfling, R.J.: Approximation Theorems of Mathematical Statistics. John Wiley & Sons Inc, New York (1980)

Seshadri, V.: The Inverse Gaussian Distribution. Statistical Theory and Applications, Springer, New York (1999)

Shuster, J.J.: Nonparametric optimality of the sample mean and sample variance. Am. Stat. 36(3), 176–178 (1982)

Stein, C.: A bound for the error in the normal approximation to the distribution of a sum of dependent random variables. Proc. Sixth Berkeley Sympos. Math. Stat. Probab. 2, 583–602 (1972)

Stein, C.: Approximate Computation of Expectations. IMS Lecture Notes, Volume 7, Hayward, California (1986)

Stein, C., Diaconis, P., Holmes, S., Reinert, G.: Use of exchangeable pairs in the analysis of simulations. In P. Diaconis & S. Holmes (eds): Stein’s Method: Expository Lectures and Applications, IMS Lecture Notes, Vol. 46, 1–25 (2004)

Sudheesh, K.K.: On Stein’s identity and its applications. Stat. Probab. Lett. 79(12), 1444–1449 (2009)

Sudheesh, K.K., Tibiletti, L.: Moment identity for discrete random variable and its applications. Statistics 46(6), 767–775 (2012)

Tweedie, M.C.K.: Statistical properties of inverse Gaussian distributions. I. Ann. Math. Stat. 28(2), 362–377 (1957)

Tweedie, M.C.K.: Statistical properties of inverse Gaussian distributions. II. Ann. Math. Stat. 28(3), 696–705 (1957)

Wang, S., Weiß, C.H.: New characterizations of the (discrete) Lindley distribution and their applications. Math. Comput. Simul. 212, 310–322 (2023)

Weiß, C.H.: Control charts for Poisson counts based on the Stein–Chen identity. Advanced Statistical Methods in Statistical Process Monitoring, Finance, and Environmental Science, Springer, in press (2023)

Weiß, C.H., Aleksandrov, B.: Computing (bivariate) Poisson moments using Stein-Chen identities. Am. Stat. 76(1), 10–15 (2022)

Weiß, C.H., Puig, P., Aleksandrov, B.: Optimal Stein-type goodness-of-fit tests for count data. Biomed. J. 65(2), 2200073 (2023)

Acknowledgements

The author thank the two referees for their useful comments on an earlier draft of this article.

Funding

Open Access funding enabled and organized by Projekt DEAL. This research was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) – Projektnummer 437270842.

Author information

Authors and Affiliations

Contributions

SN and CHW contributed to the design and implementation of the research, to the analysis of the results and to the writing of the manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare they have no conflict of interest.

Consent for publication

The authors hereby consent to publication of their work upon its acceptance.

Additional information

The original online version of this article was revised: explanatory text: "When this article was published, a correction by the author in Corollary 3.5 was accidentally ignored (cf. attachment). Instead of "...reciprocal 26, p. 368.] Using the formulae for mean and variance of 3, p. 431] we get 1... "there should be"... reciprocal 1 ...

Derivations

Derivations

1.1 Proof of Theorem 2.1

The asymptotic normality immediately follows from the Lindeberg–Lévy CLT [18, p. 28]. For the covariances, we get

In the last line, we applied the Stein-identity (2.1) to \(g(X_i):=\tfrac{1}{2}f(X_i)^2\) with the derivative \(g'(X_i)=f'(X_i)f(X_i)\):

We conclude the proof by noting that the second expression for \(\sigma _{12}\) in Theorem 2.1 immediately follows by using the expression for \(\sigma _{22}\).

1.2 Proof of Theorem 2.2

First, we evaluate the gradient and Hessian of g in \({\varvec{\mu }}_Z\), which leads to \({\varvec{D}}\) and \({\varvec{H}}\) given by

Applying the Delta method to Theorem 2.1, the asymptotic normality

follows. The 2nd-order Taylor approximation \(\hat{\lambda }_{f,Exp } - \lambda \approx {\varvec{D}}(\overline{{\varvec{Z}}}-{\varvec{\mu }}_Z)+\tfrac{1}{2}(\overline{{\varvec{Z}}}-{\varvec{\mu }}_Z)^\top {\varvec{H}}(\overline{{\varvec{Z}}}-{\varvec{\mu }}_Z)\) allows to conclude the asymptotic bias as

The explicit expression for the asymptotic variance \(\sigma _{f,Exp }^2 = \frac{\sigma ^2}{n}\) follows by applying \({\varvec{D}}\) and Theorem 2.1:

Similarly, using \({\varvec{H}}\), the asymptotic bias becomes

This completes the proof of Theorem 2.2.

1.3 Proof of Corollary 2.3

We start by proving (2.5). As the pdf of X is given by \(\phi (x)=\lambda e^{-\lambda x}\) for \(x > 0\) and zero otherwise, we get

where the integrand of the last integral is the pdf of a two-parameter gamma distribution, namely \(Gamma (a+1,\tfrac{1}{\lambda })\) with \(a>-1\) and \(\lambda >0\) according to Johnson et al. [10, p. 343]. Note that \(\mathbb {E}[X^a]\) corresponds to \(\mu _{f_a}(0,1,0)\) in our notation. In view of Theorem 2.2, we also need the following moments, which are implied by (2.5):

Inserting these expressions into Theorem 2.2, we get

This completes the proof of Corollary 2.3.

1.4 Proof of Corollary 2.4

Let us start by deriving (2.6). We have

This immediately leads to

Insertion into Theorem 2.2 leads to

Finally, the MSE equals

This completes the proof of Corollary 2.4.

1.5 Proof of Theorem 3.3

The asymptotic normality immediately follows from the Lindeberg–Lévy CLT [18, p. 28]. For the covariances, we get

1.6 Proof of Theorem 3.4 (Sketch)

In what follows, we sketch the derivations for the variance and bias, respectively. Recall the abbreviation \(\vartheta _f:=\mu _f(2,1,0)-\mu ^2\mu _f(0,1,0)\). First, we evaluate the gradient and Hessian of g in \({\varvec{\mu }}_Z\), which leads to \({\varvec{D}}\) and \({\varvec{H}}\) given by

Here, for the sake of readability, the upper triangle of the symmetric matrix \({\varvec{H}}\) was replaced by stars ‘\(*\)’. Applying the Delta method to Theorem 3.3, the asymptotic normality

follows. The 2nd-order Taylor approximation \(\hat{\lambda }_{f,IG } - \lambda \approx {\varvec{D}}(\overline{{\varvec{Z}}}-{\varvec{\mu }}_Z)+\tfrac{1}{2}(\overline{{\varvec{Z}}}-{\varvec{\mu }}_Z)^\top {\varvec{H}}(\overline{{\varvec{Z}}}-{\varvec{\mu }}_Z)\) allows to conclude the asymptotic bias as

The explicit expression for the asymptotic variance \(\sigma _{f,IG }^2 = \frac{\sigma ^2}{n}\) follows by applying \({\varvec{D}}\) and Theorem 3.3. After tedious calculations, we obtain the expression for \(\sigma _{f,IG }^2\) stated in Theorem 3.4. Similarly, using \({\varvec{H}}\), the expression for the asymptotic bias is derived. This completes the proof of Theorem 3.4.

1.7 Proof of Corollary 3.5 (Sketch)

If \(f\equiv 1\), we have \(\mu _1(k,l,m)=\delta _{m,0}\,\mathbb {E}[X^k]\), recall (3.6), where \(\delta _{\cdot ,\cdot }\) denotes the Kronecker delta, so Theorem 3.4 simplifies considerably:

where \(\vartheta _1 = \mathbb {E}[X^2]-\mu ^2 = \mathbb {V}[X] = \mu ^3/\lambda\), and

Using the following moments (see [26], p. 366),

and after tedious calculations, this leads to

and

So the proof of Corollary 3.5 is complete.

1.8 Proof of Corollary 3.6 (Sketch)

Recall from (3.7) that for \(f(x)=1/x\), we have \(\mu _{1/x}(k,l,m)=(-1)^m\,\mathbb {E}[X^{k-l-2m}]\). In particular, \(\vartheta _f = \mathbb {E}[X]-\mu ^2\,\mathbb {E}[X^{-1}] = \mu -\mu ^2\,(\mu ^{-1}+\lambda ^{-1}) =-\mu ^2/\lambda\). Furthermore, positive and negative moments are related to each other by (3.1), where closed-form expressions for moments of order 2–4 are given by (A.1). This can be used to simplify the joint moments involved in Theorem 3.4 as follows:

After tedious calculations, Theorem 3.4 simplifies to

for the variance, and to

for the bias. So the proof of Corollary 3.6 is complete.

1.9 Proof of Theorem 4.1

The asymptotic normality immediately follows from the Lindeberg–Lévy CLT [18, p. 28]. For the covariances, we get

1.10 Proof of Theorem 4.2 (Sketch)

In what follows, we sketch the derivations for the variance and bias, respectively. Recall the abbreviation \(\eta _1:=\tilde{\mu }_f(1,1,0)-\mu \cdot \tilde{\mu }_f(0,0,1)\). Furthermore, in order to denote the results more compactly, let us abbreviate \(\tilde{\mu }_{klm}:= \tilde{\mu }_f(k,l,m)\). First, we evaluate the gradient and Hessian of \(g_\nu\) in \({\varvec{\mu }}_Z\), which leads to \({\varvec{D}}\) and \({\varvec{H}}\) given by

Here, for the sake of readability, the upper triangle of the symmetric matrix \({\varvec{H}}\) was replaced by stars ‘\(*\)’. Applying the Delta method to Theorem 4.1, the asymptotic normality

follows. The 2nd-order Taylor approximation \(\hat{\nu }_{f,NB } - \nu \approx {\varvec{D}}(\overline{{\varvec{Z}}}-{\varvec{\mu }}_Z)+\tfrac{1}{2}(\overline{{\varvec{Z}}}-{\varvec{\mu }}_Z)^\top {\varvec{H}}(\overline{{\varvec{Z}}}-{\varvec{\mu }}_Z)\) allows to conclude the asymptotic bias as

The explicit expressions for the asymptotic variance \(\sigma _{f,NB }^2 = \frac{\sigma ^2}{n}\) follow by applying \({\varvec{D}}\) and Theorem 4.1. After tedious calculations, we obtain the expressions stated in Theorem 4.2, which completes the proof.

1.11 Proof of Theorem 4.3 (Sketch)

In what follows, we sketch the derivations for the variance and bias, respectively. Recall the abbreviation \(\eta _2:=\tilde{\mu }_f(1,0,1)-\mu \cdot \tilde{\mu }_f(0,0,1)\), and let us again the abbreviations \(\tilde{\mu }_{klm}:= \tilde{\mu }_f(k,l,m)\). First, we evaluate the gradient and Hessian of \(g_\pi\) in \({\varvec{\mu }}_Z\), which leads to \({\varvec{D}}\) and \({\varvec{H}}\) given by

Here, for the sake of readability, the upper triangle of the symmetric matrix \({\varvec{H}}\) was replaced by stars ‘\(*\)’. Then, the remaining steps are like in Appendix A.10. This completes the proof of Theorem 4.3.

1.12 Proof of Lemma 4.4

We proof Lemma 4.4 by induction.

Base case: For \(k=0\) with \(x_{(0)}=1\), we get \(\mathbb {E}[z^X]=\text {pgf}\,(z)\).

Inductive step: Let us assume that the statement holds for a given k. Then,

By the induction hypothesis, we get

This completes the proof of Lemma 4.4.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nik, S., Weiß, C.H. Generalized Moment Estimators Based on Stein Identities. J Stat Theory Appl (2024). https://doi.org/10.1007/s44199-024-00081-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s44199-024-00081-z