Abstract

In the count data set, the frequency of some points may occur more than expected under the standard data analysis models. Indeed, in many situations, the frequencies of zero and of some other points tend to be higher than those of the Poisson. Adapting existing models for analyzing inflated observations has been studied in the literature. A method for modeling the inflated data is the inflated distribution. In this paper, we extend this inflated distribution. Indeed, if inflations occur in three or more of the support point, then the previous models are not suitable. We propose a model based on zero, one, \(\ldots ,\) and k inflated points with probabilities \(w_{0},w_1,\ldots ,\) and \(w_{k},\) respectively. By choosing the appropriate values for the weights \(w_{0},\ldots ,w_{k},\) various inflated distributions, such as the zero-inflated, zero–one-inflated, and zero–k-inflated distributions, are derived as special cases of the proposed model in this paper. Various illustrative examples and real data sets are analyzed using the obtained results.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

One of the important causes of overdispersion in the count data is an inflated number of zeros in excess of the number expected under the distribution. In such cases, one of the appropriate models is zero-inflated Poisson (ZIP) distribution. There are numerous papers in the literature dealing with the ZIP model. The earliest studies on the ZIP model were done by Cohen [6] and Yoneda [37]. Lambert [14] introduced and studied the ZIP regression model using the Expectation-Maximization (EM) approach. Vandenbroek [34] gave a score test for testing a standard Poisson regression model. Jansakul and Hinde [11] extended this test to a more general situation where the zero probability depends on covariates. Ridout et al. [27] derived a score test for testing a ZIP regression model against the zero-inflated negative binomial alternatives. Agarwal et al. [1] applied the ZIP regression model for analyzing spatial count data sets. The score test using the normal approximation might underestimate the nominal significance level for small sample cases. Jung et al. [12] proposed a parametric bootstrap method for this problem. They observed that the ZIP regression model for prediction is more robust than the usual Poisson regression model. Long et al. [20] developed a marginalized ZIP regression model approach for independent responses to model the population mean count directly, allowing straightforward inference for overall exposure effects and derived an empirical robust variance estimation for overall incidence density ratios. Zhu et al. [40] have extended zero-inflated count models to account for random effects. Lim et al. [16] proposed the ZIP regression mixture model to account for both excess zeros and overdispersion caused by unobserved heterogeneity. A Bayesian latent factor ZIP model was proposed by Neelon and Chung [25] to analyze the molecular differences among breast cancer patients. Furthermore, ZIP models for censored data were studied by Saffari and Adnan [28] and Yang and Simpson [36]. Research on these models is still in progress. For instance, the ZIP model’s parameters have been estimated using the Genetic Algorithm by Dikheel and Jouda [9] and the Shrinkage estimator by Zandi et al. [38]. ZIP models continue to be frequently utilized in many studies, even with the development of more comprehensive models [24, 32]. Empirical evidence shows that inflation may occur at more than one point. For example, Lin and Tsai [17] discussed a model that can be applied to both excessive zeros and ones known and called the zero–one inflated Poisson (ZOIP) model. Zhang et al. [39] initially studied the likelihood-based ZOIP model without covariates. When the covariates are available, it is essential to build a ZOIP regression model to clarify the relationship between the covariates and the response variable. Tang et al. [33], Liu et al. [18], Liu et al. [19], and Arora and Chaganty [5] studied the statistical inference for the ZOIP model. Melkersson and Rooth [23] proposed a zero–two inflated Poisson model, which accounts for a relative excess of both zero–two children in modeling complete female fertility.

In this article, we attempt to provide a generalization for an inflated regression model based on the Poisson distribution. For this purpose, we generalized the inflated points to \(0,\ldots ,k,\) for \(k=0,1,2,\ldots .\) This generalization provides various benefits for the modeling of inflated and non-inflated data. For example, this generalization includes all previous inflated regression models based on Poisson distribution. Also, this model provides a wide range of models to the researcher, who can choose the most appropriate ones according to the data. In summary, the originality of this paper lies in the development of the family of inflated Poisson distribution and its application to generalized linear models. This generalization is significant because it gives the researcher access to the entire family of Poisson-based inflated distributions (the most used family in discrete inflated distributions) at a single model. Thus researcher chooses the appropriate model for the data set by choosing the appropriate value of k according to the inflation at each point or any number of points. Besides, with other choices of k and distribution weights, new models can also be introduced. Selecting the appropriate value for k might be a challenge when using the ZKIP models. An effective approach for selecting the correct value for k in the ZKIP models is described in order to address this issue. Section 7 provides an explanation of this algorithm along with useful examples.

Therefore, the rest of the paper is organized as follows. In Sect. 2, we introduce the zero to k inflated Poisson (ZKIP) distribution and some special cases. Estimation by the maximum likelihood (ML) method and standard errors of the ML estimates are outlined in Sect. 3. Section 4 deals with the regression ZKIP model and the ML estimation, EM algorithm, and hypotheses testing for this model. In Sect. 5, the method of the randomized quantile residual (RQR) for the adequacy of the proposed model is introduced. Simulations are conducted in Sect. 6 to assess the usefulness of this model. In Sect. 7, two real data sets are used to demonstrate the flexibility and superiority of the proposed model against the existing ones. Finally, in Sect. 8, conclusions are given.

2 Proposing the New Model

We can build the zero to k inflated distribution with introducing the function g(y) as

where f(y) is a discrete distribution, say Poisson, \(0 \le w_{i}, i=0,\ldots ,k,\) \(\eta ({\textbf{w}})=\sum _{i=0}^{k} w_{i}\) and \(0\le \eta ({\textbf{w}}) \le 1.\) By substituting the Poisson probability mass function (PMF) \(f(y)=\exp (-\lambda )\lambda ^y/y!,\) \(y=0, 1, \ldots ,\) into Equation (1), we have

where \({\textbf{w}}=(w_{0},\ldots ,w_{k}).\) The discrete random variable Y with the PMF (2) is called to follow the ZKIP distribution and denoted by \(Y\sim \)ZKIP\(({\textbf{w}},\lambda ).\) Here, some special cases of the family of ZKIP models are given as follows:

-

\(k=0\) \(\rightarrow \) ZIP distribution [9, 24, 38] and [32].

-

\(k=1\) \(\rightarrow \) zero–one inflated Poisson distribution [17, 19, 33, 39], and [13].

-

\(k=2\) \(\rightarrow \) zero–one–two inflated Poisson distribution [32].

-

\(k>1, w_{1}=w_{2},\ldots ,w_{k-1}=0\) \(\rightarrow \) zero and k inflated Poisson distribution [5, 23, 30].

If \(Y \sim \)ZKIP\((y;\,{\mathbf{w}},\lambda ),\) then the moment generating function is obtained from Eq. (2) as

Equation (3) gives the expectation and variance of the random variable Y as

Figure 1 shows PMF of the ZKIP distribution for \(\lambda =1\) and some selected values of k and \({\textbf{w}}.\)

3 Estimation

Let \({\textbf{Y}}=(Y_1,\ldots ,Y_n)\) be a random sample arising from the ZKIP distribution. In this section, we study the problem of estimating unknown parameter vector \({\boldsymbol{\theta }} = ({\textbf{w}},\lambda )\) on the basis of \({\textbf{Y}}.\) Let

We can rewrite PMF (2) as

where \(I(y>k)=1-\sum _{i=0}^{k} I_{i}(y).\) Thus, the likelihood function (LF) of the observed sample \({\textbf{Y}}={{\textbf{y}}}\) reads

Then the logarithm of the LF (LLF) is

From Eq. (8), the ML estimates are derived by solving the following equations with respect to the parameters:

where

Since there is no closed-form solution for this system of equations, we may use numerical methods to estimate the parameters. To do this, we need to derive the elements of the observed information, which are given by

where

and

For the problems of interval estimation and testing statistical hypotheses, the Fisher information (FI) matrix is useful. The FI matrix \(({\texttt {I}}(\Theta )={\texttt {I}}_{ij}, {i,j=0,\ldots ,k+1}),\) obtained from the observed information matrix by taking the expected values of each entry, is given as follows:

If \(\theta \) is the arbitrary parameter of model and \({\hat{\theta }}\) is the ML estimates for this parameter, then from Lehmann et al. [15], we have

Therefore, we can use asymptotic distribution (12) to construct an asymptotic confidence interval and hypotheses testing for the parameters of the proposed model (see Lehmann et al. [15]) for more details).

4 ZKIP Regression Model

In Sect. 2, we introduce and motivate the ZKIP distribution. Recall that the PMF of the ZKIP distribution is

where \(P_{\lambda }(y) = \exp (-\lambda )\lambda ^{y}/y!\) for \(y=0, 1, \ldots .\) So the ZKIP is a distribution with \(k+2\) parameters \({\textbf{w}}=(w_{0},\ldots ,w_{k})\) and \(\lambda .\) In fact, it is a mixture of \(k+2\) distributions. The first distribution is degenerate at zero with weight \(w_{0},\) and the second is degenerate at one with weight \(w_{1},\) and the same goes for up to k. Finally, the \((k+2)\)th the distribution is the Poisson with mean \(\lambda \) with weight \((1-\eta ({\textbf{w}})).\)

Suppose that we have a vector \({\textbf{y}}=(y_{1},\ldots ,y_{n})\) of n independent count responses from a ZKIP distribution. We assume that, associated with each \(y_{i},\) the vector of covariates \(({\textbf{x}}_{i}^{T})\) has been observed. The layout of the observed data is shown in Table 1.

From (13), the LF of the available data in Table 1 can be rewritten as

where \({\boldsymbol{\lambda }}=(\lambda _{1},\ldots ,\lambda _{n})\) and \(P_{\lambda _j}(y_j) = \exp (-\lambda _{j})\lambda _{j}^{y_{j}}/y_{j}!,\) \((y_{j} \ge 0).\) To connect the parameters with the covariates, we follow the standard generalized linear model (GLM) framework for the multinomial distribution;\, see Agresti [2] for further reading. The \(k+2\) mixing distributions can be viewed as \(k+2\) nominal categories. Thus, the probabilities of the \(k+2\) (degenerate(0), degenerate(1), \(\ldots ,\) degenerate(k), Poisson) categories are \(w_{0}, w_{1}, \ldots , w_{k},\) \((1-\eta ({\textbf{w}})),\) respectively. Following the GLM baseline category logit model for the multinomial, let

Here, we treat the Poisson distribution as the baseline category, and thus we have \(((k+2) - 1) = k+1\) equations for the other \(k+1\) categories. As in log-linear models, the ZKIP regression model assumes that the Poisson parameter \(\lambda _{i}\) is a loglinear function of the covariates;\, that is,

where \({\boldsymbol{\beta }} = (\beta _{1},\ldots ,\beta _{p})^{T}\) is a p-dimensional unknown regression parameters vector. For the sake of brevity, we assume that the parameters vector \({\boldsymbol{\gamma }}= (\gamma _{0},\ldots ,\gamma _{k})\) is constant. The generalization where these \(k+1\) parameters are functions of the covariates is straightforward. Thus, the parameters of the ZKIP regression model are \({\boldsymbol{\beta }}\) and \({\boldsymbol{\gamma }}.\) In what follows, the problems of estimating parameters and hypotheses testing are discussed in detail.

4.1 Estimation of Regression Parameters

In the following, we study methods for estimating the parameters of the ZKIP regression model. Two popular methods are the ML and EM methods. The ML method involves optimizing the LF (14) or the logarithm of the LF with respect to the unknown parameters \({\boldsymbol{\beta }}\) and \({\boldsymbol{\gamma }}.\) By substituting the reparameterizations (15) into the LF (14), we can rewrite (8) as follows:

where \(\log (\lambda _{j})={\textbf{x}}_{j}^{T}{\boldsymbol{\beta }}\) and \(\gamma ^*=1/\{1+\sum _{l=0}^{k} \exp (\gamma _{l})\} .\) The ML estimates can be obtained by maximizing the LLF (16) directly with respect to the parameters. Alternatively, one can take the partial derivatives of the LLF and solve the \((k+2)\) score equations as follows:

An alternative and popular method for parameter estimation is the EM approach. The EM approach treats the observed data \({\textbf{y}}=(y_{1},\ldots ,y_{n})\) as a part of complete data that includes \({\textbf{z}}=({\textbf{z}}_{1},\ldots ,{\textbf{z}}_{n}),\) which is regarded as missing. Here each \({\textbf{z}}_{i}=(z_{i0},\ldots ,z_{i(k+1)})\) is a \(k+2\) component vector with PMF (17). By the definition of latent variable \({\textbf{z}},\) we have

where \(w_{k+1}=(1-\eta ({\textbf{w}})).\)

Then, the conditional PMF of \(y_{i}\) given \({\textbf{z}}_{\textbf{i}}\) is calculated. Thus, the joint distribution of the observed and missing data, which is given by the ZKIP distribution, is a mixture of Poisson with \(k+1\) degenerate distributions at zero to k. Consider a latent variable \({\textbf{z}}=(z_{0},\ldots ,z_{k+1}),\) which is distributed as a multinomial with parameters \((1,w_{0},\ldots ,w_{k+1}).\) Note that \({\textbf{z}}\) takes values \((0,\ldots ,0,1_{i},0,\ldots ,0)\) with probability \(w_{i},\) \(i=0,\ldots ,(k+1).\) That is,

Thus the conditional distribution of Y given \({\textbf{z}}=(z_0, \ldots , z_{k+1})\) is

Finally, the joint PMF of \((Y, {\textbf{z}})\) is obtained from (17) and (19) as

Therefore from (20), the complete LF of the ZKIP model is

By (15), the LLF of the complete data \(({\textbf{y}}, {\textbf{z}})\) reads

For \(w_{1}=\cdots =w_{k}=0,\) the ZKIP is reduced to the ZIP model. From (22), the LLF of the ZIP for the complete data is derived as

Lambert [14] used Eq. (22) as the LLF of the complete data for the ZIP model to obtain the EM estimates.

We now proceed to describe the EM algorithm (Dempster et al. [8];\, Wu [35]) for the ZKIP model. The first step in the EM algorithm involves selecting some initial values for the unknown parameters. The choice of the initial values is important for the convergence of the algorithm. An incorrect choice of the initial values could result in slow convergence or breakdown of the algorithm. We recommend using the proportions of zeros, \(\ldots ,\) k’s, respectively, in the observed data as initial values for the parameters \(w_{0},\ldots ,w_{k}\) and use the relations (15) to get initial values \(\gamma _{00},\ldots ,\gamma _{k0},\) for the parameters \(\gamma _{0},\ldots ,\gamma _{k},\) respectively. The next step involves filling the latent values \({\textbf{z}}_{\textbf{i}}\) by their expectations, which is the E-step. We will use the conditional expected values of \(E({\textbf{z}}|{\textbf{y}})\) given in Table 2 to generate \({\textbf{z}}_{\textbf{i}}\)’s.

We use Table 2 to estimate the missing values in the expectation step of the EM algorithm as follows:

For the maximization step in the EM algorithm, we solve the following score equations instead of maximizing the complete LF directly:

where \({\hat{z}}_{(k+1)j}=1-\sum _{i=0}^{k}{\hat{z}}_{ij}.\) In summary, the EM algorithm to estimate the parameters \((\gamma _{0},\ldots ,\gamma _{k})\) and the regression parameter \({\boldsymbol{\beta }}\) for the ZKIP regression model is as follows.

-

1.

Select initial values \({\boldsymbol{\beta}}_{{\bf 0}},\) \((\gamma _{00},\ldots ,\gamma _{k0})\) for the parameters \({\boldsymbol{\beta }}\) and the vector of parameters \((\gamma _{0},\ldots ,\gamma _{k}),\) respectively.

-

2.

E-step: Estimate \({\hat{z}}_{ij}\)’s, \(i=0,\ldots ,k\) using Eqs. (23) and (24).

-

3.

M-step: Solve Eqs. (25) and (26) and obtain updated estimates \({\boldsymbol{\beta}}_{{\bf 1}},\) \((\gamma _{01},\ldots ,\gamma _{k1}).\)

-

4.

Repeat E-step and M-step until the parameter estimates converge.

In the next subsection, we discuss how to obtain the standard errors of the estimates obtained by the EM algorithm.

4.2 Standard Errors for the EM Algorithm

The most commonly used method to get the standard errors in the mixture models is to compute the matrix of partial derivatives of the LLF for the observed data, that is, to calculate the information matrix from the observed data. Lambert [14] used this method for computing the standard errors for the ZIP regression model. Lin and Tsai [17] used the Hessian matrix to get the standard errors for the zero–k inflated Poisson model without actually computing second-order partial derivatives of the LLF. Recall that the Hessian matrix comes out as a byproduct of the nonlinear optimization methods in the common statistical packages.

To compute the standard errors of the estimates obtained by the EM algorithm, we follow the approach described by Louis [22]. The relation between the likelihood of the complete, observed, and missing data is given by

where \({\textbf{y}}\) and \({\textbf{z}}\) stand for the observed and missing data, respectively. By taking the logarithm from Equation (27), we get

where \(\ell _{obs}({\textbf{w}},{\boldsymbol{\lambda }}|{\textbf{y}})=\log \left\{ L_{obs}({\textbf{w}},{\boldsymbol{\lambda }}|{\textbf{y}}) \right\} ,\) \(\ell _{comp}({\textbf{w}},{\boldsymbol{\lambda }}|{\textbf{y}},{\textbf{z}})=\log \left\{ L_{comp}({\textbf{w}},{\boldsymbol{\lambda }}|{\textbf{y}},{\textbf{z}}) \right\} \) and \(\ell _{miss}({\textbf{w}},\) \({\boldsymbol{\lambda }}|({\textbf{z}}|{\textbf{y}}))=\log \{L_{miss}({\textbf{w}},\) \({\boldsymbol{\lambda }}|({\textbf{z}}|{\textbf{y}}))\}.\) Taking second-order partial derivatives from Eq. (28), the information matrices for the complete, observed, and missing data satisfy the following identity:

where the matrices \(I_{comp}\) and \(I_{miss}\) are, respectively, the Hessian matrix of the LLF of complete data and the LLF of missing data. Since the right hand side of Eq. (29) depends on the missing data, Louis [22] suggested to take the expected value of the missing data given the observed. This gives us the identity

In other words, Louis [22] estimated of the observed information matrix by

Note that the LLF of the complete data for the ZKIP regression model is given by Eq. (22), and the corresponding first-order derivatives are shown in Eqs. (25) and (26). The elements of the matrix \(E(I_{comp}|{\textbf{y}})\) are the expected values of the negative of second-order partial derivatives of the complete LLF (22) given by

To see this, from Eq. (15), we have

and then Eq. (22) yields

From Eq. (32) we conclude that

After some algebraic manipulations and the value of \(E({\textbf{z}}|{\textbf{y}})\) from Table 2, the following result is concluded

Similarly, \(E\left[ -{\partial ^{2} \ell _{comp}}/{\partial \gamma _{l} \partial \gamma _{s} }\right] \) and \(E\left[ -{\partial ^{2} \ell _{comp}}/{\partial {\boldsymbol{\beta }}\partial {{\boldsymbol{\beta }}}^{\varvec{T}}}\right] \) are obtained. The LLF of the missing data for the ZKIP regression model is

Finally, the elements of the matrix \(E(I_{miss}|{\textbf{y}})\) are the negative of the expected value of the second-order derivatives of (35). These are given by

4.3 Hypothesis Testing

Testing the impact of the jth covariate on the count response is equivalent to testing \(H_0: \beta _j= 0\) vs. \(H_1: \beta _j\ne 0.\) This hypothesis test is straightforward and can be done using the standard Wald statistic, \( z={\hat{\beta }}_i/SE({\hat{\beta }}_i),\) which has asymptotically standard normal distribution under the null hypothesis \(H_0.\) Here, \(SE(\hat{\beta _j})\) minus twice the LLF stands for the standard error of the estimate \(\hat{\beta _j}.\) An alternative approach for testing \(H_0: \beta _j = 0\) is to use the generalized likelihood ratio test statistic defined by

which has asymptotically the chi-Square distribution with one degree of freedom, where \(\widetilde{{\boldsymbol{\beta }}}\) and \(\widetilde{\boldsymbol{\gamma }}\) denote the ML estimates under the hypothesis \(\beta _j=0\) and \({\hat{\beta }}\) and \(\hat{\boldsymbol{\gamma }}\) are the ML estimates under the ZKIP model.

Since \(0 \le w_i \le 1,\ (i = 0,\ldots ,k),\) the null hypothesis \(H_0: w_{i_1}=\cdots =w_{i_r}= 0,\) \(0\le i_r \le k,\) corresponds to testing the parameters to check the necessity of their presence in the model. In this case, the regularity conditions are not met. That is, the standard asymptotic theory for the LRT statistic (36) is not applicable, and in fact, its asymptotic distribution is a mixture of chi-Square distributions.

5 The Model Diagnosis

The model diagnosis is an essential step to ensure that a fitted model is adequate for the observed data set. However, diagnosing counts models is still a challenging research problem. Pearson and deviance residuals are often used in practice for diagnosing counts models, despite wide recognition that these residuals are far from normality when applied to count data. RQRs, proposed by Dunn and Smyth [10] and Park et al. [26], are used to overcome the above-mentioned problems. The key idea of the RQR is to randomize the lower tail probability into a uniform random number between the discontinuity gap of the cumulative density function (CDF). It can be shown that the RQRs are normally distributed under the true model. To the best of our knowledge, the RQR has not been applied to the residual analyses for zero-inflated or modified mixed effects models.

To do this, we follow the Dunn and Smyth [10] approach. The RQR inverts the fitted distribution function for each response value and finds the equivalent standard normal quantile. Let \(G(y;\,{\textbf{w}},\lambda )\) denote the CDF for random variable y. If the CDF is continuous, then \(G(y_i;\, {\textbf{w}},\lambda )\) is uniformly distributed on the unit interval. RQRs can thus be defined as

where \(\Phi ^{-1}(\cdot )\) is the quantile function of the standard normal distribution. However, if the CDF is discrete, then randomization is added to modify \(q_i\) in Eq. (37). To be more specific, let \(g(y_i;\, {\textbf{w}},\lambda )\) denote the PMF of y. The CDF can be redefined as

where U is a uniform random variable on [0, 1], and \(G(y^-;\, {\textbf{w}},\lambda )\) is the lower limit of G in y. When G is discrete, we let \(a_i = lim_{y\rightarrow y_i^-} G(y;\, \hat{{\textbf{w}}}_i,{\hat{\lambda }}_i)\) and \(b_i = G(y_i;\, \hat{{\textbf{w}}}_i,{\hat{\lambda }}_i).\) Then, the randomized quantile residual is defined by

where \(G^*_i\) is a uniform random variable on the interval \((a_i,b_i],\) and \(q_i \sim N(0,1).\) Here, N(0, 1) stands for the standard normal distribution. Therefore, the only information that is required for calculating RQRs is the CDF of the response variable. In numerical analysis, we will use RQRs to investigate the adequacy of the used models.

6 Simulation Studies

In this simulated scenario, we have evaluated the efficiency of the ZKIP regression model with the link function \(\log (\lambda )=\beta _0 +\beta _1 {\textbf{x}}_{1}+\beta _2 {\textbf{x}}_{2},\) where \(\beta _0=0.5,\) \(\beta _1=\beta _2=-0.05,\) \(w_0=w_1=w_2=w_3=0.2\) and \({\textbf{x}}_{1}\) and \({\textbf{x}}_{2}\) are, respectively, generated from N(0, 1) and the Bernoulli distribution with parameter 0.5 and \(k=0,1,2,3.\) In each step, we select a sample with sizes of \(n=100, 500, 1000\) from ZKIP regression \((k=0,1,2,3)\) models and estimate the parameters of the assumed models using the ML method. We repeat this step 10,000 times. If the model and its related definitions work correctly, the estimates obtained for the parameters of this models should be close to their real values. Also, as the sample size rises, the means of bias and standard error should be decreased. This assertions are supported by Table 3’s results.

For the next simulated scenario, we have selected a sample with sizes \(n=100, 500, 1000,\) from Z3IP regression model (with the parameters similar to Table 3). Then, we have fitted the regression ZKIP with \(k=0,1,2\) and Poisson models, for the same sample. As anticipated, the proposed model performed admirably results. The regression Z3IP model shows the best outcomes based on the comparative criteria in Table 4.

7 Real Data Analysis

To assess the performance of the proposed ZKIP model and the corresponding regression version, two real data sets are analyzed.

7.1 Example 1 (Alcohol Consumption)

DeHart et al. [7] described a study in which “moderate to heavy drinkers” (at least 12 alcoholic drinks/week for women, 15 for men) were recruited to keep a daily record of each drink that they consumed over a 30-day study period. Participants also completed a variety of rating scales covering daily events in their lives and items related to self-esteem. Among the researchers’ hypotheses, the negative events, particularly those involving romantic relationships, are suspected to be related to the amount of alcohol consumed, especially among those with low self-esteem.

In this example, we consider numall (number of alcoholic beverages, or “drinks,” consumed in one day) as a response variable. In Tables 5 and 6 , we fit the ZKIP distribution to this data set. In the following, negevent (an index for combining the total number and intensity of negative events experienced during the day) and nrel (a measure of negative relationship interactions) are considered covariate variables. Tables 7 and 8 show the fitted regression ZKIP models.

The results in Tables 5 and 6 show that the ZKIP regression model with \(k=6\) performs well and dominates the rest models. Tables 7 and 8 also confirm that the regression ZKIP with \(k=6\) is the best model among the considered models. In Table 9, we consider the problem of the hypothesis testing \(H_0 :w_6 = 0\) against the alternative \(H_1 :w_5> 0\) and \(H_1 :w_7 = 0,\) with significance level \(\alpha = 0.05.\) The asymptotic distribution is \(0.5\chi ^{2}_0 + 0.5\chi ^{2}_1,\) where \(\chi ^{2}_0 \equiv 0,\) for the Alcohol consumption data set (for more details, see [29]). We see that \(k=6\) is the best choose for the ZKIP model.

With these explanations, an algorithm can be presented to choose the appropriate value of k. We start fitting the inflated model from \(k=0\) and continuing this fitting until k, so there is no significant difference between the fitting of k and the larger \(k+1.\)

7.2 Example 2 (Lung Data Set)

This data set is about survival in those with advanced lung cancer from the “North Central Cancer Treatment Group” (Loprinzi et al. [21]). Performance scores rate how well the patient can perform usual daily activities. The Eastern Cooperative Oncology Group (ECOG) performance score is measured by the physician as 0 = asymptomatic, 1 = symptomatic but completely ambulatory, 2 = in bed \(<50\%\) of the day, 3 = in bed > 50% of the day but not bedbound, 4 = bedbound (ph.ecog). We consider the ph.ecog as the response variable and the Age and Sex of patients as covariates. The fitted ZKIP model for the response variable in the Lung data set is shown in Tables 10 and 11 . It is observed that the ZKIP model with \(k=2\) is the best one. Tables 12 and 13 confirm the same results in the regression ZKIP model. Table 14 also introduces \(k=2\) as an appropriate value for the regression ZKIP model for this data set.

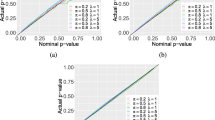

Figures 3 and 5 for, respectively, real data sets 1 and 2 show the RQRs of the regression ZKIP model for all observations. For the best k-obtained regression ZKIP fitted, these figures approximately show a random scatter around zero for RQR in the three real data sets, which seems to be reasonable. Figures 2 and 4 also show that the RQRs follow approximately the N(0, 1) distribution for the obtained k (Figs. 2, 3, 4, 5).

8 Conclusions

Previous research about the inflated Poisson distribution has suggested that inflation may occur at one or two points. By introducing the ZKIP distribution, in addition to covering all previous studies, we allowed the inflated points to be three or even more. Also, in this work, we studied the properties of the ZKIP distribution and the ZKIP regression model. The flexibility of the ZKIP distribution (which was perfectly illustrated with real data examples) shows that it may be used as a suitable model for real data set analysis with discrete responses. This flexibility was also observed in the regression version of the ZKIP distribution.

The introduced model can be used in decision trees, random forests and statistical quality control or any model that can be used for inflated discrete data. Also, this model can be developed for the neutrosophic statistics [3, 4, 31].

References

Agarwal, D.K., Gelfand, A.E., Citron-Pousty, S.: Zero-inflated models with application to spatial count data. Environ. Ecol Stat. 9, 341–355 (2002)

Agresti, A.: Analysis of Ordinal Categorical Data, 3rd edn. Wiley, Hoboken (2013)

AlAita, A., Aslam, M.: Analysis of covariance under neutrosophic statistics. J. Stat. Comput. Simul. 93(3), 397–415 (2023)

AlAita, A., Talebi, H., Aslam, M., Al Sultan, K.: Neutrosophic statistical analysis of split-plot designs. Soft Comput. 27, 7801–7811 (2023)

Arora, M., Chaganty, N.R.: EM estimation for zero-and k-inflated Poisson regression model. Computation 9(9), 94 (2021)

Cohen, A.C.: Estimating the parameters of a modified Poisson distribution. J. Am. Stat. Assoc. 55, 139–143 (1960)

DeHart, T., Tennen, H., Armeli, S., Todd, M., Affleck, G.: Drinking to regulate romantic relationship interactions: the moderating role of self-esteem. J. Exp. Soc. Psychol. 44, 527–538 (2008)

Dempster, A.P., Laird, N.M., Rubin, D.B.: Maximum likelihood estimation from incomplete data via the EM algorithm (with discussion). J. R. Stat. Soc. Ser. B (Stat. Methodol.) 39, 1–38 (1977)

Dikheel, T.R., Jouda, H.A.: Zero inflation Poisson regression estimation by using Genetic Algorithm (GA). J. Surv. Fish. Sci. 10(3S), 5257–5268 (2023)

Dunn, P.K., Smyth, G.K.: Randomized quantile residuals. J. Comput. Graph. Stat. 5(3), 236–244 (1996)

Jansakul, N., Hinde, J.P.: Score tests for zero-Inflated Poisson models. Comput. Stat. Data Anal. 40, 75–96 (2002)

Jung, B.C., Jhun, M., Lee, J.W.: Bootstrap tests for overdispersion in a zero-inflated Poisson regression model. Biometrics 61, 626–629 (2005)

Kang, Y., Wang, S., Wang, D., Zhu, F.: Analysis of zero-and-one inflated bounded count time series with applications to climate and crime data. TEST 32(1), 34–73 (2023)

Lambert, D.: Zero-inflated Poisson regression, with an application to defects in manufacturing. Technometrics 34, 1–14 (1992)

Lehmann, E.L., Romano, J.P., Casella, G.: Testing Statistical Hypotheses. Springer Texts in Statistics, 3rd edn. Springer, New York (2005)

Lim, H.K., Li, W.K., Yu, P.L.: Zero-inflated Poisson regression mixture model. Comput. Stat. Data Anal. 71, 151–158 (2014)

Lin, T.H., Tsai, M.H.: Modeling health survey data with excessive zero and K responses. Stat. Med. 32(9), 1572–1583 (2013)

Liu, W.C., Tang, Y.C., Xu, A.C.: A zero-and-one inflated Poisson model and its application. Stat. Interface 11, 339–351 (2018)

Liu, W.C., Tang, Y.C., Xu, A.C.: Zero-and-one-inflated Poisson regression model. Stat. Pap. 62, 915–934 (2021)

Long, D.L., Preisser, J.S., Herring, A.H., Golin, C.E.: Zero-inflated Poisson regression with application to defects in manufacturing. Stat. Med. 33, 5151–5165 (2014)

Loprinzi, C.L., Laurie, J.A., Wieand, H.S., Krook, J.E., Novotny, P.J., Kugler, J.W., Bartel, J., Law, M., Bateman, M., Klatt, N.E.: Prospective evaluation of prognostic variables from patient-completed questionnaires. North Central Cancer Treatment Group. J. Clin. Oncol. 12(3), 601–607 (1994)

Louis, T.A.: Finding the observed information matrix when using the EM algorithm. J. R. Stat. Soc. 44(2), 226–233 (1982)

Melkersson, M., Rooth, D.O.: Modeling female fertility using inflated count data models. J. Popul. Econ. 13(2), 189–203 (2000)

Motalebi, N., Owlia, M.S., Amiri, A., Fallahnezhad, M.S.: Monitoring social networks based on Zero-inflated Poisson regression model. Commun. Stat. Theory Methods 52(7), 2099–2115 (2023)

Neelon, B., Chung, D.J.: The LZIP: a Bayesian latent factor model for correlated zero-inflated counts. Biometrics 73, 185–196 (2017)

Park, K., Jung, D., Kim, J.M.: Control charts based on randomized quantile residuals. Appl. Stoch. Models Bus. Ind. 36(4), 716–729 (2020)

Ridout, J., Hinde, J., Demetrio, G.B.: A score test for testing a zero-inflated Poisson regression model against zero-inflated negative binomial alternatives. Biometrics 57, 219–223 (2001)

Saffari, S.E., Adnan, R.: Zero-inflated Poisson regression models with right censored count data. Matematika 27, 21–29 (2011)

Self, S.G., Liang, K.Y.: Asymptotic properties of maximum likelihood estimators and likelihood ratio tests under nonstandard conditions. J. Am. Stat. Assoc. 82(398), 605–610 (1987)

Serra, I.J.A., Polestico, D.L.L.: On the zero and k-inflated negative binomial distribution with applications. Adv. Appl. Stat. 88(1), 1–23 (2023)

Smarandache, F.: Introduction to neutrosophic statistics (2014). https://doi.org/10.13140/2.1.2780.1289

Sun, Y., Zhao, S., Tian, G.L., Tang, M.L., Li, T.: Likelihood-based methods for the zero-one-two inflated Poisson model with applications to biomedicine. J. Stat. Comput. Simul. 93(6), 956–982 (2023)

Tang, Y.C., Liu, W.C., Xu, A.C.: Statistical inference for zero-and-one-inflated Poisson models. Stat. Theory Relat. Fields 1, 216–226 (2017)

Vandenbroek, J.: A score test for zero inflation in a Poisson distribution. Biometrics 51, 738–743 (1995)

Wu, C.F.J.: On the convergence properties of the EM algorithm. Ann. Stat. 11, 95–103 (1983)

Yang, Y., Simpson, D.G.: Conditional decomposition diagnostics for regression analysis of zeroinflated and left-censored data. Stat. Methods Med. Res. 21, 393–408 (2012)

Yoneda, K.: Estimations in some modified Poisson distributions. Yokohama Math. J. 10, 73–96 (1962)

Zandi, Z., Bevrani, H., Belaghi, R.A.: Shrinkage estimation in the zero-inflated Poisson regression model with right-censored data. Commun. Stat. Theory Methods (2023). https://doi.org/10.1080/03610926.2023.2196751

Zhang, C., Tian, G.L., Ng, K.W.: Properties of the zero-and-one inflated Poisson distribution and likelihood-based inference methods. Stat. Interface 9, 11–32 (2016)

Zhu, H., Luo, S., DeSantis, S.M.: Zero-inflated count models for longitudinal measurements with heterogeneous random effects. Stat. Methods Med. Res. 26, 1774–1786 (2017)

Acknowledgements

The authors are grateful to the editor-in-chief and the anonymous referees for their helpful suggestions.

Funding

This research received no specific grant from any funding agency in the public, commercial, or nonprofit sectors.

Author information

Authors and Affiliations

Contributions

HS and MD contributed to the design and implementation of the research, to the analysis of the results and to the writing of the manuscript.

Corresponding author

Ethics declarations

Conflict of Interest

The authors declare they have no conflicts of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Saboori, H., Doostparast, M. Zero to k Inflated Poisson Regression Models with Applications. J Stat Theory Appl 22, 366–392 (2023). https://doi.org/10.1007/s44199-023-00067-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s44199-023-00067-3