Abstract

The study of system safety and reliability has always been vital for quality and manufacturing engineers in many fields, and continuous probability distributions are generally proposed for it. Bivariate and multivariate continuous distributions are the candidates when more than one characteristic of the system is studied. In this article, an attempt is made to address the case in which reliability systems generate bivariate, correlated count datasets. The bivariate generalized geometric distribution (BGGD) is believed to be a potential candidate for modeling such datasets. The Bayesian approach to data analysis can accommodate the uncertainty associated with the model parameters of interest through uninformative and informative priors. A real-life bivariate correlated dataset is analyzed in the Bayesian framework, and the results are compared with those produced by the classical approach. Posterior summaries, including posterior means, highest density regions, and predicted expected frequencies of the bivariate data, are evaluated. Different information criteria are evaluated to compare the inferential methods under study. The entire analysis is carried out using a Markov chain Monte Carlo (MCMC) set-up of data augmentation implemented through WinBUGS.

1 Introduction

Reliability and system engineers often have to deal with uncertainties in systems where more than one study variable is of interest. Medical experts face similar situations when patients' lives are at stake owing to the failure of vital organs such as the heart, brain, kidneys, liver, and lungs. The choice between discrete and continuous, and between bivariate and multivariate, distributions depends on the nature and number of the study variables. A vast literature exists on the construction of probability distributions; see, for example, [1]. No hard and fast criteria have been established for constructing probability distributions. More details on this issue may be found in [1,2,3].

If bivariate continuous distributions are to be used, we could choose from the parametric distributions suggested in the literature for analyzing bivariate lifetime data [4,5,6,7,8]. As our study concerns the reliability of systems generating bivariate count data, the most suitable distribution for modeling such datasets is believed to be the bivariate generalized geometric distribution (BGGD) proposed by [9]. Many bivariate distributions for continuous random variables have been introduced in the literature for use in data analysis, especially in applications to survival data in the presence of censored data and covariates (see, for example, [10,11,12,13,14,15,16,17,18,19,20]). A recent study is [21]. By contrast, the use of bivariate distributions for discrete survival data is not very common in the literature. Some discrete bivariate distributions have been introduced, such as the bivariate geometric distribution of [4] and the bivariate geometric distribution of [22], but these discrete distributions are still not very popular in the analysis of bivariate lifetime data, especially in the presence of censored data and covariates (see also [4, 23,24,25,26,27,28]).

Classical methods are frequently used in the analysis, but they suffer from certain drawbacks. Frequentists consider parameters to be unknown fixed quantities; they rely on the current data alone and so deprive the results of any prior information available about the parameters of interest. Bayesians, however, treat the parameters as random quantities and hence assign a probability distribution to them. Bayesian analysis is an inferential technique that estimates the model parameters by taking both the current data and the prior information about the parameters into account. As a result, we get a posterior distribution that is believed to average the current data and the prior information. The posterior distribution thus derived is the cornerstone and workbench of the Bayesians: inference about the parameters, usually through numerical procedures, is based entirely on it. A good review of the advantages of using Bayesian methods may be seen in [29]. Posterior distributions are often complex multidimensional functions that require Markov chain Monte Carlo (MCMC) methods to draw results [30,31,32,33,34]. MCMC is very popular for analyzing bivariate continuous or discrete random variables in the presence of censored data and covariates (see, for example, [29, 35,36,37,38,39,40]). Recently, [41] considered the weighted bivariate geometric distribution, and [42] used the q-Weibull distribution in classical as well as Bayesian frameworks. In recent years, the use of MCMC methods has gained much popularity [43, 44, 45].

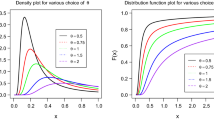

It has been established that the BGGD is a good choice for modeling and analyzing reliability count data arising in medicine and engineering. The probability mass function (pmf) of the BGGD is given as

for \(x=0,1,\dots\), \(y=0,1,\dots\), \(0<{\theta }_{1}<1\), \(0<{\theta }_{2}<1\), \(\alpha >0\), \({\alpha }^{\prime}=1-\alpha\). The cumulative distribution function (CDF) is

Here \(\alpha\), \({\theta }_{1}\) and \({\theta }_{2}\) are unknown parameters that control the behavior of the datasets emerging from the BGGD. Estimating the unknown parameters is the ultimate goal of inferential statistics.

Owing to the variety of applications of the BGGD, the purpose of the present study is the efficient estimation of its pmf and CDF. The authors of [9] have recently worked out the classical maximum likelihood estimators for the BGGD. To avail ourselves of the aforesaid advantages, we estimate the parameters of the BGGD in the Bayesian framework. We have used MCMC methods to draw results and applied different model selection criteria, namely ML, AIC, AICC, BIC (also known as the Schwarz criterion), and HQC, to compare the methods under consideration.

2 The Frequentist Approach of Statistical Analysis

In statistical terminology, the data-generating pattern of any system or model depends on system-specific characteristics called parameters. The data generated from the model are therefore believed to carry information about the parameter values that caused the system to generate the dataset. The uncertainty associated with the data values is defined in terms of the frequencies with which those values emerge again and again from the system under study. The objective of the analysis is to infer the characteristics of the system or model from relevant data collected randomly. The frequentist approach is regarded as the default in a variety of areas of science. Commonly used frequentist methods of statistical inference include uniformly minimum variance unbiased estimation, maximum likelihood (ML) estimation, percentile estimation, least squares estimation, weighted least squares estimation, etc. We report only the most commonly used ML estimation method, whose results will be compared with their Bayesian counterparts.

2.1 Maximum Likelihood (ML) Estimation

The likelihood function gives the probability that the model, system or distribution under study generates the observed sample. The frequentist method of maximum likelihood estimation, proposed by [46], calls for choosing those values of the parameters that maximize the probability of the observed sample. We generally opt for algebraic maximization of the likelihood function to find the ML estimates, but we may also evaluate the probability of the observed sample over a range of candidate parameter values and take as estimates the values that maximize it.

Algebraically, ML estimation may proceed as follows. Let us consider a random sample of size \(n\) of bivariate correlated data \(\left({x}_{i},{y}_{j}\right)\), for \(i=1,2,\dots ,{n}_{1}\) and \(j=1,2,\dots ,{n}_{2}\), from the BGGD \(f\left(x,y;\alpha ,{\theta }_{1},{\theta }_{2}\right)\) given in (11). The log-likelihood function \(l\left(x,y;\alpha ,{\theta }_{1},{\theta }_{2}\right)\) may be written as

Equating to zero the first partial derivatives of the log-likelihood function \(l\left(x,y;\alpha ,{\theta }_{1},{\theta }_{2}\right)\) with respect to the unknown parameters \(\alpha ,{\theta }_{1},{\theta }_{2}\) yields the normal equations, which may be solved simultaneously to get the required ML estimates. However, if the normal equations are too complex to be solved simultaneously, we have to resort to numerical methods or to direct maximization of the log-likelihood function.
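The two routes to an ML estimate described above (solving the normal equation, or scanning the log-likelihood directly) can be sketched on a deliberately simpler stand-in. The BGGD pmf is not reproduced here, so the sketch assumes a univariate geometric pmf \(P(X=x)=(1-\theta ){\theta }^{x}\), \(x=0,1,\dots\), and a small made-up sample. The function names are hypothetical, and this is not the code used in the study.

```python
import math

def geometric_loglik(theta, counts):
    """Log-likelihood of a univariate geometric pmf P(X=x) = (1-theta)*theta**x.

    This is a stand-in model (an assumption for illustration), not the BGGD.
    """
    n = len(counts)
    total = sum(counts)
    return n * math.log(1.0 - theta) + total * math.log(theta)

def ml_by_grid(counts, steps=9999):
    """Scan theta over an even grid inside (0, 1) and keep the maximizer."""
    grid = [(i + 1) / (steps + 1) for i in range(steps)]
    return max(grid, key=lambda t: geometric_loglik(t, counts))

def ml_closed_form(counts):
    """Root of the single normal equation: theta = mean / (1 + mean)."""
    mean = sum(counts) / len(counts)
    return mean / (1.0 + mean)

# Illustrative counts only (not the lens-fault data analyzed in the paper).
sample = [0, 1, 1, 2, 3, 0, 4, 2, 1, 1]
theta_grid = ml_by_grid(sample)
theta_exact = ml_closed_form(sample)
print(round(theta_grid, 4), round(theta_exact, 4))
```

For a three-parameter model such as the BGGD the same idea applies, but the grid becomes three-dimensional, which is one reason a numerical optimizer is usually preferred over an exhaustive scan.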

2.2 ML Estimation–Algebraic Approach

Let us consider the real dataset for the BGGD given in Table 8 of Appendix A1, which appears in [47,48,49], where X represents the count of surface faults and Y the count of interior faults in 100 lenses. The summary of the data is presented in Table 1, and a graphical representation is given in Fig. 1.

The observed data are positively skewed and negatively correlated. As stated in Sect. 2.1, the normal equations obtained from the observed dataset are complicated, and hence the estimates are found using numerical methods. Following [9], the ML estimates, standard errors (SE) and 95% confidence intervals for the parameters are reported in Table 2.

2.3 ML Estimation–Graphical Approach

As already discussed in Sect. 2.1, we may opt for direct maximization of the likelihood function to find the ML estimates. The ML estimation theory suggested by [46] calls for choosing those values of the parameters that maximize the probability of the observed sample, and these values are regarded as the ML estimates. This technique is used here to find the ML estimates by plotting the probabilities of the observed dataset under the BGGD at different parametric values. The resulting plots were generated in R and are displayed in Fig. 2. We observed that the highest probability is obtained at \(\alpha =2.288\), \({\theta }_{1}=0.676\), \({\theta }_{2}=0.652\) (the first of the plots in Fig. 2); hence these values may be regarded as the ML estimates.

The graph in the first cell corresponds to the ML estimates. The maximum likelihood is found to be 3.2619E-192 at the ML estimates, i.e., \(\alpha =2.288\), \({\theta }_{1}=0.676\), \({\theta }_{2}=0.652\). It is also interesting to note that the highest ordinate, 0.02822, appears at the data pair (\(x=2,y=1\)) at the ML estimates, and the value of the log-likelihood is found to be -432.957.

3 The Bayesian Approach of Statistical Analysis

A brief overview of this topic has already been given in Sect. 1. The Bayesian method combines prior information about the model parameters with the dataset using Bayes' rule, yielding the posterior distribution. Bayes' rule is named after Thomas Bayes, whose work on this topic was published in 1763, 2 years after his death [50]. To establish a Bayesian inference set-up, we need a model or system in the form of a probability distribution controlled by a set of parameters to be estimated, the sample dataset generated by the model or distribution, and a prior distribution based on the prior knowledge of experts regarding the parameters of interest. These elements are formally combined into the posterior distribution, which is regarded as the key distribution and workbench for the subsequent analyses. The algorithm is explained in [42].

If \(f(D|\theta )\) is the data distribution depending upon the vector of parameters \(\theta\), \(p(\theta )\) is the joint prior distribution of the vector of parameters \(\theta\), and \(L(D|\theta )\) is the likelihood function defining the joint probability of the observed sample data \(D\) conditional upon the parameter vector \(\theta\), then the posterior distribution of \(\theta\) conditional upon the data \(D\), denoted by \(f\left(\theta |D\right)\), is given by

\[ f\left(\theta |D\right)=\frac{L(D|\theta )\,p(\theta )}{\int L(D|\theta )\,p(\theta )\,d\theta }. \]

The denominator is also termed the predictive distribution of the data and is usually treated as the normalizing constant that makes the posterior a proper density. It may be omitted when evaluating the Bayes estimates but must be retained when comparing models. The posterior distribution balances the information provided by the data and the prior distribution. It is of supreme interest to Bayesians but is often of a very complex form and hence needs numerical methods to evaluate it.

3.1 The Prior Distributions

It has already been highlighted that the main difference between the frequentist and Bayesian approaches is that the latter incorporates prior information about the model parameters into the analysis. The formal way of doing so is to quantify the initial knowledge of experts in the form of a prior distribution that adequately fits the nature of the parameter and the experts’ opinion. The parameters of the prior distribution, known as hyperparameters, are elicited in the light of subjective expert opinion. A prior that is selected and elicited carefully guides the analysis well; otherwise it may be misleading.

3.1.1 Uninformative Priors

In situations where there is a lack of knowledge about the model parameters, we choose vague, diffuse or flat priors. As the BGGD is based on the set of parameters \(\alpha ,{\theta }_{1}, {\theta }_{2}\), we assume uninformative uniform priors for the parameters as follows:

where \(\alpha >0\), \(0<{\theta }_{1}<1\), \(0<{\theta }_{2}<1\), and \({h}_{i}>0\), for \(i=1,2,\dots ,6\), are the hyperparameters associated with the priors.
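One form of the uniform priors that is consistent with the six hyperparameters \({h}_{1},\dots ,{h}_{6}\) mentioned here is sketched below. The pairing of hyperparameters with parameters (a lower and an upper bound for each) is an assumption made for illustration and is not taken from the original display:

\[ p(\alpha )=\frac{1}{{h}_{2}-{h}_{1}},\ {h}_{1}<\alpha <{h}_{2};\qquad p({\theta }_{1})=\frac{1}{{h}_{4}-{h}_{3}},\ {h}_{3}<{\theta }_{1}<{h}_{4};\qquad p({\theta }_{2})=\frac{1}{{h}_{6}-{h}_{5}},\ {h}_{5}<{\theta }_{2}<{h}_{6}, \]

with \({h}_{1}<{h}_{2}\), \({h}_{3}<{h}_{4}\le 1\) and \({h}_{5}<{h}_{6}\le 1\). Under this reading, and if the three priors are taken as independent, the joint prior is the product \(p(\alpha )\,p({\theta }_{1})\,p({\theta }_{2})\), a constant on the box defined by the bounds.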

3.1.2 Informative Priors

When sufficient information is available about the model parameters, we assign informative priors to them in such a way that the priors adequately represent the knowledge available for the parameters being examined. In the present situation, we assign an exponential distribution to \(\alpha\) and independent beta distributions to \({\theta }_{1}\) and \({\theta }_{2}\) as follows:

Here again \({h}_{i}>0\), for \(i=7,8,\dots ,11\), are the hyperparameters associated with the priors, which should be elicited in the light of expert opinion. Note that the elicitation of hyperparameters is beyond the scope of this study, so we merely choose the values of the hyperparameters to be used in the subsequent Bayesian analysis.
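A form of the informative priors that matches the description (one exponential and two beta distributions, five hyperparameters in all) is sketched below. The assignment of \({h}_{7}\) to the exponential rate and of \(({h}_{8},{h}_{9})\) and \(({h}_{10},{h}_{11})\) to the two beta distributions is an assumption, as is the rate (rather than mean) parameterization of the exponential:

\[ p(\alpha )={h}_{7}\,{e}^{-{h}_{7}\alpha },\ \alpha >0;\qquad p({\theta }_{1})=\frac{{\theta }_{1}^{{h}_{8}-1}{(1-{\theta }_{1})}^{{h}_{9}-1}}{B({h}_{8},{h}_{9})};\qquad p({\theta }_{2})=\frac{{\theta }_{2}^{{h}_{10}-1}{(1-{\theta }_{2})}^{{h}_{11}-1}}{B({h}_{10},{h}_{11})}, \]

for \(0<{\theta }_{1}<1\) and \(0<{\theta }_{2}<1\), where \(B(\cdot ,\cdot )\) is the beta function. Under these assumptions the prior means are \(1/{h}_{7}\), \({h}_{8}/({h}_{8}+{h}_{9})\) and \({h}_{10}/({h}_{10}+{h}_{11})\), which gives a direct way to see what a chosen set of hyperparameter values says about each parameter.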

3.2 The Posterior Distribution

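Being specific to the estimation of the parameters of the BGGD, let the vector of parameters of interest and the data be denoted by \(\theta =(\alpha ,{\theta }_{1}, {\theta }_{2})\) and \(D=(X,Y)\), respectively. The data distribution is denoted by \(f(D|\theta )\) and the prior distribution by \(p(\theta )\). Then, using Bayes' rule, the posterior distribution, denoted by \(f(\theta |D)\), may be written as

\[ f\left(\alpha ,{\theta }_{1},{\theta }_{2}|D\right)=\frac{L\left(D|\alpha ,{\theta }_{1},{\theta }_{2}\right)\,p\left(\alpha ,{\theta }_{1},{\theta }_{2}\right)}{\int_{0}^{\infty }\int_{0}^{1}\int_{0}^{1}L\left(D|\alpha ,{\theta }_{1},{\theta }_{2}\right)\,p\left(\alpha ,{\theta }_{1},{\theta }_{2}\right)\,d{\theta }_{2}\,d{\theta }_{1}\,d\alpha }, \]

where all the notation is as already defined. The posterior distribution \(f\left(\theta |D\right)\) may also be written as a kernel density in proportional form as

\[ f\left(\theta |D\right)\propto L(D|\theta )\,p(\theta ). \]

The marginal posterior distributions \(g\left(\alpha |D\right)\), \(g\left({\theta }_{1}|D\right)\) and \(g\left({\theta }_{2}|D\right)\) of the parameters \(\alpha\), \({\theta }_{1}\) and \({\theta }_{2}\) may be found by integrating out the nuisance parameters from the posterior distribution \(f\left(\alpha ,{\theta }_{1}, {\theta }_{2}|D\right)\) as follows:

\[ g\left(\alpha |D\right)=\int_{0}^{1}\int_{0}^{1}f\left(\alpha ,{\theta }_{1},{\theta }_{2}|D\right)\,d{\theta }_{1}\,d{\theta }_{2}, \]

\[ g\left({\theta }_{1}|D\right)=\int_{0}^{\infty }\int_{0}^{1}f\left(\alpha ,{\theta }_{1},{\theta }_{2}|D\right)\,d{\theta }_{2}\,d\alpha , \]

and

\[ g\left({\theta }_{2}|D\right)=\int_{0}^{\infty }\int_{0}^{1}f\left(\alpha ,{\theta }_{1},{\theta }_{2}|D\right)\,d{\theta }_{1}\,d\alpha . \]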

3.3 Bayes Estimates

To work out the Bayes estimates of the parameters \((\alpha ,{\theta }_{1}, {\theta }_{2})\), we need to specify a loss function. A variety of loss functions are used to derive Bayes estimates. Under the well-known squared error loss function, the Bayes estimates \(\widehat{\alpha }\), \({\widehat{\theta }}_{1}\) and \({\widehat{\theta }}_{2}\) are the means of their marginal posterior distributions, and are evaluated as

\[ \widehat{\alpha }=\int_{0}^{\infty }\alpha \,g\left(\alpha |D\right)\,d\alpha ,\qquad {\widehat{\theta }}_{1}=\int_{0}^{1}{\theta }_{1}\,g\left({\theta }_{1}|D\right)\,d{\theta }_{1},\qquad {\widehat{\theta }}_{2}=\int_{0}^{1}{\theta }_{2}\,g\left({\theta }_{2}|D\right)\,d{\theta }_{2}. \]

The marginal posterior distributions are generally of complicated form and hence need numerical methods to evaluate them. Markov chain Monte Carlo (MCMC) is the numerical method most frequently used in Bayesian inference. We therefore also proceed with MCMC, using the WinBUGS package, to find the posterior summaries of the parameters of interest.

3.4 The MCMC Method

The MCMC method draws a random sample from a probability distribution by means of a random process termed a Markov chain, in which every new step depends only on the current state and, given that state, is independent of the earlier states. MCMC methods can be implemented in any standard software such as R or Python, but the software most specifically designed for Bayesian analysis is the Windows-based Bayesian inference Using Gibbs Sampling (WinBUGS) package. We have implemented WinBUGS using the following scheme.

(i) Define the model based on the probability mass function (11) of the BGGD and then click the Check Model menu in the WinBUGS software.

(ii) Load the data given in the Appendix.

(iii) Specify the nodes and run the codes for 10,000 iterations following a burn-in of 5000 iterations.

WinBUGS codes used to analyze the data are given in Appendix A2.
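The burn-in and sampling scheme above can be illustrated in a few lines of Python. This is only a sketch under stated assumptions: it uses a random-walk Metropolis sampler rather than the Gibbs sampling performed by WinBUGS, a univariate geometric likelihood \((1-\theta ){\theta }^{x}\) as a stand-in for the BGGD, a Beta prior with made-up hyperparameters, and made-up data. All function names are hypothetical.

```python
import math
import random

def log_posterior(theta, counts, a, b):
    """Unnormalized log-posterior: geometric likelihood times Beta(a, b) prior."""
    if not 0.0 < theta < 1.0:
        return -math.inf
    n, total = len(counts), sum(counts)
    log_lik = n * math.log(1.0 - theta) + total * math.log(theta)
    log_prior = (a - 1.0) * math.log(theta) + (b - 1.0) * math.log(1.0 - theta)
    return log_lik + log_prior

def metropolis(counts, a, b, n_keep=10000, burn_in=5000, step=0.1, seed=1):
    """Random-walk Metropolis; returns n_keep draws recorded after burn_in."""
    rng = random.Random(seed)
    theta = 0.5  # arbitrary starting value inside (0, 1)
    current = log_posterior(theta, counts, a, b)
    draws = []
    for it in range(burn_in + n_keep):
        proposal = theta + rng.gauss(0.0, step)
        candidate = log_posterior(proposal, counts, a, b)
        # Accept with probability min(1, posterior ratio); 1 - U lies in (0, 1].
        if math.log(1.0 - rng.random()) < candidate - current:
            theta, current = proposal, candidate
        if it >= burn_in:
            draws.append(theta)
    return draws

# Illustrative counts and hyperparameters only (not those of the paper).
sample = [0, 1, 1, 2, 3, 0, 4, 2, 1, 1]
draws = metropolis(sample, a=2.0, b=2.0)
posterior_mean = sum(draws) / len(draws)
# For this stand-in model the posterior is Beta(a + sum(x), b + n), so the
# simulated mean can be checked against the exact one.
exact_mean = (2.0 + sum(sample)) / (2.0 + sum(sample) + 2.0 + len(sample))
print(round(posterior_mean, 3), round(exact_mean, 3))
```

The stand-in was chosen because its posterior is available in closed form, so the sampler can be checked. For the BGGD no such check is available, which is why the convergence diagnostics matter.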

3.5 Bayesian Results Under Uniform Non-informative Priors

Here we have assumed uniform priors for all the parameters under study, as defined in Sect. 3.1.1, and the resulting Bayes estimates, standard errors, medians and 95% highest density regions, along with the values of the hyperparameters, are presented in Table 3.

It is observed that the elicited hyperparameters have a high impact on the Bayes estimates. The initial values have no significant effect on the posterior estimates once true convergence is achieved.
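A highest density region of the kind reported here can be approximated from MCMC draws as the shortest interval that covers the required share of the sorted draws. The sketch below assumes a unimodal marginal posterior, so that the region is a single interval. The function name and the toy draws are hypothetical, and this is not the routine used by WinBUGS.

```python
import math

def hpd_interval(draws, mass=0.95):
    """Shortest interval containing roughly `mass` of the sorted draws."""
    ordered = sorted(draws)
    n = len(ordered)
    # Number of draws the interval must cover (small guard against float error).
    cover = max(1, math.ceil(mass * n - 1e-9))
    best_low, best_high = ordered[0], ordered[cover - 1]
    for start in range(1, n - cover + 1):
        low, high = ordered[start], ordered[start + cover - 1]
        if high - low < best_high - best_low:
            best_low, best_high = low, high
    return best_low, best_high

# Made-up, right-skewed draws purely for illustration.
toy_draws = [0, 1, 2, 3, 4, 5, 6, 7, 8, 100]
interval = hpd_interval(toy_draws, mass=0.9)
print(interval)
```

For a skewed posterior this interval differs from the equal-tailed credible interval, because it drops the long tail instead of cutting the same probability from both ends.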

3.6 Convergence Diagnostics

Sequential plots are used in WinBUGS to assess difficulties in MCMC and in the realization of the model. In MCMC simulations, the values of the parameters of interest are sampled from the posterior distributions. The estimates are therefore regarded as convergent if the chain has reached its stationary distribution and appears to be mixing well. To check convergence, different graphical representations of the behavior of the parameters are used in MCMC as implemented through WinBUGS.

3.6.1 History Time Series Plots

The time series history plots of the parameters are presented in Fig. 3. Here, the Markov chain appears to be mixing well and to be sampling from the stationary distribution. The plots take the form of horizontal bands with no long upward or downward trends, which indicates that the Markov chain has converged.

3.6.2 Dynamic Traces and Autocorrelation Function

The traces of the parameters and the autocorrelation graphs of \(\alpha\), \({\theta }_{1}\) and \({\theta }_{2}\) are presented in Fig. 4. These graphs also confirm convergence.
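The quantity plotted in an autocorrelation graph is the sample autocorrelation of the chain at successive lags. A minimal sketch follows, with a hypothetical function name and a toy chain. It is meant only to show what is being computed, not to reproduce the WinBUGS plots.

```python
def autocorrelation(chain, lag):
    """Sample autocorrelation of a chain at a non-negative integer lag."""
    n = len(chain)
    mean = sum(chain) / n
    variance_sum = sum((x - mean) ** 2 for x in chain)
    cross_sum = sum(
        (chain[t] - mean) * (chain[t + lag] - mean) for t in range(n - lag)
    )
    return cross_sum / variance_sum

# A strongly autocorrelated toy chain: it alternates between two values.
toy_chain = [1.0, -1.0] * 50
acf = [autocorrelation(toy_chain, k) for k in range(4)]
print([round(v, 2) for v in acf])
```

For a well-mixing chain the autocorrelations drop towards zero within a few lags. Values that stay large in magnitude, as in the toy chain, suggest that more iterations or thinning may be needed.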

3.7 Bayes Estimates Using Informative Exponential-Beta Priors

An attractive characteristic of Bayesian analysis is that it can accommodate the prior information shared by field experts about the unknown parameters. It is important to note that the experts may not be experts in statistics and hence may be unable to translate their expertise into statistical terms. It is therefore the responsibility of the statistician to formally use the experts’ prior information to elicit the values of the hyperparameters of the prior density, which are subsequently used in the Bayesian analysis. Elicitation of hyperparameters is beyond the scope of our study; an exhaustive discussion of it may be found in [51]. We have chosen widely differing values of the hyperparameters, and a summary of the Bayes estimates for all these values is presented in Table 4.

It is observed that the elicited hyperparameters have a high impact on the Bayes estimates. The initial values have no significant effect on the posterior estimates once the Markov chain has converged, although changing the initial values causes a slight change in the parameter estimates.

3.8 Possible Predictive Inference

After finding the Bayes estimates, it is necessary to evaluate them through predictive inference. As the data comprise 100 observations, a predicted sample of 100 observations is generated based on the Bayes estimates obtained through the MCMC-based analysis. The predicted data along with their summaries are presented in Tables 5 and 6.

There are some differences between the predicted values and the original observed dataset. These differences may be due to the Bayes estimates, which are evaluated after accommodating the prior information about the model parameters via the hyperparameters.

4 Comparison of the Frequentist and Bayesian Approaches

An important aspect of this study is to compare the Bayes estimation method with the classical ML estimation method. We have accomplished this by using different model selection criteria presented as under.

4.1 Model Selection Criteria

The classical and Bayesian methods of estimation are compared using the model selection criteria, i.e., ML, AIC, AICC, BIC, and HQC, which are defined by

and

Here \(\ln [L\left(\alpha ,{\theta }_{1},{\theta }_{2}\right)]\) denotes the log-likelihood, \(n\) denotes the number of observations and \(k\) denotes the number of parameters of the distribution under consideration. The smaller the values of these criteria, the better the fit. For more discussion of these criteria, see [52, 53]. The maximum likelihood estimates, uninformative Bayes estimates and informative Bayes estimates, along with their associated values of the model selection criteria, are reported in Table 7.
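As a hedged illustration of how such criteria are obtained from a log-likelihood value, the sketch below uses the standard textbook forms of AIC, AICC, BIC and HQC. These may differ in detail from the displays of the original article, which are not reproduced here, and the ML criterion is left out because its definition is not given in the text. The inputs treat the value -432.957 quoted in Sect. 2.3 as the log-likelihood (an assumption), with \(n=100\) lenses and \(k=3\) parameters. The function name is hypothetical, and the output is not claimed to reproduce Table 7.

```python
import math

def selection_criteria(log_lik, n, k):
    """Textbook forms of AIC, AICC, BIC and HQC from a log-likelihood value."""
    deviance = -2.0 * log_lik
    aic = deviance + 2.0 * k
    aicc = aic + (2.0 * k * (k + 1)) / (n - k - 1)
    bic = deviance + k * math.log(n)
    hqc = deviance + 2.0 * k * math.log(math.log(n))
    return {"AIC": aic, "AICC": aicc, "BIC": bic, "HQC": hqc}

# Assumed inputs: log-likelihood -432.957, n = 100 observations, k = 3 parameters.
criteria = selection_criteria(log_lik=-432.957, n=100, k=3)
for name, value in criteria.items():
    print(name, round(value, 3))
```

Because \(n\) and \(k\) are the same for every fit of the same model to the same data, the penalty terms are identical across the fits, so differences in these criteria come entirely from differences in the log-likelihood evaluated at each set of estimates.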

Here we see that the values of the model selection criteria produced by the Bayes method are smaller than those produced by the ML estimation method, which indicates that the Bayesian method is more appropriate. This may be attributed to the distinct characteristic of Bayesian methods that they incorporate prior information about the model parameters. However, it is pertinent to note that these results are sensitive to the values chosen for the hyperparameters. Hence, careful elicitation of the hyperparameters is essential when using Bayesian methods. Carefully selected or elicited values of the hyperparameters may lead to even better estimates.

5 Summary and Conclusions

The bivariate generalized geometric distribution is believed to model reliability count datasets emerging from diverse phenomena. To understand the data-generating phenomena, it is necessary to estimate the model parameters of the BGGD. To accomplish this, statistical theory offers two competing approaches, namely the frequentist and Bayesian approaches. The former is based on the current data only, whereas the latter utilizes prior information in addition to the current dataset produced by the system or phenomenon. This study offers a comparison between the frequentist and Bayesian estimation approaches. To illustrate the frequentist approach, different descriptive measures and the maximum likelihood estimates are evaluated. The Bayesian estimation approach is illustrated using uninformative and informative priors. We have worked out the posterior summaries of the parameters, comprising posterior means, standard errors, medians, credible intervals and predictions, for both types of priors using the MCMC simulation technique. A correlated bivariate count dataset on counts of surface and interior faults is used for illustration. The two estimation methods have been compared using different model selection criteria. All the model selection criteria, including ML, AIC, AICC, BIC, and HQC, indicate that the Bayesian approach outperforms the competing ML approach across the board. It has also been observed that the results may coincide if the information contained in the prior distribution and in the dataset agree, while improved prior information may improve the results. As a future study, it is recommended that the Bayesian analysis of datasets be carried out using formally elicited hyperparameters of the priors instead of values chosen by the experimenter.

Data Availability

Data used in this article are available in Appendix A1. The computer codes are given in Appendix A2.

Abbreviations

- BGGD: Bivariate generalized geometric distribution

- MCMC: Markov chain Monte Carlo

- WinBUGS: Bayesian inference using Gibbs sampling

- ML: Maximum likelihood

- AIC: Akaike information criterion

- AICC: Corrected AIC

- BIC: Bayes information criterion

- HQC: Hannan-Quinn criterion

References

Kemp, C., Papageorgiou, H.: Bivariate hermite distributions. Indian J. Stat. Ser. A 44, 269–280 (1982)

Johnson, N.L., Kotz, S., Balakrishnan, N.: Discrete Multivariate Distributions, vol. 165. Wiley, New York (1997)

Lai, C.-D.: Constructions of discrete bivariate distributions. In: Advances in Distribution Theory, Order Statistics, and Inference, pp. 29–58. Springer (2006)

Basu, A.P., Dhar, S.: Bivariate geometric distribution. J. Appl. Stat. Sci. 2(1), 3–44 (1995)

Sarhan, A.M., Balakrishnan, N.: A new class of bivariate distributions and its mixture. J. Multivar. Anal. 98(7), 1508–1527 (2007)

Jamalizadeh, A., Kundu, D.: Weighted Marshall-Olkin bivariate exponential distribution. Statistics 47(5), 917–928 (2013)

Kundu, D., Gupta, A.K.: On bivariate Weibull-geometric distribution. J. Multivar. Anal. 123, 19–29 (2014)

Sankaran, P., Kundu, D.: A bivariate Pareto model. Statistics 48(2), 241–255 (2014)

Gómez-Déniz, E., Ghitany, M., Gupta, R.C.: A bivariate generalized geometric distribution with applications. Commun. Stat. Theory Methods 46(11), 5453–5465 (2017)

Gumbel, E.J.: Bivariate exponential distributions. J. Am. Stat. Assoc. 55(292), 698–707 (1960)

Freund, J.E.: A bivariate extension of the exponential distribution. J. Am. Stat. Assoc. 56(296), 971–977 (1961)

Marshall, A.W., Olkin, I.: A generalized bivariate exponential distribution. J. Appl. Probab. 4(2), 291–302 (1967)

Marshall, A.W., Olkin, I.: A multivariate exponential distribution. J. Am. Stat. Assoc. 62(317), 30–44 (1967)

Downton, F.: Bivariate exponential distributions in reliability theory. J. R. Stat. Soc. 32(3), 408–417 (1970)

Hawkes, A.G.: A bivariate exponential distribution with applications to reliability. J. R. Stat. Soc. 11, 129–131 (1972)

Block, H.W., Basu, A.: A continuous, bivariate exponential extension. J. Am. Stat. Assoc. 69(348), 1031–1037 (1974)

Hougaard, P.: A class of multivariate failure time distributions. Biometrika 73(3), 671–678 (1986)

Sarkar, S.K.: A continuous bivariate exponential distribution. J. Am. Stat. Assoc. 82(398), 667–675 (1987)

Arnold, B.C., Strauss, D.: Bivariate distributions with exponential conditionals. J. Am. Stat. Assoc. 83(402), 522–527 (1988)

Hanagal, D.D.: Bivariate Weibull regression model based on censored samples. Stat. Pap. 47(1), 137–147 (2006)

de Oliveira, R.P., Achcar, J.A.: Basu-Dhar’s bivariate geometric distribution in presence of censored data and covariates: some computational aspects. Electron. J. Appl. Stat. Anal. 11(1), 108–136 (2018)

Arnold, B.C.: A characterization of the exponential distribution by multivariate geometric compounding. Indian J. Stat. Ser. A 37, 164–173 (1975)

Nair, K.M., Nair, N.U.: On characterizing the bivariate exponential and geometric distributions. Ann. Inst. Stat. Math. 40(2), 267–271 (1988)

Sun, K., Basu, A.P.: A characterization of a bivariate geometric distribution. Statist. Probab. Lett. 23(4), 307–311 (1995)

Dhar, S.K., Balaji, S.: On the characterization of a bivariate geometric distribution. Commun. Stat. Theory Methods 35(5), 759–765 (2006)

Krishna, H., Pundir, P.S.: A bivariate geometric distribution with applications to reliability. Commun. Stat. Theory Methods 38(7), 1079–1093 (2009)

Li, J., Dhar, S.K.: Modeling with bivariate geometric distributions. Commun. Stat. Theory Methods 42(2), 252–266 (2013)

Davarzani, N., et al.: Bivariate lifetime geometric distribution in presence of cure fractions. J Data Sci. 13(4), 755–770 (2015)

Adams, E.S.: Bayesian analysis of linear dominance hierarchies. Anim. Behav. 69(5), 1191–1201 (2005)

Foreman-Mackey, D., et al.: emcee: the MCMC hammer. Publ. Astron. Soc. Pac. 125(925), 306 (2013)

Lunn, D., et al.: The BUGS Book: A Practical Introduction to Bayesian Analysis. CRC Press (2012)

Kruschke, J.: Doing Bayesian Data Analysis: A Tutorial with R and BUGS. Academic Press/Elsevier (2011)

Barry, J.: Doing Bayesian data analysis: A tutorial with R and BUGS. Eur. J. Psychol. 7(4), 778 (2011)

Carlin, B.P., Louis, T.A.: Bayes and Empirical Bayes Methods for Data Analysis. Chapman and Hall/CRC (2010)

Achcar, J.A., Santander, L.A.M.: Use of approximate Bayesian methods for the Block and Basu bivariate exponential distribution. J. Ital. Stat. Soc. 2(3), 233–250 (1993)

dos Santos, C.A., Achcar, J.A.: A Bayesian analysis for the Block and Basu bivariate exponential distribution in the presence of covariates and censored data. J. Appl. Stat. 38(10), 2213–2223 (2011)

Achcar, J., Davarzani, N., Souza, R.: Basu-Dhar bivariate geometric distribution in the presence of covariates and censored data: a Bayesian approach. J. Appl. Stat. 43(9), 1636–1648 (2016)

Davarzani, N., et al.: A Bayesian analysis for the bivariate geometric distribution in the presence of covariates and censored data. J. Stat. Manag. Syst. 20(1), 1–16 (2017)

Scollnik, D.P.M.: Bayesian inference for three bivariate beta binomial models. Open Stat. Probab. J. 8(1), 27 (2017)

de Oliveira, R.P., et al.: Discrete and continuous bivariate lifetime models in presence of cure rate: a comparative study under Bayesian approach. J. Appl. Stat. 46(3), 449–467 (2019)

Najarzadegan, H., et al.: Weighted bivariate geometric distribution: simulation and estimation. Commun. Stat. Simul. Comput. (2018). https://doi.org/10.1080/03610918.2018.1520870

Abbas, N.: On examining complex systems using the q-Weibull distribution in classical and Bayesian paradigms. J. Stat. Theory Appl. 19(3), 368–382 (2020)

Gelfand, A.E., Smith, A.F.: Sampling-based approaches to calculating marginal densities. J. Am. Stat. Assoc. 85(410), 398–409 (1990)

Chib, S., Greenberg, E.: Understanding the Metropolis-Hastings algorithm. Am. Stat. 49(4), 327–335 (1995)

Kruschke, J.: Doing Bayesian Data Analysis: A Tutorial with R, JAGS, and Stan. Academic Press (2014)

Fisher, R.A.: On the mathematical foundations of theoretical statistics. Philos. Trans. R. Soc. Lond. 222(594–604), 309–368 (1922)

Aitchison, J., Ho, C.: The multivariate Poisson-log normal distribution. Biometrika 76(4), 643–653 (1989)

Karlis, D., Meligkotsidou, L.: Finite mixtures of multivariate Poisson distributions with application. J. Stat. Plan. Inference 137(6), 1942–1960 (2007)

Sarabia, J.M., Gómez-Déniz, E.: Construction of multivariate distributions: a review of some recent results. (invited article with discussion: M. del Carmen Pardo and Jorge Navarro). SORT-Statistics and Operations Research Transactions, pp. 3–36 (2008)

Bayes, T.: An essay towards solving a problem in the doctrine of chances. Philos. Trans. R. Soc. 53, 376–418 (1763)

Garthwaite, P.H., Kadane, J.B., O’Hagan, A.: Statistical methods for eliciting probability distributions. J. Am. Stat. Assoc. 100(470), 680–701 (2005)

Fang, Y.: Asymptotic equivalence between cross-validations and Akaike information criteria in mixed-effects models. J. Data Sci. 9(1), 15–21 (2011)

Burnham, K.P., Anderson, D.R.: Multimodel inference: understanding AIC and BIC in model selection. Sociol. Methods Res. 33(2), 261–304 (2004)

Acknowledgements

The author is grateful to the Editorial Office and the anonymous reviewers for their helpful comments and suggestions, which improved the quality and presentation of the manuscript. The author would also like to express his heartfelt appreciation to the editors of the Journal of Statistical Theory and Applications for a full waiver of the APC payments.

Funding

The entire research is conducted in my own capacity and resources without any funding support from my office, department or funding agency.

Author information

Contributions

I am the sole author of this article and hence the entire work is done by me.

Ethics declarations

Conflict of Interest

The author declares no competing conflict of interests and states that no funding source or sponsor has participated in the realization of this work.

Ethical Approval and Consent to Participate

Not required, as the dataset was not collected from human participants.

Consent for Publication

I hereby consent for publication of this article after acceptance.

Appendices

Appendix A1

See Table 8.

Appendix A2

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Abbas, N. On Classical and Bayesian Reliability of Systems Using Bivariate Generalized Geometric Distribution. J Stat Theory Appl 22, 151–169 (2023). https://doi.org/10.1007/s44199-023-00058-4

DOI: https://doi.org/10.1007/s44199-023-00058-4

Keywords

- Bayesian analysis

- Bivariate generalized geometric distribution

- Correlated count data

- Maximum likelihood estimation

- MCMC methods

- Model selection criteria

- Posterior estimates