Abstract

We consider an optimal control problem (OCP) governed by non-linear Volterra integral equations with weakly singular kernels and propose a numerical method for it based on Genocchi polynomials. Using the properties of Genocchi polynomials together with collocation, the OCP is converted into a non-linear programming problem (NLP). The method is fast and yields highly accurate solutions with a small number of basis functions. A convergence analysis of the approach is also provided. The accuracy of the proposed technique and the theoretical results are verified on several examples.


1 Introduction

Spectral methods have been widely employed to solve mathematical models arising in fields such as heat conduction, quantum mechanics, and fluid dynamics [1]. These methods use various infinitely differentiable orthogonal functions as trial functions, which leads to different spectral approaches [1, 2]. Owing to their high accuracy, spectral approaches are widely used in applied mathematics and engineering, for example in heat conduction, boundary-layer heat transfer, chemical kinetics, and superfluidity [3,4,5,6]. Many non-linear problems in these fields lead to singular Volterra integral equations whose exact solutions are difficult to obtain. In this paper, we present a numerical approach for solving OCPs governed by non-linear Volterra integral equations with weakly singular kernels. The authors of [7] investigated the local convergence of a sequential quadratic programming (SQP) method for the non-linear optimal control of weakly singular Hammerstein integral equations and discussed sufficient conditions for local quadratic convergence of their approach. In [8], an optimal control problem for a non-linear weakly singular Volterra integral equation is considered and second-order sufficient optimality conditions are established; no numerical results are reported there. An OCP governed by a system of weakly singular variable-order fractional integral equations is studied in [9], where a numerical approach based on Chebyshev cardinal functions and their operational matrix of integration is introduced and tested on several examples. In [10], numerical approaches are presented for optimal control governed by an integro-differential system with singular kernels; the method minimizes the difference between the optimal state and a target function over a specific time interval.
In this article, Genocchi polynomials and their operational matrices are used to solve OCPs governed by non-linear Volterra integral equations with weakly singular kernels of the following form:

subject to

where \(f(t)\in L^2[0,T]\), G is a smooth, locally Lipschitz continuous non-linear function of Hammerstein type, and \(\mu\) and \(\nu\) are positive real numbers. OCPs governed by non-linear Volterra integral equations with weakly singular kernels have many applications in areas such as mathematical economics, chemistry, and physics, including the scattering of waves and particles, heat conduction, semiconductors, population dynamics, and fluid flow [11, 12].

The rest of the article is organized as follows. Section 2 presents the necessary definitions and properties of Genocchi polynomials. Section 3 describes the proposed numerical technique based on Genocchi polynomials. Section 4 presents the error analysis of the method. Section 5 examines some examples to demonstrate the performance and accuracy of the proposed scheme. Section 6 concludes the study.

2 Properties of the Genocchi Polynomials

Genocchi polynomials and numbers have been widely used in various branches of science, such as complex analytic number theory, homotopy theory, differential topology, and quantum groups [13, 15]. For approximating unknown functions, the Genocchi polynomials provide a linearly independent set of functions that can serve as a basis, which makes it possible to approximate a wide range of functions with a relatively small number of basis functions. Unlike Legendre or Chebyshev polynomials, however, Genocchi polynomials are not orthogonal on [0, 1] (for instance, \(\int _0^1G_1(t)G_3(t)dt=-\frac{1}{2}\)), so the best approximation involves their Gram matrix (see Sect. 4).

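In addition, Genocchi polynomials can be used for numerical integration and function approximation [14]. The Genocchi polynomials \(G_n(x)\) and numbers \(G_n\) are usually defined through the exponential generating functions \(\mathcal {Q}(t,x)\) and \(\mathcal {Q}(t)\), respectively, as follows [13, 15]:

\[ \mathcal {Q}(t,x)=\frac{2te^{xt}}{e^{t}+1}=\sum _{n=0}^{\infty }G_n(x)\frac{t^n}{n!},\qquad \mathcal {Q}(t)=\frac{2t}{e^{t}+1}=\sum _{n=0}^{\infty }G_n\frac{t^n}{n!},\qquad |t|<\pi . \]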

The Genocchi polynomial \(G_n(x)\) of order n can be written explicitly as

\[ G_n(x)=\sum _{k=0}^{n}\binom{n}{k}G_{n-k}\,x^{k}, \]

where \(G_{n-k}\) is the Genocchi number, which can be calculated from

\[ G_n=2\left( 1-2^{n}\right) B_n, \]

in which \(B_n\) denotes the n-th Bernoulli number (with the convention \(B_1=-\frac{1}{2}\)). For more details on Genocchi polynomials, see [13, 15,16,17]. Suppose that f(t) is an arbitrary function in \(L^2[0,1]\). We can approximate it as follows:

in which \(C=[c_1,c_2,\ldots ,c_N]^T\) is the vector of unknown coefficients, \(\mathcal {X}_t=[1,t,t^2,\ldots ,t^N]^T\), and \(G(t)=[G_1(t),G_2(t),\cdots ,G_N(t)]^T\).
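As a minimal sketch of the definitions in this section, the Python code below computes Genocchi numbers from Bernoulli numbers through the standard identity \(G_n=2(1-2^n)B_n\) (with \(B_1=-\frac{1}{2}\)) and builds the monomial coefficients of \(G_n(x)=\sum _{k=0}^{n}\binom{n}{k}G_{n-k}x^k\). The function names are hypothetical; exact rational arithmetic is used so that the integer values can be checked directly.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n_max):
    """Bernoulli numbers B_0, ..., B_{n_max} (convention B_1 = -1/2), from the
    recurrence sum_{k=0}^{m} C(m+1, k) B_k = 0 for m >= 1."""
    B = [Fraction(1)]
    for m in range(1, n_max + 1):
        s = sum(comb(m + 1, k) * B[k] for k in range(m))
        B.append(-s / (m + 1))
    return B


def genocchi_numbers(n_max):
    """Genocchi numbers G_n = 2 (1 - 2^n) B_n for n = 0, ..., n_max."""
    B = bernoulli_numbers(n_max)
    return [2 * (1 - 2**n) * B[n] for n in range(n_max + 1)]


def genocchi_poly_coeffs(n):
    """Coefficients [a_0, ..., a_n] (ascending powers) of G_n(x) = sum_k C(n, k) G_{n-k} x^k."""
    G = genocchi_numbers(n)
    return [comb(n, k) * G[n - k] for k in range(n + 1)]


def eval_poly(coeffs, x):
    """Evaluate sum_k coeffs[k] * x^k."""
    return sum(c * x**k for k, c in enumerate(coeffs))


print([int(g) for g in genocchi_numbers(8)])      # [0, 1, -1, 0, 1, 0, -3, 0, 17]
print([int(c) for c in genocchi_poly_coeffs(4)])  # [1, 0, -6, 4, 0], i.e. G_4(x) = 4x^3 - 6x^2 + 1
```

Since \(G_0=0\) and \(G_1=1\), the polynomial \(G_n\) has degree exactly \(n-1\) with leading coefficient n, so \(G_1,\ldots ,G_N\) span the polynomials of degree less than N.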

3 Implementation of Genocchi Polynomials Collocation Method

Various methods have been proposed for solving optimal control problems with different constraints; some of them derive necessary optimality conditions from Pontryagin's maximum principle [18, 19]. The collocation method based on Genocchi polynomials instead discretizes both the cost functional (1) and the controlled integral equation with weakly singular kernel (2). First, we apply a spectral approach based on Genocchi polynomials to Eq. (2). To approximate the system dynamics in (2), we compute the following integral for \(m=0,1,\ldots\):

We assume

According to relation (2), we have

We approximate z(t) and u(t) in Eq. (10) as follows:

So, we obtain

We write the integral part of (12) in matrix form as follows:

in which \(X_s=[1,s,s^2,\ldots ,s^N].\) From (8), we obtain

By utilizing (14), (13) is converted to

Setting \(\kappa _{m,m}=\frac{\Gamma (1-\mu )\Gamma (\mu +\nu +1)}{\Gamma (\nu +{m}-\mu +2)}\), Eq. (15) can be written in matrix form as follows:

\(\psi\) is an infinite diagonal matrix, and \(\phi\) is an infinite vector. By approximating each element of vector \(\phi\) by Genocchi polynomials, we obtain

Then, we have

Substituting (18) into (16), Eq. (12) becomes

Using the N Newton–Cotes (midpoint) nodes \(t_s=\frac{2s-1}{2N}\), \(s=1,2,\ldots , N\), we collocate (19) as follows:

To approximate the cost functional (1), we apply Gauss–Legendre (GL) quadrature after mapping \([-1,1]\) onto \([0,T]\):

where \(w_{j}^{'}=\frac{T}{2}w_j\) and \(\tau _j^{'}=\frac{T(\tau _j+1)}{2}\). Here the \(\tau _j\) are the GL nodes, i.e., the zeros of the Legendre polynomial \(L_N(t)\) in \([-1, 1]\), and the \(w_j\) are the corresponding weights, which can be obtained from the relation [20]

\[ w_j=\frac{2}{\left( 1-\tau _j^{2}\right) \left[ L_N^{'}(\tau _j)\right] ^{2}},\qquad j=1,\ldots ,N. \]
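A minimal NumPy sketch of the mapped Gauss–Legendre rule described above: the nodes are taken as the zeros of \(L_N\), the weights are computed from the classical formula \(w_j=2/[(1-\tau _j^2)L_N^{'}(\tau _j)^2]\), and both are mapped to [0, T] by \(\tau _j^{'}=T(\tau _j+1)/2\) and \(w_j^{'}=Tw_j/2\). The function name `gauss_legendre_0T` is hypothetical; NumPy's built-in `leggauss` serves only as a cross-check.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def gauss_legendre_0T(N, T):
    """N-point Gauss-Legendre nodes tau'_j and weights w'_j mapped from [-1, 1] to [0, T]."""
    LN = [0] * N + [1]                         # Legendre-series coefficients of L_N
    tau = np.sort(leg.legroots(LN))            # GL nodes: zeros of L_N in [-1, 1]
    dLN = leg.legval(tau, leg.legder(LN))      # L_N'(tau_j)
    w = 2.0 / ((1.0 - tau**2) * dLN**2)        # classical GL weight formula
    return T * (tau + 1.0) / 2.0, T * w / 2.0  # interval transformation to [0, T]


# Usage: integrate cos(t)^2 over [0, 2]; the exact value is 1 + sin(4)/4.
t, w = gauss_legendre_0T(10, 2.0)
print(np.sum(w * np.cos(t) ** 2), 1 + np.sin(4) / 4)
```

An N-point rule integrates polynomials of degree up to \(2N-1\) exactly, which is why a modest N already suffices for smooth cost integrands.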

By substituting \(u(t)=U^TG(t)\) and \(x(t)=f(t)-C^TG\psi \gamma G\mathcal { X}_{t}\) in (22), we have

Finally, the OCP (1)–(2) is approximated by the following NLP:

subject to

After computing the unknown vectors C and U by solving the resulting NLP (25)–(26), we obtain the approximate state \(\hat{x}(t)\) and control \(\hat{u}(t)\) from the following equations:
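To make the pipeline of this section concrete, here is a toy sketch under explicitly stated assumptions. It uses a hypothetical linear weakly singular dynamics \(x(t)=f(t)+\int _0^t(t-s)^{-\mu }u(s)ds\) with quadratic cost \(J=\int _0^1[(x-t^2)^2+(u-t)^2]dt\), where f is chosen so that \((x^*,u^*)=(t^2,t)\) is feasible and \(J^*=0\). It follows the same steps (polynomial expansion of x and u, collocation of the dynamics at \(t_s=\frac{2s-1}{2N}\), GL quadrature of the cost, solution of the finite-dimensional problem) but is not the authors' implementation. It uses monomials, which span the same space as \(G_1,\ldots ,G_N\), and because the toy dynamics are linear and the cost is quadratic, the NLP reduces to an equality-constrained quadratic program solved through its KKT system. A non-linear G would require a general NLP solver instead (the paper uses Mathematica's NMinimize).

```python
import numpy as np
from math import gamma


def toy_ocp(N=3, mu=0.5, quad_points=10):
    """Hypothetical toy OCP on [0, 1] (not the paper's problem):
        minimize  J = int_0^1 (x - t^2)^2 + (u - t)^2 dt
        s.t.      x(t) = f(t) + int_0^t (t - s)^(-mu) u(s) ds,
    with u ~ sum_k a_k t^k and x ~ sum_k b_k t^k, k = 0..N-1 (requires N >= 2)."""
    k = np.arange(N)
    # int_0^t (t - s)^(-mu) s^k ds = kappa_k t^(k + 1 - mu)  (Beta-function identity)
    kappa = np.array([gamma(1 - mu) * gamma(j + 1) / gamma(j + 2 - mu) for j in k])

    def f(t):
        # chosen so that (x*, u*) = (t^2, t) satisfies the dynamics exactly
        return t**2 - kappa[1] * t ** (2 - mu)

    # Equality constraints: dynamics collocated at t_s = (2s - 1) / (2N), s = 1..N
    ts = (2 * k + 1) / (2 * N)
    P = ts[:, None] ** k                      # x_N(t_s) = P @ b
    K = kappa * ts[:, None] ** (k + 1 - mu)   # integral term at t_s = K @ a
    E, d = np.hstack([-K, P]), f(ts)          # E @ [a; b] = d

    # Cost by Gauss-Legendre quadrature mapped to [0, 1]
    xi, w = np.polynomial.legendre.leggauss(quad_points)
    tq, wq = (xi + 1) / 2, w / 2
    V = tq[:, None] ** k                      # V[j, k] = tq_j^k
    VW = V.T * wq                             # V^T W
    gram = VW @ V
    Z = np.zeros((N, N))
    Q = np.block([[gram, Z], [Z, gram]])      # J = z^T Q z - 2 c^T z + const, z = [a; b]
    c = np.concatenate([VW @ tq, VW @ tq**2])

    # KKT system of the equality-constrained QP: [Q E^T; E 0] [z; lam] = [c; d]
    kkt = np.block([[Q, E.T], [E, np.zeros((N, N))]])
    z = np.linalg.solve(kkt, np.concatenate([c, d]))[: 2 * N]
    a, b = z[:N], z[N:]
    J = np.sum(wq * ((V @ b - tq**2) ** 2 + (V @ a - tq) ** 2))
    return a, b, J


a, b, J = toy_ocp(N=3)
print(np.round(a, 8), np.round(b, 8), J)
```

Because the exact optimum lies in the trial space for \(N\ge 3\), the recovered coefficients should match \(u=t\) and \(x=t^2\) up to rounding, with J close to zero.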

4 Error Analysis

Approximation theory is instrumental in solving integral and differential equations. The following theorem describes how an arbitrary function in \(L^2[0,1]\) is approximated in the Genocchi basis.

Theorem 1

Let \(\mathcal {P}=span\{G_1(t),G_2(t),\ldots ,G_N(t)\}\subset L^{2}[0,1]\). Since \(\mathcal {P}\) is a finite-dimensional subspace of \(L^2[0,1]\), every \(f\in L^2[0,1]\) has a unique best approximation \(f^*(t)\in \mathcal {P}\), that is,

\[ \Vert f-f^*\Vert _{2}\le \Vert f-y\Vert _{2}\quad \text {for all } y\in \mathcal {P}. \]

Hence there are unique coefficients \(c_n\), \(1\le n\le N\), such that f can be approximated in terms of the Genocchi polynomials as

\[ f(t)\simeq f^*(t)=\sum _{n=1}^{N}c_nG_n(t)=C^TG(t), \]

where the vector C of these unique coefficients, called the Genocchi coefficient vector, is given by

\[ C=\left( \mathcal {T}^{(0,1)}\right) ^{-1}L, \]

where \(L=\left[ \int _{0}^{1}f(t){G}_{m}(t)dt\right] _{m=1}^{N}\) is an N-dimensional vector and \(\mathcal {T}^{(0,1)}=\left[ \int _{0}^{1}G_n(t)G_m(t)dt\right] _{N\times N}\) is the (symmetric, positive definite) Gram matrix of the basis. The method of calculating \(L\) and \(\mathcal {T}^{(0,1)}\) is explained in [17].
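A minimal NumPy sketch of Theorem 1, not necessarily the procedure of [17]: the Gram matrix \(\mathcal {T}^{(0,1)}\) is formed exactly from the monomial coefficients of \(G_1,\ldots ,G_N\) through the Hilbert matrix \(\int _0^1t^{i+j}dt=1/(i+j+1)\), the vector L is computed by Gauss–Legendre quadrature on [0, 1], and C is obtained by solving \(\mathcal {T}^{(0,1)}C=L\). The function names are hypothetical. Because the Genocchi basis is not orthogonal, the Gram matrix becomes ill-conditioned as N grows, so the sketch is meant for small N.

```python
import numpy as np
from math import comb


def genocchi_matrix(N):
    """M[n-1, k] = coefficient of t^k in G_n(t), for n = 1..N and k = 0..N-1."""
    # Bernoulli numbers (B_1 = -1/2), then Genocchi numbers G_n = 2 (1 - 2^n) B_n
    B = [1.0]
    for m in range(1, N + 1):
        B.append(-sum(comb(m + 1, k) * B[k] for k in range(m)) / (m + 1))
    g = [2 * (1 - 2**n) * B[n] for n in range(N + 1)]
    M = np.zeros((N, N))
    for n in range(1, N + 1):
        for k in range(n):                    # the t^n term vanishes since G_0 = 0
            M[n - 1, k] = comb(n, k) * g[n - k]
    return M


def genocchi_best_approximation(f, N, quad_points=40):
    """Coefficients C of the best L^2[0, 1] approximation f ~ sum_n c_n G_n(t)."""
    M = genocchi_matrix(N)
    p = np.arange(N)
    hilbert = 1.0 / (p[:, None] + p[None, :] + 1)   # int_0^1 t^(i+j) dt
    T01 = M @ hilbert @ M.T                         # Gram matrix int_0^1 G_n G_m dt
    xi, w = np.polynomial.legendre.leggauss(quad_points)
    t, w01 = (xi + 1) / 2, w / 2                    # GL rule mapped to [0, 1]
    Gt = (t[:, None] ** p) @ M.T                    # Gt[j, n-1] = G_n(t_j)
    L = Gt.T @ (w01 * f(t))                         # L_m = int_0^1 f G_m dt
    return np.linalg.solve(T01, L), M


def evaluate(C, M, t):
    """Evaluate sum_n c_n G_n(t) at the points t."""
    t = np.asarray(t, dtype=float)
    return (t[:, None] ** np.arange(len(C))) @ M.T @ C


grid = np.linspace(0, 1, 11)
for N in (4, 6, 8):
    C, M = genocchi_best_approximation(np.cos, N)
    print(N, np.max(np.abs(evaluate(C, M, grid) - np.cos(grid))))
```

For a polynomial of degree less than N, such as \(t^5\) with \(N=6\), the best approximation reproduces the function up to rounding error, in line with Theorem 2.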

Theorem 2

Let \(f(t)\in C^{n+1}[0,1]\) and \(\mathcal {P}=span\{G_1(t),G_2(t),\ldots ,G_N(t)\}\). If \(C^TG(t)\) is the best approximation of f(t) in \(\mathcal {P}\), then [13]

where

By Theorem 2, the approximation \(C^TG(t)\) converges to f(t) as the number of basis functions increases.

A pair of functions (x, u) is called admissible if it satisfies Eq. (2). The set of admissible pairs is described as follows:

The errors of \(\hat{x}\) and \(\hat{u}\) defined in (27) can be bounded above as follows:

On the other hand, G is locally Lipschitz with respect to \((x(t),u(t))\in \mathcal {B}\); therefore, there is a constant \(Q_1>0\) such that

Combining (35) and (36), we obtain

By substituting (5) in (37), we get

We define \(\eta (t,\nu ,\mu )\) as follows:

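Here \(B(\mu ,\nu )\) is the Beta function, defined by

\[ B(\mu ,\nu )=\int _{0}^{1}s^{\mu -1}(1-s)^{\nu -1}ds=\frac{\Gamma (\mu )\Gamma (\nu )}{\Gamma (\mu +\nu )},\qquad \mu ,\nu >0. \]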
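The Beta function \(B(a,b)=\int _0^1s^{a-1}(1-s)^{b-1}ds=\Gamma (a)\Gamma (b)/\Gamma (a+b)\) is also what puts the weakly singular integrals of Sect. 3 in closed form. Assuming (the kernel of (2) is not reproduced here) a kernel of the form \((t-s)^{-\mu }s^{\nu }\) with \(0<\mu <1\), the substitution \(s=t\sigma\) gives \(\int _0^t(t-s)^{-\mu }s^{m+\nu }ds=B(m+\nu +1,1-\mu )\,t^{m+\nu +1-\mu }\), whose denominator \(\Gamma (m+\nu +2-\mu )\) is the one appearing in \(\kappa _{m,m}\). The hypothetical helpers below check this identity numerically; the change of variables \(v=(t-s)^{1-\mu }\) removes the endpoint singularity before Gauss–Legendre quadrature is applied.

```python
import numpy as np
from math import gamma


def beta(a, b):
    """Euler Beta function B(a, b) = Gamma(a) Gamma(b) / Gamma(a + b), for a, b > 0."""
    return gamma(a) * gamma(b) / gamma(a + b)


def singular_moment_closed_form(t, m, mu, nu):
    """int_0^t (t - s)^(-mu) s^(m + nu) ds = B(m + nu + 1, 1 - mu) t^(m + nu + 1 - mu)."""
    return beta(m + nu + 1.0, 1.0 - mu) * t ** (m + nu + 1.0 - mu)


def singular_moment_quadrature(t, m, mu, nu, n=200):
    """Same integral after v = (t - s)^(1 - mu), which removes the (t - s)^(-mu) singularity:
    (1 / (1 - mu)) * int_0^{t^(1 - mu)} (t - v^(1 / (1 - mu)))^(m + nu) dv."""
    xi, w = np.polynomial.legendre.leggauss(n)
    b = t ** (1.0 - mu)
    v, wv = b * (xi + 1) / 2, b * w / 2              # GL rule mapped to [0, b]
    s = np.clip(t - v ** (1.0 / (1.0 - mu)), 0.0, None)
    return np.sum(wv * s ** (m + nu)) / (1.0 - mu)


for m, mu, nu in [(0, 0.5, 0.0), (2, 0.5, 0.0), (1, 1 / 3, 0.5)]:
    print(m, mu, nu, singular_moment_closed_form(0.8, m, mu, nu),
          singular_moment_quadrature(0.8, m, mu, nu))
```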

Using inequalities (38) and (40), we get

and

We can establish an upper bound for \(||u(t)-\hat{u}(t)||\) from Theorem 2.

Let \(\hat{x}_N\) and \(\hat{u}_N\) be defined as in (27); we then set

Theorem 3

Let \(\bar{\mathcal {J}}_{N}^{*}=\inf _{\mathcal {A}_N}J\) and \(J^*=\inf _{\mathcal {B}}J\), and suppose that \(J^*\) is finite and unique. Then the following inequality holds.

Proof. It is evident that the relation

is satisfied; hence \(\{\mathcal {J}_{i}^{*}\}\) is a non-increasing sequence that is bounded below and converges to \(\mathcal {J}^{*}\).

5 Illustrative Examples

To examine the efficiency of the proposed collocation approach, some examples are given in this section. All computations were performed with Mathematica 10.4 on a PC with an AMD A6-4400M APU with Radeon(tm) HD Graphics (CPU @ 2.70 GHz) and 4.0 GB of RAM. To measure the error of the proposed approach, we use the following notation:

where \(u^*\) and \(x^*\) are the exact optimal control and state of the OCP (1)–(2), and \(\hat{u}^*\) and \(\hat{x}^*\) are the approximate optimal control and state obtained from the resulting NLP (25)–(26). The absolute error of the optimal value of the objective functional \(\mathcal {J}^*\) is defined as follows:

Example 1

Consider the following OCP governed by a non-linear Volterra integral equation:

subject to

where

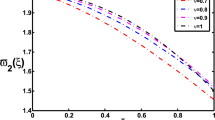

\(HypergeometricPFQ[\{a_1,\ldots ,a_p\},\{b_1,\ldots ,b_q\},z]\) is the Mathematica notation for the generalized hypergeometric function, traditionally written \({}_{p}F_{q}(a_1,\ldots ,a_p;b_1,\ldots ,b_q;z)=\sum _{k=0}^{\infty }\frac{\prod _{j=1}^{p}(a_j)_k}{\prod _{j=1}^{q}(b_j)_k}\frac{z^k}{k!}\), where \((a)_k=a(a+1)\cdots (a+k-1)\) is the Pochhammer symbol. The series converges for all z if \(p\le q\), for \(|z|<1\) if \(q=p-1\), and for \(|z|=1\) if \(q=p-1\) and \(\mathrm {Re}[\sum _{j=1}^{p-1}b_j-\sum _{j=1}^{p}a_j]>0\). The exact solution of this OCP is \(x^*(t)=t^5\) and \(u^*(t)=\cos (t)\). Figures 1 and 2 plot the approximate state and control functions for \(N=5\); the exact solutions are shown as continuous lines and the approximate solutions as dots. The exact and approximate solutions overlap, which demonstrates the accuracy of the proposed method. Table 1 lists the optimal value of the objective functional for different values of N, and Table 2 gives the absolute errors of the control and state functions at several points in [0, 1]. The absolute errors are plotted in Figs. 3 and 4.
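The series definition above can be evaluated directly for moderate arguments. The following minimal sketch (the function name `hyp_pfq` is hypothetical) sums the truncated series using the ratio of consecutive terms, \(\prod _j(a_j+k)/\prod _j(b_j+k)\cdot z/(k+1)\). It assumes that no \(b_j\) is a non-positive integer and that z lies inside the convergence region; it is not how Mathematica's HypergeometricPFQ works internally, and for serious use a library routine such as mpmath's `hyper` is preferable.

```python
from math import exp, log, prod


def hyp_pfq(a, b, z, terms=400):
    """Truncated series for pFq(a_1..a_p; b_1..b_q; z) = sum_k prod(a_j)_k / prod(b_j)_k * z^k / k!.

    Intended for p <= q (any z) or p = q + 1 with |z| < 1; no b_j may be 0, -1, -2, ...
    """
    total, term = 0.0, 1.0                    # term_0 = 1
    for k in range(terms):
        total += term
        # term_{k+1} / term_k = prod(a_j + k) / prod(b_j + k) * z / (k + 1)
        term *= prod(aj + k for aj in a) / prod(bj + k for bj in b) * z / (k + 1)
    return total


print(hyp_pfq([], [], 1.5), exp(1.5))                   # 0F0(;;z) = e^z
print(hyp_pfq([1, 1], [2], 0.5), -log(1 - 0.5) / 0.5)   # 2F1(1,1;2;z) = -ln(1-z)/z
```

If some \(a_j\) is a non-positive integer, the series terminates and the sketch returns the resulting polynomial exactly (up to rounding).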

Absolute error of the control function with \(N=4\) for Example 1

Example 2

Consider

with the dynamical system

in which

where \(x^*(t)=t\sin (t)\) and \(u^*(t)=\cos (t)\) are the exact optimal state and control.

Figures 5 and 6 show the exact and approximate optimal control and state for \(N = 4\); the exact and approximate solutions overlap completely. The exact and approximate solutions are shown as a continuous orange line and black dots, respectively. Table 3 gives the optimal value \(J^*\) for \(N=2,3,4\), and Table 4 reports the absolute errors of the optimal state and control at different values of t. The absolute errors are plotted in Figs. 7 and 8.

6 Conclusions

The main objective of this paper is to present an effective collocation method based on Genocchi polynomials for solving OCPs governed by non-linear Volterra integral equations with weakly singular kernels. The proposed approach reduces the considered problem to an NLP in a simple and efficient way, and the resulting optimization problem is solved with the NMinimize function in Mathematica. The convergence of the proposed method has been analyzed, and illustrative examples demonstrate the scheme's easy applicability and good accuracy. The method can be applied in the same way to OCPs governed by integral equations whose kernels contain both an endpoint singularity and an Abel-type singularity; such equations typically have non-smooth exact solutions and many engineering applications. We hope to address problems of this type in a later study. In future research, other polynomial bases, such as Legendre, Chebyshev, or Bernoulli polynomials, could also be used. Finally, since Volterra integral equations of the first kind with singular kernels have substantial applications in load-leveling problems and power engineering systems, extending the proposed approach to OCPs governed by such equations is another direction for future work.

Availability of Data and Materials

The authors confirm that the data supporting the findings of this study are available within the article.

References

Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A., Jr.: Spectral Methods in Fluid Dynamics. Springer Science & Business Media (2012)

Ebrahimzadeh, A., Khanduzi, R., Beik, S.P.A., Baleanu, D.: Research on a collocation approach and three metaheuristic techniques based on MVO, MFO, and WOA for optimal control of fractional differential equation. J. Vib. Control 29(3–4), 661–674 (2023)

Abdou, M.A.: On asymptotic methods for Fredholm–Volterra integral equation of the second kind in contact problems. J. Comput. Appl. Math. 154, 431–446 (2003)

Datta, K.B., Mohan, B.M.: Orthogonal Functions in Systems and Control. World Scientific, Singapore (1995)

Ramos, J.I., Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods in Fluid Dynamics. Springer, New York (1988)

Atkinson, K.E.: The Numerical Solution of Integral Equations of the Second Kind. Cambridge University Press, Cambridge (1996)

Alt, W., Sontag, R., Tröltzsch, F.: An SQP method for optimal control of weakly singular Hammerstein integral equations. Appl. Math. Optim. 33 (1996)

Rösch, A., Tröltzsch, F.: Sufficient second order optimality conditions for a state-constrained optimal control problem of a weakly singular integral equation. Numer. Funct. Anal. Optim. 23, 173–194 (2002)

Heydari, M.H., Mahmoudi, M.R., Avazzadeh, Z., Baleanu, D.: cardinal functions for a new class of non-linear optimal control problems with dynamical systems of weakly singular variable-order fractional integral equations. J. Vib. Control 26, 713–723 (2020)

Chiang, S.: Numerical optimal unbounded control with a singular integro-differential equation as a constraint. In: Conference Publications, 2013, 129. American Institute of Mathematical Sciences (2013)

Lamm, P.K., Eldén, L.: Numerical solution of first-kind Volterra equations by sequential Tikhonov regularization. SIAM J. Numer. Anal. 34(4), 1432–1450 (1997)

te Riele, H.J.: Collocation methods for weakly singular second-kind Volterra integral equations with nonsmooth solution. IMA J. Numer. Anal. 2, 437–449 (1982)

Isah, A., Phang, C.: On Genocchi operational matrix of fractional integration for solving fractional differential equations. AIP Conf. Proc. 1795, 020015 (2017)

Isah, A.: Poly-Genocchi polynomials and its applications. AIMS Math. 6(8), 8221–8238 (2021)

Loh, J.R., Phang, C., Isah, A.: New operational matrix via Genocchi polynomials for solving Fredholm–Volterra fractional integro-differential equations. Adv. Math. Phys. 2017, 1–12 (2017)

Sadeghi Roshan, S., Jafari, H., Baleanu, D.: Solving FDEs with Caputo-Fabrizio derivative by operational matrix based on Genocchi polynomials. Math. Methods Appl. Sci. 41, 9134–9141 (2018)

Hashemizadeh, E., Ebadi, M.A., Noeiaghdam, S.: Matrix method by Genocchi polynomials for solving non-linear Volterra integral equations with weakly singular kernels. Symmetry 12, 2105 (2020)

Jajarmi, A., Pariz, N., Effati, S., Kamyad, A.V.: Infinite horizon optimal control for non-linear interconnected large-scale dynamical systems with an application to optimal attitude control. Asian J. Control 14, 1239–1250 (2012)

Jajarmi, A., Baleanu, D.: On the fractional optimal control problems with a general derivative operator. Asian J. Control 23, 1062–1071 (2021)

Maleknejad, K., Ebrahimzadeh, A.: An efficient hybrid pseudo-spectral method for solving optimal control of Volterra integral systems. Math. Commun. 19, 417–435 (2014)

Acknowledgements

We thank the anonymous reviewers for their helpful comments, which led to a definite improvement of the manuscript.

Funding

No funding was used for this research.

Author information


Contributions

AE and EH contributed equally to all parts of this research.


Ethics declarations

Conflict of interest

The authors declare no competing interests.

Ethics approval and consent to participate

All the authors agreed to participate in this research.

Consent for publication

All the authors agreed to publish this research.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ebrahimzadeh, A., Hashemizadeh, E. Optimal Control of Non-linear Volterra Integral Equations with Weakly Singular Kernels Based on Genocchi Polynomials and Collocation Method. J Nonlinear Math Phys 30, 1758–1773 (2023). https://doi.org/10.1007/s44198-023-00156-y


DOI: https://doi.org/10.1007/s44198-023-00156-y