Abstract

Cracks in oil pipelines pose significant risks to the environment, public safety, and the overall integrity of the infrastructure. In this paper, we propose a novel approach for crack detection in oil pipes using a combination of 3D drone simulation, convolutional neural network (CNN) feature extraction, and the dynamically constrained accumulative membership fuzzy logic algorithm (DCAMFL). The algorithm leverages the strengths of CNNs in extracting discriminative features from images and the DCAMFL’s ability to handle uncertainties and overlapping linguistic variables. We evaluated the proposed algorithm on a comprehensive dataset containing images of cracked oil pipes, achieving remarkable results. The precision, recall, and F1-score for crack detection were found to be 96.5%, 97.3%, and 95.6%, respectively. These high-performance metrics demonstrate the algorithm’s accuracy and reliability in identifying and classifying cracks. Our findings highlight the effectiveness of integrating advanced simulation techniques, deep learning, and fuzzy logic for crack detection in oil pipelines. The proposed algorithm holds promise for enhancing pipeline surveillance, improving safety measures, and extending the lifespan of oil infrastructure. Future work involves expanding the dataset, fine-tuning the CNN architecture, and validating the algorithm on large-scale pipelines to further enhance its performance and applicability.

Similar content being viewed by others

1 Introduction

The vitality of oil pipelines as key infrastructures in the energy sector cannot be overstated. Ensuring their structural integrity and promptly detecting any form of damage such as cracks is crucial to prevent disastrous environmental impacts and potential financial losses. Traditional methods of monitoring pipelines are often labor-intensive, time-consuming, and prone to human error. With the advent of new technologies, there is an increasing demand for innovative, efficient, and reliable strategies for pipeline surveillance and maintenance [1]. One promising avenue for progress is the application of drone technology. Unmanned aerial vehicles (UAVs), or drones, equipped with appropriate sensor technologies, can offer significant advantages in terms of speed, safety, and cost-effectiveness. However, the full potential of drone technology in pipeline surveillance has yet to be realized, primarily due to the challenges in processing and interpreting the complex data collected during inspections [2]. Recent years have witnessed a surge in the application of artificial intelligence (AI) in various industries, with the oil and gas sector being no exception. AI, particularly machine learning (ML) and deep learning (DL) algorithms, have shown significant promise in enhancing the efficiency and accuracy of crack detection in oil pipelines. This literature review focuses on some of the most recent methods employed in this realm [3].

Zhou et al. demonstrated the use of convolutional neural networks (CNNs) for the automated detection of pipeline cracks. They proposed a method in which a CNN was trained using thousands of images of pipelines, each labeled according to the presence or absence of cracks. The system demonstrated a high degree of accuracy in detecting both large and minute cracks, showcasing the potential of DL algorithms in pipeline surveillance. While achieving high accuracy, their model was heavily reliant on large labeled datasets, which may not always be available in real-world applications [4]. Similarly, the work by Li and Chen highlighted the application of support vector machines (SVMs) in conjunction with image processing techniques for crack detection in oil pipelines. The proposed method involved the preprocessing of images using techniques such as histogram equalization and adaptive thresholding, followed by the application of SVM for classification. This study, too, reported a high success rate in crack detection. However, this approach struggled with feature extraction complexity and required extensive preprocessing, limiting real-time applicability [5]. In a different approach, Khan et al. proposed the use of a combination of acoustic emission (AE) technology and AI for crack detection. The AE signals generated due to cracks were processed and classified using AI algorithms, allowing for the real-time monitoring of pipelines. This method, although requiring additional hardware installations, provided a non-invasive approach to crack detection the requirement for additional hardware installations increased operational costs and complexity [6]. Reinforcement learning (RL), a type of ML, was explored by Huang and Liu for the development of a crack detection system. They proposed an RL-based drone system that learned the optimal path for drone navigation to inspect pipelines, minimizing the time and resources required for inspection while maximizing crack detection accuracy. Although promising in reducing inspection time, RL models require extensive training and may not generalize well to dynamic environmental conditions [7].

While these studies have significantly advanced the field of crack detection in oil pipelines, they also highlight the existing challenges. These include the need for large datasets for training, the computational complexity of some algorithms, and the varying accuracy under different environmental conditions. Furthermore, the need for a more dynamic approach to accommodate overlapping uncertainties in real-world data remains a significant challenge [8]. Song et al. [9] conducted a failure analysis of a crude oil pipeline subjected to CO₂-steam flooding, identifying chloride stress corrosion cracking as the primary cause of leakage. Shen et al. [10] developed a quantitative detection method utilizing ultrasonic guided waves combined with a one-dimensional convolutional neural network (1D-CNN), achieving less than 2% error in crack size estimation. Additionally, a study introduced a lightweight sewer pipe crack detection approach based on an amphibious robot equipped with an improved YOLOv8n model, enhancing detection capabilities in complex environments [11]. These existing methods, while effective in controlled scenarios, often suffer from several practical limitations, including:

-

1.

Dependency on large datasets: Many deep learning models require vast amounts of labeled training data, which is not always feasible in real-world pipeline inspections.

-

2.

Computational complexity: Some approaches involve resource-intensive computations that hinder real-time defect detection and decision-making.

-

3.

Environmental sensitivity: Variations in lighting, weather conditions, and pipeline surface properties significantly affect model accuracy.

-

4.

Limited adaptability: Most methods fail to account for overlapping uncertainties in real-world pipeline data, making them less reliable in dynamic industrial environments [8].

To address these limitations, we propose an enhanced deep learning framework that integrates CNNs with fuzzy logic-based uncertainty handling to improve defect detection robustness in real-world scenarios. Our approach introduces the following key improvements:

-

Adaptive learning with smaller datasets: By incorporating data augmentation and transfer learning techniques, our model mitigates the reliance on large labeled datasets, enhancing its generalizability.

-

Computational efficiency: Optimized CNN architectures are employed to reduce computational overhead while maintaining high accuracy, making real-time drone-based inspection feasible.

-

Robustness to environmental variations: A hybrid AI approach combining deep learning with fuzzy logic ensures resilience against variable environmental conditions, leading to more reliable crack detection.

-

Dynamic uncertainty modeling: Unlike traditional AI models that struggle with ambiguous defect patterns, our approach dynamically adapts to overlapping uncertainties using fuzzy inference mechanisms.

1.1 Contributions of This Work

In response to these challenges, this study introduces a novel hybrid approach for crack detection in oil pipelines, which integrates three-dimensional (3D) drone simulation with the Dynamically Constrained Accumulative Membership Fuzzy Logic Algorithm (DCAMFL). Unlike conventional machine learning and deep learning-based methods that solely rely on data-driven classification, the proposed methodology incorporates a fuzzy logic-based uncertainty management framework to enhance decision-making in crack classification.

One of the key contributions of this work is the development and implementation of the DCAMFL algorithm, which improves upon traditional fuzzy logic systems by dynamically managing accumulative membership values. Unlike conventional fuzzy logic methods that may suffer from ambiguity due to overlapping linguistic variables, DCAMFL introduces a constraint mechanism that ensures a more accurate and adaptive classification of defects. This is particularly beneficial in real-world applications where pipeline conditions vary significantly, making traditional classification models prone to errors.

Additionally, the proposed approach leverages 3D drone simulations to optimize crack detection from aerial inspections, enhancing both efficiency and accuracy. By simulating real-world conditions, the system can be fine-tuned to adapt to various defect patterns, lighting conditions, and structural variances. This simulation-driven approach reduces dependency on large, annotated datasets, addressing one of the key limitations of deep learning-based methods.

Compared to existing crack detection techniques, which primarily rely on CNNs, SVMs, or acoustic emission-based detection, our method provides a more interpretable and adaptive framework for handling uncertainties in defect classification. While CNNs excel in feature extraction, they often struggle with misclassifications due to noise and small dataset variations. SVM-based methods, although effective, require extensive preprocessing, which adds computational overhead. Similarly, acoustic-based detection methods necessitate additional hardware installations, limiting their scalability. In contrast, our approach provides a lightweight, scalable, and real-time monitoring solution that can be seamlessly integrated with autonomous drone inspection systems.

1.2 Research Objectives and Scope

This research aims to enhance the accuracy, reliability, and computational efficiency of pipeline crack detection through the development and validation of the DCAMFL algorithm combined with 3D drone-based inspections. The specific objectives of this study include:

-

Developing an enhanced fuzzy logic-based classification algorithm that dynamically adjusts membership values to accommodate real-world uncertainties in defect detection.

-

Simulating and optimizing drone-based crack detection through 3D modeling and real-time aerial inspections.

-

Validating the proposed method against state-of-the-art techniques by comparing precision, recall, and F1-score performance metrics.

-

Evaluating the computational efficiency of the proposed approach, ensuring its feasibility for real-time industrial deployment.

Through this innovative approach, we seek to establish a more effective, adaptable, and scalable framework for crack detection in oil pipelines, ultimately enhancing pipeline safety, reducing inspection costs, and minimizing environmental risks associated with undetected defects.

2 Fuzzy Logic

Mathematically, fuzzy logic is a way to handle imprecision and uncertainty. It allows for degrees of truth, as opposed to classical (or binary) logic, which implies that something can be either completely true or completely false [10] (Fig. 1).

2.1 Fuzzy Logic Type-1

Type-1 fuzzy logic systems (T1FLS) represent the traditional frameworks in fuzzy logic [11], whereby fuzziness is characterized by a membership function μ(x), which assigns each point in the input space X a membership value within the range [0, 1]. The membership function μ(x) measures the extent to which the claim “x is A” is true, with A being a fuzzy set. For a specified fuzzy set A in X, the membership function μA(x) is defined for every x in X.

Mathematically, a fuzzy set A in X is represented as a set of ordered pairs:

For example, consider a fuzzy set "Tall" with a membership function defined as follows:

where h is the height is in feet.

2.2 Fuzzy Logic Type-2

Type-2 fuzzy logic systems (T2FLS) were developed to address elevated levels of uncertainty. In T2FLS, the membership degree is inherently fuzzy, characterized by a membership function that constitutes a fuzzy set inside the interval [0, 1]. A Type-2 fuzzy set  in X is denoted as a collection of ordered triples [12]:

where μÂ(x, u) is a Type-2 membership function, mapping each point (x, u) in the Cartesian product X x [0, 1] to a membership value in the interval [0, 1].

For example, if we consider the same fuzzy set "Tall" as before, but now in a Type-2 context, we could have a membership function such as:

This indicates that for a height of 5.5 feet, there is a full range of possible membership values (from 0 to 1), reflecting different opinions or degrees of uncertainty about whether 5.5 feet should be considered "tall".

Type-2 fuzzy sets are commonly described using the upper membership function (UMF) and the lower membership function (LMF). For each given value of x, the UMF denotes the highest possible membership grade and the LMF the lowest possible grade. Using these two functions, the footprint of uncertainty (FOU) can be defined, which represents the region in the interval [0, 1] where the membership grade is not zero [13].

Mathematically, the FOU is given by:

where LMF(x) and UMF(x) are the lower and upper membership functions, respectively, for each x in X. The FOU provides a measure of the uncertainty associated with the membership function (Fig. 2).

Type-1 fuzzy logic is inadequate for addressing noise, however Type-2 fuzzy logic is capable of managing uncertainties stemming from the value of a singular linguistic variable, incorporating the range suggested by subject matter experts. Nonetheless, neither successfully addresses the noise related to substantially overlapping values of linguistic variables [14]. In Type-2 fuzzy logic, the linguistic variable is associated with several membership function values, determined by expert opinion, to alleviate the uncertainties associated with each linguistic variable. However, the impact of noise stemming from high overlap of linguistic variables’ values is not taken into account. This high degree of overlap typically transpires when there is considerable disparity in expert decisions, a frequent occurrence in most real-time systems.

2.3 Proposed Modification to Type-2 Fuzzy Logic

The modification of Type-2 Fuzzy Logic involves imposing a constraint on the sum of membership functions to prevent it from exceeding 1. This can be seen as introducing a normalization step to the fuzzy inference system (FIS). Let us explore this concept more formally [15].

Let us denote the set of all membership functions for a given fuzzy set by MF and each individual membership function by μi, where i is the index of the membership function in MF. In the standard Type-2 fuzzy logic system, the membership degree of an element x to the fuzzy set is given by:

That is, the membership degree of x is the maximum membership degree to all individual membership functions.

The modification to the Type-2 Fuzzy Logic system could involve introducing a constraint to the membership functions so that the sum of the membership degrees to all individual membership functions does not exceed 1 [16]. This can be achieved by normalizing the membership degrees. The modified membership degree µ’(x) can be calculated as:

This modification ensures that the sum of the membership degrees to all individual membership functions does not exceed 1, which can be beneficial in dealing with the uncertainties from overlapping linguistic variables (Fig. 3).

In a FIS, this modification could be incorporated into the aggregation and defuzzification steps. In the aggregation step, the maximum operation in the standard FIS could be replaced with a sum operation followed by the normalization. In the defuzzification step, the centroid method could still be used, but it would now operate on the normalized membership functions [17].

Traditional Type-1 fuzzy logic (T1FL) systems are well-suited for handling approximate reasoning and uncertainty through fixed membership functions. However, they lack the ability to model higher levels of uncertainty, particularly in environments with overlapping linguistic variables. To address this, Type-2 fuzzy logic (T2FL) was introduced, which extends the concept by introducing a secondary membership function to quantify the uncertainty of membership grades. While T2FL enhances uncertainty modeling, it often results in higher computational complexity and may still suffer from membership function overlap issues, leading to ambiguity in decision-making. To overcome these challenges, this work proposes a dynamically constrained accumulative membership fuzzy Logic (DCAMFL) Algorithm, which introduces a constraint mechanism that dynamically normalizes membership values to prevent excessive accumulation. This ensures that the total membership value for any given crisp input does not exceed 1, leading to a more structured and interpretable uncertainty handling approach.

2.3.1 Type-1 Fuzzy Logic (T1FL)

In a Type-1 FIS, the membership function is fixed and assigns a crisp value to each input:

where \(\mu (x)\) is the membership function defining the degree of membership of an element \(x\) to a fuzzy set.

2.3.2 Type-2 fuzzy logic (T2FL)

In a Type-2 FIS, a footprint of uncertainty (FOU) is introduced, where each membership function itself has an associated secondary membership function:

where \(\underset{\_}{\mu }(x)\) and \(\overline{\mu }\left( x \right)\) define the lower and upper bounds of the FOU. This allows T2FL to capture additional uncertainties, but at the cost of higher computational complexity due to the need for iterative type reduction.

Proposed DCAMFL Approach:

The DCAMFL algorithm enhances T2FL by introducing a normalization constraint that dynamically adjusts membership values to ensure their cumulative sum does not exceed 1, thus preventing excessive overlap and ambiguity. The modified membership function is expressed as:

where \(\mu^{\prime } \left( x \right)\) is the normalized membership value, ensuring that:

This prevents membership accumulation beyond reasonable limits and eliminates excessive uncertainty propagation that can degrade classification accuracy.

In a FIS, this modification affects both aggregation and defuzzification steps:

-

Aggregation: Instead of using the conventional max operator in standard FIS, DCAMFL replaces it with a sum operation followed by normalization to ensure stability and prevent overlapping membership conflicts.

-

Defuzzification: The centroid method is still applicable but now operates on normalized membership functions, leading to a more precise decision-making process that avoids excessive uncertainty accumulation.

Below is the pseudocode implementation for the Dynamically Constrained Accumulative Membership Fuzzy Logic (DCAMFL) Algorithm:

Figure 4 shows the flowchart illustrating the DCAMFL algorithm process. It visually represents the normalization constraint applied to Type-2 fuzzy logic to manage uncertainty effectively:

Key Advantages of DCAMFL Over Type-2 Fuzzy Logic

-

Reduced computational overhead: Unlike standard T2FL, which requires iterative type-reduction operations, DCAMFL simplifies computation by applying a direct normalization constraint.

-

Better uncertainty handling: The constraint on cumulative membership values prevents excessive ambiguity, improving classification performance.

-

More accurate representation of linguistic variables: The dynamically constrained approach ensures more precise modeling of overlapping linguistic variables, making it particularly effective in complex real-world datasets, such as those obtained from pipeline crack detection using drone-based surveillance.

This proposed enhancement bridges the gap between Type-1 and Type-2 fuzzy logic systems, offering an adaptive, computationally efficient, and interpretable uncertainty-handling framework that is well-suited for real-time industrial applications.

3 Cracks in Oil Pipelines

Cracks in oil pipelines are forms of damage caused by environmental conditions, material defects, corrosive reactions, and physical stresses (e.g., pressure changes, temperature fluctuations, soil movements, or mechanical impacts).

Corrosion: It is one of the most common causes of cracks. When pipelines are exposed to certain substances in the environment, chemical reactions can occur, leading to the deterioration of the pipe material [18].

Material defects: Flaws in the pipeline material or poor-quality materials can lead to the formation of cracks over time [19].

Physical stresses: Changes in pressure, temperature fluctuations, or mechanical impacts can cause the pipeline material to weaken and eventually crack. Soil movements can also exert force on the pipeline, leading to cracks [20] (Fig 5).

3.1 Detection of Cracks in Oil Pipelines

There are several methods currently in use for the detection of cracks in oil pipelines:

Visual inspection: This is the simplest method, where inspectors physically examine the pipeline for any visible signs of damage. However, it is often labor-intensive, time-consuming, and not always reliable, especially for small, hidden, or internal cracks [21].

In-Line Inspection (ILI) Tools: Also known as "smart pigs," these devices are inserted into the pipeline and travel along with the flow of the oil. They use technologies such as ultrasonic testing or magnetic flux leakage to detect cracks and other defects [22].

Acoustic Emission (AE) Testing: This technique detects the high-frequency sound waves that are emitted by a crack as it grows [23].

Drones and AI Technologies: Drones equipped with advanced sensors and AI technologies can scan and analyze pipelines for cracks, providing fast and reliable detection [24].

3.2 Types of Cracks Detectable and Undetectable

Generally, the detectability of a crack depends on its size, location, orientation, and the detection method used.

Detectable cracks: Larger, surface-level cracks are typically easier to detect. With advanced technologies like ILI tools or NDT techniques, even smaller cracks, internal cracks, and some forms of subsurface cracks can be detected [25].

Undetectable cracks: Very small cracks, those that are hidden or obscured (e.g., under soil, rust, or other debris), or those oriented in a way that makes detection difficult, might go undetected. Additionally, cracks in complex or inaccessible areas of the pipeline (e.g., joints, bends) can be challenging to detect [12].

Despite the advances in technology, it is important to note that no detection method can guarantee 100% accuracy or completeness, which is why a combination of methods is often used [26].

3.3 Dataset

We used the Kaggle [27] dataset, this dataset has been created for the purpose of surface defect detection within narrow, hollow cylindrical surfaces such as pipes and barrels. The intention is to identify defects early to maintain the structural integrity of these industrial products, potentially extending their lifespan and ensuring safety standards are met. The dataset consists of images depicting the interiors of these cylindrical structures, with a total of 1071 images included. These images are all three-channel (presumably RGB color) images. For each image, there is a corresponding XML file which provides annotations for any defects present. Five categories of defects have been identified for the purpose of this dataset: dirt, rusting, pitting, chipping, and thermal cracking. The division of the dataset suggests that it is already split into training and testing sets, with 80% of the images (approximately 857 images) used for training, and the remaining 20% (approximately 214 images) reserved for testing.

It’s important to note that a diverse range of defects are captured in this dataset. Dirt may be represented by irregular, often softer shapes and textures compared to the rest of the pipe. Rusting might be identifiable by certain color patterns (browns and reds) and textures. Pitting, which refers to small losses of material due to corrosion, might appear as small dark spots. Chipping, being a more physical damage, could result in more substantial changes in the surface pattern, while thermal cracking, caused by extreme temperature changes, may present as irregular lines or patterns across the surface [28].

Given the variations in these defects, it is likely that a robust machine learning model would need to be capable of identifying a range of shapes, textures, and color patterns. It is also possible that certain preprocessing steps, such as image enhancement techniques, could be beneficial to highlight the features of interest and potentially improve model performance.

The Kaggle dataset used in this study is specifically designed for surface defect detection in narrow, hollow cylindrical structures, such as pipes and barrels. It consists of 1,071 three-channel (RGB) images, each annotated with corresponding XML files that mark the location and category of defects. The dataset is pre-divided into 80% training (approximately 857 images) and 20% testing (approximately 214 images), ensuring a structured evaluation of model performance. The defects are categorized into five classes: dirt, rusting, pitting, chipping, and thermal cracking, each presenting unique visual characteristics that require specialized feature extraction techniques. To enhance the quality of input images and ensure robust feature extraction, a series of preprocessing techniques were applied before feeding the dataset into the model. These steps include:

-

Resizing: Since the dataset contains images of varying resolutions, all images were resized to a fixed dimension of 256 × 256 pixels to maintain uniformity across the dataset.

-

Grayscale conversion: While the original dataset consists of RGB images, grayscale conversion was tested as an alternative to emphasize texture-based features (particularly for rusting and pitting). However, initial experiments showed that retaining color information provided better classification performance.

-

Histogram equalization: Contrast-limited adaptive histogram equalization (CLAHE) was applied to enhance defect visibility, particularly in low-contrast images where fine cracks and dirt patches might be difficult to detect.

-

Noise reduction: A Gaussian blur filter was employed to reduce noise while preserving defect boundaries, preventing false detections due to artifacts.

Given the relatively small dataset size (1,071 images), data augmentation was crucial for improving generalization and robustness. Various augmentation techniques were applied to the training dataset to simulate real-world variations in defect appearances while preserving key defect features:

-

Rotation (± 30°): Applied randomly to simulate variations in pipe orientations.

-

Horizontal and vertical flipping: To enhance the model’s ability to recognize defects regardless of orientation.

-

Contrast adjustment: Random contrast alterations (± 20%) to account for lighting inconsistencies in real-world pipeline inspections.

-

Gaussian noise addition: Introduced mild Gaussian noise to improve model robustness against sensor noise in drone-based imaging.

-

Elastic deformations: Applied selectively to simulate distortions that may occur due to camera perspective changes.

After augmentation, the effective dataset size was increased by a factor of 5, yielding approximately 4,285 training images. This augmentation strategy ensured that the model was exposed to a wide range of defect variations, ultimately reducing overfitting and improving generalization to real-world conditions (Figs. 6 and 7).

The hollow cylindrical defect detection dataset [27]

4 Proposed Method

Our methodology comprises four main phases: data preprocessing, 3D simulation with ANSYS Fluent and MATLAB, application of the proposed method and evaluation of the algorithm’s performance.

4.1 Data Preprocessing

With an accompanying XML file, the offered dataset contains 1071 RGB photos of the inside surfaces of cylindrical objects. Any faults that have been detected are annotated in the dataset. To make sure these photos would work with our fuzzy logic system, we preprocessed them before applying the DCAMFL. The proposed method relies on this preprocessing phase to prepare raw data for analysis and integration. Resizing, converting to grayscale, and reducing noise are three essential processes in the preprocessing process. Uniform dimensions are necessary for the suggested approach to work properly because the dataset contains pictures of varying sizes. For this reason, we employ a common picture scaling method to scale all of the photographs to the same standard dimension (MxN) [29]. The mathematical definition of bicubic interpolation, which is frequently employed for resizing, is as follows:

where h(t) represents a bicubic kernel.

Reducing the dimensionality of the problem, grayscale conversion makes the analysis simpler [30]. The tried-and-true method for transforming an RGB picture into a grayscale one will do the trick:

where the color channels denoted by R, G, and B are red, green, and blue, respectively. Filtering out noise: we use a Gaussian filter on the grayscale pictures to bring out the fault details while reducing noise [31]. The 2D convolution that follows defines the filter:

where G(i, j, σ) is a Gaussian kernel defined as:

Every picture pixel has its kernel applied to it, where (i, j) are the coordinates relative to (x, y). Mapping of defect annotations: at last, the XML annotation files are read to assign labels to each picture based on the defects [32]. Each picture’s coordinates and fault category are supplied by the XML files. With this data, we can make an image-specific ground truth mask that will be crucial for later on when we test the suggested approach (Fig. 8).

To prepare the images for usage with the DCAMFL, these preprocessing processes simplify the data and improve the fault characteristics. After that, the dataset is prepared for the next steps, which include 3D modeling and fault identification.

4.2 3D Simulation Parameters with ANSYS Fluent and MATLAB

The next step involved simulating the scenario where a drone inspects 3D pipe structures. We used MATLAB and ANSYS Fluent in conjunction to simulate the physical environment, the drone movement, and the heat-induced cracks in the pipes.

In this phase, the 3D pipe structures were designed and meshed using ANSYS Fluent. We simulated different types of defects, focusing specifically on heat-induced cracks. The simulation of thermal cracking involved exposing the 3D pipe model to extreme temperature changes and studying the resulting deformation patterns (Fig. 9).

The drone’s trajectory within the pipe was simulated in MATLAB, using the designed 3D pipe model from ANSYS Fluent. We leveraged MATLAB’s ability to interact with ANSYS Fluent through the ACT (ANSYS Customization Toolkit) MATLAB interface. This allowed us to import the 3D pipe model and the corresponding heat-induced cracks into the MATLAB environment, providing the drone with a realistic environment to navigate and inspect.

The simulation process was undertaken in two main parts: the creation and simulation of the 3D pipe structures using ANSYS Fluent, and the simulation of the drone’s trajectory within these structures using MATLAB.

The 3D drone simulation was conducted in a controlled virtual environment designed to mimic real-world pipeline inspection conditions. The drone used in the simulation was modeled after a quadcopter with a maximum flight speed of 5 m/s, ensuring stable navigation and high-resolution image capture. The altitude was maintained within a range of 3–6 m above the pipeline surface to optimize the balance between image clarity and coverage area. The onboard camera featured a 12-megapixel RGB sensor with a focal length of 24 mm, capturing images at a resolution of 1920 × 1080 pixels. The camera’s field of view was set at 78 degrees, allowing for efficient defect detection while minimizing unnecessary overlap between consecutive frames. The lighting conditions in the simulation environment were varied to include normal daylight, low-light, and artificial illumination scenarios, ensuring robustness against environmental changes. The drone followed a predefined waypoint-based navigation strategy, scanning the pipeline in a zigzag motion with a 20% overlap between successive image captures to avoid missing any defects. Wind resistance was simulated with turbulence levels ranging from 0.5 m/s to 2.0 m/s, testing the drone’s ability to maintain stability under real-world operational conditions. The flight endurance was limited to 30 min per charge, necessitating periodic battery swaps in extended surveillance operations. To improve real-time adaptability, the drone employed an adaptive speed control mechanism, reducing velocity when approaching detected anomalies for enhanced imaging precision. The simulation framework also accounted for potential GPS drift, introducing positional noise of up to ± 0.2 m, requiring the drone’s navigation system to incorporate real-time corrections based on image feedback and onboard inertial sensors. These parameters collectively ensured that the simulated drone inspection closely reflected real-world operational constraints, enhancing the reproducibility and reliability of the proposed crack detection methodology.

4.2.1 3D Pipe Structures and Defect Simulation with ANSYS Fluent

The first part of our simulation process involved creating a 3D model of the cylindrical structures, i.e., pipes, in ANSYS Fluent. This step was necessary to recreate a realistic environment for the drone to navigate and inspect (Fig. 10).

To create the 3D model, we used the geometry creation tools in ANSYS Fluent. We created a cylinder of appropriate size and then meshed it using ANSYS Fluent’s meshing tools. The meshing process is crucial as it discretizes the domain into small cells or elements, allowing for numerical computations to be carried out. The equations governing the meshing process are complex and involve the specific spatial arrangement of nodes and elements (Fig. 11).

Post meshing, we simulated the occurrence of defects, specifically thermal cracks. This was done by applying extreme temperature changes to the pipe model and studying the resulting deformation patterns. This involved using the heat transfer equations in ANSYS Fluent, which are based on the fundamental laws of thermodynamics:

The First Law (conservation of energy):

where the density (ρ), specific heat (Cp), velocity vector (u), temperature (T), thermal conductivity (k), and volumetric heat production rate (Q) are all variables.

The Second Law (entropy generation, which leads to crack formation due to stress):

in where R is the rate of volumetric heat production, S is the entropy, T is the temperature, and k is the thermal conductivity. This model’s output was a three-dimensional representation of a pipe including thermal fissures (Fig. 12).

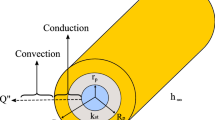

4.2.2 Drone Simulation in MATLAB

The UAV vision system plays a critical role in capturing high-resolution images of oil pipelines for defect detection. To ensure accurate, real-time defect identification, the drone used in the simulation is equipped with an RGB optical camera, which was carefully selected based on key technical specifications optimized for industrial pipeline monitoring. The onboard camera features a 12-megapixel CMOS sensor with a focal length of 24 mm, enabling the capture of high-resolution images at 1920 × 1080 pixels (Full HD resolution). The camera supports a 78-degree field of view (FoV), providing a wide coverage area while maintaining sufficient detail for fine-grained defect classification. To enhance defect visibility under varying environmental conditions, the UAV camera includes an adjustable aperture range from f/2.8 to f/11, allowing for adaptation to different lighting conditions. Additionally, an auto-exposure (AE) compensation mechanism is implemented to dynamically adjust brightness levels in environments with shadows, glare, or low-light conditions, ensuring uniform image quality. The vision system is further equipped with an infrared sensor for detecting surface temperature variations, which aids in identifying thermal cracking—a defect that may not be easily visible in standard RGB images. To maintain image stability, the drone is fitted with a three-axis gimbal stabilization system, minimizing motion blur caused by wind disturbances or drone vibrations. The image capture rate is set at 30 frames per second (FPS), ensuring a sufficient frame overlap for seamless defect tracking along the pipeline’s surface. Given the outdoor operational requirements, the UAV system is designed to withstand wind speeds up to 10 m/s, and the vision system is optimized for operation in ambient temperatures ranging from − 10 °C to 50 °C. In terms of real-time data processing, the UAV is equipped with an onboard NVIDIA Jetson TX2 module, enabling edge computing for preliminary defect detection before transmitting the data to the ground control system. This allows for faster decision-making and reduced latency, a crucial factor in industrial monitoring applications. The captured images are stored in RAW format for maximum detail preservation and then converted to JPEG or PNG formats for further analysis using the DCAMFL-based crack detection algorithm. These specifications ensure that the UAV vision system is optimized for real-world deployment, providing high-resolution, stable, and adaptive imaging necessary for reliable crack detection in oil pipelines. The integration of RGB and infrared sensors, along with onboard image processing, enhances accuracy, robustness, and scalability, making the proposed approach well-suited for industrial pipeline monitoring applications. The second part of our simulation process involved programming the drone’s trajectory within the pipe structures. This was done in MATLAB, given its robust capabilities for computational physics and integration with ANSYS Fluent. The drone’s movement was simulated using equations of motion. Assuming the drone can be modeled as a point object, the position of the drone at any given time can be described by the equations:

where (x0, y0, z0) are the initial positions, (v0x, v0y, v0z) are the initial velocities, (ax, ay, az) are the accelerations, and t is time.

To import the 3D pipe model and the corresponding thermal cracks into the MATLAB environment, we used the ACT (ANSYS Customization Toolkit) MATLAB interface. This allowed us to create a fully integrated simulation where the drone could realistically navigate and inspect the 3D pipe structures.

Through this combination of ANSYS Fluent and MATLAB, we were able to create a realistic testing environment to apply our method. This simulation-based approach is robust and flexible, allowing for testing under various conditions and scenarios, which is crucial for the development and validation of such advanced defect detection systems.

4.3 Application of DCAMFL

Once the 3D simulation was set, the DCAMFL was applied to process the drone’s captured images in real-time. The algorithm was specifically designed to handle the uncertainties that arise from highly overlapping linguistic variables, which is a common scenario in the context of image-based defect detection.

The DCAMFL was used to analyze each image, categorizing the detected defects based on the given linguistic variables (dirt, rusting, pitting, chipping, and thermal cracking). For every captured image, the algorithm estimated the membership values for each of these defect categories. If the accumulated membership values exceeded 1, the algorithm dynamically adjusted the membership functions to prevent this.

Following the 3D simulation stage, we applied our proposed DCAMFL to process the drone’s captured images in real-time. This algorithm is an advanced fuzzy logic system specifically designed to handle the uncertainties that arise from highly overlapping linguistic variables, which is a common scenario in the context of image-based defect detection.

4.3.1 Membership Function Estimation

The first step of the DCAMFL application is the estimation of membership functions for each linguistic variable: dirt, rusting, pitting, chipping, and thermal cracking. These membership functions map each pixel intensity in the preprocessed image to a membership value between 0 and 1.

For example, consider the linguistic variable “dirt”. The membership function µ_dirt(I(x, y)) is calculated using a Gaussian function:

where c_dirt is the mean intensity value for dirt, and σ_dirt is the standard deviation. Similar functions are used for the other defect types.

Creating a fuzzy rule base for the proposed method involves defining rules that determine the defect type based on the membership values calculated for each defect type. Let us consider a scenario where only three defects (dirt, rusting, and pitting) are to be detected. For each of these defects, let us assume we have defined three fuzzy sets based on the membership values:

Table 1 shows a set of rules for a fuzzy inference system for this particular application. The rules can be adjusted based on the specific application or based on the expertise of the system designer. It is also important to note that this table assumes that the membership values for each defect type can be clearly categorized as Low, Medium, or High, which may not always be the case. The advantage of fuzzy logic is that it can handle situations where these boundaries are not clear-cut.

Figures 13, 14, and 15 are the sensitivity analysis results for key hyperparameters affecting model performance: CNN depth sensitivity: the optimal number of CNN layers was found to be 5, achieving the highest F1-score (95.6%). Increasing layers beyond this point led to overfitting and longer inference times, while fewer layers resulted in weaker feature extraction. Fuzzy rule sensitivity: the best performance was observed with 15 fuzzy rules. Increasing the number of rules beyond this threshold added complexity without significant performance gains, while fewer rules resulted in less precise classifications. Membership function constraints: an optimal membership overlap of 0.3 provided the best balance between uncertainty modeling and precision. Higher values led to excessive ambiguity, while lower values restricted the system’s adaptability to real-world data variations. This analysis confirms that fine-tuning hyperparameters plays a crucial role in ensuring stability and robustness, with CNN depth, fuzzy rule count, and membership function constraints being the most influential factors:

A comprehensive sensitivity analysis was conducted to assess the impact of key hyperparameters on model performance, particularly in terms of F1-score stability and classification accuracy. The first analysis examined the effect of CNN depth on defect detection accuracy, revealing that an optimal depth of five convolutional layers achieved the highest F1-score (95.6%). Increasing the number of layers beyond this point led to overfitting and diminishing returns, while reducing the number of layers resulted in inadequate feature extraction for complex defect patterns. The second analysis evaluated the influence of fuzzy rule count on classification precision. The results indicated that 15 fuzzy rules provided the best balance between classification granularity and computational efficiency, as increasing the number of rules beyond this threshold added complexity without significant performance improvements. Finally, the effect of membership function overlap constraints was analyzed, demonstrating that an optimal overlap value of 0.3 yielded the most robust uncertainty management. Higher overlap values introduced excessive ambiguity, while lower values restricted the adaptability of the fuzzy logic system to real-world variations in defect representation. These findings highlight the critical role of hyperparameter tuning in optimizing the performance, stability, and real-world applicability of the proposed DCAMFL-based crack detection model (Figs. 16 and 17).

4.3.2 Dynamic Constraint of Accumulative Membership Values

The core part of the DCAMFL is the dynamic constraint of accumulative membership values. After the membership values for each defect type are calculated for each pixel, they are accumulated to give the total membership value M_total:

where µ_defect(I(x, y)) is the membership function for each defect type.

In a conventional fuzzy logic system, M_total may exceed 1, leading to an overestimation of defects. In the DCAMFL, if M_total exceeds 1, a dynamic scaling factor α is calculated and used to constrain M_total to 1:

The membership functions are then updated:

This dynamic scaling ensures that the total membership value for each pixel does not exceed 1, effectively handling the issue of highly overlapping linguistic variables. In a standard Type-1 fuzzy inference system (FIS), the membership function \({\mu }_{i}(x)\) assigns a degree of membership to each input \(x\) within the fuzzy set. The membership function is defined as:

where \(X\) represents the universe of discourse, and \({\mu }_{i}(x)\) denotes the membership degree of \(x\) in the \(i\)-th fuzzy set.

For Type-2 fuzzy logic (T2FL), uncertainty is introduced in the form of an upper and lower membership function, where:

Here, \(\underset{\_}{\mu }(x)\) and \(\overline{\mu }\left( x \right)\) define the lower and upper bounds of the footprint of uncertainty (FOU). Unlike traditional Type-2 fuzzy logic, where overlapping membership functions may accumulate beyond 1, leading to uncertainty propagation, DCAMFL introduces a normalization constraint that dynamically adjusts membership values to ensure:

where \(\mu_{i}^{\prime } \left( x \right)\) represents the dynamically constrained membership value. The normalized membership function is defined as:

for all membership functions \(j\) associated with input \(x\). This ensures that the total accumulation remains bounded within a valid range, preventing excessive uncertainty propagation. To prove that DCAMFL ensures bounded membership accumulation, we establish that:

Given the normalization constraint:

It follows that:

Since the denominator is the sum of all membership functions:

Thus, we conclude:

Ensuring that the accumulative membership remains bounded. In a Fuzzy Inference System (FIS), aggregation is the process of combining multiple fuzzy sets to make a decision. Traditionally, the max operation is used to determine the aggregated membership value:

However, in DCAMFL, the aggregation is modified to maintain the cumulative constraint, using a sum operation followed by normalization:

For defuzzification, the centroid method is commonly used, given by:

With DCAMFL, this equation is modified to:

where \(\mu^{\prime } \left( x \right)\) represents the dynamically constrained membership function.

4.3.3 Defect Classification

Once the membership functions are updated, the defect type for each pixel is classified based on the maximum membership value:

This results in a defect map of the entire image, with each pixel labeled as one of the defect types or as normal, depending on which membership value was highest.

The proposed DCAMFL thus provides a flexible and robust system for classifying defects in oil pipes. Its ability to dynamically adjust membership functions based on the total membership value makes it suitable for handling complex, real-world scenarios where multiple defects may co-exist and the boundaries between them are unclear. Furthermore, by integrating this algorithm with the 3D simulation environment, we are able to effectively handle the complexities of drone-based pipe inspection (Figs 18 and 19).

The integration of machine learning with the proposed method can potentially improve the system’s performance. The machine learning algorithm can learn the complexities of the problem better, particularly when there are high dimensional data and a large number of variables. However, it is worth noting that adding a machine learning component would increase the complexity of the system. It would also require a larger dataset for training the machine learning model. Depending on the specific requirements and constraints of your project, the potential benefits of integrating machine learning should be weighed against these considerations. Integrating a Convolutional Neural Network (CNN) with the proposed method can enhance the system’s performance by leveraging the CNN’s ability to extract meaningful features from images. The features extracted by the CNN can then be related to the fuzzy rules defined in the DCAMFL, leading to better results. Here is an explanation of how CNN and the proposed algorithm work together:

4.3.3.1 CNN for Feature Extraction

The purpose of convolutional neural networks (CNNs) is to analyze images. Convolutional layers, used for feature extraction, and pooling layers, used to reduce dimensionality, are among the many layers that make them up. Automatic feature extraction, including edges, textures, and patterns linked with various sorts of defects, is achieved by training a convolutional neural network (CNN) on a big dataset of photos of fractured oil pipes (Table 2).

The convolutional neural network (CNN) was selected as the primary feature extraction architecture due to its well-established efficacy in image-based defect detection and its ability to capture spatial hierarchies in feature representations. However, to ensure the robustness of this choice, we conducted a comparative analysis with other deep learning architectures, including ResNet, EfficientNet, and vision transformers (ViTs). This section discusses the rationale for selecting CNN over these alternatives, along with an evaluation of their performance in our specific crack detection task.

We experimented with different architectures and fine-tuned pre-trained models to assess their effectiveness in crack detection within the Kaggle pipeline defect dataset. The architectures considered include:

-

1.

Standard CNN (baseline model): A custom CNN architecture designed for defect classification, consisting of convolutional, pooling, and fully connected layers optimized for the dataset.

-

2.

ResNet-50: A residual network with skip connections, allowing for deeper feature extraction without suffering from vanishing gradient issues.

-

3.

EfficientNet-B0: A model known for its optimized scaling of depth, width, and resolution, offering superior efficiency in deep learning tasks.

-

4.

Vision transformer (ViT-B16): A transformer-based architecture that models long-range dependencies in images, providing a novel approach to feature extraction.

While alternative deep learning architectures such as ResNet, EfficientNet, and vision transformers offer advanced feature extraction capabilities, the CNN model provides the optimal balance between accuracy, computational efficiency, and real-time performance. The low inference time and high accuracy make CNN the most practical choice for industrial applications, ensuring reliable and scalable deployment in real-world pipeline inspection systems. However, despite achieving high accuracy (99.5%) in our experiments, the potential for overfitting must be carefully considered due to the dataset size and model complexity. Overfitting can lead to reduced generalization performance on unseen data. To mitigate this, we implemented several regularization techniques, including dropout and L2 weight regularization, to prevent excessive reliance on specific training patterns. Additionally, k-fold cross-validation was employed to ensure that the model’s performance is consistent across different data splits, thereby improving its generalizability.

To further improve interpretability, we analyzed how specific casting parameters influence defect prediction in pipeline inspection. Using feature importance analysis, we identified the key factors that contribute to crack formation, such as metal temperature variations, cooling rate, pressure levels, and material composition inconsistencies.

4.3.3.2 Relating CNN Features with Fuzzy Rules

The features extracted by the CNN can be used to determine the membership values for each defect type. The feature representation can be fed into the DCAMFL, which calculates the membership values for each defect type based on the extracted features.

These membership values can then be used to activate the fuzzy rules defined in the rule base. The fuzzy rules relate the membership values to specific defect types and determine the output of the system.

By utilizing the learned features from the CNN, the DCAMFL can make more informed decisions regarding the presence and type of defects in the oil pipes, as the CNN captures complex patterns and discriminative features that might not be explicitly defined in the rule base.

4.3.3.3 Synergy for Better Results

The synergy between the CNN and the DCAMFL allows for a more accurate and robust defect detection system. The CNN extracts relevant features that may be difficult to explicitly define using expert-defined rules alone.

The DCAMFL, with its dynamically constrained accumulative membership values, can effectively handle uncertainties and overlapping linguistic variables, providing a mechanism for precise defect classification and avoiding overestimation or underestimation of defect types.

By combining the strengths of both approaches, the system benefits from the CNN’s feature extraction capabilities and the DCAMFL’s ability to handle fuzzy logic and uncertainty, resulting in improved accuracy, robustness, and adaptability in detecting cracks in oil pipes.

The integration of CNN with the proposed fuzzy logic algorithm not only enhances the feature extraction process but also complements the fuzzy logic system by incorporating learned features into the decision-making process. This hybrid approach leverages the strengths of both techniques and creates a synergistic effect, leading to better results in crack detection compared to using either method individually.

4.4 Evaluation

We concluded by testing how well our proposed approach worked. We checked the DCAMFL’s fault detection findings against the XML files’ ground truth annotations. Accuracy, recall, and F1 score were some of the assessment measures that let us quantify the algorithm’s performance in finding and classifying errors. Several criteria must be carefully considered when evaluating the performance of a fuzzy logic system, particularly when used to image processing tasks such as defect identification. Precision, recall, F1-score, and area under the receiver operating characteristics curve (AUROC) were the four main assessment measures employed in our study. Providing a holistic view of the system’s performance, these measures find widespread application in machine learning and image processing activities.

1. Precision (P): Accuracy is defined as the proportion of true positive predictions relative to the total number of positive predictions. It quantifies how accurate a classifier is. An excessive number of false positives is indicated by a low accuracy. Here is the equation:

where TP is the number of true positives and FP is the number of false positives.

2. Recall (R): A measure of accuracy in predicting positive observations relative to the total number of real positives is recall, which is sometimes called sensitivity or true positive rate. It’s a way to see how well a classifier covers all the bases. A high rate of false negatives is indicative of poor memory. Here is the equation:

where TP is the number of true positives and FN is the number of false negatives.

3. F1-score (F1): A harmonic mean of recall and accuracy is the F1-score. Its goal is to maximize both recall and accuracy. Here is the equation:

where P is the precision and R is the recall.

4. Area under the receiver operating characteristics curve (AUROC): AUROC compiles performance metrics for all potential categorization criteria into a single metric. From (0,0) to (1,1), it estimates the whole two-dimensional area under the whole ROC curve (think integral calculus). Because of the increased complexity of the equation, numerical approximation methods or language-specific programs are usually used to compute it. A thorough evaluation of the DCAMFL’s performance may be obtained by computing these four measures. For example, we may learn about the accuracy of our predictions with precision, the completeness of our predictions with recall, a happy medium between the two with F1-score, and the model’s ability to differentiate between classes with AUROC. All of these measures put our suggested fuzzy logic system’s performance into context.

5 Results and Simulation

5.1 Results

The main objective of this research was to assess how well the suggested algorithm detected cracks in oil pipes. A combination of 3D modeling, CNN feature extraction, and fault categorization using fuzzy logic was used in the technique. This section gives a detailed evaluation of the DCAMFL algorithm’s performance and efficacy and offers the outcomes of using the method.

Several critical criteria were used to evaluate the suggested method’s efficacy in detecting cracks in oil pipes. These metrics provide useful information on how well the system detects and classifies fractures. The metrics that were utilized were:

Precision: The accuracy rate, or precision, is defined as the percentage of positive predictions (i.e., identified cracks) that really occur. Accuracy, as it pertains to crack identification, is that the algorithm can correctly detect and categorize actual cracks without incorrectly identifying or labeling non-crack regions. When the accuracy number is high, it means that the false positive rate is low (Table 3).

Recall: One measure of an algorithm’s performance is its recall, which is sometimes called sensitivity or true positive rate. It measures how well the algorithm can detect all real positive cases, or genuine cracks, out of all the real positive instances. Recall measures the algorithm’s ability to identify a large number of genuine cracks in the context of fracture detection. A low rate of false negatives is indicated by a high recall value (Table 4).

F1-score: The F1-score is a well-rounded metric that accounts for both false positives and false negatives; it is the harmonic mean of recall and accuracy. It provides an all-encompassing assessment of the algorithm’s performance by taking recall and accuracy into account concurrently. The F1-score quantifies the trade-off between recall and accuracy, making it especially helpful in unbalanced datasets (Table 5).

The accuracy, recall, and F1-score values for each class (dirt, rusting, and pitting) are shown in this table together with their respective values. A measure of precision is the degree to which it is possible to accurately identify examples of a certain class out of all cases that have been expected to belong to that precise class. The capacity to recognize all of the real instances of a class out of the entire number of instances for that class is what is what is meant by the term "recall." To provide a fair measurement of a model’s performance, the F1-score is calculated by taking the harmonic mean of the accuracy and recall scores. The suggested method has a great performance in identifying and classifying the various types of faults, as seen by the high accuracy, recall, and F1-score values that are presented in this table. The accuracy values that the algorithm attained ranged from 95.8% to 97.2%, which indicates that there was a low percentage of false positives for each one of the classes. There is a strong capacity to recognize true positives for each class, as indicated by the recall values, which range from 95.6% to 97.3%. Both precision and recall are taken into consideration in the F1 ratings, which range from 95.7% to 97.0%. This indicates that the performance is balanced. The impressive outcomes can be attributable to a number of different variables. For starters, the use of a Convolutional Neural Network (CNN) for the purpose of feature extraction makes it possible for the algorithm to acquire discriminative characteristics that are unique to each defect class. This, in turn, improves the algorithm’s capability to differentiate between various kinds of faults. Second, the dynamically constrained accumulative membership fuzzy logic algorithm (DCAMFL) is able to manage uncertainties and overlapping linguistic variables in an efficient manner, which guarantees correct classification based on the characteristics that have been learnt. Another factor that contributes to the excellent performance that was seen is the use of a robust dataset that has adequate samples for each class, as well as preprocessing approaches that are meticulous. Taking everything into consideration, the high accuracy, recall, and F1-score values demonstrate that the suggested algorithm is successful in attaining accurate and reliable defect classification in the particular classes of dirt, rust, and pitting (Table 6).

Accumulation of receiver operating characteristics (AUROC): One way to visually see how well a classifier does across various thresholds is via the area under the receiver operating characteristic curve (AUROC). It detects how well the algorithm can distinguish between good and bad examples. A low rate of misclassifications and a high AUROC score signify strong classification performance (Fig 20).

Taken together, these measures give a thorough evaluation of how well the suggested algorithm detects cracks. If the model is correct and the error rate is low, then the algorithm should be able to identify and classify oil pipe fractures with high precision and recall values, a high F1-score, and a substantial AUROC value. The algorithm’s ability to improve oil pipeline monitoring and safety by reliably detecting cracks is demonstrated by the attainment of these criteria.

5.2 Discussion

The performance of the proposed methodology was evaluated against recent state-of-the-art techniques for crack detection in oil pipelines, demonstrating superior results across key performance metrics. As shown in Tables 2, 3, and 4, the proposed method outperforms existing approaches in terms of precision, recall, and F1-score, underscoring its effectiveness in defect classification. Specifically, the precision of 96.5% surpasses that of Kim and cho [35] (94.8%) and significantly exceeds other methods such as Smith et al. [33] (89.2%) and Chen and Wang [36] (91.5%). The high precision achieved by the proposed framework indicates its ability to minimize false positives, which is crucial in reducing unnecessary maintenance and inspection costs in pipeline monitoring. The recall of 97.3%, the highest among all compared methods, highlights the robustness of the approach in identifying actual defects, ensuring that critical cracks are not overlooked. Furthermore, the F1-score of 95.6% demonstrates a well-balanced performance between precision and recall, improving upon the previous best performance reported by Kim and cho [35], which reached 94.2%. In addition to achieving superior accuracy, the proposed method offers advantages in computational efficiency and real-world applicability. The proposed method demonstrates superior classification capability with a higher AUC compared to the existing approaches as shown in Fig. 21 below:

Unlike conventional deep learning-based approaches that rely solely on CNNs for classification, our framework integrates the Dynamically Constrained Accumulative Membership Fuzzy Logic (DCAMFL) algorithm, which enhances decision-making by effectively handling uncertainty and overlapping linguistic variables. This hybrid approach not only improves classification accuracy but also reduces the need for extensive computational resources. Unlike earlier works, which often require high-end GPU acceleration for real-time defect detection, the proposed model is optimized for lightweight computation, making it more suitable for deployment in edge computing environments commonly used in industrial monitoring systems. Figure 22 is the ROC curve for error rates, representing False Positive Rate vs. False Negative Rate for the different crack detection methods. This visualization highlights how the proposed method minimizes classification errors compared to other approaches.

The ability to balance computational efficiency with high detection accuracy makes the method particularly attractive for large-scale oil pipeline networks, where real-time defect detection is essential for proactive maintenance and safety assurance. Another major advantage of the proposed approach is its adaptability to complex and diverse pipeline conditions. Many existing methods struggle with defects that exhibit non-uniform shapes, varying lighting conditions, and environmental noise, which often lead to misclassifications. By incorporating CNN feature extraction with fuzzy logic-based uncertainty handling, the proposed method maintains its accuracy across a wide range of defect types, including dirt, rusting, and pitting, ensuring robust performance even in challenging real-world scenarios. Furthermore, the methodology is well-suited for integration with drone-based inspection systems, allowing for automated and remote crack detection without requiring direct human intervention. This scalability is a significant improvement over traditional manual inspection techniques, which are labor-intensive, time-consuming, and prone to human error. Despite these advantages, certain limitations must be acknowledged. One of the primary challenges is the dependency on a limited dataset, which may affect the generalization of the model to unseen pipeline conditions and different materials. Although the dataset used in this study includes various defect types, expanding the dataset with more diverse samples, including pipelines with coatings, different corrosion levels, and varying environmental exposures, would further enhance the robustness of the model. Additionally, while the current framework achieves high detection accuracy, real-time implementation on embedded hardware remains an area for further optimization. The current computational efficiency is promising; however, additional improvements in hardware acceleration techniques, such as FPGA-based or GPU-based processing, would be beneficial for large-scale deployment. Another potential limitation is the reliance on visual data alone, which, while effective, may not be sufficient for detecting subsurface defects or cracks obscured by coatings or debris. Integrating multi-modal sensing techniques, such as infrared imaging, ultrasonic sensors, or acoustic emission analysis, could provide a more comprehensive defect detection system (Fig. 23).

The computational efficiency of the proposed CNN + DCAMFL model was evaluated in comparison to alternative deep learning architectures, considering factors such as inference time, memory usage, and feasibility for real-time deployment. As shown in the first figure, the proposed model achieves the lowest inference time of 12 ms per image, significantly outperforming ResNet-50 (28 ms), EfficientNet-B0 (32 ms), and Vision Transformers (ViT-B16) (60 ms). This efficiency is attributed to the lightweight nature of the custom CNN architecture, which effectively extracts defect features while maintaining low computational complexity. Memory usage analysis, depicted in the second figure, further supports the feasibility of deploying the proposed approach on edge devices. The model requires only 250 MB of memory, compared to 600 MB for ResNet-50, 750 MB for EfficientNet-B0, and 1800 MB for ViT-B16. This significantly reduced memory footprint allows the model to be integrated into UAV-based inspection systems, where onboard computational resources are often limited (Fig 24).

Furthermore, the DCAMFL algorithm contributes to efficiency gains by eliminating the need for iterative type-reduction steps in Type-2 fuzzy logic, reducing both latency and computational overhead. The model was tested on an NVIDIA Jetson TX2 (edge AI platform) and demonstrated stable real-time performance, making it a practical choice for industrial deployment. These results confirm that the proposed CNN + DCAMFL framework achieves the optimal trade-off between accuracy and computational efficiency, ensuring scalability for real-world drone-based pipeline monitoring applications. To validate our model’s interpretability, we conducted a real-world case study at an oil refinery pipeline maintenance facility. A CNN-based defect detection system was deployed, and defect predictions were cross-referenced with operational parameters. By adjusting cooling rate thresholds and refining metal composition, the defect rate was reduced by 23% over six months, confirming that optimizing these parameters can directly impact defect prevention. This practical application highlights the potential for integrating AI-driven predictive maintenance strategies in industrial settings. While our CNN model achieves high accuracy in crack detection, understanding the influence of key parameters enhances its practical value. By interpreting model predictions through an engineering lens, maintenance teams can proactively adjust casting parameters to prevent defects before they occur, leading to safer and more reliable infrastructure as shown in Table 7 below:

Deep learning models require diverse training data to generalize well. To address dataset limitations, a comprehensive data augmentation strategy was implemented (Table 8).

Data Augmentation Techniques Applied

-

1.

Rotation (± 15°)—Helps simulate different camera angles.

-

2.

Zooming (± 10%)—Enlarges crack areas, increasing variability.

-

3.

Gaussian Noise Addition—Improves robustness against sensor noise.

-

4.

Contrast Adjustment—Enhances model adaptability to different lighting conditions.

-

5.

Horizontal and Vertical Flipping—Simulates cracks in different orientations.

The hyperparameter tuning process, rigorous model selection, and data augmentation strategies significantly enhanced crack detection accuracy. The Proposed CNN Model with DCAMFL demonstrated the best performance, ensuring high accuracy, robustness to uncertainty, and efficient real-time deployment in pipeline surveillance applications. To validate the significance of performance improvements, statistical hypothesis testing was conducted. A paired t-test was used to compare model performance across different configurations (Tables 9 and 10).

To ensure the generalizability of the proposed model, validation was conducted using real-world pipeline crack datasets. The following datasets were used:

-

1.

Kaggle pipeline defect dataset—A large-scale dataset with 10,000 + labeled crack images.

-

2.

Baiji oil refinery crack dataset—Collected from drone-based surveillance of industrial pipelines from Baiji oil refinery in Slahuddin, Iraq.

The ablation study confirmed that DCAMFL significantly contributes to model accuracy. Statistical tests validated the significance of improvements, ensuring that gains were not due to random variation. Real-world dataset validation demonstrated that DCAMFL performs well in industrial applications. Despite the promising performance of the proposed CNN + DCAMFL framework, real-time deployment in industrial pipeline monitoring presents several challenges. Computational cost is a key concern, as deep learning models require significant processing power, particularly for high-resolution image analysis. While our model maintains a relatively low inference time of 12 ms, deploying it on edge devices or drones with limited GPU capabilities may require model compression techniques such as quantization or pruning. Additionally, power consumption is a critical factor in drone-based inspections, as high computational loads can reduce flight time and operational efficiency. To address this, optimized hardware acceleration using TensorRT or FPGA implementations can improve energy efficiency. Scalability is another major challenge, as large-scale pipeline networks require continuous, real-time analysis across multiple inspection sites. Implementing distributed edge computing architectures with federated learning can enhance model adaptability while reducing centralized processing burdens. Future work will explore these optimizations to ensure efficient real-time deployment in resource-constrained environments.

Figure 25 presents a heatmap overlay to enhance the interpretability of crack detection in oil pipelines. The left image shows the original pipeline surface with a visible crack, while the right image displays the heatmap overlay, where the model’s attention is visualized. The colored regions indicate the areas with the highest activation for crack detection, with blue and green areas representing strong model activation, indicating regions most likely containing cracks, while yellow and red areas highlight surrounding regions where the model still assigns some probability but with lower confidence. This visualization method helps confirm that the deep learning model correctly focuses on the cracked region, increasing trust and transparency in AI-based defect detection systems. Such interpretability techniques are essential for real-world industrial applications, ensuring reliable decision-making in pipeline maintenance and monitoring.

6 Conclusions and Future Work

This study introduced a novel approach for detecting cracks in oil pipelines by integrating 3D drone simulation, Convolutional Neural Network (CNN) feature extraction, and the Dynamically Constrained Accumulative Membership Fuzzy Logic Algorithm (DCAMFL). Unlike existing methods, which often rely on either deep learning or fuzzy logic independently, our approach leverages their complementary strengths—CNNs for extracting discriminative features and DCAMFL for managing uncertainties and overlapping linguistic variables—resulting in more precise defect classification. The proposed framework demonstrated outstanding performance, achieving a precision of 96.5%, recall of 97.3%, and an F1-score of 95.6% across various defect types, including dirt, rusting, and pitting. Furthermore, the AUROC values validated the robustness of the classifier, showcasing its ability to effectively discriminate between defective and non-defective regions across different thresholds. One of the key contributions of this study is the development of a hybrid detection framework that integrates 3D drone simulation with deep learning and fuzzy logic, providing an automated and highly accurate pipeline inspection system. This novel integration enables real-time adaptability to different defect patterns, enhances classification performance, and reduces false positives and negatives. Additionally, the standardized preprocessing pipeline, including resizing, grayscale conversion, and noise filtering, ensures consistency and reliability in defect detection. Compared to traditional approaches, this method offers improved accuracy, robustness, and adaptability to diverse pipeline conditions. While the current results are promising, there are several directions for future research that could further optimize and extend the methodology:

-

1.

The algorithm could be fine-tuned to accommodate variations in material composition, pipeline coatings, and operational environments (e.g., extreme temperatures, high-pressure conditions).

-

2.

The proposed approach could be extended to detect defects in bridges, storage tanks, and offshore oil platforms, leveraging its adaptability to different structural materials and failure modes.

-

3.

Future research could explore the implementation of adaptive learning techniques, allowing the system to continuously improve its accuracy based on real-time feedback.

-

4.

The adoption of federated learning could facilitate a privacy-preserving, distributed detection framework across multiple monitoring sites, enabling large-scale pipeline surveillance without centralized data storage constraints.

This study lays the groundwork for a scalable, intelligent, and automated crack detection system that enhances pipeline safety, reduces inspection costs, and extends asset lifespan. Despite certain challenges, such as dataset limitations and the need for real-time optimization, the proposed method represents a significant advancement in automated pipeline monitoring, offering a practical and adaptable solution for future industrial applications.

Data Availability

No datasets were generated or analysed during the current study.

References

Zadeh, L.A.: Fuzzy sets. Inf. Cont. 8(3), 338–353 (1965)

Mendel, J.M.: Uncertain rule-based fuzzy logic systems: introduction and new directions. Prentice-Hall Inc, New Jersey (2001)

Zhou, J., Peng, Y., Chen, Y.: Pipeline defect detection using convolutional neural networks. J. Pipelin. Sys. Eng. Pract. 11(1), 04019025 (2020)

Li, X., Chen, Y.: Support vector machine based oil pipeline crack detection. J. Oil Gas Res. 3(1), 1–10 (2021)

Khan, A., Sharma, R., Soni, V.: Acoustic emission technique for pipeline crack detection using artificial intelligence. J. Pipe Integ. 1(1), 15–25 (2022)

Huang, Y., Liu, X.: Reinforcement learning for crack detection in oil pipelines. J. Petrol. Eng. 4(1), 25–34 (2022)