Abstract

Distributed Nash equilibrium seeking of aggregative games is investigated and a continuous-time algorithm is proposed. The algorithm is designed by virtue of projected gradient play dynamics and aggregation tracking dynamics, and is applicable to games with constrained strategy sets and weight-balanced communication graphs. The key feature of our method is that the proposed projected dynamics achieves exponential convergence, whereas such convergence results are only obtained for non-projected dynamics in existing works on distributed optimization and equilibrium seeking. Numerical examples illustrate the effectiveness of our methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Equilibrium seeking with game-theoretic formulation has received much research attention due to its broad applications in control systems, smart grids, communication networks and artificial intelligence [1, 2]. Various algorithms have been developed to achieve the equilibrium strategy such as [3–6]. Aggregative games characterize and simplify the strategic interaction by aggregation, and models that belong to such a type of games include the well-known Cournot duopoly competition, congestion control of communication networks [7], public environmental models [8], and multiproduct-firm oligopoly [9]. Because of the large-scale systems involved in these problems, seeking or computing the Nash equilibrium in a distributed manner is of practical significance.

Recently, numerous works have focused on distributed Nash equilibrium seeking for aggregative games. To name a few, Koshal et al., [10] have developed distributed synchronous and asynchronous algorithms for information exchange and equilibrium computation. Ye and Hu [11] have adopted an aggregative game with a distributed seeking strategy for the modeling and analysis of energy consumption control in smart grid. Liang et al., [12] have presented a distributed continuous-time design for generalized Nash equilibrium seeking of aggregative games with coupled equality constraints. Under the same structure of the game, Deng [13] has further considered general nonsmooth cost functions, and Gadjov and Pavel [14] have used forward–backward operator splitting method to solve the problem. Zhu et al., [15] have developed distributed seeking algorithms over strongly connected weight-balanced and weight-unbalanced directed graphs. Belgioioso et al., [16] have presented distributed generalized Nash equilibrium seeking in aggregative games on a time-varying communication network under partial-decision information.

In this work, we consider distributed Nash equilibrium seeking of aggregative games, where the aggregation information is unavailable to each local player and the communication graph can be directed with balanced weights. Although similar problems have already been considered in many works, we further focus on the development of a distributed algorithm with exponential convergence to the Nash equilibrium. The importance of exponential convergence is at least two-fold. First, exponential convergence is asympototically faster than sublinear convergence. Second, exponential convergence may be necessary to develop further properties such as robustness against computation or discretization errors and communication and other external disturbances. Although exponential convergence results have been obtained in some literature on distributed optimization and game such as [11, 17, 18], the exponential results cannot hold for projected dynamics that deal with local constraints. In this work, we consider the aggregative game with local feasible constraints and design distributed projected seeking dyanmics. First, a distributed projected gradient play dynamics is designed, where we replace the global aggregation by its local estimation to calculate the gradient. Then an aggregation tracking dynamics is augmented, where the distributed tracking signals are local parts of the aggregation. We analyze these interconnected dynamics and prove that our distributed algorithm achieves an exponential convergence to the Nash equilibrium. The contributions are as follows:

-

A distributed Nash equilibrium seeking algorithm for aggregative game is developed. The algorithm is designed with two interconnected dynamics: a projected gradient play dynamics for equilibrium seeking and a distributed average tracking dynamics for estimation of the aggregation. The projected part can deal with local constrained strategy sets, which generalizes those unconstrained ones such as [11]. Also, our distributed algorithm applies to weight-balanced directed graphs, which improves the algorithm in [12].

-

Exponential convergence of the proposed distributed algorithm is obtained, which is consistent with the convergence results in [11, 17] for unconstrained problems and is stronger than those constrained ones in [10, 12, 14–16]. In other words, this is the first work to propose an exponentially convergent distributed algorithm for aggregative games with local feasible constraints.

The rest of paper is organized as follows. Section 2 shows some basic concepts and preliminary results, while Sect. 3 formulates the distributed Nash equilibrium seeking problem of aggregative games. Then Sect. 4 presents our main results including algorithm design and analysis. Section 5 gives a numerical example to illustrate the effectiveness of the proposed algorithm. Finally, Sect. 6 gives concluding remarks.

2 Preliminaries

In this section, we give basic notations and related preliminary knowledge.

Denote \(\mathbb{R}^{n}\) as the n-dimensional real vector space; denote \(\mathbf{1}_{n} = (1,\ldots,1)^{T} \in \mathbb{R}^{n}\), and \(\mathbf{0}_{n} = (0,\ldots,0)^{T} \in \mathbb{R}^{n}\). Denote \(\operatorname{col}(x_{1},\ldots,x_{n}) = (x_{1}^{T},\ldots,x_{n}^{T})^{T}\) as the column vector stacked with column vectors \(x_{1},\ldots,x_{n}\), \(\|\cdot \|\) as the Euclidean norm, and \(I_{n}\in \mathbb{R}^{n\times n}\) as the identity matrix. Denote ∇f as the gradient of f.

A set \(C \subseteq \mathbb{R}^{n}\) is convex if \(\lambda z_{1} +(1-\lambda )z_{2}\in C\) for any \(z_{1}, z_{2} \in C\) and \(0\leq \lambda \leq 1\). For a closed convex set C, the projection map \(P_{C}:\mathbb{R}^{n} \to C\) is defined as

The projection map is 1-Lipschitz continuous, i.e.,

A map \(F:\mathbb{R}^{n}\rightarrow \mathbb{R}^{n}\) is said to be μ-strongly monotone on a set Ω if

Given a subset \(\Omega \subseteq \mathbb{R}^{n}\) and a map \(F: \Omega \to \mathbb{R}^{n}\), the variational inequality problem, denoted by \(\operatorname{VI}(\Omega ,F)\), is to find a vector \(x^{*}\in \Omega \) such that

and the set of solutions to this problem is denoted by \(\operatorname{SOL}(\Omega ,F)\) [19]. When Ω is closed and convex, the solution of \(\operatorname{VI}(\Omega ,F)\) can be equivalently reformulated via projection as follows:

It is known that the information exchange among agents can be described by a graph. A graph with node set \(\mathcal{V} = \{1,2,\ldots,N\}\) and edge set \(\mathcal{E}\) is written as \(\mathcal{G}=(\mathcal{V}, \mathcal{E})\) [20]. The adjacency matrix of \(\mathcal{G}\) can be written as \(\mathcal{A} = [a_{ij}]_{N\times N}\), where \(a_{ij} >0\) if \((j,i) \in \mathcal{E}\) (meaning that agent j can send its information to agent i, or equivalently, agent i can receive some information from agent j), and \(a_{ij} =0\), otherwise. A graph is said to be strongly connected if, for any pair of vertices, there exists a sequence of intermediate vertices connected by edges. For \(i \in \mathcal{V}\), the weighted in-degree and out-degree are \(d_{\text{in}}^{i} =\sum_{j=1}^{N} a_{ij}\) and \(d_{\text{out}}^{i} =\sum_{j=1}^{N} a_{ji}\), respectively. A graph is weight-balanced if \(d_{\text{in}}^{i} = d_{\text{out}}^{i}\), \(\forall i \in \mathcal{V}\). The Laplacian matrix is \(L= \mathcal{D}_{\text{in}} - \mathcal{A}\), where \(\mathcal{D}_{\text{in}} = \operatorname{diag}\{d_{\text{in}}^{1}, \ldots , d_{ \text{in}}^{N}\} \in \mathbb{R}^{N \times N}\). The following result is well known.

Lemma 1

Graph \(\mathcal{G}\) is weight-balanced if and only if \(L + L^{T}\) is positive semidefinite; it is strongly connected only if zero is a simple eigenvalue of L.

3 Problem formulation

Consider an N-player aggregative game as follows. For \(i\in \mathcal{V} \triangleq \{1,\ldots,N\}\), the ith player aims to minimize its cost function \(J_{i}(x_{i},x_{-i}): \Omega \to \mathbb{R}\) by choosing the local decision variable \(x_{i}\) from a local strategy set \(\Omega _{i} \subset \mathbb{R}^{n_{i}}\), where \(x_{-i} \triangleq \operatorname{col}(x_{1},\ldots,x_{i-1},x_{i+1},\ldots,x_{N})\), \(\Omega \triangleq \Omega _{1} \times \cdots \times \Omega _{N} \subset \mathbb{R}^{n}\) and \(n = \sum_{i\in \mathcal{V}} n_{i}\). The strategy profile of this game is \(\boldsymbol{x} \triangleq \operatorname{col}(x_{1},\ldots,x_{N})\in \Omega \). The aggregation map \(\sigma : \mathbb{R}^{n}\to \mathbb{R}^{m}\), to specify the cost function as \(J_{i}(x_{i},x_{-i}) = \vartheta _{i}(x_{i},\sigma (\boldsymbol{x}))\) with a function \(\vartheta _{i}: \mathbb{R}^{n_{i}+m} \to \mathbb{R}\), is defined as

where \(\varphi _{i} : \mathbb{R}^{n_{i}} \to \mathbb{R}^{m}\) is a map for the local contribution to the aggregation.

The concept of Nash equilibrium is introduced as follows.

Definition 1

A strategy profile \(\boldsymbol{x}^{*}\) is said to be a Nash equilibrium of the game if

Condition (2) means that all players simultaneously take their own best (feasible) responses at \(x^{*}\), where no player can further decrease its cost function by changing its decision variable unilaterally.

We assume that the strategy sets and the cost functions are well-conditioned in the following sense.

-

A1:

For any \(i\in \mathcal{V}\), \(\Omega _{i}\) is nonempty, convex and closed.

-

A2:

For any \(i\in \mathcal{V}\), the cost function \(J_{i}(x_{i},x_{-i})\) and the map \(\varphi (x_{i})\) are differentiable with respect to \(x_{i}\).

In order to explicitly show the aggregation of the game, let us define map \(G_{i}: \mathbb{R}^{n_{i}} \times \mathbb{R}^{m} \to \mathbb{R}^{n_{i}}\), \(i\in \mathcal{V}\) as

Also, let \(G(\boldsymbol{x},\boldsymbol{\eta }) \triangleq \operatorname{col}(G_{1}(x_{1},\eta _{1}), \ldots, G_{N}(x_{N}, \eta _{N}))\). Clearly, \(G(\boldsymbol{x},\boldsymbol{1}_{N}\otimes \sigma (\boldsymbol{x})) = F(\boldsymbol{x})\), where the pseudo-gradient map \(F:\mathbb{R}^{n} \to \mathbb{R}^{n}\) is defined as

Under A1 and A2, the Nash equilibrium of the game is a solution of the variational inequality problem \(\operatorname{VI}(\Omega ,F)\), referring to [19]. Moreover, we need the following assumptions to ensure the existence and uniqueness of the Nash equilibrium and also to facilitate algorithm design.

-

A3:

The map \(F(\boldsymbol{x})\) is μ-strongly monotone on Ω for some constant \(\mu >0\).

-

A4:

The map \(G(\boldsymbol{x},\boldsymbol{\eta })\) is \(\kappa _{1}\)-Lipschitz continuous with respect to \(\boldsymbol{x} \in \Omega \) and \(\kappa _{2}\)-Lipschitz continuous with respect to η for some constants \(\kappa _{1},\kappa _{2}>0\). Also, for any \(i\in \mathcal{V}\), \(\varphi _{i}\) is \(\kappa _{3}\)-Lipschitz continuous on \(\Omega _{i}\) for some constant \(\kappa _{3}>0\).

Note that the strong monotonicity of the pseudo-gradient map F has been widely adopted in the literature such as [21–23].

The following fundamental result is from [19].

Lemma 2

Under A1–A4, the considered game admits a unique Nash equilibrium \(\boldsymbol{x}^{*}\).

In the distributed design for our aggregative game, the communication topology for each player to exchange information is assumed as follows.

-

A5:

The network graph \(\mathcal{G}\) is strongly connected and weight-balanced.

The goal of this paper is to design a distributed algorithm to seek the Nash equilibrium for the considered aggregative game over weight-balanced directed graph.

4 Main results

In this section, we first propose our distributed algorithm and then analyze its convergence.

4.1 Algorithm

Our distributed continuous-time algorithm for Nash equilibrium seeking of the considered aggregative game is designed as the following differential equations:

Algorithm parameters α and β satisfy

where

and \(\lambda _{2}\) is the smallest positive eigenvalue of \(\frac{1}{2}(L+L^{T})\) (L is the Laplacian matrix).

Remark 1

Conditions in (5) are needed for the exponential convergence of the algorithm, which will be clarified in our convergence analysis. It is not difficult to verify that these conditions are feasible for any positive constants μ, \(\lambda _{2}\), \(\kappa _{1}\), \(\kappa _{2}\), \(\kappa _{3}\). In other words, one can always find parameters α and β satisfying (5). Here, we require that the spectral information \(\lambda _{2}\) is available. In a fully distributed environment, one may employ distributed algorithms such as [24] to calculate \(\lambda _{2}\) first and then assign parameters α and β.

The compact form of (4) can be written as

where \(\boldsymbol{\varphi }(\boldsymbol{x}) = \operatorname{col}(\varphi _{1}(x_{1}), \ldots, \varphi _{N}(x_{N}))\). Furthermore, we can rewrite (7) as

The dynamics with respect to x can be regarded as distributed projected gradient play dynamics with the global aggregation \(\sigma (\boldsymbol{x})\) replaced by local variables \(\eta _{1}, \ldots, \eta _{N}\). The dynamics with respect to η is distributed average tracking dynamics that estimates the value of \(\sigma (\boldsymbol{x})\). The design idea is similar to [11, 12]. Here, we use projection operation to deal with local feasible constraints, and replace the nonsmooth tracking dynamics in [12] by this simple one to cope with weight-balanced graphs.

4.2 Analysis

Since \(\mathcal{G}\) is weight-balanced, \(\boldsymbol{1}_{N}^{T} L = \boldsymbol{0}_{N}^{T}\). Combining this property with dynamics (8) yields

As a result,

is an invariant set of (8). In other words, there holds \(col(\boldsymbol{x}(t), \boldsymbol{\eta }(t)) \in \mathcal{M}\), \(\forall t>0\) provided that \(\operatorname{col}(\boldsymbol{x}(0), \boldsymbol{\eta }(0)) \in \mathcal{M}\). Consequently, we can simply restrict our analysis over \(\mathcal{M}\) rather than the entire space.

First, we verify that any equilibrium \(\operatorname{col}(\boldsymbol{x}, \boldsymbol{\eta }) \in \mathcal{M}\) of dynamics (8) has its x part being the Nash equilibrium \(\boldsymbol{x}^{*}\).

Theorem 1

Under A1–A5, the equilibrium of dynamics (8) over \(\mathcal{M}\) is unique, and can be expressed as

Proof

Any equilibrium of (8) should satisfy

which are obtained by setting \(\dot{\boldsymbol{x}}\), \(\dot{\boldsymbol{\eta }}\) and \(\frac{d}{dt}\boldsymbol{\varphi }(\boldsymbol{x})\) as zeros. Since \(\mathcal{G}\) is strongly connected, \(L\otimes I_{m}\boldsymbol{\eta } = \boldsymbol{0}\) implies \(\eta _{1} = \eta _{2} = \cdots = \eta _{N} = \eta ^{\diamond }\) for some \(\eta ^{\diamond }\) to be further determined. By \(\mathcal{M}\), \(( \boldsymbol{x}^{\diamond },\boldsymbol{1}_{N}\otimes \eta ^{\diamond })\) should also satisfy \(\eta ^{\diamond }= \sigma (\boldsymbol{x}^{\diamond })\).

Substituting \(\boldsymbol{x}^{\diamond }\), \(\boldsymbol{1}_{N}\otimes \eta ^{\diamond }\) into the projected equation for the equilibrium yields

which indicates \(\boldsymbol{x}^{\diamond }= \boldsymbol{x}^{*}\). Therefore, the point given in (10) is the equilibrium of (8). This completes the proof. □

In view of the identity (9) derived from (8), let

Then it follows from \(L \boldsymbol{1}_{N} = \boldsymbol{0}_{N}\) and (8) that

The whole dynamics with respect to x and y consists of two interconnected subsystems as shown in Fig. 1. Each dynamical subsystem has its own state variable, equilibrium point and external input.

Our convergence results are given in the following theorem.

Theorem 2

Under A1–A5, the distributed continuous-time algorithm (4) with parameters satisfying (5) converges exponentially. To be specific,

where \((\boldsymbol{x}^{*}, \boldsymbol{\eta ^{*}})\) is the equilibrium given in (10) and

Proof

It follows from (5) that \(\gamma ^{*} > 0\), and

Let

We verify the following three properties.

-

(1)

\(\|\boldsymbol{\xi }(\boldsymbol{x},\boldsymbol{y})\| \leq \alpha \cdot \kappa _{2} \|\boldsymbol{y}\|\).

-

(2)

The map F is κ-Lipschitz continuous.

-

(3)

The map H is \(\omega _{1}\)-strongly monotone.

Property (1) holds because

Property (2) follows from the fact that

Property (3) holds because

and

In addition, there holds the identity \(H(\boldsymbol{x}^{*}) = \boldsymbol{0}\), since \(\boldsymbol{x}^{*}\) is the Nash equilibrium.

Consider the following Lyapunov candidate function

Its time derivative along the trajectory of (11) is

Next, we focus on dynamics (12). Let

where the time derivative \(\dot{\boldsymbol{x}}\) is along the dynamics (11).

Clearly, \(\boldsymbol{1}_{N}^{T}\otimes I_{m} \boldsymbol{\zeta }(\boldsymbol{x},\boldsymbol{y}) = \boldsymbol{0}\). Also, since

there holds

Let

Then \(\boldsymbol{y} = \widehat{\boldsymbol{y}} + \widehat{\boldsymbol{y}}^{\bot }\). Since \(\boldsymbol{1}_{N}^{T}L = \boldsymbol{0}_{N}^{T}\), it follows from (12) that

As a result,

Consider the following Lyapunov candidate function

The time derivative of \(V_{2}\) along the trajectory of (12) is

where the last inequality follows from Rayleigh quotient theorem [25, Page 234]. Also, since \(\boldsymbol{y}(t) = \widehat{\boldsymbol{y}}^{\bot }(t)\), \(\forall t\geq 0\),

Combining \(V_{1}\) and \(V_{2}\), let

The time derivative of V along the trajectory of (11) and (12) is

Thus,

Recall that

Hence,

Thus, the exponential convergence of the terms \(\|\boldsymbol{x} - \boldsymbol{x}^{*}\|\), \(\|\boldsymbol{\eta } - \boldsymbol{\eta }^{*}\|\) can be established. This completes the proof. □

Remark 2

Exponential convergence of distributed algorithms has become a research topic in recent years. [18] has designed a distributed discrete-time optimization algorithm and proves its exponential convergence via a small-gain approach, while [26] has introduced a criterion for the exponential convergence of distributed primal-dual gradient algorithms in either continuous or discrete time. Theorem 2 provides an exponential convergence result by analyzing the interconnected subsystems.

5 Numerical example

In this section, we test the performance of our algorithm by using a numerical example. Here, we use Euler method to discretize the continuous-time algorithm, that is,

where \(\tau >0\) is a fixed stepsize. Consider a Cournot game played by \(N = 20\) competitive players. For \(i\in \mathcal{V}=\{1,\ldots, N\}\), the cost function \(\vartheta _{i}(x_{i},\sigma )\) and strategy set \(\Omega _{i}\) are

where

and

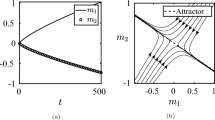

It can be verified that the game mode satisfies A1–A4 with constants \(\mu = 0.1770\), \(\kappa _{1} = 0.2199\), \(\kappa _{2} = 0.0030\), \(\kappa _{3} = 1\). We adopt a network graph as shown in Fig. 2, which satisfies A5. We also use directed cycle graph and undirected Erdos–Renyi (ER) graph to test the performance of our algorithm. We assign \(\alpha = 0.9\) and \(\beta = 1\) to render the conditions in (5) and use a stepsize \(\tau = 0.01\) for the disretization scheme. The trajectory of the strategy profile generated by our algorithm and its convergence performance are shown in Fig. 3. Here, the linearly decreasing error of \(\ln \|\boldsymbol{x}(t) - \boldsymbol{x}^{*}\|\) indicates that our algorithm achieves expoenntial convergence, which verifies the theoretical results given by our Theorem 2 and also performs robustness for different types of graphs.

Further, we use the undirected ER graph to compare our algorithm with the one given in [12]. The numerical results are shown in Fig. 4. It indicates that both algorithms converge to the Nash equilibrium, while asympototically, the convergence speeds are different. Compared with [12], our algorithm has exponential convegence as shown in Fig. 4, and it applies to directed graphs.

6 Conclusions

A distributed algorithm has been proposed for Nash equilibrium seeking of aggregative games, where the strategy set can be constrained and the network is described by a weight-balanced graph. The exponential convergence has been established. The effectiveness of our method has also been illustrated by a numerical example. It is concluded by this work that exponential convergence can still be achieved by projected distributed Nash equilibrium seeking algorithms. Further work may consider generalized Nash equilibrium seeking problems and their exponentially convegent distributed algorithms.

Availability of data and materials

Not applicable.

Code Availability

Not applicable.

References

W. Saad, Z. Han, H.V. Poor, T. Basar, Game-theoretic methods for the smart grid: an overview of microgrid systems, demand-side management, and smart grid communications. IEEE Signal Process. Mag. 29(5), 86–105 (2012)

P.R. Wurman, S. Barrett, K. Kawamoto et al., Outracing champion Gran Turismo drivers with deep reinforcement learning. Nature 602, 223–228 (2022)

H.K. Khalil, P.V. Kokotovic, Feedback and well-posedness of singularly perturbed Nash games. IEEE Trans. Autom. Control 24(5), 699–708 (1979)

J.S. Shamma, G. Arslan, Dynamic fictitious play, dynamic gradient play, and distributed convergence to Nash equilibria. IEEE Trans. Autom. Control 50(3), 312–327 (2005)

M. Zinkevich, M. Johanson, M. Bowling, C. Piccione, Regret minimization in games with incomplete information, in Advances in Neural Information Processing Systems, vol. 20 (Curran Associates, Vancouver, 2007), pp. 1729–1736

P. Frihauf, M. Krstic, T. Basar, Nash equilibrium seeking in noncooperative games. IEEE Trans. Autom. Control 57(5), 1192–1207 (2012)

J. Barrera, A. Garcia, Dynamic incentives for congestion control. IEEE Trans. Autom. Control 60(2), 299–310 (2015)

R. Cornes, Aggregative environmental games. Environ. Resour. Econ. 63(2), 339–365 (2016)

V. Nocke, N. Schutz, Multiproduct-firm oligopoly: an aggregative games approach. Econometrica 86(2), 523–557 (2018)

J. Koshal, A. Nedić, U.V. Shanbhag, Distributed algorithms for aggregative games on graphs. Oper. Res. 63(3), 680–704 (2016)

M. Ye, G. Hu, Game design and analysis for price-based demand response: an aggregate game approach. IEEE Trans. Cybern. 47(3), 720–730 (2017)

S. Liang, P. Yi, Y. Hong, Distributed Nash equilibrium seeking for aggregative games with coupled constraints. Automatica 85(11), 179–185 (2017)

Z. Deng, Distributed generalized Nash equilibrium seeking algorithm for nonsmooth aggregative games. Automatica 132, 109794 (2021)

D. Gadjov, L. Pavel, Single-timescale distributed GNE seeking for aggregative games over networks via forward–backward operator splitting. IEEE Trans. Autom. Control 66(7), 3259–3266 (2021)

Y. Zhu, W. Yu, G. Wen, G. Chen, Distributed Nash equilibrium seeking in an aggregative game on a directed graph. IEEE Trans. Autom. Control 66(6), 2746–2753 (2021)

G. Belgioioso, A. Nedić, S. Grammatico, Distributed generalized Nash equilibrium seeking in aggregative games on time-varying networks. IEEE Trans. Autom. Control 66(5), 2061–2075 (2021)

P. Yi, Y. Hong, F. Liu, Initialization-free distributed algorithms for optimal resource allocation with feasibility constraints and its application to economic dispatch of power systems. Automatica 74(12), 259–269 (2016)

A. Nedić, A. Olshevsky, W. Shi, Achieving geometric convergence for distributed optimization over time-varying graphs. SIAM J. Optim. 27(4), 2597–2633 (2017)

F. Facchinei, J. Pang, Finite-Dimensional Variational Inequalities and Complementarity Problems. Operations Research (Springer, New York, 2003)

C. Godsil, G.F. Royle, Algebraic Graph Theory. Graduate Texts in Mathematics, vol. 207 (Springer, New York, 2001)

P. Yi, L. Pavel, An operator splitting approach for distributed generalized Nash equilibria computation. Automatica 102, 111–121 (2019)

Z. Deng, X. Nian, Distributed generalized Nash equilibrium seeking algorithm design for aggregative games over weight-balanced digraphs. IEEE Trans. Neural Netw. Learn. Syst. 30(3), 695–706 (2019)

F. Parise, B. Gentile, J. Lygeros, A distributed algorithm for almost-Nash equilibria of average aggregative games with coupling constraints. IEEE Trans. Control Netw. Syst. 7(2), 770–782 (2020)

H. Zhang, J. Wei, P. Yi, X. Hu, Projected primal–dual gradient flow of augmented Lagrangian with application to distributed maximization of the algebraic connectivity of a network. Automatica 98, 34–41 (2018)

R.A. Horn, C.R. Johnson, Matrix Analysis, 2nd ed. (Cambridge University Press, Cambridge, 2013)

S. Liang, L. Wang, G. Yin, Exponential convergence of distributed primal-dual convex optimization algorithm without strong convexity. Automatica 105, 298–306 (2019)

Funding

This work was partially supported by the National Natural Science Foundation of China under Grant 61903027, 72171171, 62003239, Shanghai Municipal Science and Technology Major Project under Grant 2021SHZDZX0100, and Shanghai Sailing Program under Grant 20YF1453000.

Author information

Authors and Affiliations

Contributions

All authors contributed to the study conception and design. Material preparation and analysis were performed by Shu Liang, Peng Yi, Yiguang Hong, and Kaixiang Peng. The first draft of the manuscript was written by Shu Liang and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liang, S., Yi, P., Hong, Y. et al. Exponentially convergent distributed Nash equilibrium seeking for constrained aggregative games. Auton. Intell. Syst. 2, 6 (2022). https://doi.org/10.1007/s43684-022-00024-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s43684-022-00024-4