Abstract

The concept of Meaningful Human Control (MHC) has gained prominence in the field of Artificial Intelligence ethics. MHC is discussed in relation to lethal autonomous weapons, autonomous cars, and, more recently, AI systems in general. Little, however, has been done to analyze the concept. Those using MHC tend to treat it narrowly and intuitively, as if its meaning were clear. They fail to see the many issues concerning human control over machines. In this article, I break the concept into its three constitutive words (‘meaningful’, ‘human’, and ‘control’) to outline the many meanings of MHC. While the intention is not to arrive at the real meaning of MHC, this analysis brings up the many issues that should be considered if meaningful human control is to be realized. These include: which humans count as meaningful in the application context, whether the control those humans are given must be meaningful, whether humans must retain control over the things that are meaningful in life, whether the style of control is human-like, whether each actor (designer, operator, subject, government) has the control they need, and what exactly a human is controlling (e.g., the training data, the inputs, or the outputs of the AI system).


1 Introduction

Contemporary methods in Artificial Intelligence (AI) such as Machine Learning (ML) have significantly increased the autonomy of machines. Machines can now produce outputs based on considerations, and on weightings of those considerations, that were not given to them by human beings. Machines are tasked not only with producing a particular output but also with determining how to achieve it. Delegating this level of autonomy to machines raises concerns over how humans can retain meaningful control over them.

The debate about meaningful human control (MHC) began in the context of lethal autonomous weapons systems (LAWS). The idea that a machine could autonomously target and inflict lethal harm on people without a human being in control was met with objections from a variety of scholars [see e.g., [1–3]]. It must be noted that others have argued that it would be unethical not to use such weapons [4, 5]. These debates center on a narrow conception of MHC. For example, Amoroso and Tamburrini [6] claim that, for human control over weapons systems to be “meaningful,” it must play a threefold role. First, humans must occupy the role of ‘failsafe actor’, preventing a harmful malfunction of the weapon. Second, human control must make it possible to ascribe responsibility to someone, avoiding accountability gaps when a weapon breaches international law. Finally, human control should ensure that it is humans, not machines, making decisions that concern the life, physical integrity, and property of people.

I call this narrow because, of the many meanings I highlight in this paper, the one discussed in the context of LAWS corresponds only to Sects. 2.2, 3.3, and 4.3 below. That is, ‘meaningful’ modifies ‘control’, it is the operator’s control we are concerned with, and it is the outputs of the AI system that need to be controlled. While this meaning is important, this article aims to show that many other humans need control over much more than the outputs of an AI system.

The concept of MHC has since been applied outside of LAWS (e.g., autonomous cars [7, 8] and surgical robots [9]). It gets to the heart of concerns about AI, highlighting the ethical concerns that are novel to it. When an autonomous car crashes and kills its passenger, the media and the public at large do not see it as just another crash. It is a crash that strikes us as more wrong than others. Something particularly bad seems to have occurred because AI was in control of the vehicle. The consequences are seemingly the same as those of a crash with a human driver; only the agent in control is different. The machine is in control rather than a human.

While this article explores the different meanings of MHC, it does not attempt to arrive at the true meaning of the phrase. Rather, the purpose is to gain insight into the many ways that humans can be out of control of AI-powered systems. It is not simply a matter of an operator being in control of a machine; there are many ways humans can lose control of machines. In what follows, I take each word of the phrase MHC in turn to facilitate a conceptual analysis. This article is both a way to dig deeper into what MHC is meant to capture and an argument for using the concept in this broader sense.

First, there is ‘meaningful’. Meaningful could be taken to modify ‘human’. This would mean that only meaningful humans are candidates for control over AI. This interpretation may exclude humans who are not meaningful in a particular context (e.g., a person without medical training given control over AI that categorizes X-rays). Alternatively, we can take ‘meaningful’ to modify ‘control’. Then MHC is about a human having control over a particular AI system in a meaningful way (whatever that amounts to). This points to the idea that not all control is meaningful. This is the meaning most often found in the literature on MHC. Finally, meaningful could also refer to those things that are meaningful in human lives. To put it simply, we may want humans to have control over what is meaningful in life.

Second, we have the word ‘human’. This could simply be taken as a modifier for ‘control’. That is, the type of control we are looking for is human or human-like control. This opens the possibility of a machine being in control if the control it exerts is human-like. However, ‘human’ could also be taken to refer to who is supposed to be in control. It could refer to the designer, operator, or subject of a particular AI system. ‘Human’ could also be taken to refer to governments having control over the contexts in which AI can be used and the outputs it can have. There may be contexts in which too much control is lost by delegating tasks to machines. Governments, then, should ban machines from those contexts.

Third, and last, we have ‘control’. What is it that we want control over? In the most obvious sense, MHC refers to control over the outputs, or consequences, of a particular AI system. However, ‘control’ could also be taken to mean a government’s control, through laws and norms, over the acceptability of AI operating in certain contexts (as mentioned above). In this sense, control does not refer to our control over a particular AI system, but to the integration of AI into society. Finally, ‘control’ could be taken to mean control over ‘the good life’, broadly speaking. If the subjects are the ‘humans’ in question, then they may deserve control over how they conceive of and achieve the good life. AI that dictates who gets a job may be steering our conceptions of what a good job candidate is. Delegating to AI how ‘good’ and ‘bad’ are applied to people is a loss of control over what we conceive to be a good life.

Teasing out the possible meanings of MHC uncovers the many ethical considerations that should be taken into account when implementing AI systems so that human control is maintained. Too often, designers and companies focus on one meaning of MHC, which leaves out the many other considerations that are articulated below.

2 Meaningful

2.1 Meaningful modifying human

Meaningful, when modifying ‘human’, suggests that the control must rest with a meaningful human. It is important to note that ‘meaningful human’ in this usage is tied to the context. The question is “which humans are meaningful in this context—which humans are candidates for control?” Often, answering this question will exclude, for example, children, those with dementia, and other human beings that do not have the capacities considered necessary for the type of control we are here concerned with. For example, on this meaning of MHC, a child may be forbidden to operate an autonomous car alone as they are not a human with the capacities required to exercise the control needed.

What is it about children that would exclude them from being able to exercise control over an autonomous car—at least as they exist today? In a very simple sense, most of them are too small to keep their hands on, and see over, the steering wheel. This would prevent them from doing what, for example, Tesla requires:

As with all Autopilot features, you must be in control of your vehicle, pay attention to its surroundings and be ready to take immediate action including braking [10]

Not only would children be unable to pay attention to the car’s surroundings, but they also lack the training required to take immediate action. If someone has never driven, how can we expect them to exercise control when the algorithm goes wrong? Considerations like this would also exclude anyone who could not see well enough to pay attention to the car’s surroundings or who had physical limitations preventing them from steering or accelerating the car.

This would also exclude people who are incapacitated due to medication, drug, or alcohol use. In short, these are the same rules that govern who is allowed to be in control of a non-autonomous vehicle. If you would not be allowed behind the wheel of a non-autonomous vehicle, then it appears you should not be allowed behind the wheel of an autonomous one, at least at the level of autonomy that will exist in the foreseeable future.

Of course, Tesla’s requirement, and others like it, may go away once we achieve a higher level of vehicle autonomy: one that does not require the operator to be aware of the car’s surroundings and ready to take over. The Guardian predicted that we could ride in the backseat of cars by 2020 [11]; Elon Musk himself predicted full self-driving cars by 2018, and then by 2020 [12], and now says they are ‘very close’ to arriving [13]. Others are less optimistic. The CEO of Volkswagen recently said that fully autonomous cars “may never happen” [14], and companies like Uber have given up on developing them [15].

So far, we have only discussed the possibility that certain humans are excluded from candidacy for MHC in a particular context based on their capacities. However, there is another way certain humans may be excluded. In some contexts, only humans with certain qualifications should be considered candidates for MHC. Consider an algorithm that scans X-rays for potential health issues [see e.g., [16, 17]]. I am, to the best of my knowledge, a fully capable human being. I do not suffer from any mental illness and am not currently under the influence of alcohol or drugs. However, I have none of the knowledge necessary to exercise control over an algorithm that scans X-rays. In this context, the human in question must also have some specialist knowledge (and possibly a qualification proving such knowledge).

Going back to autonomous cars, it seems that Uber had this in mind when it was testing its self-driving cars.Footnote 1 Only specially trained drivers were allowed behind the wheel. People with a clean driving record could apply but would “go through a series of driving tests in a regular car. If they pass those and get through the interview process, they'll next enter Uber's three-week training period” [18]. Uber has a very specific subset of humans in mind who are qualified to exercise MHC.

This all amounts to the idea that the ‘human’ who is supposed to be in control cannot be just any human. Some humans will be excluded from candidacy for this control because they lack certain capacities or qualifications. When implementing an AI-powered system, which humans are meaningful candidates for control should be made explicit.

2.2 Meaningful modifying control

When ‘meaningful’ is taken as modifying ‘control’, the term seems to suggest that some forms of control are meaningless. Indeed, much research has shown that certain implementations of control are meaningless.

Again, Tesla serves as an instructive example. Tesla describes their ‘autopilot’ feature as requiring: “a fully attentive driver, who has their hands on the wheel and is prepared to take over at any moment” [10]. The existence of the ‘autopilot’ feature implies that a human can remain fully attentive and be able to take over at any moment.

However, research has shown that a ‘driver’ requires on average 8–15 s to gain situational awareness after a cue is given that a manual takeover is required [19]. This is far too long in a dangerous driving situation, in which one second can be the difference between life and death. In this instance, while the human behind the wheel is given control, psychological limitations prevent that control from qualifying as ‘meaningful’.
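A rough calculation shows why this delay matters. Assume, purely for illustration, a vehicle traveling at 100 km/h (a speed chosen here, not one reported in [19]). The distance it covers during the takeover window is then:

```latex
\begin{aligned}
v &= 100\ \text{km/h} = \frac{100\,000\ \text{m}}{3600\ \text{s}} \approx 27.8\ \text{m/s},\\
d &= v\,t \;\Rightarrow\; d(8\ \text{s}) \approx 222\ \text{m}, \qquad d(15\ \text{s}) \approx 417\ \text{m}.
\end{aligned}
```

At that speed, the car would travel roughly 0.2 to 0.4 km before the human behind the wheel has regained situational awareness.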

This version of MHC also appears in Article 36’s paper on autonomous weapons, the first document to propose the concept of MHC. It states that MHC rests on the following premises:

(1) That a machine applying force and operating without any human control whatsoever is broadly considered unacceptable. (2) That a human simply pressing a 'fire' button in response to indications from a computer, without cognitive clarity or awareness, is not sufficient to be considered 'human control' in a substantive sense [20]

Premise (2) above uses meaningful to modify ‘control’. A human may have ‘control’ because they have to initiate the firing process; however, there is nothing meaningful about it. The human control in question must be more ‘substantive’ according to Article 36.

So far we have seen that there are psychological limitations that may make delegation of control to a particular human not very meaningful. However, there are also epistemic limitations given contemporary AI methods which cause control to be less than meaningful. Let’s say that an algorithm has labeled a traveler as ‘high-risk’—preventing them from boarding their flight. A human, a highly qualified computer scientist or developer even, could review these labels and be given plenty of time to do so. However, that human would have no idea why the algorithm labels this particular person as high-risk. If the whole point of using the algorithm is to detect patterns that are associated with, for example, ‘extremism’ that a human would miss, then any particular human is not going to have the ability to understand and endorse the label of ‘high-risk’.

This very situation happened to Eyal Weizman when his ESTA (US visa waiver) was revoked because an algorithm developed by DataRobot (contracted by the US Department of Homeland Security) flagged him as a security threat [21]. The analyst told Weizman that he did not know why the algorithm had flagged him, and that Weizman would have to provide 15 years of detailed travel history to determine whether he was indeed a security threat. The analyst given control did not have the information needed to meaningfully exercise control over the algorithm. One would hope that the point of giving a human control over an algorithm is for that control to be exercised before someone’s autonomy is restricted. In this example, absent any evidence, Weizman would have had to give up his privacy, and the privacy of others, to regain his ability to travel to the US.Footnote 2

Assuming that a ‘meaningful human’ is given control over an algorithm (Sect. 2.1), we can now see that there are a few ways in which their control can still be less than meaningful. There may be psychological limitations that prevent humans from exercising control, and epistemic limitations of the algorithm that prevent humans from having the knowledge required to exercise control meaningfully. There is, however, a third interpretation of meaningful. It could be read as a noun, signifying that what MHC demands is human control over ‘the meaningful’.

2.3 Meaningful things

If there are things that are meaningful in life, then it may be that we want control over what is considered meaningful to be retained by humans. What is considered to be ‘beautiful’, ‘ugly’, ‘good’, ‘bad’, etc., it could be argued, should be decided by human beings. If an AI system had an output that told us that there was nothing meaningful about social interaction and that we should concentrate on other things we would rightly ask “based on what?” The problem with contemporary AI (in the form of Machine Learning) is that we cannot get a useful answer to that question. The outputs will be generated based on complex patterns that are not necessarily articulable in human language [23]. Without an answer to this question, it seems that if the AI system’s output is taken seriously, then we will have lost control over deciding what is meaningful. In other words:

Keeping MHC over machines means restricting machines to outputs that do not amount to value judgments. Machines that can make decisions based on opaque considerations should not be telling humans what decisions morally ought to be made and, therefore, how the world morally ought to be [24]

Now it should be pointed out that machines themselves do not make value judgments. They do not evaluate or ‘decide’. Machines powered by ML find statistical patterns and use them to classify inputs. A machine telling us that social interaction is bad is no more evaluating social interaction than a Magic 8 Ball would be if we asked it the same question. Of course, in both cases we have still delegated outputs with evaluative content to the machine (or the Magic 8 Ball).

While some may find it obvious that machines cannot tell us what morally ought to be, recent AI startups and proposals show that some people think humans do not have the greatest track record of deciding how the world ought to be, and therefore that AI systems could do better. There is something intuitive about this. However, I think a distinction will clear up the tension. There is a difference between saying that machines would be better in a particular moral situation and saying that machines would be better at deciding what is moral. So, when people say that machines are more moral, they may be starting from the former and mistakenly taking it to mean the latter.

In the former case, for example, a machine could be more consistent at applying a set of guidelines for rejecting or granting a loan to people. The machine would never have a bad day, be too tired, or have an implicit or explicit bias against a person based on race, gender, or sexual preference. This is not to say that some machines will not have those biases built in, but a simple automated system could, in principle, be better in this context. The machine would be applying clear rules that were decided by humans to evaluate the loan application. However, we could enhance this machine with machine learning that goes beyond the rules that humans came up with. The machine could be trained on past loan data to formulate its own (opaque) rules about how to reject and grant loans. In this case, we would have a machine for which we could not evaluate the rules by which people are granted loans. The machine is making its own rules which have significant moral consequences. It is in these cases that control over what is meaningful is lost.
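The contrast between the two kinds of loan machine can be sketched in code. This is a minimal illustration under stated assumptions, not a description of any real lending system. The applicant fields, thresholds, and historical records are hypothetical, and a small hand-written logistic regression stands in for machine learning in general. In `rule_based_decision`, every consideration and threshold is written down by a human. In `learned_decision`, the ‘rules’ are numerical weights that `train_logistic` fits to past outcomes.

```python
import math
from dataclasses import dataclass


@dataclass
class Applicant:
    # Hypothetical features chosen for illustration only.
    income: float          # annual income, in thousands
    debt_ratio: float      # monthly debt payments / monthly income
    years_employed: float


def rule_based_decision(a: Applicant) -> bool:
    """Human-authored rules: every consideration and threshold is explicit
    and can be read, questioned, and changed by people."""
    return a.income >= 30 and a.debt_ratio <= 0.4 and a.years_employed >= 1


def features(a: Applicant) -> list[float]:
    # Rough scaling so that gradient descent behaves reasonably.
    return [a.income / 100, a.debt_ratio, a.years_employed / 10]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(xs, ys, lr=0.1, epochs=2000):
    """Fit logistic-regression weights to past decisions by stochastic
    gradient descent. The fitted weights are the model's 'rules': no human
    chose them, and they need not match any stated lending policy."""
    w = [0.0] * len(xs[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the log-loss with respect to z
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def learned_decision(a: Applicant, w: list[float], b: float) -> bool:
    z = sum(wi * xi for wi, xi in zip(w, features(a))) + b
    return z >= 0.0  # same as predicted probability >= 0.5


# Hypothetical historical decisions (1 = granted, 0 = rejected).
PAST = [
    Applicant(60, 0.2, 5), Applicant(45, 0.3, 3), Applicant(80, 0.1, 10),
    Applicant(20, 0.6, 0), Applicant(25, 0.5, 0.5), Applicant(15, 0.7, 0),
]
LABELS = [1, 1, 1, 0, 0, 0]

if __name__ == "__main__":
    w, b = train_logistic([features(a) for a in PAST], LABELS)
    # The learned "rule" is just these numbers.
    print("learned weights:", w, "bias:", b)
    print("decision:", learned_decision(Applicant(50, 0.3, 2), w, b))
```

Even in this tiny example, the learned weights only indicate how strongly each feature pushes toward approval. They do not state, in terms a loan officer could endorse or dispute, why that weighting is the right one.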

Another example of control over what is meaningful being lost in this way can be found in the art world: in 2021, Warner Brothers signed a deal with an AI company called Cinelytic, which advertises that its AI can:

Inform your casting, greenlighting, financing, budgeting and release decisions in real-timeFootnote 3

Here, AI chooses which art projects (in this case films) are to be funded. The value of artistic expression is hence, in part, determined by an algorithm—an algorithm that bases its outputs on factors that are not known to us. These factors ground decisions about what is ‘good’—or how the world should be.

Imagine that a machine learning algorithm was developed to tell you what you should buy at the grocery store. We do not know what considerations the algorithm uses to determine the list of items. All we know is that the algorithm was trained on data about your grocery purchase history. In this scenario, the algorithm could be recommending products based on the likelihood that you will buy them (similar algorithms are used to recommend movies, TV shows, gadgets, etc.). That may or may not be a good consideration for deciding what to buy at the grocery store. The point is that choosing which considerations should determine what food one buys, and how to weigh them, is an evaluative decision. Not knowing how those decisions are made is a loss of control, similar to letting your son or daughter decide which groceries to buy. Their considerations may not align with your own, and it is those considerations that are important.

Much more is to be said about losing control over what is meaningful [see e.g., [25]]. This is merely a suggestion that there may be a fundamental loss of control when machines are delegated certain decisions. This may help explain negative reactions to things like AI evaluating beautyFootnote 4 or evaluating candidates for a job.Footnote 5 Although the hype surrounding what AI can do has exploded, we should take a second to think about whether a decision should be delegated to AI.

3 Human

3.1 Human modifying ‘control’

Human could first be seen simply as a modifier for ‘control’. Although one would rightly assume that this simply means that a human is in control, it could also refer to a human style of control. In chess, brilliant, unexpected moves are often described as ‘engine moves’; that is, moves in the style of a computer chess engine. Chess commentators frequently analyze possible moves in a game, and some are discarded because they are not “human” moves. Considering this, we can think of ‘human control’ as allowing for the possibility of something other than a human having control, as long as the control exercised is human-like.

One of the most cited journal articles on MHC over autonomous systems uses ‘human control’ with this meaning in mind. In their 2018 article in Frontiers in Robotics and AI, Filippo Santoni de Sio and Jeroen van den Hoven place two conditions on meaningful human control. The first is:

To be under meaningful human control, a decision-making system should demonstrably and verifiably be responsive to the human moral reasons relevant to the circumstances—no matter how many system levels, models, software, or devices of whatever nature separate a human being from the ultimate effects in the world, some of which may be lethal. That is, decision-making systems should track (relevant) human moral reasons [8]

This condition (called the tracking condition in the paper) is specifically about the autonomous system itself making decisions in line with human moral reasons.Footnote 6 That is, the system should make decisions that are ‘human-like’ in that they respond to human moral reasons. The control lies in the verifiable capability of the system to respond to such reasons. It is possible to achieve this condition without having a human involved in any aspect of a particular decision made by the autonomous system.

Implementations of such systems fall into the field of ‘machine ethics’. Machine ethicists aim to endow machines with moral reasoning capabilities [see e.g., [28,29,30]]. Although much work is being done in this field, fundamental objections have been raised. These include the objections that machine ethicists have so far failed to provide good reasons for developing such machines [31], that developing such systems risks reinforcing power asymmetries in society [32], that decisions by such systems will be skewed by implementation constraints and economic viability [32], that such systems cannot ‘value’ and therefore cannot act for moral reasons [33], and that these efforts should be redirected toward ensuring safety [34, 35].

The point is not to settle this debate here. Rather, it is important to understand that ‘human’ could modify ‘control’ in a way that allows for the possibility of machines being in control in a human-like way. If machines cannot have human-like control, for whatever reason, then this would not be an option. I am inclined to say that this is neither desirable nor possible; however, when using the concept of MHC it is important to specify whether the given conceptualization includes or excludes this possibility.

3.2 Designer

One human candidate is the designer of an algorithm. The designer can exert a lot of control to ensure that an algorithm is aligned with human values. The function the algorithm is given, the specific methodology used, and the data used to train it are all choices that affect how the algorithm will behave in the wild.

The choice of which project to work on is already a major ethical decision. When Google joined the US Department of Defense’s Project Maven, which aims to deploy AI in the service of weapons of war, there was controversy [36]. The outcry stemmed from Google’s choice to develop AI for a specific purpose. This only makes sense when we understand that Google, as a designer, has a choice about which projects to pursue. The control that an operator has here is quite limited. The operator could object to using AI in weapons of war; however, by the time they have the opportunity to do so, the weapons have already been designed and developed. Nor can the operator control others’ use of the new weapon. Designers have more control here: they have the power to decide not to build these weapons in the first place.

Albert Einstein, discussing the responsibility of physicists in developing the atomic bomb, had this to say about Alfred Nobel:

Alfred Nobel invented the most powerful explosive ever known up to his time, a means of destruction par excellence. In order to atone for this, in order to relieve his human conscience, he instituted his awards for the promotion of peace and for achievements of peace.

He is, in effect, saying that those physicists that helped to create the bomb deserve moral responsibility for its terrible outcomes—and will need atonement. Designers of AI systems today are in a similar position. Those working on methods and techniques which will be incorporated into surveillance and weapons hold some moral responsibility for what happens next. This is because they can be accountable (but not blameworthy) for the harm that the resulting technologies cause. That is, they are held responsible for a particular harm that they were not responsible for preventing [37].

It is a good sign to see that the outcry about Google’s pursuit of this specific project did not just come from activists and academics; rather, the group setting out to convince Google to stop this pursuit were employees of Google itself. These engineers did not want to be a part of this project—and they exerted some control by speaking out against it. If MHC is, in part, about assigning accountability and responsibility for the consequences of AI, then the designers of these algorithms themselves must exert control over the projects that they choose to work on.

However, choosing a project to work on only goes so far. How one carries out that project involves many choices that designers control. What dataset will they use to train their algorithm? ImageNet RouletteFootnote 7 (which has since been shut down) is a stark example of the dangers of not controlling the training data. ImageNet Roulette identified faces in uploaded images and matched labels to those faces. Its algorithm was trained on the (in)famous ImageNet database, which has over 14 million images [38] labeled by people using Amazon’s Mechanical Turk.Footnote 8 While preparing for a lecture (aiming to get a good example of racism and AI), I uploaded an image of Trayvon Martin (a young black man murdered by George Zimmerman because of the color of his skin) onto the site. It labeled his face as “rapist”. The only information used to apply this label was the smiling face in the picture I uploaded.

It does not come as a shock that the system showed bias against women and people of color [40]. The point of this example is that designers also have choices regarding how to build an algorithm. The control they exercise (or fail to exercise) over which datasets they use, and whether they practice due diligence regarding how those datasets were compiled and labeled, matters.

Joanna Bryson and Andreas Theodorou have argued that MHC can be realized if we ensure that the system operates within the parameters that we expect. They claim that despite the hype regarding out-of-control AI, there is nothing difficult about establishing the boundaries by which an AI system operates. They argue, “constraining processes like learning and planning allows them to operate more efficiently and effectively, as well as more safely” [41]. Scott Robbins makes a similar claim while proposing that AI be ‘enveloped’. He argues that rather than focusing on the explainability of a particular AI system, clearly articulating the boundaries, possible inputs, possible outputs, functions, and training data of an AI system allows humans to decide when, where, and if that system should be used—giving them more control [42].
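One way to picture what clearly articulating a system’s boundaries might involve is a wrapper that checks a model’s context, inputs, and outputs against a human-written declaration. This is a hypothetical sketch in the spirit of the proposals above, not Bryson and Theodorou’s or Robbins’s own formulation. `Envelope`, `run_enveloped`, `toy_model`, and the X-ray labels are all invented for illustration. Cases outside the declared envelope are not decided by the model but returned to a human.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Envelope:
    """Hypothetical, human-written declaration of where and how a model
    may be used. Nothing outside these bounds is decided by the model."""
    allowed_contexts: frozenset   # deployment settings humans have approved
    input_range: tuple            # (low, high) for a single numeric input
    allowed_outputs: frozenset    # the only labels the system may emit


@dataclass
class Decision:
    label: Optional[str]          # None when the case goes to a human
    deferred_to_human: bool
    reason: str


def run_enveloped(model: Callable[[float], str], env: Envelope,
                  context: str, x: float) -> Decision:
    """Run the model only inside its declared envelope; otherwise defer."""
    if context not in env.allowed_contexts:
        return Decision(None, True, f"context {context!r} is outside the envelope")
    low, high = env.input_range
    if not low <= x <= high:
        return Decision(None, True, f"input {x} is outside [{low}, {high}]")
    label = model(x)
    if label not in env.allowed_outputs:
        return Decision(None, True, f"output {label!r} is not permitted")
    return Decision(label, False, "within envelope")


def toy_model(score: float) -> str:
    # Stand-in for an opaque model; here it is just a threshold.
    return "refer_to_specialist" if score > 0.7 else "no_finding"


ENV = Envelope(
    allowed_contexts=frozenset({"xray_screening"}),
    input_range=(0.0, 1.0),
    allowed_outputs=frozenset({"refer_to_specialist", "no_finding"}),
)

if __name__ == "__main__":
    print(run_enveloped(toy_model, ENV, "xray_screening", 0.9))
    print(run_enveloped(toy_model, ENV, "job_screening", 0.9))
```

The boundaries are fixed before deployment and can be read by people who cannot inspect the model itself. That legibility is what gives those people a say in when, where, and if the system is used.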

These articles point to the fact that designers themselves are responsible for controlling the direction of AI research and design. If they abdicate this responsibility, then little can be done to prevent AI-powered surveillance and weapons that will cause irreparable harm to society. We cannot unmake the next atomic bomb. It simply should not be made in the first place.

3.3 Operator

The operator is the most obvious candidate for the human in MHC. MHC, coming out of the autonomous weapons literature, was most definitely referring to the person who would be using the autonomous weapon and, therefore, the algorithm. Article 36, a non-profit group working to regulate weapons systems, states that: “‘Positive obligations’—rules on the use of [Weapons Systems]—should require users to control location, duration and target specification, as well as other aspects of design and use” [43, emphasis mine]. They specifically target the users, or operators, of the system.

It makes sense to focus on the operator, as the operator of any technology is responsible for using it properly. Many technologies require certifications or licenses to operate them. Cars, planes, boats, MRI machines, forklifts, guns, etc., all require some form of training before one is permitted to use them. This is because we know that, if they are used incorrectly, significant harm can be done. The training provides operators with the knowledge they need to have control over the technology for which they will be responsible.

The question that we need to answer with each AI system is whether or not the operator will have the knowledge needed to have control over the system. If an algorithm labels someone as ‘high-risk’, preventing them from receiving a visa like in the example of Eyal Weizman, does the operator have any information with which to verify this label? In short, is the operator in a position to have control that is meaningful? Knowing that systems will make mistakes, there must be a way for operators to override or correct them. If not, the control that operators have is illusory.

3.4 Subject

While riding my bike through the city I frequently come across situations in which I need to look a driver in the eye to ensure that they know I am there. I will change my course of action depending on whether I can catch the driver’s eye. Autonomous cars, being driven by algorithms, change this scenario. Catching the passenger’s eye does not give me the same control over the situation I have when it is a human driver.Footnote 9

In this scenario, I am not the operator or designer of the algorithm; I am the subject. The algorithm will be making decisions that affect me, yet I am merely a visual input into the AI system. How am I to know whether the AI system ‘sees’ me? If I knew that it was aware of me, I would navigate my environment differently than if it were not. However, I do not have this information, which lessens the control I have. The system may not notice me at all, or it may react to something completely unexpected. The point is that it is, in principle, possible for the subjects of an algorithm to have control over how they navigate an increasingly algorithmic world.

This consideration has not gone completely unnoticed by researchers. A team at Princeton University created a device for pedestrians to wear that would better ensure that driverless cars were able to recognize them [44]. While it may seem ridiculous that the responsibility lies with pedestrians, who will most likely not benefit from driverless cars, it is not without precedent. Runners and cyclists often wear reflective gear at night to prevent cars from hitting them. Having to wear a recognition device for autonomous cars may just become another fact of life.

Driverless cars are just one of many examples. When black women are not recognized by the facial recognition algorithms they must use to do something, such as getting through airport security or taking an online exam [see e.g., 45], they lose some of the control they had. When people are subjected to video interviews in which AI evaluates and categorizes them for hiring committees,Footnote 10 they lose control over how to be an effective interviewee. The point is that those who will be required to interact with an AI system also require some control. There must be a plan to give them the information they need and to show them how to act in a way that protects their safety and interests.

3.5 Government

Governments are also candidates for the humans who are supposed to be in control. In the race to replace ‘dumb’ infrastructure with ‘smart’ infrastructure, it is important that the new infrastructure remains under society’s control. If the system is so complex and opaque that governments must delegate control to technology companies, then we have a serious problem. Consider the control delegated to Boeing over certifying its own 737 MAX. Boeing simply had too much incentive to certify a terrible product, and the result was the death of hundreds of people.

Governments can exercise control over AI by creating legally binding boundaries for it. The EU AI Act is an attempt to do just this. The legislation sorts AI systems into risk categories, each subject to different rules. The highest level of risk (“unacceptable risk”) amounts to a ban: the act classifies manipulative AI systems, as well as AI systems used for so-called ‘social scoring’, as unacceptably risky.Footnote 11 The second highest level of risk (“high risk”) covers AI that poses a threat to ‘health, safety, and fundamental rights’. If an AI system is labeled high risk, then the provider must meet a list of obligations, including meeting specified data quality criteria, creating technical documentation, and ensuring that ‘systems can be effectively overseen by natural persons’ [46].

I will not get into the debate about the quality of this piece of legislation.Footnote 12 What it shows is an attempt by a government to exert control over these systems. Governments cannot oversee the operation of every AI system, but they can require companies to build systems according to rules that go some way towards keeping them under meaningful human control.

4 Control

4.1 The training data of an AI system

The data used to train a particular algorithm are central to how that algorithm will work in the wild. ImageNet Roulette, mentioned earlier, dramatically highlighted this, showing how ethically salient the labeling process behind the ImageNet dataset is. The ImageNet database contains 2,395 categories for people. When Kate Crawford and artist Trevor Paglen used labeled photos of humans within the ImageNet database to power an AI algorithm that identified and categorized new faces supplied as input [47], the results were less than acceptable.

ImageNet Roulette made it clear that the data used to train a system are incredibly important for how that system operates. Amazon attempted to use AI for hiring and had to shut the system down after it became clear that the algorithm preferred men over women for management positions. The data used to train the algorithm came from Amazon’s past hiring, which was also heavily biased toward men. Apple experienced the same thing when it applied AI to decisions about credit card limits. Steve Wozniak (and others) publicly complained that he was given 10 times the credit limit his wife was given, even though the couple had only shared bank accounts and the same assets [48]. Finally, an algorithm used to detect skin cancer was widely acclaimed after it was shown to be as good as or better than dermatologists at detecting melanoma. However, it was later shown that the algorithm performed terribly on people with darker skin tones. When researchers looked at the training data, they found that of the 2,436 training images for which skin color was available, only 10 showed darker skin, and only 1 showed the darkest skin tone (according to the Fitzpatrick scale) [49].

If an algorithm has been trained without data from a particular group, then it is unlikely to function properly for that group. The first way to facilitate control here is to be transparent about the training data used. A doctor who knows that the skin cancer-detecting algorithm was trained on few images of people with darker skin tones will be in a better position to exercise control over the algorithm: its outputs for a patient with a darker skin tone will not carry as much weight. The second way to facilitate control is to ensure that the training data are representative of the target population. Designers, operators, and governments should implement methods to exercise control over this. Designers must work with operators to ensure that the training data are representative of the intended target group, and governments could require third-party audits [50].
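The second form of control, checking representativeness, can be sketched as a comparison between each group’s share of the training data and its share of the population the system will be used on. The Python sketch below is a hypothetical illustration, not an established auditing method: the function name, the 0.5 ratio threshold, and the target-population shares are all assumptions. Only the counts (2,436 images, 10 of them showing darker skin) come from the skin cancer example above.

```python
from dataclasses import dataclass


@dataclass
class GroupReport:
    train_share: float       # fraction of training examples from this group
    target_share: float      # fraction of the intended target population
    ratio: float             # train_share / target_share
    under_represented: bool  # True if ratio falls below the chosen threshold


def representation_report(train_counts: dict[str, int],
                          target_shares: dict[str, float],
                          min_ratio: float = 0.5) -> dict[str, GroupReport]:
    """Compare each group's share of the training data with its share of the
    target population. min_ratio is an illustrative threshold, not a standard."""
    total = sum(train_counts.values())
    if total == 0:
        raise ValueError("empty training set")
    report = {}
    for group, target in target_shares.items():
        share = train_counts.get(group, 0) / total
        ratio = share / target if target > 0 else float("inf")
        report[group] = GroupReport(share, target, ratio, ratio < min_ratio)
    return report


# Counts from the skin-image example: 2,436 images with skin colour available,
# 10 showing darker skin; "other skin tones" is simply the remainder.
# The target shares below are HYPOTHETICAL placeholders.
counts = {"darker skin": 10, "other skin tones": 2436 - 10}
targets = {"darker skin": 0.20, "other skin tones": 0.80}
for group, r in representation_report(counts, targets).items():
    print(f"{group}: {r.train_share:.2%} of training data, "
          f"ratio {r.ratio:.2f}, under-represented={r.under_represented}")
```

A report like this is only as good as the demographic labels behind it; in the skin cancer case, skin colour was not recorded for every image, so a real audit would also need to account for the missing labels.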

4.2 The inputs to an AI system

Another way to exert some control over AI algorithms is to control the possible inputs they will receive. An AI algorithm used to evaluate potential hires could draw on a wide variety of inputs: candidates’ social media feeds, employment history, criminal background checks, search history, photos, etc. However, this creates many opportunities for the system to use irrelevant or unethical considerations to reach its output.

Employers are not allowed to ask a woman in an interview whether she is planning on having children, because this should be irrelevant to whether she gets the job. Using social media feeds as inputs to an AI hiring system allows exactly this information to be used to determine whether someone gets a job. And due to the opacity of ML systems, we would not even know it was happening.

This complexity can arise from having multiple sources of input, but it can also arise simply from the environment in which a machine operates. A machine operating in an industrial setting can have tightly controlled input possibilities. However, autonomous cars operating on the open road can have seemingly unlimited possible inputs. Everything that can appear on a road is a possible input: other cars, road signs, bicycles, pedestrians, children playing, animals, etc. This makes it extremely complex to get the algorithm to reach the correct outputs. It also makes it difficult to understand exactly what went wrong. Tesla cars have infamously had great difficulty distinguishing overpasses from turning semi-trucks, which has led to multiple deaths [51]. Scholars have argued that autonomous cars simply will not work unless these inputs can be controlled [42, 52].

Each source of input must be justified; more data will not necessarily lead to better outcomes. Furthermore, the possible inputs arising from a particular context can create insurmountable difficulties for controlling the system. As mentioned above, AI works best when enveloped, that is, placed in tightly controlled environments where possible inputs are known and accounted for [42, 52].
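The requirement that each source of input be justified can be made operational as an allowlist: only fields with a recorded justification ever reach the model, and everything else is dropped and reported. The Python sketch below assumes a hypothetical AI hiring system; the field names, the justifications, and the function `filter_inputs` are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InputSource:
    name: str
    justification: str  # why this source is relevant to the hiring decision


# Hypothetical allowlist: only sources with a stated, job-related
# justification may be passed to the model.
ALLOWED_SOURCES = {
    "employment_history": InputSource("employment_history",
                                      "evidence of relevant experience"),
    "skills_test": InputSource("skills_test",
                               "direct measure of job-related skill"),
}


def filter_inputs(candidate_record: dict[str, object]) -> tuple[dict[str, object], list[str]]:
    """Keep only allowlisted, justified fields; return the names of dropped fields."""
    kept: dict[str, object] = {}
    dropped: list[str] = []
    for field_name, value in candidate_record.items():
        source = ALLOWED_SOURCES.get(field_name)
        if source is not None and source.justification.strip():
            kept[field_name] = value
        else:
            # Unjustified sources (e.g. social media feeds) never reach the model.
            dropped.append(field_name)
    return kept, dropped


record = {"employment_history": ["analyst, 2018-2022"],
          "social_media_feed": "recent posts",
          "skills_test": 87}
kept, dropped = filter_inputs(record)
print(sorted(kept), dropped)
```

An allowlist of this kind controls which fields reach the model, but it cannot by itself stop a model from inferring excluded information from proxies hidden in the allowed fields, so it complements rather than replaces scrutiny of the training data.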

4.3 The outputs of an AI system

What can the AI system do? Many AI systems are tasked with categorizing inputs, and the type of categorization matters. Determining whether a specific object is in an image is very different from categorizing people as high or low risk. The latter type of output has moral consequences: people labeled as high-risk may be denied a visa or kept in prison. Other systems, like autonomous vehicles, have physical outputs: they can turn, accelerate, brake, etc. Autonomous weapons can target and inflict lethal harm.

Control also lies in restricting the outputs of these systems. Governments may need to ban certain types of outputs. As previously mentioned, various actors are attempting to get governments to ban lethal autonomous weapons. Other scholars have suggested that evaluative outputs (e.g., labeling someone as high-risk) should not be delegated to AI systems [24]. There is, as yet, no obvious answer to the question of which outputs should and should not be delegated to AI. Designers, operators, and governments must exercise control by choosing these outputs.

At the very least, the outputs should be verifiable, and it should be possible for operators to meaningfully evaluate them. Some outputs may be unverifiable in principle. One cannot, for example, determine whether an algorithm was correct in labeling someone as high-risk: after locking that person away, one cannot determine whether they would have committed another crime. This makes the AI system’s accuracy difficult to evaluate, and it would be odd to use a system whose accuracy is unknown.
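One way to picture designers, operators, and governments choosing outputs is a policy table stating, for each type of output, whether it may be acted on automatically, may only advise a human decision-maker, or is prohibited. The Python sketch below is hypothetical: the output types and their assignments are illustrative placeholders rather than recommendations, since which outputs belong where is precisely the open question raised above.

```python
from enum import Enum


class OutputPolicy(Enum):
    AUTOMATE = "may be acted on automatically"
    HUMAN_DECISION = "may only advise; a person decides"
    PROHIBITED = "may not be produced at all"


# Hypothetical policy table; the entries are illustrative only.
POLICY = {
    "object_in_image": OutputPolicy.AUTOMATE,
    "risk_label": OutputPolicy.HUMAN_DECISION,  # evaluative and hard to verify
    "lethal_targeting": OutputPolicy.PROHIBITED,
}


def route(output_type: str) -> OutputPolicy:
    """Look up how an output may be used. Output types nobody has explicitly
    approved default to the most restrictive policy."""
    return POLICY.get(output_type, OutputPolicy.PROHIBITED)


for output_type in ("object_in_image", "risk_label", "credit_limit"):
    print(output_type, "->", route(output_type).value)
```

Defaulting unknown output types to the most restrictive policy means that any new capability must be deliberately approved before it can be used, which keeps the choice of outputs in human hands.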

4.4 The limits of AI

Control could refer to one’s ability to control the contexts in which AI is acceptable to operate and the decisions we delegate to such systems. We exercise this kind of control over technology all the time. We do not use chainsaws in daycare centers. Industrial robots operate in tightly controlled environments that humans cannot get close to, which ensures safety. The goal is to constrain the system to limit its possible consequences, i.e., to envelop it.

This concept has been used by Luciano Floridi to show how autonomous vehicles could be successful. Rather than having autonomous vehicles operate in the highly variable driving infrastructure that exists today, one could envision a completely enveloped environment in which autonomous cars do not have to rely on cameras to ‘see’ road signs and pedestrians; instead, the infrastructure for autonomous cars would be fitted with sensors, and pedestrians would be kept out of the enveloped environment. This prevents many of the problems that autonomous cars face today. Muddy stop signs or bad weather would not cause them to miss road signs, exits, and lane lines; they would simply ‘read’ them via their sensors. And because pedestrians and cyclists would not be present, there could be no crashes resulting in their deaths.

While interesting, this would be a costly project, and Floridi himself has pointed out that it may not be worth the effort. However, there are other enveloped environments where autonomous vehicles have performed quite well.Footnote 13

4.5 The good life

There may be multiple ways of existing as human beings, and some of them are not good human existences. One could interpret MHC as referring to control over what counts as a meaningful human existence and over how one could achieve that existence. If AI starts telling us what counts as a meaningful human life (and is wrong about it), then it could force us to adapt to its conception. We could be striving for a life chosen by an algorithm, one that may have little to do with an actually good human life.

It is conceivable, for example, that children will adapt themselves to machines by forcing smiles in school with the aim of being counted as ‘engaged’. Our writing may change (be dumbed down) so as not to confuse the machines reading, categorizing, and evaluating it. While similar concerns have been raised about, for example, calculators and math performance or spell checkers and spelling performance, these machines present a novel concern.Footnote 14 It is not only that delegating writing to large language models (e.g., ChatGPT) could cause a decrease in writing performance; delegating the evaluation of writing to machines could also cause writers to adapt to what the machines consider good writing. A machine’s evaluation may deviate from our own, especially when the writing is novel and creative.

This is about our control regarding how we can exist as human beings. The concern is that the world we are building with AI may be one in which humans merely serve to benefit the data-hungry machines and those who profit from them rather than AI being used to benefit human beings.

We are filling the world, our homes, and ourselves with sensors: smart CCTV cameras, digital assistants, smartphones and smartwatches, etc. These sensors monitor our emotions, our relationships, our daily routines and habits, and our likes and preferences. These data are used to make judgments about us as human beings that may determine whether we get a loan or a promotion, or whether we are placed on a watch list.

Increased awareness of such surveillance has already been shown to create a chilling effect, that is, an effect on how we behave in the world. We do not say certain things for fear that a machine will take them out of context and block our account. We do not speak about our political beliefs because doing so could prevent us from getting a visa to a particular country.

Added to this is the concern that the world we are building when we rely increasingly on AI-powered machines is unsustainable. Robbins and van Wynsberghe argue that in our increasing dependence upon AI, “we run the risk of locking ourselves into a technological infrastructure that is energy-intensive in both its development and use, as well as energy-intensive to mitigate certain ethical concerns” [53]. Our good lives and the good lives of future generations depend upon a world with a healthy climate. We, as humans, must exert control over the sustainability of the infrastructure that we choose to depend upon.

5 Conclusion

Meaningful human control is the critical concept that gets at the heart of why AI is not just another technology but one that creates novel ethical issues. Many of our fears surrounding AI are fears of losing control. Here, I have highlighted the many meanings of MHC, not to define it but to survey the many threats to our control over these machines and to point to questions that will have to be considered if we are to have a chance of maintaining that control.

There will never be a one-size-fits-all solution to maintaining our control over AI-powered machines. The capabilities of these machines and the contexts they operate in will each necessitate their own conditions for control. Every step of the design of these systems requires thinking about how each of the different humans outlined in Sect. 3 will be able to maintain control. That control has to be meaningful, and control and responsibility need to be given to the humans who are meaningful in the context at hand. Designers must think about the control they exercise not only in selecting the training data, inputs, and capabilities of these systems, but also in choosing the very projects they work on. Operators need the training and information with which to override or correct mistakes in the system. Bystanders need to be able to safely navigate a world increasingly powered by AI. Finally, governments must put up legal guardrails to ensure that these conditions are met.

Not only are the narrow control concerns of individual AI-powered systems important, but so are the broader concerns about controlling the things that are meaningful in life and about individuals’ control over their conceptions and realization of the good life. The speed with which AI-powered systems are being incorporated into our everyday lives challenges this control.

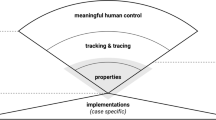

Much, however, remains to be done. Identifying the many meanings of MHC and the associated ethical issues is only the first step in a process that should lead to concrete design requirements. Future work should focus on a framework for determining which meanings apply to specific AI systems. This would allow designers to know which ethical issues surrounding MHC apply to their machine. Another step that requires more work is translating these issues surrounding MHC into actionable properties. Cavalcante Siebert et al., for example, used the tracking and tracing conditions of Santoni de Sio and van den Hoven to derive actionable properties for AI systems under MHC [54]. While I think that Santoni de Sio and van den Hoven’s paper covers a very narrow interpretation of MHC (Sect. 3.1), it is important for work like this to be done with the meanings of MHC raised in this article, so that designers can realize MHC through design.

AI is a powerful technology. It is being incorporated into our lives in a myriad of ways, and the convenience it offers is seductive. We must find a way to ensure that we are using it to realize a world that we had meaningful control in choosing, not a world mysteriously controlled by AI.

Notes

1. Uber has since given up on self-driving cars [15].

2. Weizman is a professor who runs an organization called Forensic Architecture, which investigates war crimes. His travel itineraries and contacts are deeply sensitive, so he was unable to divulge this information to the US government. See [22].

3. “Cinelytic | Built for a Better Film Business,” accessed February 27, 2021, https://www.cinelytic.com/platform/.

4. See e.g., http://beauty.ai/, which is the “First International Beauty Contest Judged by Artificial Intelligence”.

5. See e.g., https://www.hirevue.com/, which claims that video interviews “combined with artificial intelligence—provides excellent insight into attributes like social intelligence (interpersonal skills), communication skills, personality traits, and overall job aptitude.” There is no evidence that this approach works, and many have rightly called it pseudoscientific and biased [see e.g., 26].

6. For a discussion of what kind of reasons MHC should be tracking, see [27].

7. ImageNet Roulette is no longer active, but you can read about it here: https://www.nytimes.com/2019/09/20/arts/design/imagenet-trevor-paglen-ai-facial-recognition.html

8. Mechanical Turk is a platform on which people can complete small tasks for pennies. The ethics of using this service are already questionable. See e.g., Williamson [39].

9. It is odd that there is no simple design requirement for a light signaling that a car is operating in autonomous mode. It seems relevant for people to know whether a Tesla is being operated by an algorithm or a human.

10. See e.g., https://www.hirevue.com/.

11. Social scoring is the practice of governments using AI to generate ‘trustworthiness’ scores for individuals and giving preferential treatment to those with high scores [46].

12. For a critical analysis, see [46].

13. Thanks to an anonymous reviewer for this point.

References

1. Sharkey, N.: The evitability of autonomous robot warfare. Int. Rev. Red Cross 94, 787–799 (2012)

2. Sharkey, N.: Towards a principle for the human supervisory control of robot weapons. PT (2014)

3. Tonkens, R.: The case against robotic warfare: a response to Arkin. In: Military ethics and emerging technologies. Routledge (2014)

4. Arkin, R.: Governing lethal behavior: embedding ethics in a hybrid deliberative/reactive robot architecture. In: Proceedings of the 3rd ACM/IEEE International Conference on Human Robot Interaction, pp. 121–128. Association for Computing Machinery, New York (2008). https://doi.org/10.1145/1349822.1349839

5. Arkin, R., Ulam, P., Wagner, A.R.: Moral decision making in autonomous systems: enforcement, moral emotions, dignity, trust, and deception. Proc. IEEE 100, 571–589 (2012)

6. Amoroso, D., Tamburrini, G.: Autonomous weapons systems and meaningful human control: ethical and legal issues. Curr. Robot. Rep. 1, 187–194 (2020)

7. Mecacci, G., Santoni de Sio, F.: Meaningful human control as reason-responsiveness: the case of dual-mode vehicles. Ethics Inf. Technol. 22, 103–115 (2020)

8. Santoni de Sio, F., van den Hoven, J.: Meaningful human control over autonomous systems: a philosophical account. Front. Robot. AI 5, 1–14 (2018)

9. Ficuciello, F., Tamburrini, G., Arezzo, A., Villani, L., Siciliano, B.: Autonomy in surgical robots and its meaningful human control. Paladyn J. Behav. Robot. 10, 30–43 (2019)

10. Tesla: Autopilot and full self-driving capability. Tesla Support (2019). https://www.tesla.com/support/autopilot. Accessed 24 February 2021

11. Adams, T.: Self-driving cars: from 2020 you will become a permanent backseat driver. The Guardian (2015). http://www.theguardian.com/technology/2015/sep/13/self-driving-cars-bmw-google-2020-driving. Accessed 17 May 2021

12. Hawkins, A.J.: Here are Elon Musk’s wildest predictions about Tesla’s self-driving cars. The Verge (2019). https://www.theverge.com/2019/4/22/18510828/tesla-elon-musk-autonomy-day-investor-comments-self-driving-cars-predictions. Accessed 17 May 2021

13. BBC News: Elon Musk says full self-driving Tesla tech “very close”. BBC News (9 July 2020). https://www.bbc.com/news/technology-53349313. Accessed 17 May 2021

14. Chin, C.: Key Volkswagen exec admits full self-driving cars “may never happen”. The Drive (2020). https://www.thedrive.com/tech/31816/key-volkswagen-exec-admits-level-5-autonomous-cars-may-never-happen. Accessed 17 May 2021

15. Kollewe, J.: Uber ditches effort to develop own self-driving car. The Guardian (2020). http://www.theguardian.com/technology/2020/dec/08/uber-self-driving-car-aurora. Accessed 17 May 2021

16. Adams, S.J., Henderson, R.D.E., Yi, X., Babyn, P.: Artificial intelligence solutions for analysis of X-ray images. Can. Assoc. Radiol. J. 72, 60–72 (2021)

17. López-Cabrera, J.D., Orozco-Morales, R., Portal-Diaz, J.A., Lovelle-Enríquez, O., Pérez-Díaz, M.: Current limitations to identify COVID-19 using artificial intelligence with chest X-ray imaging. Health Technol. 11, 411–424 (2021)

18. Kerr, D.: Here’s how your Uber is learning to drive. CNET (2017). https://www.cnet.com/news/uber-self-driving-car-safety-driver-training-pittsburgh-test-track/. Accessed 29 June 2021

19. Wright, T.J., Agrawal, R., Samuel, S., Wang, Y., Zilberstein, S., Fisher, D.L.: Effects of alert cue specificity on situation awareness in transfer of control in Level 3 automation. Transp. Res. Rec. J. Transp. Res. Board 2663, 27–33 (2017)

20. Article 36: The United Kingdom and lethal autonomous weapons systems. Article 36, United Kingdom (April 2016). http://www.article36.org/wp-content/uploads/2016/04/UK-and-LAWS.pdf

21. Weizman, E.: I was denied entry into the U.S. because of a “Homeland Security algorithm”. Fast Company (2020). https://www.fastcompany.com/90466400/i-was-denied-entry-into-the-u-s-im-not-a-terrorist-i-investigate-human-rights-abuses. Accessed 2 February 2021

22. Mackey, R.: Homeland Security algorithm revokes U.S. visa of war crimes investigator Eyal Weizman. The Intercept (2020). https://theintercept.com/2020/02/20/homeland-security-algorithm-revokes-u-s-visa-war-crimes-investigator-eyal-weizman/. Accessed 2 February 2021

23. Robbins, S.: A misdirected principle with a catch: explicability for AI. Minds Mach. 29, 495–514 (2019)

24. Robbins, S.: Machine learning & counter-terrorism: ethics, efficacy, and meaningful human control. Doctoral thesis, Technical University of Delft, Delft, The Netherlands (2021). https://repository.tudelft.nl/islandora/object/uuid:ad561ffb-3b28-47b3-b645-448771eddaff. Accessed 7 February 2021

25. Robbins, S.: Recommending ourselves to death: values in the age of algorithms. In: Genovesi, S., Kaesling, K., Robbins, S. (eds.) Recommender systems: legal and ethical issues. Springer Nature (2023, forthcoming)

26. Engler, A.: Auditing employment algorithms for discrimination. Brookings (2021). https://www.brookings.edu/research/auditing-employment-algorithms-for-discrimination/. Accessed 13 September 2021

27. Veluwenkamp, H.: Reasons for meaningful human control. Ethics Inf. Technol. 24, 51 (2022)

28. Wallach, W.: Implementing moral decision making faculties in computers and robots. AI Soc. 22, 463–475 (2007)

29. Wallach, W., Allen, C.: Moral machines: teaching robots right from wrong, 1st edn. Oxford University Press, New York (2010)

30. Scheutz, M.: The need for moral competency in autonomous agent architectures. In: Müller, V.C. (ed.) Fundamental issues of artificial intelligence, pp. 515–525. Springer International Publishing (2016). https://doi.org/10.1007/978-3-319-26485-1_30

31. van Wynsberghe, A., Robbins, S.: Critiquing the reasons for making artificial moral agents. Sci. Eng. Ethics 25, 719–735 (2019)

32. Herzog, C.: Three risks that caution against a premature implementation of artificial moral agents for practical and economical use. Sci. Eng. Ethics 27, 3 (2021)

33. Véliz, C.: Moral zombies: why algorithms are not moral agents. AI Soc. (2021). https://doi.org/10.1007/s00146-021-01189-x

34. Sharkey, A.: Can we program or train robots to be good? Ethics Inf. Technol. 22, 283–295 (2020)

35. Yampolskiy, R.V.: Artificial intelligence safety engineering: why machine ethics is a wrong approach. In: Müller, V.C. (ed.) Philosophy and theory of artificial intelligence, pp. 389–396. Springer, Berlin, Heidelberg (2013). https://doi.org/10.1007/978-3-642-31674-6_29

36. Wakabayashi, D., Shane, S.: Google will not renew Pentagon contract that upset employees. The New York Times (1 June 2018). https://www.nytimes.com/2018/06/01/technology/google-pentagon-project-maven.html. Accessed 27 February 2021

37. van de Poel, I.: The relation between forward-looking and backward-looking responsibility. In: Vincent, N.A., van de Poel, I., van den Hoven, J. (eds.) Moral responsibility beyond free will and determinism, pp. 37–52. Springer Netherlands, Dordrecht (2011). https://doi.org/10.1007/978-94-007-1878-4_3

38. Reynolds, M.: New computer vision challenge wants to teach robots to see in 3D. New Scientist (2017). https://www.newscientist.com/article/2127131-new-computer-vision-challenge-wants-to-teach-robots-to-see-in-3d/. Accessed 27 February 2021

39. Williamson, V.: On the ethics of crowdsourced research. PS Polit. Sci. Polit. 49, 77–81 (2016)

40. Metz, C.: ‘Nerd,’ ‘nonsmoker,’ ‘wrongdoer’: how might A.I. label you? The New York Times (20 September 2019). https://www.nytimes.com/2019/09/20/arts/design/imagenet-trevor-paglen-ai-facial-recognition.html. Accessed 27 February 2021

41. Bryson, J.J., Theodorou, A.: How society can maintain human-centric artificial intelligence. In: Toivonen, M., Saari, E. (eds.) Human-centered digitalization and services, pp. 305–323. Springer, Singapore (2019). https://doi.org/10.1007/978-981-13-7725-9_16

42. Robbins, S.: AI and the path to envelopment: knowledge as a first step towards the responsible regulation and use of AI-powered machines. AI Soc. 35, 391–400 (2020)

43. Article 36: Regulating autonomy in weapons systems. Blackmore Ltd. (2020). https://article36.org/wp-content/uploads/2020/10/Regulating-autonomy-leaflet.pdf. Accessed 28 June 2021

44. Li, Z., Wu, C., Wagner, S., Sturm, J.C., Verma, N., Jamieson, K.: REITS: reflective surface for intelligent transportation systems. In: Proceedings of the 22nd International Workshop on Mobile Computing Systems and Applications, pp. 78–84 (2021). http://arxiv.org/abs/2010.13986. Accessed 15 April 2023

45. Buolamwini, J., Gebru, T.: Gender shades: intersectional accuracy disparities in commercial gender classification. In: Conference on Fairness, Accountability and Transparency, pp. 77–91 (2018). http://proceedings.mlr.press/v81/buolamwini18a.html. Accessed 25 June 2019

46. Veale, M., Borgesius, F.Z.: Demystifying the draft EU artificial intelligence act — analysing the good, the bad, and the unclear elements of the proposed approach. Comput. Law Rev. Int. 22, 97–112 (2021)

47. Wong, J.C.: The viral selfie app ImageNet Roulette seemed fun – until it called me a racist slur. The Guardian (18 September 2019). https://www.theguardian.com/technology/2019/sep/17/imagenet-roulette-asian-racist-slur-selfie. Accessed 14 October 2021

48. Reuters: Apple co-founder says Apple Card algorithm gave wife lower credit limit. Reuters (11 November 2019). https://www.reuters.com/article/us-goldman-sachs-apple-idUSKBN1XL038. Accessed 18 October 2021

49. Wen, D., Khan, S.M., Xu, A.J., Ibrahim, H., Smith, L., Caballero, J., et al.: Characteristics of publicly available skin cancer image datasets: a systematic review. Lancet Digit. Health 4, e64–e74 (2022)

50. Reddy, S., Allan, S., Coghlan, S., Cooper, P.: A governance model for the application of AI in health care. J. Am. Med. Inform. Assoc. 27, 491–497 (2019)

51. Hawkins, A.J.: Tesla didn’t fix an Autopilot problem for three years, and now another person is dead. The Verge (2019). https://www.theverge.com/2019/5/17/18629214/tesla-autopilot-crash-death-josh-brown-jeremy-banner. Accessed 16 April 2023

52. Floridi, L.: Enveloping the world: the constraining success of smart technologies. In: Mauger, J. (ed.) CEPE 2011: Ethics in interdisciplinary and intercultural relations, pp. 111–116. Milwaukee, Wisconsin (2011)

53. Robbins, S., van Wynsberghe, A.: Our new artificial intelligence infrastructure: becoming locked into an unsustainable future. Sustainability 14, 4829 (2022)

54. Cavalcante Siebert, L., Lupetti, M.L., Aizenberg, E., Beckers, N., Zgonnikov, A., Veluwenkamp, H., et al.: Meaningful human control: actionable properties for AI system development. AI Ethics 3, 241–255 (2023)

Acknowledgements

This paper has benefitted from discussions with my colleagues in the CST AI Research Group at the Center for Science and Thought here in Bonn where I presented an earlier version of this paper. I would like to especially thank Inga Blundell for her helpful feedback and discussion on earlier versions of the paper.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Robbins, S. The many meanings of meaningful human control. AI Ethics (2023). https://doi.org/10.1007/s43681-023-00320-6

DOI: https://doi.org/10.1007/s43681-023-00320-6