Abstract

This article analyzes mid-implementation course corrections in a quality improvement innovation for a maternal and child health network working in a large Midwestern metropolitan area. Participating organizations received restrictive funding from this network to screen pregnant women and new mothers for depression, make appropriate referrals, and log screening and referral data into a project-wide data system over a one-year pilot program. This paper asked three research questions: (1) What problems emerged by mid-implementation of this program that required course correction? (2) How were advocacy targets developed to influence network and agency responses to these mid-course problems? (3) What specific course corrections were identified and implemented to get implementation back on track? This ethnographic case study employs qualitative methods including participant observation and interviews. Data were analyzed using the analytic method of qualitative description, in which the goal of data analysis is to summarize and report an event using the ordinary, everyday terms for that event and the unique descriptions of those present. Three key findings are noted. First, network participants quickly responded to the emerging problem of under-performing screening and referral completion statistics. Second, they shifted advocacy targets away from executive appeals and toward the line staff actually providing screening. Third, participants endorsed two specific course corrections: using “opt out, not opt in” choice architecture at intake and implementing visual incentives for workers to track progress. Opt-out choice architecture and visual incentives served as useful means of focusing organizational collaboration and correcting mid-implementation problems.

This study examines inter-organizational collaboration among human service organizations serving a specific population of pregnant women and mothers at risk for perinatal depression. These organizations received restrictive funding from a local community network to screen this population for risk for depression, make appropriate referrals as indicated, and log screening and referral data into a project-wide data system for a 1-year pilot program. This paper asked three specific research questions: (1) What problems emerged by mid-implementation of the screening and referral program that required course correction? (2) How were advocacy targets developed to influence network and agency responses to these mid-course problems? (3) What specific course corrections were identified and implemented to get implementation back on track?

Previous scholarship (McMillin 2017) reported the background of how the maternal and child health organization studied here began as a community committee funded by the state legislature to address substance use by pregnant women and new mothers. Ultimately this committee grew into a 501(c)(3) nonprofit backbone organization (McMillin 2017) that increasingly served as a pass-through entity for many grants it administered and disbursed to health and social service agencies that were members and partners of the network and primarily served families with young children. One important grant was shared with six network partner agencies to create a pilot program and data-sharing system for a universal screening and referral protocol for perinatal mood and anxiety disorders. This innovation used a network-wide shared data software system into which staff from all six partner agencies entered their screening results and the referrals they gave to clients.

Universal screening and referral for perinatal mood and anxiety disorders and co-occurring issues meant that every partner agency would do some kind of screening (virtually always an empirically validated instrument such as the Edinburgh Postnatal Depression Scale), and every partner would respond to high screens (scores over the designated clinical cutoff of whatever screening instrument was being used, indicating the presence of or high risk for clinical depression) with a referral to case managers in partner agencies that were also funded by the network. The funded partners faced a very tight timeline that anticipated regular screening and enrollment of an estimated number of clients in case management and depression treatment for every month of the fiscal program year. A slow start in screening and enrolling patients meant that funded partners would likely be in violation of their grant contract with the network while facing a rapidly closing window of time in which they would be able to catch up and provide enough contracted services to meet the contractual numbers for their catchment area, which could jeopardize funding for a second year. This paper covers the 4 months in the middle of the pilot program year when network staff realized that funded partners were seriously behind schedule in the number of screens and referrals for perinatal mood and depression these agencies were contracted to make at this point in the fiscal year.

Background and Significance

Although challenging and complex for many human service organizations, collaboration with competitors in the form of “co-opetive relationships” has been linked to greater innovation and efficiency (Bunger et al. 2017, p. 13). But grant cycle funding can add to this complexity in the form of the “capacity paradox,” in which small human service organizations working with specific populations face funding restrictions because they are framed as too small or lacking capacity for larger scale grants and initiatives (Terrana and Wells 2018, p. 109). Finally, once new initiatives are implemented in a funded cycle, human service organizations are increasingly expected to engage in extensive, timely, and often very specific data collection to generate evidence of effectiveness for a particular program (Benjamin et al. 2018).

Mid-Course Corrections During Program Implementation

Mid-course corrections during implementation of prevention programs targeted to families with young children have long been seen as important ways to refine and modify the roles of program staff working with these populations and add formal and informal supports to ongoing implementation and service delivery prior to final evaluation (Lynch et al. 1998; Wandersman et al. 1998). Mid-course corrections can help implementers in current interventions or programs adopt a more facilitative and individualized approach to participants that can improve implementation fidelity and cohesion (Lynch et al. 1998; Sobeck et al. 2006). Comprehensive reviews of implementation of programs for families with young children have consistently found that well-measured implementation improves program outcomes significantly, especially when dose or service duration is also assessed (Durlak and DuPre 2008; Fixsen et al. 2005). Numerous studies have emphasized capturing implementation data at a low enough level to be able to use it to improve service data quickly and hit the right balance of implementation fidelity and thoughtful, intentional implementation adaptation (Durlak and DuPre 2008; Schoenwald et al. 2010; Schoenwald et al. 2013; Schoenwald and Hoagwood 2001; Tucker et al. 2006).

Inter-Organizational Networks and Mid-Course Corrections

Inter-organizational networks serving families with young children face special challenges in making mid-course corrections while maintaining implementation fidelity across member organizations (Aarons et al. 2011; Hanf and O’Toole 1992). Implementation through inter-organizational networks is never merely a result of clear success or clear failure; rather, it is an ongoing assessment of how organizational actors are cooperating or not across organizational boundaries (Cline 2000). Frambach and Schillewaert (2002) echo this point by noting that intra-organizational and individual cooperation, consistency, and variance also have strong effects on the eventual level of implementation cohesion and fidelity that a given project is able to reach. Moreover, recent research suggests that while funders and networks may emphasize and prefer inter-organizational collaboration, individual agency managers in collaborating organizations may see risks and consequences of collaboration and may face dilemmas in complying with network or funder expectations (Bunger et al. 2017). Similar organizations providing similar services with overlapping client bases may fear opportunism or poaching from collaborators, and interpersonal trust as well as contracts or memoranda of understanding might be needed to assuage these concerns (Bunger 2013; Bunger et al. 2017). Even successful collaboration may expedite mergers between collaborating organizations that are undesired or disruptive to stakeholders and sectors (Bunger 2013). While funders may often prefer to fund larger and more comprehensive merged organizations, smaller specialized community organizations founded by and for marginalized populations may struggle to maintain their community connection and focus as subordinate components of larger firms (Bunger 2013).

Organizational Advocacy for Mid-Course Corrections

Organizational policy practice and advocacy for mid-course corrections in a pilot program likely looks different from the type of advocacy and persuasion efforts that might seek to gain buy-in for initial implementation of the program. Fischhoff (1989) notes that in the public health workforce, individual workers rarely know how to organize their work to anticipate the possibility or likelihood of mid-course corrections because most work is habituated and routinized to the point that it is rarely intentionally changed, and when it is changed, it is due to larger issues on which workers expect extensive further guidance. When a need for even a relatively minor mid-course correction is identified, it can result in everyone concerned “looking bad,” from the workers adapting their implementation to the organizations requesting the changes (Fischhoff 1989, p. 112). There is also some evidence that health workers have surprisingly stable, pre-existing beliefs about their work situations and experiences, and requests to make mid-course corrections in work situations may have to contend with workers’ pre-existing, stable beliefs about the program they are implementing no matter how well-reasoned the proposed course corrections are (Harris and Daniels 2005). Given a new emphasis in social work that organizational policy advocacy should be re-conceptualized as part of everyday organizational practice (Mosley 2013), a special focus on strategies that contribute to the success of professional networks and organizations that can leverage influence beyond that of a single agency becomes increasingly important.

Incenting Collaboration: Opt-out Methods

Given the above problems with inter-organizational collaboration, increased attention has turned to automated methods of implementation that reduce burden on practitioners without unduly reducing freedom of choice and action. Behavioral economics and behavioral science approaches have been suggested as ways to assist direct practitioners to follow policies and procedures that they are unlikely to intend to violate. Evidence suggests that behavior in many contexts is easy to predict with high accuracy, and behavioral economics seeks to alter people’s behavior in predictable, honest, and ethical ways without forbidding any options or significantly adding too-costly incentives, so that the best or healthiest choice is the easiest choice (Thaler and Sunstein 2008).

Significance

Following Mosley’s (2013) recommendation, this paper examines in detail how a heavily advocated quality improvement pilot program for a maternal and child health network working in a large Midwestern metropolitan area attempted to make mid-implementation course corrections for a universal screening and referral program for perinatal mood and anxiety disorders conducted by its member agencies. This paper answers the call of recent policy practice and advocacy research to examine how “openness, networking, sharing of tasks,” and building and maintaining positive relationships are operative within organizational practice across multiple organizations (Ruggiano et al. 2015, p. 227). Additionally, this paper focuses on extending recent research to understand how mandated screening for perinatal mood and anxiety disorders can be implemented well (Yawn et al. 2015).

Method

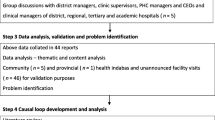

This study used an ethnographic case study method because treating the network and this pilot program as a case study makes it possible to examine unique local data while also locating and investigating counter-examples to what was expected locally (Stake 1995). This method makes it possible to inform and modify grand generalizations about the case before such generalizations become widely accepted (Stake 1995). This study also used ethnographic methods such as participant observation and informal, unstructured interview conversations at regularly scheduled meetings. Adding these ethnographic approaches to a case study which is tightly time-limited can help answer research questions fully and efficiently (Fusch et al. 2017). Data were collected at regular network meetings, which were 2–3 hours long and held twice a month. One meeting was a large group of about 30 participants who supervised or performed screening and case management for perinatal mood and anxiety disorders, as well as local practitioners in quality improvement and workforce training and development. A second executive meeting was held with 8–12 participants, typically network staff and the two co-chairs of each of three organized groups (a screening and referral group, a workforce training group, and a quality improvement group), to debrief and discuss major issues reported at the large group meeting.

For this study, the author served as a consultant to the quality improvement group and attended and took notes on network meetings in the middle of the program year (November through February) to investigate how mid-course corrections in the middle of the contract year were unfolding. These network meetings generally used a world café focus group method, in which participants move from a large group to several small groups discussing screening and referral, training, and quality improvement specifically, then move back to report small group findings to the large group (Fouché and Light 2011). The author typed extensive notes on an iPad, and note-taking during small group breakouts could only intermittently capture content due to the roving nature of the world café model. Note-taking was typically unobtrusive because virtually all participants in both small and large group meetings took notes on discussion. Note-taking and note-sharing were also a frequent and iterative process, in which the author commonly forwarded the notes taken at each meeting to network staff and group participants after each meeting to gain their insights and help construct the agenda of the next meeting. By the middle of the program year, network participants had gotten to know each other and the author quite well, so the author was typically able to easily arrange additional conversations for purposes of member checking. These informal meetings supplemented the two regular monthly meetings of the network and allowed for specific follow-up in which participants were asked about specific comments and reactions they had shared at previous meetings.

Brief PowerPoint presentations were also used at the beginning of successive meetings during the program year to summarize announcements and ideas from the last meeting and encourage new discussion. Often, PowerPoints were used to remind participants of dates, deadlines, statistics, and refined and summarized concepts. Because so many other meeting participants took their own notes and shared them during meetings, a large amount of feedback on meeting topics and their meaning was obtained. The author then coded the meeting notes in an iterative and sequenced process guided by principles of qualitative description, in which the goal of data analysis is to summarize and report an event using the ordinary, everyday terms for that event and the unique descriptions of those present (Sandelowski and Leeman 2012). This analytic method was chosen because it is especially useful when interviewing health professionals about a specific topic, in that interpretation stays very close to the data presented while leveraging all of the methodological strengths of qualitative research, such as multiple, iterative coding, member checking, and data triangulation (Neergaard et al. 2009). In this way, qualitative organizational research remains rigorous, while the significance of findings is easily translated to wider audiences for rapid action in intervention and implementation (Sandelowski and Leeman 2012).

Results

By the middle of the program year, network meeting participants explicitly recognized that mid-course corrections were needed in the implementation of the new quality improvement and data-sharing program for universal screening and referral of perinatal mood and anxiety disorders. After iterative analysis of shared meeting notes, three key challenges were salient as themes from network meetings in the middle of the program year.

Regarding the first research question, concerning what problems emerged by mid-implementation that required course correction, data showed that the numbers of clients screened and referred were a fraction of what was contractually anticipated by midway through the program year. This problem was two-fold: fewer screenings than expected were reported, and data also showed that those clients who screened as at risk for a perinatal mood and anxiety disorder were not consistently being given the referrals to further treatment indicated by the protocol. This was the first time the network had seen “real numbers” from the collected data for the program year that could be compared with estimated and predicted numbers for each part of the program year, both in terms of numbers anticipated to be screened and especially in terms of numbers expected to be referred to the case management services being funded by the network. However, the numbers were starkly disappointing: only about half of those whose screening scores were high enough to trigger referrals were actually offered referrals, and only about two-thirds of those who received referrals actually accepted the referral and followed up for further care. By the middle of the program year, only 16% of expected, estimated referrals had been issued, and no network partner was at the 50% expected.
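As an illustrative aside, the approximate mid-year proportions reported above can be worked through numerically. The short Python sketch below is not drawn from the network's data system. The count of 120 positive screens is a hypothetical figure chosen only to make the proportions concrete, and the variable names are likewise assumptions. It simply multiplies the reported offer and acceptance rates and compares the 16% of expected referrals issued with the 50% that would be expected at the midpoint of the year.

```python
from fractions import Fraction

# Approximate rates reported at the mid-year data presentation.
offer_rate = Fraction(1, 2)   # share of positive screens that were offered a referral
accept_rate = Fraction(2, 3)  # share of offered referrals that were accepted and followed up

# Share of all positive screens that ended in an accepted referral.
completed_share = offer_rate * accept_rate

# Hypothetical number of positive screens (an assumption, not a figure from the study).
positive_screens = 120
offered = positive_screens * offer_rate
accepted = offered * accept_rate

# Progress against the contract at the midpoint of the program year.
issued_share = Fraction(16, 100)    # share of expected referrals actually issued
expected_share = Fraction(50, 100)  # share expected halfway through the year
shortfall_points = (expected_share - issued_share) * 100
pace_ratio = issued_share / expected_share

print(f"Positive screens ending in an accepted referral: {float(completed_share):.0%}")
print(f"Of {positive_screens} positive screens: {offered} offered, {accepted} accepted")
print(f"Shortfall at mid-year: {shortfall_points} percentage points")
print(f"Referrals issued relative to the mid-year expectation: {float(pace_ratio):.0%}")
```

Under these approximate rates, roughly one in three positive screens would end in an accepted referral, and referrals were being issued at about a third of the pace the contract anticipated.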

In responding to this data presentation, participants offered several possible patient-level explanations. First, several noted that patients commonly experience inconsistent providers during perinatal care and may have little incentive to follow up on referrals after such a fragmented experience. One participant noted a patient who had been diagnosed with preeclampsia (a pregnancy complication marked by high blood pressure) by her first provider, but the diagnostician gave no further information beyond stating the diagnosis, and then the numerous other providers this patient saw never mentioned it again. This patient moved through care knowing nothing about her diagnosis and with little incentive to accept or follow up with other referrals. Other participants noted that the typical approach to discharge planning and care transitions by providers was a poor match for clean, universal screening and referral, and that satisfaction surveys had captured patient concerns about how they were given information, which was typically on paper and presented to the patient as she leaves the hospital or medical office. As one participant noted, “We flood them the day mom leaves the hospital and we’re lucky if the paper ever gets out of the back seat of the car.” Others noted that patients may not follow up on referrals simply because they are feeling overwhelmed with other areas of life or are feeling emotionally better without further treatment.

However, while these explanations may shed light on why referred patients did not follow up on or keep referrals, they do nothing to explain why no referral or follow-up was offered for screens that were above the referral cutoff. Two further explanations were salient. One explanation centered on the idea that some positive screens were potentially being ignored because staff may have been reluctant to engage or feared working with clients in crisis, described as an “If I don’t ask the question, I don’t have to deal with it” mindset. All screening tools used numeric scores, so that triggered referrals were not dependent on staff having to decide independently to make a referral, but conveying the difficult news that a client had scored high enough on a depression scale to warrant a follow-up referral may have been daunting to some staff. An alternative explanation suggested that staff were not ignoring positive screens but were not understanding the intricacies and expectations of the screening process. Of the community agencies partnering with the network to provide screening, many were also able to provide case management, but staff did not realize that internal referrals to a different part of their agency still needed to be documented. In this case, a number of missed referrals could have been provided but never documented in the network data-sharing system.

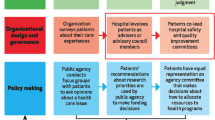

Regarding the second research question, concerning how advocacy targets needed to change based on the identification of the problem, participants agreed that the previous plan to reinforce the importance of the screening program to senior executives in current and potential partner agencies (McMillin 2017) needed to be updated to reflect a much tighter focus on the line staff actually doing the work (or alternatively not doing the work in the ways expected) in the months remaining in the funded program year. One participant noted that the elusive warm handoff (a discharge and referral where the patient was warmly engaged, clearly understood the expected next steps, and was motivated to visit the recommended provider for further treatment) was also challenging for staff who might default to a “just hand them the paper” mindset, especially for those staff who were overwhelmed and understaffed. The network was funding additional case managers to link patients to treatment, but partner agencies were expected to screen using usual staff, who had been trained but not increased or otherwise compensated to do the screening. Additional concerns mentioned the knowledge and preparation of staff to make good referrals, with an example noted of one staff member seemingly unaware of how to make a domestic violence referral even though a locally well-known agency specializing in interpersonal violence treatment and prevention had been working with the network for some time. Meeting participants agreed that in the time remaining for the screening and referral pilot, advocacy efforts would have to be diverted away from senior executives and toward line staff if there was to be any chance of meeting enrollment targets and justifying further funding for the screening and referral program.

Participants also noted that while the operational assumption was that agencies that were network partners this pilot year would remain as network partners for future years of the universal screening and referral program, there was no guarantee about this. Partner agencies that struggled to complete the pilot year of the program, with disappointing numbers, may decline to participate next year, especially if they lost network funding based on their challenged performance in the current program year. This suggested that additional advocacy at the executive level might still be needed, as executives could lead their agencies out of the network screening system after June 30, but that for the remainder of the program year, the line staff themselves who were performing screening needed to be heavily engaged and lobbied to have any hope of fully completing the pilot program on time.

Regarding the third research question, concerning specific course corrections identified and implemented to get implementation back on track, a prolonged brainstorming session was held after the disappointing data were shared. This effort produced a list of eight suggested “best practices” to help engage staff performing screening duties to excel in the work: (1) making enrollment targets more visible to staff, perhaps by using visual charts and graphs common in fundraising drives; (2) using “opt-out” choice architecture that would automatically enroll patients who screened above the cutoff score unless the patient objected; (3) sequencing screens with other paperwork and assessments in ways that make sure screens are completed and acted upon in a timely way; (4) offering patients incentives for completing screens; (5) educating staff on reflective practice and compassion fatigue to avoid or reduce feelings of being overwhelmed about screening; (6) using checklists that document work that is done and undone; (7) maintaining intermittent contact and follow-up with patients to check on whether they have accepted and followed up on referrals; and (8) using techniques of prolonged engagement so that by the time staff are screening patients for perinatal mood and anxiety disorders, patients are more likely to be engaged and willing to follow up.

Further discussion of these best practices noted that there was no available funding to compensate either patients for participating in screening or staff for conducting screening. Long-term contact or prolonged engagement also seemed to be difficult to implement rapidly in the remaining months of the program year. Low-cost, rapid implementation strategies were seen as most needed, and it was noted that strategies from behavioral economics were the practices most likely to be rapidly implemented at low cost. Visual charts and graphs displaying successful screenings and enrollments while also emphasizing the remaining screenings and enrollments needed to be on schedule were chosen for further training because these tactics would involve virtually no additional cost to partner agencies and could be implemented immediately. Likewise, shifting to “opt-out” enrollment procedures was encouraged, where referred patients would be automatically enrolled in case management unless they specifically objected. In addition, the network quickly scheduled a workshop on how to facilitate meetings so that supervisors in partner agencies would be assisted in immediately discussing and implementing the above course corrections and behavioral strategies with their staff.

Training on using visual incentives emphasized three important components of using this technique. First, it was important to make sure that enrollment goals were always visually displayed in the work area of staff performing screening and enrollment work. This could be something as simple as a hand-drawn sign in the work area noting how many patients had been enrolled compared with what that week’s enrollment target was. Ideally this technique would transition to an infographic that was connected to an electronic dashboard in real time, where results would be transparently displayed for all to see in an automatic way that did not take additional staff time to maintain. Second, the visual incentive needed to be displayed vividly enough to alter or motivate new worker behavior, but not so vividly as to compete with, distract, or delay new worker behavior. In many social work settings, participants agreed that weekly updates are intuitive for most staff. Without regular check-ins and updates of the target numbers, it could be easy for workers to lose their sense of urgency about meeting these time-constrained goals. Third, training emphasized teaching staff how behavioral biases could reduce their effectiveness. Many staff are used to (and often good at) cramming and working just-in-time, but this is not possible when staff cannot control all aspects of work. Screeners cannot control the flow of enrollees; rather, they must be ready to enroll new clients intermittently as soon as they see a screening is positive. Re-learning not to cram or work just-in-time therefore suggested a change in workplace routines for many staff.
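The simplest version of such a display, a weekly tally of enrollments against the target, could be generated automatically. The Python sketch below is only an illustration under stated assumptions: the function name `progress_line`, the bar width, and the example numbers are hypothetical and do not describe the network's actual dashboard.

```python
def progress_line(enrolled: int, target: int, width: int = 20) -> str:
    """Return a one-line text bar comparing enrollments with the weekly target."""
    if target <= 0:
        raise ValueError("target must be a positive number of enrollments")
    if enrolled < 0:
        raise ValueError("enrolled cannot be negative")
    # Cap the filled portion at the full bar so over-target weeks still render.
    filled = min(width, (enrolled * width) // target)
    # Emphasize what is still needed to stay on schedule.
    remaining = max(0, target - enrolled)
    bar = "#" * filled + "-" * (width - filled)
    return f"[{bar}] {enrolled}/{target} enrolled, {remaining} to go"


# Hypothetical week: 6 enrollments so far against a target of 10.
print(progress_line(6, 10))
```

A line like this could be printed or posted weekly, which matches the update rhythm participants described as intuitive, and it shows both progress made and enrollments still needed.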

Training on “opt-out” choice architecture for network enrollment procedures emphasized using behavioral economics and behavioral science to better empower direct practitioners. Evidence suggests that behavior in many contexts is easy to predict with high accuracy, and behavioral economics seeks to alter people’s behavior in predictable, honest, and ethical ways without forbidding any options or significantly adding too-costly incentives, so that the best or healthiest choice is the easiest choice (Thaler and Sunstein 2008). Training here also emphasized meeting Thaler and Sunstein’s (2008) two standards for good choice architecture: (1) the choice had to be transparent, not hidden, and (2) it had to be cheap and easy to opt out. Good examples of such choice architecture were highlighted, such as email and social media group enrollments, where one can unsubscribe and leave such a group with just one click. Bad or false examples of choice architecture were also highlighted, such as auto-renewal for magazines or memberships where due dates by which to opt out are often hidden and there is always one more financial charge before one is free of the costly enrollment or subscription. Training concluded by advising network participants to use opt-in choice architecture when the services in question are highly likely to be spam, not meaningful, or only relevant to a fraction of those approached. Attendees were advised to use opt-out choice architecture when the services in question are highly likely to be meaningful, not spam, and relevant to most of those approached. Since clients were not approached about enrollment unless they had scored high on a depression screening, automatic enrollment in a case management service where clients would receive at least one more contact from social services was highly relevant to the population served in this pilot program and was encouraged, with clients always having the right to refuse.
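The difference between the two defaults can be stated as a small decision rule. The Python sketch below is a simplified illustration, not the network's enrollment procedure. The class and function names, the cutoff value of 12, and the example scores are all hypothetical, and the sketch leaves out staff discretion to refer clients who score below the cutoff.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Screen:
    score: int                            # numeric score on the screening instrument
    cutoff: int                           # clinical cutoff for that instrument (hypothetical here)
    client_choice: Optional[bool] = None  # True = asked to enroll, False = declined, None = no stated choice


def is_enrolled(screen: Screen, opt_out: bool) -> bool:
    """Decide enrollment in case management under an opt-out or opt-in default."""
    # Only screens over the cutoff trigger the enrollment question at all.
    if screen.score <= screen.cutoff:
        return False
    # An explicit choice by the client always overrides the default.
    if screen.client_choice is not None:
        return screen.client_choice
    # With no stated choice, the default decides: enrolled under opt-out, not under opt-in.
    return opt_out


silent_client = Screen(score=15, cutoff=12)
print(is_enrolled(silent_client, opt_out=True))   # opt-out default
print(is_enrolled(silent_client, opt_out=False))  # opt-in default
```

The only case in which the two designs differ is the client who states no preference, which is why the choice of default matters most for clients who are overwhelmed or disengaged at the time of screening. In both designs a client's explicit refusal is honored.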

To jump-start changing the behavior of the staff in partner agencies actually doing the screenings, making the referrals, and enrolling patients in the case management program, the network quickly scheduled a facilitation training so that supervisors and all who led staff or chaired meetings could be prepared and empowered to discuss enrollment and teach topics like opt-out enrollment to staff. This training emphasized the importance of creating spaces for staff to express doubt or confusion about what was being asked of them. One technique that resonated with participants was doing check-ins with staff during a group training by asking staff to make “fists to fives,” a hand signal on a 0–5 scale showing how comfortable they were with the discussion, where holding a fist in the air signaled discomfort, disagreement, or confusion and waving all five fingers of one hand in the air signaled total comfort or agreement with a query or topic. Training also emphasized that facilitators and trainers should acknowledge that conflict and disagreement typically come from really caring, so it was important to “normalize discomfort,” call it out when people in the room seem uncomfortable, and reiterate that the partner agency is able and willing to have “the tough conversations” about the nature of the work.

Discussion

Mid-course corrections attempted during implementation of a quality improvement system in a maternal and child health network offered several insights into how organizational policy practice and advocacy techniques may rapidly change on the ground. Specifically, findings highlighted the importance of checking outcome data early enough to be able to respond to implementation concerns immediately. Participants broadly endorsed organizational adoption of behavioral economic techniques to rapidly influence the work behavior of line staff engaged in screening and referral before lobbying senior executives to extend the program to future years. These findings invite further exploration of two areas: (1) the workplace experiences of line staff tasked with mid-course implementation corrections, and (2) the organizational and practice experiences of behavioral economic (“nudge”) techniques.

This network’s approach to universal screening and referral was very clearly meant to be truly neutral or even biased in the client’s favor. Staff were allowed and even encouraged to use their own individual judgment and discretion to refer clients for case management, even if the client did not score above the clinical cutoff of the screening instrument. Mindful of the dangers of rigid, top-down bureaucracy, the network explicitly sought to empower line staff to work in clients’ favor, yet still experienced disappointing results.

This outcome suggests several possibilities. First, it is possible that, as participants implied, line staff were sometimes demoralized workers or nervous non-clinicians who were not eager to convey difficult news regarding high depression scores to clients who may have already been difficult to serve. As Hasenfeld’s (1992) classic work has explicated, the organizational pull toward people-processing in lieu of true people-changing is powerful in many human service organizations. Tummers (2016) has also showcased the tendency of workers to prioritize service delivery to motivated rather than unmotivated clients. Smith (2017) suggests that regulatory and contractual requirements can ameliorate disparities in who gets prioritized for what kind of human service, but the variability of human service practice makes this problem hard to eliminate altogether.

However, it is also possible that line staff did not see referral as clearly in a client’s best interest but rather as additional paperwork and paper-pushing within their own workplaces, additional work that line staff were given neither extra time nor compensation to complete. Given that ultimately the number of internal referrals that were undercounted or undocumented was seen as an important cause of disappointing project outcomes, staff reluctance to engage in extra bureaucratic sorting tasks is a distinct possibility. The line staff here providing screening may have seen their work as less of a clinical assessment process and more of a tedious, internal bureaucracy geared toward internal compliance and payment rather than getting needy clients to worthwhile treatment. Further research on the experience of line staff members performing time-sensitive sorting tasks is needed to understand how, even in environments explicitly trying to be empowering and supportive of worker discretion, that discretion may have negative impacts on desired implementation outcomes.

In addition to the experience of line staff in screening clients, the interest of agency supervisors in behavioral economic techniques, and their embrace of these techniques for staff training and screening processes, also deserve further study. Grimmelikhuijsen et al. (2017) advocate broadly for further study and understanding of behavioral public administration, which integrates behavioral economic principles and psychology, noting that whether one agrees or disagrees with the “nudge movement” (p. 53) in public administration, it is important to understand its growing influence. Ho and Sherman (2017) pointedly critique nudging and behavioral economic approaches, noting that they may hold promise for improving implementation and service delivery but do not focus on front-line workers or on the quality and consistency of organizational and bureaucratic services, in which arbitrariness remains a consistent problem.

Finally, more research is needed on links between organizational policy implementation and state policy. In this case, state policy primarily set report deadlines and funding amounts with little discernible impact on ongoing organizational implementation. This gap also points to challenges in how policymakers can feasibly learn from implementation innovation in the community and how successful innovations can influence the policy process going forward.

Limitations

This article’s findings and any inferences drawn from them must be understood in light of several study limitations. This study used a case study method and ethnographic approaches of participant observation, a process which always runs the risk of the personal bias of the researcher intruding into data collection as well as the potential for social desirability bias among those observed. Moreover, a case study serves to elaborate a particular phenomenon and issue, which may limit its applicability to other cases or situations. A critical review of the use of the case study method in high-impact journals in health and social sciences found that case studies published in these journals used clear triangulation and member-checking strategies to strengthen findings and also used well-regarded case study approaches such as Stake’s and qualitative analytic methods such as Sandelowski’s (Hyett et al. 2014). This study followed these recommended practices. Continued research on health and human service program implementation that follows the criteria and standards analyzed by Hyett et al. (2014) will contribute to the empirical base of this literature while ameliorating some of these limitations.

Conclusion

Research suggests that collaboration may be even more important for organizations than for individuals in the implementation of social innovations (Berzin et al. 2015). The network studied here adopted behavioral economics as a primary means of focusing organizational collaboration. However, a managerial turn to nudging or behavioral economics must do more than achieve merely administrative compliance. “Opt-out, not-in” organizational approaches could positively affect implementation of social programs in two ways. First, such approaches could eliminate unnecessary implementation impediments (such as the difficult conversations about depression referrals resisted by staff in this case) by using tools such as automatic enrollment to push these conversations to more specialized staff who could better advise affected clients. Second, such approaches could reduce the potential workplace dissatisfaction of line staff, including any potential discipline they could face for incorrectly following more complicated procedures. Thaler and Sunstein (2003) explicitly endorse worker welfare as a rationale and site for behavioral economic approaches. They note that every system as a system has been planned with an array of choice decisions already made, and given that there is always a starting default, it should be set to predict desired best outcomes. This study supports considering behavioral economic approaches for social program implementation as a way to reset maladaptive default settings and provide services in ways that can be more just and more effective for both workers and clients.

References

Aarons, G. A., Hurlburt, M., & Horwitz, S. M. (2011). Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research, 38(1), 4–23. https://doi.org/10.1007/s10488-010-0327-7.

Benjamin, L. M., Voida, A., & Bopp, C. (2018). Policy fields, data systems, and the performance of nonprofit human service organizations. Human Service Organizations: Management, Leadership & Governance, 42(2), 185–204. https://doi.org/10.1080/23303131.2017.1422072.

Berzin, S. C., Pitt-Catsouphes, M., & Gaitan-Rossi, P. (2015). Defining our own future: Human service leaders on social innovation. Human Service Organizations: Management, Leadership & Governance, 39(5), 412–425. https://doi.org/10.1080/23303131.2015.1060914.

Bunger, A. C. (2013). Administrative coordination in nonprofit human service delivery networks: The role of competition and trust. Nonprofit and Voluntary Sector Quarterly, 42(6), 1155–1175. https://doi.org/10.1177/0899764012451369.

Bunger, A. C., McBeath, B., Chuang, E., & Collins-Camargo, C. (2017). Institutional and market pressures on interorganizational collaboration and competition among private human service organizations. Human Service Organizations: Management, Leadership & Governance, 41(1), 13–29. https://doi.org/10.1080/23303131.2016.1184735.

Cline, K. D. (2000). Defining the implementation problem: Organizational management versus cooperation. Journal of Public Administration Research and Theory: J-PART, 10(3), 551–571.

Durlak, J. A., & DuPre, E. P. (2008). Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology, 41(3–4), 327–350. https://doi.org/10.1007/s10464-008-9165-0.

Fischhoff, B. (1989). Helping the public make health risk decisions. In V. T. Covello, D. B. McCallum, & M. T. Pavlova (Eds.), Effective risk communication (pp. 111–116). New York: Springer.

Fixsen, D. L., Naoom, S. F., Blase, K. A., Friedman, R. M., & Wallace, F. (2005). Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network.

Fouché, C., & Light, G. (2011). An invitation to dialogue: “The world café” in social work research. Qualitative Social Work, 10(1), 26–48. https://doi.org/10.1177/1473325010376016.

Frambach, R. T., & Schillewaert, N. (2002). Organizational innovation adoption: A multi-level framework of determinants and opportunities for future research. Journal of Business Research, 55(2), 163–176. https://doi.org/10.1016/S0148-2963(00)00152-1.

Fusch, P. I., Fusch, G. E., & Ness, L. R. (2017). How to conduct a mini-ethnographic case study: A guide for novice researchers. The Qualitative Report, 22(3), 923–941.

Grimmelikhuijsen, S., Jilke, S., Olsen, A. L., & Tummers, L. (2017). Behavioral public administration: Combining insights from public administration and psychology. Public Administration Review, 77(1), 45–56. https://doi.org/10.1111/puar.12609.

Hanf, K., & O’Toole, L. J. (1992). Revisiting old friends: Networks, implementation structures and the management of inter-organizational relations. European Journal of Political Research, 21(1–2), 163–180. https://doi.org/10.1111/j.1475-6765.1992.tb00293.x.

Harris, C., & Daniels, K. (2005). Daily affect and daily beliefs. Journal of Occupational Health Psychology, 10(4), 415–428. https://doi.org/10.1037/1076-8998.10.4.415.

Hasenfeld, Y. (1992). Human services as complex organizations. Newbury Park: Sage Publications.

Ho, D. E., & Sherman, S. (2017). Managing street-level arbitrariness: The evidence base for public sector quality improvement. Annual Review of Law and Social Science, 13, 251–272. https://doi.org/10.1146/annurev-lawsocsci-110316-113608.

Hyett, N., Kenny, A., & Dickson-Swift, V. (2014). Methodology or method? A critical review of qualitative case study reports. International Journal of Qualitative Studies on Health and Well-Being, 9(1), 23606. https://doi.org/10.3402/qhw.v9.23606.

Lynch, K. B., Geller, S. R., Hunt, D. R., Galano, J., & Dubas, J. S. (1998). Successful program development using implementation evaluation. Journal of Prevention & Intervention in the Community, 17(2), 51–64. https://doi.org/10.1300/J005v17n02_05.

McMillin, S. E. (2017). Organizational policy advocacy for a quality improvement innovation in a maternal and child health network: Lessons learned in early implementation. Journal of Policy Practice, 16(4), 381–396.

Mosley, J. E. (2013). Recognizing new opportunities: Reconceptualizing policy advocacy in everyday organizational practice. Social Work, 58(3), 231–239. https://doi.org/10.1093/sw/swt020.

Neergaard, M. A., Olesen, F., Andersen, R. S., & Sondergaard, J. (2009). Qualitative description–the poor cousin of health research? BMC Medical Research Methodology, 9(1), 52. https://doi.org/10.1186/1471-2288-9-52.

Ruggiano, N., Taliaferro, J. D., Dillon, F. R., Granger, T., & Scher, J. (2015). Identifying attributes of relationship management in nonprofit policy advocacy. Journal of Policy Practice, 14(3–4), 212–230. https://doi.org/10.1080/15588742.2014.956970.

Sandelowski, M., & Leeman, J. (2012). Writing usable qualitative health research findings. Qualitative Health Research, 22(10), 1404–1413. https://doi.org/10.1177/1049732312450368.

Schoenwald, S. K., & Hoagwood, K. (2001). Effectiveness, transportability, and dissemination of interventions: What matters when? Psychiatric Services, 52(9), 1190–1197. https://doi.org/10.1176/appi.ps.52.9.1190.

Schoenwald, S. K., Hoagwood, K. E., Atkins, M. S., Evans, M. E., & Ringeisen, H. (2010). Workforce development and the organization of work: The science we need. Administration and Policy in Mental Health and Mental Health Services Research, 37(1–2), 71–80. https://doi.org/10.1007/s10488-010-0278-z.

Schoenwald, S. K., Mehta, T. G., Frazier, S. L., & Shernoff, E. S. (2013). Clinical supervision in effectiveness and implementation research. Clinical Psychology: Science and Practice, 20(1), 44–59. https://doi.org/10.1111/cpsp.12022.

Smith, S. R. (2017). The future of nonprofit human services. Nonprofit Policy Forum, 8(4), 369–389. https://doi.org/10.1515/npf-2017-0019.

Sobeck, J. L., Abbey, A., & Agius, E. (2006). Lessons learned from implementing school-based substance abuse prevention curriculums. Children & Schools, 28(2), 77–85. https://doi.org/10.1093/cs/28.2.77.

Stake, R. E. (1995). The art of case study research. Thousand Oaks: Sage.

Terrana, S. E., & Wells, R. (2018). Financial struggles of a small community-based organization: A teaching case of the capacity paradox. Human Service Organizations: Management, Leadership & Governance, 42(1), 105–111. https://doi.org/10.1080/23303131.2017.1405692.

Thaler, R. H., & Sunstein, C. R. (2003). Libertarian paternalism is not an oxymoron. University of Chicago Public Law & Legal Theory Working Paper 43. Retrieved from: http://chicagounbound.uchicago.edu/cgi/viewcontent.cgi?article=1184&context=public_law_and_legal_theory.

Thaler, R. H., & Sunstein, C. R. (2008). Nudge: Improving decisions about health, wealth, and happiness. New Haven: Yale University Press.

Tucker, S., Klotzbach, L., Olsen, G., Voss, J., Huus, B., Olsen, R., Orth, K., & Hartkopf, P. (2006). Lessons learned in translating research evidence on early intervention programs into clinical care. MCN, American Journal of Maternal Child Nursing, 31(5), 325–331. https://doi.org/10.1097/00005721-200609000-00012.

Tummers, L. (2016). The relationship between coping and job performance. Journal of Public Administration Research and Theory, 27(1), 150–162. https://doi.org/10.1093/jopart/muw058.

Wandersman, A., Morrissey, E., Davino, K., Seybolt, D., Crusto, C., Nation, M., Goodman, R., & Imm, P. (1998). Comprehensive quality programming and accountability: Eight essential strategies for implementing successful prevention programs. The Journal of Primary Prevention, 19(1), 3–30. https://doi.org/10.1023/A:1022681407618.

Yawn, B. P., LaRusso, E. M., Bertram, S. L., & Bobo, W. V. (2015). When screening is policy, how do we make it work? In J. Milgrom & A. W. Gemmill (Eds.), Identifying perinatal depression and anxiety: Evidence-based practice in screening, psychosocial assessment and management (pp. 32–50). Malden, MA: John Wiley & Sons. https://doi.org/10.1002/9781118509722.ch2.

Ethics declarations

The author declares that this work complied with appropriate ethical standards.

Conflict of Interest

The author declares that they have no conflict of interest.

Additional information

I affirm that this article has not been published elsewhere and that it has not been submitted simultaneously for publication elsewhere.

Cite this article

McMillin, S.E. Quality Improvement Innovation in a Maternal and Child Health Network: Negotiating Course Corrections in Mid-Implementation. J of Pol Practice & Research 1, 23–36 (2020). https://doi.org/10.1007/s42972-020-00004-z

DOI: https://doi.org/10.1007/s42972-020-00004-z