Abstract

The quantum approximate optimization algorithm (QAOA) is a leading iterative variational quantum algorithm for heuristically solving combinatorial optimization problems. A large portion of the computational effort in QAOA is spent by the optimization steps, which require many executions of the quantum circuit. Therefore, there is active research focusing on finding better initial circuit parameters, which would reduce the number of required iterations and hence the overall execution time. While existing methods for parameter initialization have shown great success, they often offer a single set of parameters for all problem instances. We propose a practical method that uses a simple, fully connected neural network that leverages previous executions of QAOA to find better initialization parameters tailored to a new given problem instance. We benchmark state-of-the-art initialization methods for solving the MaxCut problem of Erdős-Rényi graphs using QAOA and show that our method is consistently the fastest to converge while also yielding the best final result. Furthermore, the parameters predicted by the neural network are shown to match very well with the fully optimized parameters, to the extent that no iterative steps are required, thereby effectively realizing an iteration-free QAOA scheme.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Quantum computers are currently found in the so-called noisy intermediate-scale quantum (NISQ) era, comprising a small number of qubits and high error rates that accumulate rapidly with the number of operations (Preskill 2018). A popular family of quantum algorithms in the NISQ era is the variational quantum algorithms (VQAs), which offer a heuristic approach for solving optimization problems (Cerezo et al. 2021). VQAs use relatively shallow quantum circuits and are, therefore, more resilient to noise compared to standard quantum algorithms (McClean et al. 2017). They employ quantum circuits of parameterized gates that are updated, by means of classical computation, to reach an optimum of a predefined objective function (Nannicini 2019). The quantum approximate optimization algorithm (QAOA) is a particular VQA designed specifically to approximately solve combinatorial optimization problems (Farhi et al. 2014; Farhi and Harrow 2016; Crooks 2018), which are of high importance for various fields in both industry and academia (Korte et al. 2011).

In VQAs and QAQA in particular, each optimization step involves many quantum circuit executions, hence reducing the number of optimization steps is highly desirable. Choosing proper QAOA parameters can improve both the convergence rate (Zhou et al. 2020; Sack and Serbyn 2021) and the accuracy of the final solution (Farhi et al. 2014; Sack and Serbyn 2021). Finding better initial parameters for the QAOA circuit is thus a matter of active research (Crooks 2018; Zhou et al. 2020; Sack and Serbyn 2021; Brandao et al. 2018; Khairy et al. 2019; Akshay et al. 2021; Alam et al. 2020; Rabinovich et al. 2022).

Most initialization methods offer a single, common, set of parameters for all problem instances (Crooks 2018; Brandao et al. 2018; Zhou et al. 2020; Akshay et al. 2021). This is a pragmatic approach and is often very successful in reducing the number of required QAOA iterations. Nevertheless, it inherently ignores important relations between problem instances and their optimal parameters. These relations, which are based on the information carried by the particular description of each problem instance, can potentially be utilized to save further iterations. Other methods that manage to personalize the initial parameters per problem instance (Sack and Serbyn 2021; Alam et al. 2020) show an impressive improvement, exponentially better compared to a random parameter initialization (Sack and Serbyn 2021), yet at a high cost of many additional quantum circuit executions. These findings entail that using a different initialization per problem instance is beneficial and motivates the exploration of more cost-effective schemes for generating personalized parameters for each problem instance.

The goal of this paper is to reduce the number of required QAOA iterations without compromising algorithm performance and without invoking any additional quantum circuit executions, by using better initialization parameters.

We propose a practical approach based on neural network (NN) that predicts appropriate initialization parameters for QAOA per problem instance. The NN takes as input a predefined encoding of the problem instance and outputs the corresponding QAOA parameters. To that end, the NN is trained using past results of QAOA optimizations as labeled data. We evaluate our method on the standard MaxCut benchmark; the method can be similarly applied to other problems.

This study provides the following contributions: (a) it demonstrates that the QAOA’s variational parameters can be learned efficiently and robustly by a simple NN for the MaxCut problem without any additional quantum computation; (b) the proposed learning scheme is empirically shown to outperform state-of-the-art QAOA initialization methods in terms of the approximation ratio and convergence speed; (c) using the proposed method may relinquish the need for any further optimization of the quantum circuit altogether, potentially saving many costly quantum circuit executions; and (d) we show that these advantages become more profound as the size of the problem (the number of nodes in the graph) increases.

The paper is structured as follows: Section 2 provides the relevant background. It describes the QAOA algorithm, the MaxCut problem, and previous works on parameter initialization for the QAOA circuit. Section 3 introduces our proposed method. Section 4 benchmarks several initialization techniques alongside our method and provides a detailed comparison of their performances. Finally, we discuss the central advantages of the proposed method for the NISQ era in Section 5.

2 Background

2.1 The quantum approximate optimization algorithm (QAOA)

The QAOA can be regarded as a time discretization of adiabatic quantum computation (Farhi et al. 2014; Crooks 2018; Farhi et al. 2000), whose p-layers circuit Ansatz constructs the following state:

where N is the number of qubits. \(H_C\) is called the problem Hamiltonian, which is defined uniquely by the specific problem we are trying to solve (see below for the MaxCut problem example), and \(H_M\) is called the mixer Hamiltonian, provided in the same form for all problems, and given by \(H_M = \sum _{n=1}^{N} \sigma _{n}^{x}\), where \(\sigma _{n}^{j}\) is the Pauli operator j that acts on qubit n. Finally, \(|{+}\rangle =\frac{1}{\sqrt{2}}(|{0}\rangle +|{1}\rangle )\) is the \(+1\) eigenstate of \(\sigma ^{x}\). The p-dimensional vectors \(\vec {\beta }\) and \(\vec {\gamma }\) are the variational real parameters that correspond to \(H_M\) and \(H_C\), respectively. The objective cost function is determined by the expectation value of the problem Hamiltonian

and we denote the optimal parameters by \((\vec {\beta }^{*},\vec {\gamma }^{*})\), with which the optimal solution is attained:

QAOA is an iterative algorithm: it starts with an initial guess of the \((\vec {\beta },\vec {\gamma })\) parameters, then the expectation value of the problem Hamiltonian of Eq. 2 is evaluated by repeated measurements of the same circuit to reach a certain level of statistical accuracy. Once the cost function is calculated, the \((\vec {\beta },\vec {\gamma })\) parameters are updated towards the next iteration by a classical optimizer so as to optimize \(F_{p}(\vec {\gamma },\vec {\beta })\). The overall QAOA iterative process requires many executions of the quantum circuit; first, reaching a satisfactory level of statistical error \(\epsilon \) typically requires \(O(\epsilon ^{-2})\) shots (Gilyén et al. 2019). To keep the same level of accuracy, the number of shots grows with the system size; it was recently estimated to grow exponentially with size (Lotshaw et al. 2022); second, the optimization process requires additional circuit executions: if the optimizer is gradient-based, then the derivative of the cost function with respect to the \((\vec {\beta },\vec {\gamma })\) parameters must also be calculated at each iteration, requiring many additional executions of the quantum circuit to evaluate the gradients, e.g., by following the so-called “parameter shift rule” (Mitarai et al. 2018; Schuld et al. 2019). Alternatively, gradient-free optimizers can avoid the calculations of the derivatives, yet at a high cost of many more optimization iterations (Conn et al. 2009). The iterative QAOA process thus requires many executions of the quantum circuit, and each optimization step that can be avoided translates directly into a significant reduction in computational resources.

2.2 The MaxCut problem

The maximum cut (MaxCut) problem is an NP-hard combinatorial problem that has become the canonical problem to benchmark QAOA (Zhou et al. 2020; Willsch et al. 2020). It is defined over an undirected graph \(G=(V,E)\), where \(V={1,2,...,N}\) denotes the set of nodes, and E is the set of edges. The (unweighted) maximum cut objective is the partition of the nodes into two disjoint subsets \(\{+1,-1\}\), such that the number of edges connecting nodes from the two subsets is maximal.

Within QAOA, the problem Hamiltonian \(H_C\) that corresponds to the MaxCut problem is given by Farhi et al. (2014):

such that an edge (i, j) contributes to the sum if and only if the (i, j) qubits are measured anti-aligned. The common performance metric of QAOA for the MaxCut problem is the approximation ratio:

where \(C_{\text {max}}\) is the maximum cut of the graph.

2.3 QAOA initialization techniques

The attempt to find optimal parameters for QAOA, which would require a minimum number of optimization steps for solving the MaxCut problem, is currently an extensive research topic.

The most intuitive initialization scheme is linear, where the \((\vec {\beta },\vec {\gamma })\) parameters vary linearly from one layer to the other, such that \(\beta \) is gradually turned off and \(\gamma \) is turned on. This linear approach is inspired by adiabatic quantum computation (Farhi et al. 2000): the circuit begins from the ground state of the mixer Hamiltonian and aims at reaching the ground state of the problem Hamiltonian. While very simple and computationally efficient, the linear solution can be sub-optimal (see, e.g., Zhou et al. (2020); Willsch et al. (2020)). As an alternative approach, several initialization methods selected the same set of parameters for common-type graph instances, such as regular graphs (Crooks 2018; Brandao et al. 2018; Zhou et al. 2020; Akshay et al. 2021; Shaydulin et al. 2019). For example, it was suggested by Crooks (2018) to use a batches optimization method, in which initial parameters are found by optimizing batches of graphs in parallel. This way, the parameters fit multiple graphs and should also fit new graphs. The problem, however, with such homogeneous methods is that in practice, the optimal parameters change from one graph instance to another, even within the same family of graphs (Zhou et al. 2020). Such non-personalized methods ignore by construction of possible relations between specific problem instances and their corresponding optimal parameters.

Indeed, other studies have suggested using a different, personalized set of parameters for each problem instance. One method suggested initializing a \(p+1\) layers circuit based on the optimized p layer circuit. This proved useful (Zhou et al. 2020): for three-regular graphs, the number of optimization steps reduced exponentially, from \(2^{O(p)}\) for random parameter initialization to O(poly(p)) (Zhou et al. 2020). However, it required the full optimization of the p layer circuit. Similarly, Alam et al. (2020) used a regression model to predict the initial parameters of QAOA for a circuit with \(p \ge 2\), given the optimized parameters for the corresponding single-layer circuit, i.e., \(p=1\). This requires the generation of an optimized dataset for both the one-layer circuit and the desired p-layers circuit; moreover, given a new problem instance, this method requires full optimization of its single-layer circuit. Recently, it was shown that reusing the optimal parameters of small graph instances can be beneficial for solving the MaxCut problem of larger graph instances, when using single-layer QAOA circuits (\(p=1\)) (Galda et al. 2021). This is due to the confined combinatorial number of small regular subgraphs with radius p (the connection between the number of circuit layers and the performance on p-radius graphs was analyzed in Farhi et al. (2014) and is also addressed here, in Section 4.4). However, for \(p>1\), the combinatorial number of subgraphs increases rapidly and the method becomes less practical.

An illustration of the proposed method: each new problem instance (e.g., a graph in the MaxCut problem) is encoded (e.g., by its adjacency matrix) and inserted as an input into a trained neural network. The latter predicts better initial parameters for QAOA, specifically tailored to the given problem instance (the graph)

The Trotterized quantum annealing (TQA) is an initialization protocol that personalizes the initial parameters for a new problem instance by running the quantum circuit on many different linear initializations and choosing the initial parameters that achieve the best result (Sack and Serbyn 2021). Another approach proposed by Shaydulin et al. (2019) is a multi-start method in which the objective function is evaluated in different places in the parameters domain by using gradient-free optimizers. They show that it is possible to reach good minima with a finite number of circuit evaluations. These methods perform well but at a high computational cost per problem instance, as they optimize the initial parameters for each new problem instance. In particular, they do not leverage the knowledge obtained from previous QAOA optimizations.

Several methods used machine learning techniques to improve the performance of QAOA. For example, several studies have attempted to facilitate the optimization step by replacing the standard classical optimizers with reinforcement learning methods and meta-learning algorithms (Khairy et al. 2019; Wauters et al. 2020; Khairy et al. 2020, 2019; Wilson et al. 2021; Verdon et al. 2019; Wang et al. 2021). Friedrich et al. use NN to avoid barren plateaus (Friedrich and Maziero 2022). Egger et al. proposed the warm-start QAOA algorithm, where the qubits are initialized in an approximated solution instead of the uniform superposition as typically done in QAOA (Egger et al. 2021). Jain et al. used a graph neural network (GNN) for predicting the probability of each node being on either side of the cut in order to initialize warm-start QAOA (Jain et al. 2021). Unlike these methods, we use NN only to predict the initial parameters. Since we do not alter the optimization process, our method can be applied together with any of the other methods.

3 Our method

Given a combinatorial optimization problem, we predict the best initialization parameters for QAOA for a new particular problem instance, as illustrated in Fig. 1. We use a simple, fully connected neural network (NN) that takes as an input an encoding of the problem instance and predicts initialization parameters for that specific instance. The encoding of the problem instances may vary from one combinatorial problem to another. In our case of solving the MaxCut problem, we encode each graph instance by its adjacency matrix, which is then fed to the network, as described below.

The method is based on the assumption that the QAOA was already applied on n different problem instances \(S=\{s_i\}_{i=1}^n\), and that both the set of instances and the set of the corresponding final parameters \(P=\{\vec {\beta _i},\vec {\gamma _i}\}_{i=1}^n\) were saved and can be used as labeled data for the NN training. This allows us to exploit past QAOA calculations for predicting the initial parameters \((\vec {\beta }_{new},\vec {\gamma }_{new})\) for a new problem instance.

Formally, we train a neural network \(f_\theta \) to map each problem instance from S to its optimal circuit parameters from P. The input of the NN encodes the problem instance and the NN output is a vector of size 2p, where p is the number of layers in the quantum circuit: p parameters for \(\vec {\beta }\) and p parameters for \(\vec {\gamma }\). The neural network parameters \(\theta \) are trained to minimize the L2 norm

where \(f_{\theta } (s_{i})\) is the NN output corresponding to the i’th instance.

Finally, once the neural network is trained, predicting the initial parameters for a QAOA calculation of a new problem instance is done by setting \((\vec {\beta }_{new},\vec {\gamma }_{new}) = f_\theta (s_{new})\).

In comparison to previous methods, which either offer a fixed set of parameters for all problem instances or personalize the parameters per problem instance, but at a high cost of extra quantum circuit execution, our scheme manages, by design, to personalize the parameters per problem instance with no such extra cost. This is an a-priori advantage. In the next section, the empirical performance of the methods is examined.

3.1 Applying to the MaxCut problem

We exemplify our method on the MaxCut problem. We encode each problem instance, i.e., a graph, by its adjacency matrix. As illustrated in Fig. 2, the matrix is reshaped into a one-dimensional binary vector of length \(\frac{N(N-1)}{2}\), which is given as an input to the NN.

4 Results

4.1 Setup, benchmarking, and implementation details

Setup

We test the proposed method by solving the MaxCut problem for Erdős-Rényi graphs of \(N=6 - 16\) nodes. In an Erdős-Rényi graph, each edge (i, j) exists with a certain probability, independently from all other edges. We consider two different graph ensembles: (a) constant Erdős-Rényi graphs—where the existing probability for each edge is constant and equals \(p=0.5\), for all graphs; (b) random Erdős-Rényi graphs—where the edge probability changes between graphs but remains the same for all edges within a graph: for each graph, we uniformly sample a probability in the range \(p\in [0.3,0.9]\) and assign it to all the edges. While the first constant probability setup is the most commonly considered in the literature (see, e.g., Sack and Serbyn (2021)), the latter setup with a random edge probability better represents natural, real-world scenarios, where the probability for creating a connection between nodes depends on variables that may vary across graphs. For example, if the nodes of the graph represent people and a connection indicates an interaction between two people, then the probability of the interaction usually varies depending on external variables, such as place, age, and culture, and varies between different communities.

Benchmarking

To evaluate the proposed initialization method, we compare its performance to those of four initialization methods: (a) the batches optimization method (Crooks 2018; b) the Trotterized quantum annealing (TQA) initialization procedure (Sack and Serbyn 2021) with a predefined \(\Delta t\); (c) a simple linear method; and (d) an average method. The first two methods (Crooks 2018; Sack and Serbyn 2021) are state-of-the-art methods, described in Section 2.3, whereas the latter two are simpler yet natural baselines. We chose to focus on the batches and the TQA optimization methods because they are the best initialization methods known to date that, given a new test graph, do not require any additional computational effort, as is also the case in our proposal.

To enable the comparison, we implemented all four benchmark methods, as follows. In the batches optimization method, the QAOA initial parameters are determined by finding a fixed, optimized set of parameters for a large training set of graphs that are optimized in parallel. This set of optimized parameters is then used as the initialization point for new test graphs. We implemented the batches optimization method, where we used 200 training graphs for each setup choice, i.e., {graph ensemble (random or constant ER), number of qubits, and number of layers}. This is the same training set size used by Crooks (2018), which we explicitly verified is sufficient, in the sense that optimizing over a larger set did not yield parameters that performed better on the test set.

The TQA initialization procedure takes the following linear form:

where \(l=1...p\) indicates the layer’s number in the quantum circuit. In the complete TQA protocol, the hyperparameter \(\Delta t\) is determined by performing a simple grid search that optimizes the overall performance for each graph. This requires extra quantum circuit executions for each new graph instance, which we wanted to avoid to enable a fair comparison. It was numerically observed by Sack and Serbyn (2021) that for graph ensembles of similar nature (e.g., regular unweighted graphs, weighted regular graphs, and constant ER graphs), the optimal value of \(\Delta t\) does not vary by much. Therefore, in our experiments, for each kind of ensemble (constant and random ER), number of nodes n, and number of layers p, we performed the complete TQA protocol over 50 training graphs and averaged their results to find a single \(\Delta t^*\) that optimizes the overall performance. We then used this optimized \(\Delta t^*\) to evaluate the performance of TQA on new graphs. See Appendix 1 for more details.

We also implemented the simpler baselines: (a) a linear initialization in which \(\beta \) decreases linearly from \(\frac{\pi }{4}(1-\frac{1}{p})\) to \(\frac{\pi }{4p}\) and \(\gamma \) increases linearly from \(\frac{\pi }{p}\) to \(\pi (1-\frac{1}{p})\). This schedule is similar to the TQA one but simpler, as it employs no further hyperparameters. The reason for this particular choice is the periodicity of \(\beta _i \in [0,\frac{\pi }{2}]\) and \(\gamma _i \in [0,2\pi ]\) when plugged into Eq. 2. Note that a similar choice was made in Zhou et al. (2020); Fuchs et al. (2021; b) an average initialization, which takes the optimal parameters of 100 training graphs and averages them. This initialization method has not yet been addressed in the literature. Yet, it is natural to consider it once we have independent sets of optimized \((\vec {\beta },\vec {\gamma })\) parameters, as we do in this work. Moreover, our numerical results indicate that, given its simplicity and performance, this is a relatively good choice. We further tried a random initialization scheme, but it performed poorly, starting at a low approximation ratio and reaching suboptimal local minima after optimization; therefore, it is not shown here.

Implementation details

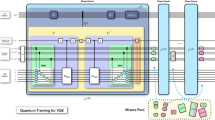

We built a dataset of 5,000 graphs for each setup combination, namely the size of the graph, number of layers, and sampling technique, as described above. The size of the dataset was chosen by increasing the dataset until the convergence of the corresponding zeroth iteration approximation ratio (see Appendix 2 for a detailed description). In particular, we considered Erdős-Rényi (ER) graphs of sizes 6 to 16 nodes, whose edges are sampled randomly at a probability of 0.5 or using a random uniform probability in the range [0.3, 0.9]. Then, we optimized each graph’s MaxCut solution by the QAOA algorithm, using the BFGS classical optimizer (Broyden 1970; Fletcher 1970; Goldfarb 1970; Shanno 1970), where we used the full TQA algorithm to initialize our \((\vec {\beta },\vec {\gamma })\) parameters. Using this procedure, we built a labeled training set of {graph, optimized \((\vec {\beta },\vec {\gamma })\) parameters} pairs, with which we trained the neural network, based on the L2-norm loss function of Eq. 6. All our QAOA circuit executions were performed on Qiskit’s noiseless state vector simulator (Aleksandrowicz et al. 2019) (see Sturm (2023) for a recent tutorial on QAOA implementation using Qiskit).

In all setups, we used a simple fully connected feed-forward three-layer network architecture: the input layer is composed of \(\frac{N(N-1)}{2}\) neurons, so as to encode the adjacency matrix of an undirected graph of N nodes (see Fig. 2), the hidden layer has a fixed number of 100 neurons, and the output layer has 2p neurons that encode the learned \((\vec {\beta },\vec {\gamma })\) parameters of the optimized QAOA circuit.

The approximation ratio (Eq. 5) during QAOA optimization, starting from initial parameters obtained by the different methods, averaged over 50 graphs of \(N=14\) nodes. The QAOA circuit is composed of two layers. All graphs are sampled using ER: (a) with constant edge probability and (b) with random edge probability. The proposed NN method converges the fastest in both cases. A more significant improvement is observed in the case of graphs with random edge probability

4.2 Constant Erdős-Rényi

We begin with ER graphs with \(N=14\) nodes generated with a constant edge probability of 0.5. Figure 3a compares the performance of the proposed NN method with those of (a) the batches optimization method (Crooks 2018), (b) the TQA initialization procedure (Sack and Serbyn 2021), (c) the linear method, and (d) the average method, as described above. Each point indicates the averaged approximation ratio (Eq. 5) achieved by a two-layer QAOA circuit over 50 test graphs as a function of the QAOA optimization step iterations. The standard error of the mean is less than \(0.47\%\) and is not displayed in the figure for visual clarity.

It is seen that prior to the QAOA optimization steps, at the zeroth iteration, each method reaches a different approximation ratio and that all methods improve from one iteration to another until converging to the same approximation ratio. Yet, each method converges at a different rate. Figure 3a demonstrates that, compared to all other methods, the NN method begins at a better approximation ratio and converges the fastest. This means that the NN learns to predict adequate \((\vec {\beta },\vec {\gamma })\) parameters, given the adjacency matrix of a test graph. In fact, it can be observed that the initial approximation ratio performance of our NN method is so close to the final parameters that hardly any optimization step is required. Note that for such a shallow two-layer circuit, the QAOA is not expected to reach an optimal approximation ratio, as indeed observed for all initialization methods.

It is also observed that the simple average method is the second-best approach for the constant ER ensemble with an edge probability of \(p=0.5\). This implies that the distribution over the \((\vec {\beta },\vec {\gamma })\) parameters is rather confined. What would be the effect of a more widespread ensemble distribution on our results? To check that, we next examine the performance of the benchmark initialization methods, alongside the NN one, on a random ER ensemble.

4.3 Random Erdős-Rényi

So far, we tested our method in the standard setup: graphs are randomly drawn from ER with an edge probability of 0.5. Our approach relies on a straightforward neural network (NN), which may exhibit sensitivity to samples drawn from distributions divergent from the training distribution, as highlighted in previous works (Ben-David et al. 2010; Amosy and Chechik 2022). Nonetheless, several investigations indicate that the utilization of neural networks for weight prediction in the context of other neural networks results in increased robustness to distributional shifts (Amosy et al. 2024; Volk et al. 2023; Amosy et al. 2022). This observation serves as the impetus for us to assess the efficacy of our method across a spectrum of distributions broader than the conventional Erdős-Rényi framework. We now expand this setup by assigning each graph with an edge probability that is sampled uniformly from the interval [0.3,0.9]. This way, we increase the diversity between the graphs, making it more realistic and challenging to guess good initial parameters (Zhou et al. 2020; Sack and Serbyn 2021; Galda et al. 2021).

Figure 3b shows the performance of all our benchmark methods in the case of such a random ER graph ensemble. The results resemble those of the constant ER ensemble, with similar trends. In particular, the NN method begins from the highest approximation ratio and converges faster than all other methods. Yet, in the random ER case, the differences between the methods are much more pronounced. First, it is seen that, in contrast to the constant ER case, not all methods converge to the same approximation ratio, with the simple linear method reaching the lowest approximation ratio. Moreover, all methods, except for the NN one, exhibit a much slower convergence rate than the constant ER scenario. The performance of the NN method is manifested in the achievable approximation ratio at the zeroth iteration, showing a significant approximation ratio gap of roughly \(3\%\) from the second-best result obtained by the batches optimization method.

This is not surprising: the NN method is designed to predict the best \((\vec {\beta },\vec {\gamma })\) parameters per graph instance, i.e., to personalize its performance. This is in contrast to all the benchmark methods, which essentially suggest a fixed set of \((\vec {\beta },\vec {\gamma })\) parameters for all the test graphs, independent of the particular graph’s structure. It should be noted that, in principle, the TQA has the capacity of personalizing over a particular graph, by searching for the optimal dt that maximizes its results. However, as explained in Section 4.1, this search of \(\Delta t\) for each graph comes at a very high computational cost per graph, which we aim to avoid.

It is seen in Fig. 3b that also in the more challenging case of random ER graphs, the NN method requires merely a single iteration to converge. In contrast, the TQA, which converges the fastest out of all other methods, requires about 8–12 iterations (depending on accuracy) to reach the same level of approximation ratio. We demonstrate the computational saving of our method by estimating the quantum circuit executions in our experiments. In our case, using the BFGS optimizer, we observed that even in the small \(p=2\) circuit depth, each intermediate iteration requires, on average, 12 estimations of the cost function \(F_p(\vec {\beta },\vec {\gamma })\), each with different \((\vec {\beta },\vec {\gamma })\) parameters, so as to evaluate the relevant gradients. As each cost function estimation typically requires thousands of shots to get a meaningful statistical accuracy, we get an overall saving of hundreds of thousands of quantum circuit executions. This saving is non-negligible and is expected to grow linearly with the circuit’s depth.

4.4 Varying the size of the graph

4.4.1 Noiseless simulations

In previous sections, we examined the performance of the proposed NN method on graphs with a fixed number of \(N=14\) nodes. Next, we examine the method on random ER graphs with varying number of nodes and draw our attention to the first QAOA evaluation, without applying any optimization steps. To that end, we generated 200 different graphs for each graph size, from \(N=6\) to 16. Then, for each graph, we obtained the initial parameters using all the different methods, and ran a two-layer QAOA circuit using those parameters (zeroth iteration only). Figure 4 shows the average approximation ratio achieved by each method as a function of graph size.

The approximation ratio (Eq. 5) attained by each initialization method shown as a function of the number of nodes in the graph. Graphs were sampled using ER with a random edge probability for each graph size as in Fig. 3b. The approximation ratios were calculated at the zeroth iteration, i.e., no optimization steps were taken. The number of layers is \(p=2\). It is observed that the relative performance of our proposed method increases with graph size

It is seen that the NN method outperforms all other methods, irrespective of the graphs’ size. Moreover, the preference of the NN method over the other methods increases with the number of nodes, especially with respect to the batches and the average optimization methods. In comparison to the TQA method, the NN shows a rather fixed, large performance gap of roughly \(8\%\) in approximation ratio in favor of the NN method.

These results indicate that as we increase the graph size N for a fixed number of layers p, the advantage of our method becomes more significant. To understand this trend, we go back to the structure of the QAOA algorithm and consider what happens when the number of layers is kept fixed while the number of nodes increases, in approximating the MaxCut problem. We recall that QAOA is a p-local algorithm: in the case of the MaxCut problem, the algorithm’s objective function (see Eq. 2) is a sum of the expectation value terms of all edges in the graph (i.e., sum of \(\langle \psi _{p}(\vec {\beta },\vec {\gamma }) |\sigma _{i}^{z}\sigma _{j}^{z}| \psi _{p}(\vec {\beta },\vec {\gamma }) \rangle \) terms); following the commutation relation of the involved operators reveals that each expectation value term is influenced only by nodes that are at most p edges away from the calculated edge term, i.e., all subgraphs with radius of at most p (Farhi et al. 2014; Barak and Marwaha 2021). Zhou et al. showed that for certain families of graphs, such as the three-regular graphs, where the possible number of subgraphs with confined radius p is finite, the spread of the optimal parameters vanishes in the limit \(N\rightarrow \infty \) (Zhou et al. 2020). This may justify a non-personalized approach which initializes a single set of \((\vec {\beta },\vec {\gamma })\) parameters for all graph instances. In contrast, in random ER graphs, the number of confined subgraphs is not finite, but rather grows with N. Accordingly, the set of optimal parameters is not expected to converge to a single set as N grows. This explains the decline of the approximation ratio as N grows for methods that try to initialize with the same parameters for all graphs and emphasizes the power of a personalized approach as proposed here. In the next section, we examine further the effect of personalizing the initial parameters. But first, we verify that our proposed approach maintains its relative advantage also in a noisy setup.

4.4.2 Noisy simulations

To test our method also in the presence of quantum noise, we chose to simulate a simple yet effective noise model, with the following properties: (1) a unified readout error rate of \(2\%\) over all qubits; (2) we have defined the single qubit gates (excluding the RZ gates which are implemented in a virtual, noiseless manner also on real quantum hardware) under a depolarizing noise channel with a depolarizing parameter \(\lambda = 0.001\); and (3) two-qubit gates were defined under a depolarizing noise channel with a depolarizing parameter \(\lambda = 0.01\). The depolarizing parameters defined for the single- and two-qubit depolarizing noise channels induce effective error rates of the same orders of magnitude as current state-of-the-art superconducting quantum computers.

The approximation ratio (Eq. 5) attained by the TQA and our method in noisy simulations, shown as a function of the number of nodes in the graph. The approximation ratios were calculated at the zeroth iteration, i.e., no optimization steps were taken. The number of layers is \(p=2\). Error bars indicate the variance in approximation ratios between graphs. It is observed that the relative performance of our proposed method is maintained also in noisy simulations

Initial \(\beta \) (left figure) and \(\gamma \) (right figure) parameters as a function of layers in the QAOA circuit, for three different individual graphs. A comparison is made between the prediction of the NN (solid blue lines), the optimized parameters (dashed blue lines), and the other non-personalized baselines: the simple average method (solid red line with square marks), the batches method (solid brown line with circle marks), and the TQA (solid orange with star marks). The NN predictions are shown to follow closely the optimized curves for all three graphs

Figure 5 presents the average approximation ratios attained by both the TQA and our methodology across varying graph sizes. For each graph size, we evaluated 50 graphs extracted from the same dataset utilized in the noiseless analysis (see Fig. 4). We used the same NN that was trained using data carried from noiseless QAOA executions. It is, however, expected that NN trained with data from noisy calculations would lead to better results, since the NN prediction is expected to correct for some of the persistent errors. Similarly, for the TQA method, we employed the optimal \(\Delta t^*\) parameter, attained from noiseless calculations, as presented in Appendix 1. It is seen in Fig. 5 that, also in the presence of noise, the NN-based solution consistently outperforms the TQA in achieving higher approximation ratios for every graph size examined. This outcome substantiates the robustness of our approach under noisy conditions.

4.5 Personalization

The key objective of our method is to create personalized initial parameters per problem instance. Figure 6 depicts the \((\vec {\beta },\vec {\gamma })\) values as a function of the layers in a four-layer circuit for three individual graphs (graph-1, graph-2, graph-3) with \(N=12\) nodes, sampled from the random ER ensemble. The exact optimized parameters are marked in dotted blue lines of different shades and different markers: graph-1 is marked in dark blue circles, graph-2 is shown in blue squares, and graph-3 is shown in light blue triangles. The NN parameters are shown in solid lines with corresponding shades and markers. The simple average method, the batches method, and the TQA all produce a single set of parameters, depicted in red, brown, and orange, respectively. It is evident that the \((\vec {\beta },\vec {\gamma })\) parameters obtained by our NN approach closely resemble the optimal parameters per graph, thus performing the personalization successfully. In contrast, other approaches, that do not have the flexibility to personalize the initial parameters, predict parameters that are approximately linear and are further away from the optimal parameters of the individual graphs.

5 Discussion and outlook

This work joins an existing effort to find proper initialization for the QAOA circuit’s variational parameters. We showed that a simple neural network could be trained to predict initial \((\vec {\beta },\vec {\gamma })\) parameters for QAOA circuits that solve the MaxCut problem, per graph instance. Moreover, we showed that these predicted parameters match very well with the optimal parameters, to which the QAOA iterative scheme eventually converges. This enables an effectively iteration-free approach, where the QAOA circuit is executed only once, with the predicted parameters, thus saving a significant amount of computational resources. We demonstrated that our approach requires up to 85% fewer iterations compared to current state-of-the-art initialization methods to reach optimized results for solving the MaxCut problem on both constant and random Erdős-Rényi graphs.

Our method assumes the availability of previous QAOA optimizations for different instances of the same problem. The neural network we employed is a simple, fully connected three-layer network, and the classical burden of training the network is negligible. Given a new problem instance, our method directly predicts the corresponding variational parameters without needing to execute the quantum circuit. In addition, as the training set grows, the neural network can rapidly adjust to the new data by a few classical learning iterations. Thus, in contrast to other methods, our method does not require any auxiliary executions on quantum devices. In this paper, we employed the simplest deep network possible. Other NN architectures, like graph neural networks (GNN), may be better for this problem, and we expect this to be a topic of future research.

The ability of the proposed NN method to personalize the predicted parameters per graph instance becomes more significant as the graph ensemble is more varied and the spread of optimal parameters is broader. We demonstrated this property by showing that while the proposed method outperforms all benchmark methods for constant edge Erdős-Rényi graphs, it suppresses them even further when the edge probability is taken to be random. We thus conjecture that our method will provide an even more significant benefit for classes of graphs that show larger distributions, e.g., for random and weighted graphs, for which the optimal parameters are known to have a wider distribution (Zhou et al. 2020). Such graphs are prevalent in many practical applications, such as VLSI design and social networking (see, e.g., Spirakis et al. (2021)).

Another manifestation of the same observation is that for the realistic scenario of a finite number of layers p and a growing number of graph nodes N, our method leads to better performance compared to other methods (see Fig. 4) for approximating the MaxCut of random Erdős-Rényi graphs; as quantum computers develop and have more qubits, they are able to solve larger instance problems. Yet, for practical solutions, the number of layers in QAOA must be finite. This makes our method especially practical in the NISQ era, where quantum devices increase in size but are too noisy for executing deep circuits.

Finally, in this work, we focused on the MaxCut problem but we believe that our method is beneficial for any optimization problem, solved via a variational quantum algorithm (not necessarily QAOA), that incorporates an underlying mapping between the different instances of the problem and their corresponding optimized variational parameters (see also Friedrich and Maziero (2022)). This is left for future research.

Code availability

Our code is publicly available at https://github.com/amosy3/IterativeFreeQAOA.

References

Akshay V, Rabinovich D, Campos E, Biamonte J (2021) Parameter concentrations in quantum approximate optimization. Phys Rev A 104(1):010401

Alam M, Ash-Saki A, Ghosh S (2020) Accelerating quantum approximate optimization algorithm using machine learning. In: 2020 Design, Automation & Test in Europe Conference & Exhibition (DATE), IEEE, pp 686–689

Aleksandrowicz G, Alexander T, Barkoutsos P, Bello L, Ben-Haim Y, Bucher D, Cabrera-Hernández FJ, Carballo-Franquis J, Chen A, Chen C-F et al (2019) Qiskit: an open-source framework for quantum computing. 16

Amosy O, Chechik G (2022) Coupled training for multi-source domain adaptation. In: Proceedings of the IEEE/CVF winter conference on applications of computer vision, pp 420–429

Amosy O, Eyal G, Chechik G (2024) Late to the party? On-demand unlabeled personalized federated learning. In: Proceedings of the IEEE/CVF winter conference on applications of computer vision, pp 2184–2193

Amosy O, Volk T, Ben-David E, Reichart R, Chechik G (2022) Text2Model: model induction for zero-shot generalization using task descriptions. arXiv:2210.15182

Barak B, Marwaha K (2021) Classical algorithms and quantum limitations for maximum cut on high-girth graphs. arXiv:2106.05900

Ben-David S, Blitzer J, Crammer K, Kulesza A, Pereira F, Vaughan JW (2010) A theory of learning from different domains. Mach Learn 79:151–175

Brandao FG, Broughton M, Farhi E, Gutmann S, Neven H (2018) For fixed control parameters the quantum approximate optimization algorithm’s objective function value concentrates for typical instances. arXiv:1812.04170

Broyden CG (1970) The convergence of a class of double-rank minimization algorithms 1. general considerations. IMA J Appl Math 6(1):76–90

Cerezo M, Arrasmith A, Babbush R, Benjamin SC, Endo S, Fujii K, McClean JR, Mitarai K, Yuan X, Cincio L et al (2021) Variational quantum algorithms. Nat Rev Phys 3(9):625–644

Conn AR, Scheinberg K, Vicente LN (2009) Introduction to derivative-free optimization. SIAM

Crooks GE (2018) Performance of the quantum approximate optimization algorithm on the maximum cut problem. arXiv:1811.08419

Egger DJ, Mareček J, Woerner S (2021) Warm-starting quantum optimization. Quantum 5:479

Farhi E, Goldstone J, Gutmann S (2014) A quantum approximate optimization algorithm. arXiv:1411.4028

Farhi E, Goldstone J, Gutmann S, Sipser M (2000) Quantum computation by adiabatic evolution

Farhi E, Harrow AW (2016) Quantum supremacy through the quantum approximate optimization algorithm. arXiv:1602.07674

Fletcher R (1970) A new approach to variable metric algorithms. Comput J 13(3):317–322

Friedrich L, Maziero J (2022) Avoiding barren plateaus with classical deep neural networks. Phys Rev A 106(4):042433

Fuchs FG, Kolden HØ, Aase NH, Sartor G (2021) Efficient encoding of the weighted max \(k\)-cut on a quantum computer using QAOA. SN Comput Sci 2(2):1–14

Galda A, Liu X, Lykov D, Alexeev Y, Safro I (2021) Transferability of optimal QAOA parameters between random graphs. In: 2021 IEEE International conference on quantum computing and engineering (QCE), IEEE, pp 171–180

Gilyén A, Arunachalam S, Wiebe N (2019) Optimizing quantum optimization algorithms via faster quantum gradient computation. In: Proceedings of the thirtieth annual ACM-SIAM symposium on discrete algorithms, SIAM, pp 1425–1444

Goldfarb D (1970) A family of variable-metric methods derived by variational means. Math Comput 24(109):23–26

Jain N, Coyle B, Kashefi E, Kumar N (2021) Graph neural network initialisation of quantum approximate optimisation. arXiv:2111.03016

Khairy S, Shaydulin R, Cincio L, Alexeev Y, Balaprakash P (2019) Reinforcement learning for quantum approximate optimization. Research Poster, accepted at Supercomputing 19

Khairy S, Shaydulin R, Cincio L, Alexeev Y, Balaprakash P (2019) Reinforcement-learning-based variational quantum circuits optimization for combinatorial problems. arXiv:1911.04574

Khairy S, Shaydulin R, Cincio L, Alexeev Y, Balaprakash P (2020) Learning to optimize variational quantum circuits to solve combinatorial problems. In: Proceedings of the AAAI conference on artificial intelligence, vol 34, pp 2367–2375

Korte BH, Vygen J, Korte B, Vygen J (2011) Combinatorial optimization vol 1

Lotshaw PC, Nguyen T, Santana A, McCaskey A, Herrman R, Ostrowski J, Siopsis G, Humble TS (2022) Scaling quantum approximate optimization on near-term hardware. arXiv:2201.02247

McClean JR, Kimchi-Schwartz ME, Carter J, De Jong WA (2017) Hybrid quantum-classical hierarchy for mitigation of decoherence and determination of excited states. Phys Rev A 95(4):042308

Mitarai K, Negoro M, Kitagawa M, Fujii K (2018) Quantum circuit learning. Phys Rev A 98(3):032309

Nannicini G (2019) Performance of hybrid quantum-classical variational heuristics for combinatorial optimization. Phys Rev E 99(1):013304

Preskill J (2018) Quantum computing in the NISQ era and beyond. Quantum 2:79

Rabinovich D, Sengupta R, Campos E, Akshay V, Biamonte J (2022) Progress towards analytically optimal angles in quantum approximate optimisation. Mathematics 10(15):2601

Sack SH, Serbyn M (2021) Quantum annealing initialization of the quantum approximate optimization algorithm. arXiv:2101.05742

Schuld M, Bergholm V, Gogolin C, Izaac J, Killoran N (2019) Evaluating analytic gradients on quantum hardware. Phys Rev A 99(3):032331

Shanno DF (1970) Conditioning of quasi-newton methods for function minimization. Math Comput 24(111):647–656

Shaydulin R, Safro I, Larson J (2019) Multistart methods for quantum approximate optimization. In: 2019 IEEE High performance extreme computing conference (HPEC), IEEE, pp 1–8

Spirakis P, Nikoletseas S, Raptopoulos C (2021) Max cut in weighted random intersection graphs and discrepancy of sparse random set systems. LIPIcs: Leibniz International proceedings in informatics

Sturm A (2023) Theory and implementation of the quantum approximate optimization algorithm: a comprehensible introduction and case study using Qiskit and IBM Quantum computers. arXiv:2301.09535

Verdon G, Broughton M, McClean JR, Sung KJ, Babbush R, Jiang Z, Neven H, Mohseni M (2019) Learning to learn with quantum neural networks via classical neural networks. arXiv:1907.05415

Volk T, Ben-David E, Amosy O, Chechik G, Reichart R (2023) Example-based hypernetworks for multi-source adaptation to unseen domains. In: Findings of the association for computational linguistics: EMNLP 2023, pp 9096–9113

Wang H, Zhao J, Wang B, Tong L (2021) A quantum approximate optimization algorithm with metalearning for MaxCut problem and its simulation via tensorflow quantum. Math Probl Eng 2021

Wauters MM, Panizon E, Mbeng GB, Santoro GE (2020) Reinforcement-learning-assisted quantum optimization. Phys Rev Phys 2(3):033446

Willsch M, Willsch D, Jin F, De Raedt H, Michielsen K (2020) Benchmarking the quantum approximate optimization algorithm. Quantum Inf Process 19:1–24

Wilson M, Stromswold R, Wudarski F, Hadfield S, Tubman NM, Rieffel EG (2021) Optimizing quantum heuristics with meta-learning. Quantum Mach Intell 3(1):1–14

Zhou L, Wang S-T, Choi S, Pichler H, Lukin MD (2020) Quantum approximate optimization algorithm: performance, mechanism, and implementation on near-term devices. Phys Rev X 10(2):021067

Acknowledgements

We would like to thank Yoni Zimmermann and Yehuda Naveh for the fruitful discussions.

Funding

Open access funding provided by Bar-Ilan University.

Author information

Authors and Affiliations

Contributions

Conceptualization: Ohad Amosy, Tamuz Danzig, Adi Makmal Methodology: Ohad Amosy, Tamuz Danzig, Adi Makmal Formal analysis and investigation: Ohad Amosy, Tamuz Danzig Code implementation—neural networks: Ohad Amosy Code implementation—QAOA optimization: Tamuz Danzig Code implementation—QAOA optimization in noisy simulations: Ohad Lev Writing—original draft preparation: Ohad Amosy, Tamuz Danzig Writing—review and editing: Ohad Lev, Ely Porat, Gal Chechik, Adi Makmal Supervision: Adi Makmal All authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix

1 Finding an optimal \(\Delta t\) for the TQA method

In our experiments, for each number of nodes N, number of layers p, and graph ensemble (constant and random ER graphs, as in the main text), we applied a grid search to find the optimal \(\Delta t\) (Eq. 7), as in Sack and Serbyn (2021). We averaged the best \(\Delta t\)’s over 50 different graphs to obtain a single initialization that would fit as well as possible the variety of graphs. Figure 7 shows the optimal \(\Delta t\) for different graph sizes, with \(p=2\). For the random ER graphs, the spread of the optimal \(\Delta t\) is wider, as the distribution of the graphs is broader.

2 Training data size

Each network was trained using 5,000 data points. Figure 8 depicts the resulting approximation ratio as a function of the training data size. It is seen that the approximation ratio increases with the size of the training data and that the resulting AR is well converged with 5,000 samples, as the AR improves by less than 0.01 in comparison to a training set of size 1,000. Moreover, in comparison to other methods (see Fig. 4), it is observed that a training set of 1000 samples is already sufficient to get \((\vec {\beta },\vec {\gamma })\) parameters that reach better AR than that of the batches method and that a training size of merely 50 samples would suffice to outperform the TQA method.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Amosy, O., Danzig, T., Lev, O. et al. Iteration-Free quantum approximate optimization algorithm using neural networks. Quantum Mach. Intell. 6, 38 (2024). https://doi.org/10.1007/s42484-024-00159-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42484-024-00159-y