Abstract

Quantum neural networks are expected to be a promising application of near-term quantum computing, but they face challenges such as vanishing gradients during optimization and limited expressibility due to the limited number of qubits and shallow circuits. To mitigate these challenges, distributed quantum neural networks have been proposed that make predictions by approximating the outputs of a large circuit using multiple small circuits. However, approximating a large circuit requires an exponential number of small-circuit evaluations. Here, we instead propose to distribute partitioned features over multiple small quantum neural networks and to use the ensemble of their expectation values to generate predictions. To verify our distributed approach, we demonstrate ten-class classification of the Semeion and MNIST handwritten digit datasets. The results on the Semeion dataset imply that, while our distributed approach may outperform a single quantum neural network in classification performance, excessive partitioning reduces performance. Nevertheless, on the MNIST dataset, we achieved ten-class classification with over 96% accuracy. Our proposed method not only achieved highly accurate predictions on a large dataset but also reduced the hardware requirements for each quantum neural network compared with a single large quantum neural network. Our results highlight distributed quantum neural networks as a promising direction for practical quantum machine learning algorithms compatible with near-term quantum devices. We hope that our approach is useful for exploring quantum machine learning applications.

1 Introduction

Quantum machine learning has emerged as a promising application for near-term quantum computers. Popular quantum machine learning algorithms such as quantum kernel methods (Havlíček et al. 2019; Schuld and Killoran 2019; Haug et al. 2023) and quantum neural networks (QNNs) (Cerezo et al. 2021; Mitarai et al. 2018; Farhi and Neven 2018; Schuld et al. 2014) have been studied. QNNs, particularly deep QNNs, exhibit remarkable expressibility (Abbas et al. 2021; Sim et al. 2019; Schuld et al. 2021), but the limitations of current quantum devices, such as restricted qubit counts and constrained circuit depths, limit the achievable model complexity. In addition, QNNs suffer from vanishing gradients during optimization (McClean et al. 2018; Cerezo et al. 2021; Wang et al. 2021).

To mitigate these problems, a promising direction in QNN research is the development of distributed algorithms across multiple quantum devices (Pira and Ferrie 2023). One reported advantage is that a class of distributed QNNs offers an exponential reduction in communication for inference and training compared with classical neural networks trained by gradient descent (Gilboa and McClean 2023). Additionally, a distributed approach can accelerate simulation by directly partitioning a given problem for parallel computation, for example, by distributing data to multiple quantum circuits for digit recognition or by distributing the evaluation of partitioned Hamiltonians in variational quantum eigensolvers (Du et al. 2021). Another approach approximates the outputs of a large quantum circuit by reconstructing them from the results of small quantum circuits (Marshall et al. 2023). This is achieved by a circuit cutting technique, i.e., expressing two-qubit gates as a sum of tensor products of single-qubit unitaries (Bravyi et al. 2016). This approach, however, requires an exponential number of small quantum circuit evaluations. Further research is required into distributed QNN frameworks that fully utilize multiple quantum computers while overcoming hardware limitations.

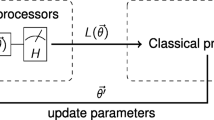

Fig. 1 The flow of our distributed approach over \(n_\text {qc}\) QNNs. First, we partition the features \(\varvec{x}_i\) equally into \(\{\varvec{x}_{i,j}\}_{j=1}^{n_\text {qc}}\). We input the partitioned features \(\{\varvec{x}_{i,j}\}_{j=1}^{n_\text {qc}}\) into the variational quantum circuits \(\{U(\varvec{x}_{i,j},\varvec{\phi }_j)\}_{j=1}^{n_\text {qc}}\). Then, we evaluate a loss function using the sum of the expectation values output by the quantum circuits and optimize the parameters \(\{\varvec{\phi }_j\}_{j=1}^{n_\text {qc}}\) to minimize the loss function.

In this paper, we introduce a novel approach that uses distributed QNNs to process separately partitioned features over multiple QNNs, avoiding circuit cutting techniques. Specifically, we partition the input features and encode each partition into a distinct QNN. We then sum the expectation values from the QNNs to make predictions. A recent study by Wu et al. (2022) encoded significantly downscaled partitioned features into distributed QNNs for feature extraction and then used another QNN, fed with the extracted features, to perform binary classification of the MNIST dataset. Unlike that approach, ours requires fewer qubits and can handle the entire MNIST dataset with \(28\times 28\) features and 60000 training samples, which makes it more efficient for high-dimensional features and multi-class classification. We numerically investigate the performance of our proposed distributed QNN approach.

First, we compared the classification performance of a single QNN and our distributed approach on the Semeion handwritten digit dataset (Semeion Handwritten Digit 2008). Because the original \(16 \times 16\)-dimensional data were beyond our simulation capabilities, especially for a single large QNN, we reduced the \(16 \times 16\) features to \(8 \times 8\) via average pooling. The preprocessed data were then classified using either a single QNN or our distributed QNNs. Our distributed approach achieved higher accuracy and lower loss than the single QNN. We then extended our distributed method to the original \(16 \times 16\)-dimensional data, using four and eight independent QNNs. Both distributed models performed well; compared with the four-QNN model, the eight-QNN model achieved higher accuracy but at the cost of a higher loss. These results imply that encoding all features into a single QNN may not be optimal and that too many partitions degrade performance.

Furthermore, to validate the scalability of our distributed QNN approach, we applied it to the MNIST handwritten digit dataset (LeCun et al. 2010). This dataset consists of 60000 training samples and 10000 test samples, each of size \(28\times 28\). Using 14 QNNs in our distributed approach, we achieved over \(96\%\) accuracy in ten-class classification on this large dataset. This result is particularly notable given the computational cost of classically simulating ten-class classification of MNIST with a single QNN.

Our results highlight distributed QNNs as an effective and scalable architecture for quantum machine learning, with applicability to real-world problems. We anticipate that our proposed method will aid future research on distributed QNNs and investigations into quantum advantage by enabling experiments on large practical datasets.

2 Method

In this section, we present our distributed QNN approach, as shown in Fig. 1. Our distributed QNN model consists of \(n_\text {qc}\) shallower and narrower quantum circuits \(\{U(\varvec{x}_{i,j},\varvec{\phi }_j)\}_{j=1}^{n_\text {qc}}\), each of which processes a distinct subset of the input features: \(\varvec{x}_{i,j}\) denotes the jth partition of the ith input \(\varvec{x}_i\). As the outputs of the QNNs, we use the expectation values of a set of observables \(\{{O}^{(k)}\}_{k=1}^{d_\text {out}}\), where \(d_\text {out}\) denotes the output dimension. We define the total output \(\varvec{y}_i\) across all QNNs for the input \(\varvec{x}_i\) as the scaled sum of these expectation values:

\[ y_i^{(k)} = c \sum _{j=1}^{n_\text {qc}} \langle 0|^{\otimes n}\, U^\dagger (\varvec{x}_{i,j},\varvec{\phi }_j)\, O^{(k)}\, U(\varvec{x}_{i,j},\varvec{\phi }_j)\, |0\rangle ^{\otimes n}, \quad k=1,\ldots ,d_\text {out}, \tag{1} \]

where \(y_i^{(k)}\) is the kth component of \(\varvec{y}_i\), n is the number of qubits in each QNN, and c is a constant that rescales the outputs. For a classification task, we then apply the softmax function to normalize the outputs. The procedure of our model can be summarized as follows:

1. Partition the input features \(\varvec{x}_i\) into \(\{\varvec{x}_{i,j}\}_{j=1}^{n_\text {qc}}\).
2. Encode the partitioned features \(\{\varvec{x}_{i,j}\}_{j=1}^{n_\text {qc}}\) into the \(n_\text {qc}\) QNNs, one partition per QNN.
3. Evaluate the expectation values of the observables \(\{O^{(k)}\}_{k=1}^{d_\text {out}}\) for each QNN.
4. Compute \(\varvec{y}_i\) in Eq. 1 by summing the expectation values over the QNNs and multiplying by the constant c.
5. (For a classification task) Apply the softmax function.
6. Compute a loss function using \(\{\varvec{y}_i\}_{i=1}^N\) for a regression task or \(\{\text{ Softmax }(\varvec{y}_i)\}_{i=1}^N\) for a classification task, where N is the number of data points and \(\text{ Softmax }(\cdot )\) is the softmax function.
7. Optimize the parameters \(\{\varvec{\phi }_j\}_{j=1}^{n_\text {qc}}\) to minimize the loss.

We used this procedure in our numerical experiments.
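The procedure above can be sketched in plain Python as follows. This is a minimal, framework-free illustration, not the authors' torchquantum implementation: each QNN is abstracted as a hypothetical callable that returns its \(d_\text {out}\) expectation values, and the partitioning is a simple contiguous split. Because every expectation value lies in [-1, 1], the summed output lies in \([-n_\text {qc}, n_\text {qc}]\), and the constant c rescales it before the softmax.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical type: a "QNN" is any callable mapping a partitioned feature
# vector to a list of d_out expectation values (e.g. <X_1>, ..., <Z_5>).
QNN = Callable[[Sequence[float]], List[float]]


def partition(x: Sequence[float], n_qc: int) -> List[List[float]]:
    """Step 1: split a flat feature vector into n_qc equal, contiguous parts."""
    if len(x) % n_qc != 0:
        raise ValueError("feature count must be divisible by n_qc")
    size = len(x) // n_qc
    return [list(x[j * size:(j + 1) * size]) for j in range(n_qc)]


def distributed_output(x: Sequence[float], qnns: Sequence[QNN], c: float) -> List[float]:
    """Steps 2-4: y^(k) = c * sum_j <O^(k)> of QNN j evaluated on partition j (Eq. 1)."""
    parts = partition(x, len(qnns))
    outputs = [qnn(part) for qnn, part in zip(qnns, parts)]
    d_out = len(outputs[0])
    return [c * sum(out[k] for out in outputs) for k in range(d_out)]


def softmax(y: Sequence[float]) -> List[float]:
    """Step 5: numerically stable softmax (subtracting the max does not change the result)."""
    m = max(y)
    exps = [math.exp(v - m) for v in y]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(ys: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Step 6: mean cross-entropy of Softmax(y_i) against integer class labels."""
    return -sum(math.log(softmax(y)[t]) for y, t in zip(ys, labels)) / len(ys)
```

With two toy QNNs that return the fixed vectors `[1.0, 0.0]` and `[0.5, -0.5]`, and c = 2, `distributed_output` returns `[3.0, -1.0]`. Step 7 (optimization) is left to an autograd framework in practice.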

3 Results

In this section, we validate our distributed QNN approach through the classification of the Semeion and MNIST handwritten digit datasets. We first briefly describe the setup of our numerical experiments, starting with the Semeion dataset (Semeion Handwritten Digit 2008), which contains 1593 samples of \(16 \times 16\)-dimensional data representing the digits 0 to 9, with each feature value an integer from 0 to 255.

For preprocessing the Semeion dataset, we normalized the data for angle encoding. Specifically, we scaled the data values to the range between 0 and \(\pi /8\) when encoding 64 features per QNN, and between 0 and \(\pi /4\) when encoding 32 features per QNN. For the \(8 \times 8\) experiments, we then applied \(2\times 2\) average pooling to the normalized data to reduce the dimension from \(16 \times 16\) to \(8 \times 8\) because of the limited memory capacity of our GPU, which is especially restrictive when simulating the classification task with a single QNN.
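A minimal sketch of this preprocessing follows, assuming a fixed linear scaling from the stated pixel range [0, 255] to [0, `max_angle`]; the exact normalization in the authors' notebooks may differ, and the helper names `normalize` and `avg_pool_2x2` are hypothetical. Both operations are linear, so normalizing before pooling gives the same result as pooling first, up to floating-point rounding.

```python
import math
from typing import List

Image = List[List[float]]


def normalize(img: Image, max_angle: float, max_value: float = 255.0) -> Image:
    """Linearly map pixel values in [0, max_value] to rotation angles in [0, max_angle]."""
    return [[v / max_value * max_angle for v in row] for row in img]


def avg_pool_2x2(img: Image) -> Image:
    """Non-overlapping 2x2 average pooling, e.g. 16x16 -> 8x8 (even sizes assumed)."""
    h, w = len(img), len(img[0])
    return [
        [
            (img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4.0
            for c in range(0, w, 2)
        ]
        for r in range(0, h, 2)
    ]
```

For the single-QNN setting (64 features per QNN), `avg_pool_2x2(normalize(img, math.pi / 8))` turns a 16 × 16 image into an 8 × 8 grid of angles in [0, π/8].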

The architectures of our single QNN and distributed QNNs are illustrated in Fig. 2 and described further in the Appendix. They differ mainly in the number of qubits and encoding layers, which are adjusted according to the number of partitioned features allocated to each QNN in the distributed setup. In our approach, we distributed the evenly partitioned features across multiple independent QNNs. We then evaluated the expectation values of the observables \(\{X_1,\ldots ,X_5,Z_1,\ldots ,Z_5\}\) for each QNN, which gives ten outputs, one per digit class, and summed them over the \(n_\text {qc}\) QNNs, where \(n_\text {qc}\) denotes the number of QNNs. We applied the softmax function to this sum multiplied by a constant factor and used the cross-entropy loss as our loss function. We optimized the parameters \(\{\varvec{\phi }_j\}_{j=1}^{n_\text {qc}}\) of the QNNs to minimize the loss using the Adam optimizer (Kingma and Ba 2014) with a learning rate of 0.005. To perform our numerical experiments efficiently, we used the torchquantum library (Wang et al. 2022), which is designed for efficient classical simulation of quantum machine learning.
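For reference, the Adam update rule used for training can be written out as below. This is a plain-Python sketch over a flat parameter list; only the learning rate 0.005 comes from the text, while β1 = 0.9, β2 = 0.999 and ε = 1e-8 are the commonly used defaults and are assumed here. In a PyTorch-based setup such as torchquantum, one would normally use `torch.optim.Adam(params, lr=0.005)` with gradients from autograd instead of a hand-written optimizer.

```python
import math
from typing import List


class Adam:
    """Minimal Adam optimizer (Kingma and Ba 2014) over a flat list of parameters.

    beta1, beta2 and eps are assumed defaults; the paper only states lr = 0.005.
    """

    def __init__(self, n_params: int, lr: float = 0.005,
                 beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params  # first-moment (mean) estimates
        self.v = [0.0] * n_params  # second-moment (uncentered variance) estimates
        self.t = 0                 # number of steps taken

    def step(self, params: List[float], grads: List[float]) -> List[float]:
        """Return updated parameters given the gradient of the loss."""
        self.t += 1
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # bias correction
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            new_params.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return new_params
```

On a toy loss (φ − 1)² starting from φ = 0, the first step moves φ by almost exactly the learning rate, reflecting Adam's roughly gradient-scale-invariant step size.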

3.1 Results for the Semeion dataset

First, we classified the \(8\times 8\) dimensionally reduced Semeion dataset using both a single QNN and a two-QNN model. For the two-QNN model, we partitioned the features so that each QNN processed four rows of the data. The results of both models under fivefold cross-validation are shown in Table 1. The two-QNN model outperformed the single-QNN model in terms of both accuracy and loss, which underscores the potential benefits of our distributed QNN approach over a single QNN.

Encouraged by this result, we examined the scalability of our approach by analyzing how the performance of distributed QNNs changes with more partitions. We therefore extended our exploration to the classification of the original \(16\times 16\) Semeion dataset, using four and eight QNNs. In these setups, each QNN processes four or two rows of features, respectively. While both the four-QNN and eight-QNN models performed effectively, the eight-QNN model had a higher loss than the four-QNN model. Since the parameters are optimized to minimize the loss, this result implies that distributing excessively partitioned features across multiple QNNs decreases performance.
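The row-wise partitioning used in these experiments can be sketched as follows; the function name is hypothetical. Splitting an image into \(n_\text {qc}\) blocks of consecutive rows gives 32 features per QNN for two QNNs on \(8\times 8\) data, 64 for four QNNs and 32 for eight QNNs on \(16\times 16\) data, and 56 for 14 QNNs on \(28\times 28\) MNIST data, consistent with the 64- and 32-feature cases used to choose the \(\pi /8\) and \(\pi /4\) angle ranges.

```python
from typing import List

Image = List[List[float]]


def partition_rows(img: Image, n_qc: int) -> List[List[float]]:
    """Split an image into n_qc blocks of consecutive rows and flatten each block.

    Block j holds rows [j*r, (j+1)*r) with r = n_rows / n_qc; it is the feature
    vector x_{i,j} encoded into the jth QNN.
    """
    n_rows = len(img)
    if n_rows % n_qc != 0:
        raise ValueError("number of rows must be divisible by n_qc")
    r = n_rows // n_qc
    return [[v for row in img[j * r:(j + 1) * r] for v in row] for j in range(n_qc)]
```

For example, `partition_rows(img, 2)` on an 8 × 8 image returns two flat lists of 32 values, the first holding rows 1 to 4 and the second rows 5 to 8.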

In summary, our results indicate that encoding all features into a single QNN is not always the best approach, and that distributing excessively partitioned features across multiple QNNs degrades overall performance.

3.2 Results for the MNIST dataset

We further validated scaling to more partitions by classifying the MNIST handwritten digit dataset (LeCun et al. 2010), which contains 60000 training samples and 10000 test samples representing the digits 0 to 9. These samples have a higher dimensionality of \(28 \times 28\), with feature values ranging from 0 to 255. As preprocessing, we normalized the values to the range between 0 and \(\pi /4\) for angle encoding on single-qubit rotation gates, consistent with the preprocessing used in our Semeion experiments. We distributed the equally partitioned features across 14 QNNs, i.e., encoding two rows of features into each QNN, and used the same set of observables. As shown in Table 2, our distributed approach achieved over \(96\%\) accuracy on the test data, suggesting that its performance did not degrade substantially despite the larger number of partitions. In addition, our distributed approach operates accurately at a scale that is infeasible to simulate classically with a single QNN. This success highlights the potential of our distributed QNN approach as an effective and scalable architecture for practical quantum machine learning, and suggests that distributed QNNs could play an important role in advancing the field.
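The reported test accuracy can be computed directly from the aggregated outputs \(\varvec{y}_i\). The sketch below (hypothetical helper names) uses the fact that softmax preserves the ordering of its inputs, since each component is proportional to \(\exp (y_i^{(k)})\), so the predicted class is simply the index of the largest component of \(\varvec{y}_i\) and the softmax can be skipped at inference time.

```python
from typing import Sequence


def predict(y: Sequence[float]) -> int:
    """Predicted class = index of the largest aggregated output y_i^(k).

    argmax(y) == argmax(softmax(y)) because softmax preserves ordering.
    """
    return max(range(len(y)), key=lambda k: y[k])


def accuracy(ys: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Fraction of samples whose predicted class matches the integer label."""
    correct = sum(1 for y, t in zip(ys, labels) if predict(y) == t)
    return correct / len(labels)
```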

4 Conclusion

In this paper, we have proposed a novel distributed QNN approach that encodes partitioned features across multiple QNNs that are shallower and narrower than a single large QNN. By using the sum of the expectation values from these independent QNNs, our distributed QNNs achieved better performance than a single QNN on the reduced Semeion dataset. However, our results imply that an excessive number of partitions reduces performance. Nevertheless, we achieved high accuracy in classifying the large MNIST dataset, a task that is challenging to simulate classically with a single large QNN. Importantly, our distributed QNN approach provides practical advantages that are compatible with current quantum devices. Specifically, it reduces the qubit requirements and circuit depth of each individual QNN, and these shallower and narrower circuits may help mitigate the vanishing gradient problem during optimization compared with a single large QNN.

In future work, we will enhance our approach by incorporating quantum communication between QNNs to explore a quantum advantage in distributed quantum machine learning. We are also interested in ensembling the outputs of multiple quantum circuits that encode differently partitioned features, which may further improve performance, and in encoding multichannel images, which could broaden the applicability of distributed QNNs. Overall, our results highlight the promise of distributed quantum algorithms for mitigating hardware restrictions and pave the way for realizing the possibilities of near-term quantum machine learning applications.

Data and code availability

Our Jupyter notebooks with our results are available on GitHub (https://github.com/puyokw/DistributedQNNs).

References

Abbas A, Sutter D, Zoufal C, Lucchi A, Figalli A, Woerner S (2021) The power of quantum neural networks. Nat Comput Sci 1(6):403–409

Bravyi S, Smith G, Smolin JA (2016) Trading classical and quantum computational resources. Phys Rev X 6(2):021043

Cerezo M, Sone A, Volkoff T, Cincio L, Coles PJ (2021) Cost function dependent barren plateaus in shallow parametrized quantum circuits. Nat Commun 12(1):1791

Cerezo M, Arrasmith A, Babbush R, Benjamin SC, Endo S, Fujii K, McClean JR, Mitarai K, Yuan X, Cincio L et al (2021) Variational quantum algorithms. Nat Rev Phys 3(9):625–644

Du Y, Qian Y, Tao D (2021) Accelerating variational quantum algorithms with multiple quantum processors. arXiv:2106.12819

Farhi E, Neven H (2018) Classification with quantum neural networks on near term processors. arXiv:1802.06002

Gilboa D, McClean JR (2023) Exponential quantum communication advantage in distributed learning. arXiv:2310.07136

Haug T, Self CN, Kim M (2023) Quantum machine learning of large datasets using randomized measurements. Mach Learn: Sci Technol 4(1):015005

Havlíček V, Córcoles AD, Temme K, Harrow AW, Kandala A, Chow JM, Gambetta JM (2019) Supervised learning with quantum-enhanced feature spaces. Nature 567(7747):209–212

Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv:1412.6980

LeCun Y, Cortes C, Burges C (2010) MNIST handwritten digit database. AT&T Labs. http://yann.lecun.com/exdb/mnist

Marshall SC, Gyurik C, Dunjko V (2023) High dimensional quantum machine learning with small quantum computers. Quantum 7:1078

McClean JR, Boixo S, Smelyanskiy VN, Babbush R, Neven H (2018) Barren plateaus in quantum neural network training landscapes. Nat Commun 9(1):4812

Mitarai K, Negoro M, Kitagawa M, Fujii K (2018) Quantum circuit learning. Phys Rev A 98(3):032309

Pira L, Ferrie C (2023) An invitation to distributed quantum neural networks. Quantum Mach Intell 5(2):1–24

Schuld M, Killoran N (2019) Quantum machine learning in feature Hilbert spaces. Phys Rev Lett 122(4):040504

Schuld M, Sinayskiy I, Petruccione F (2014) The quest for a quantum neural network. Quantum Inf Process 13(11):2567–2586

Schuld M, Sweke R, Meyer JJ (2021) Effect of data encoding on the expressive power of variational quantum-machine-learning models. Phys Rev A 103(3):032430

Semeion Handwritten Digit (2008) UCI Machine Learning Repository. https://doi.org/10.24432/C5SC8V

Sim S, Johnson PD, Aspuru-Guzik A (2019) Expressibility and entangling capability of parameterized quantum circuits for hybrid quantum-classical algorithms. Adv Quantum Technol 2(12):1900070

Wang S, Fontana E, Cerezo M, Sharma K, Sone A, Cincio L, Coles PJ (2021) Noise-induced barren plateaus in variational quantum algorithms. Nat Commun 12(1):6961

Wang H, Ding Y, Gu J, Li Z, Lin Y, Pan DZ, Chong FT, Han S (2022) QuantumNAS: noise-adaptive search for robust quantum circuits. In: The 28th IEEE International Symposium on High-Performance Computer Architecture (HPCA-28)

Wu J, Tao Z, Li Q (2022) Scalable quantum neural networks for classification. In: 2022 IEEE International Conference on Quantum Computing and Engineering (QCE), IEEE, pp 38–48

Funding

Open Access funding provided by The University of Tokyo. This work is supported by JSPS KAKENHI Grant Number JP23K19954.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: The detailed model description used in numerical experiments

Here, we describe the details of the single QNN and distributed QNNs used in Section 3. As mentioned in Section 3, a single QNN and the multiple QNNs differ in the number of qubits and encoding layers, which depend on the number of features. Below, we provide a complete description of the QNN architectures.

Our QNN architecture (Fig. 2) consists of single-qubit rotation gates RX and RY, and CZ gates. Our QNN architecture is characterized by alternating layers of a parameterized unitary transformation \(U_\phi \) and a data encoding transformation \(U_x\).

The data encoding transformation \(U_x\) encodes the features into the angles of n RX gates and n RY gates, one of each acting on the ith qubit (\(i=1,\ldots ,n\)), where n is the number of qubits; a single \(U_x\) therefore encodes up to 2n features. Then, n CZ gates act on the ith and \((i \mod n)+1\)th qubits (CZ is symmetric, so the choice of control and target does not matter). The total number of \(U_x\) is \(\lceil \text {n}\_\text {features}/(2n) \rceil \), where \(\text {n}\_\text {features}\) denotes the number of features for a single QNN or the number of partitioned features for each QNN; for example, each of the two QNNs in Fig. 3 encodes 32 features on 8 qubits with two \(U_x\).

The parameterized transformation \(U_\phi \) consists of 20 layers of a unitary transformation U, each consisting of n RX gates and n RY gates acting on the ith qubit, and n CZ gates with the ith qubit as the control and the \((i \mod n)+1\)th qubit as the target. The total number of \(U_\phi \) is \(\lceil \text {n}\_\text {features}/(2n) \rceil +1\). Note that we excluded the CZ gates of the last layer, denoted \(U_\text {ent}\), just before the measurement. In addition, the parameters in \(U_\phi \) are initialized with uniform random values between 0 and \(\pi \).
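The block and parameter counts can be checked with a few lines of arithmetic. This sketch assumes that each \(U_x\) consumes 2n features (one angle per RX gate and per RY gate) and that each of the 20 layers of \(U_\phi \) holds one RX and one RY angle per qubit; the helper `circuit_counts` is hypothetical.

```python
import math
from typing import Dict


def circuit_counts(n_qubits: int, n_features: int, layers_per_u_phi: int = 20) -> Dict[str, int]:
    """Block and trainable-angle counts for one QNN in the Appendix layout (hypothetical helper).

    Assumes each U_x encodes 2 * n_qubits features (n RX and n RY angles) and each
    trainable layer of U_phi holds one RX and one RY angle per qubit.
    """
    n_ux = math.ceil(n_features / (2 * n_qubits))
    n_uphi = n_ux + 1  # U_phi before, between and after the U_x blocks
    per_uphi = layers_per_u_phi * 2 * n_qubits
    return {
        "U_x": n_ux,
        "U_phi": n_uphi,
        "params_per_U_phi": per_uphi,
        "params_total": n_uphi * per_uphi,
    }
```

For the two-QNN example below (8 qubits, 32 features per QNN), this gives two \(U_x\) blocks and three \(U_\phi \) blocks of 320 angles each, i.e., 960 trainable angles per QNN, matching the indices \(\phi _{1:320}\), \(\phi _{321:640}\) and \(\phi _{641:960}\).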

As an example, Fig. 3 shows the quantum circuit used for classifying the \(8\times 8\) reduced Semeion dataset with two QNNs. Following the description above, we apply \(U_\phi (\phi _{1:320})\) to \(|{0}\rangle ^{\otimes 8}\), then \(U_x(x_{1:16})\) to encode the first 16 features, \(U_\phi (\phi _{321:640})\) for transformation and entanglement, \(U_x(x_{17:32})\) to encode the next 16 features, and finally \(U_\phi (\phi _{641:960})\) without the last CZ transformation \(U_\text {ent}\) just before the measurements. We then measure the expectation values \(\langle {\psi _f}|\, X_1 |{\psi _f}\rangle ,\ldots ,\langle {\psi _f}|\, X_5 |{\psi _f}\rangle ,\langle {\psi _f}|\, Z_1 |{\psi _f}\rangle ,\ldots ,\langle {\psi _f}|\, Z_5 |{\psi _f}\rangle \), where \(|{\psi _f}\rangle \) represents the quantum state just before the measurements. The other QNN has the same architecture with independent parameters and encodes the remaining 32 features.
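To make the circuit structure concrete, the following is a small pure-Python state-vector sketch of one QNN in this layout. It is not the torchquantum implementation used in the experiments. Several details are assumptions: within a \(U_x\) block the first n features drive the RX gates and the next n drive the RY gates, a short final feature block is zero-padded, qubit k is stored in bit k−1 of the basis index, and the outputs are ordered as \(\langle X_1\rangle ,\ldots ,\langle X_5\rangle ,\langle Z_1\rangle ,\ldots ,\langle Z_5\rangle \).

```python
import math
from typing import List

State = List[complex]
Matrix = List[List[complex]]


def rx(theta: float) -> Matrix:
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -1j * s], [-1j * s, c]]


def ry(theta: float) -> Matrix:
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]


def apply_1q(state: State, q: int, m: Matrix) -> None:
    """Apply a 2x2 gate to qubit q (bit q of the basis index), in place."""
    bit = 1 << q
    for i in range(len(state)):
        if (i & bit) == 0:
            a, b = state[i], state[i | bit]
            state[i] = m[0][0] * a + m[0][1] * b
            state[i | bit] = m[1][0] * a + m[1][1] * b


def cz_ring(state: State, n: int) -> None:
    """CZ on qubits (q, (q + 1) mod n) for all q; CZ is symmetric in its two qubits."""
    for q in range(n):
        mask = (1 << q) | (1 << ((q + 1) % n))
        for i in range(len(state)):
            if (i & mask) == mask:
                state[i] = -state[i]


def rotations(state: State, n: int, angles: List[float]) -> None:
    """RX(angles[q]) then RY(angles[n + q]) on each qubit q."""
    for q in range(n):
        apply_1q(state, q, rx(angles[q]))
        apply_1q(state, q, ry(angles[n + q]))


def u_phi(state: State, n: int, params: List[float], layers: int, last_cz: bool) -> None:
    """Trainable block: `layers` x (RX, RY, CZ ring), optionally without the final CZ ring."""
    for layer in range(layers):
        rotations(state, n, params[2 * n * layer:2 * n * (layer + 1)])
        if layer < layers - 1 or last_cz:
            cz_ring(state, n)


def u_x(state: State, n: int, feats: List[float]) -> None:
    """Encoding block: up to 2n features as RX/RY angles, then a CZ ring."""
    padded = list(feats) + [0.0] * (2 * n - len(feats))  # zero-pad (assumption)
    rotations(state, n, padded)
    cz_ring(state, n)


def expectations(state: State, n_obs: int) -> List[float]:
    """[<X_1>, ..., <X_n_obs>, <Z_1>, ..., <Z_n_obs>]."""
    xs, zs = [], []
    for q in range(n_obs):
        bit = 1 << q
        xs.append(sum(2 * (state[i].conjugate() * state[i | bit]).real
                      for i in range(len(state)) if (i & bit) == 0))
        zs.append(sum(abs(a) ** 2 * (1 if (i & bit) == 0 else -1)
                      for i, a in enumerate(state)))
    return xs + zs


def qnn(features: List[float], params: List[float], n: int,
        layers: int = 20, n_obs: int = 5) -> List[float]:
    """U_phi, then (U_x, U_phi) per feature block; no CZ ring right before measuring."""
    if n < n_obs:
        raise ValueError("need at least n_obs qubits")
    n_ux = math.ceil(len(features) / (2 * n))
    block = 2 * n * layers  # trainable angles per U_phi
    if len(params) != (n_ux + 1) * block:
        raise ValueError("expected (n_ux + 1) * 2 * n * layers parameters")
    state: State = [0j] * (2 ** n)
    state[0] = 1 + 0j  # |0...0>
    for b in range(n_ux):
        u_phi(state, n, params[b * block:(b + 1) * block], layers, last_cz=True)
        u_x(state, n, features[2 * n * b:2 * n * (b + 1)])
    u_phi(state, n, params[n_ux * block:], layers, last_cz=False)
    return expectations(state, n_obs)
```

Calling `qnn(features, params, n=8)` with 32 features and 960 angles reproduces the block structure of Fig. 3 and returns ten expectation values. Pure-Python simulation scales as \(2^n\) per gate, so this is only meant for checking small cases, not for training.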

Fig. 2 Our QNN architecture. Details are described in the Appendix.

Fig. 3 Example of our QNN architecture used for classifying the \(8 \times 8\) reduced Semeion dataset with two QNNs. Note that we excluded the CZ transformations \(U_\text {ent}\) just before the measurement, as mentioned in the Appendix.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kawase, Y. Distributed quantum neural networks via partitioned features encoding. Quantum Mach. Intell. 6, 15 (2024). https://doi.org/10.1007/s42484-024-00153-4

DOI: https://doi.org/10.1007/s42484-024-00153-4