Abstract

The combination of machine vision and grinding robots can be visualized as a collaboration between human eyes and limbs to achieve a deep integration between external perception and execution actions. This combination will give the grinding robot more operability and flexibility, which will enable it to better realize the purpose of replacing humans with machines. In response to the demand for flexible grinding of titanium surface edges proposed by a titanium manufacturer, this paper conducts an in-depth study on the prototype system of vision-guided grinding robots and related applications. Firstly, this study analyzes the shortcomings of the existing robotic regrinding process and achieves the improvement of the regrinding process by introducing machine vision technology. Subsequently, this study further utilizes machine vision and image processing algorithms to achieve high-quality recognition and high-precision positioning of metal surface edges. Then, the D–H parameter model of the regrinding robot is established, and the planning and simulation of the regrinding trajectory is carried out using the position information of the identified regrinding edges. Finally, the simulation-validated grinding trajectory is introduced into the grinding robot, and the effectiveness of the proposed scheme is verified by actual grinding experiments.

Article Highlights

- The existing robotic regrinding process for metal surface edges is analyzed, and an improved regrinding process that integrates a vision system is put forward.
- Two key techniques are studied: localization of grinding edges based on machine vision, and kinematics modeling with its application to grinding trajectory planning.
- The method is tested and verified on an experimental titanium product provided by our cooperative enterprise, and the results show that it meets the production requirements of the enterprise.


1 Introduction

Polishing the surface or edge of metal products is an indispensable part of manufacturing. With the development of the manufacturing industry and rising labor costs, traditional manual polishing has struggled to keep pace with the current requirements for intelligent upgrading of the manufacturing industry. Consequently, using industrial robots to assist or even replace manual grinding is currently a mainstream development trend.

Grinding has gradually become a common area for industrial robots, and many manufacturers have launched more mature products for the market. However, the existing products are still far from being completely automated and flexible due to the single specification of the grinding object, the fact that the fixed position of the grinding object cannot be changed at will, and the need to frequently switch or even create new grinding programs when grinding different objects. In view of this, it is necessary to install “eyes” for industrial robots, allowing them to perceive the location of external grinding objects and other information like humans, and realize the autonomous planning and automatic generation of grinding trajectories accordingly, so as to promote the operation flexibility of grinding robots and their adaptability in the face of different grinding objects, and form a good foundation for the automation and intelligent transformation of grinding operation processes. This paper focuses on the key technology of vision-guided grinding robot and its application, which is an effective implementation of this idea. Vision-guided grinding robots are mainly involved in two research areas: machine vision inspection and grinding robots.

The essence of machine vision is to achieve the correlation and conversion of two-dimensional image information and three-dimensional spatial position information by collecting images of target objects and combining them with the calibration results of vision systems. At present, machine vision inspection technology has been widely used in education, medicine, agriculture, machinery manufacturing, cell phone screen inspection and many other fields, and many valuable results have emerged. For example, Singh et al. [1] proposed a framework for automatic detection of surface defects based on machine vision and convolutional neural networks, which solved the problem of detecting common surface defects in centerless grinding of tapered rollers and helps traditional labor-intensive industries improve the automation of the inspection process. Yang et al. [2] proposed a surface defect detection method for steel rails based on machine vision and the YOLO v2 deep learning network model, which can accurately detect and locate defects with an average accuracy of 97.11%, showing good robustness. Ding et al. [3] developed a laser-based machine vision measurement system, which can measure the 3D contours of deformed surfaces and perform deformation displacement analysis based on the measured contour data. Liu [4] proposed a physical education teaching evaluation method based on artificial intelligence and machine vision to address the inefficient and error-prone human evaluation in physical education; it assists and supports physical education teachers by using artificial intelligence algorithms for data analysis and machine vision to identify the teaching process. Ansari et al. [5] proposed a visual inspection method for rice seed variety purity, which uses machine vision combined with multivariate analysis based on color, morphology and texture features to detect rice variety purity, laying a good foundation for the subsequent construction of an automated rice seed variety purity inspection system or even an automated germination rate monitoring system. Jian et al. [6] proposed an improved algorithm for cell phone screen image defect identification and segmentation detection to address the misalignment of cell phone screen images caused by vibration, and further developed an automated detection system that can effectively detect various types of defects on cell phone screens. Zhong et al. [7] proposed a machine vision-based 3D measurement method for structured light, which uses machine learning for structured light 3D measurement, reduces the complexity of the measurement operation and the computation time, and makes real-time measurement possible.

In contrast, the essence of a grinding robot is to add a floating spindle and abrasive to the end of an industrial robot, and then rely on the drive of each joint of the robot to move the abrasive along the preset trajectory of the workpiece surface, and then complete the grinding operation. As an important field of industrial robot applications, grinding has been thoroughly studied by many scholars. For example, Jeon et al. [8] developed an automatic grinding robot system for engine cylinder liner oil groove machining, which can adjust its position by itself according to various types of oil grooves. Ge [9] established a robotic weld in-line grinding system incorporating laser vision sensors for off-line grinding of weld seams with uneven surface quality. Wan et al. [10] combined machine vision technology with industrial robots to design a robotic system from loading to grinding, which solved the problems of difficult workpiece positioning and complicated trajectory schematic teaching during grinding and realized the automated operation from workpiece loading, grinding to discharging. Guo et al. [11] proposed a robot grinding motion planning method, including robot motion planning and weld grinding, and verified the effectiveness of the proposed method by grinding experiments of pipe fitting welds. Xu et al. [12] designed and fabricated a novel prototype of a wheeled pipe polishing and grinding robot, which consists of a moving structure, a positioning structure and a polishing structure, and has the advantages of compact structure, adaptability and high grinding efficiency. Wang et al. [13] proposed an improved whale optimization algorithm and applied it to the optimization of the grinding trajectory, which has a positive effect on reducing the impact effects in grinding and improving the grinding smoothness.

The combination of machine vision and industrial robots can be visualized as the collaboration between human eyes and limbs to achieve a deep integration between external perception and execution of actions. This combination also gives robots more intelligent characteristics, enabling them to better realize the purpose of replacing humans with machines. For example, material sorting robots [14], firefighting robots [15], and agricultural harvesting robots [16] that apply machine vision have shown good performance in their respective applications. At the same time, several research works on vision systems for grinding robots provide a good foundation for the research of this paper. Diao et al. [17] proposed a 3D vision system that can be easily integrated into an intelligent grinding system and is suitable for industrial sites. Zhao et al. [18] proposed a vision-based grinding strategy for a mobile robot, and this strategy was shown to support reconstruction of the workpiece surface and generation of the grinding path from measured point clouds. Wan et al. [19] set up a grinding workstation incorporating machine vision, and a case study showed that this workstation can determine the object position and target the robotic grinding trajectory according to the shape of the burr on the surface of an object. The works above indicate, to a certain extent, that the integrated application of machine vision technology and grinding robots is technically feasible and potentially advantageous.

Based on the above analysis, this paper conducts an in-depth study on the vision-guided grinding robot prototype system and related applications for a titanium surface edge flexible grinding demand proposed by a titanium manufacturer. Firstly, this study analyzes the shortcomings of the existing robotic regrinding process and achieves the improvement of the regrinding process by introducing machine vision technology. Subsequently, this study further utilizes machine vision and image processing algorithms to achieve high-quality recognition and high-precision positioning of metal surface edges. Then, the D–H parameter model of the regrinding robot is established, and the planning and simulation of the regrinding trajectory is carried out using the position information of the identified regrinding edges. Finally, the simulation-validated grinding trajectory is introduced into the grinding robot, and the effectiveness of the proposed scheme is verified by actual grinding experiments.

2 Analysis of existing robots’ regrinding process and its improvement

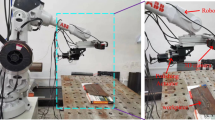

Nowadays, industrial robots are used in many grinding operations, as shown in Fig. 1. The use of grinding robots has improved the efficiency of grinding operations to a certain extent. However, in general, the control of the position and attitude of the grinding head at the end of the robot is still mainly achieved with the help of a robot FlexPendant, which still lacks the ability to automatically plan the grinding process by matching specific objects.

At present, the process flow of most grinding robots is not flexible enough, as shown in Fig. 2. A grinding object of a given specification must first be fixed in the same position; the corresponding program is then established according to the requirements of the grinding operation, and this program generates the grinding trajectory that drives the robot to complete the operation. The area of the grinding teeth of the grinding head is usually large enough to ensure that the regrinding operation is completed even if there is a slight deviation in the plate position when fixing. However, the limitations of this grinding process are significant, as different grinding targets require different grinding programs to be established in advance. If a wide range of metal surface edges need to be reground in practice, the operator has to frequently switch or even create new regrinding programs, which makes the regrinding robot inconvenient to control and operate. In addition, although industrial robots are used in grinding operations, the application scenarios remain limited because manual teaching to generate grinding programs takes up a large proportion of the time and the specifications of the grinding objects are relatively uniform, which hinders the wider adoption of robotic grinding systems.

In this study, a vision system is added to the existing grinding robot, and the position coordinates of the edge to be ground on the metal surface are obtained through the vision system. On this basis, combined with the inverse kinematics analysis of the robot, the planning and simulation of the robot grinding trajectory are realized. The introduction of the machine vision system allows the grinding robot to automatically detect and locate the spatial position of the grinding object, so the specifications of the grinding object no longer need to be kept constant. Nor is it necessary to fix grinding objects of the same specification in the same position, which makes the grinding robot much more flexible and adaptable. The improved robot grinding process is shown in Fig. 3.

Taking the grinding of metal surface edges as an example, compared with the existing robot grinding process, the biggest difference of the improved robot grinding process is mainly reflected in the following two aspects. Firstly, the improved grinding robot can use machine vision technology to identify and locate the grinding edge, so as to detect and extract the spatial position information of the grinding edge. Secondly, the robot can plan and simulate the grinding trajectory based on the grinding edge position information obtained from vision detection. In summary, these two aspects are also the core content of this paper’s research.

3 Recognition and localization of grinding edges based on machine vision

In a vision-guided metal edge grinding robot system, the main function of the vision part is to identify and locate the position of the grinding edge. This can be achieved by first calibrating the vision camera and the robot and establishing a conversion relationship from the vision image to the spatial position of the grinding object and then to the spatial position under the grinding robot. On this basis, the image acquisition, pre-processing and edge extraction of the grinding object are performed to obtain high-quality edge profile information of the grinding object. Finally, using the system calibration results, the collected edge information of the regrind object is transformed into spatial position information that can be understood by the industrial robot.

3.1 Vision camera calibration

The imaging of an industrial camera is determined by the geometric model of imaging, which essentially uses the pinhole imaging principle to map a point in the scene onto the image plane through the camera lens. Therefore, the imaging process of the camera is also called a projective geometric transformation.

Suppose that the true position of any point P in space in the world coordinate system is represented by the coordinates pw (Xw, Yw, Zw). Its position in the camera coordinate system generally differs from pw, because the origin of the camera coordinate system is the camera optical center, the optical axis is taken as the Z-axis, and the projection direction of the object is the positive Z-axis direction. When using the vision system for spatial localization, the first problem to be solved is therefore to convert the position of the photographed object from the world coordinate system to the camera coordinate system. If the position coordinates of the point P in the camera coordinate system are defined as p (X, Y, Z), then the conversion relationship between p and pw is as shown in Eq. (1).
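Written in homogeneous coordinates with the symbols defined in this section, the relationship takes the standard rigid-body form (a reconstruction from the surrounding definitions rather than a verbatim copy of the original typesetting):

\[
\begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}
=
\begin{bmatrix} R & T \\ 0^{\mathsf{T}} & 1 \end{bmatrix}
\begin{bmatrix} X_{w} \\ Y_{w} \\ Z_{w} \\ 1 \end{bmatrix}
=
M_{1}
\begin{bmatrix} X_{w} \\ Y_{w} \\ Z_{w} \\ 1 \end{bmatrix}
\tag{1}
\]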

where R represents a 3 × 3 rotation matrix, T represents a 3 × 1 translation vector, and M1, composed of the two, represents the external parameters of the camera. M1 represents the coordinate transformation relationship between the world coordinate system and the camera coordinate system.

A two-dimensional planar image can be visually obtained by using a camera to shoot images, and point P is presented as a two-dimensional point on the image. Generally, the pixel coordinate system can be established by taking the upper left corner of the image as the origin and the two adjacent sides perpendicular to each other starting from the upper left corner of the image as the coordinate axis. If the pixel coordinate of P is known to be p0 (u, v), then the transformation relationship between p0 and p is satisfied as shown in Eq. (2).
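Under the pinhole model and with the symbols defined in this section, the projection is commonly written as follows (a reconstruction that assumes the usual 3 × 4 form of the intrinsic matrix, so that it can be chained with the 4 × 4 matrix M1):

\[
Z \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
=
\begin{bmatrix}
\dfrac{f}{d_{x}} & 0 & u_{0} & 0 \\
0 & \dfrac{f}{d_{y}} & v_{0} & 0 \\
0 & 0 & 1 & 0
\end{bmatrix}
\begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}
=
M_{2}
\begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}
\tag{2}
\]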

where f represents the camera focal length; dx and dy denote the physical scale size of a single pixel point on a two-dimensional image, along the direction of two coordinate axes, respectively; (u0, v0) denotes the coordinates of the intersection of the camera optical axis and the image plane in the pixel coordinate system. M2 is an internal parameter of the camera, which represents the conversion relationship from pixel coordinates to camera coordinates.
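As a numerical illustration, the two mappings can be applied one after the other. The sketch below is a minimal pure-Python example; the function name `world_to_pixel` and every numeric value are hypothetical and are not the calibration results of this paper.

```python
def world_to_pixel(pw, R, T, f, dx, dy, u0, v0):
    """Map a world point to pixel coordinates: extrinsic step, then intrinsic step."""
    # Extrinsic step: rigid transform into the camera frame, p = R * pw + T
    X, Y, Z = (
        sum(R[i][j] * pw[j] for j in range(3)) + T[i] for i in range(3)
    )
    if Z <= 0:
        raise ValueError("point is not in front of the camera")
    # Intrinsic step: perspective division, then pixel scaling and principal-point offset
    u = (f / dx) * X / Z + u0
    v = (f / dy) * Y / Z + v0
    return u, v


# Hypothetical values chosen only for illustration.
R_identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
T_example = [0.0, 0.0, 500.0]  # world origin lies 500 mm along the optical axis
u, v = world_to_pixel([10.0, -20.0, 0.0], R_identity, T_example,
                      f=8.0, dx=0.005, dy=0.005, u0=640.0, v0=480.0)
print(u, v)
```

With an identity rotation the point is simply shifted along the optical axis, so the pixel offset from the principal point is the metric offset scaled by f/(dx·Z).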

By associating Eq. (1) with Eq. (2), the conversion relationship between p0 and pw can be obtained as follows:
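Chaining the extrinsic and intrinsic mappings with the symbols already defined gives (a reconstruction from the surrounding definitions):

\[
Z \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
=
M_{2} M_{1}
\begin{bmatrix} X_{w} \\ Y_{w} \\ Z_{w} \\ 1 \end{bmatrix}
\tag{3}
\]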

The purpose of vision camera calibration is to obtain the conversion matrix from the world coordinate system to the pixel coordinate system, i.e., the internal and external parameters of the camera. The most widely used method for camera calibration is the checkerboard calibration method proposed by Zhang [20]. Zhang's method uses images of the calibration target in different orientations, as shown in Fig. 4, to solve for the internal and external parameters. It defines the plane where the checkerboard is located as the Xw − Yw plane of the world coordinate system, takes a specific corner point extracted from the checkerboard as the origin Ow of the world coordinate system, and takes the two sides of the checkerboard passing through Ow as the Xw and Yw axes. Since the size of the checkerboard is known, the coordinates of all corners on the checkerboard in the world coordinate system can be obtained, and the Zw values of all corners are equal to 0. Subsequently, the pixel coordinates of all checkerboard corner points can be extracted with a corner detection algorithm.

After the pixel coordinates of the corner points and the corresponding world coordinate values are obtained, a mathematical relationship model between the two can be built using Eq. (3). From Eq. (2), it can be seen that the camera intrinsic parameters represent the inherent properties of the camera and do not depend on the placement orientation of the calibration target. Thus, multiple sets of correspondences can be established by taking images of the calibration target in different orientations, and a suitable M2 can be solved by nonlinear least squares. Based on this, M1 can then be obtained, again by nonlinear least squares. It is worth noting that the external parameters represent the spatial position conversion relationship between the world coordinate system and the camera coordinate system, so once the external parameters are solved, the camera position must not be changed at will; otherwise M1 needs to be re-calibrated.

Since Zhang's calibration method is mature and widely used, it is not described in detail here.

3.2 Hand-eye calibration of robot

The vision camera calibration enables the conversion from pixel coordinates to world coordinates. However, the grinding operation is driven by the robot, so the edge position information of the metal surface to be ground must also be converted into the robot coordinate system in order to link it with the robot operation system.

In practice, the relationship between the camera and the robot is divided into two types, “eye-in-hand” and “eye-to-hand”. The former means that the camera is mounted directly on the robot, while the latter means that the camera is mounted at a fixed position outside the robot. Both configurations have their advantages and disadvantages. Considering the stability and safety of the robot grinding operation, this paper adopts the eye-to-hand configuration.

The height of the grinding table and the grinding object are easy to measure, and the imaging plane is placed parallel to the metal surface to be polished. Therefore, this paper uses a monocular camera acting on a 2D plane and obtains the conversion relationship between image coordinates and robot end coordinates by means of an affine transformation, as shown in Eq. (4).
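With the symbols defined in this subsection, the planar transformation can be written as (a reconstruction from the surrounding definitions):

\[
\begin{bmatrix} x' \\ y' \end{bmatrix}
=
R' \begin{bmatrix} x \\ y \end{bmatrix} + C
\tag{4}
\]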

where p′ (x′, y′) represents the coordinates of point P in space ignoring the height information under the robot coordinate system; p1 (x, y) represents the image coordinates of point P on the two-dimensional plane image corresponding to the actual physical scale, which satisfies the conversion relation with the pixel coordinates as shown in Eq. (5); R′ represents a 2 × 2 rotation matrix; while C represents a 2 × 1 translation vector.

It can be seen from Eq. (4) that the coordinates of at least three non-collinear points are needed to calculate R′ and C and obtain the conversion relationship between the image coordinate system and the robot coordinate system, so as to achieve the purpose of hand-eye calibration. To perform the robot hand-eye calibration, the end of the robot must be moved to at least three points to be calibrated. The coordinate values of these points in the robot base coordinate system are then read from the FlexPendant and combined with the corresponding image coordinate values for calculation. To reduce errors introduced by the robot or other sources, it is common in practice to collect the coordinates of nine points: the end of the robot is moved sequentially to the nine position points on the calibration target, and a more accurate hand-eye calibration result is obtained by averaging. The nine-point calibration target commonly used for robot hand-eye calibration is shown in Fig. 5.
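The nine-point procedure can be sketched in code. The example below is a minimal pure-Python sketch that swaps the averaging described above for an ordinary least-squares fit over all nine correspondences, which is an assumption and not necessarily the authors' computation. The function names and the synthetic point data are hypothetical, and the fitted 2 × 2 block is left unconstrained, so it would also absorb any residual scale.

```python
def solve3(A, b):
    """Solve a 3x3 linear system with Cramer's rule."""
    def det(m):
        return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))
    d = det(A)
    if abs(d) < 1e-12:
        raise ValueError("calibration points are collinear or too few")
    solution = []
    for k in range(3):
        # Replace column k of A with b
        Ak = [[b[r] if c == k else A[r][c] for c in range(3)] for r in range(3)]
        solution.append(det(Ak) / d)
    return solution


def fit_affine(image_pts, robot_pts):
    """Least-squares fit of x' = r11*x + r12*y + c1 and y' = r21*x + r22*y + c2."""
    rows = [(x, y, 1.0) for x, y in image_pts]
    # Normal equations (A^T A) w = A^T b; A^T A is shared by both output axes
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    params = []
    for axis in range(2):
        Atb = [sum(r[i] * p[axis] for r, p in zip(rows, robot_pts)) for i in range(3)]
        params.append(solve3(AtA, Atb))
    (r11, r12, c1), (r21, r22, c2) = params
    return [[r11, r12], [r21, r22]], [c1, c2]


# Synthetic nine-point grid (hypothetical data): a 90 degree rotation plus an offset.
image_pts = [(x, y) for x in (0.0, 50.0, 100.0) for y in (0.0, 50.0, 100.0)]
robot_pts = [(-y + 300.0, x + 100.0) for x, y in image_pts]
R_fit, C_fit = fit_affine(image_pts, robot_pts)
print(R_fit, C_fit)
```

Using all nine points in one fit spreads measurement noise over the whole target, which serves the same purpose as averaging several three-point solutions.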

3.3 Recognition and localization of grinding edges

Once the vision system and robot are calibrated, the grinding object can be fixed on the grinding table for image capture and acquisition. There are no other special requirements for the fixing position of the grinding object, only that the edges of the metal surface to be ground are within the working space of the robot and the field of view of the camera.

Considering that the acquired image may contain noise that affects the accuracy of subsequent edge recognition and positioning, the image must first be filtered to improve its quality. Three commonly used image denoising methods are mean filtering, median filtering and Gaussian filtering. Experimental comparison shows that Gaussian filtering [21] makes the edges of the objects in the image smoother and can blur the image of the metal surface without significantly disturbing the edge information. This better matches the need to refine metal surface edges in this paper, so Gaussian filtering is used to pre-process the captured images. The principle of Gaussian filtering is shown in Eq. (6).
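In the usual notation, and with the symbols defined for this equation, Gaussian filtering reads as follows (a reconstruction that assumes the standard isotropic two-dimensional kernel):

\[
I_{\sigma} = I * G_{\sigma},
\qquad
G_{\sigma}(x, y) = \frac{1}{2\pi\sigma^{2}} \exp\!\left( -\frac{x^{2} + y^{2}}{2\sigma^{2}} \right)
\tag{6}
\]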

where Iσ represents the output image, I represents the input image, * represents the convolution operation, and Gσ represents a two-dimensional Gaussian kernel with standard deviation σ. The standard deviation σ is closely related to the degree of smoothness of Gaussian filtering, which often needs to be determined after several trial selections according to the specific situation when it is used.
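The effect of σ can be explored with a small discrete implementation. The following is a minimal pure-Python sketch, assuming a truncated, renormalized kernel and replicated borders; the kernel size, the border handling and the sample image are illustrative choices, not settings taken from this paper.

```python
import math


def gaussian_kernel(size, sigma):
    """Return a normalized size x size Gaussian kernel (size must be odd)."""
    half = size // 2
    kernel = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
               for x in range(-half, half + 1)]
              for y in range(-half, half + 1)]
    total = sum(sum(row) for row in kernel)
    return [[value / total for value in row] for row in kernel]


def gaussian_filter(image, size=3, sigma=1.0):
    """Convolve a grayscale image (list of rows) with the kernel, replicating borders."""
    h, w = len(image), len(image[0])
    half = size // 2
    kernel = gaussian_kernel(size, sigma)
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for kr in range(size):
                for kc in range(size):
                    # Clamp indices so border pixels reuse their nearest neighbor
                    rr = min(max(r + kr - half, 0), h - 1)
                    cc = min(max(c + kc - half, 0), w - 1)
                    acc += kernel[kr][kc] * image[rr][cc]
            out[r][c] = acc
    return out


# A single bright noise pixel on a uniform background (hypothetical data).
noisy = [[100, 100, 100], [100, 255, 100], [100, 100, 100]]
smoothed = gaussian_filter(noisy, size=3, sigma=1.0)
print(round(smoothed[1][1], 2))
```

A larger σ flattens the kernel and spreads the outlier over more neighbors, which is why σ is normally tuned by trial on representative images.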

To sharpen the distinction between the polished object and the background in the captured image, and thus create favorable conditions for the subsequent extraction of the polished edge, the filtered image also needs to be binarized and then processed with a morphological opening operation.

Binarization compares each pixel with a preset threshold: the pixel is set to 255 when its gray level is higher than the threshold and to 0 otherwise, so that the image becomes strictly black and white. The key to binarization is to determine a reasonable threshold value. If a fixed threshold is used, repeated trial selection and image processing experiments are required, which is inefficient and leaves the choice of threshold somewhat arbitrary. In this paper, Otsu’s binarization method [22] is used instead: it counts the frequency of occurrence of each pixel value and then iterates through all possible thresholds (256 in total) to find the one that gives the best separation. The best threshold is selected according to Eq. (7).

where IA represents the sum of all pixel values greater than the current threshold; IB represents the sum of all pixel values less than the current threshold; SA represents the variance of all pixel values greater than the current threshold; SB represents the variance of all pixel values less than the current threshold; S represents the weighted sum of the variances of the pixel values of the two classes under the division of the current threshold, and the threshold taken as the smallest value of S is the optimal threshold for image binarization.
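The exhaustive search over thresholds can be sketched as follows. This minimal pure-Python example assumes the textbook form of Otsu's criterion, in which the two class variances are weighted by the number of pixels in each class; that weighting, the function names and the sample data are assumptions for illustration and may differ in detail from the criterion used in this paper.

```python
def otsu_threshold(pixels):
    """Return the gray level (0-255) that minimizes the weighted within-class variance."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)

    best_t, best_s = 0, float("inf")
    for t in range(256):
        # Class B holds values <= t, class A holds values > t
        n_b = sum(hist[: t + 1])
        n_a = total - n_b
        if n_a == 0 or n_b == 0:
            continue  # one class is empty, so this is not a valid split
        mean_b = sum(v * hist[v] for v in range(t + 1)) / n_b
        mean_a = sum(v * hist[v] for v in range(t + 1, 256)) / n_a
        var_b = sum(hist[v] * (v - mean_b) ** 2 for v in range(t + 1)) / n_b
        var_a = sum(hist[v] * (v - mean_a) ** 2 for v in range(t + 1, 256)) / n_a
        s = (n_a * var_a + n_b * var_b) / total
        if s < best_s:
            best_t, best_s = t, s
    return best_t


def binarize(pixels, threshold):
    """Set pixels above the threshold to 255 and all others to 0."""
    return [255 if p > threshold else 0 for p in pixels]


# Two well-separated gray-level clusters (hypothetical data).
sample = [10, 12, 11, 13, 200, 205, 198, 202]
t = otsu_threshold(sample)
binary = binarize(sample, t)
print(t, binary)
```

Any threshold lying between the two clusters gives the same minimum here, so the search returns the first such value; on real images the minimum is usually unique.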

After binarization, there may still be a small number of noise points in the image. At this time, the opening operation in morphology can be used to further process the image. Open operation is a filter based on geometric operation, which essentially processes the image by first eroding and then dilating to achieve the purpose of removing isolated noise, as shown in Fig. 6.
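The erode-then-dilate sequence can be illustrated on a small binary image. The sketch below is a minimal pure-Python example that assumes a 3 × 3 square structuring element and treats pixels outside the image as background; both are illustrative choices, not settings taken from this paper.

```python
NEIGHBORHOOD = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)]


def erode(img):
    """Keep a pixel only if its whole 3x3 neighborhood is foreground (255)."""
    h, w = len(img), len(img[0])
    return [[255 if all(0 <= r + dr < h and 0 <= c + dc < w
                        and img[r + dr][c + dc] == 255
                        for dr, dc in NEIGHBORHOOD) else 0
             for c in range(w)] for r in range(h)]


def dilate(img):
    """Set a pixel to foreground if any pixel in its 3x3 neighborhood is foreground."""
    h, w = len(img), len(img[0])
    return [[255 if any(0 <= r + dr < h and 0 <= c + dc < w
                        and img[r + dr][c + dc] == 255
                        for dr, dc in NEIGHBORHOOD) else 0
             for c in range(w)] for r in range(h)]


def opening(img):
    """Morphological opening: erosion followed by dilation."""
    return dilate(erode(img))


# A 3x3 foreground block plus one isolated noise pixel (hypothetical data).
image = [[0] * 7 for _ in range(7)]
for r in range(1, 4):
    for c in range(1, 4):
        image[r][c] = 255
image[5][5] = 255  # isolated noise pixel
cleaned = opening(image)
for row in cleaned:
    print(row)
```

Erosion removes every structure smaller than the structuring element, and the subsequent dilation restores the surviving regions to their original extent, so the block is kept while the isolated pixel disappears.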

After the opening operation, the image can be used for edge recognition. After comparing the commonly used edge detection operators, the Canny operator [23] is selected in this paper to extract the edge contour of the metal surface to be ground. The basic idea of the Canny operator is to calculate the gradient of each pixel along the x-axis and y-axis directions of the image coordinate system, combine the two into a gradient magnitude, keep the local maxima of that magnitude as the edges to be extracted, and finally set the pixel value at non-edge positions to 0 to extract the edge contour.

After the outline of the grinding edge is identified, the pixel coordinates of the grinding edge can be clarified and then substituted into the vision camera and robot hand-eye calibration results. Subsequently, the spatial position information of the grinding edge in the robot’s base coordinate system can be obtained, and the positioning detection of the grinding edge can be realized.

4 Kinematics modeling and its application of grinding trajectory planning

After obtaining information on the position of the grinding edge in the robot’s base coordinate system, the grinding trajectory planning can be performed. The robot grinding trajectory planning is based on the inverse solution of robot kinematics [24, 25]. In other words, the robot end poses are known and the individual joint angles are solved in reverse. The continuous trajectory is calculated using polynomial interpolation to obtain the individual joint angle variations, which provides the basis for the final realization of the regrinding trajectory driven by the joint angle variations.

4.1 Inverse solution analysis of robot D–H model and kinematics

The D–H model [26, 27] is established by the D–H parameter method, a common way of modeling robots that describes the transformations between the joint coordinate systems of the manipulator. A six-axis industrial robot, for example, gives its end effector six degrees of freedom: three translational and three rotational. The principle of the D–H parameter method is to solve the 4 × 4 homogeneous transformation matrix Ai between two adjacent axes, establish the position and attitude relationship between the joints of each axis, and obtain the position and attitude of the end of the robot in the robot base coordinate system. The definition of Ai is shown in Eq. (8):
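Assuming the standard (distal) D–H convention, which is an assumption here because the modified convention orders the elementary transformations differently, the matrix reads:

\[
A_{i} =
\begin{bmatrix}
\cos\theta_{i} & -\sin\theta_{i}\cos\alpha_{i} & \sin\theta_{i}\sin\alpha_{i} & a_{i}\cos\theta_{i} \\
\sin\theta_{i} & \cos\theta_{i}\cos\alpha_{i} & -\cos\theta_{i}\sin\alpha_{i} & a_{i}\sin\theta_{i} \\
0 & \sin\alpha_{i} & \cos\alpha_{i} & d_{i} \\
0 & 0 & 0 & 1
\end{bmatrix}
\tag{8}
\]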

where i represents the joint index, i = 1–6 for 6-axis industrial robots; di, ai, αi, θi are the D–H parameters of the industrial robot, which represent the link offset, link length, twist angle and rotation angle of joint i, respectively.

By multiplying the six homogeneous transformation matrices together, the pose matrix \(A_{0}^{6}\) from the robot base coordinate system to the robot end coordinate system is obtained, which can be expressed by Eq. (9).
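In the usual normal-orientation-approach-position notation, which matches the elements a and p used in the inverse solution, the product can be written as (a reconstruction from the surrounding text):

\[
A_{0}^{6} = A_{1} A_{2} A_{3} A_{4} A_{5} A_{6} =
\begin{bmatrix}
n_{x} & o_{x} & a_{x} & p_{x} \\
n_{y} & o_{y} & a_{y} & p_{y} \\
n_{z} & o_{z} & a_{z} & p_{z} \\
0 & 0 & 0 & 1
\end{bmatrix}
\tag{9}
\]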

The inverse kinematics solution starts from the known pose of the grinding head at the end of the robot and solves the rotation angle θi of each joint in turn through the transformation matrices. Taking θ1 as an example, both sides of Eq. (9) are left-multiplied by \(A_{1}^{-1}\); since the matrices on the two sides are then equal, their corresponding elements are also equal, from which θ1 is obtained as shown in Eq. (10):

where \(m_{1} = a_{y} d_{6} + p_{y}\) and \(n_{1} = a_{x} d_{6} + p_{x}\). In the same way, θ2–θ6 can be solved by similar matrix transformations and element-wise correspondence. Since robot inverse kinematics analysis is a mature method, it is not repeated here.

4.2 Research on robot trajectory planning and simulation

Based on the D–H parameters of the robot, a simulation model of the robot, namely D–H parameter model, can be constructed, as shown in Fig. 7. The essence of this D–H parameter model is to set a series of connecting rods and connect them by joints to form a spatial open motion chain. Taking a six-axis industrial robot as an example, each axis of the six-axis robot is established by setting the joint rotation angle, joint distance, linkage length, linkage rotation angle, and joint type of the robot, and finally connecting each axis to build a robot simulation model.
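Building the open chain amounts to multiplying one link transform per joint. The sketch below is a minimal pure-Python example that assumes the standard D–H convention; the parameter table is a hypothetical two-link planar arm chosen so that the result is easy to check by hand, not the six-axis robot used in this paper.

```python
import math


def dh_matrix(theta, d, a, alpha):
    """Homogeneous transform of one link under the standard D-H convention."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def forward_kinematics(dh_rows, joint_angles):
    """Chain the link transforms; dh_rows holds (d, a, alpha) for each revolute joint."""
    pose = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for (d, a, alpha), theta in zip(dh_rows, joint_angles):
        pose = matmul(pose, dh_matrix(theta, d, a, alpha))
    return pose


# Hypothetical planar arm: two unit-length links, no offsets, no twist.
planar_arm = [(0.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
pose = forward_kinematics(planar_arm, [math.pi / 2, -math.pi / 2])
print(round(pose[0][3], 6), round(pose[1][3], 6))
```

For a six-axis robot the same loop runs over six rows of D–H parameters, and the last column of the resulting matrix gives the position of the robot end in the base coordinate system.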

On the basis of the industrial robot simulation model, the trajectory of the robot end can be planned. When simulating the motion trajectory, the angle, velocity and acceleration at the initial point and the end point must be set, with the velocity and acceleration at both points set to zero. The pose of the robot end at each point is then determined in Cartesian space, the joint angles of the robot are solved from that pose, and finally the joint angle, angular velocity and angular acceleration along the motion trajectory are calculated by polynomial interpolation.
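The interpolation step can be sketched for a single joint. The example below assumes a quintic polynomial, the lowest order that can satisfy the six boundary conditions (angle, velocity and acceleration at both ends); the polynomial order, the function name and the sample values are assumptions for illustration, since the order used in this paper is not stated here.

```python
def quintic_point(q0, q1, duration, t):
    """Angle, angular velocity and angular acceleration at time t for a rest-to-rest move."""
    s = min(max(t / duration, 0.0), 1.0)  # normalized time in [0, 1]
    dq = q1 - q0
    # Quintic blend with zero velocity and zero acceleration at s = 0 and s = 1
    pos = q0 + dq * (10 * s**3 - 15 * s**4 + 6 * s**5)
    vel = dq * (30 * s**2 - 60 * s**3 + 30 * s**4) / duration
    acc = dq * (60 * s - 180 * s**2 + 120 * s**3) / duration**2
    return pos, vel, acc


# Hypothetical joint move from 0 rad to 1 rad in 2 s, sampled every 0.5 s.
samples = [quintic_point(0.0, 1.0, 2.0, 0.5 * k) for k in range(5)]
for pos, vel, acc in samples:
    print(round(pos, 4), round(vel, 4), round(acc, 4))
```

Applying the same function to every joint with a common duration gives synchronized joint trajectories that start and stop smoothly.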

5 Case study

5.1 Construction of prototype system of vision-guided grinding robot

The hardware of the experimental regrinding robot mainly consists of an industrial robot, an industrial camera and lens, and a floating grinding spindle. The specific parameters are shown in Table 1.

According to the lighting environment of the laboratory, the grinding platform is placed on the left side of the robot, and the industrial camera is fixed above it. The grinding head is then fixed to the flange at the end of the robot through a connecting plate, and an air pump and a frequency converter are connected. The grinding object is placed on the working platform within the shooting range of the camera. The vision-guided grinding robot is designed to grind obvious defects such as metal edge burrs and metal surface bulges, so as to reduce the manual workload in the rough grinding process. Because this operation does not require high precision, a floating grinding head, which uses air pressure to achieve flexible grinding, is selected. The vision-guided grinding robot system built in the laboratory is shown in Fig. 8.

5.2 Vision system calibration

After the vision-guided robot grinding system is set up, the vision camera and the industrial robot are calibrated so that the pixel coordinates of a captured image can be converted to coordinates in the base coordinate system of the industrial robot. Because the grinding platform and the grinding object are fixed, the height information can be obtained by measurement. In addition, when the vision camera is calibrated, the checkerboard calibration target can be kept coplanar with the metal surface to be ground, so a 2D camera can be used for image acquisition. In this experiment, the internal and external parameters of the camera were calibrated by using the 12 checkerboard calibration pictures shown in Fig. 4, which have different positions and orientations but are all coplanar with the metal surface to be ground.

First, the corner pixel coordinates of the twelve checkerboard calibration target images are extracted, as shown in Fig. 9.

Subsequently, the Zhang Zhengyou calibration method and the nonlinear least squares method are used to obtain the intrinsic parameter matrix of the vision camera as follows:

Once M2 has been obtained, the extrinsic parameters can be obtained in a similar way. Because the plane of the calibration board and the distance between the calibration target and the camera are known, the rotation matrix R in the extrinsic parameter matrix can be simplified to a rotation vector. Taking the world coordinate system constructed on the first of the 12 calibration board images as an example, the extrinsic parameters of the camera obtained by calibration are as follows:

After the camera calibration, the hand-eye calibration is performed to obtain the transformation matrix between the camera coordinate system and the robot base coordinate system. The nine-point calibration plate is placed at the same height as the metal surface to be ground. The industrial robot is then jogged manually so that the end of its flange is aligned in turn with the center of each of the nine circles on the nine-point calibration plate, and the coordinates (x′, y′) of the robot in the base coordinate system are recorded at each of the nine points. Then, the pixel coordinates (u, v) of the centers of the nine circles are extracted from the captured image of the nine-point calibration plate. Finally, according to the correspondence between the pixel coordinates and the coordinates of the robot end position points, the hand-eye calibration results can be obtained by solving Eqs. (4)–(5).
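
The nine-point procedure can be sketched as a least-squares fit. The sketch assumes that the mapping from pixel coordinates (u, v) to robot coordinates (x′, y′) is a planar affine transform, x′ = a·u + b·v + c and y′ = d·u + e·v + f, which is a common choice when the calibration plate and the workpiece surface are coplanar; whether this matches the exact form of Eqs. (4)–(5) is an assumption. The point data below are synthetic and are not the values of Table 2.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x

def fit_affine(pixels, robot_xy):
    """Least-squares fit of the assumed affine map; returns [[a, b, c], [d, e, f]]."""
    rows = [(u, v, 1.0) for u, v in pixels]
    # Normal equations (M^T M) k = M^T y, solved once per robot axis.
    normal = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in range(2):
        rhs = [sum(r[i] * p[axis] for r, p in zip(rows, robot_xy)) for i in range(3)]
        coeffs.append(solve_linear(normal, rhs))
    return coeffs

def pixel_to_robot(coeffs, u, v):
    """Map one pixel coordinate to robot base coordinates."""
    return tuple(k[0] * u + k[1] * v + k[2] for k in coeffs)

# Synthetic example: a 3x3 grid of circle centres (pixels) and robot coordinates
# generated from a made-up transform. These are NOT the measurements of Table 2.
TRUE = [[0.0, -0.5, 600.0], [-0.5, 0.0, 400.0]]
pixels = [(200.0 * i + 100.0, 200.0 * j + 100.0) for i in range(3) for j in range(3)]
robot_xy = [pixel_to_robot(TRUE, u, v) for u, v in pixels]

coeffs = fit_affine(pixels, robot_xy)
```

With the fitted coefficients, `pixel_to_robot(coeffs, u, v)` converts any pixel on the calibrated plane, such as a detected edge vertex, to robot base coordinates. Using all nine points rather than the minimum of three averages out small alignment errors made while jogging the robot.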

When the nine-point calibration method is adopted, the pixel coordinates of the nine points and their coordinates in the robot base coordinate system are as shown in Table 2.

According to Sect. 3.2, the hand-eye calibration results can be calculated from the data in Table 2 as follows:

5.3 Extraction and localization of grinding edges

After the calibration is completed, a titanium alloy product is fixed and placed as the grinding object (as shown in Fig. 10), and the camera is used to collect and preprocess the image of the grinding object. The image collected by the camera and its pre-processing results are shown in Fig. 11.

After preprocessing, the Canny operator is used to extract the edge contour to be ground. In this example, the edge of the metal surface to be ground is approximately rectangular, so the contour information of the grinding edge can be obtained by extracting the 4 vertices of the edge and fitting the contour. The extraction and fitting of the contour of the grinding edge are shown in Fig. 12.

Substituting the pixel coordinates of the vertices obtained in Fig. 12 into the calibration results of the vision system gives the coordinates of the four vertices of the grinding edge in the robot base coordinate system (as shown in Table 3), which realizes the spatial positioning of the grinding edge.

5.4 Grinding trajectory planning and simulation

According to the D–H parameters of the grinding robot shown in Table 1, the simulation model of the industrial robot is established. Note that the working point at the end of a robot simulation model built from D–H parameters is the center of the flange rather than the center of the diamond grinding disc. Because the grinding disc must stay in contact with the grinding object during the grinding operation, a tool coordinate system based on the grinding disc needs to be established, which transfers the working zero point and the working direction from the flange to the grinding disc. Establishing the tool coordinate system mainly involves TCP (tool centre point) calibration and TCF (tool coordinate frame) calibration [28,29,30]. The main method for both is the N-point method (N ≥ 3); its accuracy is affected by how the operation is actually carried out and generally improves as N increases. Because the working area of the grinding disc is large, the 3-point method can basically meet the experimental accuracy requirements. Taking the 3-point method for TCP calibration as an example, a fixed reference point is first determined in the robot workspace. The robot is then moved so that the grinding disc center reaches the fixed reference point in three different attitudes, as shown in Fig. 13, and the coordinate values and the corresponding Euler angles are recorded each time the grinding disc center arrives at the reference point. Finally, the position conversion relationship between the flange center and the grinding disc center can be obtained by calling the program of the robot system or by using an open-source toolbox. TCP calibration thus requires a fixed arrival position and different arrival attitudes, which distinguishes it from TCF calibration. In the 3-point method of TCF calibration, the grinding disc center is required to maintain a fixed attitude, and only its position changes. Apart from this difference, the calibration processes of TCP and TCF are similar, so the TCF procedure is not repeated here.
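
To make the TCP step concrete, the sketch below shows one common least-squares formulation of the N-point method; it is not the routine of the robot system or of any particular toolbox. It assumes that each recorded flange pose has already been converted from Euler angles to a rotation matrix R_i and a position p_i in the base frame (the Euler convention depends on the robot and is not fixed here). Since the tool tip touches the same point in every pose, R_i·t + p_i is constant, so (R_i − R_j)·t = p_j − p_i can be solved for the tool offset t. All poses and the offset are synthetic.

```python
import math

def rot_x(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_y(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def mat_vec(R, v):
    return [sum(R[i][k] * v[k] for k in range(3)) for i in range(3)]

def solve3(A, b):
    """Solve a 3x3 system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0] * 3
    for i in reversed(range(3)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = (M[i][3] - tail) / M[i][i]
    return x

def calibrate_tcp(rotations, positions):
    """Tool offset t in the flange frame from poses touching one fixed point."""
    rows, rhs = [], []
    for i in range(len(rotations) - 1):
        Ri, Rj = rotations[i], rotations[i + 1]
        for r in range(3):
            rows.append([Ri[r][c] - Rj[r][c] for c in range(3)])
            rhs.append(positions[i + 1][r] - positions[i][r])
    # Normal equations of the stacked system (R_i - R_j) t = p_j - p_i.
    normal = [[sum(row[i] * row[j] for row in rows) for j in range(3)] for i in range(3)]
    proj = [sum(row[i] * y for row, y in zip(rows, rhs)) for i in range(3)]
    return solve3(normal, proj)

# Synthetic check: made-up tool offset and reference point, three attitudes.
TRUE_TOOL = [0.0, 0.0, 0.15]
REFERENCE_POINT = [0.5, 0.1, 0.3]
rotations = [rot_x(0.0), rot_x(math.radians(60)), rot_y(math.radians(60))]
positions = [[p - rt for p, rt in zip(REFERENCE_POINT, mat_vec(R, TRUE_TOOL))]
             for R in rotations]

tool_offset = calibrate_tcp(rotations, positions)
```

The three attitudes must rotate about different axes; otherwise the stacked system loses rank and the offset along the shared axis cannot be determined. Adding more attitudes simply adds rows to the same system, which is consistent with the observation that accuracy tends to improve as N increases.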

Subsequently, the joint angles of the industrial robot at the positions of the four vertices are obtained by substituting the data in Table 3 into the inverse kinematics equations of the robot. To ensure that the metal edge can be fully ground, the trajectory is extended by 5 cm beyond the four vertices, so that the grinding at the vertices is smoother. At the same time, a transfer point is set directly above the first vertex as the working point before the robot begins to grind and after it finishes. The trajectory from the zero point to the transfer point before grinding, and the trajectory from the transfer point back to the zero point after grinding, are arcs; all other segments are straight lines. The joint angles, angular velocities and angular accelerations along the grinding trajectory are obtained by quintic polynomial interpolation. The simulation results of the grinding trajectory are shown in Fig. 14.

5.5 Metal surface edge grinding experiment

The grinding trajectory verified by simulation is imported into the robot FlexPendant. Then, a grinding program is run to grind the edge of the metal surface. According to Fig. 12b, there are four sides that the regrinding robot needs to traverse, and the grinding sequence is as follows: D → A → B → C → D. Figure 15 shows the initial position of the regrinding robot, and Fig. 16 shows the actual process of the regrinding operation.

The comparison of metal surface edges before and after grinding is shown in Fig. 17.

In order to show the change of the metal surface edge before and after grinding more intuitively, texture analysis is used for further comparative analysis, as shown in Fig. 18.

Figs. 17 and 18 show that the edge texture before grinding is rough and that the surface edge after grinding is smoother, thus achieving the purpose of grinding the metal surface edge.

At present, it is still relatively difficult to completely replace manual grinding with the vision-guided grinding robot. After communication with the cooperative enterprise, the research purpose was set as polishing the easy-to-grind metal surface and removing the edge burrs, thus reducing the work intensity of subsequent grinding operators. This also lays a good foundation for the complete realization of robot-based automatic grinding in the future. In the experimental environment of the vision-guided grinding robot, the surface roughness of the grinding object, measured with a surface roughness meter, was reduced from the original 2–3 μm to less than 0.8 μm, and the edge burrs were largely removed. This significantly improves the quality of the metal surfaces and their edges and meets the application requirements of the enterprise.

6 Conclusion

As two core technologies in the field of intelligent manufacturing, machine vision and industrial robots in combination will endow robots with more intelligent characteristics and provide support for the better realization of replacing people with machines. On this basis, a prototype system of a vision-guided grinding robot is developed to meet the demand for flexible grinding of titanium surface edges put forward by a titanium production enterprise. Experimental verification is carried out on the test titanium provided by the enterprise, and the experimental results show that the proposed scheme is feasible. The research work in this paper is derived from the needs of our cooperative enterprise, and its goal is to solve the practical problems of the enterprise. The starting point of our research is the improvement of the robot grinding process of the cooperative enterprise by introducing machine vision technology. The experimental results show that this application method can effectively solve the problem of replacing people with robots in the rough grinding stage, and that it has the typical characteristics of a multi-technology fusion application.

Compared with the traditional grinding robot, which depends on a preset trajectory, the prototype system developed in this paper is significantly improved in operational flexibility, but there is still a certain distance from the full realization of flexible grinding. In particular, in the grinding of complex surfaces or cavities, the limitations of the camera field of view and the complexity of the grinding trajectory to be planned pose challenges to the practical application of the proposed grinding robot. In addition, the prototype system developed in this paper is still in the experimental validation stage, and the integration and packaging of the system need to be further improved. In the future, how to improve the operational flexibility and system integration level of the grinding robot, so that it can be better applied to the production practice of enterprises, will be the key direction of our research.

Availability of data and materials

All data generated or analyzed during this study are included in this manuscript.

References

Singh SA, Desai KA (2023) Automated surface defect detection framework using machine vision and convolutional neural networks. J Intell Manuf 34(4):1995–2011. https://doi.org/10.1007/s10845-021-01878-w

Yang H, Wang Y, Hu J et al (2021) Deep learning and machine vision-based inspection of rail surface defects. IEEE Trans Instrum Meas 71:5005714. https://doi.org/10.1109/TIM.2021.3138498

Ding Y, Zhang X, Kovacevic R (2016) A laser-based machine vision measurement system for laser forming. Measurement 82:345–354. https://doi.org/10.1016/j.measurement.2015.10.036

Liu YR (2021) An artificial intelligence and machine vision based evaluation of physical education teaching. J Intell Fuzzy Syst 40(2):3559–3569. https://doi.org/10.3233/JIFS-189392

Ansari N, Ratri SS, Jahan A et al (2021) Inspection of paddy seed varietal purity using machine vision and multivariate analysis. J Agric Food Res 3:100109. https://doi.org/10.1016/j.jafr.2021.100109

Jian C, Gao J, Ao Y (2017) Automatic surface defect detection for mobile phone screen glass based on machine vision. Appl Soft Comput 52:348–358. https://doi.org/10.1016/j.asoc.2016.10.030

Zhong C, Gao Z, Wang X et al (2019) Structured light three-dimensional measurement based on machine learning. Sensors 19(14):3229. https://doi.org/10.3390/s19143229

Jeon DJ, Noh TY, Jung CW et al (2012) Development of grinding robot system for engine cylinder liner’s oil groove. In: Proceedings of the ASME 2012 international mechanical engineering congress and exposition, Houston, Texas, pp 1513–1519. https://doi.org/10.1115/IMECE2012-86212

Ge J, Deng Z, Li Z et al (2021) Robot welding seam online grinding system based on laser vision guidance. Int J Adv Manuf Technol 116:1737–1749. https://doi.org/10.1007/s00170-021-07433-4

Wan G, Wang G, Li F et al (2021) Robotic grinding station based on visual positioning and trajectory planning. Comput Integr Manuf Syst 27(1):118–127. https://doi.org/10.13196/j.cims.2021.01.010

Guo W, Zhu Y, He X (2020) A robotic grinding motion planning methodology for a novel automatic seam bead grinding robot manipulator. IEEE Access 8:75288–75302. https://doi.org/10.1109/ACCESS.2020.2987807

Xu ZL, Lu S, Yang J et al (2017) A wheel-type in-pipe robot for grinding weld beads. Adv Manuf 5(2):182–190. https://doi.org/10.1007/s40436-017-0174-9

Wang T, Xin Z, Miao H et al (2020) Optimal trajectory planning of grinding robot based on improved whale optimization algorithm. Math Probl Eng 2020:3424313. https://doi.org/10.1155/2020/3424313

Zhou K, Meng Z, He M et al (2020) Design and test of a sorting device based on machine vision. IEEE Access 8:27178–27187. https://doi.org/10.1109/ACCESS.2020.2971349

Dhiman A, Shah N, Adhikari P et al (2022) Firefighting robot with deep learning and machine vision. Neural Comput Appl 34:2831–2839. https://doi.org/10.1007/s00521-021-06537-y

Cho SI, Chang SJ, Kim YY et al (2002) AE—automation and emerging technologies: development of a three-degrees-of-freedom robot for harvesting lettuce using machine vision and fuzzy logic control. Biosyst Eng 82(2):143–149. https://doi.org/10.1006/bioe.2002.0061

Diao S, Chen X, Luo J (2018) Development and experimental evaluation of a 3D vision system for grinding robot. Sensors 18(9):3078. https://doi.org/10.3390/s18093078

Zhao X, Lu H, Yu W et al (2022) Vision-based mobile robotic grinding for large-scale workpiece and its accuracy analysis. IEEE/ASME Trans Mechatron 28(2):895–906. https://doi.org/10.1109/TMECH.2022.3212911

Wan G, Wang G, Fan Y (2021) A robotic grinding station based on an industrial manipulator and vision system. PLoS ONE 16(3):e0248993. https://doi.org/10.1371/journal.pone.0248993

Zhang Z (2000) A flexible new technique for camera calibration. IEEE Trans Pattern Anal Mach Intell 22(11):1330–1334. https://doi.org/10.1109/34.888718

Ramadan ZM (2019) Effect of kernel size on Wiener and Gaussian image filtering. TELKOMNIKA Telecommun Comput Electron Control 17(3):1455–1460. https://doi.org/10.12928/telkomnika.v17i3.11192

Puneet P, Garg N (2013) Binarization techniques used for grey scale images. Int J Comput Appl 71(1):8–11. https://doi.org/10.5120/12320-8533

Rong W, Li Z, Zhang W, Sun L (2014) An improved CANNY edge detection algorithm. In: IEEE international conference on mechatronics and automation, Tianjin, China, pp 577–582. https://doi.org/10.1109/ICMA.2014.6885761

Kucuk S, Bingul Z (2006) Robot kinematics: Forward and inverse kinematics. INTECH Open Access Publisher, London

Zaplana I, Hadfield H, Lasenby J (2022) Closed-form solutions for the inverse kinematics of serial robots using conformal geometric algebra. Mech Mach Theory 173:104835. https://doi.org/10.1016/j.mechmachtheory.2022.104835

Cai J, Deng J, Zhang W et al (2021) Modeling method of autonomous robot manipulator based on DH algorithm. Mob Inf Syst 2021:4448648. https://doi.org/10.1155/2021/4448648

Žlajpah L, Petrič T (2023) Kinematic calibration for collaborative robots on a mobile platform using motion capture system. Robot Comput Integr Manuf 79:102446. https://doi.org/10.1016/j.rcim.2022.102446

Yin S, Guo Y, Ren Y et al (2014) A novel TCF calibration method for robotic visual measurement system. Optik 125(23):6920–6925. https://doi.org/10.1016/j.ijleo.2014.08.049

Cakir M, Deniz C (2019) High precise and zero-cost solution for fully automatic industrial robot TCP calibration. Ind Robot Int J Robot Res Appl 46(5):650–659. https://doi.org/10.1108/IR-03-2019-0040

Jiang L, Gao G, Na J et al (2023) A fast calibration method of the tool frame for industrial robots. In: 2023 IEEE 12th data driven control and learning systems conference (DDCLS). IEEE, pp 875–880. https://doi.org/10.1109/DDCLS58216.2023.10166707

Funding

Supported by the Key Research and Development Program of Shaanxi (Program No. 2022GY-254), P.R. China.

Author information

Contributions

DX prepared the original draft, LC wrote and edited the final draft, LL reviewed the manuscript, and NR was responsible for the formal check.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, C., Dun, X., Li, L. et al. Vision-guided robot application for metal surface edge grinding. SN Appl. Sci. 5, 236 (2023). https://doi.org/10.1007/s42452-023-05468-8

DOI: https://doi.org/10.1007/s42452-023-05468-8