Abstract

This study investigates the uncertainty of images reconstructed by the total focusing method (TFM) from phased array data in non-destructive evaluation (NDE). Four neural network architectures based on the U-Net model are used to probabilistically segment TFM images and evaluate the uncertainty of the segmentation results. The models are trained on three simulated phased-array datasets, which contain various sources of uncertainty from the simulated defects or surrounding material. Physical limitations, such as the defect’s shadow zone, lead to high uncertainty. Results demonstrate that probabilistic segmentation can be helpful in determining the source of uncertainty within segmented TFM images. The model performance is investigated based on several metrics, and the influence of defect size on model performance is shown. The probabilistic U-Net shows the highest F1-score over all test datasets. This study contributes to the advancement of NDE using TFM by providing insights into the uncertainty of the reconstructed images and proposing a solution for addressing it.

Article Highlights

- This study uses neural networks to probabilistically segment TFM images and investigate uncertainty.
- The probabilistic U-Net outperforms other models, and high uncertainty is found in the shadow zone, reflections, and small defects.
- Three different defect types are investigated.

1 Introduction

Reliable and accurate defect detection with non-destructive evaluation (NDE) is essential for assessing the structural integrity of parts. In fracture mechanics calculations, characteristics such as defect size, shape, and position can be used to estimate the defects’ severity [1]. Ultrasound is a well-established method for estimating these defect characteristics [2]. Ultrasonic imaging with phased array probes, combined with reconstruction techniques, is especially widely used in industry. This is due to the increasing available computing power and the higher degree of automation in ultrasound inspection practices [3]. The combination of full matrix capture (FMC), a technique in which every transmitter and receiver combination is captured, with the total focusing method (TFM) is suited for the reconstruction of complex flaw patterns [4]. With TFM, originally introduced by [5], the entire probe aperture is used to create a transmitter and receiver image in each pixel of the reconstruction volume. This enables synthetic focusing on both the transmitter and the receiver side [5].

Deep learning methods have been researched increasingly in recent years to automate and improve defect characterization with ultrasound data. In [6], with a phased array ultrasound setup, the grain orientation and defects were estimated for authentic steel welds. Synthetic data were used in this study. With a deep neural network, the authors estimated the grain orientation and, through this, the velocity model. Afterward, the data were reconstructed with the TFM, with and without the adapted velocity model, to detect the defects. It was found that the defects could be estimated much more accurately when the predicted grain orientation map was used in the TFM reconstruction than with isotropic models [6].

Rao et al. [7] presented a fully-convolutional network architecture, which takes the FMC dataset as input and predicts a reconstructed image. In the image, each pixel has its respective velocity attached to it. This architecture, therefore, replaces the TFM completely. The dataset was a simulated specimen representing metal parts bonded with adhesive layers. In the adhesive layer, random defects, so-called kissing bond defects, were introduced. It was found that the kissing bond defects and the metal parts were reconstructed accurately by the model [7].

A study directly applying TFM images as input for a neural network can be found in [4]. In this study, defects were localized in TFM images with bounding boxes. The ultrasound waves were simulated in a square domain with circular defects. The neural network was trained on only a single image. Despite this limited training dataset size, the position of the defects was recognized accurately [4].

In order to extract important features, it is possible to preprocess the TFM images. Mwema et al. [8] demonstrated this for atomic force measurements by utilizing the power spectral density to preprocess images.

According to the literature reviewed above, the uncertainty within the neural network-based segmentation of TFM images has not yet been investigated. This is the subject of this study. In applications, decisions concerning the structural integrity of a part are made based on defect characteristics. However, the uncertainty of these quantities is conventionally not taken into account. It can be quantified with the methods introduced in this study.

This article is organized as follows: Sect. 2 introduces the applied methods for generating the dataset and the neural network architectures and evaluation metrics. In Sect. 3, the results are presented and discussed. Finally, Sect. 4 provides the conclusion.

2 Methods

This section introduces the applied methods of this study. First, the FMC procedure and TFM are described. Afterward, the simulation setup for generating the data is explained. Further, the neural network architectures for the probabilistic segmentation and the evaluation metrics used are introduced.

2.1 Full matrix capture and the total focusing method

FMC is a data acquisition technique for phased array probes, where every transmitter and receiver combination is captured. The procedure of FMC is displayed in Fig. 1a. In the first firing sequence of the FMC procedure, element one excites an ultrasound wave, which propagates through the whole volume or is reflected at, e.g., defects, and all n elements (including the one that transmits the ultrasonic wave) record a signal [9]. The captured data constitute the first row of the so-called information matrix A. After the first element, the second, the third, and so on up to the nth element are fired in the same manner and fill the rest of the information matrix with, in total, \({\text{n}}^2\) signals [9]. Post-processing can derive all phased array inspection configurations from the matrix, such as plane B-scan or sector B-scan. The TFM outperforms all other configurations since the entire imaging plane is focused as opposed to a single point at a certain depth [5].

This study utilizes synthetic data instead of real ultrasound signals, and as a result, the units used to measure the data are dimensionless, in contrast to the standard unit of volts used for measuring ultrasound signals in real-world applications.

The TFM algorithm starts by transforming every single A-scan using the Hilbert transform \({\widetilde{H}}\). The Hilbert transform only changes the phase information of the signal and not the frequency content. This transform generates an accurate signal envelope for all frequencies, which smooths the reconstructed images without losing any information [10]. The analytical signal Za, derived by applying \({\widetilde{H}}\) to one element of the information matrix \(A_{{\text{i}},{\text{j}}}\), is defined as

\[Za_{i,j}(t) = A_{i,j}(t) + \text{i}\,{\widetilde{H}}\left( A_{i,j}(t)\right) \tag{1}\]

where i stands for the imaginary unit. After the signals of the information matrix have been converted to the analytical signals \(Za_{i,j}\), the intensity I of each pixel in the imaging plane is calculated by

\[I(x_{\text {f}},y_{\text {f}}) = \sum_{i=1}^{n}\sum_{j=1}^{n} Za_{i,j}\left( t_{i,j}(x_{\text {f}},y_{\text {f}})\right) \tag{2}\]

where \(x_{\text {f}}\) and \(y_{\text {f}}\) represent the focal spot or pixel in the imaging plane. The time \(t_{i,j}\) is the time at which receiver j would measure a signal that was transmitted by element i and reflected at the focal spot [9]. Figure 1b represents the time t from the first element to the focal point and then to the nth element, therefore \(t=t_1+t_{\text {n}}\). The signal amplitudes at these respective time points in each signal are summed up for each pixel in the imaging plane. The time point is dependent on the ultrasound velocity of the medium, which needs to be known in advance. The resulting complex-valued image is normalized at the end in order to obtain real values. For more information on the TFM, please refer to [5].
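The delay-and-sum procedure described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used in this study: it assumes a linear array on the surface y = 0, a constant velocity, nearest-sample lookup of the travel times, an FFT-based Hilbert transform, and taking the magnitude followed by scaling to the maximum as the final normalization. The array geometry, the sampling frequency, and the Gaussian tone-burst echoes of the toy example are hypothetical.

```python
import numpy as np


def analytic_signal(a):
    """Return a + i*H(a) along the last axis (FFT-based Hilbert transform)."""
    n = a.shape[-1]
    weights = np.zeros(n)
    weights[0] = 1.0                      # keep the DC component
    if n % 2 == 0:
        weights[n // 2] = 1.0             # keep the Nyquist component
        weights[1:n // 2] = 2.0           # double the positive frequencies
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(a, axis=-1) * weights, axis=-1)


def tfm_image(fmc, x_el, x_grid, y_grid, c, fs):
    """Delay-and-sum TFM for a linear array on the surface y = 0.

    fmc[i, j, :] is the A-scan transmitted by element i and received by j.
    """
    za = analytic_signal(fmc)
    n_el, _, n_t = za.shape
    xf, yf = np.meshgrid(x_grid, y_grid)  # pixel coordinates, shape (ny, nx)
    # One-way distance from every element to every pixel, shape (n_el, ny, nx)
    dist = np.sqrt((xf[None] - x_el[:, None, None]) ** 2 + yf[None] ** 2)
    image = np.zeros(xf.shape, dtype=complex)
    for i in range(n_el):
        for j in range(n_el):
            # Sample index of the travel time t_ij = t_i + t_j for each pixel
            idx = np.rint((dist[i] + dist[j]) / c * fs).astype(int)
            valid = idx < n_t             # ignore times beyond the record
            image[valid] += za[i, j, idx[valid]]
    image = np.abs(image)                 # real-valued envelope image
    return image / image.max()            # scale to the range [0, 1]


# Toy example (hypothetical setup): one point scatterer, Gaussian tone bursts.
c, fs, f0 = 6380.0, 50e6, 2.25e6          # velocity in m/s, rates in Hz
x_el = (np.arange(16) - 7.5) * 1.5e-3     # assumed 16-element array, in m
scatterer = np.array([2e-3, 15e-3])       # (x, y) position in m
t = np.arange(600) / fs
d = np.hypot(scatterer[0] - x_el, scatterer[1])
tof = (d[:, None] + d[None, :]) / c       # round-trip time per pair (i, j)
tau = t[None, None, :] - tof[:, :, None]
fmc = np.exp(-(tau / 0.3e-6) ** 2) * np.cos(2 * np.pi * f0 * tau)

x_grid = np.linspace(-10e-3, 10e-3, 41)
y_grid = np.linspace(5e-3, 25e-3, 41)
img = tfm_image(fmc, x_el, x_grid, y_grid, c, fs)
iy, ix = np.unravel_index(np.argmax(img), img.shape)
print(x_grid[ix], y_grid[iy])             # location of the brightest pixel
```

The double loop mirrors the double sum over transmitters and receivers. For large arrays or fine grids a vectorized or GPU variant would be preferable, but the loop keeps the correspondence to the summation explicit.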

Fig. 1 a Data acquisition procedure for FMC together with the resulting information matrix; b illustration of the TFM. The figure was adapted from [9]

2.2 Dataset generation by numerical modelling of phased array measurements

In this study, randomly generated synthetic data are investigated. The data were produced stochastically, which offers the advantage of exploring numerous defect positions and testing scenarios. The simulations did not account for surface roughness, although in actual structures surface roughness causes signal attenuation and wave dispersion [11]. Using real specimens to obtain a sufficiently large training dataset would have been challenging. In general, since the data are synthetic, their transferability to real data remains an open question.

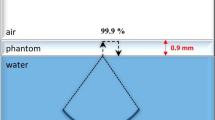

For the simulations, the software Salvus from Mondaic AG [12] was used. Salvus is based on the spectral element method and is capable of conducting waveform simulations on the GPU. A square domain (mesh) of size 60 × 60 mm was modeled, and the acoustic wave equation was solved. Acoustic rather than elastic waves were chosen since they are computationally less expensive. The results, however, are expected to be similar to those of an elastic simulation since the applied excitation, a vector force in the y-direction, mainly introduces longitudinal waves. Further, the p-wave velocity \(v_{\text{p}}\) was used for the TFM reconstruction. The material parameters of aluminum were used (p-wave velocity \(v_{\text{p}}=6380\,\frac{\rm{m}}{\rm{s}}\) and density \(\rho =2700\, \frac{\text{kg}}{\text{m}^3}\)). At all edges of the structure except the upper one, absorbing boundaries were applied. The excitation wavelet was the second derivative of a Ricker wavelet with a center frequency of 2.25 MHz, which is a common frequency for ultrasonic testing. The specifications of the simulated phased array probes are given in Table 1.

In the structured mesh, defects were introduced by removing the mesh elements at the locations where defects should be present. To reduce staircasing and model the defect shape accurately, the mesh was defined with much smaller elements than necessary for mitigating numerical dispersion. For that purpose, an element size of 1.39 mm (two elements per wavelength) would be sufficient. However, an element size of 0.27 mm was chosen to reproduce the defect shapes more accurately.

Three different datasets, with different defects, were generated (see Fig. 2). These are:

- Dataset 1: round defect in a homogeneous medium
- Dataset 2: complicated defect shapes in a homogeneous medium
- Dataset 3: round defect in a medium with microstructure noise

For each of these datasets, 1000 simulations were conducted, 700 were used for training, 50 for validation, and 150 for testing. One TFM image of each of the three datasets is displayed in Fig. 2.

In the first dataset (Fig. 2 left), round defects with different random diameters and positions were simulated. The same procedure for the defects was used in the third dataset (Fig. 2 right). In addition, the connections between 800 neighboring elements were removed randomly. This is intended to mimic the microstructure noise of coarse-grained material, for which the grains can be seen as small scatterers. A similar approach was proposed in [13]. Highly scattering materials are a common problem in ultrasonic testing [14]. The second dataset represents complicated-shaped reflectors. Due to physical constraints, such as the larger shadow zones under elongated defects and the limitations due to the wavelength, complicated defect shapes are harder to capture accurately [15]. These defects were generated using randomly shaped Bezier curves. A detailed description of this approach is given in [16].

The generation of the three datasets was randomized. For the round defects (datasets 1 and 3), the diameter was randomly selected between 2 and 6 mm. The position of the defects in the x- and y-directions was varied between 2 and 8 mm. When the defect intersected the boundaries of the mesh, which can happen for a large defect diameter combined with a small distance to the edges, a new defect position was selected. The procedure was the same for the complicated defect shapes, although a larger range of defect diameters, 2–8 mm, was chosen.
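The randomized placement of a round defect can be illustrated with a short rejection-sampling sketch. It takes the ranges stated above at face value and additionally assumes uniform distributions and coordinates measured in millimetres from a corner of the 60 mm domain. Neither assumption is confirmed by the text, and the function name `sample_round_defect` is hypothetical.

```python
import random

DOMAIN_MM = 60.0  # edge length of the square simulation domain


def sample_round_defect(rng, d_range=(2.0, 6.0), pos_range=(2.0, 8.0)):
    """Draw a diameter and a centre position for one round defect.

    The position is redrawn until the circle lies fully inside the domain,
    mirroring the rule that a new position is selected when the defect
    intersects the mesh boundary. Uniform sampling is an assumption.
    """
    diameter = rng.uniform(*d_range)
    radius = diameter / 2
    while True:
        x = rng.uniform(*pos_range)
        y = rng.uniform(*pos_range)
        inside_x = radius <= x <= DOMAIN_MM - radius
        inside_y = radius <= y <= DOMAIN_MM - radius
        if inside_x and inside_y:
            return diameter, x, y


rng = random.Random(0)  # fixed seed for a reproducible example
defects = [sample_round_defect(rng) for _ in range(5)]
for diameter, x, y in defects:
    print(f"d = {diameter:.2f} mm at ({x:.2f}, {y:.2f}) mm")
```

Only the position is redrawn on rejection, so the diameter distribution is not skewed toward small defects.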

Figure 3 displays a flow chart outlining the procedure to generate a single sample, which includes a TFM image and its corresponding label.

2.3 Neural network architectures for probabilistic segmentation

The goal of probabilistic image segmentation is to take the dataset’s ambiguity into account in the segmentation process. Probabilistic segmentation has mainly been applied in biomedical imaging, as in [17,18,19], since errors in the segmentation could have severe consequences in this field. Similar requirements are also present in NDE, where deep learning is applied more and more frequently. In NDE, depending on the part, small defects can lead to critical failures of large structures. For this reason, there is a need to assess the performance of the NDE method to evaluate whether a defect can be found or not. This is normally done with probability of detection (POD) curves [20]. These, however, give no information on why a single defect may not be found. The uncertainty estimates of segmentation results can bridge that gap and give insight into why certain defects are harder to capture. Furthermore, they make the neural network more explainable, which is currently a limiting factor in the application of neural networks.

For probabilistic image segmentation, different neural network architectures are available in the literature, which take different aspects of the uncertainty in the segmentation into account [17]. Conventional neural networks for segmentation are deterministic. This means that they will always predict the same segmentation mask for a given input image. This, however, gives a false impression of certainty since, for example, a network trained with a different initialization could predict a different segmentation mask [17]. With an uncertainty estimate, the areas where the model is unsure about its prediction can be identified. With probabilistic models, it is possible to draw different segmented samples for the same input image. In general, probabilistic models try to find the posterior distribution p over the weights w, given the training dataset X and its labels Y [21]:

\[p(w \vert X,Y) \tag{3}\]

The labels in this study are binary (defect or no defect). Since the posterior distribution is intractable, it has to be estimated empirically by sampling from the segmentation models [21]. In this study, three probabilistic architectures are used for that purpose, along with one deterministic U-Net for comparison. The implementation can be found in [22]. The four models are visualized in Fig. 4 and are explained in the following.

U-Net with softmax output This is the well-established U-Net architecture on which many variants are built. It is schematically displayed in Fig. 4a. Initially, it was introduced by [23] and has been widely used since then. The vanilla U-Net is purely deterministic and has a softmax output, which gives a probability score for each class [22]. This model is used as a benchmark for the other models.

U-Net with Monte Carlo (MC) dropout MC dropout was introduced by [24] for classification and regression and adapted by [21] for segmentation tasks. The goal is to find the true posterior distribution over several models with different random dropout configurations. Random dropout is used in the training and testing phases. The model used is shown in Fig. 4b and, compared to the vanilla U-Net, has some further dropout layers. The dropout probability was set to 0.5, which is the probability of keeping each weight [17].

Ensemble of U-Nets Combining the prediction of several models is a common approach to improve the performance, and to avoid overfitting. From each individually deterministic model of the ensemble, the segmentation can be sampled [17]. Four models were chosen as an ensemble in this study. The ensemble of models is sketched in Fig. 4c.

Probabilistic U-Net The probabilistic U-Net, as displayed in Fig. 4d, was introduced by [18]. It combines the vanilla U-Net and a conditional variational auto-encoder. The auto-encoder samples in the testing phase from the latent space, and thus makes different predictions for different samples z [17]. For more information concerning the probabilistic U-Net please refer to [18].

Hyperparameter tuning of the models was conducted for the learning rate and batch size. The models were trained with an early stopping criterion based on the validation dataset loss.

2.4 Metrics

To compare the output of the neural networks quantitatively, meaningful metrics need to be used. Most of these metrics work on a thresholded image and are based on the confusion matrix. The confusion matrix is a result of comparing the thresholded prediction with its label. In Fig. 5, a confusion matrix for a binary segmentation problem, which is also the task in this work, is visualized.

From the confusion matrix, the most common segmentation or classification metrics are derived. For a balanced dataset, accuracy is a widely used metric, which is the ratio of the correctly classified samples over all samples:

\[\text{Accuracy} = \frac{TP+TN}{TP+TN+FP+FN} \tag{4}\]

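However, the accuracy gives a false impression about the performance when the dataset is imbalanced. For imbalanced datasets, the F1-score is a suitable metric. It is calculated as the harmonic mean between recall and precision. Recall and precision are defined as

\[\text{Recall} = \frac{TP}{TP+FN} \tag{5}\]

and

\[\text{Precision} = \frac{TP}{TP+FP} \tag{6}\]

The F1-score is calculated with

\[\text{F1} = 2\,\frac{\text{Precision}\cdot \text{Recall}}{\text{Precision}+\text{Recall}} \tag{7}\]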

Czolbe et al. [17] proposed, furthermore, an uncertainty metric H based on pixel-wise predictions (not thresholded). It is defined as

\[H = -\sum_{c \in C} p(y=c \vert x,X,Y)\,\log p(y=c \vert x,X,Y) \tag{8}\]

where \(p(y=c \vert x,X,Y)\) is the probability to predict class \(c \in C\) in one pixel, x is one sample of the training dataset X and y its respective label. Y is the set of labels of the training dataset [17]. The uncertainty H takes low values when the pixel-wise predicted values are close to zero or close to one and higher values in the middle of this range. H gives an impression of how uncertain the model is about its predictions.
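The metrics of this section can be sketched with the Python standard library alone. The snippet below is illustrative rather than the evaluation code of this study: the function names, the decision rule `p > 0.5`, the convention of returning zero for undefined ratios, and the use of the natural logarithm in the entropy are assumptions, and the eight-pixel example is made up.

```python
import math


def confusion_counts(pred, label, threshold=0.5):
    """Count TP, FP, FN, TN for flat lists of probabilities and 0/1 labels."""
    tp = fp = fn = tn = 0
    for p, y in zip(pred, label):
        positive = p > threshold          # thresholded prediction
        if positive and y == 1:
            tp += 1
        elif positive and y == 0:
            fp += 1
        elif not positive and y == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def segmentation_metrics(tp, fp, fn, tn):
    """Accuracy, recall, precision, and F1-score from the confusion matrix."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "recall": recall,
            "precision": precision, "f1": f1}


def pixel_entropy(p_defect):
    """Entropy of a binary pixel prediction (classes: defect, no defect)."""
    h = 0.0
    for p in (p_defect, 1.0 - p_defect):
        if p > 0.0:                       # the limit of p*log(p) at 0 is 0
            h -= p * math.log(p)
    return h


# Made-up example: eight pixels, three of which belong to a defect.
pred = [0.9, 0.8, 0.4, 0.6, 0.1, 0.2, 0.05, 0.3]
label = [1, 1, 1, 0, 0, 0, 0, 0]
counts = confusion_counts(pred, label)
metrics = segmentation_metrics(*counts)
uncertainty = [pixel_entropy(p) for p in pred]
print(counts, metrics)
```

In this example the accuracy is 0.75 although one of three defect pixels is missed, which illustrates why the F1-score is preferred for imbalanced data. The entropy is largest for the predictions closest to 0.5.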

3 Results and discussion

This section first presents the results of the uncertainty estimation on the three datasets. Afterward, the performance of the models based on several segmentation metrics is discussed. Finally, we evaluate model performance on different defect sizes.

3.1 Estimating the uncertainty in the segmentation

In order to estimate the uncertainty within the segmentation, 15 samples were drawn from the respective models. We applied the uncertainty estimate (see Eq. 8) to these 15 predicted images, and the results were averaged. Since the U-Net with the softmax output is deterministic, no variation within the 15 samples is present. In Figs. 6, 7, and 8, the mean uncertainty H of the TP, FN, FP, and TN pixels over the whole test dataset is displayed for the different datasets and models.

In general, H is higher for incorrect predictions (FN, FP) than for correct predictions (TP, TN). This was also noticed by [17], who reported a correlation between the uncertainty metric and the segmentation error. The only exception here is the MC dropout model, which shows a higher uncertainty for TPs than for FNs within datasets 2 and 3. The uncertainty of the TNs is much smaller in comparison to the other cases. The reason for the low uncertainty of the TNs may be that most of the pixels in one image have no defect, and because of this, the models focus during training on getting this case correct. Furthermore, most of the pixels with no defect in the image have values close to zero, which simplifies the decision for the model. The probabilistic U-Net shows the lowest uncertainties H for all datasets and the MC dropout model the highest.
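The averaging over the drawn samples and the grouping by TP, FN, FP, and TN can be sketched for a single image as follows. This is a hedged illustration, not the evaluation code of this study: it assumes that the outcome of each pixel is taken from the mean prediction thresholded at 0.5, that the natural logarithm is used, and that predictions are clipped away from 0 and 1 for numerical stability. The toy label and the noisy "samples" are fabricated for demonstration only.

```python
import numpy as np


def pixel_entropy(p, eps=1e-12):
    """Binary entropy per pixel; p is the predicted defect probability."""
    p = np.clip(p, eps, 1.0 - eps)        # avoid log(0)
    return -(p * np.log(p) + (1.0 - p) * np.log(1.0 - p))


def mean_uncertainty_by_outcome(samples, label, threshold=0.5):
    """Mean H over TP, FN, FP, and TN pixels of one image.

    samples: array (n_samples, ny, nx) of predicted defect probabilities
    label:   array (ny, nx) with 1 for defect and 0 for no defect
    """
    mean_h = pixel_entropy(samples).mean(axis=0)   # average H over samples
    pred = samples.mean(axis=0) > threshold        # assumed decision rule
    truth = label.astype(bool)
    masks = {
        "TP": pred & truth,
        "FN": ~pred & truth,
        "FP": pred & ~truth,
        "TN": ~pred & ~truth,
    }
    return {k: float(mean_h[m].mean()) if m.any() else float("nan")
            for k, m in masks.items()}


# Fabricated toy data: a 3x3 defect, one missed pixel, one false alarm.
rng = np.random.default_rng(0)
label = np.zeros((8, 8), dtype=int)
label[2:5, 2:5] = 1
base = np.where(label == 1, 0.9, 0.05)
base[3, 3] = 0.3                          # defect pixel the model misses
base[6, 6] = 0.7                          # background pixel flagged as defect
samples = np.clip(base + rng.normal(0.0, 0.05, size=(15, 8, 8)), 0.0, 1.0)

result = mean_uncertainty_by_outcome(samples, label)
print(result)
```

For a whole test dataset, the per-pixel values of all images would be pooled before averaging each group. For a deterministic model, all 15 samples are identical and the averaging has no effect.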

To evaluate whether the uncertainties can explain false segmentations and, therefore, have a relevant meaning for the interpretation, we investigate the uncertainties of three defects from dataset 2. This dataset was chosen since it most clearly shows areas where the models do not work well. Figure 9 shows the uncertainties for three defects. In the top row, the three TFM images are displayed together with the cutout in green, where the uncertainty is investigated. For each model, the uncertainty estimates of 15 predictions were averaged.

Fig. 9 Uncertainty of the segmentation of three complicated-shaped defects (dataset 2). For the cutout in the top row, the uncertainties are visualized. The red boundary gives the ground truth, and the blue boundary gives the predicted defects, whereby the average of 15 predictions was taken and thresholded by 0.5

It can be seen that, as in the whole test dataset, the MC dropout model shows the highest uncertainty and the probabilistic U-Net the lowest. When the prediction boundaries differ from the ground truth boundaries, high uncertainty values are usually present. In general, the uncertainty values at the boundary of the defects are high. Furthermore, in the shadow zone of the defect, the shape of the defect is not captured well, and high uncertainty values are present there as well. For the defect in the first column, all models except the probabilistic U-Net interpret the multiple reflections as a second defect with high H. In general, the probabilistic U-Net shows the lowest uncertainty within its segmentation and also the qualitatively best segmentation result.

3.2 Performance of the models on each dataset

In this subsection, the models are compared based on the segmentation metrics introduced in Sect. 2.4. The recall, precision, and F1-score are given for the three datasets in Figs. 10, 11, and 12. The accuracy was not used since it is unsuitable for an imbalanced dataset. The metrics were calculated for the whole test dataset, using the average prediction of 15 samples from the models. This was done to find the prediction toward which the models converge when sampling from them.

For all datasets, the model with the highest F1-score and, therefore, the best-performing model is the probabilistic U-Net. The probabilistic U-Net also shows the lowest uncertainty in its prediction (see Figs. 6, 7, 8, and 9). The MC dropout model has the lowest F1-score and also the highest uncertainty. For dataset 1, the models show the smallest standard deviation, represented by the error bars. This dataset also has the lowest TP and TN uncertainties (see Fig. 6).

3.3 Performance of the models over the defect size

A common procedure to evaluate the performance of an NDE method is to investigate the system response to defects of different sizes. The maximal amplitude of a defect echo can be used to estimate the size [2]. This is, however, dependent on the wavelength \(\lambda\). When the defect is within a range from \(\lambda /2\) to \(\lambda /4\), resonance effects occur, and with a defect size smaller than \(\lambda /6\), Rayleigh scattering becomes dominant [25].

The defect size was varied from 0.5 to 4.0 mm in 15 steps for all three datasets. The middle point of the defects is located in the middle of the domain at \(x=30\) mm and \(y=30\) mm. Since the F1-score and H are correlated, and the F1-score is a more established metric to evaluate the segmentation performance, it is used in this investigation. For each TFM image representing one defect size, 15 predictions were drawn from the models and averaged. The error bars in Figs. 13, 14, and 15 represent the standard deviations of the F1-score for the 15 predictions. It is assumed that the standard deviation of the F1-score gives an estimate of how the model’s predictions differ and, therefore, how uncertain the model considers its prediction. Since the U-Net with the softmax output is deterministic, no error bar is plotted. The \(\lambda /2\) wavelength for the center frequency of 2.25 MHz is 1.42 mm. The \(\lambda /2\) wavelength is marked in Figs. 13, 14, and 15. For the dataset with the complicated defect shapes, the same defect shape was chosen for all defect sizes and rescaled accordingly. The microstructure noise, introduced by removing the connection between some elements in the mesh, was kept random.
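The wavelength threshold used in this investigation follows directly from the p-wave velocity and the center frequency. The short sketch below reproduces the stated value and lists the defect sizes relative to it. The equal spacing of the 15 defect sizes is an assumption, since the text only gives the range and the number of steps.

```python
V_P = 6380.0        # p-wave velocity of aluminum in m/s
F_CENTER = 2.25e6   # center frequency of the excitation in Hz

wavelength_mm = V_P / F_CENTER * 1e3
half_wavelength_mm = wavelength_mm / 2
sixth_wavelength_mm = wavelength_mm / 6

# Assumed equally spaced defect sizes from 0.5 mm to 4.0 mm (15 values)
sizes_mm = [0.5 + 0.25 * k for k in range(15)]
below_half = [s for s in sizes_mm if s < half_wavelength_mm]

print(f"lambda   = {wavelength_mm:.2f} mm")
print(f"lambda/2 = {half_wavelength_mm:.2f} mm")
print(f"lambda/6 = {sixth_wavelength_mm:.2f} mm")
print("sizes below lambda/2:", below_half)
```

Under this spacing assumption, four of the 15 defect sizes lie below \(\lambda /2\), and none lies below \(\lambda /6\), so the Rayleigh scattering regime is not reached in this series.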

It can be seen that the F1-score increases above the \(\lambda /2\) threshold. A reason for this can be that, below this threshold, the linearity between defect size and amplitude of the signals no longer holds [25]. Furthermore, for the probabilistic U-Net, the standard deviation of the F1-score from the drawn samples increases below \(\lambda /2\) for the datasets with the round and complicated defect shapes. As also concluded in the previous investigation, the MC dropout model shows the lowest F1-score. The other models reach similar F1-scores. The standard deviation for the dataset with the microstructure noise is the highest, especially for the probabilistic U-Net.

4 Conclusions

In this study, it was investigated whether the ambiguity within TFM images can be captured by segmenting the data with neural network architectures. Four different architectures were investigated: U-Net with a softmax output, an ensemble of U-Nets, U-Net with Monte Carlo dropout, and the so-called probabilistic U-Net. Concerning the model performance and capturing the uncertainty within the data, the probabilistic U-Net was most suitable for this task. Several physical effects, which lead to uncertainty within the TFM image, were also found to be where the model segmentations had high uncertainty. These physical effects or regions are the shadow zone below the defects, where the ultrasound wave could not propagate, multiple reflections within the TFM image, which are mistaken for a defect, or the ambiguities within the amplitude of the defects when the defects are smaller than \(\lambda /2\). For all of these scenarios, the models show a higher uncertainty in the images. In the future, such uncertainty estimates can be used to identify sources of uncertainty within images of non-destructive measurements. Together with a conventional POD evaluation, this gives a more holistic evaluation of the limitations of an NDT method.

Data availability

The data will be shared upon reasonable request.

References

Rao J, Saini A, Yang J, Ratassepp M, Fan Z (2019) Ultrasonic imaging of irregularly shaped notches based on elastic reverse time migration. NDT & E Int 107:102135. https://doi.org/10.1016/j.ndteint.2019.102135

Felice VM, Fan Z (2018) Sizing of flaws using ultrasonic bulk wave testing: a review. Ultrasonics 88:26–42. https://doi.org/10.1016/j.ultras.2018.03.003

Grager JC., Mooshofer H, Eschler E, Grosse CU (2017) Optimierung der Total Focusing Method für die Prüfung von CFK-Werkstoffen, DGZfP-Jahrestagung, https://www.ndt

Hasanian M, Majid GR, Golchinfar B, Hossain S (2020) Automatic segmentation of ultrasonic TFM phased array images: the use of neural networks for defect recognition. In: Proceedings of the SPIE, sensors, and smart structures technologies for civil, mechanical, and aerospace systems. https://doi.org/10.1117/12.2558975

Holmes C, Drinkwater BW, Wilcox PD (2005) Post-processing of the full matrix of ultrasonic transmit-receive array data for non-destructive evaluation. NDT & E Int 38:701–711. https://doi.org/10.1016/j.ndteint.2005.04.002

Singh J, Tantr K, Mulholland A, MacLeod C (2022) Deep learning based inversion of locally anisotropic weld properties from ultrasonic array data. Appl Sci 12:532. https://doi.org/10.1016/j.ndteint.2005.04.002

Rao J, Yang F, Jizhong Mo H, Kollmannsberger S, Rank E (2022) Quantitative reconstruction of defects in multi-layered bonded composites using fully convolutional network-based ultrasonic inversion. J Sound Vib 542:117418. https://doi.org/10.1016/j.jsv.2022.117418

Mwema FM, Akinlabi ET, Oladijo OP (2019) The use of power spectrum density for surface characterization of thin films. Photoenergy Thin Film Mater. https://doi.org/10.1002/9781119580546.ch9

Grager JC, Mooshofer H, Grosse CU (2018) Evaluation of the imaging performance of a CFRP-adapted TFM algorithm. In: 12th European conference on non-destructive testing (ECNDT), Gothenburg, https://www.ndt.net/article/ecndt2018/papers/ecndt-0183-2018.pdf

Oruklu E, Lu Y, Saniie J (2009) Hilbert Transform Pitfalls, and Solutions for Ultrasonic NDE Applications. In: IEEE international ultrasonics symposium proceedings. https://doi.org/10.1109/ULTSYM.2009.5441903

Wang Z, Cui X, Ma H, Kang Y, Deng Z (2018) Effect of surface roughness on ultrasonic testing of back-surface micro-cracks. Appl Sci 8:1233. https://doi.org/10.3390/app8081233

Afanasiev M, Boehm C, van Driel M, Krischer L, Rietmann M, May DA, Knepley MG, Fichtner A (2019) Modular, and flexible spectral-element waveform modelling in two, and three dimensions. Geophys J Int 216:1675–1692. https://doi.org/10.1093/gji/ggy469

Boehm R, Heinrich W (2008) Störsignalminderung bei der Ultraschallprüfung durch ein Auswerteverfahren in Anlehnung an die Synthetic Aperture Focussing Technique (SAFT), DACH-Jahrestagung in St.Gallen

Van Pamel A (2015) Ultrasonic inspection of highly scattering materials, Imperial College London, PhD Thesis

Boehm R, Brackrock D, Kitze J, Brekow G, Kreutzbruck M (2010) Advanced crack shape analysis using SAFT. In: AIP conference proceedings, vol 1211, p 814. https://doi.org/10.1063/1.3362490

Viquerat J, Hachem E (2020) A supervised neural network for drag prediction of arbitrary 2D shapes in laminar flows at low Reynolds number. Comput Fluids 210:104645. https://doi.org/10.1016/j.compfluid.2020.104645

Czolbe S, Arnavaz K, Krause O, Feragen A (2021) Is Segmentation Uncertainty Useful?. In: Information processing in medical imaging, lecture notes in computer science, vol 12729. Springer. https://doi.org/10.1007/978-3-030-78191-0_55

Kohl S, Romera-Paredes B, Meyer C, De Fauw J, Ledsam JR, Maier-Hein K, Eslami S, Jimenez Rezende, D, Ronneberger O (2018) A probabilistic U-Net for segmentation of ambiguous images. In: Advances in neural information processing systems, vol 31. https://doi.org/10.48550/arXiv.1806.05034

Monteiro M, Le Folgoc L, Coelho de Castro D, Pawlowski N, Marques B, Kamnitsas K, van der Wilk M, Glocker B (2020) Stochastic segmentation networks Modelling spatially correlated aleatoric uncertainty. In: Advances in neural information processing systems, vol 33

Rentala VK, Kanzler D, Fuchs P (2022) POD evaluation: the key performance indicator for NDE 4.0. J Nondestruct Eval. https://doi.org/10.1007/s10921-022-00843-8

Kendall A, Badrinarayanan V, Cipolla R (2017) Bayesian SegNet: model uncertainty in deep convolutional encoder-decoder architectures for scene understanding. In: Proceedings of the British machine vision conference (BMVC). https://doi.org/10.5244/C.31.57

Czolbe S, Arnavaz K, Krause O (2021) probabilistic_segmentation, GitHub repository, GitHub (2021), https://github.com/SteffenCzolbe/segmen tation

Ronneberger O, Fischer P, Brox T (2015) U-Net: Convolutional networks for biomedical image segmentation. In: Medical image computing, and computer-assisted intervention, MICCAI, (2015) Lecture Notes in Computer Science, 9351. Springer. https://doi.org/10.1007/978-3-319-24574-4_28

Gal Y, Ghahramani Z (2016) Dropout as a Bayesian approximation: representing model uncertainty in deep learning. In: International conference on machine learning, New York, https://proceedings.mlr.press/v48/gal16.pdf

Seeber A, Vrana J, Mosshofer H, Goldammer M (2018) Correct sizing of reflectors smaller than one wavelength. In: 12th European conference on non-destructive testing (ECNDT), Gothenburg, https://www.ndt.net/article/ecndt2018/papers/ecndt-0177-2018.pdf

Acknowledgements

The authors thank Dr. Lion Krischer and Dr. Christian Böhm for their support in implementing the simulations in Salvus and Isabelle Stüwe for proofreading.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

SS: Conceptualization, methodology, implementation, analysis, writing; HW: Methodology, review, and editing; CUG: Review and editing.

Ethics declarations

Competing interests

All authors declare no competing interests.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schmid, S., Wei, H. & Grosse, C.U. On the uncertainty in the segmentation of ultrasound images reconstructed with the total focusing method. SN Appl. Sci. 5, 108 (2023). https://doi.org/10.1007/s42452-023-05342-7

DOI: https://doi.org/10.1007/s42452-023-05342-7