Abstract

The process of estimating the compaction parameters namely the maximum dry density (MDD) and optimum moisture content (OMC) through laboratory tests is time-consuming, labor-intensive, and costly. These issues can be avoided by developing prediction models that are able to accurately predict the compaction parameters from index properties that are easier to estimate in the laboratory. As a result, this study focuses on employing artificial neural networks (ANNs) for the prediction of the compaction parameters of aggregate base course samples from the grain size distribution and Atterberg limits. Additionally, different ANNs with different structures were tested in order to set the optimum hyperparameters that minimize the errors in the predictions. Specifically, this study investigates the impact of the number of hidden layers, number of neurons per hidden layer, and activation functions on the performance of the ANNs. Furthermore, the weight decay method, which is the most common regularization technique, was used during the training of the ANNs in order to avoid overfitting and control the changes in the connection weights. The results indicate that the optimum hyperparameter settings changes depending on the optimized output. Additionally, the ReLU activation function is the most stable function that produces the best predictions. Moreover, the results show that ANN approach represents a major innovative tool for accurately predicting the compaction parameters with R2 values of 0.826 and 0.911 for predicting the MDD and OMC.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In Egypt, flexible pavement is considered the most common pavement utilized in the construction of roads that almost the entire transportation network consists of flexible pavement. In general, flexible pavement consists of multiple layers (as shown in Fig. 1) that are responsible for safely transferring the traffic load to the soil without causing any failure. Specifically, the process of the structural design of asphalt pavement mainly focuses on safely transferring traffic loads without exceeding the soil strength in order to avoid any form of failure [1,2,3]. The first layer in the flexible asphalt pavement is the wearing surface and this layer is in direct contact with traffic. The main objective of the wearing surface layer is to provide some desirable characteristics such as rut resistance, drainage, friction, noise control, and smoothness besides with protecting underlying layers from surface water [4]. The wearing surface layer is followed by the base course layer which consists of crushed aggregate and is responsible for providing additional load distribution for the underlying layers. The aggregate base course layer is, then, followed by the subbase layer that is not always needed which is the case in Egypt as the subbase layer is rarely used. In general, the subbase layer is responsible for additional distribution of traffic loads. Generally, the compaction process of the different layers is crucial in order to achieve some specifications. For the aggregate base course layer, a relative compaction of 95% or higher is desirable in order to develop a stable layer to support other aggregate base course layers or support the placement of the wearing course layer.

The main objective of the compaction process is to reduce the air voids between the soil particles by adding water as a lubricating medium. This process focuses on improving the bearing capacity, slope stability, and shear strength of the different pavement layers and reducing some of the undesirable characteristics such as reducing undesirable settlements, permeability, and swelling [5]. The most common laboratory test used for estimating the desired compaction parameters is called Proctor test and it was proposed in 1933. In general, Proctor test mimics the field compaction conditions as the tested sample got subjected to a specific compaction energy during testing that is equivalent to the field compaction energy [6]. Generally, there are two procedures for Proctor test namely the standard and modified Proctor tests. The standard Proctor test is more convenient for normal traffic conditions, while the modified test is more convenient for the case of heavy loads such as the construction of airfield pavements [7]. During Proctor test, the relation between the dry density and water content should be drawn in a graph in order to find the maximum dry density (MDD) and the corresponding optimum moisture content (OMC) [8] as shown in Fig. 2. The OMC is the water content corresponding to the dry density at the peak that is called the MDD [9]. Estimating the OMC is essential for quantifying the amount of water needed in the field in order to achieve the required density. Similarly, estimating the MDD through laboratory tests is crucial in order to calculate the relative compaction value and make sure that the field dry density satisfies the specifications. In general, the relative compaction is calculated using the following equation:

On the other side, conducting Proctor test requires considerable time (around 2–3 days) and large amount of aggregate (around 20 kg for conducting one test). These drawbacks have motivated researchers from all over the world to propose alternative methods that are capable of predicting the compaction parameters. As a result, over the last few years, a large number of prediction models have been developed in order to estimate the compaction parameters from other soil properties that are easier and faster to estimate such as Atterberg limits. Most of these studies employ regression analysis in order to develop the prediction models, while a small number of papers employ some machine learning techniques in order to predict the OMC and MDD. Table 1 summarizes previous studies and shows the scope and main outcomes of every study. The table clearly shows that machine learning techniques outperform the traditional MLR technique as it offers highly accurate predictions. However, machine learning approaches are rarely used in the literature for the prediction of the compaction parameters. Additionally, all the previous studies focus on the prediction of the soil or the subgrade layer compaction parameters and no study, to the best of the author’s knowledge, focused on developing prediction models for the estimation of the aggregate base course compaction parameters. As a result, this paper focuses on developing ANN models for estimating the compaction parameters of the aggregate base course samples.

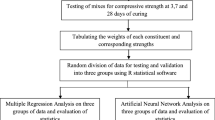

2 Methodology

2.1 Different types of the aggregate base samples

This study used aggregate base samples collected from different roads in Egypt. These samples were taken from base course samples already used in the construction of the asphalt pavement. As the result, a total of 64 aggregate base samples were collected. In Egypt, the Egyptian code for urban and rural roads (ECP, 2008) part (4) [29] defines multiple aggregate types or grades that are used for the construction of the aggregate base course. These different aggregate grades are summarized in Table 2 and Fig. 3. In 2015 and 2016, the highway and research laboratory at Cairo University, Egypt collected 216 aggregate base samples from different locations all over Egypt. These samples follow different aggregate grades as shown in Fig. 4. Thus, the dataset used in this study, that consist of 64 samples, contains samples that follow the four aggregate grades (A, B, C, and D) with approximately the same percentages. These samples were tested in multiple laboratory tests such as the standard Proctor test, specific gravity, Atterberg limits, and particle size distribution according to the British Standard practice (BS 1377) [30] in order to estimate the different properties of these samples.

2.2 Artificial neural networks

ANNs have been frequently used as a powerful tool for the prediction of different parameters in different problems in the engineering field and over the last few years ANNs have been employed in the prediction process for estimating essential parameters for the design of asphalt pavements. In the pavement engineering field, the study by Othman and Abdelwahab [3] utilized ANNs for the prediction of the optimum asphalt content from the aggregate gradation. Similarly, the study by Othman [31] proposed ANN models for the prediction of the hot asphalt mix properties from the aggregate gradation. In addition, the study by Ardakani and Kordnaeij [25] utilized ANNs for predicting some of the soil characteristics. Generally, ANNs try to mimic human brains and the general structure of any ANN consists of three main layers namely the input, hidden, and output layers. The main function of the input layer is to receive the input data or signals, while the main objective of the hidden layers is to receive and manipulate the received information. Then, the output later, which is the final layer, is responsible for generating the predictions of the ANN [32]. In ANNs, the neuron is the main unit that is responsible for receiving the signals and manipulating them using some activation functions. In general, the activation functions are the functions responsible for producing the predictions of the neurons and in the final layer these activation functions are responsible for producing the predictions of the ANNs. The calculations are mainly done at the node where firstly a weighted sum of the input signal is done by multiplying the input signal (X) by the connection weight (W) [33]. Then, the activation functions are used to produce the output signal using the weighted sum value as shown in the following equations:

where: X = the input signal or values; W = weights associated with ethe connections; B = bias values; H = the weighted sum of the input signal or values; F(H) is the activation function; and Y = the output value.

ANNs can be categorized into different types such as the feedforward and feedback ANNs. Furthermore, there are multiple training algorithms that can be used to train ANNs including the supervised and unsupervised learning. For the aim of this study, the backpropagation supervised algorithm is employed to train the ANNs and produce the predictions. In general, the process of training an ANN is iterative and during the iterations, the connection weights are updated in a way that minimizes a specific loss function. Previous studies have shown that the final predictions of the ANNs are affected by the hyperparameters such as the structure of the ANN (including the number of neurons per hidden layer and the number of hidden layers in the structure of the ANN), the activation function, the learning rate, and the training epochs. As a result, setting the optimum ANN the improve the performance of the ANN requires an extensive search. Thus, in this study, different number of ANNs with different hyperparameters will be tested in order to select the hyperparameters that produce the best predictions. Specifically, 240 different ANNs with different numbers of hidden layers, number of neurons per hidden layer, and with different activation functions will be investigated in order to choose the optimum setup that can accurately predict the compaction parameters namely the OMC and MDD.

In general, the activation function might take multiple expressions. In this paper, the impact of the most common three activation functions, that are widely used in the applications of ANNs on the asphalt pavement engineering field [31], on the performance of the ANNs will be tested. These three activation functions are:

The Rectified Linear Unit (ReLU) function

The sigmoid activation function

The hyperbolic tangent activation function

In this study, a total of 80 different ANNs with different architectures will be tested for every activation function starting from a simple ANN that consists of one hidden layer and one neuron in this hidden layer to a complex ANN with four hidden layers and 20 neurons per hidden layer. the general structure of the ANNs proposed in this study is shown in Fig. 5. For the inputs, previous studies have shown that both the grain size distribution and index properties are the main factors that affect the compaction parameters. Thus, the proposed ANNs will utilize the index properties and grain size distribution as the inputs to predict the compact parameters. The main advantage of using these inputs is that these inputs can be easily estimated through laboratory tests that are inexpensive and fast to conduct. As a result, this study uses the plastic limit (PL), liquid limit (LL), and grain size distribution for the prediction of both the OMC and MDD.

2.3 Experimental procedure and dealing with overfitting

The main objective of ANNs is to develop prediction models that perform well on both the training dataset and new data that was never introduced to the ANN. However, the process of training and developing an ANN that can generalize in new datasets is challenging [34]. The main challenge in the training process is that little training will result in an ANN that performs poorly on the training dataset and on new datasets. This model underfits the problem. On the other side, too much training of the ANN will result in an ANN that performs well on the training dataset and poorly on new datasets. In this case, the model overfits the problem. In general, dealing with underfitting is easy and can be solved by increasing the capacity of the model. For ANNs, capacity refers to the ability of the model to fit a variety of functions. Increasing the capacity of the model can be achieved by changing the structure of the model by increasing the number of the hidden layers or by increasing the number of neurons per hidden layer [35]. Previous studies show that underfitting can be easily addressed; however, it is more common to have an overfit model [36]. Overfitting can be discovered by monitoring the performance of the ANN during the training process by evaluating the ANN error on the training set and on a new dataset that was not used during the training process, this dataset is called the validation dataset. Traditionally, the performance is monitored by plotting the error curves for both the training and validation set during the training process. These curves are called the learning curves. In this case, overfitting is detected when the error curves show that the training set keeps dropping while the validation set error decreases at the beginning of the training process and then, at some point, it starts to increase again. In other words, as training progresses, the generalization error may decrease to a minimum and then increase again as the network adapts to the idiosyncrasies of the training data. An ANN model will overfit the problem if it has the sufficient capacity or complexity to do so. Thus, reducing the capacity of the model can reduce the likelihood of the model to overfit the training data. In general, the capacity or complexity of the ANN model is mainly defined by two factors: the structure of the ANN (in terms of the number of hidden layers and the number of neurons per hidden layer) and the parameters (in terms of the weights of the connections). Thus, overfitting can be reduced by reducing the complexity of the ANN by changing the network structure and changing the values of the connection weights [37]. In other words, the complexity of the ANN model can be varied by changing the number of adaptive parameters in the network and this process is called “structural stabilization”. The second principal approach to controlling the complexity of the ANN model is through the use of regularization which involves the addition of a penalty term to the error function. The simplest and most common regularization method is to add a penalty term to the loss function in proportion to the magnitudes of the weights in the model. This method is called the weight regularization, or the weight decay and it has shown very promising performance for ANN in a large number of studies over the decades [34,35,36]. In general, small weights indicate a less complex model that is more stable and less sensitive to statistical fluctuations in the input data. On the other hand, large weights tend to cause sharp transitions in the activation functions and thus large changes in output for small changes in inputs.

In this study, the number of data used for the development of the ANNs is small which indicates an overfitting problem while developing most of the ANN. Thus, in order to deal with overfitting, different ANNs with different structures will be tested starting from a very simple ANN with one hidden layer and one neuron per hidden layer to a more complex ANN with 4 hidden layers and 20 neurons per hidden layer. The selection of these structures is based on previous ANNs architectures employed in previous studies in the area of pavement engineering. These architectures start from a simple ANN with one hidden layer and one neuron per hidden layer and the architectures get complex to reach and architecture that consists of four hidden layers [38,39,40]. Additionally, the maximum number of neurons per hidden layer in previous studies is 20 neurons per hidden layer [39, 40]. Thus, in this study, the exploration or the search process starts from testing a very simple ANN with one hidden layer and one neuron per hidden layer to a very complex architecture that consists of four hidden layers and 20 neurons per hidden layer. Additionally, these different ANNs were tested in combination with the three different activation functions mentioned earlier (ReLU, sigmoid, and hyperbolic tangent). Furthermore, the weight decay method is used during the training of the ANNs in order to avoid overfitting and control the changes in the connection weights. In this case, the loss function has an additional penalty term that penalizes the ANN when the weights increase. Thus, the loss function used during the training of the ANNs takes the following format:

where: \({y}_{i}\) = the output of the ANN; \({t}_{i}\) is the actual output or the target value; \({\theta }_{i}\) = the connection weight; \(n\)= the number samples, \(m\)= the number of connection weight; and \(\lambda\) = the regularization hyperparameter.

The loss function used consists of two terms. The first term is the traditional loss function and consists of the square error of the prediction of the ANN. The second term is the penalization term, and it consists of two parts to penalize the model based on the magnitude of the weights. The second part is the size or summation of the connection weights, and the first part (\(\lambda\)) is the regularization hyperparameter which refers to the amount of attention the optimization process should pay to the penalty, and it is used to control the impact of the penalty on the loss function. The regularization hyperparameter takes a value between zero to one. If the regularization hyperparameter is too strong and close to one, the model will underestimate the weights and in turn underfit the data. On the other side, if the regularization hyperparameter is too weak and close to zero, the model will be allowed to overfit the training data. Setting the regularization hyperparameter value is mainly based on a grid search using trial and error in order to reach a value that balance the two terms in the loss function. Previous studies show that the regularization hyperparameter, traditionally, takes a small value that is ranged from 0.1 to 0.0001. Thus, in this study, seven regularization hyperparameters were tested (0.1, 0.05, 0.01, 0.005, 0.001, 0.0005, 0.0001) and a value of 0.001 was chosen as it was the value showing the best predictions across multiple ANNs with different structures. Additionally, initial weights are assigned randomly at the beginning of the training process and the training process lasts for 1000 iterations. The dataset used in this study was divided into three sets: training set (40 samples), validation set (12 samples), and testing set (12 samples). The training dataset is used for the ANN learning (or training) process, by adjusting the weight and bias vectors to minimize the differences between the outputs and the targets. The validation dataset is used to monitor the convergence of the ANN learning process, and it is often used to avoid overfitting so that the ANN model is applicable to new inputs beyond the ones used in training or validating the ANN model. The testing dataset is used to check the performance of the trained ANN once completed. The training, testing, and validation sets were randomly selected from the original dataset before the training of the different ANNs. Then, the same training, validation, and testing datasets were used for the training and development of the different ANNs. Additionally, the gradient descent optimization algorithm was adopted to update the connection weights in a way that minimizes the loss function during the training process. For the learning rate, the two studies by Ye et al. [41] and Tong et al. [42] have conducted a sensitivity analysis and studied the impact of different learning rates on the training process in similar applications for the prediction of some characteristics or parameters in the pavement engineering field. These two studies concluded that a learning rate with a value of 0.2 should perform better than other values. Thus, in this study, a learning rate of 0.2 was used to update the connection weights during the training process.

2.4 Evaluating the performance of the proposed ANNs

In general, the process of evaluating the different hyperparameters of the ANN should be based on the accuracy of every ANN in predicting the compaction parameters. Traditionally, the evaluation process is conducted based on some statistical indicators that present the accuracy of the predictions relative to the actual values. The coefficient of determination (R2) is the most common and widely used metric used to test the accuracy of any prediction model. Thus, the evaluation process of the different ANNs will be conducted based on the R2 values. In general, the R2 value can be calculated using the following formula:

where, \(h_{i}\) is the output of the ANN, \(t_{i}\) is the actual output or the target value, and \(\overline{{h_{i} }}\) is the average of the calculated ANN output.

3 Analysis and results

This section mainly focuses on presenting the result or the accuracy of the different ANNs tested with different settings. Thus, Tables 3 and 4 show the coefficient of determination of the different ANNs when used on the testing set for both the OMC and MDD. Additionally, in order to make it easy to compare the values in the tables, the cells are highlighted from red to green where the red cells indicate that the ANN has a low level of accuracy with a low R2 value. On the other side, the cells that are highlighted in green indicate that these ANNs have good performance and can accurately predict the prediction parameter with high R2 values.

3.1 Estimation of the MDD

This section mainly focuses on understanding the performance of the different ANNs in predicting the MDD values as shown in Table 3. The results show that ReLU activation function is the activation function that achieves the best performance and can accurately predict the compaction parameters. The best performance can be achieved using multiple different ANN architectures with the ReLU activation function and an R2 value of 0.826 can be achieved. On the other side, both the sigmoid and tanh activation functions perform poorly when compared with the ReLU activation function, indicating overfitting as the complexity of the ANN increases using more complex activation functions such as the sigmoid and tanh activation functions. Additionally, the results show that the ReLU activation function is the most stable function as most of the ANNs perform with similar performance. On the other hand, the performance of the different ANNs employing the sigmoid and tang activation function shows high variations in the performance and the ability in predicting the MDD. Moreover, there is no specific pattern for the performance of the different ANNs with respect to their structure in terms of the number of hidden layers or the number of neurons per hidden layer.

3.2 Estimation of the OMC

While the previous subsection focuses on evaluating the performance of the different ANN structures in predicting the MDD, this section investigates and studies the performance of the different ANNs in predicting the OMC. Thus, the coefficients of determination of the different ANNs are shown in Table 4. The results show that the ReLU activation is the best activation function that offers accurate predictions when compared with both the sigmoid and tanh activation functions. Additionally, the ReLU activation function is the most stable as the different ANN architectures perform similar with minor changes, unlike the reaming activation functions that show huge fluctuations in the performance across the different architectures. Moreover, the results show that the performance of the ANNs does not change with the increase in the number of hidden layers per neuron; however, minor improvements can be observed with the increase in the number of hidden layers in the ANN. The best ANN employing the ReLU activation function can predict the OMC with an R2 value of 0.82. On the other side, most of the ANNs using both the sigmoid and tanh activation functions perform poorly when compared to the ANNs using the ReLU activation function, which indicates overfitting. Additionally, for both the sigmoid and tanh activation functions, there is no specific pattern for the performance of the different ANNs with respect to their structure in terms of the number of hidden layers or the number of neurons per hidden layer. However, some ANNs that employ the tanh activation function can outperform the performance of the ReLU activation function. The best R2 value can be achieved using an ANN that consist of one hidden layer, five neurons in this hidden layer, and with the tanh activation function. This ANN can predict the OMC with R2 values of 0.911; however, finding this ANN requires an extensive search. Thus, it is recommended to use the activation function that can predict the OMC with high accuracy and with stable predictions across the different architectures. As a result, it is recommended to use the ReLU activation function.

3.3 The optimum architecture for predicting the two compaction parameters

While the previous two subsections mainly focus on finding the optimum ANN for a specific parameter (OMC or MDD), this section focuses on selecting the optimum ANN that can accurately predict both the OMC and MDD. Thus, the coefficients of determination for both the OMC and MDD were combined in a new R2 value called the balanced R2 and can be calculated as follows:

The balances R2 value was calculated for every ANN and the results are shown in Table 5. The results show that the ReLU activation function is the optimum activation function that can predict both the OMC and MDD. In general, the performance of the different ANNs using the ReLU activation is stable as the changes in the performance or prediction accuracy are minor from one ANN to the other. Additionally, the best ANN employs the ReLU activation function and has an R2balanced of 0.822. On the other side, the different ANNs using the sigmoid and tanh activation functions perform poorly when compared to the ReLU activation function and their performance are subjected to huge variations, which indicates overfitting. However, one of the ANNs using the tanh activation function outperforms all the other ANNs. This ANN has an R2balanced of 0.826 and consists of four hidden layers and 12 neurons per hidden layer (as shown in Fig. 6), which is slightly better than the ANN using the ReLU activation function. In order to compare the performance of the different ANNs, Table 6 shows the performance of the optimum ANN for predicting the OMC individually, the MDD individually, and both the OMC and MDD. The table shows that the optimum ANN for predicting the two compaction parameters has a good performance that is similar to the performance of the optimum ANN for predicting only one parameter individually. The table shows that the optimum ANN for predicting the two parameters can predict the MDD with 2.5% lower R2 values than the optimum ANN for predicting the MDD individually. Similarly, the optimum ANN for predicting the two parameters can predict the OMC with 6.1% lower R2 values than the optimum ANN for predicting the OMC individually. These differences are minor and indicate that the optimum ANN for predicting the two parameters perform similar to the optimum ANN for predicting every output individually.

3.4 Multiple linear regression (MLR)

One of the most common techniques that have been used in the literature for predicting the compaction parameters is MLR analysis. Thus, this technique will be used to validate the ANN models developed in this study. For the MLR model development, the backward elimination was adopted to exclude the insignificant variables. The backward elimination starts by developing a MLR model that contains all the input variables and then if one of the variables does not contribute to the regression model, this variable is eliminated, and a new regression model is developed. Then, this process keeps running until a model that only includes the significant variables, that contribute to the regression model, is developed. The backward elimination process followed while developing the regression models for predicting the OMC and MDD is shown in Tables 7 and 8 and Tables 9 and 10 summarize the final MLR models for predicting the OMC and MDD. Comparing the performance of the MLR models and the optimum ANN models shows that ANNs outperform the traditional MLR technique as shown in Table 11. The table shows that the MLR models can predict the OMC and MDD with low accuracy as the R2 values of the two models are 0.395 and 0.393 for predicting the MDD and OMC respectively. On the other hand, ANNs can predict the OMC and MDD with much better performance than the optimum ANN can predict the OMC and MDD with R2 values of 0.911 and 0.826.

4 Conclusion

This paper mainly focuses on providing ANN prediction models for estimating the compaction parameters of aggregate base course samples that were collected from different roads all over Egypt. A total of 64 samples were collected and tested in order to develop the ANN prediction models that use Atterberg limits and grain size distribution as the inputs to predict the OMC and MDD. Additionally, in order to set the optimum hyperparameters that produced the best predictions, different ANNs with different architectures and activation functions were tested. Moreover, the weight decay, which is the most common regularization method, was used to avoid overfitting of the ANNs during the training process. The main outcomes of this study can be summarized as follows:

-

The optimum ANN hyperparameters vary depending on the optimized output (OMC individually, MDD individually, or both) as shown in Table 12.

-

In general, the ReLU activation function is the most efficient activation function. However, one ANN that employs the tanh activation functions slightly outperforms (R2balanced = 0.826) the performance of all the ANNs that use the linear activation function (R2balanced = 0.822). However, the performance of the different ANNs using the tanh activation function is unstable and is subjected to large variations that require an extensive search. Additionally, the difference in the performance between the optimum ANN using the tanh activation function and the optimum ANN using the ReLU activation function is minor (0.004 in R2balanced). As a result, the ReLU activation function is considered the optimum activation function that offers the best stable performance.

-

Results of this study show that ANN is a useful technique for the prediction of the aggregate base compaction parameters with high accuracy.

-

The developed ANNs can be used for the prediction of the compaction parameters instead of the standard Proctor test with high accuracy (R2 = 0.911 and 0.826 for OMC and MDD). This approach saves significant time, material, and effort. As a result, these ANNs will be useful for the prediction of the aggregate base OMC and MDD for road construction projects in Egypt.

-

It is shown that the ANN approach outperforms the traditional regression analysis technique as the ANNs provide much more accurate results than the MLR models.

Abbreviations

- ANN:

-

Artificial neural networks

- OMC:

-

Optimum moisture content

- MDD:

-

Maximum dry density

- LL:

-

Liquid limit

- PL:

-

Plastic limit

- PI:

-

Plasticity Index

- MLR:

-

Multiple linear regression

- %P(i):

-

Percentage of the passing from sieve (i)

References

Rakaraddi PG, Gomarsi V (2015) Establishing relationship between CBR with different soil properties. In: IJRET, pp 182–188

Mousa KM, Abdelwahab HT, Hozayen HA (2018) Models for estimating optimum asphalt content from aggregate gradation. In: Proceedings of the institution of civil engineers—construction materials. https://doi.org/10.1680/jcoma.18.00035

Othman K, Abdelwahab H (2021) Prediction of the optimum asphalt content using artificial neural networks. Metall Mater Eng J Assoc Metall Eng Serbia AMES 27(2):227–242

HMA Pavement Mix Type Selection Guide. IS 128. National Asphalt Pavement Association and Federal Highway Administration. Lanham, MD and Washington D.C. respectively

Sridharan A, Nagaraj HB (2005) Plastic limit and compaction characteristics of finegrained soils. Proc Inst Civ Eng Ground Improv 9(1):17–22. https://doi.org/10.1680/grim.2005.9.1.17

Proctor RR (1933) Fundamental principles of soil compaction. Eng News Rec 111:13

Viji VK, Lissy KF, Sobha C, Benny MA (2013) Predictions on compaction characteristics of fly ashes using regression analysis and artificial neural network analysis. Int J Geotech Eng 7(3):282–291. https://doi.org/10.1179/1938636213Z.00000000036

Standard, A.S.T.M (2012) D 698: Standard Test Methods for Laboratory Compaction Characteristics Of Soil Using Standard Effort (12 400 Ftlbf/ ft3 (600 Kn-m/m3). ASTM International, West Conshohocken

Zainal AKE (2016) Quick estimation of maximum dry unit weight and optimum moisture content from compaction curve using peak functions. Appl Res J 2:472–480

Jumikis AR (1946) Geology of soils of the newark (NJ) metropolitan area. J Soil Mech Found ASCE 93(SM2):71–95

Jumikis AR (1958) Geology of soils of the newark (NJ) metropolitan area. J Soil Mech Found Div 84(2):1–41

Ring GW, Sallberg JR, Collins WH (1962) Correlation of compaction and classification test data. Highw Res Board Bull 325:55–75

Ramiah BK, Viswanath V, Krishnamurthy HV (1970) Interrelationship of compaction and index properties. In: Proceedings of the 2nd South East Asian conference on soil engineering 577, vol. 587

Hammond AA (1980) Evolution of one point method for determining the laboratory maximum dry density. In: Proceedings of the Icc, vol 1, pp. 47–50

Wang MC, Huang CC (1984) Soil compaction and permeability prediction models. J Environ Eng 110(6):1063–1083. https://doi.org/10.1061/(ASCE)0733-9372(1984)110:6(1063)

Sinha SK, Wang MC (2008) Artificial neural network prediction models for soil compaction and permeability. Geotech Geol Eng 26(1):47–64. https://doi.org/10.1007/s10706-007-9146-3

Al-Khafaji AN (1993) Estimation of soil compaction parameters by means of atterberg limits. Q J Eng GeolHydrogeol 26(4):359–368. https://doi.org/10.1144/GSL.QJEGH.1993.026.004.10

Blotz LR, Benson CH, Boutwell GP (1998) Estimating optimum water content and maximum dry unit weight for compacted clays. J Geotech Geoenviron Eng 124(9):907–912. https://doi.org/10.1061/(ASCE)1090-0241(1998)124:9(907)

Gurtug Y, Sridharan A (2004) Compaction behaviour and prediction of its characteristics of fine grained soils with particular reference to compaction energy. Soils Found 44(5):27–36. https://doi.org/10.3208/sandf.44.5_27

Sridharan A, Sivapullaiah PV (2005) Mini compaction test apparatus for fine grained soils. Geotech Test J 28(3):240–246

Di Matteo L, Bigotti F, Ricco R (2009) Best-fit models to estimate modified proctor properties of compacted soil. J Geotech Geoenviron Eng 135(7):992–996. https://doi.org/10.1061/(ASCE)GT.1943-5606.0000022

Günaydın O (2009) Estimation of soil compaction parameters by using statistical analyses and artificial neural networks. Environ Geol 57(1):203. https://doi.org/10.1007/s00254-008-1300-6

Bera A, Ghosh A (2011) Regression model for prediction of optimum moisture content and maximum dry unit weight of fine grained soil. Int J Geotech Eng 5(3):297–305. https://doi.org/10.3328/IJGE.2011.05.03.297-305

Farooq K, Khalid U, Mujtaba H (2016) Prediction of compaction characteristics of fine-grained soils using consistency limits. Arab J Sci Eng 41(4):1319–1328. https://doi.org/10.1007/s13369-015-1918-0

Ardakani A, Kordnaeij A (2017) Soil compaction parameters prediction using GMDH-type neural network and genetic algorithm. Eur J Environ Civ Eng. https://doi.org/10.1080/19648189.2017.1304269

Gurtug Y, Sridharan A, İkizler SB (2018) Simplified method to predict compaction curves and characteristics of soils. Iran J Sci Technol Trans Civ Eng 42(3):207–216. https://doi.org/10.1007/s40996-018-0098-z

Hussain A, Atalar C (2020) Estimation of compaction characteristics of soils using Atterberg limits. IOP Conf Ser Mater Sci Eng 800:012024

Özbeyaz A, Soylemez M (2020) Modeling compaction parameters using support vector and decision tree regression algorithms. Turk J Elec Eng Comp Sci 2020(28):3079–3093. https://doi.org/10.3906/elk-1905-179

ECP (Egyptian Code Provisions) (2008) ECP(104/4): Egyptian code for urban and rural roads. Part (4): Road material and its tests. Housing and Building National Research Center, Cairo, Egypt

Standard B 1377 (1990) Methods of test for soils for civil engineering purposes. British Standards Institution, London

Othman K (2022) Prediction of the hot asphalt mix properties using deep neural networks. Beni-Suef Univ J Basic Appl Sci 11:40. https://doi.org/10.1186/s43088-022-00221-3

Haykin S (1994) Neural networks, a comprehensive foundation. Prentice Hall, New Jersey

McCulloch W, Pitts W (1943) A logical calculus of the ideas immanent in nervous activity. Bull Math Biophys 5:115–133

Goodfellow I, Bengio Y, Courville A (2017) Deep learning (adaptive computation and machine learning series). Massachusetts, Cambridge, pp 321–359

Reed R, MarksII RJ (1999) Neural smithing: supervised learning in feedforward artificial neural networks. MIT Press, New York

Salman S, Liu X (2019) Overfitting mechanism and avoidance in deep neural networks. arXiv:1901.06566

Chris Bishop J, Bishop C, Hinton G, Bishop P (1995) Neural networks for pattern recognition. Adv Texts Econom 27(2):227–242

Othman K (2021) Deep neural network models for the prediction of the aggregate base course compaction parameters. Designs 5(4):78

Othman K, Abdelwahab H (2021) Prediction of the soil compaction parameters using deep neural networks. Transp Infrastruct Geotech. https://doi.org/10.1007/s40515-021-00213-3

Othman K (2022) Artificial neural network models for the estimation of the optimum asphalt content of asphalt mixtures. Int J Pavement Res Technol. https://doi.org/10.1007/s42947-022-00179-6

Ye W, Jiang W, Tong Z, Yuan D, Xiao J (2021) Convolutional neural network for pothole detection in asphalt pavement. Road Mater Pavement Des 22(1):42–58

Tong Z, Gao J, Han Z, Wang Z (2018) Recognition of asphalt pavement crack length using deep convolutional neural networks. Road Mater Pavement Des 19(6):1334–1349

Funding

Not applicable.

Author information

Authors and Affiliations

Contributions

(KO) literature search and review, research methodology, data analysis, and manuscript writing, data preparation and manuscript review.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

Availability of data and materials

The data that support the findings of this study are available from the corresponding author, [KO], upon reasonable request.

Code availability

The data that support the findings of this study are available from the corresponding author, [KO], upon reasonable request.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Othman, K. Estimation of the compaction parameters of aggregate base course using artificial neural networks. SN Appl. Sci. 4, 272 (2022). https://doi.org/10.1007/s42452-022-05158-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-022-05158-x