Abstract

Aiming to monitor wear condition of milling cutters in time and provide tool change decisions to ensure manufacturing safety and product quality, a tool wear monitoring model based on Bagging-Gradient Boosting Decision Tree (Bagging-GBDT) is proposed. In order to avoid incomplete tool state information contained in a single domain feature parameter, a multi-domain combination method is used to extract candidate characteristic parameter sets from time domain, frequency domain, and time–frequency domain. Then top 21 significant features are screened by eXtreme Gradient Boosting selection method. Synthetic Minority Oversampling Technique technology is integrated during feature selection to overly sample feature vectors, so that wear condition categories can be well balanced. Bagging idea is then introduced for parallel calculation of the gradient boosting decision tree and to improve its generalization ability. A Bagging-GBDT milling cutter wear condition prediction model is constructed and verified by public ball-end milling data set. Experiments show that random features and training samples selection can effectively improve prediction performance and generalization ability of prediction model. Our Bagging-GBDT model gains F1 score of 0.99350, which is 0.2% and 13.2% higher than the random forest algorithm and basic GBDT model, respectively.

Article Highlights

-

Candidate parameter sets are extracted from multi-domain (time, frequency, and time–frequency).

-

Topmost significant features are screened by XGBoost selection, and balanced via SMOTE technology.

-

Bagging idea is introduced for parallel calculation of the gradient boosting decision tree and to improve generalization ability of the prediction model.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

High-speed milling technology is an important branch of advanced manufacturing industry. Cutting tool acts directly on workpieces, so its wear state will affect machining accuracy, surface quality and production efficiency of products. In recent years, with the development of new-generation information technologies such as sensors and artificial intelligence, realization of intelligent monitoring of tool wear has become one of research hotspots [1,2,3].

A fitful model for study of tool wear is difficult to construct when all signal and factors are considered, thus scholars have to try various models. One direct method to perform tool health assessment is through logistic regression (LR). In Yu [4], prognostic features are selected with a penalization method, and prediction model formed via manifold regularization. Liu [5] proposes a novel unsupervised CNN-transformer neural network (CTNN) model for wear estimation. The transformer model and convolutional neural networks (CNN) are parallely used to process condition monitoring (CM) data. The effectiveness and superiority of this method are validated on a public dataset. Zhang [6] establishes a Least Square Support Vector Machine (LS-SVM) based wear model, which manifests that the LS-SVM model is capable of tool wear prediction at specified cutting conditions.

Above basic models are mostly targeting at a certain monitoring condition and lack of accuracy. Some scholars have been applying optimization method to achieve better prediction performance. To clearly obtain the grade of tool wear condition, Liao [7] optimizes the SVM classifier with a grey wolf algorithm, which reflects to be more accurate and with higher generalization ability. Li [8] aims at the problems of slow convergence of traditional artificial neural networks and ease of falling into local minimums. They adopted least square method to optimize a support vector machine model, so that the recognition accuracy of tool wear was higher than that of traditional neural networks. In García-Nieto [9], particle swarm optimization algorithm was used in kernel parameter setting of Supported Vector Machine (SVM), that resulted in accuracy of 95% for wear status evaluation. Li [10] established a evaluation model based on Random Forest (RF) algorithm with accuracy rate up to 99.1%. Krishnakumar [11] extracted statistical features from vibration signals to train one BP neural network, and the identification accuracy of high speed machining of titanium (Ti-6Al-4 V) alloy went to 95.4%.

Single method always lack the ability of generalization, and optimization of a single model is difficult and easy to be overfit. To evaluate synthetic performance of different methods, thoughts of integrated learning, such as eXtreme Gradient Boosting (XGBoost) [12, 13] and Bagging [14], are now imported for cutting wear prediction.

In this paper, multi-domain feature extraction is performed on machining data of one typical milling cutter. At the same time, aiming to reduce large dimensionality of different sensor signal features, which negatively affect complexity and robustness of machine learning algorithm, an advanced feature extraction methodology based on XGBoost feature significance analysis is proposed. In order to improve generalization ability and computing efficiency of basic Gradient Boosting Decision Tree (GBDT), we introduce the Bagging idea to form one Bagging-GBDT milling cutter wear prediction model. Compared with RF and basic GBDT prediction models, prediction performance of this modified model is going to be verified. To be more clear, we illustrate the structure of this paper in Fig. 1.

2 Methods

Our methods will follow the pipeline of data acquisition, dimension reduction and prediction.

2.1 Wear status data acquisition

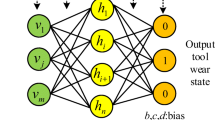

Tool wear condition recognition is a multi-type pattern recognition problem. In Fig. 2, acquisited sensor signals are preprocessed, and then analyzed in time domain, frequency domain and time frequency domain. Sensitive features of milling cutter wear condition are extracted and selected to form the optimal classification features. Finally, an evaluation model of milling cutter wear condition is constructed.The main steps are signal acquisition and data processing, feature selection and tool wear condition recognition.

This experiment uses data set released by US PHM Society (2010) High Speed Milling Machine Tool Health Prediction Competition [15]. Equipment and processing parameters in PHM experiments are shown in Table 1.

Figure 3 is an experimental system for monitoring wear condition of milling cutters. Cutting force signal (qx, qy, qz) and vibration signal (zx, zy, zz) are collected by installing a three-way dynamometer and an acceleration vibration sensor. Every cutter is used for 315 times of 108 mm face-milling cut along X axis under the exact same operation time. After each pass is completed, wear parameters of the 3 blades of the milling cutter are measured with a microscope.

2.2 Feature extraction and dimension reduction

2.2.1 Sensor signal features extraction

For that characteristic parameters in a single domain may contain incomplete information, several different characteristic parameters are extracted from time domain, frequency domain and time frequency domain to form a candidate characteristic parameter set [16]. The extracted time domain and frequency domain feature values are listed in Table 2.

Wavelet packet decomposition theory [17] is employed to obtain frequency energy characteristics in different time periods. Wavelet packet transform is a supplement and extension of wavelet transform. Except the ability of low frequency signal decomposition, it can also decompose high frequency region without any redundancy or omission, and extract the energy characteristics of the whole frequency band [18]. The wavelet packet basis db6 is selected to decompose cutting force and vibration signals in three layers. Decomposition coefficients of 8 subbands \({r}_{(3,i)},i={0,1},\ldots ,7\) of the low and high frequencies in the third layer are extracted. The wavelet packet energy of each subband is

\(N\) is the number of sampling points. There are 8 types of energy.

2.2.2 Feature dimensionality reduction

Feature selection is for determining the upper limit of a model, and avoiding dimensional disaster by removing redundant features, thereby improving the accuracy of the model [19]. Feature selection methods mainly include filtering, packaging and embedding. The feature selection method based on XGBoost is one of the packaging methods, in which feature importance is used as a reference to extract features that are more pertain to wear loss.

XGBoost improves the objective function of GBDT, and introduces a regular term in the loss function, which can accelerate convergence speed while improving generalization ability of a single tree.

The objective function model is:

\(l\left({y}_{i}, {\widehat{y}}_{i}\right)\) is square loss function of the true value \({y}_{i}\) and the predicted value \({\widehat{y}}_{i}\), \(\Omega \left({f}_{i}\right)\) is a regularization term.

where \(\gamma\) represents the difficulty coefficient of tree segmentation used to control the growth of the tree; T denotes number of leaf nodes; λ is a L2 regularization coefficient.

Finally, the available objective function is:

\({g}_{i}\) is the first derivative of the loss function \(l\left({y}_{i},{\widehat{y}}_{i}\right)\); \({h}_{i}\) is the second derivative of the loss function \(l\left({y}_{i},{\widehat{y}}_{i}\right)\); \({I}_{j}\in \left\{q\left({X}_{i}\right)=j\right\}\).

XGBoost feature selection depends on the importance of each feature's contribution to the model, which is the sum of times the feature is used for tree segmentation. XGBoost uses a greedy strategy for tree segmentation, traversing each feature, calculating gains before and after splitting all features according to formula (4), and selecting the feature with the largest gain as the split node.

2.2.3 Unbalanced data processing

Prediction of milling cutter wear condition has the problem of unbalanced data classification. There are more data of normal wear than initial and sharp wear, that will induce more samples being classified into categories with more samples, resulting in poor generalization of the model. Synthetic Minority Oversampling Technique (SMOTE) is often used for unbalanced data classification [12, 20]. The idea is to interpolate samples of the nearest K less-sample categories to form a new category and add it into the data set, so that different categories tend to be more balanced.

2.3 Wear prediction based on Bagging-GBDT

2.3.1 GBDT method

Gradient Boosting Decision Tree (GBDT) is an integrated learning method based on decision tree, where the relationship between attribute vector and prediction object are mapped. Starting from irregular and disorder sample data, an optimal partition rule is followed until the predicted value is obtained at the leaf node. GBDT learns Classification And Regression Tree (CART) model \({\mathrm{T}}({\mathrm{x}};{\uptheta }_{m})\) by fitting negative gradient of mean square error loss function, so that loss function can decrease gradually, and fitting function \({f}_{0}(x)\) infinitely close to the ground-truth value. The GBDT algorithm flow is as follows:

-

1.

Initialize the weak classifier

$${f}_{0}\left(x\right)=argmin\sum_{i=1}^{N}L({y}_{i},\rho )$$(6)\({y}_{i}\) is the true value of the sample; \(\rho\) is a constant.

L denotes loss function:

$$L\left(y,f\left(x\right)\right)={(y-f(x))}^{2}$$(7)The total loss of all N samples is

$${L}_{all}=\sum_{i=1}^{N}L({y}_{i},{f}_{m}({x}_{i}))$$(8)where \({f}_{m}({x}_{i})\) is the mth predicted value.

-

2.

Minimize the loss function

Negative gradient function is

$$-g\left({x}_{i}\right)=-\frac{\partial L({y}_{i},f({x}_{i}))}{\partial f({x}_{i})}$$(9)We construct a negative gradient fitting function \({\mathrm{h}}({\mathrm{x}}_{i};{\mathrm{\alpha }})\) to fit the negative gradient \(-g\left({x}_{i}\right)\).

$${\mathrm{\alpha }}_{m}=argmin\sum_{i=1}^{N}{(-g\left({x}_{i}\right)-\beta {\mathrm{h}}({\mathrm{x}}_{i};{\mathrm{\alpha }}))}^{2}$$(10)where \({\mathrm{\alpha }}_{m}\) and \(g\left({x}_{i}\right)\) denote residual parameter and gradient, \(\beta\) and \({\mathrm{\alpha }}\) are coefficients.

-

3.

Optimize weight factor

$${\beta }_{m}=argmin\sum_{i=1}^{N}L({y}_{i},{f}_{m-1}\left({x}_{i}\right)+\beta h({\mathrm{x}}_{i};{\mathrm{\alpha }}_{m}))$$(11)where \({\beta }_{m}\) is weight coefficient; \({f}_{m-1}\left({x}_{i}\right)\) is fitting function of the m-1st iteration.

-

4.

Update forecast function

$${f}_{m}\left(x\right)={f}_{m-1}\left(x\right)+{\beta }_{m}{h}_{m}({\mathrm{x}};{\mathrm{\alpha }}_{m})$$(12)\({f}_{m}\left(x\right)\) is fitting function of the current iteration; \({\beta }_{m}{h}_{m}({\mathrm{x}};{\mathrm{\alpha }}_{m})\) is the m-1st iterative negative gradient fitting function.

In the above there are dependencies between weak learners, so it is difficult to parallelize training data. For this reason, a Bagging ensemble learning method is employed to randomly select training samples to improve the generalization ability of GBDT model.

2.3.2 Construction of Bagging-GBDT algorithm

Bagging [21] is an ensemble learning framework, which realizes parallel computation by forming a series of independent base classifiers from different training samples, that can also improve algorithm accuracy and efficiency. In this paper, the idea of bagging is applied to the field of milling cutter wear condition prediction based on basic learning device of GBDT (Fig. 4).

BootStrap method [22] is adopted for updating sample distribution—original data is randomly sampled and put back, to diverse sample data of each classifier and improve performance of the ensemble classifier. For a given training set, T training feature subsets are formed by T-round bootstrap sampling, and then T weak classifiers are generated by GBDT learning on the T training feature subsets respectively. Finally, those weak classifiers are integrated into a strong classifier.

GBDT evaluation model is a series model. During its construction, weak decision tree model is established depending on the prediction results of the previous one. After adoption of Bagging algorithm, there will be no dependence between GBDT models of each base classifier. Through parallel computing mechanism of Bagging framework, calculation speed is greatly improved. At the same time variance of prediction error is reduced, and model generalization is improved.

2.3.3 Performance evaluation

The purpose of machine learning is to train samples for recognition of new samples. Common indexes to evaluate effect of machine learning include precision, recall and weighted harmonic mean. In Table 3, T and F denote correct and wrong prediction, P and N represent positive and negative categories, and the diagonal lines of confusion matrices TP and TN represent correct prediction results.

At the same time, Receiver Operating Characteristic (ROC) curve and Area Under the Curve (AUC) are often used to evaluate the quality of classifiers. ROC curve reflects a comprehensive prediction on relationship of sensitivity and specificity, where closer relationship will result in ROC curve to the upper left corner. Greater AUC value indicates higher prediction accuracy.

3 Results

Data processing and modeling work in this paper are based on Python programming language, combined with Scikit-Learn machine learning library, Pywalvelets wavelet library and SMOTE library for dealing with unbalanced data.

3.1 Data preparation

In the milling cutter wear monitoring experiment, a total of six milling cutters (c1–c6) under the same working conditions are collected for their whole life cycle signal data, including cutting force signals and vibration signals. Trend of the wear value of the c4 milling cutter throughout its life cycle is given as an example in Fig. 5.

In this case, the first to the 30th pass is defined as the initial wear stage, the 31st to 210th pass as the normal stage, and the 211th until 315th as the sharp wear stage. In the following, c4 milling cutter data are mainly analyzed.

Figure 6 shows The number of tool passes() for the c4 milling cutter during its whole cutting life cycle. Except qx_6 and qx_7, the other signals, qx_0 to qx_5, can be employed as indicators of the degree of wear.

According to the above signal analysis, we extract 12, 3 and 8 features from time domain, frequency domain and time–frequency domain respectively, for each cutting force or vibration signal along x, y and z direction. Totally (12 + 3 + 8) × 6 = 138 features are extracted along life cycle (315 passes) of one cutter, so size of the final feature matrix is 315 × 138.

Also taking c4 milling cutter as an example, organize all features into a feature matrix and then train the XGBoost feature selection model to evaluate importance of each feature in descending order (Fig. 7).

The top 50 features are shown in Fig. 7. The maximum value, 0.0765, represents that the cutting force along z-axis direction is the most important. Unto this analysis, we select the topmost important 21 features to form a new feature matrix. It is easy to find that 18 of those features represent cutting force signals, which also means the main factor affecting wear loss is cutting force. The final size of this feature matrix is now shrank into 315 × 21.

Let 0, 1 and 2 denote the three wear stages, initial wear, normal wear and sharp wear respectively. Figure 8 is comparison diagram of category distribution before and after adopting SMOTE method for the feature matrix. Originally samples of the three stages accounted for 9.5%, 57.1% and 33.3% respectively. After SMOTE, the number of samples of category 0 and 1 are increased significantly, and the resulted feature matrix size is increased into 540 × 21.

3.2 Prediction

During Bagging-GBDT model training, 5-folds cross validation is exerted on the above balanced dataset. To obtain optimized Bagging-GBDT parameters and compare performance between different models, all of the experimental prediction studies utilize the same data processed after SMOTE. The size of training samples input into Bagging-GBDT model is 540 × 21. Parameters of our model is optimized via Grid search method (Table 4).

Learning curve of Bagging-SVM and Bagging-GBDT model is shown in Fig. 9. Compared with Bagging-SVM, Bagging-GBDT gains a more higher classification accuracy, which is 5%.

Algorithms like traditional k-Nearest Neighborhood (KNN) and SVM, and other different algorithms as GBDT, Adaboost based on the Boosting idea and Random Forest based on the Bagging idea are included in our study. The experimental results are shown in Table 5.

In Fig. 10, SVM shows the worst prediction performance. The overall performance of integrated algorithms (such as RF, GBDT, and Adaboost) is better than that of a single learner (such as KNN and decision tree). F1 value of Bagging-GBDT is the highest, which is up to 0.9935. Compared with random forest and basic GBDT, the results are improved by 0.2% and 13.2% respectively.

ROC curves of RF and Bagging-GBDT models are shown in Fig. 11. The ROC curves of the Bagging-GBDT model on initial wear, normal wear and sharp wear are concentrated to the upper left corner, which also means it has a higher AUC value than RF.

4 Discussion

From wear loss trend of the c4 milling cutter (Fig. 5), it can be seen that the average wear amount of three cutting edges of one cutting tool has high degree of differentiation between three wear stages: initial wear, normal wear and sharp wear. This trend can also be easily reproduced from other milling cutters.

By wavelet analysis, we can easily partition and obtain new features from the angle of energy frequency. Those features, qx_0, …, qx_5 in Fig. 6, manifesting high correlation between frequency band and wear loss are effective factors and will be employed for further analysis.

Learning curve is for judging generalization ability of a model. When prediction accuracy of training set is greater than that of cross validation set, the model appears to be over-fitted. When prediction accuracy of both training set and cross validation set is low, the model shows underfit. The learning curve of Bagging-GBDT (Fig. 9) shows high prediction accuracy on both training set and validation set, which increases as sample number increases, that implies strong generalization ability of this model.

Both GBDT and Adaboost belong to forward stepwise algorithm, and GBDT shows stronger robustness. Compared with the random forest which also uses the Bagging idea, the prediction performance of Bagging-GBDT is better, mainly because of random feature selection and sampling with replacement scheme during decision tree construction.

5 Conclusion

In order to improve generalization performance of GBDT model, a Bagging algorithm is introduced for milling cutter wear condition prediction.

-

1.

First, synthesized candidate feature parameter sets are constructed from multiple domains. Then, 21 features closely related to wear condition are screened out according to importance evaluation via XGBoost algorithm. 18 of them are statistical values of cutting force signals, so the cutting force has the greatest impact on wear condition of milling cutter.

-

2.

During Bagging-GBDT modeling, randomly selected features and samples with replacement are input, and parallel computation is implemented, so our model tends to be more generalized. Experimental results of Bagging GBDT has F1 score of 0.9935, which is 0.2% and 13.2% higher than that of RF and GBDT respectively, which also verifies that our model is more generalizing and more reliable and stable, thus has more excellent prediction performance.

The research of milling cutter wear loss prediction based on Bagging-GBDT model has certain theoretical and practical significance. This paper offers a data-driven offline prediction model of milling cutter wear loss based on an open historical experimental data, which is obviously mismatch with lots of real working conditions. In our further studies, online real-time monitoring and prediction is going to be realized.

Availability of data and materials

The experimental data and models used during the current study are available from the corresponding author on reasonable request.

References

Zhou C (2020) Research on vibration measuring tool holder system and signals’ singularity analysis for online tool wear condition. Shandong University

Li L, Li S, Nv Z (2019) Review on machine tool failure state monitoring. Mech Electr Technol 125(4):110–114

Rmili W, Ouahabi A, Serra R, Leroy R (2016) An automatic system based on vibratory analysis for cutting tool wear monitoring. Measurement 77:117–123

Yu J (2018) Tool condition prognostics using logistic regression with penalization and manifold regularization. Appl Soft Comput 64:454–467

Liu H, Liu Z, Jia W et al (2021) Tool wear estimation using a CNN-transformer model with semi-supervised learning. Meas Sci Technol 32(12):125010

Zhang C, Zhang H (2016) Modelling and prediction of tool wear using LS-SVM in milling operation. Int J Comput Integr Manuf 29(1):76–91

Liao X, Li Y, Chen C et al (2020) Tool wear condition recognition based on kernel principal component and grey wolf optimizer algorithm. Comput Integ Manuf Syst 26(11):3031–3039

Li W (2013) Research on key technologies of tool condition monitoring and prediction in turning and milling. Southwest Jiaotong University, Chengdu

García-Nieto P, García-Gonzalo E, Vilán JA (2015) A new predictive model based on the PSO-optimized support vector machine approach for predicting the milling tool wear from milling runs experimental data. Int J Adv Manuf Technol 86:1–12

Li F, Xie F, Li N (2019) Evaluation of wear condition in end milling cutter with random forest algorithm. Mech Sci Technol Aerosp Eng 39(3):424–519

Krishnakumar P, Rameshkumar K, Ramachandran KI (2015) Tool wear condition prediction using vibration signals in high speed machining (HSM) of titanium (Ti-6Al-4V) alloy. Procedia Comput Sci 50:270–275

Xie M, Wu Y (2020) Research on big data analysis and prediction method for wer status of CNC milling cutter. Mach Tool Hydraul 48(21):105–110

Wu S, Shi Y (2019) Research on quality control method based on BP network and XGBoost. Manuf Autom 41(12):12–17

Bi Y, Han A, Zhang Z et al (2019) Study on short-term load forecasting model based on fuzzy Bagging-GBDT. Proc CSU-EPSA 31(7):51–56

Phm Society (2010) PHM data challenge [EB/OL]. https://www.phmsociety.org/competition/phm/10

Zhou Y, Sun W (2020) Tool wear condition monitoring in milling process based on current sensors. IEEE Access 8:95491–95502

Wang J, Cao X (2019) Research of milling cutter wear monitoring based on spindle vibration signal. Foreign Electron Meas Technol 38(2):103–108

Zhao J, Liu X, Hu R (2017) A coiflets wavelet method for real-timely detecting and locating transient surges of network voltage. Power Syst Prot Control 45(15):8–14

Feng X, Qu G (2019) Feature selection for high dimensional data based on deep learning and random forest. Comput Eng Des 40(9):2494–2501

Yusuf A, John A (2019) Classifiers ensemble and synthetic minority oversampling techniques for academic performance prediction. Int J Inform Commun Technol (IJ-ICT) 8(3):122–127

Breiman L (1996) Bagging predictors. Mach Learn 24(2):123–140

Cheng Y, Han J, Liu B, Li P, Ji K (2020) Prediction of transmission line galloping by one class SVM based on the Bagging algorithm for constructing a strong classifier. J Vib Ration Shock 39(9):152–158

Acknowledgements

We are grateful to theoretical assistance on tool wear mechanism from Zhu Ping of Guizhou Haochi Tech. Co. Ltd.

Funding

Award Number: 71761007, National Science Foundation of China; 72061006, National Science Foundation of China.

Author information

Authors and Affiliations

Contributions

Code developed by WL, YZ and HC; paper written by WL, YZ and WX; experiments conducted by WX and TZ; original idea presented by HC and TZ.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Ethical approval

This article does not contain any studies with human participants performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xu, W., Li, W., Zhang, Y. et al. Bagging-gradient boosting decision tree based milling cutter wear status prediction modelling. SN Appl. Sci. 3, 879 (2021). https://doi.org/10.1007/s42452-021-04856-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-021-04856-2