Abstract

Intelligent manufacturing requires significant technological interventions to interface manufacturing processes with computational tools in order to dynamically mold the systems. In this era of the 4th industrial revolution, Artificial neural network (ANNs) is a modern tool equipped with a better learning capability (based on the past experience or history data) and assists in intelligent manufacturing. This research paper reports on ANNs based intelligent modelling of a turning process. The central composite design is used as a data-driven modelling tool and huge input–output is generated to train the neural networks. ANNs are trained with the data collected from the physics-based models by using back-propagation algorithm (BP), genetic algorithm (GA), artificial bee colony (ABC), and BP algorithm trained with self-feedback loop. The ANNs are trained and developed as both forward and reverse mapping models. Forward modelling aims at predicting a set of machining quality characteristics (i.e. surface roughness, cylindricity error, circularity error, and material removal rate) for the known combinations of cutting parameters (i.e. cutting speed, feed rate, depth of cut, and nose radius). Reverse modelling aims at predicting the cutting parameters for the desired machining quality characteristics. The parametric study has been conducted for all the developed neural networks (BPNN, GA-NN, RNN, ABC-NN) to optimize neural network parameters. The performance of neural network models has been tested with the help of ten test cases. The network predicted results are found in-line with the experimental values for both forward and reverse models. The neural network models namely, RNN and ABC-NN have shown better performance in forward and reverse modelling. The forward modelling results could help any novice user for off-line monitoring, that could predict the output without conducting the actual experiments. Reverse modelling prediction would help to dynamically adjust the cutting parameters in CNC machine to obtain the desired machining quality characteristics.

Similar content being viewed by others

1 Introduction

Surface quality of the engineered parts plays pivotal role and determines their functional performance and service life [1]. Dimensional accuracy, surface finish, and subsurface integrity aspects are some of the important surface quality parameters and have direct influence on wear behaviour, tribological characteristics, fatigue strength and corrosion resistance etc. Note that, deteriorated surface quality of the machined part could eventually decrease the fatigue life, increase friction which in turn results in wear and noise during their operation. The deflection forces in turning of soft materials, such as aluminium alloys will result in poor cylindricity and circularity etc. (that is, form errors) [2, 3]. Machined shafts with high precision are used in almost all machines, satellites, submarine, automotive, aircraft etc. applications. Hence, control of form (i.e. cylindricity and circularity) errors is an important issue and given high importance in machining industries [4]. To meet the stringent industry requirements for economic machining in large production scale, Higher material removal rate with good surface quality and dimensional accuracy are major requirements of economic machining in industries. Note that, in machining parts with high tolerance and precision, there is close interdependency among the productivity and product quality (i.e. surface quality) [5]. Inappropriate methods (i.e. expert’s recommendation, guidelines of data handbook, and trial–error method etc.) of controlling machining parameters may result in reduced productivity and poor surface quality [6].

Four popular methods were used to develop models for surface roughness and material removal rate: theoretical or mathematical modelling based on physics of the process [7, 8], multiple regression technique [2], fuzzy interface system [9], and network modelling [10]. The assumptions were mandated in physics based models, while determining the accurate value of minimum un-deformed chip thickness related to the radius of round cutting edge [11]. These assumptions are difficult to measure and model accurately. Soft computing tools (neural networks, fuzzy logic, genetic algorithm and so-on) have been considered in machining industry from the last two decades. Soft computing tools pose the following capabilities.

-

1.

Excellent learning and generalisation capabilities from input–output data,

-

2.

The capability to recognise and understand the mechanics and dynamics of a process

-

3.

Capture the dependencies among multiple inputs and outputs that could approximate the future predictions

-

4.

Minimizes the need for practical experiments and expert’s decision

Soft computing tools will handle the complex and uncertainty conditions by developing the physics-based models to predict and optimise machining processes [12]. Note that, ANNs had made better predictions, compared to multiple regression models. This is due to their inherent ability to understand and handle uncertainties and model non-linearities with complex interactions [10]. It was found that the neural models had shown better prediction than fuzzy and multiple regression models for surface quality characteristics. Only surface roughness in turning was aimed in their research work, leaving scope for cylindricity and circularity type of form errors. The present research work is focused on soft computing-based approach to model machining quality characteristics, namely surface roughness, cylindricity error, circularity error, and material removal rate.

Despite many research efforts made in the past, developing the basic relationship between variables is still missing to analyse the metal cutting phenomenon [12]. This observation is valid till today, as most of the studies do not provide complete insights of the machining process, which has a vital role on machining quality (i.e. cylindricity error CE, circularity error Ce, and surface roughness SR) and economics (i.e. better material removal rate MRR) of machining. Modelling of turning process is still challenging due to their existing complexity and exiting intellectual problem, which led to the development of many fascinating models by various researchers. Modelling of surface quality and improving productivity is the primary objective of machining.

In the recent past, meta-heuristic algorithms are extensively used to solve complex modelling multi-input and multi-output manufacturing problems [13]. Meta-heuristic algorithms (i.e. GA, ABC, particle swarm optimisation (PSO), etc.) are popularly known for their robustness, faster convergence, solution accuracy and ability to overcome local minima while training back propagation neural network (BPNN) [14]. The choice of a specific algorithm to train neural networks is quite often difficult, because of different learning mechanisms and their tuning parameters, computational complexity and the problem domain. The learning mechanism in PSO will evolve by the evaluation of personal best position with neighbouring best position and global best positions for all particles as a single pattern [15]. This may lead to the trapping of PSO solution in local minima. The meta-heuristic algorithms (i.e. GA, PSO, ABC) were employed to tune NN that might ensure approximate gene expressions. Their research work showed that, GA tuned NN performed better than PSO and ABC tuned NN [16]. NN models (i.e. PSO-NN, GA-NN, BPNN) were developed for both forward and reverse modelling of welding suprocess [17]. Noteworthy, that BPNN was found to perform better than GA-NN and PSO-NN.

ABC algorithm had shown superior performance than PSO and DE, due to their better balances with exploration and exploitation processes that could solve many engineering design problems [18]. ABC algorithm successfully optimized the machining parameters compared to other methods (GA, harmony search and simulated annealing) [19]. Bat algorithm showed better performance than cuckoo search algorithm for benchmark classification problems when trained with neural networks [20]. ABC algorithm produced better performance than other nature inspired algorithms such as Bat algorithm, ant colony optimization and GA [21]. ABC algorithm is employed to train the neural networks for the various applications such as signal processing [22], classify data sets for machine learning community [23], and prediction of ripping production [24]. ABC trained neural network performed better prediction of ripping production compared to imperialism competitive algorithm (ICA) and PSO [24]. ABC-NN had performed better as compared to GA-NN, and BP-NN, while modelling the tube spinning process [25].

It is worth to mention that, the ANNs is dependent on training data. Therefore, introducing intermediate feedback connections to the network as a dynamic element could result in better prediction (recurrent neural network, that performance is RNN) [26]. More recently, NN tools (RNN, BPNN, GA-NN, ABC-NN) were employed for both forward and reverse modelling of different manufacturing processes [26,27,28]. Significant attention is required to develop the predictive tools for the practising engineers to obtain desired machining quality characteristics.

Intelligent manufacturing is a latest development, which can fulfil the primary requirements of Industry 4.0 (that is 4th industrial revolution). The concepts of data-driven modelling, big data analytics, data-enabled predictions, real-time information sharing for control and monitoring the process are used in Industry 4.0. This will enable increased flexibility in manufacturing, which could result in better quality and higher productivity [29]. Intelligent manufacturing also uses cyber-physical systems along with above mentioned concepts. This will allow computation, which integrates them with the physical processes and feedback loop to adjust dynamic situations and requirements based on experience and learning capacities [29, 30]. ANNs possess better learning and generalisation capabilities, that enable both off-line and online monitoring of the systems.

In the present work, the soft computing tools (BPNN, GA-NN, RNN and ABC-NN) are used to model the turning process. The input variables considered are nose radius (NR), feed rate (FR), depth of cut (DOC) and cutting speed (CS). Machining quality characteristics, such as SR, MRR, circularity error and cylindricity error are treated as output (responses). The modelling methods used in machining processes are generally classified as forward modelling and inverse modelling. The form error (i.e. cylindricity and circularity error), average surface roughness and MRR are predicted in the present work for the known set of cutting parameters (i.e. NR, DOC, CS, and FR), which is known as forward mapping. Four neural network-based models (BPNN, GA-NN, ABC-NN and RNN) are developed and their performance is compared among themselves and that of regression model in forward modelling. Whereas in inverse approach, the measured form error, average surface roughness and MRR are the input to the system and corresponding set of cutting parameters are predicted. The transformation matrix in conventional approach (that is, regression model) might be non-square and singular. Hence, it is difficult to apply this and carryout reverse modelling. The reverse modelling is carried out in the present work by using NN based approaches, namely BPNN, RNN, ABC-NN, GA-NN and their performance is compared among themselves.

The organisation of this paper is as follows: In Sect. 2, the phenomenological behaviour of turning process about modelling is presented. In Sect. 3, the proposed methodology of network (BPNN, GA-NN, ABC-NN and RNN) models with their working principles is discussed. In Sect. 4, we study the performance of developed models is studied which involves discussion of network training results and testing predictions. Finally, concluding remarks for the defined objectives are made in Sect. 5.

2 Materials and methods

The applications of high-strength Aluminium 7075 alloys are used in structural, aerospace and automotive applications, where good surface finish and high geometrical and dimensional accuracy are required. Therefore, the turning process for machining of Al 7075 alloy should fulfil these requirements with high productivity (in terms of MRR). Tungsten carbide inserts with three different nose radii are mounted on the commercial tool holder SCLCL2525M16. It is to be noted that, the values of nose radii are obtained from DOE design matrix. Form accuracy, surface roughness, and MRR are primary quality characteristics and governed by the machining parameters (i.e. CS, FR, NR, and DOC). Hence, the former are treated as responses (output) and later ones as variables (input) in the present work. CNC lathe machine is used for turning Al 7075 with the dimension of φ30 mm diameter and 50 mm height (refer Fig. 1a, b). RONDCOM 31C measuring instrument is used to check the circularity of the machined surface (refer Fig. 1c). COMET L3D Tripod type 3D scanner equipment is used to know the form accuracy of the cylindrical specimen (refer Fig. 1d). The surface roughness is measured at many distinct locations on the turned samples by using SurfCom Flex 50 instrument (refer Fig. 1e).

The control variables and operating range are finalized with the help of Ishikawa diagram and literature survey respectively [31]. Full factorial design, Central composite design and Box-Behnken designs are the popular response surface methodologies used to develop process models. Although full factorial designs test all possible combination of factors with multiple levels, they are limited to estimate the main and low-order interaction effects. Fitting the second-order polynomial to input–output relation may result in better fit and accurate predictions. Further, this will provide information on higher order interaction of parameters and indicate their effect on response [32]. CCD and BBD models are designed to estimate the full quadratic (all linear, square and interaction factors) effects for modelling [32]. The BBD models require a smaller number of experiments as compared to CCD and FFD. Although, BBD models are considered as labour efficient models, they do not test all factors at extreme levels. Thus, the feature extraction of the response variable (i.e. outputs), if lies at extreme levels of inputs may not be estimated properly with BBD. Therefore, regression models for machining of Al 7075 alloy in turning process are developed by utilizing experimental matrix based on CCD [31]. The input–output variables for both forward and reverse modelling of artificial neural networks are presented schematically in Fig. 2.

The response equations are derived by utilizing the experimental input–output data. Further, huge amount of data required to train NN based models is generated by utilizing the regression equations. The regression equations for the responses, namely, cylindricity error, circularity error, surface roughness and material removal rate developed via experimental data, DOE and CCD are presented in Eq. [1,2,3,4].

3 Soft computing tools

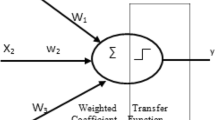

The theoretical background of GA, BP, ABC, tuned NN and RNN are discussed with a focus on basic working principles and functional behaviour. Three layered neural network models are developed to map the input–output relationships in turning process. In the present work, the input and output layer consists of four neurons representing the cutting parameters and machining quality characteristics respectively. In forward mapping, cutting parameters are treated as input neurons, whereas, machining quality characteristics represent the output neurons of the network. Conversely, in reverse mapping, machining quality characteristics are the inputs neurons and cutting parameters are the output neurons of the network. The neurons of the hidden layer are decided based on the minimum mean squared error value obtained during network parametric study. Linear (y = x), Log-sigmoid (y = 1/1 + e−ax) and Log-sigmoid (y = 1/1 + e−bx) transfer functions are used in input, hidden and output layer of the ANNs respectively. Linear and log-sigmoid transfer functions resulted in better prediction performances carried out earlier by authors [17, 25,26,27,28, 33, 34]. [Vij] and [Wjk] are the connection weight strengths between the input-hidden layers and hidden-output layer, respectively. The [V and W] weights are generated randomly in the ranges of zero to one. Terms, a and b will represent the transfer function coefficients, associated with the hidden and output layer, respectively. The term ‘x’ denotes the input value to the neuron. The present work has utilized 1000 (i.e. 27 experimental and 973 artificially generated through Eqs. 1 to 4) data set to tune the ANNs parameters. The following subsections will explain the development of neural network models for the Al 7075 turning process.

3.1 NN trained with BP algorithm (BPNN)

Neural network trained with BP algorithms are extensively reported in the literature to model complex input–output relationships. BP algorithm uses gradient search method to optimize NN parameters. BP algorithm uses a supervised learning mechanism. Huge amount of training data (1000 input–output data set) is passed through NN in batch mode. The network training is performed to update or modify the weights by altering network parameters (i.e. transfer function constants, bias, learning rate, alpha, hidden neurons). Mean squared error (MSE) is used as the criterion to evaluate the performance and the same is computed by utilizing Eq. 5.

Terms, Tk l and Ok l denotes the target and the network predicted values respectively for the kth output neuron and lth training scenario. L and N will represent the set of training scenarios and some network outputs. The weights of the network are updated with the help of generalised delta rule, adopted in the back-propagation algorithm and the same is presented in Eqs. 6, 7.

The terms, t and η will denote the iteration number and the learning rate, respectively. The computation of chain rule of differentiation (\({{\partial E} \mathord{\left/ {\vphantom {{\partial E} {\partial W_{jk} }}} \right. \kern-0pt} {\partial W_{jk} }},{{\partial E} \mathord{\left/ {\vphantom {{\partial E} {\partial v_{ij} }}} \right. \kern-0pt} {\partial v_{ij} }}\)) is shown below (refer Eqs. 8, 9).

The synaptic or connecting weights are updated with the help of the back-propagation algorithm. The equation used to update the weights is presented in Eq. 10.

3.2 NN trained with GA (GA-NN)

GA searches for optimal solutions in a multi-dimensional search space at many spatial locations. GA hits the global optimal solutions due to their stochastic search mechanism, which enables the user to obtain the optimal solution for complex manufacturing problems. In GA-NN, GA replaces BP algorithm (i.e. BP algorithm use gradient descent learning mechanism, which poses more probability to get trapped at the local solution) to train the neural networks. GA starts with initialising a set of the population of solutions, represented by chromosomes generated heuristically for the problem domain. Later, genetic parameters (i.e. crossover and mutation) are altered to locate the possible global solutions. In each successive generation or iteration, the individuals in the current population are decoded and examined according to the fitness function. The best individuals are selected based on their fitness function to form a new population (the generated solution might be comparatively better than the previous population). The best population, thus selected naturally undergoes the mutation and cross-over operation to generate the off-springs. The generated new off-springs are replaced with few or all population according to fitness value. This mechanism of learning creates hope that the generated new population could perform better than the previous one. This mechanism is repeated till the acceptable solutions, or pre-determined condition is met. In the auxiliary hybrid system of GA-NN, GA optimizes the network parameters (i.e. synaptic weights). During network training, GA-string will supply the information of network parameters, represented with 5-bit. Typical GA string used in the present work is shown below.

The appropriate choice of genetic algorithm parameters (i.e. hidden neurons, cross-over probability, mutation probability, size of population and generation) could result in optimal global solutions. A set of 1000 data points are supplied to the network in batch training mode represented in the form of GA-string could help to optimize the genetic algorithm parameters based on the computation of MSE. The computed MSE for all the responses is treated as the fitness value of GA-string. Noteworthy, that the bit-wise mutation, uniform cross over and tournament selection are employed to update and adjust the solutions.

3.3 NN trained with ABC (ABC-NN)

ABC is a metaheuristic, nature-inspired algorithm, which uses the artificial bees of a colony to locate the global solutions. ABC mimics the intelligent behaviour of honey bees looking for a quality food source. In ABC, honey bees are the essential components, wherein they split the duties and do share information correspond to food sources between many individuals. Employed bees, onlooker bees and scout bees are the three major groups of artificial bees in ABC. The scout bees do their duty to hunt for possible new food sources. The employed bees do share the food source information with onlooker bees which are waiting in the hive. If the information contains many food sources, the onlooker bees will decide which food source need to be exploited. In ABC, the position of food source represents the feasible solution to the optimization problem. The quality of food source at a particular location will be the fitness function value. The principle or practice of honey bees, searching the food sources and their positions are used to determine an optimal solution in multi-dimensional search space. ABC follows the necessary steps to update and locate the optimal position of food sources. The phases used in ABC are briefly described in the following sub-sections.

3.3.1 Initialization phase

The number of solutions in the population corresponding to the employed and onlooker bees are generated at random (refer Eq. 11).

3.3.2 Employed bee phase

After completion of the initialization phase, the fitness of all individual food sources is evaluated based on updating and selecting the feasible solutions by limiting the local (i.e. suboptimal) solutions. To update the solutions, all the employed bees will choose a new position of competitor food source. In this stage, the employed bees will generate an updated solution by searching the neighbourhood of its earlier food source. Equation 12 is used to determine the updated position of the food source.

The new feasible solution (i.e. Vij) is obtained from the previous solution (Xij), and a neighbour solution (Xkj) selected at random. The term, \(\theta\) represents the adaptively generated random number, whose value is distributed uniformly in the range of [− 1 to + 1]. Note that, if the generated new solution offers a better fitness function value than the current or previous one, then the employed and onlooker bees will replace with a new solution. Moreover, the employed bees will leave their position, move towards the new food source and share the information with the onlooker bees.

3.3.3 Onlooker bee phase

Here, each onlooker bee selects the feasible solution (i.e. food source), based on the fitness value information gathered from the employed bees. The probability (Pi) of onlooker bees in selecting the feasible solutions (i.e. new food source) is dependent on the relative fitness of each solution (refer Eq. 13).

3.3.4 Scout bee phase

Finally, scout bees are employed to evade local solutions. Note that, if the employed bee fails to locate the food source, which results in an abandoned current solution. During this stage, the employed bee tends to become the scout bee to search new solutions generated at random.

In ABC-NN, ABC replaces BP algorithm (i.e. in many cases the BP algorithm get trapped at local solutions, when the nature of error surface is multi-modal) to train the neural networks that could search globally the optimal weights [14]. In ABC-NN, the prediction performances dependent on minimum mean squared error rely on the appropriate choice of parameters (hidden neurons, size of population or food source positions, and maximum number of cycles or iterations) [18, 22, 25, 35, 36]. Thereby, 1000 input–output data sets are passed to neural networks to determine optimal weights after tuning the afore said that could resulted in minimum MSE. The trained ABC-NN is tested with random ten experimental cases (“Appendix”).

3.4 Recurrent neural network (RNN)

RNN uses a recursive loop to capture the dynamics of the process, through intermediate feedback connections [37, 38]. This learning mechanism will enable the RNN to predict the outputs in any process [33]. If the functions that estimates the network output is differentiable and the target output is well-known, then the BP algorithm is found to be more suitable to train the RNN. The structure of ANNs parameters (such as hidden neurons, weights, alpha, learning rate, sigmoid transfer function and their constants, bias value) is same as that of BPNN, except the intermediate feedback connection in the learning mechanism [26, 34, 39]. In turning process, the input behaves non-linearly with the output. The error prediction (difference value of the target and network output) information of all previous runs must be utilised in deciding the changes to be made for successive trials. RNN is trained with backpropagation algorithm which works with a steepest descent method (refer to Fig. 2). The MSE computation is done by utilising Eq. 5.

3.5 Data collection for network training and testing

ANNs are data-dependent models, wherein the prediction performances will rely on the quality and huge quantity of input–output training data. Conducting practical experiments to obtain huge quantity of training data is impractical. The well-planned statistical central composite design of experiments is used to conduct experiments. Table 1 provides information on variables and their operating range used in conducting experiments. The regression equations developed, tested statistically and validated through test cases are used to generate training data [31]. Supervised learning ANNs, such as BPNN, GA-NN, ABC-NN, and RNN are trained with one thousand set of input–output data.

To test the performance of developed neural network models, few practical experiments are conducted and input–output data is collected. Ten input–output data sets. In CNC turning equipment, there exists a provision to set the parameters between their respective levels of operating variables. The experimental data collected from ten experiments are used to test the prediction performances of neural network models. The best models are selected for both forward and reverse mappings based on the average absolute percent deviation value.

4 Results and discussion

This section discusses the results obtained in forward and reverse modelling of machining process. The detailed parametric study is carried out to optimize neural network structure (parameters) in all NN models. The optimized NN are used to make prediction of machining quality characteristics (forward mapping) and cutting parameters (reverse modelling). Test cases are used to test performances of all NN models in both forward and reverse modelling (refer “Appendix”). In forward mapping, the results of predictions are compared among the neural networks (BPNN, GA-NN, ABCNN and RNN) with that of statistical design of experiments (CCD). In reverse mapping, the results are compared among the neural network models (BPNN, GA-NN, RNN, ABCNN).

4.1 Parameter study results of forwarding modelling

Forward modelling will predict the outputs (i.e. CE, Ce, SR, and MRR) for the known set of inputs (i.e. CS, FR, DOC, and NR). BPNN, GA-NN, ABC-NN and RNN parameters are optimised by conducting systematic parameter study. The mean squared error varied for each parameter of BPNN, GA-NN, ABC-NN and RNN is presented in Figs. 3, 4, 5 and 6. Note that the parameter study is conducted to optimize the network parameters and weights. Mean squared error (MSE) is used to test the performance of training algorithms. Many authors [25,26,27,28, 33, 34] have made effort to reduce the mean squared error value while training neural networks. It is to be noted that many times the prediction performance is found to be good. In the present work, neural networks terminate the training when they met either of the following conditions,

-

1.

When the difference in the mean squared error values of previous and current iterations are less than 5 × 10−9.

-

2.

When the number of iterations reaches to a maximum of 1 lakh.

The results of optimal parameters obtained after conducting the parametric study are summarized in Table 2 (BPNN, RNN) and Table 3 (GA-NN, ABC-NN).

4.2 Comparison of ANNs and CCD model performances in forward modelling

Response-wise prediction of machining quality characteristics by neural network (BPNN, GA-NN, ABC-NN, RNN) and CCD models are discussed below.

The model (CCD, BPNN, GA-NN, ABC-NN, and RNN) predicted values of cylindricity error are compared with target (experimental) values obtained from ten test cases (refer “Appendix”). Scatter plots and percent deviation plots are made to carry out the said task. The BPNN and RNN prediction performances are found comparable, with major data points fall on the best-fit line (refer Fig. 7a–e). Note that, percent deviation in predicting cylindricity error by the five different models have followed a similar trend with the data points lie both on positive and negative sides (refer Fig. 8a). RNN has predicted percent deviation values with narrow ranges and close to reference zero line for cylindricity error compared to other tested models (refer Fig. 8a and Table 4).

Similar analysis is made for the rest of responses, namely circularity error, surface roughness and material removal rate. Response-wise range of percent deviation for each model is summarized in Table 4. Response-wise percent deviation for all models are presented in Fig. 8. It is interesting to note that the pattern of deviation is found similar for all models. Average absolute percent deviation values for all responses obtained through BPNN, RNN, GA-NN and ABC-NN in forward modelling is presented in Table 5. It is to be noted that, maximum value of average absolute percent deviation among all neural network models is found to be equal to 10.91 by GA-NN for response cylindricity error. However, the neural network based RNN has resulted in a minimum value of average absolute percent deviation for all responses. This indicates that, all predictions made by different models are within the acceptable range for machining industries.

The grand average absolute percent deviation values in prediction, obtained for all responses by CCD, ABC-NN, BPNN, RNN and GA-NN models are presented in Fig. 9. The model RNN has outperformed other models (BPNN, ABC-NN, GA-NN and CCD) in making predictions. The better performance of RNN as compared to other NN models might be due to to the presence of feedback mechanism. The computed mean squared error value of the trained neural network is found equal to 0.000201 for RNN, 0.000347 for BPNN, 0.000642 for GA-NN, 0.000508 for ABC-NN, respectively. The ABC-NN performance is found to be better than GA-NN. This might be due to better balance in conducting a local and global search by the bees (onlooker, scout, employed). However, GA uses mutation which simply provides a variety of solutions to the population after updating solutions. Thus, it may be concluded that feedback units of recurrent neural network urged as the best prediction model compared to other NN models. Therefore, meta-heuristic algorithms (GA and ABC) may not guarantee the better prediction always in a multi-modal and multi-variable problems. However, the performance purely depends on the capability of the algorithm to hit global minima solutions (i.e. nature of error surface or minimum mean squared error).

4.3 Reverse mapping

Reverse modelling aims to predict the input parameters for the desired machining quality attributes. In reverse modelling, the prediction performances of neural network models (BPNN, GA-NN, ABC-NN, and RNN) are compared among themselves. Note that, the network structure remains similar to that used in forwarding mapping, except the machining quality characteristics are treated as inputs and cutting parameters as outputs to the network. Also, training and test data used are the same in both forward and reverse modelling. The four ANNs model parameters are kept same as that forward mapping network parameters and their operating range are varied with incremental step size.

4.3.1 Parameter study results in reverse mapping

The BPNN architecture, in reverse modelling is shown in Fig. 2. The parameter study has been carried out to modify and alter the weights to a minimize the mean squared error during training. The optimised network parameters at the end of training for both BPNN and RNN are carried out and the results are presented in Table 6.

The parametric study for GA tuned neural network and ABC-NN is also carried-out and the results obtained are presented in Table 7.

4.3.2 Comparison of neural network model prediction performances in reverse mapping

In reverse mapping, the prediction of cutting parameters by neural network (BPNN, GA-NN, RNN, ABC-NN) models is made. The same ten test cases used in forward mapping are employed to test the performances of all neural network (BPNN, RNN, GA-NN, ABC-NN) in reverse modelling also. The percent deviation in predicting all inputs parameters (variables) is presented in Fig. 10. It is to be noted that pattern of deviation is found to be similar for all neural network models. The percent deviation values found to lie on both sides of zero reference line for all parameters and neural network models. The maximum percent deviation in prediction of input parameters on both positive and negative side are presented in Table 8. Most of the maximum percent deviation values are found to lie within ± 20%, indicating the predictions are within acceptable range for machining process.

The performances of NN models is compared response-wise in terms of average absolute percent deviation and the results are presented in Table 9. The performance of ABC-NN is found better for the parameters, cutting speed, depth of cut and nose radius, whereas RNN has shown better results for the parameter feed rate. The grand average absolute per cent deviation in making prediction of cutting parameters is computed and presented in Fig. 11. It has been observed from Fig. 11 that the ABC-NN has outperformed all other NN models in reverse modelling. Few test sample results of cylindricity error and circularity error are presented in Fig. 12. The better performance of ABC-NN might be due to better balance achieved while conducting a local and global search by the bees (onlooker, scout, employed). However, the performance purely depends on the capability of the algorithm to hit global minima solutions (i.e. nature of error surface or minimum mean squared error). The MSE obtained at the end of training (1000 data points) is found equal to 0.00604, 0.00708, 0.0153 and 0.0488 for ABC-NN, RNN, GANN, BP-NN, respectively. Although input–output data used to develop forward and reverse models viz. neural networks are same, but prediction accuracy varies to a larger extent. Low prediction accuracy was observed in reverse modelling might be due to the multi-modal nature of input–output of a turning process. In other words, for the set of desired output, there is multiple combinations of inputs due to multi- values modal nature of the input–output system (i.e. low values of one input and high of another input and vice versa could result in same output value).

5 Conclusions

In the present work, neural network based intelligent modelling is applied to Al 7075 turning process. The cutting parameters, namely cutting speed, feed rate, depth of cut and nose radius are considered as input, whereas machining quality characteristics, such as metal removal rate, surface roughness, cylindricity error and circularity error are treated as the output of machining process. Forward and reverse modelling is carried out by developing NN based BPNN, RNN, GA-NN and ABC-NN models. The following conclusions can be drawn from the present work-

-

1.

BPNN, GA-NN, RNN and ABC-NN tools are applied in both forward and reverse modelling of turning process. The ANNs structure and parameters are optimized by utilizing 1000 dataset obtained through regression equations. Further systematic study is carried out for all NN models (BPNN, GA-NN, RNN and ABC-NN). Batch mode of training is employed with an objective to minimize mean squared error.

-

2.

Forward modelling is carried out to predict the different machining quality characteristics, which are conflicting in nature (i.e. minimize: SR, CE and Ce and maximize: MRR). Neural network model (BPNN, GA-NN, RNN, and ABC-NN) prediction performances are compared among themselves and with that of regression equations obtained through CCD and DOE. RNN performance is found to be comparable with that of BPNN and much better as compared to GA-NN and ABC-NN. RNN uses self-feedback loop (i.e. feedback weights) to dynamically adjust the network parameters to minimize error. BPNN has outperformed both GA-NN and ABC-NN, regarding prediction accuracy in forwarding modelling. This might be due to nature of error surface. Thus, meta-heuristic algorithms (ABC and GA) may not guarantee better prediction always, as their performance depends on initialized and optimized weights, nature of error surface and optimized network parameters. All ANN models are tested for their performance by utilizing ten test cases (that is comparison with experimental results). It is to be noted that, all ANN models are capable to make prediction of machining quality characteristics (responses) within acceptable range of machining practices. The grand average absolute percent deviation values are found to be equal to 7.93, 7.76, 7.16 and 8.10 for ABC-NN, BPNN, RNN and GA-NN respectively.

-

3.

NN base BPNN, RNN, GA-NN and ABC-NN are developed in reverse modelling to predict cutting parameters from a set of known machining quality characteristics. The same 1000 dataset used in forward modelling is utilized in reverse modelling also. ABC-NN made marginally better prediction than RNN and far better compared to GA-NN and BPNN. RNN predictions are found better due to the embedded dynamic feedback system. ABC uses unique search mechanism which updates only in two phases, thus achieve better balance while conducting a local and global search by the bees (onlooker, scout, and employed) as compared to GA. Unlike GA, ABC uses few tuning algorithm-specific parameters and capable to hit global minima. GA-NN predictions are better than BPNN which might be due to local minima problem associated with BPNN. The grand average absolute percent deviation in modelling is found to be equal to 12.0, 10.67, 9.51 and 8.9 for BPNN, GA-NN, RNN and ABC-NN.

-

4.

The prediction accuracies in forward modelling are found to be much better than reverse modelling. It is to be noted that, for the set of desired output, there is multiple combinations of inputs due to multi-modal nature of the input-output system (i.e. low values of one input and high values of another input and vice versa could result in same output value). These reverse modelling tools are very-much useful in online monitoring process.

-

5.

The present research work will be very-much useful for the machining industries, where the industry personnel are interested to know the cutting parameters, resulting in best quality of machining. The methodology and results may be referred by the industries to set machining parameters and obtain good quality of machined part (specially for precision machining). Moreover, the reverse modelling may be employed to on-line monitoring and dynamically vary the cutting parameters.

References

Grzesik W (2016) Advanced machining processes of metallic materials. Elsevier, Netherland

Asiltürk I, Çunkaş M (2011) Modelling and prediction of surface roughness in turning operations using the artificial neural network and multiple regression method. Expert Syst Appl 38(5):5826–5832

Mustafa AY, Ali T (2011) Determination and optimization of the effect of cutting parameters and workpiece length on the geometric tolerances and surface roughness in turning operation. Int J Phys Sci 6(5):1074–1084

Fan C, Collins EG, Liu C, Wang B (2003) Radial error feedback control for bar turning in CNC turning centres. J Manuf Sci Eng 125(1):77–84

Kant G, Sangwan KS (2014) Prediction and optimization of machining parameters for minimizing power consumption and surface roughness in machining. J Clean Prod 83:151–164

Makadia AJ, Nanavati JI (2013) Optimisation of machining parameters for turning operations based on response surface methodology. Measurement 46(4):1521–1529

He CL, Zong WJ, Zhang JJ (2018) Influencing factors and theoretical modeling methods of surface roughness in turning process: state-of-the-art. Int J Mach Tool Manuf 129:15–26

Tomov M, Kuzinovski M, Cichosz P (2016) Development of mathematical models for surface roughness parameter prediction in turning depending on the process condition. Int J Mech Sci 113:120–132

Palani S, Natarajan U, Chellamalai M (2013) On-line prediction of micro-turning multi-response variables by machine vision system using adaptive neuro-fuzzy inference system (ANFIS). Mach Vis Appl 24(1):19–32

Rao KV, Murthy PBGSN (2018) Modeling and optimization of tool vibration and surface roughness in boring of steel using RSM ANN and SVM. J Intell Manuf 29(7):1533–1543

Grzesik W (1996) A revised model for predicting surface roughness in turning. Wear 194(1–2):143–148

Chandrasekaran M, Muralidhar M, Krishna CM, Dixit US (2010) Application of soft computing techniques in machining performance prediction and optimization: a literature review. Int J Adv Manuf Technol 46(5–8):445–464

Pratihar DK (2015) Expert systems in manufacturing processes using soft computing. Int J Adv Manuf Technol 81(5–8):887–896

Karaboga D, Akay B, Ozturk C (2007) Artificial bee colony (ABC) optimization algorithm for training feed-forward neural networks. In: International conference on modeling decisions for artificial intelligence, Springer, Berlin, Heidelberg, pp 318–329

Yu S, Wang K, Wei YM (2015) A hybrid self-adaptive particle swarm optimization–genetic algorithm–radial basis function model for annual electricity demand prediction. Energy Convers Manag 91:176–185

Yeh WC, Hsieh TJ (2012) Artificial bee colony algorithm-neural networks for S-system models of biochemical networks approximation. Neural Comput Appl 21(2):365–375

Jha MN, Pratihar DK, Bapat AV, Dey V, Ali M, Bagchi AC (2013) Knowledge-based systems using neural networks for electron beam welding process of reactive material (Zircaloy-4). J Intell Manuf 25(6):1315–1333

Akay B, Karaboga D (2012) Artificial bee colony algorithm for large-scale problems and engineering design optimization. J Intell Manuf 23(4):1001–1014

Rao RV, Pawar PJ (2010) Grinding process parameter optimization using non-traditional optimization algorithms. Proc Inst Mech Eng Part B J Eng Manuf 224(6):887–898

Tuba M, Alihodzic A, Bacanin N (2015) Cuckoo search and bat algorithm applied to training feed-forward neural networks. In: Yang XS (ed) Recent advances in swarm intelligence and evolutionary computation. Springer, Cham, pp 139–162

Luthra I, Chaturvedi SK, Upadhyay D, Gupta R (2017) Comparative study on nature inspired algorithms for optimization problem. In: 2017 international conference of electronics, communication and aerospace technology (ICECA), IEEE, vol 2, pp 143–147

Karaboga D, Akay B (2007) Artificial bee colony (ABC) algorithm on training artificial neural networks. In: 2007 IEEE 15th signal processing and communications applications, IEEE, pp 1–4

Karaboga D, Ozturk C (2009) Neural networks training by artificial bee colony algorithm on pattern classification. Neural Netw World 19(3):279

Mohamad ET, Li D, Murlidhar BR, Armaghani DJ, Kassim KA, Komoo I (2019) The effects of ABC, ICA, and PSO optimization techniques on prediction of ripping production. Eng Comput:1–16

Vundavilli PR, Kumar JP, Priyatham CS, Parappagoudar MB (2015) Neural network-based expert system for modeling of tube spinning process. Neural Comput Appl 26(6):1481–1493

Patel GCM, Shettigar AK, Krishna P, Parappagoudar MB (2017) Back propagation genetic and recurrent neural network applications in modelling and analysis of squeeze casting process. Appl Soft Comput 59:418–437

Patel GCM, Krishna P, Parappagoudar MB (2016) An intelligent system for squeeze casting process- soft computing based approach. Int J Adv Manuf Technol 86(9–12):3051–3065

Parappagoudar MB, Pratihar DK, Datta GL (2008) Forward and reverse mappings in green sand mould system using neural networks. Appl Soft Comput 8(1):239–260

Zhong RY, Xu X, Klotz E, Newman ST (2017) Intelligent manufacturing in the context of industry 4.0: a review. Engineering 3(5):616–630

Wang L, Törngren M, Onori M (2015) Current status and advancement of cyber-physical systems in manufacturing. J Manuf Syst 37:517–527

Patel GCM, Lokare D, Chate GR, Parappagoudar MB, Nikhil R, Gupta K (2020) Analysis and optimization of surface quality while machining high strength aluminium alloy for aerospace applications. Measurement. https://doi.org/10.1016/j.measurement.2019.107337

Montgomery DC (2017) Design and analysis of experiments. Wiley, Hoboken

Das D, Pratihar DK, Roy GG, Pal AR (2018) Phenomenological model-based study on electron beam welding process, and input-output modeling using neural networks trained by back-propagation algorithm, genetic algorithms, particle swarm optimization algorithm and bat algorithm. Appl Intell 48(9):2698–2718

Patel GCM, Shettigar AK, Parappagoudar MB (2018) A systematic approach to model and optimize wear behaviour of castings produced by squeeze casting process. J Manuf Process 32:199–212

Karaboga D, Gorkemli B, Ozturk C, Karaboga N (2014) A comprehensive survey: artificial bee colony (ABC) algorithm and applications. Artif Intell Rev 42(1):21–57

Akay B, Karaboga D (2009) Parameter tuning for the artificial bee colony algorithm. In: International conference on computational collective intelligence, Springer, Berlin, Heidelberg, pp 608–619

Pham DT, Karaboga D (1999) Training Elman and Jordan networks for system identification using genetic algorithms. Artif Intel Eng 13(2):107–117

Wai RJ, Liu CM (2009) Design of dynamic petri recurrent fuzzy neural network and its application to path-tracking control of nonholonomic mobile robot. IEEE Trans Ind Electron 56(7):2667–2683

Lahoti G, Pratihar DK (2017) Recurrent neural networks to model input-output relationships of metal inert gas (MIG) welding process. Int J Data Anal Tech Strateg 9(3):248–282

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that there is no potential conflict of interest to publish the paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Summary of experimental results of the random test cases

Test case | Input variables | Experimental values of responses | ||||||

|---|---|---|---|---|---|---|---|---|

CS, m/min | FR, mm/rev | DOC, mm | NR, mm | MRR, cm3/min | SR, µm | Ce, µm | CE, µm | |

1 | 202 | 0.17 | 0.97 | 0.40 | 24.82 | 1.51 | 7.80 | 1.32 |

2 | 278 | 0.18 | 0.7 | 0.80 | 30.76 | 1.38 | 7.21 | 0.74 |

3 | 118 | 0.18 | 0.98 | 1.20 | 18.36 | 0.76 | 9.14 | 2.34 |

4 | 188 | 0.15 | 0.64 | 1.20 | 19.02 | 0.63 | 4.74 | 0.62 |

5 | 159 | 0.15 | 0.46 | 0.80 | 12.58 | 0.82 | 5.84 | 1.23 |

6 | 217 | 0.12 | 0.57 | 0.40 | 15.96 | 0.93 | 3.12 | 0.28 |

7 | 117 | 0.16 | 0.88 | 1.20 | 17.45 | 0.62 | 7.74 | 1.53 |

8 | 245 | 0.13 | 0.56 | 0.80 | 18.24 | 0.59 | 5.94 | 0.79 |

9 | 281 | 0.11 | 0.84 | 0.40 | 24.74 | 0.59 | 3.24 | 0.18 |

10 | 123 | 0.14 | 0.50 | 0.80 | 9.94 | 0.64 | 4.78 | 0.68 |

Rights and permissions

About this article

Cite this article

Shettigar, A.K., Patel, G.C.M., Chate, G.R. et al. Artificial bee colony, genetic, back propagation and recurrent neural networks for developing intelligent system of turning process. SN Appl. Sci. 2, 660 (2020). https://doi.org/10.1007/s42452-020-2475-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-020-2475-z