Abstract

This study aims to determine the watermark resistance to different attacks as well as the PSNR level, both of which are essential requirements of watermarking. In our research, we came up with an intelligent design based on NSCT-SVD that fulfills these requirements to a great extent and we managed to use different-sized images for watermark instead of using logos on the host images. Yet we were able to improve PSNR levels and resistance to various attacks. In this paper an NSCT-SVD-based smart watermark model is proposed. We first compare the PSO and PSO-GA algorithms for greater stability using larger SFs obtained by the PSO-GA-AI algorithm. The resulting host image is then decomposed by NSCT transform to obtain images below the low frequency range. Stationary Wavelet Transform (SWT) is performed once on these coefficients and the low frequency coefficients are fed to SVD. Afterwards, SWT transform is performed on the watermark image and the transform is once again taken from the HL coefficients and the LL frequencies are given to the SVD conversion. The rest of image process is insertion. This insertion process dramatically increases the visual transparency and PSNR value. The experiment shows that such a model is able to resist the repeated image attacks with better visibility and power. These results are compared before and after using SWT. We have used a PSO-based algorithm for better results on the False Positive rate in the embedding phase.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

With the increasing expansion of the world wide web and social networks, the use of copyright and the prevention of illegal copying in such a way that the proprietorship of the copyrighted work can be proven, has become more popular. In fact, digital watermarking can be a good solution to this problem [1,2,3]. With the development of Internet and digital media, creation of multimedia works has become easier than ever before and the security of this volume of information raises concerns [3]. Watermarking can be called the art of hiding information in a way that is not recognizable to others. This process should be accompanied by minimal alteration to the host image [2].

The most damaging kind of attack are the geometric attacks that do not actually remove the treasure but manipulate the watermarked object in such a way that the person extracting the treasure cannot extract it from the watermarked data [4]. Therefore, it is important to ensure the host image stability before inserting the watermark into the host image. Watermarks are generally divided into two categories of space domain and frequency domain. The former offers no effective protection against repetitive attacks [5]. In the frequency domain, conversions such as DFT, DCT, and DWT are used to insert the message. In the methods of this group, the watermarking operation is done in the field of sound conversion in such a way that first the whole image or any of its blocks are transferred to another domain and then watermarking is done in the corresponding field and the image is returned to the image domain again to obtain the watermarked image. What distinguishes the methods of this group from each other is the type of conversion function chosen and how the information is inserted in the field of conversion. In general, watermarking in the field of conversion has less storage capacity than the image domain, but instead exhibits greater resistance to any sabotage aimed at the image [6, 7]. DWT-based watermarking algorithms insert watermarks in areas with less sensitivity. In DCT-based methods, the host signal is broken into different frequency bands and the watermark can be inserted into different frequency bands [8]. In [9], an algorithm based on the combination of DWT and DCT is presented.

Contourlet transformation, which is another form of frequency domain transformations, creates boundaries with great accuracy. It is multi-scale and, unlike other transformations, offers a variety of directions [10]. Authors in [12] presented two algorithms which insert the watermark in coefficients with larger absolute values. Zaboli et al. [13], proposed a new method for non-blind watermarking of gray images that used the features of the human visual system and a new entropy-based method for the watermarking process. It decomposes the main image into four levels and the watermark is an image mixed with random noise sequences stored in the cover image. In Ref. [14], a new contourlet Conversion called Sharp Frequency Local contourlet is introduced which proposes a new structure of contourlet conversion claiming that it solves the non-positioning problem of the original contourlet conversion. The main case involves a combination of the Laplacian pyramid, Directional Filter Banks (DFB) and multi-resolution image interpretation. According to this article, filters are used in the new contourlet conversion more than the original contourlet conversion and sample increment and subtraction operations continue to be used. They used the new conversion in combination with SVD, which is another frequency domain transformation. GA is a population-based meta-heuristic algorithm [15]. Ref. [16] proposes a DCT-DWT-SVD-based algorithm using PSO which is another meta-heuristic algorithm based on Genetic Programming (GP) to modify single image values. In our simulations we have used a type of conversion called NSCT, which is actually an optimized version of contourlet conversion for further directions along with finer details provided in image parsing than other watermarking conversions. Also because contourlet outperforms in receiving smooth contours and provides more robust edges in many high frequency areas without disturbance. So we expected to be able to provide better results and since one of our goals was to achieve a method where the PSNR was increased, the redundant DWT which is a frequency-domain conversion is used. To fully assess our method, MSSIM, and MSE were also used in addition to PSNR. The SF coefficient, indicating the stability of the method against attacks, was compared with the standard test images in four ways using PSO, PSO-GA, PSOGA-AI, and PSO-AI to see in which mood the best result is obtained. We also tested the plan results with different dimensions of the host and watermark image to accurately evaluate it against the attacks. These results are presented in Sect. 4.

This paper develops an intelligent watermarking method using the contourlet and SVD combination and utilizing SF which is an algorithm to find the most robust image for insertion of watermark using an optimized PSO that combines neural networks with GA. It evaluates watermark visibility through the peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) and tests power with normalized cross-sectional correlation (NCC). Also using SWT algorithm, the PSNR value is increased. These parameters are compared before and after applying this algorithm.

The experiment showed that such a pattern had better watermark and visibility as well as better information security. We also used this algorithm to hide an image in a second image rather than inserting a few bits or a logo. The rest of this article is organized as follows: Sect. 2 introduces the relevant principles, Sect. 3 shows the proposed model, and Sect. 4 presents the results.

2 Background

2.1 Contourlet transform

The directions applied for embedding the watermark are limited in conventional wavelet transform because it has only three horizontal, vertical, and diagonal directions, while the Contourlet transform is a single transform in which the number of directional bands can be determined by the user at any given resolution [10]. In fact, this transform provides a multi-resolution representation of the signal. It is also an integral directional 2D transform used to describe fine curves and details in images and efficiently describes the smooth contours that are key components in images. The contourlet expansion is a multi-resolution directional expansion of the basic functions. One of the most important features of this transform is that it can specify multiple multidirectional analyses at each level of the multi-resolution pyramid [11]. The contourlet transform is done by a two-filter set to go from the space to the frequency domain. The first filter uses the Laplacein Pyramid (LP) to receive point discontinuities and then connects the point to the linear DFB via a directional filter bank, and then the presented image is obtained with the main elements of the contour sections [17].

CT can be divided into two main parts: Laplacian Pyramid (LP) decomposition and directional filter bank (DFB). The original image is decomposed into a low-pass image and a band-pass filtering image via LP method. Each band-pass filtering image is then parsed with DFB. If the same steps are repeated with the low-pass image, we can achieve multi-directional and multi-solution parsing. Figure 1 shows the CT structure. NSCT is obtained by coupling a non-sample pyramid structure (NSP) with a non-sample DFB (NSDFB). The NSCT structure is also shown in Fig. 2. Due to the basic properties of anisotropy and orientation that are not common in wavelets, Contourlet is superior to other transformations in image processing [10]. Also due to the fact that we witnessed a better set of directions and shapes compared to wavelets, the contourlet outperformed in receiving smooth contours. Considering the more powerful capacity of hidden edges, Contourlet is more suitable for hiding data in many high frequency areas without disturbing the original image [18].

For the mathematical formulation of the process, suppose \( x\left[ n \right] = f, \phi_{L, n} \) is an image signal for each \( f = L^{2} \left( {R^{2} } \right),\phi_{L, n} \), which is an orthogonal scaling function. Then, the discrete contourlet transform of x is:

In which, \( a_{J} \) and \( d_{j, k}^{\left( l \right)} \) are the complete and approximate coefficients, including:

In which, \( \rho_{j,k,n}^{{\left( { l} \right)}} \) is the basis function of the directed filter bank and \( \phi_{L + J, n} \) is the basis function of LP [19].

2.2 SVD function in watermarking

SVD decomposes a symmetric matrix into three matrices. These three decomposed matrices are, in particular, the singular matrices U, S, and V. If we assume that Y is a symmetric matrix, SVD can be obtained by the following equation:

In Eq. (4) we have U \( {\text{U}}^{\text{T}} = {\text{I}}_{\rm n} \) and \( {\text{VV}}^{\text{T}} = {\text{I}}_{\rm n} \). The columns U are orthogonal eigenvectors \( {\text{YY}}^{\text{T}} \), as the degree of the matrix Y, the elements of the diagonal matrix S can satisfy the relation in Eq. 5, and we can also rewrite the matrix Y as Eq. 6 shown below. In Eq. (6),\( {{\upmu }}_{\rm i} \) and \( {\text{V}}_{\rm i} \) are the i-th eigenvectors U and V and \( {{\updelta i}} \) is considered equal to the i-th singular value.

where \( {{\updelta i}} \) and \( {\text{V}}_{\rm i} \) are the ith eigenvectors of U and V and \( {{\updelta i}} \) is the i-th singular value.

2.3 Stationary wavelet transform

Stationary Wavelet Transform (SWT) is a waveform conversion algorithm designed to overcome the translation-in-variance of Discrete Wavelet Transform (DWT). The translation-in-variance is a result of removing the downstream and upstream samplers in the DWT and the upstream sampling of the filter coefficients by factor 2j−1 at the j-th level of the algorithm. SWT is intrinsically redundant, since the output of each level of SWT contains a number of samples equal to inputs. For N surface decomposition, there is N redundancy in the wavelet coefficients [20, 21].

The 2D SWT divides the image into four sub-bands. LL is the approximate image of the input image, known as the low frequency sub-band. LH, HL and HH sub-bands represent the horizontal, vertical and diagonal features of the original image, respectively. Figure 3 illustrates the implementation of the SWT up to three levels. In our experiments, we found that this conversion increased PSNR. The application of this conversion to the Barbara image in three levels is shown in Fig. 3 and SWT conversion to Barbara image is shown in Fig. 4.

2.4 PSO combined with GA

As mentioned, Genetic Algorithm is a search-based algorithm which is based on natural selection and genetics [22]. In problems where the objective function is non-derivative and the design variables are continuous or discrete, this algorithm seems appropriate. The genetic algorithm is presented based on the Darwinian principle of evolution, which is based on the struggle for survival as well as the survival of the fittest. Each member of the population is considered as a chromosome and the fit is obtained from the objective function. This function must also be optimized. Operators such as mutation, composition, and selection are used to evolve the original population. Those members of the population who are more fit will have a better chance of reproducing. After several repetitions, the population reaches a steady state. So at this point, the algorithm converges and most of the population members will be the same, indicating a near-optimal answer to the problem. Genetic algorithm control is performed by three operators: mutation rate, composition rate and size. Like other search algorithms, the optimal response is obtained in the genetic algorithm after many iterations, the repetition rate of which is determined by chromosome length and population size. However, evolutionary algorithms, especially GA and PSO, which are also used in our design, have advantages and disadvantages [23]. The operators used in GA are random and this algorithm is very sensitive to the initial values selected by the user. Also, the convergence rate to the response in this algorithm is low. The PSO algorithm has a more accurate performance. However, like GA, this algorithm is sensitive to the initial value. To overcome the limitations of PSO, its combination with GA has been proposed. The basis for this is that such a combined approach is expected to have the simultaneous benefits of PSO and GA. One of the advantages of PSO over GA is its algorithmic simplicity. Another obvious difference between the two is the ability of convergence control [24].

Considering the advantages and disadvantages of the two algorithms, the PSO-GA combination was first proposed by Angelin and Eberhard as a new algorithm to outperform each individual algorithm [25, 26]. In the hybrid algorithm, the speed of finding a response is significantly increased and the accuracy of the response will be more acceptable. This algorithm can be used for many optimization problems. The hybrid algorithm is a one-objective algorithm. In this scheme, we first run PSO and then GA. And for all members of the population, the best update is done and the children inherit the best memory from their parents and the speed of the first child randomly takes one of the speeds of the first and second parents and the remaining parent reaches the second child.

2.5 Artificial neural networks (ANN)

In the artificial neural network (ANN), attempts have been made to model the nervous systems of living organs, especially the human brain. ANN is made up of a large number of highly interconnected processing elements, such as neurons, that perform tasks together. ANNs have the ability to be trained and, like the brain, to learn by seeing different examples. A neural network is created from parallel processing units that are interconnected. Each of these units receives input from the other units. This unit then takes the sum of the inputs and calculates the output, and this output is sent to the other units to which it is connected [2]. In fact, artificial neural networks are a powerful technique for controlling the information contained in the data and arise from the generalization of this information. Teaching neural networks is not something that can be done through programming. Programming is generally time consuming for the analyst and forces him to examine and determine the exact behavior of the model. In fact, it can be said that neural networks learn patterns in data [11]. Neural networks are much more flexible in changing environments. Neural networks can also perform very complex interactions, so they can easily use inferential statistics or programming logic to model data that is very difficult to model [12].

2.6 Neuronal identifiers

This procedure begins with the selection of a neural model defined by its structure and related learning algorithm. Since neural networks are capable of learning, they can begin learning when neural models and input and output data are available. Different structures are trained and compared using the learning set and the simulation data set and the criterion (error target).

The best structure for us is a structure with the smallest unit (neurons). The artificial neural networks have an input layer (buffer layer), one or more oblique nonlinear hidden layers, and a linear/nonlinear output layer [25, 26]. Hybrid identifiers can identify simple nonlinear systems and cannot identify complex ones [26,27,28].

Figure 5 shows the structure of the NID multilayer neural network identifier with two nonlinear hidden layers. The size of the neural network is crucial in the design of the entire structure. There is no mathematical formula for calculating the optimal size of such networks. However, with large free units, NID quickly learns. The fundamental limitation in increasing the size of hidden layers is the limitation of the hardware structure of the system used in experimental work that requires powerful hardware. Figure 5 shows the multilayer neural network identifier structure, forward feeding NID with two nonlinear hidden layers [27]. In the proposed scheme, we use a multilayer neural network to optimize PSO and PSO-GA.

3 Method

In this section we present our proposed algorithm. The proposed algorithm consists of two algorithms with/without SWT transform. In the results section, all the results with/without the use of SWT algorithm are expressed. It is worth noting that the flowchart of the algorithm is given in Fig. 6. Our algorithm consists of two parts. SF calculation step and watermark insertion.

3.1 Watermark insertion

Step 1

Reading the host I and W images as watermarks.

Step 2

Implementing the contourlet transform (here NSCT is used which is the newer and better contourlet transform) on I image to obtain a low-pass image and directional pass-through images.

coeffs = nsctdec(double(I), nlevels, dfilter, pfilter)

nlevels = [0, 1, 3] equals decomposition level

And the parameters “Pyramidal filter and the Directional filter” are set to be equal to the following values

pfilter = ’maxflat’

dfilter = ’dmaxflat7’

Step 3

Obtaining 2D SWT transform from coeffs {1}

(coeffs is obtained in step 2)

Step 4

Applying redundant DWT

Obtaining SWT2 transform from HL coefficients of the previous step.

[LL, LH, HL, HH] = swt2(coeffs{1},1)

Step 5

Extracting singular values and Watermark embedding [28].

SVD operation is performed for each sub-band of the host image, \( {\text{A}}^{\text{K}} = {\text{U}}_{\rm K} \sum_{\rm k} {\text{V}}_{\rm K}^{\text{T}} \), where k is equal to the frequency sub-bands. We also perform SVD on watermark image W ⇒ \( {\text{U}}_{\rm W} \sum_{W} {\text{V}}_{\rm W}^{\text{T}} \), then we will obtain the main components of watermark image.

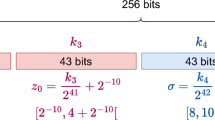

We put the main components of watermarking in the singular values of the host image in each \( \sum_{I}^{k} = \sum_{k}^{ } + \Delta \) • \( {A_{Wa}} \) sub-band. Δ scaling factor is obtained from PSO algorithm. At this point, the product between the principal components and the scaling factor (which we will explain below) is a point product and the coefficients corrected for each sub-band are equal to

Step 6

Calculation of the scaling factor for image embedding using PSO is as follows:

Step 7

We obtain the Inverse Discrete Wavelet Transform (IDWT) on the modified coefficients for each subgroup \( {\text{A}}_{\text{w}}^{\text{k}} \) [28].

Singular PSO is obtained in the scaling factor Δ using the objective function. For each iteration in the PSO, the Δ value is examined for several attacks. At the end of the PSO iteration, we obtain the near optimal scaling factor [28]

Step 8

Taking the inverse SWT transform from the obtained coefficients in the previous step.

We have evaluated our proposed algorithm with all the criteria stated in Sect. 3. Four standard images in image processing are used in our experiments as shown in Fig. 1. All 4 images were used as input images or hosts. Besides being host, image D was also used as watermark image. Both of our proposed algorithms were able to accept 255 × 255 and 512 × 512 images as both the host and the Watermark image. This showed the increased potential of our method. All experiments were performed with a computer with CPU Core i3 and all coding and resulting extractions were performed by MATLAB 2018.

This article uses NCC to examine the Watermark power. A larger NCC represents a better certainty of the watermark. To evaluate the power of the proposed model, the watermarked image was tested according to the common image processing operations such as mean filter, median filter, cropping, and salt-and-pepper noise according to the following table. Figure 7 shows the host images.

In both algorithms, the parameters for the contourlet transform were defined as follows: The decomposition rate was 3, and the pyramidal and directional filters were 7.9 and PKVA, respectively. The GA-PSO parameter was c1 = c2 = 1.4962 and was considered to be 5 in Formula W1. In addition, the population was 100, with 10 replicates.

In addition to using the MATLAB standard images commonly used by researchers, we decided to use images from other databases to further evaluate our proposed plan. Figure 8 shows our selected images from other databases for the Host image.

4 Results

SSIM and PSNR were used to test watermark visibility and the values were expressed before the attacks. Table 1 shows the PSNR, NCC, and SSIM values after attacks when the Barbara watermarked image was 255 × 255 and the host image dimensions were 255 × 255 and it is visible by applying the first proposed algorithm (without SWT transform). Table 2 also shows the NCC values extracted after significant geometric attacks.

As shown in Table 1, all values represented acceptable results of the corresponding algorithm. According to the values in Table 1, the algorithm showed good results against the geometric attacks. The best results were for the median filter 3 × 3. Flip in vertical flip + horizontal + vertical is the rotation around one dimension. The image has been rotated in three directions: vertical, horizontal, and again vertical. The weakest result was related to the crop attack with Baboon image. But the remarkable point was that the best result was in the same attack and for Lena’s image.

To test the capacity of the proposed algorithm, we tried not to use the logo images as watermarks, and instead used large images. Table 3 shows NCC values for the extracted watermarked image when the Barbara watermarked image was 512 × 512 and the dimensions of the host image were 512 × 512 by applying the second proposed algorithm (without using SWT transform). Also Table 4 shows PSNR, NCC, and SSIM values when the Barbara watermarked image was 512 × 512 and the of the host image were 512 × 512 by applying the second proposed algorithm.

It can be deduced from Tables 3 and 4 that by increasing the size of the watermarked image, algorithm 1 has shown acceptable results. The weakest result was related to the vertical flip + horizontal + vertical flip attack for the Lena image and the best result was again for this image when it was under crop attack showing that this image was more robust under this attack in our algorithm.

But the results presented in the following tables were extracted by applying algorithm 2 (i.e. without applying SWT). The PSNR, NCC and SSIM values are shown in Table 4 when the Barbara watermarked image was 512 × 512 and host image dimensions were 512 × 512. As can be seen, all the results under this algorithm performed much better. The PSNR values have improved significantly.

Also the SSIM index which was related to the watermark visibility shown in Table 5 showed a better value than when we used algorithm 1. That is, this algorithm has been able to minimize the watermark sensitivityFootnote 1 to a minimum. Also, the values listed in Table 6 show a large improvement over when we used algorithm 1. Also, the weakest result for NCC was equal to 0.8608 for the vertical flip + horizontal + vertical flip attack applied to the Lena image which is still a good result.

Tables 7 and 8 also show values for 512 × 512 host images and 255 × 255 watermarked images.One can see the reconstructed (extracted) image in Fig. 9, which is obviously of high visual quality. In the following, the results of the first part of the algorithm, i.e. SF calculation, are shown. Figure 10 illustrates the Iteration to Best Cost ratio by the PSO algorithm for the Lena host image with the Barbara watermarked image. Number of iterations were set to be 10 times. c1 = 1.4 and c2 = 1.4 and number of population members is equal to 10. The graph shows that the algorithm is successful after 4 iterations.

Figure 11 shows the results of PSO algorithm optimized with artificial intelligence (AI) with the same previous images with Swarm Size equal to 10. Table 9 shows the run-time of the PSO-AI in the proposal, Best Solution and Best Fitness.

Figure 12 shows the results of the PSO optimized with GA. Here the iterations are 10 and C1 and C2 are 2. The blue graph represents the cost. Best Fitness in the table indicates a decrease in this parameter relative to PSO-AI.

Figure 13 shows the results of PSO optimized with neural networks and genetic algorithm. The number of iterations is 10 and C1 and C2 are 2. The blue graph represents the cost. Best Fitness shown in Table 10 indicates a decrease in this parameter compared to PSO-AI. In this algorithm, first the PSO is run once and then the GA.

Table 11 shows the results for PSOGA-AI. According to the results, Best Fitness for PSO was 8.2279 which was higher than other algorithms and outperformed the optimized PSO algorithm. The results showed that PSO had the weakest results. Due to the high computational cost of PSOGA-AI and lower cost of this algorithm, its application was not cost effective. Therefore, we would have better results for computing SF with this algorithm in the proposed plan and each having higher Cost will be more stable in choosing images with this algorithm. As was mentioned earlier, it is concluded from our experiments that PSO-AI algorithm gives better results. Therefore, SF is estimated for other test images, so that the higher Cost implies higher SF. Figures 14, 15 and 16 show the results, taking into account the tables associated with implementing attacks to the images. The most stable images are: (1) Baboon, (2) Peppers, (3) Lena, and (4) Barbara.

There are two ways to obtain the false positive:

-

1.

Calculating false positive for watermark image and extracted watermark image:

In this case, we first calculate the difference between the original and the extracted watermark images, thus counting the pixels that are not equal to zero (i.e., pixels that are mistakenly detected as correct). The number of pixels divided by the total number of pixels will show false positive.

-

2.

Calculating false positive in the extraction step:

After watermarking algorithm, a series of input pixels change. In the extraction step, we consider pixels which are mistakenly referred to as watermarked pixels as false positives. Table 12 shows the false positive rate.

As mentioned at the beginning of this section, for the second part of our tests, we also used images from other databases. We used 2 images of 512 × 512 size as a host image and also a Baboon image of the same sizes as a watermark.

In order not to prolong the number of article pages, we only used the same sizes for the 4 selected images from other databases. It should be noted that in this step, the second section algorithm has been applied to the images. The results are listed in Tables 13 and 14. The Moon image is weak against the Histogram attack. The best results belong to the Sunrise image.

The results of Best Cost with 10 repetitions are given in Fig. 17. As can be seen in this figure, the best results belong to the Sunrise image and the weakest to the Moon image. Table 15 show the false positive.

5 Discussions

In this section we compare the results of our method with several other references. The results of other references are presented in Tables 16 and 17. Ref. [29, 30] used 512 × 512 images a host and a logo(32 × 32) for Watermark. Reference 32 used 512 × 512 images as a host image and a logo (64 × 64) used as a Watermark image. Reference 33 used 512 × 512 images and the logo (64 × 64) for Watermark and Ref. [33] used 512 × 512 images a host and a logo (512 × 512) for Watermark.

As noted above, SSIM and PSNR, which are key watermarking factors, are used to test watermark visibility, and the results compared to other references indicated the desirable status of our design. The PSNR value has increased substantially even when watermark and cover image sizes have increased. It should also be noted that our watermark image was not a logo. SSIM is also competitive. As can be seen from all references, with respect to watermark and host image size, better results are obtained for attacks, especially for PSNR.

Table 16 shows the PSNR and SSIM values and Table 17 shows the NCC values in other references. In [30] two logo images were used as Watermark image, so two values are given for PSNR and SSIM. We tried to select references close to our proposed design for comparison. However, comparing all the cases tested in our design required several other references which would increase the number of pages further.

As shown in the tables in Sect. 3, the results of the experiments show that the NCC rate in our design is even more acceptable with increasing size of the cover image and watermark compared to the references and the PSNR value of our design is much larger. To avoid prolongation, we only mention SSIM and PSNR again for Lena’s image. Compared to other references, it is evident that the resistance of the proposed design is acceptable, especially the PSNR value.

In Tables 18 and 19, the results of factors similar to the two other works performed in 2018 and 2019 are given for further comparison. Table 18 shows the results in Ref. [33]. For similar images in this reference, PSNR value is 38.5365. Table 19 also shows the results of Ref. [34]. Both references also used SVD conversion.

In Tables 20 and 21, we cite two recent works that evaluated their proposed algorithm structure differently. Table 20 shows the results in Ref. [35] which has used a new method based on the entropy-based logarithmic quantity of information in the range of the wavelet. Our method outperformed in the case of SSIM criteria and our PSNR value is closer to the desired value. Also, Table 21 shows the results of the common cases of the article [36], in comparison with this reference, our results seem to be generally better. The reference states that applications such as multimedia data security and medical imaging, which are highly complex, are not suitable, and presents a new robust and blind marking scheme based on DCuT and RDWT.

6 Conclusion

In combination with Contourlet Transformation, this paper proposes a watermark pattern based on PSO and SVD decomposition which includes insertion and extraction algorithms. This work shows that this method is not only in accordance with the principal conditions of digital watermarking, but also has a good resistance to common image attacks, namely filtering, noise, and cut. Furthermore, using the two proposed algorithms, we were able to prove that the presented algorithms have a great capacity, one of which gave considerably better results in terms of stability factors studied, as well as PSNR and SSIM. We also showed that improving the meta-heuristic algorithms by neural networks would give better results, compared the algorithms in this regard, and concluded that the PSO algorithm optimized by neural networks yields lower computational cost and higher performance. So, it is more optimal for estimating SF.

Notes

It does not cause changes that are recognizable to the human eyes.

Abbreviations

- SWT:

-

Stationary wavelet transform

- DWT:

-

Discrete wavelet transform

- PSNR:

-

Peak signal-to-noise ratio

- SSIM:

-

Structural similarity index

- NCC:

-

Normalized cross-sectional correlation

- CT:

-

Contourlet transform

- ANN:

-

Artificial neural networks

- MSSIM:

-

Mean structural similarity index measure

References

Chu W (2003) DCT-based image watermarking using subsampling. IEEE Trans Multimed 5(1):34–38

Amiri A, Mirzakuchaki S (2019) Increasing the capacity and PSNR in blind watermarking resist against cropping attacks. Iran J Electr and Electron Eng IJEEE 16(1)

Chen Z, Li L, Peng H, Liu Y, Yang Y (2018) A novel digital watermarking based on general non-negative matrix factorization. IEEE Trans Multimed 20(8):1973–11986

Martin Zlomek (2007) Video watermarking. M.S. Thesis, Faculty of Mathematics and Physics, Charles University in Prague

Hernandez J, Amado M, Perez-Gonzalez F (2000) DCT-domain watermarking techniques for still images: Detector performance analysis and a new structure. IEEE Trans Image Process 9. Special Issue on Image and Video Processing for Digital Libraries

Peter Wayner (2002) Disappearing ryptography information hiding steganography and watermarking. Morgan Kaufman Publisher

Shinfeng, Chin Feng Chen (2000) A robust DCT—Based watermarking for copyright protection. IEEE Trans Consum Electron 46(3)

Tsz Kin Tsui, Xiao-Ping Zhang, Dimitrios Androutsos (2008) Color image watermarking using multidimensional fourier transforms. IEEE Trans Inf Forens Secur 3(1)

AI-Haj A (2007) Combined DWT-DCT digital image watermarking. J Comput Sci 3(9):740–746

Fakhredanesh M, Rahmati M, Safabakhsh R (2013) Adaptive image steganography using contourlet transform. J Electron Imaging 22(4)

Do MN, Vetterli M (2005) The contourlet transform: an efficient directional multiresolution image representation. IEEE Trans Image Process 14(12):2091–2106

Venkata Narasimhulu C, Satya Prasad K (2010) A hybrid watermarking scheme using contourlet transform and singular value decomposition. IJCSNS: Int J Comput Sci Network Secur 10(9)

Zaboli S, Shahram Moin M (2007) CEW: a non-blind adaptive image watermarking approach based on entropy in contourlet domain. In: Proceedings of IEEE international symposium on industrial electronics, Vigo, Spain

Najafi E, Loukhaoukha K (2019) Hybrid secure and robust image watermarking scheme based on SVD and sharp frequency localized contourlet transform. J Inf Secur Appl 44:144–156

Golshan F, Mohammadi K (2013) A hybrid intelligent SVD-based perceptual shaping of a digital image watermark in DCT and DWT domain. Imaging Sci J 61(1):35–46

Bessel A, Nordin MJ, Abdulkareem MB (2017) An improved robust image watermarking scheme based on the singular value decomposition and genetic algorithm, Advances in visual informatics, IVIC 2017. Lecture Notes in Computer Science, Bangi

Duncan DY, Minh ND (2006) Directional muitiscale modeling of images using the contourlet transform. IEEE Trans Image Process 15(6)

Xueqiang L, Xinghao D, Donghui G (2007) Digital watermarking based on non - sampled contourlet transform. IEEE International Workshop on Anticounterfeiting, Security, Identification, IEEE

Asmare M, Asirvadam V, Hani A (2015) Image enhancement based on contourlet transform. SIViP 9:1679–1690

Liu J, Huang J, Luo Y, Cao L, Yang S, Wei D, Zhou R (2019) An optimized image watermarking method based on HD and SVD in DWT domain. IEEE Access 7:80849–80860

Dey N, Roy AB, Das A, Chaudhuri SS (2012) Stationary wavelet transformation based self-recovery of blind-watermark from electrocardiogram signal. Wireless Telecardiol, SNDS, pp 347–357

Masoum MAS, Ladjevardi M, Jafarian A, Fuchs EF (2004) Optimal placement, replacement and sizing of capacitor banks in distorted distribution networks by genetic algorithms. IEEE Trans Power Deliv 19(4):1794–1801

Ru N, Jianhua Y (2008) A GA and particle swarm optimization based hybrid algorithm. In: IEEE Congress on evolutionary computation in evolutionary computation, pp 1047–1050

Premalatha K, Natarajan AM (2009) Hybrid PSO and GA for global maximization. Open Probl Comput Math 2(4):597–608

Angeline P (1998) Evolutionary optimization versus particle swarm optimization: Philosophy and performance differences. Evol Program, VII, pp 601–610

Eberhart R, Shi Y (1998) Comparison between genetic algorithms and particle swarm optimization. VII, Evol Program, pp 611–616

Husien S, Badi H (2015) Artificial neural network for steganography. Springer Neural Comput Appl 26(1):111–116

Run RS, Horng SJ, Lai JL, Kao TW, Chen RJ (2012) An improved SVD based waterking technique for copyright protection. Expert Syst Appl 39:673–689

Lai CC (2011) An improved SVD-based watermarking scheme using human visual characteristics. Opt Commun 284(4):938–944

Ernawan F, Ramalingam M, Sadiq AS, Mustaffa Z (2017) An improved imperceptibility and robustness of 4x4 DCT-SVD image watermarking using modified entropy. J Telecommu Electron Comput Eng 9(2–7):111–116

Dong H, He M, Qiu M (2015) Optimized gray-scale ImageWatermarking algorithm based on DWT-DCTSVD and chaotic firefly algorithm. In: International conference on cyber-enabled distributed computing and knowledge discovery (CyberC), pp 310–313

Qian H, Tian HL, Li C (2016) Robust blind image watermarking algorithm based on singular value quantization, ACM. In: Proceedings of the international conference on internet multimedia computing and service, pp 277–280

Zheng et al W (2018) Robust and high capacity watermarking for image based on DWT-SVD and CNN. 2018 13th IEEE conference on industrial electronics and applications (ICIEA), pp 1233–1237

Zhou X, Zhang H, Wang C (2018) A robust watermarking technique based on DWT, APDCBT, and SVD. Symmetry 10(3):1–14

Liu J, Wu S, Xu X (2018) A logarithmic quantization-based image watermarking using information entropy in the wavelet domain. Entropy 20:945

Thanki R, Kothari A, Trivedi D (2019) Hybrid and blindwatermarking scheme in DCuT - RDWT domain. J Inf Secur Appl 46:231–249

Author information

Authors and Affiliations

Contributions

Ali Amiri developed the main idea, conducted the experiments and wrote the original manuscript. Sattar Mirzakuchaki supervised the experiments and modified the manuscript. All authors have read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing of interest

The authors declare that they have no competing interests.

Availability of data and material

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Amiri, A., Mirzakuchaki, S. A digital watermarking method based on NSCT transform and hybrid evolutionary algorithms with neural networks. SN Appl. Sci. 2, 1669 (2020). https://doi.org/10.1007/s42452-020-03452-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-020-03452-0