Abstract

The progression toward automated driving and the latest advances in vehicular networking have led to novel and natural human-vehicle-road systems, in which affective human-vehicle interaction is a crucial factor affecting the acceptance, safety, comfort, and traffic efficiency of connected and automated vehicles (CAVs). This development has inspired increasing interest in how to develop an affective interaction framework for the intelligent cockpit in CAVs. Enabling affective human-vehicle interaction in CAVs requires knowledge from multiple research areas, including automotive engineering, transportation engineering, human–machine interaction, computer science, communication, and industrial engineering. However, there is currently no systematic survey that considers the close relationship between the human-vehicle-road system and human emotion in the human-vehicle-road coupling process in the CAV context. To facilitate progress in this area, this paper provides a comprehensive literature survey of emotion-related studies from multiple aspects to support better design of affective interaction in the intelligent cockpit for CAVs. This paper discusses the multimodal expression of human emotions, examines human emotion experiments in driving, and particularly emphasizes existing knowledge on human emotion detection and regulation, as well as their applications in CAVs. Promising research perspectives are outlined for researchers and engineers from different research areas to develop CAVs with better acceptance, safety, comfort, and enjoyment for users.

1 Introduction

With advances in artificial intelligence and information and communication technology, connected and automated vehicles (CAVs) are experiencing rapid development globally in terms of hardware, software, systems engineering, infrastructure, and policy [1, 2]. CAVs use sensors, controllers, and actuators and integrate communication and network technologies to perform environmental perception, intelligent decision-making, and collaborative control, enabling safe, efficient, comfortable, and eco-friendly automated driving [3, 4]. Thus, the CAV has become an intelligent mobile terminal carrying rich functions and services. In the foreseeable future, as the part of the CAV that users can directly experience, the intelligent cockpit will provide drivers and occupants with a safer, more efficient, and more enjoyable ride experience.

The intelligent cockpit in CAVs is a mobile space with humans at its core, capable of dynamically perceiving and understanding human behavior and providing feedback accordingly [5]. The development of the intelligent cockpit will expand and deepen the relationship between humans, vehicles, and roads, opening up new opportunities for research and innovation. Among these opportunities, affective interaction in the intelligent cockpit is particularly valuable for improving the acceptance, safety, comfort, and enjoyment experienced by humans in CAVs [6,7,8]. Affective interaction refers to using sensors and other equipment to enable the intelligent cockpit to perceive human emotional expressions, accurately identify human emotions, and make corresponding decisions based on the recognition results. The intelligent cockpit then provides related functions or services to humans through different interaction modes, according to the decision-making strategy.

Affective interaction within intelligent cockpits plays an essential role in the development of CAVs from driver assistance to automated driving, and from single-vehicle intelligent driving to multi-vehicle collaborative driving [9]. For single-vehicle automated driving, trust concerns mean that human acceptance of the CAV can still be a significant obstacle to the popularization of CAVs, while the human emotional state can be a critical index of both real-time and long-term human trust [10, 11]. Besides, the driving context can frequently change between a potentially stressful environment (requiring a high degree of concentration and responsibility from the human) and a relaxed environment. Affective interaction with the occupants will augment their driving experience and comfort, especially when sharing the driving authority with the CAV [12]. For multi-vehicle collaborative driving, the development of CAVs is bound to involve a long period of mixed traffic in which automated driving and human driving coexist. Studying the expression and behavior of human emotions, and detecting and regulating those emotions, will help CAVs improve road safety and traffic efficiency [9].

While affective interaction could not only provide great opportunities to improve human acceptance, safety, and comfort in CAVs but also bring enormous potential to enhance the safety and mobility of public transport, there are still challenges for future research. First, developing a proper affective interaction framework for the intelligent cockpit in CAVs requires cross-disciplinary knowledge, including automotive engineering, cognitive psychology, human factors, affective computing, and intelligent transportation systems. Collaboration between experts in these fields is necessary to develop a comprehensive and efficacious affective interaction system [13, 14]. More importantly, in CAVs, the relationship between the human, vehicle, and road is more complicated than in traditional vehicles, as the road scenario gives rise to human emotions in driving, and the human emotional responses in turn continue to affect the road scenario. Therefore, studying human-vehicle-road coupling is crucial for developing an efficient affective interaction system in CAVs. As illustrated in Fig. 1, the coupling delineates the multifaceted relationship between the human operator, the vehicle, and the surrounding road environment in CAVs. Different road scenarios, such as urban and rural, can trigger human emotions and behavior in driving. Human emotions such as stress and anxiety can affect human behavior and driving performance, as well as the perception of the road environment and the vehicle’s behavior. The vehicle’s acceleration, deceleration, speed, and other parameters can affect road scenarios.

Several studies have reviewed driver emotion in terms of driver emotion recognition [15] and regulation techniques [16]. However, very few consider the intricate interplay between humans, vehicles, roads, and the resulting emotional states within the context of CAVs. Besides, fundamental understandings and potential applications of human emotions in the context of CAVs are rarely considered. Considering these limitations, this study presents a literature review that analyzes human-vehicle-road affective interactions for future CAVs. The organizational structure of this study, along with pertinent research domains, is depicted in Fig. 2. The work of this study can be summarized as follows.

- First, the basic concepts of human emotions are introduced to have a clear understanding of the production process and classification of human emotions.

- Second, the relationship between different emotions and emotional expressions is summarized. Facial expressions, speech expressions, body gesture expressions, and physiological expressions are fundamental to human emotion recognition and regulation.

- Third, experimental methodologies for studying human emotions in driving are discussed, including experiment platform, emotion induction, emotion measurement, and emotion annotation.

- Then, how to detect human emotion effectively in CAVs is reviewed from four aspects: in-cabin driver emotion detection, in-cabin occupant emotion detection, driving-context-based driver emotion detection and V2X-based human emotion detection.

- Next, how to use the human–machine interface in CAVs to regulate the detected human emotion is discussed, consisting of in-cabin regulation methods, V2X-based human emotion regulation, regulation scenarios and regulation quality analysis method.

- Finally, the significant challenges and promising areas are highlighted.

2 Human Emotion Basics

The human emotion basics are a prerequisite for the study of human emotion in CAVs. In this section, the basic concepts of human emotions are discussed in terms of the definition and classification of emotions.

2.1 Definition of Human Emotion

Emotion research has been conducted for decades. However, researchers studying emotions still have not reached a broad consensus on how to define emotions precisely. Figure 3a presents the situation-attention-cognitive appraisal-emotional response sequence specified by the modal model of emotion in a highly abstracted and simplified form [17,18,19,20,21]. According to the cognitive theory [22], an emotional response begins with a cognitive appraisal of the personal significance of a situation, which in turn gives rise to an emotional response involving subjective experience, physiological change, and behavior response [17].

Fig. 3 Modal model and classification of human emotion. (a) is the emotion modal model [17]; (b) and (c) are two discrete emotion models: (b) is the six basic emotions model [18], (c) is the emotion wheel model [19]; (d) and (e) are two dimensional emotion models: (d) is the 2D circumplex model adapted from [20], (e) is the 3D PAD emotion model [21]

Cognitive appraisal refers to the personal interpretation of a situation or event. The appraisal process includes: (a) whether a situation or event threatens people’s well-being, (b) whether there are sufficient personal resources available for coping with the demand of the situation and (c) whether the strategy for dealing with the situation is effective [22]. Subjective experience refers to the fact that all humans have several basic universal emotions (e.g., anger, happiness, sadness) regardless of culture and ethnicity, while the way these emotions are experienced is highly subjective [23]. Physiological change is a biological arousal or physical reaction experienced during an emotion. For example, fear usually leads to a racing heart, shortness of breath, a dry mouth, tense muscles, and sweaty palms [24]. Behavioral response leads to behavior that is often, but not always, expressive, goal-directed, and adaptive [25], for example, a smile indicating happiness and a frown indicating sadness. Additionally, emotional responses often change the situation that gave rise to the response in the first place. Figure 3(a) also depicts this aspect of emotion by showing the response looping back to (and modifying) the situation that gives rise to the emotion.

2.2 Classification of Human Emotion

Emotions can be classified into different categories according to discrete emotion theory or dimensional emotion theory. Discrete emotion theory assumes that there exists a small number of separate emotions, characterized by coordinated response patterns in physiology, brain activity, and facial expressions. The current discrete emotion models mainly include the six basic emotions model (Fig. 3(b)) proposed by Ekman [18] and the emotion wheel model (Fig. 3(c)) proposed by Plutchik [19]. Of these two discrete models, the more widely acknowledged is the basic emotions model. Ekman summarized the six basic emotions as happiness, sadness, anger, fear, surprise, and disgust. Other emotions were regarded as combinations of these basic emotions. In particular, a cross-cultural study conducted by Ekman supported the discrete emotion theory based on the six basic emotions [26].

Dimensional emotion theory proposes that emotional states can be accurately represented as a combination of several psychological dimensions (e.g., valence, arousal). The frequently used dimensional models include the circumplex model (Fig. 3(d)) and the 3D PAD emotion model (Fig. 3(e)). Most of these dimensional models contain valence and arousal. Valence refers to the degree of pleasure associated with emotions, whereas arousal refers to the intensity of the experienced emotions. Russell proposed the circumplex model in which emotions form a circle defined by the dimensions of valence and arousal [27]. In the circumplex model, the subjective experience of pleasure and arousal is called core emotion [28]. Valence and arousal are useful as a basic taxonomy of emotional states [29]. However, using only valence and arousal to project a high-dimensional emotional state onto a basic 2D space results in information loss: some emotions become indistinguishable (e.g., fear and anger), and some emotions fall outside the space (e.g., surprise) [30]. Further, Mehrabian [21] extended the emotion model from 2D to 3D to develop the well-known pleasure-arousal-dominance (PAD) emotion model, illustrated in Fig. 3(e). The added axis is named dominance. Ranging from submissive to dominant, dominance reflects the degree of control that humans have over their emotions. In this model, anger and fear can be easily distinguished because anger lies toward the dominant end of the axis, while fear lies toward the submissive end.
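
As a minimal sketch of why the third axis helps, the snippet below places anger and fear at hypothetical PAD coordinates. The numeric values are illustrative assumptions, not values reported in [21]. Under these assumptions the two emotions coincide in the valence-arousal plane and separate only once dominance is included.

```python
import math

# Hypothetical (pleasure, arousal, dominance) coordinates in [-1, 1].
# The numbers are illustrative assumptions, not values reported in [21].
PAD = {
    "anger": (-0.6, 0.7, 0.5),   # unpleasant, aroused, dominant
    "fear": (-0.6, 0.7, -0.5),   # unpleasant, aroused, submissive
}


def distance(a, b, dims):
    """Euclidean distance between two emotions using the first `dims` axes."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a[:dims], b[:dims])))


d2 = distance(PAD["anger"], PAD["fear"], dims=2)  # valence-arousal plane only
d3 = distance(PAD["anger"], PAD["fear"], dims=3)  # dominance axis included

print(f"2D distance: {d2:.2f}, 3D distance: {d3:.2f}")
```

With real annotations the 2D distance would rarely be exactly zero, but the same comparison shows how much separation the dominance axis contributes.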

- Summary of human emotion basics: Discrete emotions such as anger and sadness have been widely studied for a long time, and are considered to be the primary extreme negative emotions that lead to traffic accidents. In the study of human emotions in driving, the widely used discrete emotion method can intuitively reflect emotions, but it only includes a limited number of emotions and has difficulty expressing mixed emotions clearly. Dimensional emotion methods have the advantages of being practical and context-sensitive, but are less intuitive and require a more complex data labeling process. Therefore, both discrete and dimensional emotions should be discussed in CAVs’ emotion research. Moreover, previous studies of emotion in driving have mainly addressed the driver’s negative emotions; the positive emotions of drivers and occupants should also receive more attention in CAV research.

3 Human Emotion Expressions

Human emotion expressions are fundamental to emotion recognition and regulation in driving. Emotional expressions are multimodal, involving the face, speech, body gesture, and physiological changes [31]. Therefore, human emotion expressions will be discussed from the above aspects in this section, separately.

3.1 Facial Expression

The association between facial expression and emotion has been investigated by a series of studies in psychology [34]. Ekman’s study shows that at least six basic emotions (anger, fear, disgust, happiness, sadness, and surprise) could be recognized cross-culturally by facial behavior [26]. Many of the relevant studies have quantified facial behavior using componential coding. In a further study, Ekman developed the Facial Action Coding System (FACS), the most comprehensive and widely used taxonomy in facial behavior coding [32]. FACS is an anatomically based comprehensive measurement system that evaluates 44 different muscle movements (e.g., raising of the eyebrows, tightening of the lips). The action units (AUs) of FACS are suitable for studying naturalistic human facial behavior, as the thousands of anatomically possible facial expressions can be described as a combination of 27 basic AUs and multiple AU descriptors. Table 1 lists the descriptor for each action unit [32]. Each AU corresponds to a specific facial movement, and different combinations of AUs represent different emotional expressions. Table 1 also presents the prototypical AUs observed in each basic emotion [33].
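
The idea that combinations of AUs represent emotional expressions can be sketched as a simple set-matching step. The prototype sets below are combinations commonly cited in the FACS literature; they are an assumption for illustration and may differ from the entries of Table 1. The function name `match_emotion` and the use of the Jaccard index as the overlap score are likewise hypothetical choices, not a method taken from the reviewed studies.

```python
# Commonly cited prototypical AU combinations for the six basic emotions.
# These sets are an assumption for illustration and may differ from Table 1.
PROTOTYPES = {
    "happiness": {6, 12},
    "sadness": {1, 4, 15},
    "surprise": {1, 2, 5, 26},
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "anger": {4, 5, 7, 23},
    "disgust": {9, 15, 16},
}


def match_emotion(active_aus):
    """Return (emotion, score) for the prototype that best overlaps the active AUs.

    Overlap is scored with the Jaccard index (intersection over union).
    """
    active = set(active_aus)

    def jaccard(proto):
        union = active | proto
        return len(active & proto) / len(union) if union else 0.0

    scores = {emotion: jaccard(proto) for emotion, proto in PROTOTYPES.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


print(match_emotion([6, 12]))        # AU6 cheek raiser + AU12 lip corner puller
print(match_emotion([1, 2, 5, 26]))  # overlaps both surprise and fear prototypes
```

A rule table of this kind only covers prototypical expressions; naturalistic in-cabin faces usually show partial or blended AU patterns, which is one reason learned classifiers are used in practice.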

Most of the existing emotion studies on facial expression employ discrete emotion models, focusing on the expression of six basic emotions, due to their universal properties and their apparent reference representations in human emotional life [35]. There are also some studies analyzing the relationship between facial expressions and emotions based on dimensional emotion models. Russell’s research shows that facial expression appears to indicate the valence of a person’s emotional state reliably [36]. For example, Duchenne smiles [37], which involve the wrinkling of muscles around the eyes, are reported to be associated with positive emotional experiences. In contrast, negative emotion inductions are often associated with visible facial behavior in which the eyebrows are lowered and brought closer together [38].

3.2 Speech Expression

Speech delivers emotional information through linguistic messages and through acoustic messages that reflect how the words are spoken [39]. The linguistic content of speech carries emotional information. Some of the information can be inferred directly from the surface features of the words, which have been summarized in emotional word dictionaries [40]. The rest of the information lies under the text surface and can only be understood when considering semantic contexts (e.g., discourse information). Although the association between linguistic content and emotion is language-dependent, multiple cross-lingual models have been developed for emotion detection [41, 42]. In addition to the linguistic messages, the acoustic messages can also deliver rich information (such as age, gender, and hometown). Acoustic expressions are described by speech prosody (pitch, loudness, rhythm). A happy voice usually has a higher pitch, greater loudness, and a faster speech rate, whereas a sad voice has a lower pitch and a slower speech rate.

There are two opinions on acoustic expressions [43]. The first is that acoustic expressions can distinguish different basic emotional states, which are discrete. Early researchers such as Darwin stated that specific intonations corresponded to specific emotional states [43]. Banse and Scherer [44] studied the relationship between 14 induced emotions and 29 acoustic variables. The authors found that a combination of ten acoustic properties can distinguish discrete emotions. Cowie’s research [45] on qualitative acoustic correlations of basic emotions shows that the basic emotion-related prosodic features extracted from audio signals include pitch, energy, and speech rate. Table 2 illustrates the changes in various acoustic features during the occurrence of six basic emotions [45, 46]. The pitch, intensity, duration, and spectral characteristics are shown for each emotion, with changes indicated as an increase, a decrease, or no significant change.

The other opinion is that acoustic expressions vary along the continuous dimensions of valence and arousal. The most consistent association reported in the literature is the relationship between arousal and acoustic pitch. Higher arousal levels are linked to higher-pitched vocal samples [47]. Bachorowski and Owen [48] suggested that acoustic pitch can be used to assess the level of emotional arousal currently experienced by individuals. It is much more challenging to find acoustic features sensitive to valence [47] because valence correlates strongly with textual features from the spoken word [49].

3.3 Body Gesture Expression

Many studies have shown that body language plays a vital role in emotional communication and conveys specific information about a person’s emotional state [50]. Body language includes different types of non-verbal indicators such as facial expressions, body postures, gestures, eye movements, touch, and the use of personal space [51]. Besides the face, the hands convey the most abundant body-language information [52]. For example, people using open gestures are perceived as having positive emotions [53]. Head positioning also reveals much information about the emotional state. People are prone to speak more if the listener encourages them by nodding [53]. The above knowledge indicates that it is necessary to consider various parts of the body at the same time to correctly interpret body language as an indicator of emotional state [50].

Studies have investigated emotional coding systems for body language [54], but a broad consensus has not yet been reached on how to code body posture and movement. Table 3 provides a summary of the general body expressions associated with basic emotions, with detailed descriptions of body, hand, arm, leg, and other expressions [50, 55]. Based on the discrete emotional model, Gunes and Piccardi [56] showed that naive representations from the upper body can be used to classify body postures into six emotional categories, namely anger, disgust, fear, happiness, sadness, and uncertainty. Castellano et al. [57] showed that dynamic representations of body postures could be categorized as anger, joy, happiness, or sadness. Based on skeletal geometric features, Saha et al. [58] identified gestures corresponding to five basic human emotional states, including anger, fear, happiness, sadness, and relaxation.

Researchers also studied the emotional expression of body language based on dimensional emotion models. Glowinski et al. [59] showed that essential groups of emotion, associated with the four quadrants of the valence-arousal space, can be distinguished from the representation of trajectories of head and hands from the frontal and lateral view of the body. The compact representations were grouped into clusters that divided the inputs into four categories based on their positions in the dimensional space, namely high-positive, high-negative, low-positive or low-negative. Kosti et al. [60] used features extracted from the body and background to study the intensity values, arousal, and dominance.
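
The four-way grouping by position in the valence-arousal space can be sketched as a simple quadrant lookup. This is only an illustration of the labeling scheme, not the clustering procedure of [59]; the function name `va_quadrant`, the [-1, 1] value range, and the zero thresholds are assumptions.

```python
def va_quadrant(valence, arousal):
    """Map a (valence, arousal) pair to one of four quadrant labels.

    Assumes both values are centered on zero (e.g., scaled to [-1, 1]);
    the zero thresholds are an illustrative choice.
    """
    level = "high" if arousal >= 0 else "low"
    sign = "positive" if valence >= 0 else "negative"
    return f"{level}-{sign}"


print(va_quadrant(0.8, 0.6))    # pleasant and activated
print(va_quadrant(-0.7, 0.9))   # unpleasant and activated
print(va_quadrant(-0.4, -0.5))  # unpleasant and deactivated
```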

3.4 Physiological Expression

Although an individual may not clearly express his or her emotions through speech, gestures, or facial expressions, changes in physiological patterns are inevitable and detectable [61]. Emotional experience is accompanied by a series of physiological expressions that will, in turn, enhance the human emotional experience. Human physiological expression can be described from two aspects: the autonomic nervous system (ANS) response and the central nervous system (CNS) response.

3.4.1 ANS Response

The ANS is a general-purpose physiological system responsible for regulating peripheral function [62]. The system consists of sympathetic and parasympathetic branches, which are usually associated with activation and relaxation, respectively. When a person is positively or negatively excited, the sympathetic nerves of the ANS are activated [63]. This sympathetic activation increases the respiratory rate, raises heart rate (HR), reduces heart rate variability (HRV), and raises blood pressure [64].
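
The two cardiac indices mentioned above can be computed from a series of inter-beat (RR) intervals. The sketch below uses mean heart rate and RMSSD, a standard time-domain HRV index; choosing RMSSD is an assumption made here, since the text does not specify an HRV measure. The two RR series are synthetic values for illustration, not measured data.

```python
import math


def mean_heart_rate(rr_ms):
    """Mean heart rate in beats per minute from RR intervals in milliseconds."""
    return 60000.0 / (sum(rr_ms) / len(rr_ms))


def rmssd(rr_ms):
    """Root mean square of successive RR differences (time-domain HRV index)."""
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Synthetic RR series in milliseconds (assumed values, not measured data).
relaxed = [1000, 1060, 980, 1040, 960]  # long, variable intervals
aroused = [700, 705, 695, 702, 698]     # short, regular intervals

for label, rr in (("relaxed", relaxed), ("aroused", aroused)):
    print(f"{label}: HR = {mean_heart_rate(rr):.1f} bpm, RMSSD = {rmssd(rr):.1f} ms")
```

In this synthetic example the aroused series yields a higher HR and a lower RMSSD, which is the direction of change described for sympathetic activation.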

Some emotional researchers proposed that each emotion (e.g., sadness, anger, fear) had a relatively certain and reliable autonomic response pattern [65]. Table 4 summarizes various studies investigating the autonomic specificity of basic emotions, which demonstrate that different emotions are associated with specific physiological responses, including galvanic skin response, heart rate, blood pressure, finger temperature, and heart rate variability [66, 67]. Other researchers indicated that it might be best to observe ANS responding from the perspective of the dimensional emotion model such as arousal [68]. Lang and his colleagues [69] found that skin conductance level (SCL) increases linearly with the rated arousal of emotional stimuli. A meta-analysis showed that blood pressure, cardiac output, HR, and skin conductance response duration respond to emotional valence [70].

3.4.2 CNS Response

The physiological correlation of discrete emotions is more likely to be found in brain activities rather than in peripheral physiological responses [71]. Therefore, emotional representations in the CNS response have been investigated using a variety of frameworks [72]. A single-system emotion model assumes that one neurological system drives the experience and expression of all emotions, whereas multisystem models conceptualize emotions and their correlations into discrete bins [73]. Recent advances in functional neuroimaging have provided an opportunity to delineate a greater central mechanism supporting emotional processes [74].

Neuroimaging studies have shown that human emotions are controlled by a circuit whose components include the orbitofrontal cortex, ventromedial prefrontal cortex, amygdala, hypothalamus, brainstem, cingulate cortex, thalamus, hippocampus, nucleus accumbens, insula, and cortical sensory layers, among others. These are the critical brain regions for emotional production, emotional experience, and emotional regulation [73]. Activation and inactivation of different brain regions may indicate that they play different roles in emotional processing [75]. The application of activation likelihood estimation (ALE) revealed consistent, dissociable activation of distinct areas for happiness, sadness, anger, fear, and disgust [76]. Emotional specificity was confirmed by comparing emotional categories to each other. Kirby and Robinson [77] replicated the work of Vytal and Hamann [76] using the BrainMap database and ten times the number of studies. Although evidence of different brain region networks was found in certain emotions, there were also brain regions that were always activated in all emotions, suggesting the existence of a multisystem model [77].

- Summary of human emotion expressions: This section discusses the multimodal expression of human emotions from four aspects: facial expression, speech, body gesture, and physiological changes. Facial expressions and speech expressions are the best and most commonly used in-cabin cues for detecting human emotions due to their non-invasive properties. Besides, for drivers’ emotion detection, there is a strong correlation between body gestures and driving behaviors. Since the steering wheel angle and gas pedal angle can reflect the drivers’ body gestures, the relationship between body gestures and human emotions is illustrated. Moreover, physiological expressions are detailed in this section. Wearable physiological devices can be used to measure physiological expressions (e.g., heart rate and skin conductance activity) in driving, and human brain activities are commonly used as the gold standard (ground truth) in emotion detection. With the development of sensing devices, CAVs will provide more detection methods for human emotion expression-based research in driving.

4 Human Emotion Experiment in Driving

Collecting rich and reliable data is an essential step in the study of human emotions while driving. Therefore, the purpose of this section is to discuss how to design experiments to collect valid data. Table 5 presents a summary of human emotion experiments in driving, including the experiment platforms, induction methods, emotion measurement methods, and emotion annotation methods.

4.1 Experiment Platform

As shown in the platform column of Table 5, the experiment platforms used in human emotion experiments in driving include both driving simulators and real vehicles for on-road driving. Driving simulators are widely used in automotive research, providing simple data collection, low-cost testing, driver safety, adaptability, and a fully controllable environment.

Consequently, driving simulators are often used in driver emotion research [78]. Limitations of driving simulators compared to the real driving environment include limited physical, behavioral, and perceived fidelity, which may lead to unrealistic driving behavior, reduced subject involvement in the study, and hazards not being taken seriously by the driver, thus affecting the reliability of the research results [79]. To date, even the most advanced driving simulators are not able to imitate highly complex and realistic driving situations [78]. Motion sickness is a common side effect for subjects in simulator studies, leading to blurred vision, migraine, epilepsy, and dizziness, and negatively affecting the usability of simulators [79].

Although many studies have been conducted in the laboratory environment, a controlled environment still has a strong influence on subjects’ emotions and behavior, which may limit the reliability of the generated results. Therefore, many researchers conduct on-road testing at the advanced stage of research [80]. Currently, there is no framework for on-road research for human emotion investigations. Generally, when deciding on a driving route, subjects are required to travel either along a route familiar to them or along pre-planned routes that cover different driving situations and road types. Previous automotive research suggests combining or comparing three different road types when planning routes: rural, urban, and major [81]. However, a significant concern for on-road research is that it is unsafe for a driver in an emotional state to drive on real roads, as this may cause traffic accidents.

4.2 Emotion Induction

High-quality emotion elicitation stimuli are vital for driver emotion studies [102]. The purpose of emotion induction methods is to induce the target emotions in subjects. Generally, choosing the appropriate elicitation scene or stimulus depends on the target emotions and the experimental situation. As shown in the induction method column of Table 5, various emotion induction techniques have been used in human emotion experiments in driving, including emotional video clips, traffic scenarios, music, and other methods. Siedlecka and Denson [108] have classified emotion induction techniques into five broad categories: situational procedures, visual stimuli, auditory stimuli, standardized imagery, and autobiographical recall. Emotional behavior can also be used as an emotional stimulus.

Situational procedures involve creating a driving situation and putting subjects directly into the situation that elicits the target emotion; this is generally achieved by controlling the traffic, environment, accidents, and personal interaction (e.g., having to catch a plane during road congestion) [90, 102]. Visual stimuli can be film clips (videos) or static images with emotional content selected to elicit target emotions [103]. Auditory stimuli activate emotions via specific types of auditory input (e.g., music and the voice of the smart agent in the car) [103]. Standardized imagery involves subjects creating vivid mental representations and imagining themselves in a novel event designed to evoke strong emotion [94]. Standardized imagery can consist of reading vignettes, often with guidance from the experimenter. Autobiographical recall involves summoning personal emotional memories to reactivate emotions from the original emotional experience. Emotional behavior as an emotional stimulus involves participants posing specific expressions or behaviors (e.g., posing a smiling expression to induce happiness) to elicit target emotions [92]. Studies have shown that visual stimuli usually achieve better results in emotion induction among the basic emotions [108]. Besides, as shown in the induction method column of Table 5, situational procedures are mainly used for emotion induction in driving scenarios.

4.3 Emotion Measurement

Using appropriate methods to accurately measure various changes caused by emotions is essential for emotion detection. The measurement column of Table 5 lists various measurements that have been used in human emotion driving research. These measurements can be broadly classified into three categories: physiological measurements, behavioral measurements, and self-reported scales. Physiological measurements refer to the recording and analysis of biological signals from the human body. Behavioral measurements refer to the observation and analysis of human behavior in response to emotional stimuli. Self-reported scales refer to questionnaires or surveys that ask participants to rate their own emotional state in response to stimuli.

Since emotional features are closely related to the drivers’ physiological characteristics, physiological measurements such as electroencephalography (EEG), functional near-infrared spectroscopy (fNIRS), electrocardiography (ECG), electrodermal activity (EDA), and respiration (RESP) used in driver physiology can be used to detect driver emotions [99,100,101]. There are also some authors who use self-reported scales to measure driver emotions. Frequently used self-reported scales include the positive and negative affect schedule (PANAS) [109], the self-assessment manikin (SAM) [110], and the differential emotions scale (DES) [111].
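
Scoring a self-reported scale is typically a simple aggregation step. The sketch below scores the commonly used 20-item PANAS form, in which ten positive-affect and ten negative-affect adjectives are each rated from 1 to 5 and summed per subscale. The item wording is reproduced from the widely circulated form and should be checked against [109]; the function name `score_panas` and the dictionary input format are assumptions.

```python
# Item wording follows the commonly used 20-item PANAS form; verify against [109].
POSITIVE_ITEMS = ["interested", "excited", "strong", "enthusiastic", "proud",
                  "alert", "inspired", "determined", "attentive", "active"]
NEGATIVE_ITEMS = ["distressed", "upset", "guilty", "scared", "hostile",
                  "irritable", "ashamed", "nervous", "jittery", "afraid"]


def score_panas(ratings):
    """Return (positive_affect, negative_affect) sums from item ratings of 1..5.

    `ratings` maps each item word to an integer rating; a missing item raises
    KeyError and an out-of-range rating raises ValueError.
    """
    for item in POSITIVE_ITEMS + NEGATIVE_ITEMS:
        value = ratings[item]
        if not 1 <= value <= 5:
            raise ValueError(f"rating for {item!r} must be between 1 and 5")
    positive = sum(ratings[item] for item in POSITIVE_ITEMS)
    negative = sum(ratings[item] for item in NEGATIVE_ITEMS)
    return positive, negative


# Hypothetical participant: moderately positive, minimally negative.
example = {item: 4 for item in POSITIVE_ITEMS}
example.update({item: 1 for item in NEGATIVE_ITEMS})
print(score_panas(example))
```

Each subscale score ranges from 10 to 50, so a pre-drive and post-drive administration can be compared directly to check whether an induction procedure shifted the intended subscale.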

In addition, researchers have used behavioral measurements to infer driver emotions. To recognize emotions more accurately through facial behavior during driving, Gao et al. [92] used near-infrared (NIR) facial expression recognition. Acoustic emotion recognition based on dialogue between the driver and the in-vehicle information system has also been used to measure emotions [93, 95, 96]. To identify drivers’ emotions through body posture and movement, Raja et al. [94] proposed using radio frequency (RF) signals. Some authors suggest collecting information about the driver, vehicle state, and surrounding changes to infer the driver state; for example, Nor and Wahab [97] classified drivers’ happiness and unhappiness using a multilayer perceptron neural network based on the pressure characteristics of the gas and brake pedals.

Considering emotion measurement comprehensively from different aspects is vital for emotion understanding. To obtain more reliable results, different measurement tools are usually combined [102,103,104,105,106,107]. These researchers used physiological measurements, behavioral measurements, and self-reported measurements to identify drivers’ emotional states. For example, Wan et al. [102] reported the detection of anger using features of heart rate (HR), blood volume pulse (BVP), skin temperature (ST), EDA, RESP, a self-reported scale, and the driving behavior of drivers. Lee et al. [105] reported the detection of three basic emotions (neutral, boredom, frustration) using features of photoplethysmography (PPG), electromyography (EMG), and an inertial measurement unit (IMU). The position of the in-cabin sensors used in driver emotion detection is illustrated in Fig. 4. The application of these methods has led to substantial progress in effectively monitoring and detecting driver emotions in real time.

Comparatively, physiological measurements are more objective and can be measured continuously; however, this measurement is highly intrusive and may have an impact on the measurement result. Although behavioral measurement is rarely intrusive and can be measured by non-contact sensors, its performance level could decline because people can try to fake or disguise their emotions. Self-reported scales measure the subjective experience of participants when applied correctly, and provide the opportunity to gain valuable insights about the experienced emotions, but such measurements cannot take place during the study without interruption. The methods require a relatively long period to perform, potentially resulting in unreliable data. In general, in order to obtain more accurate recognition results, an appropriate measurement method needs to be selected according to the experimental environment and purpose.

4.4 Emotion Annotation

To effectively detect human emotions in CAVs, it is important to obtain reliable emotional annotations which can be used as the standard for training and evaluation of the models. As shown in the annotation column of Table 5, there are three annotation methods: self-reports, experimental scenarios, and annotators. The first approach involves employing emotional self-reports [87, 103], which requires subjects to be able to express and quantify how they feel at a particular moment. The second approach involves using different experimental scenarios to label the emotional experiences [90, 99]. For example, in the case of on-road studies, researchers control different driving traffic to trigger specific emotional states, and in the case of driving simulator studies, researchers have several opportunities to modify experimental situations and induce specific emotional states. The third method involves the use of an external annotator, which can identify certain emotional states based on different signals (e.g., voice, facial expressions) of subjects [96, 97].

In comparison, self-reports demand cognitive resources from subjects, are subjective, and may reflect strong biases (e.g., inconsistencies in evaluation criteria, false memories, and a desire to impress the experimenter). Although labeling by experimental scenario reduces the burden on the subjects, it makes some strong assumptions that may not generalize to all subjects and road conditions [15]. Annotation by external annotators is very time-consuming and labor-intensive and requires experienced and well-trained observers, who may be challenging to find and expensive, especially at scale [15]. Besides, some studies have explored combining multiple methods to overcome the weaknesses of any one method [112]. On the whole, no one method is perfect, so researchers need to choose a proper annotation approach according to the experimental scenario and purpose.

-

Summary of human emotion experiment in driving: This section discusses the platform, induction, measurement, and annotation in human emotion experiments. The collection of emotional data during driving mainly depends on the experimental platform. The data collection based on the driving simulator can reduce the driving risk caused by emotion during the experiment. Data collection based on on-road driving can collect more accurate responses from participants in real scenarios. At present, most studies choose to carry out driving simulator experiments in the early stage of emotion research and choose to carry out on-road driving experiments in the later stage or verification stage of emotion research. For the induction, measurement, and labeling of emotions, the choice should also be made according to the experimental platform. On the driving simulator, the experimental emotion measurement tools are rich, the induction method is controllable, and the labeling is easy, but there will be problems that the intensity of the collected emotional data is not high and the characteristics are not obvious. On the on-road driving, the emotions experienced by participants are more real, and the intensity of the collected emotional data is relatively high, but the emotional measurement tools of the experiment are easily limited (measurement equipment is easily disturbed in an uncontrollable environment), and labeling also requires a lot of time and human resources. Therefore, in the human emotion experiment in driving, it is necessary to combine the research stage and the purpose of the experiment, choose the appropriate experimental platform, and further choose the measurement method, induction method, and labeling tools for emotion research.

5 Human Emotion Detection in CAVs

Accurate, stable, and efficient detection of the emotional states of drivers and occupants is a prerequisite for affective human-vehicle interactions. Therefore, the purpose of this section is to discuss how CAVs use emotional expressions, driving context, and road information to recognize the emotional states of drivers and occupants.

5.1 In-cabin Driver Emotion Detection

Driver emotion detection algorithms are developed based on selected classification methods and measurement signals. The algorithms are usually implemented using supervised machine learning technologies. The algorithm column of Table 5 not only lists the different measurements used in driver emotion research, but also summarizes the main detection algorithms and corresponding recognition accuracy reported in previous studies. The detection algorithms used in these studies can be broadly classified into five categories: facial-expression-based, speech-based, driving-behavior-based, physiology-based, and multimodal-based.

5.1.1 Facial-Expression-Based

Facial expression is an effective, commonly used, and non-invasive cue for emotion detection. There are two main detection tasks for emotion detection: discrete emotion detection (a classification task for basic emotions) and dimensional emotion detection (a regression task for valence, arousal, and dominance). As shown in Table 5, most of the facial-expression-based studies choose discrete emotions (six basic emotions and neutral) as the detection targets, and deep neural networks, particularly CNN-based models, are commonly used in facial classification, such as GLFCNN [82], Xception [86, 87], and basic CNN [85, 90]. Equations (1) and (2) show the most commonly used loss functions (multi-class cross-entropy loss and binary cross-entropy loss) for facial-expression-based emotion detection [82, 85, 86, 88]. As for dimensional emotion detection, some researchers choose regression methods. CogEmoNet was proposed to solve the regression task for dimensional emotion recognition, and the Mean Squared Error (MSE) and Concordance Correlation Coefficient (CCC) loss functions were used [86]. The MSE and CCC loss functions are shown in Eqs. (3) and (4).
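As an illustration of the four loss functions named in this subsection, the following minimal Python sketch computes multi-class cross-entropy, binary cross-entropy, MSE, and a CCC-based loss. The function names, the per-sample formulation, and the use of 1 − CCC as the loss are assumptions made for illustration; they are not taken from the cited studies.

```python
import math

def cross_entropy(probs, label):
    """Multi-class cross-entropy for one sample: -log of the probability of the true class."""
    return -math.log(probs[label])

def binary_cross_entropy(p, y):
    """Binary cross-entropy for one sample; p is the predicted probability, y is 0 or 1."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def mse(pred, target):
    """Mean squared error between predicted and annotated scores (e.g., valence)."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def ccc(pred, target):
    """Concordance correlation coefficient using population variances and covariance."""
    n = len(pred)
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    var_p = sum((p - mean_p) ** 2 for p in pred) / n
    var_t = sum((t - mean_t) ** 2 for t in target) / n
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target)) / n
    return 2 * cov / (var_p + var_t + (mean_p - mean_t) ** 2)

def ccc_loss(pred, target):
    """Assumed loss form: 1 - CCC, so perfect agreement gives a loss of 0."""
    return 1.0 - ccc(pred, target)
```

Unlike MSE, the CCC term penalizes both low correlation and a systematic offset between predicted and annotated scores, which is one reason the two are often reported together for valence and arousal regression.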

Besides, some traditional machine learning methods are used in facial expression classification, such as SVM [82, 87, 91, 92]. The loss function of SVM used in the detection procedure is hinge loss. According to Table 5, the results of CNN-based methods are generally better than those of traditional machine learning methods in driver emotion studies. CNNs are deep learning models that can automatically learn and extract features from input data, making them well-suited for facial-expression-based emotion recognition tasks. In contrast, traditional machine learning methods require manual feature extraction and selection, which can be time-consuming and may not capture all relevant features. Using CNN-based methods can improve the accuracy of driver emotion recognition tasks and may be a promising approach for future research in this field.
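The hinge loss mentioned above can be sketched for a linear binary classifier as follows. This is a minimal illustration under assumed conventions (labels encoded as −1 and +1, hand-extracted facial features supplied as a list of floats); the helper names are hypothetical and do not correspond to any cited implementation.

```python
def linear_score(weights, bias, features):
    """Raw decision value w . x + b of a linear classifier."""
    return sum(w * x for w, x in zip(weights, features)) + bias

def hinge_loss(score, label):
    """Hinge loss for one sample; label must be -1 or +1."""
    return max(0.0, 1.0 - label * score)

def mean_hinge_loss(weights, bias, samples):
    """Average hinge loss over (features, label) pairs."""
    losses = [hinge_loss(linear_score(weights, bias, x), y) for x, y in samples]
    return sum(losses) / len(losses)
```

The loss is zero only when a sample lies on the correct side of the decision boundary with a margin of at least 1, which is what drives the maximum-margin behavior of SVMs.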

5.1.2 Speech-Based and Driving-Behavior-Based

Speech data also offer a practical and non-invasive basis for driver emotion detection. Current speech-based studies usually aim to detect drivers’ road rage and negative emotions. The classification methods used are mainly traditional classification methods, such as KNN [94], GMM [95], SVM, and random forest [93]. The commonly used loss functions for GMM, SVM, and random forest are log-likelihood loss, hinge loss, and MSE loss, respectively. The log-likelihood loss is shown in Eq. (5). Besides, some researchers used neural networks to solve classification tasks. Kamaruddin et al. [96] used MLP to perform 4-class emotion classification based on audio information. For driving behavior data of drivers, Nor and Wahab used MLP to classify the happiness and neutral emotions [97].
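To make the log-likelihood loss of a GMM concrete, the sketch below evaluates the average negative log-likelihood of a one-dimensional Gaussian mixture and uses it to pick the emotion class whose mixture explains a feature sequence best. The one-dimensional feature, the class names, and the function names are assumptions for illustration only; practical speech systems typically work with multi-dimensional acoustic features.

```python
import math

def gaussian_pdf(x, mean, var):
    """Density of a univariate Gaussian with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def gmm_neg_log_likelihood(samples, weights, means, variances):
    """Average negative log-likelihood of the samples under a 1-D Gaussian mixture."""
    total = 0.0
    for x in samples:
        mixture = sum(w * gaussian_pdf(x, m, v)
                      for w, m, v in zip(weights, means, variances))
        total -= math.log(mixture)
    return total / len(samples)

def classify(samples, class_models):
    """Return the class whose mixture gives the lowest negative log-likelihood.

    class_models maps a (hypothetical) class name to (weights, means, variances).
    """
    return min(class_models,
               key=lambda name: gmm_neg_log_likelihood(samples, *class_models[name]))
```

In this setup one mixture is fitted per emotion class, and classification reduces to comparing likelihoods across the class models.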

5.1.3 Physiology-Based

Physiological signals are closely related to drivers’ emotional features. Therefore, some studies attempt to use physiological data to detect drivers’ emotions. Logistic regression [98, 100], SVM [99], and backpropagation methods [101] are commonly used in these studies. However, fewer studies address such classification tasks than other approaches, because physiological signal devices, such as ECG and EEG devices, are intrusive. Meanwhile, high-quality physiological data are difficult to obtain compared to facial expressions and audio signals.

5.1.4 Multimodal-Based

Uni-modal approaches based on facial expression, audio, driving behaviors, or physiological data have their limits. Generally, the reliability of uni-modal approaches is not good enough, especially for practical use. Therefore, some researchers choose multimodal signals to address the classification tasks. The most commonly used combinations, as shown in Table 5, are physiological data and driving behavior data [102, 105,106,107], speech signals and driving behavior data [103], and facial expression and speech signals [104, 107]. Combining multiple sources of data in this way is intended to improve the accuracy of emotion detection. Traditional machine learning methods, such as SVM [105], linear discriminant [106], least square support vector machine [102], and Bayesian network [107], are commonly used in solving this task, with hinge loss and log loss as the typical loss functions. These methods have been applied to different modalities and have achieved varying levels of accuracy in driver emotion detection. Transfer learning has also been successfully applied to driver emotion detection, as shown in one study that classified emotions based on facial expressions and speech signals [104]. Overall, the results of multimodal approaches are generally better than those of unimodal approaches, suggesting that combining different sources of data can improve the accuracy of emotion detection in driving. However, the choice of methods and combinations may depend on the specific context and available data.
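One simple way to combine modalities is decision-level (late) fusion, in which each modality produces its own class probabilities and the results are averaged with weights. The sketch below illustrates this idea only; the cited studies use a variety of fusion strategies, and the modality names, weights, and function names here are hypothetical.

```python
def late_fusion(modality_probs, weights):
    """Weighted average of per-modality class probabilities.

    modality_probs: dict mapping modality name -> list of class probabilities
    weights: dict mapping modality name -> non-negative reliability weight
    """
    total_weight = sum(weights[name] for name in modality_probs)
    n_classes = len(next(iter(modality_probs.values())))
    fused = [0.0] * n_classes
    for name, probs in modality_probs.items():
        for i, p in enumerate(probs):
            fused[i] += weights[name] * p / total_weight
    return fused

def predict(modality_probs, weights, class_names):
    """Return the class name with the highest fused probability."""
    fused = late_fusion(modality_probs, weights)
    return class_names[fused.index(max(fused))]
```

A practical advantage of late fusion is that a modality can be dropped at run time (for example, when the face is occluded) by omitting it from `modality_probs`, since the weights are renormalized over the modalities that are present.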

5.2 In-cabin Occupant Emotion Detection

With the development of CAVs, emotion recognition for vehicle occupants is gradually gaining more attention. Since current CAVs have not reached the level of fully autonomous driving, only a few studies on occupants’ emotion detection have been conducted. However, models of general emotion detection might be applicable to occupant emotion detection, since occupants are not engaged in a driving task. Table 6 presents a summary of the state-of-the-art emotion detection algorithms for occupants in vehicles in the past five years. Compared to the detection models used for drivers’ emotion detection, the detection models used for general emotion detection are more up-to-date and the accuracy is generally higher. In Table 6, the occupants’ emotion detection methods are categorized into four parts corresponding to the driver emotion detection categories: facial-expression-based, speech-based, physiology-based, and multimodal-based. Each category is discussed in the following subsections.

5.2.1 Facial-Expression-Based

In Table 6, facial expressions are one of the modalities used to detect the emotions of vehicle occupants. Some studies are summarized in the facial-expression-based part, and the datasets used in these studies are the CK+ dataset, the Japanese Female Facial Expression (JAFFE) dataset, and the CREMA-D dataset, which contain facial expression data and labeled emotion annotations. The facial expressions in CK+, JAFFE, AFEW, and CREMA-D are acted by actors/actresses [113, 114, 116]. In terms of algorithms, Table 6 shows that the detection accuracies are generally higher on datasets of acted expressions than on datasets of spontaneous expressions. The reason is that acted facial expressions are more exaggerated and easier to recognize than spontaneous expressions, which tend to be more subtle and variable. However, spontaneous expressions are more reflective of real-world emotions and are therefore more relevant to emotion detection in naturalistic settings such as driving. Meanwhile, the algorithms shown in Table 6 are mainly CNN- and RNN-based models. The MSE and cross-entropy loss are commonly used as the loss functions [117] in facial-expression-based emotion detection. The loss functions are shown in Eqs. (1), (2), (3), and (4). Dimensional emotion recognition has not been studied for occupants’ emotion detection, possibly because few public datasets contain both facial expressions and arousal and valence scores.

5.2.2 Speech-Based

Table 6 shows that some studies have employed speech signals as the approach to detect the emotions of vehicle occupants. Speech recognition systems can analyze the tone, pitch, and prosody of the driver’s voice to detect emotions such as frustration, excitement, and anger. They are summarized in the speech-based part and the major datasets used in speech-based studies are RAVDESS, IEMOCAP, eNTERFACE, and RECOLA database, which contain the emotional speech segments and the labeled emotion annotation. The speech records in the RAVDESS dataset, IEMOCAP dataset, and eNTERFACE dataset are acted by actors/actresses [119, 122] and the speech records are spontaneous in RECOLA database [123]. The algorithms shown in Table 6 are mainly CNN-based models and RNN-based models, such as Bi-directional LSTM architecture [119], Deep C-RNN [120], etc. The MSE [123] and cross-entropy [121] loss are commonly used as the loss function in speech-based detection.
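Two of the low-level prosodic cues behind such analyses, loudness and pitch, can be sketched in a few lines. The example below computes the root-mean-square energy of a speech frame and estimates its fundamental frequency with a plain autocorrelation search. It is a simplified illustration with assumed parameter values (a 50 to 500 Hz search range) and hypothetical function names; it does not reproduce the feature pipeline of any cited study.

```python
import math

def rms_energy(frame):
    """Root-mean-square energy of a frame, a rough proxy for loudness."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def estimate_pitch(frame, sample_rate, fmin=50.0, fmax=500.0):
    """Estimate the fundamental frequency (Hz) from the autocorrelation peak.

    Searches lags corresponding to the assumed pitch range [fmin, fmax].
    Returns 0.0 if no positive autocorrelation peak is found.
    """
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)
    best_lag, best_value = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        value = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if value > best_value:
            best_lag, best_value = lag, value
    return sample_rate / best_lag if best_lag else 0.0

# Usage: a synthetic 200 Hz tone sampled at 8 kHz stands in for a voiced frame.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 200 * i / sample_rate) for i in range(800)]
pitch_hz = estimate_pitch(tone, sample_rate)
energy = rms_energy(tone)
```

Frame-level values such as these are usually summarized over an utterance (e.g., mean and range of pitch) before being passed to a classifier.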

5.2.3 Physiology-Based

As shown in Table 6, physiological signals such as EEG are used to detect the emotions of vehicle occupants. Sensors can be installed on the vehicle to measure these physiological signals, which can then be analyzed using machine learning algorithms to detect emotions. Previous studies are summarized in the physiological-based section. The major datasets used in physiology-based studies are SEED dataset [124, 125, 128], and DEAP dataset [129], which contain the emotional physiological signals, the labeled emotion and the dimensional emotion annotation. Most of the datasets choose EEG signals as the emotion-related factor and eye movement is also contained in some datasets, such as SEED dataset [126, 127]. Besides, the algorithms used in physiology-based detection are mainly CNN-based models and transfer learning models.

5.2.4 Multimodal-Based

Some studies also choose multimodal signals to detect the emotions of occupants. Table 6 summarizes studies that have employed multimodal approaches to emotion detection for vehicle occupants. These approaches combine multiple modalities, such as facial expressions, speech signals, and physiological signals, to improve the accuracy of emotion detection. The major datasets used in these studies are the IEMOCAP and MOSI datasets, which contain speech signals, facial expressions, and labeled emotion annotations. Deep learning algorithms such as LSTM-based models have been employed in some studies to analyze these multimodal datasets and achieve high accuracy in emotion detection [131,132,133]. There are typically fewer multimodal detection studies than uni-modal studies, possibly because few public datasets contain both facial expressions and audio signals. The major loss functions used in the multimodal approach are MSE [130] and cross-entropy [132, 133].

5.3 Driving-Context-Based Human Emotion Detection

Since human emotions are mainly triggered by the driving context or by events that happen during driving, such as the weather, the road type, and the traffic conditions, the driving context is also an important factor for drivers’ emotion recognition. Studies in this area are limited because traditional vehicles lack the capacity to perceive conditions outside the vehicle. However, with the development of CAVs and the improving perception capability of automated vehicles, combining driving context with in-cabin signals will become possible, and the accuracy of detection may be further improved.

Table 7 summarizes the current studies on the driving-context-based approach for drivers’ emotion detection. These studies focus on analyzing the influence of different driving contexts such as traffic conditions and road types on drivers’ emotional states. Bustos et al. [134] and Bitkina et al. [138] are examples of researchers that have investigated the influence of traffic conditions and road types on drivers’ stress levels. These studies have found that certain driving contexts such as heavy traffic or narrow roads can lead to increased stress levels in drivers. Logistic regression was used to detect the stress levels and the highest accuracy reached 82.80%. Dobbins et al. [137] used the combination of the physiological data and driving context to analyze the stress level, and correlation analysis was performed in their discussion. Bethge et al. [136] proposed a new method to use the traffic condition, weather, and vehicles’ dynamic information to detect drivers’ emotions, and VEmotion model was used to detect the emotions and the accuracy reached 71.00%. Liu et al. [135] used an RNN model to detect the drivers’ emotions by using the surrounding objects and vehicles’ dynamic information. The accuracy of the detection reaches 71%. The number of studies in driving-context-based emotion detection is limited according to Table 7, and further study in this area is needed with the development of CAVs.

5.4 V2X-Based Human Emotion Detection

By integrating V2X technology into driver emotion detection, a driver can recognize the emotional states of neighboring drivers in real time through the exchange of on-board data with other intelligent devices in the intelligent transportation system (ITS). For example, each CAV can share its emotional awareness information by interacting with other vehicles [139]. Furthermore, a local vehicle’s on-board learning data can be combined and processed by the centralized cloud so as to extend its intelligence to the global context [140]. Other emerging technologies are integrated with V2X to improve the efficiency of drivers’ emotion detection, such as machine learning [141] and edge computing [142].

In Ref. [139], a real-time anomaly data detection method is proposed for safe driving based on the driver’s emotional state and cooperative vehicular networks. Zhang et al. [140] proposed a safety-oriented vehicular controller area network (SOVCAN) based on driver emotion detection. The architecture of SOVCAN consists of a data acquisition layer, an intra-net layer, and an inter-net layer. At the data acquisition layer, the driver’s physiological and psychological data are collected by the in-car mobile. At the intra-net layer, the data are transmitted to the on-board device for emotion perception. The processed data are then transferred to the cloud through vehicle-to-cloud (V2C), vehicle-to-infrastructure (V2I), and vehicle-to-vehicle (V2V) communications for preservation and remote control. Vogel et al. [141] introduced a multi-layer cognitive architecture, the emotion-aware vehicle assistant (EVA), for the future design of emotion-aware CAVs. With the emergence of edge computing, Raja et al. [142] designed a driver assistant system, RFexpress, for emotion detection based on wireless edges.
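The three-layer data flow described for SOVCAN can be pictured with a small sketch: acquisition of raw driver data, on-board emotion perception, and packaging of the result for transmission. Everything concrete in the example is an assumption made for illustration, including the field names, the threshold rule standing in for the emotion model, and the message layout; none of it is taken from Ref. [140].

```python
from dataclasses import dataclass

@dataclass
class DriverSample:
    heart_rate: float  # beats per minute (assumed signal)
    eda: float         # electrodermal activity in microsiemens (assumed signal)

def acquire(raw):
    """Data acquisition layer: convert a raw reading into a typed sample."""
    return DriverSample(heart_rate=raw["hr"], eda=raw["eda"])

def perceive_emotion(sample, hr_threshold=100.0, eda_threshold=8.0):
    """Intra-net layer: placeholder rule standing in for an on-board emotion model.

    The thresholds are hypothetical values chosen only to make the sketch runnable.
    """
    if sample.heart_rate > hr_threshold and sample.eda > eda_threshold:
        return "high_arousal"
    return "calm"

def build_uplink_message(vehicle_id, emotion):
    """Inter-net layer: package the perceived state for V2C/V2I/V2V transmission."""
    return {"vehicle_id": vehicle_id,
            "emotion": emotion,
            "channels": ["V2C", "V2I", "V2V"]}

def run_pipeline(vehicle_id, raw):
    """Chain the three layers for a single reading."""
    return build_uplink_message(vehicle_id, perceive_emotion(acquire(raw)))
```

Keeping emotion perception on board and sending only the derived state upstream is one way such a layered design can limit the amount of raw physiological data leaving the vehicle.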

-

Summary of human emotion detection in CAVs: This section discusses methods for recognizing human emotions in CAVs. In the emotion recognition subsections for the driver and occupant in the cabin, the existing emotion recognition models are illustrated based on facial expressions, speech, behavior, physiological changes and multimodal. In addition, as recently focused areas, human emotion recognition based on driving environment information and V2X is also introduced. On the whole, considering that the CAV is an intelligent mobile terminal with information perception inside and outside the vehicle and information communication capabilities, the human emotion detection method in CAVs can use the fusion of environmental information inside and outside the vehicle to achieve accurate, stable and efficient recognition.

6 Human Emotion Regulation in CAVs

After detecting human emotions, the CAV needs to use the human–machine interface to regulate driver emotions for affective human-vehicle interactions. Thus, different displays have been employed to regulate drivers’ emotions in the driving context, as shown in Table 8. The display is an essential component of driver’s emotion regulation, including visual displays such as screens and AR head-up displays, tactile displays such as the vibration of seats, steering wheels, and seat belts, olfactory displays such as lavender and lemon scents, and auditory displays such as voice output. Besides, this section introduces road-related regulation methods, regulation scenarios, and regulation quality analysis methods.

6.1 In-cabin Human Emotion Regulation

6.1.1 Visual Regulation

Vision is the dominant sense during driving, which is the reason for the majority of emotion regulation to be visual. For a low automated level, the use of visual stimuli for driver emotion regulation may cause potential distractions. However, a relaxation of visual regulation requirements is possible when the vehicle runs at a high level of automation. Table 8 describes current studies on the visual regulation of driver’s emotions including ambient light, visual intervention, state feedback, and visual relaxation techniques.

Ambient light refers to the use of ambient illumination in the cockpit to regulate the driver’s emotions. Studies have shown that ambient light can have alarming or distracting qualities for drivers, but it may also have a calming effect, which may depend on brightness, position, and personal familiarity with the function [145, 149, 170]. Visual interventions are distracting by design; therefore, their application while driving needs to be carefully planned. It is also feasible to intervene explicitly when a possibly dangerous emotional state is likely to occur, such as by providing a simple notification, telling the driver to take a break, distracting the driver from the source of negative emotions, or presenting a positive notification to decrease drivers’ negative emotions [143, 146, 147]. State feedback refers to a visual display that allows drivers to understand their current emotional states clearly. Studies that examined visual state feedback of drivers’ negative emotions found that direct feedback on the detected states had little value for emotion regulation, because it could amplify the driver’s negative emotional states, which was unacceptable to drivers and needs to be avoided [143, 147, 170]. Besides, participants preferred to receive only safety-critical notifications of the driver’s state [144]. Relaxation techniques are approaches to impact drivers’ emotional states through dynamic behavior changes. Studies have revealed that such techniques led to a decrease in arousal levels and may become a valuable routine to increase the comfort of CAVs [148].

6.1.2 Tactile Regulation

In the driving context, the tactile display can be presented in multiple ways (e.g., vibration, airstream, force, temperature). The tactile display can be widely used because human skin includes a variety of receptors, namely mechanoreceptors (sensitive to pressure, vibration and slip), thermoreceptors (sensitive to temperature changes), nociceptors (responsible for pain) and proprioceptors (sensitive to position and movement), and any area of the human body can be activated by these receptors (e.g., finger or hand, wrist, arm, chest, back, forehead). Therefore, the tactile display has the potential for robust message delivery without generating additional cognitive demands or affecting driving performance. As shown in the tactile regulation of Table 8, current studies on the tactile regulation of driver’s emotions in CAVs mainly focus on temperature and vibration. Temperature regulation can be used to create a comfortable and calming environment in the vehicle. For example, heated or cooled seats can provide physical comfort to drivers and help them manage stress levels. Vibration regulation can also be used to regulate drivers’ emotional states. For example, vibration of the steering wheel or seat can provide physical feedback to help drivers stay alert and manage stress levels.

The study of temperature regulation by Schmidt et al. [154] reported that cool airstreams were associated with a decrease in low arousal and an increase in alertness and sympathetic activity, leading to better driving performance and acceptance. The other studies chose vibration to regulate drivers’ emotions by instructing the driver to breathe and reported the effectiveness [150,151,152,153]. Besides, the study also found that haptic warning signals were as effective as audible warning signals in handling lane departures for both normal driving situations and driving secondary task situations [155]. In general, tactile regulation is a promising way for driver emotion regulation and can probably be more sufficiently utilized in the future.

6.1.3 Olfactory Regulation

Humans can also obtain information from olfaction. The olfaction has been proven to be good at activating the neural system, which can help drivers to perceive important information better. Hence, although less common, olfactory displays are slowly entering the field of automotive HMI as a new display for human-vehicle interaction [161]. Table 8 also investigates studies that have used different odors to regulate drivers’ emotional states. Examples of odors studied include peppermint, rose, lavender, vanilla, cinnamon, and civet. Olfactory regulation can be used to create a calming and pleasant atmosphere in the vehicle. For example, lavender has been found to have a calming effect on drivers.

Raudenbush et al. demonstrated that both peppermint and cinnamon decreased drivers’ frustration and helped them focus on driving tasks [157]. Besides, Mustafa et al. [163] found that the presence of odors (vanilla and lavender) led drivers to experience positive emotions, such as relaxation, with more reports of comfortable and fresh feelings. Further, Dmitrenko et al. [156] selected odors of different valence and arousal levels (e.g., rose, peppermint, and civet) to regulate drivers’ anger, and found that pleasant odors (rose and peppermint) could shift drivers’ emotions towards positive valence. Furthermore, Dmitrenko et al. [159,160,161] proposed a mapping between different driving-related messages (“slow down,” “refuel,” and “pass by points of interest”) and four scents (lemon, lavender, mint, rose). Some studies also found that intermittently applying scents can effectively keep drivers alert [158, 162]. Integrating olfactory regulation into CAVs can provide a more comprehensive and effective emotional regulation system for drivers. However, it is essential to note that olfactory responses can be subjective and vary between individuals. Therefore, a personalized approach to olfactory regulation may be necessary to ensure effectiveness for each individual driver. Overall, the olfactory regulation of drivers’ emotions in CAVs is a promising area of research, with the potential to significantly improve the driving experience in the future.

6.1.4 Auditory Regulation

Besides the vision-based display, auditory displays are the second most common type of human-vehicle interaction. First, the auditory display is a high-resolution modality that can send complex information quickly through auditory icons (naturally occurring sounds), earcons (e.g., short tunes or bells), and spearcons (speech-based earcons). Second, the auditory display conveys directional (spatial) information, and auditory cues can be received from any direction. Third, compared to visual display, it reduces mental and visual distractions. Therefore, in the auditory regulation part of Table 8, studies on auditory emotion regulation are summarized, including adaptive music, empathetic speech, and auditory intervention. For example, calming music can be played in stressful driving situations to help drivers manage stress levels, and a virtual assistant can use empathetic speech to provide reassurance and guidance to drivers in unfamiliar driving situations.

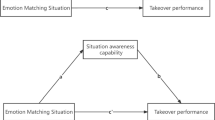

Many studies envisage the application of adaptive music in the driving environment to regulate the driver’s emotions [166, 167, 169]. Studies have indicated that recommending calm music to drivers in a high arousal state could help them calm down, and that it affects drivers’ emotional states and driving behavior in the long run [174, 178, 180]. However, other studies have pointed out that although music affected driving performance and reaction times, it had no significant effect on subjective emotion levels [168]. Empathetic speech requires adapting the speech output of the in-vehicle voice agent to the emotional state of drivers and occupants in the driving environment. Studies on empathetic speech revealed that a matched speech arousal level could have a positive impact on driving performance and the frequency of interactions [175, 179]. Besides, empathetic speech was liked by drivers and could decrease the impact of sadness and anger on driving [170]. Empathetic speech may be a suitable method to regulate the driver’s negative emotions. However, the above studies all worked with prototypical interactions. A clear description of empathy in voice interactions may require further research [16]. A typical form of auditory intervention is reappraisal, which aims to present frustrating situations in a more positive light. One study has shown that reappraisal may be an effective intervention that nudges the driver towards a more positive state, thereby improving driving performance [172]. Another common form of auditory intervention is to instruct the driver to perform breathing exercises [152]. Other researchers have studied positive and negative comments [165], young and old voices [177], familiar and unfamiliar voices [171], warning voices [176], and different emotion regulation strategies to intervene in driver emotions [181, 182]. In sum, auditory intervention may be an effective way to regulate the driver’s emotions while rarely leading to distraction.

6.2 V2X-Based Human Emotion Regulation

V2X communication enables seamless, real-time emotion regulation decisions: drivers can become aware of their neighbors’ emotions in the situated environment by communicating with intelligent traffic participants such as the cloud [183, 184] and neighboring vehicles [185].

In Ref. [183], a crowd-cloud collaborative and situation-aware music recommendation system is designed to diminish drivers’ negative emotions. Sensing data and the driver’s social information are collected and processed by the in-car smartphone. By collaborating with cloud computing, unified and seamless crowdsensing decisions can be made based on the fusion of this information. This approach enables the in-vehicle smartphone to intelligently recommend preferable music to regulate drivers’ negative emotions based on the situated environment by collaborating with neighboring vehicles’ phones and the cloud. Krishnan et al. [184] proposed a context-aware music emotion-mapping approach for drivers. V2C communication is applied to aggregate the crowd-sensed music emotion-mapping data to improve the efficiency and effectiveness of music delivery for drivers. By incorporating the social context information of a driver (e.g., gender, age, and personality) collected from the cloud, the vehicle’s system ensures the timely delivery of suitable music according to different driver emotions. A location-based method was designed in Ref. [185] that enables road users to send and receive feedback on their driving behaviors through V2V communication. On the one hand, a driver can use gestures to express appreciation or disapproval of nearby drivers’ polite or impolite driving behavior. On the other hand, the driver can receive others’ evaluations through audio and visual feedback. In a driving simulator study, the system was shown to exert a positive influence on driving behaviors.

6.3 Regulation Scenarios

Experimental scenarios are particularly important for design, verification, and validation efforts. Scenes in the real world are infinitely rich, extremely complex, and unpredictable, and it is very difficult to reproduce them entirely in a virtual environment. Therefore, the key to testing and verification in a driving simulator is to use a limited set of test scenes to represent the infinitely rich real world. The elements of a test scene comprise two parts: the elements of the test vehicles and the elements of the external traffic environment. The external traffic environment in turn includes static environment elements, dynamic environment elements, traffic participant elements, and meteorological elements.

Most previous studies employed simulated scenarios to regulate human emotions. In Table 8, the highway was a common experimental scenario, and some studies simulated highway scenes to study visual intervention [143, 144, 148], auditory intervention [166, 169, 174, 178, 181], vibration intervention [152, 153, 154, 155], and olfactory intervention [158, 159, 161, 162]. In addition, to make the scenario closer to real driving, some studies included car following [149, 170], curves and intersections [145], urban roads [152], traffic signs, traffic lights, and traffic density [166], and other traffic participants [171, 177]. Some researchers also conducted simulated driving experiments in different time periods (i.e., morning, afternoon, and evening) [153, 178]. A small number of on-road studies have been carried out for emotion regulation. Some researchers chose country trails and highways to study the effect of visual intervention on regulation [146]. Others studied the effect of haptic regulation using commuter routes that contain straights, curves, and traffic signs [151]. In sum, when designing scenarios, researchers can choose highways, complex urban roads, and simulated environmental factors such as weather and driving tasks according to their specific requirements.

6.4 Regulation Quality Analysis Method

Regulation quality analysis mainly comprises measurement and statistical analysis. As shown in Table 8, driver emotion regulation in CAVs is commonly measured using self-report scales, driving behavior, and physiological measurements such as heart rate and respiration. Self-report scales are the most commonly used method to measure regulation quality, while some studies also investigate driving behavior and physiological changes under different regulation methods. The purpose of emotion regulation analysis is to examine differences in regulation quality across different regulation schemes. Because data distributions differ, existing studies have adopted different data analysis methods. Most studies have used ANOVA for quality analysis of emotion regulation because it efficiently tests for differences among conditions. Post hoc analysis is often performed after ANOVA to identify which regulation schemes differ from one another. Other studies have used paired t-tests, independent-samples t-tests, main effects analysis, and non-parametric tests for regulation quality analysis.
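To make the ANOVA step concrete, the following is a minimal sketch, in plain Python, of how a one-way ANOVA F statistic could be computed for self-report scores collected under several regulation conditions. The function name `one_way_anova`, the condition names, and the scores are all hypothetical and invented for illustration; they are not taken from any of the reviewed studies. The p-value is deliberately omitted because it requires the F distribution, for which a statistics package would normally be used.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    `groups` is a list of lists, one list of scores per regulation condition.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or any(len(g) == 0 for g in groups) or n_total <= k:
        raise ValueError("need at least two non-empty groups and n_total > k")

    group_means = [mean(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total

    # Between-group sum of squares: distance of each condition mean
    # from the grand mean, weighted by the group size.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Within-group sum of squares: spread of scores around their own mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )
    if ss_within == 0:
        raise ValueError("no within-group variance; F is undefined")

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical self-reported anger ratings (higher = angrier), invented data.
no_regulation = [5, 6, 7]
ambient_light = [2, 3, 4]
adaptive_music = [1, 2, 3]

f_stat, df_b, df_w = one_way_anova([no_regulation, ambient_light, adaptive_music])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A large F value only indicates that at least one condition differs; a post hoc comparison would still be needed to tell which regulation schemes differ from one another.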

- Summary of human emotion regulation in CAVs: This section discusses methods for in-cabin human emotion regulation, V2X-based human emotion regulation, regulation scenarios, and regulation quality analysis methods. In terms of emotion regulation schemes, the existing research is reviewed from the aspects of visual, auditory, tactile, and olfactory regulation. Existing studies mainly focus on single-modal emotion regulation for the driver, such as ambient light, adaptive music, vibration, and odor. Benefiting from the development of intelligent cockpit interaction technology in CAVs, multimodal emotion regulation schemes for drivers and occupants are also a promising research direction.

7 Discussion and Perspectives

Human emotion is a major research direction in CAV development. To improve acceptance, safety, and comfort in CAVs, an affective connected automated driving (ACAD) framework is proposed based on the literature reviewed in this paper. Figure 5 shows the ACAD framework for human emotion research, based on the human-vehicle-road coupling process in the context of CAVs. As shown, the proposed ACAD framework consists of three main layers: the human layer, the automated vehicle layer, and the road layer. The human layer describes the human’s emotion generation process and human behaviors in CAVs. When a human is in a specific situation and is stimulated by the context, the human makes a cognitive appraisal of the current situation. This cognitive appraisal then leads to different emotional responses, including subjective experiences, behavioral responses, and physiological changes, which result in different emotional states and human behaviors in CAVs.

The automated vehicle layer presents three modules of affective automated driving: in-cabin human emotion detection, driving context perception, and emotion-based automated driving. In the in-cabin human emotion detection module, both physiological and physical behavioral data of the occupants are collected with multimodal sensor systems in the cabin. The data-driven multimodal emotion detection module outputs the emotion detection results based on multimodal sensing data processing and information fusion. Meanwhile, in the driving context perception module, the driving scenario information, including obstacle positions, lane markings, and vehicle states, is perceived. While serving as an important input to automated driving, the traffic context is also a key factor in inducing human emotions and can therefore support better emotion inference. Last, in the emotion-based automated driving module, the driving risk assessment considers the driving scenario information and the in-cabin human emotion comprehensively. With the help of emotion detection, risk assessment can be more advanced and precise. Based on the assessed risk, decisions are generated by integrating the traffic rules and emotion regulation rules; these decisions are then used to control the vehicle according to its actuation configuration and are fed back to the driver through multimodal interaction interfaces.
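The information fusion step in the in-cabin emotion detection module could, for instance, be realized as decision-level (late) fusion. The framework itself does not prescribe a fusion scheme, so the sketch below is only one possible reading: each modality is assumed to provide its own probability distribution over emotion labels, and the distributions are combined by a reliability-weighted average. The function name `fuse_late`, the modality names, the labels, and the weights are hypothetical.

```python
def fuse_late(modality_probs, weights):
    """Weighted average of per-modality emotion probability distributions.

    modality_probs: dict modality -> dict label -> probability, or None when
                    that modality is currently unavailable (e.g., sensor dropout).
    weights:        dict modality -> non-negative reliability weight.
    """
    # Keep only modalities that delivered a prediction in this time step.
    available = {m: p for m, p in modality_probs.items() if p is not None}
    total_weight = sum(weights[m] for m in available)
    if not available or total_weight <= 0:
        raise ValueError("no usable modality")

    # Union of all labels seen across the available modalities.
    labels = set().union(*(p.keys() for p in available.values()))

    fused = {}
    for label in labels:
        weighted_sum = sum(
            weights[m] * p.get(label, 0.0) for m, p in available.items()
        )
        # Renormalize by the weights of the modalities actually present.
        fused[label] = weighted_sum / total_weight
    return fused


# Hypothetical per-modality outputs; the physiological sensor has dropped out.
predictions = {
    "face": {"anger": 0.6, "neutral": 0.4},
    "speech": {"anger": 0.2, "neutral": 0.8},
    "physiology": None,
}
reliability = {"face": 1.0, "speech": 1.0, "physiology": 1.0}

fused = fuse_late(predictions, reliability)
detected = max(fused, key=fused.get)
print(detected, fused)
```

Renormalizing over the modalities that are actually present keeps the fused output a valid distribution when a sensor is temporarily unavailable, which matters for real-time use in the cabin.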

The road layer includes vehicle communication with the surroundings, online driver emotion detection, history data collection, and the creation of emotion regulation strategies. Vehicle communication with the surroundings mainly consists of V2V, V2I, V2C, and vehicle-to-pedestrian (V2P) communication. Online driver emotion detection includes data processing, data fusion, and the output of driver emotion. Basic biometrics, habitual driving behavior, and other driver information are stored in the history data. In addition, online driver emotion detection and history data can be employed to generate emotion regulation strategies tailored to different drivers, which guide emotion-based automated driving. In sum, the proposed ACAD framework dynamically records multimodal data for real-time emotion detection and produces adaptive emotion regulation strategies to improve the quality of affective interaction between humans and CAVs and to promote driving safety.
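As a rough illustration of how the emotion-based automated driving module might combine scenario information, detected emotion, traffic rules, and emotion regulation rules, consider the sketch below. It is not the ACAD implementation: the data classes, the label set, the 5 s time-to-collision horizon, the emotion weight of 0.3, the risk threshold of 0.6, and the feedback names are all assumptions chosen only to make the data flow explicit.

```python
from dataclasses import dataclass

NEGATIVE_EMOTIONS = {"anger", "fear", "sadness"}  # hypothetical label set


@dataclass
class EmotionEstimate:
    label: str         # detected emotion category
    arousal: float     # 0.0 (calm) to 1.0 (highly aroused)
    confidence: float  # detector confidence, 0.0 to 1.0


@dataclass
class DrivingContext:
    time_to_collision_s: float  # to the nearest obstacle
    speed_kph: float
    speed_limit_kph: float


def assess_risk(ctx, emotion, emotion_weight=0.3):
    """Combine scenario risk and emotion-related risk into a score in [0, 1]."""
    # Scenario risk: 1.0 at zero time-to-collision, 0.0 at 5 s or more
    # (the 5 s horizon is an assumed value).
    scenario_risk = min(1.0, max(0.0, 1.0 - ctx.time_to_collision_s / 5.0))
    # Emotion risk: only negative emotions add risk, scaled by arousal
    # and by how confident the detector is.
    emotion_risk = 0.0
    if emotion.label in NEGATIVE_EMOTIONS:
        emotion_risk = emotion.arousal * emotion.confidence
    return min(1.0, scenario_risk + emotion_weight * emotion_risk)


def decide(ctx, emotion, risk_threshold=0.6):
    """Return (vehicle_command, hmi_feedback) from traffic and regulation rules."""
    # Traffic rule: never target a speed above the legal limit.
    target_speed = min(ctx.speed_kph, ctx.speed_limit_kph)
    feedback = []
    risk = assess_risk(ctx, emotion)
    if risk >= risk_threshold:
        # Assumed emotion regulation rule: drive more conservatively and
        # trigger calming feedback through the cockpit interfaces.
        target_speed *= 0.8
        feedback = ["calming_music", "empathetic_speech"]
    return {"target_speed_kph": target_speed, "risk": risk}, feedback


ctx = DrivingContext(time_to_collision_s=2.5, speed_kph=100.0, speed_limit_kph=90.0)
angry = EmotionEstimate(label="anger", arousal=1.0, confidence=1.0)
command, feedback = decide(ctx, angry)
print(command, feedback)
```

In this sketch the vehicle command and the occupant feedback are returned separately, mirroring the split between vehicle actuation and multimodal interaction interfaces in the framework description.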

Possible future research directions for human emotion in CAVs include the following aspects:

7.1 Human Emotion Dataset in Driving

Over the past decade, driver emotion datasets collected during driving have greatly advanced research on human emotion detection in driving [9, 87, 186]. In the future, researchers will still need rich and repeatable experimental datasets. To collect a reliable human emotion dataset in driving, the following issues should be considered.

- Driver emotion dataset: Induction: Since driving is a complicated and long-lasting task, the intensity and duration of the induced emotional state and the naturalness of the induction are essential to the dataset and significantly affect driving performance. Measurements: The dataset should include high-quality measurements of the essential cues related to human emotional experience and expression (face, speech, physiology, behavior) while keeping the measurements minimally intrusive, which may call for indirect and non-intrusive indicators. Annotation and scenarios: The dataset should include annotations of the driver’s emotional states, should take the driving scenario and duration into account, and should, as far as possible, be collected while driving an actual vehicle in real traffic. Characteristics: The personal characteristics of the participants, including age, gender, driving experience, and driving style, should also be considered.

- Human emotion interaction dataset: In-cabin interaction between occupants: Future datasets should consider in-cabin interaction between occupants, as the emotional component plays a major role in human-object interactions in vehicles and in interactions between occupants. Driver interaction with other road users: Studying how humans interact with other road users under different emotions will help to develop different driving strategies for CAVs and improve driving safety. Interactions between emotions and different states: Studying the interaction between human emotions and motion sickness, workload, and distraction in driving will help to improve the emotional experience of intelligent mobile spaces. Interaction datasets should also consider the factors of induction, measurement, annotation, and characteristics.

7.2 Human Emotion Detection in CAVs

Human emotion detection in CAVs is a research field of growing importance and attention. To stimulate research in this area, previous research is analyzed to identify some future research opportunities.

- Multimodal driver emotion detection: Various measurement methods and algorithms (based on physiology, behavior, and subjective scales) have been used in the field of driver emotion detection. However, unimodal driver emotion detection does not always accurately recognize the internal state (e.g., facial expressions do not always map exactly, or via a simple fixed mapping, onto the internal state). A more comprehensive multimodal detection method that combines multiple signals can better evaluate driver emotions. Notably, real-time communication among multiple sensors and the fusion of metrics in a multimodal emotion recognition environment are critical.

- Facial anonymization in emotion detection: Facial expression is commonly used for emotion detection in current studies. However, this detection method may cause privacy leaks if such an emotion detection algorithm is deployed in the cabin. Therefore, it will be important to balance facial anonymization against the preservation of emotion-relevant information when developing the algorithm. Using a generative model, such as a generative adversarial network (GAN), to create a virtual face might be one solution.

-