Abstract

Driving space for autonomous vehicles (AVs) is a simplified representation of real driving environments that facilitates driving decision processes. The existing literature presents numerous methods for constructing driving spaces, which is a fundamental step in AV development. This study reviews existing research to gain a more systematic understanding of driving space and focuses on two questions: how to reconstruct the driving environment, and how to make driving decisions within the constructed driving space. Furthermore, the advantages and disadvantages of different types of driving space are analyzed. The study provides further understanding of the relationship between perception and decision-making and gives insight into the direction of future research on the driving space of AVs.

1 Introduction

Autonomous vehicles (AVs) are expected to improve driving safety compared with human-driven vehicles. The driving space of an AV is a reconstruction of the surrounding real driving environment, including the free drivable area, obstacles, and other relevant driving elements. Because it contains all the static and dynamic traffic elements in the surrounding space, it is a broader concept than the drivable area or drivable space, which refers only to free space. This paper discusses only the local space that serves local driving decisions, rather than large-scale or road-level space. Generating the driving space is a process of environment modeling based on sensor information and other driving constraints, such as traffic rules. Because the driving space is generated by perception and serves as the basis of decision-making, it acts as a bridge (or interface) between these two key research areas of autonomous driving. The driving space is mainly relevant to intelligent vehicles at SAE level 3 or higher [1], at which the vehicle must be able to monitor the environment and drive autonomously.

Modeling every detail of the real driving environment is unrealistic because of the heavy computational burden. It is therefore necessary to simplify and abstract the surrounding space so that it can be understood efficiently. In existing research, the world is modeled with three approaches that define the simplified driving space [2]. The first is the grid space, built by discrete sampling of the entire driving space. The second is the feature space, built from continuous and sparse descriptions of the environment. The third is the topological space, a more abstract form composed of nodes and links that concentrate on key points or landmarks. In the grid space, the space is segmented into grid cells, and each cell is associated with an occupancy probability. The feature space describes only key elements (e.g., obstacles and traffic lanes) by their features in continuous coordinates instead of describing the whole space. The topological space is defined by nodes and links and focuses on the connections and relationships between key points in the feature space. The construction methods and decision methods (i.e., behavior planning, path planning, and control signal generation) differ accordingly among the three types of space.

Different types of driving space have previously been summarized systematically from the perspective of path planning in other reviews [3, 4]. In contrast, this study analyzes the fundamental properties of different forms of driving space and systematically compares them from the perspectives of construction methods and application in decision-making, which could help clarify the relationship between perception and decision-making.

The remainder of this article is structured as follows. The second section introduces the construction methods of different kinds of driving space and comparatively analyzes their advantages and disadvantages. In the third section, the application of the driving space is reviewed from the perspective of AV driving decisions, including rule-based and learning-based methods. For rule-based decisions, the discussion of application is based on different kinds of space, and for learning-based methods, end-to-end learning and reinforcement learning (RL) are considered separately. Finally, a brief conclusion and future research direction are given in the last section. The flowchart of the study is shown in Fig. 1.

2 Construction of the Driving Space

The driving space integrates the roles of perceiving the driving environment and providing the basis for decision-making. To obtain a complete understanding of the environment, the reconstructed driving space should contain the space boundary (usually the road boundary) and driving-relevant elements, such as traffic lanes and obstacles. The approaches for constructing the driving space fall into three categories: (1) the grid space, which is a discrete description covering the entire surrounding space; (2) the feature space, which is described in continuous coordinates and focuses on the position and shape of the space boundary and obstacles; and (3) the topological space, which is composed of nodes and links as an abstract representation of features. It should be noted that the topological space is widely used in robotics research but rarely in research on autonomous vehicles.

2.1 Construction of the Grid Space

The concept of the grid space was first proposed through robotic research by Elfes in 1987 [5]. The space is first segmented into small grids, and then the probability of occupation is calculated for each cell. The detection task is performed by calculating the probability of occupation according to sensor information.
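The per-cell probability calculation described above is commonly implemented as a Bayesian update in log-odds form. The sketch below shows that idea for the cells along a single range beam. It assumes independent cells, a static world, and hypothetical inverse-sensor-model values (`P_OCC`, `P_FREE`); it illustrates the general technique rather than reproducing the exact formulation of [5].

```python
import math

# Hypothetical inverse-sensor-model values (assumptions, not taken from [5]).
P_OCC = 0.7     # P(occupied) for the cell that produced the range return
P_FREE = 0.3    # P(occupied) for cells the beam passed through
P_PRIOR = 0.5   # unknown cells start at 0.5


def logit(p: float) -> float:
    return math.log(p / (1.0 - p))


def prob(log_odds: float) -> float:
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


def update_ray(log_odds: list[float], hit_index: int) -> None:
    """Bayesian log-odds update of the cells along one range beam, in place.

    Cells before the return gain 'free' evidence, the cell at the return gains
    'occupied' evidence, and cells behind it are left unchanged (unobserved).
    """
    l0 = logit(P_PRIOR)
    for i in range(min(hit_index, len(log_odds))):
        log_odds[i] += logit(P_FREE) - l0
    if hit_index < len(log_odds):
        log_odds[hit_index] += logit(P_OCC) - l0


cells = [0.0] * 6             # log-odds 0 means p = 0.5 (unknown)
for _ in range(2):            # two scans that both return from cell 3
    update_ray(cells, hit_index=3)
print([round(prob(v), 3) for v in cells])
```

Working in log-odds turns repeated Bayesian updates into additions, which is why the form is popular for dense grids.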

In the 1980s, most robotic driving space detection was realized with sonar sensors [5,6,7]. The locations of obstacles and walls were found from sonar reflections. Moravec [8] went further and combined sonar sensors and stereo cameras to construct the grid space. Building on this idea, Marchese considered moving objects by introducing a time axis, constructing several grid spaces for future time steps by predicting from the speeds of moving objects [9]. These early studies laid the foundation of the grid space, and their basic ideas of space segmentation and occupancy probability were widely applied in subsequent research.

The grid space is also widely used in research on AVs, where LiDARs have replaced sonar sensors as distance sensors owing to their higher accuracy. The occupancy probability is calculated from properties of the LiDAR points in each cell, such as height and density. LiDAR detection of the grid space is divided into two categories: (1) 2D detection, usually performed by a LiDAR with 4 or fewer channels, and (2) 3D detection, usually performed by a LiDAR with 16 or more channels. In 2D detection, the LiDAR is installed at the front of the vehicle, and the point cloud is closely spaced in the vertical direction. The occupancy grids are then obtained by projecting the whole point cloud onto the ground [10,11,12], as shown in Fig. 2a [9]. In 3D detection, the LiDAR is installed on the roof of the vehicle, so the point cloud can directly reach the ground, as shown in Fig. 2b [13]. Bohren et al. [13] segmented the flat ground from the whole point cloud by plane extraction. Na et al. [14] considered the continuity of the ground to achieve detection on both even and uneven terrain. Points higher than the ground were marked as the space boundary. Moras et al. [11] went further and combined LiDAR detection with a high-precision map, refining the detection results using the lane and road boundaries on the map. The fundamental idea of LiDAR-based driving space construction for AVs thus follows the pioneering robotics research.
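As a minimal illustration of using point heights per cell, the sketch below marks a cell as occupied when the points inside it span a large vertical range. This max-minus-min height test is a simple stand-in heuristic, not the plane extraction of [13] or the ground-continuity method of [14]; the 0.15 m threshold is a hypothetical value.

```python
from collections import defaultdict

CELL = 0.2       # cell edge length in metres (the ~20 cm size cited in the text)
MAX_STEP = 0.15  # hypothetical height-range threshold in metres


def height_grid(points):
    """Classify each grid cell as occupied (True) or traversable (False).

    points: iterable of (x, y, z) LiDAR returns in the vehicle frame, metres.
    A cell is occupied when the points falling in it span more than MAX_STEP
    vertically, i.e. something rises above the local ground.
    """
    z_min = defaultdict(lambda: float("inf"))
    z_max = defaultdict(lambda: float("-inf"))
    for x, y, z in points:
        key = (int(x // CELL), int(y // CELL))
        z_min[key] = min(z_min[key], z)
        z_max[key] = max(z_max[key], z)
    return {key: (z_max[key] - z_min[key]) > MAX_STEP for key in z_min}


scan = [(0.05, 0.05, 0.00), (0.10, 0.12, 0.02),   # flat road in cell (0, 0)
        (1.05, 0.05, 0.00), (1.06, 0.08, 1.20)]   # vehicle side in cell (5, 0)
grid = height_grid(scan)
```

Cells that receive no points are absent from the result, which corresponds to the "unknown" state in a probabilistic grid.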

Cameras have also been applied to driving space construction for AVs. With the development of machine vision, it is now possible to segment the drivable area in an image [15,16,17,18]. Some studies focus only on the pixel plane, while others transform the image into a grid map on the ground, and still others go further and fit the boundary into the feature space. Yao et al. [15] detected the drivable area in the image with a support vector machine (SVM). Hsu et al. [16] first segmented the drivable area in the image, then transformed it to the ground plane using vanishing point detection and inverse perspective transformation, and finally obtained a grid map of the drivable area. Camera-based approaches focus on the free drivable area and operate in the image plane; however, the basic idea of grid space construction remains the same.
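The inverse perspective step can be sketched for a pinhole camera over flat ground. This version assumes the camera height and pitch are already known (whereas [16] estimated the geometry with vanishing point detection) and uses hypothetical frame conventions: vehicle X forward, Y left, Z up, no camera roll or yaw.

```python
import math


def pixel_to_ground(u, v, fx, fy, cx, cy, h, pitch):
    """Project pixel (u, v) onto a flat ground plane (inverse perspective mapping).

    fx, fy, cx, cy: pinhole intrinsics in pixels; h: camera height in metres;
    pitch: downward tilt in radians. Returns (X, Y) in metres, or None when
    the pixel ray does not hit the ground (at or above the horizon).
    """
    a = (u - cx) / fx            # normalised image-right coordinate
    b = (v - cy) / fy            # normalised image-down coordinate
    s, c = math.sin(pitch), math.cos(pitch)
    # Ray direction in the vehicle frame: a * x_cam + b * y_cam + z_cam
    dx = c - b * s
    dy = -a
    dz = -(b * c + s)
    if dz >= 0.0:
        return None
    t = h / -dz                  # scale at which the ray reaches Z = 0
    return t * dx, t * dy
```

Applying this to every pixel labeled drivable and binning the resulting (X, Y) points into cells yields a ground-plane grid of the drivable area.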

Sensor fusion in the grid space is achieved by fusing occupancy probabilities, as shown by the example in Fig. 3. Each sensor calculates the probability independently, and the probabilities are then fused using the Bayesian method [8, 19], the Dempster–Shafer method [11, 20, 21], or other fusion methods [22,23,24]. This open framework of occupancy probability makes the grid space highly adaptable to different sensor layouts and fusion algorithms, which is an advantage of the grid driving space.
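The two fusion rules named above can be sketched for a single cell. The Bayesian version assumes independent sensors and a uniform 0.5 prior; the Dempster–Shafer version uses the frame {occupied, free} and keeps an explicit "unknown" mass. Function names and example masses are illustrative only.

```python
def bayes_fuse(p1: float, p2: float) -> float:
    """Fuse two independent occupancy probabilities under a uniform 0.5 prior."""
    num = p1 * p2
    return num / (num + (1.0 - p1) * (1.0 - p2))


def ds_fuse(m1, m2):
    """Dempster's rule of combination on the frame {occupied, free}.

    Each mass tuple is (m_occ, m_free, m_unknown) and must sum to 1, where
    m_unknown is the mass on the whole frame (the sensor cannot tell).
    """
    o1, f1, u1 = m1
    o2, f2, u2 = m2
    conflict = o1 * f2 + f1 * o2          # mass on contradictory pairs
    if conflict >= 1.0:
        raise ValueError("total conflict: sources cannot be combined")
    norm = 1.0 - conflict
    occ = (o1 * o2 + o1 * u2 + u1 * o2) / norm
    free = (f1 * f2 + f1 * u2 + u1 * f2) / norm
    unk = (u1 * u2) / norm
    return occ, free, unk


lidar = (0.6, 0.1, 0.3)
camera = (0.5, 0.2, 0.3)
print(bayes_fuse(0.7, 0.7), ds_fuse(lidar, camera))
```

A practical difference: a sensor that sees nothing in a cell can report (0, 0, 1) under Dempster–Shafer and leave the other sensor's evidence unchanged, whereas the Bayesian form can only express ignorance as p = 0.5.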

The size and distribution of grid cells are two important factors in defining the grid space. In existing research using regular grids [20, 25, 26], the cell size is approximately 20 cm, which is smaller than vehicles and pedestrians on the road and is thus suitable for structured roads. Regarding grid distribution, the 2D grid space is commonly used for its simplicity, whereas the 3D grid space is rarely used because of its complexity. Plazaleiva et al. [27] used 3D LiDAR to construct the space in a voxel grid, but the 3D grid map was then transformed into 2D for further application. In 2D grid spaces, a uniform distribution is commonly used because sensor fusion is simpler on cells of equal size. However, uniform grid space detection requires a large amount of computation and storage, so it is not economical to compute every cell over a large open area. Considering this, some researchers have used non-uniform grids, most commonly quadtrees [3, 28, 29]. In this layout, the cells are dense where there is an obstacle and sparse in free space, so a perception focus is formed, as shown in Fig. 4. The number of cells, and hence the computation and storage costs, can be reduced compared with a uniform distribution. However, non-uniform grids still have drawbacks. Paths planned in non-uniform grids are less smooth than those in uniform grids [3], and it is difficult to unify the results of different sensors in multi-sensor fusion, since each sensor's focus may differ.
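To put the storage issue in numbers: covering 100 m × 100 m with 20 cm cells already requires 500 × 500 = 250,000 cells. The sketch below builds a region quadtree over a square binary occupancy grid, merging any homogeneous block into a single leaf. It assumes the grid side is a power of two and that cells are already classified as 0 (free) or 1 (occupied).

```python
def quadtree_leaves(grid, x0=0, y0=0, size=None):
    """Return leaves (x, y, size, value) of a region quadtree over a square 0/1 grid.

    A block becomes a single leaf when all of its cells share the same value,
    so large free areas collapse into a few big cells while cells near
    obstacles stay fine. The grid side length is assumed to be a power of two.
    """
    if size is None:
        size = len(grid)
    first = grid[y0][x0]
    if all(grid[y][x] == first
           for y in range(y0, y0 + size)
           for x in range(x0, x0 + size)):
        return [(x0, y0, size, first)]
    half = size // 2
    leaves = []
    for dy in (0, half):
        for dx in (0, half):
            leaves += quadtree_leaves(grid, x0 + dx, y0 + dy, half)
    return leaves


grid = [[0] * 8 for _ in range(8)]
grid[5][6] = 1                      # one occupied cell in an 8 x 8 map
leaves = quadtree_leaves(grid)
print(len(leaves), "leaves instead of", 8 * 8, "cells")
```

The leaves have different sizes, which is exactly why fusing two sensors whose quadtrees refine different regions is harder than fusing two uniform grids.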

2.2 Construction of the Feature Space

In the feature space, obstacles are represented by their position coordinates and geometric shapes, while the space boundary is fitted to an analytic formula. The whole space is thus described continuously and geometrically, in contrast to the discrete description of the grid space.

In robotics research, the feature space is described by geometric figures composed of angles, edges, and curves, and some researchers also consider the speed of obstacles (see Fig. 5). This suits indoor robot environments, where obstacles and boundaries have various unpredictable shapes. Correspondingly, these geometric elements are the targets of detection, which is typically performed with sonar or camera sensors. Ip et al. [30] detected geometric features in the space by clustering sensor information to find a collision-free path. Hardy et al. [31] used polygons to describe obstacles when constructing a geometric feature space. Simultaneous localization and mapping (SLAM), in which a map is built in real time while the robot locates itself within an unknown environment, is a hotspot in robotics research. Feature maps, consisting of points, edges, corners, and so on, are widely used in SLAM [32,33,34]. SLAM is also applied in AVs as an auxiliary means of positioning, especially when the global navigation satellite system (GNSS) is unavailable [35, 36]. For indoor robot applications, the feature space offers an alternative to the grid space: by modeling the space with sparse geometric features, it is more computationally economical and more intuitive.

On structured roads, space boundaries and on-road obstacles follow certain patterns. Therefore, the feature space for AVs is constructed from traffic elements such as the road boundary, lanes, vehicles, and pedestrians, as shown in Fig. 6. Traffic lights, traffic signs, and crossings are also important elements of the feature space. The detection task can be divided into several subtasks, such as road boundary detection, lane detection, and object detection, all of which are important research topics in environment perception.
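A feature driving space of this kind can be held in a small typed structure. The schema below is a hypothetical illustration: the class names, the choice of cubic-polynomial lateral offsets for boundaries and lanes, and the object classes are assumptions, not a format defined by the reviewed works.

```python
from dataclasses import dataclass, field
from enum import Enum


class ObjectClass(Enum):
    VEHICLE = "vehicle"
    PEDESTRIAN = "pedestrian"
    TRAFFIC_LIGHT = "traffic_light"
    TRAFFIC_SIGN = "traffic_sign"


@dataclass
class TrackedObject:
    cls: ObjectClass
    position: tuple[float, float]              # centre (x, y) in the vehicle frame, m
    size: tuple[float, float]                  # length, width, m
    velocity: tuple[float, float] = (0.0, 0.0)  # m/s


@dataclass
class FeatureSpace:
    # Boundaries and lane lines as polynomial coefficients (c0, c1, c2, c3)
    # of the lateral offset y(x) = c0 + c1*x + c2*x^2 + c3*x^3.
    left_boundary: tuple[float, ...]
    right_boundary: tuple[float, ...]
    lane_lines: list[tuple[float, ...]] = field(default_factory=list)
    objects: list[TrackedObject] = field(default_factory=list)

    def objects_of(self, cls: ObjectClass) -> list[TrackedObject]:
        return [o for o in self.objects if o.cls is cls]


space = FeatureSpace(
    left_boundary=(3.5,), right_boundary=(-3.5,), lane_lines=[(0.0,)],
    objects=[TrackedObject(ObjectClass.VEHICLE, (20.0, 0.0), (4.5, 1.8), (-2.0, 0.0)),
             TrackedObject(ObjectClass.PEDESTRIAN, (15.0, 5.0), (0.5, 0.5))],
)
```

Compared with a grid, the whole scene is a handful of numbers, and each entry already carries semantic meaning (its class).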

Feature space construction is composed of several subtasks. Road boundary detection is typically based on LiDAR and cameras, similar to grid space construction, but in the feature space an analytic curve takes the place of the grid. For example, Loose et al. [37] used a Bézier curve to fit the road boundary. Lane detection is also important in the feature space, and numerous studies have been conducted on rule-based lane detection [38,39,40]; in recent years, some researchers have used deep learning for lane detection [41, 42]. With the development of sensor technologies and machine vision, object detection and tracking have advanced quickly. Vehicle and pedestrian detection is usually carried out on images by machine learning [43,44,45,46,47]. Some researchers have realized multiple object tracking (MOT) by fusing radar, LiDAR, and cameras [48, 49], and others have achieved traffic sign detection [50, 51] and traffic light detection [52, 53]. By combining these elements, a complete feature driving space can be constructed. However, since each detection task is completed independently, further research is needed to obtain a more systematic and integrated feature driving space.
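Fitting a boundary with a Bézier curve, as mentioned above, can be sketched as a linear least-squares problem for the four control points of a cubic Bézier. The chord-length parameterization used here is a common choice and an assumption of this sketch; it is not necessarily the fitting procedure of [37].

```python
import numpy as np


def bernstein_matrix(t):
    """Rows are the cubic Bernstein basis (1-t)^3, 3t(1-t)^2, 3t^2(1-t), t^3."""
    t = np.asarray(t, dtype=float)[:, None]
    k = np.arange(4)[None, :]
    binom = np.array([1.0, 3.0, 3.0, 1.0])[None, :]
    return binom * t**k * (1.0 - t) ** (3 - k)


def fit_cubic_bezier(points):
    """Least-squares cubic Bézier control points for ordered boundary points (n x 2)."""
    pts = np.asarray(points, dtype=float)
    seg = np.linalg.norm(np.diff(pts, axis=0), axis=1)
    t = np.concatenate([[0.0], np.cumsum(seg)]) / seg.sum()   # chord-length parameters
    ctrl, *_ = np.linalg.lstsq(bernstein_matrix(t), pts, rcond=None)
    return ctrl


def eval_bezier(ctrl, t):
    return bernstein_matrix(t) @ ctrl


xs = np.arange(0.0, 31.0)
boundary = np.column_stack([xs, 3.5 + 0.004 * xs**2])   # synthetic curb points
ctrl = fit_cubic_bezier(boundary)
```

Four control points (eight numbers) then replace the whole row of boundary cells, which is the compression that makes the feature space sparse.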

2.3 Construction of the Topological Space

The topological space is another way to describe the environment besides the grid and feature spaces [2]. It shows landmarks and their connections. Distinct landmarks in the space, usually vertices of polygonal obstacles, corners, and doors, are set as nodes, and links show the connections between nodes. The nodes are depicted geometrically in the feature space; however, the topological space focuses on their connections rather than their actual distances and positions in world coordinates. Examples include the visibility graph [54] and the Voronoi diagram [55]. Omar et al. [3] gave an example (see Fig. 7) in which the vertices are connected by straight links.

Fig. 7 Visibility graph (a topological map) [3]

It is easy to find the shortest path in the visibility graph. Ryu et al. [2] pointed out that the topological space is more suitable than the grid and feature spaces for robot applications owing to its tolerance of sensing errors. The topological space has two main advantages in robotics research. First, its perception system only needs to find key points in the space rather than accurate geometric boundaries or occupancy probabilities, which is a great advantage when the sensors are not accurate enough. Second, robots can turn quickly and follow piecewise straight lines as the shortest path, so the topological space is well suited to searching for the shortest path on the landmark network.
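The shortest-path property can be illustrated with a small visibility graph. In this sketch the obstacles are assumed to be axis-aligned rectangles, the nodes are the start, the goal, and the rectangle corners, two nodes are linked when the segment between them does not cross any obstacle interior (Liang–Barsky clipping), and Dijkstra's algorithm finds the shortest path.

```python
import heapq
import math

EPS = 1e-9


def blocks(seg, rect):
    """True if segment ((x0, y0), (x1, y1)) passes through the interior of rect.

    rect = (xmin, ymin, xmax, ymax). The rectangle is shrunk by EPS so that
    segments along an edge or touching a corner still count as visible.
    """
    (x0, y0), (x1, y1) = seg
    xmin, ymin, xmax, ymax = rect[0] + EPS, rect[1] + EPS, rect[2] - EPS, rect[3] - EPS
    dx, dy = x1 - x0, y1 - y0
    t0, t1 = 0.0, 1.0
    for p, q in ((-dx, x0 - xmin), (dx, xmax - x0), (-dy, y0 - ymin), (dy, ymax - y0)):
        if p == 0.0:
            if q < 0.0:
                return False      # parallel to this side and outside it
        else:
            t = q / p
            if p < 0.0:
                t0 = max(t0, t)
            else:
                t1 = min(t1, t)
            if t0 > t1:
                return False
    return True


def corners(rect):
    xmin, ymin, xmax, ymax = rect
    return [(xmin, ymin), (xmax, ymin), (xmax, ymax), (xmin, ymax)]


def shortest_path(start, goal, rects):
    """Dijkstra over the visibility graph of start, goal and obstacle corners."""
    nodes = [start, goal] + [c for r in rects for c in corners(r)]
    dist, prev, done = {start: 0.0}, {}, set()
    heap = [(0.0, start)]
    while heap:
        d, u = heapq.heappop(heap)
        if u in done:
            continue
        done.add(u)
        if u == goal:
            break
        for v in nodes:
            if v == u or v in done or any(blocks((u, v), r) for r in rects):
                continue
            nd = d + math.dist(u, v)
            if nd < dist.get(v, math.inf):
                dist[v], prev[v] = nd, u
                heapq.heappush(heap, (nd, v))
    if goal not in done:
        return None, math.inf
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1], dist[goal]


path, length = shortest_path((0, 0), (10, 0), [(4, -1, 6, 2)])
```

The resulting path hugs obstacle corners with sharp turns, which is efficient for a robot that can turn quickly but is a limitation for vehicles.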

However, considering structured roads and vehicle dynamics, the topological space is less suitable for AV research. First, a shortest path consisting of piecewise straight lines cannot be executed by a vehicle because of vehicle dynamic constraints; to obtain a drivable path, more accurate space boundary and geometric information must be provided in addition to landmarks. Second, on structured roads, path planning is no longer restricted to finding a collision-free path but must also consider traffic rules and the behavior of other traffic participants (e.g., vehicles and pedestrians). Such detailed information is difficult to describe with existing topology-based space models. In addition, the more accurate sensors on AVs provide more detailed information, so tolerance of sensing errors, an advantage of the topological space, matters less for AVs. For these reasons, the topological space is seldom applied in local driving space construction for AVs, although some of its ideas are embodied in grid-based path planning.
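The first argument can be made concrete with a little geometry. Under a kinematic bicycle model (an assumption of this sketch), the tightest turning radius is R = wheelbase / tan(max_steer). Rounding a polyline corner with heading change Δθ by a tangent arc of radius R consumes R·tan(Δθ/2) of each adjacent segment, so a corner joining short segments cannot be driven at all.

```python
import math


def corner_fillet(p0, p1, p2, wheelbase, max_steer):
    """Check whether a vehicle can round the polyline corner p0 -> p1 -> p2.

    Returns (tangent_length, feasible): the length of each adjacent segment
    consumed by the tightest tangent arc, and whether both segments are long
    enough to accommodate it. Kinematic bicycle model assumed.
    """
    h_in = math.atan2(p1[1] - p0[1], p1[0] - p0[0])
    h_out = math.atan2(p2[1] - p1[1], p2[0] - p1[0])
    dtheta = abs((h_out - h_in + math.pi) % (2 * math.pi) - math.pi)  # heading change
    r_min = wheelbase / math.tan(max_steer)        # tightest turning radius
    tangent = r_min * math.tan(dtheta / 2)
    feasible = tangent <= min(math.dist(p0, p1), math.dist(p1, p2))
    return tangent, feasible


# Hypothetical car: 2.8 m wheelbase, 0.6 rad maximum steering angle.
print(corner_fillet((0, 0), (10, 0), (10, 10), 2.8, 0.6))   # 90-degree corner, long legs
print(corner_fillet((0, 0), (3, 0), (3, 3), 2.8, 0.6))      # same corner, 3 m legs
```

A robot turning in place corresponds to a zero radius and accepts any corner, which is why the same graph is adequate indoors.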

It should be noted that topology is applied more in other aspects of autonomous driving than in driving space construction. For example, topology is used in SLAM, an important technology in autonomous driving that can improve localization accuracy and is also applied in building high-precision maps. GraphSLAM is a type of SLAM that applies topology: nodes represent poses or map features, and links represent a motion event between two poses or a measurement of a map feature. Although the poses are expressed in a topological graph when localization and mapping are considered separately, the map that is built is still a grid map or feature map [56, 57]. In other words, the topological graph is only an intermediate result showing the relationship between the poses and the map features, and the output space representation is still grid based or feature based. Topological graphs are also widely applied in macroscopic road-level or lane-level navigation maps [58, 59], showing the connectivity between roads, lanes, and intersections. However, the topological space is seldom used as the local dynamic driving space for local decision-making.
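The graph structure of GraphSLAM can be illustrated with a deliberately tiny, hypothetical 1-D example: nodes are poses, each link stores a relative measurement, and the poses are estimated by least squares over all links. Real GraphSLAM works with 2-D or 3-D poses, map features, information-matrix weights, and nonlinear optimization; all of that is omitted here.

```python
import numpy as np


def solve_pose_graph_1d(n_poses, edges):
    """Least-squares 1-D pose graph; each edge (i, j, z) states x_j - x_i ≈ z.

    Pose 0 is anchored at 0 to remove the global offset; all edges have unit weight.
    """
    rows, rhs = [], []
    for i, j, z in edges:
        row = np.zeros(n_poses)
        row[j] += 1.0
        row[i] -= 1.0
        rows.append(row)
        rhs.append(z)
    anchor = np.zeros(n_poses)
    anchor[0] = 1.0
    rows.append(anchor)
    rhs.append(0.0)
    x, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return x


# Three odometry links of 1.0 m each plus one direct measurement of 2.7 m
# between pose 0 and pose 3 (e.g. a re-observed landmark).
edges = [(0, 1, 1.0), (1, 2, 1.0), (2, 3, 1.0), (0, 3, 2.7)]
poses = solve_pose_graph_1d(4, edges)
```

The 0.3 m disagreement is spread evenly over the four links (0.075 m each), giving poses 0, 0.925, 1.85, and 2.775. The graph is only the means of estimation; the map would then be drawn as a grid or feature map from these poses.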

2.4 Comparative Analysis

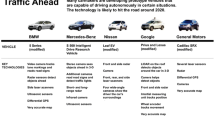

Table 1 lists the characteristics of the different types of space for comparison, from which the following conclusions can be drawn.

- (1) There are fundamental differences among the three types of driving space.

- (2) The three space types have different mathematical characteristics. The grid space is discrete and emphasizes completeness, whereas the feature and topological spaces are continuous and sparse. Therefore, constructing the grid space requires substantial computational and storage resources, whereas the feature and topological spaces are sparse and intuitive and thus more computationally economical but less detailed than the grid space.

- (3) Regarding detection targets, the grid space describes the space itself and therefore does not focus on semantic information. The feature space focuses on the geometric features of the boundary and obstacles rather than on the open space. The topological space focuses on the links between key points in the feature space rather than on position and distance, which works well for indoor robots but is not suitable for AVs.

- (4) In robotics research, the grid space requires calculating occupancy probabilities on the cells, and sensor fusion is then achieved by probability fusion. The feature and topological spaces require the detection of geometric features, such as points, corners, and edges; they differ in their representation methods. The topological space has advantages in finding the shortest path and in tolerating sensing errors.

- (5) For AV applications, the grid and feature spaces are commonly used, unlike the topological space. The grid space construction method is the same as in robot applications, although the sensor layout is typically different, and the grid space usually contains no semantic information. The feature space contains different traffic elements, such as roads, vehicles, and pedestrians; it therefore carries semantic information and benefits from object detection and tracking technologies. However, the detection methods for different elements of the feature space are researched separately, so the construction of the feature driving space for AVs still needs systematic integration.

Considering these observations, some researchers have combined grid and feature representations for AV applications. To take advantage of the grid representation of open space, the boundary can be fitted to a continuous formula based on the grid space [60,61,62]. The position, shape, and speed of vehicles and pedestrians are acquired by object detection, and these elements are then integrated in the feature space, as in the theses of Zhang [63] and Liu [64]. However, because each detection task is still completed independently, this solution lacks completeness.
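A minimal version of this grid-to-feature step is sketched below: in each longitudinal row of the occupancy grid, take the nearest occupied cell on the left of the vehicle as a boundary sample, then fit a polynomial y(x). The grid layout, the left-side-only search, and the polynomial degree are assumptions for illustration, not the procedures of [60,61,62].

```python
import numpy as np


def left_boundary_from_grid(occ, cell, y_origin_index, deg=2):
    """Fit y(x) to the nearest occupied cell left of the vehicle in each grid row.

    occ[ix, iy] is a boolean occupancy grid; row ix covers x = ix * cell and
    column iy covers y = (iy - y_origin_index) * cell, with y positive to the left.
    Returns polynomial coefficients, highest power first (np.polyfit order).
    """
    xs, ys = [], []
    for ix in range(occ.shape[0]):
        left = np.nonzero(occ[ix, y_origin_index:])[0]   # occupied columns, left side
        if left.size:
            xs.append(ix * cell)
            ys.append(left[0] * cell)                     # nearest one to the vehicle
    return np.polyfit(xs, ys, deg)


occ = np.zeros((50, 41), dtype=bool)       # 10 m x 8.2 m at 0.2 m cells
occ[:, 37] = True                          # curb 17 cells (3.4 m) to the left
occ[:, 5] = True                           # obstacle on the right, ignored here
coeffs = left_boundary_from_grid(occ, cell=0.2, y_origin_index=20)
```

The output is a few coefficients that a feature-space planner can use directly as a boundary constraint.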

Overall, existing methods can detect the driving space from sensor information and reconstruct it by expressing it with grids, features, or topology. The previous analysis shows that each of the three categories of space definition and detection has its own advantages and disadvantages.

3 Application of the Driving Space in Autonomous Driving Decisions

The driving space provides the constraints for behavior planning, path planning, and control signal generation in the decision layer. Existing AV decision methods can be divided into two categories: rule-based and learning-based methods.

3.1 Rule-Based Decision Methods

A rule-based AV decision can be made in the grid or feature space. In the decision layer, behavior decision and path planning are achieved using the driving space constraints. Although the topological space is seldom used for driving space construction in autonomous driving, its concepts are applied in grid-space decision-making.

3.1.1 Rule-Based Decision Methods in the Grid Space

The rule-based decision methods in the grid driving space can be divided into two categories: those that directly plan a path in the grid space, and those that plan a path using discrete lattices sampled from the driving space.

Direct decisions in the grid space use the occupancy probability [65, 66]. Hundelshausen et al. [65] chose a trajectory from a group of arcs, using the occupancy probabilities of the traversed cells as the cost, as shown in Fig. 8. Similarly, Mouhagir et al. [20] chose among clothoid curves and further applied a Markov decision process to realize lane changing. These methods made good use of the probability information in the grid map. However, they are less intuitive and less simple than decision methods in the feature space. Moreover, a single obstacle is represented as several independent cells rather than as a whole, causing unnecessary calculations.
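The arc-selection idea can be sketched as follows: sample a fan of circular arcs from the vehicle, charge each arc the summed occupancy probability of the distinct cells it passes through, and pick the cheapest. The arc length, sampling step, curvature set, and the 0.5 charge for cells outside the map are hypothetical choices; the cost in [65] is more elaborate.

```python
import math


def arc_points(kappa, length=20.0, step=0.5):
    """Sample a circular arc of curvature kappa (1/m) from the origin, heading +x."""
    pts = []
    for k in range(1, int(length / step) + 1):
        s = k * step
        if abs(kappa) < 1e-9:                        # straight-line limit
            pts.append((s, 0.0))
        else:
            pts.append((math.sin(kappa * s) / kappa,
                        (1.0 - math.cos(kappa * s)) / kappa))
    return pts


def arc_cost(prob, cell, y_origin, kappa):
    """Sum the occupancy probabilities of the distinct cells an arc passes through.

    prob[ix][iy] is the grid; ix = floor(x / cell), iy = floor(y / cell) + y_origin.
    Cells outside the map are charged 0.5, i.e. treated as unknown (assumption).
    """
    cost, seen = 0.0, set()
    for x, y in arc_points(kappa):
        ix, iy = int(x // cell), int(y // cell) + y_origin
        if (ix, iy) in seen:
            continue
        seen.add((ix, iy))
        inside = 0 <= ix < len(prob) and 0 <= iy < len(prob[0])
        cost += prob[ix][iy] if inside else 0.5
    return cost


def best_arc(prob, cell, y_origin, curvatures):
    return min(curvatures, key=lambda k: arc_cost(prob, cell, y_origin, k))


# 25 m x 21 m map with 1 m cells and an obstacle 10-13 m straight ahead.
grid = [[0.05] * 21 for _ in range(25)]
for ix in range(10, 13):
    for iy in range(9, 12):
        grid[ix][iy] = 0.95
choice = best_arc(grid, 1.0, 10, [-0.08, -0.04, 0.0, 0.04, 0.08])
```

Note that the obstacle contributes nine separate cell costs rather than one object, which illustrates the redundancy mentioned above.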

Fig. 8 Path planning in the grid space [65]

Decision-making using state lattices is another grid-based decision method. Discrete state lattices are generated by sampling within the driving space, and the lattice cells are usually relatively large to reduce computation cost. Because the goal of the state-lattice method is to find a collision-free drivable path in the free area, graph search or sampling-based planning over the nodes can complete the task without a separate behavioral planning step (e.g., go straight or turn left). Figure 9 shows an example of a planned path in which the nodes are connected to the destination by a piecewise-continuous curve.

The nodes and links of state lattices resemble those of the topological space. However, the nodes in state lattices are not geometrically distinct points but discrete cells sampled in advance, so the state lattice should still be regarded as a grid space. Because of this similarity, decisions on state lattices are made by searching for a path on a graph, much like decisions in the topological space. Many specific path planning methods have been developed for state lattices [67,68,69,70,71,72,73], and because this type of decision process has been studied extensively, it is considered reliable. However, sampling the space reduces accuracy and loses information from the constructed driving space. Moreover, the generated piecewise-continuous path is not as smooth as a single curve, so the ride is less comfortable than the path driven by a human.
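Graph search on a lattice can be sketched with A*. This is a simplified stand-in: the lattice nodes are only cell positions on an 8-connected grid, with no heading or curvature states and no motion primitives, so diagonal moves may cut past obstacle corners. Full state lattices add those dimensions, but the search loop has the same shape.

```python
import heapq
import math


def astar(grid, start, goal):
    """A* on an 8-connected lattice; grid[r][c] is True where the cell is blocked.

    Returns (path, cost) with path as a list of (row, col), or (None, inf).
    """
    rows, cols = len(grid), len(grid[0])
    moves = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]

    def h(p):                      # Euclidean distance: admissible and consistent here
        return math.dist(p, goal)

    g, parent, closed = {start: 0.0}, {start: None}, set()
    heap = [(h(start), start)]
    while heap:
        _, cur = heapq.heappop(heap)
        if cur == goal:
            path = []
            while cur is not None:
                path.append(cur)
                cur = parent[cur]
            return path[::-1], g[goal]
        if cur in closed:
            continue
        closed.add(cur)
        for dr, dc in moves:
            nxt = (cur[0] + dr, cur[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols) or grid[nxt[0]][nxt[1]]:
                continue
            ng = g[cur] + math.hypot(dr, dc)
            if ng < g.get(nxt, math.inf):
                g[nxt], parent[nxt] = ng, cur
                heapq.heappush(heap, (ng + h(nxt), nxt))
    return None, math.inf


# 5 x 5 lattice with a wall in column 2 that is open only in the bottom row.
wall = [[c == 2 and r < 4 for c in range(5)] for r in range(5)]
path, cost = astar(wall, (0, 0), (0, 4))
```

The returned path is a chain of straight lattice links, which is the piecewise character (and comfort limitation) described above.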

3.1.2 Rule-Based Decision Methods in the Feature Space

In the feature space, the space boundary formula, position, shape, and speed of obstacles are the decision inputs.

Rule-based behavior planning is usually performed in the feature space. Behavior planning selects the best behavior from a finite set of possible behaviors [74,75,76,77], such as vehicle following, lane changing, merging, and turning. In existing behavior planning research, the road boundary and lanes are essential inputs, and usually only vehicles are considered among objects. In environment perception research, however, the feature space typically contains many types of objects; for example, Prabhakar et al. [78] considered eight object types. Objects such as traffic signs and traffic lights, as well as specific vehicle classes (trucks, buses, etc.), are not well considered in current behavior planning research.
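A toy rule-based behavior planner over feature-space inputs might look like the sketch below. The behavior set, the time-gap and speed-margin thresholds, and the preference for overtaking on the left (right-hand traffic) are all hypothetical; note that, like much of the work discussed above, it ignores traffic lights, signs, and vehicle classes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LeadVehicle:
    gap: float     # distance to the vehicle ahead in the ego lane, m
    speed: float   # its speed, m/s


def choose_behavior(ego_speed: float, lead: Optional[LeadVehicle],
                    left_lane_free: bool, right_lane_free: bool,
                    min_time_gap: float = 1.5, speed_margin: float = 2.0) -> str:
    """Select one behavior from a finite set with hand-written rules.

    Thresholds are hypothetical; trying the left lane first assumes
    right-hand traffic.
    """
    if lead is None:
        return "keep_lane"
    time_gap = lead.gap / max(ego_speed, 0.1)     # seconds to reach the lead's position
    lead_is_slow = lead.speed < ego_speed - speed_margin
    if lead_is_slow and time_gap < 3.0 * min_time_gap:
        if left_lane_free:
            return "change_left"
        if right_lane_free:
            return "change_right"
    return "follow"   # a car-following controller then regulates the gap


print(choose_behavior(25.0, LeadVehicle(gap=40.0, speed=18.0), True, False))
```

Extending such a planner with the additional object types would mean adding rules per class, which is one reason rule sets grow quickly.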

There is also much research on path planning in the feature space. Ziegler et al. [79] represented obstacles with polygons, predicted their motion $X_{\mathrm{pred},j}(t)$ for each obstacle $j$, and then planned the ego path $X(t)$ around them, as shown in Fig. 10. Brechtel et al. [80] searched among many continuous trajectories in the feature space, which is also a typical trajectory planning approach. In the feature space, the space boundary and obstacle information form the constraints of the path planning optimization problem. Many path models are used in the feature space, such as clothoids [81, 82], Bézier curves [83, 84], and splines [85, 86]. These methods focus on searching for a smooth, collision-free path; however, the semantic information provided by the feature space is usually not fully considered.

Fig. 10 Rule-based decision in the feature space [79]

3.2 Learning-Based Decision Methods

Machine learning is widely used in the decision layer of AVs. Some researchers have used supervised learning to realize end-to-end driving, usually based on deep learning on images or LiDAR point clouds. Other researchers have used reinforcement learning to make driving decisions. In addition, combining learning-based and rule-based methods is also an important research area.

3.2.1 The Driving Space in the End-to-End Driving Decision

In 1989, Pomerleau [87] used a simple neural network with one hidden layer to realize the end-to-end prediction of steering angle. This was considered to be pioneering in end-to-end autonomous driving.

With the development of deep learning, convolutional neural networks (CNNs) were applied to end-to-end driving. CNNs extract features better than simple networks and are therefore better suited to end-to-end driving. Bojarski et al. [88] realized end-to-end control from image input to steering angle, which made end-to-end driving a new research hotspot [89, 90]. In end-to-end driving, control signals are predicted directly from sensor input, while environment perception and space description are implemented implicitly inside the neural network. Bojarski et al. [88] visualized the CNN weights, as shown in Fig. 11, revealing that the CNN extracted the driving space (the road boundary in this example); however, this driving space is not explicit and is difficult to optimize appropriately.

Fig. 11 Implicit driving space in end-to-end driving [88]

End-to-end driving has attracted researchers because of its novelty and simplicity: there is no need to remodel the environment or set complex control rules. However, this simplicity brings problems. The system is highly integrated, and unlike in rule-based decision-making, the driving space is not an explicit intermediate result of environment perception, so it is difficult to optimize appropriately. Moreover, since the neural network output is uncertain, there is no guarantee that the end-to-end output is reliable and safe under all conditions, especially in unfamiliar scenarios outside the training set. These problems may be alleviated with large-scale datasets and deeper neural networks, and future computational capability will better support this approach. With current computational capability and datasets, however, combining end-to-end driving with rule-based methods is more reliable.

3.2.2 The Driving Space in the Reinforcement Learning Decision

The basic idea of reinforcement learning is to learn a control policy by adjusting actions according to reward feedback from the environment. This framework is used in AV research and many other fields. The Markov decision process is the basis of reinforcement learning, in which actions are optimized according to the environment input; reinforcement learning therefore fits the perception–decision framework of AV technologies.

In AV applications, reinforcement learning resembles a human driver's decision method. First, the driving space is modeled in the feature space with the road boundary, traffic lanes, and the positions, speeds, and accelerations of other vehicles. Second, actions are defined as discrete behavior decisions [91, 92] (lane changing, going straight, turning, etc.) or continuous control outputs [93, 94] (steering angle and acceleration). Meanwhile, a reward is set according to the driving task, e.g., passing efficiency and collisions. Finally, the driving policy is optimized through training by trial and error. The grid space is unsuitable for reinforcement learning unless combined with deep learning: a typical Markov decision process describes the environment with a finite set of states and computes transition probabilities between them, which is impractical for the state space of a grid (even a 100 × 100 binary grid admits 2^10000 distinct configurations). For example, the study in [91], shown in Fig. 12, uses a simulated feature space with the road boundary and vehicles as input and behavior decisions as actions. Because reinforcement learning finds the best driving policy by trial and error, it relies on simulation and therefore needs some adaptation for real-road tests.
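The loop described above (feature-space state, discrete behavior actions, task reward, trial-and-error training) can be sketched with tabular Q-learning on a toy road. Everything here is hypothetical: the two-lane, six-cell road, the stopped car, the reward values, and the hyperparameters. The state is just (lane, position), which is what keeps the table small.

```python
import random

LENGTH, OBSTACLE = 6, (0, 3)      # 6-cell road; stopped car in lane 0, cell 3
ACTIONS = ("keep", "change")


def step(state, action):
    """Advance one cell, optionally switching lanes; return (next_state, reward, done)."""
    lane, pos = state
    if action == "change":
        lane = 1 - lane
    pos += 1
    if (lane, pos) == OBSTACLE:
        return (lane, pos), -10.0, True           # collision ends the episode
    if pos == LENGTH - 1:
        return (lane, pos), 10.0, True            # reached the end of the segment
    return (lane, pos), -0.1 - (0.5 if action == "change" else 0.0), False


def greedy(q, s):
    return max(ACTIONS, key=lambda act: q.get((s, act), 0.0))


def train(episodes=500, alpha=0.5, gamma=0.95, eps=0.2, seed=0):
    rng = random.Random(seed)
    q = {}
    for _ in range(episodes):
        s, done = (0, 0), False
        while not done:
            a = rng.choice(ACTIONS) if rng.random() < eps else greedy(q, s)
            s2, r, done = step(s, a)
            target = r if done else r + gamma * max(q.get((s2, b), 0.0) for b in ACTIONS)
            old = q.get((s, a), 0.0)
            q[(s, a)] = old + alpha * (target - old)   # Q-learning update
            s = s2
    return q


def greedy_rollout(q):
    s, done, total, actions = (0, 0), False, 0.0, []
    while not done:
        a = greedy(q, s)
        actions.append(a)
        s, r, done = step(s, a)
        total += r
    return actions, total


actions, total = greedy_rollout(train())
```

Replacing (lane, position) with a raw occupancy grid would make the table astronomically large, which is the motivation for the deep variants discussed next.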

Fig. 12 Reinforcement learning in the feature space [91]

Deep reinforcement learning combines the perception ability of deep learning with the decision ability of reinforcement learning, so it can process higher-dimensional or larger amounts of data, such as the grid space or raw sensor information. Kashikara [95] used the grid space as CNN input to realize deep reinforcement learning. However, the grid space had low resolution, with one car occupying one cell. This differs from high-resolution perception results, but the idea of using the grid space is still important. Some researchers have applied deep reinforcement learning directly to raw sensor input [96, 97], such as images or point clouds [97]. Liu et al. [98] further combined deep reinforcement learning with supervised deep learning to make driving decisions. Deep reinforcement learning offers more options than traditional reinforcement learning and thus has greater potential in AV applications.

3.2.3 Combining Learning-Based and Rule-Based Decision Methods

Learning-based decision methods avoid the complexity of setting rules for various scenarios; however, the trained network is a black box, which makes the output uncertain and uncontrollable, especially in unfamiliar scenarios. To improve safety, some researchers have combined learning-based and rule-based methods to make decisions. Correspondingly, the driving space is also used in combined form.

Xiong et al. [99] combined reinforcement learning, lane keeping, and collision avoidance by weighting their steering and acceleration outputs, as shown in Fig. 13. The reinforcement learning component used the sensor input directly, whereas the other two tasks were carried out in the feature space, computing control signals from the offset to the lane center and the positions of other vehicles. Hubschneider et al. [100] corrected the trajectory from end-to-end learning with rule-based methods according to obstacle positions in the feature space. In both cases, the driving space is considered independently in the learning-based and rule-based components of the combined decision.
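The weighting idea can be sketched as a weighted average of (steering, acceleration) commands from independent modules. The fixed weights, the proportional lane-keeping law and its gains, and the example outputs are hypothetical; the weighting scheme actually used in [99] may differ.

```python
def lane_keeping(lateral_offset, heading_error, k_y=0.1, k_psi=0.8):
    """Hypothetical proportional lane-keeping law on feature-space lane geometry.

    Returns (steering [rad], acceleration [m/s^2]); a positive offset means the
    vehicle is left of the lane centre, so the law steers right (negative).
    """
    return -k_y * lateral_offset - k_psi * heading_error, 0.0


def blend_controls(outputs, weights):
    """Weighted average of (steering, acceleration) commands from several modules."""
    total = sum(weights.values())
    steer = sum(w * outputs[name][0] for name, w in weights.items()) / total
    accel = sum(w * outputs[name][1] for name, w in weights.items()) / total
    return steer, accel


outputs = {
    "rl": (0.10, 1.0),                        # learned policy acting on raw sensor input
    "lane_keeping": lane_keeping(0.5, 0.0),   # vehicle 0.5 m left of the lane centre
    "collision_avoidance": (0.0, -2.0),       # brake for a nearby vehicle
}
weights = {"rl": 0.5, "lane_keeping": 0.3, "collision_avoidance": 0.2}
steer, accel = blend_controls(outputs, weights)
```

Each module reads its own view of the driving space (raw sensors for the learned policy, lane and object features for the rules), which mirrors the independence noted above.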

3.3 Summary of Driving Space Application in the AV Decision Layer

In summary, the driving space is applied in various forms in AV decision-making. Table 2 presents the relationship between decision-making categories and driving space categories.

Except for end-to-end decisions and some deep reinforcement learning decisions, most decision methods are based on the driving space reconstructed by the perception layer. Both the grid space and the feature space are applied in rule-based and learning-based decision methods.

For rule-based decision methods in the grid space, path planning is based on the occupancy probabilities of the grid map or on searching for a path through sampled lattices. In the feature space, road boundaries and object information serve as the decision constraints for behavior planning or path planning. However, perception research and decision research are still not well integrated. For the grid space, perception research focuses on improving accuracy through sensor fusion, whereas the decision layer faces problems that perception research rarely considers. First, an obstacle is not described as a whole, and the computational cost is high. Second, for path planning on sampled lattices, the paths are only piecewise continuous and are therefore not as accurate as a single continuous curve. For the feature space, the decision layer is better suited to the perception results and is closer to human decision-making; however, semantic information is still not thoroughly considered. For both the grid space and the feature space, the decision layer is still unable to state its requirements on accuracy and safety to the perception layer, or to specify what must be detected and how it should be expressed; it therefore cannot guide the perception layer to meet the demands of decision-making.
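As an illustration of path planning based on occupancy probability, the sketch below runs A* search (Hart et al., cited in the reference list) over a 4-connected grid. It is a minimal sketch under stated assumptions: cells whose occupancy probability reaches a hypothetical threshold `occ_threshold` are treated as blocked, and each step costs 1 plus a hypothetical penalty `risk_weight * p` on the entered cell's probability. Because every step costs at least 1, the Manhattan-distance heuristic stays admissible and consistent.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar_grid(prob: List[List[float]], start: Cell, goal: Cell,
               occ_threshold: float = 0.5,
               risk_weight: float = 2.0) -> Optional[List[Cell]]:
    """A* over an occupancy-probability grid (4-connected).

    Cells with probability >= occ_threshold are treated as obstacles; other
    cells cost 1 + risk_weight * p to enter. Returns the cell path from start
    to goal, or None if the goal is unreachable.
    """
    rows, cols = len(prob), len(prob[0])

    def h(c: Cell) -> float:
        # Manhattan distance: admissible because every step costs >= 1.
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap: List[Tuple[float, float, Cell]] = [(h(start), 0.0, start)]
    g: Dict[Cell, float] = {start: 0.0}
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    closed = set()
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue  # stale heap entry
        if cell == goal:
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:  # walk parents back to the start
                path.append(node)
                node = parent[node]
            return path[::-1]
        closed.add(cell)
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            p = prob[nr][nc]
            if p >= occ_threshold:
                continue  # cell considered occupied
            new_cost = cost + 1.0 + risk_weight * p
            nb = (nr, nc)
            if new_cost < g.get(nb, float("inf")):
                g[nb] = new_cost
                parent[nb] = cell
                heapq.heappush(open_heap, (new_cost + h(nb), new_cost, nb))
    return None
```

The output is a sequence of cells, so, as noted above, the path is only a piecewise representation that still has to be smoothed into a drivable curve, and an obstacle is never handled as a whole object, only as a set of high-probability cells.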

For learning-based decision methods, end-to-end driving decisions use raw sensor input directly, so the driving space is not constructed explicitly. However, this makes the model difficult to optimize appropriately and makes its output uncertain. At this stage, combining such methods with rule-based methods in an explicit driving space is therefore more reliable. In reinforcement learning, the feature space is better suited because of its simple description of the space; however, existing research relies on simplified simulations and does not make full use of the constructed feature space. The grid space has been applied in some conceptual deep reinforcement learning studies, but further work is needed to adapt these methods to the grid space constructed by perception research. In short, some learning-based studies do not use an explicit driving space and others still depend on simplified simulations, which leaves a gap between the decision module and the perception module. Learning-based decision methods need further development before real-world application; nevertheless, with advances in deep learning and computational power, they are promising. Future studies should follow the development of learning-based methods and focus on how they apply the driving space.
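The compactness of the feature space is what makes it convenient for reinforcement learning: a few features describe the situation, so even a tabular method can be applied. The sketch below shows one tabular Q-learning update on such a state. Everything specific here is a hypothetical assumption for illustration: the two features (gap to the lead vehicle and relative speed), the bin edges, the three-action set, and the learning rate and discount factor. None of the cited studies is claimed to use this exact formulation.

```python
from collections import defaultdict
from typing import DefaultDict, Tuple

ACTIONS = ("brake", "keep", "accelerate")  # hypothetical discrete action set
State = Tuple[int, int]


def discretize(gap_m: float, rel_speed_mps: float,
               gap_edges: Tuple[float, ...] = (10.0, 30.0, 60.0),
               speed_edges: Tuple[float, ...] = (-2.0, 2.0)) -> State:
    """Map feature-space values to a discrete state (bin indices).

    Each bin index counts how many edges the value meets or exceeds.
    """
    gap_bin = sum(gap_m >= e for e in gap_edges)
    speed_bin = sum(rel_speed_mps >= e for e in speed_edges)
    return (gap_bin, speed_bin)


def q_update(q: DefaultDict[Tuple[State, str], float], s: State, a: str,
             reward: float, s_next: State,
             alpha: float = 0.1, gamma: float = 0.9) -> float:
    """Standard Q-learning update: Q(s,a) += alpha * (TD target - Q(s,a))."""
    best_next = max(q[(s_next, b)] for b in ACTIONS)
    td_target = reward + gamma * best_next
    q[(s, a)] += alpha * (td_target - q[(s, a)])
    return q[(s, a)]


def greedy_action(q: DefaultDict[Tuple[State, str], float], s: State) -> str:
    """Pick the action with the highest current Q-value in state s."""
    return max(ACTIONS, key=lambda a: q[(s, a)])
```

A Q-table is created with `defaultdict(float)`, so unseen state-action pairs start at zero. With a grid space as input, the state would instead be a large array, which is why deep networks (as in deep reinforcement learning) replace the table in that case.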

When rule-based and learning-based methods are combined, each component uses the driving space independently.

In summary, neither rule-based nor learning-based decision methods use the driving space construction results of the perception layer in a sufficiently consistent way. Moreover, the decision-making process does not fully exploit the wealth of information provided by driving space construction. This situation is exacerbated because the decision layer does not state its requirements for driving space construction. Finally, existing decision-making methods in the local driving space focus on local driving decisions and lack integration with the global driving task and map information.

4 Conclusions and Future Direction

Existing research on driving space forms a complete forward chain, from its construction to its application in decision-making. Based on different types of space definitions, driving space construction technology has improved with new sensor technologies and sensor fusion algorithms, and decision technology uses the constructed driving space as its representation of the driving environment. However, this chain still lacks integrity: the perception layer aims to increase accuracy, while decision-making research focuses on designing new decision policies and gives less consideration to the characteristics of perception results. Consequently, the driving space construction results cannot solidly support the decision-making process. Among rule-based decision methods, those based on the grid space involve unnecessary and repeated calculations, while those relying on the feature space cannot take full advantage of the wealth of information in the constructed driving space, especially semantic information. Learning-based methods are still at the conceptual research stage; some do not require driving space construction at all, and others use a simplified driving space in simulation. This leaves a gap in driving space construction between simulation and the real world.

In future research, it will be important to combine the perception layer and decision layer more systematically based on a deeper understanding of the driving space. By analyzing the demands of decision-making on accuracy and safety, researchers can determine what the driving space should contain and how it should be defined and constructed, thereby narrowing the existing gap between perception and decision. This is of great significance for future research on the driving space.

References

SAE On-Road Automated Vehicle Standards Committee: Taxonomy and definitions for terms related to on-road motor vehicle automated driving systems. SAE Standard J3016, 01-16 (2014)

Ryu, B.S., Yang, H.S.: Integration of reactive behaviors and enhanced topological map for robust mobile robot navigation. IEEE Trans. Syst. Man Cybern. A Syst. Hum. 29(5), 474–485 (1999)

Souissi, O., Benatitallah, R., Duvivier, D., et al.: Path planning: a 2013 survey. In: International Conference on Industrial Engineering & Systems Management, IEEE, Rabat, Morocco, October 28–30 (2013)

Katrakazas, C., Quddus, M., Chen, W.H., et al.: Real-time motion planning methods for autonomous on-road driving: State-of-the-art and future research directions. Transp. Res. C Emerg. Technol. 60, 416–442 (2015)

Elfes, A.: Sonar-based real-world mapping and navigation. IEEE J. Robot. Autom. 3(3), 249–265 (1987)

Borenstein, J., Koren, Y.: Real-time obstacle avoidance for fast mobile robots. IEEE Trans. Syst. Man Cybern. 19(5), 1179–1187 (1989)

Moravec, H.P., Elfes, A.: High resolution maps from wide angle sonar. In: IEEE International Conference on Robotics and Automation, IEEE, St. Louis, USA, March 25–28 (1985)

Moravec, H.P.: Sensor fusion in certainty grids for mobile robots. Sensor Dev. Syst. Robot. 9, 61–74 (1989)

Marchese, F.M.: Multiple mobile robots path-planning with MCA. In: International Conference on Autonomic and Autonomous Systems. IEEE Computer Society, Silicon Valley, USA, July 19–21 (2006)

Li, L.: Research on Technology of Road Information Extraction Based on Four-Layer Laser Radar. Dissertation, Beijing University of Technology (2016)

Moras, J., Rodríguez, F.S.A., Drevelle, V., et al.: Drivable space characterization using automotive lidar and georeferenced map information. In: Intelligent Vehicles Symposium, IEEE, Alcala de Henares, Spain, 3–7 June (2012)

Llorens, J., Gil, E., Llop, J., et al.: Georeferenced LiDAR 3D vine plantation map generation. Sensors 11(6), 6237–6256 (2011)

Bohren, J., Foote, T., Keller, J., et al.: Little Ben: the Ben Franklin Racing Team’s entry in the 2007 DARPA urban challenge. J. Field Robot. 25(9), 598–614 (2008)

Na, K., Park, B., Seo, B.: Drivable space expansion from the ground base for complex structured roads. In: 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC). IEEE, Budapest, Hungary, October 9–12 (2016)

Yao, J., Ramalingam, S., Taguchi, Y., et al.: Estimating drivable collision-free space from monocular video. In: 2015 IEEE Winter Conference on Applications of Computer Vision, IEEE, Waikoloa, USA, January 5–9 (2015)

Hsu, C.M., Lian, F.L., Huang, C.M., et al.: Detecting drivable space in traffic scene understanding. In: International Conference on System Science and Engineering, IEEE, Dalian, China, June 30–July 2 (2012)

Kamini, S., Nerkar, M.H.: Colour vision based drivable road area estimation. Int. J. Innov. Res. Dev. 5(4), 234–237 (2015)

Söntges, S., Althoff, M.: Computing the drivable area of autonomous road vehicles in dynamic road scenes. IEEE Trans. Intell. Transp. Syst. 99, 1–12 (2017)

Tripathi, P., Singh, H., Nagla, K.S., et al.: Occupancy grid mapping for mobile robot using sensor fusion. In: 2014 International Conference on Issues and Challenges in Intelligent Computing Techniques (ICICT), Ghaziabad, India, February 7–8 (2014)

Mouhagir, H., Talj, R., Cherfaoui, V., et al.: Integrating safety distances with trajectory planning by modifying the occupancy grid for autonomous vehicle navigation. In: IEEE International Conference on Intelligent Transportation Systems. IEEE, Rio de Janeiro, Brazil, November 1–4 (2016)

Murphy, R.R.: Dempster-Shafer theory for sensor fusion in autonomous mobile robots. IEEE Trans. Robot. Autom. 14(2), 197–206 (1998)

Baig, Q., Aycard, O.: Low level data fusion of laser and monocular color camera using occupancy grid framework. In: International Conference on Control Automation Robotics & Vision. IEEE, Singapore, December 7–10, pp. 905–910 (2011)

Thrun, S., Montemerlo, M., Dahlkamp, H., et al.: Stanley: the robot that won the DARPA grand challenge. J. Field Robot. 23(9), 661–692 (2006)

Stepan, P., Kulich, M., Preucil, L.: Robust data fusion with occupancy grid. IEEE Trans. Syst. Man Cybern. C 35(1), 106–115 (2005)

Li, H., Tsukada, M., Nashashibi, F., et al.: Multivehicle cooperative local mapping: a methodology based on occupancy grid map merging. IEEE Trans. Intell. Transp. Syst. 15(5), 2089–2100 (2014)

Hoermann, S., Henzler, P., Bach, M., et al.: Object detection on dynamic occupancy grid maps using deep learning and automatic label generation (2018). arXiv:1802.02202v1

Plazaleiva, V., José, G., Ababsa, F.E., et al.: Occupancy grids generation based on Geometric-Featured Voxel maps. In: 2015 23rd Mediterranean Conference on Control and Automation (MED), IEEE, Torremolinos, Spain, June 16–19 (2015)

Finkel, R.A., Bentley, J.L.: Quad trees a data structure for retrieval on composite keys. Acta Informatica 4(1), 1–9 (1974)

Ghosh, S., Halder, A., Sinha, M.: Micro air vehicle path planning in fuzzy quadtree framework. Appl. Soft Comput. 11(8), 4859–4865 (2011)

Ip, Y.L., Rad, A.B., Chow, K.M., et al.: Segment-based map building using enhanced adaptive fuzzy clustering algorithm for mobile robot applications. J. Intell. Robot. Syst. 35(3), 221–245 (2002)

Hardy, J., Campbell, M.: Contingency planning over probabilistic obstacle predictions for autonomous road vehicles. IEEE Trans. Robot. 29(4), 913–929 (2013)

Mullane, J., Vo, B.N., Adams, M.D., et al.: A random-finite-set approach to Bayesian SLAM. IEEE Trans. Robot. 27(2), 268–282 (2011)

Guivant, J., Nebot, E., Baiker, S.: Localization and map building using laser range sensors in outdoor applications. J. Robot. Syst. 17(10), 565–583 (2000)

Dissanayake, M.G., Newman, P., Clark, S., et al.: A solution to the simultaneous localization and map building (SLAM) problem. IEEE Trans. Robot. Autom. 17(3), 229–241 (2001)

Wang, D., Liang, H., Mei, T., et al.: Lidar Scan matching EKF-SLAM using the differential model of vehicle motion. In: Intelligent Vehicles Symposium (IV), IEEE, Gold Coast, Australia, June 23–26 (2013)

Nguyen, D.V., Nashashibi, F., Dao, T.K., et al.: Improving poor GPS area localization for intelligent vehicles. In: 2017 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI). IEEE, Daegu, South Korea, November 16–18 (2017)

Loose, H., Franke, U.: B-spline-based road model for 3D lane recognition. In: International IEEE Conference on Intelligent Transportation Systems. IEEE, Funchal, Portugal, September 19–22 (2010)

Xiao, J., Luo, L., Yao, Y., et al.: Lane detection based on road module and extended Kalman filter. In: Pacific-Rim Symposium on Image and Video Technology, pp. 20–24. Springer, Wuhan (2017)

Aly, M.: Real time detection of lane markers in urban streets. In: 2018 New Generation of CAS (NGCAS). IEEE, Valletta, Malta, November 20–23 (2018)

Hur, J., Kang, S.N., Seo, S.W.: Multi-lane detection in urban driving environments using conditional random fields. In: 2013 IEEE Intelligent Vehicles Symposium (IV), IEEE, Gold Coast, Australia, June 23–26 (2013)

Kim, J., Park, C.: End-to-end ego lane estimation based on sequential transfer learning for self-driving cars. In: IEEE Conference on Computer Vision and Pattern Recognition Workshops. IEEE Computer Society, Honolulu, USA, July 21–26 (2017)

Li, J., Mei, X., Prokhorov, D., et al.: Deep neural network for structural prediction and lane detection in traffic scene. IEEE Trans. Neural Netw. Learn. Syst. 28(3), 690–703 (2017)

Dollár, P., Wojek, C., Schiele, B., et al.: Pedestrian detection: an evaluation of the state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 34(4), 743–761 (2012)

Enzweiler, M., Gavrila, D.M.: Monocular pedestrian detection: survey and experiments. IEEE Trans. Pattern Anal. Mach. Intell. 31(12), 2179 (2009)

Wojek, C., Walk, S., Schiele, B.: Multi-cue onboard pedestrian detection. In: Computer Vision and Pattern Recognition 2009, IEEE, Miami, USA, June 20–25 (2009)

Qu, Y., Jiang, L., Guo, X.: Moving vehicle detection with convolutional networks in UAV videos. In: International Conference on Control, Automation and Robotics, IEEE, Hong Kong, China, April 28–30 (2016)

Yang, Z., Li, J., Li, H.: Real-time pedestrian and vehicle detection for autonomous driving. In: IEEE Intelligent Vehicles Symposium, IEEE, Changshu, China, June 26–30 (2018)

Wang, X., Xu, L., Sun, H., et al.: On-Road vehicle detection and tracking using MMW radar and monovision fusion. IEEE Trans. Intell. Transp. Syst. 17(7), 2075–2084 (2016)

Premebida, C., Monteiro, G., Nunes, U., et al.: A Lidar and vision-based approach for pedestrian and vehicle detection and tracking. In: 2007 IEEE Intelligent Transportation Systems Conference, IEEE, Seattle, USA, September 30–October 3 (2007)

Zuo, Z., Yu, K., Zhou, Q., et al.: Traffic signs detection based on Faster R-CNN. In: 2017 IEEE 37th International Conference on Distributed Computing Systems Workshops (ICDCSW). IEEE, Dalian, China, December 19–21 (2017)

Fleyeh, H.: Color detection and segmentation for road and traffic signs. In: IEEE Conference on Cybernetics & Intelligent Systems, IEEE, Singapore, December 1–3 (2004)

Shen, Y., Ozguner, U., Redmill, K., et al.: A robust video based traffic light detection algorithm for intelligent vehicles. In: Intelligent Vehicles Symposium, IEEE, Xi’an, China, June 3–5 (2009)

Omachi, M., Omachi, S.: Traffic light detection with color and edge information. In: IEEE International Conference on Computer Science & Information Technology, IEEE, Beijing, China, August 8–11 (2009)

Hart, P.E., Nilsson, N.J., Raphael, B.: A formal basis for the heuristic determination of minimum cost paths. IEEE Trans. Syst. Sci. Cybern. 4(2), 100–107 (1968)

Gavrilova, M.L., Rokne, J.: Collision detection optimization in a multi-particle system. Int. J. Comput. Geom. Appl. 13(04), 279–301 (2003)

Thrun, S.: The graph SLAM algorithm with applications to large-scale mapping of urban structures. Int. J. Robot. Res. 25(5), 403–429 (2006)

Grisetti, G., Kümmerle, R., Stachniss, C., et al.: A tutorial on graph-based SLAM. IEEE Intell. Transp. Syst. Mag. 2(4), 31–43 (2010)

Zhu, Q., Li, Y.: Hierarchical lane-oriented 3D road-network model. Int. J. Geogr. Inf. Sci. 22(5), 479–505 (2008)

Akcelik, R.: Comparing lane based and lane-group based models of signalised intersection networks. Transp. Res. Procedia. 15, 208–219 (2016)

Schreier, M., Willert, V.: Robust free space detection in occupancy grid maps by methods of image analysis and dynamic B-spline contour tracking. In: International IEEE Conference on Intelligent Transportation Systems, IEEE, Anchorage, USA, September 16–19 (2012)

Thormann, K., Honer, J., Baum, M.: Fast road boundary detection and tracking in occupancy grids from laser scans. In: IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems, IEEE, Daegu, South Korea, November 16–18 (2017)

Homm, F., Kaempchen, N., Ota, J., et al.: Efficient occupancy grid computation on the GPU with Lidar and radar for road boundary detection. In: Intelligent Vehicles Symposium, pp. 21–24. IEEE, San Diego (2010)

Zhang, Q.: Unmanned Vehicle Road Scene Environment Modeling. Dissertation, Xi’an University of Technology (2018)

Liu, J.: Research on Key Technologies in Unmanned Vehicle Driving Environment Modelling Based on 3D Lidar. Dissertation, University of Science and Technology of China (2016)

Hundelshausen, F.V., Himmelsbach, M., Hecker, F., et al.: Driving with tentacles: integral structures for sensing and motion. J. Field Robot. 25(9), 640–673 (2008)

Đakulović, M., Čikeš, M., Petrović, I.: Efficient interpolated path planning of mobile robots based on occupancy grid maps. IFAC Proc. Vol. 45(22), 349–354 (2012)

Hart, P.E., Nilsson, N.J., Raphael, B.: A formal basis for the heuristic determination of minimum cost paths. ACM Sigart Bull. 4(2), 100–107 (1968)

Likhachev, M., Ferguson, D., Gordon, G.: Anytime search in dynamic graphs. Artif. Intell. 172(14), 1613–1643 (2008)

Stentz, A.: Optimal and efficient path planning for partially known environments. IEEE Int. Conf. Robot. Autom. IEEE 4, 3310–3317 (1994)

Nash, A., Daniel, K., Koenig, S., et al.: Theta*: Any-angle path planning on grids. J. Artif. Intell. Res. 39(1), 533–579 (2010)

Lavalle, S.: Rapidly-exploring random trees: a new tool for path planning. Res. Rep. 293–308 (1998)

Melchior, N.A., Simmons, R.: Particle RRT for path planning with uncertainty. In: IEEE International Conference on Robotics and Automation, IEEE, Roma, Italy, April 10–14 (2007)

Ziegler, J., Stiller, C.: Spatiotemporal state lattices for fast trajectory planning in dynamic on-road driving scenarios. In: IEEE/RSJ International Conference on Intelligent Robots and Systems, IEEE, St. Louis, USA, October 10–15 (2009)

Montemerlo, M., Becker, J., Bhat, S., et al.: Junior: the Stanford entry in the urban challenge. J. Field Robot. 25(9), 569–597 (2008)

Urmson, C., Anhalt, J., Bagnell, D., et al.: Autonomous driving in urban environments: Boss and the urban challenge. J. Field Robot. 25(8), 425–466 (2008)

Leonard, J., How, J., Teller, S., et al.: A perception-driven autonomous urban vehicle. J. Field Robot. 25(10), 727–774 (2008)

Ziegler, J., Bender, P., Schreiber, M., et al.: Making Bertha drive: an autonomous journey on a historic route. IEEE Intell. Transp. Syst. Mag. 6(2), 8–20 (2014)

Prabhakar, G., Kailath, B., Natarajan, S., et al.: Obstacle detection and classification using deep learning for tracking in high-speed autonomous driving. In: IEEE Region 10 Symposium (TENSYMP), IEEE, Cochin, India, July 14–16 (2017)

Ziegler, J., Bender, P., Dang, T., et al.: Trajectory planning for Bertha: a local, continuous method. In: IEEE Intelligent Vehicles Symposium Proceedings, IEEE, Dearborn, USA, June 8–11 (2014)

Brechtel, S., Gindele, T., Dillmann, R.: Probabilistic decision-making under uncertainty for autonomous driving using continuous POMDPs. In: 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), IEEE, Qingdao, China, October 8–11 (2014)

Fraichard, T., Scheuer, A.: From reeds and shepp’s to continuous-curvature paths. IEEE Trans. Robot. 20(6), 1025–1035 (2004)

Broggi, A., Medici, P., Zani, P., et al.: Autonomous vehicles control in the VisLab intercontinental autonomous challenge. Annu. Rev. Control 36(1), 161–171 (2012)

Rastelli, J.P., Lattarulo, R., Nashashibi, F.: Dynamic trajectory generation using continuous-curvature algorithms for door to door assistance vehicles. In: Intelligent Vehicles Symposium Proceedings, IEEE, Dearborn, USA, June 8–11 (2014)

Pérez, J., Godoy, J., Villagrá, J., et al.: Trajectory generator for autonomous vehicles in urban environments. In: IEEE International Conference on Robotics and Automation, IEEE, Karlsruhe, Germany, May 6–10 (2013)

Trepagnier, P.G., Nagel, J., Kinney, P.M., et al.: KAT-5: robust systems for autonomous vehicle navigation in challenging and unknown terrain. J. Field Robot. 23(8), 509–526 (2006)

Berglund, T., Brodnik, A., Jonsson, H., et al.: Planning smooth and obstacle-avoiding B-spline paths for autonomous mining vehicles. IEEE Trans. Autom. Sci. Eng. 7(1), 167–172 (2009)

Pomerleau, D.A.: ALVINN: an autonomous land vehicle in a neural network. Adv. Neural Inf. Process. Syst. 313–315 (1989)

Bojarski, M., Del Testa, D., Dworakowski, D., et al.: End to end learning for self-driving cars (2016). arXiv:1604.07316

Zhang, J., Cho, K.: Query-efficient imitation learning for end-to-end autonomous driving (2016). arXiv:1605.06450

Xu, H., Gao, Y., Yu, F., et al.: End-to-end learning of driving models from large-scale video datasets (2016). arXiv:1602.01079

You, C., Lu, J., Filev, D., et al.: Highway traffic modeling and decision making for autonomous vehicle using reinforcement learning. In: 2018 IEEE Intelligent Vehicles Symposium (IV), IEEE, Changshu, China, June 26–30 (2018)

Qiao, Z., Muelling, K., Dolan, J.M., et al.: Automatically generated curriculum based reinforcement learning for autonomous vehicles in urban environment. In: 2018 IEEE Intelligent Vehicles Symposium (IV), IEEE, Changshu, China, June 26–30 (2018)

Zheng, R., Liu, C., Guo, Q.: A decision-making method for autonomous vehicles based on simulation and reinforcement learning. In: International Conference on Machine Learning & Cybernetics, IEEE, Tianjin, China, July 14–17 (2014)

Ma, X., Driggs-Campbell, K., Kochenderfer, M.J.: Improved robustness and safety for autonomous vehicle control with adversarial reinforcement learning. In: 2018 IEEE Intelligent Vehicles Symposium (IV), IEEE, Changshu, China, June 26–30 (2018)

Kashihara, K.: Deep Q learning for traffic simulation in autonomous driving at a highway junction. In: 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), IEEE, Banff, Canada, October 5–8 (2017)

Wang, P., Chan, C.Y.: Formulation of deep reinforcement learning architecture toward autonomous driving for on-ramp merge. In: 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), IEEE, Yokohama, Japan, October 16–19 (2017)

Fayjie, A.R., Hossain, S., Oualid, D., et al.: Driverless car: autonomous driving using deep reinforcement learning in urban environment. In: 2018 15th International Conference on Ubiquitous Robots (UR), IEEE, Honolulu, HI, USA, June 26–30 (2018)

Liu, K., Wan, Q., Li, Y.: A deep reinforcement learning algorithm with expert demonstrations and supervised loss and its application in autonomous driving. In: 2018 37th Chinese Control Conference (CCC), IEEE, Wuhan, China, July 25–27 (2018)

Xiong, X., Wang, J., Zhang, F., et al.: Combining deep reinforcement learning and safety based control for autonomous driving (2016). arXiv:1612.00147

Hubschneider, C., Bauer, A., Doll, J., et al.: Integrating end-to-end learned steering into probabilistic autonomous driving. In: 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), IEEE, Yokohama, Japan, October 16–19 (2017)

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (Grant No. U1864203), and in part by the International Science and Technology Cooperation Program of China (No. 2016YFE0102200).

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Yang, D., Jiao, X., Jiang, K. et al. Driving Space for Autonomous Vehicles. Automot. Innov. 2, 241–253 (2019). https://doi.org/10.1007/s42154-019-00081-1

DOI: https://doi.org/10.1007/s42154-019-00081-1