Abstract

In statistical inference, we commonly assume that samples are independent and identically distributed from a probability distribution included in a pre-specified statistical model. However, such an assumption is often violated in practice. Even a single unexpected extreme sample, called an outlier, can significantly affect classical estimators. Robust statistics studies how to construct reliable statistical methods that work efficiently even when the ideal assumption is violated. Recent works have revealed that robust estimators such as Tukey’s median are well approximated by the generative adversarial net (GAN), a popular learning method for complex generative models using neural networks. A GAN can be regarded as a learning method based on integral probability metrics (IPMs), which are discrepancy measures for probability distributions. In most theoretical analyses of Tukey’s median and its GAN-based approximation, however, the Gaussian or elliptical distribution is assumed as the statistical model. In this paper, we explore the application of GAN-like estimators to a general class of statistical models. As the statistical model, we consider the kernel exponential family, which includes both finite- and infinite-dimensional models. To construct a robust estimator, we propose the smoothed total variation (STV) distance as a class of IPMs. We then theoretically investigate the robustness properties of STV-based estimators. Our analysis reveals that the STV-based estimator is robust against distribution contamination for the kernel exponential family. Furthermore, we analyze the prediction accuracy of a Monte Carlo approximation method, which circumvents the computational difficulty of the normalization constant.



1 Introduction

In statistical inference, we often make idealized assumptions about the data distribution. For instance, samples are assumed to be independent and identically distributed from a probability distribution that is included in a pre-specified statistical model. However, such an assumption is often violated in practice. The data set may contain unexpected samples caused, for example, by the failure of observation equipment. Even a single extreme sample, called an outlier, can have a large impact on classical estimators. Robust statistics studies how to construct reliable statistical methods that work efficiently even when ideal assumptions on the observations are violated.

1.1 Background

Robust statistics has a long history. Its theoretical foundation was established by many works, including [1,2,3,4]. A typical problem setting of robust statistics is that the data are observed from the contaminated model \((1-\varepsilon )P+\varepsilon Q\), where Q is a contamination distribution and \(\varepsilon \) is a contamination ratio. The goal is to estimate, from the data, the target distribution P or one of its statistics, such as the location parameter or the variance. Samples from Q are regarded as outliers. Sensitivity measures such as the influence function or the breakdown point are used to evaluate the robustness of an estimator against outliers. The influence function is defined as the Gâteaux derivative of the estimation functional in the direction of the point mass at an outlier. The most B-robust estimator is the estimator that minimizes the supremum of the modulus of the influence function [3]. The median is the most B-robust estimator for the mean value of the one-dimensional Gaussian distribution. The breakdown point is defined as the maximum contamination ratio such that the estimator still gives meaningful information about the target distribution P. It is well known that the median asymptotically attains the maximum breakdown point under some assumptions. For these reasons, the median is regarded as the most robust estimator of the mean value, especially when the data are distributed from a contaminated Gaussian distribution.
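To make the contaminated model concrete, the following minimal sketch draws samples from \((1-\varepsilon )P+\varepsilon Q\) and compares the sample mean with the sample median as estimators of the location of P. The choices \(P=N(0,1)\), \(Q=N(10,1)\), \(\varepsilon =0.1\) and the helper name `sample_contaminated` are illustrative assumptions, not taken from the text.

```python
import numpy as np

def sample_contaminated(n, eps, rng, outlier_loc=10.0):
    """Draw n samples from (1 - eps) * N(0, 1) + eps * N(outlier_loc, 1)."""
    is_outlier = rng.random(n) < eps      # each sample is an outlier with probability eps
    x = rng.standard_normal(n)            # samples from the target P = N(0, 1)
    x[is_outlier] += outlier_loc          # shift outliers so that they come from Q
    return x

rng = np.random.default_rng(0)
x = sample_contaminated(10_000, eps=0.1, rng=rng)
mean_err = abs(np.mean(x))      # pulled toward the outliers by roughly eps * outlier_loc
median_err = abs(np.median(x))  # moves much less under the same contamination
print(f"|mean| = {mean_err:.3f}, |median| = {median_err:.3f}")
```

With these settings the mean is shifted by about \(\varepsilon \times 10=1\), while the median moves only to roughly the 0.56 quantile of N(0,1), illustrating why the median is preferred under contamination.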

Numerous works have studied multi-dimensional extensions of the median. The componentwise median and the geometric median are straightforward extensions of the median to multivariate data. These estimators are, however, suboptimal as estimators of the location parameter under the contaminated model [5,6,7]. The authors of [2] proposed the concept of data depth, which measures how deeply a point is embedded in the scattered data. The deepest point for the observed data set is called Tukey’s median. Numerous works have proved that Tukey’s median has desirable properties as an estimator of the multivariate location parameter of the target distribution: a high breakdown point [8], the redescending property of the influence function [9], the min–max optimal convergence rate [5], and the properties required of a general statistical depth function [10].

As another approach to robust statistics, minimum distance methods have been explored by many authors, including [11,12,13,14,15,16]. The robustness of statistical inference is closely related to the topological structure induced by the loss function over the set of probability distributions [17]. For instance, the negative log-likelihood loss used in the maximum likelihood estimator (MLE) corresponds to the minimization of the Kullback–Leibler (KL) divergence from the empirical distribution to the statistical model. A deep insight by [17] revealed that the KL divergence does not yield a relevant topological structure on the set of probability distributions. This is one reason why the MLE is not robust to contamination. To construct a robust estimator, the KL divergence is replaced with other divergences that induce a relevant topology, such as the density-power divergence or the pseudo-spherical divergence [13,14,15]. These divergences belong to the class of Bregman divergences [18]. The robustness of Bregman divergences has been investigated by many works, including [19, 20].

Besides Bregman divergences, f-divergences [17, 21] and integral probability metrics (IPMs) [22] have been widely applied in statistics and machine learning. In statistical learning with deep neural networks, generative adversarial networks (GANs) have been proposed to estimate complex data distributions, such as the image-generating process [23]. The original GAN can be regarded as a learning algorithm that minimizes the Jensen–Shannon (JS) divergence. It was extended to the f-GAN, a learning method that uses an f-divergence as the loss function [24]. This extension has enabled the use of other f-divergences for the estimation of generative models. Note that the variational formulation of the f-divergence allows the empirical distribution to be substituted directly. IPMs such as the Wasserstein distance or the maximum mean discrepancy (MMD) are also used to learn generative models [25,26,27]. Learning methods using f-divergences and IPMs are formulated as min–max optimization problems, in which the inner maximization yields the variational expression of the divergence.

Recently, the authors of [28, 29] found a relationship between f-GANs and robust estimators based on data depth. Roughly, their works showed that depth-based estimators for the location parameter and the covariance matrix are approximately represented by the f-GAN using the total variation (TV) distance, i.e., the TV-GAN. Furthermore, [28] proved that the approximate Tukey’s median obtained by the TV-GAN attains the min–max optimal convergence rate, as does the original Tukey’s median [5]. Approximating data depth by GAN-based estimators is computationally advantageous. The authors of [6, 7] proposed polynomial-time computable robust estimators of the Gaussian mean and covariance. However, their algorithms often require knowledge such as the contamination ratio, which is usually unavailable in practice. On the other hand, GAN-based robust estimators are computationally efficient in practice, although their computational cost has not been rigorously guaranteed by theory. Inspired by [28, 29], some authors have investigated depth-based estimators from the standpoint of GANs [30,31,32,33,34].

In this paper, we explore the application of GAN-based robust estimators to a general class of statistical models. Most theoretical analyses of Tukey’s median and its GAN-based approximation assume the Gaussian distribution or an elliptical distribution. To construct a robust estimator, we propose a class of IPMs called the smoothed total variation distance. As the statistical model, we consider the kernel exponential family [35, 36]. The kernel method is commonly used in statistics [37,38,39]. A kernel function corresponds to a reproducing kernel Hilbert space (RKHS). The kernel exponential family with a finite-dimensional RKHS reduces to the standard exponential family, and an infinite-dimensional RKHS provides an infinite-dimensional exponential family. We propose a statistical learning method using the smoothed TV distance for the kernel exponential family and analyze its statistical properties, such as the convergence rate under contaminated models. For such models, computing the normalization constant is often infeasible [40,41,42,43]. In this paper, we use a Monte Carlo approximation to overcome this computational difficulty. Then, we analyze the robustness of the resulting approximate estimators.

1.2 Related works

This section discusses work related to our paper, focusing on GAN-based robust estimators, the kernel exponential family, and unnormalized models.

1.2.1 Relation between Tukey’s Median and GAN

Recent studies revealed some statistical properties of depth-based estimators, including Tukey’s median [5, 28,29,30,31,32,33,34]. A connection between depth-based robust estimators and GANs was first studied by [28]. Originally, GAN was proposed as a learning algorithm for generative models. There are two main approaches to constructing learning algorithms for generative models.

One approach uses f-divergences and is called the f-GAN; it includes the vanilla GAN [23, 24]. In f-GAN learning, the estimator is obtained by minimizing an approximate f-divergence between the empirical distribution and the generative model. In [28, 29, 31], the robustness of f-GANs implemented by DNNs has been established mainly for the location parameter and the variance–covariance matrix of the normal distribution.

The other approach uses IPM-based methods such as [25, 44]. In IPM-based learning, the estimator is computed by minimizing the gap between generalized moments of the empirical distribution and those of the estimated distribution. Hence, IPM-based learning is similar to the generalized method of moments [45]. Gao et al. [28] proved that Tukey’s median and depth-based robust estimators are expressed as the minimization of a modified IPM between the empirical distribution and the model. Some works, including [28, 30, 32], show that IPM-based estimators also provide robust estimators for the parameters of the normal distribution. The authors of [30] studied the estimation accuracy of robust estimators defined by the Wasserstein distance or the neural net distance [46], both of which are examples of IPMs.

The connection between the robust estimator and GAN allows us to apply recent developments in deep neural networks (DNNs) to robust statistics. However, most theoretical works on GAN-based robust statistics have focused on estimating the mean vector or variance–covariance matrix in the multivariate normal distribution, as shown above.

In this paper, we propose a smoothed variant of the total variation (TV) distance, called the smoothed TV (STV) distance, and investigate its convergence properties for general statistical models. STV distances form a subclass of IPMs. The work most closely related to ours is [32], in which the smoothed Kolmogorov–Smirnov (KS) distance over the set of probability distributions is proposed. In that paper, the smoothed KS distance is employed to estimate the mean value or the second-order moment of the population distribution under contamination. In contrast, we use the STV distance for the kernel exponential family, including infinite-dimensional models, to estimate the probability density. Furthermore, the estimation accuracy of the STV-based estimator is evaluated by the TV distance. In many theoretical analyses, the loss function used to compute the estimator is again used to evaluate its estimation accuracy. For example, the estimator based on the f-GAN is assessed by the same f-divergence. Such an assessment does not allow a fair comparison of estimation accuracy across different losses. We use the TV distance to assess estimation accuracy for any STV-based learning in a unified manner. For that purpose, we analyze the difference between the TV distance and the STV distance to evaluate the estimation bias induced by the STV distance.

1.2.2 Statistical inference with kernel exponential family

The exponential family plays a central role in mathematical statistics [47, 48]. Indeed, the exponential family has rich mathematical properties from various viewpoints: minimal sufficiency, statistical efficiency, maximum entropy under moment constraints on the sufficient statistics, geometric flatness in the sense of information geometry, and so on.

The kernel exponential family is an infinite-dimensional extension of the exponential family [35]. Due to its computational tractability, the kernel exponential family is used as a statistical model for non-parametric density estimation [36, 49, 50].

Some authors have studied robustness for infinite-dimensional non-parametric estimators. In [51], the authors study an estimator based on a robust test, which is computationally infeasible; the TV distance is used as the discrepancy measure. In [52], the estimation accuracy of the wavelet thresholding estimator is evaluated using an IPM loss defined by a Besov space.

In contrast, we study the robustness of the STV-based estimator for the kernel exponential family. The estimation accuracy is evaluated by the TV distance, the STV distance, and the norm in the parameter space. For the finite-dimensional multivariate normal distribution, we derive the minimax convergence rate of the robust estimator for the covariance matrix in terms of the Frobenius norm, whereas existing works mainly focus on the convergence rate under the operator norm.

1.2.3 Unnormalized models

For complex probability density models, including the kernel exponential family, computing the normalization constant is often infeasible. In such a case, the MLE cannot be applied directly. Numerous works have addressed this computational difficulty in statistical inference [40,41,42,43, 53,54,55,56,57,58]. The kernel exponential family faces the same obstacle. To avoid computing the normalization constant, [36] investigated an estimation method using the Fisher divergence. Another approach is to use the dual expression of the MLE for the kernel exponential family [36, 49, 50]. The IPM-based robust estimator considered in this paper also faces this computational issue.

Here, we employ the Monte Carlo method. For the infinite-dimensional kernel exponential family, the representer theorem cannot be applied to reduce the infinite-dimensional optimization problem to a finite-dimensional one. However, finite sampling by the Monte Carlo method enables us to use the standard representer theorem to compute the estimator. We analyze the relation between the number of Monte Carlo samples and the convergence rate under a contaminated distribution.

1.2.4 Organization

The paper is organized as follows. In Sect. 2, we introduce the notation used in this paper and discrepancy measures used in statistical inference, and we describe the relationship between data depth and GAN-based robust estimators. In Sect. 3, we define the smoothed TV distance and investigate some of its theoretical properties, such as its difference from the TV distance. In Sect. 4, the smoothed TV distance with regularization is applied to the estimation of the kernel exponential family, and we derive the convergence rate of the estimator in terms of the TV distance. In Sect. 5, we investigate the convergence rate of the estimator in the parameter space. For the estimation of the covariance matrix of the multivariate normal distribution, we prove that our method attains the minimax optimal rate in terms of the Frobenius norm under contamination, while most past works derived the convergence rate in terms of the operator norm. In Sect. 6, we investigate the convergence rate of the learning algorithm that uses a Monte Carlo approximation of the normalization constant. Section 7 is devoted to the conclusion and future work. Detailed proofs of the theorems are presented in the Appendix.

2 Discrepancy measures for statistical inference

In this section, we define the notation used throughout this paper. Then, we introduce some discrepancy measures for statistical inference.

2.1 Notations and definitions

We use the following notation throughout the paper. Let \({\mathcal {P}}\) be the set of all probability measures on a Borel space \(({\mathcal {X}},{\mathcal {B}})\), where \({\mathcal {B}}\) is a Borel \(\sigma \)-algebra. For the Borel space, \(L_0\) denotes the set of all measurable functions and \(L_1(\subset L_0)\) denotes the set of all integrable functions. Here, functions in \(L_0\) are allowed to take \(\pm \infty \). For a function set \({\mathcal {U}}\subset {L_0}\) and \(c\in {\mathbb {R}}\), let \(c\,{\mathcal {U}}\) be \(\{c u : u\in {\mathcal {U}}\}\) and \(-{\mathcal {U}}\) be \((-1){\mathcal {U}}\). The expectation of \(f\in L_0\) with respect to the probability distribution \(P\in {\mathcal {P}}\) is denoted by \({\mathbb {E}}_P[f]=\int _{{\mathcal {X}}}f\textrm{d}P\). We also write \({\mathbb {E}}_{X\sim {P}}[f(X)]\) or \({\mathbb {E}}_{{P}}[f(X)]\) to specify the random variable X. The domain of integration, \({\mathcal {X}}\), is dropped if there is no confusion. For a Borel measure \(\mu \), \(P\ll \mu \) denotes that P is dominated by \(\mu \), i.e., \(\mu (A)=0\) for \(A\in {\mathcal {B}}\) implies \(P(A)=0\). When \(P\ll \mu \) holds, P has the probability density function p such that the expectation is computed by \(\int _{{\mathcal {X}}}f(x)p(x)\textrm{d}\mu (x)\) or \(\int _{{\mathcal {X}}}f p\textrm{d}\mu \). The function p is denoted by \(\frac{\textrm{d}{P}}{\textrm{d}{\mu }}\). The indicator function of \(A\in {\mathcal {B}}\) is denoted by \(\varvec{1}_A(x)\); it takes 1 for \(x\in {A}\) and 0 for \(x\not \in {A}\). In particular, the step function, i.e., the indicator function of the set of non-negative real numbers \({\mathbb {R}}_+\), is denoted by \(\varvec{1}\) for simplicity: \(\varvec{1}(x)=1\) for \(x\ge 0\) and 0 otherwise. The indicator function \({\mathbb {I}}[A]\) takes 1 (resp. 0) when the proposition A is true (resp. false). 
The function \(\textrm{id}:{\mathbb {R}}\rightarrow {\mathbb {R}}\) stands for the identity function, i.e., \(\textrm{id}(x)=x\) for \(x\in {\mathbb {R}}\). The Euclidean norm of \({\varvec{x}}\in {\mathbb {R}}^d\) is denoted by \(\Vert {\varvec{x}}\Vert _2=\sqrt{{\varvec{x}}^T{\varvec{x}}}\).

Let us define \({\mathcal {H}}\) as a reproducing kernel Hilbert space (RKHS) on \({\mathcal {X}}\). For \(f,g\in {\mathcal {H}}\), the inner product on \({\mathcal {H}}\) is expressed by \(\langle f,g\rangle \) and the norm of f is defined by \(\Vert f\Vert =\sqrt{\langle f,f\rangle }\). For a positive number U, let \({\mathcal {H}}_U\) be the subset of the RKHS \({\mathcal {H}}\) defined by \(\{f\in {\mathcal {H}}:\Vert f\Vert \le U\}\). See [59] for details of RKHS.
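For functions of the finite form \(f=\sum _i\alpha _i k(x_i,\cdot )\), the reproducing property gives \(\Vert f\Vert ^2=\alpha ^TK\alpha \) with the Gram matrix \(K_{ij}=k(x_i,x_j)\), so membership in \({\mathcal {H}}_U\) can be checked directly. The sketch below assumes a Gaussian kernel \(k(x,y)=\exp (-\Vert x-y\Vert _2^2/(2h^2))\) with bandwidth h; the kernel choice and all function names are illustrative assumptions.

```python
import numpy as np

def gaussian_gram(X, Y, h=1.0):
    """Gram matrix K[i, j] = exp(-||X[i] - Y[j]||^2 / (2 h^2))."""
    sq = np.sum(X**2, 1)[:, None] + np.sum(Y**2, 1)[None, :] - 2.0 * X @ Y.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * h**2))  # clip tiny negative round-off

def rkhs_norm(alpha, X, h=1.0):
    """RKHS norm of f = sum_i alpha[i] k(X[i], .), using ||f||^2 = alpha^T K alpha."""
    K = gaussian_gram(X, X, h)
    return float(np.sqrt(max(alpha @ K @ alpha, 0.0)))

def in_ball(alpha, X, U, h=1.0):
    """Check whether f belongs to H_U = {f in H : ||f|| <= U}."""
    return rkhs_norm(alpha, X, h) <= U

X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]])
alpha = np.array([1.0, -0.5, 0.25])
print(rkhs_norm(alpha, X), in_ball(alpha, X, U=1.0))
```

For a single centre, \(\Vert k(x,\cdot )\Vert ^2=k(x,x)=1\) for this kernel, which is a quick sanity check of the implementation.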

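In statistical inference, discrepancy measures for probability distributions play an important role. One of the most popular discrepancy measures in statistics is the Kullback–Leibler (KL) divergence

\[\textrm{KL}(P,Q)=\int _{{\mathcal {X}}}\log \frac{\textrm{d}{P}}{\textrm{d}{Q}}\,\textrm{d}P\qquad (P\ll Q),\]

with \(\textrm{KL}(P,Q)=\infty \) when P is not dominated by Q.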

When \(P\ll \mu \) and \(Q\ll \mu \) hold, P (resp. Q) has the probability density p (resp. q) with respect to \(\mu \). Then, the KL divergence is expressed by

\[\textrm{KL}(P,Q)=\int _{{\mathcal {X}}}p\log \frac{p}{q}\,\textrm{d}\mu .\]

Note that the KL divergence is not a distance; in particular, it is not symmetric. Another important discrepancy measure is the total variation (TV) distance for \(P,Q\in {\mathcal {P}}\),

\[\textrm{TV}(P,Q)=\sup _{A\in {\mathcal {B}}}|{P(A)-Q(A)}|.\]

When P and Q have the probability density functions p and q, respectively, with respect to the Borel measure \(\mu \), we have

\[\textrm{TV}(P,Q)=\frac{1}{2}\int _{{\mathcal {X}}}|{p-q}|\,\textrm{d}\mu .\]

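As a generalization of the total variation distance, the integral probability metric (IPM) is defined by

\[\textrm{IPM}(P,Q;{\mathcal {F}})=\sup _{f\in {\mathcal {F}}}\bigl |{\mathbb {E}}_P[f]-{\mathbb {E}}_Q[f]\bigr |,\qquad (1)\]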

where \({\mathcal {F}}\subset L_0\) is a uniformly bounded function set, i.e., \(\sup _{f\in {\mathcal {F}}, x\in {\mathcal {X}}}|{f(x)}|<\infty \) [22]. If \({\mathcal {F}}\) satisfies \({\mathcal {F}}=-{\mathcal {F}}\), clearly one can drop the modulus sign in the definition of the IPM. The same property holds when \({\mathcal {F}}=\{c-f:\,f\in {\mathcal {F}}\}\) holds for a fixed real number c; see (1). The class of IPMs includes not only the total variation distance but also the Wasserstein distance, the Dudley distance, and the maximum mean discrepancy (MMD) [60]. The MMD is used for non-parametric two-sample testing [61], and the Wasserstein distance is used for transfer learning and for estimation with generative models [44, 62,63,64,65]. In the following sections, we study robust statistical inference using a class of IPMs.
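On a finite sample space, the TV distance is the IPM over indicator functions of events, and it also equals half the \(L_1\) distance between probability mass functions. The brute-force sketch below checks that the two expressions agree on a small example; the distributions p and q are illustrative assumptions.

```python
import itertools
import numpy as np

def tv_half_l1(p, q):
    """TV distance as (1/2) * sum_x |p(x) - q(x)|."""
    return 0.5 * float(np.sum(np.abs(p - q)))

def tv_sup_over_events(p, q):
    """TV distance as sup_A |P(A) - Q(A)|, enumerating every subset A."""
    n = len(p)
    best = 0.0
    for r in range(n + 1):
        for A in itertools.combinations(range(n), r):
            idx = list(A)
            # E_P[1_A] - E_Q[1_A] is the IPM objective for the indicator of A
            best = max(best, abs(float(p[idx].sum() - q[idx].sum())))
    return best

p = np.array([0.1, 0.4, 0.3, 0.2])
q = np.array([0.25, 0.25, 0.25, 0.25])
print(tv_half_l1(p, q), tv_sup_over_events(p, q))
```

Enumeration is exponential in the size of the sample space, so this is only a check of the identity, not a practical way to compute the TV distance.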

2.2 Depth-based methods and IPMs

Tukey’s median is a multivariate analog of the median. Given d-dimensional i.i.d. samples \(X_1,\ldots ,X_n\), Tukey’s median is defined as the minimum solution of

Let \({\widehat{P}}_{\varvec{\mu }}\) be the probability distribution having the uniform point mass on \(X_1-\varvec{\mu },\ldots ,X_n-\varvec{\mu }\) and \({\mathcal {F}}\) be the function set \({\mathcal {F}}=\{{\varvec{x}}\mapsto {\mathbb {I}}[{\varvec{u}}^T{\varvec{x}}\ge 0]\,:\,{\varvec{u}}\in {\mathbb {R}}^d,\,\Vert {\varvec{u}}\Vert _2=1\} \cup \{{\varvec{x}}\mapsto {\mathbb {I}}[{\varvec{u}}^T{\varvec{x}}>0]\,:\,{\varvec{u}}\in {\mathbb {R}}^d,\,\Vert {\varvec{u}}\Vert _2=1\}\). Then, we can confirm that the IPM between \({\widehat{P}}_{\varvec{\mu }}\) and the multivariate standard normal distribution \(N_d(\varvec{0},I_d)\) with the above \({\mathcal {F}}\) yields

i.e., one can drop the modulus in the definition of the IPM. Tukey’s median is expressed by the minimization of the above IPM.
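As a minimal numerical sketch of this IPM, the code below evaluates it between \({\widehat{P}}_{\varvec{\mu }}\) and \(N_d(\varvec{0},I_d)\) over the half-space class \({\mathcal {F}}\), using that \({\mathbb {E}}_{N_d(\varvec{0},I_d)}[{\mathbb {I}}[{\varvec{u}}^T{\varvec{x}}\ge 0]]={\mathbb {E}}_{N_d(\varvec{0},I_d)}[{\mathbb {I}}[{\varvec{u}}^T{\varvec{x}}>0]]=1/2\) for every unit vector \({\varvec{u}}\). Replacing the supremum over unit vectors by a maximum over finitely many random directions is an approximation we introduce for illustration; it only gives a lower bound. Minimizing the returned value over \(\varvec{\mu }\) would approximate Tukey’s median.

```python
import numpy as np

def halfspace_ipm(X, mu, n_dirs=2000, rng=None):
    """Approximate IPM(P_hat_mu, N_d(0, I_d); F) over half-space indicators.

    The supremum over unit vectors u is replaced by a maximum over
    n_dirs random directions, so the result is a lower bound."""
    rng = np.random.default_rng(0) if rng is None else rng
    U = rng.standard_normal((n_dirs, X.shape[1]))
    U /= np.linalg.norm(U, axis=1, keepdims=True)   # random unit directions
    proj = (X - mu) @ U.T                            # proj[i, j] = u_j^T (X_i - mu)
    geq = np.mean(proj >= 0, axis=0)                 # E_{P_hat_mu}[I[u^T x >= 0]]
    gt = np.mean(proj > 0, axis=0)                   # E_{P_hat_mu}[I[u^T x > 0]]
    # Under N_d(0, I_d), both indicators have expectation exactly 1/2.
    return float(max(np.max(np.abs(geq - 0.5)), np.max(np.abs(gt - 0.5))))

rng = np.random.default_rng(1)
X = rng.standard_normal((200, 2))
print(halfspace_ipm(X, np.zeros(2)), halfspace_ipm(X, np.array([10.0, 10.0])))
```

A centre far from the data admits a direction in which no shifted sample lies in the half-space, so the value approaches its maximum 1/2; near the centre of the data the value is small.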

Likewise, the covariance matrix depth is expressed by the IPM between the probability distribution \({\widehat{P}}_\Sigma \) having the uniform point mass on \(\Sigma ^{-1/2}X_1, \ldots , \Sigma ^{-1/2}X_n\) for a positive definite matrix \(\Sigma \) and \(N_d(\varvec{0},I_d)\). For the function set \({\mathcal {F}}=\{{\varvec{x}}\mapsto {\mathbb {I}}[{\varvec{u}}^T({\varvec{x}}{\varvec{x}}^T-I_d){\varvec{u}}\le 0]\,:\,{\varvec{u}}\in {\mathbb {R}}^d{\setminus }\{\varvec{0}\}\}\), we can confirm that

The last line is nothing but the covariance matrix depth. The minimizer of \(\textrm{IPM}({\widehat{P}}_{\Sigma },N_d(\varvec{0},I_d);{\mathcal {F}})\) over positive definite matrices \(\Sigma \) coincides with the depth-based covariance matrix estimator.

Another IPM-based expression of the robust estimator is presented by [28]. In order to express the estimator as the minimization of the IPM from the empirical distribution \(P_n\) of the data to the statistical model, a variant of the IPM loss is introduced. For instance, the robust covariance estimator with the data depth is expressed as the minimizer of \(\lim _{r\rightarrow 0}\textrm{IPM}(P_n, N_d(\varvec{0},\Sigma );{\mathcal {F}}_{N_d(\varvec{0},\Sigma ),r})\) with respect to the covariance matrix \(\Sigma \), where \({\mathcal {F}}_{Q,r}\) is a function set depending on the distribution Q and a positive real parameter r. Details are shown in Proposition 2.1 of [28]. Although the connection between data depth and IPMs is not straightforward, these results suggest that GAN-based estimators are a promising approach to robust density estimation.

3 Smoothed total variation distance

We define the smoothed total variation distance as a class of the IPM, and investigate its theoretical properties. All the proofs of theorems in this section are deferred to Appendix A.

3.1 Definition and some properties

As an extension of the TV distance, let us define the smoothed TV distance, which is a class of IPM.

Definition 1

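Let \(\sigma :{\mathbb {R}}\rightarrow {\mathbb {R}}\) be a measurable function and \({\mathcal {U}}\subset L_0\) be a function set including the zero function. For \(P,Q\in {\mathcal {P}}\), the smoothed total variation (STV) distance, \(\textrm{STV}_{{\mathcal {U}},\sigma }(P,Q)\), is defined by

\[\textrm{STV}_{{\mathcal {U}},\sigma }(P,Q)=\sup _{u\in {\mathcal {U}},\,b\in {\mathbb {R}}}\bigl |{\mathbb {E}}_P[\sigma (u-b)]-{\mathbb {E}}_Q[\sigma (u-b)]\bigr |.\]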

For the bias b, one can impose a constraint such as \(|{b}|\le R\) with a positive constant R. Regardless of the constraint on the bias b, we write \(\textrm{STV}(P,Q)\) or \(\textrm{STV}_\sigma (P,Q)\) for \(\textrm{STV}_{{\mathcal {U}},\sigma }(P,Q)\) when there is no confusion.

The STV distance is nothing but the IPM with the function class \(\{\sigma (u-b):\,u\in {\mathcal {U}}, b\in {\mathbb {R}}\}\). We show that the STV distance is a smoothed variant of the TV distance and shares some of its statistical properties. When the function set \({\mathcal {U}}\) is properly defined and \(\sigma \) is smooth, it is possible to construct a computationally tractable learning algorithm using the STV distance. In contrast, learning with the TV distance is often computationally intractable, since the indicator function is non-differentiable and thus prevents efficient gradient-based optimization. In this paper, we focus on the STV distance for which the function set \({\mathcal {U}}\) is a ball in an RKHS. The details are shown in Sect. 3.3.
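A minimal sketch of the STV distance between two empirical distributions, assuming a one-dimensional sample space, the sigmoid \(\sigma \), and \({\mathcal {U}}=\{x\mapsto wx:\,|w|\le U\}\), which is the ball of radius U in the RKHS of the linear kernel \(k(x,y)=xy\). These choices and the function names are illustrative assumptions; a plain grid search over \((w,b)\) stands in for the gradient-based optimization that a smooth \(\sigma \) would permit.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def empirical_stv(x, y, U=5.0, n_w=101, n_b=201):
    """Grid-search approximation of
    sup_{|w| <= U, b} | mean_i sigma(w x_i - b) - mean_j sigma(w y_j - b) |."""
    ws = np.linspace(-U, U, n_w)                       # slopes w with |w| <= U
    z_max = U * max(np.abs(x).max(), np.abs(y).max())
    bs = np.linspace(-z_max, z_max, n_b)               # candidate biases b
    best = 0.0
    for w in ws:
        # rows index biases, columns index samples
        ex = sigmoid(w * x[None, :] - bs[:, None]).mean(axis=1)
        ey = sigmoid(w * y[None, :] - bs[:, None]).mean(axis=1)
        best = max(best, float(np.max(np.abs(ex - ey))))
    return best

rng = np.random.default_rng(0)
x = rng.standard_normal(500)
y = rng.standard_normal(500) + 1.0
print(empirical_stv(x, x), empirical_stv(x, y))
```

Because \(0\le \sigma \le 1\), the value never exceeds the TV distance, in line with Lemma 1; a larger radius U makes \(\sigma (wx-b)\) a sharper step and moves the value toward a KS-type distance.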

Some examples of STV distance are shown below.

Example 1

The total variation distance is expressed by \(\textrm{STV}_{L_0,\varvec{1}}\).

Example 2

The STV distance with the identity function \(\sigma =\textrm{id}\) reduces to the IPM defined by the function set \({\mathcal {U}}\), because the bias b cancels in \({\mathbb {E}}_P[u-b]-{\mathbb {E}}_Q[u-b]\). The MMD [61] is expressed by the STV distance with \({\mathcal {U}}={\mathcal {H}}_1\) and \(\sigma =\textrm{id}\). The 1-Wasserstein distance corresponds to the STV distance with \({\mathcal {U}}=\{f:{\mathcal {X}}\rightarrow {\mathbb {R}}\,:\,f\text { is 1-Lipschitz continuous}\}\) and \(\sigma =\textrm{id}\).
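For \(\sigma =\textrm{id}\) and \({\mathcal {U}}={\mathcal {H}}_1\), the supremum equals the RKHS distance between kernel mean embeddings, \(\Vert {\mathbb {E}}_P[k(X,\cdot )]-{\mathbb {E}}_Q[k(X,\cdot )]\Vert \). A minimal sketch for two empirical distributions, assuming one-dimensional data and a Gaussian kernel with bandwidth h (both illustrative choices):

```python
import numpy as np

def gauss_kernel(a, b, h=1.0):
    """k(a_i, b_j) = exp(-(a_i - b_j)^2 / (2 h^2)) for 1-D samples."""
    return np.exp(-(a[:, None] - b[None, :]) ** 2 / (2.0 * h**2))

def mmd_biased(x, y, h=1.0):
    """MMD between the empirical distributions of x and y:
    sqrt(mean k(x, x') + mean k(y, y') - 2 mean k(x, y))."""
    val = (gauss_kernel(x, x, h).mean() + gauss_kernel(y, y, h).mean()
           - 2.0 * gauss_kernel(x, y, h).mean())
    return float(np.sqrt(max(val, 0.0)))   # clip tiny negative round-off

rng = np.random.default_rng(0)
x = rng.standard_normal(300)
print(mmd_biased(x, x), mmd_biased(x, x + 3.0))
```

This V-statistic is exactly the MMD between the two empirical distributions; it is a biased estimator of the population MMD.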

The authors of [30] revealed the robustness of the Wasserstein-based estimator called Wasserstein-GAN. As for the robustness of the MMD-based method, i.e., MMD-GAN, the authors of [31] obtained negative results based on a theoretical analysis under a strong assumption and on numerical experiments. Although the STV distance with an RKHS is similar to the MMD, a significant difference is that the STV distance uses a non-linear function \(\sigma \) and an RKHS ball \({\mathcal {H}}_U\) with a variable radius. As a result, the STV-based method recovers robustness. Section 4 and subsequent sections discuss this in more detail.

Example 3

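Let \({\mathcal {U}}\subset {L_0}\) be a function set. The STV distance with \({\mathcal {U}}\) and \(\sigma =\varvec{1}\) is the generalized Kolmogorov–Smirnov distance [32],

\[\textrm{STV}_{{\mathcal {U}},\varvec{1}}(P,Q)=\sup _{u\in {\mathcal {U}},\,b\in {\mathbb {R}}}\bigl |P(\{x:\,u(x)\ge b\})-Q(\{x:\,u(x)\ge b\})\bigr |.\]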

When \(\sigma \) is a cumulative distribution function of a probability distribution, the STV distance, \(\textrm{STV}_\sigma \), is the smoothed generalized Kolmogorov–Smirnov distance [32].

Let us consider some basic properties of the STV distance.

Lemma 1

For the STV distance, the non-negativity, \(\textrm{STV}(P,Q)\ge 0\), and the triangle inequality, \(\textrm{STV}(P,Q)\le \textrm{STV}(P,R)+\textrm{STV}(R,Q)\) hold for \(P,Q,R\in {\mathcal {P}}\). When \(\sigma \) satisfies \(0\le \sigma \le 1\), the inequality \(\textrm{STV}_{\sigma }(P,Q)\le \textrm{TV}(P,Q)\) holds.

We omit the proof, since it is straightforward.

Let us consider the following assumptions.

- Assumption (A): The function set \({\mathcal {U}}\subset L_0\) satisfies \({\mathcal {U}}=-{\mathcal {U}}\), i.e., \({\mathcal {U}}\) is closed under negation.
- Assumption (B): The function \(\sigma (z)\) is continuous and strictly monotone increasing. In addition, \(\displaystyle \lim _{z\rightarrow -\infty }\sigma (z)=0, \lim _{z\rightarrow \infty }\sigma (z)=1\) and \(\sigma (z)+\sigma (-z)=1, z\in {\mathbb {R}}\) hold.

Under Assumption (B), the STV distance is regarded as a class of the smoothed generalized Kolmogorov–Smirnov distance [32].

We show some properties of the STV distance under the above assumptions.

Lemma 2

Under Assumption (A), the following equality holds,

for \(P,Q\in {\mathcal {P}}\), i.e., one can drop the modulus sign.

Lemma 3

Under Assumptions (A) and (B), it holds that

for \(P,Q\in {\mathcal {P}}\).

Let us consider the STV distance such that \({\mathcal {U}}\) is given by the RKHS \({\mathcal {H}}\) or its subset \({\mathcal {H}}_U\). When \({\mathcal {X}}\) is compact and the RKHS is dense in the set of all continuous functions on \({\mathcal {X}}\) with respect to the supremum norm, the RKHS is called a universal RKHS [66]. It is well known that the Gaussian kernel induces a universal RKHS on compact subsets of \({\mathbb {R}}^d\).

Lemma 4

Suppose that \({\mathcal {H}}\) is a universal RKHS. Under Assumptions (A) and (B), \(\textrm{STV}_{{\mathcal {H}},\sigma }\) equals the TV distance. Furthermore, for \({\mathcal {H}}_U=\{f\in {\mathcal {H}}:\,\Vert f\Vert \le U\}\), the equality

\[\lim _{U\rightarrow \infty }\textrm{STV}_{{\mathcal {H}}_U,\sigma }(P,Q)=\textrm{TV}(P,Q)\]

holds for \(P,Q\in {\mathcal {P}}\).

3.2 Gap between STV distance and TV distance

One can quantitatively evaluate the difference between the TV distance and STV distance. First of all, let us define the decay rate of the function \(\sigma \).

Definition 2

Let \(\sigma \) be a function satisfying Assumption (B). If there exists a function \(\lambda (c)\) such that \(\lim _{c\rightarrow \infty }\lambda (c)=0\) and

\[\sup _{t>1}\,(t-1)\,\sigma (-c\log t)\le \lambda (c)\qquad (2)\]

hold for arbitrary \(c> C_0>0\), \(\lambda (c)\) is called the decay rate of \(\sigma \), where \(C_0\) is a positive constant.

Proposition 5

Suppose that Assumptions (A) and (B) hold and that the decay rate of \(\sigma \) is \(\lambda (c)\) for \(c>C_0>0\), i.e., (2) holds. For \(P,Q\in {\mathcal {P}}\), let \(\mu \) be a Borel measure such that \(P\ll \mu \) and \(Q\ll \mu \) hold. Let us define the function s(x) on \({\mathcal {X}}\) by \(s(x) = \log \frac{\frac{\textrm{d}{P}}{\textrm{d}{\mu }}(x)}{\frac{\textrm{d}{Q}}{\textrm{d}{\mu }}(x)}\), where \(\log \frac{a}{0}=\infty \) and \(\log \frac{0}{a}=-\infty \) for \(a>0\) and \(\frac{0}{0}=1\) by convention. Suppose \(s\in {\mathcal {U}}\oplus {\mathbb {R}}\). Then, for the STV distance with \(c\,{\mathcal {U}}, c>0\) and \(\sigma \), the inequality

holds for \(c> C_0\).

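Note that for any pair of probability distributions P and Q, there exists a measure \(\mu \) such that \(P\ll \mu \) and \(Q\ll \mu \); a simple example is \(\mu =P+Q\). The above proposition holds only for P and Q such that \(s\in {\mathcal {U}}\oplus {\mathbb {R}}\). Under mild assumptions, any pair of probability distributions in a statistical model satisfies this condition. For instance, for the kernel exponential family introduced in Sect. 3.3, where \(P_f\) has the density \(\exp (f-A(f))\) with respect to \(\mu \), the log density ratio of \(P_f\) and \(P_g\) is \(s=f-g-(A(f)-A(g))\in {\mathcal {H}}\oplus {\mathbb {R}}\).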

Remark 1

Let us consider the case of \(\textrm{TV}(P,Q)=1\). A typical example is a pair P and Q for which there exists a subset A such that \(P(A)=1\) and \(Q(A)=0\). In this case, \(s(x)=\infty \) (resp. \(s(x)=-\infty \)) for \(x\in {A}\) (resp. \(x\not \in {A}\)). If \({\mathcal {U}}\) includes such a function, we have \(\sigma (s(x)-b)=1\) for \(x\in A\) and \(\sigma (s(x)-b)=0\) otherwise, where \(\sigma (\pm \infty )\) is understood as the corresponding limit in Assumption (B). Hence, the STV distance coincides with the TV distance for P and Q.

Below, we show a lower bound on the decay rate and some examples.

Lemma 6

Under Assumption (B), the decay rate satisfies

The above lemma means that \(\lambda (c)\) is at least of order 1/c; that is, no function satisfying Assumption (B) has a decay rate that vanishes faster than 1/c.

Example 4

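For the sigmoid function \(\sigma (z)=1/(1+e^{-z}), z\in {\mathbb {R}}\), the decay rate is given by \(\lambda (c)=1/c\) for \(c>1\). Indeed, since \(\sigma (-c\log t)=1/(1+t^{c})\), Bernoulli's inequality \(t^{c}\ge 1+c(t-1)\) yields the inequality

\[(t-1)\,\sigma (-c\log t)=\frac{t-1}{1+t^{c}}\le \frac{1}{c},\]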

holds for \(t>1\) and \(c>1\). Combined with Lemma 6, this shows that the sigmoid function attains the lowest possible order of the decay rate. Likewise, the decay rate \(\lambda (c)\) of a function satisfying \(\sigma (-z) \asymp e^{-z}\) for \(z>0\) is of order 1/c.
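The sigmoid bound can also be checked numerically. The sketch below approximates \(\sup _{t>1}(t-1)\sigma (-c\log t)=\sup _{t>1}(t-1)/(1+t^c)\) by a maximum over a finite grid of t in (1, 50], which approximates the supremum from below, and compares it with 1/c. The grid bounds and the helper name are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sup_ratio_term(c, t_max=50.0, n=200_000):
    """Grid approximation of sup_{1 < t <= t_max} (t - 1) * sigmoid(-c log t)."""
    t = np.linspace(1.0, t_max, n)[1:]     # drop t = 1, where the term is 0
    return float(np.max((t - 1.0) * sigmoid(-c * np.log(t))))

for c in [2.0, 5.0, 10.0, 50.0]:
    s = sup_ratio_term(c)
    print(f"c={c:5.1f}  sup={s:.5f}  1/c={1.0 / c:.5f}  c*sup={c * s:.4f}")
```

The product \(c\times \sup \) stays below 1 yet does not vanish as c grows (it approaches roughly 0.28), consistent with a decay of exactly order 1/c. For c = 2 the maximizer is \(t=1+\sqrt{2}\), giving \(\sqrt{2}/(4+2\sqrt{2})\approx 0.207\).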

Example 5

For the function \(\sigma (-z)\asymp z^{-\beta } (z\rightarrow \infty )\) with a constant \(\beta >0\), we can prove that there is no finite decay rate. Indeed, for \(\sigma (-z)\asymp z^{-\beta }\), we have

In the proof of Proposition 5, the density ratios p/q and q/p are replaced with the variable t. When both density ratios are bounded above by a constant \(T_0>0\), the range of the supremum is restricted to \(1\le t\le T_0\). In such a case, the decay rate is \(\lambda (c)=\frac{1}{c^\beta }\sup _{1\le t\le T_0}\frac{t-1}{(\log {t})^\beta }\asymp 1/c^\beta \). Under this additional assumption, the decay rate can fall below the lower bound in Lemma 6.

3.3 STV distances on kernel exponential family

We use the STV distance for probability density estimation. Among the numerous models of probability densities, we focus on the exponential family and its kernel-based extension, called the kernel exponential family [35, 36]. The exponential family includes many important statistical models, and the kernel exponential family extends it naturally from finite to infinite dimensions while preserving computational feasibility. We consider the robust estimator based on the STV distance for the kernel exponential family.

Let \({\mathcal {H}}\) be the RKHS endowed with the kernel function k. The kernel exponential family \({\mathcal {P}}_{\mathcal {H}}\) is defined by

where A(f) is the log-partition function (cumulant generating function), \(A(f)=\log \int _{{\mathcal {X}}}e^{f(x)}\textrm{d}\mu \), and \(\mu \) is a Borel measure on \({\mathcal {X}}\).
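As a concrete illustration of the definition, the following Python sketch evaluates \(A(f)\) and \(p_f\) by numerical quadrature for an element \(f=\sum _i\alpha _i k(c_i,\cdot )\) of a Gaussian-kernel RKHS. The sample space \({\mathcal {X}}=[0,1]\), the Lebesgue base measure, the bandwidth, and the centers \(c_i\) are hypothetical choices. The point is only that \(p_f=\exp (f-A(f))\) integrates to one once \(A(f)\) is known; this is easy in one dimension but is exactly the normalization constant that becomes hard to compute in higher dimensions.

```python
import numpy as np

def gaussian_kernel(x, y, bandwidth=0.3):
    """Gram matrix k(x_i, y_j) = exp(-(x_i - y_j)^2 / (2 h^2)) for 1-D inputs."""
    return np.exp(-np.subtract.outer(x, y) ** 2 / (2.0 * bandwidth ** 2))

def trapezoid(values, grid):
    """Trapezoidal rule on an equally spaced grid."""
    dx = grid[1] - grid[0]
    return dx * (values.sum() - 0.5 * (values[0] + values[-1]))

# Hypothetical setup: X = [0, 1], mu = Lebesgue measure on X,
# and f = sum_i alpha_i k(c_i, .) in the Gaussian-kernel RKHS.
centers = np.array([0.2, 0.5, 0.8])
alpha = np.array([1.0, -0.5, 2.0])

grid = np.linspace(0.0, 1.0, 2001)
f_vals = gaussian_kernel(grid, centers) @ alpha      # f(x) on the grid

# Log-partition function A(f) = log int_X exp(f(x)) d mu(x).
A_f = np.log(trapezoid(np.exp(f_vals), grid))

# Density of P_f with respect to mu: p_f(x) = exp(f(x) - A(f)).
p_f = np.exp(f_vals - A_f)

# RKHS norm ||f||_H = sqrt(alpha^T K alpha), K = Gram matrix of the centers.
rkhs_norm = float(np.sqrt(alpha @ gaussian_kernel(centers, centers) @ alpha))
print(f"A(f) = {A_f:.4f}, ||f||_H = {rkhs_norm:.4f}")
```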

The following lemmas indicate the basic properties of the STV distance for the kernel exponential family. The proofs are shown in Appendix A.

Lemma 7

For \(P_f, P_g\in {\mathcal {P}}_{\mathcal {H}}\), \(\textrm{STV}_{{\mathcal {H}}_{U},\varvec{1}}(P_f, P_g)\) equals the TV distance \(\textrm{TV}(P_f, P_g)\) for any \(U>0\).

Lemma 8

Suppose Assumption (B). For \(P_f, P_g\in {\mathcal {P}}_{{\mathcal {H}}}\), we have

The convergence rate is uniformly given by

for any \(P_f,P_g\in {\mathcal {P}}_{{\mathcal {H}}}\) as long as \(U/\Vert f-g\Vert >C_0\), where \(\lambda (c),c>C_0\) is the decay rate of \(\sigma \).

Lemma 4 shows a result similar to the first part of the above lemma. In Lemma 8, the RKHS need not be universal.

When \(\sigma \) is the sigmoid function, we have \(\lambda (c)=1/c\) for \(c>1\). Thus,

holds for \(U>\Vert f-g\Vert \).

Remark 2

One can use distinct RKHSs for the STV and the probability model. Let \({\mathcal {H}}\) and \(\widetilde{{\mathcal {H}}}\) be RKHSs such that \({\mathcal {H}}\subset \widetilde{{\mathcal {H}}}\). Then, for \(P_f, P_g\in {\mathcal {P}}_{{\mathcal {H}}}\), it holds that \(\textrm{STV}_{\widetilde{{\mathcal {H}}}_{U},\varvec{1}}(P_f,P_g) = \textrm{TV}(P_f,P_g)\) and \(\lim _{U\rightarrow \infty }\textrm{STV}_{\widetilde{{\mathcal {H}}}_{U},\sigma }(P_f,P_g)= \textrm{TV}(P_f,P_g)\) under Assumption (B) for \(\sigma \).

Using the above lemma, one can approximate learning with the TV distance by learning with the STV distance, whose loss function does not involve the non-differentiable indicator function.
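The convergence in Lemma 8 can be observed numerically in the simplest case. The Python sketch below assumes that the STV distance is the supremum of \(|{\mathbb {E}}_P[\sigma (u(X)-b)]-{\mathbb {E}}_Q[\sigma (u(X)-b)]|\) over \(u\in {\mathcal {H}}_U\) and \(b\in {\mathbb {R}}\) (the form of the objective used in Sect. 4). It takes \(\mu =N(0,1)\) with the linear kernel, so that \(P_f=N(\theta ,1)\) for \(f(x)=\theta x\), and compares \(P_f=N(0,1)\) with \(P_g=N(1,1)\); these are hypothetical choices. The supremum is replaced by a grid search, so the printed values are lower approximations of the STV distance; they increase with U toward \(\textrm{TV}(P_f,P_g)=2\Phi (1/2)-1\approx 0.383\). Negative slopes are not searched because \(\sigma (-z)=1-\sigma (z)\) makes them redundant under the absolute value.

```python
import math
import numpy as np

def sigmoid(z):
    """Logistic function, written via tanh for numerical stability."""
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def normal_pdf(x, mean):
    return np.exp(-0.5 * (x - mean) ** 2) / math.sqrt(2.0 * math.pi)

# Quadrature grid and the two model members P_f = N(0, 1), P_g = N(1, 1).
x = np.linspace(-8.0, 9.0, 3401)
dx = x[1] - x[0]
density_gap = normal_pdf(x, 0.0) - normal_pdf(x, 1.0)

w_all = np.linspace(0.0, 50.0, 201)    # candidate slopes of u(x) = w x
t_grid = np.linspace(-1.0, 2.0, 101)   # candidate thresholds, b = w t

def stv_linear(U):
    """Grid lower approximation of STV_{H_U, sigma}(P_f, P_g), linear kernel."""
    best = 0.0
    for w in w_all[w_all <= U]:
        s = sigmoid(w * (x[None, :] - t_grid[:, None]))   # sigma(u(x) - b)
        gaps = (s * density_gap).sum(axis=1) * dx        # E_P - E_Q per b
        best = max(best, float(np.abs(gaps).max()))
    return best

tv = math.erf(0.5 / math.sqrt(2.0))    # TV(N(0,1), N(1,1)) = 2 Phi(1/2) - 1
stv_values = {U: stv_linear(U) for U in (1.0, 5.0, 50.0)}
for U, value in stv_values.items():
    print(f"U = {U:5.1f}: STV ~ {value:.4f}  (TV = {tv:.4f})")
```

Because the slope sets are nested in U, the grid values are non-decreasing in U, mirroring the monotone approach to the TV distance.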

4 Estimation with STV distance for kernel exponential family

We consider estimation of the probability density using the model \({\mathcal {P}}_{{\mathcal {H}}}\). We assume that i.i.d. samples are generated from a contaminated version of \(P_{f_0}\). For example, Huber contamination is expressed as the mixture of \(P_{f_0}\) and an outlier distribution Q:

where \(Q\in {\mathcal {P}}\) is an arbitrary distribution. Our target is to estimate \(P_{f_0}\in {\mathcal {P}}_{{\mathcal {H}}}\) from the data. For that purpose, there are numerous estimators [2, 28,29,30,31, 33, 34, 67]. In our paper, we assume the following condition for the contaminated distribution.

- Assumption (C): For the target distribution \(P_{f_0}\in {\mathcal {P}}_{{\mathcal {H}}}\) and the contamination rate \(\varepsilon \in (0,1)\), the contaminated distribution \(P_\varepsilon \) satisfies \(\textrm{TV}(P_{f_0}, P_\varepsilon )<\varepsilon \), and i.i.d. samples \(X_1,\ldots ,X_n\) are generated from \(P_\varepsilon \).

The Huber contamination (5) satisfies Assumption (C).
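A minimal Python sketch of the Huber model, assuming the hypothetical choices \(P_{f_0}=N(0,1)\), \(Q=N(5,1)\), and \(\varepsilon =0.1\). Since \(P_\varepsilon -P_{f_0}=\varepsilon (Q-P_{f_0})\), one has \(\textrm{TV}(P_{f_0},P_\varepsilon )=\varepsilon \,\textrm{TV}(P_{f_0},Q)\le \varepsilon \); the code draws contaminated samples and confirms this identity by quadrature.

```python
import math
import numpy as np

def sample_huber(n, eps, sample_p, sample_q, rng):
    """Draw n i.i.d. points from the Huber mixture (1 - eps) P + eps Q."""
    is_outlier = rng.random(n) < eps
    x = sample_p(n)
    x[is_outlier] = sample_q(int(is_outlier.sum()))
    return x, is_outlier

rng = np.random.default_rng(0)
eps = 0.1
x, is_outlier = sample_huber(
    10_000, eps,
    sample_p=lambda m: rng.normal(0.0, 1.0, m),   # P_{f_0} = N(0, 1)
    sample_q=lambda m: rng.normal(5.0, 1.0, m),   # outlier distribution Q = N(5, 1)
    rng=rng,
)

# TV(P, P_eps) = (1/2) int |p_eps - p|, which should equal eps * TV(P, Q).
grid = np.linspace(-10.0, 15.0, 25001)
dx = grid[1] - grid[0]
p = np.exp(-0.5 * grid ** 2) / math.sqrt(2 * math.pi)
q = np.exp(-0.5 * (grid - 5.0) ** 2) / math.sqrt(2 * math.pi)
p_eps = (1 - eps) * p + eps * q
tv_numeric = 0.5 * np.abs(p_eps - p).sum() * dx
tv_pq = math.erf(2.5 / math.sqrt(2.0))          # TV(N(0,1), N(5,1))
print(f"outlier fraction = {is_outlier.mean():.3f}, TV(P, P_eps) = {tv_numeric:.4f}")
```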

The model \({\mathcal {P}}_{{\mathcal {H}}}\) is regarded as a working hypothesis that explains the data-generating process. Suppose that, in an ideal situation, the data are generated from the “true distribution” \(P_0\), which may not be included in \({\mathcal {P}}_{{\mathcal {H}}}\). If data contamination occurs, \(P_0\) may shift to the contaminated distribution \(P_{\varepsilon }\). Assume that \(\min _{P\in {\mathcal {P}}_{{\mathcal {H}}}}\textrm{TV}(P,P_0)<\varepsilon \) and \(\textrm{TV}(P_0,P_{\varepsilon }) < \varepsilon \), and let \(P_{f_0}\in {\mathcal {P}}_{{\mathcal {H}}}\) satisfy \(\textrm{TV}(P_{f_0},P_0) < \varepsilon \). By the triangle inequality, \(\textrm{TV}(P_{f_0},P_{\varepsilon })<2\varepsilon \), so all the theoretical findings in this section hold for \(P_{f_0}\) with \(\varepsilon \) replaced by \(2\varepsilon \). Hence, the model \({\mathcal {P}}_{{\mathcal {H}}}\) need not contain the true distribution exactly. As a working hypothesis, however, an appropriate model \({\mathcal {P}}_{{\mathcal {H}}}\) must still be selected. For finite-dimensional models, robust model-selection methods have been studied in [68, 69]; this paper does not address the development of practical methods for robust model selection.

In the sequel, we consider the estimator using the STV distance, which is called STV learning. All proofs of theorems in this section are deferred to Appendix B.

4.1 Minimum STV distance estimators

For the RKHSs \({\mathcal {H}}\) and \(\widetilde{{\mathcal {H}}}\) such that \({\mathcal {H}}\subset \widetilde{{\mathcal {H}}}\) and \(\dim {\mathcal {H}}=d\le {\widetilde{d}}=\dim \widetilde{{\mathcal {H}}}<\infty \), let us consider the STV distance to obtain a robust estimator,

where \(P_n\) is the empirical distribution of the data. Note that \(\textrm{STV}_{\widetilde{{\mathcal {H}}}_U,\varvec{1}}(P_f, P_n)\) does not necessarily coincide with the TV distance between \(P_f\) and \(P_n\), since \(P_n\) usually does not belong to \({\mathcal {P}}_{{\mathcal {H}}}\). Computing \({\widehat{f}}\) is not computationally feasible in general. Nevertheless, let us consider the estimation accuracy of \({\widehat{f}}\). The TV distance between the target distribution and the estimated one is evaluated as follows.

Theorem 9

Assume Assumption (C). Suppose that the dimension \({\widetilde{d}}=\dim \widetilde{{\mathcal {H}}}\) is finite. The TV distance between the target distribution and the estimator \(P_{{\widehat{f}}}\) given by (6) is bounded above by

with probability greater than \(1-\delta \).

STV learning with \(\widetilde{{\mathcal {H}}}={\mathcal {H}}\) attains a smaller error bound, with \(\sqrt{d/n}\) in place of \(\sqrt{{\widetilde{d}}/n}\). In what follows, we assume \({\mathcal {H}}=\widetilde{{\mathcal {H}}}\).

Next, let us consider the estimator using \(\textrm{STV}_{{\mathcal {H}}_U,\sigma }\), where \(\sigma \) is the function satisfying Assumption (B):

A typical example of \(\sigma \) is the sigmoid function, which leads to a computationally tractable learning algorithm. The optimization problem can be written as the min–max problem,

When \({\mathcal {H}}\) is a finite-dimensional RKHS, the objective function \({\mathbb {E}}_{P_f}[\sigma (u(X)-b)]-{\mathbb {E}}_{P_n}[\sigma (u(X)-b)]\) is differentiable w.r.t. the finite-dimensional parameters of the model under mild assumptions.
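To make the estimator (7) concrete, the Python sketch below treats the one-dimensional normal location model \(P_f=N(f,1)\) with the linear kernel, so that \({\mathcal {H}}_U=\{u(x)=wx:|w|\le U\}\). Instead of gradient-based min–max optimization, it uses a brute-force grid over f, w, and the threshold \(t=b/w\), and it approximates \({\mathbb {E}}_{P_f}\) by Monte Carlo with fixed draws \(X=f+Z\). The contaminated data \(0.9N(0,1)+0.1N(10,1)\), the value U=16, and all grid sizes are hypothetical choices for illustration. The estimate is expected to stay well below the sample mean, which the outliers pull toward 1, although a residual bias remains, in line with the \(O(\varepsilon )\) term in the error bounds.

```python
import numpy as np

def sigmoid(z):
    return 0.5 * (1.0 + np.tanh(0.5 * z))

rng = np.random.default_rng(1)
n, eps = 1000, 0.1
# Hypothetical data: Huber mixture 0.9 N(0, 1) + 0.1 N(10, 1); target f_0 = 0.
outlier = rng.random(n) < eps
x = np.where(outlier, rng.normal(10.0, 1.0, n), rng.normal(0.0, 1.0, n))

# Monte Carlo draws for E_{P_f}: X = f + Z, Z ~ N(0, 1) (common random numbers).
z = rng.normal(0.0, 1.0, 4000)

U = 16.0
w_grid = np.array([1.0, 2.0, 4.0, 8.0, U])   # u(x) = w x with 0 < w <= U
t_grid = np.linspace(-3.0, 13.0, 81)          # thresholds; the bias is b = w t
f_grid = np.linspace(-0.5, 1.5, 41)           # candidate location parameters f

def sigma_means(points):
    """mean_k sigma(w (points_k - t)) for every (w, t) on the grid."""
    out = np.empty((w_grid.size, t_grid.size))
    for i, w in enumerate(w_grid):
        out[i] = sigmoid(w * (points[None, :] - t_grid[:, None])).mean(axis=1)
    return out

data_term = sigma_means(x)                    # E_{P_n}[sigma(u(X) - b)]

def stv_to_data(f):
    """Grid approximation of STV_{H_U, sigma}(P_f, P_n)."""
    model_term = sigma_means(f + z)           # E_{P_f}[sigma(u(X) - b)]
    return float(np.abs(model_term - data_term).max())

stv_curve = np.array([stv_to_data(f) for f in f_grid])
f_hat = float(f_grid[stv_curve.argmin()])
print(f"sample mean = {x.mean():.3f}, minimum-STV estimate = {f_hat:.3f}")
```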

In the same way as Theorem 9, we can see that the estimator (7) satisfies

with high probability. To guarantee the convergence of \(\textrm{TV}(P_{f_0}, P_{{\widehat{f}}})\), we need to control the bias term. For that purpose, we introduce regularization into the estimator (7).

4.2 Regularized STV learning

When we use the learning with the STV distance, the bias term appears in the upper bound of the estimation error. In order to control the bias term, let us consider the learning with regularization,

where f is restricted to \({\mathcal {H}}_r\) with a positive constant r that may depend on the sample size n. When the volume of \({\mathcal {X}}\), i.e., \(\int _{{\mathcal {X}}}\textrm{d}\mu \), is finite, the regularized \(\textrm{STV}\)-based learning,

leads to a solution that is similar to \({\widehat{f}}_r\). Indeed, under Assumption (B) for \(\sigma \), we have

where \(P_0=p_0\textrm{d}\mu \) is the uniform distribution on \({\mathcal {X}}\) with respect to \(\mu \). Therefore, we have \(\Vert {\widehat{f}}_{\textrm{reg},r}\Vert \le r\). The following theorem shows the estimation accuracy of regularized STV learning.

Theorem 10

Assume Assumption (C). Let \(\sigma \) be the sigmoid function, which satisfies Assumption (B). Suppose that the dimension \(d=\dim {\mathcal {H}}\) is finite. We assume that \(\Vert f_0\Vert \le r\) and \(U\ge 2r\). Then, the TV distance between the target distribution and the above regularized estimators is bounded above by

with probability greater than \(1-\delta \).

Let us consider the choice of r and U. If r/U is of the order \(O(\sqrt{d/n})\), the convergence rate of \(\textrm{TV}(P_{f_0}, P_{{\widehat{f}}_r})\) is \(O_p(\varepsilon +\sqrt{d/n})\). This is easily achieved by setting \(U=r\sqrt{n/d}\), with \(r=r_n\) any sequence increasing to infinity as \(n\rightarrow \infty \). On the other hand, a term of the order \(1/r^2\) appears in the upper bound of \(\textrm{TV}(P_{f_0}, P_{{\widehat{f}}_{\textrm{reg},r}})\). By setting \(r\ge n^{1/4}\) and \(U\ge r\sqrt{n}\), we find that \(\textrm{TV}(P_{f_0}, P_{{\widehat{f}}_{\textrm{reg},r}}) = O_p(\varepsilon +\sqrt{d/n})\). Therefore, with appropriate settings of U and r, both \(\textrm{TV}(P_{f_0}, P_{{\widehat{f}}_r})\) and \(\textrm{TV}(P_{f_0}, P_{{\widehat{f}}_{\textrm{reg},r}})\) attain the order \(\varepsilon +\sqrt{d/n}\).
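The parameter choices above amount to simple arithmetic, sketched below in Python for the hypothetical values n=10,000 and d=10. For \({\widehat{f}}_r\), taking \(U=r\sqrt{n/d}\) makes the bias term r/U exactly \(\sqrt{d/n}\); here \(r_n=\log n\) is just one example of a sequence increasing to infinity. For \({\widehat{f}}_{\textrm{reg},r}\), the smallest admissible choices \(r=n^{1/4}\) and \(U=r\sqrt{n}\) make both \(1/r^2\) and r/U at most \(\sqrt{d/n}\).

```python
import math

def u_for_unregularized(n, d, r):
    """U = r * sqrt(n / d), so that the bias term r / U equals sqrt(d / n)."""
    return r * math.sqrt(n / d)

def regularized_schedule(n):
    """Smallest admissible choices r = n^(1/4) and U = r * sqrt(n)."""
    r = n ** 0.25
    return r, r * math.sqrt(n)

n, d = 10_000, 10
rate = math.sqrt(d / n)                 # statistical rate sqrt(d/n)

r_n = math.log(n)                       # one example of r_n increasing to infinity
U = u_for_unregularized(n, d, r_n)
print(f"hat f_r:     r = {r_n:.2f}, U = {U:.1f}, r/U = {r_n / U:.4f}, sqrt(d/n) = {rate:.4f}")

r, U_reg = regularized_schedule(n)
print(f"hat f_reg,r: r = {r:.1f}, U = {U_reg:.0f}, 1/r^2 = {1 / r ** 2:.4f}, r/U = {r / U_reg:.4f}")
```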

Corollary 11

Assume the assumptions of Theorem 10 with \(d=\dim {\mathcal {H}}\). By setting \(r\ge n^{1/4}\) and \(U\ge r\sqrt{n}\), the inequalities

hold with high probability.

Furthermore, we consider the STV learning in which the constraint \(u\in {\mathcal {H}}_U\) in \(\textrm{STV}_{{\mathcal {H}}_U,\sigma }\) is replaced with the regularization term,

Let \({\check{u}}\in {\mathcal {H}}\) and \({\check{b}}\in {\mathbb {R}}\) be the optimal solution of the inner maximization problem for a fixed f. Then, we have

The first inequality is obtained by comparing \(u={\check{u}}\) and \(u=0\). In the same way as (10), the norm of \({\check{f}}_{\textrm{reg},r}\) is bounded above as follows.

Thus, we have \(\Vert {\check{f}}_{\textrm{reg},r}\Vert \le r\) and \(\Vert {\check{u}}\Vert \le U\).

Theorem 12

Assume Assumption (C). Let \(\sigma \) be the sigmoid function, which satisfies Assumption (B). Suppose that the dimension \(d=\dim {\mathcal {H}}\) is finite. We assume that \(\Vert f_0\Vert \le r\) and \(U\ge 2r\). The TV distance between the target distribution and the regularized estimator \({\check{f}}_{\textrm{reg},r}\) in (11) is bounded above as follows,

with probability greater than \(1-\delta \).

Corollary 13

Assume the assumptions of Theorem 12. The estimator \({\check{f}}_{\textrm{reg},r}\) with \(r\ge n^{1/4}\) and \(U=rn^{3/4}\) satisfies

with high probability for large n.

The regularization parameters \(r=n^{1/4}\) and \(U=n\) satisfy the assumption of the above corollary, since \(U=rn^{3/4}=n^{1/4}\cdot n^{3/4}=n\).

The above convergence analysis shows that the regularization for the “discriminator” \(u\in {\mathcal {H}}_U\) should be weaker than that for the “generator” \(f\in {\mathcal {H}}_r\), i.e., \(r<U\) is preferable to achieve a high prediction accuracy. This is because the bias term induced by STV distance is bounded above by r/U up to a constant factor. The standard theoretical analysis of the GAN does not take the bias of the surrogate loss into account. That is, the loss function used in the probability density estimation is again used to evaluate the estimation error.

In our setting, we assume that the expectation of \(\sigma (u(X)-b)\) for \(X\sim P_f\) is computed exactly. In Sect. 6, we consider approximating this expectation by the Monte Carlo method and evaluate the required Monte Carlo sample size.

4.3 Regularized STV learning for infinite-dimensional kernel exponential family

In this section, we consider the regularized STV learning for infinite dimensional RKHS. In the definition of the STV distance, suppose that the range of the bias term b is restricted to \(|{b}|\le U\) when we deal with infinite-dimensional models. For the kernel exponential family \({\mathcal {P}}_{{\mathcal {H}}}\) defined from the infinite-dimensional RKHS \({\mathcal {H}}\), we employ the regularized STV learning, (9), (10), and (11) to estimate the probability density \(p_{f_0}\) using contaminated samples.

Theorem 14

Assume Assumption (C). Let \(\sigma \) be the sigmoid function, which satisfies Assumption (B). Suppose \(\sup _{x}k(x,x)\le 1\). Then, for \(\Vert f_0\Vert \le r\), the estimation error bounds of the regularized STV learning, (9), (10), and (11), are given by

with probability greater than \(1-\delta \).

The proof is shown in Appendix B.4.

In the upper bound of Theorem 14, the complexity term of the infinite-dimensional model is \(U/\sqrt{n}\), while that of the d-dimensional model is \(\sqrt{d/n}\). Balancing this term against the bias term of the order r/U yields the optimal order of U.

Corollary 15

Assume the assumption of Theorem 14. Then,

hold with high probability, where poly-log factors are omitted in \(\textrm{TV}(P_{f_0}, P_{{\widehat{f}}_r})\).

Remark 3

Using the localization technique introduced in Chapter 14 of [70], one can obtain a more refined convergence rate for infinite-dimensional exponential families. Suppose that the kernel function \(k(x,x')\) is expanded as \(k(x,x')=\sum _{i=1}^{\infty }\mu _i \phi _i(x)\phi _i(x')\), where \(\phi _i,i=1,2,\ldots \) form an orthonormal basis of the space of square-integrable functions \(L^2(P_{f_0})\), and that \(\mu _i\) is of the order \(1/i^p,\,i=1,2,\ldots \). Using Theorem 14.20 in [70] with a minor modification, we find that

with probability greater than \(1-\exp \{-cn\delta _n^2\}\), where c is a positive constant. Setting \(U=n^{\frac{p}{4p+6}}\) for a fixed r, we have \(\textrm{TV}(P_{f_0}, P_{{\widehat{f}}_r})\lesssim \varepsilon + n^{-\frac{p}{4p+6}}\) with probability greater than \(1-\exp \{-cn^{\frac{3}{2p+3}}\}\).

5 Accuracy of parameter estimation

This section is devoted to studying the estimation accuracy in the parameter space. The proofs are deferred to Appendix C.

5.1 Estimation error in RKHS

So far, we considered the estimation error in terms of the TV distance. In this section, we derive the estimation error in the RKHS. Such an evaluation corresponds to the estimation error of the finite-dimensional parameter in the Euclidean space. A lower bound of the TV distance is shown in the following lemma.

Lemma 16

For the RKHS \({\mathcal {H}}\) with the kernel function \(k:{\mathcal {X}}\times {\mathcal {X}}\rightarrow {\mathbb {R}}\), we assume \(\sup _{x\in {\mathcal {X}}}k(x,x)\le 1\). Suppose that \(\int _{{\mathcal {X}}}\textrm{d}\mu =1\). Then, for \(f,g\in {\mathcal {H}}_r\), it holds that

where \(P_0\) is the probability measure \(p_f\textrm{d}\mu \) with \(f=0\), i.e., the uniform distribution \(p_0=1\) on \({\mathcal {X}}\) w.r.t. the measure \(\mu \).

For the RKHS \({\mathcal {H}}\), define

As shown in Lemma 16, for a fixed r, the RKHS-norm distance \(\Vert f-g\Vert \) is bounded above by the total variation distance divided by \(\xi ({\mathcal {H}})\), up to a factor depending on r.

We consider the estimation error in the RKHS norm. Let \({\mathcal {H}}_1,{\mathcal {H}}_2,\ldots ,{\mathcal {H}}_d,\ldots \) be a sequence of RKHSs such that \(\dim {\mathcal {H}}_d=d\). Our goal is to prove that the estimation error of the estimator \({\widehat{f}}_r\) in (9) for the statistical model \({\mathcal {P}}_{{\mathcal {H}}_{d,r}}\) is of the order \(\varepsilon +\sqrt{d/n}\), where \({\mathcal {H}}_{d,r}=({\mathcal {H}}_{d})_r=\{f\in {\mathcal {H}}_d\,:\,\Vert f\Vert \le r\}\).

Corollary 17

For a positive constant r, let us consider the finite-dimensional statistical model \({\mathcal {P}}_{{\mathcal {H}}_{d,r}},\, d=1,2,\ldots \) such that \(\inf _{d\in {\mathbb {N}}}\xi ({\mathcal {H}}_d)>0\). Assume Assumption (C) with \({\mathcal {H}}={\mathcal {H}}_{d,r}\) and Assumption (B) with the sigmoid function \(\sigma \). Suppose that \(f_0\in {\mathcal {H}}_{d,r}\). Then, the estimation error of the estimator \({\widehat{f}}_r\) in (9) with \(U\gtrsim \sqrt{n}\) is

with high probability.

The proof is shown in Appendix C.2.

When \(\xi ({\mathcal {H}}_d)\) is not bounded below by a positive constant, the estimation accuracy is of the order \(\varepsilon +\frac{1}{\xi ({\mathcal {H}}_d)}\sqrt{\frac{d}{n}}\); that is, the dependence on the dimension d becomes \(\sqrt{d}/\xi ({\mathcal {H}}_d)\), which grows faster than \(\sqrt{d}\).

If an upper bound on \(\Vert f_0\Vert \) is unknown, r is treated as a regularization parameter depending on n. In such a case, the coefficient of \(\varepsilon \) depends on n and goes to infinity as \(n\rightarrow \infty \). In theoretical analyses, an upper bound on \(\Vert f_0\Vert \) is often assumed to be known, especially for covariance matrix estimation [5, 29, 30, 51].

Let us construct an example of the sequence \({\mathcal {H}}_1,{\mathcal {H}}_2,\ldots \) satisfying the assumption of Corollary 17. Let \(\{t_j(x)\}_{j=1}^{\infty }\) be orthonormal functions that are orthogonal to the constant function under \(\mu \), i.e.,

for \(i,j=1,2,\ldots \). For a positive decreasing sequence \(\{\lambda _k\}_{k=1}^{\infty }\) such that \(\lambda _\infty :=\lim _{k\rightarrow \infty }\lambda _k>0\), define the sequence of finite-dimensional RKHSs, \({\mathcal {H}}_1, {\mathcal {H}}_2,\ldots ,{\mathcal {H}}_d,\ldots \) by

where the inner product \(\langle f,g\rangle \) for \(f=\sum _j\alpha _j t_j\) and \(g=\sum _j\beta _j t_j\) is defined by \(\langle f,g\rangle =\sum _{i}\alpha _i\beta _i/\lambda _i\). Here, \(t_1,\ldots ,t_d\) are the sufficient statistics of \({\mathcal {P}}_{{\mathcal {H}}_d}\). Then,

Example 6

The construction of the RKHS sequence allows a distinct sample space for each d. Let \(\mu _d\) be the d-dimensional standard normal distribution on \({\mathbb {R}}^d\). For \({\varvec{x}}=(x_1,\ldots ,x_d)\), define \(t_i({\varvec{x}})=x_i\), and let \(\lambda _i=1\) for all i. Then, \({\mathcal {H}}_d\) is the RKHS of the linear kernel \(k({\varvec{x}},{\varvec{z}})=\sum _{i=1}^{d}\lambda _i t_i({\varvec{x}})t_i({\varvec{z}})={\varvec{x}}^T{\varvec{z}}\) for \({\varvec{x}},{\varvec{z}}\in {\mathbb {R}}^d\), and the corresponding statistical model is the multivariate normal distribution \(N_d({\varvec{f}},I_d)\) with the parameter \({\varvec{f}}\in {\mathbb {R}}^d\).
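The construction above can also be checked numerically for a choice not taken from the text: \(\mu =N(0,1)\) on \({\mathbb {R}}\), \(t_j=\textrm{He}_j/\sqrt{j!}\) with the probabilists' Hermite polynomials \(\textrm{He}_j\), which are orthonormal in \(L^2(\mu )\) and orthogonal to the constant \(\textrm{He}_0=1\), and the hypothetical weights \(\lambda _j=1+1/j\), which decrease to \(\lambda _\infty =1>0\). The Python sketch below verifies orthonormality with Gauss–Hermite quadrature and checks the reproducing property \(\langle k(x,\cdot ),f\rangle =f(x)\) under the inner product \(\langle f,g\rangle =\sum _i\alpha _i\beta _i/\lambda _i\).

```python
import math
import numpy as np
from numpy.polynomial import hermite_e as He

def basis(x, d):
    """Columns t_j(x) = He_j(x) / sqrt(j!) for j = 1..d; shape (len(x), d)."""
    cols = []
    for j in range(1, d + 1):
        coef = np.zeros(j + 1)
        coef[j] = 1.0                                    # selects He_j
        cols.append(He.hermeval(x, coef) / math.sqrt(math.factorial(j)))
    return np.stack(cols, axis=1)

d = 5
lam = 1.0 + 1.0 / np.arange(1, d + 1)     # hypothetical weights, decreasing to 1

def kernel(x, z):
    """k_d(x, z) = sum_{j <= d} lambda_j t_j(x) t_j(z)."""
    return (basis(x, d) * lam) @ basis(z, d).T

# Gauss-HermiteE quadrature integrates against exp(-x^2/2); rescale to N(0, 1).
nodes, weights = He.hermegauss(40)
weights = weights / math.sqrt(2.0 * math.pi)
T = basis(nodes, d)
gram = T.T @ (weights[:, None] * T)        # E[t_i t_j], should be the identity
mean_t = weights @ T                        # E[t_j], should be zero

# Reproducing property for f = sum_j alpha_j t_j: the function k(x0, .) has
# coefficients lambda_j t_j(x0), so <k(x0, .), f> = sum_j t_j(x0) alpha_j = f(x0).
alpha = np.array([0.5, -1.0, 0.2, 0.0, 0.3])
x0 = np.array([0.7])
t_x0 = basis(x0, d)[0]
inner = float(np.sum((lam * t_x0) * alpha / lam))
f_x0 = float(t_x0 @ alpha)
print(f"max |gram - I| = {np.abs(gram - np.eye(d)).max():.1e}")
print(f"<k(x0,.), f> = {inner:.4f}, f(x0) = {f_x0:.4f}, k(x0,x0) = {kernel(x0, x0)[0, 0]:.4f}")
```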

Remark 4

For the infinite dimensional RKHS \({\mathcal {H}}\), typically \(\xi ({\mathcal {H}})=0\) holds. For example, the kernel function is defined as \(k(x,x')=\sum _{i=1}^{\infty }\lambda _i e_i(x)e_i(x')\) in the same way as above, where \(\{\lambda _i\}\) is a positive decreasing sequence such that \(\lambda _i\rightarrow 0\) as \(i\rightarrow \infty \). Hence, we have \(\xi ({\mathcal {H}})\le \int |\sqrt{\lambda _i}e_i(x)|^2\textrm{d}\mu =\lambda _i\rightarrow 0\) for \(i\rightarrow \infty \). Lemma 16 does not provide a meaningful upper bound of the estimation error in such an infinite-dimensional RKHS.

5.2 Robust estimation of normal distribution

Let us consider the robust estimation of parameters for the normal distribution.

First of all, we prove that regularized STV learning provides a robust estimator of the mean vector in the multi-dimensional normal distribution with the identity covariance matrix. For \({\mathcal {X}}={\mathbb {R}}^d\), let us define \(\textrm{d}\mu ({\varvec{x}})=C_d e^{-\Vert {\varvec{x}}\Vert _2^2/2}\textrm{d}{{\varvec{x}}}\) for \({\varvec{x}}\in {\mathbb {R}}^d\), where \(C_d\) is the normalizing constant depending on the dimension d such that \(\int _{{\mathbb {R}}^d}\textrm{d}\mu ({\varvec{x}})=1\). Let \({\mathcal {H}}\) be the RKHS with the kernel function \(k({\varvec{x}},{\varvec{z}})={\varvec{x}}^T{\varvec{z}}\) for \({\varvec{x}},{\varvec{z}}\in {\mathbb {R}}^d\). For the functions \(f({\varvec{x}})={\varvec{x}}^T{\varvec{f}}\) and \(g({\varvec{x}})={\varvec{x}}^T{\varvec{g}}\), the inner product in the RKHS is \(\langle f, g\rangle ={\varvec{f}}^T{\varvec{g}}\). The probability density of the d-dimensional multivariate normal distribution with the identity covariance matrix, \(N_d({\varvec{f}},I)\), with respect to the measure \(\mu \) is given by \(p_f({\varvec{x}})=\exp \{{\varvec{x}}^T{\varvec{f}}-\Vert {\varvec{f}}\Vert _2^2/2\}\). Corollary 11 guarantees that the estimated mean vector \(\widehat{{\varvec{f}}}_{\textrm{reg},r}\) by the regularized STV-learning satisfies

A lower bound of the TV distance between the d-dimensional normal distributions, \(N_d({\varvec{\mu }}_1,I)\) and \(N_d({\varvec{\mu }}_2,I)\), is presented in [71],

Therefore, we have

for small \(\varepsilon \) and large n. Although Corollary 17 provides the same conclusion, the above argument does not require \(\Vert {\varvec{f}}_0\Vert _2\) to be bounded. As shown in [5], a lower bound of the estimation error under the contaminated distribution is

Therefore, the estimator \(\widehat{{\varvec{f}}}_{\textrm{reg},r}\) attains the minimax optimal rate.

Next, let us consider the estimator of the covariance matrix for the multivariate normal distribution with mean zero, \(N_d(\varvec{0},\Sigma )\). For \({\mathcal {X}}={\mathbb {R}}^d\), let us define \(\textrm{d}\mu ({\varvec{x}})=C_d e^{-\Vert {\varvec{x}}\Vert _2^2/2}\textrm{d}{{\varvec{x}}}\) for \({\varvec{x}}\in {\mathbb {R}}^d\) as above. Let \({\mathcal {H}}\) be the RKHS with the kernel function \(k({\varvec{x}},{\varvec{z}})=({\varvec{x}}^T{\varvec{z}})^2\) for \({\varvec{x}},{\varvec{z}}\in {\mathbb {R}}^d\). Let \(f({\varvec{x}})={\varvec{x}}^T F {\varvec{x}}\) and \(g({\varvec{x}})={\varvec{x}}^T G{\varvec{x}}\) be quadratic functions defined by symmetric matrices F and G. Their inner product in the RKHS is given by \(\langle f,g\rangle =\textrm{Tr}F^TG\). The probability density w.r.t. \(\mu \) is defined by \(p_f({\varvec{x}})=\exp \{-{\varvec{x}}^T F {\varvec{x}}/2-A(F)\}\) for \(f({\varvec{x}})={\varvec{x}}^T F{\varvec{x}}\), which leads to the statistical model of the normal distribution \(N_d(\varvec{0},(I+F)^{-1})\). Let \(P_{f_0}\) be the normal distribution \(N_d(\varvec{0}, \Sigma _0)\) and \(P_{{\widehat{f}}}\) be the estimated distribution \(N_d(\varvec{0}, \widehat{\Sigma })\) using the regularized STV learning. Here, the estimated function \({\widehat{f}}({\varvec{x}})={\varvec{x}}^T {\widehat{F}}{\varvec{x}}\) in the RKHS provides the estimator of the covariance matrix \(\widehat{\Sigma } = (I+{\widehat{F}})^{-1}\). Then,

holds with high probability. As shown in [71], a lower bound on the TV distance between \(N_d(\varvec{0},\Sigma _0)\) and \(N_d(\varvec{0},\Sigma _1)\) is given by

where \(\Vert \cdot \Vert _{\textrm{op}}\) is the operator norm defined as the maximum singular value and \(\Vert \cdot \Vert _{\textrm{F}}\) is the Frobenius norm. If \(\varepsilon +\sqrt{d^2/n}\lesssim 1\), we have

where \(\Vert \Sigma _0\Vert _{\textrm{op}}\) is regarded as a constant. [29] proved that the GAN-based estimator \(\widehat{\Sigma }\) attains the following error bound in terms of the operator norm,

The relationship between the Frobenius norm and the operator norm leads to the inequality,

A naive application of the result in [29] therefore introduces an extra \(\sqrt{d}\) factor into the \(O(\varepsilon )\) term of the Frobenius-norm error.
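The following Python sketch checks two facts used in this subsection for a hypothetical 4×4 matrix F. First, with \(\mu =N(\varvec{0},I)\) and the density \(p_f({\varvec{x}})=\exp \{-{\varvec{x}}^TF{\varvec{x}}/2-A(F)\}\) given above, one has \(A(F)=-\frac{1}{2}\log \det (I+F)\) and \(P_f=N_d(\varvec{0},(I+F)^{-1})\), which the code confirms by Monte Carlo. Second, \(\Vert D\Vert _{\textrm{op}}\le \Vert D\Vert _{\textrm{F}}\le \sqrt{d}\,\Vert D\Vert _{\textrm{op}}\) for any d×d matrix D; the right-hand inequality is where the \(\sqrt{d}\) factor comes from when an operator-norm bound is converted into a Frobenius-norm bound.

```python
import numpy as np

d = 4
# Hypothetical symmetric F with I + F positive definite (tridiagonal example).
F = 0.2 * np.eye(d) + 0.1 * (np.eye(d, k=1) + np.eye(d, k=-1))
Sigma = np.linalg.inv(np.eye(d) + F)                  # covariance of P_f
A_exact = -0.5 * np.linalg.slogdet(np.eye(d) + F)[1]  # A(F) = -1/2 log det(I + F)

# Monte Carlo check under mu = N(0, I): E[exp(-X^T F X / 2)] = det(I + F)^(-1/2),
# and reweighting mu-samples by exp(-X^T F X / 2) gives covariance (I + F)^(-1).
rng = np.random.default_rng(0)
X = rng.normal(size=(200_000, d))
w = np.exp(-0.5 * np.einsum("ni,ij,nj->n", X, F, X))
A_mc = np.log(w.mean())
Sigma_mc = (X * w[:, None]).T @ X / w.sum()

# Operator versus Frobenius norm for the deviation D = Sigma - I.
D = Sigma - np.eye(d)
op_norm = np.linalg.norm(D, 2)
fro_norm = np.linalg.norm(D, "fro")
print(f"A(F): exact {A_exact:.4f}, Monte Carlo {A_mc:.4f}")
print(f"||D||_op = {op_norm:.4f}, ||D||_F = {fro_norm:.4f}, "
      f"sqrt(d) ||D||_op = {np.sqrt(d) * op_norm:.4f}")
```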

As with the estimation of the mean vector, the estimator \(\widehat{\Sigma }\) is minimax optimal. Indeed, the following theorem holds. The proof is given in Appendix C.3.

Theorem 18

Let us consider the statistical model \({\mathcal {N}}_R=\{N_d(\varvec{0}, \Sigma ):\,\Sigma \in {\mathfrak {T}}_R\}\), where \({\mathfrak {T}}_R=\{\Sigma \in {\mathbb {R}}^{d\times d}:\,\Sigma \succ {O},\, \Vert \Sigma \Vert _{\textrm{op}}\le 1+R\}\) for a positive constant \(R>1/2\). Then,

holds for \(\varepsilon \le 1/2\). The above lower bound is also valid for the unconstrained model, i.e., \(R=\infty \).

The covariance estimator based on STV learning attains the minimax optimal rate for the Frobenius norm, while optimality in the operator norm has been studied in several works [5, 29, 30]. Table 1 summarizes the theoretical results of some of these works.

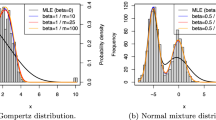

Let us show simple numerical results to confirm the feasibility of our method. We compare robust estimators for the mean vector and the full covariance matrix under Huber contamination. The expectation in the loss function is computed by sampling from the normal distribution.

For the mean vector of the multivariate normal distribution, we use the model \(p_f({\varvec{x}})=\exp \{{\varvec{x}}^T{\varvec{f}}-\Vert {\varvec{f}}\Vert _2^2/2\}\) and the RKHS \({\mathcal {H}}_U\) endowed with the linear kernel. The data are generated from \(0.9 N_d(\varvec{0}, I)+0.1 N_d(5\cdot {\varvec{e}},I)\), and the target is to estimate the mean vector of \(N_d(\varvec{0}, I)\), where \({\varvec{e}}\) is the d-dimensional vector \((1,1,\ldots ,1)^T\in {\mathbb {R}}^d\). We compare the estimation accuracy of our method with that of the component-wise median, the GAN-based estimator using the Jensen–Shannon loss, and the minimum TV distance estimator. For our method, we used the regularized STV-based estimator (11), solving the min–max optimization problem by gradient descent/ascent. The regularization parameters are set to \(1/U^2=10^{-4}\) and \(1/r^2=3\times 10^{-5}\). The results are presented in Tables 2 and 3. As shown in the theoretical analysis in [5], the component-wise median is sub-optimal. The TV-based estimator performs poorly, partly because its optimization problem is difficult to solve. The GAN-based method and our method, both smoothed variants of the TV-based estimator, provide reasonable results.
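The sub-optimality of the component-wise median can be seen with a short simulation of the contamination model above (\(\varepsilon =0.1\), outliers at \(5\cdot {\varvec{e}}\)). The sample size n=1000 and the dimensions are hypothetical choices, and none of the paper's reported results are reproduced here. Every coordinate's median is shifted by roughly the same amount, so the \(\ell _2\) error grows like \(\sqrt{d}\) times a constant, whereas the minimax rate carries no \(\sqrt{d}\) factor on \(\varepsilon \).

```python
import numpy as np

def contaminated_sample(n, d, eps, shift, rng):
    """n draws from (1 - eps) N_d(0, I) + eps N_d(shift * e, I), e = (1, ..., 1)."""
    x = rng.normal(size=(n, d))
    is_outlier = rng.random(n) < eps
    x[is_outlier] += shift          # move whole rows to the outlier component
    return x

rng = np.random.default_rng(0)
n, eps = 1000, 0.1
median_error = {}
for d in (10, 50):
    x = contaminated_sample(n, d, eps, 5.0, rng)
    median_error[d] = float(np.linalg.norm(np.median(x, axis=0)))  # target mean is 0
    print(f"d = {d}: l2 error = {median_error[d]:.3f}, "
          f"error / sqrt(d) = {median_error[d] / np.sqrt(d):.3f}")
```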

For the estimation of the full covariance matrix of the multivariate normal distribution, we use the model \(p_f({\varvec{x}})=\exp \{{\varvec{x}}^T F {\varvec{x}}-A(F)\}\) and the RKHS \({\mathcal {H}}_U\) with the quadratic kernel. The data are generated from \(0.8 N_d(\varvec{0},\Sigma )+0.2 N_d(6\cdot {\varvec{e}}, \Sigma )\), where \(\Sigma _{ij}=2^{-|{i-j}|}\). We compared the STV-based estimator with the estimator based on Kendall’s rank correlation coefficient [72] and the GAN-based method [29]. We used the regularized STV-based estimator (11), solving the min–max optimization problem by gradient descent/ascent. The regularization parameters are set to \(1/U^2=10^{-4}\) and \(1/r^2=10^{-4}\). The results are presented in Table 4. Overall, the GAN-based estimator outperforms the other methods. This is because optimization techniques for GAN-based estimators using deep neural networks are far more developed than those for the STV-based estimator. In the STV-based method, the optimization algorithm sometimes converges to a sub-optimal solution, even though the objective function is smooth. Developing an efficient algorithm for the STV-based method is important future work.

6 Approximation of expectation and learning algorithm

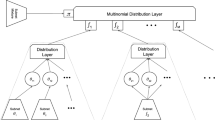

Let us consider how to solve the min–max problem of regularized STV learning. The optimization problem is given by

where \(\sigma \) is the sigmoid function. For optimization problems on an infinite-dimensional RKHS, the representer theorem usually reduces the problem to a finite-dimensional one. However, the representer theorem does not apply to the above optimization problem, since the expectation w.r.t. \(P_f, f\in {\mathcal {H}}_r\) appears; that is, the loss function may depend on the values f(x) at all \(x\in {\mathcal {X}}\). Even for a finite-dimensional RKHS, computing the expectation is often intractable. Here, we use the importance sampling method to overcome this difficulty.

We approximate the expectation w.r.t. \(P_f\) by a sample mean over \(Z_1,\ldots ,Z_\ell \sim q\), where q is an arbitrary density function such that sampling from q and computing the density q(z) are feasible. A simple example is \(q=p_0\), i.e., the uniform distribution on \({\mathcal {X}}\) w.r.t. the base measure \(\mu \). Let us define \(\sigma _X:=\sigma (u(X)-b)\). The expectation \({\mathbb {E}}_{X\sim P_{f}}[\sigma (u(X)-b)]\) is approximated by \({\bar{\sigma }}_\ell \),

As an approximation of \(X\sim P_f\), we employ the probability distribution on \(Z_1,\ldots ,Z_\ell \) defined by

Then, an approximation of the estimator \({\widehat{f}}_r\) is given by the minimizer of \(\textrm{STV}_{{\mathcal {H}}_U,\sigma }(P_n,{\widehat{P}}_f)\), i.e.,

The approximation, \({\widetilde{f}}_r\), is usable regardless of the dimensionality of the model \({\mathcal {H}}\). Let us evaluate the error bound of the approximate estimator \(P_{{\widetilde{f}}_r}\).
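The displayed formulas for \({\bar{\sigma }}_\ell \) and \({\widehat{P}}_f\) are not reproduced here. A standard way to avoid the normalization constant is self-normalized importance sampling with weights proportional to \(e^{f(Z_j)}/q(Z_j)\), in which \(A(f)\) cancels; we assume this matches the construction above up to notation. The Python sketch uses the hypothetical setting \(\mu =N(0,1)\), \(q=p_0\) (so the \(Z_j\) are draws from \(\mu \)), \(f(x)=\theta x\) (so that \(P_f=N(\theta ,1)\)), and \(u(x)=2x\), and compares the estimate with direct sampling from \(P_f\).

```python
import numpy as np

def sigmoid(z):
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def snis_expectation(f, u, b, z, log_q=None):
    """Self-normalized importance-sampling estimate of E_{P_f}[sigma(u(X) - b)].

    z holds draws Z_1..Z_ell from a proposal q, and log_q is the log-density of q
    with respect to mu (None means q = p_0 = 1, i.e. the Z_j are draws from mu).
    The normalizer A(f) cancels after normalizing the weights, so it is never computed.
    """
    log_w = f(z) if log_q is None else f(z) - log_q(z)
    weights = np.exp(log_w - log_w.max())   # subtract the max to avoid overflow
    weights /= weights.sum()                # point masses of hat P_f on the Z_j
    return float(weights @ sigmoid(u(z) - b))

theta, b = 1.0, 1.0

def f(x):
    return theta * x        # with mu = N(0, 1), P_f = N(theta, 1)

def u(x):
    return 2.0 * x          # a hypothetical discriminator

rng = np.random.default_rng(0)
z = rng.normal(0.0, 1.0, 200_000)            # Z_j ~ p_0, i.e. draws from mu
estimate = snis_expectation(f, u, b, z)
direct = float(sigmoid(u(rng.normal(theta, 1.0, 200_000)) - b).mean())
print(f"importance sampling: {estimate:.4f}, direct sampling: {direct:.4f}")
```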

Theorem 19

Assume Assumption (C). Suppose that \(\sup _{x\in {\mathcal {X}}}k(x,x)\le K^2\). Let us consider the approximate estimator \(P_{{\widetilde{f}}_r}\) obtained by the regularized STV learning using \(\textrm{STV}_{{\mathcal {H}}_U,\sigma }\), where \(\sigma \) is the sigmoid function satisfying Assumption (B) and the constraint \(|{b}|\le U\) is imposed in the STV distance. Then, the estimation error of \(P_{{\widetilde{f}}_r}\) is

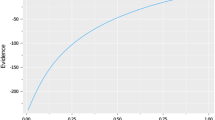

with probability greater than \(1-\delta \), where \(\textrm{C}_{{\mathcal {H}}_U}=UK\) for the infinite-dimensional \({\mathcal {H}}_U\) and \(\textrm{C}_{{\mathcal {H}}_U}=\sqrt{d}\) for the finite-dimensional \({\mathcal {H}}_U\) with \(d=\dim {\mathcal {H}}\).

For the finite-dimensional RKHS \({\mathcal {H}}\) with the dimension d endowed with the bounded kernel, the convergence rate of \(P_{{\widetilde{f}}}\) with \(U=r\sqrt{n}\) and \(\ell =n^2 r^2 e^{2Kr}\) attains

We expect that a tighter bound for the approximation will lead to a more reasonable sample size such as \(\ell =O(n)\).
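The Monte Carlo sample size \(\ell =n^2r^2e^{2Kr}\) grows exponentially in Kr, which is the practical consequence of the \(e^{Kr}\) term in Theorem 19. The short Python sketch below tabulates \(\ell \) and \(U=r\sqrt{n}\) for the hypothetical values n=1000 and K=1.

```python
import math

def mc_sample_size(n, r, K):
    """Monte Carlo size ell = n^2 r^2 exp(2 K r) for the finite-dimensional case."""
    return n ** 2 * r ** 2 * math.exp(2.0 * K * r)

n, K = 1_000, 1.0
for r in (0.5, 1.0, 2.0, 4.0):
    print(f"r = {r}: U = r*sqrt(n) = {r * math.sqrt(n):.1f}, "
          f"ell = {mc_sample_size(n, r, K):.3e}")
```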

For the infinite-dimensional RKHS, the convergence rate of \(P_{{\widetilde{f}}}\) with \(\ell =n,\, U=n^{1/4},\, r=O(\log \log {n})\) attains the following bound

with high probability, where poly-log factors are omitted. Since the loss function of the approximate estimator depends on f only through \(f(Z_j),j=1,\ldots ,\ell \), the representer theorem applies to the computation of \({\widetilde{f}}_r\). Indeed, the optimal \({\widetilde{f}}_r\) is a linear combination of \(k(Z_j,\cdot ),j=1,\ldots ,\ell \), and the optimal u is a linear combination of \(k(Z_j,\cdot ),j=1,\ldots ,\ell \) and \(k(X_i,\cdot ),i=1,\ldots ,n\). Hence, the problem reduces to a finite-dimensional one.
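The finite-dimensional reduction can be sketched as follows, assuming a Gaussian kernel and random points in place of the data \(X_i\) and the importance samples \(Z_j\) (all hypothetical). Writing \(f=\sum _j\alpha _jk(Z_j,\cdot )\) and \(u=\sum _j\beta _jk(Z_j,\cdot )+\sum _i\gamma _ik(X_i,\cdot )\), every quantity the approximate objective needs, namely \(f(Z_j)\), \(u(Z_j)\), \(u(X_i)\), \(\Vert f\Vert ^2\), and \(\Vert u\Vert ^2\), is a Gram-matrix expression in the coefficients. The optimizer itself is not shown.

```python
import numpy as np

def gauss_gram(a, b, h=1.0):
    """Gram matrix with entries exp(-||a_i - b_j||^2 / (2 h^2))."""
    sq = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq / (2.0 * h ** 2))

rng = np.random.default_rng(0)
n, ell, dim = 50, 80, 2
X = rng.normal(size=(n, dim))      # stand-in for the data X_1..X_n
Z = rng.normal(size=(ell, dim))    # stand-in for the importance samples Z_1..Z_ell

K_ZZ, K_XZ, K_XX = gauss_gram(Z, Z), gauss_gram(X, Z), gauss_gram(X, X)

# Coefficients of f = sum_j alpha_j k(Z_j, .) and
# u = sum_j beta_j k(Z_j, .) + sum_i gamma_i k(X_i, .).
alpha = 0.1 * rng.normal(size=ell)
beta = 0.1 * rng.normal(size=ell)
gamma = 0.1 * rng.normal(size=n)

f_at_Z = K_ZZ @ alpha                   # f(Z_j): enters the importance weights
u_at_Z = K_ZZ @ beta + K_XZ.T @ gamma   # u(Z_j): enters the model term
u_at_X = K_XZ @ beta + K_XX @ gamma     # u(X_i): enters the data term

f_norm_sq = alpha @ K_ZZ @ alpha                                 # ||f||_H^2
u_norm_sq = (beta @ K_ZZ @ beta + 2.0 * beta @ K_XZ.T @ gamma
             + gamma @ K_XX @ gamma)                             # ||u||_H^2
print(f"||f||^2 = {f_norm_sq:.4f}, ||u||^2 = {u_norm_sq:.4f}")
```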

According to Theorem 19, the exponential term \(e^{Kr}\) appears in the upper bound, meaning that strong regularization is needed to suppress it. Recently, [49, 50] proposed methods for approximating the expectation in the penalized MLE for the kernel exponential family. Verifying whether similar methods are also effective for STV learning is an important issue.

7 Concluding remarks

Since [28] connected the classical robust estimation with GANs, computationally efficient robust estimators using deep neural networks have been proposed. Most existing works focus on the theoretical analysis of estimators for the normal mean and covariance matrix under various conditions for contaminated distributions.

In this paper, we studied the IPM-based robust estimator for the kernel exponential family, including infinite-dimensional models for probability densities. As a class of IPMs, we defined the STV distance. The relationship between the TV distance and the STV distance plays an important role in evaluating the estimation accuracy of the STV-based robust estimator. For the covariance matrix estimation of the multivariate normal distribution, we proved that the STV-based estimator is minimax optimal for the Frobenius norm, while existing estimators are minimax optimal for the operator norm. Furthermore, we proposed an approximate STV-based estimator using importance sampling to mitigate the computational difficulty. The framework studied in this paper can be regarded as a natural extension of existing IPM-based robust estimators for the multivariate normal distribution to more general statistical models with theoretical guarantees.

The computational efficiency and stability of STV learning leave room for improvement. Recent progress in robust statistics has also been made from the viewpoint of computational algorithms; see [6, 73]. Incorporating these results into our framework to improve the algorithms is an important research direction for practical applications.

Data availability

The dataset analyzed during the current study is available on GitHub, https://github.co.jp/.

References

Huber, P.J.: Robust estimation of a location parameter. Ann. Math. Stat. 35(1), 73–101 (1964)

Tukey, J.W.: Mathematics and the picturing of data. In: Proceedings of the International Congress of Mathematicians, vol. 2, pp. 523–531 (1975)

Hampel, F.R., Ronchetti, E.M., Rousseeuw, P.J., Stahel, W.A.: Robust Statistics: The Approach Based on Influence Functions. Wiley Series in Probability and Statistics, Wiley, New York (1986)

Donoho, D.L., Huber, P.J.: The notion of breakdown point. In: A Festschrift for Erich L. Lehmann, pp. 157–184 (1983)

Chen, M., Gao, C., Ren, Z.: Robust covariance and scatter matrix estimation under Huber’s contamination model. Ann. Stat. 46(5), 1932–1960 (2018)

Diakonikolas, I., Kamath, G., Kane, D.M., Li, J., Moitra, A., Stewart, A.: Robust estimators in high dimensions without the computational intractability. In: IEEE 57th Annual Symposium on Foundations of Computer Science, FOCS, pp. 655–664 (2016)

Lai, K.A., Rao, A.B., Vempala, S.S.: Agnostic estimation of mean and covariance. In: 2016 IEEE 57th Annual Symposium on Foundations of Computer Science (FOCS), pp. 665–674 (2016)

Donoho, D.L., Gasko, M.: Breakdown properties of location estimates based on halfspace depth and projected outlyingness. Ann. Stat. 20(4), 1803–1827 (1992)

Chen, Z., Tyler, D.E.: The influence function and maximum bias of Tukey’s median. Ann. Stat. 30(6), 1737–1759 (2002)

Zuo, Y., Serfling, R.: General notions of statistical depth function. Ann. Stat. 28(2), 461–482 (2000)

Yatracos, Y.G.: Rates of convergence of minimum distance estimators and Kolmogorov’s entropy. Ann. Stat. 13(2), 768–774 (1985)

Basu, A., Shioya, H., Park, C.: Statistical Inference: The Minimum Distance Approach. Monographs on Statistics and Applied Probability, Taylor & Francis, Florida (2010)

Basu, A., Harris, I.R., Hjort, N.L., Jones, M.C.: Robust and efficient estimation by minimising a density power divergence. Biometrika 85(3), 549–559 (1998)

Jones, M.C., Hjort, N.L., Harris, I.R., Basu, A.: A comparison of related density-based minimum divergence estimators. Biometrika 88(3), 865–873 (2001)

Fujisawa, H., Eguchi, S.: Robust parameter estimation with a small bias against heavy contamination. J. Multivar. Anal. 99(9), 2053–2081 (2008)

Kanamori, T., Fujisawa, H.: Robust estimation under heavy contamination using unnormalized models. Biometrika 102(3), 559–572 (2015)

Csiszár, I.: On topological properties of f-divergence. Studia Sci. Math. Hungar. 2, 329–339 (1967)

Bregman, L.M.: The relaxation method of finding the common point of convex sets and its application to the solution of problems in convex programming. USSR Comput. Math. Math. Phys. 7, 200–217 (1967)

Murata, N., Takenouchi, T., Kanamori, T., Eguchi, S.: Information geometry of \(U\)-Boost and Bregman divergence. Neural Comput. 16(7), 1437–1481 (2004)

Kanamori, T., Fujisawa, H.: Affine invariant divergences associated with composite scores and its applications. Bernoulli 20(4), 2278–2304 (2016)

Ali, S.M., Silvey, S.D.: A general class of coefficients of divergence of one distribution from another. J. R. Stat. Soc. B 28(1), 131–142 (1966)

Müller, A.: Integral probability metrics and their generating classes of functions. Adv. Appl. Probab. 29, 429–443 (1997)

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: Generative adversarial nets. In: Advances in Neural Information Processing Systems, vol. 27 (2014)

Nowozin, S., Cseke, B., Tomioka, R.: f-GAN: Training generative neural samplers using variational divergence minimization. In: Advances in Neural Information Processing Systems, vol. 29 (2016)

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V., Courville, A.C.: Improved training of Wasserstein GANs. In: Advances in Neural Information Processing Systems, vol. 30 (2017)

Li, C.-L., Chang, W.-C., Cheng, Y., Yang, Y., Póczos, B.: MMD GAN: towards deeper understanding of moment matching network. In: Advances in Neural Information Processing Systems, vol. 30 (2017)

Chérief-Abdellatif, B.-E., Alquier, P.: Finite sample properties of parametric MMD estimation: robustness to misspecification and dependence. Bernoulli 28(1), 181–213 (2022)

Gao, C., Liu, J., Yao, Y., Zhu, W.: Robust estimation and generative adversarial networks. In: International Conference on Learning Representations (2019)

Gao, C., Yao, Y., Zhu, W.: Generative adversarial nets for robust scatter estimation: a proper scoring rule perspective. J. Mach. Learn. Res. 21(160), 1–48 (2020)

Liu, Z., Loh, P.-L.: Robust W-GAN-based estimation under Wasserstein contamination. Inf. Inference J. IMA 12(1), 312–362 (2023)

Wu, K., Ding, G.W., Huang, R., Yu, Y.: On minimax optimality of GANs for robust mean estimation. In: AISTATS, vol. 108, pp. 4541–4551 (2020)

Zhu, B., Jiao, J., Jordan, M.I.: Robust estimation for non-parametric families via generative adversarial networks. In: 2022 IEEE International Symposium on Information Theory (ISIT), pp. 1100–1105 (2022). IEEE

Zhu, B., Jiao, J., Steinhardt, J.: Generalized resilience and robust statistics. Ann. Stat. 50(4), 2256–2283 (2022)

Zhu, B., Jiao, J., Tse, D.: Deconstructing generative adversarial networks. IEEE Trans. Inf. Theory 66(11), 7155–7179 (2020)

Fukumizu, K.: Infinite dimensional exponential families by reproducing kernel Hilbert spaces. In: 2nd International Symposium on Information Geometry and Its Applications (IGAIA 2005), pp. 324–333 (2005)

Sriperumbudur, B., Fukumizu, K., Gretton, A., Hyvärinen, A., Kumar, R.: Density estimation in infinite dimensional exponential families. J. Mach. Learn. Res. 18(57), 1–59 (2017)

Schölkopf, B., Smola, A.J.: Learning with Kernels. MIT Press, Cambridge, MA (2002)

Mohri, M., Rostamizadeh, A., Talwalkar, A.: Foundations of Machine Learning. MIT Press, London (2018)

Shalev-Shwartz, S., Ben-David, S.: Understanding Machine Learning: From Theory to Algorithms. Cambridge University Press, New York, NY (2014)

Gutmann, M., Hyvärinen, A.: Noise-contrastive estimation of unnormalized statistical models, with applications to natural image statistics. J. Mach. Learn. Res. 13, 307–361 (2012)

Gutmann, M.U., Hirayama, J.-i.: Bregman divergence as general framework to estimate unnormalized statistical models. In: Proceedings of the Twenty-Seventh Conference on Uncertainty in Artificial Intelligence, pp. 283–290 (2011)

Geyer, C.: On the convergence of Monte Carlo maximum likelihood calculations. J. R. Stat. Soc. B 56, 261–274 (1994)

Uehara, M., Kanamori, T., Takenouchi, T., Matsuda, T.: A unified statistically efficient estimation framework for unnormalized models. In: International Conference on Artificial Intelligence and Statistics, pp. 809–819 (2020). PMLR

Arjovsky, M., Chintala, S., Bottou, L.: Wasserstein generative adversarial networks. In: Proceedings of the 34th International Conference on Machine Learning, vol. 70, pp. 214–223 (2017)

Hall, A.R.: Generalized Method of Moments. Advanced Texts in Econometrics, Oxford University Press, New York (2005)

Arora, S., Ge, R., Liang, Y., Ma, T., Zhang, Y.: Generalization and equilibrium in generative adversarial nets (GANs). In: Precup, D., Teh, Y.W. (eds.) Proceedings of the 34th International Conference on Machine Learning. Proceedings of Machine Learning Research, vol. 70, pp. 224–232 (2017)

Lehmann, E.L., Casella, G.: Theory of Point Estimation, 2nd edn. Springer, New York, NY (1998)

Amari, S., Nagaoka, H.: Methods of Information Geometry. Translations of Mathematical Monographs, vol. 191. Oxford University Press, New York (2000)

Dai, B., Dai, H., Gretton, A., Song, L., Schuurmans, D., He, N.: Kernel exponential family estimation via doubly dual embedding (2019)

Dai, B., Liu, Z., Dai, H., He, N., Gretton, A., Song, L., Schuurmans, D.: Exponential family estimation via adversarial dynamics embedding (2020)

Chen, M., Gao, C., Ren, Z.: A general decision theory for Huber’s \(\epsilon \)-contamination model. Electron. J. Stat. 10(2), 3752–3774 (2016)

Uppal, A., Singh, S., Póczos, B.: Robust density estimation under Besov IPM losses. Adv. Neural Inf. Process. Syst. 33, 5345–5355 (2020)

Besag, J.: Statistical analysis of non-lattice data. J. R. Stat. Soc. D 24, 179–195 (1975)

Hyvärinen, A.: Some extensions of score matching. Comput. Stat. Data Anal. 51, 2499–2512 (2007)

Parry, M., Dawid, A.P., Lauritzen, S.: Proper local scoring rules. Ann. Stat. 40, 561–592 (2012)

Dawid, A.P., Lauritzen, S., Parry, M.: Proper local scoring rules on discrete sample spaces. Ann. Stat. 40, 593–608 (2012)

Varin, C., Reid, N., Firth, D.: An overview of composite likelihood methods. Stat. Sin. 21, 5–42 (2011)

Lindsay, B.G., Yi, G.Y., Sun, J.: Issues and strategies in the selection of composite likelihoods. Stat. Sin. 21, 71–105 (2011)

Berlinet, A., Thomas-Agnan, C.: Reproducing Kernel Hilbert Spaces in Probability and Statistics. Springer, New York (2011)

Borgwardt, K.M., Gretton, A., Rasch, M.J., Kriegel, H.-P., Schölkopf, B., Smola, A.J.: Integrating structured biological data by kernel maximum mean discrepancy. Bioinformatics 22(14), 49–57 (2006)

Gretton, A., Borgwardt, K.M., Rasch, M.J., Schölkopf, B., Smola, A.: A kernel two-sample test. J. Mach. Learn. Res. 13(25), 723–773 (2012)

Shen, J., Qu, Y., Zhang, W., Yu, Y.: Wasserstein distance guided representation learning for domain adaptation. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 32 (2018)

Gao, R., Kleywegt, A.: Distributionally robust stochastic optimization with Wasserstein distance. Math. Oper. Res. 48(2), 603–655 (2023)

Lee, J., Raginsky, M.: Minimax statistical learning with Wasserstein distances. In: NeurIPS 2018, 3–8 December 2018, Montréal, Canada, pp. 2692–2701 (2018)

Courty, N., Flamary, R., Tuia, D., Rakotomamonjy, A.: Optimal transport for domain adaptation. IEEE Trans. Pattern Anal. Mach. Intell. 39(9), 1853–1865 (2017)

Steinwart, I., Christmann, A.: Support Vector Machines. Springer, New York (2008)

Huber, P.J.: Robust Statistics. Wiley, New York (1981)

Ronchetti, E.: Robustness aspects of model choice. Stat. Sin. 7, 327–338 (1997)

Sugasawa, S., Yonekura, S.: On selection criteria for the tuning parameter in robust divergence. Entropy 23(9), 1147 (2021)

Wainwright, M.J.: High-dimensional Statistics: A Non-asymptotic Viewpoint. Cambridge University Press, Cambridge (2019)

Devroye, L., Mehrabian, A., Reddad, T.: The total variation distance between high-dimensional Gaussians with the same mean. arXiv (2018)

Kendall, M.G.: A new measure of rank correlation. Biometrika 30(1/2), 81–93 (1938)

Diakonikolas, I., Kane, D.M.: Algorithmic High-Dimensional Robust Statistics. Cambridge University Press, New York (2023)

Vapnik, V.N.: Statistical Learning Theory. Wiley, New York (1998)

Ma, Z., Wu, Y.: Volume ratio, sparsity, and minimaxity under unitarily invariant norms. IEEE Trans. Inf. Theory 61(12), 6939–6956 (2015)

Acknowledgements

This work was partially supported by JSPS KAKENHI Grant Numbers 19H04071, 20H00576, and 23H03460.


Ethics declarations

Conflict of interest

The corresponding author states that there is no conflict of interest.

Additional information

Communicated by Noboru Murata.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A Proofs of proposition and lemmas in Sect. 3

A.1 Proof of Lemma 2

Proof

For any fixed \(u\in {\mathcal {F}}\), let us define \(\xi (b)\) and \({\bar{\xi }}(b)\) by

Note that \(\lim _{b\rightarrow \pm \infty }\xi (b)=\lim _{b\rightarrow \pm \infty }{\bar{\xi }}(b)=0\). We see that \(\xi (b)\) is left-continuous and \({\bar{\xi }}(b)\) is right-continuous. From the definition of the distribution function, \(\xi (b)={\bar{\xi }}(b)\) holds except at discontinuity points. Furthermore, for any \(b_0\in {\mathbb {R}}\), \(\lim _{b\rightarrow b_0\pm 0}\xi (b)=\lim _{b\rightarrow b_0\pm 0}{\bar{\xi }}(b)\) holds. Below, we prove \(\sup _b{\bar{\xi }}(b)=\sup _b\xi (b)\).

Suppose \(\lim _{n\rightarrow \infty }\xi (b_n)=\sup _b\xi (b)\). When \(b_n\) is bounded, there exists \(b_0\) such that a subsequence of \(b_n\) converges to \(b_0\). Then, \(\lim _{b\rightarrow b_0-0}\xi (b)=\sup _b\xi (b)\) or \(\lim _{b\rightarrow b_0+0}\xi (b)=\sup _b\xi (b)\) holds. This means that \((0\le ) \sup _b\xi (b)\le \sup _b{\bar{\xi }}(b)\). Suppose \(\lim _{n\rightarrow \infty }{\bar{\xi }}(b_n')=\sup _b{\bar{\xi }}(b)\). When \(b_n'\) is unbounded, \(\sup _b{\bar{\xi }}(b)=0\) should hold. Hence \(\sup _b\xi (b)=\sup _b{\bar{\xi }}(b)=0\). When \(b_n'\) is bounded, there exists \(b_0'\) such that a subsequence of \(b_n'\) converges to \(b_0'\). Then, \(\lim _{b\rightarrow b_0'-0}{\bar{\xi }}(b)=\sup _b{\bar{\xi }}(b)\) or \(\lim _{b\rightarrow b_0'+0}{\bar{\xi }}(b)=\sup _b{\bar{\xi }}(b)\) holds. This means that \(\sup _b{\bar{\xi }}(b)\le \sup _b\xi (b)\). As a result, when \(b_n\) is bounded, we have \(\sup _b{\bar{\xi }}(b)=\sup _b\xi (b)\).
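The key step of the argument, that a left-continuous function and its right-continuous counterpart share the same supremum, can be checked numerically in a simple discrete case. The Python snippet below is only an illustration. The functions `xi_pair` and `suprema`, and the definitions inside them (\(P(U\ge b)-Q(U\ge b)\) and \(P(U>b)-Q(U>b)\) for two empirical distributions of \(U=u(X)\)), are our own hypothetical choices and need not coincide with the definitions used in Lemma 2.

```python
import numpy as np


def xi_pair(b, u_p, u_q):
    """Hypothetical pair built from samples u_p ~ P and u_q ~ Q of U = u(X):
    xi(b)     = P(U >= b) - Q(U >= b)   (left-continuous in b)
    xi_bar(b) = P(U >  b) - Q(U >  b)   (right-continuous in b)"""
    xi = np.mean(u_p >= b) - np.mean(u_q >= b)
    xi_bar = np.mean(u_p > b) - np.mean(u_q > b)
    return xi, xi_bar


def suprema(u_p, u_q):
    """Both functions are step functions that jump only at atoms and are 0
    for b far to the left or right, so each supremum is max(0, value at an atom)."""
    atoms = np.unique(np.concatenate([u_p, u_q]))
    vals = np.array([xi_pair(b, u_p, u_q) for b in atoms])
    return max(0.0, vals[:, 0].max()), max(0.0, vals[:, 1].max())


rng = np.random.default_rng(1)
u_p = rng.integers(0, 5, size=50).astype(float)  # discrete values create jumps
u_q = rng.integers(0, 5, size=40).astype(float)
sup_xi, sup_xi_bar = suprema(u_p, u_q)
```

The two functions differ only at atoms of the distributions of U, yet their suprema agree, mirroring the argument in the proof.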