Abstract

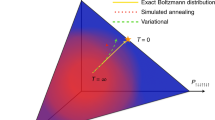

Markov chain Monte Carlo methods for sampling from complex distributions and estimating normalization constants often simulate samples from a sequence of intermediate distributions along an annealing path, which bridges between a tractable initial distribution and a target density of interest. Prior works have constructed annealing paths using quasi-arithmetic means, and interpreted the resulting intermediate densities as minimizing an expected divergence to the endpoints. To analyze these variational representations of annealing paths, we extend known results showing that the arithmetic mean over arguments minimizes the expected Bregman divergence to a single representative point. In particular, we obtain an analogous result for quasi-arithmetic means, when the inputs to the Bregman divergence are transformed under a monotonic embedding function. Our analysis highlights the interplay between quasi-arithmetic means, parametric families, and divergence functionals using the rho-tau representational Bregman divergence framework, and associates common divergence functionals with intermediate densities along an annealing path.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Change history

26 February 2024

A Correction to this paper has been published: https://doi.org/10.1007/s41884-024-00132-5

Notes

The identity dual representation leads to favorable properties for divergence minimization under linear constraints. Csiszár [20] characterizes Beta divergences as providing scale-invariant projection onto the set of positive measures satisfying expectation constraints. Naudts [48, Chap. 8] discusses related thermodynamic interpretations. Here, the Beta divergence is preferred over the \(\alpha \)-divergence, which induces escort expectations due to the deformed dual representation \(\tau ({\tilde{\pi }}) = \log _{1-q}{\tilde{\pi }}= \frac{1}{q}{\tilde{\pi }}({x})^{q} - \frac{1}{q}\) from Example 5 [48, 80].

References

Adlam, B., Gupta, N., Mariet, Z., Smith, J.: Understanding the bias-variance tradeoff of Bregman divergences. arXiv preprint arXiv:2202.04167 (2022)

Alemi, A., Poole, B., Fischer, I., Dillon, J., Saurous, R.A., Murphy, K.: Fixing a broken ELBO. In: International Conference on Machine Learning, pp. 159–168 (2018)

Amari, S.: Differential geometry of curved exponential families-curvatures and information loss. Ann. Stat. 13, 357–385 (1982)

Amari, S., Nagaoka, H.: Methods of Information Geometry, vol 191. American Mathematical Society, New York (2000)

Amid, E., Anil, R., Fifty, C., Warmuth, M.K.: Layerwise Bregman representation learning of neural networks with applications to knowledge distillation. In: Transactions on Machine Learning Research (2022)

Ay, N., Jost, J., Vân Lê, H., Schwachhöfer, L.: Information Geometry, vol. 64. Springer, New York (2017)

Banerjee, A., Merugu, S., Dhillon, I.S., Ghosh, J.: Clustering with Bregman divergences. J. Mach. Learn. Res. 6, 1705–1749 (2005)

Banerjee, A., Guo, X., Wang, H.: On the optimality of conditional expectation as a Bregman predictor. IEEE Trans. Inf. Theory 51(7), 2664–2669 (2005)

Basu, A., Harris, I.R., Hjort, N.L., Jones, M.: Robust and efficient estimation by minimising a density power divergence. Biometrika 85(3), 549–559 (1998)

Bercher, J.F.: A simple probabilistic construction yielding generalized entropies and divergences, escort distributions and \(q\)-Gaussians. Phys. A Stat. Mech. Appl. 391(19), 4460–4469 (2012)

Betancourt, M., Byrne, S., Livingstone, S., Girolami, M., et al.: Geometric foundations of Hamiltonian Monte Carlo. Bernoulli 23(4A), 2257–2298 (2017)

Blondel, M., Martins, A.F., Niculae, V.: Learning with Fenchel–Young losses. J. Mach. Learn. Res. 21(35), 1–69 (2020)

Brekelmans, R., Huang, S., Ghassemi, M., Steeg, G.V., Grosse, R.B., Makhzani, A.: Improving mutual information estimation with annealed and energy-based bounds. In: International Conference on Learning Representations (2022)

Brekelmans, R., Masrani, V., Wood, F., Ver Steeg, G., Galstyan, A.: All in the exponential family: Bregman duality in thermodynamic variational inference. In: Proceedings of the 37th International Conference on Machine Learning, JMLR.org, ICML’20 (2020)

Brekelmans, R., Nielsen, F., Galstyan, A., Steeg, G.V.: Likelihood ratio exponential families. In: NeurIPS Workshop on Information Geometry in Deep Learning. https://openreview.net/forum?id=RoTADibt26_ (2020)

Burbea, J., Rao, C.: Entropy differential metric, distance and divergence measures in probability spaces: a unified approach. J. Multivariate Anal. 12(4), 575–596 (1982). https://doi.org/10.1016/0047-259X(82)90065-3

Chatterjee, S., Diaconis, P.: The sample size required in importance sampling. Ann. Appl. Probab. 28(2), 1099–1135 (2018)

Cichocki, A., Amari, S.: Families of alpha- beta- and gamma-divergences: flexible and robust measures of similarities. Entropy 12(6), 1532–1568 (2010)

Cichocki, A., Cruces, S., Amari, S.: Generalized alpha–beta divergences and their application to robust nonnegative matrix factorization. Entropy 13(1), 134–170 (2011)

Csiszár, I.: Why least squares and maximum entropy? An axiomatic approach to inference for linear inverse problems. Ann. Stat. 19(4), 2032–2066 (1991)

Del Moral, P., Doucet, A., Jasra, A.: Sequential Monte Carlo samplers. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 68(3), 411–436 (2006)

Duane, S., Kennedy, A.D., Pendleton, B.J., Roweth, D.: Hybrid Monte Carlo. Phys. Lett. B 195(2), 216–222 (1987)

Earl, D.J., Deem, M.W.: Parallel tempering: theory, applications, and new perspectives. Phys. Chem. Chem. Phys. 7(23), 3910–3916 (2005)

Eguchi, S., Komori, O., Ohara, A.: Information geometry associated with generalized means. In: Information Geometry and Its Applications IV, pp. 279–295. Springer, New York (2016)

Eguchi, S., Komori, O.: Path connectedness on a space of probability density functions. In: International Conference on Geometric Science of Information, pp. 615–624 (2015)

Eguchi, S.: Second order efficiency of minimum contrast estimators in a curved exponential family. Ann. Stat. 4, 793–803 (1983)

Eguchi, S.: A differential geometric approach to statistical inference on the basis of contrast functionals. Hiroshima Math. J. 15(2), 341–391 (1985)

Eguchi, S.: Information geometry and statistical pattern recognition. Sugaku Expos. 19(2), 197–216 (2006)

Frigyik, B.A., Srivastava, S., Gupta, M.R.: Functional Bregman divergence and Bayesian estimation of distributions. IEEE Trans. Inf. Theory 54(11), 5130–5139 (2008)

Geist, M., Scherrer, B., Pietquin, O.: A theory of regularized Markov decision processes. In: International Conference on Machine Learning, PMLR, pp. 2160–2169 (2019)

Gelman, A., Meng, X.L.: Simulating normalizing constants: from importance sampling to bridge sampling to path sampling. Stat. Sci. 5, 163–185 (1998)

Goshtasbpour, S., Cohen, V., Perez-Cruz, F.: Adaptive annealed importance sampling with constant rate progress. In: International Conference on Machine Learning (2023)

Grasselli, M.R.: Dual connections in nonparametric classical information geometry. Ann. Inst. Stat. Math. 62(5), 873–896 (2010)

Grosse, R.B., Maddison, C.J., Salakhutdinov, R.R.: Annealing between distributions by averaging moments. In: Advances in neural information processing systems, pp. 2769–2777 (2013)

Hardy, G., Littlewood, J., Pólya, G.: Inequalities. Math. Gazette 37(321), 236–236 (1953)

Jarzynski, C.: Equilibrium free-energy differences from nonequilibrium measurements: a master-equation approach. Phys. Rev. E 56(5), 5018 (1997)

Jarzynski, C.: Nonequilibrium equality for free energy differences. Phys. Rev. Lett. 78(14), 2690 (1997)

Jaynes, E.T.: Information theory and statistical mechanics. Phys. Rev. 106(4), 620 (1957)

Kaniadakis, G., Scarfone, A.: A new one-parameter deformation of the exponential function. Phys. A Stat. Mech. Appl. 305(1–2), 69–75 (2002)

Knoblauch, J., Jewson, J., Damoulas, T.: Generalized variational inference: three arguments for deriving new posteriors. arXiv preprint arXiv:1904.02063 (2019)

Kolmogorov, A.N.: Sur la Notion de la Moyenne. G. Bardi, tip. della R. Accad. dei Lincei (1930)

Lin, J.: Divergence measures based on the Shannon entropy. IEEE Trans. Inf. Theory 37(1), 145–151 (1991)

Loaiza, G.I., Quiceno, H.R.: A Riemannian geometry in the \(q\)-exponential Banach manifold induced by \(q\)-divergences. In: Geometric Science of Information. First International Conference, GSI 2013, Paris, France, August 28–30, 2013. Proceedings, pp. 737–742. Springer, Berlin (2013)

Loaiza, G.I., Quiceno, H.: A \(q\)-exponential statistical Banach manifold. J. Math. Anal. Appl. 398(2), 466–476 (2013)

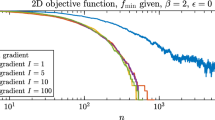

Masrani, V., Brekelmans, R., Bui, T., Nielsen, F., Galstyan, A., Steeg, G.V., Wood, F.: q-Paths: generalizing the geometric annealing path using power means. Uncertain. Artif. Intell. (2021)

Murata, N., Takenouchi, T., Kanamori, T., Eguchi, S.: Information geometry of U-boost and Bregman divergence. Neural Comput. 16(7), 1437–1481 (2004)

Naudts, J.: Estimators, escort probabilities, and phi-exponential families in statistical physics. arXiv preprint arXiv:math-ph/0402005 (2004)

Naudts, J.: Generalised Thermostatistics. Springer, New York (2011)

Naudts, J., Zhang, J.: Rho–tau embedding and gauge freedom in information geometry. Inf. Geom. 1(1), 79–115 (2018)

Neal, R.M.: MCMC using Hamiltonian dynamics. In: Handbook of Markov chain Monte Carlo, p. 113 (2011)

Neal, R.M.: Annealed importance sampling. Stat. Comput. 11(2), 125–139 (2001)

Nguyen, X., Wainwright, M.J., Jordan, M.I.: Estimating divergence functionals and the likelihood ratio by convex risk minimization. IEEE Trans. Inf. Theory 56(11), 5847–5861 (2010)

Nielsen, F., Nock, R.: On Rényi and Tsallis entropies and divergences for exponential families. arXiv preprint arXiv:1105.3259 (2011)

Nielsen, F.: An elementary introduction to information geometry. Entropy 22(10), 34 (2020)

Nielsen, F., Boltz, S.: The Burbea–Rao and Bhattacharyya centroids. IEEE Trans. Inf. Theory 57(8), 5455–5466 (2011)

Nock, R., Cranko, Z., Menon, A.K., Qu, L., Williamson, R.C.: \(f\)-GANs in an information geometric nutshell. In: Advances in Neural Information Processing Systems (2017)

Nock, R., Nielsen, F.: Fitting the smallest enclosing Bregman ball. In: European Conference on Machine Learning, pp. 649–656. Springer, New York (2005)

Nowozin, S., Cseke, B., Tomioka, R.: \(f\)-GAN: training generative neural samplers using variational divergence minimization. Neural Inf. Process. Syst. 29, 11 (2016)

Ogata, Y.: A Monte Carlo method for high dimensional integration. Numer. Math. 55(2), 137–157 (1989)

Pfau, D.: A Generalized Bias-Variance Decomposition for Bregman Divergences. Unpublished manuscript (2013)

Pistone, G., Sempi, C.: An infinite-dimensional geometric structure on the space of all the probability measures equivalent to a given one. Ann. Stat. 4, 1543–1561 (1995)

Poole, B., Ozair, S., Van Den Oord, A., Alemi, A., Tucker, G.: On variational bounds of mutual information. In: International Conference on Machine Learning, pp. 5171–5180 (2019)

Rényi, A.: On measures of entropy and information. In: Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability, Berkeley, Calif., pp. 547–561 (1961). https://projecteuclid.org/euclid.bsmsp/1200512181

Rossky, P.J., Doll, J., Friedman, H.: Brownian dynamics as smart Monte Carlo simulation. J. Chem. Phys. 69(10), 4628–4633 (1978)

Amari, S.: Integration of stochastic models by minimizing \(\alpha \)-divergence. Neural Comput. 19(10), 2780–2796 (2007)

Amari, S.: Information Geometry and Its Applications, vol. 194. Springer, New York (2016)

Sibson, R.: Information radius. Z. Wahrscheinlichkeitstheor. Verwandte Gebiete 14(2), 149–160 (1969)

Syed, S., Romaniello, V., Campbell, T., Bouchard-Côté, A.: Parallel tempering on optimized paths. In: International Conference on Machine Learning (2021)

Tishby, N., Pereira, F.C., Bialek, W.: The information bottleneck method. In: Allerton Conference on Communications, Control and Computing, pp. 368–377 (1999)

Tsallis, C.: Introduction to Nonextensive Statistical Mechanics: Approaching a Complex World. Springer, New York (2009)

Tsallis, C.: Possible generalization of Boltzmann–Gibbs statistics. J. Stat. Phys. 52(1–2), 479–487 (1988)

Van Erven, T., Harremoës, P.: Rényi divergence and Kullback–Leibler divergence. IEEE Trans. Inf. Theory 60(7), 3797–3820 (2014)

Vellal, A., Chakraborty, S., Xu, J.Q.: Bregman power k-means for clustering exponential family data. In: International Conference on Machine Learning, PMLR, pp. 22103–22119 (2022)

Welling, M., Teh, Y.W.: Bayesian learning via stochastic gradient Langevin dynamics. In: Proceedings of the 28th International Conference on Machine Learning (ICML-11), Citeseer, pp. 681–688 (2011)

Wong, T.K.L., Zhang, J.: Tsallis and Rényi deformations linked via a new \(\lambda \)-duality. arXiv preprint arXiv:2107.11925 (2021)

Xu, J., Lange, K.: Power k-means clustering. In: International Conference on Machine Learning, PMLR, pp. 6921–6931 (2019)

Zhang, J.: Divergence function, duality, and convex analysis. Neural Comput. 16(1), 159–195 (2004)

Zhang, J.: Nonparametric information geometry: from divergence function to referential-representational biduality on statistical manifolds. Entropy 15(12), 5384–5418 (2013)

Zhang, J.: On monotone embedding in information geometry. Entropy 17(7), 4485–4499 (2015)

Zhang, J., Matsuzoe, H.: Entropy, cross-entropy, relative entropy: deformation theory (a). Europhys. Lett. 134(1), 18001 (2021)

Ethics declarations

Conflict of interest

Frank Nielsen is currently a board member of the journal. He was not involved in the peer review or handling of the manuscript.

Additional information

Communicated by Jun Zhang.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised: the article was originally published without “Data Availability” and “Conflict of Interest”.

Appendices

Summary of Appendix

In Appendix A, we review annealed importance sampling as an example MCMC technique. In Appendix B, we prove our main result (Theorem 1). We discuss parametric Bregman divergences and annealing paths between deformed exponential families from our representational perspective in Appendix C, where the \(\rho \)-affine property plays a crucial role. In Appendix D, we review the Eguchi relations and information-geometric structures induced by the rho–tau Bregman divergence and rho–tau Bregman information functionals (see Table 2). Finally, in Appendix E, we prove Theorem 2 showing that quasi-arithmetic paths in the \(\rho \)-representation are geodesics with respect to affine connections induced by the rho–tau Bregman divergence.

A Annealed importance sampling

We briefly present annealed importance sampling (AIS) [51] as a representative example of an MCMC method where the choice of annealing path can play a crucial role [34, 45]. AIS relies on similar insights as the Jarzynski equality in nonequilibrium thermodynamics [36, 37], and may be used to estimate (log) normalization or partition functions or sample from complex distributions.

More concretely, consider an initial distribution \(\pi _0({x}) \propto {\tilde{\pi }}_0({x})\) which is tractable to sample and is often chosen to have normalization constant \(\mathcal {Z}_0= 1\). We are often interested in estimating the normalizing constant \(\mathcal {Z}_1 = \int {\tilde{\pi }}_1({x}) d{x}\) of a target distribution \(\pi _1({x}) \propto {\tilde{\pi }}_1({x})\), where only the unnormalized density \({\tilde{\pi }}_1\) is available. Since direct importance sampling with samples from \(\pi _0({x})\) may require prohibitive sample complexity to accurately estimate the normalization constant ratio \(\mathcal {Z}_1/\mathcal {Z}_0\) [13, 17], AIS decomposes the estimation problem into a sequence of easier subproblems using a path of intermediate distributions \(\{{\tilde{\pi }}_{\beta _t}({x})\}_{\beta _0=0}^{\beta _T=1}\) between the endpoints \({\tilde{\pi }}_0({x})\) and \({\tilde{\pi }}_1({x})\). Most often, the geometric averaging path is used,

$$\begin{aligned} {\tilde{\pi }}_{\beta _t}({x}) = {\tilde{\pi }}_0({x})^{1-\beta _t} \, {\tilde{\pi }}_1({x})^{\beta _t} . \end{aligned}$$

AIS proceeds by constructing a sequence of Markov transition kernels \({\mathcal {T}_t}({x}_{t+1}|{x}_{t})\) which leave \(\pi _{\beta _t}\) invariant, with \(\int \pi _{\beta _t}({x}_t) {\mathcal {T}_t}({x}_{t+1}|{x}_{t}) d{x}_t= \pi _{\beta _t} ({x}_{t+1})\). Commonly, this is achieved using kernels such as HMC or Langevin dynamics [50] which transform the samples, with Metropolis–Hastings accept-reject steps to ensure invariance. To interpret AIS as importance sampling in an extended state space [13, 51], we define the reverse kernel as \({\tilde{\mathcal {T}}_t}({x}_{t} | {x}_{t+1}) = \frac{\pi _{{\beta _t}}({x}_t){\mathcal {T}_t}({x}_{t+1} | {x}_{t}) }{\int \pi _{{\beta _t}}({x}_t) {\mathcal {T}_t}({x}_{t+1} | {x}_{t})d{x}_t}\). Using the invariance of \({\mathcal {T}_t}\), we observe that \(\pi _{{\beta _t}}({x}_{t+1}) {\tilde{\mathcal {T}}_t}({x}_{t} | {x}_{t+1}) = \pi _{{\beta _t}}({x}_t) {\mathcal {T}_t}({x}_{t+1} | {x}_{t})\).

To construct an estimator of \(\mathcal {Z}_1/\mathcal {Z}_0\) using AIS, we sample \({x}_0 \sim \pi _0({x})\), run the transition kernels in the forward direction to obtain samples \({x}_{1:T}\), and calculate the importance sampling weights along the path,

Note that we have used the above identity relating \({\mathcal {T}_t}\) and \({\tilde{\mathcal {T}}_t}\) in the second equality, and the definition of the geometric averaging path in the last equality.

Finally, it can be shown that \(w({x}_{0:T})\) provides an unbiased estimator of \(\mathcal {Z}_1/\mathcal {Z}_0\), with \(\mathbb {E}[w({x}_{0:T})] = \mathcal {Z}_1/\mathcal {Z}_0\) [51]. We can thus estimate the partition function ratio using the empirical average over K annealing chains, \(\mathcal {Z}_1/\mathcal {Z}_0 \approx \frac{1}{K} \sum _{k=1}^K w({x}_{0:T}^{(k)})\). We detail the complete AIS procedure in Alg. 1.
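As a rough illustration of the procedure described above, the following is a minimal sketch of AIS along the geometric averaging path for a one-dimensional toy problem. The endpoints (a standard normal \(\pi _0\) and an unnormalized Gaussian target), the random-walk Metropolis–Hastings kernel, the linear schedule, and all function names are assumptions chosen for illustration and do not come from the paper. The sketch also assumes the common convention of updating the weight before applying the kernel for the next temperature, which may differ from the indexing used in Alg. 1.

```python
import math
import random


def log_pi0(x):
    # Assumed initial distribution: normalized standard normal, so Z_0 = 1.
    return -0.5 * x * x - 0.5 * math.log(2.0 * math.pi)


def log_pi1_tilde(x, mean=2.0, std=0.5):
    # Assumed unnormalized target exp(-(x - mean)^2 / (2 std^2)); Z_1 = std * sqrt(2 pi).
    return -0.5 * ((x - mean) / std) ** 2


def log_path(x, beta):
    # Log of the geometric averaging path pi0^(1 - beta) * pi1_tilde^beta.
    return (1.0 - beta) * log_pi0(x) + beta * log_pi1_tilde(x)


def ais_log_weight(betas, rng, mh_step=0.5):
    x = rng.gauss(0.0, 1.0)  # exact sample from pi_0
    log_w = 0.0
    for beta_prev, beta in zip(betas[:-1], betas[1:]):
        # Weight update: ratio of consecutive unnormalized densities at the current state.
        log_w += log_path(x, beta) - log_path(x, beta_prev)
        # One random-walk Metropolis-Hastings step that leaves pi_beta invariant.
        proposal = x + mh_step * rng.gauss(0.0, 1.0)
        log_accept = log_path(proposal, beta) - log_path(x, beta)
        if math.log(1.0 - rng.random()) < log_accept:
            x = proposal
    return log_w


def log_mean_exp(values):
    # Numerically stable log of the empirical average of exp(values).
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values) / len(values))


rng = random.Random(0)
num_steps, num_chains = 100, 1000
betas = [t / num_steps for t in range(num_steps + 1)]
log_weights = [ais_log_weight(betas, rng) for _ in range(num_chains)]

log_Z_estimate = log_mean_exp(log_weights)            # estimate of log(Z_1 / Z_0)
log_Z_true = math.log(0.5 * math.sqrt(2.0 * math.pi))  # closed form for this toy target
```

Averaging the weights in log space avoids overflow when individual weights vary over many orders of magnitude. In this toy setting the estimate can be compared against the closed-form value stored in `log_Z_true`.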

AIS is considered among the gold standard methods for estimating normalization constants. Closely related MCMC methods involving path sampling [31] include Sequential Monte Carlo [21], which may involve resampling steps to prioritize higher-probability \({x}_t\), or parallel tempering [23], which runs T parallel sampling chains in order to obtain accurate samples from each \(\pi _{\beta _t}({x})\).

B Proof of Theorem 1

In the main text and below, we present and prove Theorem 1 in terms of scalar inputs and decomposable Bregman divergences. As we show in Appendix B.2 Theorem 3, a similar Bregman divergence-minimization interpretation of quasi-arithmetic means holds for vector-valued inputs, where the representation function is applied element-wise. Most commonly, vectorized divergences are constructed between parameter vectors \({\varvec{\theta }}\) of some (deformed) exponential family. However, we argue in Appendix C.1 that these cases are best understood using representations of unnormalized densities (as in Theorem 1) and the \(\rho \)-affine property of parametric families. Nevertheless, we provide a proof of Theorem 3 for completeness.

Theorem 1

(Rho–tau Bregman information) Consider a monotonic representation function \(\rho : {\mathcal {X}}_\rho \subset {\mathbb {R}}\rightarrow {\mathcal {Y}}_\rho \subset {\mathbb {R}}\) and a convex function \(f: {\mathcal {Y}}_\rho \rightarrow \mathbb {R}\). Consider discrete mixture weights \({\varvec{\beta }}= \{\beta _i \}_{i=1}^N\) over N inputs \({\varvec{{\tilde{\pi }}}}(x) = \{ {\tilde{\pi }}_i(x) \}_{i=1}^N\), \({\tilde{\pi }}_i(x) \in {\mathcal {X}}_\rho \), with \(\sum _i \beta _i = 1\). Finally, assume the expected value \(\mu _{\rho }({\varvec{{\tilde{\pi }}}}, {\varvec{\beta }}) :=\sum _{i=1}^N \beta _i ~ \rho ({\tilde{\pi }}_i(x)) \in \textrm{ri}({\mathcal {Y}}_\rho )\) is in the relative interior of the range of \(\rho \) for all \(x \in {\mathcal {X}}\). Then, we have the following results,

(i) For a given Bregman divergence \(D_{f}[\rho ({\tilde{\pi }}_a):\rho ({\tilde{\pi }}_b)]\) with generator \({\varPsi }_f\), the optimization

$$\begin{aligned} {\mathcal {I}_{{f,\rho }}} \big ( {{\varvec{{\tilde{\pi }}}}, {\varvec{\beta }}} \big )&:=\min \limits _\mu \sum \limits _{i=1}^N \, \beta _i \, D_{f}\left[ \rho ({\tilde{\pi }}_i) : \rho (\mu ) \right] \end{aligned}$$ (16)

has a unique minimizer given by the quasi-arithmetic mean with representation function \(\rho ({\tilde{\pi }})\)

$$\begin{aligned} \mu ^*_{\rho }({\varvec{{\tilde{\pi }}}}, {\varvec{\beta }}) = \rho ^{-1}\left( \sum \limits _{i=1}^N \beta _i \, \rho \big ( {\tilde{\pi }}_i \big ) \right) = \mathop {\mathrm {arg\,min}}\limits _{\mu } \sum \limits _{i=1}^N \, \beta _i \, D_{f}\left[ \rho ({\tilde{\pi }}_i) : \rho (\mu ) \right] . \end{aligned}$$ (17)

The arithmetic mean is recovered for \(\rho ({\tilde{\pi }}_i) = {\tilde{\pi }}_i\) and any f [7].

(ii) At this minimizing argument \(\mu _\rho ^*\), the value of the expected divergence (or right-hand side of Eq. (16)) is called the rho–tau Bregman information and is equal to a gap in Jensen’s inequality for mixture weights \({\varvec{\beta }}\), inputs \(\rho ({\varvec{{\tilde{\pi }}}}) = \{ \rho ({\tilde{\pi }}_i) \}_{i=1}^N\), and the convex functional \({\varPsi }_f[\rho _{\tilde{\pi }}] = \int f\big ( \rho _{\tilde{\pi }}({x}) \big ) d{x}\),

$$\begin{aligned} {\mathcal {I}_{{f,\rho }}} \big ( {{\varvec{{\tilde{\pi }}}}, {\varvec{\beta }}} \big ) = \sum \limits _{i=1}^N \beta _i \, {\varPsi _{f}}\big [ \rho ({{\tilde{\pi }}_i}) \big ] - {\varPsi _{f}}\big [ \rho (\mu ^*_{\rho }) \big ] . \end{aligned}$$ (18)

(iii) Using \(\mu \ne \mu ^*_{\rho }\) as the representative in Eq. (16), the suboptimality gap is a rho–tau Bregman divergence

$$\begin{aligned} D_{f}\big [ \rho \big ( \mu _\rho ^* \big ) : \rho \big ( \mu \big )\big ] = \sum \limits _{i=1}^N \beta _i ~ D_{f}\left[ \rho \big ( {\tilde{\pi }}_i \big ) : \rho \big ( \mu \big ) \right] - {\mathcal {I}_{{f,\rho }}}\big ( {\varvec{{\tilde{\pi }}}}, {\varvec{\beta }} \big ) , \end{aligned}$$ (19)

where \({\mathcal {I}_{{f,\rho }}}\big ( {\varvec{{\tilde{\pi }}}}, {\varvec{\beta }} \big )\) is evaluated at \(\mu _\rho ^*\) as in Eq. (18).
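The three claims of Theorem 1 can be checked numerically in the simplest scalar setting, where each input is a single positive number and the integral defining \({\varPsi }_f\) reduces to one evaluation of f. The sketch below assumes \(\rho = \log \) and \(f = \exp \), so that the quasi-arithmetic mean is the geometric mean. These choices, the input values, the weights, and the helper names are illustrative assumptions, not taken from the paper.

```python
import math


def bregman(f, f_prime, rho_a, rho_b):
    # Scalar Bregman divergence D_f[rho_a : rho_b] between represented inputs.
    return f(rho_a) - f(rho_b) - (rho_a - rho_b) * f_prime(rho_b)


# Assumed representation rho = log and generator f = exp (so tau = f' o rho is the identity).
rho, rho_inv = math.log, math.exp
f, f_prime = math.exp, math.exp

values = [0.5, 2.0, 4.0]   # hypothetical positive scalar inputs
weights = [0.2, 0.3, 0.5]  # mixture weights beta_i, summing to one


def expected_divergence(mu):
    # Objective of Eq. (16) for a candidate representative mu.
    return sum(b * bregman(f, f_prime, rho(v), rho(mu)) for b, v in zip(weights, values))


# (i) Quasi-arithmetic mean in the rho-representation (here, the geometric mean).
mu_star = rho_inv(sum(b * rho(v) for b, v in zip(weights, values)))
grid = [0.1 + 0.01 * k for k in range(1000)]
best_on_grid = min(grid, key=expected_divergence)

# (ii) The minimum value equals the Jensen gap of f in the rho-representation.
jensen_gap = sum(b * f(rho(v)) for b, v in zip(weights, values)) - f(rho(mu_star))

# (iii) The suboptimality gap of any other mu is a Bregman divergence from mu_star.
mu = 3.0
suboptimality_gap = expected_divergence(mu) - expected_divergence(mu_star)
divergence_to_mu = bregman(f, f_prime, rho(mu_star), rho(mu))
```

A brute-force grid search is used only as an independent check on the closed-form minimizer; it plays no role in the theorem itself.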

Proof

(ii): We first show that the optimal representative \(\mu ^{*} = \rho ^{-1}\big ( \sum _{i=1}^N \beta _i \, \rho ({\tilde{\pi }}_i)\big )\) yields a Jensen diversity in (ii), before proving this choice is the unique minimizing argument.

Expanding the expected divergence in Eq. (16) for \(\mu = {\mu }^*\), we have \(\sum _{i=1}^N \beta _i D_f [ \rho ({\tilde{\pi }}_i): \rho \big ( {\mu }^* \big ) ] = \sum _{i=1}^N \beta _i {\varPsi _{f}}[\rho ({\tilde{\pi }}_i)] - {\varPsi _{f}}[\rho ({\mu }^*)] - \int \big ( \sum _{i=1}^N \beta _i \rho ({\tilde{\pi }}_i) - \rho ({\mu }^*) \big ) \tau ({\mu }^*) d{x}\). Since \(\sum _i \beta _i \rho ({\tilde{\pi }}_i) = \rho ({\mu }^*)\), the final term vanishes to yield

$$\begin{aligned} \sum \limits _{i=1}^N \beta _i \, D_{f}\left[ \rho ({\tilde{\pi }}_i) : \rho ({\mu }^*) \right] = \sum \limits _{i=1}^N \beta _i \, {\varPsi _{f}}\big [ \rho ({\tilde{\pi }}_i) \big ] - {\varPsi _{f}}\big [ \rho ({\mu }^*) \big ] . \end{aligned}$$

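(i): For any other representative \(\mu \), we write the difference in expected divergence and use Eq. (51) to simplify,

$$\begin{aligned}&\sum \limits _{i=1}^N \beta _i \, D_{f}\left[ \rho ({\tilde{\pi }}_i) : \rho (\mu ) \right] - \sum \limits _{i=1}^N \beta _i \, D_{f}\left[ \rho ({\tilde{\pi }}_i) : \rho ({\mu }^*) \right] \\&\quad = {\varPsi _{f}}\big [ \rho ({\mu }^*) \big ] - {\varPsi _{f}}\big [ \rho (\mu ) \big ] - \int \big ( \rho ({\mu }^*) - \rho (\mu ) \big ) \tau (\mu ) d{x} \\&\quad = D_{f}\big [ \rho ({\mu }^*) : \rho (\mu ) \big ] , \end{aligned}$$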

where we note that \(\rho ({\mu }^*) = \sum _{i=1}^N \beta _i \rho ({\tilde{\pi }}_i)\). The rho-tau divergence is minimized if and only if \(\rho ({\mu }^*) = \rho (\mu )\) [77], thus proving (i).

(iii): Finally, we can express the suboptimality gap in Eq. (52) or rho–tau Bregman divergence in Eq. (54) as the gap in a conjugate optimization. Considering the conjugate expansion of \({\varPsi _{f}}[\rho ({{\mu }^*})]\), we have

for any choice of \(\tau ({\mu })\). This provides a lower bound on \({\varPsi }[\rho ({\mu }^*)]\), where the gap in the lower bound is the canonical form of the Bregman divergence. Indeed, substituting \({\varPsi ^*_{f^*}}[\tau ({\mu })] = \int \rho (\mu ({x})) \tau (\mu ({x})) d{x}- {\varPsi _{f}}[\rho ({\mu })]\) in Eq. (52), we have

\(\square \)

B.1 Interpretations of Theorem 1(iii)

Conjugate optimizations which treat f-divergences as a convex function of one argument are popular for providing variational lower bounds on divergences [52, 62] or min-max optimizations for adversarial training [56, 58]. Note, however, that this proof provides a variational upper bound on the Bregman information, which includes the Jensen–Shannon divergence (Example 3) and mutual information (Banerjee et al. [7, Ex. 6]) as examples. To our knowledge, this upper bound has not been used extensively in the literature.

The equality in Eq. (19) can also be interpreted as a generalized bias-variance tradeoff for Bregman divergences [1, 60].

B.2 Rho–tau Bregman information with vector-valued inputs

A more standard setting is to consider a finite-dimensional Bregman divergence over a vector of inputs, such as the natural parameters \({\varvec{\theta }}\) of a (deformed) exponential family \({\tilde{\pi }}_{{\varvec{\theta }}}^{(q)}({x}) = g({x})\exp _q \{ \langle {\varvec{\theta }}, {\varvec{T}}({x})\rangle \}\). However, we argue that this setting is best captured in our representational framework (see Appendix C), using \(\rho ({\tilde{\pi }}_{{\varvec{\theta }}}^{(q)}(x)) = \log _q \frac{{\tilde{\pi }}_{{\varvec{\theta }}}^{(q)}(x)}{g(x)} = \langle {\varvec{\theta }}, {\varvec{T}}({x})\rangle = \sum _{j=1}^d \theta ^j T^j(x)\) and the \(\rho \)-linearity of the density with respect to the appropriate base measure.

Nevertheless, we would also like to extend Theorem 1 to hold for N vector-valued, d-dimensional inputs.

Theorem 3

Consider a collection of inputs \({\varvec{u}} = \{ {\varvec{u}}_i \}_{i=1}^N\) where \({\varvec{u}}_{i} = \{u_{i}^1,..., u_{i}^j,..., u_{i}^d \}_{j=1}^d\). In this case, consider applying the monotonic representation function \(\rho : {\mathcal {X}}_\rho ^d \subset \mathbb {R}^d \rightarrow {\mathcal {Y}}_\rho ^d \subset \mathbb {R}^d\) elementwise \(\rho ({\varvec{u}}_i) :=\{\rho (u_{i}^1),..., \rho (u_{i}^j),..., \rho (u_{i}^d) \}_{j=1}^d\). For a convex generating function \(F: {\mathcal {Y}}_\rho ^d \subset \mathbb {R}^d \rightarrow \mathbb {R}\), define the Bregman divergence as

$$\begin{aligned} D_F\left[ \rho ({\varvec{u}}_a) : \rho ({\varvec{u}}_b) \right] = F\big ( \rho ({\varvec{u}}_a) \big ) - F\big ( \rho ({\varvec{u}}_b) \big ) - \big \langle \rho ({\varvec{u}}_a) - \rho ({\varvec{u}}_b) , \nabla _{\rho } F\big ( \rho ({\varvec{u}}_b) \big ) \big \rangle , \end{aligned}$$

where the inner product sums over dimensions \(1 \le j \le d\). Using a definition of the conjugate representation analogous to that in Sect. 2.2, we have \(\tau ({\varvec{u}}) = \nabla _{\rho } F(\rho ({\varvec{u}}))\) with \(\tau (u^j)= \frac{\partial }{\partial (\rho ({\varvec{u}}))^j} F(\rho ({\varvec{u}})) \).

Finally, consider discrete mixture weights \({\varvec{\beta }}= \{\beta _i \}_{i=1}^N\) with \(\sum _i \beta _i = 1\), and assume the expected value \(\mu _{\rho }({\varvec{u}}, {\varvec{\beta }}) :=\sum _{i=1}^N \beta _i ~ \rho ({\varvec{u}}_i) \in \textrm{ri}({\mathcal {Y}}_\rho ^d)\) is in the relative interior of the range of \(\rho \). Then, we have the following results,

(i) For a Bregman divergence with generator F, the optimization

$$\begin{aligned} {\mathcal {I}_{{F,\rho }}} \big ( {{\varvec{u}}, {\varvec{\beta }}} \big )&:=\min \limits _{{\varvec{\mu }}} \sum \limits _{i=1}^N \, \beta _i \, D_F \left[ \rho ({\varvec{u}}_i) : \rho ({\varvec{\mu }}) \right] \end{aligned}$$ (56)

has a unique minimizer given by the quasi-arithmetic mean with representation function \(\rho \)

$$\begin{aligned} {\varvec{\mu }}_\rho ^*({\varvec{u}}, {\varvec{\beta }}) = \rho ^{-1}\left( \sum \limits _{i=1}^N \beta _i \, \rho \big ( {\varvec{u}}_i \big ) \right) = \mathop {\mathrm {arg\,min}}\limits _{{\varvec{\mu }}} \sum \limits _{i=1}^N \, \beta _i \, D_F \left[ \rho ({\varvec{u}}_i) : \rho ({\varvec{\mu }}) \right] . \end{aligned}$$

The arithmetic mean is recovered for \(\rho ({\varvec{u}}_i) = {\varvec{u}}_i\) and any F [7].

(ii) At this minimizing argument, the value of the expected divergence in Eq. (56) is called the rho–tau Bregman information and is equal to a gap in Jensen’s inequality for the convex function F, mixture weights \({\varvec{\beta }}\), and inputs \({\varvec{u}}= \{ {\varvec{u}}_i\}_{i=1}^N\),

$$\begin{aligned} {\mathcal {I}_{{F,\rho }}} \big ( {{\varvec{u}}, {\varvec{\beta }}} \big ) = \sum \limits _{i=1}^N \beta _i \, F\big ( \rho ({\varvec{u}}_i) \big ) - F\big ( \rho ({\varvec{\mu }}_\rho ^*) \big ) . \end{aligned}$$ (57)

(iii) Using \({\varvec{\mu }} \ne {\varvec{\mu }}_\rho ^*({\varvec{u}}, {\varvec{\beta }})\) as the representative in Eq. (56), the suboptimality gap is a rho–tau Bregman divergence

$$\begin{aligned} D_F\big [ \rho \big ( {\varvec{\mu }}_\rho ^* \big ) : \rho \big ( {\varvec{\mu }} \big )\big ]&= \sum \limits _{i=1}^N \beta _i ~ D_F\left[ \rho \big ( {\varvec{u}}_i \big ) : \rho \big ( {\varvec{\mu }} \big ) \right] - {\mathcal {I}_{{F,\rho }}}\big ( {\varvec{u}}, {\varvec{\beta }} \big ) . \end{aligned}$$ (58)

Proof

(ii): Again, we start by showing that the optimal representative \({\varvec{\mu }}_\rho ^{*} = \rho ^{-1}\big ( \sum _{i=1}^N \beta _i \, \rho ({\varvec{u}}_i)\big )\) yields a Jensen diversity in (ii). Expanding the expected divergence in Eq. (56) for \({\varvec{\mu }} = {\varvec{\mu }}_\rho ^{*}\), we have \(\sum _{i=1}^N \beta _i D_F [ \rho ({\varvec{u}}_i): \rho \big ( {\varvec{\mu }}_\rho ^* \big ) ] = \sum _{i=1}^N \beta _i F\big ( \rho ({\varvec{u}}_i) \big ) - F\big ( \rho ({\varvec{\mu }}_\rho ^*) \big ) - \langle \sum _{i=1}^N \beta _i \rho ({\varvec{u}}_i) - \rho ({\varvec{\mu }}_\rho ^*), \tau ({\varvec{\mu }}_\rho ^*) \rangle \). Since \(\sum _i \beta _i \rho ({\varvec{u}}_i) = \rho ({\varvec{\mu }}_\rho ^*)\), the final term vanishes to yield

$$\begin{aligned} \sum \limits _{i=1}^N \beta _i \, D_F \left[ \rho ({\varvec{u}}_i) : \rho ({\varvec{\mu }}_\rho ^*) \right] = \sum \limits _{i=1}^N \beta _i \, F\big ( \rho ({\varvec{u}}_i) \big ) - F\big ( \rho ({\varvec{\mu }}_\rho ^*) \big ) . \end{aligned}$$

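(i, iii): Writing the difference in expected divergence for a suboptimal representative \({\varvec{\mu }}\) and using Eq. (59), we have

$$\begin{aligned}&\sum \limits _{i=1}^N \beta _i \, D_F \left[ \rho ({\varvec{u}}_i) : \rho ({\varvec{\mu }}) \right] - \sum \limits _{i=1}^N \beta _i \, D_F \left[ \rho ({\varvec{u}}_i) : \rho ({\varvec{\mu }}_\rho ^*) \right] \\&\quad = F\big ( \rho ({\varvec{\mu }}_\rho ^*) \big ) - F\big ( \rho ({\varvec{\mu }}) \big ) - \big \langle \rho ({\varvec{\mu }}_\rho ^*) - \rho ({\varvec{\mu }}) , \tau ({\varvec{\mu }}) \big \rangle \\&\quad = D_F\big [ \rho ({\varvec{\mu }}_\rho ^*) : \rho ({\varvec{\mu }}) \big ] . \end{aligned}$$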

The rho–tau divergence is minimized iff \(\rho ({\varvec{\mu }}_\rho ^*) = \rho ({\varvec{\mu }})\) [77], thus proving (i). \(\square \)

C Parametric Bregman divergence and annealing paths within (deformed) exponential families

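Consider a q-exponential family with a d-dimensional natural parameter vector \({\varvec{\theta }}\in \varTheta \subset {\mathbb {R}}^d\), sufficient statistic vector \({\varvec{T}}({x})\), and base density \(g({x})\),

$$\begin{aligned} \pi ^{(q)}_{{\varvec{\theta }}}({x}) = \frac{1}{\mathcal {Z}_q({\varvec{\theta }})} \, g({x}) \exp _q \{ \langle {\varvec{\theta }}, {\varvec{T}}({x})\rangle \} , \qquad \mathcal {Z}_q({\varvec{\theta }}) = \int g({x}) \exp _q \{ \langle {\varvec{\theta }}, {\varvec{T}}({x})\rangle \} d{x} . \end{aligned}$$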

We let \(\tilde{\pi }^{(q)}_{{\varvec{\theta }}}({x}) = g({x})\exp _q \{ \langle {\varvec{\theta }}, {\varvec{T}}({x})\rangle \}\) denote the unnormalized density, often abbreviating to \({\tilde{\pi }}_{{\varvec{\theta }}}({x})\) for convenience.

From the convexity of \(\exp _q\), it can be shown that the normalization constant \(\mathcal {Z}_q({\varvec{\theta }})\) is a convex function of the parameters \({\varvec{\theta }}\), with first derivative

Parametric Interpretation of Amari \(\alpha \)-divergence We now show that the Bregman divergence induced by \(\frac{1}{q} \mathcal {Z}_q({\varvec{\theta }})\), for \(q > 0\), corresponds to the Amari \(\alpha \)-divergence between parametric unnormalized densities,

where in (1) we use the fact that \(\log _q \frac{{\tilde{\pi }}_{{\varvec{\theta }}}({x})}{g({x})} = \langle {\varvec{\theta }}, {\varvec{T}}({x})\rangle \).

For \(q=1\) and the exponential family, we have \(\mathcal {Z}({\varvec{\theta }}) = \int {\tilde{\pi }}_{{\varvec{\theta }}}({x}) d{x}\) and \(\frac{\partial \mathcal {Z}({\varvec{\theta }})}{\partial \theta ^j} = \int {\tilde{\pi }}_{{\varvec{\theta }}}({x}) T^j({x}) d{x}\), which leads to the Bregman divergence

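By contrast, the divergence generated by the log partition function \(\log \mathcal {Z}({\varvec{\theta }})\) yields the KL divergence between normalized distributions. Using \(\frac{\partial }{\partial \theta ^j} \log \mathcal {Z}({\varvec{\theta }}) = \frac{1}{\mathcal {Z}({\varvec{\theta }})} \int {\tilde{\pi }}_{{\varvec{\theta }}}({x})T^j({x}) d{x}= \int \pi _{{\varvec{\theta }}}({x}) T^j({x}) d{x}\), we recover the well-known result [66]

$$\begin{aligned} D_{\log \mathcal {Z}}[{\varvec{\theta }}^{\prime }: {\varvec{\theta }}] = \log \mathcal {Z}({\varvec{\theta }}^{\prime }) - \log \mathcal {Z}({\varvec{\theta }}) - \langle {\varvec{\theta }}^{\prime } - {\varvec{\theta }}, \nabla _{{\varvec{\theta }}} \log \mathcal {Z}({\varvec{\theta }}) \rangle = \int \pi _{{\varvec{\theta }}}({x}) \log \frac{\pi _{{\varvec{\theta }}}({x})}{\pi _{{\varvec{\theta }}^{\prime }}({x})} d{x} . \end{aligned}$$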
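The correspondence between the Bregman divergence of the log partition function and the KL divergence can be verified numerically on a small example. The sketch below assumes a standard (\(q=1\)) exponential family on a finite support with base density \(g = 1\) and sufficient statistics \((x, x^2)\). The support, the statistics, the parameter values, and the function names are hypothetical choices for illustration. The argument order assumed here is \(D_{\log \mathcal {Z}}[{\varvec{\theta }}^{\prime }: {\varvec{\theta }}]\) on the parameter side and the KL divergence from \(\pi _{{\varvec{\theta }}}\) to \(\pi _{{\varvec{\theta }}^{\prime }}\) on the density side.

```python
import math

support = [0, 1, 2, 3]  # hypothetical finite sample space


def stats(x):
    # Assumed sufficient statistics T(x) = (x, x^2).
    return (float(x), float(x * x))


def unnormalized(theta, x):
    # Unnormalized density exp(<theta, T(x)>) with base density g(x) = 1.
    return math.exp(sum(t * s for t, s in zip(theta, stats(x))))


def log_partition(theta):
    return math.log(sum(unnormalized(theta, x) for x in support))


def probs(theta):
    z = sum(unnormalized(theta, x) for x in support)
    return [unnormalized(theta, x) / z for x in support]


def mean_stats(theta):
    # Gradient of the log partition function: expected sufficient statistics.
    p = probs(theta)
    return [sum(px * stats(x)[j] for px, x in zip(p, support)) for j in range(2)]


def bregman_log_partition(theta_new, theta):
    # D_{log Z}[theta_new : theta], linearizing log Z around theta.
    inner = sum((a - b) * m for a, b, m in zip(theta_new, theta, mean_stats(theta)))
    return log_partition(theta_new) - log_partition(theta) - inner


def kl(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


theta, theta_new = (0.3, -0.2), (-0.5, 0.1)
parametric_divergence = bregman_log_partition(theta_new, theta)
kl_divergence = kl(probs(theta), probs(theta_new))
```

The two quantities agree up to floating-point error in this example, with the order of the arguments reversed between the parameter and density forms.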

C.1 Parametric divergence in \(\rho = \log _q\) representation

Alternatively, we may view the parametric divergence \(D_{\frac{1}{q} \mathcal {Z}_q}[{\varvec{\theta }}^{\prime }: {\varvec{\theta }}]\) as a decomposable divergence in the \(\log _q\)-representation \(\rho ({\tilde{\pi }}_{{\varvec{\theta }}}^{(q)}) = \log _q \frac{{\tilde{\pi }}_{{\varvec{\theta }}}^{(q)}(x)}{g(x)}\), where

Note, the order of the \(\alpha \)-divergence is set by the deformation parameter q in the definition of the representation function \(\rho _q\) or q-exponential family. In Sect. 4.2 Example 11, we have used this Bregman divergence minimization to interpret the q-paths between arbitrary endpoints from a parametric perspective.

This interpretation also suggests that the form of the deformed family in Eq. (61), particularly its \(\log _q\)-linearity in \({\varvec{\theta }}\), is sufficient to derive the parametric divergence as a non-parametric rho-tau divergence using Eq. (64).

Due to this generality of the non-parametric perspective, we advocate viewing parametric annealing paths within the same q-exponential family through the lens of Theorem 1 instead of the vector-valued perspective in Theorem 3. Indeed, when annealing between parametric deformed exponential family endpoints in Example 15, the quasi-arithmetic mean in the \(\rho = \log _q\) representation (using Theorem 1) suggests linear or arithmetic mixing of the natural parameters. This interpretation is more natural, and analogous to the exponential family case, compared to taking the quasi-arithmetic mean of the parameter vectors \({\varvec{\theta }}\) directly (using Theorem 3).

C.2 Annealing paths between (deformed) exponential family endpoint densities

The above Bregman divergences can be used to analyze annealing paths in the special case where the endpoint densities \({\tilde{\pi }}_{{\varvec{\theta }}_0}\) and \({\tilde{\pi }}_{{\varvec{\theta }}_1}\) belong to the same (deformed) exponential family in Eq. (61).

Example 15

(Annealing within (deformed) exponential families) Due to the \(\rho \)-affine property of deformed exponential families, it is natural to consider the \(\rho ({\tilde{\pi }}) = \log _q {\tilde{\pi }}\) path within the \(\exp _q\) family.

Ignoring the normalization constant, the ratio of the unnormalized density to \(g({x})\) is linear in \({\varvec{\theta }}\) after applying the \(\rho (\tilde{\pi }_{{\varvec{\theta }}}) = \log _q \frac{\tilde{\pi }_{{\varvec{\theta }}}({x})}{g({x})}\) representation function, with \(\log _q \frac{{\tilde{\pi }}_{{\varvec{\theta }}}({x})}{g({x})} = \langle {\varvec{\theta }}, {\varvec{T}}({x})\rangle .\) Since the quasi-arithmetic mean also has this \(\rho \)-affine property (Eq. 5), we can see that the q-path or geometric path between (deformed) exponential endpoints \({\tilde{\pi }}_{{\varvec{\theta }}_0}({x})\) and \({\tilde{\pi }}_{{\varvec{\theta }}_1}({x})\) is simply a linear interpolation in the natural parameters

which includes the KL divergence and exponential family for \(q=1\).
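The claim in Example 15 can be illustrated numerically. The sketch below assumes the q-path is the quasi-arithmetic mean \(\rho ^{-1}\big ((1-\beta )\rho ({\tilde{\pi }}_{{\varvec{\theta }}_0}) + \beta \rho ({\tilde{\pi }}_{{\varvec{\theta }}_1})\big )\) with \(\rho = \log _q\), and compares it pointwise with the family member at \({\varvec{\theta }}_\beta = (1-\beta ){\varvec{\theta }}_0 + \beta {\varvec{\theta }}_1\); the statistics, base density, and parameter values are illustrative assumptions.

```python
import math

Q = 0.5   # deformation parameter (q != 1 in this sketch)

def log_q(u):
    return (u ** (1.0 - Q) - 1.0) / (1.0 - Q)

def exp_q(v):   # valid while 1 + (1-q) * v stays positive
    return (1.0 + (1.0 - Q) * v) ** (1.0 / (1.0 - Q))

def stats(x):   # assumed sufficient statistics T(x)
    return (x, x * x)

def base(x):    # assumed base density g(x)
    return math.exp(-0.5 * x * x)

def density(theta, x):
    inner = sum(t * s for t, s in zip(theta, stats(x)))
    return base(x) * exp_q(inner)

def q_path(beta, p0, p1):
    # Quasi-arithmetic mean of two density values under rho = log_q
    return exp_q((1.0 - beta) * log_q(p0) + beta * log_q(p1))

def interpolate(beta, theta0, theta1):
    return tuple((1.0 - beta) * a + beta * b for a, b in zip(theta0, theta1))

theta0, theta1, beta = (0.2, -0.3), (-0.1, 0.4), 0.35
theta_beta = interpolate(beta, theta0, theta1)

xs = [-1.0 + 0.1 * i for i in range(21)]
max_gap = max(
    abs(q_path(beta, density(theta0, x), density(theta1, x)) - density(theta_beta, x))
    for x in xs
)
```

The gap is zero up to rounding because \({\tilde{\pi }}_{{\varvec{\theta }}}({x})^{1-q} = g({x})^{1-q}\big (1 + (1-q)\langle {\varvec{\theta }}, {\varvec{T}}({x})\rangle \big )\) is affine in \({\varvec{\theta }}\), so mixing in the \(\log _q\) representation is the same as mixing the natural parameters.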

Example 16

(Moment averaging path of Grosse et al. [34]) In the case of the standard exponential family,

Grosse et al. [34] propose the moment averaging path, which uses the dual parameter mapping \(\rho ({\varvec{\theta }}) = {\varvec{\eta }}({\varvec{\theta }}) = \mathbb {E}_{\pi _{{\varvec{\theta }}}}\left[ {\varvec{T}}({x})\right] \) as a representation function for the quasi-arithmetic mean,

for an appropriate dual divergence based on the dual of the log partition function \(\psi ^*({\varvec{\eta }}({\varvec{\theta }})) = D_{KL}[\pi _{{\varvec{\theta }}}(x): g(x)]\) (see [34]). While Grosse et al. [34] show performance gains using the moment averaging path, additional sampling procedures may be required to find \({\varvec{\theta }}_{\beta }\) via the inverse mapping \({\varvec{\eta }}^{-1}(\cdot )\).
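For intuition, the moment averaging path can be sketched for a one-dimensional Gaussian with \({\varvec{T}}({x}) = (x, x^2)\), a special case in which the inverse mapping \({\varvec{\eta }}^{-1}(\cdot )\) is available in closed form and no extra sampling is needed. The function names are hypothetical, and the natural-parameter path is included only for comparison.

```python
def to_moments(mean, var):
    # eta = (E[x], E[x^2])
    return (mean, var + mean * mean)

def from_moments(eta):
    return (eta[0], eta[1] - eta[0] * eta[0])

def to_natural(mean, var):
    # theta = (mean / var, -1 / (2 var)) for T(x) = (x, x^2)
    return (mean / var, -0.5 / var)

def from_natural(theta):
    var = -0.5 / theta[1]
    return (theta[0] * var, var)

def mix(beta, a, b):
    return tuple((1.0 - beta) * u + beta * v for u, v in zip(a, b))

def moment_averaging(beta, p0, p1):
    # Arithmetic mean in the dual (moment) parameters, then map back to (mean, var)
    return from_moments(mix(beta, to_moments(*p0), to_moments(*p1)))

def geometric(beta, p0, p1):
    # Arithmetic mean in the natural parameters, then map back to (mean, var)
    return from_natural(mix(beta, to_natural(*p0), to_natural(*p1)))

p0, p1 = (-2.0, 1.0), (2.0, 1.0)        # (mean, variance) of the two endpoints
mid_moment = moment_averaging(0.5, p0, p1)
mid_geometric = geometric(0.5, p0, p1)
```

In this Gaussian case the moment-averaged variance is \((1-\beta )\sigma _0^2 + \beta \sigma _1^2 + \beta (1-\beta )(\mu _0 - \mu _1)^2\), so the intermediate distribution widens to cover both endpoints, whereas averaging natural parameters keeps the variance at the endpoint value when the two variances are equal.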

D Information geometry of rho–tau divergences

We next review results from [78] describing the statistical manifolds induced by rho–tau Bregman Informations. We summarize using our notation in Table 2.

Eguchi relations The seminal Eguchi relations [26, 27] describe the statistical manifold structure \((\mathcal {M}, g, \nabla , \nabla ^*)\) induced by a divergence \(D[{\pi _a}:{\pi _b}]\). We first consider a manifold of parametric densities \(\mathcal {M}\) represented by a coordinate system \({\varvec{\theta }}(\pi ): \mathcal {M}_{{\varvec{\theta }}} \mapsto \varTheta \subset \mathbb {R}^N\), with \(\partial _i = \frac{\partial }{\partial \theta ^i}\) as a basis for the tangent space. The Riemannian metric is written \(g_{ij}({\varvec{\theta }}) = \langle \partial _i, \partial _j \rangle \), while the affine connection or covariant derivative is expressed using the scalar Christoffel symbols \(\varGamma _{ij,k}({\varvec{\theta }}) = \langle \nabla _{\partial _i} \partial _j, \partial _k \rangle \) [4, 54]. For a given divergence, taking the second and third order differentials yields the following metric and conjugate pair of affine connections

where \(({\partial _j})_{{\pi _{{\varvec{\theta }}_a}}}\) indicates partial differentiation with respect to the parameter \(\theta _{a}^j\) with index j of the first argument.

Statistical manifold from rho–tau divergence Following Zhang [77, 78], viewing \(D_{f,\rho }^{(\beta )}[{\tilde{\pi }}_0: {\tilde{\pi }}_1] :=\frac{1}{\beta (1-\beta )} \, {\mathcal {I}_{{f,\rho }}} \big ( {{\varvec{{\tilde{\pi }}}}, {\varvec{\beta }}} \big )\) in Eq. (22) as a divergence functional yields the following Riemannian metric and primal affine connection (expressed using the Christoffel symbols \(\varGamma _{ij,k}({\varvec{\theta }})\)),

Since our exposition in Sects. 2.2 and 4 considers a nonparametric manifold of arbitrary unnormalized densities, we also recall the nonparametric analogues of Eqs. (68) and (69) from Zhang [78]. For tangent vectors \(u({x}), v({x}), w({x})\) (such that \(\int u({x}) d{x}= 0\)) at a point \({\tilde{\pi }}({x})\),

where \(d_w u\) is the directional derivative of u in the direction of w and \(\rho ^{\prime }_{{\tilde{\pi }}}{({x})} = \rho ^{\prime }\big ( {\tilde{\pi }}({x}) \big )\). The parametric expression above can be recovered using, for example, \(u({x}) = \frac{\partial }{\partial \theta ^i}\pi _{{\varvec{\theta }}}({x})\).

Riemannian metrics To recover the Fisher–Rao metric, we may consider the \(\rho ({\tilde{\pi }}) = \log {\tilde{\pi }}\) and \(\tau ({\tilde{\pi }}) = {\tilde{\pi }}\) representations, which yield \(\rho ^\prime ({\tilde{\pi }}) \tau ^\prime ({\tilde{\pi }}) = {\tilde{\pi }}^{-1}\) as desired. However, the Fisher–Rao metric may also be recovered using the representations \(\rho ({\tilde{\pi }}) = \log _q({\tilde{\pi }})\), \(\tau ({\tilde{\pi }}) = \log _{1-q} {\tilde{\pi }}\) used to derive the \(\alpha \)-divergence in Example 5 (see e.g. Nielsen [54, Sect. 3.12]). Finally, the Jensen–Shannon divergence and Zhang’s \((\beta , q)\) divergence also induce the Fisher–Rao metric due to the fact that the outer integration term \(\rho ^\prime ({\tilde{\pi }})\tau ^\prime ({\tilde{\pi }}) = {\tilde{\pi }}^{-1}\), while the metric for the Beta divergence integrates \(\rho ^\prime ({\tilde{\pi }})\tau ^\prime ({\tilde{\pi }}) = {\tilde{\pi }}^{-q}\) and thus may be referred to as a ‘deformed’ metric [49, 80].
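The products quoted above can be checked directly, assuming the convention \(\log _q(u) = \frac{1}{1-q}(u^{1-q} - 1)\), so that \(\frac{d}{du}\log _q(u) = u^{-q}\), and taking the identity dual representation for the Beta divergence:

\[ \rho = \log _q, \; \tau = \log _{1-q}: \quad \rho ^\prime ({\tilde{\pi }})\,\tau ^\prime ({\tilde{\pi }}) = {\tilde{\pi }}^{-q} \cdot {\tilde{\pi }}^{-(1-q)} = {\tilde{\pi }}^{-1}, \qquad \rho = \log _q, \; \tau ({\tilde{\pi }}) = {\tilde{\pi }}: \quad \rho ^\prime ({\tilde{\pi }})\,\tau ^\prime ({\tilde{\pi }}) = {\tilde{\pi }}^{-q} \cdot 1 = {\tilde{\pi }}^{-q}. \]

The first product is independent of q, which is why every order of the \(\alpha \)-divergence shares the Fisher–Rao metric, while the second retains the deformation parameter.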

Affine connections Recall that the standard \(\alpha \)-connection [3, 4] is given by \(\alpha (x;\rho ,\tau ,\beta ) = \alpha \cdot \pi _{{\varvec{\theta }}}({x})^{-1}\). From Table 2, we see that the \(\alpha \)-connection with \(\alpha = \beta \) is induced from either the Amari \(\alpha \)-divergence or the Jensen–Shannon divergence, which are the rho-tau Bregman Informations corresponding to the KL divergences in either direction. When treating the \(\alpha \)-divergence as a rho-tau Bregman divergence (as in Example 5) instead of a Bregman Information (as in Example 4), we see that the order of the \(\alpha \)-connection is set by the representation parameter \(\alpha = q\). This mirrors our observations in Sect. 4.1. Finally, note that the Beta divergence (as a rho-tau Bregman divergence) induces the \(\alpha \)-connections of order q and 0, instead of 1 and 0 for the KL divergence or q and \(1-q\) for the Amari \(\alpha \)-divergence.

The divergence functionals derived from the rho–tau Bregman information for either the Beta, \(\alpha \), or Amari–Cichocki \((q,\lambda )\) divergences induce \(\alpha \)-connections which interpolate between the endpoint values based on the mixture parameter \(\beta \).

Limiting behavior of Amari-\(\alpha \) and Beta-divergences Finally, in Table 3, we recall the limiting behavior of the \(\alpha \) divergences as \(q\rightarrow 0\) or \(q \rightarrow 1\), and the Beta divergence as \(q \rightarrow 1\) or \(q \rightarrow 2\). While these families of divergences agree in their limiting behavior as \(q \rightarrow 1\), the Beta divergence recovers either the Euclidean Bregman divergence or Itakura–Saito divergence for \(q = 0\) and \(q \rightarrow 2\) respectively.
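The limits of the \(\alpha \)-divergence can be illustrated numerically. The sketch below assumes the parameterization \(\frac{1}{1-q}\sum a + \frac{1}{q}\sum b - \frac{1}{q(1-q)}\sum a^{q} b^{1-q}\) for positive vectors \(a, b\) standing in for unnormalized densities; under this assumed convention, \(q \rightarrow 1\) gives the extended KL divergence with \(a\) in the first argument and \(q \rightarrow 0\) gives it with the arguments swapped. The vectors are arbitrary illustrative values.

```python
import math

a = [0.5, 1.5, 2.0, 0.7]   # arbitrary positive values standing in for unnormalized densities
b = [1.0, 0.8, 2.5, 0.4]

def extended_kl(p, r):
    # KL divergence extended to unnormalized inputs
    return sum(pi * math.log(pi / ri) - pi + ri for pi, ri in zip(p, r))

def alpha_divergence(p, r, q):
    # Assumed parameterization; requires 0 < q < 1
    cross = sum(pi ** q * ri ** (1.0 - q) for pi, ri in zip(p, r))
    return sum(p) / (1.0 - q) + sum(r) / q - cross / (q * (1.0 - q))

near_one = alpha_divergence(a, b, 1.0 - 1e-5)
near_zero = alpha_divergence(a, b, 1e-5)
forward_kl = extended_kl(a, b)
reverse_kl = extended_kl(b, a)
```

Comparing `near_one` with `forward_kl` and `near_zero` with `reverse_kl` shows agreement up to an error that shrinks with the distance of q from the limit.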

E Geodesics for the rho–tau Bregman divergence

In this section, we show that the quasi-arithmetic mixture path in the \(\rho ({\tilde{\pi }})\) representation of densities is a geodesic with respect to the primal connection induced by the rho-tau divergence \(D_{f}[\rho ({\tilde{\pi }}_1):\rho ({\tilde{\pi }}_0)]\). Recall from Zhang [78, Sect. 2.3 (Eq. 88)] that the \(\alpha \)-connection (or covariant derivative) associated with the rho–tau Bregman divergence has the form

where \(\dot{\gamma } = \frac{d\gamma }{dt}\) and the parameter \(\alpha \) plays the role of the convex combination or mixture parameter \(\beta \). In this section, we use the notation \(\nabla ^{(\alpha )}\) to represent the affine connection, instead of the Christoffel symbol notation from e.g. Eq. (71), with \(\varGamma ^{(\alpha )}_{wu,v}({\tilde{\pi }}) = \langle \nabla ^{(\alpha )}_w u, v \rangle \).

We are interested in the Bregman divergence and \(\nabla ^{(1)}\) connection for \(\alpha =1\). Using Eq. (5), we need to show that the geodesic equation \( \nabla ^{(1)}_{\dot{\gamma }} \dot{\gamma } = 0\) holds ([54] Sec. 3.12) for curves which are linear in the \(\rho \)-representation.

Theorem 2

(Geodesics for Rho-Tau Bregman Divergence) The curve \(\gamma _t = \rho ^{-1} \big ( (1-t) \rho ({\tilde{\pi }}_0) + t \, \rho ({\tilde{\pi }}_1) \big )\) (with time derivative \(\dot{\gamma _t} = \frac{d}{dt}\gamma _t\)) is auto-parallel with respect to the primal affine connection \(\nabla ^{(1)}\) induced by the rho-tau Bregman divergence \(D_{f}[\rho ({{{\tilde{\pi }}_a}}):\rho ({{{\tilde{\pi }}_b}})]\) for \(\beta = 1\). In other words, the following geodesic equation holds

The \(\rho \)-representation of the unnormalized density thus provides an affine coordinate system for the geometry induced by \(D_{f}[\rho ({{{\tilde{\pi }}_a}}):\rho ({{{\tilde{\pi }}_b}})]\).

Proof

We simplify each of the terms in the geodesic equation, where we rewrite the desired geodesic equation in Eq. (23) to match Eq. 88 of Zhang [78],

First, note the particularly simple expression for \(\frac{d\rho (\gamma _t)}{dt} = \rho ({\tilde{\pi }}_1) - \rho ({\tilde{\pi }}_0)\) given the definition \(\rho (\gamma (t)) = (1-t) \rho ({\tilde{\pi }}_0) + t \, \rho ({\tilde{\pi }}_1)\). Noting the chain rule \(\frac{d\rho _t}{dt} = \frac{d\rho (\gamma _t)}{d\gamma } \frac{d\gamma _t}{dt}\), we can rearrange to obtain an expression for \(\dot{\gamma }(t)\)

Taking the directional derivative \(d_{\dot{\gamma }}\dot{\gamma } = \frac{d\dot{\gamma }}{d\gamma }\cdot \dot{\gamma }\),

Rewriting the final term in Eq. (23), we have

Putting it all together, we have

Noting that \(\left( \frac{d\rho (\gamma _t)}{d\gamma }\right) ^{-1} = \dot{\gamma }\, \big (\rho ({\tilde{\pi }}_1) - \rho ({\tilde{\pi }}_0)\big )^{-1}\) from Eq. (74), we have

which proves the theorem. \(\square \)
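A finite-difference check can make the argument concrete. The sketch below assumes that, at each fixed \(x\), the geodesic condition for \(\alpha = 1\) reduces to \(\ddot{\gamma } + \frac{\rho ^{\prime \prime }(\gamma )}{\rho ^{\prime }(\gamma )}\dot{\gamma }^2 = 0\), which is what differentiating the affine function \(\rho (\gamma _t)\) twice in \(t\) gives; this pointwise form is our reading of the proof and not a transcription of Eq. 88 of Zhang [78]. It uses \(\rho = \log _q\), for which \(\rho ^{\prime \prime }(\gamma )/\rho ^{\prime }(\gamma ) = -q/\gamma \), and hypothetical endpoint values.

```python
Q = 0.5   # deformation parameter for rho = log_q (q != 1 in this sketch)

def rho(u):
    return (u ** (1.0 - Q) - 1.0) / (1.0 - Q)

def rho_inv(v):   # valid while 1 + (1-q) * v stays positive
    return (1.0 + (1.0 - Q) * v) ** (1.0 / (1.0 - Q))

def curve(t, p0, p1):
    # Quasi-arithmetic path: linear in t after applying rho
    return rho_inv((1.0 - t) * rho(p0) + t * rho(p1))

def geodesic_residual(t, p0, p1, h=1e-3):
    g_minus, g, g_plus = curve(t - h, p0, p1), curve(t, p0, p1), curve(t + h, p0, p1)
    velocity = (g_plus - g_minus) / (2.0 * h)               # central difference for the first derivative
    acceleration = (g_plus - 2.0 * g + g_minus) / (h * h)   # central difference for the second derivative
    # For rho = log_q: rho'(g) = g**(-q) and rho''(g) = -q * g**(-q-1), so the ratio is -q / g
    return acceleration - (Q / g) * velocity ** 2

p0, p1 = 0.5, 2.0   # density values at a single point x (hypothetical)
residuals = [geodesic_residual(t, p0, p1) for t in (0.1, 0.5, 0.9)]
```

The residuals vanish up to discretization and rounding error, consistent with the \(\rho \)-representation acting as an affine coordinate along the curve.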

About this article

Cite this article

Brekelmans, R., Nielsen, F. Variational representations of annealing paths: Bregman information under monotonic embedding. Info. Geo. (2024). https://doi.org/10.1007/s41884-023-00129-6

DOI: https://doi.org/10.1007/s41884-023-00129-6