Abstract

Matrix reduction is the standard procedure for computing the persistent homology of a filtered simplicial complex with m simplices. Its output is a particular decomposition of the total boundary matrix, from which the persistence diagrams and generating cycles are derived. Persistence diagrams are known to vary continuously with respect to their input, motivating the study of their computation for time-varying filtered complexes. Computing persistence dynamically can be reduced to maintaining a valid decomposition under adjacent transpositions in the filtration order. Since there are \(O(m^2)\) such transpositions, this maintenance procedure exhibits limited scalability and is often too fine for many applications. We propose a coarser strategy for maintaining the decomposition over a 1-parameter family of filtrations. By reduction to a particular longest common subsequence problem, we show that the minimal number of decomposition updates d can be found in \(O(m \log \log m)\) time and O(m) space, and that the corresponding sequence of permutations—which we call a schedule—can be constructed in \(O(d m \log m)\) time. We also show that, in expectation, the storage needed to employ this strategy is actually sublinear in m. Exploiting this connection, we show experimentally that the decrease in operations to compute diagrams across a family of filtrations is proportional to the difference between the expected quadratic number of states and the proposed sublinear coarsening. Applications to video data, dynamic metric space data, and multiparameter persistence are also presented.

1 Introduction

Given a triangulable topological space equipped with a tame continuous function, persistent homology captures the changes in topology across the sublevel sets of the space, and encodes them in a persistence diagram. The stability of persistence contends that if the function changes continuously, so too will the points on the persistence diagram (Cohen-Steiner et al. 2006, 2007). This motivates the application of persistence to time-varying settings, like that of dynamic metric spaces (Kim and Mémoli 2020). As persistence-related computations tend to exhibit high algorithmic complexity—essentially cubicFootnote 1 in the size of the underlying filtration—their adoption to dynamic settings poses a challenging computational problem. Currently, there is no recourse when faced with a time-varying complex containing millions of simplices across thousands of snapshots in time. Acquiring such a capability has far-reaching consequences: methods that vectorize persistence diagrams for machine learning purposes all immediately become computationally viable tools in the dynamic setting. Such persistence summaries include adaptive template functions (Polanco and Perea 2019), persistence images (Adams et al. 2017), and \(\alpha \)-smoothed Betti curves (Ulmer et al. 2019).

Cohen-Steiner et al. refer to a continuous 1-parameter family of persistence diagrams as a vineyard, and they give in Cohen-Steiner et al. (2006) an efficient algorithm for their computation. The vineyards approach can be interpreted as an extension of the reduction algorithm (Zomorodian and Carlsson 2005), which computes the persistence diagrams of a filtered simplicial complex K with m simplices in \(O(m^3)\) time, via a particular decomposition \(R = D V\) (or \(RU = D\)) of the boundary matrix D of K. The vineyards algorithm, in turn, transforms a time-varying filtration into a certain set of permutations of the decomposition \(R = DV\), each of which takes at most O(m) time to execute. If one is interested in understanding how the persistent homology of a continuous function changes over time, then this algorithm is sufficient, for homological critical points can only occur when the filtration order changes. Moreover, the vineyards algorithm is efficient asymptotically: if there are d time-points where the filtration order changes, then vineyards takes \(O(m^3 + md)\) time, namely one initial \(O(m^3)\)-time reduction at time \(t_0\) followed by one O(m) operation to update the decomposition at each of the remaining time points \((t_1, t_2, \dots , t_d)\). When \(d \gg m\), the initial reduction cost is amortized by the cost of maintaining the decomposition, implying each diagram produced takes just linear time per time point to obtain.

Despite its theoretical efficiency, vineyards is often not the method of choice in practical settings. While there is an increasingly rich ecosystem of software packages offering variations of the standard reduction algorithm (e.g. Ripser, PHAT, and Dionysus; see Otter et al. (2017) for an overview), implementations of the vineyards algorithm are relatively uncommon.Footnote 2 The reason for this disparity is perhaps explained by Lesnick and Wright (2015): “While an update to an RU decomposition involving few transpositions is very fast in practice... many transpositions can be quite slow... it is sometimes much faster to simply recompute the RU-decomposition from scratch using the standard persistence algorithm.” Indeed, they observe that maintaining the decomposition along a certain parameterized family is the most computationally demanding aspect of RIVET [11], a software package for computing and visualizing two-parameter persistent homology.

The work presented here seeks to further understand and remedy this discrepancy: building on the work presented in Busaryev et al. (2010), we introduce a coarser approach to the vineyards algorithm. Though the vineyards algorithm is efficient at constructing a continuous 1-parameter family of diagrams, it is not necessarily efficient when the parameter is coarsely discretized. Our methodology is based on the observation that practitioners often don’t need (or want!) all the persistence diagrams generated by a continuous 1-parameter family of filtrations; usually just \(n \ll d\) of them suffice. By exploiting the “donor” concept introduced in Busaryev et al. (2010), we are able to make a trade-off between the number of times the decomposition is restored to a valid state and the granularity of the decomposition repair step, reducing the total number of column operations needed to apply an arbitrary permutation to the filtration. This trade-off, paired with a fast greedy heuristic explained in Sect. 3.4.2, yields an algorithm that can update an \(R = DV\) decomposition more efficiently than vineyards in coarse time-varying contexts, making dynamic persistence more computationally tractable for a wider class of use-cases. Both the source code containing the algorithm we propose and the experiments performed in Sect. 4 are available open source online.Footnote 3

1.1 Related work

To the authors’ knowledge, work focused on ways of updating a decomposition \(R = DV\), for all homological dimensions, is limited: there is the vineyards algorithm (Cohen-Steiner et al. 2006) and the moves algorithm (Busaryev et al. 2010), both of which are discussed extensively in Sect. 2. At the time of writing, we were made aware of very recent work (Luo and Nelson 2021) that iteratively repairs a permuted decomposition via a column swapping strategy, which they call “warm starts.” Though their motivation is similar to our own, their approach relies on the reduction algorithm as a subprocedure, which is quite different from the strategy we employ here.

Contrasting the dynamic setting, there is extensive work on improving the efficiency of computing a single (static) \(R = DV\) decomposition. Chen and Kerber (2011) proposed persistence with a twist, also called the clearing optimization, which exploits a boundary/cycle relationship to “kill” columns early in the reduction rather than reducing them. Another popular optimization is to utilize the duality between homology and cohomology (De Silva et al. 2011), which dramatically improves the effectiveness of the clearing optimization (Bauer 2021). There are many other optimizations on the implementation side: the use of ranking functions defined on the combinatorial number system enables implicit cofacet enumeration, removing the need to store the boundary matrix explicitly; the apparent/emergent pairs optimization identifies columns whose pivot entries are unaffected by the reduction algorithm, reducing the total number of columns which need to be reduced; sparse data structures such as bit-trees and lazy heaps allow for efficient column-wise additions with \({\mathbb {Z}}_2 = {\mathbb {Z}}/2{\mathbb {Z}}\) coefficients and effective O(1) pivot entry retrieval, and so on (Bauer 2021; Bauer et al. 2017).

By making stronger assumptions on the underlying topological space, restricting the homological dimension, or targeting a weaker invariant (e.g. Betti numbers), one can usually obtain faster algorithms. Edelsbrunner et al. (2000) give a fast incremental algorithm for computing persistent Betti numbers up to dimension 2, again by utilizing symmetry, duality, and “time-reversal” (Delfinado and Edelsbrunner 1995). Chen and Kerber (2013) give an output-sensitive method for computing persistent homology, utilizing the property that certain submatrices of D have the same rank as R, which they exploit through fast sub-cubic rank algorithms specialized for sparse-matrices.

If zeroth homology is the only dimension of interest, computing and updating both the persistence and rank information is greatly simplified. For example, if the edges of the graph are in filtered order a priori, obtaining a tree representation fully characterizing the connectivity of the underlying space (also known as the incremental connectivity problem) takes just \(O(\alpha (n) n)\) time using the disjoint-set data structure, where \(\alpha (n)\) is the extremely slow-growing inverse Ackermann function. Adapting this approach to the time-varying setting, Oesterling et al. (2015) give an algorithm that maintains a merge tree with e edges in O(e) time per-update. If only Betti numbers are needed, the zeroth-dimension problem reduces even further to the dynamic connectivity problem, which can be efficiently solved in amortized \(O(\log n)\) query and update times using either Link-cut trees or multi-level Euler tour trees (Kapron et al. 2013).
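As a rough illustration of the incremental connectivity computation mentioned above, the following sketch processes edges in filtration order with a disjoint-set structure. The function name `connected_components_over_time` and the toy edge list are assumptions made for this example only; they do not come from any of the cited works.

```python
def connected_components_over_time(n_vertices, edges):
    """Process edges in filtration order with a disjoint-set (union-find).

    Returns the edges that merge two components (a spanning forest) and the
    number of connected components after each edge insertion.
    """
    parent = list(range(n_vertices))
    size = [1] * n_vertices

    def find(x):
        # Path halving keeps the trees shallow.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    forest, betti0 = [], []
    components = n_vertices
    for (u, v) in edges:
        ru, rv = find(u), find(v)
        if ru != rv:
            # Union by size: attach the smaller tree below the larger one.
            if size[ru] < size[rv]:
                ru, rv = rv, ru
            parent[rv] = ru
            size[ru] += size[rv]
            components -= 1
            forest.append((u, v))  # this edge destroys a 0-dimensional class
        betti0.append(components)
    return forest, betti0


# Hypothetical toy input: 4 vertices, edges already sorted by filtration value.
edges = [(0, 1), (2, 3), (1, 2), (0, 3), (0, 2)]
forest, betti0 = connected_components_over_time(4, edges)
```

Edges that do not merge two components are exactly those that would create a 1-dimensional class, which this sketch ignores.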

1.2 A motivating example

To motivate this effort, we begin with an illustrative example of why the vineyards algorithm does not always yield an efficient strategy for time-varying settings. Consider a series of grayscale images (i.e. a video) depicting a fixed-width annulus expanding about the center of a \(9 \times 9\) grid, and its associated sublevel-set filtrations, as shown in Fig. 1.

Each image in the series consists of pixels whose intensities vary with time, upon which we build a simplicial complex using the Freudenthal triangulation of the plane. For each complex, we create a filtration of simplices whose order is determined by the lower stars of pixel values. Two events critically change the persistence diagrams: the first occurs when the central connected component splits to form a cycle, and the second when the annulus splits into four components. From left to right, the \(\epsilon \)-persistent Betti numbersFootnote 4 of the five evenly spaced ‘snapshots’ of the filtration shown in Fig. 1 are: \((\beta _0,\beta _1) = (1,0), \; (1,1), \; (1,1), \; (1,1), \; (4,0)\). Thus, in this example, only a few persistence diagrams are needed to capture the major changes to the topology.

We use this data set as a baseline for comparing vineyards and the standard reduction algorithm \(\texttt {pHcol}\) (Algorithm 4). Suppose a practitioner wanted to know the major homological changes a time-varying filtration encounters over time. Since it is unknown a priori when the persistent pairing function changes, one solution is to do n independent persistence computations at n evenly spaced points in the time domain. An alternative approach is to construct a homotopy between a pair of filtrations (K, f), \((K,f')\) and then decompose this homotopy into adjacent transpositions based on the filtration order—the vineyards approach. We refer to the former as the discrete setting, which is often used in practice, and the latter as the continuous setting. Note that though the discrete setting is often more practical, it is not guaranteed to capture all homological changes in persistence that occur in the continuous 1-parameter family of diagrams.

The cumulative cost (in total column operations) of these various approaches is shown in Fig. 2, wherein the reduction (pHcol) and vineyard algorithms are compared. Two discrete strategies (green and purple) and two continuous strategies (black and blue) are shown.

Fig. 2 The cumulative column operations needed to compute persistence across the time-varying filtration of grayscale images. Observe that performing 10 independent persistence computations evenly spaced in time (green line) captures the major topological changes and is the most computationally efficient approach shown (colour figure online)

Note that without knowing where the persistence pairing function changes, a continuous strategy must construct all \(\approx 7 \times 10^{4}\) diagrams induced by the homotopy. In this setting, as shown in the figure, the vineyards approach is indeed far more efficient than naively applying the reduction algorithm independently at all time points. However, when the discretization of the time domain is coarse enough, the naive approach actually performs fewer column operations than vineyards, while still capturing the main events.

The existence of a time discretization that is more efficient to compute than continually updating the decomposition indicates that the vineyards framework must incur some overhead (in terms of column operations) to maintain the underlying decomposition, even when the pairing function determining the persistence diagram is unchanged. Indeed, as shown by the case where \(n = 10\), applying pHcol independently between relatively “close” filtrations is substantially more efficient than iteratively updating the decomposition. Moreover, any optimizations to the reduction algorithm (e.g. clearing Chen and Kerber 2011) would only increase this disparity. Since persistence has found many applications in dynamic contexts (Topaz et al. 2015; Xian et al. 2020; Lesnick and Wright 2015; Kim and Mémoli 2020), a more efficient alternative to vineyards is clearly needed.

Our approach and contributions are as follows: First, we leverage the moves framework of Busaryev et al. (2010) to include coarser operations for dynamic persistence settings. By a reduction to an edit distance problem, we give a lower bound on the minimal number of moves needed to perform an arbitrary permutation to the \(R = D V\) decomposition, along with a proof of its optimality. We also give worst-case sizes of these quantities in expectation as well as efficient algorithms for constructing these operations—both of which are derived from a reduction to the Longest Increasing Subsequence (LIS) problem. These operations parameterize sequences of permutations \({\mathcal {S}}= ( s_1, s_2, \dots , s_d )\) of minimal size d, which we call schedules. However, not all minimal size schedules incur the same cost (i.e., number of column operations). We investigate the feasibility of choosing optimal cost schedules, and show that greedy-type approaches can lead to arbitrarily bad behavior. In light of these results, we give an alternative proxy-objective for cost minimization, provide bounds justifying its relevance to the original problem, and give an efficient \(O(d^2 \log m)\) algorithm for heuristically solving this proxy minimization. A performance comparison with other reduction-based persistence computations is given, wherein move schedules are demonstrated to be an order of magnitude more efficient than existing approaches at calculating persistence in dynamic settings. In particular, we illustrate the effectiveness of efficient scheduling with a variety of real-world applications, including flock analysis in dynamic metric spaces and manifold detection from image data using 2D persistence computations.

1.3 Main results

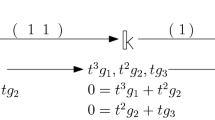

Given a simplicial complex K with filtration function f, denote by \(R = D V\) the decomposition of its corresponding boundary matrix D such that R is reduced and V is upper-triangular (see Sect. 2.1 for details). If one has a pair of filtrations (K, f), \((K, f')\) of size \(m = |K |\) and \(R = D V\) has been computed for (K, f), then it may be advantageous to use the information stored in (R, V) to reduce the computation of \(R' = D' V'\). Given a permutation P such that \(D' = P D P^T\), such an update scheme has the form:

\[ (* P * R * P^T *) = (P D P^T)(* P * V * P^T *) \]

where \(*\) is substituted with elementary column operations that repair the permuted decomposition. It is known how to linearly interpolate \(f \mapsto f'\) using \(d \sim O(m^2)\) updates to the decomposition, where each update requires at most two column operations (Cohen-Steiner et al. 2006). Since each column operation takes O(m), the complexity of re-indexing \(f \mapsto f'\) is \(O(m^3)\), which is efficient if all d decompositions are needed. Otherwise, if only \((R', V')\) is needed, updating \(R \mapsto R'\) using the approach from Cohen-Steiner et al. (2006) matches the complexity of computing \(R' = D' V'\) independently.
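To give a feel for the \(O(m^2)\) count above: the fewest adjacent transpositions that turn one ordering into another equals the number of inversions between them. This is a standard fact about permutations and is used here as an assumption about how a generic linear interpolation behaves. The sketch below counts inversions by merge sort; the name `count_adjacent_transpositions` is hypothetical, and the inputs are plain orderings that are not checked for being valid filtrations.

```python
def count_adjacent_transpositions(order_f, order_g):
    """Number of adjacent transpositions needed to turn order_f into order_g.

    This equals the number of inversions of order_f relative to order_g,
    counted here with a merge sort in O(m log m) time.
    """
    rank = {s: k for k, s in enumerate(order_g)}
    seq = [rank[s] for s in order_f]

    def sort_count(a):
        if len(a) <= 1:
            return a, 0
        mid = len(a) // 2
        left, n_left = sort_count(a[:mid])
        right, n_right = sort_count(a[mid:])
        merged, inv = [], n_left + n_right
        i = j = 0
        while i < len(left) and j < len(right):
            if left[i] <= right[j]:
                merged.append(left[i])
                i += 1
            else:
                # right[j] precedes every remaining element of `left`.
                merged.append(right[j])
                j += 1
                inv += len(left) - i
        merged.extend(left[i:])
        merged.extend(right[j:])
        return merged, inv

    return sort_count(seq)[1]


# Moving edge a behind b and c costs two adjacent transpositions.
n_swaps = count_adjacent_transpositions(list("uvwabc"), list("uvwbca"))
```

A full reversal of m elements gives \(m(m-1)/2\) inversions, which is the quadratic worst case.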

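We now summarize our main results (Theorem 1): suppose one has a schedule \({\mathcal {S}} = \left( s_1, s_2, \dots , s_d \right) \) yielding a corresponding sequence of decompositions:

\[ R = R_0 = D_0 V_0 \;\overset{s_1}{\longrightarrow }\; R_1 = D_1 V_1 \;\overset{s_2}{\longrightarrow }\; \cdots \;\overset{s_d}{\longrightarrow }\; R_d = D_d V_d = R' \qquad (1) \]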

where \(s_k = (i_k, j_k)\) for \(k=1,\ldots , d\), denotes a particular type of cyclic permutation (see Sect. 3.2). If \(i_k < j_k\) for all \(s_k \in {\mathcal {S}}\), our first result extends (Busaryev et al. 2010) by showing that (1) can be computed using \(O(\nu )\) column operations, where:

The quantities \(|{\mathbb {I}}_{k} |\) and \(|{\mathbb {J}}_{k} |\) depend on the sparsity of the matrices \(V_k\) and \(R_k\), respectively, and \(d \sim O(m)\) is a constant that depends on how similar f and \(f'\) are. As this result depends explicitly on the sparsity pattern of the decomposition itself, it is an output sensitive bound.

Our second result turns towards lower bounding \(d = |{\mathcal {S}} |\) and the complexity of constructing \({\mathcal {S}}\) itself. By reinterpreting a special set of cyclic permutations as edit operations on strings, we find that any sequence mapping f to \( f'\) of minimal size must have length (Proposition 3):

\[ d = m - \textrm{LCS}(f,f') \]

where \(\textrm{LCS}(f,f')\) refers to the size of the longest common subsequence between the simplexwise filtrations (K, f) and \((K,f')\) (see Sect. 3.2 for more details). We also show that the information needed to construct any \({\mathcal {S}}\) with optimal size can be computed in \(O(m \log \log m)\) preprocessing time and O(m) memory. We provide evidence that \(d \sim m - \sqrt{m}\) in expectation for random filtrations (Corollary 2). Although this implies d can be O(m) for pathological inputs, we give empirical results suggesting d can be much smaller in practice.
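As a small illustration of the reduction to LIS, the sketch below computes the minimal schedule size under the assumption that it equals m minus the length of the longest common subsequence of the two filtration orders (the relation attributed to Proposition 3 above). Because both orders are permutations of the same simplices, that LCS is a longest increasing subsequence of one order relabelled by the other. The function name `minimal_schedule_size` is hypothetical, and this version uses patience sorting with binary search, so it runs in \(O(m \log m)\) rather than the \(O(m \log \log m)\) bound quoted above.

```python
from bisect import bisect_left


def minimal_schedule_size(order_f, order_g):
    """Return m - LCS(order_f, order_g) for two orderings of the same items."""
    rank = {s: k for k, s in enumerate(order_g)}
    seq = [rank[s] for s in order_f]

    # tails[k] = smallest possible last element of an increasing
    # subsequence of length k + 1 seen so far.
    tails = []
    for x in seq:
        pos = bisect_left(tails, x)
        if pos == len(tails):
            tails.append(x)
        else:
            tails[pos] = x
    return len(seq) - len(tails)


f_order = ["u", "v", "w", "a", "b", "c"]
g_order = ["u", "v", "w", "b", "c", "a"]
d = minimal_schedule_size(f_order, g_order)  # d == 1: one move suffices
```

Comparing with the two adjacent transpositions needed for the same pair of orders shows, on a tiny scale, the coarsening that move schedules aim for.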

Outline: The paper is organized as follows: we review and establish the notations we will use to describe simplicial complexes, persistent homology, and dynamic persistence in Sect. 2. We also cover the reduction algorithm (designated here as pHcol), the vineyards algorithm, and the set of move-related algorithms introduced in Busaryev et al. (2010), which serves as the starting point of this work. In Sect. 3 we introduce move schedules and provide efficient algorithms to construct them. In Sect. 4 we present applications of the proposed method, including the computation of Crocker stacks from flock simulations and of a 2-dimensional persistence invariant on a data set of image patches derived from natural images. In Sect. 5 we conclude the paper by discussing other possible applications and future work.

2 Background

Suppose one has a family \(\{K_i\}_{i\in I}\) of simplicial complexes indexed by a totally ordered set I, and so that for any \(i< j \in I\) we have \(K_i \subseteq K_j\). Such a family is called a filtration, which is deemed simplexwise if \(K_j \smallsetminus K_i = \{\sigma _j\}\) whenever j is the immediate successor of i in I. Any finite filtration may be trivially converted into a simplexwise filtration via a set of condensing, refining, and reindexing maps (see Bauer 2021 for more details). Equivalently, a filtration can also be defined as a pair (K, f) where K is a simplicial complex and \(f: K \rightarrow I\) is a filter function satisfying \(f(\tau ) \le f(\sigma )\) in I, whenever \(\tau \subseteq \sigma \) in K. In this setting, \(K_i = \{ \, \sigma \in K: f(\sigma ) \le i \, \}\). Here, we consider two index sets: \([m] = \{ 1, \dots , m\}\) and \({\mathbb {R}}\). Without loss of generality, we exclusively consider simplexwise filtrations, but for brevity's sake refer to them simply as filtrations.

Let K be an abstract simplicial complex and \({\mathbb {F}}\) a field. A p-chain is a formal \({\mathbb {F}}\)-linear combination of p-simplices of K. The collection of p-chains under addition yields an \({\mathbb {F}}\)-vector space denoted \(C_p(K)\). The p-boundary \(\partial _p(\sigma )\) of a p-simplex \(\sigma \in K\) is the alternating sum of its oriented co-dimension 1 faces, and the p-boundary of a p-chain is defined linearly in terms of its constitutive simplices. A p-chain with zero boundary is called a p-cycle, and together they form \(Z_p(K) = \textrm{Ker}\,\partial _p\). Similarly, the collection of p-boundaries forms \(B_p(K) = \textrm{Im}\,\partial _{p+1}\). Since \(\partial _p \circ \partial _{p+1} = 0\) for all \(p\ge 0\), the quotient space \(H_p(K) = Z_p(K) / B_{p}(K)\) is well-defined, and called the p-th homology of K with coefficients in \({\mathbb {F}}\). If \( f: K \rightarrow [m]\) is a filtration, then the inclusion maps \(K_i\subseteq K_{i+1}\) induce linear transformations at the level of homology:

\[ H_p(K_1) \rightarrow H_p(K_2) \rightarrow \cdots \rightarrow H_p(K_m) = H_p(K) \]

Simplices whose inclusion in the filtration creates a new homology class are called creators, and simplices that destroy homology classes are called destroyers. The filtration indices of these creators/destroyers are referred to as birth and death times, respectively. The collection of birth/death pairs (i, j) is denoted \(\textrm{dgm}_p(K,f )\), and referred to as the p-th persistence diagram of (K, f). If a homology class is born at \(K_i\) and dies entering \(K_j\), the difference \(|i - j |\) is called the persistence of that class. In practice, filtrations often arise from triangulations parameterized by geometric scaling parameters, and the “persistence” of a homology class actually refers to its lifetime with respect to the scaling parameter.

Let \({\mathbb {X}}\) be a triangulable topological space; that is, so that there exists an abstract simplicial complex K whose geometric realization is homeomorphic to \({\mathbb {X}}\). Let \(f: {\mathbb {X}} \rightarrow {\mathbb {R}}\) be continuous and write \({\mathbb {X}}_a = f^{-1}(-\infty , a]\) to denote the sublevel sets of \({\mathbb {X}}\) defined by the value a. A homological critical value of f is any value \(a \in {\mathbb {R}}\) such that the homology of the sublevel sets of f changes at a, i.e. if for some p the inclusion-induced homomorphism \(H_p({\mathbb {X}}_{a - \epsilon }) \rightarrow H_p({\mathbb {X}}_{a+\epsilon })\) is not an isomorphism for any small enough \(\epsilon >0\). If there are only finitely many of these homological critical values, then f is said to be tame. The concept of homological critical points and tameness will be revisited in Sect. 2.2.

2.1 The reduction algorithm

In this section, we briefly recount the original reduction algorithm introduced in Zomorodian and Carlsson (2005), also sometimes called the standard algorithm or more explicitly pHcol (De Silva et al. 2011). The pseudocode is outlined in Algorithm 4 in the appendix. Without optimizations, like clearing or implicit matrix reduction, the standard algorithm is very inefficient. Nonetheless, it serves as the foundation of most persistent homology implementations, and its invariants are necessary before introducing both vineyards in Sect. 2.2 and our move schedules in Sect. 3.

Given a filtration (K, f) with m simplices, the output of the reduction algorithm is a matrix decomposition \(R = D V\), where the persistence diagrams are encoded in R and the generating cycles in the columns of V. To begin the reduction, one first assembles the elementary boundary chains \(\partial (\sigma )\) as columns ordered according to f into an \(m \times m\) filtration boundary matrix D. Setting \(V = I\) and \(R = D\), one proceeds by performing elementary left-to-right column operations on V and R until the following invariants are satisfied:

Decomposition Invariants:

- I1. \(R = D V\) where D is the boundary matrix of the filtration (K, f)
- I2. V is full-rank upper-triangular
- I3. R is reduced: if \(\textrm{col}_i(R) \ne 0\) and \(\textrm{col}_j(R) \ne 0\), then \(\textrm{low}_R(i) \ne \textrm{low}_R(j)\)

where \(\textrm{low}_R(i)\) denotes the largest row index of a non-zero entry in column i of R. We call the decomposition satisfying these three invariants valid. The persistence diagrams of the corresponding filtration can be determined from the lowest entries in R, once it has been reduced. Note that though R and V are not unique, the persistent pairing is unique (Zomorodian and Carlsson 2005).

It is at times more succinct to restrict to specific sub-matrices of D based on the homology dimension p, and so we write \(D_p\) to represent the \(d_{p-1} \times d_p\) matrix representing \(\partial _p\) (the same notation is extended to R and V). We illustrate the reduction algorithm with an example below.

Example 2.1

Consider a triangle with vertices u, v, w, edges \(a = (u,w)\), \(b = (v,w)\), \(c = (u, v)\), and whose filtration order is given as (u, v, w, a, b, c). Using \({\mathbb {Z}}_2\) coefficients, the reduction proceeds to compute \((R_1,V_1)\) as follows:

Since column c in \(R_1\) is 0, the 1-chain indicated by the column c in \(V_1\) represents a dimension 1 cycle. Similarly, the columns at u, v, w in \(R_0\) (not shown) are all zero, indicating three 0-dimensional homology classes are born, two of which are killed by the pivot entries in columns a and b in \(R_1\).
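The reduction in this example can be replayed with a short sketch of the standard left-to-right column reduction over \({\mathbb {Z}}_2\). It is not the paper's Algorithm 4 verbatim: columns are stored as Python sets of row indices, the full \(6 \times 6\) boundary matrix is reduced rather than the block \((R_1, V_1)\), and the names `low` and `reduce_boundary` are illustrative choices.

```python
def low(col):
    """Largest row index of a nonzero entry, or -1 for a zero column."""
    return max(col) if col else -1


def reduce_boundary(D):
    """Left-to-right column reduction over Z_2; returns (R, V) with R = D V."""
    m = len(D)
    R = [set(c) for c in D]
    V = [{j} for j in range(m)]  # identity matrix
    pivot_owner = {}             # pivot row index -> column owning that pivot
    for j in range(m):
        while R[j] and low(R[j]) in pivot_owner:
            i = pivot_owner[low(R[j])]
            R[j] ^= R[i]         # add column i to column j (mod 2)
            V[j] ^= V[i]
        if R[j]:
            pivot_owner[low(R[j])] = j
    return R, V


# Filtration order (u, v, w, a, b, c) mapped to indices 0..5,
# with a = (u, w), b = (v, w), c = (u, v).
D = [set(), set(), set(), {0, 2}, {1, 2}, {0, 1}]
R, V = reduce_boundary(D)

# (birth index, death index) pairs read off from the pivots of R.
pairs = sorted((low(R[j]), j) for j in range(len(R)) if R[j])
```

Here column c of R ends up empty while column c of V holds a + b + c, and the two pivots pair v with b and w with a, in line with the discussion above.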

Inspection of the reduction algorithm from Edelsbrunner et al. (2000) suggests that a loose upper bound for the reduction is \(O(m^3)\), where m is the number of simplices of the filtration. Despite this high algorithmic complexity, many variations and optimizations to Algorithm 4 have been proposed over the past decade, see (Bauer 2021; Bauer et al. 2017; Chen and Kerber 2011) for an overview.

2.2 Vineyards

Consider a homotopy \(F(x,t): {\mathbb {X}} \times [0,1] \rightarrow {\mathbb {R}}\) on a triangulable topological space \({\mathbb {X}}\), and denote its “snapshot” at a given time-point t by \(f_t(x) = F(x,t)\). The snapshot \(f_0\) denotes the initial function at time \(t = 0\) and \(f_1\) denotes the function at the last time step. As t varies in [0, 1], the points in \(\textrm{dgm}_p(f_t)\) trace curves in \({\mathbb {R}}^3\) which, by the stability of persistence, will be continuous if F is continuous and the \(f_t\)’s are tame. Cohen-Steiner et al. (2007) referred to these curves as vines, a collection of which forms a vineyard. The geometric analogy is meant to act as a guidepost for practitioners seeking to understand the evolution of topological structure over time.

The original purpose of vineyards, as described in Cohen-Steiner et al. (2006), was to compute a continuous 1-parameter family of persistence diagrams over a time-varying filtration, detecting homological critical events along the way. As homological critical events only occur when the filtration order changes, detecting all such events may be reduced to computing valid decompositions at time points interleaving all changes in the filtration order. For simplexwise filtrations, these changes manifest as transpositions of adjacent simplices, and thus any fixed set of rules that maintains a valid \(R = D V\) decomposition under adjacent column transpositions is sufficient to compute persistence dynamically.

To ensure a decomposition is valid, these rules prescribe certain column and row operations to apply to a given matrix decomposition either before, during, or after each transposition. Formally, let \(S_{i}^j\) represent the upper-triangular matrix such that \(A S_{i}^j\) results in adding column i of A to column j of A, and let \(S_{i}^j A\) be the same operation on rows i and j. Similarly, let P denote the matrix so that \(A P^T\) permutes the columns of A and PA permutes the rows. Since the columns of P are orthonormal, \(P^{-1} = P^T\), and so \(P A P^T\) performs the same permutation to both the columns and rows of A. In the special case where P represents a transposition, we have \(P = P^T\) and may instead simply write PAP. The goal of the vineyards algorithm can now be described explicitly: to prescribe a set of rules, written as matrices \(S_{i}^{j}\), such that if \(R = D V\) is a valid decomposition, then \((*P *R *P *) = (PDP)(*P*V *P *)\) is also a valid decomposition, where \(*\) is some number (possibly zero) of matrices encoding elementary column or row operations.

Example 2.2

To illustrate the basic principles of vineyards, we re-use the running example introduced in the previous section. Below, we illustrate the case of exchanging simplices a and b in the filtration order, and restoring \(R = DV\) to a valid decomposition.

Starting with a valid reduction \(R = DV\) and prior to performing the exchange, observe that the highlighted entry in \(V_1\) would render \(V_1\) non-upper triangular after the exchange. This entry is removed by a left-to-right column operation, given by applying \(S_1^{2}\) on the right to \(R_1\) and \(V_1\). After this operation, the permutation may be safely applied to \(V_1\). Both before and after the permutation P, \(R_1\) is rendered non-reduced, requiring another column operation to restore the decomposition to a valid state.
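The three steps described above can be replayed on the block \((R_1, V_1)\) of the triangle example. This is a hand-unrolled sketch of this one exchange under stated assumptions, not a general implementation of the vineyards case analysis: columns are sets of row indices over \({\mathbb {Z}}_2\), the rows of \(D_1\) and \(R_1\) are the vertices u, v, w (indices 0, 1, 2) and are not permuted, and the helper names are hypothetical.

```python
def low(col):
    """Largest row index of a nonzero entry, or -1 for a zero column."""
    return max(col) if col else -1


def is_valid(R, D, V):
    """Check invariants I1-I3 for columns stored as sets of row indices."""
    for j in range(len(D)):
        acc = set()
        for k in V[j]:
            acc ^= D[k]
        if acc != R[j]:                      # I1: R = D V over Z_2
            return False
        if j not in V[j] or max(V[j]) > j:   # I2: V upper-triangular, unit diagonal
            return False
    lows = [low(c) for c in R if c]
    return len(lows) == len(set(lows))       # I3: R is reduced


def add_column(M, i, j):
    """Add column i to column j (right-multiplication by S_i^j)."""
    M[j] ^= M[i]


def transpose_adjacent(D, R, V, i):
    """Swap columns i, i+1 of D, R and V, and also rows i, i+1 of V."""
    for M in (D, R, V):
        M[i], M[i + 1] = M[i + 1], M[i]
    swap = {i: i + 1, i + 1: i}
    for k in range(len(V)):
        V[k] = {swap.get(r, r) for r in V[k]}


# Columns a, b, c are indexed 0, 1, 2.
D = [{0, 2}, {1, 2}, {0, 1}]
R = [{0, 2}, {0, 1}, set()]
V = [{0}, {0, 1}, {0, 1, 2}]
assert is_valid(R, D, V)

# 1. Clear the entry of V that would break upper-triangularity.
add_column(R, 0, 1)
add_column(V, 0, 1)
# 2. Exchange a and b; R now has two columns with the same pivot row.
transpose_adjacent(D, R, V, 0)
valid_after_swap = is_valid(R, D, V)
# 3. One more column operation restores a reduced R.
add_column(R, 0, 1)
add_column(V, 0, 1)
valid_at_end = is_valid(R, D, V)
```

In this run the decomposition is invalid right after the exchange and valid again after the final column operation, matching the narrative of the example.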

The time complexity of vineyards is determined entirely by the complexity of performing adjacent transpositions. Since column operations are the largest complexity operations needed and each column can have potentially O(m) entries, the complexity of vineyards is O(m) per transposition. Inspection of the individual cases of the algorithm from Cohen-Steiner et al. (2006) shows that any single transposition requires at most two such operations on both R and V. However, several factors can affect the runtime efficiency of the vineyards algorithm. On the positive side, as both the matrices R and V are often sparse, the cost of a given column operation is proportional to the number of non-zero entries in the two columns being modified. Moreover, as a rule of thumb, it has been observed that most transpositions require no column operations (Edelsbrunner et al. 2000). On the negative side, one needs to frequently query the non-zero status of various entries in R and V (consider evaluating e.g. Case 1.1 in Cohen-Steiner et al. (2006)), which accrues a non-trivial runtime cost due to the quadratic frequency with which they are required.

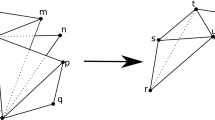

2.3 Moves

Originally developed to accelerate tracking generators with temporal coherence, Busaryev et al. (2010) introduced an extension of the vineyards algorithm which maintains an \(R = D V\) decomposition under move operations. A move operation \(\textrm{Move}(i,j)\) is a set of rules for maintaining a valid decomposition under the permutation P that moves a simplex \(\sigma _i\) at position i to position j. If \(j = i \pm 1\), this operation is an adjacent transposition, and in this sense moves generalizes vineyards. However, the move framework presented by Busaryev et al. is actually distinct in that it exhibits several attractive qualities not inherited by the vineyards approach that warrant further study.

For completeness, we recapitulate the motivation of the moves algorithm from Busaryev et al. (2010). Let \(f: K \rightarrow [m]\) denote a filtration of size \(m = |K |\) and \(R = DV\) its decomposition. Consider the permutation P that moves a simplex \(\sigma _i\) in K to position j, shifting all intermediate simplices \(\sigma _{i+1}, \dots , \sigma _{j}\) down by one (\(i < j\)). To perform this shift, all entries \(V_{ik} \ne 0\) with column positions \(k \in [i+1,j]\) need to be set to zero, otherwise PV is not upper-triangular. We may zero these entries in V using column operations \(V(*)\), ensuring invariant I2 (2.1) is maintained; however, these operations may render \(R' = P R(*) P^T\) unreduced, breaking invariant I3. Of course, we could then reduce \(R'\) with additional column operations, but the number of such operations scales as \(O(|i - j|^2)\), which is no more efficient than simply performing the permutation and applying the reduction algorithm to columns [i, j] in R.
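The upper-triangularity condition above can be checked on a small dense example. The following is a minimal sketch, assuming 0-indexed positions and a dense list-of-lists matrix; the helper names `blocking_columns`, `move_order`, `permute`, and `is_upper_triangular` are illustrative and do not come from Busaryev et al. (2010). It only identifies the entries that must be cleared; it does not perform the column operations that clear them.

```python
def blocking_columns(V, i, j):
    """Columns k in (i, j] with V[i][k] != 0 (0-indexed, i < j).

    These are the entries that would land below the diagonal once
    position i is moved to position j.
    """
    return [k for k in range(i + 1, j + 1) if V[i][k] != 0]


def move_order(m, i, j):
    """Ordering after moving position i to position j: order[new] = old."""
    order = list(range(m))
    order.insert(j, order.pop(i))
    return order


def permute(V, order):
    """Apply the same reordering to the rows and the columns of V."""
    return [[V[a][b] for b in order] for a in order]


def is_upper_triangular(V):
    """True if every entry strictly below the diagonal is zero."""
    return all(V[r][c] == 0 for r in range(len(V)) for c in range(r))
```

In this sketch, a matrix with a non-zero entry at row i and a column in (i, j] fails the triangularity check after the permutation, and passes it once that entry is zero.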

To bypass this difficulty, Busaryev et al. observed that since R is reduced, if it contains s pivot entries in the columns [i, j] of R, then \(R'\) must also have s pivots. Thus, if column operations render some pivot-column \(r_k\) of R unreduced, then its pivot entry \(\textrm{low}_R(k)\) becomes free (see Footnote 5): if \(r_{k}\) is copied prior to its modification, we may re-use or donate its pivot entry to a later column \(r_{k+1}, \dots , r_j\). Repeating this process at most \(j - i - 1\) times ensures \(R'\) stays reduced in all columns except possibly its i-th. Moreover, since the k-th such operation simultaneously sets \(v_{ik} = 0\), \(V'\) retains its upper-triangularity.

Example 2.3

We re-use the running example from Sect. 2.1 and 2.2 to illustrate moves. The donor columns of R and V are denoted as \(d_R\) and \(d_V\), respectively. Consider moving edge a to the position of edge c in the filtration.

Note that the equivalent permutation using vineyards requires 4 column operations on each of \(R_1\) and \(V_1\), whereas a single move operation accomplishes the same update using only 2 column operations per matrix. The pseudocode for MoveRight is given in Algorithm 1 and for MoveLeft in Algorithm 2.

Regarding the complexity of move operations, which clearly depends on the sparsity of R and V, we recall the proposition shown in Busaryev et al. (2010):

Proposition 1

(Busaryev et al. 2010) Given a filtration with n simplices of dimensions \(p-1\), p, and \(p+1\), let \(R = DV\) denote its associated decomposition. Then, the operation \(\textrm{MoveRight}(i,j)\) constructs a valid decomposition \(R' = D'V'\) in \(O((|{\mathbb {I}} |+ |{\mathbb {J}} |)n)\) time, where \({\mathbb {I}}, {\mathbb {J}}\) are given by:

Moreover, the quantity \(|{\mathbb {I}} |+ |{\mathbb {J}} |\) satisfies \(|{\mathbb {I}} |+ |{\mathbb {J}} |\le 2(j - i)\).

Though similar to vineyards, move operations confer additional advantages:

- M1: Querying the non-zero status of entries in R or V occurs once per move.

- M2: \(R = D V\) is not guaranteed to be valid during the movement of \(\sigma _i \) to \( \sigma _j\).

- M3: At most O(m) moves are needed to reindex \(f \mapsto f'\).

First, consider property M1. Prior to applying any permutation P to the decomposition, it is necessary to remove non-zero entries in V which render \(P^TVP\) non-upper triangular, to maintain invariant I2. Using vineyards, one must consistently perform \(|i - j |- 1\) non-zero status queries interleaved between repairing column operations. A move operation groups these status queries into a single pass prior to performing any modifying operations.

Property M2 implies that the decomposition is not fully maintained during the execution of RestoreRight and RestoreLeft below, which starkly contrasts the vineyards algorithm. In this way, we interpret move operations as making a tradeoff in granularity: whereas a sequence of adjacent transpositions \((i, i{+}1), (i{+}1, i{+}2), \dots , (j{-}1, j)\) generates \(|i - j |\) valid decompositions in vineyards, an equivalent move operation \(\textrm{Move}(i,j)\) generates only one. Indeed, Property M3 directly follows from this fact, as one may simply move each simplex \(\sigma \in K\) into its new order \(f'(\sigma )\) via insertion sort. Note that the number of valid decompositions produced by vineyards is bounded above by \(O(m^2)\), as each pair of simplices \(\sigma _i, \sigma _j \in K\) may switch its relative ordering at most once during the interpolation from f to \(f'\).

As shown by Example 2.3, moves can be cheaper than vineyards in terms of column operations. However, it is not clear that this is always the case upon inspection of Algorithm 1, as the usage of a donor column seemingly implies that many O(m) copy operations need to be performed. It turns out that we may handle all such operations except the first in O(1) time, which we formalize below.

Proposition 2

Let (K, f) denote a filtration of size \(|K |= m\) with decomposition \(R = D V\) and let T denote the number of column operations needed by vineyards to perform the sequence of transpositions:

where \(s_i\) denotes the transposition \((i,i+1)\), \(i < j\), and \(k = |i - j|\). Moreover, let M denote the number of column operations to perform the same update \(R \mapsto R'\) with \(\textrm{Move}(i,j)\). Then the inequality \(M \le T\) holds.

Proof

First, consider executing the vineyards algorithm with a given pair (i, j). As each transposition requires at most 2 column operations per matrix, any contiguous sequence of transpositions \((i,i+1), (i+1,i+2), \ldots (j-1,j)\) induces at most \(2(|i - j |)\) column operations in each of R and V, giving a total of \(4(|i - j |)\) column operations.

Now consider a single MoveRight(i,j) outlined in Algorithm 1. Here, the dominant cost is again the column operations (line 5). Though we need an extra O(m) storage allocation for the donor columns \(d_*\) prior to the movement, notice that assignment to and from \(d_*\) (lines (4), and (7) in RestoreLeft and MoveRight, respectively) requires just O(1) time via a pointer swapping argument. That is, when \(d_\textrm{low}' < d_\textrm{low}\), instead of copying \(\textrm{col}_*(k)\) to \(d_*'\) (which takes O(m) time), we swap their column pointers in O(1) time prior to column operations. After the movement, \(d_*\) contains the newly modified column and \(\textrm{col}_*(k)\) contains the unmodified donor \(d_*'\), so the final donor swap also requires O(1) time. Since at most one O(m) column operation is required for each index in [i, j], moving a column from i to j where \(i < j\) requires at most \(2(|i - j |)\) column operations for both R and V. The claimed inequality follows.

\(\square \)

As a final remark, we note that the combination of MoveRight and MoveLeft enables efficient simplex additions or deletions to the underlying complex. In particular, given K and a decomposition \(R = DV\), obtaining a valid decomposition \(R' = D' V'\) of \(K' = K \cup \{\sigma \}\) can be achieved by appending its requisite elementary chains to D and V, reducing them, and then executing MoveLeft\((m+1, i)\) with \(i = f'(\sigma )\). Dually, deleting a simplex \(\sigma _i\) may be achieved via MoveRight by moving the i-th simplex to the end of the decomposition and dropping the corresponding columns.

3 Our contribution: move schedules

We begin with a brief overview of the pipeline to compute the persistence diagrams of a discrete 1-parameter family \((f_1, f_2, \dots , f_n)\) of filtrations. Without loss of generality, assume each filtration \(f_i: K \rightarrow [m]\) is a simplexwise filtration of a fixed simplicial complex K with \(|K |=m\), and let \((f, f')\) denote any pair of such filtrations. Our strategy to efficiently obtain a valid RV decomposition of the filtration \((K,f')\) from a given decomposition of (K, f) is to decompose a fixed bijection \(\rho : [m] \rightarrow [m]\) satisfying \(f' = \rho \circ f\) into a schedule of updates:

Definition 1

(Schedule). Given a pair of filtrations \((K, f), (K,f')\) and \(R = DV\) the initial decomposition of (K, f), a schedule \({\mathcal {S}} = (s_1, s_2, \dots , s_d )\) is a sequence of permutations satisfying:

where, for each \(i \in [d]\), \(R_i = D_i V_i\) is a valid decomposition respecting invariants 2.1, and \(R'\) is a valid decomposition for \((K,f')\).

To produce this sequence of permutations \({\mathcal {S}} = (s_1, \dots , s_d)\) from \(\rho \), our approach is as follows: define \(q = (\rho (1), \rho (2), \ldots , \rho (m) )\). We compute a longest increasing subsequence \(\textrm{LIS}(q)\), and then use this subsequence to recover a longest common subsequence (LCS) between f and \(f'\), which we denote later with \(\textrm{LCS}(f, f')\). We pass q, \(\textrm{LIS}(q)\), and a “greedy” heuristic for picking moves h to our scheduling algorithm (to be defined later), which returns as output an ordered set of move permutations \({\mathcal {S}}\) of minimum size satisfying (5), that is, a schedule for the pair \((f, f')\). This sequence of steps is outlined in Algorithm 3 below.

Though using the LCS between a pair of permutations induced by simplexwise filtrations is a relatively intuitive way of producing a schedule of move-type decomposition updates, it is not immediately clear whether such an algorithm has any computational benefits compared to vineyards. Indeed, since moves is a generalization of vineyards, several important questions arise when considering practical aspects of how to implement Algorithm 3, such as e.g. how to pick a “good” heuristic h, how expensive can the \(\text {Schedule}\) algorithm be, or how \(|{\mathcal {S}}|\) scales with respect to the size of the complex K. We address these issues in the following sections.

3.1 Continuous setting

In the vineyards setting, a given homotopy \(F: K \times [0,1] \rightarrow {\mathbb {R}}\) continuously interpolating between (K, f) and \((K, f')\) is discretized into a set of critical events that alter the filtration order. As F determines the number of distinct filtrations encountered during the deformation from f to \(f'\), a natural question is whether such an interpolation can be modified so as to minimize \(|{\mathcal {S}}|\)—the number of times the decomposition is restored to a valid state. Towards explaining the phenomenon exhibited in Fig. 2, we begin by analyzing a class of interpolation schemes to establish an upper bound on this quantity.

Let \(F: K \times [0,1] \rightarrow {\mathbb {R}}\) be a homotopy of x-monotone curves (see Footnote 6) between the filtrations \(f,f': K \rightarrow [m]\) whose function \(t\mapsto F(\sigma , t)\) is continuous and satisfies \(f(\sigma ) = F(\sigma , 0)\) and \(f'(\sigma ) = F(\sigma , 1)\) for every \(\sigma \in K\). Note that this family includes the straight-line homotopy \(F(\sigma , t) = (1 - t) f(\sigma ) + t f'(\sigma )\), studied in the original vineyards paper (Cohen-Steiner et al. 2006). If we assume that each pair of curves \(\big (t, F(\cdot , t)\big ) \subset [0,1]\times {\mathbb {R}}\) intersect in at most one point, at which they cross, the continuity and genericity assumptions on F imply that for distinct \( \sigma ,\mu \in K\), the curves \(t \mapsto F(\sigma ,t)\) and \(t \mapsto F(\mu ,t)\) intersect if and only if \(f(\sigma ) > f(\mu )\) and \(f'(\sigma ) < f'(\mu )\), or \(f(\sigma ) < f(\mu )\) and \(f'(\sigma ) > f'(\mu )\). In other words, the number of crossings in F is exactly the Kendall-\(\tau \) distance (Diaconis and Graham 1977) between f and \(f'\):

\[ K_\tau (f, f') = \big |\{ (\sigma , \mu ) \in K \times K : f(\sigma ) < f(\mu ) \text { and } f'(\sigma ) > f'(\mu ) \} \big | \]
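The crossing count described above is the number of pairs of simplices ordered differently by the two filtrations, which can be computed by counting inversions. The following is a minimal sketch, assuming each filtration is given as a dictionary from simplex label to rank; the function name `kendall_tau` and this input format are assumptions made for illustration.

```python
def kendall_tau(f, g):
    """Number of pairs ordered differently by f and g (dicts: simplex -> rank)."""
    # Sort the simplices by f, read off their ranks under g, then count
    # inversions of that sequence with a merge sort in O(m log m) time.
    seq = [g[s] for s in sorted(f, key=f.get)]

    def count(a):
        if len(a) < 2:
            return a, 0
        mid = len(a) // 2
        left, x = count(a[:mid])
        right, y = count(a[mid:])
        merged, inv, i, j = [], x + y, 0, 0
        while i < len(left) and j < len(right):
            if left[i] <= right[j]:
                merged.append(left[i])
                i += 1
            else:
                # right[j] precedes every remaining element of left
                merged.append(right[j])
                j += 1
                inv += len(left) - i
        merged += left[i:] + right[j:]
        return merged, inv

    return count(seq)[1]
```

For a full reversal of m items this returns m(m-1)/2; with m = 417 that is 86,736.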

After slightly perturbing F if necessary, we can further assume that its crossings occur at \(k = K_\tau (f,f')\) distinct time points \(0< t_1< \cdots< t_k < 1\). Let \(t_0 = 0\), \(t_{k+1}= 1\) and fix \(a_i \in (t_{i}, t_{i + 1})\) for \(i=0, \ldots , k\). Then, the order in K induced by \(\sigma \mapsto F(\sigma , a_i)\) defines a filtration \(f_i: K \rightarrow [m]\) so that \(f_0 = f\), \(f_k = f'\) and \({\mathcal {F}} = (f_0, f_1,\ldots , f_k)\) is the ordered sequence of all distinct filtrations in the interpolation from f to \(f'\) via F.

The continuity of the curves \(t\mapsto F(\cdot , t)\) and the fact that \(t_i\) is the sole crossing time in the interval \((t_{i-1}, t_{i+1})\), imply that the permutation \(\rho _i \) transforming \(f_{i-1}\) into \(f_i\), i.e. so that \(f_i = \rho _i \circ f_{i-1}\), must be (in cycle notation) of the form \(\rho _i = (\ell _i \;\; \ell _i +1 )\) for \(1 \le \ell _i < m\). In other words, \(\rho _i\) is an adjacent transposition for each \(i =1,\ldots , k\). Observe that the size of the ordered sequence of adjacent transpositions \(S_F = (\rho _1, \rho _2, \ldots , \rho _k)\) defined from the homotopy F above is exactly \(K_\tau (f,f')\). On the positive side, the reduction of schedule planning to crossing detection implies the former can be solved optimally in output-sensitive \(O(m \log m + k)\) time by several algorithms (Boissonnat and Snoeyink 2000), where k is the output-sensitive term and m is the number of simplices in the filtration(s). On the negative side, \(k = K_\tau (f,f')\) scales in size to \(\sim O(m^2)\) in the worst case, achieved when \(f' = - f\). As mentioned in Sect. 2.2, this quadratic scaling induces a number of issues in practical implementations of the vineyards algorithm.

Remark 1

The grayscale image data example from Sect. 1.2 exhibits this quadratic scaling. Indeed, the Freudenthal triangulation of the \(9\times 9\) grid contains (81, 208, 128) simplices of dimensions (0, 1, 2), respectively. Therefore, \(m = 417\) and \(|S_F |\le \frac{1}{2}m(m-1) = 86,736\). As the homotopy given by the video is varied, \(\approx 70,\!000\) transpositions are generated, approaching the worst case upper bound due to the fact that \(f'\) is nearly the reverse of f.

If our goal is to decrease \(|S_F |\), one option is to coarsen \(S_F\) to a new schedule \({\widetilde{S}}_F\) by e.g. collapsing contiguous sequences of adjacent transpositions to moves, via the map \((i, i+1)(i+1, i+2)\cdots (j-1, j) \mapsto (j, i+1, \cdots , j-1, i)\) (if \(i < j\)). Clearly \(|{\widetilde{S}}_F |\le |S_F |\) and the associated coarsened \({\widetilde{S}}_F\) requires just O(m) time to compute. However, the coarsening depends entirely on the initial choice of F and the quadratic upper bound remains—it is always possible that there are no contiguous subsequences to collapse. This suggests one must either abandon the continuous setting or make stronger assumptions on F to have any hope of keeping \(|S_F |\sim O(m)\) in size.

3.2 Discrete setting

Contrasting the continuous-time setting, if we discard the use of a homotopy interpolation and allow move operations in any order, we obtain a trivial upper bound of O(m) on the schedule size: simply move each simplex in K from its position in the filtration given by f to the position given by \(f'\). We call this the naive strategy. However, in losing the interpolation interpretation, it is no longer clear whether the O(m) bound is tight. Indeed, the “intermediate” filtrations need no longer even respect the face poset of the underlying complex K. In this section, we investigate these issues from a combinatorial perspective.

Let \(S_m\) denote the symmetric group. Given two fixed permutations \(p, q \in S_m\) and a set of allowable permutations \(\Sigma \subseteq S_m\), a common problem is to find a sequence of permutations \(s_1, s_2, \dots , s_d \in \Sigma \) whose composition satisfies:

Common variations of this problem include finding such a sequence of minimal length (d) and bounding the length d as a function of m. In the latter case, the largest lower bound on d is referred to as the distance between p and q with respect to \(\Sigma \). A sequence \(S = (s_1, s_2, \dots , s_d)\) of operations \(s \in \Sigma \subseteq S_m\) mapping \(p \mapsto q\) is sometimes called a sorting of p. When p, q are interpreted as strings, these operations \(s \in \Sigma \) are called edit operations. The minimal number of edit operations \(d_\Sigma (p, q)\) needed to sort \(p \mapsto q\) with respect to \(\Sigma \) is referred to as the edit distance (Bergroth et al. 2000) between p and q. We denote the space of sequences transforming \(p \mapsto q\) using d permutations in \(\Sigma \subseteq S_m\) with \(\Phi _\Sigma (p,q,d)\). Note the choice of \(\Sigma \) defines the type of distance being measured—otherwise if \(\Sigma = S_m\), then \(d_\Sigma (p, q) = 1\) trivially for any \(p\ne q \in S_m\).

Perhaps surprisingly, small changes to the set of allowable edit operations \(\Sigma \) dramatically affect both the size of \(d_\Sigma (p,q)\) and the difficulty of obtaining a minimal sorting. For example, while sorting by transpositions and reversals is NP-hard and the complexity of sorting by prefix transpositions is unknown, there are polynomial time algorithms for sorting by block interchanges, exchanges, and prefix exchanges (Labarre 2013). Sorting by adjacent transpositions can be achieved in many ways: any sorting algorithm that exchanges two adjacent elements during its execution (e.g. bubble sort, insertion sort) yields a sorting of size \(K_\tau (p, q)\).

Here we consider sorting by moves. Using permutations, a move operation \(m_{ij}\) that moves i to j in [m], for \(i < j\), corresponds to the circular rotation:

In cycle notation, this corresponds to the cyclic permutation \(m_{ij} = (i \;\; j \;\; j{-}1 \;\; \dots \;\; i{+}2 \;\; i{+}1)\). Observe that a move operation can be interpreted as a paired delete-and-insert operation, i.e. \(m_{ij} = (\textrm{ins}_j \circ \textrm{del}_i)\), where \(\textrm{del}_i\) denotes the operation that deletes the character at position i and \(\textrm{ins}_j\) the operation that inserts the same character at position j. Thus, sorting by move operations can be interpreted as finding a minimal sequence of edits where the only operations allowed are (paired) insertions and deletions; this is exactly the well-known Longest Common Subsequence (LCS) distance. Between strings p, q of sizes m and n, the LCS distance is given by (Bergroth et al. 2000):

\[ d_{\textrm{LCS}}(p, q) = m + n - 2 |\textrm{LCS}(p, q) | \]
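The delete-and-insert reading of a move is easy to state on sequences. The following is a minimal sketch, assuming 0-indexed positions; the names `move` and `adjacent_swaps` are illustrative. It also shows that, for \(i < j\), the same result is obtained by the chain of adjacent transpositions \((i, i+1), \dots , (j-1, j)\).

```python
def move(seq, i, j):
    """Return a copy of seq with the element at position i moved to position j."""
    out = list(seq)
    out.insert(j, out.pop(i))  # ins_j applied after del_i
    return out


def adjacent_swaps(seq, i, j):
    """Same result for i < j, using adjacent transpositions only."""
    out = list(seq)
    for k in range(i, j):
        out[k], out[k + 1] = out[k + 1], out[k]
    return out
```

A move from i to j is undone by the move from j back to i, so the set of moves is closed under inverses.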

With this insight in mind, we obtain the following bound on the minimum size of a sorting (i.e. schedule) using moves and the complexity of computing it.

Proposition 3

(Schedule Size). Let \((K, f), (K, f')\) denote two filtrations of size \(|K |= m\). Then, the smallest move schedule \(S^*\) re-indexing \(f \mapsto f'\) has size:

\[ |S^* |= d = m - |\textrm{LCS}(f, f') | \]

where we use \(\textrm{LCS}(f, f')\) to denote the LCS of the permutations of K induced by f and \(f'\).

Proof

Recall our definition of edit distance given above, depending on the choice \(\Sigma \subseteq S_m\) of allowable edit operations, and that in order for any edit distance to be symmetric, if \(s \in \Sigma \) then \(s^{-1} \in \Sigma \). This implies that \(d_\Sigma (p,q) = d_\Sigma (p^{-1}, q)\) for any choice of \(p,q \in S_m\). Moreover, edit distances are left-invariant, i.e.

Conceptually, left-invariance implies that the edit distance between any pair of permutations p, q is invariant under an arbitrary relabeling of p, q, as long as the relabeling is consistent. Thus, the following identity always holds:

where \(\iota = [m]\), the identity permutation. Suppose we are given two permutations \(p, q \in S_m\) and we seek to compute \(\textrm{LCS}(p, q)\). Consider the permutation \(p' = q^{-1} \circ p\). Since the LCS distance is a valid edit distance, if \(|\textrm{LCS}(p, q) |= k\), then \(|\textrm{LCS}(p', \iota ) |= k\) as well. Notice that \(\iota \) is strictly increasing, and that any common subsequence \(p'\) has with \(\iota \) must also be strictly increasing, so \(\textrm{LCS}(p', \iota )\) is a longest increasing subsequence of \(p'\). The optimality of d follows from the optimality of the well-studied LCS problem (Kumar and Rangan 1987). \(\square \)

For any pair of general string inputs of sizes n and m, respectively, the LCS between them is computable in O(mn) with dynamic programming, and there is substantial evidence that the complexity cannot be much lower than this (Abboud et al. 2015). In our setting, however, we interpret the simplexwise filtrations \((K, f), (K, f')\) as permutations of the same underlying complex K—in this setting, \(d_{\textrm{LCS}}\) reduces further to the permutation edit distance problem. This special type of edit distance has additional structure to it, which we demonstrate below.

Corollary 1

Let \((K, f), (K, f')\) denote two filtrations of size \(|K |= m\), and let \(S^*\) denote a schedule of minimal size re-indexing \(f \mapsto f'\). Then \(|S^*|= d\) can be determined in \(O(m \log \log m)\) time.

Proof

By the same reduction from Proposition 3, the problem of computing \(\textrm{LCS}(f, f')\) reduces to the problem of computing the longest increasing subsequence (LIS) of a particular permutation \(p' \in S_m\), which can be done in \(O( m \log \log m)\) time using van Emde Boas trees (Bespamyatnikh and Segal 2000). \(\square \)
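The reduction from LCS to LIS can be sketched in a few lines. The version below is an assumption-laden illustration rather than the algorithm cited above: it uses binary search (patience sorting) and therefore runs in \(O(m \log m)\) time, not the \(O(m \log \log m)\) achieved with van Emde Boas trees. The names `lis` and `lcs_of_permutations` are illustrative.

```python
from bisect import bisect_left


def lis(seq):
    """One longest strictly increasing subsequence of seq, in O(m log m)."""
    tails, idx, prev = [], [], [None] * len(seq)
    for k, x in enumerate(seq):
        pos = bisect_left(tails, x)
        # Link x to the end of the best subsequence of length pos.
        prev[k] = idx[pos - 1] if pos > 0 else None
        if pos == len(tails):
            tails.append(x)
            idx.append(k)
        else:
            tails[pos], idx[pos] = x, k
    out = []
    k = idx[-1] if idx else None
    while k is not None:
        out.append(seq[k])
        k = prev[k]
    return out[::-1]


def lcs_of_permutations(p, q):
    """LCS of two orderings p, q of the same distinct items, via LIS."""
    rank_in_q = {s: r for r, s in enumerate(q)}
    relabeled = [rank_in_q[s] for s in p]  # plays the role of q^{-1} o p
    return [q[r] for r in lis(relabeled)]
```

Relabeling p by ranks in q turns q into the identity, so every common subsequence becomes an increasing subsequence of the relabeled sequence.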

Reduced complexity is not the only immediate benefit from Proposition 3; by the same reduction to the LIS problem, we also obtain a bound on the size of \({\mathcal {S}}\) in expectation.

Corollary 2

If \((K, f), (K,f')\) are random filtrations of a common complex K of size m, then the expected size of a longest common subsequence \(\textrm{LCS}(f, f')\) between \(f,f'\) is no larger than \(m - \sqrt{m}\), with probability 1 as \(m \rightarrow \infty \).

Proof

The proof of this result reduces to determining the average length of the LIS for random permutations. Let \(L(p) \in [1,m]\) denote the maximal length of an increasing subsequence of \(p \in S_m\). The essential quantity is the expected length of L(p) over all permutations:

A large body of work dating back at least 50 years has focused on estimating this quantity, which is sometimes called the Ulam-Hammersley problem. Seminal work by Baik et al. (1999) established that as \(m \rightarrow \infty \):

where \(c = -1.77108...\). Moreover, letting \(m \rightarrow \infty \), we have:

Thus, if \(p \in S_m\) denotes a uniformly random permutation in \(S_m\), then \(L(p)/\sqrt{m} \rightarrow 2\) in probability as \(m \rightarrow \infty \). Using the reduction from above to show that \(\textrm{LCS}(p,q) \Leftrightarrow \textrm{LIS}(p')\), the claimed bound follows. \(\square \)
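The \(2\sqrt{m}\) growth of the LIS can be observed empirically. The following is a minimal Monte Carlo sketch, assuming uniformly random permutations generated with Python's `random` module; the function names, the number of trials, and the seed are arbitrary illustrative choices.

```python
import random
from bisect import bisect_left
from math import sqrt


def lis_length(seq):
    """Length of a longest strictly increasing subsequence of seq."""
    tails = []
    for x in seq:
        pos = bisect_left(tails, x)
        if pos == len(tails):
            tails.append(x)
        else:
            tails[pos] = x
    return len(tails)


def mean_lis_length(m, trials=200, seed=0):
    """Average LIS length over `trials` uniformly random permutations of size m."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        p = list(range(m))
        rng.shuffle(p)
        total += lis_length(p)
    return total / trials


if __name__ == "__main__":
    m = 400
    print(mean_lis_length(m), 2 * sqrt(m))
```

For moderate m the empirical mean sits somewhat below \(2\sqrt{m}\), in line with the negative lower-order correction term quoted above.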

Remark 2

Note the quantity from Corollary 2 captures the size of \(S^*\) between pairs of uniformly sampled permutations, as opposed to uniformly sampled filtrations, which have more structure due to the face poset. However, Boissonnat and CS (2018) prove the number of distinct filtrations built from a k-dimensional simplicial complex K with m simplices and t distinct filtration values is at least \(\left\lfloor \frac{t+1}{k+1}\right\rfloor ^m\). Since this bound grows similarly to m! when \(t \sim O(m)\) and \(k \ll m\) is fixed, \(d \approx m - \sqrt{m}\) is not too pessimistic a bound between random filtrations.

In practice, when one has a time-varying filtration and the sampling points are relatively close [in time], the LCS between adjacent filtrations is expected to be much larger, shrinking d substantially. For example, for the complex from Sect. 1.2 with \(m=417\) simplices, the average size of the LCS across the 10 evenly spaced filtrations was 343, implying \(d \approx 70\) permutations needed on average to update the decomposition between adjacent time points.

We conclude this section with the main theorem of this effort: an output-sensitive bound on the computation of persistence dynamically.

Theorem 1

Given a pair of filtrations \((K,f), (K,f')\), a decomposition \(R = DV\) of K, and a sequence \({\mathcal {S}} = \left( s_1, s_2, \dots , s_d \right) \) of cyclic ‘move’ permutations \(s_k = (i_k, j_k)\) satisfying \(i_k < j_k\) for all \(k \in [d]\), computing the updates:

where \(R' = D_d V_d\) denotes a valid decomposition of \((K, f')\) requires \(O(\nu )\) column operations, where \(\nu \) depends on the sparsity of the intermediate entries \(V_1, V_2, \dots , V_d\) and \(R_1, R_2, \dots , R_d\):

Moreover, the size of a minimal such \({\mathcal {S}}\) can be determined in \(O(m \log \log m)\) time and O(m) space.

Proof

Proposition 3 yields the necessary conditions for constructing \({\mathcal {S}}\) with optimal size d in \(O(m \log \log m)\) time and O(m) space. The definition of \(\nu \) follows directly from Algorithm 1. \(\square \)

3.3 Constructing schedules

While it is clear from the proof in Proposition 3 that one may compute the LCS between two permutations \(p, q \in S_m\) in \(O(m \log \log m)\) time, it is not immediately clear how to obtain a sorting \(p \mapsto q\) from a given \({\mathcal {L}} = \textrm{LCS}(p,q)\) efficiently. We outline below a simple procedure which constructs such a sorting \({\mathcal {S}} = (s_1, \dots , s_d)\) in \(O(dm\log m)\) time and O(m) space, or \(O(m \log m)\) time and O(m) space per update in the online setting.

Recall that a sorting \({\mathcal {S}}\) with respect to two permutations \(p, q \in S_m\) is an ordered sequence of permutations \({\mathcal {S}} = (s_1, s_2, \dots , s_d)\) satisfying \(q = s_d \circ \dots \circ s_1 \circ p\). By definition, a subsequence in \({\mathcal {L}}\) common to both p and q satisfies:

where \(p^{-1}(\sigma )\) (resp. \(q^{-1}(\sigma )\)) denotes the position of \(\sigma \) in p (resp. q). Thus, obtaining a sorting \(p \mapsto q\) of size \(d = m - |{\mathcal {L}} |\) reduces to applying a sequence of moves in the complement of \({\mathcal {L}}\). Formally, we define a permutation \(s \in S_m\) as a valid operation with respect to a fixed pair \(p, q \in S_m\) if it satisfies:

The problem of constructing a sorting \({\mathcal {S}}\) of size d thus reduces to choosing a sequence of d valid moves, which we call a valid sorting. To do this efficiently, let \({\mathcal {U}}\) denote an ordered set-like data structure that supports the following operations on elements \(\sigma \in M\) from the set \(M = \{0, 1, \dots , m+1\}\):

1. \({\mathcal {U}} \cup \sigma \): inserts \(\sigma \) into \({\mathcal {U}}\),

2. \({\mathcal {U}} \setminus \sigma \): removes \(\sigma \) from \({\mathcal {U}}\),

3. \({\mathcal {U}}_{\textrm{succ}}(\sigma )\): obtains the successor of \(\sigma \) in \({\mathcal {U}}\), if it exists, otherwise returns \(m+1\),

4. \({\mathcal {U}}_{\textrm{pred}}(\sigma )\): obtains the predecessor of \(\sigma \) in \({\mathcal {U}}\), if it exists, otherwise returns 0.

Given \({\mathcal {U}}\), a valid sorting can be constructed by querying and maintaining information about the \(\textrm{LCS}\) in \({\mathcal {U}}\). To see this, suppose \({\mathcal {U}}\) contains the current \(\textrm{LCS}\) between two permutations p and q. By definition of the LCS, we have:

for every \(\sigma \in {\mathcal {U}}\). Now, suppose we choose some element \(\sigma \notin {\mathcal {U}}\) which we would like to add to the LCS. If \(p^{-1}(\sigma ) < p^{-1}({\mathcal {U}}_{\textrm{pred}}(\sigma ))\), then we must move \(\sigma \) to the right of its predecessor in p such that (13) holds. Similarly, if \(p^{-1}({\mathcal {U}}_{\textrm{succ}}(\sigma )) < p^{-1}(\sigma )\), then we must move \(\sigma \) left of its successor in p. Assuming the structure \({\mathcal {U}}\) supports all of the above operations in \(O(\log m)\) time, we easily deduce an \(O(d m\log m)\) algorithm for obtaining a valid sorting.
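The construction above can be sketched for the case where the target is the identity permutation. This is a simplified illustration under stated assumptions, not the paper's algorithm: a sorted Python list with `bisect` stands in for \({\mathcal {U}}\), positions are found by linear search, and every moved element is placed immediately after its predecessor in \({\mathcal {U}}\) (or at the front if it has none). The names `lis` and `schedule` are illustrative.

```python
from bisect import bisect_left, insort


def lis(seq):
    """One longest strictly increasing subsequence of seq."""
    tails, idx, prev = [], [], [None] * len(seq)
    for k, x in enumerate(seq):
        pos = bisect_left(tails, x)
        prev[k] = idx[pos - 1] if pos > 0 else None
        if pos == len(tails):
            tails.append(x)
            idx.append(k)
        else:
            tails[pos], idx[pos] = x, k
    out = []
    k = idx[-1] if idx else None
    while k is not None:
        out.append(seq[k])
        k = prev[k]
    return out[::-1]


def schedule(p):
    """Sort p (a permutation of 0..m-1) to the identity using m - |LIS| moves.

    Returns (moves, final_sequence); each move is (element, i, j), meaning
    the element at position i is deleted and re-inserted at position j.
    """
    seq = list(p)
    fixed = sorted(lis(seq))  # stand-in for U: elements already in order
    in_lis = set(fixed)
    moves = []
    for sigma in [s for s in p if s not in in_lis]:
        i = seq.index(sigma)
        seq.pop(i)
        k = bisect_left(fixed, sigma)  # fixed[k-1] is the predecessor in U
        j = seq.index(fixed[k - 1]) + 1 if k > 0 else 0
        seq.insert(j, sigma)
        insort(fixed, sigma)  # sigma now belongs to the common subsequence
        moves.append((sigma, i, j))
    return moves, seq
```

Because the elements of the stand-in for \({\mathcal {U}}\) always appear in increasing order, placing an element right after its predecessor keeps that property, so the sequence is sorted once every element has been added.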

3.4 Minimizing schedule cost

The algorithm outlined in Sect. 3.3 is sufficient for generating move schedules of minimal cardinality: any schedule of moves S sorting \(f \mapsto f'\) above is guaranteed to have size \(|S |= m - |\textrm{LCS}(f, f') |\), and the reduction to the permutation edit distance problem ensures this size is optimal. However, as with the vineyards algorithm, certain pairs of simplices cost more to exchange depending on whether they are critical pairs in the sense described in Cohen-Steiner et al. (2006), resulting in a large variability in the cost of randomly generated schedules. This variability is undesirable in practice: we would like to generate a schedule which is not only small in size, but also efficient in terms of its required column operations.

3.4.1 Greedy approach

Ideally, we would like to minimize the cost of a schedule \({\mathcal {S}} \in \Phi _\Sigma (p,q,d)\) directly, which recall is given by the number of non-zeros at certain entries in R and V:

where \(|{\mathbb {I}}|+ |{\mathbb {J}}|\) are the quantities from Proposition 1. Globally minimizing the objective (14) directly is difficult due to the changing sparsity of the intermediate matrices \(R_k, V_k\). One advantage of the moves framework is that the cost of a single move on a given \(R = D V\) decomposition can be determined efficiently prior to any column operations. Thus, it is natural to consider whether one could minimize (14) by greedily choosing the lowest cost move in each step. Unfortunately, this approach does not yield a minimal cost solution: we give a counter-example in the appendix (A.2) demonstrating that such a greedy procedure may lead to arbitrarily bad behavior.

3.4.2 Proxy objective

In light of Sect. 3.4.1, we seek an alternative objective that correlates with (14) and does not depend on the entries in the decomposition. Given a pair of filtrations \((K, f), (K,f')\), a natural schedule \({\mathcal {S}} \in \Phi (f,f',d)\) of cyclic permutations \((i_1, j_1), (i_2, j_2), \dots , (i_d, j_d)\) is one minimizing the upper bound:

Unfortunately, even obtaining an optimal schedule \({\mathcal {S}}^*\in \Phi (f,f',d)\) minimizing (15) does not appear tractable due to its similarity with the k-layer crossing minimization problem, which is NP-hard for k sets of permutations when \(k \ge 4\) (Biedl et al. 2009). For additional discussion on the relationship between these two problems, see Sect. A.3 in the appendix.

In light of the discussion above, we devise a proxy objective function based on the Spearman distance (Diaconis and Graham 1977) which we observed is both efficient to optimize and effective in practice. The Spearman footrule distance F(p, q) between two \(p,q \in S_m\) is an \(\ell _1\)-type distance for measuring permutation disarrangement:

Like \(K_\tau \), F forms a metric on \(S_m\) and is invariant under relabeling. Our interest in the footrule distance is motivated by the fact that F recovers \(\mathrm {{\widetilde{cost}}}({\mathcal {S}})\) when (p, q) differ by a cyclic permutation (i.e. \(F(p, m_{ij} \circ p) = 2 |i - j |\)) and by its usage on similar (see Footnote 7) combinatorial optimization problems. To adapt F to sortings, we decompose F additively via the bound:

where \({\hat{s}}_i = s_{i} \circ \cdots \circ s_2 \circ s_1\) denotes the composition of the first i permutations of a sorting \(S = (s_1, \dots , s_d)\) that maps \(p \mapsto \iota \) and \(s_0 = \iota \). As a heuristic to minimize (17), we greedily select the optimal \(k \in p \setminus \mathcal {L}\) at each step which minimizes the Spearman distance to the identity permutation:

Note that equality between F and \({\hat{F}}_{{\mathcal {S}}}\) (17) is achieved when the displacement of each \(\sigma \in p\) between its initial position in p to its value is non-increasing with every application of \(s_i\), which is in general not guaranteed using the schedule construction method in Sect. 3. To build intuition for how this heuristic interacts with Algorithm 5, we show a purely combinatorial example below.
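A naive form of this greedy selection can be sketched directly. The code below is not Algorithm 5 and makes several assumptions: the target is the identity, each candidate is placed immediately after its predecessor in the current common subsequence, ties are broken by order of appearance, and every candidate is evaluated by recomputing the footrule distance from scratch, which is far slower than the data structures discussed in Sect. 3.4.3. All function names are illustrative.

```python
from bisect import bisect_left, insort


def lis(seq):
    """One longest strictly increasing subsequence of seq."""
    tails, idx, prev = [], [], [None] * len(seq)
    for k, x in enumerate(seq):
        pos = bisect_left(tails, x)
        prev[k] = idx[pos - 1] if pos > 0 else None
        if pos == len(tails):
            tails.append(x)
            idx.append(k)
        else:
            tails[pos], idx[pos] = x, k
    out = []
    k = idx[-1] if idx else None
    while k is not None:
        out.append(seq[k])
        k = prev[k]
    return out[::-1]


def footrule_to_identity(seq):
    """Spearman footrule distance between seq and the identity."""
    return sum(abs(k - x) for k, x in enumerate(seq))


def apply_move(seq, fixed, sigma):
    """Move sigma to just after its predecessor among the sorted `fixed` elements."""
    out = list(seq)
    out.remove(sigma)
    k = bisect_left(fixed, sigma)
    j = out.index(fixed[k - 1]) + 1 if k > 0 else 0
    out.insert(j, sigma)
    return out


def greedy_schedule(p):
    """Greedily pick, at each step, the move giving the smallest footrule distance."""
    seq = list(p)
    fixed = sorted(lis(seq))
    in_lis = set(fixed)
    pending = [s for s in seq if s not in in_lis]
    order = []
    while pending:
        best = min(
            pending,
            key=lambda s: footrule_to_identity(apply_move(seq, fixed, s)),
        )
        seq = apply_move(seq, fixed, best)
        insort(fixed, best)
        pending.remove(best)
        order.append(best)
    return order, seq
```

The order in which elements are moved depends on the placement and tie-breaking assumptions above, so it need not coincide with the order produced by a different implementation of the same heuristic.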

Example

The permutation \(p \in S_m\) to sort to the identity \(p \mapsto \iota \) is given as a sequence (4, 2, 7, 1, 8, 6, 3, 5, 0) and a precomputed LIS \({\mathcal {L}} = (1, 3, 5)\), which is highlighted in red.

On the left, a table records permuted sequences \(\{ {\hat{s}}_i \circ p \}_{i=0}^d\), given on rows \(i \in \{0, 1, \dots , 6\}\), for the moved elements (7, 0, 8, 4, 6, 2) (highlighted in yellow). In the middle, we show the Spearman distance cost (18) associated with both each permuted sequence (left column) and with each candidate permutation \(m_{ij} \circ p\) (rows). Note that elements \(\sigma \in {\mathcal {L}}\) (red) induce identity permutations that do not modify the cost, and thus the minimization is restricted to elements \(\sigma \in p \setminus {\mathcal {L}}\) (black). The right plot shows the connection to the bipartite crossing minimization problem discussed in Sect. 3.4.1.

3.4.3 Heuristic computation

We now introduce an efficient \(O(d^2 \log m)\) algorithm for constructing a move schedule that greedily minimizes (17). The algorithm is purely combinatorial, and is motivated by the simplicity of updating the Spearman distance between sequences which differ by a cyclic permutation.

First, consider an array \({\mathcal {A}}\) of size m which provides O(1) access and modification, initialized with the signed displacement of every element in p to its corresponding position in q. In the case where \(q = \iota \), note that \({\mathcal {A}}(i) = i - p(i)\), so \(F(p, \iota )\) is the sum of the absolute values of the elements in \({\mathcal {A}}\). In other words, computing \(F(m_{ij} \circ p, \iota )\) in \(O(\log m)\) time corresponds to updating an array’s prefix sum under element insertions and deletions, which is easily solved by many data structures (e.g. segment trees).

Because the values of \({\mathcal {A}}\) are signed, the reduction to prefix sums is not exact: it is not immediately clear how to modify \(|i - j |\) elements in \(O(\log m)\) time. To address this, observe that at any point during the execution of Algorithm 5, a cyclic permutation changes each value of \({\mathcal {A}}\) in at most three different ways:

Thus, \({\mathcal {A}}\) may be partitioned into at most four contiguous intervals, on each of which the update is constant. Such updates are called range updates, and are known to require \(O(\log m)\) time using e.g. an implicit treap data structure (Blelloch and Reid-Miller 1998).
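The interval structure of the update can be checked with a small Python sketch. It uses plain list slicing, so each update costs \(O(|i - j|)\) rather than the \(O(\log m)\) achievable with a treap; the function names and the move convention (remove the element at index i, reinsert it at index j) are assumptions made for illustration.

```python
def move(seq, i, j):
    """Return a copy of seq with the element at index i moved to index j."""
    out = list(seq)
    out.insert(j, out.pop(i))
    return out


def displacements(seq):
    """A[i] = i - seq[i]: signed displacement of the element at index i from its target."""
    return [i - x for i, x in enumerate(seq)]


def move_displacements(A, i, j):
    """Update the displacement array for a move i -> j without rebuilding it from seq."""
    B = list(A)                                      # entries outside [i, j] are unchanged
    if i < j:
        B[i:j] = [a - 1 for a in A[i + 1:j + 1]]     # shifted one slot left, decremented by 1
    elif j < i:
        B[j + 1:i + 1] = [a + 1 for a in A[j:i]]     # shifted one slot right, incremented by 1
    B[j] = A[i] + (j - i)                            # the moved element changes by j - i
    return B


p = [4, 2, 7, 1, 8, 6, 3, 5, 0]
A = displacements(p)
print(sum(abs(a) for a in A))                        # 30, the footrule distance to the identity
```

The three branches correspond to the unchanged entries, the entries changed by \(\pm 1\), and the single entry of the moved element. Replacing the slicing with range updates on a balanced tree is what would bring each update down to logarithmic time.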

Since single element modifications, deletions, insertions, and constant range updates can all be achieved in \(O(\log m)\) expected time with such a data structure, we conclude that equation (18) may be solved in just \(O(d^2 \log m)\) time.

Example

We re-use the previous example of sorting the sequence \(p = (4, 2, 7, 1, 8, 6, 3, 5,0)\) to the identity \(\iota = [m]\) using a precomputed LIS \({\mathcal {L}} = (1, 3, 5)\). In the left table shown below, we display the same sorting as before, with elements chosen to move highlighted in yellow; the right table records the entries of \({\mathcal {A}}\).

The colors in the right table group the range updates: green values are unchanged between rows, blue values are modified (and shifted) by \(\pm 1\), and orange values are modified arbitrarily. As before, the ith entry of the column on the left side shows \(F({\hat{s}}_i \circ p, \iota )\). Small black lines show how the entries in \({\mathcal {A}}\) which change by \(\pm 1\) move.

4 Applications and experiments

4.1 Video data

A common application of persistence is characterizing topological structure in image data. Since a set of “snapshot” frames of a video can be equivalently thought of as a discrete 1-parameter family, our framework provides a natural extension of the typical image analysis to video data. To demonstrate the benefit of scheduling and the scalability of the greedy heuristic, we perform two performance tests on the video data from Sect. 1.2: one to test the impact of repairing the decomposition less often and one to measure the asymptotic behavior of the greedy approach.

In the first test, we fix a grid size of \(9 \times 9\) and record the cumulative number of column operations needed to compute persistence dynamically across 25 evenly-spaced time points using a variety of scheduling strategies. The three strategies we test are the greedy approach from Sect. 3.4.2, the “simple” approach which uses upwards of O(m) move permutations via selection sort, and a third strategy which interpolates between the two. To perform this interpolation, we use a parameter \(\alpha \in [0,1]\) to choose \(m - \alpha \cdot d\) random simplices to move using the same construction method outlined in Sect. 3.3. The results are summarized in the left graph of Fig. 3, wherein the mean schedule costs of the random strategies are depicted by solid lines. To capture the variation in performance, we run 10 independent iterations and shade the region between the upper and lower bounds of the schedule costs. As seen in Fig. 3, while using fewer move operations (lower \(\alpha \)) tends to reduce column operations, constructing random schedules of minimal size is no more competitive than the selection sort strategy. This suggests that efficient schedule construction needs to account for the structure of performing several permutations in sequence, as the greedy heuristic we introduced does, to yield an adequate performance boost.

In the second test, we aim to measure the asymptotics of our greedy LCS-based approach. To do this, we again generated 8 video data sets of the expanding annulus outlined in Sect. 1.2, with increasing grid sizes of \(5 \times 5\), \(6 \times 6\), \(\dots \), \(12 \times 12\). For each data set, we compute persistence over the duration of the video, again testing five evenly spaced settings of \(\alpha \in [0,1]\); the results are shown in the right plot of Fig. 3. On the vertical axis, we plot the total number of column operations needed to compute persistence across 25 evenly-spaced time points as a ratio of the data set size (m); we also show the regression curves one obtains for each setting of \(\alpha \). As Fig. 3 shows, the cost of using the greedy heuristic tends to increase sub-linearly as a function of the data set size, suggesting the move scheduling approach is indeed quite scalable. Moreover, schedules with minimal size tended to be cheaper than larger ones, supporting our initial hypothesis that repairing the decomposition less often can lead to substantial reductions at run-time.

4.2 Crocker stacks

There are many challenges to characterizing topological behavior in dynamic settings. One approach, the vineyards approach, is to trace out the curves constituting a continuous family of persistence diagrams in \({\mathbb {R}}^3\). However, this visualization can be cumbersome to work with, as there are potentially many such vines tangled together, making topological critical events with low persistence difficult to detect. Moreover, the vineyards visualization does not admit a natural simplification utilizing the stability properties of persistence, as individual vines are not stable: if two vines move near each other and then pull apart without touching, then a pairing in their corresponding persistence diagrams may cross under a small perturbation, signaling the presence of an erroneous topological critical event (Topaz et al. 2015; Xian et al. 2020).

Acknowledging this, Topaz et al. (2015) proposed the use of a 2-dimensional summary visualization, called a crocker plot (Footnote 8). In brief, a crocker plot is a contour plot of a family of Betti curves. Formally, given a filtration \(K = K_0 \subseteq K_1 \subseteq \dots \subseteq K_m\), a p-dimensional Betti curve \(\beta _p^{\bullet }\) is defined as the ordered sequence of p-th dimensional Betti numbers:

\[ \beta _p^{\bullet } = \left( \beta _p(K_0), \beta _p(K_1), \dots , \beta _p(K_m) \right) \]

Given a time-varying filtration \(K(\tau )\), a crocker plot displays changes to \(\beta _p^\bullet (\tau )\) as a function of \(\tau \). An example of a crocker plot generated from the simulation described below is given in Fig. 4. Since only the Betti numbers at each simplex in the filtration are needed to generate these Betti curves, the persistence diagram is not directly needed to generate a crocker plot; it is sufficient to use e.g. any of the specialized methods discussed in Sect. 1.1. This dependence only on the Betti numbers makes crocker plots easier to compute than standard persistence. However, what one gains in efficiency one loses in stability: it is known that Betti curves are inherently unstable with respect to small fluctuations about the diagonal of the persistence diagram.
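For concreteness, a Betti curve and a crocker plot can be sketched in Python as below. The sketch assumes, purely for illustration, that index-valued persistence intervals \([b, d)\) of a fixed dimension are already available at each time step (as noted above, the Betti numbers alone would suffice); `betti_curve` and `crocker_plot` are hypothetical names.

```python
INF = float("inf")


def betti_curve(intervals, m):
    """beta[k] = number of half-open intervals [birth, death) containing index k, for k = 0..m."""
    return [sum(1 for b, d in intervals if b <= k < d) for k in range(m + 1)]


def crocker_plot(diagrams, m):
    """One Betti curve per time step: rows index time, columns index the filtration."""
    return [betti_curve(dgm, m) for dgm in diagrams]


# Toy input: one essential class and two finite classes.
print(betti_curve([(0, INF), (1, 3), (2, 4)], 4))   # [1, 2, 3, 2, 1]
```

Contouring the resulting time-by-index matrix gives the crocker plot.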

Xian et al. (2020) showed that crocker plots may be smoothed to inherit the stability property of persistence diagrams and reduce noise in the visualization. That is, when applied to a time-varying persistence module \(M = \{M_t\}_{t \in [0, T]}\), an \(\alpha \)-smoothed crocker plot for \(\alpha \ge 0\) is the rank of the map \(M_t(\epsilon - \alpha ) \rightarrow M_t(\epsilon + \alpha )\) at time t and scale \(\epsilon \). For example, the standard crocker plot is a 0-smoothed crocker plot. Allowing all three parameters (\(t, \epsilon , \alpha \)) to vary continuously leads to a 3D visualization called an \(\alpha \)-smoothed crocker stack.

Definition 2

(crocker stack) A crocker stack is a family of \(\alpha \)-smoothed crocker plots which summarizes the topological information of a time-varying persistence module M via the function \(f_M: [0, T] \times [0, \infty ) \times [0, \infty ) \rightarrow {\mathbb {N}}\), where:

\[ f_M(t, \epsilon , \alpha ) = \mathrm {rank}\left( M_t(\epsilon - \alpha ) \rightarrow M_t(\epsilon + \alpha ) \right) \]

and \(f_M\) satisfies \(f_M(t,\epsilon ,\alpha ') \le f_M(t,\epsilon , \alpha )\) for all \(0 \le \alpha \le \alpha '\).
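Given the barcode at a fixed time t, the smoothed rank can be read off by counting the bars that span the whole window \([\epsilon - \alpha , \epsilon + \alpha ]\). The Python sketch below assumes half-open bars \([b, d)\) and uses the hypothetical names `smoothed_rank` and `crocker_stack`; it illustrates the definition and is not an efficient implementation.

```python
def smoothed_rank(intervals, eps, alpha):
    """Rank of M(eps - alpha) -> M(eps + alpha): bars [b, d) alive on the whole window."""
    return sum(1 for b, d in intervals if b <= eps - alpha and eps + alpha < d)


def crocker_stack(diagrams, eps_grid, alpha_grid):
    """Nested list indexed as stack[time][eps][alpha]."""
    return [[[smoothed_rank(dgm, e, a) for a in alpha_grid] for e in eps_grid]
            for dgm in diagrams]


bars = [(0.0, 4.0), (1.0, 3.0)]
print([smoothed_rank(bars, 2.0, a) for a in (0.0, 0.5, 1.0, 2.5)])   # [2, 2, 1, 0]
```

Widening the window can only exclude bars, which is the monotonicity in \(\alpha \) stated in the definition; with \(\alpha = 0\) the count reduces to the Betti number at scale \(\epsilon \).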

Note that, unlike crocker plots, applying this \(\alpha \) smoothing efficiently requires the persistence pairing. Indeed, it has been shown that crocker stacks and stacked persistence diagrams (i.e. vineyards) are equivalent to each other in the sense that either one contains the information needed to reconstruct the other (Xian et al. 2020). Thus, computing crocker stacks reduces to computing the persistence of a (time-varying) family of filtrations.

To illustrate the applicability of our method, we test the efficiency of computing these crocker stacks using a spatio-temporal data set. Specifically, we ran a flocking simulation similar to the one run in Topaz et al. (2015) with \(m = 20\) vertices moving around on the unit square equipped with periodic boundary conditions (i.e. \(S^1 \times S^1\)). We simulated movement by equipping the vertices with a simple set of rules which control how the individual vertices’ positions change over time. Such simulations are also called boid simulations, and they have been extensively used as models to describe how the evolution of collective behavior over time can be described by simple sets of rules. The simulation is initialized with every vertex positioned randomly in the space; the positions of vertices over time are updated according to a set of rules related to the vertices’ acceleration, distance to other vertices, etc. To get a sense of the time domain, we ran the simulation until a vertex made at least 5 rotations around the torus.

Given this time-evolving data set, we computed the persistence diagram of the Rips filtration up to \(\epsilon = 0.30\) at 60 evenly spaced time points using three approaches: the standard algorithm pHcol applied naively at each of the 60 time steps, the vineyards algorithm applied to a (linear) homotopy connecting filtrations adjacent in time, and our approach using moves. Figure 5 shows the cumulative number of O(m) column operations executed by the three approaches. Note again that vineyards requires generating many decompositions by design (in this case, \(\approx 1.8M\)). The standard algorithm pHcol and our move strategy were computed at 60 evenly spaced time points. As depicted in Fig. 5, our moves strategy is far more efficient than both the vineyards and the naive pHcol strategies.

On the left, the cumulative number of column operations (log-scale) of the three baseline approaches tested. On the right, the normalized \(K_\tau \) between adjacent filtrations depicts the coarseness of the discretization—about \(5\%\) of the \(\approx O(m^2)\) simplex pairs between adjacent filtrations are discordant
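The normalized \(K_\tau \) between two filtration orders is the fraction of simplex pairs whose relative order differs. A naive quadratic-time Python sketch is given below; the function name `normalized_kendall` and the string labels standing in for simplices are hypothetical.

```python
from itertools import combinations


def normalized_kendall(order_a, order_b):
    """Fraction of pairs ordered differently by two orderings of the same simplices."""
    rank_b = {s: i for i, s in enumerate(order_b)}
    pairs = list(combinations(order_a, 2))      # (x, y) with x before y in order_a
    discordant = sum(1 for x, y in pairs if rank_b[x] > rank_b[y])
    return discordant / len(pairs)


print(normalized_kendall(["a", "b", "c"], ["c", "b", "a"]))   # 1.0
```

A value near 0 indicates that adjacent filtrations are nearly identical, while a value of 1 means one order is the reverse of the other.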

4.3 Multiparameter persistence

Given a procedure to filter a space in multiple dimensions simultaneously, a multifiltration, the goal of multi-parameter persistence is to identify persistent features by examining the entire multifiltration. Such a generalization has appeared naturally in many application contexts, showing potential as a tool for exploratory data analysis (Lesnick 2012). Indeed, one of the drawbacks of persistence is its instability with respect to strong outliers, which can obscure the detection of significant topological structures (Buchet et al. 2015). One exemplary use case of multi-parameter persistence is to detect these strong outliers by filtering the data with respect to both the original filter function and density. In this section, we show the utility of scheduling with a real-world use case: detecting the presence of a low-dimensional topological space which well-approximates the distribution of natural images. As a quick outline, in what follows we briefly recall the fibered barcode invariant (Sect. 4.3.1), summarize its potential application to a particular data set with known topological structure (Sect. 4.3.2), and conclude with experiments demonstrating how scheduling enables such applications (Sect. 4.3.3).

4.3.1 Fibered barcode

Unfortunately, unlike the one-parameter case, there is no complete discrete invariant for multi-parameter persistence. Circumventing this, Lesnick and Wright (2015) associate a variety of incomplete invariants to 2-parameter persistence modules; we focus here on the fibered barcode invariant, defined as follows:

Definition 3

(Fibered barcode). The fibered barcode \({\mathcal {B}}(M)\) of a 2D persistence module M is the map which sends each line \(L \subset {\mathbb {R}}^2\) with non-negative slope to the barcode \({\mathcal {B}}_L(M)\):

Equivalently, \({\mathcal {B}}(M)\) is the 2-parameter family of barcodes given by restricting M to the set of affine lines with non-negative slope in \({\mathbb {R}}^2\).

Although an intuitive invariant, it is not clear how one might go about computing \({\mathcal {B}}(M)\) efficiently. One obvious choice is to fix L via a linear combination of two filter functions, restrict M to L, and compute the associated 1-parameter barcode. However, this is an \(O(m^3)\) time computation, which is prohibitive for interactive data analysis purposes.
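One way to picture the restriction step is to order the simplices by a convex combination of the two filter functions. The Python sketch below is a minimal illustration under assumptions not stated in the text: simplices are represented as vertex tuples, the filter values `f1` and `f2` are dictionaries that are monotone with respect to the face relation, the weight `lam` in [0, 1] stands in for the choice of line, and ties are broken by dimension so that faces precede cofaces. The name `line_filtration` is hypothetical.

```python
def line_filtration(simplices, f1, f2, lam):
    """Order simplices by lam * f1 + (1 - lam) * f2, breaking ties by simplex dimension."""
    def key(s):
        return (lam * f1[s] + (1 - lam) * f2[s], len(s))
    return sorted(simplices, key=key)


simplices = [(0,), (1,), (0, 1)]
f1 = {(0,): 0, (1,): 1, (0, 1): 1}
f2 = {(0,): 2, (1,): 0, (0, 1): 2}
print(line_filtration(simplices, f1, f2, 1))   # [(0,), (1,), (0, 1)]
print(line_filtration(simplices, f1, f2, 0))   # [(1,), (0,), (0, 1)]
```

Each weight yields a 1-parameter filtration order whose barcode can then be computed with the standard reduction; two different weights give orders that differ by a permutation of the simplices.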