Abstract

Feature extraction plays an important role in pattern recognition, band-to-band registration, and geometric correction of satellite images that have linear image distortion. However, images from the new near-equatorial orbital satellite system (NEqO) are different because they have nonlinear distortion. Conventional techniques cannot overcome this type of distortion and lead to the extraction of false features and incorrect image matching. This research presents a new method that improves the performance of the Scale-Invariant Feature Transform (SIFT), yielding a significantly higher rate of true extracted features and correct matches. The data in this study were obtained from the RazakSAT satellite and cover a part of Penang state, Malaysia. The method consists of several stages: image band selection, image band compression, image sharpening, automatic feature extraction, and application of the sum of absolute difference algorithm with an experimental and empirical threshold. We evaluate the refined features by comparing the original extracted SIFT features with the corresponding features of the proposed method. The results indicate that the proposed method accurately and precisely removes the false SIFT extracted features from the satellite images and retains only the true ones. Over three experiments, this reduces the number of extracted features (1) from 2000 to 750, 552, and 92 for the green and red bands, (2) from 678 extracted control points (CPs) to 193, 228, and 73 between the green and blue bands, and (3) from 1995 extracted CPs to 656, 733, and 556 between the green and near-infrared bands, respectively.

Introduction

Feature extraction from remotely sensed datasets is an important step in many remote sensing applications, such as band-to-band registration, geometric correction, and image normalization. It has therefore received considerable attention [1]. Over the past decades, a considerable number of studies have proposed local feature extraction techniques, such as the SIFT algorithm [1, 2]. Other methods include Speeded Up Robust Features (SURF) by Bay et al. [2] and the Gradient Location and Orientation Histogram (GLOH) described by Mikolajczyk and Schmid [3].

Image matching

Image matching is a key aspect of many problems in remote sensing and computer vision, including object recognition, band registration, motion tracking, and geometric correction of images [1]. Lowe [4] described image features whose properties make them suitable for matching different images of an object. These features are invariant to image rotation and scaling and partially invariant to changes in viewpoint and illumination. They are well localized in both the frequency and spatial domains, which reduces the probability of disruption by clutter, occlusion, or noise. Large numbers of features can be extracted from different satellite images by using efficient approaches.

Ground control points

Ground control points (GCPs) are ground-truth data collected in the study area during fieldwork using instruments such as GPS. Control points (CPs), in contrast, are points extracted from remote sensing imagery or selected using Google Earth [2, 3]. GCPs are a very important stage in many remote sensing applications and have therefore received considerable attention [1, 2]. A robust and flexible technique is always necessary to extract the GCPs automatically, and improving the automatically extracted GCPs in turn improves the accuracy of the application [1]. The refined GCPs should then be used to determine the transformation coefficients in different types of remote sensing applications. Several algorithms have been used to perform feature extraction, such as those reported in [1,2,3,4].

The SIFT algorithm

The SIFT algorithm is used in this study because it is one of the most effective algorithms for automatically extracting features from images [4,5,6,7,8,9]. However, when applied to remote sensing imagery such as multi-sensor and/or near-equatorial orbit (NEqO) satellite images, SIFT performs poorly or fails completely and produces false CPs, which leads to errors in CP matching for sequences of images that have the same features. The SIFT CPs are the control points extracted by SIFT; they serve as reference points for geometric correction and/or registration of images. Images captured at different times have a wide range of frequencies (intensity values of image pixels), and images captured at different frequencies have different kinds of response [10,11,12,13]. Therefore, the correctness of matched CP pairs is important [3, 4, 14]. Location errors are very common for SIFT CPs [4, 9, 15, 16], and finding an accurate method of refining CP quality is a difficult task [16,17,18]. Remotely sensed imagery, especially from near-equatorial satellites and multiple sensors, contains nonlinear geometric distortions [9, 16, 17, 19, 20].

Modified SIFT approaches

These types of errors are non-systematic. Collecting CPs by conventional techniques to overcome such errors is impossible for NEqO images because of the differences in altitude (sensor and topographic terrain), attitude (pitch, roll, and yaw), capturing time, illumination, viewing points, sun zenith and azimuth, and sensor zenith and azimuth during image capture [16, 21, 22]. Modified SIFT approaches have been widely adopted for different types of data, such as synthetic aperture radar (SAR) imagery [3]. The extraction of CPs from remote sensing images by employing the SIFT algorithm has also been improved. Shragai et al. [23] applied the SIFT approach to extract CPs from aerial imagery, and it provided good results. Wessel et al. [24] modified a technique to extract GCPs for near-real-time SAR images and integrated it with a digital elevation model (DEM). Liu et al. [25] modified SIFT into the bilateral filter SIFT (BFSIFT), which uses a bilateral filter instead of the Gaussian filter in the pyramid construction. Liu and Yu [26] used the SIFT algorithm to match the sensed and reference images after performing edge extraction on SAR images. Chureesampant and Susaki [27] compared the performance of SIFT on SAR images in different polarizations. From the works above, the challenges of applying SIFT approaches to remote sensing images are understood.

Objective of this research

The NEqO satellite system is a very new generation of optical satellite. Unfortunately, no available online publications that used RazakSAT satellite images were found, and the satellite was lost in space after some images had been received. Therefore, a robust and flexible technique is necessary to automatically extract CPs. The objective of this study is to propose a new methodology that uses the extracted CPs to improve feature extraction, which leads to accurate results in remote sensing applications such as pattern recognition, band-to-band registration, and geometric correction of images captured by different satellite systems.

Materials and methods

A new methodology to refine and improve the generated SIFT features automatically is presented in this research. This methodology is described in Fig. 1. The proposed approach starts by selecting the reference and slave images, and then image compression is performed on both images. Next, we apply a sharpening filter. Then, the SIFT algorithm is applied to extract features and/or CPs. After that, the generated CPs are refined by employing the sum of absolute difference (SAD) algorithm, which measures and compares the similarity in brightness values (intensities) between the CPs in the reference and slave images to avoid obtaining bad CPs and errors in image matching. Finally, the Refined SIFT CPs are evaluated by comparing them with those of the original SIFT.

Study area description and dataset

The study area for this research is located in Penang state in northwest Malaysia and lies between 100°09′08′′–100°21′29′′E and 05°13′04′′–05°30′00′′N, as indicated in Fig. 2. Penang has an area of about 1048 km², and its population reached 1.767 million in 2018. Malaysian RazakSAT imagery was adopted in this article. RazakSAT is a new-generation optical NEqO satellite system at an altitude of about 685 km [28]. A RazakSAT image covers approximately 20 × 100 km and has four multispectral channels (red, green, blue, and near-infrared) and one panchromatic channel [18]. The spatial resolutions of the multispectral and panchromatic bands are 5 and 2.5 m, respectively [29]. The image was captured over Malaysia on August 1, 2009, and covers a part of Penang Island. Table 1 shows the wavelengths of the RazakSAT image bands. The RazakSAT near-equatorial satellite orbits the Earth within ± 10° north and south of the equator [30]. Figure 3 shows the RazakSAT image bands obtained for the study area. The processed RazakSAT image has only 10% cloud cover.

Remotely sensed data from the NEqO satellite system have many advantages, especially in equatorial countries. All equatorial countries are located in the tropics, where cloud cover persists throughout the day and high humidity contributes to different kinds of hazards. It is therefore difficult to monitor the environment and hazards such as floods, landslides, and earthquakes in these areas. A new generation of satellites is needed that can monitor these countries throughout the day and supply around 14 images each day [3, 28]. NEqO satellite images are not publicly available because this kind of satellite is very new and, until now, its images have been used only in the private sector.

Dataset processing and analyzing

Master and sensed image selection

Images from the NEqO satellite system need to be corrected geometrically. However, this is a difficult task because of the nonlinear distortion. The first step of the proposed technique is the selection of master and sensed images. One of the difficulties encountered in this study is selecting the reference image because only one satellite image is available. Fortunately, the bands of this image have high (nonlinear) distortion, which suits the purpose of this study. Each band of the image is considered an individual image, and all four bands are involved in implementing the refined SIFT method. The image used in this study comes from the near-equatorial satellite. All the bands in the image have a similar amount of noise, skewness, stretching, and rotation, and only slight differences were observed in the study area [31, 32]. However, the green channel shows the fewest defects, so it serves as the reference (master) image, and the remaining red (R), near-infrared (NIR), and blue (B) bands are considered slave images. In addition, the green channel has less noise (missing area) than the others, as indicated in Fig. 4, where circles mark the noise in each band so that it can be compared with the green band.

Image compression

The second stage of this research is the compression of the image bands. Image compression reduces the amount of data required to represent an image by encoding the original image with fewer bits [32]. This is an important stage of this study because the RazakSAT image had a large storage size of more than 3.5 gigabytes. Data of this size are difficult to process, require large drive storage, and increase the processing time. We therefore attempted to reduce both the storage size of the imagery and the processing time. Both the reference image (band) and the sensed image (band) of the RazakSAT satellite were converted to grayscale so that the values were normalized to the interval 0–255. Afterward, image compression was performed in the MATLAB environment: all bands were converted into JPEG format to minimize their size [26]. Before image compression, the processing time in MATLAB was 2 days; after band compression, it fell dramatically to only two minutes.
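The grayscale normalization described above can be illustrated with a minimal Python sketch. The text does not say which rescaling was used in MATLAB, so the linear min-max mapping below is an assumption, and the function name `normalize_to_uint8` and the sample values are hypothetical.

```python
def normalize_to_uint8(band):
    """Linearly rescale a 2-D list of pixel values to integers in 0..255.

    Assumption: min-max scaling; the source only states that values
    were normalized to the interval 0-255.
    """
    flat = [value for row in band for value in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A constant band has no contrast; map every pixel to 0.
        return [[0 for _ in row] for row in band]
    span = hi - lo
    return [[int(round((value - lo) * 255 / span)) for value in row]
            for row in band]


# Hypothetical 2 x 2 band with raw digital numbers.
band = [[0, 40], [120, 200]]
gray = normalize_to_uint8(band)
```

The smallest raw value maps to 0 and the largest to 255, so every band ends up on the same intensity scale before compression.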

This procedure made the processing of the images more flexible and reliable. The specifications of the laptop computer that we used are as follows:

1. The RAM was 8 gigabytes.
2. The memory card was 8 gigabytes.
3. The CPU was Core i7.
4. The storage size was 1 terabyte.

Image sharpening

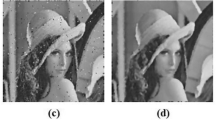

For research such as the current study, filtering the satellite images with a sharpening filter makes the extraction of CPs easier and better [2, 32, 33]. Hence, a high-pass filter (HPF) was selected to filter the RazakSAT image bands. The HPF is one of the basic filters used in sharpening approaches. Image sharpening enhances the contrast between adjoining areas that differ only slightly in darkness or brightness. The HPF works by retaining the high-frequency information in an image while reducing the low-frequency information [32, 33]. ENVI 5.0 Classic's default HPF uses a 3 × 3 kernel with a value of 8 for the center pixel and − 1 for all exterior pixels. Figure 5 illustrates the image bands before and after applying the HPF.
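To make the kernel concrete, the following Python sketch applies the 3 × 3 high-pass kernel described above (8 at the center, − 1 elsewhere) to a small image. It is an illustration only and not the ENVI implementation; the function name `high_pass` is hypothetical, and leaving border pixels at 0 is an assumption because the text does not describe border handling.

```python
# 3 x 3 high-pass kernel from the text: 8 at the center, -1 elsewhere.
HPF_KERNEL = [[-1, -1, -1],
              [-1,  8, -1],
              [-1, -1, -1]]


def high_pass(image, kernel=HPF_KERNEL):
    """Apply a 3 x 3 kernel to the interior pixels of a 2-D list.

    Assumption: border pixels are left at 0, since the source does not
    say how the image border is treated.
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            acc = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    acc += kernel[dr + 1][dc + 1] * image[r + dr][c + dc]
            out[r][c] = acc
    return out


# A flat patch gives no response; a bright center pixel gives a strong one.
flat = [[5, 5, 5], [5, 5, 5], [5, 5, 5]]
spot = [[5, 5, 5], [5, 9, 5], [5, 5, 5]]
flat_response = high_pass(flat)[1][1]
spot_response = high_pass(spot)[1][1]
```

Because the kernel coefficients sum to zero, uniform (low-frequency) regions are suppressed while local intensity changes are amplified, which is the behavior the paragraph attributes to the HPF.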

Automatic feature extraction

For this study, we employed the SIFT approach, which transforms imagery into scale-invariant coordinates relative to local features, as reported by Lowe [4, 5]. An important aspect of this procedure is that it creates large numbers of features that cover the image over all locations and scales [3, 4, 34]. The quantity of features is important for object recognition, where the ability to detect small objects in cluttered backgrounds requires at least three features for every object [5]. CPs are robust to changes in image scaling, skewing, illumination, and rotation with changes in viewpoint [4, 5]. SIFT has been applied to different fields, including computer vision, remote sensing, object recognition, medicine, and robotics [21, 27, 34]. The CPs were automatically extracted from the reference and sensed bands with a feature-based approach that employs the SIFT algorithm in three steps. In the first step, CPs were extracted from the reference image and stored in a database. In the second step, SIFT features were extracted from the sensed image.

The major stages of computation used to generate a set of image features through the use of the SIFT algorithm are presented below [4, 5]:

1. Conduct scale-space extrema detection of CPs by using a cascade filtering approach that employs efficient algorithms to identify candidate CP locations, that is, locations and scales that can be repeatably assigned under differing views of the same object. The scale space of an image is defined as a function L(x, y, σ) produced by the convolution of a variable-scale Gaussian G(x, y, σ) with the input image I(x, y):

$$L\left( {x,y,\sigma } \right) = G\left( {x,y,\sigma } \right) * I\left( {x,y} \right)$$

where * denotes the convolution operation in x and y, and

$$G\left( {x,y,\sigma } \right) = \frac{1}{{2\pi \sigma^{2} }}e^{{ - \left( {x^{2} + y^{2} } \right)/2\sigma^{2} }}$$

A method to efficiently detect stable CP locations in scale space was proposed by Lowe [5] through the adoption of scale-space extrema in the difference-of-Gaussian function convolved with the image, D(x, y, σ), which can be calculated as the difference between two nearby scales separated by a constant multiplicative factor k:

$$D\left( {x,y,\sigma } \right) = \left( {G\left( {x,y,k\sigma } \right) - G\left( {x,y,\sigma } \right)} \right) * I\left( {x,y} \right) = L\left( {x,y,k\sigma } \right) - L\left( {x,y,\sigma } \right)$$

There are several reasons to select this function. First, it is efficient to compute: the smoothed images L must be calculated in any case for the description of scale-space features, so D can be obtained by simple image subtraction. Second, the difference-of-Gaussian function closely approximates the scale-normalized Laplacian of Gaussian, \(\sigma^{2} \nabla^{2} G\) [35]. In each octave of scale space, the image is convolved with Gaussians to generate the set of scale-space images. The number of octaves and scales depends on the size of the original image and must be chosen when SIFT is implemented; Lowe [4], who created the SIFT approach, suggested that four octaves and five blur levels are ideal. Adjacent Gaussian images are then subtracted to generate the difference-of-Gaussian images. The Gaussian image is then down-sampled by a factor of 2, and the process is repeated. The factor \(\sigma^{2}\) is required for true scale invariance, and the maxima and minima of \(\sigma^{2} \nabla^{2} G\) give the CPs [36]. The relationship between D and \(\sigma^{2} \nabla^{2} G\) can be understood from the heat diffusion equation [parameterized in terms of σ rather than the more usual \(t = \sigma^{2}\)]:

$$\frac{\partial G}{{\partial \sigma }} = \sigma \nabla^{2} G$$

\(\nabla^{2} G\) can then be computed from the finite difference approximation to ∂G/∂σ by using the difference of nearby scales at kσ and σ:

$$\sigma \nabla^{2} G = \frac{\partial G}{{\partial \sigma }} \approx \frac{{G\left( {x,y,k\sigma } \right) - G\left( {x,y,\sigma } \right)}}{k\sigma - \sigma }$$

and, therefore,

$$G\left( {x,y,k\sigma } \right) - G\left( {x,y,\sigma } \right) \approx \left( {k - 1} \right)\sigma^{2} \nabla^{2} G$$

where the factor (k − 1) is constant over all scales and therefore does not affect the extrema location. Here \(k = 2^{1/s}\), where s is the number of intervals per octave (generally s = 2, so k = √2). Each octave is divided into s + 3 blurred images, and the image is down-sampled by a factor of 2 from one octave to the next.

2. CP localization and filtering can be conducted by using the equations below [5]:

$$D\left( x \right) = D + \frac{{\partial D^{T} }}{\partial x}x + \frac{1}{2}x^{T} \frac{{\partial^{2} D}}{{\partial x^{2} }}x$$ (8)

where D and its derivatives are evaluated at the sample point and \(x = \left( {x,y,\sigma } \right)^{T}\) is the offset from this point. The extremum location, \(\hat{X}\), is determined by taking the derivative of this function with respect to x and setting it to zero, giving:

$$\hat{X} = - \left( {\frac{{\partial^{2} D}}{{\partial x^{2} }}} \right)^{ - 1} \frac{\partial D}{{\partial x}}$$ (9)

The Hessian and derivative of D are approximated by using the differences of neighboring sample points. The resulting 3 × 3 linear system can be solved with minimal cost. The function value at the extremum, \(D\left( {\hat{X}} \right)\), is used to reject unstable extrema that have low contrast (Brown and Lowe [5]):

$$D\left( {\hat{X}} \right) = D + \frac{1}{2}\frac{{\partial D^{T} }}{\partial x}\hat{X}$$ (10)

3. Eliminating edge responses: The principal curvatures are computed from a 2 × 2 Hessian matrix [36]. H is computed at the location and scale of the keypoint as follows:

$$H = \begin{bmatrix} D_{xx} & D_{xy} \\ D_{xy} & D_{yy} \end{bmatrix}$$

where H is the Hessian matrix. The derivatives are estimated by taking the differences of neighboring sample points. The eigenvalues of H are proportional to the principal curvatures of D. Borrowing from the approach employed by Harris and Stephens [35], to avoid explicitly computing the eigenvalues, the focus is on their ratio. Let α be the eigenvalue with the largest magnitude and β be the smaller one. The sum of the eigenvalues can then be computed from the trace of H, and their product can be computed from the determinant as follows:

$$Tr\left( H \right) = D_{xx} + D_{yy} = \alpha + \beta ,\quad Det\left( H \right) = D_{xx} D_{yy} - \left( {D_{xy} } \right)^{2} = \alpha \beta$$

where α and β are the eigenvalues. When the determinant is negative, the curvatures have different signs, and the point is not considered an extremum. Let r be the ratio between the largest-magnitude and smallest-magnitude eigenvalues, so that α = rβ; then

$$\frac{{Tr\left( H \right)^{2} }}{Det\left( H \right)} = \frac{{\left( {\alpha + \beta } \right)^{2} }}{\alpha \beta } = \frac{{\left( {r\beta + \beta } \right)^{2} }}{{r\beta^{2} }} = \frac{{\left( {r + 1} \right)^{2} }}{r}$$

The quantity \(\left( {r + 1} \right)^{2} /r\) is at its minimum when the two eigenvalues are equal, and it increases with the value of r. Therefore, to check that the ratio of principal curvatures is below a threshold r, it is sufficient to check that

$$\frac{{Tr\left( H \right)^{2} }}{Det\left( H \right)} < \frac{{\left( {r + 1} \right)^{2} }}{r}$$

Fewer than 20 floating-point operations are required to test each CP. For this research, the experiments used r = 10, which eliminates each CP whose ratio of principal curvatures is equal to or greater than 10.

4. Performing orientation assignment: By assigning a consistent orientation to each CP based on the local image properties, the descriptor of the CP can be represented relative to this orientation and thus achieves invariance to image rotation. This approach is in contrast with the orientation-invariant descriptors of Schmid and Mohr [36]. The scale of the CP is used to select the Gaussian-smoothed image, L, with the closest scale, so that the computations are conducted in a scale-invariant manner. For each image sample, L(x, y), the gradient magnitude, m(x, y), and orientation, θ(x, y), are computed by using pixel differences:

$$m\left( {x,y} \right) = \sqrt {\left( {L\left( {x + 1,y} \right) - L\left( {x - 1,y} \right)} \right)^{2} + \left( {L\left( {x,y + 1} \right) - L\left( {x,y - 1} \right)} \right)^{2} }$$

$$\theta \left( {x,y} \right) = \tan^{ - 1} \left( {\frac{{L\left( {x,y + 1} \right) - L\left( {x,y - 1} \right)}}{{L\left( {x + 1,y} \right) - L\left( {x - 1,y} \right)}}} \right)$$
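Two of the stages listed above lend themselves to a short illustration: the edge-response test of stage 3 and the pixel-difference gradient of stage 4. The Python sketch below is a minimal illustration under stated assumptions, not the implementation used in this study. The function names `passes_edge_test` and `gradient` are hypothetical, the image is assumed to be indexed as `L[y][x]`, and `atan2` is used in place of the plain arctangent so that the orientation covers the full circle.

```python
import math


def passes_edge_test(dxx, dyy, dxy, r=10.0):
    """Return True when Tr(H)^2 / Det(H) < (r + 1)^2 / r.

    dxx, dyy, dxy are the second derivatives of D at the CP;
    r = 10 is the value reported in the text.
    """
    trace = dxx + dyy
    det = dxx * dyy - dxy * dxy
    if det <= 0:
        # Curvatures of opposite sign (or degenerate): not an extremum.
        return False
    return (trace * trace) / det < ((r + 1.0) ** 2) / r


def gradient(L, x, y):
    """Gradient magnitude and orientation (radians) at an interior
    sample, from central pixel differences. Assumes L is indexed L[y][x]."""
    dx = L[y][x + 1] - L[y][x - 1]
    dy = L[y + 1][x] - L[y - 1][x]
    return math.hypot(dx, dy), math.atan2(dy, dx)


# Blob-like point (equal curvatures) versus edge-like point (ratio 20).
blob_ok = passes_edge_test(1.0, 1.0, 0.0)
edge_ok = passes_edge_test(20.0, 1.0, 0.0)

# Hypothetical 3 x 3 smoothed patch; differences are dx = 3, dy = 4.
patch = [[0, 1, 0],
         [2, 0, 5],
         [0, 5, 0]]
magnitude, orientation = gradient(patch, 1, 1)
```

With r = 10 the bound (r + 1)²/r equals 12.1, so the blob-like point (ratio 4) is kept and the edge-like point (ratio 22.05) is rejected.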

The third step is matching between the SIFT features of the reference and sensed images. Each feature from the sensed image was individually compared with the features previously stored in the database, and candidate matching features were identified based on the Euclidean distance between their feature vectors by using the mathematical expression below [5]:

$$d\left( {p,q} \right) = \sqrt {\sum\limits_{i = 1}^{n} {\left( {q_{i} - p_{i} } \right)^{2} } }$$

where p = (p1, p2,..., pn) and q = (q1, q2,..., qn) are two points in Euclidean n-space. The keypoint descriptors are highly distinctive; hence, a single feature can find its correct match with good probability in a large database of features. However, in cluttered imagery, several features do not have a correct match [4, 5].
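The matching step can be sketched as a nearest-neighbor search under the Euclidean distance. The Python example below is a minimal illustration, assuming descriptors are plain lists of numbers; the function names `euclidean` and `nearest_match` are hypothetical, and the two-dimensional vectors stand in for real SIFT descriptors purely for readability.

```python
import math


def euclidean(p, q):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((pi - qi) ** 2 for pi, qi in zip(p, q)))


def nearest_match(descriptor, database):
    """Return (index, distance) of the database descriptor that is
    closest to `descriptor`; (-1, inf) for an empty database."""
    best_index, best_distance = -1, float("inf")
    for index, candidate in enumerate(database):
        distance = euclidean(descriptor, candidate)
        if distance < best_distance:
            best_index, best_distance = index, distance
    return best_index, best_distance


# Hypothetical reference database and one sensed-image descriptor.
database = [[0, 0], [3, 4], [10, 10]]
match_index, match_distance = nearest_match([3, 3], database)
```

A brute-force scan like this is enough to show the idea; it returns the candidate match only and does not by itself decide whether that match is correct, which is the problem the refinement stage addresses.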

Improving the extracted features

Using extracted SIFT CPs results in numerous false and incorrect matches [2, 3, 7, 9, 15]. This phenomenon increases the image matching errors and negatively affects the use of the images in remote sensing applications [13, 37]. In this study, the new technique was employed to refine and improve the features extracted by the SIFT approach: the SAD algorithm removes the false extracted SIFT CPs that lead to incorrect matches. The SAD approach measures the similarity between image blocks. It computes the absolute difference between each pixel in the original block of the reference image and the corresponding pixel in the block of the slave image that is used for comparison. These differences are then summed to give a simple metric of block similarity [38, 39]. Several studies have employed the SAD algorithm in applications such as object recognition, motion estimation, and video compression [38,39,40]. Other researchers have optimized SAD to make it faster, reduce the computation time, and obtain better matching between CPs [32, 41]. The SAD approach can be expressed [39] as follows:

$$SAD = \sum\limits_{x = 1}^{N} {\sum\limits_{y = 1}^{N} {\left| {A\left( {x,y} \right) - B\left( {x,y} \right)} \right|} }$$

where A and B are the blocks, x and y are the pixel indices of matrices A and B, and N is the number of rows and columns. The location coordinates of the SIFT CPs were used as input to the refining process together with the SAD algorithm for automatic extraction of the most accurate SIFT CPs [39, 40].
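As a worked illustration of the SAD measure, consider two hypothetical 3 × 3 blocks, A from the reference image and B from the slave image. The pixel values are invented for the example and are not taken from the RazakSAT data.

$$A = \begin{bmatrix} 10 & 12 & 11 \\ 9 & 10 & 13 \\ 12 & 11 & 10 \end{bmatrix},\quad B = \begin{bmatrix} 11 & 12 & 9 \\ 9 & 14 & 13 \\ 10 & 11 & 10 \end{bmatrix}$$

$$\left| {A - B} \right| = \begin{bmatrix} 1 & 0 & 2 \\ 0 & 4 & 0 \\ 2 & 0 & 0 \end{bmatrix},\quad SAD = 1 + 0 + 2 + 0 + 4 + 0 + 2 + 0 + 0 = 9$$

Identical blocks give SAD = 0, and the value grows as the intensities around the two CPs diverge, which is why it can serve as a similarity score for a matched CP pair.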

Results and discussion

The SAD algorithm was employed in this research in a different manner than in previous studies [39, 40]. Previous researchers applied the SAD algorithm to the entire reference and sensed images to identify a similarity matrix based on area correlation [31, 38, 42,43,44,45,46]. In this study, however, the SAD algorithm was used to refine the extracted SIFT CPs. This overcomes the weakness of both SIFT and SAD on this kind of image, because the incorrectly matched CPs produced by the SIFT algorithm are removed. The SAD function measures the intensity similarity between the reference and sensed images by calculating the absolute differences between the image window around each SIFT CP and the search window around the corresponding CP, based on Eq. (19). These differences are then summed to quantify the similarity between the two windows. An empirical threshold and a suitable kernel size help to identify and remove false SIFT CP pairs, that is, matching errors.

The steps employed are as follows: (1) The CPs are automatically extracted by SIFT. (2) The reference and sensed images and the image coordinates of the extracted SIFT CPs of both images are entered into the SAD algorithm. (3) The SAD algorithm is run to measure the similarity in intensity between the areas around the CPs of the sensed image and the corresponding CPs in the reference image. With regard to Eq. (18), the image window represents Block A, which is located in the reference image and contains the generated SIFT CP, and the search window represents the corresponding Block B in the sensed image, which contains the CP corresponding to the one in the image window. The false CP matches can then be removed by using the empirical threshold. We therefore input the green band image as the reference image and the red band and blue band images as the sensed images into the SIFT algorithm to extract the SIFT features (CPs), and then applied the SAD algorithm as explained above with different thresholds and the same frame size. Figures 6a, b and 7a, b and Tables 2, 3, and 4 show the results of this processing and analysis.
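The refinement steps above can be sketched in Python as follows. This is a minimal illustration and not the MATLAB code used in the study. The function names `window`, `sad`, and `refine_matches` are hypothetical. Two further points are assumptions because the text does not state them: a CP pair is kept when its SAD is at or below the threshold, and pairs whose window crosses the image border are dropped.

```python
def window(image, row, col, half):
    """Square block of side 2*half + 1 centered on (row, col),
    or None when the block would cross the image border."""
    rows, cols = len(image), len(image[0])
    if (row - half < 0 or col - half < 0
            or row + half >= rows or col + half >= cols):
        return None
    return [image[r][col - half:col + half + 1]
            for r in range(row - half, row + half + 1)]


def sad(block_a, block_b):
    """Sum of absolute differences between two equal-size blocks."""
    return sum(abs(a - b)
               for row_a, row_b in zip(block_a, block_b)
               for a, b in zip(row_a, row_b))


def refine_matches(reference, sensed, pairs, frame=3, threshold=250):
    """Filter matched CP pairs with SAD.

    pairs: list of ((row_ref, col_ref), (row_sen, col_sen)).
    Assumption: a pair is kept when SAD <= threshold; the comparison
    direction is not specified in the source.
    """
    half = frame // 2
    kept = []
    for (rr, rc), (sr, sc) in pairs:
        block_a = window(reference, rr, rc, half)  # image window
        block_b = window(sensed, sr, sc, half)     # search window
        if block_a is None or block_b is None:
            continue  # assumption: border pairs are dropped
        if sad(block_a, block_b) <= threshold:
            kept.append(((rr, rc), (sr, sc)))
    return kept


# Hypothetical 5 x 5 bands: identical except near the CP at (3, 3).
reference = [[10] * 5 for _ in range(5)]
sensed = [row[:] for row in reference]
sensed[3][3] = 200
sensed[4][4] = 200
pairs = [((1, 1), (1, 1)), ((3, 3), (3, 3)), ((0, 0), (0, 0))]
kept = refine_matches(reference, sensed, pairs, frame=3, threshold=250)
```

In this toy case the pair at (1, 1) has SAD = 0 and is kept, the pair at (3, 3) has SAD = 380 and is rejected, and the pair at (0, 0) is dropped because its 3 × 3 window leaves the image. The frame size and threshold are exposed as parameters because the study selects them empirically.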

Table 2 shows that, before the proposed methodology was applied, 1500 and 2000 SIFT CPs were extracted from the reference green band image and the sensed red band image, respectively, in each experiment. Applying the new technique with the SAD algorithm removed between 700 and 1358 false extracted SIFT CPs, leaving 750, 552, and 92 correct SIFT CPs for the three experiments, respectively. For Figs. 6a, b and 7a, b, the result was obtained by empirically selecting the threshold. A value that was neither extremely high nor extremely small was selected to obtain a good number of CPs [47, 48]. Based on the experimental results, the best frame size and threshold value for removing the false CPs given by SIFT in this study were 3 × 3 and 250, respectively. Tables 2, 3, and 4 show the results of applying the SIFT and SAD algorithms with different threshold values and frame sizes for all images. The processing was performed in the MATLAB environment.

Figure 6 illustrates the SIFT algorithm applied between the green band image and the red band image. The extracted CPs were then refined by using the proposed method. Figures 6 and 7 show the matching between the CPs generated by SIFT: yellow lines connect the features in image (a) of Figs. 6a and 7a with the corresponding CPs in image (b) of Figs. 6b and 7b. However, some of these lines are incorrect matches produced by the SIFT algorithm; these matching errors are circled to make them easy to recognize. The number of CPs decreases after the false SIFT CPs are removed. The first experimental threshold used was 200, as indicated in Table 2.

Table 3 shows that, before the proposed methodology was applied, 1500 and 2000 SIFT CPs were extracted from the reference green band image and the sensed red band image, respectively, in each experiment. Applying the new technique with the SAD algorithm removed between 228 and 383 false extracted SIFT CPs, leaving between 73 and 228 correct SIFT CPs over the three experiments.

Table 2 shows the operation of the SAD algorithm with different threshold values and frame sizes in three experiments on the reference image (green band) and the slave image (red band). First, the SAD algorithm was used with a threshold value of 200 and a frame size of 3 × 3. The numbers of extracted SIFT CPs were 1500 and 2000 key points in the reference and slave images, respectively, and the number of matched CPs was 1450. After the SAD algorithm was applied to the SIFT CPs, the number of matched CPs decreased to 750. However, not all falsely matched CPs could be removed with this frame size and threshold, so matching errors remained. In the second experiment, the threshold value was changed to 250 with the same frame size (3 × 3). The false CPs were removed, and the most accurately matched CPs were obtained by using both the SIFT and SAD algorithms. These key points represented the most accurate SIFT CPs. In the third experiment, the frame size was changed to 5 × 5, and a similar threshold value was used. Ninety-two matched CPs were obtained. The experimental results indicate that a threshold value of 250 and a frame size of 3 × 3 provide a maximum number of SAD CPs (corrected CPs) and the most precisely matched CPs, with the bad CPs removed from the slave and reference images. According to Tables 2, 3, and 4, the numbers of false SIFT CPs between the reference and slave images are 898, 228, and 117, respectively. On the other hand, applying SIFT and SAD between the G and NIR bands gives more accurate results than applying them to the other band pairs.

Figure 7a, b shows the application of SIFT and then SAD for the green and blue bands. Figure 6a shows the result of applying the SIFT algorithm; the false CPs show obvious matching errors. Figure 6b presents the result of applying the SAD algorithm to the extracted SIFT CPs before the biased CPs were removed. Clearly, using the SAD algorithm based on the extracted features performs better than using it based on area correlation. In the same way, Tables 3 and 4 compare the results of the proposed refined SIFT method with those of the original SIFT. The refined SIFT selects the true and accurately matched CPs based on the experimental results. Moreover, manually collecting the CPs is difficult and time consuming, particularly when the amount of data is large [23]. Thus, refined SIFT is more reliable, flexible, and accurate in extracting and improving CPs [3, 28]. Figures 8, 9, and 10 compare the false and true extracted CPs obtained by applying SIFT and SAD to the green, red, blue, and NIR bands.

Figure 8 illustrates the matched, true, and false extracted CPs of the three experiments before and after applying the SAD algorithm. When only the SIFT method was applied, the number of matched CPs was 1450 in all three experiments. Applying the SAD algorithm to the extracted SIFT CPs removed 700 false and incorrectly matched CPs, leaving only 750 true CPs instead of 1450 in the first experiment. In the second and third experiments, the SAD method removed 898 and 1358 false SIFT CPs, leaving 552 and 92 correct CPs, respectively.

Applying the SAD approach to NEqO satellite images removes the false extracted SIFT CPs and improves the extracted CPs by about 50% of all extracted CPs. Figure 9 illustrates the matched, true, and false extracted CPs of the three experiments before and after applying the SAD algorithm. When only the SIFT method was applied, the number of matched CPs was 456 in all three experiments. Applying the SAD algorithm to the extracted SIFT CPs removed 263 false and incorrectly matched CPs, leaving only 193 true CPs instead of 456 in the first experiment. In the second and third experiments, the SAD method removed 228 and 383 false SIFT CPs, leaving 228 and 73 correct CPs, respectively.

Figure 10 illustrates the matched, true, and false extracted CPs of the three experiments before and after applying the SAD algorithm. When only the SIFT method was applied, the number of matched CPs was 850 in all three experiments. Applying the SAD algorithm to the extracted SIFT CPs removed 650 false and incorrectly matched CPs, leaving only 656 true CPs instead of 850 in the first experiment. In the second and third experiments, the SAD method removed 117 and 294 false SIFT CPs, leaving 733 and 556 correct CPs, respectively. These SAD CPs serve as the reference points for band-to-band image registration, radiometric normalization, geometric correction of images, and other applications. The results show that applying the SAD algorithm removes the false extracted SIFT CPs and retains the true CPs.

Conclusions

Feature extraction methods play an effective role in image registration and geometric correction of data collected from different sensor systems whose images have linear distortion. Different kinds of algorithms have been adopted for this purpose; in this research, the SIFT method was applied. Automatic CP extraction by SIFT alone is not adequate for the new generation of NEqO optical satellites or for multi-sensor images captured from different viewpoints, at different times, and under different illumination. This paper presents a technical workflow that can be used for large-scale mapping, based on automatically refining the extracted SIFT CPs using the SAD algorithm. It differs from the conventional approach in that it passes the extracted CP coordinates of the reference and sensed images to the SAD algorithm and applies an empirical threshold. The data used to examine the proposed methodology were image bands from RazakSAT, a new-generation near-equatorial orbital satellite system, covering Penang Island, Malaysia. The refined SIFT procedure removes false CPs that had matching errors. Finally, we evaluated the refined CP scenario by comparing the original extracted SIFT CPs with those of the proposed method.

The experimental results show that applying the SAD approach to NEqO satellite images removes the false extracted SIFT CPs and enhances the extracted CP set by about 50% of all extracted CPs across all processed and analyzed image bands, in all three experiments, using a frame size of 3 × 3 for the first and second experiments and 5 × 5 for the third experiment. The number of extracted control points (CPs) was reduced from 2000 to 750, 552, and 92 for the green and red bands, from 678 to 193, 228, and 73 for the green and blue bands, and from 1995 to 656, 733, and 556 for the green and near-infrared bands, respectively. The results also indicate the reliability, effectiveness, and robustness of the proposed method, as well as a precision that meets the requirements of different remote sensing applications, such as band-to-band registration, geometric correction, radiometric normalization, and change detection processing of near-equatorial satellite images. This result encourages further research to improve feature extraction approaches.

Acknowledgements

The authors are grateful to Prof. Dr. Shattri Mansor and Prof. Dr. Noordin Ahmed from Putra Malaysia University for providing the data, and to Prof. Dr. Maged Marghany.

Funding

Open access funding provided by Lulea University of Technology.

Author information

Contributions

Conceptualization was done by HD and NA; methodology was done by HD, HA, HS, and NA; software was contributed by HD and NA; validation was done by HD, HA, HS, and NA; investigation was done by HD and NA; data curation was done by HD and NA; writing (original draft preparation) was done by HD, HA, HS, and NA; visualization was done by NA. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dibs, H., Hasab, H.A., Jaber, H.S. et al. Automatic feature extraction and matching modelling for highly noise near-equatorial satellite images. Innov. Infrastruct. Solut. 7, 2 (2022). https://doi.org/10.1007/s41062-021-00598-7

DOI: https://doi.org/10.1007/s41062-021-00598-7