Abstract

Artificial intelligence (AI) has been integrated into higher education (HE), offering numerous benefits and transforming teaching and learning. Since its launch, ChatGPT has become the most popular AI language model among Generation Z college students in HE. This study aimed to assess the knowledge, concerns, attitudes, and ethics of using ChatGPT among Generation Z college students in HE in Peru. An online survey was administered to 201 HE students with prior experience using ChatGPT for academic activities. Two of the six proposed hypotheses were confirmed: ChatGPT use influenced Perceived Ethics (B = 0.856) and Student Concerns (B = 0.802). The findings suggest that HE students’ knowledge of and positive attitudes toward ChatGPT do not guarantee its effective adoption and use. Further research should investigate how attitudes of optimism, skepticism, or apathy toward AI develop and how these attitudes influence the intention to use technologies such as ChatGPT in HE settings. Dependence on ChatGPT raises ethical concerns that must be addressed through responsible-use programs in HE. No sex or age differences were found in the relationship between ChatGPT use and perceived ethics among HE students; however, further studies with diverse HE samples are needed to confirm this result. To promote the ethical use of ChatGPT in HE, institutions must develop comprehensive training programs, guidelines, and policies that address issues such as academic integrity, privacy, and misinformation. These initiatives should aim to educate students and university teachers on the responsible use of ChatGPT and other AI-based tools, fostering a culture of ethical AI adoption that leverages its benefits and mitigates its potential risks, such as breaches of academic integrity.

Introduction

Artificial intelligence (AI) is increasingly being integrated into higher education, offering numerous benefits and transforming teaching and learning experiences (Essien et al. 2020; Kuka et al. 2022). The use of AI technologies in education allows for the automation of processes and the development of innovative learning solutions (Nikolaeva et al. n.d.; Rath et al. 2023). AI can improve student learning outcomes by providing personalized recommendations and feedback (Kuka et al. 2022). It also has the potential to enhance the role of teachers and promote the development of digital competencies (Ligorio 2022). However, the implementation of AI in higher education comes with challenges, such as the need to address diversity and inclusion, reduce socioeconomic barriers, and ensure the ethical use of AI (Isaac et al. 2023). As AI continues to advance, it is important for educators and institutions to adapt and equip students with the necessary skills for the digital age (Kuka et al. 2022).

The integration of AI in academia poses a challenge that is not exclusive to any country or region; rather, it reflects a global trend toward digitalization and automation in education (Crompton and Burke 2023). Various studies have revealed that university students’ use of the Chat Generative Pretrained Transformer (ChatGPT) offers opportunities such as personalized assistance and support, particularly for students facing language barriers or other challenges (Hassan 2023). Students perceive ChatGPT as a tool that supports autonomous learning, helping them with academic writing, language teaching, and in-class learning (Hassan 2023; Karakose and Tülübaş 2023). Likewise, ChatGPT can enhance teaching and learning activities by providing creative ideas, solutions, and personalized learning environments, for example, by helping develop course content that addresses diverse student needs and learning styles. Such tailoring to individual preferences facilitates engagement and fosters a deeper understanding of complex subjects. Furthermore, teachers increasingly rely on AI to generate innovative teaching materials and methods that incorporate multimedia elements and interactive activities, enriching the learning experience and appealing to various learning modalities. ChatGPT can also assist teachers in assessing student work and designing assessment rubrics (Hassan 2023; Karakose and Tülübaş 2023).

Academic integrity is a fundamental principle that encompasses a commitment to honesty, fairness, trust, responsibility, and respect in all academic endeavors (Holden et al. 2021), as well as the practice of conducting research and completing academic work with equity and coherence while adhering to the highest ethical standards (Guerrero-Dib et al. 2020). It has become more critical than ever in the current context, because the modern technological revolution and the growing popularity of AI chatbots such as ChatGPT have made access to information and content generation far easier (Bin-Nashwan et al. 2023).

The use of ChatGPT in academic settings raises concerns about academic integrity, as students may become overly reliant on the tool, leading to a decline in higher-order thinking skills such as critical thinking, creativity, and problem solving (Črček & Patekar 2023a; Putra et al. 2023). One of the most significant risks associated with ChatGPT is plagiarism, as students may use the tool to generate entire assignments or substantial portions of their work without properly acknowledging the source or engaging in original thought (Črček & Patekar 2023a; Putra et al. 2023). This form of academic misconduct can occur when students rely on ChatGPT for the complete development of tasks, effectively passing off AI-generated content as their own work. Moreover, students have expressed concerns about the potential for cheating, the spread of misinformation, and fairness issues related to the use of ChatGPT in academic contexts (Famaye et al. 2023).

Singh et al. (2023) revealed that students believe that universities should provide clearer guidelines and better education on how and where ChatGPT can be used for learning activities. Therefore, it is essential that educational institutions establish regulations and guidelines to ensure the responsible and ethical use of ChatGPT by university students (Zeb et al. 2024a). Furthermore, ChatGPT can enhance the teaching-learning process, but its successful implementation requires that teachers be trained for proper use (Montenegro-Rueda et al. 2023).

Consequently, teachers and policymakers must balance the benefits of ChatGPT with the need to maintain ethical practices that promote critical thinking, originality, and integrity among students (Farhi et al. 2023).

Moreover, marked international differences have been observed in how students perceive and use ChatGPT, reflecting cultural, educational, and regulatory differences (Roberts et al. 2023). In the U.S., concerns have focused on academic integrity, with debates on whether ChatGPT supports original content generation or facilitates plagiarism (Kleebayoon and Wiwanitkit 2023; Sarkar 2023). In the United Kingdom, the focus is on educational quality and equity, with growing concern that the use of ChatGPT could widen the gap between students who have access to advanced technology and those who do not (Roberts et al. 2023). India, with its vast student population, faces unique challenges related to access (Taylor et al. 2023): the concern is how to democratize access to technologies such as ChatGPT so that all students, regardless of their location or resources, can benefit from them. This is reflected in India’s effort to integrate AI into its vast digital infrastructure, which faces unique security and regulatory challenges and highlights the importance of adapting emerging technology to diverse social and economic contexts (Gupta and Guglani 2023). In China, the debate revolves around privacy and data security: the integration of ChatGPT into the Chinese educational system raises questions about how student data are handled and protected in an AI environment (Ming and Bacon 2023). Germany, with its strong emphasis on technical and vocational education, is interested in how ChatGPT can be used to enhance specific skills and practical applications while maintaining an ethical and quality balance (von Garrel and Mayer 2023). Finally, in Australia, concerns have focused on how to integrate ChatGPT in a way that complements and enriches traditional teaching methods without replacing human contact and experiential learning (Prem 2019).

The widespread adoption of ChatGPT by Generation Z university students has sparked debate among researchers (Singh et al. 2023; Gundu and Chibaya 2023; Montenegro-Rueda et al. 2023). Several studies have examined student attitudes toward using ChatGPT as a learning tool and have found predominantly positive attitudes toward its use (Ajlouni et al. 2023a). ChatGPT has also been shown to generate positive emotions (β = 0.418, p < 0.001), which in turn influence the intention to use the tool frequently (Acosta-Enriquez et al. 2024). Studies further indicate that ChatGPT has the potential to facilitate the learning process (Ajlouni et al. 2023). However, students have doubts about the accuracy of the data produced by ChatGPT, and some feel uncomfortable using the platform (Ajlouni et al. 2023). It is therefore important that educational institutions provide students with guidelines and training on how and where ChatGPT can be used for learning activities (Singh et al. 2023). Additionally, successful implementation of ChatGPT in education requires training teachers to use the tool properly (Montenegro-Rueda et al. 2023). Overall, ChatGPT can enhance the educational experience if it is used responsibly and in conjunction with specific strategies (Gundu and Chibaya 2023).

Therefore, the overall goal of this study is to assess the knowledge, concerns, attitudes, and perceptions of ethics regarding the use of ChatGPT among Generation Z university students in the Peruvian context. The study analyzes how knowledge and attitudes influence ChatGPT use and how the use of this tool, in turn, influences Peruvian students’ perceived ethics and concerns. In Peru, studies on the adoption of AI and its impact on higher education are still scarce. This study therefore provides an understanding, from the students’ perspective, of how knowledge about and attitudes toward ChatGPT shape their experiences with the tool, and of how using ChatGPT affects student users’ perceived ethics and concerns.

Theoretical framework

ChatGPT and higher education

ChatGPT is a language model developed by OpenAI that has garnered significant attention since its launch on November 30, 2022, with potential applications in fields such as tourism, education, and software engineering. Mich and Garigliano (2023) argued that ChatGPT is a revolutionary force in digital transformation processes: it is revolutionizing the way users carry out their activities, whether work-related or academic, but it also carries risks such as inappropriate and unethical use. It has spread rapidly in both developed and developing countries (Kshetri 2023). In education, ChatGPT has had positive impacts on the teaching-learning process, but proper teacher training is crucial for successful implementation (Montenegro-Rueda et al. 2023). ChatGPT has also been used in software engineering tasks, demonstrating its potential for integration into workflows and its ability to break down coding tasks into smaller parts (Abrahamsson et al. 2023). However, ChatGPT has inherent limitations and risks that must be considered (Mich and Garigliano 2023).

ChatGPT has also become more popular in higher education (Arista et al., n.d.-a; Hassan 2023) than other language models developed by Google and Meta (Farhi et al. 2023). It has been used to provide students with personalized assistance and support, help them navigate university systems, answer questions, and provide feedback (Hassan 2023). However, the introduction of ChatGPT has raised concerns about academic integrity (Arista et al. 2023). It is also important that teachers receive training on the proper use of ChatGPT to maximize its benefits (Montenegro-Rueda et al. 2023).

Student knowledge predicts ChatGPT use

The technology acceptance model (TAM) has been widely used to explain technology acceptance among students in various educational contexts. This model includes constructs such as perceived usefulness and ease of use, which are fundamental in determining technology adoption (Tang and Hsiao 2023). Considering the rapid development and potential impact of AI technologies such as ChatGPT, it is essential to understand how students from different disciplines perceive and adopt such tools.

Surveys of university students have revealed that their attitudes toward technology adoption vary according to their area of knowledge. For example, according to Huedo-Martínez et al. (2023), engineering and architecture students are more likely than social sciences and humanities students to adopt technologies early. These findings suggest that students’ familiarity with technology and their perception of its relevance to their field of study can influence their willingness to adopt new tools.

In the context of ChatGPT, a survey of computer science students showed that although many are aware of the tool, they do not routinely use it for academic purposes. They express skepticism about its positive impact on learning and believe that universities should provide more guidelines and education on its responsible use by students and faculty (Singh et al. 2023). Furthermore, a study with senior computer engineering students revealed that although students admire ChatGPT’s capabilities and find it interesting and motivating, they also believe that its responses are not always accurate and that good prior knowledge is needed to work effectively with this language model (Sane et al. 2023; Shoufan 2023).

Moreover, the TAM has been applied to student groups beyond engineering and computer science. Duong et al. (2023) indicated that students who believe that ChatGPT can serve as a facilitator of knowledge exchange (a platform where they can exchange ideas, gather multiple perspectives, and collaboratively address academic challenges) will not only be more motivated but also more likely to engage actively in using this technology. This finding highlights the importance of perceived usefulness in driving technology adoption across disciplines.

In summary, students’ knowledge and perceptions of ChatGPT play a crucial role in predicting its use. Factors such as familiarity with the tool, perception of its capabilities, and understanding of its limitations influence students’ decisions to use ChatGPT for academic purposes (Castillo-Vergara et al. 2109; Huedo-Martínez et al. 2023; Tang and Hsiao 2023). By considering the insights gained from the TAM and its application to various student groups, we can better understand the factors that drive the adoption of AI technologies such as ChatGPT in educational settings.

Hypothesis 1

Knowledge about ChatGPT influences its use.

Attitudes toward artificial intelligence in education

An attitude is an evaluation of a psychological object and is characterized by dimensions such as good versus bad and pleasant versus unpleasant (Ajzen 2001; Svenningsson et al. 2022). Attitude has also been described as an individual’s mental readiness to perform certain behaviors (Almasan et al. 2023). It has traditionally been divided into affective, cognitive, and behavioral components (Abd-El-Khalick et al. 2015; Breckler 1984; Fishbein and Ajzen 1975).

Attitudes toward AI in education are formed and modified through complex interactions among experiences, beliefs, and knowledge. Psychological theories, such as classical and operant conditioning, social learning theory, and cognitive dissonance theory, provide a basis for understanding how these attitudes develop. Repeated exposure to positive experiences with AI in educational contexts can lead to a more favorable attitude toward it. Conversely, cognitive dissonance can arise when previous experiences or beliefs about AI conflict with new information or current experiences (Chan and Hu 2023).

Students’ attitudes toward AI, such as optimism, skepticism, or apathy, have a significant impact on their willingness to interact and learn with these technologies (Thong et al. 2023). A positive attitude can encourage greater exploration and use of tools such as ChatGPT (Yasin 2022), whereas a negative attitude can result in resistance to using AI, limiting learning opportunities (Irfan et al. 2023). A study conducted at the University of Jordan revealed a high level of positive attitudes toward using ChatGPT as a learning tool, with 73.2% of respondents agreeing on its potential to facilitate the learning process (Ajlouni et al. 2023).

Several factors influence attitudes toward AI in education. The cultural and social context is crucial, as cultural beliefs and social values play a significant role in shaping attitudes (Hsu et al. 2021). Prior experience with technology in the educational environment can also significantly influence attitudes toward AI (Adelekan et al. 2018). Based on the above, we formulate the following hypothesis:

Hypothesis 2

Attitude toward ChatGPT influences its use.

ChatGPT use influences students’ concerns

University students have shown a high level of positive attitudes toward using ChatGPT as a learning tool, with moderate affective components and high behavioral and cognitive components of attitudes (Ajlouni et al. 2023).

Famaye et al. (2023) used the theory of reasoned action to interpret students’ perceptions and dispositions toward ChatGPT, revealing that students perceived ChatGPT as a valuable tool to support learning but had concerns about cheating, misinformation, and fairness issues. Likewise, it has been found that ChatGPT has a substantial impact on student motivation and engagement in the learning process, with a significant correlation between teachers’ and students’ perceptions of ChatGPT use (Muñoz et al. 2023).

Studies indicate that students have concerns about the use of ChatGPT in educational settings, including skepticism about its positive effects on learning and concerns about potential fraud, misinformation, and fairness issues (Famaye et al. 2023; Singh et al. 2023).

Concerns have been raised about the accuracy of the data produced by ChatGPT, discomfort in using the platform, and anxiety when ChatGPT services cannot be accessed (Ajlouni et al. 2023). Additionally, students are skeptical about the positive impact of ChatGPT on learning and believe that universities should provide clearer guidelines and better education on how and where the tool can be used for learning activities (Singh et al. 2023).

Furthermore, not only students but also educators have voiced concerns about the integration of ChatGPT in educational settings, emphasizing the need for its responsible and successful application in teaching and research (Halaweh 2023). Based on the reviewed literature, the following hypothesis is proposed:

Hypothesis 3

Experience with the use of ChatGPT influences students’ concerns.

ChatGPT use influences students’ perceptions of ethics

In the context of AI, certain ethical principles are fundamental for its responsible and fair use. These principles include transparency, where AI algorithms and operations must be understandable to users. Justice and equity are essential for ensuring that AI applications do not perpetuate biases or discrimination (Köbis and Mehner 2021). Regarding privacy, it is crucial to protect users’ personal and sensitive information. Moreover, accountability must be clearly established, especially in contexts where AI decisions have significant consequences (Elendu et al. 2023).

Ethical dilemmas in the use of AI in higher education include the use of student data, where privacy and consent issues arise (Khairatun Hisan and Miftahul Amri 2022). Biases in machine learning that could lead to unfair educational outcomes must also be considered (Goodman 2023). Academic autonomy must be balanced with the implementation of AI tools such as ChatGPT (Garani-Papadatos et al. 2022).

For the ethical implementation of AI in education, policies and regulations guiding its use are needed. This includes data protection regulations such as the General Data Protection Regulation (GDPR) in Europe and ethical guidelines for the development and use of AI provided by international and academic bodies (Ayhan 2023). Additionally, specific institutional policies of educational institutions must regulate the use of AI, ensuring alignment with the ethical values and principles of the academic community.

Students perceive ChatGPT as a valuable learning tool but have concerns about deception, misinformation, and equity (Famaye et al. 2023). Furthermore, the use of ChatGPT in academia has both positive and negative implications, with concerns about academic dishonesty and dependence on technology (Arista et al. 2023; Črček & Patekar 2023a; Farhi et al. 2023; Fuchs and Aguilos 2023; Hung and Chen 2023; Ogugua et al. 2023; Robledo et al. 2023; Zeb et al. 2024a; Zhong et al. 2023). Studies also show that students use ChatGPT for various academic activities, including generating ideas, summarizing, and paraphrasing, and that they differ in how ethically acceptable they consider these uses (Črček & Patekar 2023a; Farhi et al. 2023).

Students expressed concern about potential negative effects on their cognitive abilities if they relied too heavily on ChatGPT, and they reported a moderate level of trust in the accuracy of the information it provides (Bodani et al. 2023). Additionally, students believe that ChatGPT needs improvement and are optimistic that this will happen soon; they emphasize the need for developers to improve the accuracy of its responses and for educators to guide students on effective prompting techniques and on how to evaluate the generated responses (Shoufan 2023). Based on the above, the following hypothesis is proposed:

Hypothesis 4

ChatGPT use influences students’ perceptions of ethics.

ChatGPT use and perceived ethics according to demographic variables

Few studies, however, have used gender and age as moderators of the relationship between ChatGPT use and students’ perceptions of ethics. The available studies have found that students perceive the use of ChatGPT as an idea generator to be more ethically acceptable, whereas other uses, such as writing part of an assignment, cheating, and misinformation regarding sources, are considered unethical, raising concerns about academic misconduct and fairness (Črček & Patekar 2023a; Famaye et al. 2023).

Previous research has shown that demographic factors such as gender and age can influence the adoption and perception of new technologies, including AI-based tools such as ChatGPT. For example, a study by Lopes et al. (2023) involving participants aged 18 to 43 years revealed significant differences between responses generated by ChatGPT and those generated by humans, suggesting that the perceived reliability of ChatGPT may vary across age groups. Additionally, a study conducted in Jordan revealed that undergraduate students generally held positive attitudes toward using ChatGPT as a learning tool, although they expressed concerns about data accuracy and discomfort associated with using the platform (Ajlouni et al. 2023). These findings suggest that age may play a role in shaping students’ perceptions and use of ChatGPT.

Furthermore, gender differences have been observed in technology adoption and perception. Studies have shown that men and women often have different attitudes toward technology, with women sometimes expressing more concerns about privacy, security, and ethical implications (Venkatesh et al. 2000; Goswami and Dutta 2015). In the context of ChatGPT, Singh et al. (2023) found that students in the United Kingdom were skeptical about the positive effects of ChatGPT on learning and emphasized the need for clearer guidelines and training on its use in academic activities. It is plausible that these concerns and attitudes vary between male and female students.

Given the evidence of age and gender differences in technology adoption and perception, it is reasonable to hypothesize that the relationship between ChatGPT use and perceived ethics may differ by sex (Hypothesis 5) and age group (Hypothesis 6). Testing these hypotheses will provide insights into how demographic factors influence students’ engagement with and attitudes toward AI-based tools such as ChatGPT. Understanding these differences can help educators and institutions develop targeted strategies to address concerns, provide appropriate guidance, and promote the responsible use of ChatGPT in academic settings. Therefore, the following hypotheses are proposed:

Hypothesis 5

The relationship between ChatGPT use and perceived ethics differs by sex.

Hypothesis 6

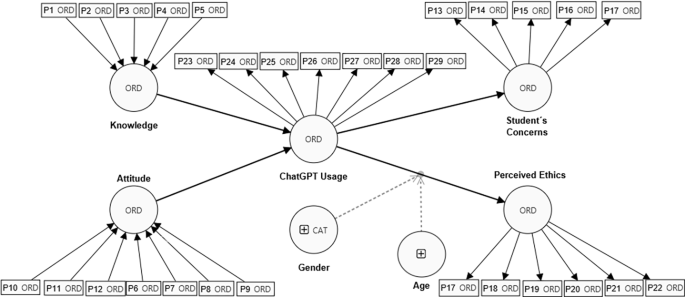

The relationship between the use of the ChatGPT and perceived ethics differs by age group. Figure 1 presents the proposed research model, along with the previously substantiated research hypotheses.

Method

This research followed a quantitative approach of an exploratory and explanatory nature, because its purpose was to evaluate university students’ knowledge, attitudes, and perceptions of ethics regarding the use of ChatGPT. The study used a nonexperimental, cross-sectional design, because data were collected during a single period. Furthermore, the hypothetico-deductive method was employed: hypotheses were formulated on the basis of a literature review and then tested empirically.

Participants

A nonprobabilistic accidental (convenience) sampling method was employed, involving 201 participants who voluntarily completed the survey. Table 1 presents the sociodemographic characteristics of the participants. Regarding fields of study, 1.49% were from agronomy and veterinary science, 1.49% from architecture and urban planning, 2.99% from exact and natural sciences, 46.27% from social sciences and humanities, 8.96% from health sciences, 9.45% from economics and administration, and 29.35% from engineering. The majority of participants were female (54.7%), and the remainder were male (45.3%). The predominant age ranges were 20–22 and 17–19 years. The majority of participants were undergraduate students (9.5%). Laptops, computers, and mobile phones were the devices participants most commonly used to access ChatGPT. Finally, regarding usage time, 42.8% indicated that they had been using ChatGPT for 1 to 2 months, and 18.4% reported using it for 3 to 4 months.
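As a quick arithmetic check, the reported percentages are consistent with whole-number counts out of 201 participants. The text reports only percentages, so the counts in the sketch below are an assumption: they are back-calculated from those percentages and are not data reported by the study. The sketch simply recomputes each share from the assumed counts.

```python
# Assumed counts, back-calculated from the reported percentages (n = 201).
# The article itself reports only percentages, not these counts.
N = 201

field_counts = {
    "Agronomy and veterinary science": 3,
    "Architecture and urban planning": 3,
    "Exact and natural sciences": 6,
    "Social sciences and humanities": 93,
    "Health sciences": 18,
    "Economics and administration": 19,
    "Engineering": 59,
}
sex_counts = {"Female": 110, "Male": 91}


def percentages(counts, total, digits):
    """Return each category's share of `total` as a percentage rounded to `digits`."""
    return {name: round(100 * count / total, digits) for name, count in counts.items()}


field_pct = percentages(field_counts, N, 2)  # fields are reported to two decimals
sex_pct = percentages(sex_counts, N, 1)      # sex is reported to one decimal

for name, pct in {**field_pct, **sex_pct}.items():
    print(f"{name}: {pct}%")
```

If the assumed counts are correct, both groupings sum to 201 and reproduce every reported percentage after rounding.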

Instrument

The data collection instrument was created in Google Forms (https://forms.gle/dchuMwzrQsZNAoeHA) and presented in Spanish, contextualized to the Peruvian setting. The anonymous survey consisted of two sections. The first section described the questionnaire (objective, ethical aspects to consider, and contact information for the principal investigator) and included the question “Do you voluntarily agree to participate in the study?” Through branching logic, participants who selected “yes” proceeded to the survey, whereas the form closed automatically for those who answered “no”. This section also included sociodemographic questions on age, sex, type of university, level of education, professional career, type of device, and approximate time using ChatGPT, in order to characterize the participants thoroughly. The second section contained the construct items: knowledge (5 items adapted from Bodani et al. 2023), attitude (7 items adapted from Bodani et al. 2023), and concern (5 items adapted from Farhi et al. 2023) were measured on a three-point Likert-type scale (1 = Yes; 2 = No; 3 = Maybe), whereas the Perceived Ethics construct (6 items adapted from Malmström et al. 2023) and the Use of ChatGPT construct (6 items adapted from Haleem et al. 2022) used a five-point Likert scale ranging from 1 (Strongly Disagree) to 5 (Strongly Agree).

Procedure and data analysis

An online survey was administered to assess university students’ concerns, knowledge, attitudes, and perceptions of ethics regarding the use of ChatGPT. The study was conducted between September and December 2023 at four Peruvian universities located in the La Libertad and Lima departments. The online form had two sections: the first included an information sheet for participants and sociodemographic questions, and the second contained the questionnaire items. In total, 225 responses were collected; 201 were retained for analysis because the remaining 24 respondents declined to participate by answering “no” to the mandatory consent question at the beginning of the form.

Regarding ethical aspects, the research protocol was approved by the ethics committee of the National University of Trujillo, and then the data were collected through an anonymous online survey. In addition, before participating in the study, all participants read the informed consent form and freely and voluntarily agreed to participate in the study.

Sociodemographic data were analyzed in Excel to obtain frequencies and percentages. To test the research hypotheses, structural equation modeling (SEM) was performed with the statistical software Smart-PLS v.4.0.9.8, which is based on the partial least squares (PLS) technique (Ringle et al. 2022). Reliability was assessed using Cronbach’s alpha coefficient and composite reliability (CR), with values above 0.7 (Table 2). Convergent validity was assessed with the average variance extracted (AVE), with values above 0.5 (Table 2). To evaluate discriminant validity, the Fornell and Larcker (1981) criterion was applied: the square root of each construct’s AVE was compared with that construct’s correlations with all other constructs to ensure that it was higher than each of them (Table 3).
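For readers unfamiliar with the reliability statistic, Cronbach’s alpha for a construct with k items is α = k/(k − 1) × (1 − Σ item variances / variance of the summed score). The minimal Python sketch below computes it from raw item scores. The response matrix is hypothetical and is not the study’s data, and Smart-PLS computes alpha internally rather than through a function like this one.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for one construct.

    `items` is a list of item columns; each column holds one score per respondent.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of summed scores)
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # summed score per respondent
    item_var = sum(variance(col) for col in items)    # sample variances (n - 1)
    return k / (k - 1) * (1 - item_var / variance(totals))


# Hypothetical five-point responses from six respondents to three items of one construct.
pe_items = [
    [4, 5, 3, 4, 2, 5],
    [4, 4, 3, 5, 2, 5],
    [5, 4, 2, 4, 3, 5],
]
alpha = cronbach_alpha(pe_items)
print(f"alpha = {alpha:.3f}, above 0.70: {alpha > 0.70}")
```

Using sample variances for both the items and the total keeps the ratio consistent; using population variances for both would give the same alpha.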

Results

Descriptive results

Table 4 provides a descriptive analysis of the evaluated constructs. For the Knowledge construct, items KNW1 to KNW5 had medians ranging from 1.000 to 2.000, suggesting that responses tended toward the lower end of the scale. The standard deviation ranged from 0.441 to 0.906, indicating moderate variability in the responses. The range is 2 for all items, meaning that the lowest and highest responses differ by two points; on this three-point scale, responses therefore reached both ends of the scale (1 and 3).
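To make the range interpretation concrete: the range is the maximum minus the minimum response, so a range of 2 on a 1–3 scale means both endpoints were used. The sketch below computes the three statistics reported in Table 4 for a single item with Python’s standard `statistics` module. The responses are hypothetical (not the study’s data), and the sketch assumes that Table 4 reports sample standard deviations, which the text does not state.

```python
from statistics import median, stdev


def describe(responses):
    """Median, sample standard deviation, and range (max - min) of one item."""
    return {
        "median": median(responses),
        "sd": stdev(responses),
        "range": max(responses) - min(responses),
    }


# Hypothetical answers to one three-point item (1 = Yes, 2 = No, 3 = Maybe).
knw_item = [1, 1, 1, 2, 1, 3, 1, 2, 1, 1]
item_stats = describe(knw_item)
print(item_stats)
```

Here the range equals 2 precisely because at least one respondent chose 1 and at least one chose 3.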

Regarding the Attitude construct, items ATT1 to ATT7 predominantly had a median of 1.000, except for ATT4 and ATT7, whose median is 2.000, suggesting a generally negative or neutral attitude. The standard deviation ranged from 0.623 to 0.936, indicating considerable variability in the responses. The range is 2 for all attitude items, so responses spanned the full three-point scale.

In terms of Student Concerns, items SC1 to SC5 had medians ranging from 1.000 to 4.000, reflecting greater concern about certain aspects. The standard deviations ranged from 0.928 to 0.974, suggesting high variability in student concerns. The range was 3, indicating that responses spanned four consecutive points of the scale.

For the Perceived Ethics construct, items PE1 to PE6 consistently had medians of 4.000, indicating a positive perception of ethics. The standard deviations varied between 0.904 and 0.994, showing considerable variability in ethical perception. The range was 3, indicating that responses spanned four consecutive points of the scale.

Finally, for the ChatGPT Usage construct, items GPTUS1 to GPTUS6 had medians ranging from 1.000 to 4.000, indicating varying levels of use of and attitudes toward ChatGPT. The standard deviations ranged from 0.869 to 1.097, indicating high variability in the use of ChatGPT. The range was 4, implying that responses covered the entire 5-point scale and reflecting a wide range of experiences and perceptions regarding the use of ChatGPT.
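The medians, standard deviations, and ranges summarized in Table 4 are simple item-level statistics. As a sketch only, assuming integer-coded Likert responses and the sample (n − 1) standard deviation, which the paper does not specify, the hypothetical function below computes them and makes explicit that a range of r means responses span r + 1 consecutive scale points.

```python
from statistics import median, stdev


def describe_item(responses: list[int]) -> dict[str, float]:
    """Median, standard deviation, and range for one Likert-type item.

    Assumes integer-coded responses and uses the sample standard deviation
    (n - 1 denominator); the study does not state which variant it used.
    """
    lowest, highest = min(responses), max(responses)
    return {
        "median": median(responses),
        "sd": stdev(responses),
        # Range: distance between the most extreme responses.
        "range": highest - lowest,
        # Consecutive scale points from the lowest to the highest response, inclusive.
        "points_spanned": highest - lowest + 1,
    }


# Hypothetical responses to a single item from five respondents.
print(describe_item([1, 1, 2, 3, 2]))
```

With responses between 1 and 3, the range is 2 while the responses cover three scale points (1, 2, and 3).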

Model measurement results

For convergent validity, as shown in Table 2, factor loadings, Cronbach’s alpha (α), composite reliability (CR), and average variance extracted (AVE) were analyzed. Hair (2009) recommends that the factor loadings of all items exceed 0.50; the loadings of the items in this study ranged from 0.703 to 0.977, satisfying this threshold. Following Nunnally (1994), α and CR values greater than 0.70 are considered adequate, and as shown in Table 2, all the constructs meet this criterion. Finally, according to Teo and Noyes (2014), AVE values above 0.50 are considered adequate, and as Table 2 shows, all the constructs exceed this threshold.
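The CR and AVE thresholds above can be checked directly from standardized outer loadings. The sketch below uses the commonly cited formulas CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ² / k; the function names and the three loadings of 0.8 in the example are hypothetical and are not taken from Table 2.

```python
def composite_reliability(loadings: list[float]) -> float:
    """Composite reliability from standardized outer loadings."""
    total = sum(loadings)
    # Error variance of each standardized indicator is 1 - loading^2.
    error = sum(1 - lam * lam for lam in loadings)
    return total * total / (total * total + error)


def average_variance_extracted(loadings: list[float]) -> float:
    """Mean of the squared standardized loadings."""
    return sum(lam * lam for lam in loadings) / len(loadings)


def meets_thresholds(loadings: list[float]) -> dict[str, bool]:
    """Apply the cutoffs cited in the text: loadings > 0.50, CR > 0.70, AVE > 0.50."""
    return {
        "loadings": all(lam > 0.50 for lam in loadings),
        "cr": composite_reliability(loadings) > 0.70,
        "ave": average_variance_extracted(loadings) > 0.50,
    }


example = [0.8, 0.8, 0.8]  # hypothetical loadings for one construct
print(composite_reliability(example), average_variance_extracted(example))
print(meets_thresholds(example))
```

With three loadings of 0.8, CR ≈ 0.842 and AVE = 0.64, so all three cutoffs are met.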

To analyze the discriminant validity of the research model, the criterion of Fornell and Larcker (1981) was used, according to which discriminant validity is established when the square root of each construct’s AVE, located on the diagonal, is greater than that construct’s correlations with the other constructs, located off the diagonal. Table 3 shows that the diagonal values are greater than the off-diagonal correlations; consequently, the measurement model has adequate discriminant validity.
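The Fornell and Larcker (1981) comparison can be expressed as a small check: a construct passes if the square root of its AVE exceeds the absolute value of every correlation it has with another construct. The sketch below assumes that AVE values and inter-construct correlations are already available (for example, from SmartPLS output); the function name, construct labels, and numbers are hypothetical.

```python
from math import sqrt


def fornell_larcker(
    ave: dict[str, float], corr: dict[tuple[str, str], float]
) -> dict[str, bool]:
    """Return, per construct, whether sqrt(AVE) exceeds all its inter-construct correlations.

    `corr` holds each pair of distinct constructs once, e.g. {("KNW", "PE"): 0.41}.
    """
    passed = {}
    for construct, value in ave.items():
        root = sqrt(value)
        related = [abs(r) for (a, b), r in corr.items() if construct in (a, b) and a != b]
        passed[construct] = all(root > r for r in related)
    return passed


# Hypothetical values: sqrt(0.64) = 0.80 and sqrt(0.49) = 0.70.
print(fornell_larcker({"KNW": 0.64, "PE": 0.49}, {("KNW", "PE"): 0.75}))
```

Here KNW passes (0.80 > 0.75) but PE does not (0.70 < 0.75), so this hypothetical pair would not show discriminant validity.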

Research hypothesis testing

Hypothesis testing for the SEM was conducted using the partial least squares (PLS) technique in SmartPLS version 4.0.9.8. First, the goodness-of-fit indices were considered acceptable: χ² = 4248.817, SRMR = 0.087, d_ULS = 4.009, d_G = 1.628, and NFI = 0.959.
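Of the fit indices reported, SRMR has a particularly simple definition: the square root of the mean squared difference between the observed and the model-implied correlation matrices. The sketch below uses the common convention of averaging over the p(p + 1)/2 unique elements (lower triangle including the diagonal); whether SmartPLS uses exactly this convention is an assumption to check against its documentation, and the function name and 2 × 2 matrices are hypothetical.

```python
from math import sqrt


def srmr(observed: list[list[float]], implied: list[list[float]]) -> float:
    """Standardized root mean square residual between two correlation matrices."""
    p = len(observed)
    squared_sum = 0.0
    count = 0
    for i in range(p):
        for j in range(i + 1):  # lower triangle including the diagonal
            squared_sum += (observed[i][j] - implied[i][j]) ** 2
            count += 1
    return sqrt(squared_sum / count)


# Hypothetical 2 x 2 matrices: the model under-reproduces one correlation by 0.2.
print(srmr([[1.0, 0.5], [0.5, 1.0]], [[1.0, 0.3], [0.3, 1.0]]))
```

Only one of the three unique elements differs (by 0.2), so SRMR = √(0.04 / 3) ≈ 0.115 in this example.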

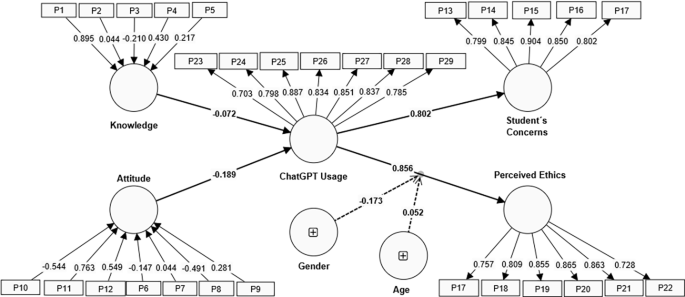

Second, Table 5 and Fig. 2 show the standardized path coefficients, p values, and other results. Two of the six hypotheses were supported. Both supported hypotheses had positive path coefficients greater than 0.50 and were statistically significant (p < 0.05). Furthermore, the two paths of the accepted hypotheses were highly influential (ChatGPT Usage → Perceived Ethics = 12.985; ChatGPT Usage → Students’ Concerns = 23.754).

Third, the coefficient of determination (R²) values indicate that knowledge and attitude explain 84.2% of the variance in ChatGPT Usage, whereas ChatGPT Usage explains 59% of the variance in Perceived Ethics and 64% of the variance in Students’ Concerns.
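The R² values above express the share of an endogenous construct’s variance accounted for by its predictors. As a minimal illustration of the definition R² = 1 − SS_res / SS_tot, the sketch below compares observed scores with model predictions. The function name and the scores are hypothetical; in PLS-SEM, R² is obtained from the regression of the endogenous latent variable scores on their predictors.

```python
def r_squared(observed: list[float], predicted: list[float]) -> float:
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    if len(observed) != len(predicted):
        raise ValueError("observed and predicted must have the same length")
    mean = sum(observed) / len(observed)
    ss_tot = sum((o - mean) ** 2 for o in observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return 1 - ss_res / ss_tot


# Hypothetical construct scores and predictions for four respondents.
print(r_squared([1, 2, 3, 4], [1.5, 1.5, 3.5, 3.5]))
```

Here the predictions leave 1.0 of the total 5.0 sum of squares unexplained, so R² = 0.8, that is, 80% of the variance is explained.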

Research model solved

Figure 2 illustrates the resolved structural model of ChatGPT usage, showing the standardized path coefficients for the hypothesized relationships among knowledge, attitude, ChatGPT usage, student concerns, and perceived ethics, as well as the moderating effects of gender and age on these relationships. The coefficients on the arrows represent the strength and direction of these relationships, with significant paths highlighted to denote their impact on the model.

Discussion

The main objective of the study was to evaluate the concerns, knowledge, attitudes, and ethics of university students regarding the use of ChatGPT. The research model showed acceptable fit indices. The R² values showed that knowledge and attitude explained 84.2% of the variance in ChatGPT usage, whereas ChatGPT Usage explained 59% of the variance in Perceived Ethics and 64% of the variance in Students’ Concerns.

Regarding hypothesis 1, the results indicate that students’ knowledge about ChatGPT does not positively influence their use of this system (B = -0.072; p = 0.586 > 0.05). In another context, Duong et al. (2023) reported that students who believe that ChatGPT facilitates knowledge exchange are more motivated and more likely to use it in the future. Huedo-Martínez et al. (2023) maintain that attitudes toward the adoption and use of technology vary by area of knowledge; this hypothesis was therefore probably not confirmed because, although all participants belonged to Generation Z, they came from different degree programs. In addition, previous familiarity with similar technologies could have played an important role in students’ perceptions and use of ChatGPT. Students with prior experience with artificial intelligence tools or chatbot systems may approach and value ChatGPT differently from those without such experience. This aspect, which was not directly covered by our research, could explain the variability in the acceptance and use of ChatGPT among students from different disciplines.

Regarding the second research hypothesis, the results showed that attitude does not influence the use of ChatGPT (B = -0.189; p = 0.404 > 0.05). In this respect, [53] indicated that students’ attitudes toward AI, such as optimism, skepticism, or apathy, have a significant impact on their willingness to interact with and acquire knowledge through these technologies. Similarly, Halaweh (2023) noted that a negative attitude results in resistance to using AI, limiting learning opportunities. This relationship was therefore likely not confirmed because students may be skeptical about the benefits of ChatGPT, which affects their willingness to use this language model. Such skepticism may stem from various sources, such as concerns about the accuracy of the information provided by this AI-based tool, fears of overreliance on technology in learning environments, or the belief that these tools could undermine critical and analytical thinking. These issues underscore the intricate dynamics between attitudes toward artificial intelligence and its successful integration into educational contexts. Although positive views of AI may promote the intention to use tools such as ChatGPT, the adoption of AI technologies is not determined by attitudes alone. Other elements, including perceived usefulness, ease of use, and the potential risks associated with the technology, also contribute significantly to the adoption process. The relationship between attitudes toward AI and the effective implementation of AI-based tools should therefore not be viewed as straightforward; it represents a complex interaction of multiple factors that must be carefully evaluated when incorporating AI technologies into educational strategies.

The impact of contextual and individual factors on attitudes toward ChatGPT should not be overlooked. Prior experience with technology, the level of understanding of AI, and social and cultural contexts can profoundly influence perceptions of and attitudes toward ChatGPT. This underscores the need for interventions designed to increase receptiveness to ChatGPT, which should address these foundational elements by providing clear and precise information about its functions, limitations, and potential benefits for educational practice. When effectively incorporated into educational settings, ChatGPT can provide numerous advantages that enhance the learning experience. For instance, it offers immediate feedback, personalized assistance, and access to a broad range of information, enabling deeper exploration of subjects and fostering autonomous learning. Additionally, ChatGPT can support educators in developing interactive educational content, creating assessment items, and providing tailored assistance to students with varied learning needs. By explaining these benefits and illustrating how ChatGPT can supplement and enhance conventional teaching approaches, educators can help students and other stakeholders appreciate the role this AI tool can play in education. Such understanding is likely to lead to more favorable attitudes and greater readiness to embrace ChatGPT as an essential educational resource.

According to hypothesis 3, the results demonstrated that the use of ChatGPT influenced students’ concerns (B = 0.802; p < 0.001). Consistent with this finding, Famaye et al. (2023) and Singh et al. (2023) reported that students have concerns about the use of ChatGPT in educational settings, including skepticism about its positive effects on learning and concerns about potential fraud, misinformation, and equity. Students also believe that universities should provide clearer guidelines and better education on how and where the tool can be used for learning activities (Singh et al. 2023). The use of ChatGPT thus generates concerns among students about its actual benefits, privacy, and misinformation. This finding underlines the need for a comprehensive framework that addresses both the potential and the challenges of ChatGPT in education (Ayhan 2023). To address these concerns, educational institutions should adopt a proactive approach, offering specific training on the ethical and effective use of ChatGPT and on identifying and preventing fraud and misinformation.

To address concerns about academic integrity and the appropriate use of ChatGPT in educational contexts, explicit policies and guidelines that extend beyond training programs are needed. These policies should ensure that technology enhances conventional teaching methods, promoting deeper and more critical learning without undermining academic integrity or student equity. An effective strategy is to integrate academic integrity principles and practices directly into teaching and learning modules. For instance, educators can design assignments and activities that require students to critically evaluate the output generated by ChatGPT, compare it with other sources, and reflect on the implications of using AI-based tools in their learning. Through such activities, students can gain a deeper understanding of the responsible use of ChatGPT and develop the competencies needed to uphold academic integrity amid technological change. Furthermore, transparency about the learning scenarios in which ChatGPT can be a beneficial resource, and clarity about its limitations, are crucial for fostering trust in its use. Educators should hold open dialogs with students about the advantages and challenges of using ChatGPT, offering advice on when and how to use the tool effectively and ethically. In this way, educators can foster a culture of responsible AI use that goes beyond isolated training initiatives and becomes a fundamental component of the educational framework.

Similarly, in relation to hypothesis 4, the results suggest that the use of ChatGPT influences students’ perceptions of ethics (B = 0.856; p < 0.001). In this respect, Famaye et al. (n.d.-d) noted that students view ChatGPT as a valuable learning tool but have concerns about deception, misinformation, and equity. The use of ChatGPT in academia has both positive and negative effects, with concerns about academic dishonesty and dependence on technology (Arista et al. 2023; Črček & Patekar 2023a; Farhi et al. 2023; Fuchs and Aguilos 2023; Hung & Chen, 380 C.E.; Ogugua et al. 2023; Robledo et al. 2023; Zeb et al. 2024a; Zhong et al. 2023). Students are also concerned that relying too heavily on ChatGPT could harm their cognitive abilities (Bodani et al. 2023). It is therefore important to address the ethical issues associated with the use of ChatGPT in educational settings to ensure that its implementation contributes positively to students’ academic and personal development. The influence of ChatGPT use on students’ perceived ethics underscores the importance of fostering ongoing dialog about ethical values and individual responsibilities in the use of AI technologies.

We believe that, to address students’ ethical concerns, educational institutions must implement training programs that specifically address academic integrity and responsible technology use. These programs should teach students how to use ChatGPT in a way that complements their learning without compromising their intellectual development or academic honesty, including how to properly cite AI-generated material and how to distinguish between acceptable collaboration with these tools and plagiarism.

Furthermore, it is crucial to promote a deeper understanding of the limitations of ChatGPT and other AI tools, as well as their implications for privacy and data security. By better understanding these limitations, students can make more informed and ethical decisions about when and how to use these technologies.

Moderating effects of sex and age on the relationship between ChatGPT use and perceived ethics

The findings of the present study indicate that neither gender nor age moderates the influence of ChatGPT use on perceived ethics among Generation Z students. Few studies have used these demographic variables to evaluate this relationship. In another context, Črček and Patekar (2023a) and Famaye et al. (2023) report that students perceive using ChatGPT as an idea generator as its most ethically acceptable use, whereas other uses are seen as unethical, which leads to concerns about academic misconduct and equity. Lopes et al. (2023) reported differences between the responses generated by ChatGPT and those generated by students aged 18 to 43 years. In the United Kingdom, students expressed some skepticism about the benefits of ChatGPT for their learning. These moderating effects may therefore not have been confirmed because of various contextual factors; the results suggest that male and female Generation Z students perceive risks such as dependence and misinformation in a similar way when interacting with this system.

Theoretical and practical implications

The knowledge and attitudes of students toward ChatGPT do not automatically result in its broader adoption, underscoring the complexity of the factors that influence its acceptance. The ethical concerns raised by the use of ChatGPT highlight the necessity of developing theories that address the wider psychosocial implications of relying on and delegating tasks to AI, especially within the realm of academic integrity.

As students increasingly depend on ChatGPT for their academic tasks, they may outsource essential cognitive skills such as critical thinking, problem solving, and the generation of original content to this technology. This shift can lead to a reduced sense of personal accountability in upholding academic integrity, as students might view AI as a replacement for their own intellectual labor. Consequently, this transfer of functions to AI can contribute to the degradation of academic integrity, manifesting in behaviors such as plagiarism, the uncritical acceptance of AI-generated content, and a deficiency in original thought.

The evidence indicates that merely possessing knowledge of, or holding favorable attitudes toward, ChatGPT does not guarantee its effective integration into educational practices. A comprehensive approach that considers the interplay of multiple factors in technology acceptance models, while also addressing the psychosocial effects of dependency on AI and its potential impact on academic integrity, is essential for understanding and mitigating the challenges posed by integrating ChatGPT into educational contexts.

Existing theoretical models, such as the Technology Acceptance Model (TAM) and the Unified Theory of Acceptance and Use of Technology (UTAUT2), could be expanded to include factors specific to AI technologies such as ChatGPT. These models should consider how previous experience with similar technologies, performance expectancy, effort expectancy, social influence, and facilitating conditions affect predisposition toward AI use. Furthermore, it is critical to understand how attitudes toward AI, such as optimism or skepticism, develop and how these attitudes affect not only the intention to use AI but also actual adoption and usage practices.

Limitations and future studies

The study has the following limitations. First, there was no established scale for measuring students’ concerns or perceptions of ethics regarding the use of ChatGPT; therefore, although the quality tests of the study model were acceptable, these constructs may not have been measured with high precision. Second, the survey was limited to the experiences of Generation Z students who had used ChatGPT. Finally, the sample was obtained through nonprobabilistic (accidental) sampling, so the results may not be generalizable to other contexts.

For future work, it would be important to develop new scales to assess students’ perceived ethics. It would also be beneficial to expand the study to include participants from different generations, academic disciplines, and cultures to better understand how these variables may influence the perception and use of ChatGPT and other artificial intelligence technologies. Such an expansion could provide a more nuanced and generalizable view of AI acceptance in educational contexts.

Another important aspect for future research is the detailed analysis of how different educational and professional experiences with AI, including both positive and negative experiences, affect the willingness to adopt technologies such as ChatGPT. Understanding the dynamics of these previous experiences could offer valuable insights for the development of pedagogical and technological strategies that foster more effective and ethical adoption of AI in education.

When investigating the implementation strategies and regulatory frameworks that educational institutions might establish to promote the ethical and responsible utilization of ChatGPT, it is crucial to acknowledge the diverse needs and challenges that vary across different academic disciplines. Establishing clear policies concerning acceptable use, academic integrity, data privacy, and security is essential; however, these policies may require adaptation to meet the specific needs and contexts of each discipline.

For instance, in disciplines such as creative writing or the arts, the use of ChatGPT could raise distinct concerns regarding originality and authenticity, necessitating policies tailored to these specific issues. Conversely, in fields such as mathematics or computer science, the emphasis might shift toward ensuring that students understand the underlying principles and can apply them independently rather than relying on AI-generated solutions.

Additionally, training programs for both students and educators should consider the varied applications of ChatGPT across disciplines. These programs should offer guidance on integrating ChatGPT effectively into particular disciplinary contexts while also addressing the potential risks and challenges of its use in each area.

By acknowledging the need for nuanced, context-specific approaches to regulatory frameworks and implementation strategies, educational institutions can more effectively support the ethical and responsible use of ChatGPT across a broad spectrum of disciplines, ensuring that the technology augments learning outcomes while preserving academic integrity.

Furthermore, given the importance of attitudes toward AI in the adoption of technologies such as ChatGPT, future research could explore in depth the factors that contribute to the development of these attitudes. This could include studies examining the impact of informational campaigns, practical experiences with technology, and the role of media and social networks in shaping perceptions about AI.

Future studies should investigate how perceptions of the ethics and use of ChatGPT differ by demographic variables such as gender, age, and cultural background. This could yield important insights into the distinct concerns of various groups and guide the development of more inclusive, equitable, and culturally sensitive tools and policies. For instance, if specific age groups tend to perceive ChatGPT as harming academic fairness, educational institutions could develop tailored interventions to address these perceptions.

Cultural factors might also significantly influence attitudes toward AI-based tools such as ChatGPT. Students from diverse cultural backgrounds might differ in their familiarity with, confidence in, or receptiveness to AI technologies, which could affect their readiness to adopt ChatGPT for educational purposes. Cultural norms and values concerning education, academic integrity, and the role of technology in learning may also vary, resulting in different perceptions of the ethicality and suitability of using ChatGPT in educational contexts. For example, some cultural groups may value individual effort and originality more highly, whereas others might favor collaborative learning and the integration of technological support. By conducting research on these cultural differences, educational institutions can devise more culturally attuned approaches to incorporating ChatGPT into their pedagogical strategies. This might entail customizing training programs, support services, and policies to meet the particular needs and concerns of students from varied cultural backgrounds, ensuring that the technology is used in a way that respects cultural distinctions while fostering academic excellence and integrity.

Conclusions

This research contributes to the literature by suggesting that models such as TAM and UTAUT2 should incorporate variables specific to AI technologies. This would allow a deeper understanding of how perceived usefulness, effort, expectations, and previous experience affect students’ disposition toward using ChatGPT, providing a more solid foundation for the development of AI-oriented educational strategies and technologies.

Students’ knowledge of and positive attitudes toward ChatGPT do not guarantee its adoption and effective use. Despite the common belief that knowledge and positive attitudes toward a technology drive its use, our findings suggest that the relationship is more nuanced. Factors such as the area of knowledge, previous experience with similar technologies, and cultural and social context may play crucial roles in how students perceive and decide to use ChatGPT. Therefore, deeper theoretical models that consider context, disciplinary variables, and previous experience with AI are needed.

It is important to investigate how attitudes of optimism, skepticism, or apathy toward AI develop and how these attitudes influence the intention to use technologies such as ChatGPT.

The dependence of students on AI tools such as ChatGPT raises ethical concerns that must be addressed with training programs on responsible use. The significant impact of ChatGPT use on students’ ethical concerns highlights the critical need to develop and apply strong ethical frameworks in the implementation of AI in education.

This study examined the relationship between ChatGPT usage and perceived ethics, taking into account potential variations based on gender and age. Perceived ethics refers to students’ beliefs about the moral and ethical consequences of using ChatGPT for academic purposes. The results indicated no significant differences in this relationship by gender or age, suggesting that male and female students, as well as students of different ages, share similar views on the ethical implications of using ChatGPT. Nonetheless, further research with diverse samples and in varied educational contexts is required to examine this relationship in more depth and to investigate potential demographic variations. Understanding how students perceive the ethical ramifications of using ChatGPT is vital for developing effective guidelines, policies, and training programs that foster the responsible and ethical use of AI-driven tools in education.

Finally, HEIs must develop policies, specific guidelines, and training programs to promote the ethical use of ChatGPT, addressing issues such as academic integrity, privacy, misinformation, and fraud. This proactive approach will not only help mitigate students’ concerns but also promote more responsible and critical use of these technologies.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Abbreviations

- AI: Artificial intelligence
- HEIs: Higher education institutions
- TAM: Technology acceptance model
- UTAUT2: Unified theory of acceptance and use of technology
- α: Cronbach’s alpha
- AVE: Average variance extracted
- CR: Composite reliability
- ChatGPT: Chat-Generative Pretrained Transformer
- SEM: Structural equation model
- PLS: Partial least squares

References

Abd-El-Khalick F, Summers R, Said Z, Wang S, Culbertson M (2015) Development and large-scale validation of an instrument to assess Arabic-speaking students’ attitudes toward science. Int J Sci Educ 37(16):2637–2663. https://doi.org/10.1080/09500693.2015.1098789

Abrahamsson P, Anttila T, Hakala J, Ketola J, Knappe A, Lahtinen D, Liukko V, Poranen T, Ritala T-M, Setälä M (2023) ChatGPT as a fullstack web developer: early results. LNBIP 489:201–209, Amsterdam. https://doi.org/10.1007/978-3-031-48550-3_20

Acosta-Enriquez BG, Arbulú Ballesteros MA, Huamaní Jordan O, López Roca C, Tirado S, K (2024) Analysis of college students’ attitudes toward the use of ChatGPT in their academic activities: effect of intent to use, verification of information and responsible use. BMC Psychol 12(1). https://doi.org/10.1186/s40359-024-01764-z

Adelekan S, Williamson M, Atiku S (2018) Influence of social entrepreneurship pedagogical initiatives on students’ attitudes and behaviours. J Bus Retail Manage Res 12(03). https://doi.org/10.24052/JBRMR/V12IS03/ART-15

Ajlouni A, Wahba F, Almahaireh A (2023) Students’ attitudes towards using ChatGPT as a learning tool: the case of the University of Jordan. 17(18):99–117. https://doi.org/10.3991/ijim.v17i18.41753

Ajzen I (2001) Nature and operation of attitudes. Ann Rev Psychol 52:27–58. https://doi.org/10.1146/ANNUREV.PSYCH.52.1.27

Almasan O, Buduru S, Lin Y, Karan-Romero M, Salazar-Gamarra RE, Leon-Rios XA (2023) Evaluation of attitudes and perceptions in students about the use of artificial intelligence in dentistry. Dent J 11(5):125. https://doi.org/10.3390/DJ11050125

Arista A, Shuib L, Ismail M (2023) An overview ChatGPT in higher education in Indonesia and Malaysia. pp 273–277, Online. https://doi.org/10.1109/ICIMCIS60089.2023.10349053

Ayhan Y (2023) The impact of artificial intelligence on psychiatry: benefits and concerns - an assay from a disputed ‘author’. Turkish J Psychiatry. https://doi.org/10.5080/u27365

Bin-Nashwan SA, Sadallah M, Bouteraa M (2023) Use of ChatGPT in academia: academic integrity hangs in the balance. Technol Soc 75:102370. https://doi.org/10.1016/j.techsoc.2023.102370

Bodani N, Lal A, Maqsood A, Altamash S, Ahmed N, Heboyan A (2023) Knowledge, attitude, and practices of general population toward utilizing ChatGPT: a cross-sectional study. 13(4). https://doi.org/10.1177/21582440231211079

Breckler SJ (1984) Empirical validation of affect, behavior, and cognition as distinct components of attitude. J Personal Soc Psychol 47(6):1191–1205. https://doi.org/10.1037/0022-3514.47.6.1191

Castillo-Vergara M, Álvarez-Marín A, Pinto V, E., Valdez-Juárez LE (2022) Technological acceptance of Industry 4.0 by students from rural areas. Electronics 11(14):2109. https://doi.org/10.3390/electronics11142109

Chan C, Hu W (2023) Students’ voices on generative AI: perceptions, benefits, and challenges in higher education. Int J Educational Technol High Educ 20(1):43. https://doi.org/10.1186/s41239-023-00411-8

Črček N, Patekar J (2023a) Writing with AI: university students’ use of ChatGPT. 9(4):128–138. https://doi.org/10.17323/jle.2023.17379

Crompton H, Burke D (2023) Artificial intelligence in higher education: the state of the field. Int J Educational Technol High Educ 20(1). https://doi.org/10.1186/s41239-023-00392-8

Duong C, Vu T, Ngo T (2023) Applying a modified technology acceptance model to explain higher education students’ usage of ChatGPT: a serial multiple mediation model with knowledge sharing as a moderator. Int J Manage Educ 21(3):100883. https://doi.org/10.1016/J.IJME.2023.100883

Elendu C, Amaechi D, Elendu T, Jingwa K, Okoye O, John Okah M, Ladele J, Farah A, Alimi HA (2023) Ethical implications of AI and robotics in healthcare: a review. Medicine 102(50). https://doi.org/10.1097/MD.0000000000036671

Essien A, Chukwukelu G, Essien V (2020) Opportunities and challenges of adopting artificial intelligence for learning and teaching in higher education, pp 67–78. https://doi.org/10.4018/978-1-7998-4846-2.ch005

Famaye T, Adisa I, Irgens G (2023) To ban or embrace: students’ perceptions towards adopting advanced AI chatbots in schools. 1895:140–154. https://doi.org/10.1007/978-3-031-47014-1_10

Farhi F, Jeljeli R, Aburezeq I, Dweikat FF, Al-shami SA, Slamene R (2023) Analyzing the students’ views, concerns, and perceived ethics about chat GPT usage. Comput Educ Artif Intell 5:100180. https://doi.org/10.1016/J.CAEAI.2023.100180

Fishbein M, Ajzen I (1975) Strategies of change: active participation. In: Belief, attitude, intention, and behavior: an introduction to theory and research, pp 411–450

Fornell C, Larcker D (1981) Evaluating structural equation models with unobservable variables and measurement error. J Mark Res 18(1):39. https://doi.org/10.2307/3151312

Fuchs K, Aguilos V (2023) Integrating artificial intelligence in higher education: empirical insights from students about using ChatGPT. 13(9):1365–1371. https://doi.org/10.18178/ijiet.2023.13.9.1939

Garani-Papadatos T, Natsiavas P, Meyerheim M, Hoffmann S, Karamanidou C, Payne SA (2022) Ethical principles in Digital Palliative Care for children: the MyPal Project and experiences made in Designing a Trustworthy Approach. Front Digit Health 4. https://doi.org/10.3389/fdgth.2022.730430

Goodman B (2023) Privacy without persons: a Buddhist critique of surveillance capitalism. AI Ethics 3(3):781–792. https://doi.org/10.1007/s43681-022-00204-1

Goswami A, Dutta S (2015) Gender differences in technology Usage—A literature review. Open J Bus Manage 4(1). https://doi.org/10.4236/ojbm.2016.41006

Guerrero-Dib JG, Portales L, Heredia-Escorza Y (2020) Impact of academic integrity on workplace ethical behavior. Int J Educational Integr 16(1). https://doi.org/10.1007/s40979-020-0051-3

Gundu T, Chibaya C (2023) Demystifying the Impact of ChatGPT on Teaching and Learning. 1862:93–104, Gauteng. https://doi.org/10.1007/978-3-031-48536-7_7

Gupta A, Guglani A (2023) Scenario Analysis of Malicious Use of Artificial Intelligence and Challenges to Psychological Security in India. In: The Palgrave Handbook of Malicious Use of AI and Psychological Security. https://doi.org/10.1007/978-3-031-22552-9_15

Hair J (2009) Multivariate Data Analysis. Faculty and Research Publications. https://digitalcommons.kennesaw.edu/facpubs/2925

Halaweh M (2023) ChatGPT in education: strategies for responsible implementation. 15(2). https://doi.org/10.30935/cedtech/13036

Haleem A, Javaid M, Singh RP (2022) An era of ChatGPT as a significant futuristic support tool: a study on features, abilities, and challenges. BenchCouncil Trans Benchmarks Stand Evaluations 2(4). https://doi.org/10.1016/J.TBENCH.2023.100089

Hassan A (2023) The Usage of Artificial Intelligence in Education in Light of the Spread of ChatGPT. Springer Science and Business Media Deutschland GmbH 687–702. https://doi.org/10.1007/978-981-99-6101-6_50

Holden OL, Norris ME, Kuhlmeier VA (2021) Academic Integrity in Online Assessment: A Research Review. Front Educ 6. https://doi.org/10.3389/feduc.2021.639814

Hsu S, Li T, Zhang Z, Fowler M, Zilles C, Karahalios K (2021) Attitudes Surrounding an Imperfect AI Autograder. Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems 1–15. https://doi.org/10.1145/3411764.3445424

Huedo-Martínez S, Molina-Carmona R, Llorens-Largo F (2023) Study on the attitude of Young people towards Technology. 10925:26–43. https://doi.org/10.1007/978-3-319-91152-6_3

Hung J, Chen J (2023) The benefits, risks and regulation of using ChatGPT in Chinese academia: a content analysis. 12(7). https://doi.org/10.3390/socsci12070380

Irfan M, Aldulaylan F, Alqahtani Y (2023) Ethics and privacy in Irish higher education: a comprehensive study of Artificial Intelligence (AI) tools implementation at University of Limerick. Global Social Sci Rev VIII(II):201–210. https://doi.org/10.31703/gssr.2023(VIII-II).19

Isaac F, Diaz N, Kapphahn J, Mott O, Dworaczyk D, Luna-Gracía R, Rangel A (2023) Introduction to AI in Undergraduate Engineering Education 2023. https://doi.org/10.1109/FIE58773.2023.10343187

Karakose T, Tülübaş T (2023) How can ChatGPT facilitate teaching and learning: implications for Contemporary Education. 12(4):7–16. https://doi.org/10.22521/EDUPIJ.2023.124.1

Khairatun Hisan U, Miftahul Amri M (2022) Artificial Intelligence for Human Life: a critical opinion from Medical Bioethics perspective – part II. J Public Health Sci 1(02):112–130. https://doi.org/10.56741/jphs.v1i02.215

Kleebayoon A, Wiwanitkit V (2023) Artificial Intelligence, Chatbots, Plagiarism and Basic Honesty: comment. Cell Mol Bioeng 16(2):173–174. https://doi.org/10.1007/s12195-023-00759-x

Köbis L, Mehner C (2021) Ethical questions raised by AI-Supported mentoring in Higher Education. Front Artif Intell 4. https://doi.org/10.3389/frai.2021.624050

Kshetri N (2023) ChatGPT in Developing Economies. 25(2):16–19. https://doi.org/10.1109/MITP.2023.3254639

Kuka L, Hörmann C, Sabitzer B (2022) Teaching and learning with AI in higher education: a scoping review. Springer Sci Bus Media Deutschland GmbH 456:551–571. https://doi.org/10.1007/978-3-031-04286-7_26

Ligorio M (2022) Artificial Intelligence and learning [INTELLIGENZA ARTIFICIALE E APPRENDIMENTO]. 34(1):21–26. https://doi.org/10.1422/103844

Lopes E, Jain G, Carlbring P, Pareek S (2023) Talking Mental Health: a Battle of Wits Between Humans and AI 2023. https://doi.org/10.1007/s41347-023-00359-6

Malmström H, Stöhr C, Ou A (2023) Chatbots and other AI for learning: a survey of use and views among university students in Sweden. https://doi.org/10.17196/CLS.CSCLHE/2023/01

Mich L, Garigliano R (2023) ChatGPT for e-Tourism: a technological perspective. 25(1):1–12. https://doi.org/10.1007/s40558-023-00248-x

Ming W, Bacon K (2023) How artificial intelligence promotes the education in China. ACM Int Conf Proceeding Ser 124–128. https://doi.org/10.1145/3588243.3588273

Montenegro-Rueda M, Fernández-Cerero J, Fernández-Batanero JM, López-Meneses E (2023) Impact of the implementation of ChatGPT in education: a systematic review. Computers 12(8):153. https://doi.org/10.3390/computers12080153

Muñoz S, Gayoso G, Huambo A, Tapia R, Incaluque J, Aguila O, Cajamarca J, Acevedo J, Huaranga H, Arias-Gonzáles J (2023) Examining the impacts of ChatGPT on Student Motivation and Engagement. 23(1):1–27

Nikolaeva IV, Levchenko AV, Zizikova SI (n.d.) Artificial Intelligence Technologies for Evaluating Quality and Efficiency of Education. 378:360–365. https://doi.org/10.1007/978-3-031-38122-5_50

Nunnally JC, Bernstein IH (1994) Psychometric theory. McGraw-Hill, New York. https://search.worldcat.org/title/28221417

Ogugua D, Yoon S, Lee D (2023) Academic Integrity in a Digital era: should the Use of ChatGPT be banned in schools? 28(7):1–10. https://doi.org/10.17549/gbfr.2023.28.7.1

Prem E (2019) Artificial intelligence for innovation in Austria. Technol Innov Manage Rev 9(12):5–15. https://doi.org/10.22215/timreview/1287

Putra F, Rangka I, Aminah S, Aditama M (2023) ChatGPT in the higher education environment: perspectives from the theory of high order thinking skills. 45(4):e840–e841. https://doi.org/10.1093/pubmed/fdad120

Rath K, Senapati A, Dalla V, Kumar A, Sahu S, Das R (2023) Growing role of AI toward digital transformation in higher education systems. Apple Academic, pp 3–26. https://doi.org/10.1201/9781003300458-2

Ringle CM, Wende S, Becker J-M (2022) SmartPLS 4. SmartPLS GmbH, Oststeinbek. http://www.smartpls.com

Roberts H, Babuta A, Morley J, Thomas C, Taddeo M, Floridi L (2023) Artificial intelligence regulation in the United Kingdom: a path to good governance and global leadership? Internet Policy Rev 12(2). https://doi.org/10.14763/2023.2.1709

Robledo D, Zara C, Montalbo S, Gayeta N, Gonzales A, Escarez M, Maalihan E (2023) Development and validation of a Survey Instrument on Knowledge, attitude, and practices (KAP) regarding the Educational Use of ChatGPT among Preservice teachers in the Philippines. 13(10):1582–1590. https://doi.org/10.18178/ijiet.2023.13.10.1965

Sane A, Albuquerque M, Gupta M, Valadi J (2023) ChatGPT didn’t take me very far, did it? Proceedings of the ACM Conference on Global Computing Education Vol 2, 204. https://doi.org/10.1145/3617650.3624947

Sarkar A (2023) Exploring perspectives on the impact of Artificial Intelligence on the Creativity of Knowledge Work: Beyond Mechanised Plagiarism and Stochastic parrots. ACM Int Conf Proceeding Ser. https://doi.org/10.1145/3596671.3597650

Shoufan A (2023) Exploring students’ perceptions of ChatGPT: thematic analysis and Follow-Up survey. 11:38805–38818. https://doi.org/10.1109/ACCESS.2023.3268224

Singh H, Tayarani-Najaran MH, Yaqoob M (2023) Exploring Computer Science Students’ perception of ChatGPT in Higher Education: a descriptive and correlation study. 13(9). https://doi.org/10.3390/educsci13090924

Svenningsson J, Höst G, Hultén M, Hallström J (2022) Students’ attitudes toward technology: exploring the relationship among affective, cognitive and behavioral components of the attitude construct. Int J Technol Des Educ 32(3):1531–1551. https://doi.org/10.1007/s10798-021-09657-7

Tang KY, Hsiao CH (2023) Review of TAM used in Educational Technology Research: a proposal. 2:714–718

Taylor S, Gulson K, McDuie-Ra D (2023) Artificial Intelligence from Colonial India: race, statistics, and Facial Recognition in the Global South. Sci Technol Hum Values 48(3):663–689. https://doi.org/10.1177/01622439211060839

Teo T, Noyes J (2014) Explaining the intention to use technology among pre-service teachers: a multigroup analysis of the Unified Theory of Acceptance and Use of Technology. Interact Learn Environ 22(1):51–66. https://doi.org/10.1080/10494820.2011.641674

Thong C, Butson R, Lim W (2023) Understanding the impact of ChatGPT in education. ASCILITE Publications 234–243. https://doi.org/10.14742/apubs.2023.461

Venkatesh V, Morris MG, Ackerman PL (2000) A longitudinal field investigation of gender differences in Individual Technology Adoption Decision-Making processes. Organ Behav Hum Decis Process 83(1):33–60. https://doi.org/10.1006/obhd.2000.2896

von Garrel J, Mayer J (2023) Artificial Intelligence in studies—use of ChatGPT and AI-based tools among students in Germany. Humanit Social Sci Commun 10(1). https://doi.org/10.1057/s41599-023-02304-7

Yasin M (2022) Youth perceptions and attitudes about artificial intelligence. Izv Saratov Univ Philos Psychol Pedagogy 22(2):197–201. https://doi.org/10.18500/1819-7671-2022-22-2-197-201

Zeb A, Ullah R, Karim R (2024a) Exploring the role of ChatGPT in higher education: opportunities, challenges and ethical considerations. 44(1):99–111. https://doi.org/10.1108/IJILT-04-2023-0046

Zhong Y, Ng DTK, Chu SKW (2023) Exploring the Social Media Discourse: The Impact of ChatGPT on Teachers’ Roles and Identity. ICCE 2023, 1:838–848

Acknowledgements

Not applicable.

Funding

Not applicable.

Author information

Contributions

Conceptualization: Benicio Gonzalo Acosta-Enriquez, Carmen Graciela Arbulu Perez Vargas, Marco Agustín Arbulú Ballesteros; Methodology: Milca Naara Orellana Ulloa, Cristian Raymound Gutiérrez Ulloa, Johanna Micaela Pizarro Romero; Formal analysis: Benicio Gonzalo Acosta-Enriquez, Marco Arbulú Ballesteros; Writing - preparation of the original draft: Néstor Daniel Gutiérrez Jaramillo, Héctor Ulises Cuenca Orellana, Carlos López Roca; Writing - revision and editing: Diego Xavier Ayala Anzoátegui, Carmen Graciela Arbulu Perez Vargas, Carlos López Roca. All the authors have read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests that could bias the results of the manuscript.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Acosta-Enriquez, B.G., Arbulú Ballesteros, M.A., Arbulu Perez Vargas, C.G. et al. Knowledge, attitudes, and perceived Ethics regarding the use of ChatGPT among generation Z university students. Int J Educ Integr 20, 10 (2024). https://doi.org/10.1007/s40979-024-00157-4

DOI: https://doi.org/10.1007/s40979-024-00157-4