Abstract

In this paper, we introduce Integrity Games (https://integgame.eu/) – a freely available, gamified online teaching tool on academic integrity. In addition, we present results from a randomized controlled experiment measuring the learning outcomes from playing Integrity Games.

Integrity Games engages students in reflections on realistic and relevant academic integrity issues that lie in the grey zone between good practice and misconduct. Thereby, it aims to 1) motivate students to learn more about academic integrity, 2) increase their awareness of the grey-zone issues, and 3) increase their awareness of misconduct. To achieve these aims, the tool presents four gamified cases that lead students through an engaging narrative.

The experiment to measure learning outcomes was conducted in three European countries, and included N = 257 participants from across natural science, social science and the humanities. We show that the participants enjoyed playing Integrity Games, and that it increased their sensitivity to grey-zone issues and misconduct. However, the increases identified were similar to those achieved by the participants in the control group reading a non-gamified text.

We end by discussing the value of gamification in online academic integrity training in light of these results.

Introduction

Promoting academic integrity is central to creating thriving learning environments in higher education. Furthermore, when students develop academic integrity during their studies, they are more likely to carry integrity into their professional careers (Guerrero-Dib et al. 2020; Carpenter et al. 2004). Academic integrity has been promoted through several strategies in higher education, ranging from sanctions and oversight to preventive measures such as training and promotion of students’ ethical awareness through honour codes (McCabe, Treviño & Butterfield 2001).

Recent studies show that a substantial fraction of European undergraduate students lack knowledge about academic integrity (Goddiksen et al. 2023a). These students’ understanding of basic rules of academic integrity is limited, and they struggle to navigate realistic grey-zone situations where the rules cannot be applied in a straightforward way. Similar results have been found in North America (Childers & Bruton 2016; Roig 1997). Given this lack of knowledge and skill, strategies to promote academic integrity and prevent deviations from it should arguably not rely on oversight and sanctions alone. Strategies for promoting academic integrity among students in higher education should also take an educational approach – an approach recognized by central actors in European higher education (e.g., Lerouge & Hol 2020).

We believe that undergraduate students’ lack of knowledge and skill related to academic integrity calls for dedicated training sessions where students are introduced to the rules and norms of academic integrity while at the same time being trained in navigating the grey-zone dilemmas they are likely to face during their studies. A recent meta-analysis (Katsarov et al. 2022) found that academic integrity training is most effective when participants are challenged to “imagine how they would personally deal with ethically problematic situations” (p. 939). Rules and codes of conduct should also be presented, but ethically problematic situations should not be reduced to a mere application of rules and codes, as such an approach will hamper students’ ability to make real-life judgements (p. 949–951).

This paper presents, and discusses the effectiveness of, the research-based online teaching tool Integrity Games (https://integgame.eu/), which we developed to enable training sessions where students engage personally with realistic dilemmas on academic integrity. The tool is aimed at university undergraduate students from all major fields of study, and is available at no cost to users and in five different languages: English, French, Portuguese, Hungarian and Danish.

In this paper, we first introduce Integrity Games and the ideas behind it (Integrity Games Section). Second, we present and discuss the results of a randomized controlled experiment that tested the effect of the tool on a) students’ sensitivity to grey-zone issues and misconduct, and b) their motivation to learn more about academic integrity (Assessment materials and methods, Results, Discussion Sections).

Existing online tools

Integrity Games supplements existing online tools that aim to educate undergraduate students on aspects of academic integrity. Studies of eight such online tools were included in Stoesz & Yudintseva’s (2018) review of 21 studies on the effectiveness of academic integrity training. In addition, Kier (2019) tested Goblin Threat, a gamified online tool on plagiarism (https://www.lycoming.edu/library/plagiarism-game/). All nine online tools – and almost all the other studies reviewed by Stoesz & Yudintseva – focus exclusively on one (very important) aspect of academic integrity: plagiarism and citation practice. In particular, they focus on avoidance of severe plagiarism and the introduction of correct citation techniques. All 21 studies in the review showed positive results, although, as noted by Stoesz & Yudintseva (2018, p. 14), the quality of the evidence was lacking in some studies.

Like other available tools, Integrity Games covers plagiarism and good citation practice, but it is, to our knowledge, unique among tools aimed at undergraduate students in its strong emphasis on grey-zone issues rather than clear-cut misconduct (both defined in detail in The gamified cases Section). Furthermore, Integrity Games incorporates a broader take on academic integrity as relating not just to citation and plagiarism, but also to collaborative practice and working with data.

While tools for training academic integrity aimed at undergraduate students lack focus on grey zones, online teaching tools on research integrity aimed at early career researchers often have a particular focus on grey zones (for a collection of tools see: https://embassy.science/wiki/Main_Page). Examples include the Dilemma Game (https://www.eur.nl/en/about-eur/policy-and-regulations/integrity/research-integrity/dilemma-game) developed by researchers at Rotterdam University. While there is some overlap in the integrity issues faced by early career researchers and undergraduate students, the overlap is not complete, and the issues of integrity play out differently at different levels. For instance, while both undergraduate students and early career researchers may face integrity issues when collaborating with peers, the specifics may be significantly different (partly because authorship has very different functions in teaching and learning compared to research). Trying to teach academic integrity to undergraduates using material designed for early career researchers therefore risks alienating the students from the topic. This is especially true for students who have no ambition to become researchers. So, although the approach in Integrity Games is similar to some tools on research integrity, it is unique in its focus on grey-zone issues faced by undergraduate students.

In addition to teaching tools that focus on academic and research integrity, there are a range of online teaching tools that focus on other aspects of ethics training for undergraduates. One such tool is Animal Ethics Dilemma (http://www.aedilemma.net/), a highly successful platform on animal ethics aimed at undergraduate students, which at the time of writing has over 100,000 registered users (Hanlon et al. 2007). Like the Rotterdam Dilemma Game mentioned above, Animal Ethics Dilemma engages players in ethical reflection by presenting a series of dilemmas, but unlike the Dilemma Game, Animal Ethics Dilemma sets the dilemmas in engaging narratives that change depending on the choices made by the player. This structural feature was a major inspiration for the structure of Integrity Games (see Integrity Games Section).

Gamification of online tools

Like other online tools on academic integrity, Integrity Games is partially gamified. There seems to be a trend towards gamification of online training. The tutorial Goblin Threat mentioned above is a case in point, as are the games recently developed by the BRIDGE project (https://www.academicintegrity.eu/wp/bridge-games/). There is conflicting evidence on the effect of gamified teaching. Recent reviews on the effect of gamified teaching by Bai, Hew & Huang (2020) and van Gaalen et al. (2021) indicate moderately positive effects on average, but also substantial variations. Bai, Hew & Huang (2020) found that the characteristics shared by most successful gamified teaching are that they involve some kind of scoring where the player earns points and a badge upon completion, and players are able to compare their performance to that of other players via a leader board. Keeping a score is one of six factors suggested by Annetta (2010) that contribute to making gamified teaching successful. The other factors include identity, which refers to the game’s ability to “capture the player’s mind into believing that he or she is a unique individual within the environment” (ibid, p. 106). When combined with a clear goal of the game, identity can lead to immersion where the player is intrinsically motivated to succeed in the game. In addition, the game should be interactive, it should show increasing complexity as the player proceeds, and it should provide relevant feedback to the player after completing a task. As described in The gamified cases Section, Integrity Games incorporates all of these elements to some extent, except for the scoring element.

Although there is some evidence for the effect of the gamified tools on academic integrity mentioned above, this evidence does not allow us to assess their effectiveness relative to non-gamified training. We therefore have little empirical evidence to help us decide between gamified and non-gamified approaches to academic integrity training. Given this lack of evidence, we decided to include a control group in our experiment who received non-gamified teaching that covered the same topics as Integrity Games to see which form of teaching would perform better (Assessment materials and methods Section).

Integrity Games

The core of Integrity Games (https://integgame.eu/) is four gamified cases presented in The gamified cases Section. In addition, the platform contains a dictionary of central concepts, a user guide for teachers, and an optional quiz designed partly to provide personalized suggestions on what cases to play, and partly to spark curiosity about the topics covered. The potential of the quiz to nudge players to spend more time on the games was explored in a separate experiment (Allard et al. 2023).

The gamified cases

Cases, be they historical or fictional, are a standard tool in integrity teaching. Typically, cases describe specific situations where academic integrity is at stake and encourage students to discuss appropriate actions. Cases are valuable in academic integrity training partly because they can help students develop ethical sensitivity and train their ability to navigate grey-zone issues (Katsarov et al. 2022; Committee on Assessing Integrity in Research Environments 2002, Ch. 5). Integrity Games builds on this well-known approach and adds some gaming elements.

The cases are fictional, but the dilemmas presented in them are drawn from an extensive empirical knowledge base originating from a mixed-methods study of students’ understanding and experiences with academic integrity. The study involved more than 6,000 students (including 1,639 undergraduate students) from universities in nine European countries, representing all faculties (some results are reported in Johansen et al. 2022; Goddiksen et al. 2023a; 2023b; 2021). This research, combined with the existing literature (particularly Roig 1997, Johansen & Christiansen 2020, and Childers & Bruton 2016), and the teaching experience of the authors formed the research basis for the development of the cases. The research base helped ensure that the dilemmas presented in the cases are among those that students within the target groups are most likely to face during their studies.

Contents of the cases

The four cases that are currently in Integrity Games cover three central topics under academic integrity:

1. Citation practice
2. Collaborating with, and getting help from, others
3. Collecting, analysing, and presenting data

For each topic, academic integrity training may cover clear examples of good practice, obvious misconduct and the grey zones in between – often called detrimental or questionable practices.

Misconduct refers to the most severe deviations from good practice. Misconduct will typically be defined in an institution’s disciplinary rules and codes of conduct, which may in turn be based on national and international codes (e.g., ALLEA 2017, or WCRI 2010). For researchers, misconduct is often narrowly defined as plagiarism, falsification and fabrication (ALLEA 2017), but for undergraduate students misconduct will also cover other forms of intentional cheating, including cheating in tests and exams. Although Integrity Games includes examples of clear-cut misconduct, it focuses primarily on questionable practices.

Questionable practices are questionable in the sense that their ethical acceptability often depends heavily on the context. One example is deleting deviating data points from a report on an experiment (Johansen & Christiansen 2020). Is this ethically acceptable? As illustrated in two dilemmas in Integrity Games, the answer to this question depends heavily on why and how the deleting was done. If a student deletes deviating data without being transparent about it in order to avoid having to discuss it in the report, it would be akin to misconduct in the form of falsification. If, on the other hand, a student deletes deviating data based on an informed discussion with the instructor where they have identified a clear error in the measurement, the practice may be perfectly acceptable.

In addition to their context dependence, questionable practices are, in many cases, characterized by being less severe deviations from good practice than clear-cut cheating (Ravn & Sørensen 2021). They may not even be covered by local disciplinary rules. Freeriding in group work, for instance, will in many cases not be directly against a specific disciplinary rule, but is in many contexts still unethical, as it gives the free rider an unfair advantage, is detrimental to the free rider’s learning, and potentially also to the learning of the fellow group members.

When local disciplinary rules cover questionable practices, they can be harder to apply to concrete instances, partly due to the above-mentioned context dependence (Schmidt 2014). Additionally, the enforcement of rules on questionable practices may be less consistent, and the sanctions will typically be less severe. It is therefore more likely that students will end up in trade-off situations between, on the one hand, the risk of sanctions and blame from the institution that comes with deviating from good practice and, on the other hand, the risk of negative consequences of, for example, failing an exam or standing up against a fellow student. These trade-off situations have previously been shown to be among those that students find particularly ethically challenging (Goddiksen et al. 2021).

By focusing mainly on questionable practices, we hoped that the cases in Integrity Games would encourage reflection on compliant practice, good practice, ethically acceptable practice and the potential differences between these. As discussed further in Intended use and learning outcomes Section, the reflections that Integrity Games is designed to inspire are not sufficient to develop the knowledge and competences to navigate real-life situations where integrity is at stake. As a minimum, students should always be given the opportunity to ask questions and discuss how rules and norms apply to their specific institutional and disciplinary context. Integrity Games is thus not intended to be a stand-alone tool that can replace classroom sessions. It is designed to be combined with other types of teaching.

The cases are constructed such that each case focuses on one of the three themes mentioned above. To enable students from a broad range of study programmes to relate to the dilemmas, the tool includes two different cases on collection, analysis and presentation of data; one was designed to be relatable for students working primarily with qualitative data (e.g., interview data) and one was designed for students primarily working with quantitative data (e.g., numerical data from laboratory experiments). Although there is overlap, the two cases are also different. For instance, the case on quantitative data includes dilemmas related to the collection and interpretation of personal data and potentially sensitive data.

The case on citation practice covers topics such as appropriate paraphrasing, self-plagiarism, plagiarism of ideas and responsibility when suspecting plagiarism by others.

The case on collaboration covers issues such as freeriding in group work, various ways of getting help on individual assignments, and one’s responsibility in cases when other group members deviate from good practice.

Structure of the cases

The cases are constructed using a branching narrative structure (Fig. 1), and draw on basic dramaturgical principles for engaging writing (Egri 1972; Pearce 1997). Each case starts with a concrete situation where the player is faced with a realistic, relatable dilemma involving academic integrity. The situation is described in the second-person present tense (“You are writing an assignment …”) and always includes an element of tension or conflict. In addition, there is often an element of pressure such as an upcoming exam or the need to impress a future supervisor. The presentation of the situations is designed to create identification and immersion (two of the characteristics of effective gamified teaching described in Gamification of online tools Section). To proceed in the case, the player has to choose between two or three pre-defined reactions to the dilemma. When the player has made their choice, they are given immediate feedback – another gamification element – in the sense that they are informed of the immediate consequences of their choice. For instance, if the player decides that the best reaction to having discovered that a group member has plagiarized is to tell their teacher about this discovery, they are told that the teacher praised their decision, but their collaboration in the group was strained, and the group member was angry with them. Of course, this is not what will always happen, but it is a possible outcome of the choice. This approach to giving feedback – describing consequences, rather than telling the player that they gave a correct or incorrect answer – is to our knowledge unique among online teaching tools on academic integrity. We took this approach for several reasons. Firstly, there is not one way of handling many of the dilemmas presented to the students that is clearly the correct way, just as there are no simple solutions to the integrity dilemmas that face the students in real life. This is an important message to get across. Secondly, by describing consequences, the game avoids judging the player. Further, the focus on consequences makes it possible to show that doing the right thing sometimes has a cost. For instance, reporting cheating may be the right thing to do in some cases, but it may come at a cost to the relationship between the one who reported and the one who cheated (Goddiksen et al. 2021).

The pages describing the consequence of a choice also include an expandable textbox called “About your choice”. The textbox takes the concrete dilemma that the player has just faced to a more general level. For instance, the case on collaboration starts with a dilemma about a specific instance of group work and a specific person, the player’s good friend Kim, who is not contributing. The concrete dilemma is one version of a more general issue with free riders in group work. This is explained in the “About your choice” textbox, where the player is also challenged to consider to what extent the specifics of the dilemma matter for their choice. Would they, for instance, choose differently if Kim had not been a close friend, but a more distant classmate? The “About your choice” texts are thus designed to help the player realize that the dilemma they just faced is an example of a more general issue that can take different forms depending on the context, and that, in some cases, the context is central to the choices they make.

The second and third dilemmas presented to the player depend on the player’s previous choices in two ways. Firstly, the dilemmas are the direct result of the player’s previous choices. This gives the player a sense of being part of a narrative where a story unfolds and choices have consequences. Secondly, players who make choices that indicate sensitivity to the norms of academic integrity in one dilemma are given a more subtle dilemma in the next level (building on the gaming characteristic increased complexity mentioned in Gamification of online tools Section). Conversely, players who choose against the norms of academic integrity in one dilemma are subsequently faced with a dilemma that involves more severe breaches of the norms. Players are thus faced with dilemmas that match their knowledge and sensitivity to academic integrity norms throughout the cases.

When the player has seen the consequences of the choice made in the first dilemma, the narrative continues, and the player is presented with a new dilemma which is again followed by a page describing the consequences of the choice made and an “About your choice” text. When the player has answered three dilemmas the case ends, and the player is presented with the final result in the form of a conclusion to the story. The cases do not have inbuilt notions of ‘winning’ or ‘losing’, nor does the player get any kind of score. In this sense, Integrity Games is a simulation, not a serious game (Annetta 2010).
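The branching structure described above can be illustrated with a small data-structure sketch. This is not the implementation behind Integrity Games; the class names, node identifiers and all texts apart from the opening situation with Kim are hypothetical placeholders. The sketch only shows the general idea: each choice carries a consequence text rather than a score, and it determines whether the next dilemma is a more subtle or a more severe one.

```python
from dataclasses import dataclass


@dataclass
class Choice:
    text: str         # pre-defined reaction offered to the player
    consequence: str  # feedback describes what happens, not "right" or "wrong"
    next_id: str      # id of the next dilemma, or of an ending


@dataclass
class Dilemma:
    situation: str
    choices: list


# Hypothetical placeholder content; the real case texts are not reproduced here.
DILEMMAS = {
    "d1": Dilemma("Your good friend Kim is not contributing to the group work.", [
        Choice("Raise the issue", "Placeholder consequence 1a.", "d2_subtle"),
        Choice("Say nothing", "Placeholder consequence 1b.", "d2_severe"),
    ]),
    "d2_subtle": Dilemma("A more subtle second dilemma.", [
        Choice("Option A", "Placeholder consequence 2a.", "d3_subtle"),
        Choice("Option B", "Placeholder consequence 2b.", "d3_severe"),
    ]),
    "d2_severe": Dilemma("A second dilemma with a more severe breach.", [
        Choice("Option A", "Placeholder consequence 2c.", "d3_subtle"),
        Choice("Option B", "Placeholder consequence 2d.", "d3_severe"),
    ]),
    "d3_subtle": Dilemma("A more subtle third dilemma.", [
        Choice("Option A", "Placeholder consequence 3a.", "end_1"),
        Choice("Option B", "Placeholder consequence 3b.", "end_2"),
    ]),
    "d3_severe": Dilemma("A third dilemma with a more severe breach.", [
        Choice("Option A", "Placeholder consequence 3c.", "end_2"),
        Choice("Option B", "Placeholder consequence 3d.", "end_3"),
    ]),
}

# Endings conclude the story; there is no score and no winning or losing.
ENDINGS = {
    "end_1": "Placeholder conclusion 1.",
    "end_2": "Placeholder conclusion 2.",
    "end_3": "Placeholder conclusion 3.",
}


def play(picks, start="d1"):
    """Follow one path through the case; `picks` holds three choice indices."""
    node, feedback = start, []
    for pick in picks:
        choice = DILEMMAS[node].choices[pick]
        feedback.append(choice.consequence)
        node = choice.next_id
    return feedback, ENDINGS[node]
```

For example, `play([0, 1, 0])` walks from the opening dilemma through the subtle second dilemma and the severe third dilemma, returning the three consequence texts and the matching conclusion.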

Throughout the cases, a mouseover function allows the player to access information on central concepts from a dictionary built into Integrity Games.

Intended use and learning outcomes

Integrity Games is highly flexible and can be incorporated into teaching sessions in several ways. The uses discussed in the teacher manual (https://integgame.eu/forTeachers) include:

- Playing selected cases as part of the preparation for a session on academic integrity,
- Playing selected cases, individually or in groups, during a session on academic integrity.

Additionally, the platform allows access to the individual dilemmas through the “dilemmas” tab in the main menu. A teacher may thus consider assigning specific dilemmas for consideration as either preparation or as an exercise during class. This has the benefit that all students will have considered the same dilemmas, which will not be the case if they play through the cases on their own (as the dilemmas they face depend on the choices they make).

The tool was designed with the dual aim of 1) motivating students to learn about academic integrity and 2) developing students’ competencies about integrity issues. Concerning the latter, for each of the three themes described above (The gamified cases Section), the tool aims to contribute to the development of competences that enable students to act with integrity in real-life situations where academic integrity is at stake, including grey-zone situations. Following Stephens and Wangaard (2016), these competences include:

- Sensitivity: Being able to identify and distinguish misconduct and grey-zone issues and knowing when it is one’s responsibility to act,
- Skills in identifying appropriate actions, and
- Will and courage to act.

Following Goddiksen & Gjerris (2022), both sensitivity and skills in identifying appropriate actions when facing grey-zone situations include a substantial knowledge component. For each of the three topics described in The gamified cases Section, Integrity Games was designed with the aim of contributing to the construction of:

- Knowledge of the core academic integrity values and principles and how they are applied,
- Knowledge of common grey-zone issues and the reasons why they are ‘grey’.

Integrity Games was not designed specifically to develop the players’ will and courage to act with integrity in real-life situations, although developing such will and courage may sometimes be a positive side-effect of gaining knowledge and sensitivity (Goddiksen & Gjerris 2022).

The randomized controlled experiment described in the following sections was developed to test the extent to which the aims related to motivation and sensitivity are realized when students play Integrity Games as part of a class on academic integrity.

Assessment materials and methods

Hypotheses tested in the experiment

The experiment described below was designed to test six hypotheses about the learning outcome from an intervention consisting of playing two cases in Integrity Games followed by a short group work session simulating a classroom discussion (details in Study intervention Section). The first three hypotheses concerned the participants’ absolute outcome of playing the cases:

1. Motivation: We hypothesized that the participants would be more motivated to learn more about academic integrity after the intervention.
2. Sensitivity to grey-zone issues: We hypothesized that participants would be more sensitive to the existence of grey-zone issues after the intervention.
3. Sensitivity to misconduct: We hypothesized that participants would be more sensitive to misconduct after the intervention.

The last three hypotheses concerned the outcome of playing the cases relative to traditional integrity teaching. We hypothesized that the participants who had received the intervention would show a greater improvement on all three parameters (motivation, sensitivity to grey-zone issues, and sensitivity to misconduct) than participants in a control group who had engaged in the same group discussions, but read a non-gamified text covering the same topics (Appendix C) instead of playing Integrity Games (see Study intervention Section).

Ethical approval and recruitment

Prior to recruitment, the experiment was reviewed by the relevant research ethics committees at the three participating universities: in Denmark, the Research Ethics Committee for Science and Health at the University of Copenhagen approved the study (Ref: 504–0238/21–5000 Decision date: 02.02.2021), and in Switzerland, the Geneva Commission Cantonale d’Ethique de la Recherche approved the study (Req-ID: 2020–01397. Decision date: 01.04.2021). In Hungary, studies such as this one are not subject to ethical approval, but the Regional Ethics Committee did acknowledge the study (Registration ID: DE/ KK RKEB/IKEB 5660–2021, decision date: 02.08.2021).

Data were collected from February to November 2021 in 12 different course sessions (4 in Denmark, 6 in Switzerland, and 2 in Hungary). Each session lasted 2 × 45 min. At the beginning of the session, students were informed about the study’s aims and procedure, and could choose whether or not to participate in the study. Students who decided to participate checked a consent box in the anonymous online questionnaire that was part of the course procedure (Materials Section). Since the questionnaire included questions that were pedagogically meaningful, students who did not wish to participate in the study were asked to respond to an identical questionnaire from which no data were stored. With this procedure, we made sure that all students received the same teaching content and that anonymity was guaranteed: teachers and researchers could not know which students participated in the study, nor could they trace participants’ responses to individual students.

Participants

Participants were recruited among students in mandatory or elective courses on ethics and philosophy of science at three major European research universities: the University of Copenhagen, the University of Debrecen and the University of Geneva.

In Denmark, participants were recruited among students in three mandatory philosophy of science courses for students in undergraduate programmes in agricultural economics, biotechnology, food science and natural resource management. These courses already contained a session on academic integrity, and one of the authors was allowed to take over the session to test the tool.

In Hungary, participants were recruited in mandatory bioethics courses for pharmacy and dentistry students.

In Switzerland, participants were recruited among students following elective courses in applied ethics, bioethics and epistemology of science, designed for interdisciplinary groups of students in philosophy, biology, biochemistry, pharmacy, neuroscience, and medicine. The intervention used in this study replaced a lesson ordinarily dedicated to academic integrity.

Study intervention

All intervention sessions followed the same detailed schedule (described below, further details in Appendix A). Sessions in Denmark were conducted by MG in Danish. Sessions in Switzerland were conducted by AA, CC, and CS in French. ACVA and OV conducted the sessions in Hungary in Hungarian and English.

All sessions took place online using the Zoom video conferencing platform (due to the ongoing COVID-19 pandemic). After a brief introduction, about 10 min, on what academic integrity means, and why it is important, we informed students about the aim of the experiment and the procedure of the study. We made it clear that participation in the study was anonymous and voluntary. We used the same introductory slides in each session.

After the introduction, we provided a link to the anonymous questionnaire in which participants could indicate whether they consented to participating in the study and take the pre-test (Materials Section). Thereafter, we randomly divided all participants into two breakout rooms using the inbuilt “Assign automatically” function in Zoom. Each breakout room was then given instructions separately. Participants in the treatment group were instructed to go to Integrity Games and play the “collaboration case” and the “plagiarism case”. Participants in the control group were instructed to read a text on integrity issues related to collaboration and plagiarism (see Appendix C). After spending 25 to 30 min on these individual tasks, participants in both groups were subdivided into groups of 2–3 participants (participants from the control and intervention groups were not mixed). The groups were given the task of discussing integrity issues for 25 to 30 min based on a discussion grid (see Appendix A). To minimize the confounding effect of the different instructors, the instructors did not take part in these discussions. Thereafter, participants took the post-test (10 min). Finally, all participants joined the main session for concluding remarks by the instructor.

Materials

We designed the online questionnaire (presented in Appendix B) as a pre- and post-test with some questions being asked twice. Questions were designed to obtain information about participants’ motivation to learn about academic integrity and their sensitivity to grey-zone issues and misconduct. In addition, the post-test included questions on demographics (gender, age, country of study, and study direction), two questions relating to the user experience of the integrity website, and five questions relating to the experience of the group work (the latter are not reported in this paper).

To assess participants’ motivation to learn, we used a battery of questions adapted from the relevant subset of the Intrinsic Motivation Inventory (CSDT 2021) that deals specifically with students’ motivation to learn more. The battery contained six claims about the relevance and value of academic integrity training, which participants evaluated using a five-point Likert scale from “fully agree” to “fully disagree”. There was also an option to answer “I don’t know”. Examples of claims include “I believe that participating in teaching on academic integrity could be of some value to me” and “I think learning about academic integrity is not important for my future studies” (see Appendix B for the complete list). Additionally, we asked participants to indicate how many hours they would be willing to spend on academic integrity training (not reported).

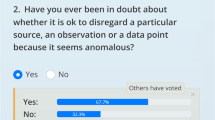

To assess participants’ sensitivity to grey-zone issues and misconduct, we expanded on the approach employed by Goddiksen et al. (2023a): in random order, the participants were presented with descriptions of ten different actions (see Table 1) and were asked whether each action would “violate the rules that apply to them”. All questions called for answers picked from among five answer options: “Yes, it is a violation”, “No, it is not a violation”, “I don’t know”, “The rules are unclear” and “It depends on the situation”. The latter two answer options allowed the participant to indicate that an action is in a grey zone.

The actions were constructed such that for the actions in the column with acceptable practices in Table 1, the most correct answer to whether the actions are against the rules would be “No, it is not a violation”. For the actions in the column with non-compliant practices, the most correct answer would be “Yes, it is a violation”, whereas the most correct response to the actions in the grey-zone practices would be either “The rules are unclear” or “It depends on the situation” (we did not distinguish between these two correct answers for grey-zone practices in our data analysis).

The questionnaire and the text for the control group were translated from English into the students’ relevant languages: Danish, Hungarian, or French.

Data analysis

To develop a ‘Motivation-for-Academic-Integrity Score’, we first scored the motivation questions on a scale of 1 to 5, with 5 indicating the highest level of motivation. For instance, answering “fully agree” to the question “I believe that participating in teaching on academic integrity could be of some value to me” would be coded as 5, while answering “fully disagree” would be coded as 1. We then averaged the scores for the six questions.
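The scoring step described above can be illustrated with a minimal Python sketch. It is not the authors' analysis code. Only the coding of “fully agree” as 5 and “fully disagree” as 1 comes from the text; the intermediate answer labels, the reverse-coding of negatively worded claims, and the skipping of “I don’t know” answers are assumptions made for illustration.

```python
from statistics import mean

# Five-point coding. Only the two endpoints are stated in the text;
# the three intermediate labels are hypothetical.
LIKERT = {
    "fully agree": 5,
    "agree": 4,
    "neither agree nor disagree": 3,
    "disagree": 2,
    "fully disagree": 1,
}


def motivation_score(answers, reversed_items=frozenset()):
    """Average the coded answers of one participant.

    answers: list of answer labels, one per claim.
    reversed_items: indices of negatively worded claims. Assumption:
        these are reverse-coded so that 5 always means high motivation.
    Answers outside the Likert scale (e.g. "I don't know") are skipped.
    Assumption: the text does not say how they were handled.
    Returns None when no answer can be scored.
    """
    coded = []
    for index, label in enumerate(answers):
        if label not in LIKERT:
            continue
        value = LIKERT[label]
        coded.append(6 - value if index in reversed_items else value)
    return mean(coded) if coded else None


example = [
    "fully agree", "agree", "fully disagree",
    "I don't know", "agree", "fully agree",
]
# The third claim (index 2) is treated as negatively worded here.
score = motivation_score(example, reversed_items={2})
```

In this example the five scorable answers are coded 5, 4, 5, 4 and 5, so the participant's score is their average.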

We constituted a ‘Sensitivity-to-Misconduct Score’ by coding each participant answer as 0 if the participant chose an unjustifiable option, and 1 if the participant chose a justifiable answer. We then averaged the participant’s coded answers, so that the final score represents the proportion of questions that the participant answered correctly (from 0 to 1). We proceeded similarly to constitute a ‘Sensitivity-to-Grey-Zones Score’.
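As an illustration of this 0/1 coding, the following Python sketch maps each answer to a justifiable or unjustifiable code using the answer key described in the Materials section, and then averages per practice type. The practice-type labels and function names are hypothetical, and treating “I don’t know” as unjustifiable is an assumption.

```python
# Either of these answers counts as correct for a grey-zone practice.
GREY_ZONE_ANSWERS = {"The rules are unclear", "It depends on the situation"}


def is_justifiable(practice_type, answer):
    """Return True if the answer is the most correct one for the practice type."""
    if practice_type == "non-compliant":
        return answer == "Yes, it is a violation"
    if practice_type == "grey-zone":
        return answer in GREY_ZONE_ANSWERS
    if practice_type == "acceptable":
        return answer == "No, it is not a violation"
    raise ValueError(f"unknown practice type: {practice_type}")


def sensitivity_score(responses, practice_type):
    """Proportion of justifiable answers among items of one practice type.

    responses: list of (practice_type, answer) pairs for one participant.
    Returns None if the participant answered no item of that type.
    """
    coded = [
        int(is_justifiable(kind, answer))
        for kind, answer in responses
        if kind == practice_type
    ]
    return sum(coded) / len(coded) if coded else None


responses = [
    ("grey-zone", "The rules are unclear"),
    ("grey-zone", "Yes, it is a violation"),
    ("grey-zone", "It depends on the situation"),
    ("grey-zone", "I don't know"),
    ("non-compliant", "Yes, it is a violation"),
    ("non-compliant", "Yes, it is a violation"),
    ("non-compliant", "It depends on the situation"),
]
grey_score = sensitivity_score(responses, "grey-zone")
misconduct_score = sensitivity_score(responses, "non-compliant")
```

Here two of the four grey-zone answers and two of the three non-compliant answers are justifiable, which gives the two proportions.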

To analyse the three scores for which we have both a pre- and post-experimental measure, we fitted a linear mixed-effects model with the lmer function of the R package lme4 (Bates et al. 2015), with a random intercept for each participant, predicting the score from country of study, time (before the experiment vs. after the experiment), experimental condition, and an interaction between time and experimental condition as fixed effects. We were interested in discovering whether participant scores increased between the pre-test and the post-test, regardless of the intervention, and in knowing whether the final score was higher in the Integrity Games group than in the control group. Thus, the two coefficients of interest are the effect of time, and the interaction between time and experimental condition. Descriptive statistics (mean and SD) were used to describe baseline characteristics of both groups.
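One way to write out a model of this kind is sketched below. The notation is ours rather than the authors': \(y_{it}\) is the score of participant \(i\) at time \(t\), \(u_i\) is the participant-specific random intercept, and \(\varepsilon_{it}\) is the residual.

\[
y_{it} = \beta_0 + \boldsymbol{\beta}_c^{\top}\,\mathrm{country}_i + \beta_1\,\mathrm{time}_t + \beta_2\,\mathrm{condition}_i + \beta_3\,(\mathrm{time}_t \times \mathrm{condition}_i) + u_i + \varepsilon_{it},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2),\quad \varepsilon_{it} \sim \mathcal{N}(0, \sigma^2)
\]

In lme4 syntax, a model with this structure would correspond to a formula along the lines of `score ~ country + time * condition + (1 | participant)`, where the variable names are hypothetical. The two coefficients of interest are \(\beta_1\) (time) and \(\beta_3\) (time by condition). How \(\beta_1\) is read, whether as the change in a reference group or as the average change across groups, depends on how the condition variable is coded, which the text does not specify.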

Results

In total, 408 participants started the experiment, and 272 finished it. Among the participants who completed the post-test questionnaire, 128 were from Denmark, 63 from Switzerland, and 81 from Hungary. Of these 272 participants, 157 were in the control group, and 115 were in the intervention group. There were statistically significantly fewer participants in the intervention group, indicating a possible failure of randomization or differential attrition (\(proportion=0.42\), 95% CI \([0.36\), \(0.48]\), \(p=.013\)).
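The imbalance between groups can be checked with a short calculation. The sketch below assumes an exact two-sided binomial test against an expected proportion of 0.5. The text does not state which test was used, so this is one plausible reading rather than a reproduction of the authors' analysis. The counts are taken from the paragraph above.

```python
from math import comb


def binomial_two_sided_p(successes, trials):
    """Exact two-sided binomial test of the null hypothesis p = 0.5.

    Under p = 0.5 the null distribution is symmetric, so the two-sided
    p-value is twice the smaller tail probability, capped at 1.
    """
    lower_tail = sum(comb(trials, i) for i in range(0, successes + 1))
    upper_tail = sum(comb(trials, i) for i in range(successes, trials + 1))
    return min(1.0, 2 * min(lower_tail, upper_tail) / 2 ** trials)


n_completed = 272     # participants who finished the experiment
n_intervention = 115  # of whom were in the intervention group

proportion = n_intervention / n_completed
p_value = binomial_two_sided_p(n_intervention, n_completed)
```

The resulting `proportion` and `p_value` can be compared with the reported proportion of 0.42 and \(p=.013\).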

Using the items of the motivation score, we excluded participants who had a Mahalanobis distance greater than the 99% predicted percentile based on the 12 items (including items from pre-test and post-test). This procedure led to the exclusion of 15 participants. We considered that a high Mahalanobis distance was likely a sign of inattention (for instance, a high Mahalanobis distance could indicate that participants provided divergent answers on items that are highly correlated). Our final sample size for all analyses was 257 participants.
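For reference, the squared Mahalanobis distance of participant \(i\) can be written as follows, where \(\mathbf{x}_i\) is the vector of that participant's 12 item responses, \(\bar{\mathbf{x}}\) is the sample mean vector and \(\mathbf{S}\) is the sample covariance matrix. The exclusion rule shown assumes that the “99% predicted percentile” refers to the 0.99 quantile of a chi-square distribution with 12 degrees of freedom. This is a common convention, but it is our reading and is not stated explicitly in the text.

\[
D_i^2 = (\mathbf{x}_i - \bar{\mathbf{x}})^{\top}\,\mathbf{S}^{-1}\,(\mathbf{x}_i - \bar{\mathbf{x}}),
\qquad \text{exclude participant } i \text{ if } D_i^2 > \chi^2_{0.99}(12) \approx 26.22
\]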

Table 2 shows the demographics of the control and intervention groups.

Enjoyment and recommendation of the tool

As can be seen from Table 2, participants gave a mean agreement of 4.1 out of 5 (95% CI [3.9, 4.3]) with the claim “I would recommend 'Integrity Games' to teachers preparing classes on academic integrity for undergraduate students”, and indicated a mean agreement of 4.0 (95% CI [3.8, 4.1]) with the claim “Playing through the cases in 'Integrity Games' was fun”.

Motivation to learn

Even before starting the sessions, participants were, on average, fairly motivated to learn more about academic integrity, with a mean motivation of 3.8 (with 5 being the highest possible score) in the pre-test. The sessions did not result in significant changes in the participants’ motivation to learn. Across the experimental conditions, on average, participants did not significantly change their motivation to learn more about academic integrity (\(b= 0.00, 95\mathrm{\% CI }\left[-0.05,0.06\right], t\left(255\right)=0.12, p=.909\). For further details, see Table 3). Moreover, the motivation of participants in the intervention group did not increase significantly more than that of participants in the control group (\(b= -0.03, 95\mathrm{\% CI }\left[-0.11,0.06\right], t\left(255\right)=-0.58, p=.564.\) For further details, see Table 3).

Sensitivity to grey zones

Participants generally showed a poor understanding of grey-zone issues in the pre-test. Students in the intervention group on average scored 0.28 and participants in the control group on average scored 0.27 in the pre-test (with 1 being the optimal score and 0 being the worst possible score) (see Table 2).

Across both experimental conditions, participants improved their sensitivity to grey-zone issues (b = 0.18, 95% CI \(\left[0.12,0.23\right]\), \(t\left(255\right)=6.33\), \(p<.001.\) For further details, see Table 4). However, the Sensitivity-to-Grey-Zones Score of participants in the Integrity Games intervention group did not increase significantly more than that of participants in the control group (\(b= -0.01, 95\mathrm{\% CI }\left[-0.09,0.08\right], t\left(255\right)=-0.22, p=.827\). For further details, see Table 4).

Sensitivity to misconduct

Already in the pre-test, participants generally showed a good understanding of the questions about non-compliant practice. Students in the intervention group on average scored 0.65 and participants in the control group on average scored 0.61 in the pre-test (with 1 being the optimal score and 0 being the worst possible score). Both groups improved slightly from pre-test to post-test (\(b=0.09, 95\mathrm{\% CI }\left[0.06,0.12\right], t\left(255\right)=5.30, p<.001.\) Further details in Table 5), with both groups scoring an average of 0.7 in the post-test (Table 2). However, the Sensitivity-to-Misconduct Score of participants in the Integrity Games intervention group did not increase significantly more than that of participants in the control group (\(b=-0.03, 95\mathrm{\% CI }\left[-0.08,0.02\right], t\left(255\right)=-1.20, p=.231\). For further details, see Table 5).

Discussion

We set out to introduce the gamified online teaching tool Integrity Games, and test six hypotheses about the tool’s effectiveness. The hypotheses addressed the tool’s potential to motivate players to learn about academic integrity, and its potential to increase their sensitivity to grey-zone issues and misconduct (specifically plagiarism and falsification). In addition, we asked participants if they enjoyed playing the game and would recommend it to teachers preparing teaching in academic integrity for undergraduate students.

We found that the participants generally enjoyed playing Integrity Games, and would recommend it to teachers.

Regarding motivation to learn more about academic integrity, we showed that, in the specific experimental setup, playing two of the cases in Integrity Games followed by a group discussion did not increase the participants’ motivation to learn about academic integrity. In fact, neither the intervention group nor the control group showed any significant development in their motivation. One possible explanation for this is that the participants were, on average, already quite motivated to learn about academic integrity (the average motivation score in the pre-test was 3.8 out of 5) when starting the sessions. The potential to increase the motivation was thus somewhat limited. In addition, the literature on gamification mainly suggests that gamification may increase the player’s motivation to complete the task at hand (e.g., playing through the game rather than reading through a text). This is different from what we measured in the test. Further research is needed to investigate whether students who are assigned cases in Integrity Games as preparation for a class are more likely to prepare than students who are assigned a text.

Regarding sensitivity to misconduct and grey zones, we showed that there was a significant but small positive development from pre-test to post-test in the sensitivity to misconduct of the participants in the Integrity Games intervention group and a more substantial positive development in their sensitivity to grey-zone issues. These results indicate that, in absolute terms, Integrity Games is an effective teaching tool that can improve undergraduate students’ sensitivity to grey zones and misconduct. Most other tools on academic integrity (see Existing online tools Section) have, at best, only been effect tested in a pre-test/post-test setup, and in such a setup Integrity Games performs rather well when it comes to improving students’ sensitivity. However, as discussed below, the addition of the control group in our study adds important nuances to this result.

While our test showed that Integrity Games has the potential to increase players’ sensitivity to misconduct, it also showed that the participants in the control group developed their sensitivity to a similar extent. Thus, while Integrity Games has the potential to improve students’ sensitivity to grey-zone issues and misconduct, this potential may not be greater than non-gamified approaches. This is counter to what we had hypothesized (Hypotheses tested in the experiment Section). There are several possible explanations for this, some of which relate to the design of the tool, and some to the design of the test.

A central difference between Integrity Games and the text read by the control group is that Integrity Games incorporates some of the elements of effective gamified teaching tools listed in Gamification of online tools Section. In particular, it incorporates immersion, identification, increased complexity and immediate feedback. However, it does not, on its own, incorporate all characteristics listed in Gamification of online tools Section, nor were these additional elements incorporated in the experimental setup. Specifically, the setup did not involve any kind of “scoring” where students are told how well they did in the game (on an absolute or relative scale), nor was there a “prize” for completing the game (e.g., in the form of a badge). Both of these are characteristics of serious games that have been found to be successful in the past (Bai, Hew & Huang 2020).

Given that gamification has been found to have a positive impact on learning in other instances (Bai, Hew & Huang 2020), the missing effect in this case raises the question of whether the best explanation is found in the missing gamification elements, the potentially partial or poor implementation of the included gamification elements, or in a potential mismatch between the contents – ethically nuanced issues with no clear answers – and gamification. Our data do not offer a clear answer to this question, and we are not aware of other tests of gamified training tools on academic integrity that include a control group that receives non-gamified training.

Further possible explanations for the null result in the comparison between Integrity Games and the non-gamified text relate to the limitations of the test, of which there are several.

First, since the experiment did not include a second control group receiving no teaching between pre-test and post-test, it is not possible to directly assess the pre-test effect in the experiment. As argued by Hartley (1973), the teaching potential of a pre-test may be significant in studies where the learning outcome of the teaching is small, and the time between pre-test and post-test is relatively short. It is thus possible that much of the measured effect between pre-tests and post-tests in both the intervention and control groups is due to participants improving as a result of having taken the same test twice. This is perhaps most worrying for the results concerning sensitivity to misconduct, as the effect is quite small. In the test on sensitivity to grey zones, the pre-test effect may also be present, but is unlikely to account for the entire effect between pre-test and post-test. The effect on the sensitivity to grey zones was larger than the effect on sensitivity to misconduct, but the tests are very similar. We therefore see no reason to think the pre-test effect would be bigger for these questions than for the questions probing sensitivity to misconduct.

Second, since the participants studying in Switzerland were recruited from elective courses on various aspects of ethics and epistemology of science, they cannot be considered fully representative of undergraduate students in Switzerland, for example when it comes to motivation to learn about ethics.

Third, it may be argued that the study setup was rather artificial in the sense that the group discussion was set up in a way that few teachers would do in a standard session. This design was chosen in order to increase the internal validity of the study by reducing the potential confounding effect of having different instructors in the different sessions (a common problem in tests of gamified teaching according to Bai, Hew & Huang 2020). However, this study setup does limit the external validity of the study, as it is more difficult to transfer to classroom situations.

Additionally, the tests only provided data on sensitivity and motivation. We have no data on the students’ ability to make judgements in concrete cases involving grey-zone issues, nor do we have data on their actual behaviour after the test. Furthermore, the test does not tell us about the knowledge and motivation retention of the participants in the two groups. Stoesz & Yudintseva (2018) note that this is a common issue in tests of academic integrity training (see also Katsarov et al. 2022). Further research is needed to assess whether gamified training on academic integrity in general, and Integrity Games in particular, help improve the long-term retention of the understanding gained through the training, and whether it has a greater impact on the students’ behaviour and their ability to make judgements in the long-term.

Finally, the study design may to some extent have limited the positive effect of the gaming features of Integrity Games. As mentioned above, one reason why gamified teaching is thought to work is that it motivates students to finish the task at hand. This seems particularly important in situations where students are more easily distracted or where it is unclear how long the task will take. In this study, participants in both the control and intervention groups were asked to spend a specific, and rather limited, amount of time on their tasks, and they knew that the class would continue after they had finished the task. This is rather different from a normal preparation situation, where students would be asked to work on the task until it was done without knowing how long it will take, and it is likely that the gaming features of the tool would be more effective in an actual preparation situation than in the experimental setup.

Given these limitations, we can only tentatively conclude that the improved sensitivity to misconduct and grey-zone issues from pre-test to post-test for participants playing Integrity Games indicates that the tool can be valuable in academic integrity training aiming to help undergraduate students become more aware of the many grey-zone issues they are likely to encounter during their studies. We believe that, although the effect was modest in our experimental setup, the effect can be improved when properly integrated into classroom teaching which incorporates teacher feedback and discussion. The test therefore indicates that Integrity Games is a positive addition to the toolbox available to teachers who develop academic integrity training that goes beyond simply informing about clear-cut cheating.

However, our study also calls for further research on the educational potential of Integrity Games. In addition, our results raise more general questions about the value of gamification in academic integrity training on grey-zone issues. If, as indicated in recent reviews (Bai, Hew & Huang 2020; van Gaalen et al. 2021), gamification works mainly when elements of competition, scoring and winning can be built into the gamified tools, then it seems that gamification has the greatest potential when it is possible to clearly discern who performed best, for example by seeing who had the most correct answers. However, when dealing with grey-zone issues in academic integrity, a central caveat is that there may not be one correct way to deal with them (although some ways are clearly wrong). In dilemma-based games like Integrity Games, this makes it challenging to score students’ performance purely on their responses to how they would handle the dilemmas they are presented with. Furthermore, it may be argued that it is not the answers to the dilemmas themselves that are the most important. Rather, it is the reasoning that the students go through in order to get to the answers that is important. Is this reasoning based on the right values and understandings, and do students display the proper patterns of thought? These may be among the more appropriate questions to ask, but they are perhaps also more difficult (though not impossible) to use as the basis for a scoring system that enables the player to compete with other players and win the game. Thus, it can be particularly challenging to harvest the benefits of gamified training in academic integrity training.

Conclusion

We conclude that Integrity Games is a potentially valuable teaching tool on academic integrity for undergraduate students. Participants in our study found it fun to play, and they would recommend it to teachers of academic integrity. Furthermore, it has the potential to improve students’ sensitivity to grey-zone issues and to some extent also their sensitivity to misconduct. However, this potential may not be bigger than that of non-gamified teaching. Our study also calls for further research into the long-term retention of the positive effects of the understanding gained through playing Integrity Games, and similar gamified teaching tools, on academic integrity, and whether they have a positive effect on students’ long-term behaviour and their ability to make appropriate judgements in complex real-life cases.

Availability of data and materials

The raw data from the effect tests are available here: https://doi.org/10.17894/ucph.dce5432a-a9ee-4fd0-b9c8-d99557a50ed3.

Abbreviations

- AA: Aurélien Allard
- ACVA: Anna Catharina Vieira Armond
- CC: Christine Clavien
- CI: Confidence interval
- CS: Céline Schöpfer
- df: Degrees of freedom
- HL: Hillar Loor
- MG: Mads Goddiksen
- MWJ: Mikkel Willum Johansen
- OV: Orsolya Varga

References

Allard A, Armond A, Goddiksen M, Johansen M, Loor H, Schöpfer C, Varga O, Clavien C (2023) The quizzical failure of a nudge aimed at promoting academic integrity. Res Integr Peer Rev

ALLEA (2017). The European Code of Conduct for Research Integrity - revised edition. Available from: https://www.allea.org/publications/joint-publications/european-code-conduct-research-integrity/

Annetta L (2010) The “I’s” Have It: a framework for serious educational game design. Rev Gen Psychol 14(2):105–113. https://doi.org/10.1037/a0018985

Bai S, Hew K, Huang B (2020) Does gamification improve student learning outcome? Evidence from a meta-analysis and synthesis of qualitative data in educational contexts. Educational Research Review 30. https://doi.org/10.1016/j.edurev.2020.100322

Bates D, Mächler M, Bolker B, Walker S (2015) “Fitting Linear Mixed-Effects Models Using lme4.” Journal of Statistical Software 67(1):1–48 https://doi.org/10.18637/jss.v067.i01

Carpenter DD, Harding TS, Finelli CJ, Passow H (2004) Does academic dishonesty relate to unethical behavior in professional practice? An exploratory study. Sci Eng Ethics 10:311–324. https://doi.org/10.1007/s11948-004-0027-3

Childers D, Bruton S (2016) “Should It Be Considered Plagiarism?” Student Perceptions of Complex Citation Issues. J Acad Ethics 14:1–17. https://doi.org/10.1007/s10805-015-9250-6

Committee on Assessing Integrity in Research Environments (2002) Integrity in scientific research: creating an environment that promotes responsible conduct. National Academies Press, Washington, DC

CSDT (2021). Intrinsic Motivation Inventory. Centre for Self-Determination Theory. URL= https://selfdeterminationtheory.org/intrinsic-motivation-inventory/. Accessed Dec 2021

Egri L (1972) The Art of Dramatic Writing: Its Basis in the Creative Interpretation of Human Motives. Simon and Schuster, New York

Goddiksen M, Quinn U, Kovács N, Lund T, Sandøe P, Varga O (2021) Good friend or good student? An interview study of perceived conflicts between personal and academic integrity among students in three European countries. Account Res 10(1080/08989621):1826319

Goddiksen M, Johansen M, Armond A, Centa M, Clavien C, Gefenas E, Globokar R, Hogan L, Kovács N, Merit M, Olsson I, Poškutė M, Quinn U, Santos J, Santos R, Schöpfer C, Strahovnik V, Varga O, Wall P, Sandøe P, Lund T (2023a) Grey zones and good practice: A European survey of academic integrity among undergraduate students. Ethics Behav 10(1080/10508422):2187804

Goddiksen M, Gjerris M (2022) Teaching phronesis in a research integrity course. FACETS 7(1) https://doi.org/10.1139/facets-2021-0064

Goddiksen M, Johansen M, Armond A, Clavien C, Hogan L, Kovács N, Merit M, Olsson I, Quinn U, Santos J, Santos R, Schöpfer C, Varga O, Wall P, Sandøe P, Lund T (2023b) “The person in power told me to” - European PhD students’ perspectives on guest authorship and good authorship practice. PLoS ONE 18(1):e0280018. https://doi.org/10.1371/journal.pone.0280018

Guerrero-Dib JG, Portales L, Heredia-Escorza Y (2020) Impact of academic integrity on workplace ethical behaviour. Int J Educ Integr 16:2. https://doi.org/10.1007/s40979-020-0051-3

Hanlon, A., Algers, A., Dich, T., Hansen, T., Loor, H. & Sandøe, P (2007). ‘Animal Ethics Dilemma’: an interactive learning tool for university and professional training. Animal Welfare 16(S), 155–158

Hartley J (1973) The effect of pre-testing on post-test performance. Instr Sci 2:193–214. https://doi.org/10.1007/BF00139871

Johansen M, Christiansen F (2020) Handling Anomalous Data in the Lab: Students’ Perspectives on Deleting and Discarding. Sci Eng Ethics 26:1107–1128

Johansen MW, Goddiksen MP, Centa M et al (2022) Lack of ethics or lack of knowledge? European upper secondary students’ doubts and misconceptions about integrity issues. Int J Educ Integr 18:20

Katsarov J, Andorno R, Krom A, et al (2022) Effective Strategies for Research Integrity Training—a Meta-analysis Educ Psychol Rev 34:935–955 https://doi.org/10.1007/s10648-021-09630-9

Kier C (2019) Plagiarism Intervention Using a Game-Based Tutorial in an Online Distance Education Course. Journal of Academic Ethics 17:429–439. https://doi.org/10.1007/s10805-019-09340-6

Lerouge I, Hol A (2020) Towards a Research Integrity Culture at Universities: From Recommendations to Implementation. LERU. Available at: https://www.leru.org/files/Towards-a-Research-Integrity-Culture-at-Universities-full-paper.pdf

McCabe D, Trevino L, Butterfield K (2001) Cheating in Academic Institutions: A Decade of Research. Ethics & Behaviour 11(3):219–232

Pearce C (1997) The Interactive Book: A Guide to the Interactive Revolution. MacMillan Technical Publishing

Ravn T, Sørensen M (2021) Exploring the Gray Area: Similarities and Differences in Questionable Research Practices (QRPs) Across Main Areas of Research. Sci Eng Ethics 27(40) https://doi.org/10.1007/s11948-021-00310-z

Roig M (1997) Can undergraduate students determine whether text has been plagiarized? The Psychological Record 47:113–122

Schmidt J (2014) Changing the paradigm for engineering ethics. Sci Eng Ethics 20(4):985–1010. https://doi.org/10.1007/s11948-013-9491-y

Stephens J, Wangaard D (2016) The achieving with integrity seminar: an integrative approach to promoting moral development in secondary school classrooms. Int J Educ Integr 12(1):1–16. https://doi.org/10.1007/s40979-016-0010-1

Stoesz BM, Yudintseva A (2018) Effectiveness of tutorials for promoting educational integrity: a synthesis paper. Int J Educ Integr 14:6. https://doi.org/10.1007/s40979-018-0030-0

van Gaalen A, Brouwer J, Schönrock-Adema J, Bouwkamp-Timmer T, Jaarsma A, Georgiadis J (2021) Gamification of health professions education: a systematic review. Adv Health Sci Educ Theory Pract 26(2):683–711. https://doi.org/10.1007/s10459-020-10000-3

WCRI (2010) Singapore Statement on Research Integrity. Available from www.singaporestatement.org

Acknowledgements

The authors would like to thank Peter Sandøe for his input to the early design phase of Integrity Games, and the staff at imCode Partner AB for their technical assistance in setting up the online portal. We also thank all participants in the effect study, and Nóra Kovács for her comments on the analysis. In addition, we thank Mark Harvey Simpson of Global Denmark A/S, for language editing, and all partners in INTEGRITY for input and feedback on Integrity Games.

Funding

Open access funding provided by Copenhagen University. The study was funded by the European Union’s Horizon 2020 research and innovation programme under grant agreement No 824586. The funder had no influence on the design of the study or the analysis of the findings.

Author information

Contributions

Platform design and development | Test design | Data collection | Data analysis | Data interpretation | Writing first complete draft | Revising and commenting | |

|---|---|---|---|---|---|---|---|

MPG | x | x | x | x | x | x | |

AA | x | x | x | x | x | x | |

ACVA | x | x | x | ||||

CC | x | x | x | x | x | x | |

HL | x | x | |||||

CS | x | x | x | ||||

OV | x | x | x | x | x | x | |

MWJ | x | x | x | x | x |

Ethics declarations

Competing interests

The authors have collectively designed and developed the content of the Integrity Games website. Hillar Loor is the CEO of imCode Partner AB, the company that was paid to develop the website. The website is and will remain freely available. The other authors have no financial conflict of interest regarding the promotion of the Integrity Games website.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Goddiksen, M.P., Allard, A., Armond, A.C.V. et al. Integrity games: an online teaching tool on academic integrity for undergraduate students. Int J Educ Integr 20, 7 (2024). https://doi.org/10.1007/s40979-024-00154-7

DOI: https://doi.org/10.1007/s40979-024-00154-7