Abstract

Continuous monitoring of mechanical parameters determining the state of natural and man-made systems is essential in a wide range of engineering disciplines, from mechanical to civil and geotechnical engineering. To be effective, the monitoring response time needs to be commensurate with the characteristic time over which the monitored processes vary: from seconds in some machinery, to weeks and months for mining excavations, and years in the case of structures. The methods of measurement can therefore differ significantly between applications. In spite of this, the methods of information processing (computational monitoring) have considerable commonality. This review focuses on the methods of computational monitoring in solid and structural mechanics problems across different engineering applications. The traditional methods of monitoring are reviewed along with the corresponding computational and measurement methods. The basic principles of computational monitoring in real time are established.

1 Introduction: general definition and aims

1.1 Definition of computational monitoring in real time

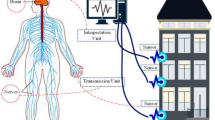

Acquiring continuous information about the performance and integrity of engineering systems in real time is essential for the efficient operation of many structural, mechanical and geotechnical systems, such as bridges, offshore and subsurface structures, power generation systems, rotating machinery, buildings, geotechnical foundations, boreholes, mining excavations and tailings, and even systems involving living matter. Nowadays, real time monitoring of system conditions is routine in the manufacture, maintenance and operation of many engineering systems. The monitoring process involves observation of a system, analysis of the obtained data and prediction of its future performance. Modern developments in sensor, communication and signal processing technologies, together with increased computational capabilities, enable high-accuracy measurement and reliable evaluation of the behaviour of engineering systems.

Various monitoring techniques have been developed to control the state of different engineering systems. Some techniques are visual, while others employ sensors to monitor the system conditions and detect faults. Commonly, these methods require a large number of sensors to provide a complete description of the system: the more complicated the system, the larger the number of sensors needed. Furthermore, the location of these sensors becomes increasingly important (Giniotis and Hope 2014). Adaptive sampling strategies that define the positions of the sensors based on physical models are becoming an essential tool for the reliable assessment of engineering systems.

Generally, monitoring procedures are categorised as model-based and signal-based (Huston 2010; Farrar and Worden 2012; Stepinski et al. 2013). Although both approaches rely on signal processing techniques, model-based monitoring methods are built on mathematical or physical models of the system being monitored, with the model parameters being estimated in the process of monitoring. The model-based methods play a vital role in the monitoring of structural loads and the evaluation of the remaining useful life of the system.

Signal-based or data-based monitoring methods, on the other hand, process direct measurements to relate the perceived system parameters to the signal features. A statistical model of training data can be established through a machine learning approach for all possible states of the system. At the monitoring stage, the measured data is assigned a corresponding state using pattern recognition algorithms (Huston 2010; Farrar and Worden 2012).

We define computational monitoring as a hybrid approach. The aim of the computational monitoring is to estimate the state of an engineering system from measured data in real time by employing an adaptable computational model, which can be updated and adjusted to the current conditions. The implementation of the computational models into the monitoring process leads to more efficient monitoring and more accurate interpretation of the results. Computational monitoring enables development of self-calibrating monitoring systems and enhances system identification capabilities of monitoring systems.

This review focuses on the integration of real time monitoring and measurement with computational models to achieve predictive power.

1.2 Motivation for computational monitoring in real time

A number of engineering systems in operation are near the end of their design life. Methods for assessing the remaining useful life are required to extend the operation of these systems beyond their design service life. Real time monitoring techniques that implement physical models for reliable damage and fault detection are essential for the safe use of these ageing systems. Computational monitoring offers prospects for the development of adaptive methods that can account for changing conditions.

Some engineering systems employ novel materials whose performance over time is not yet well established. These systems necessitate continuous monitoring that would measure the performance of the novel materials and improve service life prediction. Furthermore, the transition to more cost-effective systems may require lower safety margins, in which case maintaining safety demands more accurate monitoring. The information about system behaviour can be updated using long-term monitoring observations and implemented through computational modelling to optimise operational performance.

Under extreme events, rapid condition monitoring is required to assess the integrity of engineering systems and to warn of approaching failure or even catastrophe. In this situation, computational monitoring allows rapidly changing conditions to be incorporated into the model quickly.

While failure of an engineering structure or a structure in the Earth’s crust is usually an instantaneous event that forms a critical point on the time axis, the processes leading to this critical event take time to progress. Naturally, monitoring targets these processes, whose pace determines the notion of ‘real time’. Here we assume that each of these processes has a characteristic time, defined as the time required for the process to exhibit a noticeable change. We also assume that the minimum characteristic time of the processes leading to the failure of the system is known, and we refer to it as the characteristic time of the system. Accordingly, monitoring in real time refers to a monitoring method whose aggregated reaction time (the time needed for acquiring and processing the information and displaying the results in a form conducive to decision making) is below the characteristic time of the system being monitored.
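The real time criterion above reduces to a single comparison. The following is a minimal sketch, assuming that the aggregated reaction time is simply the sum of acquisition, processing and display times; the class and function names are hypothetical and the latencies are illustrative, not measured values.

```python
from dataclasses import dataclass


@dataclass
class MonitoringLatency:
    """Hypothetical breakdown of the aggregated reaction time (all in seconds)."""
    acquisition: float  # acquiring the measurements
    processing: float   # processing/interpreting them, including model runs
    display: float      # presenting results in a decision-ready form

    @property
    def aggregated(self) -> float:
        return self.acquisition + self.processing + self.display


def is_real_time(latency: MonitoringLatency, characteristic_time: float) -> bool:
    """Monitoring is 'real time' if its aggregated reaction time is below
    the characteristic time of the monitored system."""
    return latency.aggregated < characteristic_time


week = 7 * 24 * 3600.0
pipeline = MonitoringLatency(acquisition=3600.0, processing=6 * 3600.0, display=600.0)
print(is_real_time(pipeline, characteristic_time=week))  # True: ~7 h is well below 1 week
print(is_real_time(pipeline, characteristic_time=1.0))   # False for a machinery-like 1 s
```

The same pipeline can therefore be real time for a mining excavation and far too slow for rotating machinery; the criterion is a property of the pair (monitoring system, monitored system).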

1.3 Computational monitoring in real time for intelligent monitoring systems

Computational monitoring is the next logical step in condition and structural health monitoring procedures. Historically, it started from non-destructive testing and evaluation (NDTE) (Farrar and Worden 2012; Stepinski et al. 2013). NDTE methods were predominantly used to detect and characterise damage at a predefined location, and were commonly implemented offline after the damage had been located (Farrar and Worden 2012). Condition monitoring (CM) methods progressed to allow damage and fault detection in engineering systems during their operation. CM has usually been used for monitoring rotating and reciprocating machinery employed in manufacturing and power generation (Farrar and Worden 2012). Structural health monitoring (SHM) advanced further to predict faults at early stages. SHM involves advanced sensor technologies to observe a structure or a mechanical system over time at predefined time increments. These observations are then processed to extract damage-sensitive features, which are statistically analysed to determine the health state of the system (Farrar and Worden 2012). Damage detection in SHM thus reduces to a problem of statistical pattern recognition implemented through machine learning algorithms (Farrar and Worden 2012). The fundamental challenge of SHM is to capture the system response over the widely varying length and time scales required for accurate establishment of the statistical models (Deraemaeker and Worden 2010).
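To make the statistical pattern recognition step concrete, here is a minimal sketch of unsupervised novelty detection, one common SHM choice that is assumed here rather than prescribed by the cited works. Damage-sensitive features from the healthy state define a baseline, and a new observation is flagged when its Mahalanobis distance from that baseline exceeds an empirical threshold. The features (two natural frequencies) and all numbers are hypothetical.

```python
import numpy as np


def fit_baseline(features):
    """Mean and inverse covariance of healthy-state features, shape (n_obs, n_features)."""
    mean = features.mean(axis=0)
    cov = np.cov(features, rowvar=False)
    return mean, np.linalg.inv(cov)


def mahalanobis_sq(x, mean, cov_inv):
    """Squared Mahalanobis distance of observation x from the healthy baseline."""
    d = x - mean
    return float(d @ cov_inv @ d)


rng = np.random.default_rng(0)
# Hypothetical features: first two natural frequencies (Hz) of a healthy structure.
healthy = rng.normal(loc=[2.0, 5.5], scale=[0.01, 0.02], size=(500, 2))
mean, cov_inv = fit_baseline(healthy)

# Empirical alarm threshold: 99th percentile of the baseline distances.
threshold = np.quantile([mahalanobis_sq(x, mean, cov_inv) for x in healthy], 0.99)

new_obs = np.array([1.93, 5.40])  # stiffness loss lowers both frequencies
print(mahalanobis_sq(new_obs, mean, cov_inv) > threshold)  # True -> possible damage
```

In practice the baseline must also span environmental and operational variability (e.g. temperature), otherwise such variations are misread as damage.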

Recent developments in miniaturisation and embedding technologies for sensors and actuators have been shifting the signal-based paradigm of SHM towards hybrid methods, in which physical and mathematical models of the system are required to design the monitoring system and establish the features for damage identification. The concept of smart materials and structures is another driving force changing monitoring approaches. A smart engineering structure must not only be able to detect damage but also to heal itself. Computational monitoring in real time plays an essential role in the development of these smarter engineering systems.

1.4 Components of computational monitoring in real time

Computational monitoring is a multidisciplinary research field including a number of basic sciences, such as mechanics, material science, electronics and computer science. The main components of a real time computational monitoring system are:

- Non-destructive testing and evaluation
- Signal processing
- Analysis and interpretation of monitoring data
- Sensors and actuators
- High performance computing

A monitoring system can be designed to address the global, local and component levels (Hunt 2006; Giniotis and Hope 2014). Global level monitoring observes the environment in which the engineering system is located, recognising the influence of the surroundings and of other autonomous engineering systems. At the local level, parameters defining the health and performance of the system are monitored; this level is set to detect damage, control operational conditions and forecast the future behaviour of the system. Component level monitoring only considers parameters that are important for the functionality of a specific component. In terms of the computational monitoring concept, these levels can be treated within the framework of a multi-scale computational model, an approach that has become very popular in various engineering fields; see for example Crouch et al. (2013), Kim et al. (2013), Bogdanor et al. (2015), Guan et al. (2015) and Jenson and Unnikrishnan (2015).

Any monitoring system consists of hardware and computational/software parts. The hardware part includes various sensors (Giniotis and Hope 2014), actuators, signal acquisition and processing units (e.g. Verma and Kusiak 2012; Kusiak et al. 2013), communication devices and power supply units. Nowadays, these modules have become a part of the system architecture. Traditional structural monitoring sensors, such as strain gauges, accelerometers and temperature gauges, are being replaced with embedded MEMS and fibre optic sensors (e.g. Maalej et al. 2002; Hunt 2006; Huston 2010; Stepinski et al. 2013; Di Sante 2015). The range of power supply options, significantly broadened by applications of smart materials, includes wire-based, wireless and energy harvesting methods (Priya and Inman 2008; Annamdas and Radhika 2013). Wireless communication has become the method of choice for data transfer (Lynch 2006; Uhl et al. 2007).

The software part contains data normalisation, data cleansing, data compression, and feature extraction units. The model-based methods also include packages for the analysis of engineering systems.

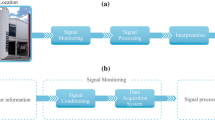

We need to add to this list that non-destructive testing and evaluation are based on measurements that can be either direct or indirect; the latter require interpretation models to express the results of the measurements in terms of the monitoring data. Furthermore, parameter estimation from the measurements may require model calibration and, in some cases, additional measurements, which necessitates the inclusion of a measurement-computation loop. Finally, monitoring results in (generalised) recommendations, be they verbal/written directives or digital signals to the actuators employed. These considerations are summarised in the diagram in Fig. 1.

In this paper, we discuss the different components of monitoring systems with the aim of presenting the state of the art in monitoring as seen from different fields of engineering. We review the traditional areas of monitoring and give an outlook on methods for interpreting monitoring data. We review measurement methods for monitoring, with particular emphasis on methods supporting continuous real time observations. We also discuss computational models that are suitable for computing in real time. The review concludes with examples of computational monitoring in geotechnical engineering.

2 Traditional areas of application of monitoring

2.1 Condition monitoring

Condition monitoring (CM) focuses on early detection of failures and wear of machinery with the intention to minimize downtimes and operational costs. The CM data is commonly used to give a probabilistic forecast of the future conditions or the remaining useful life of the equipment (Marwala 2012). The CM is an important tool in the condition-based maintenance of machinery, which is widely replacing the more expensive run-to-failure and time-based preventive maintenance approaches (Jardine et al. 2006; Peng et al. 2010; Goyal and Pabla 2015). In the condition-based maintenance, the maintenance actions are undertaken based on real time observations and prognosis of the remaining useful life of the machine components established through a condition monitoring process (Jardine et al. 2006).

In general, the condition monitoring process includes the following five consecutive steps: (1) data acquisition, consisting of direct measurement of engineering parameters such as displacements, accelerations, strains, temperature, etc.; (2) data analysis, processing the obtained data; (3) feature selection, determining specific aspects of the data that can be used as failure indicators; (4) decision making, identifying system failures through interpretation of the system features; and (5) condition diagnosis, concluding the process by estimating the state of the system (Marwala 2012).
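The five steps can be sketched as a chain of functions, one per step. This is an illustrative sketch only: the simulated signal, the choice of RMS and kurtosis as features and the kurtosis limit of 4 are assumptions, not part of the description in Marwala (2012).

```python
import numpy as np


def acquire(n=2048, fs=1000.0, fault=False, seed=0):
    """Step 1 (hypothetical data): simulated accelerometer record.
    A fault is modelled as periodic impacts, e.g. from a damaged bearing."""
    rng = np.random.default_rng(seed)
    t = np.arange(n) / fs
    x = np.sin(2 * np.pi * 50 * t) + 0.1 * rng.standard_normal(n)
    if fault:
        x[::100] += 5.0
    return x


def analyse(x):
    """Step 2: minimal pre-processing (mean removal)."""
    return x - x.mean()


def select_features(x):
    """Step 3: RMS and kurtosis, two common vibration failure indicators."""
    rms = np.sqrt(np.mean(x ** 2))
    kurtosis = np.mean(x ** 4) / np.mean(x ** 2) ** 2
    return {"rms": rms, "kurtosis": kurtosis}


def decide(features, kurtosis_limit=4.0):
    """Step 4: a pure sine has kurtosis 1.5 and Gaussian noise 3; impacts raise it sharply."""
    return features["kurtosis"] > kurtosis_limit


def diagnose(faulty):
    """Step 5: condition estimate reported for decision making."""
    return "fault suspected" if faulty else "healthy"


for fault in (False, True):
    print(diagnose(decide(select_features(analyse(acquire(fault=fault))))))
```

Keeping each step as a separate function mirrors the sequential structure of the process and allows any single step (for example, the feature set) to be replaced without touching the others.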

The CM techniques include:

- Vibration based CM, which deals with measurement and analysis of machine characteristics in the frequency or modal domains. This is the best-known CM method and allows detection of various types of failures. As discussed in recent review papers, the application areas for vibration based CM range from structural engineering (Carden and Fanning 2004) and wind turbines (Hameed et al. 2009; Kusiak et al. 2013; De Azevedo et al. 2016) to diagnostics of electrical motors (Nandi et al. 2005) and gearboxes (Lei et al. 2014; Li et al. 2016), monitoring of rolling bearings (El-Thalji and Jantunen 2015) and tool condition monitoring in drilling and cutting operations (Rehorn et al. 2005);

- Acoustic based CM, which detects and analyses the elastic waves generated by rapidly released energy from local events, such as cracks, impacts, leaks and similar events (Li 2002; Kusiak et al. 2013; Yan et al. 2015; De Azevedo et al. 2016);

- Monitoring of electrical effects. This technique is typically used for monitoring electrical equipment, such as generators and motors. It uses voltage and current measurements to detect unusual phenomena in equipment such as electric motors (Nandi et al. 2005) and induction machines (Bellini et al. 2008; Zhang et al. 2011);

- Lubricant analysis (oil debris monitoring). This type of CM is mostly performed offline: oil samples are analysed in the laboratory for the presence of wear debris, viscous properties, and particle counts and shapes (El-Thalji and Jantunen 2015; Phillips et al. 2015; De Azevedo et al. 2016);

- Thermography. This technique allows failed components to be identified from their thermal images. It is used for monitoring faults in electronic parts, deformation monitoring, corrosion and weld monitoring, and machinery inspection (Bagavathiappan et al. 2013; Kusiak et al. 2013);

- Ultrasound testing. This technique is used to evaluate material degradation by relating the properties of the material to ultrasonic wave propagation (Jhang 2009). Among the most common applications are determination of material thickness, detection of flaws and bearing inspections (Kusiak et al. 2013; El-Thalji and Jantunen 2015);

- Performance monitoring. This method is based on evaluating deviations in equipment efficiency (Hameed et al. 2009; Kusiak et al. 2013).

A central part of any CM procedure is the fault detection and isolation (FDI). The FDI methods can be classified into model-based, signal-based, knowledge-based, hybrid (combining two or more other methods) and active control methods.

The model-based FDI uses a model of the process being monitored to compare the measured output with the model-predicted one. In these methods, the model of the engineering system is obtained from physical principles or using system identification techniques (Kozin and Natke 1986; Koh et al. 2003). Many model-based FDI methods have been developed during the last four decades (see e.g. the reviews by Isermann 2005; Heng et al. 2009; Marzat et al. 2012; Gao et al. 2015a, b). The model-based fault detection methods can be categorised as deterministic FDI, stochastic FDI, fault diagnosis for discrete-event and hybrid systems, and fault diagnosis for networked and distributed systems (Gao et al. 2015b). Deterministic model-based FDI methods include a variety of observer-based methods and parity equation (parity space) methods (Isermann 2005; Gao et al. 2015b). The stochastic model-based methods commonly use Kalman filters and parameter estimation techniques. The Kalman filter method statistically tests the residuals generated by a Kalman filter built on a model of the observed system. The parameter estimation techniques identify the system parameters from real time measurements and compare them with reference parameters obtained under healthy conditions (Gao et al. 2015b). Discrete-event fault diagnostics is represented by automata-based and Petri-net-based fault detection methods (Gao et al. 2015b). The bond graph method, a hybrid fault diagnostics approach, has recently become popular for FDI in complex engineering systems. This method combines a discrete-event model based on observable events of the discrete dynamics with mode-change detection and isolation techniques for the continuous dynamics (Levy et al. 2014). Network fault diagnostics refers to monitoring via wireless and networked communication channels. Recent network technologies have brought new challenges into the monitoring field, such as transmission delays, data dropouts and the limited capacity of communication channels. The model-based FDI methods addressing these challenges were reviewed in Gao et al. (2015b). Distributed fault diagnosis treats a complex industrial process as an assembly of subsystems, each of which is monitored separately (Marwala 2012). The main advantages of such distributed systems are the reduced use of network resources and their readiness for extension. Recent developments in this area were also discussed in Gao et al. (2015b). The distinctive advantage of all model-based FDI methods is that they are able to diagnose unknown faults using a small amount of data. At the same time, such diagnosis relies heavily on the accuracy of the employed model.
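A minimal sketch of the Kalman filter residual test for a scalar system, assuming a known linear model x[k+1] = a x[k] + w, y[k] = x[k] + v with Gaussian noise of known variances q and r. When the model is correct, the normalised innovation squared follows a chi-square distribution with one degree of freedom, so values above its 99% quantile raise an alarm. The model, noise levels and the simulated sensor-bias fault are hypothetical.

```python
import numpy as np


def kalman_residual_alarms(y, a, q, r, threshold=6.63):
    """Scalar Kalman filter for x[k+1] = a*x[k] + w, y[k] = x[k] + v,
    with w ~ N(0, q) and v ~ N(0, r). Returns one boolean per sample:
    True when the normalised innovation squared exceeds `threshold`
    (6.63 is approximately the 99% quantile of chi-square with 1 dof)."""
    x_est, p = 0.0, 1.0  # initial state estimate and its variance
    alarms = []
    for yk in y:
        x_pred, p_pred = a * x_est, a * a * p + q  # predict
        e, s = yk - x_pred, p_pred + r             # innovation and its variance
        alarms.append(e * e / s > threshold)
        k = p_pred / s                             # Kalman gain; update
        x_est, p = x_pred + k * e, (1.0 - k) * p_pred
    return np.array(alarms)


rng = np.random.default_rng(1)
a, q, r, n = 0.95, 0.01, 0.04, 400
x = np.zeros(n)
for k in range(1, n):
    x[k] = a * x[k - 1] + rng.normal(0.0, np.sqrt(q))
y = x + rng.normal(0.0, np.sqrt(r), n)
y[200:] += 2.0  # hypothetical sensor bias fault from sample 200 onwards

alarms = kalman_residual_alarms(y, a, q, r)
print(alarms[:200].mean(), alarms[200])
```

Because the filter gradually absorbs a persistent bias into its state estimate, the alarm is strongest at fault onset; practical schemes often accumulate residuals over a window (e.g. a CUSUM test) instead of testing single samples.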

If no adequate model is available, condition monitoring is performed using signal-based methods that utilise the measured process history and the real time signal. Fault detection is based on symptom analysis and prior knowledge of the healthy system. Signal-based FDI can be categorised into time-domain, frequency-domain and time–frequency methods (Gao et al. 2015b). Time-domain signal-based methods perform fault detection by extracting time-domain features; methods based on mechanical vibrations, changes in electric and induction current characteristics over time, and impulse excitations are among those cited in Gao et al. (2015b). Frequency-domain signal-based methods use spectrum analysis to detect changes and/or faults; frequency analysis of the vibration signal is a common approach to CM of rotating machinery (Marwala 2012; Gao et al. 2015b). Time–frequency signal-based methods are mainly used for monitoring systems characterised by non-stationary signals. Methods in this category include the short-time Fourier transform (Nandi et al. 2005; Cabal-Yepez et al. 2013), wavelet transforms (Cabal-Yepez et al. 2013), the Hilbert–Huang transform and the Wigner–Ville distribution (Gao et al. 2015b). The main feature of signal-based methods is that no predefined model of the system is needed, which is especially important for complex engineering systems for which an adequate explicit model is not available. On the other hand, signal-based methods do not account for unbalanced input conditions, such as load deviations or power supply disturbances. Another deficiency of signal-based methods is the need for historical data under both normal and faulty conditions.
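As an illustration of frequency-domain fault detection, the sketch below computes a one-sided amplitude spectrum with NumPy's FFT and tracks the amplitude in a narrow band around a fault frequency. The 25 Hz shaft frequency, the 87 Hz fault component and the noise level are hypothetical; in practice the fault frequencies would follow from the machine kinematics (e.g. bearing or gear-mesh frequencies).

```python
import numpy as np


def amplitude_spectrum(x, fs):
    """One-sided amplitude spectrum of real signal x sampled at fs (Hz).
    (DC and Nyquist bins are not corrected for the factor of 2.)"""
    spec = np.abs(np.fft.rfft(x)) * 2.0 / len(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return freqs, spec


def band_amplitude(freqs, spec, f0, half_width=1.0):
    """Largest spectral amplitude within f0 +/- half_width (Hz)."""
    band = (freqs >= f0 - half_width) & (freqs <= f0 + half_width)
    return spec[band].max()


fs, n = 1000.0, 4000
t = np.arange(n) / fs
rng = np.random.default_rng(2)
healthy = np.sin(2 * np.pi * 25 * t) + 0.05 * rng.standard_normal(n)  # 25 Hz shaft rotation
faulty = healthy + 0.3 * np.sin(2 * np.pi * 87 * t)  # hypothetical 87 Hz fault component

for name, sig in (("healthy", healthy), ("faulty", faulty)):
    f, s = amplitude_spectrum(sig, fs)
    print(name, round(float(band_amplitude(f, s, 87.0)), 3))
```

For non-stationary signals the same idea is applied to short overlapping windows, which is the short-time Fourier transform mentioned above.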

In contrast to the model- and signal-based methods, which require either a predefined model or a signal pattern, knowledge-based models are built on historical data only, and so they are often referred to as data-driven FDI methods (Gao et al. 2015a). Knowledge-based systems are classified as qualitative and quantitative. The qualitative methods are represented by expert-system-based methods, qualitative trend analysis and signed directed graphs (Gao et al. 2015a). The quantitative methods are categorised as statistical and non-statistical knowledge-based fault diagnosis. The statistical methods require a large amount of training data to adequately model the process (Gao et al. 2015a); they include principal component analysis, partial least squares, independent component analysis, statistical pattern classifiers and support vector machines (Samanta 2004; Widodo and Yang 2007; Phillips et al. 2015). The quantitative non-statistical methods are represented by artificial neural network and fuzzy logic approaches (e.g. Li 2002; Samanta 2004; Nandi et al. 2005; Basarir et al. 2007; Heng et al. 2009; Gao et al. 2015a). The training approaches for all knowledge-based methods are categorised as supervised and unsupervised. In the unsupervised mode, a knowledge base is formed from data obtained during normal operation, and real time measurements are then evaluated against this knowledge base. In the supervised mode, the model is trained on data from both normal and faulty conditions. The main weakness of knowledge-based methods is the large computational cost associated with the large amount of historical data. Classification of new faults in complex systems may also be challenging.
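The unsupervised mode can be illustrated with principal component analysis, one of the statistical methods listed above. In this sketch (an assumed set-up with synthetic data), a PCA model is trained on normal operation only, and a new sample is flagged when its squared prediction error (Q statistic), i.e. the part not explained by the retained components, exceeds the 99th percentile of the training values.

```python
import numpy as np


def fit_pca(X_normal, n_components):
    """PCA model of standardised normal-operation data, shape (n_samples, n_vars)."""
    mean, std = X_normal.mean(axis=0), X_normal.std(axis=0)
    Z = (X_normal - mean) / std
    _, _, vt = np.linalg.svd(Z, full_matrices=False)  # principal directions
    P = vt[:n_components].T                           # loadings, (n_vars, n_components)
    return mean, std, P


def spe(x, mean, std, P):
    """Squared prediction error (Q statistic) of sample x outside the PCA subspace."""
    z = (x - mean) / std
    residual = z - P @ (P.T @ z)
    return float(residual @ residual)


rng = np.random.default_rng(3)
s = rng.standard_normal(500)  # hypothetical common driver, e.g. load level
X_normal = np.column_stack([s, 2 * s, -s]) + 0.05 * rng.standard_normal((500, 3))
mean, std, P = fit_pca(X_normal, n_components=1)
threshold = np.quantile([spe(x, mean, std, P) for x in X_normal], 0.99)

fault = np.array([1.0, 2.0, 1.0])  # third sensor breaks the normal correlation
print(spe(fault, mean, std, P) > threshold)
```

Note that each variable of the faulty sample lies within its normal range; the fault is detectable only because it violates the correlation structure learned from normal data.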

The hybrid FDI methods are introduced to overcome the limitations of the basic methods. Signal- and knowledge-based methods are naturally combined by using data driven classifiers on a basis of features extracted by a signal-based approach (as discussed in Gao et al. 2015a). The knowledge-based methods have been used to update statistical models in model-based FDI (Gao et al. 2015a; Laouti et al. 2015).

The active control FDI refers to a series of methods that enhance the fault detection capability by introducing a specially designed input signal. This signal allows for more reliable identification of faulty modes (Gao et al. 2015a).

A large number of studies dealing with various aspects of condition monitoring have been published, and diverse FDI algorithms have been developed. Different methods have different limitations, which can sometimes be overcome by combining several methods into hybrid methods. However, a number of common challenges remain in CM (Heng et al. 2009). Among the areas that need improvement are the integration of CM techniques, incomplete training/measurement data, the influence of maintenance actions, varying operational conditions, coupled failure modes and full automation (Heng et al. 2009).

2.2 Damage detection and modelling

Damage is one of the major causes of failure in materials and structures. In recent years, damage mechanics has attracted an increasing interest in civil, aeronautical and mechanical engineering communities to prevent sudden failure by detecting damage in the early stages of service and/or predicting its initiation/propagation due to external loading. Various non-destructive techniques that rely on the overall changes of physical properties have been introduced to detect the presence of damage, assess its severity and identify its location within complex mechanical structures.

2.2.1 Non-destructive damage detection

Comprehensive surveys on damage detection and monitoring of engineering systems through changes in their vibration characteristics were given in Doebling et al. (1996), Salawu (1997), Doebling et al. (1998), Fan and Qiao (2011), Thatoi et al. (2012) and Stepinski et al. (2013). This type of monitoring can be used to diagnose damage in cracked beams, shells, plates and pipelines (Wauer 1990; Dimarogonas 1996; Sabnavis et al. 2004) as well as in composite materials (Montalvo et al. 2006). Crack detection methods can also use crack-induced bilinearity: the elastic modulus in tension is reduced by crack opening, while the modulus in compression is little affected because the crack closes under compression (e.g. Adler 1970; Prime and Shevitz 1996; Rivola and White 1998; Chondros et al. 2001; Peng et al. 2008; Lyakhovsky et al. 2009); see also the review and discussion of the properties of bilinear oscillators in Dyskin et al. (2012, 2013, 2014).
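The effect of crack-induced bilinearity on the natural frequency can be shown with a single-degree-of-freedom model. Assuming an undamped oscillator whose stiffness switches at zero displacement (reduced tension stiffness when the crack opens, intact stiffness in compression), each half-cycle is half a period of a linear oscillator with its own frequency, so the period is T = π/ω1 + π/ω2 and the frequency is ω = 2ω1ω2/(ω1 + ω2). The stiffness and mass values below are hypothetical.

```python
import math


def bilinear_frequency(k_tension, k_compression, mass):
    """Angular natural frequency (rad/s) of an undamped bilinear oscillator whose
    stiffness switches at zero displacement: T = pi/w1 + pi/w2, so
    w = 2*w1*w2 / (w1 + w2)."""
    w1 = math.sqrt(k_tension / mass)      # crack open (tension side)
    w2 = math.sqrt(k_compression / mass)  # crack closed (compression side)
    return 2.0 * w1 * w2 / (w1 + w2)


k_intact, mass = 100.0, 1.0  # hypothetical values (N/m, kg)
w_intact = bilinear_frequency(k_intact, k_intact, mass)
w_breathing = bilinear_frequency(64.0, k_intact, mass)  # 36% tension stiffness loss
w_open = math.sqrt(64.0 / mass)  # same crack held open in both directions
print(w_intact, w_breathing, w_open)
```

With these values the breathing crack lowers the frequency from 10 to about 8.89 rad/s (roughly 11%), less than the 20% drop to 8 rad/s for a permanently open crack, so a frequency shift alone can underestimate crack severity.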

Among the novel methods of damage detection, impedance-based techniques are also worth mentioning. When bonded to mechanical structures, piezoelectric patches can be used as sensors/actuators to monitor and detect damaged elements (Naidu et al. 2002; Park et al. 2003). The detection approach consists of comparing the early-age (baseline) frequency response functions with the subsequent responses; any later modifications of the signals can be attributed to the presence of damage (Raju 1997). Statistical process control (SPC), for example, is a signal-based method that monitors changes in a process and relates them to specific operational conditions, in particular possible damage to the system (Farrar and Worden 2012). Damage prognosis (DP) is used to assess the remaining useful life of a system after damage is located (Farrar and Worden 2012). In geotechnical applications, the investigation of wave propagation is widely used to detect internal damage; in particular, the types of cracks can be inferred from the variation of wave velocity with external load (Khandelwal and Ranjith 2010; Zaitsev et al. 2017a, b).
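The comparison of baseline and subsequent signatures is often quantified with a scalar damage index. The sketch below uses the root mean square deviation (RMSD), a metric widely used in impedance-based monitoring but assumed here rather than taken from the cited works; the single-peak signatures and the 2% resonance shift are synthetic.

```python
import numpy as np


def rmsd_index(baseline, current):
    """RMSD damage index between baseline and current signatures
    (e.g. real part of electro-mechanical impedance) on the same frequency grid."""
    baseline = np.asarray(baseline, dtype=float)
    current = np.asarray(current, dtype=float)
    return float(np.sqrt(np.sum((current - baseline) ** 2) / np.sum(baseline ** 2)))


def signature(f, f_res, width=500.0):
    """Synthetic signature with one resonance peak (hypothetical)."""
    return 1.0 + 1.0 / (1.0 + ((f - f_res) / width) ** 2)


f = np.linspace(10e3, 50e3, 401)  # Hz
baseline = signature(f, 30e3)
damaged = signature(f, 29.4e3)  # damage assumed to shift the resonance down by 2%
print(rmsd_index(baseline, baseline), round(rmsd_index(baseline, damaged), 3))
```

An alarm level for the index would in practice be set from repeated baseline measurements, since temperature and bonding conditions also change the signature.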

As a third category of non-destructive testing, infrared thermography offers a damage detecting method that does not rely on mechanical properties (Cielo et al. 1987; Muralidhar and Arya 1993; Lüthi et al. 1995). This technique monitors the effect of local defects on the heat flow trajectories. In a highly conductive medium the lines of heat transfer are predictable and well defined. They can be distorted locally in the presence of intrusive bodies or upon development of damage zones. Infrared cameras can be used to illustrate such anomalies (Sham et al. 2008).

Electromagnetic fields can also be useful to detect damage in civil infrastructures through Ground Penetrating Radar (GPR) (Xiongyao et al. 2007; Parkinson and Ékes 2008; Yelf et al. 2008). GPR uses an antenna containing a transmitter and a receiver that send electromagnetic waves through the subsurface of the monitored infrastructure. As the antenna is moved along predefined paths, energy is recorded in real time on radargrams that indicate the presence of anomalies associated with the change of dielectric properties.
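Converting a reflection on a radargram into a depth requires the wave velocity in the medium. A minimal sketch, assuming a homogeneous, low-loss, non-magnetic material in which v = c/√εr, so that the reflector depth is half the distance travelled during the two-way travel time; the relative permittivity of 9 in the example is an assumed, illustrative value.

```python
C0 = 299_792_458.0  # speed of light in vacuum, m/s


def gpr_depth(two_way_time, relative_permittivity):
    """Reflector depth (m) from two-way travel time (s), assuming a homogeneous,
    low-loss, non-magnetic medium with velocity v = c / sqrt(eps_r)."""
    velocity = C0 / relative_permittivity ** 0.5
    return velocity * two_way_time / 2.0


print(round(gpr_depth(10e-9, 9.0), 3))  # 10 ns echo, eps_r = 9 (illustrative)
```

Because the depth scales with 1/√εr, an anomaly in the radargram may reflect either a geometric feature or a change in dielectric properties, which is why GPR interpretation usually needs calibration against known targets.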

2.2.2 Damage modelling

Modelling of damage is usually based on continuum damage mechanics, which was established in the pioneering works of Kachanov (1958) and Rabotnov (1969) that defined the concept of ‘effective’ stress in materials containing defects. Lemaitre and Chaboche introduced a thermodynamic formulation and constitutive models that can predict the degradation of metallic materials due to mechanical loading (Lemaitre 1985; Chaboche 1987; Lemaitre and Chaboche 2001). Karrech et al. introduced a temperature-dependent damage model to describe damage of the lithosphere (Karrech et al. 2011a), a pressure- and temperature-dependent damage mechanics model for subsurface crustal strata (Karrech et al. 2011b), fluid-flow-dependent damage (Karrech et al. 2014) and fractal damage of materials with a self-similar distribution of defects (Karrech et al. 2017). When the type of defects is known, the formulation of the constitutive model can be assisted by the theory of effective characteristics; for example, Salganik (1973), Vavakin and Salganik (1975, 1978) and Dyskin (2002, 2004, 2005, 2006, 2007, 2008) developed the mechanics of materials (including geomaterials) with self-similar distributions of cracks or fractures.
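For reference, the effective stress concept of Kachanov and Rabotnov, combined with Lemaitre's hypothesis of strain equivalence, can be written for a scalar damage variable D in one dimension as follows, with E the Young's modulus of the undamaged material and Ẽ the apparent modulus of the damaged one. The last relation indicates why stiffness changes measured during monitoring can be interpreted in terms of damage (a standard textbook sketch, not a model specific to the works cited above).

```latex
\tilde{\sigma} = \frac{\sigma}{1 - D}, \qquad 0 \le D < 1,
\qquad
\varepsilon = \frac{\tilde{\sigma}}{E} = \frac{\sigma}{(1 - D)\,E},
\qquad
\tilde{E} = (1 - D)\,E \;\Rightarrow\; D = 1 - \frac{\tilde{E}}{E}.
```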

2.3 Monitoring of mining operations

Instrumentation and monitoring are used in the mining sector to ensure that constructed mining engineering structures, such as slopes, large underground openings and tailings dams, behave according to the expectations defined at the design stage.

In open pit mines, different forms of monitoring systems have been used to predict slope stability (Sakurai 1997). Previously, monitoring points were fixed in the field and surveyed periodically, whereas recent technology has introduced improved systems such as slope monitoring radar.

For underground mines, the application area of monitoring is much wider than for open pit mining. Continuous and periodic measurements are conducted both for early warning and for calibration of numerical models. In addition, in underground coal mining, continuous monitoring is mandatory for the detection and observation of poisonous and combustible gases, e.g. CO and methane.

Field measurement studies are very important both for evaluating the performance of existing and designed support elements and for calibrating numerical models. Numerical model calibration is a way of reliably estimating the strength properties of a rock mass and reducing uncertainties (Sakurai 1997). Calibration studies involve comparing modelling results with field measurements: the input parameters are modified in a systematic manner until satisfactory agreement between the model results and the field measurements is achieved. Once calibrated, the model can be applied to evaluate similar mining layouts and geological conditions.
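The systematic modification of input parameters can be sketched as a grid search. To keep the example self-contained, a closed-form elastic solution stands in for the numerical model: the radial wall displacement of a circular opening under hydrostatic in situ stress p0 in an isotropic elastic medium (plane strain), u = p0 R (1 + ν)/E. The field data, the Poisson's ratio of 0.25 and the grid of moduli are all hypothetical.

```python
import numpy as np


def wall_displacement(p0, radius, E, nu=0.25):
    """Elastic radial wall displacement of a circular opening under hydrostatic
    in situ stress p0 (plane strain): u = p0 * R * (1 + nu) / E."""
    return p0 * radius * (1.0 + nu) / E


def calibrate_modulus(p0, radius, u_measured, E_grid):
    """Vary E systematically; keep the value with the smallest RMS misfit."""
    misfits = [np.sqrt(np.mean((wall_displacement(p0, radius, E) - u_measured) ** 2))
               for E in E_grid]
    best = int(np.argmin(misfits))
    return E_grid[best], misfits[best]


# Hypothetical field data: four stations at increasing depth.
p0 = np.array([10.0, 15.0, 20.0, 25.0])  # in situ stress, MPa
radius = 3.0                              # opening radius, m
rng = np.random.default_rng(4)
u_measured = wall_displacement(p0, radius, E=12_000.0) * (1 + 0.03 * rng.standard_normal(4))

E_grid = np.linspace(5_000.0, 30_000.0, 251)  # trial moduli, MPa (100 MPa steps)
E_best, misfit = calibrate_modulus(p0, radius, u_measured, E_grid)
print(E_best, misfit)
```

In a real calibration each trial would be a run of the numerical model, and several parameters (e.g. strength as well as stiffness) would usually be varied, which makes the cost of the search a central concern for real time use.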

2.4 Hydraulic fracture monitoring

Hydraulic fracturing is a technique used in the petroleum industry for reservoir stimulation, especially in tight gas reservoirs, in geothermal technologies, and in the mining industry for block caving stimulation and stress measurement. It involves cracking the rock formation using high pressure fluid applied in a borehole. The success of hydraulic fracturing operations depends vitally on the direction and dimensions of the produced fracture. Since hydraulic fracturing is very sensitive to the directions of the principal in situ stresses, the distribution of pre-existing fractures and the rock strength, it is important to monitor the direction and dimensions of the hydraulic fracture.

A number of methods are used or have been proposed for hydraulic fracture monitoring. They include fracturing fluid pressure analysis, fracture fluid tracing, microseismic mapping, crosswell seismic detection, vertical seismic profiling and surface tilt measurement.

Conventional tracing of the hydraulic fracture involves adding chemical and gamma-emitting tracers to the fracturing fluids (King 2011). Microseismic monitoring based on locating the signal sources, the so-called passive microseismic mapping, has become quite popular. Passive microseismic mapping images the fracture by detecting microseisms or micro-earthquakes. These are triggered by shear slippage on reactivated bedding planes or natural fractures adjacent to the hydraulic fracture (Cipolla and Wright 2000).
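A minimal sketch of how passive microseismic mapping can locate a single event is given below. It assumes a homogeneous medium with a known, constant P-wave velocity. Every node of a search grid is tested. At each node, the origin time is estimated as the mean arrival-time residual, which is its least-squares value. The node with the smallest root-mean-square residual is taken as the hypocentre. The receiver layout, velocity and arrivals are hypothetical. Practical systems use layered velocity models, picking uncertainties and more efficient search strategies.

```python
import numpy as np

def locate_source(receivers, arrivals, velocity, grid_axes):
    """Grid-search location of a microseismic event in a homogeneous medium.

    receivers : (n, 3) receiver coordinates (m)
    arrivals  : (n,) observed P-wave arrival times (s)
    velocity  : assumed constant P-wave velocity (m/s)
    grid_axes : three 1-D arrays spanning the search volume
    Returns the best grid node and its estimated origin time.
    """
    gx, gy, gz = np.meshgrid(*grid_axes, indexing="ij")
    nodes = np.stack([gx.ravel(), gy.ravel(), gz.ravel()], axis=1)     # (m, 3)
    # Travel time from every grid node to every receiver, shape (m, n).
    dist = np.linalg.norm(nodes[:, None, :] - receivers[None, :, :], axis=2)
    travel = dist / velocity
    # Least-squares origin time for each node is the mean residual.
    t0 = np.mean(arrivals[None, :] - travel, axis=1)
    residual = arrivals[None, :] - travel - t0[:, None]
    rms = np.sqrt(np.mean(residual ** 2, axis=1))
    best = np.argmin(rms)
    return nodes[best], t0[best]

# Hypothetical array: four surface receivers and two borehole receivers.
receivers = np.array([[0, 0, 0], [400, 0, 0], [0, 400, 0],
                      [400, 400, 0], [200, 0, 300], [0, 200, 300]], dtype=float)
true_source, true_t0, v = np.array([150.0, 250.0, 200.0]), 0.010, 4000.0
arrivals = true_t0 + np.linalg.norm(receivers - true_source, axis=1) / v   # synthetic picks

axis = np.arange(0.0, 401.0, 10.0)
loc, t0 = locate_source(receivers, arrivals, v, (axis, axis, axis))
print("located at", loc, "origin time", t0)
```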

An interesting development is an acoustic method that generates seismic waves from within the fluid itself by injecting the so-called noisy proppant (Willberg et al. 2006). The noisy proppant consists of small granular explosives that are floated into the fracture. Under a certain pressure, they explode, announcing their position to surface-mounted receivers. Dyskin et al. (2011) proposed a modification of this method in which the explosive is replaced by safer electronic pulse-emitting particles, which we term screamers. These particles can synchronise themselves to create interference, which increases the long-wave energy content of their combined output. Both methods are active acoustic (microseismic) methods based on installing artificial noise-emitting devices.

Another method, surface tilt monitoring, is based on measuring the fracture-induced tilt at many points above a hydraulic fracture (see the literature review in Mahrer 1999). In essence, it is a variant of the strain measuring technique, as the surface tilt is a component of the displacement gradient at the surface. Determining the fracture parameters requires solving a geophysical inverse problem, which poses a certain computational challenge.
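The sketch below illustrates why tilt interpretation is an inverse problem. For simplicity, the fracture is replaced by a much simpler source: a Mogi point source of volume change ΔV at depth d in an elastic half-space. Its surface uplift is u_z = (1 - ν) ΔV d / (π (r² + d²)^(3/2)), and the radial tilt is the derivative of u_z with respect to r. This substitution is ours and serves only as an illustration; interpreting a real fracture would normally require an opening-crack model. For a trial depth, the tilt is linear in ΔV, so ΔV follows from a one-parameter least-squares fit. The depth is then found by a grid search. The station distances and synthetic data are hypothetical.

```python
import numpy as np

def mogi_tilt(r, depth, dV, nu=0.25):
    """Radial surface tilt (rad) above a Mogi point source with volume change
    dV (m^3) at the given depth (m): derivative of
    u_z = (1 - nu) * dV * depth / (pi * (r^2 + depth^2)^1.5) with respect to r."""
    R2 = r ** 2 + depth ** 2
    return -3.0 * (1.0 - nu) * dV * depth * r / (np.pi * R2 ** 2.5)

def invert_tilt(r, tilt_obs, depths, nu=0.25):
    """Grid search over depth. For each trial depth the tilt is linear in dV,
    so dV is obtained by least squares; the depth with the smallest misfit wins."""
    best = (None, None, np.inf)
    for d in depths:
        g = mogi_tilt(r, d, 1.0, nu)             # tilt per unit volume change
        dV = (g @ tilt_obs) / (g @ g)            # least-squares volume change
        misfit = np.sum((tilt_obs - dV * g) ** 2)
        if misfit < best[2]:
            best = (d, dV, misfit)
    return best

# Hypothetical tiltmeter array: radial distances (m) from the point above the source.
r = np.linspace(50.0, 3000.0, 30)
tilt_obs = mogi_tilt(r, 1500.0, 2000.0)          # synthetic, noise-free data
depth, dV, misfit = invert_tilt(r, tilt_obs, np.arange(500.0, 3001.0, 50.0))
print(f"depth = {depth:.0f} m, volume change = {dV:.1f} m^3")
```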

2.5 Measurement while drilling

Measurement While Drilling (MWD) refers to measurements taken downhole during borehole drilling. The measurements are made by an electromechanical device located in the bottom hole assembly of the drill string. Data obtained while drilling exploration or production holes can reduce the cost and time of site evaluation and increase its accuracy. Drilling performance parameters include penetration rate, thrust on the bit, rotary speed and torque. Interpreting these parameters enables information on the rock mass properties to be extracted.
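The sketch below shows how drilling parameters can be combined into a rock-related index. It computes the drilling specific energy, which is the energy spent per unit volume of rock excavated:

e = F/A + 2πNT/(Au)

Here F is the thrust, T the torque, N the rotary speed, u the penetration rate and A the bit area. We chose this index for illustration; the text does not prescribe it. The bit diameter and log records are hypothetical. At constant thrust and torque, higher values generally indicate rock that is harder to drill. Any such link must, however, be calibrated against core logs.

```python
import math

def specific_energy(thrust_N, torque_Nm, rpm, penetration_m_per_h, bit_diameter_m):
    """Drilling specific energy (MPa): thrust term F/A plus rotary term
    2*pi*N*T/(A*u), with N in rev/s and u in m/s."""
    area = math.pi * bit_diameter_m ** 2 / 4.0      # bit cross-section (m^2)
    n_rev_per_s = rpm / 60.0
    u = penetration_m_per_h / 3600.0                 # penetration rate (m/s)
    thrust_term = thrust_N / area
    rotary_term = 2.0 * math.pi * n_rev_per_s * torque_Nm / (area * u)
    return (thrust_term + rotary_term) / 1e6         # Pa -> MPa

# Hypothetical log records: (depth m, thrust N, torque N*m, rpm, penetration m/h)
log = [(10.0, 60e3, 900.0, 90.0, 30.0),
       (10.5, 60e3, 900.0, 90.0, 12.0),   # slower penetration at the same thrust and torque
       (11.0, 60e3, 900.0, 90.0, 45.0)]
for depth, F, T, N, pr in log:
    print(f"{depth:5.1f} m  SE = {specific_energy(F, T, N, pr, 0.115):8.1f} MPa")
```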

Drill monitoring studies are not new in mining. They can be traced back to the 1970s and early 1980s, when they were used to detect weak and water-bearing zones (Brown and Barr 1978; Deveaux et al. 1983; Schneider 1983). Multi-channel data logging by Brown and Barr (1978) demonstrated that monitored drill parameters could be used to locate fractures and discriminate between lithologies. With recent technological advances, more research in the mining sector has focused on the MWD technique. The following can all potentially be determined from the recorded drilling parameters:

- rock type and lithology;
- rock strength (Hatherly et al. 2015; Basarir and Karpuz 2016);
- ground characteristics (Kahraman et al. 2015).

However, these data are recorded with respect to depth in the borehole. They must be interpreted by direct comparison, correlation and calibration with core logs. It should be noted that the MWD technique has a predecessor, the so-called under-excavation technique (Wiles and Kaiser 1994a, b). In that technique, the in situ stress is determined from measurements taken as an opening is excavated.

3 Methods of measurements for monitoring

Measurement is an essential part of any monitoring system. Measurement methods for monitoring should be non-destructive, robust to changes in operational conditions and able to function over long periods of time. A number of methods satisfying these requirements have been developed and used in the traditional areas of monitoring and in experimental research. This section reviews measurement methods suitable for computational monitoring in real time.

3.1 Strain gauge monitoring

Strain gauges are a common tool for measuring deformations of solids and structures. They are inexpensive, simple to use and available with various sensitivity levels. Strain gauges can be used to monitor both external and internal deformations. For example, electric resistance strain gauges are often used to monitor the strain in steel reinforcing bars (e.g. Maalej et al. 2002; Hunt 2006; Huston 2010; Stepinski et al. 2013; Di Sante 2015). For 120 Ohm resistance strain gauges, the strain can be calculated as

Strain (micro-strain) = CI × Volt/(2.5 G × F)

where Volt is the measured voltage, F is the gauge factor and G is the gain. CI is the connection index, equal to 1 for a full bridge, 2 for a half bridge and 4 for a quarter bridge.

This approach was used for testing reinforced concrete (RC) members at the Structural Laboratory of the University of Western Australia. An example of the sensor application is shown in Fig. 2: a 10 mm long electric resistance strain gauge of 120 Ohm resistance attached to the surface of a reinforcing bar. The tests were conducted on RC columns and beams under static loading. The behaviour of the structural members was monitored by correlating the global displacements measured externally with the rebar strains obtained by the embedded strain gauges.

Figure 3 shows the tested RC column before and after testing. The column is 1200 mm long and has a 180 mm square cross section. Figure 4a shows a typical vertical load–axial deflection curve, and Fig. 4b shows the vertical load–strain curve at mid-span of the column. The failure load was 1400 kN, reached at about 5.5 mm vertical deflection, corresponding to 4500 micro-strain.

Figure 5 shows the four-point bending test of the RC beam. The beam is 1200 mm long and has a 180 mm square cross section. Figure 6a shows a typical vertical load–mid-span deflection curve, and Fig. 6b shows the vertical load–strain curve at mid-span of the beam. The failure load was 83 kN, reached at about 8.5 mm vertical deflection. This deflection corresponds to 8500 micro-strain in tension and 500 micro-strain in compression.
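The strain conversion quoted above can be written as a small helper. It implements the expression exactly as stated, with connection index CI = 1, 2 or 4 for full, half and quarter bridges. One reading, which the text does not state and which is our assumption, is that the factor 2.5 corresponds to a 2.5 V bridge excitation. In that case Volt is the amplified bridge output in microvolts. The example values are hypothetical.

```python
# Connection index CI for the bridge configurations quoted in the text.
CONNECTION_INDEX = {"full": 1, "half": 2, "quarter": 4}

def microstrain(volt, gain, gauge_factor, bridge="quarter"):
    """Strain (micro-strain) = CI * Volt / (2.5 * G * F), as given in the text.
    Assumption: 2.5 V excitation and `volt` in microvolts at the amplifier output."""
    ci = CONNECTION_INDEX[bridge]
    return ci * volt / (2.5 * gain * gauge_factor)

# Hypothetical reading: quarter bridge, gain 100, gauge factor 2.0.
print(microstrain(562500.0, 100.0, 2.0))
```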

3.2 Close-range photogrammetry

Strain gauging is complicated and time-consuming. The data obtained are also constrained to the typically small strain gauge dimensions, which can be a considerable limitation, especially when the surface is highly heterogeneous. Typically, the strain gauge is bonded to the surface, and careful application is critical to ensure good measurements. It is estimated that each strain gauge requires 2–3 h to apply and prepare for testing. The test engineer selects the position of each measuring point carefully using a finite-element analysis (FEA) model. The engineer also limits the number of strain gauges per component to minimize cost and setup time.

The strain gauge outputs a measurement of the strain at its location and solely in the direction in which it is mounted. Critical areas often have high strain gradients, but strain levels quickly decrease a few millimetres from the hot spot to levels too low to measure accurately. Consequently, the strain gauges are mostly ineffective for strain concentration measurements unless the precise location of the strain concentration is known. This is notoriously difficult with non-homogeneous materials such as rocks, see example in Fig. 7. Furthermore, the development and growth of fractures induced by the stress concentrators could damage the gauges.

It is therefore attractive to use contactless measuring techniques. The most popular of these is photogrammetry, a method of measuring the geometry of a surface by tracing the changes in consecutive photographs. Photogrammetry originated in geology and geodesy. It can be applied to monitoring by measuring the displacement, strain and possibly rotation fields and their evolution. Stereo photography is needed to reconstruct the full 3D displacements on the surface. However, if only in-plane displacements are sought, a single camera can be sufficient.

Currently, the two most common methods are close-range photogrammetry and Digital Image Correlation (DIC). Close-range photogrammetry generates the 3D coordinates of specific predefined points of interest at time intervals. The deformation measurement is then based on the changes in these coordinates. The points of interest are marked beforehand using special artificial markers and coded targets, which facilitate their automatic detection and identification; see the examples in Figs. 7 and 8. DIC, on the other hand, is based on pattern matching. The natural or artificially applied pattern on the surface of the test object is divided into correlation areas called macro-image facets or subsets of pixels. These subsets are tracked in each consecutive image to generate displacement fields, from which strain fields are derived.

Close-range photogrammetry measurements of a laboratory prototype: a mark up of points of interest and reference points, b calibration bundle (Shufrin et al. 2016)

In close-range photogrammetry, the ratio of the camera focal length to the object distance is chosen between 1:20 and 1:1000. The method requires special reflective targets fixed to the surface. Off-line photogrammetry systems can achieve a measurement precision of 1:500,000 with respect to the largest object dimension (Fraser 1992; Fraser et al. 2005; Luhmann 2010). On-line systems achieve around 1:10,000 (Luhmann 2010). Besides high accuracy, close-range photogrammetry offers three further advantages: non-contact measurement, the ability to monitor a wide network of points at no additional cost, and applicability in a wide range of engineering situations.

Multi- and single-camera close-range photogrammetry systems have been applied to civil engineering structures for the following purposes:

- measuring static deflections (Franke et al. 2006; Jiang et al. 2008; Lee and Al-Mahaidi 2008; Ye et al. 2011) and dynamic deflections (Qi et al. 2014);
- capturing vibration (Ryall and Fraser 2002) and buckling (Bambach 2009) modes;
- assessing thermal deformations (Fraser and Riedel 2000);
- automatic fracture monitoring (Valença et al. 2012).

The method is also used in laboratory experiments for displacement and strain measurements (Shufrin et al. 2016).

Close-range photogrammetry simultaneously reconstructs the object from several images of the targets taken from different viewpoints. It does so by forming bundles of intersecting rays and triangulating. The accuracy of photogrammetric measurements depends on four factors (Fraser et al. 2005; Luhmann 2006):

1. the number of images: the more images used, the higher the accuracy of the bundle adjustment;
2. the spatial geometry of the intersecting rays: the wider the geometry, the smaller the triangulation errors;
3. the imaging scale: the greater the magnification, the higher the accuracy of the image measurements;
4. the geometric reliability and resolution of the camera. So-called metric cameras, with stable internal geometry and high resolution, give higher precision.

High-accuracy measurement of a stationary object requires a significantly larger number of camera views. These can be obtained either with multiple cameras or by moving a single camera around and taking many images. The single-camera approach cannot be used to track a continuous deformation process, because each instantaneous deformation state has to be captured simultaneously from numerous camera positions. The multiple-camera approach, with several metric or semi-metric cameras, entails high cost and synchronisation problems. Commercial software packages such as iWitnessPRO (Fraser and Cronk 2009) can be used for both camera calibration and measurements.
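Factor 2 above, the spatial geometry of the intersecting rays, can be illustrated with the simplest form of triangulation. Two rays from known projection centres are intersected at their point of closest approach (the midpoint method). This is a sketch of triangulation, not a bundle adjustment. The camera positions, the 0.1 mrad pointing error and the target location are hypothetical. With the same angular error, the narrow-baseline configuration gives a much larger position error than the wide one.

```python
import numpy as np

def triangulate_midpoint(c1, d1, c2, d2):
    """Closest-approach (midpoint) triangulation of two rays x = c + s*d, where
    c is a camera projection centre and d a viewing direction.
    Returns the midpoint estimate and the gap between the two rays."""
    d1 = d1 / np.linalg.norm(d1)
    d2 = d2 / np.linalg.norm(d2)
    w0 = c1 - c2
    b = d1 @ d2
    denom = 1.0 - b ** 2                   # tends to zero as the rays become parallel
    if denom < 1e-12:
        raise ValueError("rays are (nearly) parallel")
    s = (b * (d2 @ w0) - (d1 @ w0)) / denom
    t = ((d2 @ w0) - b * (d1 @ w0)) / denom
    p1, p2 = c1 + s * d1, c2 + t * d2      # closest points on each ray
    return 0.5 * (p1 + p2), float(np.linalg.norm(p1 - p2))

target = np.array([0.0, 0.0, 10.0])
errors = {}
for baseline in (0.5, 5.0):                # camera separation (m), hypothetical
    c1 = np.array([-baseline / 2, 0.0, 0.0])
    c2 = np.array([baseline / 2, 0.0, 0.0])
    d1 = target - c1
    # Hypothetical pointing error of about 0.1 mrad on the second ray.
    d2 = (target - c2) / np.linalg.norm(target - c2) + np.array([1e-4, 0.0, 0.0])
    point, gap = triangulate_midpoint(c1, d1, c2, d2)
    errors[baseline] = float(np.linalg.norm(point - target))
print(errors)
```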

For example, Shufrin et al. (2016) proposed a procedure for measuring planar deformations from digital images using a single camera. Figure 8a presents the laboratory setup used to measure deformation in the uniaxially loaded prototype. The displacements are measured at points of interest marked with special retroreflective targets. Reference points are also distributed around the prototype. They provide a wide spatial geometry for camera calibration and for determining the stationary position of the camera. Images of the object were taken from the stationary camera position during the loading sequences, as shown in Fig. 8b. This camera position was determined relative to the prototype at the end of the experiment, using images taken from calibration camera stations (see Fig. 8b).
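The sketch below shows one generic way to measure planar displacements with a single stationary camera; it is not necessarily the procedure of Shufrin et al. (2016). A plane-to-image homography is estimated by the direct linear transform (DLT) from at least four reference points with known plane coordinates. Target image positions recorded at each load stage are then mapped back onto the object plane. The sketch assumes a planar object surface and that lens distortion has already been corrected. The homography, reference grid and displacements are synthetic. With real data, normalising the coordinates before the DLT improves conditioning.

```python
import numpy as np

def fit_homography(plane_xy, image_uv):
    """Direct linear transform: 3x3 homography H with [u, v, 1]^T ~ H [x, y, 1]^T,
    estimated from at least four reference points (no coordinate normalisation)."""
    rows = []
    for (x, y), (u, v) in zip(plane_xy, image_uv):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = vt[-1].reshape(3, 3)        # singular vector of the smallest singular value
    return H / H[2, 2]

def apply_homography(H, pts):
    """Apply a homography to an (n, 2) array of points, with homogeneous division."""
    pts1 = np.column_stack([pts, np.ones(len(pts))])
    out = pts1 @ H.T
    return out[:, :2] / out[:, 2:3]

# Synthetic camera model (hypothetical), used only to generate "measured" image points.
H_true = np.array([[2.0, 0.1, 300.0], [0.05, 1.9, 200.0], [1e-4, 2e-4, 1.0]])
reference = np.array([[0, 0], [200, 0], [200, 300], [0, 300], [100, 150]], dtype=float)  # mm
H = fit_homography(reference, apply_homography(H_true, reference))
H_inv = np.linalg.inv(H)

# Targets at two load stages (hypothetical plane positions in mm).
before = np.array([[50.0, 100.0], [150.0, 200.0]])
after = before + np.array([[0.0, -0.8], [0.3, -1.2]])
uv_before = apply_homography(H_true, before)      # what the camera would record
uv_after = apply_homography(H_true, after)
disp = apply_homography(H_inv, uv_after) - apply_homography(H_inv, uv_before)
print(disp)                                      # in-plane displacements (mm)
```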

3.3 Digital image correlation

Another method that uses photographic images of deformed objects is Digital Image Correlation (DIC). It is based on comparing two images, one before and one after deformation. The surface should be covered with irregularly placed speckles (e.g. blobs of paint); see Fig. 9. The algorithm selects subsets of pixels in the image before deformation (the reference image, Fig. 9a). Each subset is correlated with subsets of the deformed image, and the new position of the subset centre in the deformed image is calculated (Fig. 9a, b). Repeating this for all subsets in the reference image reconstructs the displacement field. The strain field is usually then obtained by differentiation, as the symmetric part of the displacement gradient. Algorithms have also been developed for the direct reconstruction of strains (e.g. Lu and Cary 2000). The method has found various applications, and various processing methods aimed at increasing resolution have been developed (e.g. Peters et al. 1983; Bruck et al. 1989; Chen et al. 1993; Vendroux and Knauss 1998; Pitter et al. 2001; Schreier and Sutton 2002; Wang and Kang 2002; Ma and Guanchang 2003; Pilch et al. 2004; Zhang et al. 2006; Pan and Li 2011; Rechenmacher et al. 2011; Hassan et al. 2017). Currently, the error in displacement measurements can be as small as 0.01 pixel.
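The subset-matching step can be sketched as an exhaustive integer-pixel search. The search maximises the zero-normalised cross-correlation (ZNCC), which is one common matching criterion. The speckle image, subset size and search range are hypothetical. Reaching the sub-pixel accuracy quoted above also requires sub-pixel refinement, for example interpolation of image intensities and subset shape functions, which is omitted here.

```python
import numpy as np

def zncc(a, b):
    """Zero-normalised cross-correlation of two equally sized subsets (1 = perfect match)."""
    a = a - a.mean()
    b = b - b.mean()
    return float((a * b).sum() / np.sqrt((a * a).sum() * (b * b).sum()))

def match_subset(ref, cur, centre, half=10, search=6):
    """Integer-pixel displacement (dy, dx) of the subset centred at `centre` in the
    reference image, found by exhaustive ZNCC search in the current image."""
    cy, cx = centre
    subset = ref[cy - half:cy + half + 1, cx - half:cx + half + 1]
    best, best_score = (0, 0), -np.inf
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = cy + dy, cx + dx
            cand = cur[y - half:y + half + 1, x - half:x + half + 1]
            score = zncc(subset, cand)
            if score > best_score:
                best, best_score = (dy, dx), score
    return best, best_score

rng = np.random.default_rng(0)
ref = rng.random((120, 120))                       # synthetic random speckle pattern
cur = np.roll(ref, shift=(3, -2), axis=(0, 1))     # rigid shift: 3 px down, 2 px left
disp = [match_subset(ref, cur, (y, x))[0] for y in (30, 60, 90) for x in (30, 60, 90)]
print(disp)
```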

DIC speckle pattern and pixel subsets: a continuous deformation (the blue pixel subset is in the reference state and the yellow subset is in the deformed image), b deformed image with discontinuities (Hassan et al. 2017)

The method also allows reconstructing the rotations, which is important in modelling and understanding the mechanical behaviour of particulate, granular and fragmented materials and associated processes of fracturing in these materials (Pasternak et al. 2015; Esin et al. 2017; Hassan et al. 2017).
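Both strain and rotation follow from the displacement gradient: the strain is its symmetric part and the in-plane rotation its antisymmetric part. The minimal sketch below assumes small deformations and a displacement field already interpolated onto a regular grid (arrays indexed by row = y, column = x). It computes finite differences with `numpy.gradient`. The test field combines a uniform 0.1 % stretch with a rigid rotation of 0.002 rad; both values are hypothetical.

```python
import numpy as np

def strain_and_rotation(ux, uy, spacing):
    """Small-strain components and rigid-body rotation from a gridded 2-D
    displacement field (arrays indexed [row = y, column = x])."""
    dux_dy, dux_dx = np.gradient(ux, spacing)   # axis 0 is y, axis 1 is x
    duy_dy, duy_dx = np.gradient(uy, spacing)
    exx = dux_dx
    eyy = duy_dy
    exy = 0.5 * (dux_dy + duy_dx)               # symmetric part: tensor shear strain
    omega = 0.5 * (duy_dx - dux_dy)             # antisymmetric part: rotation (rad, counter-clockwise)
    return exx, eyy, exy, omega

spacing = 0.5                                  # grid spacing (mm), hypothetical
y, x = np.mgrid[0:50, 0:60] * spacing
theta = 0.002
ux = 0.001 * x - theta * y                     # stretch plus small rigid rotation
uy = theta * x
exx, eyy, exy, omega = strain_and_rotation(ux, uy, spacing)
print(exx.mean(), exy.mean(), omega.mean())
```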

The main advantage of the method is the simplicity of the setup and the measurements. However, DIC performance deteriorates in dusty or smoky environments due to unwanted noise (Ha et al. 2009; Debella-Gilo and Kääb 2011). Another limitation is that the original DIC assumes a continuous displacement field. It is therefore not suitable for measuring displacements in the presence of discontinuities or fractures. Special automatic methods have been developed to reconstruct displacements in the presence of discontinuities (e.g. Helm 2008; Chen et al. 2010; Poissant and Barthelat 2010; Nguyen et al. 2011; Rupil et al. 2011; Fagerholt et al. 2013; Hassan et al. 2015; MacNish et al. 2015; Hassan et al. 2017). Roux and Hild (2006) and Roux et al. (2009) proposed a post-processing technique to reconstruct the strain field around cracks. Their technique assumes that the crack opening (displacement discontinuity) is always small, in the subpixel range. It should be noted that a loss of accuracy is to be expected when the displacements are small.

3.4 Smart sensor technology

A smart system is one that can detect changes in its environment and respond in a certain way by altering its mechanical properties, shape or electromagnetic performance (Varadan and Varadan 2000). During the past two decades, these smart abilities have been widely adopted for measurement and monitoring purposes. Smart sensors are permanently embedded in the structure in a predefined configuration. They can provide enhanced monitoring information that traditional sensors cannot obtain. Smart systems include piezoelectric sensors, wireless smart sensors and optical fibre sensors. Comprehensive reviews of smart sensor technologies can be found in Worden and Dulieu-Barton (2004) and Di Sante (2015).

Piezoelectric sensors are made of piezoelectric materials, which exhibit electromechanical coupling between the electric field and the mechanical strain. The piezoelectric sensors are used in impedance-based damage detection application (Park et al. 2003; Kim and Roh 2008; Baptista and Filho 2009; Annamdas and Radhika 2013) and guided waves-based damage detection methods (Park et al. 2006; Sohn and Kim 2010).
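In impedance-based damage detection, the measured electrical impedance signature of a bonded piezoelectric patch is compared with a baseline signature. The difference is often summarised by a scalar damage index. The sketch below uses the root-mean-square deviation (RMSD) of the real part, a commonly used index chosen here for illustration. The frequency sweep and the resonance-peak signatures are synthetic, and damage is emulated as a shift of the resonance peak.

```python
import numpy as np

def rmsd_index(z_baseline, z_current):
    """Root-mean-square deviation damage index (%) between two impedance
    signatures, using their real parts sampled at the same frequencies."""
    z0 = np.real(z_baseline)
    z1 = np.real(z_current)
    return 100.0 * np.sqrt(np.sum((z1 - z0) ** 2) / np.sum(z0 ** 2))

freq = np.linspace(30e3, 60e3, 600)              # Hz, hypothetical sweep

def signature(peak_hz):
    """Hypothetical resistance signature: constant background plus one
    Lorentzian resonance peak; damage is emulated by shifting the peak."""
    return 100.0 + 400.0 / (1.0 + ((freq - peak_hz) / 500.0) ** 2)

baseline = signature(45e3)
indices = [rmsd_index(baseline, signature(45e3 - shift)) for shift in (0.0, 200.0, 1000.0)]
print(indices)
```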

The wireless smart sensors networks are widely replacing the traditional wired systems (Zhao and Guibas 2004; Sazonov et al. 2009; Farrar and Worden 2012). Most wireless sensors implement multiple types of measurements, such as impedance-based monitoring, vibration based crack detection, etc. (Jessica 2005; Chintalapudi et al. 2006).

Optical fibre sensors are widely used in monitoring civil infrastructure. They are used to measure strain, temperature and internal pressure. These sensors are light, highly sensitive and immune to electromagnetic interference. The most prominent example of this technology is the fibre Bragg grating; see for example Fig. 10 (Chi-Young and Chang-Sun 2002; Di Sante 2015).
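The measuring principle of a fibre Bragg grating can be sketched in a few lines. The grating reflects the Bragg wavelength λ_B = 2 n_eff Λ. An axial strain ε shifts this wavelength by approximately Δλ/λ_B = (1 - p_e) ε. We assume an effective photo-elastic coefficient p_e ≈ 0.22, a typical value for silica fibre. The thermal part of the shift is removed by subtracting the shift of a strain-free reference grating, assuming both gratings have the same temperature sensitivity. All numbers are hypothetical.

```python
P_E = 0.22   # effective photo-elastic coefficient; typical for silica fibre (assumed)

def bragg_wavelength(n_eff, period_nm):
    """Bragg condition: the grating reflects lambda_B = 2 * n_eff * Lambda (nm)."""
    return 2.0 * n_eff * period_nm

def microstrain_from_shift(lambda_b_nm, shift_nm, ref_shift_nm=0.0, p_e=P_E):
    """Axial strain (micro-strain) from a Bragg wavelength shift, using
    d(lambda) / lambda_B = (1 - p_e) * strain. The shift of a strain-free
    reference grating is subtracted to remove the thermal contribution."""
    return (shift_nm - ref_shift_nm) / (lambda_b_nm * (1.0 - p_e)) * 1e6

lam = bragg_wavelength(n_eff=1.447, period_nm=535.6)           # about 1550 nm
eps = microstrain_from_shift(lam, shift_nm=1.31, ref_shift_nm=0.10)
print(f"lambda_B = {lam:.2f} nm, strain = {eps:.0f} micro-strain")
```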

Fibre Bragg grating sensor principles (Di Sante 2015)

3.5 Acoustic emission monitoring

Acoustic emission (AE) monitoring is based on capturing the elastic waves emitted by a developing defect. AE is defined as the rapid release of strain energy from localised sources. Such sources include fracture initiation or propagation, plastic deformation and other mechanical processes such as friction. The most prominent feature of AE testing is that it is a continuous process: the structure is observed under existing loading conditions or even during loading (Grosse and Ohtsu 2008). Thus, AE allows real-time monitoring, capturing events as they develop. AE monitoring is used in many areas of engineering, and comprehensive reviews of its applications can be found in the literature. Examples include:

- aerospace engineering (Holford et al. 2017);
- testing of polymer composites (Romhány et al. 2017);
- monitoring of concrete structures (Zaki et al. 2015; Noorsuhada 2016);
- hydraulic fracture propagation in rocks (Bunger et al. 2015).
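The sketch below covers two basic AE processing steps. The first detects a hit as the first threshold crossing of a waveform. The second locates the source on a line between two sensors from the arrival-time difference, using x = (L - v Δt)/2 measured from sensor 1. The sampling rate, wave speed, sensor spacing and burst waveforms are hypothetical, and a constant wave speed is assumed. A fixed threshold picks weaker signals slightly late, which biases the location. Practical systems therefore often use more robust onset pickers.

```python
import numpy as np

def first_arrival(signal, fs, threshold):
    """Time (s) of the first sample whose absolute amplitude exceeds the
    threshold, or None if the threshold is never crossed (no hit)."""
    above = np.flatnonzero(np.abs(signal) > threshold)
    return above[0] / fs if above.size else None

def linear_location(t1, t2, spacing, velocity):
    """Source position (m) measured from sensor 1 on the line to sensor 2:
    t2 - t1 = (spacing - 2x) / velocity  =>  x = (spacing - velocity * (t2 - t1)) / 2."""
    return 0.5 * (spacing - velocity * (t2 - t1))

# Hypothetical setup: 1 m sensor spacing, 4000 m/s wave speed, 10 MHz sampling.
fs, v, L, x_true, t_origin = 10e6, 4000.0, 1.0, 0.3, 20e-6
t = np.arange(4000) / fs
rng = np.random.default_rng(1)

def sensor_record(arrival, amplitude):
    """Synthetic AE waveform: decaying 150 kHz burst starting at `arrival`, plus noise."""
    tau = t - arrival
    burst = amplitude * np.exp(-tau / 50e-6) * np.sin(2 * np.pi * 150e3 * tau)
    return np.where(tau >= 0.0, burst, 0.0) + rng.uniform(-0.01, 0.01, t.size)

s1 = sensor_record(t_origin + x_true / v, 1.0)
s2 = sensor_record(t_origin + (L - x_true) / v, 0.6)   # farther sensor: weaker signal
t1, t2 = first_arrival(s1, fs, 0.1), first_arrival(s2, fs, 0.1)
x_est = linear_location(t1, t2, L, v)
print(f"estimated source position: {x_est:.4f} m")
```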

3.6 Infrared thermographic non-destructive monitoring

Infrared thermographic non-destructive testing is a non-contact monitoring method. It maps the surface temperatures of an object by capturing the infrared (IR) radiation the object emits (Stepinski et al. 2013). The amount of emitted IR radiation is measured by infrared cameras and recorded as thermal images of the object. Damage is detected by examining anomalies in these thermal images.

Thermographic methods are divided into passive and active methods. Passive methods can detect global anomalies and are qualitative in nature. They are used for leakage detection (Inagaki and Okamoto 1997; Lewis et al. 2003), for monitoring heat losses and for structural inspections (Grinzato et al. 2002; Stepinski et al. 2013; Theodorakeas et al. 2015).

In active thermographic monitoring, energy is supplied to the object by an external source, either a thermal wave source or a vibration source. The vibration-based approach is called vibrothermography (Stepinski et al. 2013). In vibrothermography, stress waves from an external or embedded vibration source carry mechanical energy into the object. This energy is converted into thermal energy at discontinuities such as cracks and delaminations. The defect thus becomes a heat source and can be detected directly in the thermal image. Active thermographic methods are used for:

- detecting cracks in ceramic (Kurita et al. 2009), metallic (Kordatos et al. 2012) and composite materials (Fernandes et al. 2015; Mendioroz et al. 2017);
- detecting delamination failure in composite materials (Fernandes et al. 2015);
- welding inspection (Schlichting et al. 2012).
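A very simple way to flag anomalies in a thermal image is sketched below. A pixel is marked when its temperature departs from the image median by more than k robust standard deviations, estimated from the median absolute deviation. The global threshold, the value k = 4 and the synthetic thermogram are assumptions made for illustration. Real thermograms generally need corrections for emissivity, non-uniform heating and background gradients.

```python
import numpy as np

def thermal_anomalies(image, k=3.0):
    """Boolean mask of pixels whose temperature deviates from the image median
    by more than k robust standard deviations (from the median absolute deviation)."""
    med = np.median(image)
    robust_sigma = 1.4826 * np.median(np.abs(image - med))   # MAD -> sigma for Gaussian noise
    return np.abs(image - med) > k * robust_sigma

rng = np.random.default_rng(7)
image = 20.0 + rng.normal(0.0, 0.05, (100, 100))   # synthetic thermogram (deg C)
image[40:45, 60:70] += 1.0                         # hypothetical warm defect region
mask = thermal_anomalies(image, k=4.0)
print(mask.sum(), "anomalous pixels")
```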

3.7 Electromagnetic emission monitoring

This method is based on registering electromagnetic emission from moving fracture tips. The emission has been detected at crack propagation in almost all materials (see review by Gade et al. 2014). The detection methods utilise electric or magnetic dipole sensors and capacitors (Gade et al. 2014). Electromagnetic emission is also observed during other fracture processes, for instance in rock samples under uniaxial compression of high magnitude (Mori et al. 2009).

3.8 Gravitational monitoring

When rock mass deformation produces volumetric strain, the strain changes the rock density and hence the gravity. Although the change is extremely small, gravity meters are sensitive enough to detect it. In addition, the migration of the zone where deformation develops can also be detected (Walsh 1975). Furthermore, failure processes and damage accumulation in rocks and rock masses can produce dilatancy, that is, a non-linear increase in volume. Gravity meters can also measure this phenomenon, which led to the use of gravitational monitoring for fracture and failure monitoring (Fajklewicz 1996). In particular, microgravity variations were found to resemble the microseismic activity in an excavation. However, no correlation was observed between microgravity and the advance of the excavation (Fajklewicz 1996). Note, though, that the elastic volumetric strain associated with the excavation advance is negative (compression). The dilatancy related to fracture processes in the rock, in contrast, produces positive volumetric strain. These two processes could therefore mask each other.

4 Methods of analysis of monitoring data and system identification

Analysis and interpretation of monitoring data usually follow one of two main approaches: model-based methods and signal-based methods. Model-based methods involve constructing a physical model of the system and correlating it with the observation data. Signal-based methods rely on statistical models established by means of machine learning and pattern recognition. A third, hybrid approach uses physical models to supplement experimental data, derive an efficient monitoring strategy and calibrate the signal-based models.

4.1 Signal-based methods

4.1.1 Fuzzy logic

The term ‘fuzzy set’ was introduced by Zadeh (1965), and fuzzy methods have since become popular in various applications. In rock engineering they have been applied in three main ways:

- rock engineering classification systems (Bellman and Zadeh 1970; Nguyen 1985; Nguyen and Ashworth 1985; Juang and Lee 1990; Habibagahi and Katebi 1996; Gokay 1998; Sonmez et al. 2003);
- models for predicting rock mass and material properties (Grima and Babuska 1999; Finol et al. 2001; Kayabasi et al. 2003; Lee et al. 2003);
- prediction of machine performance from the properties of the rock mass and material (den Hartog et al. 1997; Deketh et al. 1998; Alvarez Grima and Verhoef 1999; Alvarez Grima et al. 2000; Basarir et al. 2007; Kucuk et al. 2011).

For example, Basarir et al. (2007) conducted complementary research and improved a previously published rippability grading classification system by applying fuzzy set theory. The resulting fuzzy-based rippability classification system uses expert opinion together with rock properties such as:

- uniaxial compressive strength;
- Schmidt hammer hardness;
- field P-wave velocity;
- average discontinuity spacing.

Fuzzy set theory was chosen mainly to deal with uncertainty and to eliminate bias and subjectivity. In conventional set theory, a given element either belongs or does not belong to a set. In contrast, fuzzy modelling uses membership functions to provide a transition from membership to non-membership of a set. The passage is therefore gradual rather than sharp, as it is in conventional set theory. Designing membership functions is the most critical part of constructing a fuzzy model. Each membership function is associated with a linguistic term such as very weak, weak, moderate, strong or very strong. These linguistic variables can be considered the key to handling ill-defined systems. Fig. 11 shows the input and output membership functions and the related linguistic terms of the fuzzy model. The model was constructed to predict the rippability classes of rock masses. Input and output variables are linked by if–then rules, e.g. “if seismic velocity is Fast (F) and uniaxial compressive strength is Strong (S) and discontinuity is Small (B) and Schmidt hammer is Very Hard (VH) then rating is Difficult (D)”. At the final stage of modelling, the rules are compiled to obtain a crisp value of the output parameter, in this case the rating. The output class is then defined.
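The mechanics of such a fuzzy model can be sketched with a reduced, hypothetical version. It has two inputs (uniaxial compressive strength and seismic velocity) instead of four, and triangular membership functions with made-up ranges. Inference is of the Mamdani type: minimum for 'and', clipping of the output sets, maximum aggregation, and centroid defuzzification to obtain the crisp rating. None of the ranges or rules below are those of Basarir et al. (2007).

```python
import numpy as np

def tri(x, a, b, c):
    """Triangular membership function with feet a, c and peak b; a == b or b == c
    gives a left or right shoulder that stays at 1 beyond the peak."""
    x = np.asarray(x, dtype=float)
    left = np.ones_like(x) if b == a else (x - a) / (b - a)
    right = np.ones_like(x) if c == b else (c - x) / (c - b)
    return np.clip(np.minimum(left, right), 0.0, 1.0)

# Hypothetical linguistic terms (feet and peak of each triangle).
UCS = {"weak": (0, 0, 50), "moderate": (25, 60, 100), "strong": (60, 150, 150)}            # MPa
VEL = {"slow": (0, 0, 1200), "moderate": (800, 1500, 2200), "fast": (1800, 3000, 3000)}   # m/s
OUT = {"easy": (0, 0, 40), "moderate": (20, 50, 80), "difficult": (60, 100, 100)}         # rating
RULES = [  # if UCS is <term> and velocity is <term> then rating is <term>
    ("weak", "slow", "easy"), ("weak", "moderate", "moderate"),
    ("moderate", "moderate", "moderate"), ("moderate", "fast", "difficult"),
    ("strong", "moderate", "difficult"), ("strong", "fast", "difficult"),
]
rating = np.linspace(0.0, 100.0, 1001)

def infer(ucs, vel):
    """Mamdani inference: min for 'and', min implication, max aggregation, centroid."""
    aggregated = np.zeros_like(rating)
    for u_term, v_term, out_term in RULES:
        strength = min(float(tri(ucs, *UCS[u_term])), float(tri(vel, *VEL[v_term])))
        aggregated = np.maximum(aggregated, np.minimum(strength, tri(rating, *OUT[out_term])))
    if aggregated.sum() == 0.0:
        raise ValueError("no rule fires for these inputs")
    return float((rating * aggregated).sum() / aggregated.sum())

print(infer(20, 600), infer(60, 1500), infer(120, 2600))
```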

Membership functions for input variables uniaxial compressive strength (a), Schmidt hammer hardness (b), seismic velocity (c), discontinuity spacing (d) and output variable rating (e) (Basarir et al. 2007)

The validity of the proposed fuzzy model was checked by comparison with existing classification systems, direct ripping production values and expert opinions obtained at the studied sites, as shown in Table 1. The fuzzy logic based system yields the membership degree for the assessed rippability class. The developed system therefore reduces the uncertainty and biased usage that appear in the existing classification systems.

A comprehensive and concise review of rock engineering applications of fuzzy set theory was compiled by Adoko and Wu (2011).

4.1.2 Neural networks

An artificial neural network (ANN) is a computational method used to acquire, represent and compute a mapping from one multivariate space of information to another specified set of data (Garrett 1994; Singh et al. 2013). The ANN imitates the human brain and nervous system. It consists of elements (neurons), each of which receives a number of inputs and generates a single output. No predefined mathematical equation relating the model inputs to the model outputs is needed. This is why ANNs are often preferred over conventional mathematical models and have been used in many areas of engineering (Sawmliana et al. 2007; Shahin and Elchalakani 2008; Cevik et al. 2010; Cabalar et al. 2012; Singh et al. 2013; Alemdag et al. 2016).
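A minimal sketch of the idea follows. A network with one hidden layer of tanh neurons and a linear output neuron learns a mapping from examples alone. It is trained by gradient descent on the mean-squared error, with gradients computed by backpropagation. The target function, network size, learning rate and number of epochs are hypothetical choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
X = np.linspace(-np.pi, np.pi, 64).reshape(-1, 1)
Y = np.sin(X)                                   # hypothetical target mapping

n_hidden = 16
W1 = rng.normal(0.0, 1.0, (1, n_hidden)); b1 = np.zeros(n_hidden)
W2 = rng.normal(0.0, 0.1, (n_hidden, 1)); b2 = np.zeros(1)

def forward(X):
    """Each hidden neuron: weighted sum of inputs + bias, passed through tanh;
    the output neuron is a weighted sum of the hidden outputs."""
    H = np.tanh(X @ W1 + b1)
    return H, H @ W2 + b2

lr, losses = 0.02, []
for epoch in range(5000):
    H, out = forward(X)
    err = out - Y
    losses.append(float(np.mean(err ** 2)))
    # Backpropagation of the mean-squared error.
    g_out = 2.0 * err / len(X)
    gW2 = H.T @ g_out; gb2 = g_out.sum(axis=0)
    g_h = (g_out @ W2.T) * (1.0 - H ** 2)        # derivative of tanh
    gW1 = X.T @ g_h; gb1 = g_h.sum(axis=0)
    # In-place gradient-descent updates (forward() sees the updated arrays).
    W1 -= lr * gW1; b1 -= lr * gb1
    W2 -= lr * gW2; b2 -= lr * gb2
print(f"MSE: initial {losses[0]:.4f}, final {losses[-1]:.4f}")
```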

4.1.3 Adaptive neuro fuzzy inference system (ANFIS)

The adaptive neuro fuzzy inference system (ANFIS) is one of the soft computing modelling techniques. It combines two widely used artificial intelligence methods, neural networks and fuzzy logic. Each of these methods has its own advantages and disadvantages, and ANFIS combines the advantages of both. From neural networks it takes the ability to recognise patterns and adapt to a changing environment. From fuzzy logic it takes the ability to incorporate human knowledge and expertise when dealing with uncertainty and imprecision. Owing to these advantages, ANFIS has been increasingly used in earth science applications complicated by high uncertainty (Gokceoglu et al. 2004; Singh et al. 2007; Iphar et al. 2008; Yilmaz and Yuksek 2009; Dagdelenler et al. 2011; Kucuk et al. 2011; Yilmaz and Kaynar 2011; Basarir et al. 2014; Asrari et al. 2015; Basarir and Karpuz 2016; Fattahi 2016).
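The structure that ANFIS tunes can be sketched as a first-order Sugeno fuzzy model with two inputs, two Gaussian membership functions per input and four rules. In this sketch, the premise (membership) parameters are held fixed. Only the linear consequent parameters are fitted, by least squares. This corresponds to the least-squares half of the usual hybrid learning scheme; the gradient-descent update of the premise parameters is omitted. All parameter values and data are hypothetical.

```python
import numpy as np

def gauss(x, centre, sigma):
    """Gaussian membership function."""
    return np.exp(-0.5 * ((x - centre) / sigma) ** 2)

# Hypothetical premise parameters: "low" and "high" Gaussian sets for each input.
MF_X = [(0.0, 0.5), (1.0, 0.5)]
MF_Y = [(0.0, 0.5), (1.0, 0.5)]

def normalised_firing(x, y):
    """Layers 1-3: membership grades, firing strengths of the 2 x 2 = 4 rules
    (product t-norm), and normalisation so the strengths sum to 1."""
    w = np.stack([gauss(x, *mx) * gauss(y, *my) for mx in MF_X for my in MF_Y], axis=1)
    return w / w.sum(axis=1, keepdims=True)

def design_matrix(x, y):
    """Layer 4 regressors: rule i contributes w_bar_i * (p_i x + q_i y + r_i)."""
    wb = normalised_firing(x, y)
    return np.hstack([wb * x[:, None], wb * y[:, None], wb])

def fit_consequents(x, y, target):
    """Least-squares estimate of the 12 consequent parameters (premise fixed)."""
    theta, *_ = np.linalg.lstsq(design_matrix(x, y), target, rcond=None)
    return theta

def predict(x, y, theta):
    """Layer 5: sum of the rule outputs."""
    return design_matrix(x, y) @ theta

rng = np.random.default_rng(3)
x, y = rng.random(200), rng.random(200)
target = np.sin(np.pi * x) + y ** 2              # hypothetical nonlinear relationship
theta = fit_consequents(x, y, target)
rmse = float(np.sqrt(np.mean((predict(x, y, theta) - target) ** 2)))
print(f"training RMSE = {rmse:.4f}")
```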

Basarir et al. (2017) used ANFIS, one of the evolving soft computing methods, to interpret and analyse MWD (Measurement While Drilling) data. Their aim was to predict the Rock Quality Designation (RQD), the percentage of a core run made up of intact core pieces 10 cm or more in length. The model inputs are the most widely recorded drilling operational parameters: bit load (BL), bit rotation (BR) and penetration rate (PR). The sequential network architecture of the model is shown in Fig. 12a. Fig. 12b shows the membership functions and the corresponding linguistic terms of the input parameters of the developed ANFIS model. Statistical performance indicators show that ANFIS modelling is suitable for obtaining and quantifying complex correlations between the MWD parameters and geotechnical conditions (see Fig. 13). In this particular application, a good correlation with the RQD was obtained. An initial estimate of the RQD can therefore be obtained from the MWD parameters.
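For reference, the sketch below computes RQD itself and two statistical indicators often used to compare measured and predicted values: the coefficient of determination R² and the root-mean-square error. Which indicators Basarir et al. (2017) reported is not restated here. The core piece lengths and RQD values are hypothetical.

```python
import math

def rqd(piece_lengths_cm, run_length_cm):
    """Rock Quality Designation (%): total length of intact core pieces of
    10 cm or longer, divided by the length of the core run."""
    sound = sum(length for length in piece_lengths_cm if length >= 10.0)
    return 100.0 * sound / run_length_cm

def r_squared(measured, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(measured) / len(measured)
    ss_res = sum((m - p) ** 2 for m, p in zip(measured, predicted))
    ss_tot = sum((m - mean) ** 2 for m in measured)
    return 1.0 - ss_res / ss_tot

def rmse(measured, predicted):
    """Root-mean-square error between measured and predicted values."""
    return math.sqrt(sum((m - p) ** 2 for m, p in zip(measured, predicted)) / len(measured))

# Hypothetical 100 cm core run with six recovered pieces (cm).
print(rqd([12, 5, 20, 8, 15, 35], 100))
# Hypothetical measured vs predicted RQD (%).
print(r_squared([80, 60, 40], [78, 63, 41]), rmse([80, 60, 40], [78, 63, 41]))
```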

Sequential network architecture (a) and membership functions (b) for the constructed ANFIS model in Basarir et al. (2017)

Correlations between measured and predicted RQD values (Basarir et al. 2017)

4.2 Model-based computational methods

4.2.1 Finite element method

For the last 50 years, the Finite Element Method (FEM) has dominated the prediction of the behaviour of continuum mechanics systems, from geomechanical structures to human body organs (Zienkiewicz 1965; Martin and Carey 1973; Zienkiewicz et al. 1977; Bathe 1996). FEM uses a computational grid in the form of a mesh of interconnected elements. These are triangular or quadrilateral elements for 2-D problems and tetrahedral or hexahedral elements for 3-D problems. Application of FEM in geomechanics started in the 1960s (Anderson and Dodd 1966; Zienkiewicz and Cheung 1966; Zienkiewicz et al. 1966, 1968). FEM has been used in numerous computational geomechanics studies (Dysli 1983; Broyden 1984; Bardet 1990; Auvinet et al. 1996; Moresi et al. 2003; Yue et al. 2003; Kardani et al. 2013). Important contributions came from O.C. Zienkiewicz, one of the founders of the finite element method (Zienkiewicz et al. 1970, 1999; Pastor et al. 2011).
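The basic FEM workflow is: discretise into elements, compute the element stiffness matrices, assemble the global system, apply boundary conditions and solve. The sketch below illustrates it for the simplest case, a 1-D elastic bar fixed at one end and loaded axially at the other, discretised with linear two-node elements. The material and load values are hypothetical. For this problem, the nodal displacements coincide with the exact solution u(x) = Px/(EA).

```python
import numpy as np

def bar_fem(length, E, A, n_elements, tip_load):
    """Axially loaded elastic bar, fixed at x = 0 and loaded by a point force at
    x = length, discretised with linear two-node elements.
    Returns nodal coordinates and nodal displacements."""
    n_nodes = n_elements + 1
    h = length / n_elements
    k_e = E * A / h * np.array([[1.0, -1.0], [-1.0, 1.0]])   # element stiffness matrix
    K = np.zeros((n_nodes, n_nodes))
    for e in range(n_elements):                             # assembly into the global matrix
        K[e:e + 2, e:e + 2] += k_e
    f = np.zeros(n_nodes)
    f[-1] = tip_load                                        # nodal load vector
    u = np.zeros(n_nodes)
    u[1:] = np.linalg.solve(K[1:, 1:], f[1:])               # boundary condition u(0) = 0
    return np.linspace(0.0, length, n_nodes), u

x, u = bar_fem(length=2.0, E=30e9, A=0.01, n_elements=8, tip_load=1e5)
print(u[-1])   # compare with P * L / (E * A)
```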

Considerable progress has been made, and specialised finite element procedures have been developed for computational geomechanics (Broyden 1984; Auvinet et al. 1996; Moresi et al. 2003; Kardani et al. 2013). Nevertheless, finite element discretisation in geomechanics is recognised to face significant challenges, or even limitations (Zhang et al. 2000; Yue et al. 2003; Luan et al. 2005; Zhao et al. 2011; Kardani et al. 2013; Komoroczi et al. 2013; Pramanik and Deb 2015; Zhou et al. 2015b). These include:

1. Creating finite element meshes of geological structures with complex geometry is tedious and time-consuming. Mesh creation has been the subject of significant research effort, but the existing solutions require a watertight surface defining the geometry. Obtaining such a surface is a challenge in its own right. In typical engineering applications these surfaces are defined using CAD, whereas in geomechanics they usually need to be extracted from images and other geological data.
2. Geological structures may undergo large deformations/strains (e.g. due to landslides, earthquakes etc.). Accounting for them typically requires computationally expensive remeshing when element distortion degrades the solution accuracy.
3. Discontinuities due to fragmentation, cracks and geological faults typically require remeshing. Remeshing is needed to ensure solution convergence in the crack area and to change the computational grid as cracks/discontinuities form.
4. Fluid flow through soil and other geological structures must be modelled.

To overcome these challenges, methods that do not rely on finite element discretisation have been proposed. These include discrete element methods (DEM) and meshless (also referred to as mesh-free or element-free) methods.

4.2.2 Discrete element method

The Discrete Element Method (DEM), also referred to as the Distinct Element Method, was introduced by Cundall and Strack (1979) to model the mechanical behaviour of granular materials. The main ingredients of a discrete element model are the scheme that integrates the equations of motion of each particle and the interaction forces that govern the inter-particle contacts. The original version developed by Cundall and Strack uses rigid discs or spheres to model the individual elements, but more complex shapes have since been introduced by Karrech et al. (2008), Tao et al. (2010) and Lu et al. (2015). As discontinuities/fragmentation in DEM can be produced through the loss of adhesive contact between the particles, some researchers regard DEM as an effective tool for modelling rocks undergoing cracking/fragmentation (Onate and Rojek 2004; Azevedo and Lemos 2006; Komoroczi et al. 2013) and layered and granular geological materials (Kruggel-Emden et al. 2007; Buechler et al. 2013). One of the key difficulties is that the desired macroscopic constitutive behaviour cannot be defined directly; it has to be obtained by formulating an appropriate contact law (force–displacement and damping or friction) that governs interactions between the particles. In practice, this tends to involve extensive calibration, in which the input parameters of the contact law are derived by quantitative comparison between modelling and experimental results (Marigo and Stitt 2015). Changes in the type of load acting on the analysed medium may require new calibration; in particular, modelling of viscous behaviour poses a major challenge (Komoroczi et al. 2013). Another major limitation of DEM modelling is its demand on computational time. Computational procedures that accelerate convergence under repeated loading conditions have been suggested (Karrech et al. 2007).
Parallel computing offers new horizons for efficient DEM modelling with large numbers of degrees of freedom (Shigeto and Sakai 2011).
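The two ingredients named above, explicit integration of each particle's equation of motion and a contact law, can be illustrated with a minimal sketch. It assumes two rigid discs in head-on (1-D) contact, a linear spring-dashpot normal law with hypothetical stiffness `kn` and damping `cn`, no friction or adhesion, and semi-implicit Euler time stepping; it is written in the spirit of Cundall and Strack (1979) but is not their formulation. In a real model, parameters such as `kn` and `cn` are the ones that have to be calibrated against experiments.

```python
from dataclasses import dataclass


@dataclass
class Disc:
    x: float  # centre position along the line of contact (m)
    v: float  # velocity along that line (m/s)
    r: float  # radius (m)
    m: float  # mass (kg)


def normal_force(a: Disc, b: Disc, kn: float, cn: float) -> float:
    """Linear spring-dashpot contact force exerted by disc a on disc b
    (positive pushes b in +x). Assumes a lies to the left of b."""
    overlap = (a.r + b.r) - (b.x - a.x)
    if overlap <= 0.0:
        return 0.0  # no contact, and no adhesion in this simple law
    rel_v = b.v - a.v  # negative while the discs approach each other
    force = kn * overlap - cn * rel_v  # elastic spring + viscous dashpot
    return max(force, 0.0)  # a purely repulsive contact cannot pull discs together


def simulate(a: Disc, b: Disc, kn: float, cn: float, dt: float, steps: int) -> None:
    """Explicit (semi-implicit Euler) integration of both discs' equations of motion."""
    for _ in range(steps):
        f = normal_force(a, b, kn, cn)
        a.v -= f / a.m * dt  # equal and opposite contact forces
        b.v += f / b.m * dt
        a.x += a.v * dt
        b.x += b.v * dt


# Usage: two 1 kg discs approaching each other at 1 m/s each (illustrative values)
a = Disc(x=0.0, v=1.0, r=0.01, m=1.0)
b = Disc(x=0.03, v=-1.0, r=0.01, m=1.0)
simulate(a, b, kn=1e5, cn=20.0, dt=1e-5, steps=5000)
```

After the collision the discs separate with momentum conserved and a lower relative speed than before impact, the energy having been dissipated by the dashpot. The time step has to resolve the short contact duration, which is one reason why DEM is computationally demanding.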

4.2.3 Meshless methods of continuum mechanics

Meshless methods are a family of methods of computational mechanics in which the equations of continuum mechanics are spatially discretised over the analysed domain using a cloud of nodes (where forces and displacements are calculated), with no predefined connectivity between the nodes.

Smoothed particle hydrodynamics (SPH) is regarded as the first meshless method. It uses the strong form of the equations of continuum mechanics (Gingold and Monaghan 1977; Lucy 1977; Monaghan 1992). SPH (often in combination with DEM) has been applied in geomechanics to model ductile failure and hydrofracturing (Komoroczi et al. 2013), rock blasting (Fakhimi and Lanari 2014), intersecting discontinuities and joints in geomaterials (Pramanik and Deb 2015), fast landslides (Cleary and Prakash 2004; Pastor et al. 2011), geophysical flows (Cleary and Prakash 2004) and fluidised soils (Rodriguez-Paz and Bonet 2005; Pastor et al. 2009). However, several important shortcomings of SPH, such as tensile instability and accuracy inferior to that of the finite element method, have been recognised in the literature (Belytschko et al. 1996). Therefore, substantial research effort has been devoted to meshless methods that use the Galerkin weak form of the equations of continuum mechanics.
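In SPH, field quantities are approximated by kernel-weighted sums over neighbouring particles rather than by element shape functions. A minimal 1-D sketch of the density summation ρ_i = Σ_j m_j W(x_i − x_j, h), assuming the cubic spline kernel of Monaghan (1992), an illustrative particle spacing and smoothing length `h`, and a brute-force O(N²) neighbour search; the momentum equation, which SPH discretises in the same way, is not shown.

```python
from typing import List, Sequence


def cubic_spline_1d(r: float, h: float) -> float:
    """Cubic spline (M4) kernel in 1-D, normalised to unit integral."""
    q = abs(r) / h
    sigma = 2.0 / (3.0 * h)  # 1-D normalisation constant
    if q < 1.0:
        return sigma * (1.0 - 1.5 * q**2 + 0.75 * q**3)
    if q < 2.0:
        return sigma * 0.25 * (2.0 - q) ** 3
    return 0.0  # compact support of radius 2h


def sph_density(x: Sequence[float], m: Sequence[float], h: float) -> List[float]:
    """Density summation rho_i = sum_j m_j W(x_i - x_j, h), brute force O(N^2)."""
    return [sum(mj * cubic_spline_1d(xi - xj, h) for xj, mj in zip(x, m)) for xi in x]


# Usage: 50 equally spaced particles with reference density rho0
dx, rho0 = 0.01, 1000.0
x = [i * dx for i in range(50)]
m = [rho0 * dx] * len(x)  # particle "mass" per unit cross-section in 1-D
rho = sph_density(x, m, h=1.2 * dx)
```

Interior particles recover ρ0 to within a fraction of a percent, while the first particle, which has neighbours on one side only, is underestimated by roughly 20%: kernel truncation at boundaries is one of the accuracy issues that SPH formulations have to deal with.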

Meshless (element-free) Galerkin methods use Moving Least Squares (MLS) shape functions, while the spatial integration is typically conducted over a background grid that does not have to conform to the boundary of the analysed continuum (Belytschko et al. 1994, 1996, 1997). The nodes where the displacements are calculated are independent of the background grid (Belytschko et al. 1996; Murakami et al. 2005; Horton et al. 2010). This separation of the interpolation and integration grids allows almost arbitrary placement of the nodes throughout the analysed continuum, which is well suited to automated generation of computational grids for complicated geometries. However, restrictions on the ratio of the number of integration points to the number of nodes apply (Horton et al. 2010). An alternative approach is to use the nodes as vertices of the integration cells. Although this approach has been used in some commercial codes (Hallquist 2005), it imposes finite element-like constraints on the positions of the nodes and is therefore not regarded as the method of choice for Galerkin-type meshless discretisation, see Fig. 14.

Meshless discretisation of a cylinder (diameter 0.1 m, height 0.1 m) with almost arbitrarily placed nodes (.) and regularly spaced background integration points (+). In this discretisation, the number of integration points is twice the number of nodes at which the displacement is computed. Only 2D orthogonal views are provided because a 3D visualisation results in a very unclear cloud of points (Horton et al. 2010)
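The MLS construction can be sketched in 1-D. The sketch assumes a linear basis p(x) = [1, x], the cubic spline weight function often used in element-free Galerkin codes, and a hypothetical support radius; it illustrates plain MLS, not the MMLS formulation of Joldes et al. (2015a). The shape functions are φ_I(x) = p(x)ᵀ A(x)⁻¹ w_I(x) p(x_I), with the moment matrix A(x) = Σ_I w_I(x) p(x_I) p(x_I)ᵀ.

```python
from typing import List, Sequence


def cubic_weight(s: float) -> float:
    """Cubic spline weight, s = |x - x_I| / support radius; zero for s > 1."""
    s = abs(s)
    if s <= 0.5:
        return 2.0 / 3.0 - 4.0 * s**2 + 4.0 * s**3
    if s <= 1.0:
        return 4.0 / 3.0 - 4.0 * s + 4.0 * s**2 - (4.0 / 3.0) * s**3
    return 0.0


def mls_shape_functions(x: float, nodes: Sequence[float], radius: float) -> List[float]:
    """1-D MLS shape functions phi_I(x) with linear basis p = [1, x]."""
    w = [cubic_weight((x - xi) / radius) for xi in nodes]
    # Symmetric 2x2 moment matrix A = sum_I w_I p(x_I) p(x_I)^T
    a11 = sum(w)
    a12 = sum(wi * xi for wi, xi in zip(w, nodes))
    a22 = sum(wi * xi * xi for wi, xi in zip(w, nodes))
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-14:
        raise ValueError("singular moment matrix: too few nodes cover x")
    # c = A^{-1} p(x), with p(x) = [1, x]
    c1 = (a22 - a12 * x) / det
    c2 = (a11 * x - a12) / det
    # phi_I = c^T w_I p(x_I)   (A is symmetric)
    return [wi * (c1 + c2 * xi) for wi, xi in zip(w, nodes)]


# Usage: five nodes on [0, 1] with a hypothetical support radius of 0.6
nodes = [0.0, 0.25, 0.5, 0.75, 1.0]
phi = mls_shape_functions(0.37, nodes, radius=0.6)
phi_at_node = mls_shape_functions(0.5, nodes, radius=0.6)
```

Partition of unity and linear reproduction hold to round-off, yet the shape function of the node at x = 0.5, evaluated at that very node, is only about 0.55 rather than one. This missing Kronecker delta property is what complicates the imposition of essential boundary conditions.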

As MLS shape functions facilitate smooth interpolation of displacement and pressure fields, Galerkin-type meshless methods can be applied to soil–water coupling problems (Murakami et al. 2005). Discontinuities/cracks are introduced in such methods by modifying the influence domains of the affected nodes (nodes located on opposite sides of a discontinuity cannot interact with each other), using either the visibility criterion (Belytschko et al. 1996; Jin et al. 2014) or the manifold method (Shi 1992), without any changes to the computational grid. Therefore, Galerkin-type meshless methods have been applied to modelling damage of rocks with pre-existing/initial cracks (Luan et al. 2005) and crack propagation in brittle rocks (Zhang et al. 2012a) and concrete (Belytschko et al. 2000), as well as in ductile (Simkins and Li 2006) materials.
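The visibility criterion amounts to a line-of-sight test: a node is removed from the influence domain of an evaluation point if the straight segment joining them crosses a crack. A minimal 2-D sketch, assuming straight crack segments and circular supports of uniform radius; the function names are hypothetical, and degenerate cases (a line of sight passing exactly through a crack end) are ignored.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _orient(p: Point, q: Point, r: Point) -> float:
    """Twice the signed area of triangle pqr (> 0 if counter-clockwise)."""
    return (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])


def segments_cross(a: Point, b: Point, c: Point, d: Point) -> bool:
    """True if segments ab and cd properly intersect (touching/collinear cases ignored)."""
    return (_orient(c, d, a) * _orient(c, d, b) < 0.0
            and _orient(a, b, c) * _orient(a, b, d) < 0.0)


def visible_nodes(x: Point, nodes: Sequence[Point], radius: float,
                  cracks: Sequence[Tuple[Point, Point]]) -> List[int]:
    """Indices of nodes whose circular support contains x and whose
    line of sight to x is not blocked by any crack segment."""
    result = []
    for i, node in enumerate(nodes):
        if (node[0] - x[0]) ** 2 + (node[1] - x[1]) ** 2 > radius ** 2:
            continue  # outside the influence domain
        if any(segments_cross(x, node, c0, c1) for c0, c1 in cracks):
            continue  # crack blocks the line of sight: no interaction across it
        result.append(i)
    return result


# Usage: a vertical crack from (0, -1) to (0, 0.2) hides node 1 from the point (-0.2, 0)
nodes = [(-0.5, 0.0), (0.5, 0.0), (0.0, 0.5)]
crack = ((0.0, -1.0), (0.0, 0.2))
print(visible_nodes((-0.2, 0.0), nodes, 1.0, [crack]))  # [0, 2]
```

Node 2 stays visible because its line of sight passes above the crack tip. Since the computational grid is never modified, a propagating crack is represented simply by extending the list of crack segments.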

Although the MLS shape functions and background spatial integration give Galerkin-type meshless methods versatility and robustness, they also pose some challenges. As the MLS shape functions do not possess the Kronecker delta property (that is, φ_I(x_J) = δ_IJ does not hold in general: a shape function need not equal one at its own node and zero at all other nodes), they introduce inaccuracies when essential boundary conditions are imposed. To overcome this challenge, coupling of meshless and finite element discretisations, with essential boundary conditions imposed through finite element shape functions (Belytschko et al. 1996; Krongauz and Belytschko 1996; Zhang et al. 2014), and Lagrange multipliers (Belytschko et al. 1994, 1996) have been used. Recently, Chowdhury et al. (2015) proposed and verified the application of Modified Moving Least Squares (MMLS) with polynomial (quadratic) bases (Joldes et al. 2015a) and regularised weight functions for imposition of essential boundary conditions within the Galerkin-type meshless computation framework. Because the MLS shape functions are not polynomials and their local supports may not align with the integration cells, the error of background integration using Gaussian quadrature is difficult to quantify. Unlike in the Finite Element Method, where the shape functions are polynomials, a Gaussian quadrature rule that guarantees the integration accuracy cannot be selected a priori. An adaptive Gaussian integration scheme has been proposed to overcome this challenge (Joldes et al. 2015b).
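The integration difficulty can be reproduced with a one-dimensional stand-in: an integrand with a kink that does not coincide with a cell boundary, as happens when the edge of an MLS support falls inside an integration cell. The sketch below uses a generic recursive-bisection adaptive Gauss–Legendre rule with 3 points per sub-interval and a hypothetical tolerance; it illustrates the idea of adaptivity and is not the specific scheme of Joldes et al. (2015b).

```python
import math
from typing import Callable

# 3-point Gauss-Legendre rule on [-1, 1]; exact for polynomials up to degree 5
_GL3 = ((-math.sqrt(0.6), 5.0 / 9.0), (0.0, 8.0 / 9.0), (math.sqrt(0.6), 5.0 / 9.0))


def gauss3(f: Callable[[float], float], a: float, b: float) -> float:
    """Fixed 3-point Gauss-Legendre quadrature of f over [a, b]."""
    mid, half = 0.5 * (a + b), 0.5 * (b - a)
    return half * sum(w * f(mid + half * t) for t, w in _GL3)


def adaptive_gauss(f: Callable[[float], float], a: float, b: float,
                   tol: float = 1e-10, max_depth: int = 50) -> float:
    """Bisect [a, b] recursively until the rule on each cell agrees with the sum
    of the rules on its two halves to within the local tolerance."""

    def refine(a: float, b: float, whole: float, tol: float, depth: int) -> float:
        m = 0.5 * (a + b)
        left, right = gauss3(f, a, m), gauss3(f, m, b)
        if depth >= max_depth or abs(left + right - whole) < tol:
            return left + right  # return the finer of the two estimates
        return (refine(a, m, left, 0.5 * tol, depth + 1)
                + refine(m, b, right, 0.5 * tol, depth + 1))

    return refine(a, b, gauss3(f, a, b), tol, 0)


# Usage: an integrand with a kink at x = 1/3, inside the single cell [0, 1]
def kinked(x: float) -> float:
    return abs(x - 1.0 / 3.0)


exact = 5.0 / 18.0
fixed = gauss3(kinked, 0.0, 1.0)
adaptive = adaptive_gauss(kinked, 0.0, 1.0)
```

A single 3-point rule on [0, 1] misses the exact value 5/18 by about 0.01, even though the same rule integrates quintic polynomials exactly; the adaptive version refines only the sub-intervals containing the kink and converges to round-off.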

For example, Fig. 15 presents a meshless (element-free) Galerkin-type computational model of a cantilever beam (length L = 48 mm, depth D = 12 mm) subjected to bending due to a parabolically varying traction P = 1000 N acting on the left-hand edge of the model. The right-hand edge is rigidly constrained. A detailed description of this example is provided in Chowdhury et al. (2017). The model was implemented using the Meshless Total Lagrangian Explicit Dynamics (MTLED) method developed by Horton et al. (2010), Chowdhury et al. (2015) and Joldes et al. (2015a, c), with Modified Moving Least Squares (MMLS) shape functions. The essential boundary conditions (at the rigidly constrained edge of the beam) were imposed using the recently developed Essential Boundary Conditions Imposition in Explicit Meshless (EBCIEM) method of Joldes et al. (2017). The adaptive dynamic relaxation algorithm of Joldes et al. (2011) was applied to ensure convergence to the steady (static) state. To facilitate accurate spatial integration, a very dense background integration grid consisting of 2500 rectangular integration cells (a 100 × 25 grid) was used, with 10 × 10 Gauss quadrature applied in each integration cell.
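Dynamic relaxation obtains a static solution by integrating fictitious, damped equations of motion explicitly until the motion dies out. A minimal sketch for a linear system K u = f with a unit mass matrix, assuming the eigenvalue bounds `lam_min` and `lam_max` of K are known in advance; the adaptive algorithm of Joldes et al. (2011) instead estimates such quantities during the iterations and works with the nonlinear internal forces of MTLED, neither of which is reproduced here. The pseudo time step h and mass-proportional damping c below are one classical choice: h just below the stability limit 2/√λ_max and c close to critical damping of the lowest mode.

```python
import math
from typing import List, Sequence, Tuple


def dynamic_relaxation(K: Sequence[Sequence[float]], f: Sequence[float],
                       lam_min: float, lam_max: float,
                       tol: float = 1e-10, max_iter: int = 100000) -> Tuple[List[float], int]:
    """Solve K u = f (K symmetric positive definite, unit mass matrix) by explicit
    central-difference integration of u'' + c u' + K u = f until the residual vanishes.

    Central differences give u_{n+1} = u_n + beta (u_n - u_{n-1}) + alpha (f - K u_n)
    with alpha = 2 h^2 / (2 + c h) and beta = (2 - c h) / (2 + c h)."""
    n = len(f)
    h = 2.0 / math.sqrt(lam_max + lam_min)  # pseudo time step, below 2 / sqrt(lam_max)
    c = 2.0 * math.sqrt(lam_max * lam_min) / math.sqrt(lam_max + lam_min)  # ~critical for lowest mode
    alpha = 2.0 * h * h / (2.0 + c * h)
    beta = (2.0 - c * h) / (2.0 + c * h)
    u_prev = [0.0] * n
    u = [0.0] * n
    for it in range(max_iter):
        residual = [f[i] - sum(K[i][j] * u[j] for j in range(n)) for i in range(n)]
        if max(abs(r) for r in residual) < tol:
            return u, it  # out-of-balance force negligible: static state reached
        u_next = [u[i] + beta * (u[i] - u_prev[i]) + alpha * residual[i] for i in range(n)]
        u_prev, u = u, u_next
    raise RuntimeError("dynamic relaxation did not converge")


# Usage: a chain of 10 unit springs fixed at both ends, unit load at every node
n = 10
K = [[2.0 if i == j else (-1.0 if abs(i - j) == 1 else 0.0) for j in range(n)] for i in range(n)]
f = [1.0] * n
lam_min = 4.0 * math.sin(math.pi / (2 * (n + 1))) ** 2      # exact extreme eigenvalues
lam_max = 4.0 * math.sin(n * math.pi / (2 * (n + 1))) ** 2  # of this tridiagonal K
u, iterations = dynamic_relaxation(K, f, lam_min, lam_max)
```

The iteration converges in a few hundred steps at most for this small system. Estimating the lowest eigenvalue on the fly, rather than supplying it, is what makes a dynamic relaxation scheme adaptive.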

Computation of deformations of a cantilever beam subjected to bending using the Galerkin-type Meshless Total Lagrangian Explicit Dynamics (MTLED) method developed by Horton et al. (2010), Chowdhury et al. (2015), Joldes et al. (2015a, c) and Chowdhury et al. (2017), with the Modified Moving Least Squares (MMLS) shape functions of Chowdhury et al. (2017). The figure shows the differences (in mm) between the analytical solution of Timoshenko and Goodier (1970) and the deformations computed using MTLED. For a beam deflection of 3 mm, the maximum differences are below 2.5 × 10⁻³ mm. Beam length L = 48 mm, depth D = 12 mm, force P = 1000 N, Young's modulus E = 3000 Pa, Poisson's ratio ν = 0.3

The results indicate very good agreement between the deformations computed using the meshless method (MTLED) and the analytical solution of Timoshenko and Goodier (1970): the maximum differences are below 0.1% of the beam deflection.
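The analytical reference solution is available in closed form. A minimal sketch of the plane-stress displacement field in the form commonly used in meshless benchmarks, assuming unit thickness (I = D³/12), the beam occupying 0 ≤ x ≤ L and −D/2 ≤ y ≤ D/2, the supported end at x = 0 and a parabolic shear traction with resultant P (positive in +y) at x = L. This coordinate frame is mirrored with respect to Fig. 15, and u_x from this solution is not identically zero along x = 0.

```python
from typing import Tuple


def timoshenko_cantilever(x: float, y: float, P: float, E: float, nu: float,
                          L: float, D: float) -> Tuple[float, float]:
    """Plane-stress displacements (u_x, u_y) of a cantilever of unit thickness and depth D,
    supported at x = 0 and loaded at x = L by a parabolic shear traction with resultant P."""
    I = D**3 / 12.0  # second moment of area for unit thickness
    ux = -P * y / (6.0 * E * I) * ((6.0 * L - 3.0 * x) * x
                                   + (2.0 + nu) * (y**2 - D**2 / 4.0))
    uy = P / (6.0 * E * I) * (3.0 * nu * y**2 * (L - x)
                              + (4.0 + 5.0 * nu) * D**2 * x / 4.0
                              + (3.0 * L - x) * x**2)
    return ux, uy
```

The deflection at the loaded end on the neutral axis, `timoshenko_cantilever(L, 0.0, P, E, nu, L, D)[1]`, equals the Euler–Bernoulli value P L³/(3EI) plus the shear correction (4 + 5ν) P D² L/(24 E I), which is small for slender beams. Evaluating the function at the nodal positions gives the reference field against which computed displacements are compared.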

As already mentioned, although Galerkin-type meshless methods allow almost arbitrary placement of the nodes throughout the analysed continuum, restrictions on the ratio of the number of integration points to the number of nodes (Horton et al. 2010) constrain automated grid generation. Overcoming these restrictions (i.e. making meshless computational grids provide stable and converged results for any placement of the nodes) remains an unsolved challenge, but the modification of the MLS shape functions and the adaptive integration proposed by Joldes et al. (2015a, b) may be regarded as a first step towards a solution. The ultimate outcome could be the capability to create computational geomechanics models directly from images of geomaterials and geological structures, a concept used by Yue et al. (2003) to create finite element models of geomaterials. The effectiveness of a very similar approach (neuroimages as computational models) has been demonstrated in biomedical engineering when creating human body organ models for surgical simulation (Zhang et al. 2012b, 2013).

4.2.4 Isogeometric analysis

As discussed above, creating finite element meshes for computational geomechanics models involves extracting geometry from images and then generating the actual mesh. The geometry representation obtained through segmentation differs from that used in finite element analysis. This not only makes mesh generation difficult and often tedious, but also leads to inaccuracies, as the mesh only approximates the geometry extracted from the images. This problem is not limited to computational geomechanics. It has been recognised as a major challenge in biomedical engineering, where patient-specific computational biomechanics models need to be created from medical images (Wittek et al. 2016). Hughes et al. (2005) pointed out that differences in geometry representation between Computer Aided Design (CAD) systems and finite element analysis lead to excessively long times (up to 80% of the entire analysis time) being devoted to mesh generation in major engineering applications. In isogeometric analysis, this problem is solved by replacing the finite element polynomial representation of geometry with a representation based on NURBS (Non-Uniform Rational B-Splines) (Hughes et al. 2005), a standard technology in CAD systems. This involves creating NURBS elements that represent the geometry exactly and allow direct translation of the CAD geometric model into a computational model. Isogeometric analysis has been applied in geomechanics to model unsaturated flow in soils (Nguyen et al. 2014) and domains containing inclusions that exhibit elasto-plastic behaviour (Beer et al. 2016). The need to create surfaces (represented by NURBS) remains a major limiting factor for the application of isogeometric analysis in situations where CAD geometric models are not readily available.
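The property that motivates NURBS in isogeometric analysis, exact representation of conic sections that polynomial shape functions can only approximate, can be checked in a few lines. A minimal sketch of NURBS curve evaluation with the Cox–de Boor recursion, using the textbook quadratic representation of a quarter of the unit circle (control points (1, 0), (1, 1), (0, 1) with weights 1, √2/2, 1); the function names are hypothetical and no isogeometric analysis is performed.

```python
import math
from typing import List, Sequence, Tuple


def bspline_basis(i: int, p: int, u: float, knots: Sequence[float]) -> float:
    """Cox-de Boor recursion for the i-th B-spline basis function of degree p."""
    if p == 0:
        if knots[i] <= u < knots[i + 1]:
            return 1.0
        # close the last non-empty knot span so that u = knots[-1] is covered
        if u == knots[-1] and knots[i] < knots[i + 1] == knots[-1]:
            return 1.0
        return 0.0
    value = 0.0
    if knots[i + p] != knots[i]:
        value += (u - knots[i]) / (knots[i + p] - knots[i]) * bspline_basis(i, p - 1, u, knots)
    if knots[i + p + 1] != knots[i + 1]:
        value += ((knots[i + p + 1] - u) / (knots[i + p + 1] - knots[i + 1])
                  * bspline_basis(i + 1, p - 1, u, knots))
    return value


def nurbs_point(u: float, ctrl: Sequence[Tuple[float, float]], weights: Sequence[float],
                p: int, knots: Sequence[float]) -> Tuple[float, float]:
    """Point on a NURBS curve: C(u) = sum_i N_ip(u) w_i P_i / sum_i N_ip(u) w_i."""
    num_x = num_y = den = 0.0
    for i, ((px, py), w) in enumerate(zip(ctrl, weights)):
        nw = bspline_basis(i, p, u, knots) * w
        num_x += nw * px
        num_y += nw * py
        den += nw
    return num_x / den, num_y / den


# Usage: quadratic NURBS representation of a quarter of the unit circle
CTRL = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
KNOTS = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
W = [1.0, math.sqrt(2.0) / 2.0, 1.0]
arc: List[Tuple[float, float]] = [nurbs_point(k / 10, CTRL, W, 2, KNOTS) for k in range(11)]
```

Every evaluated point lies on the unit circle to round-off. With all weights set to 1, the same control polygon gives a polynomial (Bézier) arc whose radius deviates by about 6% at the midpoint, which is the kind of geometric approximation error that isogeometric analysis avoids.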

4.2.5 Inverse methods

Inverse modelling is a vast research area that is applicable to every engineering discipline. In this paper, we mainly focus on the aspects related to computational monitoring. Unlike forward modelling, inverse modelling is data-driven (Zhang et al. 2015). While a forward model is typically used in the design phase to make early decisions and facilitate pre-feasibility studies, inverse modelling accompanies the service life of the structure. Inverse modelling takes the monitored behaviour data as inputs, expresses them as driving variables in a mathematical, empirical or numerical model, and delivers predictions of efficiency, sustainability, stability and so on. Among the comprehensive reviews related to inverse problems in computational modelling of mechanical and civil engineering systems, it is worth mentioning the work of Friswell and Penny (1992), who presented an overview of inverse methods for damage location and detection. Inverse models have been used in civil engineering applications for energy saving and control in modern buildings (Heo and Zavala 2012), fault detection and diagnosis in building management systems (Yoshida et al. 2001), modelling of leakage through a rockfill dam foundation (Zhou et al. 2015a), and monitoring of fatigue in steel bridge decks (Farreras-Alcover et al. 2017). In resource engineering, inverse approaches have been used to model variable-density water flow (Bastani et al. 2010), investigate aquifer behaviour through pumping data (Samuel and Jha 2003), produce a data-driven approximation of the implicit relation between transmissivity and hydraulic head (Garcia and Shigidi 2006), design up-scaled borehole facilities for thermal energy storage (Tordrup et al. 2017), and monitor the health of offshore jacket platforms (Haeri et al. 2017).
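A minimal example of the inverse direction is identifying a stiffness parameter from monitored response. The sketch assumes an Euler–Bernoulli cantilever as the forward model, δ = P L³/(3EI), tip deflections measured at several load levels, and an ordinary least-squares fit; all names and numbers are hypothetical. Practical inverse problems in monitoring are usually nonlinear and ill-posed, and need regularisation and uncertainty quantification that this sketch omits.

```python
from typing import Sequence


def forward_tip_deflection(P: float, E: float, L: float, I: float) -> float:
    """Forward model: Euler-Bernoulli tip deflection of an end-loaded cantilever."""
    return P * L**3 / (3.0 * E * I)


def identify_youngs_modulus(loads: Sequence[float], deflections: Sequence[float],
                            L: float, I: float) -> float:
    """Inverse model: least-squares estimate of E from monitored (load, deflection) pairs.

    The forward model is linear in the compliance s = L^3 / (3 E I), i.e. delta = s * P,
    so the least-squares fit through the origin is s = sum(P * delta) / sum(P^2)."""
    s = sum(p * d for p, d in zip(loads, deflections)) / sum(p * p for p in loads)
    return L**3 / (3.0 * I * s)


# Synthetic "monitoring data": a hypothetical steel cantilever with +/-1 % measurement error
E_true, L, I = 2.0e11, 2.0, 8.0e-6  # Pa, m, m^4
loads = [100.0, 200.0, 300.0, 400.0, 500.0]  # N
errors = [1.01, 0.99, 1.005, 0.995, 1.0]
measured = [forward_tip_deflection(P, E_true, L, I) * e for P, e in zip(loads, errors)]
E_est = identify_youngs_modulus(loads, measured, L, I)
```

The estimate lies within about 0.1% of the value used to generate the data. In continuous monitoring the same fit could be repeated as new measurements arrive, and a drift in the identified stiffness could then serve as an indicator of damage.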