Abstract

Fracture morphology characterization in broken glass panes is crucial for designing laminated safety glass (LSG) in civil engineering. Verifying completely broken LSG systems requires destructive sampling, increasing costs and hindering development. Therefore, to determine the residual load-bearing capacity, the link between the pre-fracture characteristics and the fracture morphology must be known. However, when the crack propagation needs to be directly captured with high-speed imaging, conventional methods are no longer sufficient for detecting cracks. To enable such investigations, we propose a novel machine learning framework for crack segmentation in high-speed imaging that addresses the complexity of glass fracture and minimises the required labour costs. In this study, the crack propagation of a sample was recorded and analysed at 2,000,000 images per second. The results showcase accuracies surpassing 97% while requiring only two labeled images for training, thus streamlining practical implementation. Furthermore, we show the method's robustness to the extent that hyperparameter tuning becomes unnecessary. Instead, we offer guidelines for selecting the most crucial hyperparameters depending on the problem. Our method offers a promising approach for non-linear temporal interpolation of noisy images, with implications for various applications extending beyond glass fracture analysis.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Avoid common mistakes on your manuscript.

1 Introduction

In civil engineering the characterisation of the fracture morphology in broken glass panes is a necessary step towards the prediction of residual load bearing capacity. Currently, when building e.g. overhead glazing with laminated safety glass (LSG), the residual load bearing capacity must be proven experimentally according to DIN18008-1. Furthermore, the determination of the fracture pattern of thermally toughened safety glass (EN 12150–1, 2015) is also based on destructive testing. To enable a more time and cost-efficient development of LSG products, pre-fracture characteristics need to be linked to fracture morphology to estimate the residual load bearing capacity. The fracture morphology is dependent, among other things, on the residual stresses in the glass and its thickness (Barsom 1968).

With increasing thermal pre-stressing and thus residual stresses, the resulting fragments of a glass product get finer (Kerkhof 2011, Pourmoghaddam 2019). If a crack reaches the tensile zone in the core, the internal energy of the glass is released, because the equilibrium state is disturbed (Schneider 2016). The cracks will then propagate critically in the tensile zone since the tensile stresses pulls the crack tip open (Yoffe 1951, Irwin 1966). In this process, accumulated energy is dissipated with the creation of new glass surfaces at the crack sides (Griffith 1921). Due to the fact, that only a defined amount of energy can be transformed into new glass surfaces, the crack branches into 2 or more separate cracks, to accommodate the energy surplus (Nielsen 2016). Since higher pre-stressing also means that there is more internal energy to release via fractures, more branching occurs, resulting in a much finer fracture morphology and smaller splinters.

The fracturing process of glass is a highly complex phenomenon, that is actively being researched for many years (Kerkhof 2011, Schneider 2001, Pour-Moghaddam 2019). The fields of expertise are mostly separated into two parts, where one part is concerned with the propagation of individual cracks (Kerkhof 2011, Clark 1966) and the other with the crack front (Takahashi 1999, Nielsen 2016). While the mechanics of propagating cracks are investigated thoroughly, for a more generalised analysis a lot of experiments must be conducted. Furthermore, to get a better understanding of cracks in glass, investigations with high-speed imaging are necessary (Nielsen 2016). This allows further insights to be gained into the dynamic behaviour of cracks and their propagation. However, with a large set of images manual crack identification is not feasible. Under controlled conditions with good lighting, e.g. in a scanner, the cracks and fragments can be automatically recognised and analysed. When it comes to high-speed imaging, the identification process is much more complex, because the image quality decreases rapidly as the frame rate increases.

However, with a deeper understanding of the relationships between parameters such as prestress, glass dimensions, installation conditions, loading scenarios, and crack propagation, costly component tests could potentially be replaced by predictive models. To enable automated analysis of cracks in thermally tempered glass with minimal human intervention, we propose a framework for crack segmentation in high-speed imaging that requires only two manually labeled samples. In this study, the framework is tested on a high-speed recording of cracks propagating through a specimen. However, the basic procedure should also be applicable to other specimens, thicknesses and pre-stressing.

2 Data acquisition

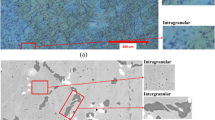

Crack images can be analysed automatically with e.g. fracsuite (Bohmann 2024) once crack propagation is complete, because specialized scanners (e.g. Cullet Scanner by SoftSolution) generate high-contrast images of such glass plates. In such images cracks appear consistently dark, which enables an easier identification (Fig. 1a). For this study however, the crack propagation was recorded at a frame rate of 2 million images per second with a Shimadzu HPV-X2 camera. In the crack propagation tests, the fracture was initiated using a pointed punch, that struck the glass plates in a well-defined manner at a location next to an edge. The additional energy added by the pointed punch and its position generally influence the crack morphology. However, this influence can be neglected in the context of crack detection. The camera was pointed at a location in some distance to the impact, to capture areas that are less influenced by it. The field of view that is recorded by the high-speed camera was 25 mm in height and 40 mm in width. Due to the high framerate, the image resolution is limited to 250 by 400 pixels resulting in a pixel size of 0.01 mm2.

Because of the limited resolution, various problems arise such as poor lighting conditions and low contrast. The poor lighting is mostly caused by the fact, that a high-power flash must be used to provide enough light. This also results in shadows and pointy reflections (Fig. 1b). In addition, the proportion of noise is significantly higher than with scans. It is therefore not possible to segment the cracks using established algorithms that are used for scanned images, because, among other things, the distinction between shadows, dirt and cracks cannot be considered.

3 Methods

To analyse the crack propagation between all images, the cracks must be detected pixel by pixel. Dividing an image pixel by pixel into classes or objects is called semantic segmentation. In our case, the classes are "crack" and "no crack". In the case of the scanned images, the image can be semantically segmented using well established methods (Bohmann 2024). However, high-speed images are usually too complex for standard computer vision methods like the Canny edge detector. A supervised learning method is suitable for achieving targeted results (Géron 2022). This means a model is trained on manually created labels (Fig. 2). Hence, the user supervises the training of the model indirectly by providing the labeled examples. For unstructured data (images), convolutional neural networks (CNNs) or visual transformers are commonly used. However, since transformers typically require a large dataset, we restrict ourselves to CNNs in this study.

Approaches such as this are used were originally developed for biomedical images (Ronneberger 2015). For biomedical problems, the aim is for a model to achieve reliable results for new patients without the model having to be retrained. This is called generalisation ability and requires a very large amount of data. The training data must cover virtually all possible cases that could influence the data. In our case, the training data would therefore have to cover a wide range of prestressing levels, manufacturers, cameras, lighting and much more. However, only a small number of samples need to be analysed in order to gain basic insights into crack growth. Therefore, instead of a model that covers all cases, a framework for analysing the crack propagation of a single sample is required.

In this study, we propose a concept comparable to nonlinear interpolation. Linear interpolation would not be sufficient for this, because not all cracks propagate linearly from the origin and can branch out. The model can be trained with only two images. One with complete crack propagation and one before forming any cracks. This means that only the first and the last image has to be labelled manually and all images in between can be analysed automatically (Fig. 3). To analyse the reliability of the method, all high-speed images were labelled manually for one experiment. A test set containing a total of 106 labelled images were therefore available for this study.

Workflow of the proposed framework. Only the first and last image (dark orange) are manually labelled (dark green) and trained on. After training, the cracks are predicted for all intermediate images (light orange). The resulting predictions (light green) can be regarded as the result of non-linear interpolation

3.1 Data pre-processing

The high-speed images contain distortions, markings and noise that can interfere with crack detection (Fig. 4a, c). By subtracting the background from the original crack image:

and scaling it:

a much cleaner image can be generated (Fig. 4b). Although it is free of most imperfections and markings, it has a significantly higher noise level (Fig. 4). However, the model can theoretically also create something like the difference itself and learn to distinguish between crack, background, and noise. To investigate the impact of pre-processed images, all combinations of crack image, difference, and background are examined as input in this study.

In addition to the high-speed images, scans of a large number of fully fractured glass panes could be used in this study (Fig. 5). Since thermally toughened glass was used in both cases, the scanned fracture patterns should be sufficiently similar to those in the high-speed images, making them suitable for transfer learning. Transfer learning involves leveraging knowledge gained from training on one task to improve learning or performance on a related but different task. In our case, train on the scanned images and then retrain on the high-speed images or just evaluate them. The big advantage would be that the labels can be generated automatically for the scanned images. Transfer learning would therefore make it possible to train a model with less high-speed images or even just scanned images and thus fully automate crack segmentation. However, the cracks are always clearly black (Fig. 5a), while cracks in the high-speed images can be both light and dark (depending on the reflection of the light). Therefore, the brightness of the images was randomly inverted so that the shape of the cracks and not the colour of the cracks is learned (Fig. 5b). The automatically generated labels (Fig. 5d) were visually adapted to the manually created labels using the dilation function of OpenCV (Bradski 2000).

3.2 Model

A neural network architecture inspired by U-Net (Ronneberger 2015) was selected for this study, originally designed for semantic segmentation of biomedical images. While newer architectures exist, U-Net remains a popular choice (Mo 2022, Yu 2023, Siddique 2021). We focus solely on U-Net to examine the impact of hyperparameters (e.g. number of filters), transfer learning and other training parameters. In U-Net, the encoder path compresses information as resolution decreases, while the decoder path restores resolution to the original dimensions (Fig. 6). The encoder captures details at different scales, and the decoder integrates them contextually, with skip connections merging intermediate results. Each pooling step halves the resolution in the centre of the model, while the number of filters there doubles (cf. Fig. 6a and b).

Exemplary model definitions for different hyperparameters. Colored boxes correspond to the following layers: Convolution blocks (light purple), residual blocks (yellow), max pooling (red), concatenate (green), transposed convolution (blue) and reshaping skip connection (purple arrow). Dimension of the feature maps at the corresponding boxes with width w at the bottom right, feature maps F at the bottom and height h at the left. Plotted with PlotNeuralNet (Iqbal 2018)

Our U-Net implementation using TensorFlow (2024) combines convolution blocks (CBs), residual blocks (RBs) (He 2016), max pooling layers, concatenation layers, and transposed convolution layers (Fig. 6). A CB includes a convolution layer with Rectified Linear Unit (ReLU) activation and a group normalization layer (Wu 2018). The first normalization layer has a single group, while subsequent layers have 16 channels per group. This setup normalizes input data individually in the first layer and CNN feature maps optimally in subsequent layers (Wu 2018). Group normalization reduces sensitivity to hyperparameters like batch size, streamlining the hyperparameter space. RBs are implemented akin to the 50-layer or larger variants of ResNet (He 2016). Last layer is a single-filter convolution layer with sigmoid activation.

3.3 Data augmentation

Since training is performed with only the smallest possible data set, classic data augmentation techniques were used to effectively enlarge the data set. Random flip for both directions, random rotate, random brightness (max delta 15%) and random contrast (max delta 15%) were used.

In addition, a variety of methods from noise to blur were tested for the transfer learning dataset to make it look more similar to the high-speed images. Most of the methods could not improve the results. Only random invert was used in the end, because the cracks in the high-speed images range from black to white, while the cracks in the transfer learning dataset are exclusively black.

3.4 Training parameters

Neural Networks are trained in epochs. During each epoch, the model is fed input data, makes predictions, compares those predictions to the actual target values, and updates its parameters accordingly to minimize the loss function. With each epoch the model's understanding of the data gets refined and the performance improved. A batch size of 4 was used by default for the training. Only for the larger models with 64 or 128 filters was the batch size automatically reduced depending on the available memory. The calculation was based on 160 samples per epoch.

The training was stopped 5 epochs after the results on the validation data no longer improved by 0.5 percentage points. After stopping training, the learnt parameters were taken from the last epoch an improvement was achieved. If the validation accuracy did not improve by 0.1 percentage points, the learning rate was reduced by a third. In the case of transfer learning, 8 epochs were initially trained on the transfer learning data set, each with one eighth of the transfer learning data. Then training was continued with a reduced learning rate on the high-speed images as described above.

Four models were trained for each of the hyperparameter combinations analysed (filters, pooling steps, layers per pooling step and input channels). This allows the random initialisation of the models to be considered and their robustness to be examined. A total of 500 models were trained and tested.

3.5 Metric

When dealing with datasets like crack segmentation, where cracks represent a small portion of the image, accuracy alone can be deceptive. For instance, if a model predicts no cracks across the entire image, it might still achieve a high accuracy because most of the image contains no cracks. Instead, precision and recall offer more insightful metrics. Precision measures the proportion of correctly identified crack pixels out of all the pixels predicted as crack, indicating how well the model avoids falsely labeling non-crack pixels as cracks. Recall, on the other hand, assesses the model's ability to capture all the actual crack pixels present in the dataset, showing how sensitive the model is in detecting genuine crack features. In addition to precision and recall, it's essential to acknowledge that these metrics are dependent on a threshold for classifying predictions as positive or negative. Adjusting this threshold can influence the trade-off between precision and recall. Therefore, the precision-recall curve provides insights into how the model performs across various threshold values. However, the Area Under the Curve in the Precision-Recall curve (AUC-PR) serves as a valuable aggregate measure that considers the model's performance across all possible thresholds. By encapsulating the trade-off between precision and recall, AUC-PR offers a comprehensive assessment of the model's discriminatory power without being sensitive to a specific threshold. This makes AUC particularly useful for evaluating models in scenarios with imbalanced datasets, such as crack segmentation tasks, where precision and recall alone may not provide a complete picture of performance (Géron 2022). In summary, AUC-PR can thus be used equivalently for accuracy, only that AUC-PR is robust to imbalanced data.

4 Results and discussion

To ensure that the method can be used flexibly and robustly, hyperparameters of both the model and the learning process need to be examined. This involved analysing how model hyperparameters can be selected and what data is required for training. The results were plotted with the default settings of seaborn (Waskom 2021), unless otherwise described.

4.1 Model hyperparameters

Four models for each combination of the following hyperparameter options were trained: 16–128 filters, 2–7 pooling steps, 1–3 layers. We trained the models with image 53 and 106 combined in the training and validation set (CombVal). Additionally, every training and validation set always includes an image before crack propagation to prevent hallucinations. Subsequently the models were tested on all remaining images. In general, the model’s performance mostly increases with complexity in the form of pooling steps and filters. The performance of the models only decreases from three pooling steps with 128 filters and six pooling steps with 64 filters (Fig. 7a). In both cases, these hyperparameters result in approximately the same number of parameters and therefore complexity. From this it can be concluded that there is (presumably depending on the problem) an upper model complexity limit above which the risk of overfitting increases. With few pooling steps, the results on early crack propagation (images 0 to 25) and late crack propagation (images 50 to 106) are closer to each other (Fig. 7b). With medium crack propagation, the results initially improve and then decrease again but mostly stay the same. A high number of pooling steps allows the model to see much of the image in its receptive field at once. As a result, the visually larger crack nodes can be recognised during late crack propagation, which is why the results here only improve with the size of the receptive field. In the case of crack propagation before the training examples, however, it is possible that parts of a crack node are "missing" and therefore the model does not recognise the entire crack node. With less pooling steps, the model has a smaller field of view and learns smaller patterns. These smaller patterns are therefore also better for recognising cracks in early stages and incomplete crack nodes.

We opted for four pooling steps and 64 filters so that early and late crack propagation can be easily recognised. No clear optimum can be seen for the number of layers per pooling step (Fig. 8a). For models with few pooling steps, additional layers appear to be particularly advantageous. This could be due to the fact that the effective receptive field is indirectly increased by the successive filtering. Here, the variance in results tends to increase with the number of layers. For this reason and due to the better computational efficiency, we trained with only one layer per pooling step for the remaining study.

With these settings and the CombVal sets, four models were trained for each plausible input channel combination. The best results were achieved with a combination of the original crack image, the difference (crack image minus background) and the background as input (Fig. 8b). The combinations of any two inputs perform similarly well, but slightly worse when all three inputs are utilized. As expected, the original crack image as the only input performs poorly. Surprisingly, however, the difference performs similarly poorly as the original. Here it seems to be crucial that the model itself can learn to distinguish between crack and background. The combination of three inputs was therefore selected for the remaining analyses.

4.2 Early stopping

In general, training is very stable (Fig. 9a). The results on the test split initially achieve comparable results to the validation split. From epoch four onwards, the results on the test data improve more slowly than in the other two splits. A plateau is reached from epoch six to seven. In the case of overfitting, the results on the test set would deteriorate from this point onwards, but here they remain constant. However, the results can vary depending on when the training is stopped. We had the best results on the test split with the training stopped at an accuracy of approx. 99.85% on the validation split (Fig. 9b). However, if the training was stopped at, for example, 99.0% accuracy on the validation split, the results on the test split would be less than 0.5 percentage points worse. The same applies if training is terminated when there is no improvement (in this case 99.99% accuracy on the validation split). This seems to apply to our selection of hyperparameters (one layer per pooling step, four pooling steps and 64 filters) as well as to all other hyperparameter combinations previously analysed. The method is therefore robust without risk of overfitting.

4.3 Transfer learning

Especially with such a small data set, transfer learning is considered the gold standard to achieve a better generalisation of the model (Zhuang 2020). In our case, however, the transfer learning data set does not lead to an improvement on the test split (Fig. 10a). The training process on the target data after transfer learning is comparable to the training without transfer data. The model also achieves 100% AUC-PR on the training and validation split. However, the results on the test split are on average 1.5 percentage points worse after transfer learning compared to no transfer learning at all (Fig. 10b).

This indicates that the data are too different. Normally, the random initialisation of neural networks scatters the risk of local minima and thus minimises the probability of overfitting. Initialisation by transfer learning means that this scattering is no longer present and instead, in our case, the risk of overfitting increases. Hence, automatically generated labels are not sufficient and human labeling is necessary.

4.4 Training data

The performance of a model depends heavily on what data was available during training and which criterion was used to end training. For this purpose, the image with final crack growth (100%) and an image with intermediate crack growth with a temporal position (TP) of 5–90% were selected, trained and tested. Three scenarios were then analysed: a) final image for training and intermediate image for validation (IntVal), b) final image for validation and intermediate image for training (FinVal) and c) a combination of both images for validation and training (CombVal).

The best results are generally achieved with CombVal (Fig. 11a). There seems to be a maximum with an intermediate image of about 50%. This could be because it contains more cracks in contrast to earlier images and more crack tips in contrast to later images. It is surprising that with IntVal the results are very strongly dependent on the position of the intermediate image and with FinVal almost not at all. Thus, the validation data seems to be much more important than the training data. This could be attributed to the fact that training is terminated based on the results of the validation data. Even in cases where the training data does not completely represent the fully fractured pattern, it is possible for the model to (by chance) achieve very good results on the validation data. Therefore, if the validation data represents the final image and training is terminated based on it, the learned model parameters will be those that (by chance) effectively recognize all cracks (as the final image contains all cracks, albeit at a late stage). Conversely, when very good training data is available but a very early image is used for validation, the learned model parameters will be those that best recognize cracks at this particularly early stage, but likely not those at a later stage.

Even if the position of the intermediate image in CombVal does not make a major difference to the overall result (Fig. 11a), it does make a difference depending on which image is being analysed. The intermediate image functions here practically like a support point (Fig. 11b). Depending on the problem, the results can therefore be selectively improved. The peak at 0% and the valley at 5–10%, which appear in all models, are remarkable. At 0%, no cracks are present and therefore all cracks are automatically recognised. At 5–10%, only a few cracks are visible. This means that even if only a few pixels were (not) detected incorrectly, the relative error is very high. This systematic error should therefore also remain present in similar problems.

With more than one image in the validation data, the results can be further improved (Fig. 12). The validation images were selected at the same distance from each other and from the boundary. CombVal again performs best (Fig. 12a), even if the improvement here is limited to a few percentage points. The greatest improvement is achieved with IntVal. Here, however, a total of 5 images are required (4 validation images and one training image) to achieve a comparable result to CombVal with 2 images. With FinVal, the number of training data increases and only the final crack image is used for validation. Although the training data quality has improved significantly here, the result remains the same due to the unchanged validation data set. This again shows how crucial the validation data set is.

In the case of CombVal, as the number of images increases, the results approach a straight line from 98% accuracy at 0% crack propagation to 100% accuracy at 100% crack propagation (Fig. 12b). This could be because the cracks in the early crack growth are even narrower and therefore generally more difficult to detect.

In order to analyse the plausibility of the results, individual images were randomly examined using a random model that was trained with the previously selected hyperparameters and CombVal. For this purpose, images from the first half of crack propagation were selected, as the results tended to be worse here (Fig. 12b).

All relevant cracks were detected in all images and all fragments were correctly separated (Fig. 13). Large errors occur mainly in the area of the first 5 images. But there are still hardly any cracks visible. Only thereafter, longer cracks become visible and are thus better recognized. So as long as very short cracks are not explicitly intended to be detected, the weaknesses in the extremely early crack growth can be neglected.

Prediction results of early crack propagation with the test image number on the left corresponding to the test image number in the plot above. The model was trained with two manually labeled images (53 and 106) as well as an image before crack propagation. Thus, the image before crack propagation automatically has a completely black label

In some cases, errors can even be found in the labels through the predictions of the model. In image 40, a crack was detected by the model on the left side of the image, which had been overlooked in the label (Fig. 13). The actual performance of the model is therefore higher in reality than shown in the results of this study. Such labelling errors can usually be avoided when creating e.g. two training samples, but inevitably creep in when creating e.g. 104 test samples.

5 Conclusion

In this study, we propose a novel semantic segmentation framework for high-speed images of thermally toughened glass. Our study aimed to address the challenge of detecting cracks in glass through high-speed imaging, thereby enabling subsequent analysis of fracture morphology. Traditionally, verifying completely broken laminated safety glass (LSG) systems in post fracture limit state (DIN 18008–1, 2020) and the determination of the fracture pattern test of fully tempered glass (thermally toughened safety glass) (EN 12150–1, 2015) relied on destructive sampling, leading to increased costs and development delays. By leveraging high-speed imaging technology, we sought to overcome this limitation and streamline the process of fracture analysis in the future. Following are the key findings of our study:

-

Accuracies (AUC-PR) of 97% were achieved with only two manually labeled images.

-

The method showed robust performance with most tested hyperparameter combinations, yielding accuracies over 94%. Therefore, fine-tuning hyperparameters was deemed nonessential. Only the number of pooling steps should be manually selected so that the maximum receptive field can capture the largest crack node at once. The number of filters can be gradually increased until it fails plausibility checks with the naked eye.

-

If the performance is insufficient in certain temporal segments (e.g. early crack propagation), additional labeled images can be added to the training and validation data as support points.

-

The risk of overfitting was low because the training and test results plateaued almost immediately after reaching the optimum. Optimal training stopping points, compared to stopping training when there was no more improvement in validation results, resulted in only a 1.5 percentage point improvement.

-

We recommend leveraging all available data for both training and validation, with a separate test dataset necessary only if initial results fail a visual plausibility check.

-

Model input should capture background and temporal changes, regardless of pre-processing methods.

-

Transfer learning led to worsened results, suggesting interpolation does not require strong generalization, and random initialization may be more effective.

The methodology should be able to detect all cracks visible to the human eye. Cracks that do not deflect or absorb light to a visible extent cannot be detected by the proposed methodology. Accordingly, only surface cracks that are large enough for the camera resolution can be detected in opaque materials. However, in the case of glass, even smaller cracks than the camera resolution can be detected, provided there is a sufficiently large change of the image overall compared to before the crack propagation. This is the case, for example, when the crack along with its shadow is large enough for the camera resolution. The method should therefore also be applicable to annealed glass. We have shown that the methods proposed here enable the rapid assessment of dynamic crack propagation, offering a cost-effective means to analyze crack distribution and morphology across various levels of thermally pre-stressed glass. Code and data have been published (Riedel 2024).

References

Barsom, J.: Fracture of tempered glass. Journal of the American Ceramic Society 51.2, 75–78 (1968)

Bohmann, L.: fracsuite (masterrelease). Zenodo (2024) 10.5281/zenodo.10758208

Bradski, G.: The OpenCV library. Dr. Dobb’s J. Softw. Tools Prof. Program. 25(11), 120–123 (2000)

Clark, A.B.J., Irwin, G.R.: Crack-propagation behaviors. Exp. Mech. 6(6), 321–330 (1966)

DIN 18008–1: Glass in Building – Design and construction rules – Part 1: Terms and general bases. Beuth, Berlin (2020)

EN 12150–1: Glass in building – Thermally toughened soda lime silicate safety glass – Part 1: Definition and description. Beuth, Berlin (2015)

Fineberg, J.: The dynamics of rapidly moving tensile cracks in brittle amorphous material. Dyn. Fract. Mech. (2006). https://doi.org/10.1142/9789812773326_0003

Géron, A.: Hands-on machine learning with Scikit-Learn, Keras, and TensorFlow. O'Reilly Media, Inc. (2022)

Griffith, A.A.: The phenomena of rupture and flow in solids. Philosophical transactions of the royal society of London. Series A, containing papers of a mathematical or physical character 221(582–593), 163–198 (1921)

He, K., et al.: Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778 (2016)

Iqbal, H.: HarisIqbal88/PlotNeuralNet v1.0.0 (v1.0.0). Zenodo (2018) https://doi.org/10.5281/zenodo.2526396

Irwin, G.R.: Analysis of stresses and strains near the end of a crack traversing a plate. J. Appl. Mech. 24(3), 361–364 (1957)

Kerkhof, F.: Bruchentstehung und Bruchausbreitung im Glas. Springer Berlin Heidelberg: Glastechnische Fabrikationsfehler, 523–587 (2011)

Mo, Y., et al.: Review the state-of-the-art technologies of semantic segmentation based on deep learning. Neurocomputing 493, 626–646 (2022)

Nielsen, J.H., Olesen, J.F., Stang, H.: The fracture process of tempered soda-lime-silica glass. Exp. Mech. 49(6), 855–870 (2009). https://doi.org/10.1007/s11340-008-9200-y

Nielsen, J.H., et al.: Remaining stress-state and strain-energy in tempered glass fragments. Glass Struct. Eng. 2(1), 45–56 (2016)

Pour-Moghaddam, N., et al.: Relationship between strain energy and fracture pattern morphology of thermally tempered glass for the prediction of the 2D macro-scale fragmentation of glass. Glass Struct. Eng. 4(2), 257–275 (2019). https://doi.org/10.1007/s40940-019-00112-4

Riedel, H.: hjhriedel/crack-high: v0.1-alpha (v0.1-alpha). Zenodo (2024) https://doi.org/10.5281/zenodo.10821855

Ronneberger, O., Fischer, P., & Brox, T.: U-net: Convolutional networks for biomedical image segmentation. In Medical image computing and computer-assisted intervention–MICCAI 2015: 18th international conference, Munich, Germany, October 5–9, 2015, proceedings, part III 18, 234–241. Springer International Publishing (2015)

Schneider, J., et al.: Glasbau: Grundlagen, Berechnung. Springer-Verlag, Konstruktion (2016)

Schneider, J.: Festigkeit und Bemessung punktgelagerter Gläser und stoßbeanspruchter Gläser, Ph.D. dissertation, Technische Universität Darmstadt (2001)

Siddique, N., et al.: U-net and its variants for medical image segmentation: a review of theory and applications. IEEE Access 9, 82031–82057 (2021)

Takahashi, K.: Fast fracture in tempered glass. Key Eng. Mater. 166, 9–18 (1999)

TensorFlow Developers: TensorFlow (v2.15.1). Zenodo (2024) https://doi.org/10.5281/zenodo.10798587

Waskom, M.L.: Seaborn: statistical data visualization. J. Open Sour. Softw. 6(60), 3021 (2021). https://doi.org/10.21105/joss.03021

Wu, Y., He, K.: Group normalization. In: Proceedings of the European conference on computer vision (ECCV), pp. 3–19 (2018)

Yoffe, E.: The moving griffith crack. Phil. Mag. 42, 739–750 (1951)

Yu, Y., et al.: Techniques and challenges of image segmentation: a review. Electronics 12(5), 1199 (2023)

Zhuang, F., et al.: A comprehensive survey on transfer learning. Proc. IEEE 109(1), 43–76 (2020)

Acknowledgements

Funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation)–426807020

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Riedel, H., Bohmann, L., Bagusat, F. et al. Crack segmentation for high-speed imaging: detection of fractures in thermally toughened glass. Glass Struct Eng 9, 117–130 (2024). https://doi.org/10.1007/s40940-024-00252-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40940-024-00252-5