Abstract

As potent approaches for addressing computationally expensive optimization problems, surrogate-assisted evolutionary algorithms (SAEAs) have garnered increasing attention. Prevailing endeavors in evolutionary computation predominantly concentrate on expensive continuous optimization problems, with a notable scarcity of investigations directed toward expensive combinatorial optimization problems (ECOPs). Nevertheless, numerous ECOPs persist in practical applications. The widespread prevalence of such problems starkly contrasts the limited development of relevant research. Motivated by this disparity, this paper conducts a comprehensive survey on SAEAs tailored to address ECOPs. This survey comprises two primary segments. The first segment synthesizes prevalent global, local, hybrid, and learning search strategies, elucidating their respective strengths and weaknesses. Subsequently, the second segment furnishes an overview of surrogate-based evaluation technologies, delving into three pivotal facets: model selection, construction, and management. The paper also discusses several potential future directions for SAEAs with a focus towards expensive combinatorial optimization.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Combinatorial optimization refers to searching for the optimal value of an objective function within a finite but often large set of candidate solutions. Without loss of generality, a minimization combinatorial optimization problem (COP) can be formulated as

where \(f(\varvec{x})\) is the objective function, \(\varvec{x}=[x_1,..., x_n]\) is a vector containing n discrete decision variables, X is the search space, \(g(\varvec{x})\) is the constraint function. Unlike continuous optimization problems, the search space of COPs is a finite set rather than a continuous space [1]. In different COPs, discrete decision variables are encoded into various data structures. For example, the decision variables in neural architecture search (NAS), traveling salesman problem (TSP), and feature selection (FS) are ordinal integers representing the type of convolutional blocks [2], the sequence of visited cities [3], and binary variables indicating whether features are selected [4], respectively. Other typical discrete decision variables include categorical variables [5], trees [6], and graphs [7, 8]. While these data structures exhibit dissimilarity, it is imperative to note their shared characteristic of discreteness. Due to the discrete and non-convex nature, many COPs are NP-hard [9]. While addressing constraints in combinatorial optimization problems constitutes a significant research topic, it does not represent the principal focus of this article. Consequently, we will refrain from elaborating extensively on constraint handling within this discourse.

With the high computational efficiency, heuristic algorithms are frequently employed to tackle COPs in various applications, such as intensity-modulated radiotherapy planning [10], large-scale waste collection [11], task scheduling and multi-agent-path-finding [12], etc. Heuristic algorithms endeavor to identify optimal solutions by employing specific heuristic strategies. For example, the genetic algorithm (GA) [13] employs the principle of survival of the fittest, particle swarm optimization (PSO) [14] uses a position update strategy guided by personal and global experience, and the local search [15] follows a neighborhood expansion mechanism. The optimization process of heuristic algorithms needs to evaluate a large number of candidate solutions for finding out the optimal solution [16].

However, in some practical problems, such as the trauma system design [17, 18], site optimization of fast charging stations [19], bus stops spacing [20], and neural architecture search [21], the objective function \(f(\varvec{x})\) is black-box and its evaluation is computationally expensive. For instance, when evolutionary algorithms are used to handle neural architecture search problems, each individual is transformed to a neural network with the corresponding architecture through the mapping from integer-encoded genotype to phenotype, and then the weights of the network are initialized and iteratively trained based on the training data. Afterwards, the performance of the network on the test data is used as the fitness value. Such a single fitness evaluation typically takes several hours to days. As a result, the potential promising architecture may not be found through evolutionary algorithms within a limited computational budget due to the time-consuming fitness evaluation. Such issues are usually called expensive combinatorial optimization problems (ECOPs). When heuristic algorithms are applied to tackle ECOPs, the search process demands numerous objective evaluations. Notably, each evaluation may extend from several minutes to several hours. The cumulative count of these resource-intensive objective evaluations is a critical determinant of the overall duration of the optimization procedure. Given the considerable computational expenditure involved, conventional heuristic methodologies prove unsuitable for the efficient resolution of ECOPs.

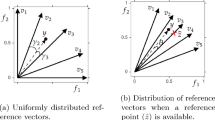

Surrogate-assisted evolutionary algorithms (SAEAs) have been widely investigated to tackle expensive optimization problems in which surrogate models are constructed based on historically real-evaluated solutions and their objective values [16, 22, 23]. Compared with real objective evaluations, surrogate-assisted evaluations are much cheaper in terms of computational cost. To reduce the computation time for optimizing expensive problems, surrogate models are used to replace in part the computationally expensive objective evaluations. Therefore, constructing proper surrogate models is crucial for SAEAs [24, 25]. Concerning the role of surrogate models, the landscape of SAEAs is characterized by two distinct categories: regression-based and classification-based SAEAs. Fig. 1 shows the basic framework of these two kinds of SAEAs. Regression-based SAEAs use surrogate models to predict the objective values of candidate solutions. In contrast, classification-based SAEAs regard environmental selection as a classification problem and build surrogate models to predict whether a candidate solution enters the next generation.

Surrogate models need to be expressive enough to catch the complexity of the objective function while being slightly sophisticated to ease the learning when only a small number of real-evaluated solutions is available. Generally, machine learning models with prediction ability can be adopted to construct surrogate models, such as Gaussian process (GP) [26, 27], random forest (RF) [18, 21], radial basis function network (RBFN) [24, 28], and k-nearest neighbour (KNN)[29]. Over the past years, SAEAs have become increasingly popular for various expensive optimization problems, including but not limited to high-dimensional [30,31,32], constrained [33, 34], and multi-/many-objective [35, 36], etc. However, most research in this field centers around expensive continuous optimization problems, with very little attention given to ECOPs. More importantly, continuous SAEAs cannot be directly used to combinatorial domains [37].

As SAEAs have achieved many successes in expensive continuous optimization, how to use SAEAs to solve ECOPs should also be widely studied. Unfortunately, the existing studies are mainly limited to expensive continuous optimization. A comprehensive survey is worth investigating for promoting and supporting the studies of recent trends for using SAEAs to handle ECOPs. To fill this gap, we aim to provide a comprehensive survey of the state-of-the-art work with discussions of the open issues and challenges for future work. We expect this survey to attract attention from researchers working on evolutionary computation to address new challenges in ECOPs.

When SAEAs are used to handle ECOPs, a couple of challenges need to be addressed. The first challenge is searching for the optimum within a combinatorially huge decision space. COPs encountered in practical applications frequently exhibit the characteristics of high-dimensional decision variables [38, 39]. An ample decision space can pose a challenge as it would require exploring a wide range of solutions to avoid getting stuck in local optima. Even if the surrogate models’ predictions are accurate, SAEAs still need to design efficient heuristic search strategies to quickly find the optimal solution. Several strategies have been proposed to improve the search ability of heuristic algorithms in discrete space, such as designing problem-specific variation operators, integrating local and global search strategies into one framework, and introducing learning models in population evolution. These strategies will be detailed in “Search strategy” Section. The second challenge is constructing accurate surrogate models. With the discrete nature of ECOPs, it is prickly to derive a discrete surrogate model that can accurately learn the structure of a combinatorial problem [40]. While there are continuous surrogate models with well-studied properties, the analysis of discrete surrogate models should be more developed. In “Surrogate-based evaluation” Section, we will introduce solutions to address this challenge from three aspects: model selection, construction, and management strategies. Model selection refers to selecting the most suitable one from numerous machine learning models. Model construction refers to how to improve prediction accuracy based on the selected model, such as ensemble models, multi-fidelity models, and others. Model management strategy involves selecting sampled data to update the trained model.

The remainder of this paper is organized as follows. In “Search strategy”Section introduces the commonly used search strategies in expensive combinatorial optimization. In “Surrogate-based evaluation” Section reviews the surrogate model techniques. In “Future challenges and research” Section proposes and discusses some future research directions and “Conclusions” Section gives the conclusions finally.

Search strategy

Search strategies in SAEAs are utilized to discover promising solutions. Various search strategies have been embedded into SAEAs to cooperate with surrogate models to find the optimal solution, which can be divided into four categories: global, local, hybrid, and learning-based strategies. This section briefly summaries widely used search strategies for handling ECOPs.

Global search strategy

The global search strategy achieves the goal of global exploration by simultaneously investigating multiple local search directions in the decision space. Specifically, a global search strategy starts by sampling some initialized solutions in the decision space. Each solution represents a local search direction. In the subsequent optimization process, the solutions with poor performance are gradually replaced by newly generated promising solutions. How to generate promising solutions is crucial for global search strategies. The current candidate solution generation techniques for ECOPs could be mainly classified as evolution-based, swarm-based, and distribution-based methods.

1) Evolution-based solution creation operators include crossover and/or mutation. Crossover, or recombination, exchanges and combines genetic information from multiple parent solutions to produce new candidate solutions, such as the point-based crossover used in genetic algorithms [4, 21, 40,41,42], the binomial crossover used in differential evolution [43, 44], and tree-based crossover used in genetic programming [29, 45, 46]. Crossover enhances the exploration capabilities of an SAEA. Mutation is usually applied to perturb a single decision variable to promote population diversity and avoid getting stuck in local optima, such as the bit flip mutation [40, 47], the uniform mutation [5], sub-tree mutation [48], grow mutation [42].

2) Swarm-based: Particle swarm optimization (PSO) is a swarm intelligent optimization algorithm inspired by the modeling and simulation of group behaviors of birds. In PSO, each particle denotes a candidate solution. Particles achieve global search by moving their positions in the decision space. PSO guides the particles to the potential global optimal region through the individual cognition and social interaction. Compared with evolution-based operators, swarm-based operators generate candidate particles by updating particles’ position. PSO was initially developed for continuous optimization problems. Due to fast convergence and the ability to explore large search spaces efficiently, it has been applied to many combinatorial optimization problems, such as feature selection problems [49,50,51]. There are generally two strategies to enable PSO to solve combinatorial optimization problems. One is to directly ignore the discreteness of decision variables. For instance, the authors in [52, 53] discretize continuous values into 0 or 1 based on a threshold. Another approach is to design a position update strategy suitable for discrete variables. For instance, a deduction-based velocity update strategy is proposed to enable PSO to handle binary-encode ECOPs [54]. Similarly, a correlation-guided updating strategy is proposed in [55] to generate promising particles in which the probability of flipping is connected with the correlativity. In [56], an asymmetric flipping operator, the flipping probability of features from being selected to unselected is not equal to the flipping probability of features from being unselected to selected, is introduced into the position updating process.

3) Distribution-based: Estimation of distributed algorithm (EDA) is a kind of efficient global search strategies based on statistical learning theory. To begin with, EDA initializes a population of solutions. After evaluating the objective values of all solutions, EDA selects a part of promising solutions to estimate the probabilistic model through the statistical learning method, so as to obtain the distribution of potential optimal solutions. Then EDA samples new solutions by the learned probabilistic model. EDA is initially used to solve continuous optimization problems, but due to its inherent learning characteristics, it has gradually been used to solve ECOPs. For example, in [57], a neural architecture search problem is transformed to a multinomial distribution learning problem, which describes the probability of sampling 8 connection operations. Similarly, the authors of [58] also use probabilistic models to sample the hyperparameters of candidate architectures. Unlike the unique multinomial distribution used in [57], two different models are used to respectively sample different hyperparameters. In addition, doubly stochastic matrix [59] and Mallows model [60] can be used as probability models over a permutation landscape.

Ant colony optimization (ACO) [61] can also be categorized under distribution-based global search strategies. The fundamental nature of ACO involves multiple ants simultaneously exploring the solution space. Each ant moves through the search space, constructing solutions by considering a set of possible choices. Ants deposit pheromone trails as they move. These chemical substances communicate information about the quality of the solution found. Better solutions are reinforced with higher pheromone levels. Subsequent ants construct solutions by probabilistically choosing their next move based on pheromone levels and heuristic information. The pheromone communication and probabilistic decision-making of the ants contribute to a collective and distributed search process [62]. ACO has been successfully applied to various ECOPs, including the job scheduling [63], structural learning of networks [64], feature selection[65, 66], mixed-variable optimization [67, 68], and more. Its ability to find near-optimal solutions in complex problem spaces makes it a valuable tool in various domains.

Local search strategy

Local search (LS) methods focus on improving a single solution iteratively by exploring its immediate neighborhood in the solution space. They do not systematically search the entire decision space but make small changes from a starting solution to find the best nearby solution. These methods start from an initial solution and repeatedly move to the best neighboring solution, aiming to improve the objective function value. In the context of combinatorial optimization, the neighborhood refers to a set of new solutions [69]. Generating a neighborhood around a given solution involves defining the rules or mechanisms to create variations or modifications to that solution. These modifications are also known as the neighborhood operator. A neighborhood can be defined as a specific local area within the entire decision space. LS is a process of iteratively moving or jumping from one neighborhood to another. The definition of neighborhood is a key operation in LS [70].

The specifics of how a neighborhood is defined and generated depend on the problem being solved. Methods used to generate neighborhoods can be roughly divided into two categories: horizontal swapping and vertical shifting. Fig. 2 shows an initial solution and its two neighborhoods generated by horizontal swapping and vertical shifting, respectively. The two-opt neighborhood is a specific type of neighborhood used in ECOPs [71]. It involves creating neighboring solutions by horizontally swapping any two decision variables of a given solution. The definition of the neighborhood significantly influences the scope of local search. Numerous algorithms do not confine themselves to merely swapping two decision variables. Instead, they employ multi-bit exchanges (k-opt) to generate diverse neighborhoods [72,73,74]. The \(\beta \)-sampling [72] is commonly applied to generate k-opt neighborhoods which stars at randomly choosing a segment containing k positions from a given solution and moving the segment to the end of the solutions. The vertical shifting method involves choosing one or multiple decision variables from the initial solution and subsequently altering these selected variables to any value within their respective range of values [18, 75]. Compared to horizontal swapping, vertical shifting is more flexible and makes it easier to explore unknown areas. However, vertical shifting requires prior knowledge of the range of decision variables. It is unsuitable for ECOPs where the decision variables are sequences, such as TSP and task planning problems.

Both horizontal swapping and vertical shifting necessitate a precise definition of parameters, including the selected bit to swap or shift. However, such specifications can introduce artificial biases into the LS process, potentially leading to a gradual deviation from the optimal solution in large-scale problems. To address this issue, an implicit neighborhood structure is proposed through the use of destroy and repair methods [76,77,78]. The destroy method disrupts a segment of the current solution, while the repair method reconstructs the affected solution. Notably, the destroy method usually incorporates randomness, ensuring that distinct segments of the current solution are affected.

In a complex and costly combinatorial optimization scenario, the selection of effective search strategies stands as a critical determinant influencing the optimization performance. The authors in [40] compare the performance of three Walsh surrogate-assisted optimizers on expensive multi-objective combinatorial optimization problems. The search strategies used in these three optimizers are the conventional MOEA/D, multiple LS, and Pareto LS, respectively. Experimental results show that the two local search optimizers are efficient in dealing with discrete optimization problems, which is to contrast with the MOEA/D optimizer.

Hybrid search strategy

Various search strategies possess distinct advantages and disadvantages. Relying solely on a single search strategy throughout the optimization process could lead the search direction to diverge from the optimal solution gradually. The integration of multiple search strategies into the optimization process can mitigate the occurrence of such situations. In recent years, there has been a notable surge in the development of algorithms that diverge from solely adhering to a single search strategy. Instead, these approaches amalgamate multiple search strategies, frequently drawing from algorithms in diverse areas of combinatorial optimization research. These approaches are commonly referred to as hybrid search strategies [79]. Hybrid search strategies aim to harness the synergy among diverse search strategies to enhance the quality of optimization outcomes. Assessing the synergy between search strategies and understanding their collaborative mechanisms are pivotal to designing effective hybrid methods. Unfortunately, creating an effective hybrid method is frequently arduous, demanding expertise from various optimization domains.

The amalgamation of a global search strategy and a local search strategy stands as the prevalent hybrid method in solving ECOPs [80]. Its main asset is the combination of the benefits of these two kinds of search strategies to explore and exploit the decision space, respectively [81]. There are generally two ways to integrate local and global search strategies: embedding and concatenation. Embedding-based hybrid search strategies are commonly labelled as memetic algorithms, the latter is commonly referred as multi-stage or hierarchical algorithms.

Memetic algorithms (MAs) are usually considered as extensions of EAs, which introduce the individual learning as a separate LS process for speeding up the search process. In MAs, EAs usually explore the entire search space as a global optimizer for locating the most promising regions with a population of individuals, while heuristic LS exploits the located regions by improving the individuals in the population. The authors in [82,83,84] conduct global search through crossover and mutation operators. Additionally, each solution in the population conducts LS within their neighborhood. Although conducting LS on each solution can greatly improve the exploration, it can also slow down the search efficiency. To overcome this drawback, the authors in [85] propose two adaptive strategies to dynamically determine the number of solutions undergoing LS. On strategy is designed from the perspective of the evolution generation, and the other is the population entropy. Similarly, the authors in [18, 75] conduct LS only for found non-dominated solutions. The authors in [86] conduct taboo searches on randomly selected solutions. Overall, there are three ways to choose solutions for LS: all solutions in the population, solutions who perform well, and randomly selected solutions.

Typically, during the early stage, search strategies should prioritize exploration across a wide space to prevent being trapped around local optima. As the process advances, local search should be strengthened for fast convergence. Based on this consideration, the concatenation hybrid method involves a two-step process. First, it performs a global search to identify potential solutions. Then, it uses the results of the global search as a starting point for a more targeted local search. Determining the appropriate time to switch the optimization process from global to local search is crucial in concatenation hybrid methods. The most commonly used criterion for making this judgment is that the best solution cannot be further updated [87].

Learning-based search strategy

In a search-based optimizer, problem-solving is conceptualized as a computational search process, characterized by an applicable search space covering candidate solutions, a search procedure to unveil these solutions, and a heuristic strategy to direct the search. Heuristic search strategies commonly integrate prior knowledge or search control mechanisms into the random search process to enhance search efficiency without compromising exploitation [84]. As the objective function for ECOPs is frequently treated as a black box, the availability of prior knowledge is typically limited. The search control mechanism exhibits different ways in different search strategies, such as the parent selection in evolution-based global search strategies, the neighborhood definition in LS strategies, and the selection of starting solution for MAs. However, a search control mechanism is only sometimes practical due to the discrete nature of ECOPs. For example, two parents who perform well may only sometimes produce promising solutions, and the local search for the best solution may not always find better ones.

In recent years, learning-based search strategies are proposed to further improve the utilization of search control mechanisms which feeds the information about the search progress to machine learning techniques and uses the trained learning model to guide the search process. There are three main learning-based approaches for combinatorial optimization: learning to configure algorithms, learning to select algorithms, and learning to directly predict optimal solutions [88].

The first method aims to automatically configure the parameters of a specific type of algorithms. In [89], the authors conduct a comparison of three distinct local search methods employing diverse restart strategies on a binary-encoded optimization problem: completely random assignment, initial assignment of the first four discrete variables as zero and the rest randomly, and assignment of the last four discrete variables as one and the rest randomly. Their experimental results reveal that different restart strategies yield diverse solutions. Consequently, the study applies a rank learner, specifically RankNet, to learn a search heuristic intended to select promising initial solutions for the local search process. In contrast, the authors in [90] use a well-trained recurrent neural network to directly generate starting population, rather than choose a population generation strategy.

The second method focuses on selecting a candidate algorithm or combining several existing algorithms against problem characteristics. In [91], the authors design four surrogate-assisted optimizer with different search strategies: DE, LS, full-crossover strategy, and trust region LS. During the optimization, Q-learning is used to learn search effects of different optimizers and adaptively select the optimizer to be used in the next stage. Unfortunately, the method is proposed to solve expensive continuous optimization problems. The performance of the method on ECOPs still needs further verification.

The third method is also known as neural combinatorial optimization (NCO) where a well-trained model, such as the sequence-to-sequence network [92, 93] and graph neural network [94, 95], directly predicts optimal or near-optimal solutions for a given problem instance. Although this kind of methods strive to make combinatorial optimization more automated and less reliant on human effort, their training process requires significant data. Training data needs to include information on the nature of solved problems and their optimal solutions. However, in many real-world ECOPs, collecting adequate training data can take significant time and may even be impossible [96]. The review of learning-based search strategies for combinatorial optimization can refer to [96,97,98].

In the domain of ECOPs, research concerning learning-based search strategies is still an emerging topic. While learning-based methodologies have shown substantial advancements in addressing COPs, their implementation in ECOPs remains notably limited. This limitation primarily arises from the fact that training learning models often necessitates a vast amount of data, especially when the acquired knowledge pertains to complex or dynamic configurations. Given that objective evaluations in ECOPs are time-consuming, there exists a scarcity of effective data for training accurate models. Additionally, the search control mechanism generated in surrogate-assisted optimization might be inaccurate, thereby increasing the demands for the robustness of learning models.

Discussions

A variety of search strategies are designed to efficiently explore discrete decision spaces and find optimal or near-optimal solutions with applying a small number of real objective evaluations. As shown in Fig. 3, the commonly used search strategies can be divided into four types: global, local, hybrid and learning-based. Their characteristics are discussed as follows.

-

1.

Global search strategies focus on exploring a wide decision space to find an optimal or near-optimal solution, including evolution-, swarm-, and distribution-based algorithms. They are used to search for solutions on a broader scale, emphasizing exploration and diversification to escape local optima and find better solutions. However, in the later optimization stage, global search strategies are prone to diversity degradation.

-

2.

Local search strategies focus on improving a single solution iteratively by exploring its immediate neighborhood in the decision space. They do not systematically search the entire solution space but make incremental changes from a starting solution to find the best nearby solution. Although these strategies typically only yield near-optimal solutions, they are highly efficient in solving practical problems.

-

3.

Hybrid search strategies that combine local and global search strategies are often used to capitalize on the strengths of each method, aiming to find better solutions in a reasonable time frame by leveraging local search for fine-tuning and global search for broader exploration. In situations where there is little prior knowledge about a problem’s nature or search preferences and efficiency is not a top priority, a hybrid search strategy would be a wise choice.

-

4.

Learning-based search strategies are a useful way to automate the optimization process without the influence of human bias. However, it is essential to note that such strategies often need a significant amount of data for training, which can prove to be a challenge in situations where data collection is expensive or limited.

Surrogate-based evaluation

In the optimization process of SAEAs, the objective values of most candidate solutions are evaluated by well-trained surrogate models. This means that SAEAs use surrogate models to drive the optimization process [16]. As a result, building accurate surrogate models is crucial for the success of SAEAs. In general, building accurate surrogate models involves three steps: model selection, construction, and management. In this section, we will summarize the commonly used tips of SAEAs to ECOPs from the three aspects.

Model selection

Model selection entails choosing the most appropriate model among various machine learning methods, considering the nature and specific characteristics of the problem to be addressed. A survey [99] presents different models for dealing with discrete decision variables. Based on this we present a more comprehensive summary of surrogate models that can be applied to discrete spaces.

-

1)

Naive model. If the solution remains representable as a vector (binary variables, integers, non-ordered categorical parameters, permutations), the discrete structure might be overlooked. The fundamental approach to address discrete decision spaces involves disregarding their discrete nature and directly employing continuous models, like the Gaussian process, to manage ECOPs.

Gaussian process, also known as Kriging, is a probabilistic surrogate model. In its basic form, Gaussian process assumes that the inputs are continuous. To model an unknown function \(f(\varvec{x})\), Gaussian process assumes that \(f(\varvec{x})\) at any point \(\varvec{x}\) is a Gaussian random variable \(N(\mu , \delta ^2)\), where \(\mu \) and \(\delta \) are two constants which are independent of \(\varvec{x}\). A Gaussian process is a collection of random variables that have a joint multivariate Gaussian distribution \(N(\varvec{\mu }, \varvec{C})\), where \(\varvec{\mu }\) is the mean vector and \(\varvec{C}\) is the covariance matrix. \(\varvec{\mu }\) and \(\varvec{C}\) are estimated by maximizing likelihood with evaluated data. Each element \(c_{x_i, x_j}\) in \(\varvec{C}\) describes the correlation between \(f(\varvec{x}_i)\) and \(f(\varvec{x}_j)\) related to the distance between \(\varvec{x}_i\) and \(\varvec{x}_j\). \(f(\varvec{x}_i)\) and \(f(\varvec{x}_j)\) are considered to be positively correlated if the distance between \(\varvec{x}_i\) and \(\varvec{x}_j\) is small. The correlation is usually defined as a kernel function of distance in the decision space.

The prediction accuracy of a Gaussian process model for discrete search spaces heavily depends on appropriate distance measures and kernel functions [23, 100]. Distance measures for discrete decision variables will be discussed in similarity-based models. This section mainly discuss the selection of kernel functions. The authors of [5, 101] use the Gaussian process model to fit the objective function where the decision variables are categorical parameters. The authors of [102] establish the Gaussian process model in a binary variable mixed integer nonlinear programming optimization framework to reduce identification load of the pollution sources. In [103], a Kriging-assisted simulated annealing algorithm is proposed to solve permutation-based ECOPs. Although the encoding methods for decision variables are different in the above three works, they all use the standard Gaussian kernel. In addition to these numerical variables, decision variables in some ECOPs may also be trees or graphs. The diffusion kernel [104, 105] is commonly developed for graph-based discrete search spaces.

-

2)

Inherently discrete model. One technique that can be explored in discrete space is the random forest model, which can effectively handle continuous, categorical, and nominal decision variables. Random forest is an ensemble of k decision trees, each embodying a binary tree structure. To train each decision tree, a bootstrap sampling method is employed, allowing certain samples to be selected multiple times while leaving out others. Within each decision tree, nodes randomly choose a part of features for splitting, preventing an over-reliance on specific features throughout the ensemble. Each tree learns different aspects of the data based on the random selection of samples and features, reducing the risk of overfitting. This makes the model’s performance on training data more stable and can better generalize to new data. Meanwhile, due to its ability to randomly select feature subsets to construct decision trees, it can effectively mitigate the impact of dimensional disasters. Recent research indicates that tree-structured surrogate models, like random forest, are better suited for addressing ECOPs [21, 23]. The random forest model has become increasingly popular as one of the most frequently utilized approaches for tackling ECOPs, such as constrained ECOPs [42, 83], single-objective ECOPs [21, 106], multi-objective ECOPs [18, 86], and noisy ECOPs [75].

-

3)

Similarity-based model. Similarity-based models employ similarity metrics or distance functions to assess the likeness or proximity between evaluated and candidate solutions in the decision space. Based on the similarity assessment, these models predict the fitness of candidate solutions. For instance, in [63], the objective value of a candidate solution is equals to that of its closest solution in terms of the Euclidean distance. In addition to directly using the objective value of the evaluated solution as the predicted value, K-nearest neighbor (KNN) and radial basis function networks (RBFN) also belong to similarity-based models.

The principle behind KNN is the assumption that solutions that are close in the decision space are likely to have similar objective values. Thus, the predicted value for a candidate solution is based on the values of its nearest neighbors. Specifically, for a given candidate solution, KNN first calculates the distances to all other evaluated solutions and then outputs the average (or weighted average) of K nearest neighbors’ objective values as the predicted value for the given solution. A widespread application of KNN is to solve expensive job shop scheduling problems. Job shop scheduling (JSS) involves creating schedules for producing jobs, aiming to minimize lead time and total costs for customers. Since the phenotypic characterizations is a vector of ranking number that indicates the decision made by a rule, the Euclidean distance is a reasonable technique to measure the similarity of different solutions [107]. KNN has also successfully used in multitask JSS to allocate individuals to specific tasks properly in [29], and dynamic flexible JSS [108]. In addition, Euclidean distance-based KNN is also used as a surrogate model to handle expensive feature selection problems in which a feature subset is encoded as a vector of binary variables [51].

Although the KNN model is simple to implement and does not require a training process, it is not able to predict whether or not a candidate solution will provide improvement over existing solutions. For that purpose, another similarity-based model is of interest: RBFN. RBFN is a particular type of neural network. It consists of an input layer, a layer of RBF neurons, and an output layer. Based on evaluated data \(\{(\varvec{x}_i, y_i)|i=1,2,\cdots , N\}\), RBFN approximates an objective function as follows:

where \(dis(\varvec{x},\varvec{x}_j)=||\varvec{x}-\varvec{x}_j||\) is the distance function, \(\phi (\cdot )\) is a kernel function, \(N_c\) is the number of RBF neurons, and \(w_j\) denotes the weight of the jth neuron. \(\varvec{x}_j\) is the jth center point, and K-means clustering algorithm is often used to obtain \(N_c\) center points [4]. When \(N_c=N\), each training data is treated as a central point. Commonly used kernel functions include the Gaussian [4] and cubic [32] kernel. A method for adaptively selecting kernel functions can be found in [109]. Due to the low computational complexity, RBFN is another popular model for solving ECOPs. For examples, an RBFN-assisted evolutionary algorithm is proposed in [4] to solve high-dimensional feature selection problems. An adaptation of multiple RBFNs is proposed in [110] to solve expensive mixed integer optimization problems. In [68], RBFN combined with RF is applied to approximate the objective function with continuous and discrete categorical variables.

A crucial step in adapting similarity-based models for discrete decision spaces is the choice of an appropriate distance measure. In [111], the suitability of various distance measures in similarity-based modeling is investigated on permutation problems. Experimental results show that the Hamming distance is the best performing measure. The Hamming distance is also used to measure the similarity of binary-encoded solutions [4]. The Euclidean distance is commonly used to measure the similarity for job shop scheduling problems [29, 107, 108]. To avoid adverse effects of inappropriate distance measures on the predictive capability of RFBN, a heterogeneous metric considering three distance measures is employed in [110] to replace the usual distance measures. The authors of [112] adapt an RBFN with arbitrary distance measures to model arbitrary combinatorial optimization problems. Their approach has also been applied to quadratic assignment problems.

-

4)

Mapping-based model. Given the challenges posed by discrete decision spaces in search and model building, a commonly adopted strategy involves mapping the intricate discrete space to another easy to handle space. Classical regression offers a well-established mapping approach for addressing discrete categorical parameters, relying on dummy variables and contrasts. A categorical variable can be mapped to a set of dummy variables, with each dummy representing a single level of the original variable [23].

Another example of a mapping approach is the representation learning. Autoencoders are frequently employed in unsupervised representation learning [113], wherein the encoder discerns inherent features within the input data, generating an encoded latent representation. Subsequently, the decoder reconstructs the input data from the derived latent representation. The resultant encoded latent representations typically exhibit continuity and possess fewer dimensions than the original input. Consequently, the encoder of autoencoders can effectively map original discrete variables onto the latent space, thereby facilitating the generation of low-dimensional and continuous representations conducive to constructing surrogate models.

Based on this idea, some methods have been proposed. In [114], an autoencoder is designed to compress the variable-length discrete integer block vectors to fixed-length continuous decimal latent vectors. To improve the mapping accuracy, a new loss function is proposed that not only considers the reconstruction loss but also takes the architecture similarity and the model scale similarity into account. In [115], an autoencoder is used to learn a network’s connection matrix to several continuous variables. The connection matrix of a network represents the connections among nodes, in detail, if there is a connection between two nodes, the corresponding entity in the matrix is 1; otherwise, 0. After matrix mapping, a surrogate model is constructed in the latent space. In [116], an encoder model is responsible to map a neural network architecture into a continuous representation. The autoencoder used in [115] and [115] are multi-layer perceptron models, while the encoder in [116] is a long short-term memory model.

-

5)

Simulation-based model. In numerous costly optimization problems, fitness evaluation frequently involves an iterative computational process. In such scenarios, a considerable amount of intermediate data is generated prior to the convergence of the simulation. This intermediate data becomes feasible for surrogate training during the initial iterations, enabling the subsequent deployment of the surrogate for predicting the converged fitness. The simulation-based model designed for job shop scheduling (JSS) illustrates such a procedure. Adhering to the principle of simplification, a surrogate model was developed by reducing the number of jobs and machines within the simulation framework for evaluating the fitness of solutions in dynamic JSS [45]. The results indicate that the formulated surrogate, referred to as the "half-shop simulation" using half the original quantity of jobs and machines, achieves commendable performance. This methodology was successfully extended to dynamic flexible JSS [117]. Drawing inspiration from the investigation presented in [45], an adaptive surrogate strategy was devised, enhancing the fidelities of simulation models across generations and implemented at various stages in dynamic flexible JSS [118]. The findings demonstrate that the proposed algorithm can achieve performance comparable to traditional methods without compromising training time efficiency.

Model construction

Model selection refers to choosing one or more machine learning models that best suit the problem to be solved, considering factors like the type and dimension of decision variables, the problem’s nature, and the cooperation pattern of search strategies and surrogate models. Model construction pertains to constructing accurate surrogates based on the chosen models and utilizing them to replace the expensive evaluations in the optimization process. There are two commonly used methods: single- and multi-model.

-

1)

Single-model. The single-model is the most straightforward and frequently employed model-building approach. It entails using a regression model to learn the mapping between decision variables and objective values. Alternatively, a classification model can also be utilized to learn the partial order relation among solutions based on objective values [119]. It is imperative to emphasize that certain SAEAs [40,41,42, 86] designed for addressing multi-objective ECOPs exhibit the construction of multiple surrogate models. Nonetheless, these approaches maintain alignment with the single-model methodology. Diverse surrogate models are employed to approximate different objective functions.

-

2)

Multi-surrogate model. Different models have their advantages and disadvantages on different problems. To enhance their robustness, the strategy of building multiple models has been investigated in EAs due to its promising performance. There are three distinct strategies of multiple surrogate models: hybrid, ensemble, and multi-fidelity. The distinctions among these approaches are illustrated in Fig. 4.

A hybrid method represents an integrated paradigm that integrates diverse surrogate models with the overarching aim of augmenting the comprehensive efficiency and effectiveness of the optimization process. Within the realm of hybrid methods, two discernible types of relationships exist between multiple surrogate models, namely competition and cooperation. In the competition-based hybrid methods, serving as a surrogate model is attributed to the one exhibiting superior performance among all models. Bagheri et al. [120] select the best-performed type of radial basis function interpolation on the basis of their median absolute errors obtained on newly evaluated solutions. Followed that, the authors in [121] iteratively choose the most suitable surrogate from a pool of 31 candidate models to serve as the cheaper substitute for the discrete objective function. Experimental results on fifteen binary-encoded combinatorial test cases show that the proposed algorithm is a robust solver. In contrast, cooperation-based hybrid methods simultaneously use all surrogate models to find promising solutions. For instance, the authors in [68] and [54] use two surrogate models to select solutions for accurate evaluation. A subtle distinction lies in the fact that, two surrogate models predict the objective values of identical solutions in [68], while two surrogate models predict the objective values of disparate solutions in [54].

Ensemble methods involve the use of multiple surrogate models to collectively make predictions or decisions. Combined models are called an ensemble. It is reported that ensembles often outperform individual models [122]. In ensemble methods, multiple models are trained independently, and their predictions are aggregated to produce a final result. Each model may employ the same candidate solutions and the corresponding observations from expensive function evaluations. In [47], a multi-surrogate assisted multi-objective evolutionary algorithm is proposed to address feature selection in regression and classification problems with time series data. A surrogate model is trained with one learning algorithm for each fold of the K-fold cross-validation. For the regression problem, random forest and long short-term memory have been used as learning algorithms, while for the classification problem, RF and support vector machine have been applied. The average of the output values of all surrogate models is used as the final predicted value. In [121], a self-adaptive multiple-surrogate assisted efficient global optimization algorithm is proposed which iteratively select the pool of candidate surrogate models rather than use all surrogate models. Model quality, i.e., the errors in surrogates, is used as the selection metic. The models for the ensemble are chosen based on their performance and the weights are adaptive and inversely proportional to the local modeling errors.

In addition to hybrid and ensemble methods, multi-fidelity methods are also developed for ECOPs. Multi-fidelity modeling uses several models of the same real system, where each model has its own degree of detail representing the real process. The authors in [17] describe a multi-fidelity optimization approach for the design of a trauma system. An SAEA with multi-fidelity levels is proposed in [75] to handle noisy ECOPs in which the averaging is used to reduce the influence of noises. The average of several evaluations can be treated as an approximation of the exact fitness. Multi-fidelity models are constructed using the average of different repeated evaluations. Experimental results show that multi-fidelity models outperform single-fidelity models. In addition, a multi-fidelity based surrogate was designed in [123] where there is more than one surrogate, and they can learn from each other in the evolutionary process. The learning between multiple surrogates is realized by the knowledge transfer via the crossover operator. The results show that the proposed multi-fidelity based surrogate algorithm has a better converge speed and can get significant performance. The authors in [124] use multi-fidelity models to solve neural architecture search problems.

Model management

Based on the capacity to evaluate new candidate solutions using actual objective functions, SAEAs can be categorized into two distinct types: online and offline SAEAs [16]. In the context of online SAEAs, some candidate solutions undergo evaluation by actual objective functions throughout the optimization process. These newly evaluated solutions serve as updated training data to iteratively refine and enhance existing surrogate models, thereby augmenting surrogate accuracy and search efficiency. Conversely, offline SAEAs exclusively construct surrogate models based on historically evaluated data, lacking real objective evaluations for newly generated solutions during optimization. This demarcation underscores the fundamental distinction between the two categories, elucidating the dynamic nature of online SAEAs in contrast to the historical reliance of offline SAEAs solely on past evaluations for surrogate model construction.

How to make full use of these real fitness evaluations to properly update surrogate models is key to online SAEAs, which is known as model management [22]. Prominent model management strategies primarily encompass two overarching categories: generation-based and individual-based strategies. Generation-based methods entail the comprehensive re-evaluation of all solutions within the population, primarily focusing on adjusting the frequency of real fitness evaluations as they become available. In contrast, individual-based methods center around selecting one or several proper solutions in each generation for real evaluation, aiming to enhance the quality of the model through strategically chosen assessments. In [86], a generation-based strategy, named non-selective fixed strategy, is used to evaluate every candidate solution that is generated using the conventional genetic operators. In addition, an individual-based strategy, which evaluates candidate solutions generated by a local search method is also applied. Although the generation-based strategy is relatively simple and direct, it requires a large number of function evaluations, which is not feasible in most ECOPs.

Individual-based methods typically select the model-predicted optimal solution for real objective evaluation. This selection is justified by the idea that the best-predicted individual, due to its anticipated superiority, has the capacity to enhance accuracy, especially in regions considered promising within the optimization landscape [54, 68]. For examples, four selection strategies are studied in [40], including the solution with the best predicted value correspondingly to a sub-problem, the solution with the best predicted value with respect to any sub-problem, the solution that improves the most predicted value to any sub-problem, and the solution with the best normalized improvement to any sub-problem. Experimental results show that the last strategy can be viewed as a relatively good choice.

However, exclusive reliance on sampling the optimal point tends to induce a concentration of sampling points within a local vicinity, thereby engendering an inhomogeneous training dataset. Subsequently, this inhomogeneous nature of the training dataset is susceptible to precipitating significant errors in the model. To mitigate this, sampling the uncertain area emerges as a judicious strategy, as it enhances model accuracy and facilitates exploration of the broader global space. For examples, the variance of the predicted values of all decision trees in a random forest is used in [89] to measure the uncertainty. Numerous infill sampling criteria have been proposed to conjoin uncertain information with fitness assessments. Such criteria include expected improvement, probability of improvement, and lower confidence bound [125].

In comparison to exclusively using a single infill criteria, the authors of [126] suggest employing different infill criteria for different types of surrogate models. In [121], the performance of an SAEA with expected improvement as an infill criterion is compared with that of exclusively using the prediction value of the promising surrogate. Experimental results show that prediction value is an efficient and promising infill criterion for multi-surrogate assisted evolutionary algorithms in general, while expected improvement is better for solving high-dimensional problems and highly multi-modal ordinal problems. In practical scenarios, addressing outliers [127], characterized as infrequent observations deviating significantly from most of the population, is paramount for improving model accuracy. Outliers introduce substantial interference and noise, thereby impeding the enhancement of the model’s accuracy. Drawing inspiration from this imperative, the authors of [83] posit a dynamic selection strategy aimed at harmonizing the convergence and diversity of the population.

Future challenges and research

SAEAs are probably the most efficient methods for expensive and time-demanding real-world optimization problems. Although prior research endeavors have reported promising achievements in ECOPs, there are still some issues and challenges that need to be addressed. Note that in addition to the challenges specific to ECOPs that will be described below, there are also some common challenges for both expensive continuous optimization problems, such as the low search efficiency on high-dimensional decision spaces, low accuracy of surrogate models with limited training data.

-

1)

Efficient search strategies. Utilizing heuristic search strategies in addressing complex ECOPs is fraught with challenges related to adaptability, constraint handling, and parameter sensitivity. The adaptability of heuristics proves to be a formidable challenge due to the diverse structures inherent in combinatorial problems [128]. Designing heuristics that seamlessly adapt to varying problem instances requires a nuanced understanding of problem-specific characteristics, making the development of universally adaptive algorithms complex. The intricate nature of constraints in real-world optimization scenarios poses a significant hurdle. Heuristics must navigate and satisfy multifaceted constraints, and a failure can result in infeasible solutions or limit the exploration of viable solution spaces. Parameter sensitivity compounds the challenges, as heuristics often involve tunable parameters crucial to their performance. Determining appropriate parameter configurations is a non-trivial task, as the algorithm’s sensitivity to parameter choices can influence solution quality and convergence. Striking a delicate balance between adaptability, constraint handling, and parameter tuning remains a central and intricate endeavor in developing effective search strategies for complex ECOPs.

-

2)

Complex mapping relation between decision variables and objectives. By employing decision variables as inputs and corresponding objective values as outputs in the training phase, surrogate models can acquire the capacity to delineate the mapping from decision variables to optimization objectives. In certain complex optimization problems, a direct mapping relationship between decision variables and optimization objectives may be absent. For instance, in soft robot design problems, the optimization objective encompasses not only the robot’s morphology but also its optimized control policies [5]. The control policy entails a complex decision-making process rather than a simple set of variables. Constructing an accurate surrogate model using traditional machine learning models in such scenarios poses challenges. One potential solution involves embedding problem-related knowledge within model construction [129]. Machine learning models offer a flexible framework for incorporating domain knowledge into the optimization process. By leveraging domain-specific knowledge, surrogate models can enhance the effectiveness of SAEAs in solving real-world problems. Another method is to build a binary relation learning model [130]. During the optimization of EAs, compared with the objective values of solutions, the relationship between pairs of solutions is more important, because the search only depends on the performance comparison between solutions. In cases where the objective function is relatively complex, machine learning models can be utilized to learn the relationships between candidate solutions, thereby replacing regression-based models.

-

3)

Model selection. The selection of surrogate models constitutes a pivotal challenge in applying SAEAs to tackle ECOPs. Balancing the accuracy of the underlying complex objective functions with the imperative of model simplicity introduces a delicate trade-off. Determining the quality of surrogate models still needs to be solved, posing a significant obstacle in the optimization workflow. Intriguingly, pursuing enhanced global accuracy for the surrogate does not necessarily guarantee optimal outcomes. The extensive array of potential surrogate model types and selection strategies further compounds the challenge, demanding careful consideration. Exploring hybrid and ensemble models presents a promising avenue to dissect the performance nuances and interactions among diverse approaches. The task of model selection remains an intricate and unsettled facet, underscoring the complexity of effectively integrating surrogates into the evolutionary optimization paradigm for combinatorial problems.

-

4)

Dimensionality. The question of dimensionality, i.e., the number of variables, is the other important issue. High-dimensional spaces often suffer from the curse of dimensionality, where the number of possible combinations grows exponentially with the dimensionality. This complicates the search process, making it more challenging to explore the solution space effectively. Moreover, the curse of dimensionality intensifies, demanding advanced machine learning methods to capture the complex relationships within the vast solution space effectively. Limited data availability poses a hurdle, hindering the training of accurate surrogate models. The intricacies of high-dimensional spaces amplify model complexity, leading to interpretability concerns and an increased risk of overfitting. A promising research direction is to use autoencoders in unsupervised learning to learn effective representations of low dimensional continuous hidden spaces, and then construct accurate surrogate models in the hidden spaces [7].

-

5)

Noise. Problems across diverse domains, including machine learning [131], control systems [132], and simulation optimization [128], possess inherent stochastic characteristics, leading to the introduction of noise. Noise refers to the presence of uncertainty, variability, or random fluctuations in the objective function evaluations. Noisy evaluations may lead to misleading surrogate models and suboptimal solutions. Capturing and representing the inherent noise in objective functions accurately is challenging. The construction of accurate surrogate models often involves computationally intensive tasks such as repeated function evaluations, which can be prohibitively expensive for optimization problems with costly objective functions. Future research directions may involve developing advanced surrogate models that can effectively handle noisy evaluations, devising adaptive algorithms capable of dynamically adjusting to varying levels of noise, and exploring novel strategies for incorporating domain knowledge into the surrogate-assisted optimization process.

-

6)

Theoretical analysis. Although many researchers have clearly pointed out that ECOPs are more difficult to be solved than expensive continuous problems, there is still an extreme lack of theoretical analysis on the difficulties of ECOPs and the differences between these two kind of expensive optimization problems. At present, some characteristics of ECOPs are described in a general and vague manner, and there is not much theoretical support. Regrettably, establishing a convergence proof for SAEAs towards either the global optimum or a local optimum is not straightforward, given the inherent complexity associated with proving the convergence of stochastic search algorithms to a global optimum, a task acknowledged for its non-trivial nature. Simultaneously, it is noteworthy that approximation errors of surrogate models often defy description through conventional Gaussian or uniform distributions. This characteristic renders a quantitative analysis of search dynamics on a surrogate challenging, if not impracticable. Consequently, a theoretical analysis of expensive combinatorial optimization becomes imperative to comprehend and navigate these complexities.

Conclusions

This paper provides a comprehensive survey on recent studies of SAEAs on different types of ECOPs. This survey summarizes and categorizes existing SAEAs from two aspects: search strategies and surrogate model techniques. In terms of search strategies, existing SAEAs are divided into four categories: global, layout, hybrid, and learning methods. In each category, existing related works are further classified and introduced. The surrogate model technology summarizes extant research across three aspects: model selection, construction, and management. These aspects respectively delineate the procedures for selecting models tailored to the solved problem, employing these models within the optimization process, and updating them as required. Of greater significance, the examination delves into the issues and challenges elucidated in the reviewed studies, offering a comprehensive understanding of this field and prospectively outlining avenues for future research. This survey aspires to contribute to the systematic organization of extant work, stimulate heightened interest, catalyze innovative ideas, and broaden the scope of inquiry within the emergent, dynamic, and captivating research domain.

The main reason we divide the entire review into two aspects-search strategies and surrogate-based evaluation technologies-is to correspond with the two challenges mentioned earlier. It is worth mentioning that although the most basic operation of SAEAs in dealing with expensive optimization problems is to use the predicted values of surrogate models instead of expensive evaluations, this is not the focus of all research in this field. The domain of research concerning SAEAs encompasses a vast and intricate array of investigations. Such inquiries extend beyond these two aspects, encompassing an array of ancillary topics. These include, but are not confined to, the refinement of sampling and preprocessing techniques for training data, augmentation methodologies for enriching training data, surrogate-assisted pre-selection and environment selection strategies, techniques for model updating, and methodologies for the generation of optimal solutions. The present review, however, confines its examination to extant literature within the overarching framework of two primary domains: search strategies and surrogate-based evaluation technologies.

Data availability

Data sharing is not applicable to this article as no new data were created or analyzed in this study.

References

Blum C, Roli A (2003) Metaheuristics in combinatorial optimization: overview and conceptual comparison. ACM Comput Surv 35(3):268–308

Zhang H, Jin Y, Cheng R, Hao K (2021) Efficient evolutionary search of attention convolutional networks via sampled training and node inheritance. IEEE Trans Evol Comput 25(2):371–385

Jian S-J, Hsieh S-Y (2022) A niching regression adaptive memetic algorithm for multimodal optimization of the euclidean traveling salesman problem. IEEE Trans Evol Comput pp 1–1

Liu S, Wang H, Peng W, Yao W (2022) A surrogate-assisted evolutionary feature selection algorithm with parallel random grouping for high-dimensional classification. IEEE Trans Evol Comput 26(5):1087–1101

Bhatia J, Jackson H, Tian Y, Jie X, Matusik W (2021) Evolution gym: A large-scale benchmark for evolving soft robots. Adv Neural Inf Process Syst 34:2201–2214

Gupta A, Savarese S, Ganguli S, Fei-Fei L (2021) Embodied intelligence via learning and evolution. Nat Commun 12(1):5721

Wang S, Liu J, Jin Y (2019) Surrogate-assisted robust optimization of large-scale networks based on graph embedding. IEEE Trans Evol Comput 24(4):735–749

Runzhong W, Zhigang H, Gan L, Jiayi Z, Junchi Y, Feng Q, Shuang Y, Jun Z, Xiaokang Y (2021) A bi-level framework for learning to solve combinatorial optimization on graphs. In: Ranzato M, Beygelzimer A, Dauphin Y, Liang PS, Wortman Vaughan J (eds) Advances in neural information processing systems, vol 34. Curran Associates Inc, pp 21453–21466

Sabar NR, Ayob M, Kendall G, Rong Q (2013) Grammatical evolution hyper-heuristic for combinatorial optimization problems. IEEE Trans Evol Comput 17(6):840–861

Tian Y, Feng Y, Wang C, Cao R, Zhang X, Pei X, Tan KC, Jin Y (2022) A large-scale combinatorial many-objective evolutionary algorithm for intensity-modulated radiotherapy planning. IEEE Trans Evol Comput 26(6):1511–1525

Lan W, Ye Z, Ruan P, Liu J, Yang P, Yao X (2021) Region-focused memetic algorithms with smart initialization for real-world large-scale waste collection problems. IEEE Trans Evol Comput 26(4):704–718

Honglin Z, Yaohua W, Jinchang H, Yanyan W (2023) Collaborative optimization of task scheduling and multi-agent path planning in automated warehouses. Complex Intell Syst pp 1–12

Cai X, Sun H, Zhang Q, Huang Y (2019) A grid weighted sum pareto local search for combinatorial multi and many-objective optimization. IEEE Trans Cybern 49(9):3586–3598

Xue Yu, Chen W-N, Tianlong G, Zhang H, Yuan H, Kwong S, Zhang J (2018) Set-based discrete particle swarm optimization based on decomposition for permutation-based multiobjective combinatorial optimization problems. IEEE Trans Cybern 48(7):2139–2153

Abraham D, Juan JP, Eduardo GP, Nenad M (2015) Multi-objective variable neighborhood search: an application to combinatorial optimization problems. J Global Optim 63:515–536

Jin Y, Wang H, Chugh T, Guo D, Miettinen K (2019) Data-driven evolutionary optimization: an overview and case studies. IEEE Trans Evol Comput 23(3):442–458

Wang H, Jin Y, Jansen JO (2016) Data-driven surrogate-assisted multiobjective evolutionary optimization of a trauma system. IEEE Trans Evol Comput 20(6):939–952

Wang H, Jin Y (2020) A random forest-assisted evolutionary algorithm for data-driven constrained multiobjective combinatorial optimization of trauma systems. IEEE Trans Cybern 50(2):536–549

Lin J, Gebbran D, Dragičević T (2023) Surrogate-assisted combinatorial optimization of ev fast charging stations. IEEE Trans Transp Electr pp 1–1

Leprêtre F, Fonlupt C, Verel S, Marion V (2020) Combinatorial surrogate-assisted optimization for bus stops spacing problem. In: Artificial evolution: 14th international conference, Évolution artificielle, EA 2019, Mulhouse, France, October 29–30, 2019, Revised Selected Papers 14, Springer, pp 42–52

Sun Y, Wang H, Xue B, Jin Y, Yen GG, Zhang M (2019) Surrogate-assisted evolutionary deep learning using an end-to-end random forest-based performance predictor. IEEE Trans Evol Comput 24(2):350–364

Jin Y (2011) Surrogate-assisted evolutionary computation: recent advances and future challenges. Swarm Evol Comput 1(2):61–70

Bartz-Beielstein T, Zaefferer M (2017) Model-based methods for continuous and discrete global optimization. Appl Soft Comput 55:154–167

Liu S, Wang H, Yao W, Peng W (2023) Surrogate-assisted environmental selection for fast hypervolume-based many-objective optimization. IEEE Trans Evol Comput pp 1–1

Fan L, Wang H (2022) Surrogate-assisted evolutionary neural architecture search with network embedding. Complex Intell Syst, pp 1–19

Liu B, Zhang Q, Gielen GGE (2013) A gaussian process surrogate model assisted evolutionary algorithm for medium scale expensive optimization problems. IEEE Trans Evol Comput 18(2):180–192

Song Z, Wang H, He C, Jin Y (2021) A kriging-assisted two-archive evolutionary algorithm for expensive many-objective optimization. IEEE Trans Evol Comput 25(6):1013–1027

Ren Z, Sun C, Tan Y, Zhang G, Qin S (2021) A bi-stage surrogate-assisted hybrid algorithm for expensive optimization problems. Complex Intell Syst 7:1391–1405

Zhang F, Yi Mei S, Nguyen MZ, Tan KC (2021) Surrogate-assisted evolutionary multitask genetic programming for dynamic flexible job shop scheduling. IEEE Trans Evol Comput 25(4):651–665

Sun C, Jin Y, Cheng R, Ding J, Zeng J (2017) Surrogate-assisted cooperative swarm optimization of high-dimensional expensive problems. IEEE Trans Evol Comput 21(4):644–660

Cai X, Gao L, Li X (2020) Efficient generalized surrogate-assisted evolutionary algorithm for high-dimensional expensive problems. IEEE Trans Evol Comput 24(2):365–379

Gu H, Wang H, Jin Y (2022) Surrogate-assisted differential evolution with adaptive multi-subspace search for large-scale expensive optimization. IEEE Trans Evol Comput, pp 1–1

Jiao R, Xue B, Zhang M (2022) Investigating the correlation amongst the objective and constraints in gaussian process-assisted highly constrained expensive optimization. IEEE Trans Evol Comput 26(5):872–885

Wei F-F, Chen W-N, Li Q, Jeon S-W, Zhang J (2023) Distributed and expensive evolutionary constrained optimization with on-demand evaluation. IEEE Trans Evol Comput 27(3):671–685

Qin S, Sun C, Liu Q, Jin Y (2023) A performance indicator-based infill criterion for expensive multi-/many-objective optimization. IEEE Trans Evol Comput 27(4):1085–1099

Song Z, Wang H, Xue B, Zhang M, Jin Y (2023) Balancing objective optimization and constraint satisfaction in expensive constrained evolutionary multi-objective optimization. IEEE Trans Evol Comput, pp 1–1

Stork J, Friese M, Zaefferer M, Bartz-Beielstein T, Fischbach A, Breiderhoff B, Naujoks B, Tušar T (2020) Open issues in surrogate-assisted optimization. In: High-performance simulation-based optimization, pp 225–244

Omidvar MN, Li X, Yao X (2022) A review of population-based metaheuristics for large-scale black-box global optimization-part i. IEEE Trans Evol Comput 26(5):802–822

Omidvar MN, Li X, Yao X (2022) A review of population-based metaheuristics for large-scale black-box global optimization-part ii. IEEE Trans Evol Comput 26(5):823–843

Derbel B, Pruvost G, Liefooghe A, Verel S, Zhang Q (2023) Walsh-based surrogate-assisted multi-objective combinatorial optimization: a fine-grained analysis for pseudo-Boolean functions. Appl Soft Comput 136:110061

Pruvost G, Derbel B, Liefooghe A, Verel S, Zhang Q (2020) Surrogate-assisted multi-objective combinatorial optimization based on decomposition and walsh basis. In Proceedings of the 2020 genetic and evolutionary computation conference, pp 542–550

Gu Q, Wang Q, Xiong NN, Jiang S, Chen L (2021) Surrogate-assisted evolutionary algorithm for expensive constrained multi-objective discrete optimization problems. Complex Intell Syst, pp 1–20

Qinghua G, Wang D, Jiang S, Xiong N, Jin Yu (2021) An improved assisted evolutionary algorithm for data-driven mixed integer optimization based on two_arch. Comput Ind Eng 159:107463

Prado RS, Silva RCP, Guimarães FG, Neto OM (2010) Using differential evolution for combinatorial optimization: a general approach. In: 2010 IEEE international conference on systems, man and cybernetics, pp 11–18

Nguyen S, Zhang M, Tan KC (2017) Surrogate-assisted genetic programming with simplified models for automated design of dispatching rules. IEEE Trans Cybern 47(9):2951–2965

Fan Q, Bi Y, Xue B, Zhang M (2022) A global and local surrogate-assisted genetic programming approach to image classification. IEEE Trans Evol Comput

Espinosa R, Jiménez F, Palma J (2023) Multi-surrogate assisted multi-objective evolutionary algorithms for feature selection in regression and classification problems with time series data. Inf Sci 622:1064–1091

Wang S, Mei Y, Zhang M, Yao X (2022) Genetic programming with niching for uncertain capacitated arc routing problem. IEEE Trans Evol Comput 26(1):73–87

Xue B, Zhang M, Browne WN, Yao X (2015) A survey on evolutionary computation approaches to feature selection. IEEE Trans Evol Comput 20(4):606–626

Jiao R, Nguyen BH, Xue B, Zhang M (2023) A survey on evolutionary multiobjective feature selection in classification: approaches, applications, and challenges. IEEE Trans Evol Comput

Chen K, Xue B, Zhang M, Zhou F (2021) Correlation-guided updating strategy for feature selection in classification with surrogate-assisted particle swarm optimization. IEEE Trans Evol Comput 26(5):1015–1029

Xue B, Zhang M, Browne WN (2013) Particle swarm optimization for feature selection in classification: a multi-objective approach. IEEE Trans Cybern 43(6):1656–1671

Xue Yu, Xue B, Zhang M (2019) Self-adaptive particle swarm optimization for large-scale feature selection in classification. ACM Trans Knowl Discov Data (TKDD) 13(5):1–27

Pei H, Pan J-S, Chu S-C, Sun C (2022) Multi-surrogate assisted binary particle swarm optimization algorithm and its application for feature selection. Appl Soft Comput 121:108736

Nguyen BH, Xue B, Zhang M (2022) A constrained competitive swarm optimiser with an svm-based surrogate model for feature selection. IEEE Trans Evol Comput

Song X, Zhang Y, Gong D, Liu H, Zhang W (2022) Surrogate sample-assisted particle swarm optimization for feature selection on high-dimensional data. IEEE Trans Evol Comput

Zheng X, Ji R, Tang L, Zhang B, Liu J, Tian Q (2019) Multinomial distribution learning for effective neural architecture search. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 1304–1313

Li J-Y, Zhan Z-H, Xu J, Kwong S, Zhang J (2021) Surrogate-assisted hybrid-model estimation of distribution algorithm for mixed-variable hyperparameters optimization in convolutional neural networks. IEEE Trans Neural Netw Learn Syst

Santucci V, Ceberio J (2023) Doubly stochastic matrix models for estimation of distribution algorithms. arXiv preprint arXiv:2304.02458

Irurozki E, López-Ibáñez M (2021) Unbalanced mallows models for optimizing expensive black-box permutation problems. In Proceedings of the genetic and evolutionary computation conference, pp 225–233

Dorigo M, Birattari M, Stutzle T (2006) Ant colony optimization. IEEE Comput Intell Mag 1(4):28–39

Cáceres LP, López-Ibáñez M, Stützle T (2015) Ant colony optimization on a limited budget of evaluations. Swarm Intell 9(2):103–124

Dhananjay Thiruvady S, Nguyen FS, Zaidi N, Li X (2022) Surrogate-assisted population based aco for resource constrained job scheduling with uncertainty. Swarm Evol Comput 69:101029

Alonso-Barba JI, Luis de la O, Regnier-Coudert O, McCall J, Gámez JA, Puerta JM (2015) Ant colony and surrogate tree-structured models for orderings-based bayesian network learning. In: Proceedings of the 2015 annual conference on genetic and evolutionary computation, pp 543–550

Ma W, Zhou X, Zhu H, Li L, Jiao L (2021) A two-stage hybrid ant colony optimization for high-dimensional feature selection. Pattern Recogn 116:107933

Paniri M, Dowlatshahi MB, Nezamabadi-Pour H (2020) Mlaco: A multi-label feature selection algorithm based on ant colony optimization. Knowl-Based Syst 192:105285

Liao T, Socha K, Marco A, de Oca M, Stützle T, Dorigo M (2014) Ant colony optimization for mixed-variable optimization problems. IEEE Trans Evol Comput 18(4):503–518

Liu J, Wang Y, Sun G, Pang T (2021) Multisurrogate-assisted ant colony optimization for expensive optimization problems with continuous and categorical variables. IEEE Trans Cybern 52(11):11348–11361

Jaszkiewicz A (2002) Genetic local search for multi-objective combinatorial optimization. Eur J Oper Res 137(1):50–71

Blot A, Kessaci MÉ, Jourdan L (2018) Survey and unification of local search techniques in metaheuristics for multi-objective combinatorial optimisation. J Heuristics 24(6):853–877

Cai X, Sun H, Zhang Q, Huang Y (2018) A grid weighted sum pareto local search for combinatorial multi and many-objective optimization. IEEE Trans Cybern 49(9):3586–3598

Valls V, Quintanilla S, Ballestin F (2003) Resource-constrained project scheduling: a critical activity reordering heuristic. Eur J Oper Res 149(2):282–301

Katayama K, Hamamoto A, Narihisa H (2004) Solving the maximum clique problem by k-opt local search. In: Proceedings of the 2004 ACM symposium on applied computing, pp 1021–1025

Helsgaun K (2009) General k-opt submoves for the lin-kernighan tsp heuristic. Math Program Comput 1:119–163

Liu S, Wang H, Yao W (2022) A surrogate-assisted evolutionary algorithm with hypervolume triggered fidelity adjustment for noisy multiobjective integer programming. Appl Soft Comput 126:109263

Ropke S, Pisinger D (2006) An adaptive large neighborhood search heuristic for the pickup and delivery problem with time windows. Transport Sci 40(4):455–472

Pisinger D, Ropke S (2019) Large neighborhood search. Handbook of metaheuristics, pp 99–127

Mara STW, Norcahyo R, Jodiawan P, Lusiantoro L, Rifai AP (2022) A survey of adaptive large neighborhood search algorithms and applications. Comput Oper Res 146:105903

Blum C, Puchinger J, Raidl GR, Roli A (2011) Hybrid metaheuristics in combinatorial optimization: a survey. Appl Soft Comput 11(6):4135–4151

Han Y, Li J, Sang H, Liu Y, Gao K, Pan Q (2020) Discrete evolutionary multi-objective optimization for energy-efficient blocking flow shop scheduling with setup time. Appl Soft Comput 93:106343