Abstract

The Capsule Network (CapsNet) has been shown to have significant advantages in improving the accuracy of bearing fault identification. Nevertheless, the CapsNet faces challenges in identifying the type of bearing fault under nonstationary and noisy conditions. These challenges arise from the distinctive nature of its dynamic routing algorithm and the use of fixed single-scale kernels. To address these challenges, a multi-scale spatial–temporal capsule network (MSCN) based on sequence encoding is proposed for bearing fault identification under nonstationary and noisy environments. A spatial–temporal sequence encoding module focuses on feature correlations at various times and positions. Dilated convolution-based multiscale capsule layer (MCaps) is designed to capture spatial–temporal features at different scales. MCaps establishes connections between various layers, enhancing the comprehension and interpretation of spatial–temporal features. Furthermore, the Bhattacharyya coefficient is introduced into the dynamic routing to compare the similarity between capsules. The validity of the model is verified through comparative experiments, and the results show that MSCN has significant advantages over traditional methods.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Bearings are critical components in rotating machinery that are susceptible to wear, cracks, and other forms of damage [1]. Failure of critical equipment will affect the normal operation of rotating machinery, resulting in economic losses and jeopardizing personal safety. Therefore, research on fault diagnosis and monitoring of mechanical equipment is extremely important [2]. Conventional diagnosis techniques depend on manually extracting features to ascertain the fault state. Time-domain analysis, frequency-domain analysis, and time–frequency analysis are among the frequently employed techniques [2,3,4]. Traditional time–frequency analysis methods employ mathematical criteria to select components and perform envelope analysis to extract bearing characteristics, achieving fault identification to some extent. However, these methods struggle to capture the implicit relationships between diverse data features during the diagnostic process. Besides traditional fault diagnosis methods, fault-tolerant control is crucial in mechanical equipment fault diagnosis. When dealing with mechanical equipment faults, not only timely diagnosis of problems is necessary, but also fault-tolerant control must be implemented to ensure safe and reliable operation of the equipment [5]. Previous studies have proposed a series of data-driven fault-tolerant control methods that identify and compensate for equipment faults through adaptive dynamic programming, Q learning, and other technologies [6,7,8]. These methods use real-time input/output data for fault diagnosis and control decisions, thereby enhancing the fault tolerance and robustness of the system.

Although fault-tolerant control plays a crucial role in mechanical equipment fault diagnosis, an increasing number of researchers are now exploring the use of machine learning techniques for this purpose. With advancements in machine learning technology, approaches such as artificial neural networks [9], support vector machines [10], and others have been employed. However, the complexity of these computations often relies heavily on expert knowledge, which can pose challenges for less experienced on-site personnel and may impact the model's generalizability. Deep learning algorithms, such as convolutional neural networks (CNN) [11, 12], deep belief networks (DBN) [13], recurrent neural networks (RNN) [14] and long short-term memory network (LSTM) [15] have become dominant in fault diagnosis literature, owing to their tremendous advances in natural language processing and computer vision. These methods have achieved remarkable success when provided with large amounts of labeled training data. Currently, numerous researchers are working on creating novel, more intricate deep networks to enhance efficiency, primarily relying on identifying an architecture suited to the specific task [16].

Capsule network (CapsNet) is a machine learning architecture proposed by Hinton et al. in 2017 to enhance hierarchical feature training and classification capabilities [17]. The model consists of capsules, which are collections of neurons representing instantiated parameters of objects such as pose and orientation. The key innovation of CapsNet is the dynamic routing mechanism for iteratively optimizing and transferring information between capsule layers. CapsNet stands out in fault diagnostics due to its unique properties, including hierarchical representation, rotation invariance, and interpretable features. These characteristics make CapsNet particularly adept at capturing complex patterns within machinery data, enabling more effective fault diagnosis. Li et al. [18] introduced a dual convolution-capsule network containing dual groups of convolutional layers, pooling layers and capsule structures for fault identification in the case of limited data. Zhu et al. [19] presented a CapsNet with strong generalization that introduced an inception block and a regression branch. Li et al. [20] fused vertical and horizontal vibration signals into a capsule network for fault diagnosis. Chen et al. [21] integrated random incremental rules into CapsNet to improve the model generalization ability for different workloads. Liang et al. [22] propose a novel CapsNet model that applied the structure of the LSTM to eliminate the influence of noise. However, realizing fully automated and highly accurate fault diagnosis remains challenging due to a variety of uncontrolled factors, including noise, nonlinearity, instability, and weak fault characteristics due to complex transmission paths. Current methods inadequately utilize the inherent spatial and temporal features, leading to insufficient interaction between these two dimensions and, consequently, poor diagnostic accuracy. Collecting enough labeled data for each environment to improve the model's generalization performance is too expensive and impractical.

The advancement of temporal feature extraction methods and the spatial feature extraction capability of CapsNet provide the ability to extract discriminative features for fault diagnosis. Methods suitable for time series such as RNN and LSTM have made significant progress. RNN and LSTM effectively combine the input data at each time step with the concealed state from the prior one, capturing temporal dependencies in the features. This empowers them to excel in tasks related to sequence modeling, prediction, and other time-dependent applications. Tao et al. [23] proposed a fault diagnosis method based on the fusion of time–frequency information, using wavelet packet decomposition to convert vibration signals into time–frequency map data. Han et al. [24] leveraged static and dynamic information from vibration signals through the Gramian angular field and the Markov transition field encoding within a dual-channel input CapsNet. Wang et al. [25] presented a time CapsNet encoder-assisted classifier method, which can jointly optimize subspace learning and fault detection. Qin et al. [26] proposed a CapsNet model with an LSTM mechanism to improve remaining life estimation by capturing temporal correlations in time series data. Wang et al. [27] introduced the Bidirectional-LSTM into the CapsNet, enabling the network to handle the periodicity of vibration time series vibration signals. Zhao et al. [28] introduced a model, which combined a convolution capsule channel and an LSTM channel to extract spatial–temporal features from sensor data. The synergy between advanced temporal feature extraction methods such as RNN and LSTM, and the spatial feature extraction capabilities of CapsNet, presents a comprehensive approach to fault diagnosis, ensuring the automatic extraction of vital information from both temporal and spatial dimensions in the data. This combination allows for the automatic extraction of vital information from both temporal and spatial dimensions in the data. Despite the promising results shown by these methods, there is a need to evaluate their feasibility from both implementation and computational perspectives. Their limitation lies in the insufficient consideration of the interrelationship between spatial and temporal dimensions and the reliance on single-scale feature extraction. To address these challenges and improve the model's generalization ability, it is crucial to incorporate multiscale feature extraction techniques.

Two commonly used structures for multiscale feature fusion networks are parallel multi-branch networks and serial leapfrog connections. Parallel multi-branch networks refer to a network architecture where multiple parallel branches or pathways are employed to process input data at different scales or resolutions simultaneously. Serial leapfrog connections, on the other hand, involve a network architecture where layers or modules are connected in a sequential manner, with each layer focusing on a different scale or level of abstraction. Wu et al. [29] introduced an enhanced network model combining parallel and cascaded multi-scale convolutional layers to aggregate diverse receptive field features into the capsule vector space. Sun et al. [30] built a diagnostic model through the concatenation of wide convolutions, small-size convolutions, and attention modules. Zhang et al. [31] proposed a dual-scale capsule network combined with attention which is used to calculate convolutional feature weights of varying sizes, enabling effective feature extraction from vibration signals. Wu et al. [32] used the CapsNet for feature extraction and an attention mechanism to fuse key features across various scales. Wang et al. [33] presented a novel CapsNet for diagnostic tasks by combining wide convolution and multi-scale convolutional layers. While these methods may surpass traditional approaches, they still suffer from the following two fundamental limitations: (1)The above methods utilize information in both spatial and temporal dimensions but fail to fully consider the interrelationship and interactivity between these dimensions; (2) Multiscale networks may introduce a significant number of additional parameters, thereby increasing the complexity of the model. Therefore, there remains a pressing need to explore advanced solutions to enhance the generalization capability and extraction accuracy of bearing vibration signals, facilitating operations and enhancing diagnostic quality.

To address the aforementioned challenges, the innovative Multi-scale Spatial–temporal Capsule Network (MSCN) introduced in this paper offers distinctive advantages for fault diagnosis tasks. By harnessing CapsNet's spatial information advantages and its ability to learn temporal features, MSCN achieves comprehensive feature extraction crucial for accurate fault detection. The spatial–temporal sequence encoding module, integrated with a self-attention mechanism, facilitates the learning of correlations between features at various time steps and positions, enhancing comprehensive feature extraction. Unlike conventional single-structure networks, the integration of dilated convolution within the multi-scale capsule layer expands the model's receptive field while preserving resolution, thereby improving spatial extraction performance. To deal with the aforementioned challenges, the innovative Multi-scale Spatial–temporal Capsule Network (MSCN) introduced in this paper offers distinctive advantages for fault diagnosis tasks. The MSCN model has been successfully implemented and tested on the Case Western Reserve University (CWRU) bearing data set and the laboratory bearing data set, demonstrating its practical feasibility for industrial applications. Despite its complex structure, the model's computational requirements are manageable, making it a viable solution for fault diagnosis tasks. The contributions of the paper include the following:

-

1.

A spatial–temporal sequence encoding module is built utilizing a self-attention mechanism, facilitating the learning of correlations between features at various time steps and positions. The MSCN model offers a viable spatial–temporal solution, allowing the training of the diagnosis model with labeled data collected under specific working conditions.

-

2.

A multi-scale capsule layer (MCaps) is constructed based on dilated convolution to capture multi-scale information of fault characteristics without introducing additional parameters. The features of MCaps include acquiring critical information at various scales, studying the correlation between channels, and boosting the interaction of cross-channel feature data.

-

3.

Integration of the Bhattacharyya coefficient to evaluate the similarity between capsules, mitigating sensitivity of the dynamic routing mechanism and effectively enhancing model robustness by minimizing noise interference.

The rest of the paper is organized as follows. “Preliminaries” section introduces the related theories and methods of capsule network, dilated convolution and attention mechanism. In “Proposed method” section, the Multi-scale Spatial–temporal Capsule Network (MSCN) is introduced. “Results analysis and discussion” section includes two comparative trials, and certain outcomes are visually represented. “Conclusion” section gives the conclusion of the study.

Preliminaries

CapsNet

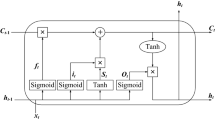

Different from traditional neural networks, which rely on neurons for information transmission, capsule network utilizes vectorized capsules as fundamental units, offering distinct advantages [34]. This design empowers capsule networks to efficiently extract crucial information by leveraging vector representation to preserve spatial relationships among features. There are four processes that make up the CapsNet's working mechanism, as shown in Fig. 1. Initially, the input matrix \(u_i\) is transformed into a vector, followed by multiplication with a weight matrix \(W_{ij}\) to generate a feature vector. This weight matrix captures spatial relationships and other crucial features at each level. The second step involves inputting the weights, and the third step is to add these weights. During this addition, the coupling coefficient \(c_{ij}\) is updated using a dynamic routing to facilitate information interaction between the current capsule and the higher-level capsule [35]. The fourth step is nonlinear transformation, which compresses the vector by the Squash function to guarantee that the output’s length is between 0 and 1. The dynamic routing algorithm serves as an iterative mechanism facilitating information flow between the primary capsule layer and the digital capsule layer, thereby enhancing the acquisition of useful information [36, 37].

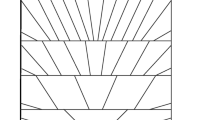

Dilated convolution

Dilated convolution, also referred to as atrous convolution or expansion convolution, widens the receptive field by introducing an expansion rate (r), which involves inserting zero values between pixels in the convolution kernel [38]. Figure 2 depicts the structure of dilated convolution with different expansion rates. In Fig. 2a, the traditional convolution has an expansion rate of 1, resulting in a 3 × 3 kernel size and corresponding receptive field. With a dilated rate of 2, the receptive field in Fig. 2b is expanded to 7 × 7 by replacing the 3 × 3 convolution with 0. Figure 2c shows the convolution with an expansion rate of 3, and the receptive field is increased to 13 × 13. While the parameters remain consistent across Fig. 2a–c, the receptive field expands with the increase in dilation rate. Dilated convolution reduces the training burden of the model by employing fewer parameters compared to traditional convolution. As a result, dilated convolutional kernels can capture more information than traditional convolution kernels without significantly increasing the computational cost [39].

Attention mechanism

The attention mechanism mimics the selective perception mechanism of the human visual system. It dynamically adjusts input weights, directing focus towards the most crucial areas [40]. Self-attention fundamentally involves calculating attention within the sequence itself, allocating distinct weight information to individual elements. Self-attention mechanisms typically employ three distinct linear mappings to compute attention weights: query mapping, key mapping, and value mapping, illustrated in Fig. 3 [41].

Proposed method

CapsNet has shown promise in intelligent fault diagnosis, but its limitations in fully extracting multi-scale features and handling spatiotemporal interactions hinder its effectiveness, particularly in complex industrial environments. To address these challenges, a model called Multi-scale Spatial–temporal Capsule Network (MSCN) has been proposed. MSCN integrates multiple structures and optimization algorithms, offering distinct advantages over conventional models. Our proposed MSCN model addresses the shortcomings of traditional CapsNet approaches by incorporating several key components, as illustrated in Fig. 4. Firstly, the Spatial–Temporal Sequence Encoding Module (SSEM) enhances the model's ability to capture correlations between features across different positions and time steps, thereby facilitating the extraction of comprehensive spatial–temporal features. Secondly, the Multi-Scale Capsule Layer (MCaps), leveraging dilated convolutions, enables efficient extraction of features at varying scales, thereby enriching the model's representation of input data. Furthermore, the Routing Capsule Layer (RoutingCaps) employs a dynamic routing mechanism utilizing Bhattacharyya coefficients, which effectively mitigates the impact of capsule length and enhances model robustness. The integration of these components in MSCN results in a data-driven approach that combines multi-scale feature extraction with spatial learning, all in an end-to-end manner. This approach not only simplifies signal processing complexities but also addresses issues such as vanishing and exploding gradients, thereby enhancing the model's capability to handle intricate datasets prevalent in industrial fault diagnosis scenarios. The details are elucidated in the subsequent subsections.

Spatial–temporal sequence encoding module

Aiming at the complex fault signal and the random noise distribution, the 1D input signals are converted into 2D matrices in the data preparation stage. The vibration signal is segmented using a sliding window, with these segments sequentially organized as rows in the vibration matrix. Nevertheless, a significant drawback of this conversion is the loss of temporal continuity, which presents significant challenges in capturing and analyzing temporal patterns and trends in vibration signals. To address this issue and preserve crucial time information in one-dimensional vibration signals, a spatiotemporal sequence encoding model (SSEM) is proposed. SSEM is designed to amplify the model's sensitivity to key features by consolidating global time series relationships for encoding feature importance.

The module combines sequence encoding and attention mechanism to help the model analyze the spatial and temporal relationships between features as shown in Fig. 5. First, sequence encoding provides the model with information regarding time steps and positions. Suppose that the input feature map combination of SSEM is denoted as \(X = \{ x_1 ,x_2 ,....x_t \} ,x_i \in R^{D \times 1}\), where t represents the number of time steps. The sequence encoding acquires the feature \(\left( {p_1 ,...,p_n } \right)\) from the encoder as input, and the required encoding \(\hat{p}_n\) is obtained by the following calculation:

where t represents the number of time steps, and \(d_{model}\) is the dimension of the input feature map. The parameter i is used to calculate the encoding. The choice of sinusoidal encoding with a geometric progression of wavelengths is motivated by its ability to smoothly represent sequence information, generalize to varying lengths, facilitate learning of relative positions, reduce positional collisions, and offer flexibility and interpretability.

The next step in SSEM is to convert the input feature map into three feature maps. This conversion is performed using 1 × 1 convolution, where each of the pixels in the input feature map is used as a query vector (Q), key-value vector (K), and value vector (V), respectively, as shown in Eq. (2).

where “\(\oplus\)” represents the feature superposition operation.

The transformed Query (Q) and Key (K) matrices are then normalized by a softmax operation to represent the attentional weights and are used for weighted summation with the Value (V) matrix. After SSEM obtains the feature vectors through the self-attention mechanism, the fusion module is proposed and two fusion methods are introduced: concatenation and element-wise addition. These methods are used to combine the two transformed feature vectors, providing flexibility for the model to integrate spatial–temporal information. which is described as follows:

where \(f^{attention}\) represents the self-attention weight distribution. Batch normalization layers are added after the fusion operation to improve the generalization of the encoded output.

The spatial–temporal sequence encoding module encodes temporal relationships, thus offering essential contextual awareness to the model. This adjustment of sequence length involves adaptively modifying weights to enable the model in learning relative positional and temporal dependencies. Following this, the MSCN model leverages the CapsNet structure to analyze spatial relationships among features, thereby facilitating feature learning across both temporal and spatial dimensions.

A multi-scale capsule layer based on dilated convolution

Vibration signals from rolling bearings encompass various complex fault modes and waveforms, which may manifest on different scales. Multi-scale feature extraction helps identify and separate these different vibration models. Therefore, the Multi-scale Capsule layer (MCaps) layer is proposed based on dilated convolution to capture shallow local and global features from vibration signals and subsequently encapsulate them into vector form. Dilated convolutions are integrated into the MCaps to model finer-grained features while preserving computational efficiency. This approach allows the network to learn the global feature distribution while maintaining resolution, enabling accurate recognition with abundant data.

As depicted in Fig. 6, MCaps consists of three channels. Each channel comprises a two-dimensional convolutional (Conv2D) layer, a batch normalization layer (BN), an activation layer, and a reshape layer, forming four interrelated components. Two of the convolution layers are dilated convolutions. Each filter in this module uses a different convolutional kernel to pull out local features from the input. Multi-scale features, extracted from different layers, are integrated for selective feature learning. Finally, the feature information is transformed into capsule form and passed to the routing capsule layer.

First, assume that the current input feature information is expressed as: \(X = [x_1 ,x_2 ,....,x_n ]\). Channel 1 adopts a typical convolutional layer and extracts local features through the convolution kernel, as shown in formula (4).

where (i, j) are the position coordinates in the output feature map, * is the convolution operation, m and n respectively represent the row index and column index in the convolution kernel K. Conv2D is used to compute each pixel value in the output feature map by multiplying and accumulating the convolution kernel element by element with the input image for extracting abstract features from the input. To reduce the number of parameters while expanding the receptive field, dilated convolution is introduced for the other channels. The calculation formula for its equivalent convolution kernel size is as follows:

where r is the expansion rate. The other channels use dilated convolution with varying dilation rates. Each kernel has random weights, which produce multiple feature maps from different kernels. This design achieves a wider receptive field and captures multi-scale features in the vibration signal.

The MCaps module has a multi-scale connection structure that feeds shallow features to routing capsule layers via convolutional layers, capsule network layers, and feature reuse. The features extracted from channel 1 are fused with the input by applying dilated convolution to channel 2.

where \(X_j^i\) represents the feature information output by the j-th layer in the i-th channel.

The input instantiation parameters are captured by the second convolution layer. The features extracted in channel 1 and channel 3 are fused with the dilated convolution output of channel 2 to enrich the fault features. The specific expression is as follows:

Ultimately, output with multi-level information can be offered fuller information for subsequent extraction. The Reshape operation converts the feature map into a vector form by transforming the output of the convolutional layer into capsule form. The convolutional product is reshaped into a capsule form with a predetermined length to capture essential characteristics in vectorized form.

In this paper, the activation function used is the rectified linear unit (ReLU), known for its simplicity and computational efficiency. ReLU sets negative inputs to zero and passes positive values unchanged [42]. This property introduces non-linearity and effectively addresses the vanishing gradient problem. Each channel includes a batch normalization layer (BN) positioned between the convolution and activation functions. Training can be substantially accelerated by using the BN, which makes all neuron input values in each layer adhere to a standard normal distribution with mean 0 and variance 1 [43]. Convolutions and capsule structures acquire multi-scale features, establishing interconnections between multiple layers to ensure seamless information propagation and interaction across layers.

Routingcaps with Bhattacharyya coefficient

In the realm of dynamic routing, it is conventional for routing weights to undergo adjustments during each forward propagation cycle. Excessive or erratic updates to the routing weights can perturb inter-capsule communication, impeding the model's ability to effectively capture and retain essential features and relationships. Essentially, a dynamic routing algorithm is a clustering algorithm, commonly utilizing distance to measure similarity. The similarity between vectors is measured by the vector inner product in the original dynamic routing algorithm, but by the Bhattacharyya coefficients in the routing capsule layer (Routingcaps), which weaken the effect of the capsule length. The Bhattacharyya coefficient is a probability distribution-based measure, which can consider the overlap between two probability distributions more comprehensively [44]. This serves to better illustrate the similarity between entities in entity representation, rendering it suitable for capsule representation. The model’s noise immunity and generalization ability are enhanced and the impact of capsule length is mitigated by the Bhattacharyya coefficient, an alternative to the vector inner product. The formula for updating the weights \({{\text{b}}}_{{\text{ij}}}\) of the improved dynamic routing algorithm is:

A multi-scale spatial–temporal capsule network for bearing fault diagnosis

The multi-scale spatial–temporal capsule network (MSCN) model is introduced in this study to further adapt to the pressing needs of the real bearing diagnostic industrial environment, as illustrated in Fig. 7. The pseudo-code for the improved network model is given by Algorithm 3-1.

Algorithm 3-1: MSCN

The following are the detailed steps:

-

Step 1: Set up a bearing fault monitoring device to collect fault signals.

-

Step 2: Classify raw vibration signals, normalize and label fault categories.

-

Step 3: Partition the data into test, and training sets.

-

Step 4: Transform 1D vibration signals into 2D matrices as described in “Spatial–temporal sequence encoding module” section.

-

Step 5: The MSCN model is trained using the training set, which enables it to modify its parameters in response to the given data. The validation set was used for model selection and hyperparameter tuning during training to avoid model overfitting.

-

Step 6: The test set is passed on into the trained MSCN model, and the model outputs the diagnostic results to assess the model's performance.

Results analysis and discussion

This section validates the effectiveness of the MSCN model and tests its diagnostic performance on the dataset. The data sets are Case Western Reserve University (CWRU) [45] and laboratory bearing fault [46] data sets.

Case 1: Case western reserve university (CWRU) rolling bearing data set

Description of the data set

Figure 8 illustrates the CWRU rolling bearing's data collection apparatus. A 1.5 KW (2 HP) motor, a torque sensor (decoder), and a power meter mounted on the driving end bearing seat make up the test platform. The acceleration sensor is used to gather vibration acceleration signals during operation [47]. In this experiment includes normal and three fault types of signals. The diameter of the fault bearing is 0.007 inches, 0.014 inches and 0.021 inches respectively, which makes a total of nine different fault types. Moreover, after adding four loads of 0 HP, 1 HP, 2 HP and 3 HP to the motor, the bearing operation under more complex operations can be realized. Ten distinct bearing operating states' time domain signals are shown in Fig. 9.

Parameter selection

This section provides a comprehensive overview of key parameters such as network structure, optimizer and learning rate of the MSCN model. As the basic framework for model construction, network structure directly affects the expression ability and learning potential of the model. Table 1 details the network structure parameters unique to the MSCN model. Choosing an optimizer is critical to maximizing model performance and ensuring stability. This article chooses the Adam optimizer with a learning rate of 0.001. The Adam optimizer is known for its ability to handle gradient-related challenges and is good at enhancing model generalization. Optimizers make a significant contribution to the training process through precise calibration of model parameters, ultimately improving efficiency and stability. The evaluation criterion chosen for assessing the model performance is accuracy. Accuracy measures the proportion of correctly classified instances among all instances in the dataset. It provides a comprehensive view of the model's classification performance across different classes or categories. MSCN is implemented using Python 3.6, TensorFlow 1.13 and Keras 2.2.4 on Windows 64-bit operating system. The host CPU core used is i5-8265U and the memory is 16 GB RAM.

The complexity of neural network model usually involves: number of parameters, number and width of layers, computational complexity, memory consumption and so on. The number of parameters and floating-point operations per second (FLOPS) are important indicators of model complexity. Table 2 lists the number of parameters and FLOPs of different models. The MSCN model is slightly higher than the CNN model in the number of parameters, but is equivalent to the CNN model in terms of computational load (FLOPs). Compared with DenseNet and ADFFCN, its FLOPs are significantly lower. It can be seen from Table 2 that the MSCN model can maintain a low computational load while having a relatively small number of parameters, which may make it a better choice in resource-constrained environments. Its performance in fault diagnosis experiments will be verified in the next section.

Sensitivity analysis

The experiments in this section aim to explore the impact of network hyperparameters on model performance, and evaluate the impact of these parameters on model training dynamics and performance evaluation indicators through different number of input samples. Training loss, validation loss, training accuracy, and validation accuracy for each combination of epochs and batch sizes were chosen as performance evaluation indicators to comprehensively assess the model's classification accuracy in fault diagnosis tasks and guide further optimization and improvement. The experimental design employed a fivefold cross-validation method combined with sensitivity analysis to investigate how various network hyperparameters (epochs and batch sizes) affect training dynamics and model performance. In the experiment, batch sizes of 32, 64, and 128 were chosen, and the number of epochs was set to 100, following established practices in deep learning research and preliminary experiments. Figure 10 displays the results of the sensitivity analysis, illustrating distinct trends in training loss and accuracy over epochs and batch sizes.

The analysis depicted in Fig. 10 reveals that a smaller batch size, such as 32, accelerates loss reduction and boosts accuracy during initial training stages. However, with increasing epochs, a larger batch size, like 128, achieves superior loss reduction and higher accuracy. Taking the loss curve as an example, all batch sizes exhibit decreasing trends with epochs, yet the batch size of 32 initially decreases most rapidly before stabilizing above the others. Conversely, batch sizes 64 and 128 show a more gradual decline but eventually reach lower loss values. Notably, larger batch sizes may necessitate more epochs for optimization. Based on the above analysis, a batch size of 64 is chosen because it demonstrates the best performance considering loss reduction speed, accuracy, and training efficiency. When the number of samples is small (e.g., 100), a smaller batch size (e.g., 32) exhibits lower training loss and higher training accuracy. As the number of samples increases (e.g., 500), a larger batch size (e.g., 128) can achieve better performance. As the amount of input increases, the model's performance improves. However, when the dataset is too large, it may increase training time and computational cost and may also lead to overfitting. Therefore, the dataset of each state is chosen to contain 300 samples, with 1024 sampling points in each sample. For each sample, the 1 × 1024 one-dimensional data is converted into a 32 × 32 two-dimensional feature matrix.

Ablation experiment

The following ablation experiments are designed to compare and assess the impact of various changes on the model classification effect in order to precisely assess the improvement effect of each section. The ablation experiment is divided into three parts: (1) CapsNet: traditional capsule network; (2) SSEM + CapsNet: based on the capsule network, a module is added for encoding input data; (3) SSEM + CapsNet + MCaps: Based on the capsule network, the SSEM module and multi-scale capsule layers are added, and the improved dynamic routing part is excluded; (4) MSCN. In this experiment, four data sets under varying loads are presented as experimental data. Under the conditions of no noise and different loads, the functions of each MSCN ablation model are evaluated, and the final comparison result of the average diagnostic accuracy of each ablation model is shown in Fig. 11 and Table 3.

In the context of the proposed MSCN for bearing fault diagnosis, experiments were conducted to assess the impact of three optimizations: Spatial–temporal Sequence Encoding Module (SSEM), Multi-scale Capsule layer (MCaps), and an improved routing algorithm based on Bhattacharyya coefficients. The results, as detailed in Fig. 11 and Table 3, demonstrate that the model's classification accuracy improves with the application of all three optimization procedures. The feature extraction part optimization has a more pronounced influences on model performance as it includes using sequence encoding to extract temporal information, using an attention mechanism to learn correlations and introducing dilated convolutions to learn multi-scale features.

Noise-free experimental analysis

This section examines the efficacy of MSCN under noise-free and no-load conditions. The network's capacity to grasp the inherent characteristics of the initial bearing vibration signal is assessed using the t-stochastic neighbor embedding (t-SNE) technique for two-dimensional visualization, as shown in Fig. 12. The t-SNE method transforms high-dimensional vibration signal attributes into a two-dimensional space, allowing for visualization based on the resulting two-dimensional data. Figure 12a displays the original data distribution, where colors seem intermingled. In contrast, Fig. 12b illustrates the distribution of vibration signals in a 2D space post model training. A distinct classification is apparent in the latter scenario. This improvement in classification performance underscores the robust feature extraction capabilities of the MSCN model, particularly when integrated with the MCaps. Utilizing MCaps effectively extracts features at different scales, enhancing the model's interpretation of the input data. Consequently, the MSCN model demonstrates exceptional diagnostic accuracy under noise-free conditions, underscoring its proficiency in comprehending and representing the intrinsic characteristics of the original vibration signal.

Analysis of noise experiment results

To evaluate the diagnostic effect of the model in complex environments, this section introduces Gaussian white noise signals with varying signal-to-noise ratios (SNR) to the bearing data set of CWRU, which provides an ideal testing environment. The proposed model is tested with noisy signals from − 6 dB to 6 dB. The performance of MSCN is verified by comparing it with CNN, ResNet [46], DenseNet, Multi-CNN, ResNet-LSTM [47], ADFFCN [30] and IMS-FACNN [48] model under 3 HP load conditions. The line chart in Fig. 13 illustrates the diagnostic accuracy of different models across varying SNR levels, enabling a more intuitive observation of their performance.

The diagnostic abilities of all algorithms notably improve with increasing SNR, as depicted in Fig. 13 and Table 4. Strong noise adversely affects the accuracy of all methods, with the CNN algorithm exhibiting the poorest performance at a signal-to-noise ratio of − 6 dB. Single-structure models demonstrate poor diagnostic performance due to their limited consideration of multi-scale feature extraction. Conversely, Multi-CNN and other hybrid-structure models perform significantly better than single-scale models. MSCN, with its unique integration of spatial and temporal aspects, excels in identifying fault features in environments with strong noise, achieving an accuracy of 84.1% and surpassing other algorithms. The algorithm's reliability substantially increases with noise reduction. At SNR = 0 dB, where the original signal power equals the noise, MSCN maintains the highest diagnostic accuracy at 90.8%. The improved dynamic routing algorithm, which evaluates the degree of overlap between capsule distributions, effectively enhances the model's robustness to noise, enabling more accurate fault identification.

Diagnostic experiment of test data under different loads

This section evaluates the fault diagnosis accuracy of MSCN under changing load conditions. The domain invariance of MSCN is verified by training on one dataset and testing on another dataset. Three datasets (1 HP, 2 HP, and 3 HP) representing different load conditions are introduced, denoted as dataset A, dataset B, and dataset C, respectively. The MSCN is compared with SVM, CNN, NSAE-LCN [20], DenseNet, ResNet-LSTM [47], and IMS-FACNN [48]. The results are compared and visualized in Fig. 14 and Table 5, where the horizontal axis represents load transformation. For example, A- > B means that the training data set is data set A, and data set B is used as test data. The vertical axis represents the model test accuracy.

Results presented in Fig. 14 and Table 5 demonstrate the superior fault diagnosis accuracy of the proposed MSCN model under various load conditions, compared to other models. High accuracy is a consistent achievement of MSCN across different load transformations, thanks to the use of a dynamic routing mechanism with Bhattacharyya coefficients in the Routing Capsule Layer. This mechanism aggregates relevant features, suppresses noise, and enhances the discriminative power of the model. It also reduces the impact of variations in capsule length, thereby improving the overall robustness and stability of the model. The domain invariance of MSCN ensures its effectiveness in real-world applications, particularly in industrial settings with fluctuating conditions. MSCN maintains high accuracy across different load conditions, which allows for timely fault diagnosis. This enhances operational efficiency, reduces maintenance costs, and minimizes disruptions to production processes.

Case 2: Laboratory bearing composite fault data set

Data set introduction

The laboratory data were obtained from the rolling bearings of the electric locomotive shown in Fig. 15 [47]. The specific parameters are shown in Table 6.

The sample information of this data set is shown in Table 7. There are three kinds of fault positions of this datasets: inner ring, outer ring and roller. There exist three distinct fault states, specifically damage, scratch, and mixed faults. This paper uses different fault data sets, including four composite faults, and four single-point faults and a normal signal. The time-domain waveforms in various states are shown in Fig. 16. Depending on the load, the signal is divided into four groups: A, B, C, D. In this section, the dataset for each state is chosen to contain 300 samples with 1024 sampling points in each sample. The hyperparameters batch size and epoch of this study were set to 32 and 100.

Analysis of noise-free experimental results

Under the noise-free condition of the locomotive bearing data set in the laboratory, the fault diagnosis accuracy under four different load conditions is compared with SVM, CNN, DenseNet, Multi-CNN, ResNet-LSTM [47], and ADFFCN [30] model to validate the viability of the MSCN. The specific diagnosis accuracy is shown in Fig. 17 and Table 8.

The noiseless experimental conditions provide an ideal environment for evaluating the performance of fault diagnosis models, allowing for a focused assessment of their capabilities in extracting and leveraging fault features from vibration signals. In this context, the accuracy of composite approaches outperforms single methods, like ResNet-LSTM, which achieves an accuracy of approximately 95%, as demonstrated in Fig. 17 and Table 8. MSCN consistently outperforms all other models with an average accuracy of 97.1%. MSCN incorporates a spatial–temporal sequence encoding module that enhances the model's ability to capture correlations between features across different positions and time steps. This enables comprehensive extraction of spatial–temporal features from the vibration signals, facilitating accurate fault diagnosis under various load conditions.

Performance analysis

This section adds Gaussian white noise with different signal-to-noise ratios (SNR) to the collected vibration signals, and verifies the performance of the proposed model through the average diagnostic accuracy of other models under the same load conditions. The comparison with other common models such as CNN, DenseNet, Multi-CNN, Resnet [46], Resnet-LSTM [47] and ADFFCN [30] is shown in Table 9 and Fig. 18.

As shown in Table 9 and Fig. 18, MSCN consistently outperforms other models across varying SNR levels. For instance, at − 6 dB SNR, MSCN achieves 84.1% accuracy, surpassing CNN and ResNet which achieve 63.1% and 52.5% accuracy respectively. Even at higher SNR levels, MSCN maintains its superiority, achieving 99.8% accuracy at 6 dB SNR, while ResNet-LSTM reaches only 86.5%. These consistent results underscore MSCN's robustness in accurately extracting fault features from noisy vibration signals. This can be attributed to MSCN's advanced spatial–temporal feature extraction capabilities, multi-scale representation learning, and robust dynamic routing mechanism. the comparative experimental results provide strong validation of MSCN's efficacy as a reliable and robust model for fault diagnosis, reaffirming its potential to significantly enhance diagnostic accuracy and reliability in practical applications.

The diagnostic performance of each model at the − 2 dB level is shown in Fig. 19. There are six data points in this image, representing different models. Box plots are used to display the data points, with the vertical line denoting the data range and the horizontal line representing the median. As can be seen from the figure, MSCN performs more steadily than the previous model. MSCN can extract potentially useful information from failure signals in the presence of noise. The incorporation of Bhattacharyya coefficient structures along with dilated convolutions in MSCN contributes significantly to its anti-noise performance. These structural enhancements enable MSCN to effectively mitigate the impact of noise, thereby improving its diagnostic accuracy and reliability in noisy environments.

Figure 20 presents the confusion matrix of MSCN under different SNR conditions. Algorithm classification performance can be assessed using the confusion matrix as a metric. It shows the classification results of the model on different categories in the form of a matrix. The rows represent the actual categories and the columns represent the categories predicted by the model. The diagonal elements of the matrix represent the accuracy of the model on each category. It is evident from Fig. 20 that identifying compound faults is more challenging than single faults, resulting in lower accuracy. The model exhibits lower diagnostic accuracy for fault types 1 and 2. With the increase in SNR, the multi-scale capsule structure enhances the network's recognition ability. These results reveal the superiority and stability of the proposed method in composite fault classification compared to other methods.

Conclusion

A Multi-scale Spatial–Temporal Capsule Network (MSCN) model is proposed based on the multi-scale features and time series features of bearing fault. The integration of a spatial–temporal coding module, a multi-scale capsule layer, and a routing capsule layer allows MSCN to capture spatial–temporal features effectively and extract multi-scale features from vibration signals. Validation experiments conducted on datasets from Case Western Reserve University and laboratory experiments demonstrate the robustness and adaptability of the MSCN model. MSCN achieves an average accuracy exceeding 97% in noise-free environments and maintains over 80% accuracy in various noisy environments. MSCN, compared to conventional CapsNet approaches, offers significant advantages. It captures multi-scale features effectively and facilitates spatiotemporal feature interaction, demonstrating superior performance in bearing fault diagnosis tasks. The MSCN model has good robustness in the face of complex noise environments and different load conditions, thereby ensuring its reliability and stability in fault-tolerant control. The integration of efficient algorithms and structures ensures computational tractability while delivering promising diagnostic outcomes. The MSCN model provides a robust and effective solution for intelligent fault diagnosis, showcasing advancements in methodology and computational feasibility. However, areas for improvement remain. Future research could focus on enhancing the denoising effects of the model, especially in cross-condition or cross-domain fault diagnosis scenarios. Exploration of advanced techniques for feature extraction and model optimization could further enhance the performance and applicability of the MSCN model in real-world applications.

Data availability

Data will be made available on request.

References

Hemati A, Shooshtari A (2023) Bearing failure analysis using vibration analysis and natural frequency excitation. J Fail Anal Prev 23(4):1431–1437. https://doi.org/10.1007/s11668-023-01700-0

Han T, Ma R, Zheng J (2021) Combination bidirectional long short-term memory and capsule network for rotating machinery fault diagnosis. Measurement 176:109208. https://doi.org/10.1016/j.measurement.2021.109208

Tao H, Shi H, Qiu J et al (2023) Planetary gearbox fault diagnosis based on FDKNN-DGAT with few labeled data. Meas Sci Technol 35(2):025036. https://doi.org/10.1088/1361-6501/ad0f6d

Li H, Liu T, Wu X et al (2020) A bearing fault diagnosis method based on enhanced singular value decomposition. IEEE Trans Ind Inform 17(5):3220–3230

Song X, Sun P, Song S et al (2023) Finite-time adaptive neural resilient DSC for fractional-order nonlinear large-scale systems against sensor-actuator faults. Nonlinear Dyn 111(13):12181–12196. https://doi.org/10.1007/s11071-023-08456-0

Stojanović V (2023) Fault-tolerant control of a hydraulic servo actuator via adaptive dynamic programming. Math Model Control 3(3):181–191

Wang R, Zhuang Z, Tao H et al (2023) Q-learning based fault estimation and fault tolerant iterative learning control for MIMO systems. ISA Trans 142:123–135. https://doi.org/10.1016/j.isatra.2023.07.043

Tao H, Zheng J, Wei J et al (2023) Repetitive process based indirect-type iterative learning control for batch processes with model uncertainty and input delay. J Process Control 132:103112. https://doi.org/10.1016/j.jprocont.2023.103112

Heo S, Lee JH (2018) Fault detection and classification using artificial neural networks. IFAC Pap Online 51(18):470–475. https://doi.org/10.1016/j.ifacol.2018.09.380

Li X, Yang Y, Pan H et al (2019) A novel deep stacking least squares support vector machine for rolling bearing fault diagnosis. Comput Ind 110:36–47. https://doi.org/10.1016/j.compind.2019.05.005

Zhang J, Yi S, Liang GUO et al (2020) A new bearing fault diagnosis method based on modified convolutional neural networks. Chin J Aeronaut 33(2):439–447. https://doi.org/10.1016/j.cja.2019.07.011

Wang H, Liu Z, Peng D et al (2022) Attention-guided joint learning CNN with noise robustness for bearing fault diagnosis and vibration signal denoising. ISA Trans 128(09):470–484. https://doi.org/10.1016/j.isatra.2021.11.028

Wang H, Wang H, Jiang G et al (2019) Early fault detection of wind turbines based on operational condition clustering and optimized deep belief network modeling. Energies 12(06):984. https://doi.org/10.3390/en12060984

An Z, Li S, Wang J et al (2020) A novel bearing intelligent fault diagnosis framework under time-varying working conditions using recurrent neural network. ISA Trans 100(05):155–170. https://doi.org/10.1016/j.isatra.2019.11.010

Chen X, Zhang B, Gao D (2021) Bearing fault diagnosis base on multi-scale CNN and LSTM model. J Intell Manuf 32:971–987. https://doi.org/10.1007/s10845-020-01600-2

Panigrahi S, Das J, Swarnkar T (2022) Capsule network based analysis of histopathological images of oral squamous cell carcinoma. J King Saud Univ Comput Inform Sci 34(7):4546–4553. https://doi.org/10.1016/j.jksuci.2020.11.003

Li DC, Zhang M, Kang TB et al (2022) Fault diagnosis of rotating machinery based on dual convolutional-capsule network (DC-CN). Measurement 187(1):110258. https://doi.org/10.1016/j.measurement.2021.110258

Zhu Z, Peng G, Chen Y et al (2019) A convolutional neural network based on a capsule network with strong generalization for bearing fault diagnosis. Neurocomputing 323:62–75. https://doi.org/10.1016/j.neucom.2018.09.050

Li L, Zhang M, Wang K (2020) A fault diagnostic scheme based on capsule network for rolling bearing under different rotational speeds. Sensors 20(7):1841. https://doi.org/10.3390/s20071841

Chen T, Wang Z, Yang X et al (2019) A deep capsule neural network with stochastic delta rule for bearing fault diagnosis on raw vibration signals. Measurement 148:106857. https://doi.org/10.1016/j.measurement.2019.106857

Liang Y, Li B, Jiao B (2021) A deep learning method for motor fault diagnosis based on a capsule network with gate-structure dilated convolutions. Neural Comput Appl 33:1401–1418. https://doi.org/10.1007/s00521-020-04999-0

Han B, Zhang H, Sun M et al (2021) A new bearing fault diagnosis method based on capsule network and Markov transition field/Gramian angular field. Sensors 21(22):7762. https://doi.org/10.3390/s21227762

Tao H, Qiu J, Chen Y et al (2023) Unsupervised cross-domain rolling bearing fault diagnosis based on time-frequency information fusion. J Franklin Inst 360(2):1454–1477. https://doi.org/10.1016/j.jfranklin.2022.11.004

Wang S, Zhao Q, Han Y et al (2023) Fault detection for process industries via temporal CapsNet encoder-assisted one-class classifier. IEEE Trans Instrum Meas 72:1–12

Qin Y, Yuen C, Shao Y et al (2022) Slow-varying dynamics-assisted temporal capsule network for machinery remaining useful life estimation. IEEE Trans Cybern 53(1):592–606

Wang Y, Cao G, Han J (2022) A combination of dilated self-attention capsule networks and bidirectional long-and short-term memory networks for vibration signal denoising. Machines 10(10):840. https://doi.org/10.3390/machines10100840

Zhao C, Huang X, Li Y et al (2021) A novel cap-LSTM model for remaining useful life prediction. IEEE Sens J 21(20):23498–23509

Wu K, Tao J, Yang D et al (2022) A rolling bearing fault diagnosis method based on enhanced integrated filter network. Machines 10(6):481. https://doi.org/10.3390/machines10060481

Sun Z, Yuan X, Fu X et al (2021) Multi-scale capsule attention network and joint distributed optimal transport for bearing fault diagnosis under different working loads. Sensors 21(19):6696. https://doi.org/10.3390/s21196696

Zhang Q, Li J, Ding W et al (2023) Mechanical fault intelligent diagnosis using attention-based dual-scale feature fusion capsule network. Measurement 207:112345. https://doi.org/10.1016/j.measurement.2022.112345

Wu K, Tao J, Yang D et al (2022) Rolling bearing fault diagnosis method based on multiple efficient channel attention capsule network. In: International conference on adaptive and intelligent systems, vol 13338. Springer, Cham, pp 357–370. https://doi.org/10.1007/978-3-031-06794-5_29

Wang Y, Ning D, Feng S (2020) A novel capsule network based on wide convolution and multi-scale convolution for fault diagnosis. Appl Sci 10(10):3659. https://doi.org/10.3390/app10103659

Afshar P, Heidarian S, Naderkhani F et al (2020) Covid-caps: a capsule network-based framework for identification of covid-19 cases from X-ray images. Pattern Recogn Lett 138:638–643. https://doi.org/10.1016/j.patrec.2020.09.010

Sun L, Zhao C, Huang X et al (2023) Cutting tool remaining useful life prediction based on robust empirical mode decomposition and Capsule-BiLSTM network. Proc Inst Mech Eng C J Mech Eng Sci 237(14):3308–3323. https://doi.org/10.1177/09544062221142197

Long J, Qin Y, Yang Z et al (2023) Discriminative feature learning using a multiscale convolutional capsule network from attitude data for fault diagnosis of industrial robots. Mech Syst Signal Process 182:109569. https://doi.org/10.1016/j.ymssp.2022.109569

Jie F, Nie Q, Li M et al (2021) Atrous spatial pyramid convolution for object detection with encoder-decoder. Neurocomputing 464:107–118. https://doi.org/10.1016/j.neucom.2021.07.064

Ma R, Han T, Lei W (2023) Cross-domain meta learning fault diagnosis based on multi-scale dilated convolution and adaptive relation module. Knowl Based Syst 261:110175. https://doi.org/10.1016/j.knosys.2022.110175

Zhu L, Geng X, Li Z et al (2021) Improving YOLOv5 with attention mechanism for detecting boulders from planetary images. Remote Sens 13(18):3776. https://doi.org/10.3390/rs13183776

Ding Y, Jia M, Miao Q et al (2022) A novel time–frequency transformer based on self-attention mechanism and its application in fault diagnosis of rolling bearings. Mech Syst Signal Process 168(4):108616. https://doi.org/10.1016/j.ymssp.2021.108616

Daubechies I, DeVore R, Foucart S et al (2022) Nonlinear approximation and (deep) ReLU networks. Constr Approx 55(1):127–172. https://doi.org/10.1007/s00365-021-09548-z

Wang SH, Muhammad K, Hong J et al (2020) Alcoholism identification via convolutional neural network based on parametric ReLU, dropout, and batch normalization. Neural Comput Appl 32(12):665–680. https://doi.org/10.1007/s00521-018-3924-0

Lu J, Yue J, Zhu L et al (2020) Variational mode decomposition denoising combined with improved Bhattacharyya distance. Measurement 151(2):107283. https://doi.org/10.1016/j.measurement.2019.107283

Wei H, Zhang Q, Shang M et al (2021) Extreme learning machine-based classifier for fault diagnosis of rotating machinery using a residual network and continuous wavelet transform. Measurement 183(10):109864. https://doi.org/10.1016/j.measurement.2021.109864

Yang B, Lei Y, Jia F et al (2019) An intelligent fault diagnosis approach based on transfer learning from laboratory bearings to locomotive bearings. Mech Syst Signal Process 122(5):692–706. https://doi.org/10.1016/j.ymssp.2018.12.051

Jais IKM, Ismail AR, Nisa SQ (2019) Adam optimization algorithm for wide and deep neural network. Knowl Eng Data Sci 2(1):41–46. https://doi.org/10.17977/um018v2i12019p41-46

Niyongabo J, Zhang Y, Ndikumagenge J (2022) Bearing fault detection and diagnosis based on densely connected convolutional networks. Acta Mech Autom 16(2):130–135. https://doi.org/10.2478/ama-2022-0017

Wang Y, Cheng L (2020) A combination of residual and long–short-term memory networks for bearing fault diagnosis based on time-series model analysis. Meas Sci Technol 32(1):015904. https://doi.org/10.1088/1361-6501/abaa1e

Xu Z, Li C, Yang Y (2021) Fault diagnosis of rolling bearings using an improved multi-scale convolutional neural network with feature attention mechanism. ISA Trans 110(4):379–393. https://doi.org/10.1016/j.isatra.2020.10.054

Acknowledgements

This paper is supported by the Key Research and Development Program of Shaanxi Province of China (2024GX-YBXM-066, 2022SF-259), and Xi'an Science and Technology Plan Project (22GXFW0128).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, Y., Chen, L. A multi-scale spatial–temporal capsule network based on sequence encoding for bearing fault diagnosis. Complex Intell. Syst. 10, 6189–6212 (2024). https://doi.org/10.1007/s40747-024-01462-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40747-024-01462-8