Abstract

Lattice structures created using additive manufacturing inevitably contain inherent defects that seriously affect their mechanical properties. Predicting and analysing the effect of defects on the maximum stress is important for improving lattice structure design and processing. Existing studies mainly use the finite element method to calculate the constitutive behaviour of the lattice structure; however, as the types and number of defects increase, modelling becomes more difficult and calculation accuracy decreases. We established a data-driven extreme gradient boosting (XGBoost) model with hyperparameter optimisation to predict the maximum stress of additively manufactured lattice structures. Four defect-related characteristics that affect the mechanical properties were used as the input parameters of the model: the number of layers, thick-dominated struts (oversizing), thin-dominated struts (undersizing), and bend-dominated struts (waviness). The hyperparameters of the basic XGBoost model were optimised according to the diversity of the inherent defect characteristics of the lattice structure: parameters previously selected by experience were instead tuned using the Gaussian process method in Bayesian optimisation to improve the model’s generalisation ability. The datasets included the type and number of defects obtained via computed tomography and the results of finite element models with the corresponding defects implanted. The root mean square error (RMSE) and coefficient of determination (\(R^2\)) of the maximum stress prediction were 17.40 and 0.82, respectively, indicating the effectiveness of the proposed model. Furthermore, we discussed the influence of the four types of input characteristics on the maximum stress, among which the thick-dominated strut defect had the greatest influence.

Introduction

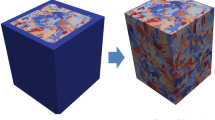

Metal lattice structures produced by additive manufacturing (AM) have attracted extensive attention owing to their advantages such as light weight, complex structure, and integrated structure function [1,2,3]. AM technology creates geometrically complex parts by connecting materials layer-by-layer with 3D model data drawn by computer-aided design (CAD) [4]. Selective laser melting (SLM) is widely used in the preparation of metal lattice structures owing to its short preparation cycle and wide application range [5]. SLM selectively melts a metal powder by scanning it with a laser [4]. Kagome, cone, octagonal truss, the body-centered cubic (BCC), and composite lattice structures are commonly used in existing research [6, 7]. AM can be used to fabricate these structures using stainless steel [8], titanium [9], and aluminium [10]. Inherent defects occur during manufacturing processes using additive manufacturing technology [11]. Furthermore, the three main types of inherent defects in metal lattice structures include oversizing, undersizing, and waviness [12,13,14,15]. Such defects affect the mechanical properties and failure response, resulting in the unavailability of certain special functions [12, 16,17,18]. Therefore, the relationship between the inherent defects and mechanical properties of lattice structures is crucial.

Most studies on lattice structure defects and mechanical properties use computed tomography (CT) scanning to classify defects and then build a finite element (FE) model for further analysis. Campoli et al. [18] proposed a method to transform CT-reconstructed struts into an FE model. Lei et al. [7] equivalently incorporated inherent defects (oversizing and waviness) into an FE model and performed mechanical performance simulations; they concluded that the FE model with defects was closer to the actual mechanical experiment. Liu et al. [12] investigated the elastic and failure responses of lattices with inherent defects using nonlinear FE analysis of detailed imperfect models of regular octet and rhombicuboctahedron structures, and identified strut oversizing as an important parameter affecting the lattice structure. Liu et al. [12] also proposed a defect-count measure for a two-dimensional honeycomb structure and verified it in a three-dimensional metal lattice structure, which provides a basis for future research. Barnes et al. [6] investigated the mechanical properties of lattice structures with hybrid topologies using an FE model. The majority of studies on the mechanical properties of lattice structures are thus based on FE models, and they indicate that defects influence the structural quality. However, building the corresponding FE model becomes limiting when the number of defect types or the number of defects in the lattice structure increases [19, 20]: modelling becomes more difficult and accuracy decreases. Consequently, the way in which various defects influence performance is still not fully understood, and further in-depth theoretical and methodological research is necessary.

Performance prediction for lattice structures has mainly focused on fatigue life [21, 22], and few studies address the prediction of maximum stress in metal lattice structures, although the maximum stress is a good reflection of the load-bearing capacity of a structure. Because defects are random and diverse, data-driven prediction methods that account for the distribution pattern and different characteristics of defects require in-depth research. Machine learning (ML) provides a new approach for lattice structure performance prediction. In this study, a fast prediction model was therefore established with ML from the known measured data of the samples, and the maximum stress of the lattice structure was predicted with full consideration of the random distribution pattern and diverse characteristics of defects. Compared with the FE model, this model is closer to the actual condition of the sample, better suited to the randomness and diversity of lattice structure defects, and generalises better.

ML is a type of artificial intelligence that relies on a set of algorithms that learn from data without being explicitly programmed [23]. Jin et al. [24] summarised the application of machine learning in AM in detail; the main applications are geometric design, process parameter configuration, and in-situ anomaly detection [25,26,27]. By contrast, few studies address the prediction of maximum stress in metal lattice structures, even though using the maximum stress as the target can simplify the prediction model when combined with machine learning. Malakar et al. [28] designed a hierarchical feature selection (HFS) model based on a genetic algorithm to optimise the local and global features extracted from each handwritten image. Bacanin et al. [29] proposed an improved version of the firefly algorithm, which corrects the deficiencies of the original algorithm through explicit exploration mechanisms and a chaotic local search strategy, and verified it on convolutional neural networks, a subdomain of deep learning. They used five standard benchmark datasets from image processing: MNIST, Fashion-MNIST, Semeion, USPS, and CIFAR-10. These studies belong to a novel and promising research field, namely hybrid approaches between meta-heuristics and machine learning, which successfully combines machine learning with swarm intelligence methods and has proven capable of obtaining excellent results in different fields.

Based on the aforementioned research, a data-driven XGBoost-BGP maximum stress prediction model is proposed in this study. We used a Bayesian hyperparameter optimisation method to tune the hyperparameters and to further improve the prediction and generalisation abilities of the model. We also analysed the relationship between the four input parameters and the maximum stress. The main contributions of this study are as follows:

- We proposed a data-driven prediction model for AM lattice structures with inherent defects based on an improved XGBoost.

- We used a Gaussian process to optimise the hyperparameters of the XGBoost model instead of selecting them based on experience.

- We presented the relationship between the four types of input parameters of the model and the maximum stress prediction results.

The remainder of this paper is organised as follows: “Related works” introduces machine learning research on the prediction of lattice structures. “Methodology” introduces the prediction model used in this study. The analysis and findings of the experiment are presented in “Experimental results and analysis”. The discussions are presented in “Discussion”. Conclusions and future work are discussed in “Conclusion and future work”.

Related works

Machine learning has advanced and is now used in various sectors [30]. Nasiri and Khosravani [31] reviewed the use of ML to forecast the fracture and mechanical behaviour of AM parts, including its use in the characterisation of polymers and AM components. Their analysis highlights the constraints, difficulties, and potential of commercial ML applications in AM, and considers the advantages of ML for predicting mechanical properties, optimising AM parameters, and assessing 3D-printed objects. Jin et al. [32] presented a three-layer neural network to predict the mechanical characteristics of an ultra-fine-grained Fe-C alloy. Experiments on samples prepared by metal AM often have to be repeated, which is time-consuming and expensive. If the sample is simulated instead, the simplifying assumptions made during the simulation may not match the defects observed experimentally, because the inherent defect characteristics of the lattice structure are complex. Therefore, predicting the mechanical properties of metal lattice structural parts fabricated using AM is challenging. Many studies have demonstrated the feasibility of ML methods for addressing process optimisation challenges in metal AM. However, most of the existing ML-based literature on metal lattice structures concerns fatigue life prediction, and there are few studies on the prediction of maximum stress.

Zhang et al. [21] proposed a fatigue process assessment method based on an adaptive network fuzzy inference system. A high-cycle fatigue life was successfully predicted using the fatigue data of 139 SS316L components from the same SLM machine. However, when they used data from the published literature for evaluation, the results were less than ideal. Therefore, the composition of the data during model training is important for the generalisation ability of the model. Chen and Liu [22] proposed using a probabilistic physics-guided neural network to study the effects of different parameters on probabilistic fatigue life. However, the initial model parameters were not selected.

Taking the inherent defects of metal BCC prepared by AM as the research object, a data-driven XGBoost-BGP maximum stress prediction model is proposed. The model contained four parameters (number of layers, thick-dominated struts, thin-dominated struts, and bend-dominated struts) as the input and one structural feature (maximum stress) as the output. We used a Bayesian hyperparameter optimisation method to optimise the hyperparameters of the model to further improve the prediction and generalisation abilities of the model. The experimental results show that the proposed prediction model can accurately predict the maximum stress of structural samples containing defects. The analysis results of the relationship between the four input parameters and the maximum stress show that the maximum stress of the lattice structure specimen is most affected by the thick-dominated strut.

Methodology

The maximum stress prediction model proposed in this paper is mainly divided into two parts: the construction of the dataset and the training of the XGBoost-BGP model, as shown in Fig. 1.

In the first part, defects were counted, and the dataset was constructed as illustrated in Fig. 2 and Table 1. The dataset’s creation can be divided into four steps: scanning the samples, segmentation of the scanned images according to the edge detection method, defect detection, and defect statistics. The details are presented in “Dataset”.

The second part is the training of the XGBoost-BGP maximum stress model, which is divided into three steps.

The first step is to initialise the parameters of the XGBoost model. The XGBoost model is described in detail in “XGBoost model”. The second step is to define the Bayesian optimisation function and determine the hyperparameter search space, as described in “Bayesian hyperparameter optimization method”. The third step is to maximise the acquisition function of the Bayesian optimisation using a Gaussian process, with 5-fold cross-validation applied during this process. The Gaussian process method is described in “Gaussian process”, and the acquisition function is described in “Acquisition function”. The root mean square error (RMSE) is defined in “Evaluation measures”.

Dataset

The data used in this study consisted of 115 FE models. The type and number of inherent defects in each model were counted from the models created using the CT sections. The statistics included the number of layers and the numbers of thick-dominated, thin-dominated, and bend-dominated struts. A stepwise flowchart of the automated metrology and analysis is shown in Fig. 2. In step 1, the scanning data of the part obtained by the CT scanner are subjected to noise filtering and thresholding to obtain a binary image of the lattice structure. In step 2, strut edges are identified using Canny edge detection, and the image segment of each strut is extracted. In step 3, the extracted image segment of each strut is compared with the standard strut image to determine its defect type. In step 4, the number of defects of each type is counted according to the classification in step 3.

FE models were established for the mechanical performance simulation based on the above statistics, and the maximum stresses were taken as the label of the dataset. The metrics chosen as features are listed in Table 1.

Table 2 lists the data statistics. Figure 3 shows a histogram of the frequency distribution and a quantile-quantile (Q-Q) plot of the maximum stress. As seen in the figure, the maximum stress is approximately normally distributed, which is conducive to the training of machine learning models. Figure 4 shows box-and-whisker plots of the features. There are outliers in the dataset; however, they were retained during training to improve the robustness of the model. In addition, Fig. 5 shows the correlation matrix.

XGBoost model

Chen and Guestrin [33] proposed extreme gradient boosting (XGBoost), which is an improved machine learning method based on tree boosting with a strong learning ability [34,35,36]. XGBoost employs the second derivative (Hessian) to determine the direction and amount of the greatest descent more accurately than simply calculating and following the gradient [37, 38]. A range of regularisation techniques are also supported by XGBoost to improve model generalisation [39].

The dataset \(D\) in this paper consists of \(n\) samples, \(D = \{(F_i, s_i)\},\ i = 1, 2, \ldots, n\), where \(F_i\) is the defect feature vector of the input and \(s_i\) is the maximum stress.

In this paper, XGBoost is a model consisting of \(K\) regression trees, and \(\hat{s}_i\) is the sum of the scores predicted by the \(K\) trees. The formula is as follows:

\[ \hat{s}_i = \sum_{k=1}^{K} f_k(F_i), \quad f_k \in H \tag{1} \]

where \(f_k(F_i)\) is the prediction score of the \(k\)-th regression tree and \(H\) is the hypothesis space of \(f_k\):

\[ H = \{ f(F) = \nu_{I(F)} \} \tag{2} \]

where \(\nu \) is the leaf score, one of the parameters used to measure the complexity of the model, and \(I(F)\) is the leaf node to which sample \(F\) is assigned. Equation (3) shows the prediction at the \(t\)-th iteration:

\[ \hat{s}_i^{(t)} = \sum_{k=1}^{t} f_k(F_i) = \hat{s}_i^{(t-1)} + f_t(F_i) \tag{3} \]
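As a minimal illustration of the additive prediction described above (a sketch, not the authors' implementation), the code below sums the scores of a few depth-1 trees and shows that the prediction at iteration t equals the prediction at iteration t-1 plus the score of the t-th tree. The helper names, the feature order, and all numeric values are hypothetical.

```python
from typing import Callable, List, Optional, Sequence

# A "tree" is modelled as any function mapping a feature vector to a leaf score.
Tree = Callable[[Sequence[float]], float]


def make_stump(feature: int, threshold: float, left: float, right: float) -> Tree:
    """Depth-1 regression tree: `left` if x[feature] < threshold, else `right`."""
    def stump(x: Sequence[float]) -> float:
        return left if x[feature] < threshold else right
    return stump


def predict(trees: List[Tree], x: Sequence[float], t: Optional[int] = None) -> float:
    """Sum the scores of the first t trees (all trees when t is None)."""
    used = trees if t is None else trees[:t]
    return sum(tree(x) for tree in used)


# Hypothetical feature order: [layers, oversize, undersizing, waviness]
trees = [
    make_stump(1, 10.0, 100.0, 140.0),  # splits on the oversize count
    make_stump(2, 5.0, 8.0, -6.0),      # splits on the undersizing count
    make_stump(3, 3.0, 2.0, -4.0),      # splits on the waviness count
]
sample = [4.0, 12.0, 2.0, 6.0]

after_two = predict(trees, sample, t=2)    # prediction after two boosting rounds
after_three = predict(trees, sample, t=3)  # previous prediction plus the third tree
```

Each boosting round only adds one tree's score to the running prediction, which is why the objective in the next step can be written in terms of the new tree alone.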

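In this paper, the objective function of the maximum stress prediction model is defined as follows:

\[ \mathrm{Obj}^{(t)} = \sum_{i=1}^{n} L\big(s_i, \hat{s}_i^{(t-1)} + f_t(F_i)\big) + \varOmega (f_t) \tag{4} \]

where \(L\) is the loss function and \(\varOmega (f_t)\) is the model’s complexity:

\[ \varOmega (f_t) = \gamma T + \frac{1}{2} \lambda \sum_{j=1}^{T} \nu_j^2 \tag{5} \]

where \(\gamma \) and \(\lambda \) are hyperparameters, also known as the coefficients of the penalty term, \(T\) represents the total number of leaf nodes, and \(\nu_j\) is the score of the \(j\)-th leaf.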
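The role of the two penalty coefficients can be sketched numerically. The snippet below assumes the standard XGBoost regularisation term, \(\gamma T + \frac{1}{2}\lambda \sum_j \nu_j^2\); the function name and the leaf scores are hypothetical.

```python
from typing import Sequence


def complexity(leaf_scores: Sequence[float], gamma: float, lam: float) -> float:
    """Penalty gamma * T + 0.5 * lambda * sum(nu_j ** 2), with T = number of leaves."""
    n_leaves = len(leaf_scores)
    return gamma * n_leaves + 0.5 * lam * sum(v * v for v in leaf_scores)


# Hypothetical trees: more leaves and larger leaf scores give a larger penalty.
small_tree = complexity([1.0, -1.0], gamma=0.5, lam=1.0)
large_tree = complexity([1.0, -1.0, 2.0, -2.0], gamma=0.5, lam=1.0)
```

Here \(\gamma \) charges a fixed cost per leaf and \(\lambda \) shrinks the leaf scores, so both discourage trees that fit the training samples too closely.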

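To handle Eq. (4) without fixing a specific loss function, a second-order Taylor expansion of the loss around \(\hat{s}_i^{(t-1)}\) is used. The simplified formula is as follows:

\[ \mathrm{Obj}^{(t)} \simeq \sum_{i=1}^{n} \Big[ L\big(s_i, \hat{s}_i^{(t-1)}\big) + g_i f_t(F_i) + \frac{1}{2} h_i f_t^2(F_i) \Big] + \varOmega (f_t) \]

where \(g_i = \partial L(s_i, \hat{s}_i^{(t-1)}) / \partial \hat{s}_i^{(t-1)}\) and \(h_i = \partial ^2 L(s_i, \hat{s}_i^{(t-1)}) / \partial (\hat{s}_i^{(t-1)})^2\) are the first and second derivatives of the loss.

Finally, dropping the constant term and grouping the samples by leaf gives the objective function of the prediction model:

\[ \mathrm{Obj}^{(t)} = \sum_{j=1}^{T} \Big[ a_j \nu_j + \frac{1}{2} (b_j + \lambda ) \nu_j^2 \Big] + \gamma T, \quad a_j = \sum_{i:\, I(F_i) = j} g_i, \quad b_j = \sum_{i:\, I(F_i) = j} h_i \]

which is minimised by \(\nu_j^{*} = -a_j / (b_j + \lambda )\), giving \(\mathrm{Obj}^{*} = -\frac{1}{2} \sum_{j=1}^{T} a_j^2 / (b_j + \lambda ) + \gamma T\).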
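The second-order simplification can be made concrete for a single leaf. The sketch below assumes a squared-error loss \(L = \frac{1}{2}(s - \hat{s})^2\), which is an illustrative choice rather than one stated in this paper, and uses the standard XGBoost closed form for the optimal leaf score, \(-\sum g_i / (\sum h_i + \lambda )\). All names and values are hypothetical.

```python
from typing import List, Sequence, Tuple


def grad_hess_squared_loss(
    s_true: Sequence[float], s_pred: Sequence[float]
) -> Tuple[List[float], List[float]]:
    """First and second derivatives of 0.5 * (s - s_hat)^2 with respect to s_hat."""
    g = [p - s for s, p in zip(s_true, s_pred)]
    h = [1.0 for _ in s_true]
    return g, h


def leaf_score(g: Sequence[float], h: Sequence[float], lam: float) -> float:
    """Optimal score of a leaf containing the samples with derivatives g, h."""
    return -sum(g) / (sum(h) + lam)


def leaf_objective(g: Sequence[float], h: Sequence[float], lam: float) -> float:
    """Contribution of that leaf to the minimised objective (without the gamma term)."""
    return -0.5 * sum(g) ** 2 / (sum(h) + lam)


# Hypothetical leaf: three samples, all currently predicted as 110.
s_true = [100.0, 120.0, 140.0]
s_pred = [110.0, 110.0, 110.0]
g, h = grad_hess_squared_loss(s_true, s_pred)
best_score = leaf_score(g, h, lam=1.0)
best_objective = leaf_objective(g, h, lam=1.0)
```

With \(\lambda = 0\) the leaf score would be the mean residual (10.0 here); the penalty shrinks it to 7.5, which is the regularising effect of \(\lambda \).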

The prediction results and generalisation ability of XGBoost’s prediction model are affected by model hyperparameters [33]. Table 3 shows the range of hyperparameters to be optimised for the XGBoost-BGP prediction model.

Bayesian hyperparameter optimization method

The hyperparameter-tuning problem of the XGBoost prediction model cannot be solved using traditional optimisation methods [40]. However, Bayesian optimisation methods can effectively solve these problems. The Bayesian optimisation process is illustrated in Fig. 6.

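Equation (10) states the goal of Bayesian optimisation, which is to find the point in the search space at which the objective function \(f\) attains its maximum:

\[ \textbf{x}^{*} = \mathop {\arg \max }_{\textbf{x} \in M} f(\textbf{x}) \tag{10} \]

where \(M\) represents the search space of \(\textbf{x}\).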

The principle of Bayesian optimisation (BO) is to infer the posterior information of the function from its prior distribution and the information at the sample points. Subsequently, the optimum of the function is sought using the posterior information and a selection criterion, known as the acquisition function (AC). Because the hyperparameter search space in this study is continuous, a Gaussian process (GP) is used to obtain the posterior information.

Gaussian process

The prior distribution of the Bayesian optimisation in this study is a GP, which is expressed as follows:

\[ f(\textbf{z}) \sim \mathcal {GP}\big(\mu (\textbf{z}),\ c(\textbf{z}, \textbf{z}')\big) \]

where the mean function is \(\mu (\textbf{z})=0\) and the covariance function is \(c(\textbf{z}, \textbf{z}') = \exp (-\frac{1}{2\theta }\Vert \textbf{z} - \textbf{z}'\Vert ^2)\), in which \(\theta \) is the kernel width parameter and \(\textbf{z}\) and \(\textbf{z}'\) represent samples.
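The covariance function above can be written directly in code. This is a minimal sketch in which the function name and the sample points are hypothetical; it evaluates \(\exp (-\Vert \textbf{z} - \textbf{z}'\Vert ^2 / (2\theta ))\) for two hyperparameter vectors.

```python
import math
from typing import Sequence


def sq_exp_kernel(z: Sequence[float], z_prime: Sequence[float], theta: float) -> float:
    """Squared-exponential covariance exp(-||z - z'||^2 / (2 * theta))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(z, z_prime))
    return math.exp(-sq_dist / (2.0 * theta))


same_point = sq_exp_kernel([0.1, 6.0], [0.1, 6.0], theta=1.0)  # identical inputs
near = sq_exp_kernel([0.0, 0.0], [1.0, 1.0], theta=1.0)        # squared distance 2
far = sq_exp_kernel([0.0, 0.0], [3.0, 3.0], theta=1.0)         # squared distance 18
```

The covariance is 1 for identical points and decays with distance, so hyperparameter settings that are close together are assumed to give similar model performance; a larger \(\theta \) makes this decay slower.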

The posterior distribution of \(f(\textbf{z})\) is obtained in two steps. The first step is to form the training set \(S_{1:i-1} = \{(\textbf{z}_j, f(\textbf{z}_j))\}_{j=1}^{i-1}\), which consists of \(i-1\) observations.

The values of \(f\) at these points follow a multivariate normal distribution \(\textbf{f}_{1:i-1} \sim \mathcal {N}(\textbf{0}, \textbf{C})\), where

\[ \textbf{C} = \begin{bmatrix} c(\textbf{z}_1, \textbf{z}_1) & \cdots & c(\textbf{z}_1, \textbf{z}_{i-1}) \\ \vdots & \ddots & \vdots \\ c(\textbf{z}_{i-1}, \textbf{z}_1) & \cdots & c(\textbf{z}_{i-1}, \textbf{z}_{i-1}) \end{bmatrix} \]

The second step is to find the function value \(f_i\) at the new sampling point \(\textbf{z}_i\). Under the Gaussian process assumption, \([\textbf{f}_{1:i-1}\ f_{i}]^\textrm{T}\) still follows an \(i\)-dimensional normal distribution:

\[ \begin{bmatrix} \textbf{f}_{1:i-1} \\ f_i \end{bmatrix} \sim \mathcal {N}\left( \textbf{0}, \begin{bmatrix} \textbf{C} & \textbf{c} \\ \textbf{c}^\textrm{T} & c(\textbf{z}_i, \textbf{z}_i) \end{bmatrix} \right) \]

where \(\textbf{f}_{1:i-1} = [f_1, f_2, \cdots , f_{i-1}]^\textrm{T}\), \(\textbf{c} = [c(\textbf{z}_i, \textbf{z}_1)\ c(\textbf{z}_i, \textbf{z}_2)\ \cdots \ c(\textbf{z}_i, \textbf{z}_{i-1})]^\textrm{T}\), and \(f_i\) follows a one-dimensional normal distribution, i.e. \(f_i \sim \mathcal {N}(\mu _{i}, \sigma _i^2)\). It follows from the properties of the joint normal distribution that \(\mu _i(\textbf{z}_i)=\textbf{c}^\textrm{T}\textbf{C}^{-1}\textbf{f}_{1:i-1}\) and \(\sigma _i^2(\textbf{z}_i)=c(\textbf{z}_i, \textbf{z}_i)-\textbf{c}^\textrm{T}\textbf{C}^{-1}\textbf{c}\).
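The posterior mean and variance given above can be checked with a small self-contained sketch. It uses only the Python standard library; the linear solver, the small diagonal jitter added for numerical stability, the kernel width, and the observation values are all assumptions made for illustration and are not taken from this study.

```python
import math
from typing import List, Sequence, Tuple


def kernel(a: Sequence[float], b: Sequence[float], theta: float = 1.0) -> float:
    """Squared-exponential covariance exp(-||a - b||^2 / (2 * theta))."""
    return math.exp(-sum((x - y) ** 2 for x, y in zip(a, b)) / (2.0 * theta))


def solve(A: List[List[float]], b: Sequence[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def gp_posterior(
    Z: List[Sequence[float]], f: Sequence[float], z_new: Sequence[float],
    theta: float = 1.0, jitter: float = 1e-10,
) -> Tuple[float, float]:
    """Posterior mean c^T C^-1 f and variance c(z,z) - c^T C^-1 c at z_new."""
    n = len(Z)
    C = [[kernel(Z[a], Z[b], theta) + (jitter if a == b else 0.0)
          for b in range(n)] for a in range(n)]
    c = [kernel(z_new, Z[a], theta) for a in range(n)]
    mean = sum(ci * wi for ci, wi in zip(c, solve(C, f)))
    var = kernel(z_new, z_new, theta) - sum(ci * vi for ci, vi in zip(c, solve(C, c)))
    return mean, max(var, 0.0)


# Hypothetical one-dimensional observations of the objective.
Z = [[0.0], [1.0], [2.0]]
f = [1.0, 2.0, 0.5]
mean_at_obs, var_at_obs = gp_posterior(Z, f, [1.0])  # at an observed point
mean_far, var_far = gp_posterior(Z, f, [50.0])       # far from all observations
```

At an observed point the posterior reproduces the observed value with almost no variance, while far from the data it returns to the zero prior mean with unit variance. This contrast is what the acquisition function uses to balance exploitation against exploration.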

Acquisition function

As mentioned above, the acquisition function is computed from the GP posterior and is used to select the next point at which to evaluate the function [41]. The Expected Improvement (EI) acquisition function was used in this study because of its utility and simplicity. EI is an acquisition function based on improvement criteria: it estimates the improvement over the current best value that can be expected from evaluating a given point. The EI formula is as follows:

\[ EI(\textbf{y}) = \begin{cases} \big(\mu (\textbf{y}) - f(\textbf{y}^+)\big)\,\Phi (Q) + \sigma (\textbf{y})\,\phi (Q), & \sigma (\textbf{y}) > 0 \\ 0, & \sigma (\textbf{y}) = 0 \end{cases} \]

where \(Q = \frac{\mu (\textbf{y}) - f(\textbf{y}^+)}{\sigma (\textbf{y})}\), \(f(\textbf{y}^+)\) is the best value observed so far, \(\mu (\textbf{y})\) and \(\sigma ^2(\textbf{y})\) are the mean and variance of the GP posterior at \(\textbf{y}\), and \(\Phi \) and \(\phi \) are the cumulative distribution function and probability density function of the standard normal distribution.
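A minimal sketch of the EI computation follows, assuming the standard closed form for a maximisation problem, \((\mu - f^+)\Phi (Q) + \sigma \phi (Q)\), with EI set to zero where the posterior standard deviation is zero. The function names and input values are hypothetical; when the quantity being tuned is an error such as the RMSE, one common convention (assumed here, not stated in the text) is to maximise its negative.

```python
import math


def norm_pdf(q: float) -> float:
    """Standard normal probability density."""
    return math.exp(-0.5 * q * q) / math.sqrt(2.0 * math.pi)


def norm_cdf(q: float) -> float:
    """Standard normal cumulative distribution."""
    return 0.5 * (1.0 + math.erf(q / math.sqrt(2.0)))


def expected_improvement(mu: float, sigma: float, f_best: float) -> float:
    """Expected improvement over f_best for a point with posterior N(mu, sigma^2)."""
    if sigma <= 0.0:
        return 0.0  # no uncertainty: nothing to gain from re-evaluating
    q = (mu - f_best) / sigma
    return (mu - f_best) * norm_cdf(q) + sigma * norm_pdf(q)


ei_certain = expected_improvement(mu=1.0, sigma=0.0, f_best=0.5)
ei_at_best = expected_improvement(mu=0.5, sigma=1.0, f_best=0.5)
ei_promising = expected_improvement(mu=1.5, sigma=1.0, f_best=0.5)
```

The first term rewards points whose predicted mean exceeds the current best, and the second rewards points with high uncertainty, so EI is positive even where the mean only matches the current best.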

Evaluation measures

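In this study, the model assessment indicators were the coefficient of determination (\(R^2\)) and the RMSE. Assume that \(s_1, s_2, \ldots , s_n\) are the actual values, \(\hat{s}_1, \hat{s}_2, \ldots , \hat{s}_n\) are the predicted values, and \(\bar{s}\) is the mean of \(s_i\); these indices can be calculated as

\[ R^2 = 1 - \frac{\sum _{i=1}^{n} (s_i - \hat{s}_i)^2}{\sum _{i=1}^{n} (s_i - \bar{s})^2}, \qquad \mathrm{RMSE} = \sqrt{\frac{1}{n} \sum _{i=1}^{n} (s_i - \hat{s}_i)^2} \]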
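Both indicators can be computed in a few lines using their standard definitions. The function names and the stress values below are hypothetical and serve only as a worked example.

```python
import math
from typing import Sequence


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean square error between actual and predicted values."""
    n = len(actual)
    return math.sqrt(sum((s - p) ** 2 for s, p in zip(actual, predicted)) / n)


def r_squared(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((s - p) ** 2 for s, p in zip(actual, predicted))
    ss_tot = sum((s - mean) ** 2 for s in actual)
    return 1.0 - ss_res / ss_tot


# Hypothetical maximum stress values and predictions.
actual = [100.0, 120.0, 140.0, 160.0]
predicted = [110.0, 120.0, 130.0, 160.0]
example_rmse = rmse(actual, predicted)     # sqrt(200 / 4)
example_r2 = r_squared(actual, predicted)  # 1 - 200 / 2000
```

The RMSE is expressed in the same units as the maximum stress, whereas \(R^2\) is dimensionless, which is why the two are reported together.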

Experimental results and analysis

Training the prediction model

The environment configured in this study was Ubuntu 16.04 LTS, Anaconda 3, XGBoost 0.4.1, and Bayesian Optimisation 1.2.0.

The training and test sets were split in a ratio of 7:3. The initial number of GP points was six, and the number of optimisation iterations was 30. The number of training iterations was 100, and 5-fold cross-validation was used to record the optimal results. Optimal results were obtained after multiple trainings, and the corresponding hyperparameters were recorded.
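The data partitioning described above can be sketched on sample indices alone. The snippet assumes a shuffled 7:3 split of the 115 samples followed by 5-fold cross-validation on the training part; the random seed, the rounding of the split, and the function names are hypothetical choices, not details reported in this study.

```python
import random
from typing import Iterator, List, Tuple


def split_indices(
    n: int, train_parts: int = 7, test_parts: int = 3, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Shuffle the indices 0..n-1 and split them in the ratio train_parts:test_parts."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_train = n * train_parts // (train_parts + test_parts)
    return indices[:n_train], indices[n_train:]


def k_fold(indices: List[int], k: int = 5) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (training indices, validation indices) for each of the k folds."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, folds[i]


train_idx, test_idx = split_indices(115)
fold_sizes = [(len(tr), len(va)) for tr, va in k_fold(train_idx)]
```

With these assumptions, 80 samples are used for training and 35 for testing, and each cross-validation fold holds out 16 of the 80 training samples.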

Training results and prediction results

The mean RMSE values for the training and test sets obtained with the default hyperparameters, grid search (GS), the Harris Hawks optimisation algorithm (HHO), and the BO-GP method are shown in Fig. 7. A prediction model is considered more stable when the difference between its training and test RMSE is smaller. The RMSE differences for the three hyperparameter optimisation methods are shown in Fig. 8. The XGBoost-BGP model generalises better; the difference between its training and validation sets is approximately 12.33. Its \(R^2\) value was 0.82 and its RMSE was 17.40, whereas the model trained with the default XGBoost hyperparameters, before tuning, reached only 0.78 and 19.19, respectively.

Table 4 compares the prediction results with those of other typical methods, all tuned with the same hyperparameter optimisation method. The method proposed in this study yields the best prediction results.

Hyperparameter optimization comparison

To demonstrate that the BO-GP method is better suited to the prediction model proposed in this study, the GS hyperparameter optimisation method and HHO were used for comparison. GS is widely used in hyperparameter optimisation, and HHO is a state-of-the-art (SOTA) baseline method. Experiments show that BO is more effective than GS in terms of data volume and parameter dimensions. In addition, the proposed BO-GP hyperparameter optimisation method is slightly better than HHO overall, but inferior to HHO in terms of convergence speed. The comparison results are presented in Table 5. The final proposed model was built using the hyperparameters obtained by BO-GP. The chosen parameters can be regarded as optimal after numerous iterations of optimisation, with the RMSE serving as the observation basis. The final hyperparameters are listed in Table 6.

The sign test results are shown in Table 7 to further illustrate that the BO-GP hyperparameter optimisation algorithm used in this study is applicable to the XGBoost model. The sign test counts the number of cases in which one algorithm wins; under the null hypothesis, this count follows a binomial distribution. According to the test criterion, if the number of wins is greater than \(\frac{n}{2}+\sqrt{n}\), where \(n\) is the number of cases compared, the algorithm is significantly better, with \(p<0.05\) [46]. Figure 9 compares the deviations of the three optimisation methods based on XGBoost. Overall, the hyperparameter optimisation algorithm used in this study is slightly better than the other two methods.
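The sign test criterion can be sketched as follows. The threshold \(\frac{n}{2}+\sqrt{n}\) is taken from the text; the exact two-sided binomial p-value is added only as a cross-check and is an assumption about how the significance level is interpreted. The win counts are hypothetical and are not the values reported in Table 7.

```python
import math


def sign_test_threshold(n: int) -> float:
    """Number of wins that must be exceeded for significance: n / 2 + sqrt(n)."""
    return n / 2 + math.sqrt(n)


def is_significantly_better(wins: int, n: int) -> bool:
    """Apply the criterion wins > n / 2 + sqrt(n)."""
    return wins > sign_test_threshold(n)


def two_sided_p_value(wins: int, n: int) -> float:
    """Exact two-sided binomial p-value for `wins` out of n under p = 0.5."""
    k = max(wins, n - wins)
    upper_tail = sum(math.comb(n, j) for j in range(k, n + 1)) / 2 ** n
    return min(1.0, 2.0 * upper_tail)


# Hypothetical comparison over n = 30 cases.
n_cases = 30
passes_with_21 = is_significantly_better(21, n_cases)
passes_with_20 = is_significantly_better(20, n_cases)
p_with_21 = two_sided_p_value(21, n_cases)
p_with_20 = two_sided_p_value(20, n_cases)
```

For 30 cases the threshold is about 20.48, so 21 wins pass the criterion while 20 do not; the exact two-sided p-values fall on either side of 0.05 in the same way.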

Analysis of prediction results

To better demonstrate the prediction performance of the XGBoost-BGP model, scatterplots of the actual and predicted values on the test set were generated, as shown in Fig. 10. Figure 10a–c show the deviation of the predicted values from the actual values. The overall deviation is acceptable, and the XGBoost-BGP model fits the data well.

To demonstrate the generality of the model in this study, we also refer to the data in Ref. [15]. According to the literature, three sets of data are equivalently obtained, including only typical defects. The predicted results are shown in Table 8. The test results show that the XGBoost-BGP model has good generality.

Analysis of factors affecting mechanical properties of structures

XGBoost can quantify the relationship between the input variables and the output value [47]. This study considered the relationship between the four input variables and the maximum stress. The principle is to count the number of times each input feature appears in all the trees: the more frequently a feature appears, the more pronounced its effect on the maximum stress. The individual input feature scores are shown in Fig. 11. 'Oversize' (the thick-dominated struts) has the greatest effect on the maximum stress, followed by 'undersizing' (the thin-dominated struts). The effect of these two features is mainly attributed to the residual stress concentration caused by the irregular strut size, which changes the maximum stress. This illustrates the relationship between strut geometry and maximum stress. In addition, 'waviness' (the bend-dominated struts) is also one of the main factors affecting the maximum stress; however, its influence is subject to some uncertainty because of its complex characteristics. The effect of 'number of layers' is the smallest among the four features, but this does not mean that this feature can be ignored. Furthermore, by making appropriate changes to the input features, the maximum stress of other structures can still be predicted.
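The counting principle described above can be sketched on a toy ensemble. The nested-dictionary tree format, the function names, and the three trees below are hypothetical; in the XGBoost Python package, a comparable split-count score is available through `Booster.get_score(importance_type="weight")`.

```python
from collections import Counter
from typing import Dict, List


def count_splits(node: Dict, counts: Counter) -> None:
    """Recursively count how often each feature is used as a split in one tree."""
    if "feature" not in node:  # leaf node: nothing to count
        return
    counts[node["feature"]] += 1
    count_splits(node["left"], counts)
    count_splits(node["right"], counts)


def split_frequency(trees: List[Dict]) -> Counter:
    """Total number of splits per feature over all trees."""
    counts: Counter = Counter()
    for tree in trees:
        count_splits(tree, counts)
    return counts


leaf = {"leaf": 0.0}  # hypothetical leaf; its score does not affect the count
toy_trees = [
    {"feature": "oversize",
     "left": {"feature": "undersizing", "left": leaf, "right": leaf},
     "right": leaf},
    {"feature": "oversize",
     "left": leaf,
     "right": {"feature": "waviness", "left": leaf, "right": leaf}},
    {"feature": "undersizing",
     "left": {"feature": "oversize", "left": leaf, "right": leaf},
     "right": {"feature": "layers", "left": leaf, "right": leaf}},
]
frequency = split_frequency(toy_trees)
top_feature = frequency.most_common(1)[0][0]
```

Split frequency is only one of several importance measures; it reflects how often a feature is used rather than how much each split reduces the loss, so the resulting ranking should be read with that limitation in mind.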

Discussion

The XGBoost-BGP prediction model proposed in this study can accurately predict the maximum stress of SLM-fabricated BCC structural samples. This study only selected the most important features as training features because of the complex representation of inherent defects in lattice structures and the limited available data. Furthermore, given sufficient data, the training features can be extended to include the type of powder prepared, powder diameter, powder composition, standard radius of the struts, angle between struts, and angle between struts and the ground.

The prediction model proposed in this study has some limitations. The main limiting factor is the set of lattice structure features, because the features of a dataset generally determine the upper bound of model performance. This study selected four typical features based on the actual sample. If features are added, the accuracy of the model can be improved further by adjusting the hyperparameters.

Conclusion and future work

In this study, we proposed an XGBoost model based on the Gaussian process method in Bayesian hyperparameter optimisation to predict the maximum stress of SLM-fabricated BCC structures. The model took four parameters (number of layers, thick-dominated struts, thin-dominated struts, and bend-dominated struts) as the input and one structural feature (maximum stress) as the output. The datasets were derived from actual prototypes and simulations. According to the experimental results, the proposed XGBoost-BGP maximum stress prediction model achieves an \(R^2\) of 0.82 and an RMSE of 17.40. In addition, we discussed the relationship between the four input parameters and the maximum stress. The thick-dominated strut had the greatest influence on the maximum stress of the lattice structure sample.

Future work will focus on three areas. The first direction is to consider samples with more complex structural features to raise the upper limit of the model. The second direction is to improve XGBoost parameter optimisation using a meta-inspired learning hyperparameter optimisation method. The third direction is to carry out the analysis of other performance parameters to reveal the influence of defects on other performance parameters.

Data availability

Due to the nature of this research, participants of this study did not agree for their data to be shared publicly, so supporting data is not available.

References

Alabort E, Barba D, Reed RC (2019) Design of metallic bone by additive manufacturing. Scr Mater 164:110–114

Zadpoor AA (2019) Mechanical performance of additively manufactured meta-biomaterials. Acta Biomater 85:41–59

Yang Y, Song X, Li X, Chen Z, Zhou C, Zhou Q, Chen Y (2018) Recent progress in biomimetic additive manufacturing technology: from materials to functional structures. Adv Mater 30(36):1706539

Hertlein N, Deshpande S, Venugopal V, Kumar M, Anand S (2020) Prediction of selective laser melting part quality using hybrid Bayesian network. Addit Manuf 32:101089

Li P, Wang Z, Petrinic N, Siviour CR (2014) Deformation behaviour of stainless steel microlattice structures by selective laser melting. Mater Sci Eng, A 614:116–121

Barnes B, Babamiri BB, Demeneghi G, Soltani-Tehrani A, Shamsaei N, Hazeli K (2021) Quasi-static and dynamic behavior of additively manufactured lattice structures with hybrid topologies. Addit Manuf 48:102466

Lei H, Li C, Meng J, Zhou H, Liu Y, Zhang X, Wang P, Fang D (2019) Evaluation of compressive properties of SLM-fabricated multi-layer lattice structures by experimental test and \(\mu \)-CT-based finite element analysis. Mater Des 169:107685

Xiao Z, Yang Y, Xiao R, Bai Y, Song C, Wang D (2018) Evaluation of topology-optimized lattice structures manufactured via selective laser melting. Mater Des 143:27–37

Crupi V, Kara E, Epasto G, Guglielmino E, Aykul H (2017) Static behavior of lattice structures produced via direct metal laser sintering technology. Mater Des 135:246–256

Li C, Lei H, Liu Y, Zhang X, Xiong J, Zhou H, Fang D (2018) Crushing behavior of multi-layer metal lattice panel fabricated by selective laser melting. Int J Mech Sci 145:389–399

Yan C, Hao L, Hussein A, Raymont D (2012) Evaluations of cellular lattice structures manufactured using selective laser melting. Int J Mach Tools Manuf 62:32–38

Liu L, Kamm P, García-Moreno F, Banhart J, Pasini D (2017) Elastic and failure response of imperfect three-dimensional metallic lattices: the role of geometric defects induced by selective laser melting. J Mech Phys Solids 107:160–184

Echeta I, Dutton B, Leach RK, Piano S (2021) Finite element modelling of defects in additively manufactured strut-based lattice structures. Addit Manuf 47:102301

Lozanovski B, Downing D, Tino R, du Plessis A, Tran P, Jakeman J, Shidid D, Emmelmann C, Qian M, Choong P et al (2020) Non-destructive simulation of node defects in additively manufactured lattice structures. Addit Manuf 36:101593

Li C, Lei H, Zhang Z, Zhang X, Zhou H, Wang P, Fang D (2020) Architecture design of periodic truss-lattice cells for additive manufacturing. Addit Manuf 34:101172

Arabnejad S, Johnston RB, Pura JA, Singh B, Tanzer M, Pasini D (2016) High-strength porous biomaterials for bone replacement: a strategy to assess the interplay between cell morphology, mechanical properties, bone ingrowth and manufacturing constraints. Acta Biomater 30:345–356

Bagheri ZS, Melancon D, Liu L, Johnston RB, Pasini D (2017) Compensation strategy to reduce geometry and mechanics mismatches in porous biomaterials built with selective laser melting. J Mech Behav Biomed Mater 70:17–27

Campoli G, Borleffs MS, Amin Yavari S, Wauthle R, Weinans H, Zadpoor AA (2013) Mechanical properties of open-cell metallic biomaterials manufactured using additive manufacturing. Mater Des 49:957–965

Skylar-Scott MA, Mueller J, Visser CW, Lewis JA (2019) Voxelated soft matter via multimaterial multinozzle 3D printing. Nature 575(7782):330–335

Vangelatos Z, Zhang Z, Gu GX, Grigoropoulos CP (2020) Tailoring the dynamic actuation of 3D-printed mechanical metamaterials through inherent and extrinsic instabilities. Adv Eng Mater 22(6):1901586

Zhang M, Sun C-N, Zhang X, Goh PC, Wei J, Hardacre D, Li H (2019) High cycle fatigue life prediction of laser additive manufactured stainless steel: A machine learning approach. Int J Fatigue 128:105194

Chen J, Liu Y (2021) Fatigue property prediction of additively manufactured Ti-6Al-4V using probabilistic physics-guided learning. Addit Manuf 39:101876

Galan EA, Zhao H, Wang X, Dai Q, Huck WTS, Ma S (2020) Intelligent microfluidics: the convergence of machine learning and microfluidics in materials science and biomedicine. Matter 3(6):1893–1922

Jin Z, Zhang Z, Demir K, Gu GX (2020) Machine learning for advanced additive manufacturing. Matter 3(5):1541–1556

Garaigordobil A, Ansola R, Santamaría J, de Bustos IF (2018) A new overhang constraint for topology optimization of self-supporting structures in additive manufacturing. Struct Multidiscip Optim 58(5):2003–2017

Zhang W, Zhou L (2018) Topology optimization of self-supporting structures with polygon features for additive manufacturing. Comput Methods Appl Mech Eng 334:56–78

Hsu YC, Yu CH, Buehler MJ (2020) Using deep learning to predict fracture patterns in crystalline solids. Matter 3(1):197–211

Malakar S, Ghosh M, Bhowmik S, Sarkar R, Nasipuri M (2020) A GA based hierarchical feature selection approach for handwritten word recognition. Neural Comput Appl 32(7):2533–2552

Bacanin N, Stoean R, Zivkovic M, Petrovic A, Rashid TA, Bezdan T (2021) Performance of a novel chaotic firefly algorithm with enhanced exploration for tackling global optimization problems: application for dropout regularization. Mathematics 9(21):2705

Zhang Y, Zhang M (2021) Machine learning model-based two-dimensional matrix computation model for human motion and dance recovery. Complex Intell Syst 7(4):1805–1815

Nasiri S, Khosravani MR (2021) Machine learning in predicting mechanical behavior of additively manufactured parts. J Mater Res Technol 14:1137–1153

Jin LD, Feng YL, Zhang M (2021) Construction of a machine-learning-based prediction model for mechanical properties of ultra-fine-grained Fe-C alloy. J Mater Res Technol 15:4914–4930

Chen T, Guestrin C (2016) XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, pp 785–794

Nasiri H, Hasani S (2022) Automated detection of COVID-19 cases from chest X-ray images using deep neural network and XGBoost. Radiography 28(3):732–738

Movsessian A, Cava DG, Tcherniak D (2022) Interpretable machine learning in damage detection using Shapley additive explanations. ASCE-ASME J Risk Uncertainty Eng Syst Part B Mech Eng 8(2):021101

Qiu Y, Zhou J, Khandelwal M, Yang H, Yang P, Li C (2021) Performance evaluation of hybrid WOA-XGBoost, GWO-XGBoost and BO-XGBoost models to predict blast-induced ground vibration. Eng Comput 38:1–18

Chelgani SC, Nasiri H, Tohry A (2021) Modeling of particle sizes for industrial HPGR products by a unique explainable AI tool: a conscious lab development. Adv Powder Technol 32(11):4141–4148

Nasiri H, Homafar A, Chehreh Chelgani S (2021) Prediction of uniaxial compressive strength and modulus of elasticity for travertine samples using an explainable artificial intelligence. Results Geophys Sci 8:100034

Nasiri H, Alavi SA (2022) A novel framework based on deep learning and ANOVA feature selection method for diagnosis of COVID-19 cases from chest X-ray images. Comput Intell Neurosci 2022:1–11

Shi R, Xu X, Li J, Li Y (2021) Prediction and analysis of train arrival delay based on XGBoost and Bayesian optimization. Appl Soft Comput 109:107538

Shahriari B, Swersky K, Wang Z, Adams RP, De Freitas N (2015) Taking the human out of the loop: a review of Bayesian optimization. Proc IEEE 104(1):148–175

Hancock JT, Khoshgoftaar TM (2020) CatBoost for big data: an interdisciplinary review. J Big Data 7(1):1–45

Ke G, Meng Q, Finley T, Wang T, Chen W, Ma W, Ye Q, Liu TY (2017) LightGBM: a highly efficient gradient boosting decision tree. Adv Neural Inf Process Syst 30:1–9

Cai J, Xu K, Zhu Y, Hu F, Li L (2020) Prediction and analysis of net ecosystem carbon exchange based on gradient boosting regression and random forest. Appl Energy 262:114566

Fatahi R, Nasiri H, Dadfar E, Chelgani SC (2022) Modeling of energy consumption factors for an industrial cement vertical roller mill by SHAP-XGBoost: a "conscious lab" approach. Sci Rep 12(1):1–13

Derrac J, García S, Molina D, Herrera F (2011) A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol Comput 1(1):3–18

Xing L, Sun J, Song Z, Li G, Wang Z, Hu Y, Wang Q, Zhang D (2020) Prediction and analysis of cold rolling mill vibration based on a data-driven method. Appl Soft Comput 96:106706

Acknowledgements

The authors gratefully acknowledge the support of the S&T Program of Hebei (nos. 216Z1704G, 20312202D, 20311001D, 20310401D) and thank the authors of the cited references. In addition, we would like to thank Editage (www.editage.cn) for English language editing.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this study. No conflict of interest exists in the submission of this manuscript, and the manuscript is approved by all authors for publication. On behalf of my co-authors, I declare that the work described is original research that has not been published previously and is not under consideration for publication elsewhere, in whole or in part.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, Z., Zhang, Y., Wen, Y. et al. Data-driven XGBoost model for maximum stress prediction of additive manufactured lattice structures. Complex Intell. Syst. 9, 5881–5892 (2023). https://doi.org/10.1007/s40747-023-01061-z

DOI: https://doi.org/10.1007/s40747-023-01061-z