Abstract

Landslides are dangerous disasters that are affected by many factors. Neural networks can be used to fit complex observations and predict landslide displacement. However, hyperparameters have a great impact on neural networks, and each evaluation of a hyperparameter setting requires constructing the corresponding model and measuring its accuracy on the test set. Thus, evaluating hyperparameters requires a large amount of time. In addition, not all features are positive factors for predicting landslide displacement, so it is necessary to remove useless and redundant features through feature selection. Although wrapper-based feature selection is more accurate, it also requires considerable evaluation time. Therefore, this paper proposes reliability-enhanced surrogate-assisted particle swarm optimization (RESAPSO), which uses surrogate models to reduce the number of evaluations and exploits the strong global optimization ability of PSO to simultaneously search the hyperparameters of the long short-term memory (LSTM) neural network and the feature set for predicting landslide displacement. Specifically, multiple surrogate models are utilized simultaneously, and a Bayesian evaluation strategy is designed to integrate the predicted fitness of the multiple surrogate models. To mitigate the influence of an imprecise surrogate model, intuitionistic fuzzy sets are used to represent individual information. To balance the exploration and exploitation of the algorithm, intuitionistic fuzzy multiattribute decision-making is used to select the best and most uncertain individuals from the population for updating the surrogate model. Experiments were carried out on the CEC2015 and CEC2017 benchmark suites. In these experiments, RESAPSO is compared with several well-known and recently proposed surrogate-assisted evolutionary algorithms (SAEAs), and its effectiveness is verified in terms of accuracy, convergence speed, and stability; it ranks first in the Friedman test.
For the landslide displacement prediction problem, the RESAPSO-LSTM model is established, which effectively handles feature selection and LSTM hyperparameter optimization and uses less evaluation time while improving the prediction accuracy. The experimental results show that the optimization time of RESAPSO is about one-fifth that of PSO. In the prediction of landslide displacement in the step-like stage, RESAPSO-LSTM has higher prediction accuracy than the comparison models, which can provide a more effective prediction method for the risk warning of a landslide in the severe deformation stage.

Introduction

Landslides are major geological disasters that cause great property losses and casualties every year [1]. Successfully predicting the displacement of a landslide within a certain period plays a vital role in disaster prevention and mitigation. Therefore, how to efficiently predict landslide displacement has become an urgent problem. In early work on landslide displacement prediction, researchers usually adopted strict mathematical and mechanical analysis or statistical models such as the grey GM(1,1) model. However, because landslide displacement is a complex nonlinear system, such methods are suitable only for predicting an imminent landslide, and their reusability is low.

With the rapid development of artificial intelligence technology, an increasing number of scholars are introducing machine learning methods to landslide displacement prediction problems [2, 3]. As a mature and effective machine learning method, the long short-term memory (LSTM) neural network can better deal with the situation where the input samples are correlated because of its special structural design. Therefore, it is widely used in various sequence problems, such as natural language processing and time series prediction [4, 5]. However, feature selection and hyperparameter selection have a great influence on the final prediction result of the LSTM neural network.

Generally, there are three methods for feature selection: the filter, wrapper, and embedded methods. In the filter method, the correlation between each feature and the label is first calculated, and then the most appropriate features are selected as the training input according to the correlation. In the wrapper method, a feature subset is selected and substituted into the machine learning algorithm for training, and the training error is regarded as the score of the subset to judge its quality. The embedded method is similar to the filter method, except that the correlation calculation between features is replaced by a machine learning algorithm. Therefore, unlike the wrapper method, the filter and embedded methods do not depend on the specific network when features are selected. Although these two methods are fast, they are not as effective as the wrapper method. The wrapper method, however, must substitute every candidate feature subset into the specific network and train it to obtain the corresponding evaluation, and this training consumes considerable time.
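A minimal sketch of how a wrapper method scores one candidate subset is given here. A least-squares linear model stands in for the LSTM so that the example runs quickly, held-out error is used as the score, and the function name `wrapper_score` and the synthetic data are assumptions made purely for illustration.

```python
import numpy as np

def wrapper_score(mask, X_train, y_train, X_val, y_val):
    """Validation RMSE of a least-squares model trained on the masked features.

    ASSUMPTION: a linear model replaces the LSTM to keep the sketch cheap.
    """
    cols = np.flatnonzero(mask)
    if cols.size == 0:
        return float("inf")  # an empty subset cannot be trained
    # Append a bias column, then fit by ordinary least squares.
    A_train = np.column_stack([X_train[:, cols], np.ones(len(X_train))])
    A_val = np.column_stack([X_val[:, cols], np.ones(len(X_val))])
    coef, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)
    residual = A_val @ coef - y_val
    return float(np.sqrt(np.mean(residual ** 2)))

# Synthetic data: only features 0 and 1 carry signal, features 2 and 3 are noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 0.01 * rng.normal(size=200)
X_tr, X_va, y_tr, y_va = X[:150], X[150:], y[:150], y[150:]

good = wrapper_score(np.array([1, 1, 0, 0]), X_tr, y_tr, X_va, y_va)
bad = wrapper_score(np.array([0, 0, 1, 1]), X_tr, y_tr, X_va, y_va)
print(good < bad)
```

Every call to `wrapper_score` retrains a model, which is exactly the cost that grows prohibitive once the model is a neural network.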

In addition, to test a set of LSTM hyperparameters, it is necessary to construct the corresponding LSTM model and train it to obtain its prediction accuracy. Therefore, each evaluation of hyperparameters requires a large amount of time.

Therefore, wrapper-based feature selection combined with hyperparameter optimization is a computationally expensive black-box optimization problem in which the global optimum must be found: there is no explicit objective function, and each evaluation is very time consuming.

Evolutionary algorithms (EAs) are attractive because of their powerful global search ability and strong generality. They can be used to search for the global optimal solution of optimization problems with nondifferentiable functions or even without explicit objective functions. Therefore, compared with traditional mathematical optimization methods, EAs are used in a wider range of real-world engineering problems [6, 7]. However, EAs often require a large number of evaluations to adapt to the search space and find the global optimal solution [8, 9]. Therefore, it is often difficult to use general EAs for computationally expensive problems.

The surrogate-assisted evolutionary algorithm (SAEA) is an important method for solving computationally expensive problems [10]. Unlike an ordinary EA, an SAEA performs its search not on the real objective function but on a surrogate model that approximates it. Therefore, by reducing the number of fitness evaluations on the real objective function, SAEA can effectively reduce the time cost and economic cost.

In recent years, a large number of studies on high-dimensional computationally expensive problems have been carried out, and less attention has been given to low-dimensional problems [11,12,13]. Although high-dimensional computationally expensive problems are more challenging, there are still many low-dimensional problems in real-world engineering applications, such as airfoil design problems, pressure vessel design problems, and welded beam design problems [14,15,16]. In addition, SAEAs for high-dimensional computationally expensive problems are not necessarily suitable for low-dimensional problems. More powerful surrogate models can be used for low-dimensional problems. Particle swarm optimization (PSO), as a global optimization algorithm, has been widely considered by industry and academia because of its fast convergence rate and generality. Therefore, this paper focuses on the application of PSO to low-dimensional computationally expensive problems.

The SAEA framework spares PSO from performing a large number of fitness evaluations directly on the real objective function and thus makes it suitable for computationally expensive problems. However, its performance is limited by several factors.

1. Different models have different characteristics, which gives them different fitting abilities for different types of functions [29, 30]. Therefore, to adapt to different search spaces, the simultaneous utilization of multiple surrogate models becomes a more reliable option [30]. However, multisurrogate model-based methods require a suitable strategy to integrate the predicted values of multiple surrogate models.

2. The fitting ability of the surrogate model is different in different local regions. However, few studies have considered the local confidence of surrogate models.

3. In SAEA, it is often necessary to select suitable individuals from the population to be evaluated on the real objective function to update the surrogate model. Good evaluation points can effectively improve the accuracy of the surrogate model. In the promising-uncertain strategy, an optimal point and an uncertain point are selected from the population; thus, both exploration and exploitation are considered [17, 18]. However, in the selection process, only the fitness of the individual is taken as the basis for selection, i.e., the individuals with the maximum and minimum fitness are selected without considering more individual information or the influence of the error between the surrogate model and the real objective function on the fitness. As a result, the selected points do little to improve the accuracy of the surrogate model.

Based on the above analysis, we propose reliability-enhanced surrogate-assisted particle swarm optimization (RESAPSO). In RESAPSO, the weights of the surrogate models in each region are adaptively adjusted using a Bayesian evaluation strategy (BES) to more accurately predict the fitness of each individual. In addition, intuitionistic fuzzy sets are used to extract fuzzy attribute information from individuals, and intuitionistic fuzzy multiattribute decision-making (IFMADM) is used to select new points to evaluate so that the surrogate model can better approximate the real objective function. The main contributions of this paper are as follows.

a. Based on the surrogate model, a new surrogate-assisted evolutionary algorithm, RESAPSO, is designed to meet the needs of computationally expensive problems. Using RESAPSO, we search for the best subset of landslide features while optimizing the hyperparameters of the LSTM network and apply this model to the landslide displacement prediction problem.

b. A Bayesian evaluation strategy (BES) is designed based on Bayes' theorem. BES is an individual fitness evaluation strategy suitable for multisurrogate models. It uses the error between each surrogate model and the real function in the local region to estimate the confidence of each model in that region and adjusts the weight of each surrogate model adaptively. Specifically, the error between the predicted fitness of each surrogate model and the true fitness is calculated and represented in the form of probabilities that sum to 1. Then, multiple evaluated points in the local region are regarded as likelihood samples; combined with the prior probability of each surrogate model, the posterior probability is calculated using Bayes' theorem, and finally, it is converted into the weight of each model. Because BES considers the local confidence of each surrogate model and adaptively adjusts the local weight of each model, it addresses the first and second shortcomings mentioned above.

c. Intuitionistic fuzzy multiattribute decision-making (IFMADM) is used to select appropriate individuals from the population to update the surrogate model. Due to the error between the surrogate model and the true objective function, the fitness of each individual is imprecise. Therefore, intuitionistic fuzzy sets (IFSs) [19] are used to deal with the fuzzy information carried by individuals. In addition to individual fitness, the local accuracy of the surrogate model is considered as one of the decision attributes. Therefore, based on IFMADM, both individual fitness and credibility are considered when selecting promising and uncertain points. By using IFSs to address the imprecision of individual information on the surrogate model and using IFMADM to consider the fitness and local credibility of the individual at the same time, the promising individuals and the uncertain individuals are selected more accurately to improve the accuracy of the surrogate model.

d. RESAPSO is compared with a variety of SAEAs on well-known benchmark functions to demonstrate its optimization capability. In addition, a comprehensive analysis of the search accuracy, convergence speed, stability, and other aspects of RESAPSO further supports its optimization ability. In the landslide displacement prediction experiment, the accuracy of RESAPSO with 100 iterations was better than that of PSO with 500 iterations, providing higher prediction accuracy while keeping the number of evaluations low. Furthermore, compared with the other optimization algorithms, the LSTM model based on RESAPSO can better predict the severe landslide displacement during the strong rainy season.

The rest of this paper is organized as follows. The “Related work” section introduces SAEAs and surrogate models. “The proposed RESAPSO” section briefly describes RESAPSO, including the Bayesian evaluation strategy (BES), the promising-uncertain strategy based on intuitionistic fuzzy multiattribute decision-making (IFMADM), and the method of extracting individual information and transforming it into intuitionistic fuzzy sets. The “Experimental analysis” section includes a comparison with other SAEAs and applications in feature selection and hyperparameter selection. The “Conclusion” section summarizes this research.

Related work

Table 1 lists representative EA-based feature selection methods, hyperparameter optimization methods, and surrogate-assisted evolutionary algorithms of recent years.

Feature selection

As global optimization algorithms, evolutionary algorithms can search for the global optimal solution when the objective function is nondifferentiable or has no explicit form, which gives them strong generality [20]. Therefore, various evolutionary algorithms have been applied to feature selection [21,22,23]. In these methods, the relationship between features or new operators is used to accelerate the convergence of the population, thereby reducing the computational cost. However, a large number of evaluations are still required. To reduce the number of evaluations, some recent studies have proposed SAEA-based feature selection methods [24].

Hyperparameter optimization

In addition to manually tuning hyperparameters, other commonly used tuning methods include the random search, grid search, and Bayesian optimization algorithms [25]. In a grid search, the computational cost and the width of the grid need to be balanced; too large a width leads to low accuracy, and too small a width leads to too much computation. The results of a random search are unstable. In the Bayesian optimization algorithm, the number of evaluations is reduced through the kriging model, and exploration and exploitation points are selected using Bayesian probability estimation. Therefore, Bayesian optimization can be regarded as a heuristic optimization algorithm based on a single surrogate model. RESAPSO differs from Bayesian optimization in that it utilizes multiple surrogate models, uses Bayes' theorem to estimate fitness, and selects the exploitation and exploration points through IFMADM. In addition to the methods mentioned above, a variety of evolutionary algorithms have been applied to the hyperparameter optimization of machine learning algorithms to improve their optimization ability [26,27,28]. However, these methods still require a large number of evaluations or reduce the number of evaluations at the cost of accuracy [29].

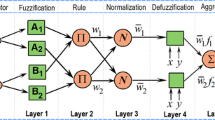

Surrogate-assisted evolutionary algorithm

The SAEA constructs a surrogate model with a small number of individuals evaluated in the real objective function, and the population searches on the surrogate model instead of the real objective function, thus significantly reducing the number of evaluations on the computationally expensive real objective function [30, 31]. Common surrogate models include the Kriging model [32, 33], artificial neural network (ANN) [34, 35], polynomial regression (PR) [36], radial basis function neural network (RBFNN) [37,38,39], etc.
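To illustrate what a surrogate model does, the following minimal sketch fits a Gaussian radial basis function interpolant to a handful of evaluated points and then predicts at a new point without calling the objective again. It is a simplified stand-in for the RBF-type surrogates cited above, not the model used in any of those works; the class name `RBFSurrogate`, the kernel width, and the small ridge term are all assumptions made for the example.

```python
import numpy as np

class RBFSurrogate:
    """Gaussian RBF interpolant fitted to already evaluated points (illustrative)."""

    def __init__(self, width=1.0, ridge=1e-10):
        self.width = width    # ASSUMPTION: fixed kernel width chosen by hand
        self.ridge = ridge    # tiny diagonal term for numerical stability

    def _kernel(self, A, B):
        # Squared Euclidean distances between every row of A and every row of B.
        d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
        return np.exp(-d2 / (2.0 * self.width ** 2))

    def fit(self, X, y):
        self.X = np.asarray(X, dtype=float)
        K = self._kernel(self.X, self.X)
        K[np.diag_indices_from(K)] += self.ridge
        self.coef = np.linalg.solve(K, np.asarray(y, dtype=float))
        return self

    def predict(self, X):
        return self._kernel(np.asarray(X, dtype=float), self.X) @ self.coef

# Nine "expensive" evaluations of f(x) = x^2, then a cheap prediction elsewhere.
X = np.linspace(-2.0, 2.0, 9).reshape(-1, 1)
y = (X ** 2).ravel()
model = RBFSurrogate(width=0.5).fit(X, y)
approx = model.predict(np.array([[0.25]]))
```

The optimizer can query `model.predict` as often as it likes; only the nine calls that built the database touched the real objective.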

The SAEA framework effectively reduces the number of evaluations on the real objective function so that EAs can be applied to computationally expensive problems. However, it is difficult for a single surrogate model to adapt to complex real-world applications.

Different surrogate models have different characteristics [40, 41]; thus, they have different fitting abilities for different types of functions. The experimental results of Sun et al. [37] showed that by relying on multiple surrogate models, the prediction accuracy can be higher and more stable [42]. As an interpolation model, the kriging model [32] can fit a complex search space, but it requires a lot of calculation. An artificial neural network [34, 35] also has a strong fitting ability but requires a large amount of calculation and a large number of training samples. Polynomial regression [43] is computationally cheap and has a relatively low ability to fit a complex local space, but it can better capture the global trend. The radial basis function neural network [37, 38, 44] is relatively balanced in all aspects and is the most widely used surrogate model. Therefore, when the accuracy of a single model cannot be improved, using multiple models at the same time becomes a better choice. However, a multimodel integration strategy requires adaptive adjustment of the weight of each surrogate model. Common weight updating strategies are based on the root mean square error (RMSE) [17], the prediction variance [45], and the predicted residual error sum of squares (PRESS) [41]. In these methods, the weight of each surrogate model is calculated from the error between the surrogate model and the real objective function at the selected individuals. However, the selected individuals may lie in any region of the search space, and the accuracy of each surrogate model differs from region to region. Therefore, such global weights cannot reflect the accuracy of the surrogate models within local regions.

In addition to using multisurrogate models to indirectly improve the prediction accuracy of fitness, there are also studies that directly improve the prediction accuracy of surrogate models through a reasonable selection of evaluation points [38, 46].

The above methods focus computational resources on regions where global optimal solutions may exist to improve the accuracy of the surrogate model in those regions. They are effective in environments where the local optimal solution and the global optimal solution are close. However, the exploration ability of the algorithm is reduced, so it is difficult to cope with more complex multimodal environments. To enable the algorithm to explore the search space while exploiting the local optimal region, most studies adopt the promising-uncertain strategy [17, 18]. In this strategy, the best individual, called the promising point, is selected to improve the local accuracy of the model, thereby improving the local exploitation ability of the algorithm, and the worst individual, called the uncertain point, is selected to explore the search space, thereby providing diversity for the algorithm. However, as mentioned above, because the predicted fitness is imprecise, it is difficult to select ideal promising and uncertain solutions from the population while the algorithm runs. Therefore, such methods usually use the kriging model [47, 48], which provides a confidence value with each prediction to help the algorithm determine whether it is worth investing computational resources at an individual's position. Besides the kriging model, some researchers have also used a multisurrogate voting mechanism to select the potentially best individuals and the uncertain individuals [17].

The proposed RESAPSO

The selected individual represents the individual selected by IFMADM, and the evaluated point represents the individual evaluated in the real objective function. When an individual is selected by IFMADM, it is transformed into a selected individual, and when a selected individual is substituted into the real objective function, it can be transformed into an evaluated point.

Overall framework

Figure 1 shows the overall framework of RESAPSO. The workflow in the figure represents the running process of the algorithm, and the data flow represents the data exchanged while the algorithm runs. Initially, the database and the archive are empty. First, Latin hypercube sampling is used to select the initial points, which are evaluated on the real objective function and stored in the database. The kriging, RBFNN, and PR models are constructed from the evaluated points in the database. The optimizer (PSO) then searches on the surrogate models, and individual fitness is evaluated using BES. All individuals in each iteration are stored in the archive. When an epoch is over, all the individuals in the archive are transformed into intuitionistic fuzzy sets, and IFMADM is used to select the promising point and the uncertain point. These two points are then evaluated on the real objective function and stored in the database. Finally, the archive is emptied and the surrogate models are updated. The epochs continue until the maximum number of fitness evaluations is reached.
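The control flow described above can be sketched as follows. This is a heavily simplified illustration, not the RESAPSO implementation: a single quadratic polynomial regression replaces the three surrogate models and BES, random candidates replace the PSO search, and "farthest from any evaluated point" replaces the IFMADM choice of the uncertain point. All function names and default values are assumptions.

```python
import numpy as np

def latin_hypercube(n, dim, low, high, rng):
    """One sample per stratum in every dimension, strata shuffled independently."""
    strata = np.stack([rng.permutation(n) for _ in range(dim)], axis=1)
    unit = (strata + rng.random((n, dim))) / n
    return low + unit * (high - low)

def quad_features(X):
    """Bias, linear, and squared terms of a separable quadratic model."""
    return np.column_stack([np.ones(len(X)), X, X ** 2])

def fit_surrogate(X, y):
    coef, *_ = np.linalg.lstsq(quad_features(X), y, rcond=None)
    return lambda Z: quad_features(Z) @ coef

def saea(objective, dim, low, high, n_init=10, max_evals=30, seed=0):
    rng = np.random.default_rng(seed)
    db_x = latin_hypercube(n_init, dim, low, high, rng)   # database of evaluated points
    db_y = np.array([objective(x) for x in db_x])
    while len(db_y) < max_evals:
        model = fit_surrogate(db_x, db_y)                  # update the surrogate
        # ASSUMPTION: random candidates stand in for the PSO search; they form the archive.
        archive = rng.uniform(low, high, size=(500, dim))
        promising = archive[np.argmin(model(archive))]
        # ASSUMPTION: the uncertain point is the candidate farthest from the database.
        gaps = np.linalg.norm(archive[:, None, :] - db_x[None, :, :], axis=-1).min(axis=1)
        uncertain = archive[np.argmax(gaps)]
        for x in (promising, uncertain):                   # two real evaluations per epoch
            db_x = np.vstack([db_x, x])
            db_y = np.append(db_y, objective(x))
    best = np.argmin(db_y)
    return db_x[best], db_y[best], len(db_y)

sphere = lambda x: float(np.sum(x ** 2))
best_x, best_y, n_evals = saea(sphere, dim=2, low=-5.0, high=5.0)
```

Each epoch spends exactly two real evaluations, one to refine the best-looking region and one to probe a poorly sampled region, which is the budget pattern the framework is built around.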

Particle swarm optimization

Due to its simple implementation and strong versatility, PSO has received extensive attention from researchers and engineers, and various variants have been developed and applied to various practical problems. In this paper, the PSO proposed by Shi [49] is adopted. The particle update equation is as follows.
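In the standard inertia-weight form of PSO, which is assumed here to be the variant adopted, the velocity and position updates are

\[
{v}_{i}^{t+1}={w}^{t}{v}_{i}^{t}+{c}_{1}{r}_{1}\left({p}_{i}^{t}-{x}_{i}^{t}\right)+{c}_{2}{r}_{2}\left({g}^{t}-{x}_{i}^{t}\right),
\]

\[
{x}_{i}^{t+1}={x}_{i}^{t}+{v}_{i}^{t+1},
\]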

where \({x}_{i}^{t}\) and \({v}_{i}^{t}\) represent the position and velocity of particle \(i\) at the \(t\)th iteration, respectively, and \({g}^{t}\) represents the position of the best particle in the population at the \(t\)th iteration. \({p}_{i}^{t}\) represents the historical best position of particle \(i\) up to the \(t\)th iteration, \({c}_{1}\) and \({c}_{2}\) are two preset constants, and \({r}_{1}\) and \({r}_{2}\) are two random numbers in [0, 1]. \({w}^{t}\) represents the inertia weight in the \(t\)th iteration. In this paper, the commonly used linearly decreasing inertia weight is adopted.
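A compact sketch of inertia-weight PSO with a linearly decreasing \({w}^{t}\) is given below. The constants (`c1 = c2 = 1.5`, inertia falling from 0.9 to 0.4), the swarm size, and the clipping of positions to the search bounds are assumptions chosen for the example, since the preset values are not stated here.

```python
import numpy as np

def pso(objective, dim, low, high, n_particles=20, n_iter=100,
        c1=1.5, c2=1.5, w_max=0.9, w_min=0.4, seed=0):
    """Minimize `objective` with inertia-weight PSO (illustrative settings)."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(low, high, size=(n_particles, dim))   # positions
    v = np.zeros_like(x)                                   # velocities
    p = x.copy()                                           # personal best positions
    p_fit = np.array([objective(xi) for xi in x])
    g = p[np.argmin(p_fit)].copy()                         # global best position
    g_fit = float(p_fit.min())
    for t in range(n_iter):
        # Linearly decreasing inertia weight from w_max down to w_min.
        w = w_max - (w_max - w_min) * t / max(n_iter - 1, 1)
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        v = w * v + c1 * r1 * (p - x) + c2 * r2 * (g - x)
        x = np.clip(x + v, low, high)                      # ASSUMPTION: clip to bounds
        fit = np.array([objective(xi) for xi in x])
        improved = fit < p_fit
        p[improved] = x[improved]
        p_fit[improved] = fit[improved]
        if p_fit.min() < g_fit:
            g = p[np.argmin(p_fit)].copy()
            g_fit = float(p_fit.min())
    return g, g_fit

best_x, best_f = pso(lambda z: float(np.sum(z ** 2)), dim=2, low=-5.0, high=5.0)
```

With the default settings this run calls the objective 2,020 times (20 particles over 100 iterations plus initialization), which is why a surrogate is needed when each call means training an LSTM.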

Bayesian evaluation strategy

To synthesize the predicted fitness of multiple models and make it closer to the real objective function, a Bayesian evaluation strategy based on Bayes’ theorem, which uses the posterior probability to represent the weight of each surrogate model in a local area, is proposed.

Specifically, whenever the individual’s fitness needs to be predicted, the weight of all evaluated points in the individual’s neighborhood will be calculated using Eq. (4). This weight represents the accuracy of the surrogate model, and the larger the weight is, the more accurate the surrogate model. The final fitness prediction can be obtained using the weighted sum of the distances between the evaluation points and the individual, namely, Eq. (3). When an individual is the selected individual, the true fitness of the selected individual will be recorded based on the evaluated points in its neighborhood. When the fitness of individuals in subsequent epochs needs to be predicted, the error between the previously predicted fitness and the true fitness will be calculated from the evaluated point to obtain the current accuracy of each model at that point, that is, Eq. (6).

The calculation for the synthetic fitness of each individual is as follows.
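Read together, the symbol definitions that follow suggest a weighted sum of the form below; it is given as a reconstruction under that reading rather than as a quoted formula.

\[
{\mathrm{fit}}_{i}=\sum_{j=1}^{\mathrm{ne}(i)}{\mathrm{wg}}_{j}\sum_{k=1}^{l}P({\theta }_{k}|{x}_{j})\,{\mathrm{prefit}}_{i,k},
\]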

where \({\mathrm{fit}}_{i}\) represents the synthetic fitness of individual \(i\). \({\mathrm{prefit}}_{i,k}\) represents the fitness of individual \(i\) calculated on surrogate model \(k\), and \(l\) represents the number of surrogate models. Since the kriging model, radial basis function neural network, and polynomial regression model have been used in this paper, \(l=3\). In Eq. (3), \(\mathrm{ne}(i)\) represents the number of evaluated points in the neighborhood of individual \(i\). \({\mathrm{wg}}_{j}\) represents the weight of the \(j\)th evaluated point in the neighborhood of individual \(i\), and the calculation of \({\mathrm{wg}}_{j}\) is described in Eq. (7). \(P({\theta }_{k}|{x}_{j})\) represents the posterior approximation of the \(k\)th surrogate model on the evaluated point \(j\), and its calculation is as follows.
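By Bayes' theorem, with the sum in the denominator running over all \(l\) surrogate models, the posterior takes the form

\[
P({\theta }_{k}|{x}_{j})=\frac{P({x}_{j}|{\theta }_{k})\,P({\theta }_{k})}{\sum_{r=1}^{l}P({x}_{j}|{\theta }_{r})\,P({\theta }_{r})},
\]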

where \(P({x}_{j}|{\theta }_{k})\) represents the likelihood function of surrogate model \(k\) at evaluated point \(j\), and \(P({\theta }_{r})\) represents the prior approximation of the \(r\)th surrogate model at evaluated point \(j\). In this paper, we assume that all surrogate models have the same prior approximation, that is, the prior approximation of each surrogate model on any evaluated point is \(P({\theta }_{r})=1/l\).

where \(\Delta \) is used to prevent the denominator from being 0; in this paper, \(\Delta =1\). \(\mathrm{abs}(\cdot )\) returns the absolute value, \(\mathrm{sep}\) denotes the selected individual, and \(n(j)\) represents the number of times the surrogate model has predicted the fitness of a selected individual at the evaluated point \(j\). In Eq. (6), \({\mathrm{prefit}}_{j,k}^{q}\) represents the fitness of the selected individual predicted by surrogate model \(k\) at evaluated point \(j\) for the \(q\)th iteration, and \({\mathrm{reafit}}_{\mathrm{sep}}^{q}\) represents the true fitness of the selected individual. In essence, Eq. (6) takes the error between each evaluated point and the selected individual in its neighborhood as a sample in Bayes’ theorem.

In addition, when the neighborhood of the individual contains multiple evaluated points, in addition to considering the credibility of each surrogate model in the neighborhood of the individual, it is also necessary to consider the distance between these evaluated points and the individual. The closer the evaluated point is to the individual, the higher the credibility is. Based on the above considerations, this paper takes the Euclidean distance between the individual and these evaluated points as the weight, and the calculation for the weight is as follows.

where \({\mathrm{wg}}_{j}\) represents the weight of evaluated point \(j\), \({d}_{i,j}\) represents the Euclidean distance between \(i\) and \(j\), and \(\mathrm{ne}(i)\) represents the number of evaluated points in the neighborhood of individual \(i\). Note that \(j\) lies in the neighborhood of \(i\). Figure 2 shows a schematic diagram of calculating the synthetic fitness.

In Fig. 2, the green squares represent individuals in the population, where solid green squares represent selected individuals and hollow squares represent ordinary individuals. I1 and I2 are selected individuals, and I3 is an ordinary individual. The fitness of the selected individuals is calculated on the real objective function after the population iteration. The yellow circles represent evaluated points. The blue curve represents the real objective function. The left and right brackets indicate the neighborhood range of an individual. A black solid arrow indicates that an evaluated point transmits the confidence of each surrogate model at its location to the individual, whose fitness is calculated using Eq. (3). A red dashed arrow indicates that after a selected individual is evaluated on the real objective function, the real fitness is fed back to the evaluated point, which adjusts the confidence of each surrogate model at its location through Eq. (6). As the figure shows, the surrogate models at each evaluated point undergo multiple confidence adjustments. In this paper, each error at the same evaluated point is expressed in the form of a probability and taken as a sample in Bayes’ theorem to approximate the true distribution through the posterior probability.
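The way the pieces of this section combine can be sketched numerically. The sketch takes the per-point likelihoods as given, applies Bayes' theorem with equal priors, and blends the surrogate predictions with distance-based weights. The inverse-distance form of \({\mathrm{wg}}_{j}\) and all function names are assumptions made for illustration; only the overall structure (posterior per evaluated point, distance weight per evaluated point, weighted sum) follows the description above.

```python
import numpy as np

def model_posteriors(likelihoods, prior=None):
    """Bayes' theorem per evaluated point; rows are points, columns are surrogate models."""
    likelihoods = np.asarray(likelihoods, dtype=float)
    n_models = likelihoods.shape[1]
    if prior is None:
        prior = np.full(n_models, 1.0 / n_models)   # equal priors, P(theta_r) = 1/l
    joint = likelihoods * prior
    return joint / joint.sum(axis=1, keepdims=True)

def distance_weights(individual, neighbors, eps=1e-12):
    """ASSUMPTION: inverse-distance weights normalized to sum to 1 (closer = more credible)."""
    d = np.linalg.norm(np.asarray(neighbors, dtype=float) - np.asarray(individual, dtype=float), axis=1)
    inv = 1.0 / (d + eps)
    return inv / inv.sum()

def synthetic_fitness(prefit, individual, neighbors, likelihoods):
    """Weighted sum over neighbors j and models k of wg_j * P(theta_k | x_j) * prefit_k."""
    post = model_posteriors(likelihoods)             # shape (ne, l)
    wg = distance_weights(individual, neighbors)     # shape (ne,)
    return float(wg @ (post @ np.asarray(prefit, dtype=float)))

# One individual, two evaluated points in its neighborhood, three surrogate models.
prefit = [1.0, 2.0, 4.0]                  # predictions of the three surrogates for this individual
neighbors = [[0.0, 0.0], [1.0, 0.0]]      # evaluated points near the individual
likelihoods = [[0.6, 0.3, 0.1],           # hypothetical local accuracy of each model at point 0
               [0.2, 0.5, 0.3]]           # and at point 1
fit = synthetic_fitness(prefit, [0.25, 0.0], neighbors, likelihoods)
```

Because the nearer evaluated point trusts the first surrogate most, the blended fitness is pulled toward that surrogate's prediction; if all surrogates agree, the blend returns their common value regardless of the weights.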

Intuitionistic fuzzy multiattribute decision making

With the special advantage of IFS in dealing with uncertain and fuzzy problems, IFMADM can be used to select the optimal plan that best meets the decision maker’s expectations in an uncertain environment. A common IFMADM process is as follows [50].

Step 1. Transform the attributes of all decision-making plans into the form of intuitionistic fuzzy sets and input the prior weight of each attribute in the form of intuitionistic fuzzy sets.

Step 2. Combine all the decision attribute weights expressed in the form of intuitionistic fuzzy sets into certainty weights. The transformation method is as follows.

In the above equation, \({\mu }_{w}({a}_{i})\) and \({\pi }_{w}({a}_{i})\) represent the degree of membership and hesitation of attribute \({a}_{i}\), respectively, and \(m\) represents the number of attributes. \(\alpha \in [0,1]\) and \(\beta \in [0,1]\) represent the importance of membership and hesitation, respectively, in IFMADM. Since there is no prior information, the weights of all decision attributes in this paper are equal, and \(\alpha =1\), \(\beta =0.5\).

Step 3. According to the weight, all attributes of the decision-making plan are combined into a comprehensive evaluation. The specific synthesis equation is as follows.

where \({A}_{i}\) is the intuitionistic fuzzy set of individual \(i\), which represents the comprehensive evaluation of decision-making plan \(i\). \({\mu }_{ij}\) represents the degree of membership of decision-making plan \(i\) on attribute \(j\), and \({\gamma }_{ij}\) represents the degree of nonmembership of decision-making plan \(i\) on attribute \(j\).

Step 4. Calculate the intuitionistic fuzzy distance between the comprehensive evaluation of each decision-making plan and the ideal plan.

The ideal plan G is defined as G = {\(\langle g,1,0\rangle \)}, that is, the degree of membership is 1, and the degree of nonmembership is 0. The negative ideal plan B is defined as B = {\(\langle b,0,1\rangle \)}, that is, the degree of membership is 0, and the degree of nonmembership is 1.

where \({\xi }_{i}\) represents the degree of similarity between decision-making plan \(i\) and the ideal plan. The closer \({\xi }_{i}\) is to 1, the closer the plan is to the ideal plan; the closer \({\xi }_{i}\) is to 0, the closer it is to the negative ideal plan. \(\mathrm{D}(X, Y)\) denotes the distance between any two intuitionistic fuzzy sets \(X\) and \(Y\). This paper uses the Hamming distance, which is given as follows.

where \({\mu }_{X}({a}_{i})\), \({\pi }_{X}({a}_{i})\), and \({\gamma }_{X}({a}_{i})\) represent the membership, hesitation, and nonmembership of the intuitionistic fuzzy set \(X\) on the attribute \({a}_{i}\), respectively; \({\mu }_{Y}({a}_{i})\), \({\pi }_{Y}({a}_{i})\), and \({\gamma }_{Y}({a}_{i})\) are the corresponding quantities for \(Y\); and \(m\) represents the number of attributes in the intuitionistic fuzzy set \(X\). Since all attributes in the IFMADM introduced in this paper have been combined into a comprehensive evaluation by Eq. (10), \(m=1\).

Step 5. Sort all \(\xi \) from largest to smallest, and the first one is the optimal decision-making plan. Therefore, the larger \({\xi }_{i}\) is, the more likely it is that individual \(i\) is a promising individual or an uncertain individual.
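Steps 3 to 5 can be sketched as below. Two choices in the sketch are assumptions rather than the formulas of this paper: attributes are aggregated with the intuitionistic fuzzy weighted averaging operator, and \({\xi }_{i}\) is computed as a TOPSIS-style closeness coefficient, the distance to the negative ideal plan divided by the sum of the distances to both ideal plans. The distance is the normalized Hamming distance with \(m=1\). The function names are hypothetical.

```python
import numpy as np

def hamming(a, b):
    """Normalized Hamming distance between two single-attribute IFS values (mu, gamma)."""
    mu_a, ga_a = a
    mu_b, ga_b = b
    pi_a, pi_b = 1.0 - mu_a - ga_a, 1.0 - mu_b - ga_b   # hesitation degrees
    return 0.5 * (abs(mu_a - mu_b) + abs(ga_a - ga_b) + abs(pi_a - pi_b))

def ifmadm_rank(mu, gamma, weights):
    """Rank plans (rows) described by membership/nonmembership on each attribute (columns)."""
    mu = np.asarray(mu, dtype=float)
    gamma = np.asarray(gamma, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    # ASSUMPTION: intuitionistic fuzzy weighted averaging as the aggregation step.
    agg_mu = 1.0 - np.prod((1.0 - mu) ** w, axis=1)
    agg_gamma = np.prod(gamma ** w, axis=1)
    ideal, negative = (1.0, 0.0), (0.0, 1.0)
    xi = []
    for m_i, g_i in zip(agg_mu, agg_gamma):
        d_good = hamming((m_i, g_i), ideal)
        d_bad = hamming((m_i, g_i), negative)
        # ASSUMPTION: closeness coefficient; 1 means ideal, 0 means negative ideal.
        xi.append(d_bad / (d_good + d_bad))
    xi = np.array(xi)
    return np.argsort(-xi), xi

# Three hypothetical plans scored on two attributes with equal weights.
mu = [[0.8, 0.7], [0.3, 0.2], [0.5, 0.6]]
gamma = [[0.1, 0.2], [0.6, 0.7], [0.3, 0.3]]
order, xi = ifmadm_rank(mu, gamma, weights=[1.0, 1.0])
```

Here `order[0]` is the plan that would be selected; in the setting of this paper the plans are the individuals in the archive and the attributes are the decision attributes extracted from each individual.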

The method of intuitionistic fuzzification of individual information

It is difficult to rely on the fitness information alone to verify whether an individual's area is the most promising area or the most uncertain area. Therefore, information from each individual is extracted from multiple perspectives as decision-making indicators for IFMADM to select individuals, thereby improving the accuracy of decision-making. Specifically, these include individual fitness, regional reliability, maximum fitness deviation, and regional unreliability. The first two are used to select promising individuals, and the latter two are used to select uncertain individuals.

Promising individual selection

In this paper, the fitness \(p{a}_{1}\) and regional reliability \({pa}_{2}\) of each individual are selected as the decision attributes of the promising individuals in IFMADM. In addition to using fitness measures to assess whether the quality of an individual is promising, the regional reliability of an individual is also measured. This is because the fitness of each individual on the surrogate model is not the real fitness of the objective function, so the distance between the individual and the evaluated point is taken as the decision attribute and used to measure the reliability of individual \(i\).

The fitness attribute \(p{a}_{1}\) of each individual is described by IFSs, and the conversion equation is as follows.

where \(\mathrm{prefit}\) is a matrix, \({\mathrm{prefit}}_{i,k}\) represents the fitness of individual \(i\) predicted by surrogate model \(k\), \(\mathrm{minprefit}\) represents the smallest element in \(\mathrm{prefit}\), \(\mathrm{fit}\) is a vector, \({\mathrm{fit}}_{i}\) represents the synthetic fitness (calculated by Eq. (3)) of individual \(i\), \(\mathrm{min fit}\) represents the smallest scalar in \(\mathrm{fit}\), and \(e\) is the natural constant.

In this paper, two types of individual fitness information are extracted as individual fitness attributes, the average fitness of each surrogate model and the synthetic fitness.

where \({\mu }_{i}(p{a}_{1})\), \({\pi }_{i}(p{a}_{1})\), and \({\gamma }_{i}({pa}_{1})\) represent the degree of membership, hesitation, and nonmembership of individual \(i\) on the attribute \(p{a}_{1}\), respectively, \(\mathrm{max }l\mathrm{fit}\) and \(\mathrm{min }l\mathrm{fit}\) represent the maximum and minimum values in \(l\mathrm{fit}\), respectively, and \(\mathrm{max }l\mathrm{prefit}\) represents the maximum value in \(l\mathrm{prefit}\). The factor 1/2 limits \({\pi }_{i}\) and \({\gamma }_{i}\) to [0, 0.5] to ensure that \({\mu }_{i}+{\pi }_{i}+{\gamma }_{i}=1\) holds. The factor 1/2 in the equations below has the same meaning.

Equation (15) represents the degree of support that individual \(i\) is the promising point. The higher the value is, the greater the degree of support that individual \(i\) is a promising individual, and vice versa. Equation (16) is a measure of the degree of hesitation that individual \(i\) is the promising point. As shown in Eq. (15), the larger \({\pi }_{i}\) is, the smaller \({\mu }_{i}\). Therefore, the larger Eq. (16) is, the less likely it is that individual \(i\) is the promising point, and vice versa. Equation (17) represents the degree of nonmembership. The higher the value is, the higher the opposition that individual \(i\) is the promising point, and vice versa.

The regional reliability attribute \(p{a}_{2}\) of each individual is described by IFSs, and the conversion equation is as follows.

where \({\mu }_{i}(p{a}_{2})\), \({\uppi }_{i}({pa}_{2})\), and \({\gamma }_{i}({pa}_{2})\) represent the degree of membership, hesitation, and nonmembership of the reliability attribute of individual \(i\), respectively, \(d\) is a vector, \({d}_{i}\) represents the Euclidean distance between individual \(i\) and its nearest evaluated point, \({d}_{\mathrm{max}}\) represents the maximum value of all \(d\), \(\mathrm{ne}\) is a vector, \(\mathrm{ne}(i)\) represents the number of evaluated points in the neighborhood of individual \(i\), \({\mathrm{ne}}_{\mathrm{max}}\) represents the number of evaluated points in the neighborhood with the most evaluated points, and max{} in the outer layer is used to prevent the denominator from being zero. Equation (18) indicates that the more evaluated points in the neighborhood of the individual there are, the more reliable the individual and the higher the reliability of supporting the individual as a promising individual. Equation (20) indicates that the farther the individual is from the nearest evaluated point, the less reliable the individual is, and the higher the reliability of opposing the individual as a promising individual.
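The two raw quantities behind the regional reliability attribute, \({d}_{i}\) and \(\mathrm{ne}(i)\), can be sketched as follows. The sketch assumes a box-shaped neighborhood with one radius per dimension; the helper names are hypothetical, and the conversion of these quantities into membership degrees (Eqs. (18)–(20)) is not reproduced here.

```python
import math
from typing import Sequence


def nearest_distance(x: Sequence[float],
                     evaluated: Sequence[Sequence[float]]) -> float:
    """d_i: Euclidean distance from x to its nearest evaluated point."""
    return min(math.dist(x, p) for p in evaluated)


def neighbor_count(x: Sequence[float],
                   evaluated: Sequence[Sequence[float]],
                   radius: Sequence[float]) -> int:
    """ne(i): number of evaluated points inside the neighborhood of x.

    Assumption: the neighborhood is an axis-aligned box centered at x
    with half-width radius[j] in dimension j.
    """
    return sum(
        all(abs(xj - pj) <= rj for xj, pj, rj in zip(x, p, radius))
        for p in evaluated
    )
```

A larger `neighbor_count` supports the individual as reliable, while a larger `nearest_distance` opposes it, which matches the roles described for Eqs. (18) and (20).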

Uncertain individual selection

In this paper, the maximum fitness deviation \({ua}_{1}\) and regional unreliability \({ua}_{2}\) are selected as intuitionistic fuzzy decision attributes. These attributes are chosen for two reasons. First, the larger the difference between the fitness values predicted for an individual by the different surrogate models is, the higher the uncertainty of the individual. Second, the farther an individual is from the evaluated points, the lower the reliability of the individual.

The maximum fitness deviation \({ua}_{1}\) of each individual on the surrogate model is described by an IFS, and the conversion equation is as follows.

where \(\mathrm{max prefi}{\mathrm{t}}_{i}\) and \(\mathrm{min prefi}{\mathrm{t}}_{i}\) represent the maximum and minimum fitness of individual \(i\) on all surrogate models, respectively. Equation (21) is used to calculate the maximum difference of individual \(i\) in the surrogate model.

where \({\mu }_{i}(u{a}_{1})\), \({\uppi }_{i}({ua}_{1})\), and \({\gamma }_{i}({ua}_{1})\) represent the degree of membership, hesitation, and nonmembership of individual \(i\) on attribute \(u{a}_{1}\), respectively, and \(\mathrm{maxprefit}\) and \(\mathrm{minprefit}\) represent the maximum and minimum fitness in all surrogate models for all individuals, respectively. Equation (22) uses the maximum difference of individual \(i\) on the surrogate models to describe the degree of membership of individual \(i\) as an uncertain individual. The greater the difference is, the more uncertain the individual is, and vice versa. Equation (23) uses the magnitude of the fitness difference to express the hesitation that individual \(i\) is an uncertain individual. Equation (24) expresses the degree of opposition to the unreliability of the individual. The larger \({\gamma }_{i}(u{a}_{1})\) is, the higher the reliability of individual \(i\), and vice versa.
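As a small illustration of Eq. (21) only, the maximum fitness deviation is the spread of the predictions that the surrogate models give for the same individual. The function name is hypothetical, and the membership conversions of Eqs. (22)–(24) are deliberately left out.

```python
from typing import List, Sequence


def max_fitness_deviation(prefit: Sequence[Sequence[float]]) -> List[float]:
    """Spread of the surrogate predictions for each individual.

    prefit[i][k] is the fitness of individual i predicted by surrogate k.
    """
    return [max(row) - min(row) for row in prefit]
```

For example, an individual predicted as 1.0, 2.5, and 2.0 by three surrogates has a deviation of 1.5, whereas an individual on which all surrogates agree has a deviation of 0.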

The regional unreliability \({ua}_{2}\) of each individual is described by an IFS, and the conversion equation is as follows.

The definitions of the parameters in Eq. (25) are consistent with those in Eq. (20), while the definitions of the parameters in Eq. (27) are consistent with those in Eq. (18).

Both the regional reliability attribute and the regional unreliability attribute use two pieces of information, the distance of the nearest evaluated point and the number of evaluated points in the neighborhood. The more evaluated points there are in the individual neighborhood and the closer they are to the evaluated points, the higher the regional reliability, which is the opposite of regional unreliability. Therefore, the membership degree and nonmembership calculation methods of reliability and unreliability are opposite each other.

Through the above conversion equation, the information contained in the individuals is expressed by intuitionistic fuzzy sets, and promising individuals and uncertain individuals are determined. Its pseudo code is similar to Algorithm 1.

RESAPSO process

The PSO, BES, IFMADM, and intuitionistic fuzzification methods are described in detail above. The remaining details of RESAPSO are as follows: The initial model is constructed using Latin hypercube sampling (LHS), which is commonly used in SAEA. The kriging model, radial basis function neural network, and polynomial regression are used as surrogate models. As mentioned above, the algorithmic pseudocode is given in Algorithm 2.

Experimental analysis

Neighborhood selection

There are two main functions of the neighborhood. The first function is to select the evaluated points used to estimate fitness. In this case, if the neighborhood is too large, the evaluated points that are too far away can provide useless surrogate model fitting information, while if the neighborhood is too small, there will be no evaluated points in the neighborhood to provide model fitting information. The second function is to calculate the fitting accuracy of the surrogate model on the evaluated points. In this case, a neighborhood that is too large will cause the wrong fitting information to be learned, while a neighborhood that is too small will cause the evaluated points to be unable to be updated for a long time. Therefore, the setting of the neighborhood range should change with the change in the dimension, and the following equation should be satisfied.

where \(\mathrm{dim}\) represents the dimension, \({ub}_{i}\) and \({lb}_{i}\) represent the upper and lower bounds of the \(i\)th dimension of the search space, respectively, and \({neig}_{i}\) represents the neighborhood size in the \(i\)th dimension. The left part of Eq. (28) represents the hypervolume of the search space, the cumulative product on the right represents the hypervolume of the neighborhood, and 5 × dim on the right represents the number of initial sampling points. In the case that the initial sampling points are evenly distributed, the above equation allows each individual to have an evaluated point in the neighborhood. In the case of a nonuniform distribution, some individuals can be affected by multiple evaluated points, thereby improving the estimation accuracy.

The following equation can be obtained by transforming Eq. (28).

In Eq. (29), \({x}_{i}\) represents the ratio of the maximum length to the neighborhood on the \(i\)th dimension.

Assuming that the shape of the neighborhood is proportionally reduced in the search space, that is, \({x}_{1}={x}_{2}=\cdots ={x}_{\mathrm{dim}}\), the following equation can be used to calculate the neighborhood radius.

The 1/2 in Eq. (30) expresses the neighborhood in the form of a radius.
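The derivation can be checked numerically with a short sketch. It assumes that Eq. (28) holds with equality, so that \({x}_{i}={(5\times \mathrm{dim})}^{1/\mathrm{dim}}\) in every dimension; the function name and the `samples_per_dim` parameter are hypothetical.

```python
from typing import List, Sequence


def neighborhood_radius(lb: Sequence[float],
                        ub: Sequence[float],
                        samples_per_dim: int = 5) -> List[float]:
    """Per-dimension neighborhood radius under the equal-ratio assumption.

    Assumes: prod(ub - lb) = (samples_per_dim * dim) * prod(neig),
    with the same ratio x = (ub_i - lb_i) / neig_i in every dimension.
    """
    dim = len(lb)
    n_samples = samples_per_dim * dim       # number of initial LHS points
    ratio = n_samples ** (1.0 / dim)        # x_1 = ... = x_dim
    # The factor 0.5 converts the neighborhood width into a radius.
    return [0.5 * (u - l) / ratio for l, u in zip(lb, ub)]
```

For a 10-dimensional search space of [−100, 100] in every dimension, this gives a radius of roughly 67.6 per dimension, because \({50}^{1/10}\approx 1.479\).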

BES and IFMADM strategy performance tests

The experiment in this section verifies the effectiveness of the proposed BES and IFMADM by executing RESAPSO on different benchmark functions. The benchmark function is shown in Table 2.

RESAPSO is executed independently 20 times on each benchmark function. Each execution of RESAPSO outputs the fitting results of the kriging model, polynomial regression model, radial basis function neural network model, and BES, and the average of the 20 fitting results is the final result. In addition, the 20 test results are sorted from smallest to largest according to the optimal fitness searched, and all the evaluated points of the test results corresponding to the median are taken as the experimental results of IFMADM.

The parameter settings of RESAPSO are as follows. The optimizer (PSO) parameters are as follows: population size popsize = 100, maximum number of iterations maxiter = 500, c1 = c2 = 2, and w = 1.2 − 0.8 × (t/maxiter), where t represents the number of iterations at runtime. Initially, the sampling number of LHS is 5 × dim, where dim represents the dimension of the problem. The neighborhood parameters required by BES can be calculated using Eq. (30). The surrogate models used in this paper include the kriging model, radial basis function neural network, and polynomial regression model, which are implemented using the SURROGATES toolbox [51].
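Two of these settings are easy to make concrete: the linearly decreasing inertia weight and the initial Latin hypercube sample of 5 × dim points. The following is a minimal standard-library sketch, not the SURROGATES toolbox implementation used in the experiments; the function names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def inertia_weight(t: int, maxiter: int) -> float:
    """w = 1.2 - 0.8 * (t / maxiter): decreases from 1.2 to 0.4."""
    return 1.2 - 0.8 * (t / maxiter)


def latin_hypercube(n: int,
                    lb: Sequence[float],
                    ub: Sequence[float],
                    rng: random.Random) -> List[Tuple[float, ...]]:
    """Draw n points so that each dimension has one point per stratum."""
    columns = []
    for low, high in zip(lb, ub):
        strata = list(range(n))
        rng.shuffle(strata)  # independent random pairing per dimension
        columns.append(
            [low + (s + rng.random()) / n * (high - low) for s in strata]
        )
    # Transpose the per-dimension columns into n points.
    return [tuple(col[i] for col in columns) for i in range(n)]


dim = 10
initial_points = latin_hypercube(5 * dim, [-100.0] * dim, [100.0] * dim,
                                 random.Random(0))
```

The stratification is what distinguishes LHS from plain uniform sampling: every one of the `n` equal-width intervals in each dimension contains exactly one sample.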

In Fig. 3, predict represents the model obtained using BES, while real represents the real objective function. KRG represents the kriging model, and PR represents the polynomial regression model. In addition, RBF represents the radial basis function neural network, and the red asterisk represents the evaluated point. From the analysis of the above four functions, it can be found that for different objective functions, different surrogate models have their advantages and disadvantages. The best model on Ackley is the RBFNN model, and the worst is the kriging model. The worst model on Rastrigin is the RBFNN model, and the best is the PR model. On Griewank, because there are few evaluated points, it is impossible to fit such a complicated model, so the fitting effect is generally poor. The best model on Schwefel is the RBF model, and the worst is the PR model. In particular, the best fitting effect is the kriging model in the interval [40,60] of the Schwefel function. Therefore, to obtain better fitness prediction results, the algorithm needs to adaptively adjust the approximation of each surrogate model to different objective functions or even in different regions and use the approximation to synthesize the estimated fitness.

From the above 4 functions, it can be seen that the various surrogate models are better balanced when BES is implemented. On Ackley and Griewank, although there is a large deviation from the real objective function using the kriging model, with the help of BES, RESAPSO can correctly identify the degree of approximation between each surrogate model and the real objective function. Thus, the kriging model does not have a significant impact on the estimated results. RESAPSO has been completely approximated to the real objective function in the interval [− 100,60] of the Rastrigin function. A better approximation degree is obtained using RESAPSO compared with any surrogate model in the intervals [− 100, 70], [− 40, 20], and [65, 90] of the Schwefel function.

In addition, Fig. 3 shows that different surrogate models have different characteristics. The kriging model is easy to overfit. For example, the curve amplitude of the kriging model on the Ackley function is larger; thus, this model is more suitable for a complex multimodal function. The PR model has difficulty fitting curves with large changes, such as the Griewank and Schwefel functions. This model is suitable for a unimodal function with little change. The RBF model is more balanced, and good performance is obtained, which is one of the reasons why most SAEAs use RBF as the surrogate model.

In addition, from the evaluated points depicted in the above four functions, it can be found that the distribution of evaluated points is relatively uniform, and clustering occurs only at extreme points. This is because a promising individual and an uncertain individual are selected in each decision. This measure not only ensures the exploratory ability of RESAPSO but also allows the regions where the global optimum may exist to be fully exploited.

Comparative experiment

Experimental design

The experiments are run in MATLAB 2018a on a 64-bit Windows 10 system with an AMD Ryzen 7 5800X CPU and 16 GB of memory. The comparative experiment comprehensively examines the performance of RESAPSO from three aspects: accuracy, convergence speed, and stability. The benchmark functions are CEC2015 10D and CEC2017 10D, and their search spaces are both \({[-100,100]}^{D}\) [52, 53]. The maximum number of real function evaluations (FEs) is 11 × dim, and each algorithm runs independently 20 times. In this paper, the following SAEAs are selected as comparison algorithms: CAL-SAPSO [17], which also uses PSO as the optimizer and RBFNN, PR, and the kriging model as surrogate models; SA-COSO [12] for high-dimensional and computationally expensive problems; SHPSO [40], which mainly improves the search strategy; and ESAO [54] and SA-MPSO [55], which have been proposed in recent years. The parameter settings of the comparison algorithms follow those of the original papers. The RESAPSO parameter settings are described in the “BES and IFMADM strategy performance tests” section.

Accuracy comparison

To compare the convergence accuracy more comprehensively, the accuracy of each algorithm is compared on CEC2015 10D and CEC2017 10D. In CEC2015, F1–F2 are unimodal functions, F3–F9 are multimodal functions, F10–F12 are hybrid functions, and F13–F15 are composition functions. In CEC2017, F1–F3 are unimodal functions, and F4–F10 are multimodal functions. Additionally, F11–F20 are hybrid functions, and F21–F30 are composition functions.

In addition, to compare the performance of each algorithm more comprehensively, Friedman ranking is introduced in the experiment. Friedman ranking is used to obtain the average ranking of each algorithm on all functions according to the ranking of each algorithm in each function. Therefore, the smaller the score is, the better the overall performance of the algorithm. The Wilcoxon signed rank test with a significance level of 0.05 is used. The statistical results on CEC2015 and CEC2017 are shown in Tables 3 and 4, respectively.
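The Friedman ranking described above amounts to ranking the algorithms on each function and averaging the ranks. The sketch below assumes minimization and average ranks for ties; the function names are hypothetical, and any input data are placeholders rather than results from the tables.

```python
from typing import Dict, List, Sequence


def rank_with_ties(values: Sequence[float]) -> List[float]:
    """Ranks starting at 1 (smallest value is best); ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2.0 + 1.0
        for k in range(pos, end + 1):
            ranks[order[k]] = mean_rank
        pos = end + 1
    return ranks


def friedman_average_rank(results: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """Average rank of each algorithm over all benchmark functions.

    results[name][f] is the mean error of algorithm `name` on function f.
    """
    names = list(results)
    n_funcs = len(results[names[0]])
    totals = {name: 0.0 for name in names}
    for f in range(n_funcs):
        ranks = rank_with_ties([results[name][f] for name in names])
        for name, rank in zip(names, ranks):
            totals[name] += rank
    return {name: totals[name] / n_funcs for name in names}
```

An algorithm that ranks first on every function obtains the minimum possible score of 1.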

Table 3 shows the mean and standard deviation of each algorithm on CEC2015 10D. In Table 3, Friedman represents the Friedman ranking, and U/M/H/C represents the number of functions ranked first for each algorithm for the unimodal, multimodal, hybrid, and composition functions, respectively. + , −, and = indicate that RESAPSO is significantly better than the comparison algorithm, significantly inferior to the comparison algorithm, and has no significant difference from the comparison algorithm, respectively. Table 3 shows that RESAPSO ranks first among the 13 functions with respect to the mean value. SHPSO, CAL-SAPSO, SA-COSO, ESAO, and SA-MPSO rank first for 3, 2, 2, 0, and 1 functions, respectively. RESAPSO is significantly better than the other algorithms for unimodal functions, approximately \({10}^{5}\) times better than the other algorithms in terms of F1, and approximately \({10}^{6}\) times better than the other algorithms in terms of F2. There are two reasons for these results. First, in each IFMADM decision, one promising individual and one uncertain individual are selected. When the objective function is a unimodal function, half of the computing resources are therefore used to exploit the global optimum. Second, IFMADM can be used to select promising individuals more efficiently to improve the accuracy of local exploitation. For multimodal functions (F3–F9), RESAPSO outperforms the other algorithms for a total of 4 multimodal functions. This is because IFMADM can comprehensively evaluate all individuals based on the search history information of the PSO and obtain the most uncertain individual, thereby improving the fitting ability of each surrogate model and then improving the global optimization ability of RESAPSO. For the hybrid functions, RESAPSO, SHPSO, and SA-MPSO each rank first for one function. SHPSO, CAL-SAPSO, and SA-COSO each rank first for one composition function. In addition, RESAPSO's Friedman rank is 2.4, which indicates that RESAPSO has a better overall performance.

Table 4 shows the mean and standard deviation of each algorithm on CEC2017 10D. It can be seen from the table that RESAPSO ranks first for 12 functions. SHPSO, CAL-SAPSO, SA-COSO, ESAO, and SA-MPSO rank first for 4, 4, 0, 1, and 9 functions, respectively. For the unimodal functions (F1–F3), the mean value using RESAPSO is more than \({10}^{5}\) times better than that of the other algorithms for F1. Benefiting from the expressiveness and discernibility of intuitionistic fuzzy sets on uncertain problems, RESAPSO can be used to analyze the region where the extreme point is most likely to exist. Therefore, better results can be achieved using RESAPSO for a unimodal function with only one extreme point. For the multimodal functions (F4–F10), RESAPSO ranks first for three functions and performs better than the other algorithms. This indicates that IFMADM can be used to accurately select unexplored regions. Thus, the surrogate model can have a better global fit, improving the exploration ability of RESAPSO. RESAPSO has the best overall performance on the hybrid functions (F11–F20), ranking first for 6 functions. This is because the hybrid functions are more complicated. A single surrogate model cannot fit a unimodal function and a multimodal function well at the same time, and an SAEA based on a multimodel strategy cannot effectively distinguish how well the different surrogate models fit in different neighborhoods. Therefore, neither approach achieves better performance. RESAPSO uses BES to dynamically and adaptively analyze the fit of each model in the region, thereby improving the reliability of RESAPSO’s estimation of individual fitness. Therefore, RESAPSO has a greater advantage with respect to hybrid functions. RESAPSO is slightly inferior to SA-MPSO with respect to the composition functions and ranks second. In summary, RESAPSO has more advantages for most functions.

In addition, RESAPSO ranks first in terms of the Friedman rankings on CEC2015 and CEC2017, with scores of 2.4 and 1.8, respectively, which shows that RESAPSO has better solution accuracy and optimization efficiency than the latest SAEAs and has stronger comprehensive optimization capabilities.

Convergence curve comparison

Although the convergence accuracy can reflect the searching ability of each algorithm on each benchmark function, the internal details of the algorithm cannot be well reflected. Therefore, a convergence curve diagram is drawn based on the experimental results on CEC2017 10D to analyze the exploration and exploitation capabilities of RESAPSO.

It can be seen from Fig. 4 that for functions F1, F4–F9, F12–F15, and F18, there is a relatively obvious rapid decline process in the later stage of RESAPSO operation. There are two reasons for this situation. First, in the early stage of RESAPSO, the uncertain individuals selected by IFMADM explore the search space better, so the selected promising individuals are closer to the global optimum. Second, the fitness of each individual can be more accurately estimated using BES, providing PSO with more reliable fitness estimates so that it can find the global optimum on a model that is more similar to the real objective function. Therefore, RESAPSO performs better on unimodal functions, multimodal functions, and hybrid functions that can be decomposed into unimodal functions and multimodal functions. On F27, F28, and F30, RESAPSO is better than the other algorithms. For functions F22 and F25, RESAPSO also has a faster search speed in the later stage. The results of the algorithms for functions F23 and F29 are similar.

The simulation experiment of the above convergence curve shows that RESAPSO can maintain high convergence accuracy for all 30 functions. Compared with the comparison algorithm for the unimodal, multimodal, and hybrid functions, RESAPSO has a rapid convergence ability. Even for more complex combined functions, RESAPSO can still maintain a continuous and stable convergence rate.

Convergence stability comparison

In multiple independent tests, the minimum, median, maximum, upper quantile, and lower quantile are simultaneously considered in a boxplot, and outliers are identified quickly. Thus, compared with the standard deviation, a boxplot can describe the overall stability of an algorithm in more detail and display it more intuitively in the form of a graph. Therefore, a boxplot based on the CEC2017 10D data is drawn to analyze the stability of each algorithm.

Figure 5 shows the boxplots of the experimental results of all algorithms on CEC2017 10D. For the unimodal functions, the stability and accuracy of RESAPSO on function F1 are significantly better than those of the other algorithms. On F2, SHPSO performs best, and the accuracy of RESAPSO on F3 is similar to that of SHPSO. This proves the effectiveness of RESAPSO in terms of the local search. The reason is that the proposed fuzzy information extraction method enables IFMADM to effectively distinguish promising individuals in the population, thereby improving the local development capabilities of RESAPSO. For multimodal functions, RESAPSO has better accuracy and stability for F8–F10. Although the accuracy values for F6 and F7 using RESAPSO are not as good as those using SA-MPSO and CAL-SAPSO, the equal accuracy is better. For hybrid functions, the stability and accuracy values of RESAPSO for functions F12–F15, F18, and F19 are better than those of the other algorithms. This is because a hybrid function is more complicated. RESAPSO can better identify the fit of each surrogate model in the neighborhood through BES and provide a more reliable estimate for IFMADM. IFMADM is used to analyze the fuzzy information of individuals to select promising individuals and uncertain individuals for local development and global exploration of the search space to achieve better optimization results. Among all the composition functions, RESAPSO has better stability in general. Although the optimal results for F29 using RESAPSO are not as good as those using SHPSO, RESAPSO has better stability. Although the stability of RESAPSO for F30 is slightly worse than the other algorithms, its accuracy is better.

Combining the boxplot results of the 30 benchmark functions, better stability can be maintained for the multimodal function and the composition function using RESAPSO. For the unimodal function and the hybrid function, better stability and higher accuracy are obtained using RESAPSO.

RESAPSO for feature selection and hyperparameter optimization in landslide displacement prediction

China is a region with frequent geological disasters. In 2021, there were 4772 geological disasters in China, of which 2335 were landslides, and the economic loss reached 3 billion yuan. The Three Gorges area is an important economic hub in the middle and lower reaches of the Yangtze River in China. The abundant rainfall and high reservoir water level also make this area a landslide-prone place. Along with landslides, a large amount of loess, sediment, and vegetation are poured into the river, blocking the river’s course, increasing the water level, and eroding the slope, thus increasing the landslide displacement [56]. During the dry season, the landslide displacement rate is slow. The transition between rainy and dry seasons causes the formation of step landslides, which can easily cause landslide disasters.

Through memory units, LSTM neural networks can better maintain the feature dependence among long sequences, and thus are suitable for solving complex nonlinear systems such as landslides, which are strongly influenced by time. However, the influencing factors of landslide are complex and diverse, and different influencing factors and hyperparameters of LSTM can affect the prediction accuracy of the model. Therefore, this paper will use RESAPSO for feature selection and LSTM hyperparameter optimization to improve the effectiveness of landslide displacement prediction.

The deep learning environment configuration in the experiment is as follows: Python 3.8, Keras 2.08. The LSTM neural network is built with the Keras library and encapsulated as a function that is called from MATLAB. Other software and hardware environments are described above.

The Baijiabao landslide [57] is located on the right bank of the Xiangxi River, a first-class tributary of the Yangtze River in the Three Gorges reservoir area (110° 45′ 33.4″ E, 30° 58′ 59.9″ N), 2.5 km from the mouth of the Xiangxi River. The average width of the landslide is about 400 m, the longitudinal length is about 550 m, the average thickness is 45 m, the area is 2.2 × 10^5 m^2, and the volume is 9.9 × 10^6 m^3. The engineering geological plan of the Baijiabao landslide is shown in Fig. 6.

In Fig. 6, the red line represents the landslide boundary, and the yellow triangles represent the installation locations of the detectors. The sensing data recorded at location ZG323 in the figure are used in this paper.

ZG323 records the local monthly displacement data. In addition, the daily rainfall and daily reservoir level were obtained from the nearby reservoir. In this paper, the monthly reservoir water level is obtained by averaging the daily reservoir level. The monthly rainfall, reservoir level, and displacement data of ZG323 are plotted in Fig. 7.
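The monthly averaging of the daily reservoir level can be sketched as below. The date format (`YYYY-MM-DD` strings) and the function name are assumptions made for illustration, and any values passed in are placeholders rather than measured data.

```python
from collections import defaultdict
from typing import Dict


def monthly_mean(daily: Dict[str, float]) -> Dict[str, float]:
    """Average daily values per month.

    Keys of `daily` are assumed to be ISO dates (YYYY-MM-DD);
    keys of the result are months (YYYY-MM).
    """
    buckets = defaultdict(list)
    for day, value in daily.items():
        buckets[day[:7]].append(value)  # group by the YYYY-MM prefix
    return {month: sum(v) / len(v) for month, v in sorted(buckets.items())}
```

This aligns the daily reservoir series with the monthly displacement record of ZG323.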

Figure 7 shows the rainfall, reservoir water level, and displacement curves from March 2007 to September 2018, where the histogram represents rainfall, the blue dashed line represents the reservoir water level, and the red solid line represents the cumulative displacement. It can be seen from the figure that the water level of the reservoir decreases significantly during the rainy season and rises significantly when the rainy season ends. This is because the rainfall process has an obvious hysteresis effect. Whenever the rainy season approaches, the reservoir releases water in advance, lowering the reservoir water level to prevent it from exceeding the warning level. In addition, during the rainy season, the displacement curve grows by a large amount, which indicates that rainfall has a large impact on landslide displacement. The opposite is true for the reservoir level: landslide displacement accelerates when the reservoir level drops. However, rainfall and reservoir level usually have hysteresis effects on landslide displacement, so it is necessary to select appropriate features to avoid the model learning useless and redundant features. Therefore, this paper uses the reservoir water level and rainfall as the training features of the model.

The landslide data are available monthly, and the predicted value is the cumulative landslide displacement. In order to better train the model, this paper extracts a variety of features from the reservoir water level and rainfall. After extraction, the feature set contains a total of 10 features, and each feature and its description are shown in Table 5.

The hyperparameters of LSTM include the time step, number of units, and input gate activation function. For each feature, there are only two possibilities, selected or not selected. Thus, the features can be encoded in binary form, and the 10 features can be converted into two binary strings of length 5. Since rainfall and reservoir levels are recorded on a daily basis, the interval size of the input data along the time sequence is adjusted by the time step to prevent random noise from influencing the model. There are three optional activation functions, namely, the sigmoid, tanh, and ReLU functions. Therefore, the search space is 5-dimensional and constitutes the following objective function.

In Eq. (31), \({m}_{\mathrm{test}}\) represents the number of test samples, and abs(·) represents the absolute value function. \(f({x}_{i},\Theta )\) represents the loss of the sample obtained after inputting the sample \({x}_{i}\) into the prediction model, and the prediction model structure is controlled by the variable \(\Theta \). \({\theta }_{1}\), \({\theta }_{2}\), \({\theta }_{3}\), \({\theta }_{4}\), and \({\theta }_{5}\) represent the 5 scalars of \(\Theta \). \({\theta }_{1}\) is a decimal number, which represents features 1–5. \({\theta }_{2}\) is a decimal number, which represents features 6–10. \({\theta }_{3}\) represents the time step. \({\theta }_{4}\) represents the number of LSTM units. \({\theta }_{5}\) represents the activation function of the input gate of the LSTM. There are three options: sigmoid (\({\theta }_{5}=0\)), tanh (\({\theta }_{5}=1\)), and ReLU (\({\theta }_{5}=2\)). All scalars of \(\Theta \) are rounded from continuous values to discrete values when constructing the model. The loss function of the trained LSTM model is the mean square error. Finally, multiplying by 100 amplifies the difference between individuals. The LSTM optimization process based on RESAPSO is shown in Fig. 8.
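The decoding of \(\Theta \) can be sketched as follows. The exact bit layout is not given in the text, so the sketch assumes that \({\theta }_{1}\) and \({\theta }_{2}\) take values in 1–32 and that \(\theta -1\) is read as a 5-bit mask with the most significant bit corresponding to the first feature of the group; under this assumption a value of 32 selects all five features. The fitness function assumes that Eq. (31) is the mean absolute per-sample loss multiplied by 100. All names are hypothetical.

```python
from typing import List, NamedTuple, Sequence

ACTIVATIONS = ("sigmoid", "tanh", "relu")  # theta_5 = 0, 1, 2


class LstmConfig(NamedTuple):
    features: List[bool]  # selection flags for features 1..10
    time_step: int
    units: int
    activation: str


def decode_mask(theta: float, n_bits: int = 5) -> List[bool]:
    """Assumed encoding: theta in 1..2**n_bits, theta - 1 is the bit mask."""
    code = min(max(int(round(theta)), 1), 2 ** n_bits) - 1
    # Most significant bit first, so index 0 is the first feature of the group.
    return [bool((code >> (n_bits - 1 - b)) & 1) for b in range(n_bits)]


def decode(theta: Sequence[float]) -> LstmConfig:
    """Round the continuous particle position to a discrete configuration."""
    t1, t2, t3, t4, t5 = theta
    activation = ACTIVATIONS[min(max(int(round(t5)), 0), 2)]
    return LstmConfig(
        features=decode_mask(t1) + decode_mask(t2),
        time_step=max(1, int(round(t3))),
        units=max(1, int(round(t4))),
        activation=activation,
    )


def fitness(sample_losses: Sequence[float]) -> float:
    """Assumed form of Eq. (31): 100 * mean(|loss_i|) over the test samples."""
    return 100.0 * sum(abs(v) for v in sample_losses) / len(sample_losses)
```

In a full pipeline, `decode` would be called on each particle before building and training the corresponding LSTM, and `fitness` would be applied to the per-sample test losses of that model.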

To test our algorithm, we compare it with the current mainstream methods, including hand-tuned LSTM, PSO, and Bayesian optimization (BO). Since the grid method requires manual setting of the grid width, it is not included. For PSO, we test both 100 and 500 FEs to verify the performance of our algorithm. In PSO, the following common parameter settings are adopted [58]: \({c}_{1}={c}_{2}=1.49445\) and \(w=0.9-0.5\times (t/\mathrm{maxiter})\), where \(\mathrm{maxiter}\) represents the maximum number of iterations. The RESAPSO parameter settings are described in the “BES and IFMADM strategy performance tests” section. There are 100 FEs for BO and RESAPSO. The hand-tuned LSTM model considers all training features, i.e., θ1 = 32 and θ2 = 32, and its hyperparameters are θ3 = 5, θ4 = 1, and θ5 = 1. For training LSTM, the maximum training epoch is 150. To prevent random interference from heuristic algorithms, except for hand-tuned LSTM, each algorithm uses the average of 10 independent tests as the statistical result. In addition, to prevent random interference during LSTM neural network training, 10 networks are constructed from each optimal solution Θbest and trained independently, and the average loss of these 10 networks is used as the fitness of the optimal solution Θbest. The Wilcoxon signed rank test with a significance level of 0.05 is used. The final statistical results are shown in Table 6.

Note that the standard deviation of the LSTM model in Table 6 is the result of ten training sessions under one set of hyperparameters, while in the remaining models, it is the result of ten sets of hyperparameters optimized by RESAPSO or PSO.

The + and = symbols in Table 6 indicate that RESAPSO is significantly better than the comparison algorithm and that there is no significant difference between RESAPSO and the comparison algorithm, respectively. PSO-LSTM (100) and PSO-LSTM (500) denote PSO with 100 and 500 FEs, respectively, and LSTM denotes an LSTM model that does not use an optimization algorithm. Table 6 shows that the prediction accuracy of RESAPSO is better than that of PSO (100), PSO (500), Bayesian optimization, and manual tuning. In addition, the prediction accuracy of RESAPSO is significantly better than that of PSO (100), and there is no significant difference between RESAPSO and either PSO (500) or BO. This means that, in the feature selection and hyperparameter optimization of landslide displacement prediction, RESAPSO with 100 FEs and PSO with 500 FEs have the same ability. In summary, RESAPSO needs only about one-fifth of the optimization time of PSO (100 versus 500 FEs) to achieve the same prediction accuracy, which greatly reduces the optimization time while maintaining the prediction accuracy. The landslide displacement prediction result is shown in Fig. 9.

In Fig. 9, the regions with large gaps between algorithms are enlarged to make the comparison clearer. As shown in the figure, in the Three Gorges area, the period from August to February of the following year is the non-rainy season, during which the landslide is in a gentle creep period, and all models predict the displacement well. During the rainy season from March to July each year, the landslide displacement is more intense and appears “step-like”, which is usually difficult for a prediction model to predict accurately. The prediction of the step-like period is the most critical part of landslide displacement prediction because a drastic displacement change is likely to cause a landslide disaster. As can be seen from the figure, in the maximum displacement interval [980–1100 mm] from 2016-3 to 2016-7, RESAPSO-LSTM is closer to the measured values than the other algorithms. From 2017-3 to 2017-7, it performed slightly worse. In the minimum displacement interval [1200–1250 mm] from 2018-3 to 2018-7, the RESAPSO-LSTM prediction is the closest to the actual displacement, while PSO500-LSTM and LSTM predicted a large fluctuation in displacement. These results show that the RESAPSO-LSTM model can accurately predict both larger and smaller displacement changes during landslide step-like periods.

Conclusion

To address computationally expensive problems, this paper proposes a reliability-enhanced surrogate-assisted particle swarm optimization algorithm, RESAPSO. To solve the adaptive weighting problem of the multi-surrogate ensemble strategy, this paper considers the influence of the neighborhood on the accuracy of the surrogate model and proposes a Bayesian evaluation strategy that uses the posterior probability as the confidence of each surrogate model. Taking the confidence of the evaluated points in the neighborhood as sample points, Bayes' theorem is used to calculate the posterior probability, which serves as the confidence of the surrogate model in that neighborhood; multiple surrogate models can thus be integrated reasonably, which improves the fitness prediction accuracy. In addition, to select promising and uncertain points more reasonably, this paper uses intuitionistic fuzzy sets to extract multi-dimensional decision attributes from individuals and uses IFMADM to select promising and uncertain points.
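The idea of weighting several surrogates by a posterior confidence can be illustrated with a generic Python sketch. It is a simplified stand-in, not the Bayesian evaluation strategy defined in this paper. It assumes a uniform prior over surrogates and a Gaussian likelihood of each surrogate's prediction errors at already-evaluated neighboring points. The function names and the `sigma` parameter are hypothetical.

```python
import math
from typing import Dict, Sequence


def posterior_weights(
    neighbor_errors: Dict[str, Sequence[float]], sigma: float = 1.0
) -> Dict[str, float]:
    """Posterior P(surrogate | neighborhood) via Bayes' theorem.

    Assumptions (not from the paper): uniform prior over surrogates and an
    independent Gaussian likelihood with scale `sigma` for each error.
    """
    log_like = {
        name: -sum(e * e for e in errs) / (2.0 * sigma ** 2)
        for name, errs in neighbor_errors.items()
    }
    top = max(log_like.values())  # subtract the max for numerical stability
    unnorm = {name: math.exp(v - top) for name, v in log_like.items()}
    total = sum(unnorm.values())
    return {name: u / total for name, u in unnorm.items()}


def ensemble_prediction(
    predictions: Dict[str, float], weights: Dict[str, float]
) -> float:
    """Confidence-weighted average of the surrogates' fitness predictions."""
    return sum(weights[name] * predictions[name] for name in predictions)


# Hypothetical errors of three surrogates at two evaluated neighbors.
errors = {"kriging": [0.1, -0.2], "rbfnn": [0.5, 0.4], "pr": [1.0, -1.2]}
weights = posterior_weights(errors)
fused = ensemble_prediction({"kriging": 3.0, "rbfnn": 3.4, "pr": 4.1}, weights)
```

In this sketch the surrogate with the smallest local errors receives the largest weight, so the fused prediction leans toward the model that has been most reliable near the query point.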

SHPSO is an SAEA that mainly improves the search strategy of PSO, whereas this paper mainly improves the model update strategy. The experimental comparison between RESAPSO and SHPSO shows that the model update strategy proposed in this paper is more effective than the improvement of the search strategy. Like CAL-SAPSO, RESAPSO uses PSO and the Kriging model, but it adopts a multi-surrogate approach, and the comparison with CAL-SAPSO shows the advantages of the multi-model strategy. SA-COSO is an SAEA for high-dimensional problems, while this paper mainly focuses on low-dimensional problems; the comparative experiments confirm that an SAEA designed for high-dimensional problems is not necessarily applicable to low-dimensional problems. ESAO and SA-MPSO are SAEAs proposed in recent years, and the experimental results show the advanced performance of our algorithm.

Experimental results on CEC2015 and CEC2017 show that RESAPSO is superior in accuracy, convergence speed, and stability and ranks first in the Friedman test. In addition, on F1 and F2 of CEC2015, RESAPSO outperformed the other algorithms by about \({10}^{5}\) and \({10}^{6}\), respectively; on CEC2017 F1, RESAPSO outperformed the other algorithms by about \({10}^{5}\). On these functions, RESAPSO almost reaches the optimal value. To predict landslide displacement, the RESAPSO-LSTM model is established, which effectively solves landslide feature selection and LSTM hyperparameter optimization and uses less evaluation time while improving the prediction accuracy. The experimental results show that RESAPSO-LSTM has the highest prediction accuracy among the compared models, and the optimization time of RESAPSO is about one-fifth that of PSO. The RESAPSO-LSTM model tracks both large and small displacement changes during the step-like period of a landslide in a timely manner, providing a more effective prediction method for the risk warning of a landslide in a severe deformation period.

A landslide is a very complex nonlinear dynamic system. In addition to rainfall and reservoir water level, landslides are affected by various internal and external factors, such as soil water content, groundwater, vegetation coverage, clay properties, and geological structure. Landslides are also affected by many complex random factors, such as human engineering activities and extreme weather, and therefore show very complex nonlinear evolution characteristics. At present, owing to the lack of detailed monitoring data for these factors, this paper studies only the influence of rainfall and reservoir water level, the main trigger factors, on landslide displacement. As the number of monitorable factors increases, landslide displacement prediction will become a high-dimensional, computationally expensive problem, which will limit the application of Kriging models. Therefore, in future work, the fast RBFNN and PR models will be considered for fitting the global trend, while the slower but more accurate Kriging model will be used for accurate local fitting, so that landslide disaster prediction in complex environments with massive, high-dimensional, multi-feature fusion data can be handled.

Data availability

Because the data on landslide displacement, rainfall, and reservoir levels are protected, we cannot provide the relevant data. The code for RESAPSO is available at https://github.com/VeteranDriverONE/RESAPSO.

References

Wang Y, Fang Z, Wang M (2020) Comparative study of landslide susceptibility mapping with different recurrent neural networks. Comput Geosci 138:104445

Xu C, Huang K, Wei C (2022) Landslide displacement prediction based on variational mode decomposition and LSTM. In: 2022 20th international conference on optical communications and networks (ICOCN), pp. 1–3

Chen K, Liu H, Tan X (2022) Research on prediction of landslide displacement based on BP neural network and D-S evidence theory. In: 2022 3rd international conference on computer vision, image and deep learning & international conference on computer engineering and applications (CVIDL & ICCEA), pp. 103–106

Abbasimehr H, Paki R (2021) Improving time series forecasting using LSTM and attention models. J Ambient Intell Humaniz Comput 13:1–19

Jelodar H, Wang Y, Orji R (2020) Deep sentiment classification and topic discovery on novel coronavirus or COVID-19 online discussions: NLP using LSTM recurrent neural network approach. IEEE J Biomed Health Inform 24(10):2733–2742

Wang GG, Gandomi AH, Alavi AH (2016) A hybrid method based on krill herd and quantum-behaved particle swarm optimization. Neural Comput Appl 27(4):989–1006

Agarwal P, Mehta S (2014) Nature-inspired algorithms: state-of-art, problems and prospects. Int J Comput Appl 100(14):14–21

Zhao W, Wang L, Zhang Z (2018) A novel atom search optimization for dispersion coefficient estimation in groundwater. Futur Gener Comput Syst 91(2):601–610

Mirjalili S, Gandomi AH, Mirjalili SZ (2017) Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114(12):163–191

Jiang H, Gao G, Ren Z (2022) SMARTEST: a surrogate-assisted memetic algorithm for code size reduction. IEEE Trans Reliab 71(1):190–203

Dong H, Wang P, Yu X (2021) Surrogate-assisted teaching-learning-based optimization for high-dimensional and computationally expensive problems. Appl Soft Comput 99:106934

Sun C, Jin Y, Cheng R (2017) Surrogate-assisted cooperative swarm optimization of high-dimensional expensive problems. IEEE Trans Evol Comput 21(4):644–660

Wang W, Liu HL, Tan KC (2022) A surrogate-assisted differential evolution algorithm for high-dimensional expensive optimization problems. IEEE Trans Cybern. https://doi.org/10.1109/TCYB.2022.3175533

Fan C, Hou B, Zheng J (2020) A surrogate-assisted particle swarm optimization using ensemble learning for expensive problems with small sample datasets. Appl Soft Comput 91:106242

Wu D, Guan Q, Fan Z (2022) AutoML with parallel genetic algorithm for fast hyperparameters optimization in efficient IoT time series prediction. IEEE Trans Ind Inform. https://doi.org/10.1109/TII.2022.3231419

Tayarani-N M, Yao X, Xu H (2015) Meta-heuristic algorithms in car engine design: a literature survey. IEEE Trans Evol Comput 19(5):609–629

Wang H, Jin Y, Doherty J (2017) Committee-based active learning for surrogate-assisted particle swarm optimization of expensive problems. IEEE Trans Cybern 47(9):2664–2677

Jin Y, Olhofer M, Sendhoff B (2000) On evolutionary optimization with approximate fitness functions. In: Proceedings of the genetic and evolutionary computation conference (GECCO), pp. 786–793

Atanassov KT (1986) Intuitionistic fuzzy sets. Fuzzy Sets Syst 20(1):87–96

Hajizamani M, Helfroush MS, Kazemi K (2020) Optimum feature selection using hybrid grey wolf differential evolution for motor imagery brain computer interface. In: 2020 10th international conference on computer and knowledge engineering (ICCKE), pp. 605–610

Song X-f, Zhang Y, Gong D-w (2021) Feature selection using bare-bones particle swarm optimization with mutual information. Pattern Recogn 112:107804

Tarkhaneh O, Nguyen TT, Mazaheri S (2021) A novel wrapper-based feature subset selection method using modified binary differential evolution algorithm. Inf Sci 565:278–305

Wang J, Ye M, Xiong F (2021) Cross-scene hyperspectral feature selection via hybrid whale optimization algorithm with simulated annealing. IEEE J Sel Top Appl Earth Observ Remote Sens 14:2473–2483

Altarabichi MG, Nowaczyk S, Pashami S (2021) Surrogate-assisted genetic algorithm for wrapper feature selection. In: 2021 IEEE congress on evolutionary computation (CEC), pp. 776–785

Snoek J, Larochelle H, Adams RP (2012) Practical Bayesian optimization of machine learning algorithms. In: Advances in neural information processing systems, pp. 2951–2959

Rokhsatyazdi E, Rahnamayan S, Amirinia H (2020) Optimizing LSTM based network for forecasting stock market. In: 2020 IEEE congress on evolutionary computation (CEC), pp. 1–7

Bai Y, Bain M (2020) Using a semi-evolutionary algorithm to optimize deep network hyper-parameters with an application to donor detection. In: 2020 IEEE symposium series on computational intelligence (SSCI), pp. 2655–2662

Han JH, Choi DJ, Park SU (2020) Hyperparameter optimization for multi-layer data input using genetic algorithm. In: 2020 IEEE 7th international conference on industrial engineering and applications (ICIEA), pp. 701–704

Biswas S, Saha D, De S (2021) Improving differential evolution through Bayesian hyperparameter optimization. In: 2021 IEEE congress on evolutionary computation (CEC), pp. 832–840

Qiu H, Xu Y, Gao L (2016) Multi-stage design space reduction and metamodeling optimization method based on self-organizing maps and fuzzy clustering. Expert Syst Appl 46:180–195

Zhang Z, Chen H, Cheng QS (2020) Surrogate-assisted enhanced global optimization based on hybrid DE for antenna design. In: 2020 IEEE MTT-S international conference on numerical electromagnetic and multiphysics modeling and optimization (NEMO), pp. 1–4

Namura N, Shimoyama K, Obayashi S (2017) Expected improvement of penalty-based boundary intersection for expensive multiobjective optimization. IEEE Trans Evol Comput 21(6):898–913

Zhang Y, Gong C, Li C (2020) Surrogate-assisted memetic algorithm with adaptive patience criterion for computationally expensive optimization. In: 2020 IEEE congress on evolutionary computation (CEC), pp. 1–8

Eason J, Cremaschi S (2014) Adaptive sequential sampling for surrogate model generation with artificial neural networks. Comput Chem Eng 68:220–232

Pan L, He C, Tian Y (2019) A classification-based surrogate-assisted evolutionary algorithm for expensive many-objective optimization. IEEE Trans Evol Comput 23(1):74–88